[jira] [Updated] (FLINK-15421) GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp

[ https://issues.apache.org/jira/browse/FLINK-15421?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Benchao Li updated FLINK-15421:

---

Description:

`TimestampType` has two physical representations: `Timestamp` and `LocalDateTime`. The following SQL makes the two representations conflict with each other:

{quote}SELECT
  SUM(cnt) as s,
  MAX(ts)
FROM
  (SELECT
    `string`,
    `int`,
    COUNT(*) AS cnt,
    MAX(rowtime) as ts
  FROM T1
  GROUP BY `string`, `int`, TUMBLE(rowtime, INTERVAL '10' SECOND))
GROUP BY `string`
{quote}

when 'table.exec.emit.early-fire.enabled' is set to true.

The exception is as follows:

{quote}Caused by: java.lang.ClassCastException: java.time.LocalDateTime cannot be cast to java.sql.Timestamp
at GroupAggsHandler$83.getValue(GroupAggsHandler$83.java:529)
at org.apache.flink.table.runtime.operators.aggregate.GroupAggFunction.processElement(GroupAggFunction.java:164)
at org.apache.flink.table.runtime.operators.aggregate.GroupAggFunction.processElement(GroupAggFunction.java:43)
at org.apache.flink.streaming.api.operators.KeyedProcessOperator.processElement(KeyedProcessOperator.java:85)
at org.apache.flink.streaming.runtime.tasks.OneInputStreamTask$StreamTaskNetworkOutput.emitRecord(OneInputStreamTask.java:173)
at org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.processElement(StreamTaskNetworkInput.java:151)
at org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.emitNext(StreamTaskNetworkInput.java:128)
at org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:69)
at org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:311)
at org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:187)
at org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:488)
at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:470)
at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:702)
at org.apache.flink.runtime.taskmanager.Task.run(Task.java:527)
at java.lang.Thread.run(Thread.java:748)
{quote}
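The cast failure itself can be reproduced with plain JDK classes. The sketch below is not Flink's generated code: `TimestampRepresentationDemo`, `naiveCast` and `toTimestamp` are hypothetical names. `naiveCast` stands in for an accessor that assumes the `Timestamp` representation, as the `GroupAggsHandler$83.getValue` frame above appears to do, and `toTimestamp` shows one way to accept either representation by converting with the JDK's `Timestamp.valueOf(LocalDateTime)`.

```java
import java.sql.Timestamp;
import java.time.LocalDateTime;

// Hypothetical illustration, not Flink code: one TIMESTAMP value, two possible Java classes.
final class TimestampRepresentationDemo {

    // Stand-in for an accessor that assumes the value is always a java.sql.Timestamp.
    static Timestamp naiveCast(Object value) {
        return (Timestamp) value; // throws ClassCastException if value is a LocalDateTime
    }

    // Hypothetical helper: accept either representation and normalize it to Timestamp.
    static Timestamp toTimestamp(Object value) {
        if (value == null) {
            return null;
        }
        if (value instanceof Timestamp) {
            return (Timestamp) value;
        }
        if (value instanceof LocalDateTime) {
            return Timestamp.valueOf((LocalDateTime) value);
        }
        throw new IllegalArgumentException(
                "Unsupported timestamp class: " + value.getClass().getName());
    }

    public static void main(String[] args) {
        LocalDateTime ldt = LocalDateTime.of(2019, 12, 27, 7, 54, 23);
        Object produced = ldt; // e.g. the value handed over by an upstream operator

        try {
            naiveCast(produced);
        } catch (ClassCastException e) {
            System.out.println("naive cast failed: " + e.getMessage());
        }

        Timestamp ts = toTimestamp(produced);
        // Converting back yields the original LocalDateTime.
        System.out.println("round trip ok: " + ts.toLocalDateTime().equals(ldt));
    }
}
```

Converting at the point of use is only one possible approach; whichever fix is chosen, the code that writes the value and the code that reads it have to agree on a single physical class.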

I also wrote a UT in `WindowAggregateITCase` that quickly reproduces this bug:

```scala
@Test
def testEarlyFireWithTumblingWindow(): Unit = {
  // `data`, `failingDataSource`, `TimestampAndWatermarkWithOffset`, `env` and `tEnv`
  // are provided by the surrounding WindowAggregateITCase test harness.
  val stream = failingDataSource(data)
    .assignTimestampsAndWatermarks(
      new TimestampAndWatermarkWithOffset[(Long, Int, Double, Float, BigDecimal, String, String)](10L))
  val table = stream.toTable(tEnv,
    'rowtime.rowtime, 'int, 'double, 'float, 'bigdec, 'string, 'name)
  tEnv.registerTable("T1", table)

  // Emit early (partial) window results every second.
  tEnv.getConfig.getConfiguration.setBoolean("table.exec.emit.early-fire.enabled", true)
  tEnv.getConfig.getConfiguration.setString("table.exec.emit.early-fire.delay", "1000 ms")

  val sql =
    """
      |SELECT
      |  SUM(cnt) as s,
      |  MAX(ts)
      |FROM
      |  (SELECT
      |    `string`,
      |    `int`,
      |    COUNT(*) AS cnt,
      |    MAX(rowtime) as ts
      |  FROM T1
      |  GROUP BY `string`, `int`, TUMBLE(rowtime, INTERVAL '10' SECOND))
      |GROUP BY `string`
      |""".stripMargin

  // Fails with the ClassCastException shown above.
  tEnv.sqlQuery(sql).toRetractStream[Row].print()
  env.execute()
}
```


[jira] [Commented] (FLINK-15418) StreamExecMatchRule not set FlinkRelDistribution

[ https://issues.apache.org/jira/browse/FLINK-15418?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17003976#comment-17003976 ]

Benchao Li commented on FLINK-15418:

[~jark] I've fixed this bug. Could you help verify it and assign this issue to me? I'd like to contribute the fix to the community.

> StreamExecMatchRule not set FlinkRelDistribution
>
> Key: FLINK-15418
> URL: https://issues.apache.org/jira/browse/FLINK-15418
> Project: Flink
> Issue Type: Bug
> Affects Versions: 1.9.1, 1.10.0
> Reporter: Benchao Li
> Priority: Major
>
> StreamExecMatchRule forgets to set FlinkRelDistribution. When the match clause uses `partition by` and parallelism > 1, the job fails with the following exception:
> ```
> Caused by: java.lang.NullPointerException
> at org.apache.flink.runtime.state.heap.StateTable.put(StateTable.java:336)
> at org.apache.flink.runtime.state.heap.StateTable.put(StateTable.java:159)
> at org.apache.flink.runtime.state.heap.HeapMapState.put(HeapMapState.java:100)
> at org.apache.flink.runtime.state.UserFacingMapState.put(UserFacingMapState.java:52)
> at org.apache.flink.cep.nfa.sharedbuffer.SharedBuffer.registerEvent(SharedBuffer.java:141)
> at org.apache.flink.cep.nfa.sharedbuffer.SharedBufferAccessor.registerEvent(SharedBufferAccessor.java:74)
> at org.apache.flink.cep.nfa.NFA$EventWrapper.getEventId(NFA.java:483)
> at org.apache.flink.cep.nfa.NFA.computeNextStates(NFA.java:605)
> at org.apache.flink.cep.nfa.NFA.doProcess(NFA.java:292)
> at org.apache.flink.cep.nfa.NFA.process(NFA.java:228)
> at org.apache.flink.cep.operator.CepOperator.processEvent(CepOperator.java:420)
> at org.apache.flink.cep.operator.CepOperator.processElement(CepOperator.java:242)
> at org.apache.flink.streaming.runtime.tasks.OneInputStreamTask$StreamTaskNetworkOutput.emitRecord(OneInputStreamTask.java:173)
> at org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.processElement(StreamTaskNetworkInput.java:151)
> at org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.emitNext(StreamTaskNetworkInput.java:128)
> at org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:69)
> at org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:311)
> at org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:187)
> at org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:488)
> at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:470)
> at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:702)
> at org.apache.flink.runtime.taskmanager.Task.run(Task.java:527)
> at java.lang.Thread.run(Thread.java:748)
> ```

--
This message was sent by Atlassian Jira
(v8.3.4#803005)
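FLINK-15418 above reports that `StreamExecMatchRule` does not set a `FlinkRelDistribution`. As a rough illustration of why a hash distribution on the `partition by` keys matters once parallelism > 1, the sketch below compares hash routing with round-robin routing. It is not Flink code: `HashDistributionDemo`, `routeByHash`, `routeRoundRobin` and `subtaskFor` are hypothetical names, and `Math.floorMod(key.hashCode(), parallelism)` is a simplified stand-in for Flink's real key-group assignment. Under hash routing every key lands on exactly one subtask, which keyed state (such as the map state used by `CepOperator`) relies on; under round-robin the rows of one key are scattered across subtasks.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical illustration, not Flink code.
final class HashDistributionDemo {

    // Simplified stand-in for Flink's key-group assignment (assumption).
    static int subtaskFor(String key, int parallelism) {
        return Math.floorMod(key.hashCode(), parallelism);
    }

    // Hash routing: every occurrence of a key goes to the same subtask.
    static Map<String, Set<Integer>> routeByHash(List<String> keys, int parallelism) {
        Map<String, Set<Integer>> subtasksPerKey = new HashMap<>();
        for (String key : keys) {
            subtasksPerKey.computeIfAbsent(key, k -> new HashSet<>())
                    .add(subtaskFor(key, parallelism));
        }
        return subtasksPerKey;
    }

    // Round-robin routing: the i-th record goes to subtask i % parallelism, whatever its key.
    static Map<String, Set<Integer>> routeRoundRobin(List<String> keys, int parallelism) {
        Map<String, Set<Integer>> subtasksPerKey = new HashMap<>();
        for (int i = 0; i < keys.size(); i++) {
            subtasksPerKey.computeIfAbsent(keys.get(i), k -> new HashSet<>())
                    .add(i % parallelism);
        }
        return subtasksPerKey;
    }

    public static void main(String[] args) {
        List<String> partitionKeys = Arrays.asList("a", "b", "a", "c", "b", "a");
        System.out.println("hash:        " + routeByHash(partitionKeys, 4));
        System.out.println("round-robin: " + routeRoundRobin(partitionKeys, 4));
    }
}
```

With four subtasks, round-robin sends the three `a` rows to three different subtasks, while hash routing keeps each key on a single one.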

[GitHub] [flink] flinkbot commented on issue #10702: [FLINK-15412][hive] LocalExecutorITCase#testParameterizedTypes failed…

flinkbot commented on issue #10702: [FLINK-15412][hive] LocalExecutorITCase#testParameterizedTypes failed…
URL: https://github.com/apache/flink/pull/10702#issuecomment-569214736

Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review.

## Automated Checks

Last check on commit c0a381f057e721c85e693a280e7be30a62163475 (Fri Dec 27 07:54:23 UTC 2019)

**Warnings:**
* No documentation files were touched! Remember to keep the Flink docs up to date!
* **This pull request references an unassigned [Jira ticket](https://issues.apache.org/jira/browse/FLINK-15412).** According to the [code contribution guide](https://flink.apache.org/contributing/contribute-code.html), tickets need to be assigned before starting with the implementation work.

Mention the bot in a comment to re-run the automated checks.

## Review Progress

* ❓ 1. The [description] looks good.
* ❓ 2. There is [consensus] that the contribution should go into Flink.
* ❓ 3. Needs [attention] from.
* ❓ 4. The change fits into the overall [architecture].
* ❓ 5. Overall code [quality] is good.

Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process.

The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer or PMC member is required.

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`)
- `@flinkbot approve all` to approve all aspects
- `@flinkbot approve-until architecture` to approve everything until `architecture`
- `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention
- `@flinkbot disapprove architecture` to remove an approval you gave earlier

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

With regards,
Apache Git Services

[jira] [Updated] (FLINK-15412) LocalExecutorITCase#testParameterizedTypes failed in travis

[ https://issues.apache.org/jira/browse/FLINK-15412?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated FLINK-15412:

---

Labels: pull-request-available (was: )

> LocalExecutorITCase#testParameterizedTypes failed in travis
> ---
>
> Key: FLINK-15412
> URL: https://issues.apache.org/jira/browse/FLINK-15412
> Project: Flink
> Issue Type: Bug
> Components: Table SQL / Client
> Reporter: Dian Fu
> Priority: Major
> Labels: pull-request-available
> Fix For: 1.10.0
>
> The Travis build of release-1.9 failed with the following error:
> {code:java}
> 14:43:17.916 [INFO] Running org.apache.flink.table.client.gateway.local.LocalExecutorITCase
> 14:44:47.388 [ERROR] Tests run: 34, Failures: 0, Errors: 1, Skipped: 1, Time elapsed: 89.468 s <<< FAILURE! - in org.apache.flink.table.client.gateway.local.LocalExecutorITCase
> 14:44:47.388 [ERROR] testParameterizedTypes[Planner: blink](org.apache.flink.table.client.gateway.local.LocalExecutorITCase) Time elapsed: 7.88 s <<< ERROR!
> org.apache.flink.table.client.gateway.SqlExecutionException: Invalid SQL statement
> at org.apache.flink.table.client.gateway.local.LocalExecutorITCase.testParameterizedTypes(LocalExecutorITCase.java:557)
> Caused by: org.apache.flink.table.api.ValidationException: SQL validation failed. findAndCreateTableSource failed
> at org.apache.flink.table.client.gateway.local.LocalExecutorITCase.testParameterizedTypes(LocalExecutorITCase.java:557)
> Caused by: org.apache.flink.table.api.TableException: findAndCreateTableSource failed
> at org.apache.flink.table.client.gateway.local.LocalExecutorITCase.testParameterizedTypes(LocalExecutorITCase.java:557)
> Caused by: org.apache.flink.table.api.NoMatchingTableFactoryException: Could not find a suitable table factory for 'org.apache.flink.table.factories.TableSourceFactory' in
> the classpath.
> Reason: No context matches.
> {code}
> instance: [https://api.travis-ci.org/v3/job/629636106/log.txt]


[jira] [Commented] (FLINK-15421) GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp

[ https://issues.apache.org/jira/browse/FLINK-15421?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17003974#comment-17003974 ]

Benchao Li commented on FLINK-15421:

[~lzljs3620320] Sorry about the formatting of the code. Could you tell me how to write a code block correctly in the issue description?

> GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp
>
> Key: FLINK-15421
> URL: https://issues.apache.org/jira/browse/FLINK-15421
> Project: Flink
> Issue Type: Bug
> Components: Table SQL / Planner
> Affects Versions: 1.9.1, 1.10.0
> Reporter: Benchao Li
> Priority: Major
> Fix For: 1.9.2, 1.10.0
>


[GitHub] [flink] lirui-apache commented on issue #10702: [FLINK-15412][hive] LocalExecutorITCase#testParameterizedTypes failed…

lirui-apache commented on issue #10702: [FLINK-15412][hive] LocalExecutorITCase#testParameterizedTypes failed…
URL: https://github.com/apache/flink/pull/10702#issuecomment-569214511

I'm just backporting FLINK-15240. cc @KurtYoung @bowenli86

[jira] [Commented] (FLINK-15421) GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp

[ https://issues.apache.org/jira/browse/FLINK-15421?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17003973#comment-17003973 ]

Jingsong Lee commented on FLINK-15421:

--

Thanks [~libenchao] for reporting. Can you format the code in the description?


[GitHub] [flink] lirui-apache opened a new pull request #10702: [FLINK-15412][hive] LocalExecutorITCase#testParameterizedTypes failed…

lirui-apache opened a new pull request #10702: [FLINK-15412][hive] LocalExecutorITCase#testParameterizedTypes failed…
URL: https://github.com/apache/flink/pull/10702

… in travis

## What is the purpose of the change

Fix LocalExecutorITCase#testParameterizedTypes in release-1.9.

## Brief change log

- Backport the changes in FLINK-15240.

## Verifying this change

Covered by existing tests.

## Does this pull request potentially affect one of the following parts:

NA

## Documentation

NA

[jira] [Updated] (FLINK-15421) GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp

[ https://issues.apache.org/jira/browse/FLINK-15421?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Benchao Li updated FLINK-15421:

---


[jira] [Updated] (FLINK-15421) GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp

[ https://issues.apache.org/jira/browse/FLINK-15421?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jingsong Lee updated FLINK-15421:

-

Component/s: Table SQL / Planner


[jira] [Updated] (FLINK-15421) GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp

[ https://issues.apache.org/jira/browse/FLINK-15421?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Benchao Li updated FLINK-15421:

---

Description:

`TimestampType` has two physical representations: `Timestamp` and `LocalDateTime`. The following SQL makes the two representations conflict with each other:

{quote}SELECT
  SUM(cnt) as s,
  MAX(ts)
FROM
  (SELECT
    `string`,
    `int`,
    COUNT(*) AS cnt,
    MAX(rowtime) as ts
  FROM T1
  GROUP BY `string`, `int`, TUMBLE(rowtime, INTERVAL '10' SECOND))
GROUP BY `string`
{quote}

when 'table.exec.emit.early-fire.enabled' is set to true.

The exception is as follows:

{quote}Caused by: java.lang.ClassCastException: java.time.LocalDateTime cannot be cast to java.sql.Timestamp
    at GroupAggsHandler$83.getValue(GroupAggsHandler$83.java:529)
    at org.apache.flink.table.runtime.operators.aggregate.GroupAggFunction.processElement(GroupAggFunction.java:164)
    at org.apache.flink.table.runtime.operators.aggregate.GroupAggFunction.processElement(GroupAggFunction.java:43)
    at org.apache.flink.streaming.api.operators.KeyedProcessOperator.processElement(KeyedProcessOperator.java:85)
    at org.apache.flink.streaming.runtime.tasks.OneInputStreamTask$StreamTaskNetworkOutput.emitRecord(OneInputStreamTask.java:173)
    at org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.processElement(StreamTaskNetworkInput.java:151)
    at org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.emitNext(StreamTaskNetworkInput.java:128)
    at org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:69)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:311)
    at org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:187)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:488)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:470)
    at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:702)
    at org.apache.flink.runtime.taskmanager.Task.run(Task.java:527)
    at java.lang.Thread.run(Thread.java:748)
{quote}
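At the JVM level, the exception is an ordinary downcast failing at runtime: a value physically held as `java.time.LocalDateTime` is cast to `java.sql.Timestamp`. The Java sketch below reproduces that mechanism outside Flink and shows one defensive conversion between the two representations. It is an illustration under stated assumptions. It is not Flink's generated `GroupAggsHandler` code and not the fix for FLINK-15421. The class and method names (`TimestampRepresentationDemo`, `readAsTimestamp`, `toTimestamp`) are hypothetical.

```java
import java.sql.Timestamp;
import java.time.LocalDateTime;

public class TimestampRepresentationDemo {

    // Hypothetical stand-in for generated code that reads a field as Object
    // and casts it to the class it assumes the field has.
    public static Timestamp readAsTimestamp(Object field) {
        return (Timestamp) field; // fails at runtime if field is a LocalDateTime
    }

    // Defensive conversion that accepts either physical representation
    // of the same logical TIMESTAMP value.
    public static Timestamp toTimestamp(Object field) {
        if (field instanceof Timestamp) {
            return (Timestamp) field;
        }
        if (field instanceof LocalDateTime) {
            return Timestamp.valueOf((LocalDateTime) field);
        }
        throw new IllegalArgumentException("Unsupported timestamp class: " + field.getClass());
    }

    public static void main(String[] args) {
        // A value that arrives as LocalDateTime while the consumer expects Timestamp.
        Object maxRowtime = LocalDateTime.of(2019, 12, 27, 10, 0, 5);
        try {
            readAsTimestamp(maxRowtime);
        } catch (ClassCastException e) {
            System.out.println("Caught: " + e.getClass().getName());
        }
        System.out.println("Converted: " + toTimestamp(maxRowtime));
    }
}
```

Accepting both classes at a boundary is only a workaround. It does not address why two operators disagree on the physical representation of the same column.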

I also created a unit test in `WindowAggregateITCase` that quickly reproduces this bug:

@Test
def testEarlyFireWithTumblingWindow(): Unit = {
  val stream = failingDataSource(data)
    .assignTimestampsAndWatermarks(
      new TimestampAndWatermarkWithOffset[(Long, Int, Double, Float, BigDecimal, String, String)](10L))
  val table = stream.toTable(tEnv,
    'rowtime.rowtime, 'int, 'double, 'float, 'bigdec, 'string, 'name)
  tEnv.registerTable("T1", table)

  tEnv.getConfig.getConfiguration.setBoolean("table.exec.emit.early-fire.enabled", true)
  tEnv.getConfig.getConfiguration.setString("table.exec.emit.early-fire.delay", "1000 ms")

  val sql =
    """
      |SELECT
      |  SUM(cnt) as s,
      |  MAX(ts)
      |FROM
      |  (SELECT
      |    `string`,
      |    `int`,
      |    COUNT(*) AS cnt,
      |    MAX(rowtime) as ts
      |  FROM T1
      |  GROUP BY `string`, `int`, TUMBLE(rowtime, INTERVAL '10' SECOND))
      |GROUP BY `string`
      |""".stripMargin
  tEnv.sqlQuery(sql).toRetractStream[Row].print()
  env.execute()
}


[jira] [Updated] (FLINK-15421) GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp

[ https://issues.apache.org/jira/browse/FLINK-15421?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jingsong Lee updated FLINK-15421:

-

Fix Version/s: 1.10.0

1.9.2

> GroupAggsHandler throws java.time.LocalDateTime cannot be cast to

> java.sql.Timestamp

>

>

> Key: FLINK-15421

> URL: https://issues.apache.org/jira/browse/FLINK-15421

> Project: Flink

> Issue Type: Bug

>Affects Versions: 1.9.1, 1.10.0

>Reporter: Benchao Li

>Priority: Major

> Fix For: 1.9.2, 1.10.0


[jira] [Commented] (FLINK-15420) Cast string to timestamp will loose precision

[ https://issues.apache.org/jira/browse/FLINK-15420?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17003950#comment-17003950 ]

Jingsong Lee commented on FLINK-15420:

--

CC: [~docete]

> Cast string to timestamp will loose precision

> -

>

> Key: FLINK-15420

> URL: https://issues.apache.org/jira/browse/FLINK-15420

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Reporter: Jingsong Lee

>Priority: Blocker

> Fix For: 1.10.0

>

>

> {code:java}

> cast('2010-10-14 12:22:22.123456' as timestamp(9))

> {code}

> In the Blink planner this produces "2010-10-14 12:22:22.123", losing the
> sub-millisecond digits; this should not happen.


[jira] [Commented] (FLINK-15421) GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp

[ https://issues.apache.org/jira/browse/FLINK-15421?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17003951#comment-17003951 ]

Jingsong Lee commented on FLINK-15421:

--

CC: [~docete]

> GroupAggsHandler throws java.time.LocalDateTime cannot be cast to

> java.sql.Timestamp

>

>

> Key: FLINK-15421

> URL: https://issues.apache.org/jira/browse/FLINK-15421

> Project: Flink

> Issue Type: Bug

>Affects Versions: 1.9.1, 1.10.0

>Reporter: Benchao Li

>Priority: Major



[jira] [Updated] (FLINK-15421) GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp

[

https://issues.apache.org/jira/browse/FLINK-15421?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Benchao Li updated FLINK-15421:

---

Description:

`TimestmapType` has two types of physical representation: `Timestamp` and

`LocalDateTime`. When we use following SQL, it will conflict each other:

{quote}SELECT

SUM(cnt) as s,

MAX(ts)

FROM

SELECT

`string`,

`int`,

COUNT * AS cnt,

MAX(rowtime) as ts

FROM T1

GROUP BY `string`, `int`, TUMBLE(rowtime, INTERVAL '10' SECOND)

GROUP BY `string`

{quote}

with 'table.exec.emit.early-fire.enabled' = true.

The exceptions is below:

{quote}Caused by: java.lang.ClassCastException: java.time.LocalDateTime cannot

be cast to java.sql.Timestamp

at GroupAggsHandler$83.getValue(GroupAggsHandler$83.java:529)

at

org.apache.flink.table.runtime.operators.aggregate.GroupAggFunction.processElement(GroupAggFunction.java:164)

at

org.apache.flink.table.runtime.operators.aggregate.GroupAggFunction.processElement(GroupAggFunction.java:43)

at

org.apache.flink.streaming.api.operators.KeyedProcessOperator.processElement(KeyedProcessOperator.java:85)

at

org.apache.flink.streaming.runtime.tasks.OneInputStreamTask$StreamTaskNetworkOutput.emitRecord(OneInputStreamTask.java:173)

at

org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.processElement(StreamTaskNetworkInput.java:151)

at

org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.emitNext(StreamTaskNetworkInput.java:128)

at

org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:69)

at

org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:311)

at

org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:187)

at

org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:488)

at

org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:470)

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:702)

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:527)

at java.lang.Thread.run(Thread.java:748)

{quote}

I also create a UT to quickly reproduce this bug in `WindowAggregateITCase`:

{{@Test}}

{{ def testEarlyFireWithTumblingWindow(): Unit = {}}

{{ val stream = failingDataSource(data)}}

{{ .assignTimestampsAndWatermarks(}}

{{ new TimestampAndWatermarkWithOffset}}

{{ [(Long, Int, Double, Float, BigDecimal, String, String)](10L))}}

{{ val table = stream.toTable(tEnv,}}

{{ 'rowtime.rowtime, 'int, 'double, 'float, 'bigdec, 'string, 'name)}}

{{ tEnv.registerTable("T1", table)}}

{{

tEnv.getConfig.getConfiguration.setBoolean("table.exec.emit.early-fire.enabled",

true)}}

{{

tEnv.getConfig.getConfiguration.setString("table.exec.emit.early-fire.delay",

"1000 ms")}}{{val sql =}}

{{ """}}

{{ |SELECT}}

{{ | SUM(cnt) as s,}}

{{ | MAX(ts)}}

{{ |FROM}}

{{ | (SELECT}}

{{ | `string`,}}

{{ | `int`,}}

{{ | COUNT(*) AS cnt,}}

{{ | MAX(rowtime) as ts}}

{{ | FROM T1}}

{{ | GROUP BY `string`, `int`, TUMBLE(rowtime, INTERVAL '10' SECOND))}}

{{ |GROUP BY `string`}}

{{ |""".stripMargin}}{{tEnv.sqlQuery(sql).toRetractStream[Row].print()}}

{{ env.execute()}}

{{ }}}

was:

`TimestmapType` has two types of physical representation: `Timestamp` and

`LocalDateTime`. When we use following SQL, it will conflict each other:

{quote}SELECT

SUM(cnt) as s,

MAX(ts)

FROM

SELECT

`string`,

`int`,

COUNT(*) AS cnt,

MAX(rowtime) as ts

FROM T1

GROUP BY `string`, `int`, TUMBLE(rowtime, INTERVAL '10' SECOND)

GROUP BY `string`

{quote}

with 'table.exec.emit.early-fire.enabled' = true.

The exceptions is below:

{quote}Caused by: java.lang.ClassCastException: java.time.LocalDateTime cannot

be cast to java.sql.Timestamp

at GroupAggsHandler$83.getValue(GroupAggsHandler$83.java:529)

at

org.apache.flink.table.runtime.operators.aggregate.GroupAggFunction.processElement(GroupAggFunction.java:164)

at

org.apache.flink.table.runtime.operators.aggregate.GroupAggFunction.processElement(GroupAggFunction.java:43)

at

org.apache.flink.streaming.api.operators.KeyedProcessOperator.processElement(KeyedProcessOperator.java:85)

at

org.apache.flink.streaming.runtime.tasks.OneInputStreamTask$StreamTaskNetworkOutput.emitRecord(OneInputStreamTask.java:173)

at

org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.processElement(StreamTaskNetworkInput.java:151)

at

org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.emitNext(StreamTaskNetworkInput.java:128)

at

org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:69)

at

org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:311)

at

org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:187)

at

org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:488)

at

org.apache.flink.stre

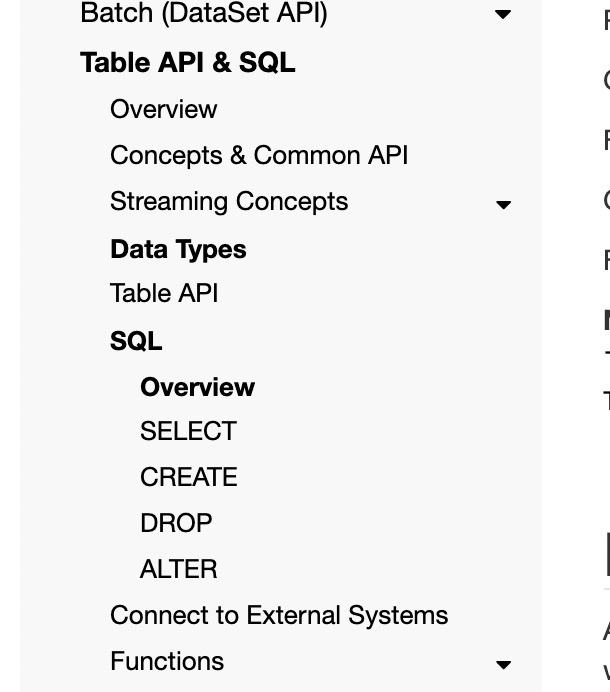

[GitHub] [flink] wuchong commented on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

wuchong commented on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability URL: https://github.com/apache/flink/pull/10669#issuecomment-569213420

Hi @danny0405, thanks for the suggestion. I agree with you. Many users don't understand what DML and DDL are, and they don't need to know that at all. I restructured the pages again into separate `SELECT`, `CREATE`, `DROP`, and `ALTER` pages, so that users can quickly find what they need from the page title. Please have another look, @danny0405, @bowenli86, @JingsongLi.

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Created] (FLINK-15421) GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp

Benchao Li created FLINK-15421:

--

Summary: GroupAggsHandler throws java.time.LocalDateTime cannot be cast to java.sql.Timestamp

Key: FLINK-15421

URL: https://issues.apache.org/jira/browse/FLINK-15421

Project: Flink

Issue Type: Bug

Affects Versions: 1.9.1, 1.10.0

Reporter: Benchao Li


[jira] [Created] (FLINK-15420) Cast string to timestamp will loose precision

Jingsong Lee created FLINK-15420:

Summary: Cast string to timestamp will loose precision

Key: FLINK-15420

URL: https://issues.apache.org/jira/browse/FLINK-15420

Project: Flink

Issue Type: Bug

Components: Table SQL / Planner

Reporter: Jingsong Lee

Fix For: 1.10.0

{code:java}

cast('2010-10-14 12:22:22.123456' as timestamp(9))

{code}

In the Blink planner this produces "2010-10-14 12:22:22.123", losing the sub-millisecond digits; this should not happen.
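The report does not say where the Blink planner drops the extra digits. One way such truncation can arise is a conversion through epoch milliseconds, which can hold only three fractional digits. The Java sketch below contrasts a nanosecond-preserving parse with a millisecond round trip, using only `java.sql.Timestamp` and `java.time.LocalDateTime`. It shows an assumed mechanism for illustration, not the planner's actual code path. `TimestampPrecisionDemo`, `parseFull` and `parseViaMillis` are hypothetical names.

```java
import java.sql.Timestamp;
import java.time.LocalDateTime;

public class TimestampPrecisionDemo {

    // Parses "yyyy-MM-dd HH:mm:ss.fffffffff", keeping up to nanosecond precision.
    public static LocalDateTime parseFull(String literal) {
        return Timestamp.valueOf(literal).toLocalDateTime();
    }

    // Assumed lossy path: round-tripping through epoch milliseconds keeps only
    // three fractional digits, so ".123456" becomes ".123".
    public static LocalDateTime parseViaMillis(String literal) {
        long epochMillis = Timestamp.valueOf(literal).getTime();
        return new Timestamp(epochMillis).toLocalDateTime();
    }

    public static void main(String[] args) {
        String literal = "2010-10-14 12:22:22.123456";
        System.out.println("full:   " + parseFull(literal));
        System.out.println("millis: " + parseViaMillis(literal));
    }
}
```

A TIMESTAMP(9) value needs a representation that carries nanoseconds end to end. Any step that reduces it to a `long` of milliseconds cannot round-trip the digits beyond the third.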


[GitHub] [flink] flinkbot edited a comment on issue #10695: [FLINK-15377] Remove the useless stage in Mesos dockerfile

flinkbot edited a comment on issue #10695: [FLINK-15377] Remove the useless stage in Mesos dockerfile URL: https://github.com/apache/flink/pull/10695#issuecomment-569014612

## CI report:

* 97ac65498d207e34e891dcd59c87b6a44f04bead Travis: [FAILURE](https://travis-ci.com/flink-ci/flink/builds/142361209) Azure: [FAILURE](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3927)
* e58625466acbbf46aeb41b14561f2c63ba075297 Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142364869) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3930)
* cb283afa0c2f0cf95a6628d9b4cb6b53a52fb019 Travis: [FAILURE](https://travis-ci.com/flink-ci/flink/builds/142421957) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3945)
* 12e322af2d7393cfb8d17432147c930ce7143841 Travis: [PENDING](https://travis-ci.com/flink-ci/flink/builds/142432715) Azure: [PENDING](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3948)

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink] flinkbot edited a comment on issue #10681: [FLINK-14849][hive][doc] Fix documentation about Hive dependencies

flinkbot edited a comment on issue #10681: [FLINK-14849][hive][doc] Fix documentation about Hive dependencies URL: https://github.com/apache/flink/pull/10681#issuecomment-568831538

## CI report:

* 4347bedaf5a20884347af39baf197932238c6d24 Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142300202) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3900)
* b940b474f8617f292159ef85d675e62890e3c222 Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142308230) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3905)
* 0379fb44aa182467b12c6647c0c2f1d1ba0a25fc Travis: [FAILURE](https://travis-ci.com/flink-ci/flink/builds/142348340) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3922)
* ab0da875807ef1e178167f2683826879a3a82f74 UNKNOWN

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[jira] [Created] (FLINK-15419) Validate SQL syntax not need to depend on connector jar

Kaibo Zhou created FLINK-15419:

--

Summary: Validate SQL syntax not need to depend on connector jar

Key: FLINK-15419

URL: https://issues.apache.org/jira/browse/FLINK-15419

Project: Flink

Issue Type: Improvement

Components: Table SQL / API

Reporter: Kaibo Zhou

Fix For: 1.11.0

As a platform user, I want to integrate Flink SQL into my platform.

Users register source/sink tables and functions in a catalog service through a UI, and write SQL scripts in a web SQL editor. I want to validate the SQL syntax and verify that all referenced catalog objects (tables, fields, UDFs) exist.

After some investigation, I decided to use the `tEnv.sqlUpdate`/`tEnv.sqlQuery` APIs to do this. `SqlParser` and `FlinkSqlParserImpl` are not a good choice, as they do not read the catalog.

The users have registered *Kafka* source/sink tables in the catalog, so the validation logic looks like this:

{code:java}
TableEnvironment tEnv = ...; // environment creation not shown in the original report
tEnv.registerCatalog(CATALOG_NAME, catalog);
tEnv.useCatalog(CATALOG_NAME);
tEnv.useDatabase(DB_NAME);

tEnv.sqlUpdate("INSERT INTO sinkTable SELECT f1,f2 FROM sourceTable");
// or
tEnv.sqlQuery("SELECT * FROM tableName");
{code}

This throws an exception on Flink 1.9.0 because `flink-connector-kafka_2.11-1.9.0.jar` is not on my classpath:

{code:java}
org.apache.flink.table.api.ValidationException: SQL validation failed. findAndCreateTableSource failed.
    at org.apache.flink.table.planner.calcite.FlinkPlannerImpl.validate(FlinkPlannerImpl.scala:125)
    at org.apache.flink.table.planner.operations.SqlToOperationConverter.convert(SqlToOperationConverter.java:82)
    at org.apache.flink.table.planner.delegation.PlannerBase.parse(PlannerBase.scala:132)
    at org.apache.flink.table.api.internal.TableEnvironmentImpl.sqlUpdate(TableEnvironmentImpl.java:335)
The following factories have been considered:
org.apache.flink.formats.json.JsonRowFormatFactory
org.apache.flink.table.planner.delegation.BlinkPlannerFactory
org.apache.flink.table.planner.delegation.BlinkExecutorFactory
org.apache.flink.table.catalog.GenericInMemoryCatalogFactory
org.apache.flink.table.sources.CsvBatchTableSourceFactory
org.apache.flink.table.sources.CsvAppendTableSourceFactory
org.apache.flink.table.sinks.CsvBatchTableSinkFactory
org.apache.flink.table.sinks.CsvAppendTableSinkFactory
    at org.apache.flink.table.factories.TableFactoryService.filterByContext(TableFactoryService.java:283)
    at org.apache.flink.table.factories.TableFactoryService.filter(TableFactoryService.java:191)
    at org.apache.flink.table.factories.TableFactoryService.findSingleInternal(TableFactoryService.java:144)
    at org.apache.flink.table.factories.TableFactoryService.find(TableFactoryService.java:97)
    at org.apache.flink.table.factories.TableFactoryUtil.findAndCreateTableSource(TableFactoryUtil.java:64)
{code}
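The `filterByContext` and `findSingleInternal` frames suggest why validation needs the connector jar: resolving the catalog table already looks up exactly one table factory whose required context matches the table's properties, and only the CSV/JSON factories are on the classpath. Below is a simplified, stdlib-only model of that lookup, not Flink's actual code. The `FactoryLookupSketch` and `FactoryDescriptor` types and the property values are hypothetical; only the `connector.type` key follows Flink's legacy descriptor convention.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Simplified model of context-based table factory discovery (hypothetical types). */
public class FactoryLookupSketch {

    /** A factory advertises the properties it requires, e.g. connector.type=kafka. */
    static final class FactoryDescriptor {
        final String className;
        final Map<String, String> requiredContext;

        FactoryDescriptor(String className, Map<String, String> requiredContext) {
            this.className = className;
            this.requiredContext = requiredContext;
        }
    }

    /** Keeps factories whose required context entries all appear in the table properties. */
    static List<FactoryDescriptor> filterByContext(
            List<FactoryDescriptor> onClasspath, Map<String, String> tableProperties) {
        List<FactoryDescriptor> matches = new ArrayList<>();
        for (FactoryDescriptor factory : onClasspath) {
            boolean contextMatches = factory.requiredContext.entrySet().stream()
                    .allMatch(e -> e.getValue().equals(tableProperties.get(e.getKey())));
            if (contextMatches) {
                matches.add(factory);
            }
        }
        return matches;
    }

    /** Requires exactly one matching factory, otherwise fails. */
    static FactoryDescriptor findSingle(
            List<FactoryDescriptor> onClasspath, Map<String, String> tableProperties) {
        List<FactoryDescriptor> matches = filterByContext(onClasspath, tableProperties);
        if (matches.isEmpty()) {
            // The catalog entry exists, but no factory for its connector type is loaded.
            throw new IllegalStateException("No context matches.");
        }
        if (matches.size() > 1) {
            throw new IllegalStateException("Ambiguous factories: " + matches.size());
        }
        return matches.get(0);
    }

    public static void main(String[] args) {
        // Only a CSV factory is "on the classpath" in this model.
        List<FactoryDescriptor> classpath = List.of(
                new FactoryDescriptor("CsvAppendTableSourceFactory",
                        Map.of("connector.type", "filesystem", "format.type", "csv")));
        Map<String, String> kafkaTable =
                Map.of("connector.type", "kafka", "connector.topic", "orders");
        try {
            findSingle(classpath, kafkaTable);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage()); // prints "No context matches."
        }
    }
}
```

In this model, adding a descriptor with `connector.type=kafka` to the list is the analogue of putting the Kafka connector jar on the classpath.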

For a platform provider, a user's SQL may depend on *ANY* connector, or even on a custom one. Dynamically loading the connector jar after parsing the connector type from the SQL is complicated, and it forces users to upload their custom connector jars before a syntax check can be done.

I hope Flink can provide a friendly way to validate SQL whose tables/functions are already registered in the catalog, *WITHOUT* depending on the connector jars. This would make it easier for external platforms to integrate Flink SQL.
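To make the request concrete, here is a hedged, stdlib-only sketch of validation that consults only catalog metadata: it checks that referenced tables and fields exist using the stored schema and never looks up a connector factory. `CatalogOnlyValidator` and its methods are hypothetical illustrations, not a Flink API. SQL parsing is out of scope, so the referenced table and field names are passed in directly.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical validator that reads only catalog schemas, never connector factories. */
public class CatalogOnlyValidator {

    // Table name -> field names, as a platform catalog service might store them.
    private final Map<String, Set<String>> schemas = new HashMap<>();

    void registerTable(String table, Set<String> fields) {
        schemas.put(table, fields);
    }

    /** Returns validation errors; an empty list means every reference resolves. */
    List<String> validate(String table, List<String> referencedFields) {
        List<String> errors = new ArrayList<>();
        Set<String> fields = schemas.get(table);
        if (fields == null) {
            errors.add("Table not found: " + table);
            return errors;
        }
        for (String field : referencedFields) {
            if (!fields.contains(field)) {
                errors.add("Field not found: " + table + "." + field);
            }
        }
        return errors;
    }

    public static void main(String[] args) {
        CatalogOnlyValidator validator = new CatalogOnlyValidator();
        // The connector type (e.g. Kafka) plays no role: only the schema is consulted.
        validator.registerTable("sourceTable", Set.of("f1", "f2"));
        System.out.println(validator.validate("sourceTable", List.of("f1", "f2"))); // prints []
        System.out.println(validator.validate("sourceTable", List.of("f3"))); // prints [Field not found: sourceTable.f3]
    }
}
```

The design point is the separation: schema checks need only metadata, while instantiating a source or sink (and therefore loading the connector jar) could be deferred until the job is actually submitted.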

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (FLINK-15412) LocalExecutorITCase#testParameterizedTypes failed in travis

[

https://issues.apache.org/jira/browse/FLINK-15412?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17003944#comment-17003944

]

Rui Li commented on FLINK-15412:

The failure was introduced because FLINK-15240 was not properly ported to release-1.9. I'll submit a fix for it.

> LocalExecutorITCase#testParameterizedTypes failed in travis

> ---

>

> Key: FLINK-15412

> URL: https://issues.apache.org/jira/browse/FLINK-15412

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Client

> Reporter: Dian Fu

> Priority: Major

> Fix For: 1.10.0

>

>

> The Travis build of release-1.9 failed with the following error:

> {code:java}
> 14:43:17.916 [INFO] Running org.apache.flink.table.client.gateway.local.LocalExecutorITCase
> 14:44:47.388 [ERROR] Tests run: 34, Failures: 0, Errors: 1, Skipped: 1, Time elapsed: 89.468 s <<< FAILURE! - in org.apache.flink.table.client.gateway.local.LocalExecutorITCase
> 14:44:47.388 [ERROR] testParameterizedTypes[Planner: blink](org.apache.flink.table.client.gateway.local.LocalExecutorITCase) Time elapsed: 7.88 s <<< ERROR!
> org.apache.flink.table.client.gateway.SqlExecutionException: Invalid SQL statement
>     at org.apache.flink.table.client.gateway.local.LocalExecutorITCase.testParameterizedTypes(LocalExecutorITCase.java:557)
> Caused by: org.apache.flink.table.api.ValidationException: SQL validation failed. findAndCreateTableSource failed
>     at org.apache.flink.table.client.gateway.local.LocalExecutorITCase.testParameterizedTypes(LocalExecutorITCase.java:557)
> Caused by: org.apache.flink.table.api.TableException: findAndCreateTableSource failed
>     at org.apache.flink.table.client.gateway.local.LocalExecutorITCase.testParameterizedTypes(LocalExecutorITCase.java:557)
> Caused by: org.apache.flink.table.api.NoMatchingTableFactoryException: Could not find a suitable table factory for 'org.apache.flink.table.factories.TableSourceFactory' in
> the classpath.
> Reason: No context matches.
> {code}

> instance: [https://api.travis-ci.org/v3/job/629636106/log.txt]


[GitHub] [flink] zjuwangg commented on issue #9919: [FLINK-13303][hive]add hive e2e connector test

zjuwangg commented on issue #9919: [FLINK-13303][hive]add hive e2e connector test URL: https://github.com/apache/flink/pull/9919#issuecomment-569210087

I'll close this PR and open a new PR for FLINK-13437 to cover this, based on the new Java-based test framework.

[GitHub] [flink] zjuwangg closed pull request #9919: [FLINK-13303][hive]add hive e2e connector test

zjuwangg closed pull request #9919: [FLINK-13303][hive]add hive e2e connector test URL: https://github.com/apache/flink/pull/9919

[jira] [Comment Edited] (FLINK-15388) The assigned slot bae00218c818157649eb9e3c533b86af_32 was removed.

[

https://issues.apache.org/jira/browse/FLINK-15388?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17003934#comment-17003934

]

Xintong Song edited comment on FLINK-15388 at 12/27/19 7:13 AM:

One thing drew my attention: there seem to be quite a few error messages like "Exception occurred in REST handler: Job 9bf1a8b3b40ddccb5aa258f150a750b1 not found". This indicates that something monitoring other jobs is accessing the wrong REST server address.

I printed out the times and counts of such error messages, and found that the points in time with many of these errors closely match the points in time with high Prometheus scrape durations.

!屏幕快照 2019-12-27 下午3.05.36.png! (screenshot taken 2019-12-27, 3:05:36 PM)
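The correlation described above can be reproduced by bucketing the REST handler errors per minute and comparing the counts against the Prometheus scrape-duration graph. A minimal sketch, assuming each log line starts with a `yyyy-MM-dd HH:mm:ss,SSS` timestamp (a common log4j layout; check your own configuration). The `LogBucketSketch` name and the sample lines are hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Counts "Exception occurred in REST handler" lines per minute. */
public class LogBucketSketch {

    static final String MARKER = "Exception occurred in REST handler";

    /** Maps "yyyy-MM-dd HH:mm" to the number of matching lines in that minute. */
    static Map<String, Integer> countPerMinute(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted by time for easy plotting
        for (String line : lines) {
            // Assumed layout "2019-12-27 15:05:36,123 ERROR ...": the first 16 chars are the minute.
            if (line.length() >= 16 && line.contains(MARKER)) {
                counts.merge(line.substring(0, 16), 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> sample = List.of(
                "2019-12-27 15:05:01,001 ERROR x - Exception occurred in REST handler: Job 9bf1a8b3b40ddccb5aa258f150a750b1 not found.",
                "2019-12-27 15:05:40,500 ERROR x - Exception occurred in REST handler: Job 9bf1a8b3b40ddccb5aa258f150a750b1 not found.",
                "2019-12-27 15:06:02,000 INFO  x - unrelated message");
        System.out.println(countPerMinute(sample)); // prints {2019-12-27 15:05=2}
    }
}
```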

This might be what affects the heartbeats, because the REST server needs to access the RPC main thread.
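The heartbeat argument rests on the main thread acting as a single FIFO queue: every REST request that needs the main thread delays whatever is queued behind it, including heartbeat handling. Here is a simplified model using a single-threaded executor. It assumes, as the comment states, that REST requests and heartbeats are processed on the same thread. It records execution order rather than wall-clock time, so the result is deterministic. `MainThreadSketch` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

/** Models an RPC endpoint's single main thread shared by REST requests and heartbeats. */
public class MainThreadSketch {

    /** Returns how many REST tasks ran before the heartbeat task. */
    static int restTasksBeforeHeartbeat(int queuedRestRequests) {
        ExecutorService mainThread = Executors.newSingleThreadExecutor();
        List<String> executionOrder = Collections.synchronizedList(new ArrayList<>());
        // A burst of REST requests (e.g. from a misdirected monitor) is enqueued first.
        for (int i = 0; i < queuedRestRequests; i++) {
            mainThread.execute(() -> executionOrder.add("rest"));
        }
        // The heartbeat arrives afterwards and has to wait for the whole backlog.
        mainThread.execute(() -> executionOrder.add("heartbeat"));
        mainThread.shutdown();
        try {
            mainThread.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return executionOrder.indexOf("heartbeat");
    }

    public static void main(String[] args) {
        System.out.println(restTasksBeforeHeartbeat(500)); // prints 500
    }
}
```

If each REST task takes time t on the main thread, the heartbeat in this model is delayed by roughly the backlog size times t. That is why eliminating the stray queries is a reasonable first experiment.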

I would suggest first finding out where the REST queries come from and eliminating them, then checking whether the problem still exists.

was (Author: xintongsong): the previous version had the same text, with the screenshot placed after the paragraph about heartbeats.

> The assigned slot bae00218c818157649eb9e3c533b86af_32 was removed.

> --

>

> Key: FLINK-15388