[jira] [Updated] (FLINK-17896) HiveCatalog can work with new table factory because of is_generic

[https://issues.apache.org/jira/browse/FLINK-17896?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel]

ASF GitHub Bot updated FLINK-17896:

---

Labels: pull-request-available (was: )

> HiveCatalog can work with new table factory because of is_generic


>

> Key: FLINK-17896

> URL: https://issues.apache.org/jira/browse/FLINK-17896

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Hive, Table SQL / API

>Reporter: Jingsong Lee

>Assignee: Jingsong Lee

>Priority: Blocker

> Labels: pull-request-available

> Fix For: 1.11.0

>

>

> {code:java}

> [ERROR] Could not execute SQL statement. Reason:

> org.apache.flink.table.api.ValidationException: Unsupported options found for connector 'print'.
>
> Unsupported options:
>
> is_generic
>
> Supported options:
>
> connector
> print-identifier
> property-version
> standard-error

> {code}

> This is because HiveCatalog puts the is_generic property into generic tables,
> but the new table factory does not remove it.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)
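The error above comes from the new factory rejecting any option key it does not declare. As a rough illustration only, that check can be modelled as a set difference between the table's option keys and the factory's supported keys. This is a hypothetical, simplified sketch in plain Java (`OptionValidationSketch`, `unsupportedKeys` and `validate` are made-up names), not Flink's actual validation code:

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/**
 * Hypothetical, simplified model of an "unsupported options" check.
 * Flink's real validation differs; this only shows the set difference.
 */
final class OptionValidationSketch {

    private OptionValidationSketch() {}

    /** Keys present in the table options that the factory does not declare (sorted). */
    static Set<String> unsupportedKeys(Map<String, String> tableOptions, Set<String> supportedKeys) {
        Set<String> unsupported = new TreeSet<>(tableOptions.keySet());
        unsupported.removeAll(supportedKeys);
        return unsupported;
    }

    /** Throws if any option key is not declared by the factory. */
    static void validate(String connector, Map<String, String> tableOptions, Set<String> supportedKeys) {
        Set<String> unsupported = unsupportedKeys(tableOptions, supportedKeys);
        if (!unsupported.isEmpty()) {
            throw new IllegalArgumentException(
                    "Unsupported options found for connector '" + connector + "'. "
                            + "Unsupported options: " + unsupported
                            + ". Supported options: " + new TreeSet<>(supportedKeys));
        }
    }
}
```

With the options `connector=print` and `is_generic=true` and the four supported keys listed in the error, `unsupportedKeys` returns only `is_generic`, which matches the reported failure.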

[jira] [Assigned] (FLINK-17896) HiveCatalog can work with new table factory because of is_generic

[https://issues.apache.org/jira/browse/FLINK-17896?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel]

Jingsong Lee reassigned FLINK-17896:

Assignee: Jingsong Lee


[GitHub] [flink] JingsongLi opened a new pull request #12316: [FLINK-17896][hive] HiveCatalog can work with new table factory because of is_generic

JingsongLi opened a new pull request #12316:
URL: https://github.com/apache/flink/pull/12316

## What is the purpose of the change

```
[ERROR] Could not execute SQL statement. Reason:
org.apache.flink.table.api.ValidationException: Unsupported options found for connector 'print'.

Unsupported options:

is_generic

Supported options:

connector
print-identifier
property-version
standard-error
```

This is because HiveCatalog puts the `is_generic` property into generic tables, but the new table factory does not remove it.

## Brief change log

- Implement `HiveDynamicTableFactory` to remove the `is_generic` flag before creating the table source and sink

## Verifying this change

`HiveCatalogITCase.testNewTableFactory`

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): no
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no
- The serializers: no
- The runtime per-record code paths (performance sensitive): no
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: no
- The S3 file system connector: no

## Documentation

- Does this pull request introduce a new feature? no

This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.
For queries about this service, please contact Infrastructure at: us...@infra.apache.org
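The change log describes removing the `is_generic` flag before the table source and sink are created. Stripped of Flink's factory plumbing, that amounts to filtering one key out of the table options. A minimal sketch, assuming the options are available as a `Map<String, String>`; `GenericFlagStripper` and `withoutGenericFlag` are hypothetical names, and this is not the code of `HiveDynamicTableFactory`:

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical helper: returns the table options without the catalog-internal
 * "is_generic" key so that a strict connector factory does not reject them.
 * Plain Java over a Map; not Flink's HiveDynamicTableFactory.
 */
final class GenericFlagStripper {

    /** Option key written by HiveCatalog for generic tables (as seen in the error message). */
    static final String IS_GENERIC = "is_generic";

    private GenericFlagStripper() {}

    /** Copies the options, drops IS_GENERIC, and leaves the caller's map untouched. */
    static Map<String, String> withoutGenericFlag(Map<String, String> options) {
        Map<String, String> copy = new HashMap<>(options);
        copy.remove(IS_GENERIC);
        return Collections.unmodifiableMap(copy);
    }
}
```

The sketch copies the map instead of mutating it, on the assumption that the catalog-side table definition may still need the flag to tell generic tables apart from Hive tables.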

[GitHub] [flink] aljoscha commented on pull request #11972: [FLINK-17058] Adding ProcessingTimeoutTrigger of nested triggers.

aljoscha commented on pull request #11972: URL: https://github.com/apache/flink/pull/11972#issuecomment-633411543 @flinkbot run azure

[GitHub] [flink] zhuzhurk commented on a change in pull request #12256: [FLINK-17018][runtime] DefaultExecutionSlotAllocator allocates slots in bulks

zhuzhurk commented on a change in pull request #12256:
URL: https://github.com/apache/flink/pull/12256#discussion_r429754093

##
File path:
flink-runtime/src/main/java/org/apache/flink/runtime/scheduler/DefaultExecutionSlotAllocator.java
##
@@ -67,23 +76,211 @@
private final SlotProviderStrategy slotProviderStrategy;
+ private final SlotOwner slotOwner;
+ private final InputsLocationsRetriever inputsLocationsRetriever;
+ // temporary hack. can be removed along with the individual slot allocation code path
+ // once bulk slot allocation is fully functional
+ private final boolean enableBulkSlotAllocation;

Review comment:
Good suggestion! Will give it a try.

[GitHub] [flink-shaded] zentol commented on pull request #83: [FLINK-17221] Remove Jersey dependencies from yarn-common exclusions

zentol commented on pull request #83: URL: https://github.com/apache/flink-shaded/pull/83#issuecomment-633410154 The associated JIRA has been closed.

[GitHub] [flink-shaded] zentol closed pull request #83: [FLINK-17221] Remove Jersey dependencies from yarn-common exclusions

zentol closed pull request #83: URL: https://github.com/apache/flink-shaded/pull/83

[jira] [Closed] (FLINK-16499) Flink shaded hadoop could not work when Yarn timeline service is enabled

[https://issues.apache.org/jira/browse/FLINK-16499?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel]

Chesnay Schepler closed FLINK-16499.

Fix Version/s: (was: shaded-11.0)

Resolution: Won't Fix

The Hadoop modules have been dropped from flink-shaded in FLINK-17685.

> Flink shaded hadoop could not work when Yarn timeline service is enabled

>

>

> Key: FLINK-16499

> URL: https://issues.apache.org/jira/browse/FLINK-16499

> Project: Flink

> Issue Type: Bug

> Components: BuildSystem / Shaded

>Affects Versions: shaded-10.0

>Reporter: Yang Wang

>Priority: Major

>

> When the Yarn timeline service is enabled (via
> {{yarn.timeline-service.enabled=true}} in yarn-site.xml), flink-shaded-hadoop
> cannot be used to submit a Flink job to a Yarn cluster. The following exception
> is thrown.
>
> The root cause is that {{jersey-core-xx.jar}} is not bundled into
> {{flink-shaded-hadoop-xx.jar}}.

>

> {code:java}
> 2020-03-09 03:35:34,396 ERROR org.apache.flink.client.cli.CliFrontend [] - Fatal error while running command line interface.
> 2020-03-09 03:35:34,396 ERROR org.apache.flink.client.cli.CliFrontend [] - Fatal error while running command line interface.
> java.lang.NoClassDefFoundError: javax/ws/rs/ext/MessageBodyReader
>     at java.lang.ClassLoader.defineClass1(Native Method) ~[?:1.8.0_242]
>     at java.lang.ClassLoader.defineClass(ClassLoader.java:757) ~[?:1.8.0_242]
>     at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142) ~[?:1.8.0_242]
>     at java.net.URLClassLoader.defineClass(URLClassLoader.java:468) ~[?:1.8.0_242]
>     at java.net.URLClassLoader.access$100(URLClassLoader.java:74) ~[?:1.8.0_242]
>     at java.net.URLClassLoader$1.run(URLClassLoader.java:369) ~[?:1.8.0_242]
>     at java.net.URLClassLoader$1.run(URLClassLoader.java:363) ~[?:1.8.0_242]
>     at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_242]
>     at java.net.URLClassLoader.findClass(URLClassLoader.java:362) ~[?:1.8.0_242]
>     at java.lang.ClassLoader.loadClass(ClassLoader.java:419) ~[?:1.8.0_242]
>     at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:352) ~[?:1.8.0_242]
>     at java.lang.ClassLoader.loadClass(ClassLoader.java:352) ~[?:1.8.0_242]
>     at java.lang.ClassLoader.defineClass1(Native Method) ~[?:1.8.0_242]
>     at java.lang.ClassLoader.defineClass(ClassLoader.java:757) ~[?:1.8.0_242]
>     at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142) ~[?:1.8.0_242]
>     at java.net.URLClassLoader.defineClass(URLClassLoader.java:468) ~[?:1.8.0_242]
>     at java.net.URLClassLoader.access$100(URLClassLoader.java:74) ~[?:1.8.0_242]
>     at java.net.URLClassLoader$1.run(URLClassLoader.java:369) ~[?:1.8.0_242]
>     at java.net.URLClassLoader$1.run(URLClassLoader.java:363) ~[?:1.8.0_242]
>     at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_242]
>     at java.net.URLClassLoader.findClass(URLClassLoader.java:362) ~[?:1.8.0_242]
>     at java.lang.ClassLoader.loadClass(ClassLoader.java:419) ~[?:1.8.0_242]
>     at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:352) ~[?:1.8.0_242]
>     at java.lang.ClassLoader.loadClass(ClassLoader.java:352) ~[?:1.8.0_242]
>     at java.lang.ClassLoader.defineClass1(Native Method) ~[?:1.8.0_242]
>     at java.lang.ClassLoader.defineClass(ClassLoader.java:757) ~[?:1.8.0_242]
>     at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142) ~[?:1.8.0_242]
>     at java.net.URLClassLoader.defineClass(URLClassLoader.java:468) ~[?:1.8.0_242]
>     at java.net.URLClassLoader.access$100(URLClassLoader.java:74) ~[?:1.8.0_242]
>     at java.net.URLClassLoader$1.run(URLClassLoader.java:369) ~[?:1.8.0_242]
>     at java.net.URLClassLoader$1.run(URLClassLoader.java:363) ~[?:1.8.0_242]
>     at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_242]
>     at java.net.URLClassLoader.findClass(URLClassLoader.java:362) ~[?:1.8.0_242]
>     at java.lang.ClassLoader.loadClass(ClassLoader.java:419) ~[?:1.8.0_242]
>     at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:352) ~[?:1.8.0_242]
>     at java.lang.ClassLoader.loadClass(ClassLoader.java:352) ~[?:1.8.0_242]
>     at org.apache.hadoop.yarn.util.timeline.TimelineUtils.(TimelineUtils.java:50) ~[flink-shaded-hadoop-2-uber-2.8.3-7.0.jar:2.8.3-7.0]
>     at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.serviceInit(YarnClientImpl.java:179) ~[flink-shaded-hadoop-2-uber-2.8.3-7.0.jar:2.8.3-7.0]
>     at org.apache.hadoop.service.AbstractService.init(AbstractService.java:163) ~[flink-shaded-hadoop-2-uber-2.8.3-7.0.jar:2.8.3-7.0]
>     at org.apache.flink.yarn.YarnClusterClientFactory.getClusterDescriptor(YarnClusterClientFactory.java:71) ~[flink-dist_2.11-1.10.0-vvr-0.1-SNAPSHOT.jar:1.10.0-vvr-0.1-SNAPSHOT]

[GitHub] [flink] flinkbot edited a comment on pull request #12309: [FLINK-17889][hive] Hive can not work with filesystem connector

flinkbot edited a comment on pull request #12309:
URL: https://github.com/apache/flink/pull/12309#issuecomment-633345845

## CI report:

* c0f98fc48c889443462b8a1056e50ce8082d0314 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2095)
* f6aec0584d0e5ba97e7b5c4707d93d97465b4704 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2112)

Bot commands
The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink] flinkbot edited a comment on pull request #12278: [FLINK-17019][runtime] Fulfill slot requests in request order

flinkbot edited a comment on pull request #12278: URL: https://github.com/apache/flink/pull/12278#issuecomment-632060550 ## CI report: * 30dbfc08b3a4147d18dc00aebb2e31773f1fdbc1 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=1997) * a3a4256ccc6300fcac27d7625461c078a1510604 UNKNOWN

[GitHub] [flink] flinkbot edited a comment on pull request #12260: [FLINK-17189][table-planner] Table with proctime attribute cannot be read from Hive catalog

flinkbot edited a comment on pull request #12260: URL: https://github.com/apache/flink/pull/12260#issuecomment-631229314 ## CI report: * a3a71c5920058f4bbbdc5f11022b8b7736b291cf UNKNOWN * 081a62a66ef0a1b687b10e6b41ed8066b0c7992d Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2101) * cb99a301a3d1625909fbf94e8543e3a7b0726685 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2111)

[GitHub] [flink] flinkbot commented on pull request #12315: [FLINK-17917][yarn] Ignore the external resource with a value of 0 in…

flinkbot commented on pull request #12315: URL: https://github.com/apache/flink/pull/12315#issuecomment-633409833 ## CI report: * 9108fb04b2b8cc0eebc941f7e179fcffed296138 UNKNOWN

[GitHub] [flink] flinkbot edited a comment on pull request #12314: [FLINK-17756][table-api-java] Drop table/view shouldn't take effect o…

flinkbot edited a comment on pull request #12314: URL: https://github.com/apache/flink/pull/12314#issuecomment-633403476 ## CI report: * b7a68f0f07ab9bb2abc3182d72c0dc80ed59dda1 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2113)

[GitHub] [flink] flinkbot edited a comment on pull request #12028: [FLINK-17553][table]fix plan error when constant exists in group window key

flinkbot edited a comment on pull request #12028: URL: https://github.com/apache/flink/pull/12028#issuecomment-625656822 ## CI report: * e51960bfa9933fc5515fde87896dbee100ef41f8 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=796) * 5ffb28916f4d997ff9294f2df87389c79a7d1951 UNKNOWN

[GitHub] [flink] zhuzhurk commented on a change in pull request #12256: [FLINK-17018][runtime] DefaultExecutionSlotAllocator allocates slots in bulks

zhuzhurk commented on a change in pull request #12256:

URL: https://github.com/apache/flink/pull/12256#discussion_r429758351

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/jobmaster/slotpool/PhysicalSlotRequestBulk.java

##

@@ -0,0 +1,57 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.jobmaster.slotpool;

+

+import org.apache.flink.runtime.clusterframework.types.AllocationID;

+import org.apache.flink.runtime.jobmaster.SlotRequestId;

+

+import java.util.Collection;

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.Map;

+import java.util.function.Function;

+import java.util.stream.Collectors;

+

+/**

+ * Represents a bulk of physical slot requests.

+ */

+public class PhysicalSlotRequestBulk {

+

+ final Map pendingRequests;

Review comment:

ok.


[GitHub] [flink] zhuzhurk commented on a change in pull request #12256: [FLINK-17018][runtime] DefaultExecutionSlotAllocator allocates slots in bulks

zhuzhurk commented on a change in pull request #12256:

URL: https://github.com/apache/flink/pull/12256#discussion_r429758258

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/jobmaster/slotpool/PhysicalSlotRequest.java

##

@@ -0,0 +1,79 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.jobmaster.slotpool;

+

+import org.apache.flink.runtime.clusterframework.types.SlotProfile;

+import org.apache.flink.runtime.jobmaster.SlotRequestId;

+

+/**

+ * Represents a request for a physical slot.

+ */

+public class PhysicalSlotRequest {

+

+ private SlotRequestId slotRequestId;

+

+ private SlotProfile slotProfile;

+

+ private boolean slotWillBeOccupiedIndefinitely;

+

+ public PhysicalSlotRequest(

+ final SlotRequestId slotRequestId,

+ final SlotProfile slotProfile,

+ final boolean slotWillBeOccupiedIndefinitely) {

+

+ this.slotRequestId = slotRequestId;

+ this.slotProfile = slotProfile;

+ this.slotWillBeOccupiedIndefinitely = slotWillBeOccupiedIndefinitely;

+ }

+

+ public SlotRequestId getSlotRequestId() {

+ return slotRequestId;

+ }

+

+ public SlotProfile getSlotProfile() {

+ return slotProfile;

+ }

+

+ boolean willSlotBeOccupiedIndefinitely() {

+ return slotWillBeOccupiedIndefinitely;

+ }

+

+ /**

+* Result of a {@link PhysicalSlotRequest}.

+*/

+ public static class Result {

+

+ private SlotRequestId slotRequestId;

Review comment:

ok.


[GitHub] [flink] zhuzhurk commented on a change in pull request #12256: [FLINK-17018][runtime] DefaultExecutionSlotAllocator allocates slots in bulks

zhuzhurk commented on a change in pull request #12256:

URL: https://github.com/apache/flink/pull/12256#discussion_r429758061

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/jobmaster/slotpool/PhysicalSlotRequest.java

##

@@ -0,0 +1,79 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.jobmaster.slotpool;

+

+import org.apache.flink.runtime.clusterframework.types.SlotProfile;

+import org.apache.flink.runtime.jobmaster.SlotRequestId;

+

+/**

+ * Represents a request for a physical slot.

+ */

+public class PhysicalSlotRequest {

+

+ private SlotRequestId slotRequestId;

Review comment:

ok.


[GitHub] [flink] wuchong commented on pull request #12254: [FLINK-17802][kafka] Set offset commit only if group id is configured for new Kafka Table source

wuchong commented on pull request #12254: URL: https://github.com/apache/flink/pull/12254#issuecomment-633405996 @flinkbot run azure

[GitHub] [flink] wuchong commented on pull request #12252: [FLINK-17802][kafka] Set offset commit only if group id is configured for new Kafka Table source

wuchong commented on pull request #12252: URL: https://github.com/apache/flink/pull/12252#issuecomment-633405699 @flinkbot run azure

[GitHub] [flink] zhuzhurk commented on a change in pull request #12256: [FLINK-17018][runtime] DefaultExecutionSlotAllocator allocates slots in bulks

zhuzhurk commented on a change in pull request #12256:

URL: https://github.com/apache/flink/pull/12256#discussion_r429755150

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/jobmaster/slotpool/PhysicalSlotRequestBulkTracker.java

##

@@ -0,0 +1,86 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.jobmaster.slotpool;

+

+import org.apache.flink.annotation.VisibleForTesting;

+import org.apache.flink.util.clock.Clock;

+

+import java.util.IdentityHashMap;

+import java.util.Map;

+import java.util.Set;

+

+import static org.apache.flink.util.Preconditions.checkState;

+

+/**

+ * Tracks physical slot request bulks.

+ */

+class PhysicalSlotRequestBulkTracker {

+

+ private final Clock clock;

+

+ /** Timestamps indicate when bulks become unfulfillable. */

+ private final Map bulkUnfulfillableTimestamps;

+

+ PhysicalSlotRequestBulkTracker(final Clock clock) {

+ this.clock = clock;

+ this.bulkUnfulfillableTimestamps = new IdentityHashMap<>();

+ }

+

+ void track(final PhysicalSlotRequestBulk bulk) {

+ // a bulk is initially unfulfillable

+ bulkUnfulfillableTimestamps.put(bulk, clock.relativeTimeMillis());

+ }

+

+ void untrack(final PhysicalSlotRequestBulk bulk) {

+ bulkUnfulfillableTimestamps.remove(bulk);

+ }

+

+ boolean isTracked(final PhysicalSlotRequestBulk bulk) {

+ return bulkUnfulfillableTimestamps.containsKey(bulk);

+ }

+

+ void markBulkFulfillable(final PhysicalSlotRequestBulk bulk) {

+ checkState(isTracked(bulk));

+

+ bulkUnfulfillableTimestamps.put(bulk, Long.MAX_VALUE);

+ }

+

+ void markBulkUnfulfillable(final PhysicalSlotRequestBulk bulk, final long currentTimestamp) {

Review comment:

This is to reduce the number of `System.nanoTime()` invocations and to ensure that the timestamp is consistent within `SchedulerImpl#checkPhysicalSlotRequestBulkTimeout(...)`.
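The point of the review comment, that the caller reads the clock once and hands the same timestamp to each `markBulkUnfulfillable(...)` call, can be sketched in plain Java. Assumptions: bulks are modelled as plain `Object`s, the clock is an injected `LongSupplier` standing in for a real time source, and `BulkTimeoutSketch`, `checkTimeouts` and `clockReads` are hypothetical names. The timeout rule (elapsed time since a bulk became unfulfillable is at least the timeout) is illustrative, not taken from `SchedulerImpl`:

```java
import java.util.ArrayList;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.LongSupplier;

/**
 * Hypothetical tracker: one timeout pass reads the clock once and reuses that
 * timestamp for every bulk. Simplified; not Flink's implementation.
 */
final class BulkTimeoutSketch {

    private final LongSupplier clock;
    /** Identity-keyed, like the diff; Long.MAX_VALUE means "currently fulfillable". */
    private final Map<Object, Long> unfulfillableSince = new IdentityHashMap<>();
    private int clockReads;

    BulkTimeoutSketch(LongSupplier clock) {
        this.clock = clock;
    }

    void track(Object bulk) {
        // a bulk is initially unfulfillable
        unfulfillableSince.put(bulk, readClock());
    }

    void markBulkFulfillable(Object bulk) {
        unfulfillableSince.put(bulk, Long.MAX_VALUE);
    }

    /** Records the caller-supplied timestamp only if the bulk is not already unfulfillable. */
    void markBulkUnfulfillable(Object bulk, long currentTimestamp) {
        Long since = unfulfillableSince.get(bulk);
        if (since == null || since == Long.MAX_VALUE) {
            unfulfillableSince.put(bulk, currentTimestamp);
        }
    }

    /** One timeout pass over bulks that are currently unfulfillable; returns those that timed out. */
    List<Object> checkTimeouts(List<Object> unfulfillableBulks, long timeoutMillis) {
        long now = readClock(); // single clock read shared by the whole pass
        List<Object> timedOut = new ArrayList<>();
        for (Object bulk : unfulfillableBulks) {
            markBulkUnfulfillable(bulk, now);
            if (now - unfulfillableSince.get(bulk) >= timeoutMillis) {
                timedOut.add(bulk);
            }
        }
        return timedOut;
    }

    int clockReads() {
        return clockReads;
    }

    private long readClock() {
        clockReads++;
        return clock.getAsLong();
    }
}
```

Because `now` is read once per pass, every bulk checked in that pass is compared against the same instant, and the number of clock reads grows with the number of passes rather than with the number of bulks.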


[GitHub] [flink] rmetzger commented on pull request #12301: [FLINK-16572] [pubsub,e2e] Add check to see if adding a timeout to th…

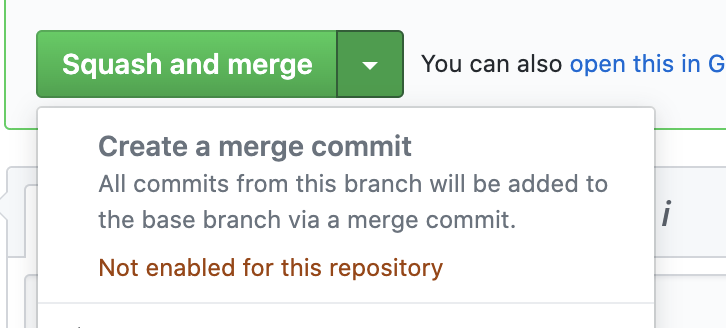

rmetzger commented on pull request #12301: URL: https://github.com/apache/flink/pull/12301#issuecomment-633404724 Yes, that's my fault. The GitHub "merge" button had an error and then produced this merge commit when I clicked "try again" :( Merge commits are actually disabled for this repository. I guess this is a bug in GitHub.

[GitHub] [flink] carp84 commented on pull request #12078: [FLINK-17610][state] Align the behavior of result of internal map state to return empty iterator

carp84 commented on pull request #12078: URL: https://github.com/apache/flink/pull/12078#issuecomment-633404190 Please also rebase on the latest code base to resolve conflicts. Thanks.

[GitHub] [flink] carp84 commented on a change in pull request #12078: [FLINK-17610][state] Align the behavior of result of internal map state to return empty iterator

carp84 commented on a change in pull request #12078:

URL: https://github.com/apache/flink/pull/12078#discussion_r429752787

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/state/ttl/TtlStateTestBase.java

##

@@ -208,7 +209,7 @@ public void testExactExpirationOnWrite() throws Exception {

timeProvider.time = 300;

assertEquals(EXPIRED_UNAVAIL, ctx().emptyValue, ctx().get());

- assertEquals("Original state should be cleared on access", ctx().emptyValue, ctx().getOriginal());

+ assertTrue(ctx().isOriginalEmptyValue());

Review comment:

```suggestion

assertTrue("Original state should be cleared on access",

ctx().isOriginalEmptyValue());

```

##

File path:

flink-state-backends/flink-statebackend-rocksdb/src/test/java/org/apache/flink/contrib/streaming/state/ttl/RocksDBTtlStateTestBase.java

##

@@ -161,11 +162,11 @@ private void testCompactFilter(boolean takeSnapshot, boolean rescaleAfterRestore

setTimeAndCompact(stateDesc, 170L);

sbetc.setCurrentKey("k1");

- assertEquals("Expired original state should be unavailable", ctx().emptyValue, ctx().getOriginal());

+ assertTrue(ctx().isOriginalEmptyValue());

Review comment:

```suggestion

assertTrue("Expired original state should be unavailable",

ctx().isOriginalEmptyValue());

```

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/state/ttl/TtlStateTestBase.java

##

@@ -222,7 +223,7 @@ public void testRelaxedExpirationOnWrite() throws Exception {

timeProvider.time = 120;

assertEquals(EXPIRED_AVAIL, ctx().getUpdateEmpty, ctx().get());

- assertEquals("Original state should be cleared on access", ctx().emptyValue, ctx().getOriginal());

+ assertTrue(ctx().isOriginalEmptyValue());

Review comment:

```suggestion

assertTrue("Original state should be cleared on access",

ctx().isOriginalEmptyValue());

```

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/state/ttl/TtlStateTestBase.java

##

@@ -247,7 +248,7 @@ public void testExactExpirationOnRead() throws Exception {

timeProvider.time = 250;

assertEquals(EXPIRED_UNAVAIL, ctx().emptyValue, ctx().get());

- assertEquals("Original state should be cleared on access", ctx().emptyValue, ctx().getOriginal());

+ assertTrue(ctx().isOriginalEmptyValue());

Review comment:

```suggestion

assertTrue("Original state should be cleared on access",

ctx().isOriginalEmptyValue());

```

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/state/ttl/TtlStateTestBase.java

##

@@ -509,7 +510,7 @@ public void testIncrementalCleanup() throws Exception {

private void checkExpiredKeys(int startKey, int endKey) throws Exception {

for (int i = startKey; i < endKey; i++) {

sbetc.setCurrentKey(Integer.toString(i));

- assertEquals("Original state should be cleared", ctx().emptyValue, ctx().getOriginal());

+ assertTrue(ctx().isOriginalEmptyValue());

Review comment:

```suggestion

assertTrue("Original state should be cleared",

ctx().isOriginalEmptyValue());

```

##

File path:

flink-state-backends/flink-statebackend-rocksdb/src/test/java/org/apache/flink/contrib/streaming/state/ttl/RocksDBTtlStateTestBase.java

##

@@ -161,11 +162,11 @@ private void testCompactFilter(boolean takeSnapshot,

boolean rescaleAfterRestore

setTimeAndCompact(stateDesc, 170L);

sbetc.setCurrentKey("k1");

- assertEquals("Expired original state should be unavailable",

ctx().emptyValue, ctx().getOriginal());

+ assertTrue(ctx().isOriginalEmptyValue());

assertEquals(EXPIRED_UNAVAIL, ctx().emptyValue, ctx().get());

sbetc.setCurrentKey("k2");

- assertEquals("Expired original state should be unavailable",

ctx().emptyValue, ctx().getOriginal());

+ assertTrue(ctx().isOriginalEmptyValue());

Review comment:

```suggestion

assertTrue("Expired original state should be unavailable",

ctx().isOriginalEmptyValue());

```
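The suggestions above keep the failure message when replacing `assertEquals(..., ctx().emptyValue, ctx().getOriginal())` with `assertTrue(ctx().isOriginalEmptyValue())`. Here is a minimal sketch of what would be lost otherwise. It assumes a hypothetical `TtlCtx` stand-in (not the real TTL test context) and a local `assertTrue(String, boolean)` that mirrors JUnit 4's behavior of throwing an `AssertionError` that carries the given message:

```java
import java.util.Objects;

// Hypothetical stand-in for the TTL test context; not Flink's real class.
class TtlCtx<T> {
    final T emptyValue;
    private final T original;

    TtlCtx(T emptyValue, T original) {
        this.emptyValue = emptyValue;
        this.original = original;
    }

    T getOriginal() {
        return original;
    }

    // Keeps the "raw state is empty" comparison in one place; a subclass for a
    // particular state type could override how emptiness is decided.
    boolean isOriginalEmptyValue() {
        return Objects.equals(emptyValue, getOriginal());
    }
}

final class Asserts {
    private Asserts() {}

    // Mirrors JUnit 4's assertTrue(String, boolean): the message only reaches
    // the AssertionError if the caller passes it in.
    static void assertTrue(String message, boolean condition) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }
}
```

Without the message argument, a failure would only report a bare `AssertionError`. That makes it harder to tell which expectation was violated (cleared on access, expired and unavailable, and so on).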

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] zhuzhurk commented on a change in pull request #12256: [FLINK-17018][runtime] DefaultExecutionSlotAllocator allocates slots in bulks

zhuzhurk commented on a change in pull request #12256: URL: https://github.com/apache/flink/pull/12256#discussion_r429754093 ## File path: flink-runtime/src/main/java/org/apache/flink/runtime/scheduler/DefaultExecutionSlotAllocator.java ## @@ -67,23 +76,211 @@ private final SlotProviderStrategy slotProviderStrategy; + private final SlotOwner slotOwner; + private final InputsLocationsRetriever inputsLocationsRetriever; + // temporary hack. can be removed along with the individual slot allocation code path + // once bulk slot allocation is fully functional + private final boolean enableBulkSlotAllocation; Review comment: Will give it a try.

[GitHub] [flink] flinkbot edited a comment on pull request #12313: [FLINK-17005][docs] Translate the CREATE TABLE ... LIKE syntax documentation to Chinese

flinkbot edited a comment on pull request #12313: URL: https://github.com/apache/flink/pull/12313#issuecomment-633389597 ## CI report: * c225fa8b2a774a8579501e136004a9d64db89244 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2108) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink] flinkbot commented on pull request #12314: [FLINK-17756][table-api-java] Drop table/view shouldn't take effect o…

flinkbot commented on pull request #12314: URL: https://github.com/apache/flink/pull/12314#issuecomment-633403476 ## CI report: * b7a68f0f07ab9bb2abc3182d72c0dc80ed59dda1 UNKNOWN

[GitHub] [flink] flinkbot commented on pull request #12315: [FLINK-17917][yarn] Ignore the external resource with a value of 0 in…

flinkbot commented on pull request #12315: URL: https://github.com/apache/flink/pull/12315#issuecomment-633403452 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 9108fb04b2b8cc0eebc941f7e179fcffed296138 (Mon May 25 06:33:47 UTC 2020) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! * **This pull request references an unassigned [Jira ticket](https://issues.apache.org/jira/browse/FLINK-17917).** According to the [code contribution guide](https://flink.apache.org/contributing/contribute-code.html), tickets need to be assigned before starting with the implementation work. Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. 
For consensus, approval by a Flink committer or PMC member is required. Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier

[GitHub] [flink] zhuzhurk commented on a change in pull request #12256: [FLINK-17018][runtime] DefaultExecutionSlotAllocator allocates slots in bulks

zhuzhurk commented on a change in pull request #12256:

URL: https://github.com/apache/flink/pull/12256#discussion_r429753738

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/jobmaster/slotpool/SingleLogicalSlot.java

##

@@ -168,6 +185,11 @@ public void release(Throwable cause) {

releaseFuture.complete(null);

}

+ @Override

+ public boolean willOccupySlotIndefinitely() {

Review comment:

It is intentional.

SingleLogicalSlot has 2 roles. One is a logical slot and the other is a physical

slot payload.

It will occupy a physical slot indefinitely iff it will be occupied

indefinitely by a task.
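The two roles described in this reply can be sketched as follows. All names here (`Payload`, `LogicalSlotSketch`, `PhysicalSlotSketch`) are hypothetical simplifications, not Flink's actual interfaces. The logical slot is itself the payload of a physical slot, and it reports indefinite occupation exactly when the task occupying it does:

```java
// Hypothetical payload contract: anything placed into a physical slot.
interface Payload {
    boolean willOccupySlotIndefinitely();
}

// Hypothetical logical slot that also acts as the payload of a physical slot.
final class LogicalSlotSketch implements Payload {
    // Whether the task assigned to this logical slot will hold it indefinitely
    // (presumably a pipelined/streaming task rather than a finite batch task).
    private final boolean slotWillBeOccupiedIndefinitely;

    LogicalSlotSketch(boolean slotWillBeOccupiedIndefinitely) {
        this.slotWillBeOccupiedIndefinitely = slotWillBeOccupiedIndefinitely;
    }

    // As a payload, it occupies the physical slot indefinitely if and only if
    // the task occupies this logical slot indefinitely.
    @Override
    public boolean willOccupySlotIndefinitely() {
        return slotWillBeOccupiedIndefinitely;
    }
}

// Hypothetical physical slot that only asks its payload.
final class PhysicalSlotSketch {
    private Payload payload;

    void assignPayload(Payload p) {
        this.payload = p;
    }

    boolean willBeOccupiedIndefinitely() {
        return payload != null && payload.willOccupySlotIndefinitely();
    }
}
```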


[GitHub] [flink] flinkbot edited a comment on pull request #12309: [FLINK-17889][hive] Hive can not work with filesystem connector

flinkbot edited a comment on pull request #12309: URL: https://github.com/apache/flink/pull/12309#issuecomment-633345845 ## CI report: * c0f98fc48c889443462b8a1056e50ce8082d0314 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2095) * f6aec0584d0e5ba97e7b5c4707d93d97465b4704 UNKNOWN

[jira] [Updated] (FLINK-17406) Add documentation about dynamic table options

[ https://issues.apache.org/jira/browse/FLINK-17406?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Danny Chen updated FLINK-17406: --- Summary: Add documentation about dynamic table options (was: add documentation about dynamic table options) > Add documentation about dynamic table options > - > > Key: FLINK-17406 > URL: https://issues.apache.org/jira/browse/FLINK-17406 > Project: Flink > Issue Type: Sub-task > Components: Documentation >Reporter: Kurt Young >Assignee: Danny Chen >Priority: Major > Fix For: 1.11.0 > >

[GitHub] [flink] wuchong commented on a change in pull request #12289: [FLINK-17874][Connectors/HBase]Handling the NPE for hbase-connector

wuchong commented on a change in pull request #12289:

URL: https://github.com/apache/flink/pull/12289#discussion_r429753647

##

File path:

flink-connectors/flink-hbase/src/main/java/org/apache/flink/addons/hbase/util/HBaseTypeUtils.java

##

@@ -81,13 +81,16 @@ public static Object deserializeToObject(byte[] value, int

typeIdx, Charset stri

* Serialize the Java Object to byte array with the given type.

*/

public static byte[] serializeFromObject(Object value, int typeIdx,

Charset stringCharset) {

+ if (value == null){

+ return EMPTY_BYTES;

Review comment:

Yes, I think we should handle it in this PR, because the empty bytes

(for types other than bytes and string) are introduced in this PR.
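Here is a minimal sketch of the null handling discussed here. It assumes a simplified codec with only three hypothetical type ids (`INT`, `STRING`, `BYTES`); the real `HBaseTypeUtils` supports more types with different ids. An empty array is a legitimate value for string and bytes columns, so this sketch maps empty bytes back to `null` only for the other types. That decoding policy is an assumption and may differ from what the PR implements:

```java
import java.nio.ByteBuffer;
import java.nio.charset.Charset;

// Simplified, hypothetical codec; type ids are illustrative only and do not
// match HBaseTypeUtils.
final class NullSafeCodec {
    static final byte[] EMPTY_BYTES = new byte[0];
    static final int INT = 0;
    static final int STRING = 1;
    static final int BYTES = 2;

    static byte[] serializeFromObject(Object value, int typeIdx, Charset charset) {
        if (value == null) {
            // A null field is written as an empty cell value instead of throwing an NPE.
            return EMPTY_BYTES;
        }
        switch (typeIdx) {
            case INT:
                return ByteBuffer.allocate(Integer.BYTES).putInt((Integer) value).array();
            case STRING:
                return ((String) value).getBytes(charset);
            case BYTES:
                return (byte[]) value;
            default:
                throw new IllegalArgumentException("Unsupported type: " + typeIdx);
        }
    }

    static Object deserializeToObject(byte[] value, int typeIdx, Charset charset) {
        switch (typeIdx) {
            case INT:
                // For non-string/bytes types, empty bytes can only mean null.
                return value.length == 0 ? null : ByteBuffer.wrap(value).getInt();
            case STRING:
                // Ambiguous: empty bytes decode to "", so null does not round-trip here.
                return new String(value, charset);
            case BYTES:
                return value;
            default:
                throw new IllegalArgumentException("Unsupported type: " + typeIdx);
        }
    }
}
```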


[GitHub] [flink] flinkbot edited a comment on pull request #12260: [FLINK-17189][table-planner] Table with proctime attribute cannot be read from Hive catalog

flinkbot edited a comment on pull request #12260: URL: https://github.com/apache/flink/pull/12260#issuecomment-631229314 ## CI report: * a3a71c5920058f4bbbdc5f11022b8b7736b291cf UNKNOWN * 081a62a66ef0a1b687b10e6b41ed8066b0c7992d Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2101) * cb99a301a3d1625909fbf94e8543e3a7b0726685 UNKNOWN

[GitHub] [flink] wuchong commented on a change in pull request #12275: [FLINK-16021][table-common] DescriptorProperties.putTableSchema does …

wuchong commented on a change in pull request #12275:

URL: https://github.com/apache/flink/pull/12275#discussion_r429746785

##

File path:

flink-table/flink-table-common/src/main/java/org/apache/flink/table/descriptors/DescriptorProperties.java

##

@@ -241,6 +244,19 @@ public void putTableSchema(String key, TableSchema schema)

{

Arrays.asList(WATERMARK_ROWTIME,

WATERMARK_STRATEGY_EXPR, WATERMARK_STRATEGY_DATA_TYPE),

watermarkValues);

}

+

+ if (schema.getPrimaryKey().isPresent()) {

+ final UniqueConstraint uniqueConstraint =

schema.getPrimaryKey().get();

+ final List<List<String>> uniqueConstraintValues = new ArrayList<>();

+ uniqueConstraintValues.add(Arrays.asList(

+ uniqueConstraint.getName(),

+ uniqueConstraint.getType().name(),

+ String.join(",", uniqueConstraint.getColumns())));

+ putIndexedFixedProperties(

+ key + '.' + CONSTRAINT_UNIQUE,

+ Arrays.asList(NAME, TYPE,

CONSTRAINT_UNIQUE_COLUMNS),

+ uniqueConstraintValues);

+ }

Review comment:

Because we only support primary keys now, I think we can have dedicated

primary key properties, so that we don't need to handle the index. For example:

```java

public static final String PRIMARY_KEY_NAME = "primary-key.name";

public static final String PRIMARY_KEY_COLUMNS = "primary-key.columns";

schema.getPrimaryKey().ifPresent(pk -> {

putString(key + "." + PRIMARY_KEY_NAME, pk.getName());

putString(key + "." + PRIMARY_KEY_COLUMNS, String.join(",",

pk.getColumns()));

});

```

This is also helpful for users who write YAML:

```
tables:
  - name: TableNumber1
    type: source-table
    schema:
      primary-key:
        name: constraint1
        columns: f1, f2
```
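Here is a minimal sketch of the dedicated, non-indexed primary key properties suggested above, using the same `primary-key.name` and `primary-key.columns` suffixes. `PrimaryKeySketch` and `SchemaPropertiesSketch` are hypothetical stand-ins for `UniqueConstraint` and `DescriptorProperties`, and they write into a plain map:

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical, simplified primary key; stands in for UniqueConstraint.
final class PrimaryKeySketch {
    final String name;
    final List<String> columns;

    PrimaryKeySketch(String name, List<String> columns) {
        this.name = name;
        this.columns = columns;
    }
}

// Hypothetical stand-in for DescriptorProperties, backed by a plain map.
final class SchemaPropertiesSketch {
    static final String PRIMARY_KEY_NAME = "primary-key.name";
    static final String PRIMARY_KEY_COLUMNS = "primary-key.columns";

    final Map<String, String> properties = new LinkedHashMap<>();

    // Fixed keys instead of indexed ones: a table has at most one primary key.
    void putPrimaryKey(String key, Optional<PrimaryKeySketch> primaryKey) {
        primaryKey.ifPresent(pk -> {
            properties.put(key + "." + PRIMARY_KEY_NAME, pk.name);
            properties.put(key + "." + PRIMARY_KEY_COLUMNS, String.join(",", pk.columns));
        });
    }
}
```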

##

File path:

flink-table/flink-table-common/src/main/java/org/apache/flink/table/descriptors/DescriptorProperties.java

##

@@ -610,7 +626,9 @@ public DataType getDataType(String key) {

public Optional<TableSchema> getOptionalTableSchema(String key) {

// filter for number of fields

final int fieldCount = properties.keySet().stream()

- .filter((k) -> k.startsWith(key) && k.endsWith('.' +

TABLE_SCHEMA_NAME))

+ .filter((k) -> k.startsWith(key)

+ // "key." is the prefix.

+ &&

SCHEMA_COLUMN_NAME_SUFFIX.matcher(k.substring(key.length() + 1)).matches())

Review comment:

We can just exclude the primary key; then we don't need the regex

matching.

```

.filter((k) -> k.startsWith(key) && !k.startsWith(key + "." + PRIMARY_KEY)

&& k.endsWith('.' + NAME))

```
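The suggested filter can be tried out on its own. This is a minimal sketch that assumes hypothetical constants `PRIMARY_KEY = "primary-key"` and `NAME = "name"`, and column keys of the form `schema.<index>.name`:

```java
import java.util.HashMap;
import java.util.Map;

final class FieldCountSketch {
    // Hypothetical constants mirroring the review suggestion.
    static final String PRIMARY_KEY = "primary-key";
    static final String NAME = "name";

    // Counts schema columns by their ".name" keys, skipping the primary key's
    // "name" entry instead of matching column keys with a regex.
    static long countFields(Map<String, String> properties, String key) {
        return properties.keySet().stream()
                .filter(k -> k.startsWith(key)
                        && !k.startsWith(key + "." + PRIMARY_KEY)
                        && k.endsWith('.' + NAME))
                .count();
    }
}
```

This appears to rely on the primary key being the only non-column entry under `key` whose sub-key ends in `.name`. A future constraint type with its own `name` sub-key would need a similar exclusion.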


[jira] [Updated] (FLINK-17917) ResourceInformationReflector#getExternalResources should ignore the external resource with a value of 0

[

https://issues.apache.org/jira/browse/FLINK-17917?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated FLINK-17917:

---

Labels: pull-request-available (was: )

> ResourceInformationReflector#getExternalResources should ignore the external

> resource with a value of 0

> ---

>

> Key: FLINK-17917

> URL: https://issues.apache.org/jira/browse/FLINK-17917

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Coordination

>Affects Versions: 1.11.0

>Reporter: Yangze Guo

>Priority: Blocker

> Labels: pull-request-available

> Fix For: 1.11.0

>

>

> *Background*: In FLINK-17390, we leverage

> {{WorkerSpecContainerResourceAdapter.InternalContainerResource}} to handle

> container matching logic. In FLINK-17407, we introduce external resources in

> {{WorkerSpecContainerResourceAdapter.InternalContainerResource}}.

> On containers returned by Yarn, we try to get the corresponding worker specs

> by:

> - Convert the container to {{InternalContainerResource}}

> - Get the WorkerResourceSpec from {{containerResourceToWorkerSpecs}} map.

> *Problem*: Container mismatch could happen in the below scenario:

> - Flink does not allocate any external resources, so the {{externalResources}}

> of {{InternalContainerResource}} is an empty map.

> - The returned container contains all the resources (with a value of 0)

> defined in Yarn's {{resource-types.xml}}. The {{externalResources}} of

> {{InternalContainerResource}} has one or more entries with a value of 0.

> - These two {{InternalContainerResource}} do not match.

> To solve this problem, we could ignore all the external resources with a

> value of 0 in "ResourceInformationReflector#getExternalResources".

> cc [~trohrmann] Could you assign this to me?

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [flink] KarmaGYZ opened a new pull request #12315: [FLINK-17917][yarn] Ignore the external resource with a value of 0 in…

KarmaGYZ opened a new pull request #12315: URL: https://github.com/apache/flink/pull/12315 … ResourceInformationReflector#getExternalResources ## What is the purpose of the change ResourceInformationReflector#getExternalResources should ignore the external resource with a value of 0. ## Brief change log ResourceInformationReflector#getExternalResources should ignore the external resource with a value of 0. ## Verifying this change This change added tests and can be verified as follows: - `ResourceInformationReflectorTest#testGetResourceInformationIgnoreResourceWithZeroValue` ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): no - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no - The serializers: no - The runtime per-record code paths (performance sensitive): no - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: no - The S3 file system connector: no ## Documentation - Does this pull request introduce a new feature? no - If yes, how is the feature documented? no
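Here is a minimal sketch of the proposed filtering. It assumes external resources are represented as a `Map<String, Long>` from resource name to amount. The real `ResourceInformationReflector` presumably reads Yarn's resource information via reflection, and this sketch does not reproduce that. `ExternalResourceSketch` is hypothetical. The test also shows why the unfiltered maps fail to match:

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.stream.Collectors;

final class ExternalResourceSketch {
    // Keeps only external resources with a non-zero amount. Yarn may report every
    // type from resource-types.xml, with 0 for types that were not requested.
    static Map<String, Long> getExternalResources(Map<String, Long> reported) {
        return reported.entrySet().stream()
                .filter(e -> e.getValue() != 0L)
                .collect(Collectors.toMap(Map.Entry::getKey, Map.Entry::getValue));
    }
}
```

Filtering at the point where resources are read keeps both sides of the match (requested versus returned container) in the same normalized form. Without it, the map-key lookup in `containerResourceToWorkerSpecs` would need special handling for zero-valued entries.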

[jira] [Updated] (FLINK-17917) ResourceInformationReflector#getExternalResources should ignore the external resource with a value of 0

[

https://issues.apache.org/jira/browse/FLINK-17917?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Yangze Guo updated FLINK-17917:

---

Description:

*Background*: In FLINK-17390, we leverage

{{WorkerSpecContainerResourceAdapter.InternalContainerResource}} to handle

container matching logic. In FLINK-17407, we introduce external resources in

{{WorkerSpecContainerResourceAdapter.InternalContainerResource}}.

On containers returned by Yarn, we try to get the corresponding worker specs

by:

- Convert the container to {{InternalContainerResource}}

- Get the WorkerResourceSpec from {{containerResourceToWorkerSpecs}} map.

*Problem*: Container mismatch could happen in the below scenario:

- Flink does not allocate any external resources, so the {{externalResources}} of

{{InternalContainerResource}} is an empty map.

- The returned container contains all the resources (with a value of 0)

defined in Yarn's {{resource-types.xml}}. The {{externalResources}} of

{{InternalContainerResource}} has one or more entries with a value of 0.

- These two {{InternalContainerResource}} do not match.

To solve this problem, we could ignore all the external resources with a value

of 0 in "ResourceInformationReflector#getExternalResources".

cc [~trohrmann] Could you assign this to me?

was:

*Background*: In FLINK-17390, we leverage

{{WorkerSpecContainerResourceAdapter.InternalContainerResource}} to handle

container matching logic. In FLINK-17407, we introduce external resources in

{{WorkerSpecContainerResourceAdapter.InternalContainerResource}}.

On containers returned by Yarn, we try to get the corresponding worker specs by:

- Convert the container to {{InternalContainerResource}}

- Get the WorkerResourceSpec from {{containerResourceToWorkerSpecs}} map.

Container mismatch could happen in the below scenario:

- Flink does not allocate any external resources, so the {{externalResources}} of

{{InternalContainerResource}} is an empty map.

- The returned container contains all the resources (with a value of 0) defined

in Yarn's {{resource-types.xml}}. The {{externalResources}} of

{{InternalContainerResource}} has one or more entries with a value of 0.

- These two {{InternalContainerResource}} do not match.

To solve this problem, we could ignore all the external resources with a value

of 0 in "ResourceInformationReflector#getExternalResources".

cc [~trohrmann] Could you assign this to me?

> ResourceInformationReflector#getExternalResources should ignore the external

> resource with a value of 0

> ---

>

> Key: FLINK-17917

> URL: https://issues.apache.org/jira/browse/FLINK-17917

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Coordination

>Affects Versions: 1.11.0

>Reporter: Yangze Guo

>Priority: Blocker

> Fix For: 1.11.0

>

>



[jira] [Closed] (FLINK-17542) Unify slot request timeout handling for streaming and batch tasks

[ https://issues.apache.org/jira/browse/FLINK-17542?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Zhu Zhu closed FLINK-17542. --- Resolution: Abandoned Superseded by FLINK-17018. > Unify slot request timeout handling for streaming and batch tasks > - > > Key: FLINK-17542 > URL: https://issues.apache.org/jira/browse/FLINK-17542 > Project: Flink > Issue Type: Sub-task > Components: Runtime / Coordination >Affects Versions: 1.11.0 >Reporter: Zhu Zhu >Assignee: Zhu Zhu >Priority: Major > Labels: pull-request-available > Fix For: 1.12.0 > > > There are 2 different slot request timeout handling mechanisms for batch and > streaming tasks. > For streaming tasks, the slot request will fail if it is not fulfilled within > slotRequestTimeout. > For batch tasks, the slot request will be checked periodically to see whether > it is fulfillable, and only fails if it has been unfulfillable for a certain > period (slotRequestTimeout). > With slots marked with whether they will be occupied indefinitely, we can > unify this handling. See > [FLIP-119|https://cwiki.apache.org/confluence/display/FLINK/FLIP-119+Pipelined+Region+Scheduling#FLIP-119PipelinedRegionScheduling-ExtendedSlotProviderInterface] > for more details.
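Here is a minimal sketch of the batch-style rule described above, which the ticket proposes to apply to all tasks: a pending request fails only after it has been continuously unfulfillable for `slotRequestTimeout`. `UnfulfillableRequestChecker` and its `check` method are hypothetical. Whether a request is currently fulfillable (for example, whether enough slots exist that are not occupied indefinitely) is passed in as a flag rather than computed:

```java
// Hypothetical sketch: a periodically checked slot request fails only after it
// has been unfulfillable for slotRequestTimeoutMillis without interruption.
final class UnfulfillableRequestChecker {
    private final long slotRequestTimeoutMillis;
    // Start of the current unfulfillable period, or -1 while fulfillable.
    private long unfulfillableSince = -1L;

    UnfulfillableRequestChecker(long slotRequestTimeoutMillis) {
        this.slotRequestTimeoutMillis = slotRequestTimeoutMillis;
    }

    // Called on each periodic check; returns true if the request should be failed.
    boolean check(long nowMillis, boolean fulfillable) {
        if (fulfillable) {
            // Becoming fulfillable again resets the timer.
            unfulfillableSince = -1L;
            return false;
        }
        if (unfulfillableSince < 0) {
            unfulfillableSince = nowMillis;
        }
        return nowMillis - unfulfillableSince >= slotRequestTimeoutMillis;
    }
}
```

The timer restarts whenever the request becomes fulfillable again. That restart is what distinguishes this rule from the plain "not fulfilled within slotRequestTimeout" deadline used for streaming tasks.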

[jira] [Closed] (FLINK-17017) Implement Bulk Slot Allocation

[

https://issues.apache.org/jira/browse/FLINK-17017?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Zhu Zhu closed FLINK-17017.

---

Resolution: Abandoned

Superseded by FLINK-17018.

> Implement Bulk Slot Allocation

> --

>

> Key: FLINK-17017

> URL: https://issues.apache.org/jira/browse/FLINK-17017

> Project: Flink

> Issue Type: Sub-task

> Components: Runtime / Coordination

>Affects Versions: 1.11.0

>Reporter: Zhu Zhu

>Assignee: Zhu Zhu

>Priority: Major

> Labels: pull-request-available

> Fix For: 1.12.0

>

>

> SlotProvider should support bulk slot allocation so that we can check to see

> if the resource requirements of a slot request bulk can be fulfilled at the

> same time.

> The SlotProvider interface should be extended with a bulk slot allocation

> method which accepts a bulk of slot requests as one of the parameters.

> {code:java}

> CompletableFuture> allocateSlots(

> Collection slotRequests,

> Time allocationTimeout);

>

> class LogicalSlotRequest {

> SlotRequestId slotRequestId;

> ScheduledUnit scheduledUnit;

> SlotProfile slotProfile;

> boolean slotWillBeOccupiedIndefinitely;

> }

> {code}

> For more details, see [FLIP-119#Bulk Slot

> Allocation|https://cwiki.apache.org/confluence/display/FLINK/FLIP-119+Pipelined+Region+Scheduling#FLIP-119PipelinedRegionScheduling-BulkSlotAllocation]
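To illustrate what checking whether a bulk "can be fulfilled at the same time" means, here is a minimal sketch under strong simplifying assumptions. Each request and each free slot is reduced to a single resource number, every request needs its own slot, and a slot fits a request if its capacity is at least the requested amount. `BulkFulfillabilitySketch` is hypothetical and does not reflect the actual `SlotProvider` implementation:

```java
import java.util.Arrays;

// Hypothetical sketch: can every request in a bulk get its own free slot at
// the same time? Resources are reduced to one number per request/slot.
final class BulkFulfillabilitySketch {
    static boolean canFulfillTogether(long[] requested, long[] freeSlotCapacities) {
        if (requested.length > freeSlotCapacities.length) {
            return false;
        }
        long[] req = requested.clone();
        long[] cap = freeSlotCapacities.clone();
        Arrays.sort(req);
        Arrays.sort(cap);
        // Pair the i-th largest request with the i-th largest slot. With one
        // request per slot and a single dimension, an exchange argument shows
        // this pairing succeeds whenever any assignment does.
        for (int i = 1; i <= req.length; i++) {
            if (req[req.length - i] > cap[cap.length - i]) {
                return false;
            }
        }
        return true;
    }
}
```

With multi-dimensional slot profiles (CPU, memory, and so on), this sorting argument no longer holds, and a general bipartite matching between requests and slots would be needed.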


[GitHub] [flink] KarmaGYZ commented on pull request #12301: [FLINK-16572] [pubsub,e2e] Add check to see if adding a timeout to th…

KarmaGYZ commented on pull request #12301: URL: https://github.com/apache/flink/pull/12301#issuecomment-633401619 It seems something went wrong with the commit message in the master branch: `Merge pull request #12301 from Xeli/FLINK-16572-logs`

[jira] [Updated] (FLINK-17916) Provide API to separate KafkaShuffle's Producer and Consumer to different jobs

[ https://issues.apache.org/jira/browse/FLINK-17916?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Yuan Mei updated FLINK-17916: - Summary: Provide API to separate KafkaShuffle's Producer and Consumer to different jobs (was: Separate KafkaShuffle's Producer and Consumer to different jobs) > Provide API to separate KafkaShuffle's Producer and Consumer to different jobs > -- > > Key: FLINK-17916 > URL: https://issues.apache.org/jira/browse/FLINK-17916 > Project: Flink > Issue Type: Improvement > Components: API / DataStream, Connectors / Kafka >Affects Versions: 1.11.0 >Reporter: Yuan Mei >Priority: Major > Fix For: 1.11.0 > > > Follow-up of FLINK-15670 > *Separate sink (producer) and source (consumer) to different jobs* > * In the same job, a sink and a source are recovered independently according > to regional failover. However, they share the same checkpoint coordinator and > correspondingly, share the same global checkpoint snapshot. > * This means that if the consumer fails, the producer cannot commit written data > because of the two-phase commit set-up (the producer needs a checkpoint-complete > signal to complete the second stage). > * The same applies to the producer. >

[jira] [Commented] (FLINK-16572) CheckPubSubEmulatorTest is flaky on Azure

[ https://issues.apache.org/jira/browse/FLINK-16572?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17115740#comment-17115740 ] Robert Metzger commented on FLINK-16572: Thanks a lot. Merged as part of https://github.com/apache/flink/commit/50253c6b89e3c92cac23edda6556770a63643c90 > CheckPubSubEmulatorTest is flaky on Azure > - > > Key: FLINK-16572 > URL: https://issues.apache.org/jira/browse/FLINK-16572 > Project: Flink > Issue Type: Bug > Components: Connectors / Google Cloud PubSub, Tests >Affects Versions: 1.11.0 >Reporter: Aljoscha Krettek >Assignee: Richard Deurwaarder >Priority: Critical > Labels: pull-request-available, test-stability > Fix For: 1.11.0 > > Time Spent: 10m > Remaining Estimate: 0h > > Log: > https://dev.azure.com/aljoschakrettek/Flink/_build/results?buildId=56&view=logs&j=1f3ed471-1849-5d3c-a34c-19792af4ad16&t=ce095137-3e3b-5f73-4b79-c42d3d5f8283&l=7842

[jira] [Closed] (FLINK-17894) RowGenerator in datagen connector should be serializable

[ https://issues.apache.org/jira/browse/FLINK-17894?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jingsong Lee closed FLINK-17894. Resolution: Fixed master: edfb7c4d7a004fe60c8cd34ebca88d2f7cc5f212 release-1.11: 3f73694e9c005e62d661ed785c01bdd7060c1485 > RowGenerator in datagen connector should be serializable > > > Key: FLINK-17894 > URL: https://issues.apache.org/jira/browse/FLINK-17894 > Project: Flink > Issue Type: Bug > Components: Table SQL / API >Reporter: Jingsong Lee >Assignee: Jingsong Lee >Priority: Blocker > Labels: pull-request-available > Fix For: 1.11.0 > >

[jira] [Updated] (FLINK-17916) Separate KafkaShuffle's Producer and Consumer to different jobs

[ https://issues.apache.org/jira/browse/FLINK-17916?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Yuan Mei updated FLINK-17916: - Description: Follow-up of FLINK-15670 *Separate sink (producer) and source (consumer) to different jobs* * In the same job, a sink and a source are recovered independently according to regional failover. However, they share the same checkpoint coordinator and correspondingly, share the same global checkpoint snapshot. * This means that if the consumer fails, the producer cannot commit written data because of the two-phase commit set-up (the producer needs a checkpoint-complete signal to complete the second stage). * The same applies to the producer. was: Follow-up of FLINK-15670 Separate sink (producer) and source (consumer) to different jobs. * In the same job, a sink and a source are recovered independently according to regional failover. However, they share the same checkpoint coordinator and correspondingly, share the same global checkpoint snapshot * This means that if the consumer fails, the producer cannot commit written data because of the two-phase commit set-up (the producer needs a checkpoint-complete signal to complete the second stage) > Separate KafkaShuffle's Producer and Consumer to different jobs > --- > > Key: FLINK-17916 > URL: https://issues.apache.org/jira/browse/FLINK-17916 > Project: Flink > Issue Type: Improvement > Components: API / DataStream, Connectors / Kafka >Affects Versions: 1.11.0 >Reporter: Yuan Mei >Priority: Major > Fix For: 1.11.0 > > > Follow-up of FLINK-15670 > *Separate sink (producer) and source (consumer) to different jobs* > * In the same job, a sink and a source are recovered independently according > to regional failover. However, they share the same checkpoint coordinator and > correspondingly, share the same global checkpoint snapshot. 
> * This means that if the consumer fails, the producer cannot commit written data > because of the two-phase commit set-up (the producer needs a checkpoint-complete > signal to complete the second stage). > * The same applies to the producer. >
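The checkpoint dependency described above follows the general two-phase commit pattern. Here is a minimal sketch, assuming a hypothetical `TwoPhaseCommitSinkSketch` (not the KafkaShuffle producer or Flink's `TwoPhaseCommitSinkFunction`). Records are staged when a checkpoint is taken and committed only when the checkpoint-complete notification arrives. Anything that stops the shared checkpoint from completing, such as a consumer failure in the same job, therefore also blocks the commit:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

// Hypothetical, minimal two-phase-commit sink: records are staged per
// checkpoint and only become visible after notifyCheckpointComplete.
final class TwoPhaseCommitSinkSketch {
    private final List<String> pending = new ArrayList<>();
    private final TreeMap<Long, List<String>> preCommitted = new TreeMap<>();
    final List<String> committed = new ArrayList<>();

    void write(String record) {
        pending.add(record);
    }

    // Phase 1: when a checkpoint is taken, stage the current transaction.
    void snapshotState(long checkpointId) {
        preCommitted.put(checkpointId, new ArrayList<>(pending));
        pending.clear();
    }

    // Phase 2: runs only once the checkpoint completes globally. If another task
    // sharing the checkpoint fails first, this never fires and data stays staged.
    void notifyCheckpointComplete(long checkpointId) {
        NavigableMap<Long, List<String>> done = preCommitted.headMap(checkpointId, true);
        done.values().forEach(committed::addAll);
        done.clear();
    }
}
```

Running the producer and consumer in separate jobs gives each its own checkpoint coordinator, so one side's failure no longer withholds the other side's checkpoint-complete signal.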

[GitHub] [flink] rmetzger merged pull request #12301: [FLINK-16572] [pubsub,e2e] Add check to see if adding a timeout to th…

rmetzger merged pull request #12301: URL: https://github.com/apache/flink/pull/12301

[GitHub] [flink] JingsongLi merged pull request #12310: [FLINK-17894][table] RowGenerator in datagen connector should be serializable

JingsongLi merged pull request #12310: URL: https://github.com/apache/flink/pull/12310

[GitHub] [flink] rmetzger commented on pull request #12301: [FLINK-16572] [pubsub,e2e] Add check to see if adding a timeout to th…

rmetzger commented on pull request #12301: URL: https://github.com/apache/flink/pull/12301#issuecomment-633399279 Thanks a lot! Merging.

[jira] [Commented] (FLINK-17911) K8s e2e: error: timed out waiting for the condition on deployments/flink-native-k8s-session-1

[

https://issues.apache.org/jira/browse/FLINK-17911?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17115737#comment-17115737

]

Yang Wang commented on FLINK-17911:

---

Totally agree with you. Let's keep this ticket open.

> K8s e2e: error: timed out waiting for the condition on deployments/flink-native-k8s-session-1
> -
>
> Key: FLINK-17911
> URL: https://issues.apache.org/jira/browse/FLINK-17911
> Project: Flink
> Issue Type: Bug
> Components: Deployment / Kubernetes, Tests
> Affects Versions: 1.11.0
> Reporter: Robert Metzger
> Priority: Major
> Labels: test-stability
>
> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=2062&view=logs&j=91bf6583-3fb2-592f-e4d4-d79d79c3230a&t=94459a52-42b6-5bfc-5d74-690b5d3c6de8
> {code}
> error: timed out waiting for the condition on deployments/flink-native-k8s-session-1
> {code}


[GitHub] [flink] JingsongLi commented on pull request #12310: [FLINK-17894][table] RowGenerator in datagen connector should be serializable

JingsongLi commented on pull request #12310: URL: https://github.com/apache/flink/pull/12310#issuecomment-633399177 Thanks @zjuwangg for the review, merging...

[jira] [Updated] (FLINK-17916) Separate KafkaShuffle read/write to different environments

[ https://issues.apache.org/jira/browse/FLINK-17916?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Yuan Mei updated FLINK-17916:
-
Description:
Follow up of FLINK-15670

Separate the sink (producer) and source (consumer) into different jobs.
* In the same job, a sink and a source are recovered independently according to regional failover. However, they share the same checkpoint coordinator and, correspondingly, the same global checkpoint snapshot.
* That means if the consumer fails, the producer cannot commit written data because of the two-phase commit set-up (the producer needs a checkpoint-complete signal to complete the second stage).

> Separate KafkaShuffle read/write to different environments
> --
>
> Key: FLINK-17916
> URL: https://issues.apache.org/jira/browse/FLINK-17916
> Project: Flink
> Issue Type: Improvement
> Components: API / DataStream, Connectors / Kafka
> Affects Versions: 1.11.0
> Reporter: Yuan Mei
> Priority: Major
> Fix For: 1.11.0
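The following minimal Java sketch makes this coupling concrete. It models a two-phase-commit producer that shares one checkpoint coordinator with a consumer, under simplifying assumptions. It is not Flink's implementation, and the names `TwoPhaseProducer`, `runCheckpoint` and `consumerAcks` are hypothetical. The sketch borrows one behaviour from the description: the producer commits pre-committed data only after a checkpoint-complete signal, and it never receives that signal when the consumer in the same job fails to acknowledge the checkpoint. It also assumes that a later completed checkpoint commits all earlier pending transactions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

// Hypothetical, simplified model; these classes are NOT Flink's API.
public class SharedCheckpointDemo {

    /** Producer that pre-commits on snapshot and commits only on a checkpoint-complete signal. */
    static final class TwoPhaseProducer {
        private List<String> currentTxn = new ArrayList<>();
        // Pre-committed transactions, keyed by the checkpoint that snapshotted them.
        private final TreeMap<Long, List<String>> pendingTxns = new TreeMap<>();
        final List<String> committed = new ArrayList<>();

        void write(String record) {
            currentTxn.add(record);
        }

        // Phase 1: pre-commit the open transaction and start a new one.
        void snapshot(long checkpointId) {
            pendingTxns.put(checkpointId, currentTxn);
            currentTxn = new ArrayList<>();
        }

        // Phase 2 (assumed behaviour): commit every pending transaction up to this checkpoint.
        void notifyCheckpointComplete(long checkpointId) {
            NavigableMap<Long, List<String>> ready = pendingTxns.headMap(checkpointId, true);
            for (List<String> txn : ready.values()) {
                committed.addAll(txn);
            }
            ready.clear(); // the view is backed by pendingTxns, so this removes them there too
        }

        int pendingCount() {
            return pendingTxns.size();
        }
    }

    /** One global checkpoint shared by producer and consumer: it completes only if the consumer acks. */
    static boolean runCheckpoint(long checkpointId, TwoPhaseProducer producer, boolean consumerAcks) {
        producer.snapshot(checkpointId);
        if (!consumerAcks) {
            return false; // aborted: the producer never gets the completion signal
        }
        producer.notifyCheckpointComplete(checkpointId);
        return true;
    }

    public static void main(String[] args) {
        TwoPhaseProducer producer = new TwoPhaseProducer();
        producer.write("a");
        boolean first = runCheckpoint(1L, producer, false); // consumer failed
        System.out.println("checkpoint 1 completed=" + first + ", committed=" + producer.committed);
        producer.write("b");
        boolean second = runCheckpoint(2L, producer, true);
        System.out.println("checkpoint 2 completed=" + second + ", committed=" + producer.committed);
    }
}
```

In this model, the record written before the aborted checkpoint stays pending until a later checkpoint completes. A failing consumer in the same job therefore delays the producer's commits, and running the two sides as separate jobs gives each side its own checkpoint coordinator.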

[jira] [Updated] (FLINK-17916) Separate KafkaShuffle's Producer and Consumer to different jobs

[ https://issues.apache.org/jira/browse/FLINK-17916?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Yuan Mei updated FLINK-17916:
-
Summary: Separate KafkaShuffle's Producer and Consumer to different jobs (was: Separate KafkaShuffle read/write to different environments)

> Separate KafkaShuffle's Producer and Consumer to different jobs
> ---
>
> Key: FLINK-17916
> URL: https://issues.apache.org/jira/browse/FLINK-17916
> Project: Flink
> Issue Type: Improvement
> Components: API / DataStream, Connectors / Kafka
> Affects Versions: 1.11.0
> Reporter: Yuan Mei
> Priority: Major
> Fix For: 1.11.0

[jira] [Commented] (FLINK-17911) K8s e2e: error: timed out waiting for the condition on deployments/flink-native-k8s-session-1

[ https://issues.apache.org/jira/browse/FLINK-17911?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17115736#comment-17115736 ]

Robert Metzger commented on FLINK-17911:

Thanks for looking into the issue!

I would propose keeping the ticket open for some time to see how frequently this issue happens. Most likely it is very rare, and we should not invest time into it now.


[GitHub] [flink] zjuwangg commented on pull request #12310: [FLINK-17894][table] RowGenerator in datagen connector should be serializable

zjuwangg commented on pull request #12310: URL: https://github.com/apache/flink/pull/12310#issuecomment-633397428 LGTM. Thanks for your fix.

[jira] [Commented] (FLINK-17911) K8s e2e: error: timed out waiting for the condition on deployments/flink-native-k8s-session-1

[ https://issues.apache.org/jira/browse/FLINK-17911?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17115735#comment-17115735 ]

Yang Wang commented on FLINK-17911:

---

[~rmetzger] Thanks for creating this issue. The root cause is that we failed to build the Docker image.

{code}
+ wget -nv -O /usr/local/bin/gosu.asc https://github.com/tianon/gosu/releases/download/1.11/gosu-amd64.asc
https://github.com/tianon/gosu/releases/download/1.11/gosu-amd64.asc:
2020-05-22 21:00:29 ERROR 500: Internal Server Error.
{code}


[jira] [Updated] (FLINK-17896) HiveCatalog can work with new table factory because of is_generic

[ https://issues.apache.org/jira/browse/FLINK-17896?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jingsong Lee updated FLINK-17896:

-

Description:

{code:java}
[ERROR] Could not execute SQL statement. Reason:
org.apache.flink.table.api.ValidationException: Unsupported options found for connector 'print'.

Unsupported options:

is_generic

Supported options:

connector
print-identifier
property-version
standard-error
{code}

This is because HiveCatalog puts the is_generic property into generic tables, but the new factory does not remove it.
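The failure mode can be sketched in Java as follows. This is a hypothetical model, not Flink's `FactoryUtil` or `HiveCatalog` code. `validate` stands in for the new factory's strict option check; to keep the example self-contained, it throws `IllegalArgumentException` rather than Flink's `ValidationException`. The supported keys are copied from the error message above. `stripCatalogKeys` is one possible fix, assumed for illustration: it removes the catalog-internal `is_generic` key before the options reach the factory.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical sketch; not Flink's FactoryUtil or HiveCatalog code.
public class IsGenericOptionsDemo {

    // Marker key that HiveCatalog stores in the properties of generic tables.
    static final String IS_GENERIC = "is_generic";

    // Options accepted by the 'print' connector, as listed in the error message.
    static final Set<String> PRINT_OPTIONS = Collections.unmodifiableSet(new TreeSet<>(
            Arrays.asList("connector", "print-identifier", "property-version", "standard-error")));

    /** Strict check in the spirit of the new factories: any unknown key is an error. */
    static void validate(String connector, Map<String, String> options, Set<String> supported) {
        Set<String> unsupported = new TreeSet<>(options.keySet());
        unsupported.removeAll(supported);
        if (!unsupported.isEmpty()) {
            throw new IllegalArgumentException("Unsupported options found for connector '"
                    + connector + "'. Unsupported options: " + unsupported);
        }
    }

    /** Hypothetical fix: copy the options and drop the catalog-internal key. */
    static Map<String, String> stripCatalogKeys(Map<String, String> options) {
        Map<String, String> copy = new HashMap<>(options);
        copy.remove(IS_GENERIC);
        return copy;
    }

    public static void main(String[] args) {
        Map<String, String> stored = new HashMap<>();
        stored.put("connector", "print");
        stored.put(IS_GENERIC, "true"); // added by the catalog, unknown to the factory

        try {
            validate("print", stored, PRINT_OPTIONS);
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }

        validate("print", stripCatalogKeys(stored), PRINT_OPTIONS);
        System.out.println("accepted after stripping " + IS_GENERIC);
    }
}
```

The sketch copies the map instead of mutating it, so the catalog's stored properties still carry `is_generic` for its own bookkeeping.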

> HiveCatalog can work with new table factory because of is_generic
> -
>
> Key: FLINK-17896
> URL: https://issues.apache.org/jira/browse/FLINK-17896
> Project: Flink
> Issue Type: Bug
> Components: Connectors / Hive, Table SQL / API
> Reporter: Jingsong Lee
> Priority: Blocker
> Fix For: 1.11.0
>


[jira] [Updated] (FLINK-17916) Separate KafkaShuffle read/write to different environments

[ https://issues.apache.org/jira/browse/FLINK-17916?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Yuan Mei updated FLINK-17916:
-
Fix Version/s: 1.11.0 (was: 1.12.0)

> Separate KafkaShuffle read/write to different environments
> --
>
> Key: FLINK-17916
> URL: https://issues.apache.org/jira/browse/FLINK-17916
> Project: Flink
> Issue Type: Improvement
> Components: API / DataStream, Connectors / Kafka
> Affects Versions: 1.11.0
> Reporter: Yuan Mei
> Priority: Major
> Fix For: 1.11.0

[jira] [Created] (FLINK-17917) ResourceInformationReflector#getExternalResources should ignore the external resource with a value of 0

Yangze Guo created FLINK-17917:

--

Summary: ResourceInformationReflector#getExternalResources should ignore the external resource with a value of 0

Key: FLINK-17917

URL: https://issues.apache.org/jira/browse/FLINK-17917

Project: Flink

Issue Type: Bug

Components: Runtime / Coordination

Affects Versions: 1.11.0

Reporter: Yangze Guo

Fix For: 1.11.0

*Background*: In FLINK-17390, we leveraged {{WorkerSpecContainerResourceAdapter.InternalContainerResource}} to handle the container matching logic. In FLINK-17407, we introduced external resources into {{WorkerSpecContainerResourceAdapter.InternalContainerResource}}.

For containers returned by YARN, we try to get the corresponding worker specs by:

- Converting the container to {{InternalContainerResource}}
- Getting the WorkerResourceSpec from the {{containerResourceToWorkerSpecs}} map.
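This lookup depends on the equality of the map key, including its external-resource map. The Java sketch below illustrates why that matters. It uses a hypothetical `ContainerResource` value class, which is not Flink's `InternalContainerResource`, and a hypothetical helper `ignoreZeroValued`. The example shows that a container reporting an external resource with amount 0 does not match a key built with an empty map. It then shows that dropping zero-valued entries, as the summary of this issue proposes, restores the match. The resource name `yarn.io/gpu` and the spec value are only examples.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;
import java.util.stream.Collectors;

// Hypothetical sketch; ContainerResource is NOT Flink's InternalContainerResource.
public class ZeroExternalResourceDemo {

    /** Simplified container resource used as a map key; equality includes external resources. */
    static final class ContainerResource {
        final int memoryMb;
        final int vcores;
        final Map<String, Long> externalResources;

        ContainerResource(int memoryMb, int vcores, Map<String, Long> externalResources) {
            this.memoryMb = memoryMb;
            this.vcores = vcores;
            this.externalResources = new HashMap<>(externalResources);
        }

        @Override
        public boolean equals(Object o) {
            if (this == o) {
                return true;
            }
            if (!(o instanceof ContainerResource)) {
                return false;
            }
            ContainerResource that = (ContainerResource) o;
            return memoryMb == that.memoryMb
                    && vcores == that.vcores
                    && externalResources.equals(that.externalResources);
        }

        @Override
        public int hashCode() {
            return Objects.hash(memoryMb, vcores, externalResources);
        }
    }

    /** Keeps only external resources with a non-zero amount. */
    static Map<String, Long> ignoreZeroValued(Map<String, Long> reported) {
        return reported.entrySet().stream()
                .filter(e -> e.getValue() != 0L)
                .collect(Collectors.toMap(Map.Entry::getKey, Map.Entry::getValue));
    }

    public static void main(String[] args) {
        // Requested spec: no external resources, so the key holds an empty map.
        Map<ContainerResource, String> containerResourceToWorkerSpec = new HashMap<>();
        containerResourceToWorkerSpec.put(new ContainerResource(1024, 1, new HashMap<>()), "worker-spec-1");

        // Returned container reports a defined external resource with amount 0.
        Map<String, Long> reported = new HashMap<>();
        reported.put("yarn.io/gpu", 0L);

        String raw = containerResourceToWorkerSpec.get(new ContainerResource(1024, 1, reported));
        String filtered = containerResourceToWorkerSpec.get(
                new ContainerResource(1024, 1, ignoreZeroValued(reported)));
        System.out.println("raw lookup: " + raw + ", filtered lookup: " + filtered);
    }
}
```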

A container mismatch can happen in the following scenario:

- Flink does not allocate any external resources, so the {{externalResources}} of {{InternalContainerResource}} is an empty map.

- The returned container contains all the resources (with a value of 0) defined