[GitHub] [flink-web] tzulitai closed pull request #379: [release] [statefun] Announcement post and downloads for Stateful Functions v2.2.0

tzulitai closed pull request #379: URL: https://github.com/apache/flink-web/pull/379 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Closed] (FLINK-19408) Update flink-statefun-docker release scripts for cross release Java 8 and 11

[ https://issues.apache.org/jira/browse/FLINK-19408?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Tzu-Li (Gordon) Tai closed FLINK-19408.

---

Fix Version/s: statefun-2.2.0 (was: statefun-2.3.0)

Resolution: Fixed

flink-statefun-docker/master: 6f384d34bc74ae58b9bfb539a3197812f38c7f3c

> Update flink-statefun-docker release scripts for cross release Java 8 and 11

>

>

> Key: FLINK-19408

> URL: https://issues.apache.org/jira/browse/FLINK-19408

> Project: Flink

> Issue Type: New Feature

> Components: Stateful Functions

>Reporter: Tzu-Li (Gordon) Tai

>Assignee: Tzu-Li (Gordon) Tai

>Priority: Blocker

> Labels: pull-request-available

> Fix For: statefun-2.2.0

>

>

> Currently, the {{add-version.sh}} script in the {{flink-statefun-docker}}

> repo does not generate Dockerfiles for different Java versions.

> Since we have decided to cross-release images for Java 8 and 11, that script

> needs to be updated as well.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [flink-statefun-docker] tzulitai merged pull request #8: [FLINK-19408] Update add-version.sh for cross-releasing Java 8 and 11

tzulitai merged pull request #8: URL: https://github.com/apache/flink-statefun-docker/pull/8

[GitHub] [flink-statefun-docker] tzulitai merged pull request #10: [release] Add Dockerfiles for 2.2.0 release

tzulitai merged pull request #10: URL: https://github.com/apache/flink-statefun-docker/pull/10

[jira] [Commented] (FLINK-19432) Whether to capture the updates which don't change any monitored columns

[ https://issues.apache.org/jira/browse/FLINK-19432?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17203008#comment-17203008 ]

Zhengchao Shi commented on FLINK-19432:

---

But sometimes this will cause a lot of "-U" and "+U" output, making the downstream do some useless calculations

> Whether to capture the updates which don't change any monitored columns
>
> Key: FLINK-19432
> URL: https://issues.apache.org/jira/browse/FLINK-19432
> Project: Flink
> Issue Type: Improvement
> Components: Formats (JSON, Avro, Parquet, ORC, SequenceFile)
> Affects Versions: 1.11.1
> Reporter: Zhengchao Shi
> Priority: Major
> Fix For: 1.12.0
>
> with `debezium-json` and `canal-json`:
> Whether to capture the updates which don't change any monitored columns. This may happen if the monitored columns (columns defined in Flink SQL DDL) is a subset of the columns in database table. We can provide an optional option, default 'true', which means all the updates will be captured. You can set to 'false' to only capture changed updates
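To make the trade-off discussed in FLINK-19432 concrete, here is a minimal Python sketch of the proposed behaviour. It is not Flink's implementation: the function `changelog_rows`, its parameters, and the flag name `capture_all_updates` are hypothetical and only mirror the option described in the issue (default 'true' captures every update; 'false' drops updates that leave all monitored columns unchanged).

```python
from typing import Any, Dict, Iterable, List, Tuple

Row = Dict[str, Any]


def changelog_rows(before: Row, after: Row, monitored: Iterable[str],
                   capture_all_updates: bool = True) -> List[Tuple[str, Row]]:
    """Project an update event onto the monitored columns and emit -U/+U rows.

    Hypothetical helper, not a Flink API. With capture_all_updates=False,
    an update whose projection onto the monitored columns is unchanged
    produces no output at all.
    """
    cols = list(monitored)
    # Only the columns declared in the DDL are visible downstream.
    old = {c: before.get(c) for c in cols}
    new = {c: after.get(c) for c in cols}
    if not capture_all_updates and old == new:
        return []
    return [("-U", old), ("+U", new)]
```

With `capture_all_updates=False`, the extra comparison of the two projections is the per-record overhead in the format that the discussion weighs against the saved downstream work.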

[jira] [Closed] (FLINK-19417) Fix the bug of the method from_data_stream in table_environement

[ https://issues.apache.org/jira/browse/FLINK-19417?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Dian Fu closed FLINK-19417.

---

Resolution: Fixed

master: 900071bb7a9073f67b8af1097ee858e59626593c

> Fix the bug of the method from_data_stream in table_environement
>
> Key: FLINK-19417
> URL: https://issues.apache.org/jira/browse/FLINK-19417
> Project: Flink
> Issue Type: Bug
> Components: API / Python
> Reporter: Huang Xingbo
> Assignee: Nicholas Jiang
> Priority: Major
> Labels: pull-request-available
> Fix For: 1.12.0
>
> The parameter fields should be str or expression *, not the current list [str]. And the table_env object passed to the Table object should be Python's TableEnvironment, not Java's TableEnvironment

[jira] [Updated] (FLINK-19417) Fix the bug of the method from_data_stream in table_environement

[ https://issues.apache.org/jira/browse/FLINK-19417?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Dian Fu updated FLINK-19417:

---

Affects Version/s: 1.12.0

> Fix the bug of the method from_data_stream in table_environement
>
> Key: FLINK-19417
> URL: https://issues.apache.org/jira/browse/FLINK-19417
> Project: Flink
> Issue Type: Bug
> Components: API / Python
> Affects Versions: 1.12.0
> Reporter: Huang Xingbo
> Assignee: Nicholas Jiang
> Priority: Major
> Labels: pull-request-available
> Fix For: 1.12.0
>
> The parameter fields should be str or expression *, not the current list [str]. And the table_env object passed to the Table object should be Python's TableEnvironment, not Java's TableEnvironment

[GitHub] [flink] dianfu closed pull request #13491: [FLINK-19417][python] Fix the bug of the method from_data_stream in table_environement

dianfu closed pull request #13491: URL: https://github.com/apache/flink/pull/13491

[GitHub] [flink] flinkbot edited a comment on pull request #13491: [FLINK-19417][python] Fix the bug of the method from_data_stream in table_environement

flinkbot edited a comment on pull request #13491:

URL: https://github.com/apache/flink/pull/13491#issuecomment-699620559

## CI report:

* 0131e7e07336c24798a7d3b6692807c93a96a42c UNKNOWN
* f998eecfa23b9ed74ac2eb95a4b390d8efb6d849 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7012)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[jira] [Commented] (FLINK-19416) Support Python datetime object in from_collection of Python DataStream

[ https://issues.apache.org/jira/browse/FLINK-19416?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17202997#comment-17202997 ]

Dian Fu commented on FLINK-19416:

---

[~nicholasjiang] Have assigned it to you.

> Support Python datetime object in from_collection of Python DataStream
>
> Key: FLINK-19416
> URL: https://issues.apache.org/jira/browse/FLINK-19416
> Project: Flink
> Issue Type: Improvement
> Components: API / Python
> Reporter: Huang Xingbo
> Assignee: Nicholas Jiang
> Priority: Major
> Fix For: 1.12.0
>
> Support Python datetime object in from_collection of Python DataStream

[jira] [Assigned] (FLINK-19416) Support Python datetime object in from_collection of Python DataStream

[ https://issues.apache.org/jira/browse/FLINK-19416?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Dian Fu reassigned FLINK-19416:

---

Assignee: Nicholas Jiang

> Support Python datetime object in from_collection of Python DataStream
>
> Key: FLINK-19416
> URL: https://issues.apache.org/jira/browse/FLINK-19416
> Project: Flink
> Issue Type: Improvement
> Components: API / Python
> Reporter: Huang Xingbo
> Assignee: Nicholas Jiang
> Priority: Major
> Fix For: 1.12.0
>
> Support Python datetime object in from_collection of Python DataStream

[GitHub] [flink-web] tzulitai commented on pull request #379: [release] [statefun] Announcement post and downloads for Stateful Functions v2.2.0

tzulitai commented on pull request #379: URL: https://github.com/apache/flink-web/pull/379#issuecomment-699761575 Thanks a lot for the review and corrections @morsapaes @alpinegizmo! I'm merging this now for the announcement along with your fixes.

[GitHub] [flink] flinkbot edited a comment on pull request #13495: [Test]Fix case demo is more obvious to understand for ReinterpretAsKeyedStream

flinkbot edited a comment on pull request #13495:

URL: https://github.com/apache/flink/pull/13495#issuecomment-699753576

## CI report:

* b64812a0cb08a25ee0853c57230ffe12ccc9ceb3 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7014)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink] flinkbot commented on pull request #13495: [Test]Fix case demo is more obvious to understand for ReinterpretAsKeyedStream

flinkbot commented on pull request #13495:

URL: https://github.com/apache/flink/pull/13495#issuecomment-699753576

## CI report:

* b64812a0cb08a25ee0853c57230ffe12ccc9ceb3 UNKNOWN

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink] flinkbot edited a comment on pull request #13494: [kafka connector]Fix cast question for properies() method in kafka ConnectorDescriptor

flinkbot edited a comment on pull request #13494:

URL: https://github.com/apache/flink/pull/13494#issuecomment-699748753

## CI report:

* b139f0662c5c86ae97742c6dc6742aaef4f97a97 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7013)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink] flinkbot commented on pull request #13495: [Test]Fix case demo is more obvious to understand for ReinterpretAsKeyedStream

flinkbot commented on pull request #13495:

URL: https://github.com/apache/flink/pull/13495#issuecomment-699750515

Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review.

## Automated Checks

Last check on commit b64812a0cb08a25ee0853c57230ffe12ccc9ceb3 (Mon Sep 28 03:33:41 UTC 2020)

**Warnings:**

* No documentation files were touched! Remember to keep the Flink docs up to date!
* **Invalid pull request title: No valid Jira ID provided**

Mention the bot in a comment to re-run the automated checks.

## Review Progress

* ❓ 1. The [description] looks good.
* ❓ 2. There is [consensus] that the contribution should go into Flink.
* ❓ 3. Needs [attention] from.
* ❓ 4. The change fits into the overall [architecture].
* ❓ 5. Overall code [quality] is good.

Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process.

The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer or PMC member is required.

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`)
- `@flinkbot approve all` to approve all aspects
- `@flinkbot approve-until architecture` to approve everything until `architecture`
- `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention
- `@flinkbot disapprove architecture` to remove an approval you gave earlier

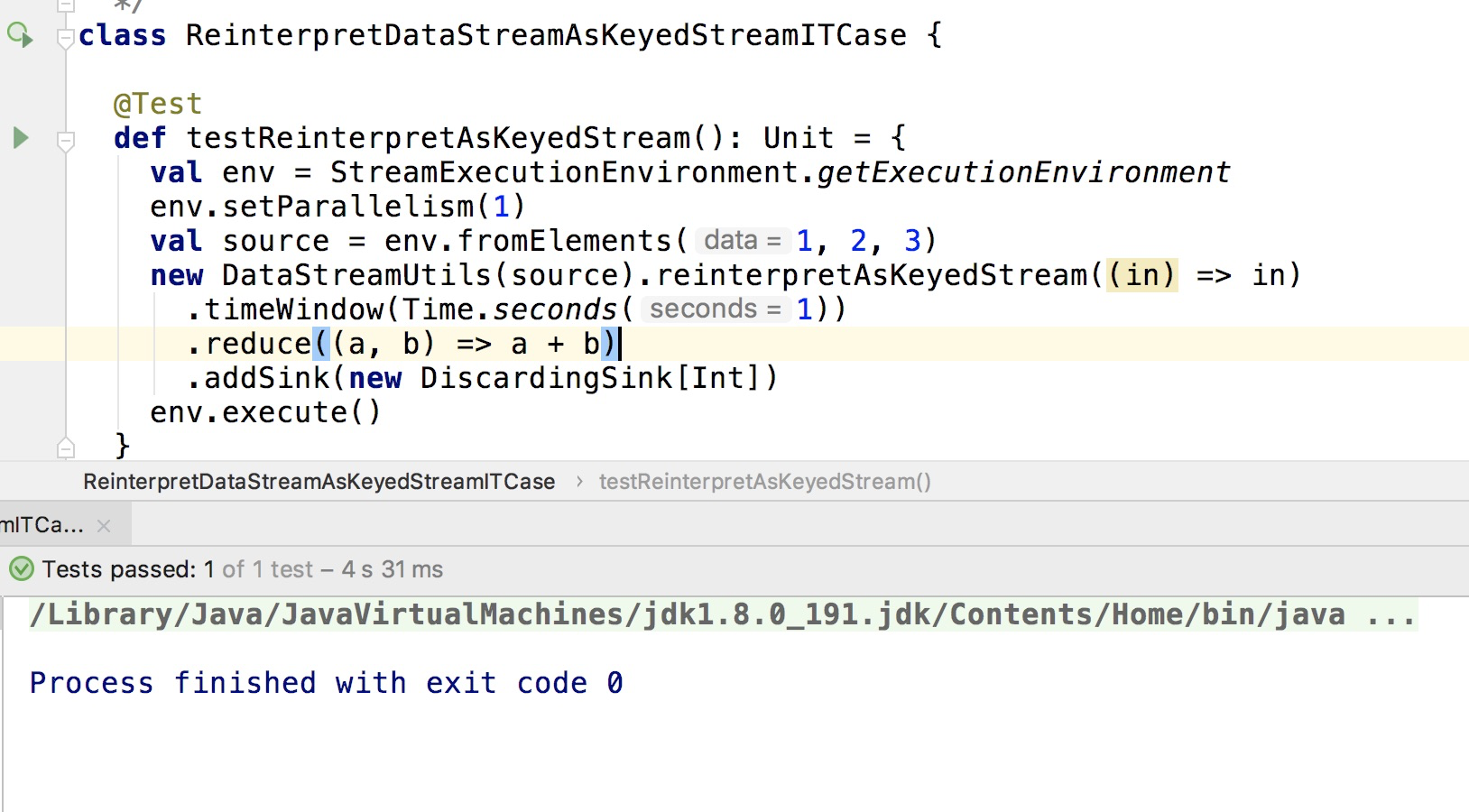

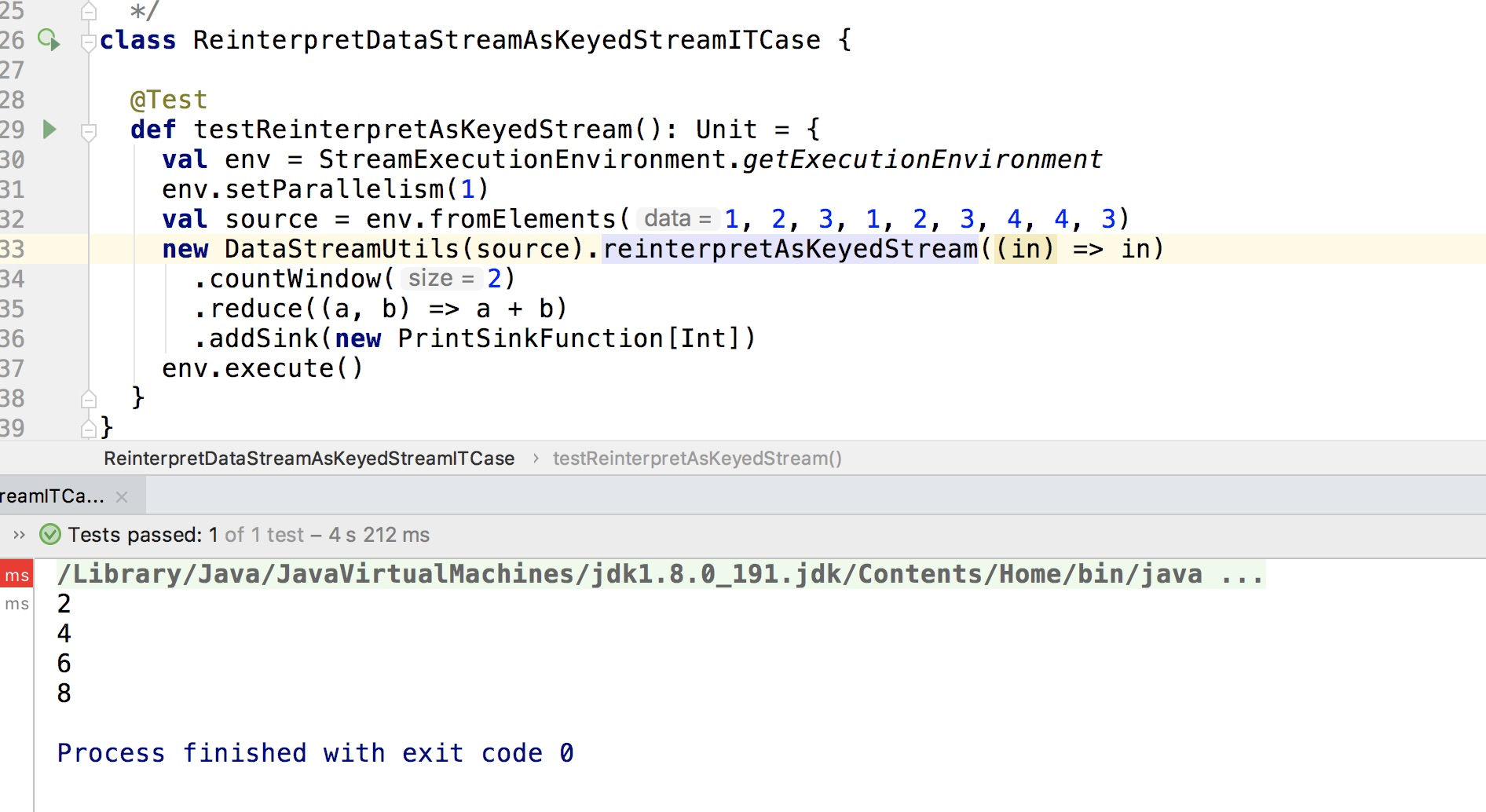

[GitHub] [flink] hehuiyuan opened a new pull request #13495: Fix case demo is more obvious to understand for ReinterpretAsKeyedStream

hehuiyuan opened a new pull request #13495: URL: https://github.com/apache/flink/pull/13495 ## What is the purpose of the change To make case demo more obvious. Change  To 

[jira] [Commented] (FLINK-19432) Whether to capture the updates which don't change any monitored columns

[ https://issues.apache.org/jira/browse/FLINK-19432?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17202984#comment-17202984 ]

Benchao Li commented on FLINK-19432:

---

[~tinny] IMHO, this may introduce some overhead in the format. If it does not have critical impact I prefer to keep as it is. CC [~jark], WDYT?

> Whether to capture the updates which don't change any monitored columns
>
> Key: FLINK-19432
> URL: https://issues.apache.org/jira/browse/FLINK-19432
> Project: Flink
> Issue Type: Improvement
> Components: Formats (JSON, Avro, Parquet, ORC, SequenceFile)
> Affects Versions: 1.11.1
> Reporter: Zhengchao Shi
> Priority: Major
> Fix For: 1.12.0
>
> with `debezium-json` and `canal-json`:
> Whether to capture the updates which don't change any monitored columns. This may happen if the monitored columns (columns defined in Flink SQL DDL) is a subset of the columns in database table. We can provide an optional option, default 'true', which means all the updates will be captured. You can set to 'false' to only capture changed updates

[GitHub] [flink] flinkbot commented on pull request #13494: [kafka connector]Fix cast question for properies() method in kafka ConnectorDescriptor

flinkbot commented on pull request #13494:

URL: https://github.com/apache/flink/pull/13494#issuecomment-699748753

## CI report:

* b139f0662c5c86ae97742c6dc6742aaef4f97a97 UNKNOWN

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[jira] [Updated] (FLINK-18112) Approximate Task-Local Recovery -- Milestone One

[ https://issues.apache.org/jira/browse/FLINK-18112?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Yuan Mei updated FLINK-18112:

---

Description:

This is the Jira ticket for Milestone One of [FLIP-135 Approximate Task-Local Recovery|https://cwiki.apache.org/confluence/display/FLINK/FLIP-135+Approximate+Task-Local+Recovery]

In short, in Approximate Task-Local Recovery, if a task fails, only the failed task restarts without affecting the rest of the job. To ease discussion, we divide the problem of approximate task-local recovery into three parts with each part only focusing on addressing a set of problems. This Jira ticket focuses on address the first milestone.

Milestone One: sink recovery. Here a sink task stands for no consumers reading data from it. In this scenario, if a sink vertex fails, the sink is restarted from the last successfully completed checkpoint and data loss is expected. If a non-sink vertex fails, a regional failover strategy takes place. In milestone one, we focus on issues related to task failure handling and upstream reconnection.

Milestone one includes two parts of change:

*Part 1*: Network Part: how the failed task able to link to the upstream Result(Sub)Partitions, and continue processing data

*Part 2*: Scheduling part, a new failover strategy to restart the sink only when the sink fails.

was:

This is the Jira ticket for Milestone One of [FLIP-135 Approximate Task-Local Recovery|[https://cwiki.apache.org/confluence/display/FLINK/FLIP-135+Approximate+Task-Local+Recovery].]

In short, in Approximate Task-Local Recovery, if a task fails, only the failed task restarts without affecting the rest of the job. To ease discussion, we divide the problem of approximate task-local recovery into three parts with each part only focusing on addressing a set of problems. This Jira ticket focuses on address the first milestone.

Milestone One: sink recovery. Here a sink task stands for no consumers reading data from it. In this scenario, if a sink vertex fails, the sink is restarted from the last successfully completed checkpoint and data loss is expected. If a non-sink vertex fails, a regional failover strategy takes place. In milestone one, we focus on issues related to task failure handling and upstream reconnection.

Milestone one includes two parts of change:

*Part 1*: Network Part: how the failed task able to link to the upstream Result(Sub)Partitions, and continue processing data

*Part 2*: Scheduling part, a new failover strategy to restart the sink only when the sink fails.

> Approximate Task-Local Recovery -- Milestone One
>
> Key: FLINK-18112
> URL: https://issues.apache.org/jira/browse/FLINK-18112
> Project: Flink
> Issue Type: New Feature
> Components: Runtime / Checkpointing, Runtime / Coordination, Runtime / Network
> Affects Versions: 1.12.0
> Reporter: Yuan Mei
> Assignee: Yuan Mei
> Priority: Major
> Fix For: 1.12.0
>
> This is the Jira ticket for Milestone One of [FLIP-135 Approximate Task-Local Recovery|https://cwiki.apache.org/confluence/display/FLINK/FLIP-135+Approximate+Task-Local+Recovery]
> In short, in Approximate Task-Local Recovery, if a task fails, only the failed task restarts without affecting the rest of the job. To ease discussion, we divide the problem of approximate task-local recovery into three parts with each part only focusing on addressing a set of problems. This Jira ticket focuses on address the first milestone.
> Milestone One: sink recovery. Here a sink task stands for no consumers reading data from it. In this scenario, if a sink vertex fails, the sink is restarted from the last successfully completed checkpoint and data loss is expected. If a non-sink vertex fails, a regional failover strategy takes place. In milestone one, we focus on issues related to task failure handling and upstream reconnection.
>
> Milestone one includes two parts of change:
> *Part 1*: Network Part: how the failed task able to link to the upstream Result(Sub)Partitions, and continue processing data
> *Part 2*: Scheduling part, a new failover strategy to restart the sink only when the sink fails.
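The restart rule described for Milestone One (a failed sink restarts alone, any other failure falls back to regional failover) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Flink's scheduler code: `tasks_to_restart`, the `consumers` map, and the `regions` list are hypothetical names, and a "sink" is modelled simply as a task with no consumers, as in the ticket.

```python
from typing import Dict, List, Set


def tasks_to_restart(failed: str,
                     consumers: Dict[str, List[str]],
                     regions: List[Set[str]]) -> Set[str]:
    """Return the set of tasks to restart after `failed` fails.

    Hypothetical model: `consumers` maps each task to the tasks reading
    from it, and `regions` lists the failover regions of the job.
    """
    # A sink has no consumers reading data from it: restart only the sink.
    if not consumers.get(failed):
        return {failed}
    # Non-sink failure: regional failover restarts the whole region.
    for region in regions:
        if failed in region:
            return set(region)
    raise ValueError(f"task {failed!r} does not belong to any region")
```

For a pipeline `source -> map -> sink` in a single region, a sink failure restarts only `sink`, while a failure of `map` restarts all three tasks.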

[jira] [Updated] (FLINK-18112) Approximate Task-Local Recovery -- Milestone One

[ https://issues.apache.org/jira/browse/FLINK-18112?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Yuan Mei updated FLINK-18112:

---

Description:

This is the Jira ticket for Milestone One of [FLIP-135 Approximate Task-Local Recovery|[https://cwiki.apache.org/confluence/display/FLINK/FLIP-135+Approximate+Task-Local+Recovery].]

In short, in Approximate Task-Local Recovery, if a task fails, only the failed task restarts without affecting the rest of the job. To ease discussion, we divide the problem of approximate task-local recovery into three parts with each part only focusing on addressing a set of problems. This Jira ticket focuses on address the first milestone.

Milestone One: sink recovery. Here a sink task stands for no consumers reading data from it. In this scenario, if a sink vertex fails, the sink is restarted from the last successfully completed checkpoint and data loss is expected. If a non-sink vertex fails, a regional failover strategy takes place. In milestone one, we focus on issues related to task failure handling and upstream reconnection.

Milestone one includes two parts of change:

*Part 1*: Network Part: how the failed task able to link to the upstream Result(Sub)Partitions, and continue processing data

*Part 2*: Scheduling part, a new failover strategy to restart the sink only when the sink fails.

was:

Build a prototype of single task failure recovery to address and answer the following questions:

*Step 1*: Scheduling part, restart a single node without restarting the upstream or downstream nodes.

*Step 2*: Checkpointing part, as my understanding of how regional failover works, this part might not need modification.

*Step 3*: Network part
- how the recovered node able to link to the upstream ResultPartitions, and continue getting data
- how the downstream node able to link to the recovered node, and continue getting node
- how different netty transit mode affects the results
- what if the failed node buffered data pool is full

*Step 4*: Failover process verification

> Approximate Task-Local Recovery -- Milestone One
>
> Key: FLINK-18112
> URL: https://issues.apache.org/jira/browse/FLINK-18112
> Project: Flink
> Issue Type: New Feature
> Components: Runtime / Checkpointing, Runtime / Coordination, Runtime / Network
> Affects Versions: 1.12.0
> Reporter: Yuan Mei
> Assignee: Yuan Mei
> Priority: Major
> Fix For: 1.12.0
>
> This is the Jira ticket for Milestone One of [FLIP-135 Approximate Task-Local Recovery|[https://cwiki.apache.org/confluence/display/FLINK/FLIP-135+Approximate+Task-Local+Recovery].]
> In short, in Approximate Task-Local Recovery, if a task fails, only the failed task restarts without affecting the rest of the job. To ease discussion, we divide the problem of approximate task-local recovery into three parts with each part only focusing on addressing a set of problems. This Jira ticket focuses on address the first milestone.
> Milestone One: sink recovery. Here a sink task stands for no consumers reading data from it. In this scenario, if a sink vertex fails, the sink is restarted from the last successfully completed checkpoint and data loss is expected. If a non-sink vertex fails, a regional failover strategy takes place. In milestone one, we focus on issues related to task failure handling and upstream reconnection.
>
> Milestone one includes two parts of change:
> *Part 1*: Network Part: how the failed task able to link to the upstream Result(Sub)Partitions, and continue processing data
> *Part 2*: Scheduling part, a new failover strategy to restart the sink only when the sink fails.

[GitHub] [flink] HuangXingBo commented on pull request #13492: [FLINK-19181][python] Make python processes respect the calculated managed memory fraction

HuangXingBo commented on pull request #13492:

URL: https://github.com/apache/flink/pull/13492#issuecomment-699746710

@dianfu The Python UDF documentation currently shows the following memory configuration:

`table_env.get_config().get_configuration().set_string("taskmanager.memory.task.off-heap.size", '80m')`

I think it can be removed as part of this change.


[GitHub] [flink] HuangXingBo commented on a change in pull request #13492: [FLINK-19181][python] Make python processes respect the calculated managed memory fraction

HuangXingBo commented on a change in pull request #13492:

URL: https://github.com/apache/flink/pull/13492#discussion_r495662360

##

File path: flink-python/src/main/java/org/apache/flink/python/PythonOptions.java

##

@@ -156,7 +156,7 @@

*/

public static final ConfigOption USE_MANAGED_MEMORY =

ConfigOptions

.key("python.fn-execution.memory.managed")

- .defaultValue(false)

+ .defaultValue(true)

Review comment:

We also need to change the default value of `python.fn-execution.memory.managed` in `python_configuration.html`.

##

File path: flink-python/pyflink/fn_execution/beam/beam_sdk_worker_main.py

##

@@ -23,10 +25,34 @@

except ImportError:

    import pyflink.fn_execution.beam.beam_operations_slow

+# resource is only available in Unix

+try:

+    import resource

+    has_resource_module = True

+except ImportError:

+    has_resource_module = False

+

# force to register the coders to SDK Harness

import pyflink.fn_execution.beam.beam_coders # noqa # pylint: disable=unused-import

import apache_beam.runners.worker.sdk_worker_main

+

+def set_memory_limit():

+    memory_limit = int(os.environ.get('_PYTHON_WORKER_MEMORY_LIMIT', "-1"))

+    if memory_limit > 0 and has_resource_module:

Review comment:

For the case where `has_resource_module` is `False` but `memory_limit > 0` is set, can we log a warning?
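The fallback asked for in this review comment could look roughly like the sketch below. It is not the code from the pull request: the quoted hunk is cut off before the body of the `if`, so the use of `RLIMIT_AS`, the injectable `environ` and `resource_module` parameters, the logger name, and the boolean return value are all assumptions made for illustration.

```python
import logging
import os

try:
    import resource as _resource  # only available on Unix
except ImportError:
    _resource = None

_LOG = logging.getLogger("python_worker")  # hypothetical logger name


def set_memory_limit(environ=os.environ, resource_module=_resource):
    """Apply the worker memory limit; return True only if it was applied."""
    memory_limit = int(environ.get('_PYTHON_WORKER_MEMORY_LIMIT', "-1"))
    if memory_limit <= 0:
        return False  # no limit requested
    if resource_module is None:
        # The case raised in the review: a limit is set but cannot be enforced.
        _LOG.warning("Memory limit of %d bytes was requested, but the "
                     "'resource' module is unavailable; skipping.",
                     memory_limit)
        return False
    # Assumption: limit the address space; the quoted hunk does not show
    # which resource the pull request actually restricts.
    _, hard = resource_module.getrlimit(resource_module.RLIMIT_AS)
    if hard != resource_module.RLIM_INFINITY:
        memory_limit = min(memory_limit, hard)  # soft limit must not exceed hard
    resource_module.setrlimit(resource_module.RLIMIT_AS, (memory_limit, hard))
    return True
```

Passing the environment and the module as parameters is only a convenience for exercising the warning path without actually restricting the current process.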

##

File path: flink-python/src/main/java/org/apache/flink/streaming/api/runners/python/beam/BeamPythonFunctionRunner.java

##

@@ -235,6 +274,14 @@ public void close() throws Exception {

jobBundleFactory = null;

}

+ try {

+ if (sharedResources != null) {

+sharedResources.close();

Review comment:

```suggestion

sharedResources.close();

```

##

File path: flink-python/src/main/java/org/apache/flink/streaming/api/runners/python/beam/BeamPythonFunctionRunner.java

##

@@ -117,13 +120,19 @@

private transient boolean bundleStarted;

+ private static final String MANAGED_MEMORY_RESOURCE_ID = "python-process-managed-memory";

Review comment:

Can we move the declarations of `MANAGED_MEMORY_RESOURCE_ID` and `PYTHON_WORKER_MEMORY_LIMIT` before `bundleStarted`?


[GitHub] [flink] flinkbot commented on pull request #13494: Fix cast question for properies() method in kafka ConnectorDescriptor

flinkbot commented on pull request #13494:

URL: https://github.com/apache/flink/pull/13494#issuecomment-699743921

Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review.

## Automated Checks

Last check on commit b139f0662c5c86ae97742c6dc6742aaef4f97a97 (Mon Sep 28 03:05:52 UTC 2020)

**Warnings:**

* No documentation files were touched! Remember to keep the Flink docs up to date!
* **Invalid pull request title: No valid Jira ID provided**

Mention the bot in a comment to re-run the automated checks.

## Review Progress

* ❓ 1. The [description] looks good.
* ❓ 2. There is [consensus] that the contribution should go into Flink.
* ❓ 3. Needs [attention] from.
* ❓ 4. The change fits into the overall [architecture].
* ❓ 5. Overall code [quality] is good.

Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process.

The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer or PMC member is required.

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`)
- `@flinkbot approve all` to approve all aspects
- `@flinkbot approve-until architecture` to approve everything until `architecture`
- `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention
- `@flinkbot disapprove architecture` to remove an approval you gave earlier

[GitHub] [flink] flinkbot edited a comment on pull request #13491: [FLINK-19417][python] Fix the bug of the method from_data_stream in table_environement

flinkbot edited a comment on pull request #13491:

URL: https://github.com/apache/flink/pull/13491#issuecomment-699620559

## CI report:

* 0131e7e07336c24798a7d3b6692807c93a96a42c UNKNOWN
* 20a9a58f2aa694681754572c716ee0b408484315 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7004)
* f998eecfa23b9ed74ac2eb95a4b390d8efb6d849 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7012)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink] flinkbot edited a comment on pull request #13482: test

flinkbot edited a comment on pull request #13482:

URL: https://github.com/apache/flink/pull/13482#issuecomment-698903502

## CI report:

* 5fb5255b9edc3cd74b836a89489a0a81591a514f Azure: [CANCELED](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6999) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7010) Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7002)
* 81efe483e7dbcff7e365b535e2dcae6153f217a8 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7011)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[jira] [Updated] (FLINK-18112) Approximate Task-Local Recovery -- Milestone One

[ https://issues.apache.org/jira/browse/FLINK-18112?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Yuan Mei updated FLINK-18112:

Summary: Approximate Task-Local Recovery -- Milestone One (was: Approximate Task-Local Recovery)

> Approximate Task-Local Recovery -- Milestone One
>
> Key: FLINK-18112
> URL: https://issues.apache.org/jira/browse/FLINK-18112
> Project: Flink
> Issue Type: New Feature
> Components: Runtime / Checkpointing, Runtime / Coordination, Runtime / Network
> Affects Versions: 1.12.0
> Reporter: Yuan Mei
> Assignee: Yuan Mei
> Priority: Major
> Fix For: 1.12.0
>
> Build a prototype of single task failure recovery to address and answer the following questions:
> *Step 1*: Scheduling part, restart a single node without restarting the upstream or downstream nodes.
> *Step 2*: Checkpointing part, as my understanding of how regional failover works, this part might not need modification.
> *Step 3*: Network part
> - how the recovered node able to link to the upstream ResultPartitions, and continue getting data
> - how the downstream node able to link to the recovered node, and continue getting node
> - how different netty transit mode affects the results
> - what if the failed node buffered data pool is full
> *Step 4*: Failover process verification

[jira] [Updated] (FLINK-18112) Approximate Task-Local Recovery

[ https://issues.apache.org/jira/browse/FLINK-18112?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Yuan Mei updated FLINK-18112: - Summary: Approximate Task-Local Recovery (was: Single Task Approximate Failure Recovery) > Approximate Task-Local Recovery > --- > > Key: FLINK-18112 > URL: https://issues.apache.org/jira/browse/FLINK-18112 > Project: Flink > Issue Type: New Feature > Components: Runtime / Checkpointing, Runtime / Coordination, Runtime > / Network >Affects Versions: 1.12.0 >Reporter: Yuan Mei >Assignee: Yuan Mei >Priority: Major > Fix For: 1.12.0 > > > Build a prototype of single task failure recovery to address and answer the > following questions: > *Step 1*: Scheduling part, restart a single node without restarting the > upstream or downstream nodes. > *Step 2*: Checkpointing part, as my understanding of how regional failover > works, this part might not need modification. > *Step 3*: Network part > - how the recovered node able to link to the upstream ResultPartitions, and > continue getting data > - how the downstream node able to link to the recovered node, and continue > getting node > - how different netty transit mode affects the results > - what if the failed node buffered data pool is full > *Step 4*: Failover process verification -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] hehuiyuan opened a new pull request #13494: Fix cast question for properies() method in kafka ConnectorDescriptor

hehuiyuan opened a new pull request #13494:

URL: https://github.com/apache/flink/pull/13494

## What is the purpose of the change

This pull request fixes the Kafka connector. A ClassCastException is thrown when the properties() method is given non-String values.

```
Properties props = new Properties();
props.put("enable.auto.commit", "false");
props.put("fetch.max.wait.ms", "3000");
// Non-String values: these trigger the ClassCastException shown below.
props.put("flink.poll-timeout", 5000);
props.put("flink.partition-discovery.interval-millis", false);

kafka = new Kafka()
    .version("0.11")
    .topic(topic)
    .properties(props);
```

```
Exception in thread "main" java.lang.ClassCastException: java.lang.Integer cannot be cast to java.lang.String
Exception in thread "main" java.lang.ClassCastException: java.lang.Boolean cannot be cast to java.lang.String
```

## Brief change log

- *Change `(String) v` to `String.valueOf(v)` in Kafka.java*
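
To illustrate the change, here is a minimal sketch using only `java.util`. The class `PropertiesCastSketch` and the helper `toStringMap` are hypothetical names chosen for this example and are not the actual code in Kafka.java; the sketch only shows why `String.valueOf` accepts values that a `(String)` cast rejects.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Properties;

public class PropertiesCastSketch {

    // Hypothetical helper (not the real Kafka.java code): copies a Properties
    // object into a String map. String.valueOf works for any value type,
    // whereas a (String) cast throws ClassCastException for Integer or Boolean.
    static Map<String, String> toStringMap(Properties props) {
        Map<String, String> result = new HashMap<>();
        props.forEach((k, v) -> result.put(String.valueOf(k), String.valueOf(v)));
        return result;
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("enable.auto.commit", "false");
        props.put("flink.poll-timeout", 5000);                          // Integer value
        props.put("flink.partition-discovery.interval-millis", false);  // Boolean value

        Map<String, String> converted = toStringMap(props);
        System.out.println(converted.get("flink.poll-timeout"));
        System.out.println(converted.get("flink.partition-discovery.interval-millis"));
    }
}
```

With a `(String) v` cast in place of `String.valueOf(v)`, the same input would fail on the Integer and Boolean entries.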

## Documentation

- Does this pull request introduce a new feature? ( no)


[jira] [Updated] (FLINK-18112) Single Task Approximate Failure Recovery

[ https://issues.apache.org/jira/browse/FLINK-18112?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Yuan Mei updated FLINK-18112: - Summary: Single Task Approximate Failure Recovery (was: Single Task Failure Recovery Prototype) > Single Task Approximate Failure Recovery > > > Key: FLINK-18112 > URL: https://issues.apache.org/jira/browse/FLINK-18112 > Project: Flink > Issue Type: New Feature > Components: Runtime / Checkpointing, Runtime / Coordination, Runtime > / Network >Affects Versions: 1.12.0 >Reporter: Yuan Mei >Assignee: Yuan Mei >Priority: Major > Fix For: 1.12.0 > > > Build a prototype of single task failure recovery to address and answer the > following questions: > *Step 1*: Scheduling part, restart a single node without restarting the > upstream or downstream nodes. > *Step 2*: Checkpointing part, as my understanding of how regional failover > works, this part might not need modification. > *Step 3*: Network part > - how the recovered node able to link to the upstream ResultPartitions, and > continue getting data > - how the downstream node able to link to the recovered node, and continue > getting node > - how different netty transit mode affects the results > - what if the failed node buffered data pool is full > *Step 4*: Failover process verification -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] wangyang0918 commented on a change in pull request #13322: [FLINK-17480][kubernetes] Support running PyFlink on Kubernetes.

wangyang0918 commented on a change in pull request #13322:

URL: https://github.com/apache/flink/pull/13322#discussion_r495664630

##

File path:

flink-end-to-end-tests/test-scripts/test_kubernetes_pyflink_application.sh

##

@@ -0,0 +1,83 @@

+#!/usr/bin/env bash

+

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+

+source "$(dirname "$0")"/common_kubernetes.sh

+

+CURRENT_DIR=`cd "$(dirname "$0")" && pwd -P`

+CLUSTER_ROLE_BINDING="flink-role-binding-default"

+CLUSTER_ID="flink-native-k8s-pyflink-application-1"

+PURE_FLINK_IMAGE_NAME="test_kubernetes_application"

+PYFLINK_IMAGE_NAME="test_kubernetes_pyflink_application"

+LOCAL_LOGS_PATH="${TEST_DATA_DIR}/log"

+

+function internal_cleanup {

+kubectl delete deployment ${CLUSTER_ID}

+kubectl delete clusterrolebinding ${CLUSTER_ROLE_BINDING}

+}

+

+start_kubernetes

+

+build_image ${PURE_FLINK_IMAGE_NAME}

+

+# Build PyFlink wheel package

Review comment:

We have to make sure that this series of commands to build an image for Python is stable. Otherwise, the devs will suffer a lot from this failure.

Do you know how long it will take to run this E2E test?


[GitHub] [flink] flinkbot edited a comment on pull request #13482: test

flinkbot edited a comment on pull request #13482: URL: https://github.com/apache/flink/pull/13482#issuecomment-698903502 ## CI report: * 5fb5255b9edc3cd74b836a89489a0a81591a514f Azure: [CANCELED](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6999) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7010) Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7002) * 81efe483e7dbcff7e365b535e2dcae6153f217a8 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-15578) Implement exactly-once JDBC sink

[ https://issues.apache.org/jira/browse/FLINK-15578?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17202969#comment-17202969 ] Kenzyme Le commented on FLINK-15578: Great! Thanks for the update. > Implement exactly-once JDBC sink > > > Key: FLINK-15578 > URL: https://issues.apache.org/jira/browse/FLINK-15578 > Project: Flink > Issue Type: New Feature > Components: Connectors / JDBC >Reporter: Roman Khachatryan >Priority: Major > Labels: pull-request-available > Fix For: 1.12.0 > > Time Spent: 10m > Remaining Estimate: 0h > > As per discussion in the dev mailing list, there are two options: > # Write-ahead log > # Two-phase commit (XA) > the latter being preferable. > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (FLINK-19432) Whether to capture the updates which don't change any monitored columns

[ https://issues.apache.org/jira/browse/FLINK-19432?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17202968#comment-17202968 ]

Zhengchao Shi commented on FLINK-19432:

[~libenchao] What do you think about this improvement in "debezium-json"?

> Whether to capture the updates which don't change any monitored columns
>
> Key: FLINK-19432
> URL: https://issues.apache.org/jira/browse/FLINK-19432
> Project: Flink
> Issue Type: Improvement
> Components: Formats (JSON, Avro, Parquet, ORC, SequenceFile)
> Affects Versions: 1.11.1
> Reporter: Zhengchao Shi
> Priority: Major
> Fix For: 1.12.0
>
> With `debezium-json` and `canal-json`:
> Whether to capture the updates which don't change any monitored columns. This may happen if the monitored columns (columns defined in Flink SQL DDL) are a subset of the columns in the database table. We can provide an option, defaulting to 'true', which means all the updates will be captured. You can set it to 'false' to capture only the updates that change a monitored column.
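
The behaviour proposed in FLINK-19432 can be sketched roughly as follows. This is only an illustration under stated assumptions: the class `MonitoredColumnFilter`, its methods, and the `captureUnchanged` flag are hypothetical names and are not part of the `debezium-json` or `canal-json` formats; rows are modelled as plain maps from column name to value.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

public class MonitoredColumnFilter {

    // Returns true if any monitored column differs between the before and after images.
    static boolean changesMonitoredColumn(
            Map<String, Object> before, Map<String, Object> after, List<String> monitored) {
        for (String column : monitored) {
            if (!Objects.equals(before.get(column), after.get(column))) {
                return true;
            }
        }
        return false;
    }

    // captureUnchanged models the proposed option (hypothetical name):
    // true keeps every update, false keeps only updates touching a monitored column.
    static boolean shouldCapture(
            boolean captureUnchanged,
            Map<String, Object> before,
            Map<String, Object> after,
            List<String> monitored) {
        return captureUnchanged || changesMonitoredColumn(before, after, monitored);
    }

    public static void main(String[] args) {
        Map<String, Object> before = new HashMap<>();
        before.put("id", 1);
        before.put("name", "a");
        before.put("unmonitored", "x");

        Map<String, Object> after = new HashMap<>(before);
        after.put("unmonitored", "y"); // only a column outside the DDL changes

        List<String> monitored = List.of("id", "name");
        System.out.println(shouldCapture(true, before, after, monitored));
        System.out.println(shouldCapture(false, before, after, monitored));
    }
}
```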

[GitHub] [flink] HuangXingBo commented on pull request #13491: [FLINK-19417][python] Fix the bug of the method from_data_stream in table_environement

HuangXingBo commented on pull request #13491: URL: https://github.com/apache/flink/pull/13491#issuecomment-699735286 @SteNicholas Thanks a lot for the update. LGTM. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13482: test

flinkbot edited a comment on pull request #13482: URL: https://github.com/apache/flink/pull/13482#issuecomment-698903502 ## CI report: * 5fb5255b9edc3cd74b836a89489a0a81591a514f Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7002) Azure: [CANCELED](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6999) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7010) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-19438) Queryable State needs to support both read-uncommitted and read-committed

[ https://issues.apache.org/jira/browse/FLINK-19438?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] sheep updated FLINK-19438: -- Summary: Queryable State needs to support both read-uncommitted and read-committed (was: Queryable State need implement both read-uncommitted and read-committed ) > Queryable State needs to support both read-uncommitted and read-committed > -- > > Key: FLINK-19438 > URL: https://issues.apache.org/jira/browse/FLINK-19438 > Project: Flink > Issue Type: Wish > Components: Runtime / Queryable State >Reporter: sheep >Priority: Major > > Flink exposes its managed keyed (partitioned) state to the outside world and > allows the user to query a job’s state from outside Flink. From a traditional > database isolation-level viewpoint, the queries access uncommitted state, > thus following the read-uncommitted isolation level. > I fully understand Flink provides read-uncommitted state query in order to > query real-time state. But the read-committed state is also important (I > cannot fully explain). From Flink 1.9, querying even modifying the state in > Checkpoint has been implemented. The state in Checkpoint is equivalent to > read-committed state. So, users can query read-committed state via the state > processor api. > *Flink should provide users for configuration of isolation level of > Queryable State by integration of the two levels of state query.* -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (FLINK-19438) Queryable State need implement both read-uncommitted and read-committed

[ https://issues.apache.org/jira/browse/FLINK-19438?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] sheep updated FLINK-19438: -- Summary: Queryable State need implement both read-uncommitted and read-committed (was: Queryable State need implement both read-uncommitted and read-committed) > Queryable State need implement both read-uncommitted and read-committed > > > Key: FLINK-19438 > URL: https://issues.apache.org/jira/browse/FLINK-19438 > Project: Flink > Issue Type: Wish > Components: Runtime / Queryable State >Reporter: sheep >Priority: Major > > Flink exposes its managed keyed (partitioned) state to the outside world and > allows the user to query a job’s state from outside Flink. From a traditional > database isolation-level viewpoint, the queries access uncommitted state, > thus following the read-uncommitted isolation level. > I fully understand Flink provides read-uncommitted state query in order to > query real-time state. But the read-committed state is also important (I > cannot fully explain). From Flink 1.9, querying even modifying the state in > Checkpoint has been implemented. The state in Checkpoint is equivalent to > read-committed state. So, users can query read-committed state via the state > processor api. > *Flink should provide users for configuration of isolation level of > Queryable State by integration of the two levels of state query.* -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Created] (FLINK-19438) Queryable State need implement both read-uncommitted and read-committed

sheep created FLINK-19438:

Summary: Queryable State need implement both read-uncommitted and read-committed
Key: FLINK-19438
URL: https://issues.apache.org/jira/browse/FLINK-19438
Project: Flink
Issue Type: Wish
Components: Runtime / Queryable State
Reporter: sheep

Flink exposes its managed keyed (partitioned) state to the outside world and allows the user to query a job's state from outside Flink. From a traditional database isolation-level viewpoint, the queries access uncommitted state, thus following the read-uncommitted isolation level.

I fully understand that Flink provides read-uncommitted state queries in order to query real-time state. But read-committed state is also important (I cannot fully explain). Since Flink 1.9, querying and even modifying the state in a checkpoint has been implemented. The state in a checkpoint is equivalent to read-committed state. So, users can query read-committed state via the State Processor API.

*Flink should let users configure the isolation level of Queryable State by integrating the two levels of state query.*
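
The distinction between the two isolation levels described in FLINK-19438 can be sketched with a small in-memory model. Everything here is hypothetical and for illustration only: `IsolationLevelSketch`, `KeyedStateView`, and the `IsolationLevel` enum are not Flink APIs, and a "checkpoint" is modelled as a simple copy of the live map.

```java
import java.util.HashMap;
import java.util.Map;

public class IsolationLevelSketch {

    enum IsolationLevel { READ_UNCOMMITTED, READ_COMMITTED }

    // Toy model of keyed state: a live map plus the snapshot taken at the last checkpoint.
    static final class KeyedStateView {
        private final Map<String, Long> live = new HashMap<>();
        private Map<String, Long> lastCheckpoint = new HashMap<>();

        void update(String key, long value) {
            live.put(key, value);
        }

        // Models a completed checkpoint by copying the live state.
        void checkpoint() {
            lastCheckpoint = new HashMap<>(live);
        }

        // READ_UNCOMMITTED sees the live value; READ_COMMITTED sees only checkpointed state.
        Long query(String key, IsolationLevel level) {
            return level == IsolationLevel.READ_COMMITTED
                    ? lastCheckpoint.get(key)
                    : live.get(key);
        }
    }

    public static void main(String[] args) {
        KeyedStateView state = new KeyedStateView();
        state.update("user-1", 10L);
        state.checkpoint();
        state.update("user-1", 11L); // not yet covered by a checkpoint

        System.out.println(state.query("user-1", IsolationLevel.READ_UNCOMMITTED));
        System.out.println(state.query("user-1", IsolationLevel.READ_COMMITTED));
    }
}
```

In this model a read-committed query can lag behind the live value until the next checkpoint completes, which is the trade-off between the two levels.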

[GitHub] [flink] zhijiangW commented on pull request #13482: test

zhijiangW commented on pull request #13482: URL: https://github.com/apache/flink/pull/13482#issuecomment-699733170 @flinkbot run azure This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13491: [FLINK-19417][python] Fix the bug of the method from_data_stream in table_environement

flinkbot edited a comment on pull request #13491: URL: https://github.com/apache/flink/pull/13491#issuecomment-699620559 ## CI report: * 0131e7e07336c24798a7d3b6692807c93a96a42c UNKNOWN * 20a9a58f2aa694681754572c716ee0b408484315 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=7004) * f998eecfa23b9ed74ac2eb95a4b390d8efb6d849 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-19416) Support Python datetime object in from_collection of Python DataStream

[ https://issues.apache.org/jira/browse/FLINK-19416?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17202951#comment-17202951 ] Huang Xingbo commented on FLINK-19416: -- [~nicholasjiang] Thanks a lot. [~dianfu] Could you help assign this JIRA to [~nicholasjiang]. > Support Python datetime object in from_collection of Python DataStream > -- > > Key: FLINK-19416 > URL: https://issues.apache.org/jira/browse/FLINK-19416 > Project: Flink > Issue Type: Improvement > Components: API / Python >Reporter: Huang Xingbo >Priority: Major > Fix For: 1.12.0 > > > Support Python datetime object in from_collection of Python DataStream -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] dianfu commented on pull request #13491: [FLINK-19417][python] Fix the bug of the method from_data_stream in table_environement

dianfu commented on pull request #13491: URL: https://github.com/apache/flink/pull/13491#issuecomment-699719124 @SteNicholas Thanks for the update. LGTM. There are checkstyle issues. Could you take a look?

[jira] [Updated] (FLINK-19437) FileSourceTextLinesITCase.testContinuousTextFileSource failed with "SimpleStreamFormat is not splittable, but found split end (0) different from file length (198)"

[

https://issues.apache.org/jira/browse/FLINK-19437?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Dian Fu updated FLINK-19437:

Labels: test-stability (was: )

> FileSourceTextLinesITCase.testContinuousTextFileSource failed with

> "SimpleStreamFormat is not splittable, but found split end (0) different from

> file length (198)"

> ---

>

> Key: FLINK-19437

> URL: https://issues.apache.org/jira/browse/FLINK-19437

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem

>Affects Versions: 1.12.0

>Reporter: Dian Fu

>Priority: Major

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=7008=logs=fc5181b0-e452-5c8f-68de-1097947f6483=62110053-334f-5295-a0ab-80dd7e2babbf

> {code}

> 2020-09-27T21:58:38.9199090Z [ERROR]

> testContinuousTextFileSource(org.apache.flink.connector.file.src.FileSourceTextLinesITCase)

> Time elapsed: 0.517 s <<< ERROR!

> 2020-09-27T21:58:38.9199619Z java.lang.RuntimeException: Failed to fetch next

> result

> 2020-09-27T21:58:38.9200118Z at

> org.apache.flink.streaming.api.operators.collect.CollectResultIterator.nextResultFromFetcher(CollectResultIterator.java:106)

> 2020-09-27T21:58:38.9200722Z at

> org.apache.flink.streaming.api.operators.collect.CollectResultIterator.hasNext(CollectResultIterator.java:77)

> 2020-09-27T21:58:38.9201290Z at

> org.apache.flink.streaming.api.datastream.DataStreamUtils.collectRecordsFromUnboundedStream(DataStreamUtils.java:150)

> 2020-09-27T21:58:38.9201920Z at

> org.apache.flink.connector.file.src.FileSourceTextLinesITCase.testContinuousTextFileSource(FileSourceTextLinesITCase.java:136)

> 2020-09-27T21:58:38.9202570Z at

> sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> 2020-09-27T21:58:38.9203054Z at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> 2020-09-27T21:58:38.9203539Z at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> 2020-09-27T21:58:38.9203968Z at

> java.lang.reflect.Method.invoke(Method.java:498)

> 2020-09-27T21:58:38.9204369Z at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

> 2020-09-27T21:58:38.9204844Z at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> 2020-09-27T21:58:38.9205359Z at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

> 2020-09-27T21:58:38.9205814Z at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> 2020-09-27T21:58:38.9206240Z at

> org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:55)

> 2020-09-27T21:58:38.9206611Z at

> org.junit.rules.RunRules.evaluate(RunRules.java:20)

> 2020-09-27T21:58:38.9206971Z at

> org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)

> 2020-09-27T21:58:38.9207404Z at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)

> 2020-09-27T21:58:38.9207971Z at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)

> 2020-09-27T21:58:38.9208404Z at

> org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)

> 2020-09-27T21:58:38.9208877Z at

> org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)

> 2020-09-27T21:58:38.9209279Z at

> org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)

> 2020-09-27T21:58:38.9209680Z at

> org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)

> 2020-09-27T21:58:38.9210064Z at

> org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)

> 2020-09-27T21:58:38.9210476Z at

> org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

> 2020-09-27T21:58:38.9210881Z at

> org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

> 2020-09-27T21:58:38.9211272Z at

> org.junit.rules.RunRules.evaluate(RunRules.java:20)

> 2020-09-27T21:58:38.9211638Z at

> org.junit.runners.ParentRunner.run(ParentRunner.java:363)

> 2020-09-27T21:58:38.9212305Z at

> org.apache.maven.surefire.junit4.JUnit4Provider.execute(JUnit4Provider.java:365)

> 2020-09-27T21:58:38.9213157Z at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeWithRerun(JUnit4Provider.java:273)

> 2020-09-27T21:58:38.9213663Z at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeTestSet(JUnit4Provider.java:238)

> 2020-09-27T21:58:38.9214123Z at

> org.apache.maven.surefire.junit4.JUnit4Provider.invoke(JUnit4Provider.java:159)

> 2020-09-27T21:58:38.9214620Z at

> org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)

> 2020-09-27T21:58:38.9215148Z at

>

[jira] [Commented] (FLINK-18818) HadoopRenameCommitterHDFSTest.testCommitOneFile[Override: false] failed with "java.io.IOException: The stream is closed"

[

https://issues.apache.org/jira/browse/FLINK-18818?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17202949#comment-17202949

]

Dian Fu commented on FLINK-18818:

-

HadoopRenameCommitterHDFSTest.testCommitMultipleFilesMixed has also failed with

the same error:

https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=7008=logs=5cae8624-c7eb-5c51-92d3-4d2dacedd221=420bd9ec-164e-562e-8947-0dacde3cec91

> HadoopRenameCommitterHDFSTest.testCommitOneFile[Override: false] failed with

> "java.io.IOException: The stream is closed"

>

>

> Key: FLINK-18818

> URL: https://issues.apache.org/jira/browse/FLINK-18818

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem, Tests

>Affects Versions: 1.12.0

>Reporter: Dian Fu

>Priority: Major

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=5177=logs=5cae8624-c7eb-5c51-92d3-4d2dacedd221=420bd9ec-164e-562e-8947-0dacde3cec91

> {code}

> 2020-08-04T20:56:51.1835382Z [ERROR] testCommitOneFile[Override:

> false](org.apache.flink.formats.hadoop.bulk.committer.HadoopRenameCommitterHDFSTest)

> Time elapsed: 0.046 s <<< ERROR!

> 2020-08-04T20:56:51.1835950Z java.io.IOException: The stream is closed

> 2020-08-04T20:56:51.1836413Z at

> org.apache.hadoop.net.SocketOutputStream.write(SocketOutputStream.java:118)

> 2020-08-04T20:56:51.1836867Z at

> java.io.BufferedOutputStream.flushBuffer(BufferedOutputStream.java:82)

> 2020-08-04T20:56:51.1837313Z at

> java.io.BufferedOutputStream.flush(BufferedOutputStream.java:140)

> 2020-08-04T20:56:51.1837712Z at

> java.io.DataOutputStream.flush(DataOutputStream.java:123)

> 2020-08-04T20:56:51.1838116Z at

> java.io.FilterOutputStream.close(FilterOutputStream.java:158)

> 2020-08-04T20:56:51.1838527Z at

> org.apache.hadoop.hdfs.DataStreamer.closeStream(DataStreamer.java:987)

> 2020-08-04T20:56:51.1838974Z at

> org.apache.hadoop.hdfs.DataStreamer.closeInternal(DataStreamer.java:839)

> 2020-08-04T20:56:51.1839404Z at

> org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:834)

> 2020-08-04T20:56:51.1839775Z Suppressed: java.io.IOException: The stream is

> closed

> 2020-08-04T20:56:51.1840184Z at

> org.apache.hadoop.net.SocketOutputStream.write(SocketOutputStream.java:118)

> 2020-08-04T20:56:51.1840641Z at

> java.io.BufferedOutputStream.flushBuffer(BufferedOutputStream.java:82)

> 2020-08-04T20:56:51.1841087Z at

> java.io.BufferedOutputStream.flush(BufferedOutputStream.java:140)

> 2020-08-04T20:56:51.1841509Z at

> java.io.FilterOutputStream.close(FilterOutputStream.java:158)

> 2020-08-04T20:56:51.1841910Z at

> java.io.FilterOutputStream.close(FilterOutputStream.java:159)

> 2020-08-04T20:56:51.1842207Z ... 3 more

> {code}


[jira] [Created] (FLINK-19437) FileSourceTextLinesITCase.testContinuousTextFileSource failed with "SimpleStreamFormat is not splittable, but found split end (0) different from file length (198)"

Dian Fu created FLINK-19437:

---

Summary: FileSourceTextLinesITCase.testContinuousTextFileSource

failed with "SimpleStreamFormat is not splittable, but found split end (0)

different from file length (198)"

Key: FLINK-19437

URL: https://issues.apache.org/jira/browse/FLINK-19437

Project: Flink

Issue Type: Bug

Components: Connectors / FileSystem

Affects Versions: 1.12.0

Reporter: Dian Fu

https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=7008=logs=fc5181b0-e452-5c8f-68de-1097947f6483=62110053-334f-5295-a0ab-80dd7e2babbf

{code}

2020-09-27T21:58:38.9199090Z [ERROR] testContinuousTextFileSource(org.apache.flink.connector.file.src.FileSourceTextLinesITCase) Time elapsed: 0.517 s <<< ERROR!
2020-09-27T21:58:38.9199619Z java.lang.RuntimeException: Failed to fetch next result
2020-09-27T21:58:38.9200118Z at org.apache.flink.streaming.api.operators.collect.CollectResultIterator.nextResultFromFetcher(CollectResultIterator.java:106)
2020-09-27T21:58:38.9200722Z at org.apache.flink.streaming.api.operators.collect.CollectResultIterator.hasNext(CollectResultIterator.java:77)
2020-09-27T21:58:38.9201290Z at org.apache.flink.streaming.api.datastream.DataStreamUtils.collectRecordsFromUnboundedStream(DataStreamUtils.java:150)
2020-09-27T21:58:38.9201920Z at org.apache.flink.connector.file.src.FileSourceTextLinesITCase.testContinuousTextFileSource(FileSourceTextLinesITCase.java:136)
2020-09-27T21:58:38.9202570Z at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
2020-09-27T21:58:38.9203054Z at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
2020-09-27T21:58:38.9203539Z at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
2020-09-27T21:58:38.9203968Z at java.lang.reflect.Method.invoke(Method.java:498)
2020-09-27T21:58:38.9204369Z at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)
2020-09-27T21:58:38.9204844Z at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
2020-09-27T21:58:38.9205359Z at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)
2020-09-27T21:58:38.9205814Z at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)
2020-09-27T21:58:38.9206240Z at org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:55)
2020-09-27T21:58:38.9206611Z at org.junit.rules.RunRules.evaluate(RunRules.java:20)
2020-09-27T21:58:38.9206971Z at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)
2020-09-27T21:58:38.9207404Z at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)
2020-09-27T21:58:38.9207971Z at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)
2020-09-27T21:58:38.9208404Z at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)
2020-09-27T21:58:38.9208877Z at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)
2020-09-27T21:58:38.9209279Z at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)
2020-09-27T21:58:38.9209680Z at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)
2020-09-27T21:58:38.9210064Z at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)
2020-09-27T21:58:38.9210476Z at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)
2020-09-27T21:58:38.9210881Z at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)
2020-09-27T21:58:38.9211272Z at org.junit.rules.RunRules.evaluate(RunRules.java:20)
2020-09-27T21:58:38.9211638Z at org.junit.runners.ParentRunner.run(ParentRunner.java:363)
2020-09-27T21:58:38.9212305Z at org.apache.maven.surefire.junit4.JUnit4Provider.execute(JUnit4Provider.java:365)
2020-09-27T21:58:38.9213157Z at org.apache.maven.surefire.junit4.JUnit4Provider.executeWithRerun(JUnit4Provider.java:273)
2020-09-27T21:58:38.9213663Z at org.apache.maven.surefire.junit4.JUnit4Provider.executeTestSet(JUnit4Provider.java:238)
2020-09-27T21:58:38.9214123Z at org.apache.maven.surefire.junit4.JUnit4Provider.invoke(JUnit4Provider.java:159)
2020-09-27T21:58:38.9214620Z at org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)
2020-09-27T21:58:38.9215148Z at org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:345)
2020-09-27T21:58:38.9215650Z at org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:126)
2020-09-27T21:58:38.9216095Z at org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:418)
2020-09-27T21:58:38.9216516Z Caused by: java.io.IOException: Failed to fetch job execution result
2020-09-27T21:58:38.9217004Z at

[jira] [Updated] (FLINK-19437) FileSourceTextLinesITCase.testContinuousTextFileSource failed with "SimpleStreamFormat is not splittable, but found split end (0) different from file length (198)"

[

https://issues.apache.org/jira/browse/FLINK-19437?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Dian Fu updated FLINK-19437:

Component/s: Tests

> FileSourceTextLinesITCase.testContinuousTextFileSource failed with

> "SimpleStreamFormat is not splittable, but found split end (0) different from

> file length (198)"

> ---

>

> Key: FLINK-19437

> URL: https://issues.apache.org/jira/browse/FLINK-19437

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem, Tests

> Affects Versions: 1.12.0

> Reporter: Dian Fu

> Priority: Major

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=7008=logs=fc5181b0-e452-5c8f-68de-1097947f6483=62110053-334f-5295-a0ab-80dd7e2babbf

> {code}

> 2020-09-27T21:58:38.9199090Z [ERROR]

> testContinuousTextFileSource(org.apache.flink.connector.file.src.FileSourceTextLinesITCase)

> Time elapsed: 0.517 s <<< ERROR!

> 2020-09-27T21:58:38.9199619Z java.lang.RuntimeException: Failed to fetch next

> result

> 2020-09-27T21:58:38.9200118Z at

> org.apache.flink.streaming.api.operators.collect.CollectResultIterator.nextResultFromFetcher(CollectResultIterator.java:106)

> 2020-09-27T21:58:38.9200722Z at

> org.apache.flink.streaming.api.operators.collect.CollectResultIterator.hasNext(CollectResultIterator.java:77)

> 2020-09-27T21:58:38.9201290Z at

> org.apache.flink.streaming.api.datastream.DataStreamUtils.collectRecordsFromUnboundedStream(DataStreamUtils.java:150)

> 2020-09-27T21:58:38.9201920Z at

> org.apache.flink.connector.file.src.FileSourceTextLinesITCase.testContinuousTextFileSource(FileSourceTextLinesITCase.java:136)

> 2020-09-27T21:58:38.9202570Z at

> sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> 2020-09-27T21:58:38.9203054Z at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> 2020-09-27T21:58:38.9203539Z at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> 2020-09-27T21:58:38.9203968Z at

> java.lang.reflect.Method.invoke(Method.java:498)

> 2020-09-27T21:58:38.9204369Z at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

> 2020-09-27T21:58:38.9204844Z at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> 2020-09-27T21:58:38.9205359Z at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

> 2020-09-27T21:58:38.9205814Z at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> 2020-09-27T21:58:38.9206240Z at

> org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:55)

> 2020-09-27T21:58:38.9206611Z at

> org.junit.rules.RunRules.evaluate(RunRules.java:20)

> 2020-09-27T21:58:38.9206971Z at

> org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)

> 2020-09-27T21:58:38.9207404Z at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)

> 2020-09-27T21:58:38.9207971Z at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)

> 2020-09-27T21:58:38.9208404Z at

> org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)

> 2020-09-27T21:58:38.9208877Z at

> org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)

> 2020-09-27T21:58:38.9209279Z at

> org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)

> 2020-09-27T21:58:38.9209680Z at

> org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)

> 2020-09-27T21:58:38.9210064Z at

> org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)

> 2020-09-27T21:58:38.9210476Z at

> org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

> 2020-09-27T21:58:38.9210881Z at

> org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

> 2020-09-27T21:58:38.9211272Z at

> org.junit.rules.RunRules.evaluate(RunRules.java:20)

> 2020-09-27T21:58:38.9211638Z at

> org.junit.runners.ParentRunner.run(ParentRunner.java:363)

> 2020-09-27T21:58:38.9212305Z at

> org.apache.maven.surefire.junit4.JUnit4Provider.execute(JUnit4Provider.java:365)

> 2020-09-27T21:58:38.9213157Z at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeWithRerun(JUnit4Provider.java:273)

> 2020-09-27T21:58:38.9213663Z at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeTestSet(JUnit4Provider.java:238)

> 2020-09-27T21:58:38.9214123Z at

> org.apache.maven.surefire.junit4.JUnit4Provider.invoke(JUnit4Provider.java:159)

> 2020-09-27T21:58:38.9214620Z at

> org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)

> 2020-09-27T21:58:38.9215148Z at

>

[jira] [Commented] (FLINK-19437) FileSourceTextLinesITCase.testContinuousTextFileSource failed with "SimpleStreamFormat is not splittable, but found split end (0) different from file length (198)"

[

https://issues.apache.org/jira/browse/FLINK-19437?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17202948#comment-17202948

]

Dian Fu commented on FLINK-19437:

-

cc [~sewen]

> FileSourceTextLinesITCase.testContinuousTextFileSource failed with

> "SimpleStreamFormat is not splittable, but found split end (0) different from

> file length (198)"

> ---

>

> Key: FLINK-19437

> URL: https://issues.apache.org/jira/browse/FLINK-19437

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem, Tests

> Affects Versions: 1.12.0

> Reporter: Dian Fu

> Priority: Major

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=7008=logs=fc5181b0-e452-5c8f-68de-1097947f6483=62110053-334f-5295-a0ab-80dd7e2babbf


[jira] [Updated] (FLINK-19436) TPC-DS end-to-end test (Blink planner) failed during shutdown

[

https://issues.apache.org/jira/browse/FLINK-19436?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Dian Fu updated FLINK-19436:

Labels: test-stability (was: )

> TPC-DS end-to-end test (Blink planner) failed during shutdown

> -

>

> Key: FLINK-19436

> URL: https://issues.apache.org/jira/browse/FLINK-19436

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner, Tests

> Affects Versions: 1.11.0

> Reporter: Dian Fu

> Priority: Major

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=7009=logs=c88eea3b-64a0-564d-0031-9fdcd7b8abee=2b7514ee-e706-5046-657b-3430666e7bd9

> {code}

> 2020-09-27T22:37:53.2236467Z Stopping taskexecutor daemon (pid: 2992) on host

> fv-az655.

> 2020-09-27T22:37:53.4450715Z Stopping standalonesession daemon (pid: 2699) on

> host fv-az655.

> 2020-09-27T22:37:53.8014537Z Skipping taskexecutor daemon (pid: 11173),

> because it is not running anymore on fv-az655.

> 2020-09-27T22:37:53.8019740Z Skipping taskexecutor daemon (pid: 11561),

> because it is not running anymore on fv-az655.

> 2020-09-27T22:37:53.8022857Z Skipping taskexecutor daemon (pid: 11849),

> because it is not running anymore on fv-az655.

> 2020-09-27T22:37:53.8023616Z Skipping taskexecutor daemon (pid: 12180),

> because it is not running anymore on fv-az655.

> 2020-09-27T22:37:53.8024327Z Skipping taskexecutor daemon (pid: 12950),

> because it is not running anymore on fv-az655.

> 2020-09-27T22:37:53.8025027Z Skipping taskexecutor daemon (pid: 13472),

> because it is not running anymore on fv-az655.

> 2020-09-27T22:37:53.8025727Z Skipping taskexecutor daemon (pid: 16577),

> because it is not running anymore on fv-az655.