[GitHub] [flink] lirui-apache commented on pull request #14203: [FLINK-20241][hive] Improve exception message when hive deps are miss…

lirui-apache commented on pull request #14203: URL: https://github.com/apache/flink/pull/14203#issuecomment-734138103 @StephanEwen @JingsongLi Would you mind have a look at the PR? Thanks. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Assigned] (FLINK-20365) The native k8s cluster could not be unregistered when executing Python DataStream application attachedly.

[ https://issues.apache.org/jira/browse/FLINK-20365?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Dian Fu reassigned FLINK-20365: --- Assignee: Shuiqiang Chen > The native k8s cluster could not be unregistered when executing Python > DataStream application attachedly. > - > > Key: FLINK-20365 > URL: https://issues.apache.org/jira/browse/FLINK-20365 > Project: Flink > Issue Type: Bug > Components: API / Python >Reporter: Shuiqiang Chen >Assignee: Shuiqiang Chen >Priority: Major > Fix For: 1.12.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (FLINK-20345) Adds an Expand node only if there are multiple distinct aggregate functions in an Aggregate when executes SplitAggregateRule

[

https://issues.apache.org/jira/browse/FLINK-20345?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17239107#comment-17239107

]

Jingsong Lee commented on FLINK-20345:

--

[~qingru zhang] Assigned to u

> Adds an Expand node only if there are multiple distinct aggregate functions

> in an Aggregate when executes SplitAggregateRule

>

>

> Key: FLINK-20345

> URL: https://issues.apache.org/jira/browse/FLINK-20345

> Project: Flink

> Issue Type: Improvement

> Components: Table SQL / Planner

>Affects Versions: 1.11.2

>Reporter: Andy

>Assignee: Andy

>Priority: Major

> Fix For: 1.11.3

>

>

> As mentioned in [Flink

> Document|https://ci.apache.org/projects/flink/flink-docs-stable/dev/table/tuning/streaming_aggregation_optimization.html],

> we could split distinct aggregation to solve skew data on distinct keys

> which is a very good optimization. However, an unnecessary `Expand` node will

> be generated under some special cases, like the following sql.

> {code:java}

> SELECT COUNT(c) AS pv, COUNT(DISTINCT c) AS uv FROM T GROUP BY a

> {code}

> Which plan is like the following text, the Expand and filter condition in

> aggregate functions could be removed.

> {code:java}

> Sink(name=[DataStreamTableSink], fields=[pv, uv])

> +- Calc(select=[pv, uv])

>+- GroupAggregate(groupBy=[a], partialFinalType=[FINAL], select=[a,

> $SUM0_RETRACT($f2_0) AS $f1, $SUM0_RETRACT($f3) AS $f2])

> +- Exchange(distribution=[hash[a]])

> +- GroupAggregate(groupBy=[a, $f2], partialFinalType=[PARTIAL],

> select=[a, $f2, COUNT(c) FILTER $g_1 AS $f2_0, COUNT(DISTINCT c) FILTER $g_0

> AS $f3])

> +- Exchange(distribution=[hash[a, $f2]])

>+- Calc(select=[a, c, $f2, =($e, 1) AS $g_1, =($e, 0) AS $g_0])

> +- Expand(projects=[{a=[$0], c=[$1], $f2=[$2], $e=[0]},

> {a=[$0], c=[$1], $f2=[null], $e=[1]}])

> +- Calc(select=[a, c, MOD(HASH_CODE(c), 1024) AS $f2])

> +- MiniBatchAssigner(interval=[1000ms],

> mode=[ProcTime])

>+- DataStreamScan(table=[[default_catalog,

> default_database, T]], fields=[a, b, c]){code}

> An `Expand` node is only necessary when multiple aggregate function with

> different distinct keys appear in an Aggregate.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (FLINK-20345) Adds an Expand node only if there are multiple distinct aggregate functions in an Aggregate when executes SplitAggregateRule

[

https://issues.apache.org/jira/browse/FLINK-20345?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jingsong Lee updated FLINK-20345:

-

Fix Version/s: (was: 1.11.3)

1.13.0

> Adds an Expand node only if there are multiple distinct aggregate functions

> in an Aggregate when executes SplitAggregateRule

>

>

> Key: FLINK-20345

> URL: https://issues.apache.org/jira/browse/FLINK-20345

> Project: Flink

> Issue Type: Improvement

> Components: Table SQL / Planner

>Affects Versions: 1.11.2

>Reporter: Andy

>Assignee: Andy

>Priority: Major

> Fix For: 1.13.0

>

>

> As mentioned in [Flink

> Document|https://ci.apache.org/projects/flink/flink-docs-stable/dev/table/tuning/streaming_aggregation_optimization.html],

> we could split distinct aggregation to solve skew data on distinct keys

> which is a very good optimization. However, an unnecessary `Expand` node will

> be generated under some special cases, like the following sql.

> {code:java}

> SELECT COUNT(c) AS pv, COUNT(DISTINCT c) AS uv FROM T GROUP BY a

> {code}

> Which plan is like the following text, the Expand and filter condition in

> aggregate functions could be removed.

> {code:java}

> Sink(name=[DataStreamTableSink], fields=[pv, uv])

> +- Calc(select=[pv, uv])

>+- GroupAggregate(groupBy=[a], partialFinalType=[FINAL], select=[a,

> $SUM0_RETRACT($f2_0) AS $f1, $SUM0_RETRACT($f3) AS $f2])

> +- Exchange(distribution=[hash[a]])

> +- GroupAggregate(groupBy=[a, $f2], partialFinalType=[PARTIAL],

> select=[a, $f2, COUNT(c) FILTER $g_1 AS $f2_0, COUNT(DISTINCT c) FILTER $g_0

> AS $f3])

> +- Exchange(distribution=[hash[a, $f2]])

>+- Calc(select=[a, c, $f2, =($e, 1) AS $g_1, =($e, 0) AS $g_0])

> +- Expand(projects=[{a=[$0], c=[$1], $f2=[$2], $e=[0]},

> {a=[$0], c=[$1], $f2=[null], $e=[1]}])

> +- Calc(select=[a, c, MOD(HASH_CODE(c), 1024) AS $f2])

> +- MiniBatchAssigner(interval=[1000ms],

> mode=[ProcTime])

>+- DataStreamScan(table=[[default_catalog,

> default_database, T]], fields=[a, b, c]){code}

> An `Expand` node is only necessary when multiple aggregate function with

> different distinct keys appear in an Aggregate.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Assigned] (FLINK-20345) Adds an Expand node only if there are multiple distinct aggregate functions in an Aggregate when executes SplitAggregateRule

[

https://issues.apache.org/jira/browse/FLINK-20345?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jingsong Lee reassigned FLINK-20345:

Assignee: Andy

> Adds an Expand node only if there are multiple distinct aggregate functions

> in an Aggregate when executes SplitAggregateRule

>

>

> Key: FLINK-20345

> URL: https://issues.apache.org/jira/browse/FLINK-20345

> Project: Flink

> Issue Type: Improvement

> Components: Table SQL / Planner

>Affects Versions: 1.11.2

>Reporter: Andy

>Assignee: Andy

>Priority: Major

> Fix For: 1.11.3

>

>

> As mentioned in [Flink

> Document|https://ci.apache.org/projects/flink/flink-docs-stable/dev/table/tuning/streaming_aggregation_optimization.html],

> we could split distinct aggregation to solve skew data on distinct keys

> which is a very good optimization. However, an unnecessary `Expand` node will

> be generated under some special cases, like the following sql.

> {code:java}

> SELECT COUNT(c) AS pv, COUNT(DISTINCT c) AS uv FROM T GROUP BY a

> {code}

> Which plan is like the following text, the Expand and filter condition in

> aggregate functions could be removed.

> {code:java}

> Sink(name=[DataStreamTableSink], fields=[pv, uv])

> +- Calc(select=[pv, uv])

>+- GroupAggregate(groupBy=[a], partialFinalType=[FINAL], select=[a,

> $SUM0_RETRACT($f2_0) AS $f1, $SUM0_RETRACT($f3) AS $f2])

> +- Exchange(distribution=[hash[a]])

> +- GroupAggregate(groupBy=[a, $f2], partialFinalType=[PARTIAL],

> select=[a, $f2, COUNT(c) FILTER $g_1 AS $f2_0, COUNT(DISTINCT c) FILTER $g_0

> AS $f3])

> +- Exchange(distribution=[hash[a, $f2]])

>+- Calc(select=[a, c, $f2, =($e, 1) AS $g_1, =($e, 0) AS $g_0])

> +- Expand(projects=[{a=[$0], c=[$1], $f2=[$2], $e=[0]},

> {a=[$0], c=[$1], $f2=[null], $e=[1]}])

> +- Calc(select=[a, c, MOD(HASH_CODE(c), 1024) AS $f2])

> +- MiniBatchAssigner(interval=[1000ms],

> mode=[ProcTime])

>+- DataStreamScan(table=[[default_catalog,

> default_database, T]], fields=[a, b, c]){code}

> An `Expand` node is only necessary when multiple aggregate function with

> different distinct keys appear in an Aggregate.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [flink] flinkbot commented on pull request #14228: [FLINK-20349][table-planner-blink] Fix checking for deadlock caused by exchange

flinkbot commented on pull request #14228: URL: https://github.com/apache/flink/pull/14228#issuecomment-734132510 ## CI report: * 523a5b439481c59e89ed2c548b36cc0ae8df69c9 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] AHeise commented on pull request #13885: [FLINK-19911] add read buffer for input stream

AHeise commented on pull request #13885: URL: https://github.com/apache/flink/pull/13885#issuecomment-734129526 > > > > `LocalFileSystem` > > > > > > > > > Yes, it looks like `LocalFileSystem` should also be a good candidate. In particular `#open(Path, int)` currently ignores the buffer size... > > > I'm wondering if it makes more sense to create two PRs though. Even though the solution is pretty much the same, the underlying issues are different imho. The smaller a PR, the faster we can usually go. However, since they are so related, I wouldn't mind both issues being resolved in the same PR. > > > > > > Hi @AHeise , thanks for your quick reply. > > I think it is okay to create two PRs. The current PR developed `FsDataBuefferedInputStream` and applied it to `HadoopFileSysytem`. > > The next PR considers whether other FileSystem are profitable. > > Hi @AHeise , > > Do you think this plan is OK? If ok, I will develop the code of the current PR when I have time. Yes @1996fanrui , please go ahead. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-20345) Adds an Expand node only if there are multiple distinct aggregate functions in an Aggregate when executes SplitAggregateRule

[

https://issues.apache.org/jira/browse/FLINK-20345?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17239105#comment-17239105

]

Andy commented on FLINK-20345:

--

[~lzljs3620320] I agree with you. Could I take this issue?

> Adds an Expand node only if there are multiple distinct aggregate functions

> in an Aggregate when executes SplitAggregateRule

>

>

> Key: FLINK-20345

> URL: https://issues.apache.org/jira/browse/FLINK-20345

> Project: Flink

> Issue Type: Improvement

> Components: Table SQL / Planner

>Affects Versions: 1.11.2

>Reporter: Andy

>Priority: Major

> Fix For: 1.11.3

>

>

> As mentioned in [Flink

> Document|https://ci.apache.org/projects/flink/flink-docs-stable/dev/table/tuning/streaming_aggregation_optimization.html],

> we could split distinct aggregation to solve skew data on distinct keys

> which is a very good optimization. However, an unnecessary `Expand` node will

> be generated under some special cases, like the following sql.

> {code:java}

> SELECT COUNT(c) AS pv, COUNT(DISTINCT c) AS uv FROM T GROUP BY a

> {code}

> Which plan is like the following text, the Expand and filter condition in

> aggregate functions could be removed.

> {code:java}

> Sink(name=[DataStreamTableSink], fields=[pv, uv])

> +- Calc(select=[pv, uv])

>+- GroupAggregate(groupBy=[a], partialFinalType=[FINAL], select=[a,

> $SUM0_RETRACT($f2_0) AS $f1, $SUM0_RETRACT($f3) AS $f2])

> +- Exchange(distribution=[hash[a]])

> +- GroupAggregate(groupBy=[a, $f2], partialFinalType=[PARTIAL],

> select=[a, $f2, COUNT(c) FILTER $g_1 AS $f2_0, COUNT(DISTINCT c) FILTER $g_0

> AS $f3])

> +- Exchange(distribution=[hash[a, $f2]])

>+- Calc(select=[a, c, $f2, =($e, 1) AS $g_1, =($e, 0) AS $g_0])

> +- Expand(projects=[{a=[$0], c=[$1], $f2=[$2], $e=[0]},

> {a=[$0], c=[$1], $f2=[null], $e=[1]}])

> +- Calc(select=[a, c, MOD(HASH_CODE(c), 1024) AS $f2])

> +- MiniBatchAssigner(interval=[1000ms],

> mode=[ProcTime])

>+- DataStreamScan(table=[[default_catalog,

> default_database, T]], fields=[a, b, c]){code}

> An `Expand` node is only necessary when multiple aggregate function with

> different distinct keys appear in an Aggregate.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Closed] (FLINK-20362) Broken Link in dev/table/sourceSinks.zh.md

[

https://issues.apache.org/jira/browse/FLINK-20362?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Dian Fu closed FLINK-20362.

---

Resolution: Fixed

Fixed in

- master via 47d81ce1328fb5979e237048bdfc8bae5d88d283

- release-1.12 via 3ebeb6c8f5ab6791e32a4f98210a4b8fd8e45cd8

> Broken Link in dev/table/sourceSinks.zh.md

> --

>

> Key: FLINK-20362

> URL: https://issues.apache.org/jira/browse/FLINK-20362

> Project: Flink

> Issue Type: Bug

> Components: Documentation, Table SQL / API

>Affects Versions: 1.12.0

>Reporter: Huang Xingbo

>Assignee: Shengkai Fang

>Priority: Major

> Labels: pull-request-available

> Fix For: 1.12.0

>

>

> When executing the script build_docs.sh, it will throw the following

> exception:

> {code:java}

> Liquid Exception: Could not find document 'dev/table/legacySourceSinks.md' in

> tag 'link'. Make sure the document exists and the path is correct. in

> dev/table/sourceSinks.zh.md Could not find document

> 'dev/table/legacySourceSinks.md' in tag 'link'.

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [flink] flinkbot edited a comment on pull request #14227: [FLINK-20245][hive][docs] Document how to create a Hive catalog from DDL

flinkbot edited a comment on pull request #14227: URL: https://github.com/apache/flink/pull/14227#issuecomment-734118464 ## CI report: * 22f1148159795b2c6e292bc018df4292fd0692a8 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=10152) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] dianfu closed pull request #14226: [FLINK-20362][doc] Fix broken link in sourceSinks.zh.md

dianfu closed pull request #14226: URL: https://github.com/apache/flink/pull/14226 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #14228: [FLINK-20349][table-planner-blink] Fix checking for deadlock caused by exchange

flinkbot commented on pull request #14228: URL: https://github.com/apache/flink/pull/14228#issuecomment-734123858 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 523a5b439481c59e89ed2c548b36cc0ae8df69c9 (Thu Nov 26 07:23:59 UTC 2020) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-20349) Query fails with "A conflict is detected. This is unexpected."

[

https://issues.apache.org/jira/browse/FLINK-20349?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated FLINK-20349:

---

Labels: pull-request-available (was: )

> Query fails with "A conflict is detected. This is unexpected."

> --

>

> Key: FLINK-20349

> URL: https://issues.apache.org/jira/browse/FLINK-20349

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Affects Versions: 1.12.0

>Reporter: Rui Li

>Assignee: Caizhi Weng

>Priority: Major

> Labels: pull-request-available

> Fix For: 1.12.0

>

>

> The test case to reproduce:

> {code}

> @Test

> public void test() throws Exception {

> tableEnv.executeSql("create table src(key string,val string)");

> tableEnv.executeSql("SELECT sum(char_length(src5.src1_value))

> FROM " +

> "(SELECT src3.*, src4.val as src4_value,

> src4.key as src4_key FROM src src4 JOIN " +

> "(SELECT src2.*, src1.key as src1_key, src1.val

> as src1_value FROM src src1 JOIN src src2 ON src1.key = src2.key) src3 " +

> "ON src3.src1_key = src4.key) src5").collect();

> }

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [flink] TsReaper opened a new pull request #14228: [FLINK-20349][table-planner-blink] Fix checking for deadlock caused by exchange

TsReaper opened a new pull request #14228: URL: https://github.com/apache/flink/pull/14228 ## What is the purpose of the change This is the bug fix for FLINK-20349, where the checking for deadlock caused by exchange in the deadlock break-up algorithm does not work for some cases. ## Brief change log - Fix checking for deadlock caused by exchange ## Verifying this change This change added tests and can be verified by running the added tests. ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): no - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no - The serializers: no - The runtime per-record code paths (performance sensitive): no - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: no - The S3 file system connector: no ## Documentation - Does this pull request introduce a new feature? no - If yes, how is the feature documented? not applicable This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (FLINK-20331) UnalignedCheckpointITCase.execute failed with "Sequence number for checkpoint 20 is not known (it was likely been overwritten by a newer checkpoint 21)"

[

https://issues.apache.org/jira/browse/FLINK-20331?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17239097#comment-17239097

]

Arvid Heise edited comment on FLINK-20331 at 11/26/20, 7:18 AM:

Not a real bug but a too strict assumption.

Merged a fix as

[50af0b161b1962d9db5c692aa965b310a4000da9|https://github.com/apache/flink/commit/50af0b161b1962d9db5c692aa965b310a4000da9]

in master.

Merged as 2c77c38d55 into release-1.12.

was (Author: aheise):

Not a real bug but a too strict assumption.

Merged a fix as

[50af0b161b1962d9db5c692aa965b310a4000da9|https://github.com/apache/flink/commit/50af0b161b1962d9db5c692aa965b310a4000da9]

in master.

> UnalignedCheckpointITCase.execute failed with "Sequence number for checkpoint

> 20 is not known (it was likely been overwritten by a newer checkpoint 21)"

>

>

> Key: FLINK-20331

> URL: https://issues.apache.org/jira/browse/FLINK-20331

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Checkpointing

>Affects Versions: 1.12.0

>Reporter: Dian Fu

>Assignee: Roman Khachatryan

>Priority: Blocker

> Labels: pull-request-available, test-stability

> Fix For: 1.12.0

>

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=10059&view=logs&j=119bbba7-f5e3-5e08-e72d-09f1529665de&t=7dc1f5a9-54e1-502e-8b02-c7df69073cfc

> {code}

> 2020-11-24T22:42:17.6704402Z [ERROR] execute[parallel pipeline with mixed

> channels, p =

> 20](org.apache.flink.test.checkpointing.UnalignedCheckpointITCase) Time

> elapsed: 7.901 s <<< ERROR!

> 2020-11-24T22:42:17.6706095Z

> org.apache.flink.runtime.client.JobExecutionException: Job execution failed.

> 2020-11-24T22:42:17.6707450Z at

> org.apache.flink.runtime.jobmaster.JobResult.toJobExecutionResult(JobResult.java:147)

> 2020-11-24T22:42:17.6708569Z at

> org.apache.flink.runtime.minicluster.MiniClusterJobClient.lambda$getJobExecutionResult$2(MiniClusterJobClient.java:119)

> 2020-11-24T22:42:17.6709626Z at

> java.util.concurrent.CompletableFuture.uniApply(CompletableFuture.java:616)

> 2020-11-24T22:42:17.6710452Z at

> java.util.concurrent.CompletableFuture$UniApply.tryFire(CompletableFuture.java:591)

> 2020-11-24T22:42:17.6711271Z at

> java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:488)

> 2020-11-24T22:42:17.6713170Z at

> java.util.concurrent.CompletableFuture.complete(CompletableFuture.java:1975)

> 2020-11-24T22:42:17.6713974Z at

> org.apache.flink.runtime.rpc.akka.AkkaInvocationHandler.lambda$invokeRpc$0(AkkaInvocationHandler.java:229)

> 2020-11-24T22:42:17.6714517Z at

> java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:774)

> 2020-11-24T22:42:17.6715372Z at

> java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:750)

> 2020-11-24T22:42:17.6715871Z at

> java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:488)

> 2020-11-24T22:42:17.6716514Z at

> java.util.concurrent.CompletableFuture.complete(CompletableFuture.java:1975)

> 2020-11-24T22:42:17.6718475Z at

> org.apache.flink.runtime.concurrent.FutureUtils$1.onComplete(FutureUtils.java:996)

> 2020-11-24T22:42:17.6719322Z at

> akka.dispatch.OnComplete.internal(Future.scala:264)

> 2020-11-24T22:42:17.6719887Z at

> akka.dispatch.OnComplete.internal(Future.scala:261)

> 2020-11-24T22:42:17.6720271Z at

> akka.dispatch.japi$CallbackBridge.apply(Future.scala:191)

> 2020-11-24T22:42:17.6720645Z at

> akka.dispatch.japi$CallbackBridge.apply(Future.scala:188)

> 2020-11-24T22:42:17.6721114Z at

> scala.concurrent.impl.CallbackRunnable.run(Promise.scala:36)

> 2020-11-24T22:42:17.6721585Z at

> org.apache.flink.runtime.concurrent.Executors$DirectExecutionContext.execute(Executors.java:74)

> 2020-11-24T22:42:17.6722078Z at

> scala.concurrent.impl.CallbackRunnable.executeWithValue(Promise.scala:44)

> 2020-11-24T22:42:17.6722738Z at

> scala.concurrent.impl.Promise$DefaultPromise.tryComplete(Promise.scala:252)

> 2020-11-24T22:42:17.6723183Z at

> akka.pattern.PromiseActorRef.$bang(AskSupport.scala:572)

> 2020-11-24T22:42:17.6723862Z at

> akka.pattern.PipeToSupport$PipeableFuture$$anonfun$pipeTo$1.applyOrElse(PipeToSupport.scala:22)

> 2020-11-24T22:42:17.6724435Z at

> akka.pattern.PipeToSupport$PipeableFuture$$anonfun$pipeTo$1.applyOrElse(PipeToSupport.scala:21)

> 2020-11-24T22:42:17.6724914Z at

> scala.concurrent.Future$$anonfun$andThen$1.apply(Future.scala:436)

> 2020-11-24T22:42:17.6725323Z at

> scala.concurrent.Future$$anonfun$andThen$1.apply(Future.scala:435)

> 2020-11-24T22:42:17.6725866Z at

> scala.concurrent.impl.CallbackRunnable.run(Prom

[jira] [Commented] (FLINK-20327) The Hive's read/write page should redirect to SQL Fileystem connector

[ https://issues.apache.org/jira/browse/FLINK-20327?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17239100#comment-17239100 ] Leonard Xu commented on FLINK-20327: I'd like to take this, could you help assign this to me ? [~dwysakowicz] > The Hive's read/write page should redirect to SQL Fileystem connector > - > > Key: FLINK-20327 > URL: https://issues.apache.org/jira/browse/FLINK-20327 > Project: Flink > Issue Type: Improvement > Components: Connectors / Hive, Documentation >Reporter: Dawid Wysakowicz >Priority: Critical > Fix For: 1.12.0 > > > Right now the Hive's read/write page redirects to SQL filesystem connector > with a note: ??Please see the StreamingFileSink for a full list of available > configurations.?? but this page actually has no configuration options. We > should link to > https://ci.apache.org/projects/flink/flink-docs-master/dev/table/connectors/filesystem.html > instead which cover the SQL related configuration. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Closed] (FLINK-20339) `FileWriter` support to load StreamingFileSink's state.

[ https://issues.apache.org/jira/browse/FLINK-20339?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Guowei Ma closed FLINK-20339. - Resolution: Won't Fix > `FileWriter` support to load StreamingFileSink's state. > --- > > Key: FLINK-20339 > URL: https://issues.apache.org/jira/browse/FLINK-20339 > Project: Flink > Issue Type: Sub-task > Components: API / DataStream >Affects Versions: 1.12.0 >Reporter: Guowei Ma >Priority: Critical > Fix For: 1.12.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Closed] (FLINK-20338) Make the `StatefulSinkWriterOperator` load `StreamingFileSink`'s state.

[ https://issues.apache.org/jira/browse/FLINK-20338?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Guowei Ma closed FLINK-20338. - Resolution: Won't Fix > Make the `StatefulSinkWriterOperator` load `StreamingFileSink`'s state. > --- > > Key: FLINK-20338 > URL: https://issues.apache.org/jira/browse/FLINK-20338 > Project: Flink > Issue Type: Sub-task > Components: API / DataStream >Affects Versions: 1.12.0 >Reporter: Guowei Ma >Priority: Critical > Labels: pull-request-available > Fix For: 1.12.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (FLINK-20337) Make migrate `StreamingFileSink` to `FileSink` possible

[ https://issues.apache.org/jira/browse/FLINK-20337?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17239098#comment-17239098 ] Guowei Ma commented on FLINK-20337: --- Sorry [~kkl0u] for responsing so late. I think it is good for other to follow what is happen if we merge the two tasks together. So I would close the two sub tasks. > Make migrate `StreamingFileSink` to `FileSink` possible > --- > > Key: FLINK-20337 > URL: https://issues.apache.org/jira/browse/FLINK-20337 > Project: Flink > Issue Type: Improvement > Components: API / DataStream >Affects Versions: 1.12.0 >Reporter: Guowei Ma >Assignee: Guowei Ma >Priority: Critical > Fix For: 1.12.0 > > > Flink-1.12 introduces the `FileSink` in FLINK-19510, which can guarantee the > exactly once semantics both in the streaming and batch execution mode. We > need to provide a way for the user who uses `StreamingFileSink` to migrate > from `StreamingFileSink` to `FileSink`. > For this purpose we propose to let the new sink writer operator could load > the previous StreamingFileSink's state and then the `SinkWriter` could have > the opertunity to handle the old state. > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] flinkbot commented on pull request #14227: [FLINK-20245][hive][docs] Document how to create a Hive catalog from DDL

flinkbot commented on pull request #14227: URL: https://github.com/apache/flink/pull/14227#issuecomment-734118464 ## CI report: * 22f1148159795b2c6e292bc018df4292fd0692a8 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Resolved] (FLINK-20331) UnalignedCheckpointITCase.execute failed with "Sequence number for checkpoint 20 is not known (it was likely been overwritten by a newer checkpoint 21)"

[

https://issues.apache.org/jira/browse/FLINK-20331?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Arvid Heise resolved FLINK-20331.

-

Resolution: Fixed

> UnalignedCheckpointITCase.execute failed with "Sequence number for checkpoint

> 20 is not known (it was likely been overwritten by a newer checkpoint 21)"

>

>

> Key: FLINK-20331

> URL: https://issues.apache.org/jira/browse/FLINK-20331

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Checkpointing

>Affects Versions: 1.12.0

>Reporter: Dian Fu

>Assignee: Roman Khachatryan

>Priority: Blocker

> Labels: pull-request-available, test-stability

> Fix For: 1.12.0

>

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=10059&view=logs&j=119bbba7-f5e3-5e08-e72d-09f1529665de&t=7dc1f5a9-54e1-502e-8b02-c7df69073cfc

> {code}

> 2020-11-24T22:42:17.6704402Z [ERROR] execute[parallel pipeline with mixed

> channels, p =

> 20](org.apache.flink.test.checkpointing.UnalignedCheckpointITCase) Time

> elapsed: 7.901 s <<< ERROR!

> 2020-11-24T22:42:17.6706095Z

> org.apache.flink.runtime.client.JobExecutionException: Job execution failed.

> 2020-11-24T22:42:17.6707450Z at

> org.apache.flink.runtime.jobmaster.JobResult.toJobExecutionResult(JobResult.java:147)

> 2020-11-24T22:42:17.6708569Z at

> org.apache.flink.runtime.minicluster.MiniClusterJobClient.lambda$getJobExecutionResult$2(MiniClusterJobClient.java:119)

> 2020-11-24T22:42:17.6709626Z at

> java.util.concurrent.CompletableFuture.uniApply(CompletableFuture.java:616)

> 2020-11-24T22:42:17.6710452Z at

> java.util.concurrent.CompletableFuture$UniApply.tryFire(CompletableFuture.java:591)

> 2020-11-24T22:42:17.6711271Z at

> java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:488)

> 2020-11-24T22:42:17.6713170Z at

> java.util.concurrent.CompletableFuture.complete(CompletableFuture.java:1975)

> 2020-11-24T22:42:17.6713974Z at

> org.apache.flink.runtime.rpc.akka.AkkaInvocationHandler.lambda$invokeRpc$0(AkkaInvocationHandler.java:229)

> 2020-11-24T22:42:17.6714517Z at

> java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:774)

> 2020-11-24T22:42:17.6715372Z at

> java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:750)

> 2020-11-24T22:42:17.6715871Z at

> java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:488)

> 2020-11-24T22:42:17.6716514Z at

> java.util.concurrent.CompletableFuture.complete(CompletableFuture.java:1975)

> 2020-11-24T22:42:17.6718475Z at

> org.apache.flink.runtime.concurrent.FutureUtils$1.onComplete(FutureUtils.java:996)

> 2020-11-24T22:42:17.6719322Z at

> akka.dispatch.OnComplete.internal(Future.scala:264)

> 2020-11-24T22:42:17.6719887Z at

> akka.dispatch.OnComplete.internal(Future.scala:261)

> 2020-11-24T22:42:17.6720271Z at

> akka.dispatch.japi$CallbackBridge.apply(Future.scala:191)

> 2020-11-24T22:42:17.6720645Z at

> akka.dispatch.japi$CallbackBridge.apply(Future.scala:188)

> 2020-11-24T22:42:17.6721114Z at

> scala.concurrent.impl.CallbackRunnable.run(Promise.scala:36)

> 2020-11-24T22:42:17.6721585Z at

> org.apache.flink.runtime.concurrent.Executors$DirectExecutionContext.execute(Executors.java:74)

> 2020-11-24T22:42:17.6722078Z at

> scala.concurrent.impl.CallbackRunnable.executeWithValue(Promise.scala:44)

> 2020-11-24T22:42:17.6722738Z at

> scala.concurrent.impl.Promise$DefaultPromise.tryComplete(Promise.scala:252)

> 2020-11-24T22:42:17.6723183Z at

> akka.pattern.PromiseActorRef.$bang(AskSupport.scala:572)

> 2020-11-24T22:42:17.6723862Z at

> akka.pattern.PipeToSupport$PipeableFuture$$anonfun$pipeTo$1.applyOrElse(PipeToSupport.scala:22)

> 2020-11-24T22:42:17.6724435Z at

> akka.pattern.PipeToSupport$PipeableFuture$$anonfun$pipeTo$1.applyOrElse(PipeToSupport.scala:21)

> 2020-11-24T22:42:17.6724914Z at

> scala.concurrent.Future$$anonfun$andThen$1.apply(Future.scala:436)

> 2020-11-24T22:42:17.6725323Z at

> scala.concurrent.Future$$anonfun$andThen$1.apply(Future.scala:435)

> 2020-11-24T22:42:17.6725866Z at

> scala.concurrent.impl.CallbackRunnable.run(Promise.scala:36)

> 2020-11-24T22:42:17.6726313Z at

> akka.dispatch.BatchingExecutor$AbstractBatch.processBatch(BatchingExecutor.scala:55)

> 2020-11-24T22:42:17.6726829Z at

> akka.dispatch.BatchingExecutor$BlockableBatch$$anonfun$run$1.apply$mcV$sp(BatchingExecutor.scala:91)

> 2020-11-24T22:42:17.6727376Z at

> akka.dispatch.BatchingExecutor$BlockableBatch$$anonfun$run$1.apply(BatchingExecutor.scala:91)

> 2020-11-24T22:42:17.6727891Z at

> akka.dispatch.BatchingExecutor$BlockableBatch$$anonfun$run$1.apply(BatchingExecutor.scala:91)

> 2020

[jira] [Commented] (FLINK-20331) UnalignedCheckpointITCase.execute failed with "Sequence number for checkpoint 20 is not known (it was likely been overwritten by a newer checkpoint 21)"

[

https://issues.apache.org/jira/browse/FLINK-20331?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17239097#comment-17239097

]

Arvid Heise commented on FLINK-20331:

-

Not a real bug but a too strict assumption.

Merged a fix as

[50af0b161b1962d9db5c692aa965b310a4000da9|https://github.com/apache/flink/commit/50af0b161b1962d9db5c692aa965b310a4000da9]

in master.

> UnalignedCheckpointITCase.execute failed with "Sequence number for checkpoint

> 20 is not known (it was likely been overwritten by a newer checkpoint 21)"

>

>

> Key: FLINK-20331

> URL: https://issues.apache.org/jira/browse/FLINK-20331

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Checkpointing

>Affects Versions: 1.12.0

>Reporter: Dian Fu

>Assignee: Roman Khachatryan

>Priority: Blocker

> Labels: pull-request-available, test-stability

> Fix For: 1.12.0

>

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=10059&view=logs&j=119bbba7-f5e3-5e08-e72d-09f1529665de&t=7dc1f5a9-54e1-502e-8b02-c7df69073cfc

> {code}

> 2020-11-24T22:42:17.6704402Z [ERROR] execute[parallel pipeline with mixed

> channels, p =

> 20](org.apache.flink.test.checkpointing.UnalignedCheckpointITCase) Time

> elapsed: 7.901 s <<< ERROR!

> 2020-11-24T22:42:17.6706095Z

> org.apache.flink.runtime.client.JobExecutionException: Job execution failed.

> 2020-11-24T22:42:17.6707450Z at

> org.apache.flink.runtime.jobmaster.JobResult.toJobExecutionResult(JobResult.java:147)

> 2020-11-24T22:42:17.6708569Z at

> org.apache.flink.runtime.minicluster.MiniClusterJobClient.lambda$getJobExecutionResult$2(MiniClusterJobClient.java:119)

> 2020-11-24T22:42:17.6709626Z at

> java.util.concurrent.CompletableFuture.uniApply(CompletableFuture.java:616)

> 2020-11-24T22:42:17.6710452Z at

> java.util.concurrent.CompletableFuture$UniApply.tryFire(CompletableFuture.java:591)

> 2020-11-24T22:42:17.6711271Z at

> java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:488)

> 2020-11-24T22:42:17.6713170Z at

> java.util.concurrent.CompletableFuture.complete(CompletableFuture.java:1975)

> 2020-11-24T22:42:17.6713974Z at

> org.apache.flink.runtime.rpc.akka.AkkaInvocationHandler.lambda$invokeRpc$0(AkkaInvocationHandler.java:229)

> 2020-11-24T22:42:17.6714517Z at

> java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:774)

> 2020-11-24T22:42:17.6715372Z at

> java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:750)

> 2020-11-24T22:42:17.6715871Z at

> java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:488)

> 2020-11-24T22:42:17.6716514Z at

> java.util.concurrent.CompletableFuture.complete(CompletableFuture.java:1975)

> 2020-11-24T22:42:17.6718475Z at

> org.apache.flink.runtime.concurrent.FutureUtils$1.onComplete(FutureUtils.java:996)

> 2020-11-24T22:42:17.6719322Z at

> akka.dispatch.OnComplete.internal(Future.scala:264)

> 2020-11-24T22:42:17.6719887Z at

> akka.dispatch.OnComplete.internal(Future.scala:261)

> 2020-11-24T22:42:17.6720271Z at

> akka.dispatch.japi$CallbackBridge.apply(Future.scala:191)

> 2020-11-24T22:42:17.6720645Z at

> akka.dispatch.japi$CallbackBridge.apply(Future.scala:188)

> 2020-11-24T22:42:17.6721114Z at

> scala.concurrent.impl.CallbackRunnable.run(Promise.scala:36)

> 2020-11-24T22:42:17.6721585Z at

> org.apache.flink.runtime.concurrent.Executors$DirectExecutionContext.execute(Executors.java:74)

> 2020-11-24T22:42:17.6722078Z at

> scala.concurrent.impl.CallbackRunnable.executeWithValue(Promise.scala:44)

> 2020-11-24T22:42:17.6722738Z at

> scala.concurrent.impl.Promise$DefaultPromise.tryComplete(Promise.scala:252)

> 2020-11-24T22:42:17.6723183Z at

> akka.pattern.PromiseActorRef.$bang(AskSupport.scala:572)

> 2020-11-24T22:42:17.6723862Z at

> akka.pattern.PipeToSupport$PipeableFuture$$anonfun$pipeTo$1.applyOrElse(PipeToSupport.scala:22)

> 2020-11-24T22:42:17.6724435Z at

> akka.pattern.PipeToSupport$PipeableFuture$$anonfun$pipeTo$1.applyOrElse(PipeToSupport.scala:21)

> 2020-11-24T22:42:17.6724914Z at

> scala.concurrent.Future$$anonfun$andThen$1.apply(Future.scala:436)

> 2020-11-24T22:42:17.6725323Z at

> scala.concurrent.Future$$anonfun$andThen$1.apply(Future.scala:435)

> 2020-11-24T22:42:17.6725866Z at

> scala.concurrent.impl.CallbackRunnable.run(Promise.scala:36)

> 2020-11-24T22:42:17.6726313Z at

> akka.dispatch.BatchingExecutor$AbstractBatch.processBatch(BatchingExecutor.scala:55)

> 2020-11-24T22:42:17.6726829Z at

> akka.dispatch.BatchingExecutor$BlockableBatch$$anonfun$run$1.apply$mcV$sp(BatchingExecutor.scala:91)

> 2020-11-24T22:42:17.6727376Z at

> a

[GitHub] [flink] flinkbot edited a comment on pull request #13983: [FLINK-19989][python] Add collect operation in Python DataStream API

flinkbot edited a comment on pull request #13983: URL: https://github.com/apache/flink/pull/13983#issuecomment-723574143 ## CI report: * fe0505f91d71c3a5947bdac49a8b3fb91983d5c5 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=9922) * 5ed0db74dfc9f605365d6cbf811b5092b3eca062 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=10150) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] 1996fanrui commented on pull request #13885: [FLINK-19911] add read buffer for input stream

1996fanrui commented on pull request #13885: URL: https://github.com/apache/flink/pull/13885#issuecomment-734116774 > > > `LocalFileSystem` > > > > > > Yes, it looks like `LocalFileSystem` should also be a good candidate. In particular `#open(Path, int)` currently ignores the buffer size... > > I'm wondering if it makes more sense to create two PRs though. Even though the solution is pretty much the same, the underlying issues are different imho. The smaller a PR, the faster we can usually go. However, since they are so related, I wouldn't mind both issues being resolved in the same PR. > > Hi @AHeise , thanks for your quick reply. > > I think it is okay to create two PRs. The current PR developed `FsDataBuefferedInputStream` and applied it to `HadoopFileSysytem`. > > The next PR considers whether other FileSystem are profitable. Hi @AHeise , Do you think this plan is OK? If ok, I will develop the code of the current PR when I have time. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] AHeise merged pull request #14218: [FLINK-20331][checkpointing][task] Don't fail the task if unaligned checkpoint was subsumed

AHeise merged pull request #14218: URL: https://github.com/apache/flink/pull/14218 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-20213) Partition commit is delayed when records keep coming

[ https://issues.apache.org/jira/browse/FLINK-20213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17239096#comment-17239096 ] zouyunhe commented on FLINK-20213: -- [~lzljs3620320] OK > Partition commit is delayed when records keep coming > > > Key: FLINK-20213 > URL: https://issues.apache.org/jira/browse/FLINK-20213 > Project: Flink > Issue Type: Bug > Components: Connectors / FileSystem, Table SQL / Ecosystem >Affects Versions: 1.11.2 >Reporter: Jingsong Lee >Assignee: Jingsong Lee >Priority: Major > Labels: pull-request-available > Fix For: 1.12.0, 1.11.3 > > Attachments: image-2020-11-26-12-00-23-542.png, > image-2020-11-26-12-00-55-829.png > > > When set partition-commit.delay=0, Users expect partitions to be committed > immediately. > However, if the record of this partition continues to flow in, the bucket for > the partition will be activated, and no inactive bucket will appear. > We need to consider listening to bucket created. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Created] (FLINK-20367) Show the in-use config of job to users

zlzhang0122 created FLINK-20367: --- Summary: Show the in-use config of job to users Key: FLINK-20367 URL: https://issues.apache.org/jira/browse/FLINK-20367 Project: Flink Issue Type: Improvement Reporter: zlzhang0122 Now the config can be set from global cluster configuration and single job code , since we can't absolutely sure that which config is in-use except we check it in the start-up log. I think maybe we can show the in-use config of job to users and this can be helpful! -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Closed] (FLINK-20316) update the deduplication section of query page

[ https://issues.apache.org/jira/browse/FLINK-20316?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jark Wu closed FLINK-20316. --- Resolution: Fixed Fixed in - master (1.13.0): 54ac81a4b2acc8239f9ff809e4b2c7d090740e1b - 1.12.0: 4c8a71b6b6f590520051ccb6ef9f47633f533916 > update the deduplication section of query page > -- > > Key: FLINK-20316 > URL: https://issues.apache.org/jira/browse/FLINK-20316 > Project: Flink > Issue Type: Task > Components: Documentation, Table SQL / API >Affects Versions: 1.12.0 >Reporter: Leonard Xu >Assignee: Leonard Xu >Priority: Major > Labels: pull-request-available > Fix For: 1.12.0 > > > We have supported deduplication in row time and deduplicate in mini-batch > mode, but the document did not update, we need to update the doc. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] lirui-apache commented on pull request #14227: [FLINK-20245][hive][docs] Document how to create a Hive catalog from DDL

lirui-apache commented on pull request #14227: URL: https://github.com/apache/flink/pull/14227#issuecomment-734106437 @dawidwys @JingsongLi Could you take a look at the PR? It only updates the eng page, I'll sync the changes to zh page once we think it's good enough. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #14227: [FLINK-20245][hive][docs] Document how to create a Hive catalog from DDL

flinkbot commented on pull request #14227: URL: https://github.com/apache/flink/pull/14227#issuecomment-734106347 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 22f1148159795b2c6e292bc018df4292fd0692a8 (Thu Nov 26 06:40:27 UTC 2020) **Warnings:** * Documentation files were touched, but no `.zh.md` files: Update Chinese documentation or file Jira ticket. Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13983: [FLINK-19989][python] Add collect operation in Python DataStream API

flinkbot edited a comment on pull request #13983: URL: https://github.com/apache/flink/pull/13983#issuecomment-723574143 ## CI report: * fe0505f91d71c3a5947bdac49a8b3fb91983d5c5 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=9922) * 5ed0db74dfc9f605365d6cbf811b5092b3eca062 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] lirui-apache commented on pull request #14227: [FLINK-20245][hive][docs] Document how to create a Hive catalog from DDL

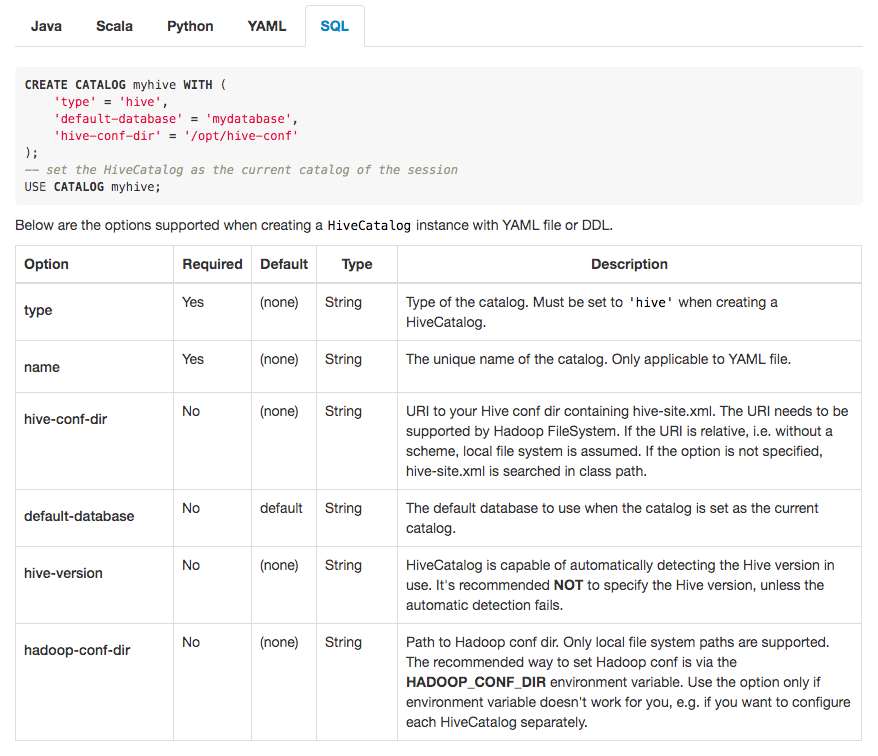

lirui-apache commented on pull request #14227: URL: https://github.com/apache/flink/pull/14227#issuecomment-734105833  Attached is a screenshot of the updated page. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-20245) Document how to create a Hive catalog from DDL

[ https://issues.apache.org/jira/browse/FLINK-20245?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-20245: --- Labels: pull-request-available (was: ) > Document how to create a Hive catalog from DDL > -- > > Key: FLINK-20245 > URL: https://issues.apache.org/jira/browse/FLINK-20245 > Project: Flink > Issue Type: Improvement > Components: Connectors / Hive, Documentation >Reporter: Dawid Wysakowicz >Assignee: Rui Li >Priority: Critical > Labels: pull-request-available > Fix For: 1.12.0 > > > I'd appreciate if the documentation contained a description how to create the > hive catalog from DDL. What I am missing especially are the options that > HiveCatalog expects (type, conf-dir). We should have a table somewhere with a > description possible values etc. the same way as we have such tables for > other connectors and formats. See e.g. > https://ci.apache.org/projects/flink/flink-docs-master/dev/table/connectors/kafka.html#connector-options -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] lirui-apache opened a new pull request #14227: [FLINK-20245][hive][docs] Document how to create a Hive catalog from DDL

lirui-apache opened a new pull request #14227: URL: https://github.com/apache/flink/pull/14227 ## What is the purpose of the change Document how to create `HiveCatalog` with DDL and add table of supported options. ## Brief change log - Document how to create `HiveCatalog` with DDL and add table of supported options. ## Verifying this change NA ## Does this pull request potentially affect one of the following parts: NA ## Documentation NA This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-20366) ColumnIntervalUtil#getColumnIntervalWithFilter does not consider the case when the predicate is a false constant

[

https://issues.apache.org/jira/browse/FLINK-20366?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17239086#comment-17239086

]

godfrey he commented on FLINK-20366:

good catch [~TsReaper], I will fix it

> ColumnIntervalUtil#getColumnIntervalWithFilter does not consider the case

> when the predicate is a false constant

>

>

> Key: FLINK-20366

> URL: https://issues.apache.org/jira/browse/FLINK-20366

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Reporter: Caizhi Weng

>Priority: Major

> Fix For: 1.12.0

>

>

> To reproduce this bug, add the following test case to

> {{DeadlockBreakupTest.scala}}

> {code:scala}

> @Test

> def testSubplanReuse_DeadlockCausedByReusingExchangeInAncestor(): Unit = {

> util.tableEnv.getConfig.getConfiguration.setBoolean(

> OptimizerConfigOptions.TABLE_OPTIMIZER_REUSE_SUB_PLAN_ENABLED, true)

> util.tableEnv.getConfig.getConfiguration.setBoolean(

> OptimizerConfigOptions.TABLE_OPTIMIZER_MULTIPLE_INPUT_ENABLED, false)

> util.tableEnv.getConfig.getConfiguration.setString(

> ExecutionConfigOptions.TABLE_EXEC_DISABLED_OPERATORS,

> "NestedLoopJoin,SortMergeJoin")

> val sqlQuery =

> """

> |WITH T1 AS (SELECT x1.*, x2.a AS k, x2.b AS v FROM x x1 LEFT JOIN x x2

> ON x1.a = x2.a WHERE x2.b > 0)

> |SELECT x.a, T1.* FROM x LEFT JOIN T1 ON x.a = T1.k WHERE x.b > 0 AND

> T1.v = 0

> |""".stripMargin

> util.verifyPlan(sqlQuery)

> }

> {code}

> And we'll get the exception stack

> {code}

> java.lang.RuntimeException: Error while applying rule

> FlinkLogicalJoinConverter(in:NONE,out:LOGICAL), args

> [rel#414:LogicalJoin.NONE.any.[](left=RelSubset#406,right=RelSubset#413,condition==($0,

> $4),joinType=inner)]

> at

> org.apache.calcite.plan.volcano.VolcanoRuleCall.onMatch(VolcanoRuleCall.java:256)

> at

> org.apache.calcite.plan.volcano.IterativeRuleDriver.drive(IterativeRuleDriver.java:58)

> at

> org.apache.calcite.plan.volcano.VolcanoPlanner.findBestExp(VolcanoPlanner.java:510)

> at

> org.apache.calcite.tools.Programs$RuleSetProgram.run(Programs.java:312)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkVolcanoProgram.optimize(FlinkVolcanoProgram.scala:64)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram$$anonfun$optimize$1.apply(FlinkChainedProgram.scala:62)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram$$anonfun$optimize$1.apply(FlinkChainedProgram.scala:58)

> at

> scala.collection.TraversableOnce$$anonfun$foldLeft$1.apply(TraversableOnce.scala:157)

> at

> scala.collection.TraversableOnce$$anonfun$foldLeft$1.apply(TraversableOnce.scala:157)

> at scala.collection.Iterator$class.foreach(Iterator.scala:891)

> at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

> at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)

> at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

> at

> scala.collection.TraversableOnce$class.foldLeft(TraversableOnce.scala:157)

> at scala.collection.AbstractTraversable.foldLeft(Traversable.scala:104)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram.optimize(FlinkChainedProgram.scala:57)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.optimizeTree(BatchCommonSubGraphBasedOptimizer.scala:86)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.org$apache$flink$table$planner$plan$optimize$BatchCommonSubGraphBasedOptimizer$$optimizeBlock(BatchCommonSubGraphBasedOptimizer.scala:57)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer$$anonfun$doOptimize$1.apply(BatchCommonSubGraphBasedOptimizer.scala:45)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer$$anonfun$doOptimize$1.apply(BatchCommonSubGraphBasedOptimizer.scala:45)

> at scala.collection.immutable.List.foreach(List.scala:392)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.doOptimize(BatchCommonSubGraphBasedOptimizer.scala:45)

> at

> org.apache.flink.table.planner.plan.optimize.CommonSubGraphBasedOptimizer.optimize(CommonSubGraphBasedOptimizer.scala:77)

> at

> org.apache.flink.table.planner.delegation.PlannerBase.optimize(PlannerBase.scala:286)

> at

> org.apache.flink.table.planner.utils.TableTestUtilBase.getOptimizedPlan(TableTestBase.scala:431)

> at

> org.apache.flink.table.planner.utils.TableTestUtilBase.doVerifyPlan(TableTestBase.scala:348)

> at

> org.apache.fl

[GitHub] [flink] wuchong merged pull request #14214: [FLINK-20316][doc] update the deduplication section of query page

wuchong merged pull request #14214: URL: https://github.com/apache/flink/pull/14214 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Assigned] (FLINK-20366) ColumnIntervalUtil#getColumnIntervalWithFilter does not consider the case when the predicate is a false constant

[

https://issues.apache.org/jira/browse/FLINK-20366?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

godfrey he reassigned FLINK-20366:

--

Assignee: godfrey he

> ColumnIntervalUtil#getColumnIntervalWithFilter does not consider the case

> when the predicate is a false constant

>

>

> Key: FLINK-20366

> URL: https://issues.apache.org/jira/browse/FLINK-20366

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Reporter: Caizhi Weng

>Assignee: godfrey he

>Priority: Major

> Fix For: 1.12.0

>

>

> To reproduce this bug, add the following test case to

> {{DeadlockBreakupTest.scala}}

> {code:scala}

> @Test

> def testSubplanReuse_DeadlockCausedByReusingExchangeInAncestor(): Unit = {

> util.tableEnv.getConfig.getConfiguration.setBoolean(

> OptimizerConfigOptions.TABLE_OPTIMIZER_REUSE_SUB_PLAN_ENABLED, true)

> util.tableEnv.getConfig.getConfiguration.setBoolean(

> OptimizerConfigOptions.TABLE_OPTIMIZER_MULTIPLE_INPUT_ENABLED, false)

> util.tableEnv.getConfig.getConfiguration.setString(

> ExecutionConfigOptions.TABLE_EXEC_DISABLED_OPERATORS,

> "NestedLoopJoin,SortMergeJoin")

> val sqlQuery =

> """

> |WITH T1 AS (SELECT x1.*, x2.a AS k, x2.b AS v FROM x x1 LEFT JOIN x x2

> ON x1.a = x2.a WHERE x2.b > 0)

> |SELECT x.a, T1.* FROM x LEFT JOIN T1 ON x.a = T1.k WHERE x.b > 0 AND

> T1.v = 0

> |""".stripMargin

> util.verifyPlan(sqlQuery)

> }

> {code}

> And we'll get the exception stack

> {code}

> java.lang.RuntimeException: Error while applying rule

> FlinkLogicalJoinConverter(in:NONE,out:LOGICAL), args

> [rel#414:LogicalJoin.NONE.any.[](left=RelSubset#406,right=RelSubset#413,condition==($0,

> $4),joinType=inner)]

> at

> org.apache.calcite.plan.volcano.VolcanoRuleCall.onMatch(VolcanoRuleCall.java:256)

> at

> org.apache.calcite.plan.volcano.IterativeRuleDriver.drive(IterativeRuleDriver.java:58)

> at

> org.apache.calcite.plan.volcano.VolcanoPlanner.findBestExp(VolcanoPlanner.java:510)

> at

> org.apache.calcite.tools.Programs$RuleSetProgram.run(Programs.java:312)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkVolcanoProgram.optimize(FlinkVolcanoProgram.scala:64)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram$$anonfun$optimize$1.apply(FlinkChainedProgram.scala:62)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram$$anonfun$optimize$1.apply(FlinkChainedProgram.scala:58)

> at

> scala.collection.TraversableOnce$$anonfun$foldLeft$1.apply(TraversableOnce.scala:157)

> at

> scala.collection.TraversableOnce$$anonfun$foldLeft$1.apply(TraversableOnce.scala:157)

> at scala.collection.Iterator$class.foreach(Iterator.scala:891)

> at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

> at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)

> at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

> at

> scala.collection.TraversableOnce$class.foldLeft(TraversableOnce.scala:157)

> at scala.collection.AbstractTraversable.foldLeft(Traversable.scala:104)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram.optimize(FlinkChainedProgram.scala:57)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.optimizeTree(BatchCommonSubGraphBasedOptimizer.scala:86)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.org$apache$flink$table$planner$plan$optimize$BatchCommonSubGraphBasedOptimizer$$optimizeBlock(BatchCommonSubGraphBasedOptimizer.scala:57)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer$$anonfun$doOptimize$1.apply(BatchCommonSubGraphBasedOptimizer.scala:45)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer$$anonfun$doOptimize$1.apply(BatchCommonSubGraphBasedOptimizer.scala:45)

> at scala.collection.immutable.List.foreach(List.scala:392)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.doOptimize(BatchCommonSubGraphBasedOptimizer.scala:45)

> at

> org.apache.flink.table.planner.plan.optimize.CommonSubGraphBasedOptimizer.optimize(CommonSubGraphBasedOptimizer.scala:77)

> at

> org.apache.flink.table.planner.delegation.PlannerBase.optimize(PlannerBase.scala:286)

> at

> org.apache.flink.table.planner.utils.TableTestUtilBase.getOptimizedPlan(TableTestBase.scala:431)

> at

> org.apache.flink.table.planner.utils.TableTestUtilBase.doVerifyPlan(TableTestBase.scala:348)

> at

> org.apache.flink.table.planner.utils.TableT

[jira] [Closed] (FLINK-20184) update hive streaming read and temporal table documents

[ https://issues.apache.org/jira/browse/FLINK-20184?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jingsong Lee closed FLINK-20184. Resolution: Fixed master (1.13): 47ae3341b5f5796e397ff255baadc95d4426e15d release-1.12: f051510709c05f63ae1708caa266a6f192e29aa0 > update hive streaming read and temporal table documents > --- > > Key: FLINK-20184 > URL: https://issues.apache.org/jira/browse/FLINK-20184 > Project: Flink > Issue Type: Task > Components: Connectors / Hive, Documentation >Reporter: Leonard Xu >Assignee: Leonard Xu >Priority: Major > Labels: pull-request-available > Fix For: 1.12.0 > > > The hive streaming read and temporal table document has been out of style, we > need to update it. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (FLINK-20366) ColumnIntervalUtil#getColumnIntervalWithFilter does not consider the case when the predicate is a false constant

[

https://issues.apache.org/jira/browse/FLINK-20366?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17239084#comment-17239084

]

Caizhi Weng commented on FLINK-20366:

-

[~godfreyhe] please take a look.

> ColumnIntervalUtil#getColumnIntervalWithFilter does not consider the case

> when the predicate is a false constant

>

>

> Key: FLINK-20366

> URL: https://issues.apache.org/jira/browse/FLINK-20366

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Reporter: Caizhi Weng

>Priority: Major

> Fix For: 1.12.0

>

>

> To reproduce this bug, add the following test case to

> {{DeadlockBreakupTest.scala}}

> {code:scala}

> @Test

> def testSubplanReuse_DeadlockCausedByReusingExchangeInAncestor(): Unit = {

> util.tableEnv.getConfig.getConfiguration.setBoolean(

> OptimizerConfigOptions.TABLE_OPTIMIZER_REUSE_SUB_PLAN_ENABLED, true)

> util.tableEnv.getConfig.getConfiguration.setBoolean(

> OptimizerConfigOptions.TABLE_OPTIMIZER_MULTIPLE_INPUT_ENABLED, false)

> util.tableEnv.getConfig.getConfiguration.setString(

> ExecutionConfigOptions.TABLE_EXEC_DISABLED_OPERATORS,

> "NestedLoopJoin,SortMergeJoin")

> val sqlQuery =

> """

> |WITH T1 AS (SELECT x1.*, x2.a AS k, x2.b AS v FROM x x1 LEFT JOIN x x2

> ON x1.a = x2.a WHERE x2.b > 0)

> |SELECT x.a, T1.* FROM x LEFT JOIN T1 ON x.a = T1.k WHERE x.b > 0 AND

> T1.v = 0

> |""".stripMargin

> util.verifyPlan(sqlQuery)

> }

> {code}

> And we'll get the exception stack

> {code}

> java.lang.RuntimeException: Error while applying rule

> FlinkLogicalJoinConverter(in:NONE,out:LOGICAL), args

> [rel#414:LogicalJoin.NONE.any.[](left=RelSubset#406,right=RelSubset#413,condition==($0,

> $4),joinType=inner)]

> at

> org.apache.calcite.plan.volcano.VolcanoRuleCall.onMatch(VolcanoRuleCall.java:256)

> at

> org.apache.calcite.plan.volcano.IterativeRuleDriver.drive(IterativeRuleDriver.java:58)

> at

> org.apache.calcite.plan.volcano.VolcanoPlanner.findBestExp(VolcanoPlanner.java:510)

> at

> org.apache.calcite.tools.Programs$RuleSetProgram.run(Programs.java:312)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkVolcanoProgram.optimize(FlinkVolcanoProgram.scala:64)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram$$anonfun$optimize$1.apply(FlinkChainedProgram.scala:62)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram$$anonfun$optimize$1.apply(FlinkChainedProgram.scala:58)

> at

> scala.collection.TraversableOnce$$anonfun$foldLeft$1.apply(TraversableOnce.scala:157)

> at

> scala.collection.TraversableOnce$$anonfun$foldLeft$1.apply(TraversableOnce.scala:157)

> at scala.collection.Iterator$class.foreach(Iterator.scala:891)

> at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

> at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)

> at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

> at

> scala.collection.TraversableOnce$class.foldLeft(TraversableOnce.scala:157)

> at scala.collection.AbstractTraversable.foldLeft(Traversable.scala:104)

> at

> org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram.optimize(FlinkChainedProgram.scala:57)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.optimizeTree(BatchCommonSubGraphBasedOptimizer.scala:86)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.org$apache$flink$table$planner$plan$optimize$BatchCommonSubGraphBasedOptimizer$$optimizeBlock(BatchCommonSubGraphBasedOptimizer.scala:57)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer$$anonfun$doOptimize$1.apply(BatchCommonSubGraphBasedOptimizer.scala:45)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer$$anonfun$doOptimize$1.apply(BatchCommonSubGraphBasedOptimizer.scala:45)

> at scala.collection.immutable.List.foreach(List.scala:392)

> at

> org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.doOptimize(BatchCommonSubGraphBasedOptimizer.scala:45)

> at

> org.apache.flink.table.planner.plan.optimize.CommonSubGraphBasedOptimizer.optimize(CommonSubGraphBasedOptimizer.scala:77)

> at

> org.apache.flink.table.planner.delegation.PlannerBase.optimize(PlannerBase.scala:286)

> at

> org.apache.flink.table.planner.utils.TableTestUtilBase.getOptimizedPlan(TableTestBase.scala:431)

> at

> org.apache.flink.table.planner.utils.TableTestUtilBase.doVerifyPlan(TableTestBase.scala:348)

> at

> org.apache.flink

[jira] [Created] (FLINK-20366) ColumnIntervalUtil#getColumnIntervalWithFilter does not consider the case when the predicate is a false constant

Caizhi Weng created FLINK-20366:

---

Summary: ColumnIntervalUtil#getColumnIntervalWithFilter does not

consider the case when the predicate is a false constant

Key: FLINK-20366

URL: https://issues.apache.org/jira/browse/FLINK-20366

Project: Flink

Issue Type: Bug

Components: Table SQL / Planner

Reporter: Caizhi Weng

Fix For: 1.12.0

To reproduce this bug, add the following test case to

{{DeadlockBreakupTest.scala}}

{code:scala}

@Test

def testSubplanReuse_DeadlockCausedByReusingExchangeInAncestor(): Unit = {

util.tableEnv.getConfig.getConfiguration.setBoolean(

OptimizerConfigOptions.TABLE_OPTIMIZER_REUSE_SUB_PLAN_ENABLED, true)

util.tableEnv.getConfig.getConfiguration.setBoolean(

OptimizerConfigOptions.TABLE_OPTIMIZER_MULTIPLE_INPUT_ENABLED, false)

util.tableEnv.getConfig.getConfiguration.setString(

ExecutionConfigOptions.TABLE_EXEC_DISABLED_OPERATORS,

"NestedLoopJoin,SortMergeJoin")

val sqlQuery =

"""

|WITH T1 AS (SELECT x1.*, x2.a AS k, x2.b AS v FROM x x1 LEFT JOIN x x2

ON x1.a = x2.a WHERE x2.b > 0)

|SELECT x.a, T1.* FROM x LEFT JOIN T1 ON x.a = T1.k WHERE x.b > 0 AND

T1.v = 0

|""".stripMargin

util.verifyPlan(sqlQuery)

}

{code}

And we'll get the exception stack

{code}

java.lang.RuntimeException: Error while applying rule

FlinkLogicalJoinConverter(in:NONE,out:LOGICAL), args

[rel#414:LogicalJoin.NONE.any.[](left=RelSubset#406,right=RelSubset#413,condition==($0,

$4),joinType=inner)]

at

org.apache.calcite.plan.volcano.VolcanoRuleCall.onMatch(VolcanoRuleCall.java:256)

at

org.apache.calcite.plan.volcano.IterativeRuleDriver.drive(IterativeRuleDriver.java:58)

at

org.apache.calcite.plan.volcano.VolcanoPlanner.findBestExp(VolcanoPlanner.java:510)

at

org.apache.calcite.tools.Programs$RuleSetProgram.run(Programs.java:312)

at

org.apache.flink.table.planner.plan.optimize.program.FlinkVolcanoProgram.optimize(FlinkVolcanoProgram.scala:64)

at

org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram$$anonfun$optimize$1.apply(FlinkChainedProgram.scala:62)

at

org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram$$anonfun$optimize$1.apply(FlinkChainedProgram.scala:58)

at

scala.collection.TraversableOnce$$anonfun$foldLeft$1.apply(TraversableOnce.scala:157)

at

scala.collection.TraversableOnce$$anonfun$foldLeft$1.apply(TraversableOnce.scala:157)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)

at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

at

scala.collection.TraversableOnce$class.foldLeft(TraversableOnce.scala:157)

at scala.collection.AbstractTraversable.foldLeft(Traversable.scala:104)

at

org.apache.flink.table.planner.plan.optimize.program.FlinkChainedProgram.optimize(FlinkChainedProgram.scala:57)

at

org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.optimizeTree(BatchCommonSubGraphBasedOptimizer.scala:86)

at

org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.org$apache$flink$table$planner$plan$optimize$BatchCommonSubGraphBasedOptimizer$$optimizeBlock(BatchCommonSubGraphBasedOptimizer.scala:57)

at

org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer$$anonfun$doOptimize$1.apply(BatchCommonSubGraphBasedOptimizer.scala:45)

at

org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer$$anonfun$doOptimize$1.apply(BatchCommonSubGraphBasedOptimizer.scala:45)

at scala.collection.immutable.List.foreach(List.scala:392)

at

org.apache.flink.table.planner.plan.optimize.BatchCommonSubGraphBasedOptimizer.doOptimize(BatchCommonSubGraphBasedOptimizer.scala:45)

at

org.apache.flink.table.planner.plan.optimize.CommonSubGraphBasedOptimizer.optimize(CommonSubGraphBasedOptimizer.scala:77)

at

org.apache.flink.table.planner.delegation.PlannerBase.optimize(PlannerBase.scala:286)

at

org.apache.flink.table.planner.utils.TableTestUtilBase.getOptimizedPlan(TableTestBase.scala:431)

at

org.apache.flink.table.planner.utils.TableTestUtilBase.doVerifyPlan(TableTestBase.scala:348)

at

org.apache.flink.table.planner.utils.TableTestUtilBase.verifyPlan(TableTestBase.scala:271)

at

org.apache.flink.table.planner.plan.batch.sql.DeadlockBreakupTest.testSubplanReuse_DeadlockCausedByReusingExchangeInAncestor(DeadlockBreakupTest.scala:248)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at

sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at

sun.reflect.DelegatingMethodAccess

[GitHub] [flink] JingsongLi merged pull request #14182: [FLINK-20184][doc] update hive streaming read and temporal table document

JingsongLi merged pull request #14182: URL: https://github.com/apache/flink/pull/14182 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #14204: [FLINK-20325][build] Move docs_404_check to CI stage

flinkbot edited a comment on pull request #14204: URL: https://github.com/apache/flink/pull/14204#issuecomment-733048767 ## CI report: * 6550d3e1b01af94d4f652f993834b75272da1020 UNKNOWN * 595ab82c651f8c225193eeeba90d6366fdff341d Azure: [CANCELED](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=10145) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #14103: [FLINK-18545] Introduce `pipeline.name` to allow users to specify job name by configuration

flinkbot edited a comment on pull request #14103: URL: https://github.com/apache/flink/pull/14103#issuecomment-729030820 ## CI report: * cda47b16b8fa216fefb5399249026c2a54e907ed Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=10146) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-20213) Partition commit is delayed when records keep coming

[ https://issues.apache.org/jira/browse/FLINK-20213?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17239080#comment-17239080 ] Jingsong Lee commented on FLINK-20213: -- Hi [~zouyunhe], I think your correct commit delay should be 1 hour plus 1min > Partition commit is delayed when records keep coming > > > Key: FLINK-20213 > URL: https://issues.apache.org/jira/browse/FLINK-20213 > Project: Flink > Issue Type: Bug > Components: Connectors / FileSystem, Table SQL / Ecosystem >Affects Versions: 1.11.2 >Reporter: Jingsong Lee >Assignee: Jingsong Lee >Priority: Major > Labels: pull-request-available > Fix For: 1.12.0, 1.11.3 > > Attachments: image-2020-11-26-12-00-23-542.png, > image-2020-11-26-12-00-55-829.png > > > When set partition-commit.delay=0, Users expect partitions to be committed > immediately. > However, if the record of this partition continues to flow in, the bucket for > the partition will be activated, and no inactive bucket will appear. > We need to consider listening to bucket created. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (FLINK-20213) Partition commit is delayed when records keep coming