[GitHub] [flink] Myasuka commented on pull request #15941: [FLINK-22659]Adding execution.checkpointing.interval to Flink Doc

Myasuka commented on pull request #15941: URL: https://github.com/apache/flink/pull/15941#issuecomment-844769265 @lixmgl, you can use a git force push to update your previous commits, which also lets you update their commit messages. I just noticed that you created the PR against `release-1.13`. We normally create PRs against the `master` branch, unless the code differs too much across branches. The committer who merges your PR into `master` will pick your commit to the other `release-xxx` branches if necessary. Please create another PR against the `master` branch and close this one. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #15927: [FLINK-22639][runtime] ClassLoaderUtil cannot print classpath of Flin…

flinkbot edited a comment on pull request #15927: URL: https://github.com/apache/flink/pull/15927#issuecomment-842054909 ## CI report: * ce1bf535ffd28ea2b7fc10264a184f05e8980aed Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=18160) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #15944: [FLINK-22694][e2e] Use sql file in TPCH end to end tests

flinkbot edited a comment on pull request #15944: URL: https://github.com/apache/flink/pull/15944#issuecomment-843049332 ## CI report: * b64a13c6b1f1c16817a40ca21a20574f51eabcfb Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=18085) * 1045b5d73acfd0b8dadd5601d001274ac6ed00f4 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=18163) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] beyond1920 opened a new pull request #15963: [FLINK-22719][table-planner-blink] Fall back to regular join instead of thrown exception if a join does not satisfy conditions to translat

beyond1920 opened a new pull request #15963:
URL: https://github.com/apache/flink/pull/15963

## What is the purpose of the change

This PR removes the exception-throwing logic in `WindowJoinUtil#containsWindowStartEqualityAndEndEquality`. After the change, the physical node falls back to a regular join instead of throwing an exception if a join does not satisfy the conditions for translation into a WindowJoin.

## Brief change log

- Remove the exception-throwing logic in `WindowJoinUtil`

## Verifying this change

- Covered by existing unit tests in `WindowJoinTest`

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): (no)
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no)
- The serializers: (no)
- The runtime per-record code paths (performance sensitive): (no)
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: (no)
- The S3 file system connector: (no)

## Documentation

- Does this pull request introduce a new feature? (no)
- If yes, how is the feature documented? (not applicable)
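
The fallback described in this PR can be pictured with a small stand-alone sketch. It is not the code in `WindowJoinUtil`: the class `JoinFallbackSketch`, the enum `JoinKind`, and the two boolean parameters are hypothetical names, and the assumption that a window join needs both a window-start and a window-end equality is inferred only from the method name `containsWindowStartEqualityAndEndEquality`.

```java
// Hypothetical sketch; none of these names exist in Flink.
class JoinFallbackSketch {

    enum JoinKind { WINDOW_JOIN, REGULAR_JOIN }

    // Assumption: a join is translated into a window join only when the join
    // condition contains both a window-start and a window-end equality.
    // Anything else falls back to a regular join instead of throwing.
    static JoinKind chooseJoin(boolean hasWindowStartEquality, boolean hasWindowEndEquality) {
        if (hasWindowStartEquality && hasWindowEndEquality) {
            return JoinKind.WINDOW_JOIN;
        }
        return JoinKind.REGULAR_JOIN;
    }

    public static void main(String[] args) {
        System.out.println(chooseJoin(true, true));
        System.out.println(chooseJoin(true, false));
        System.out.println(chooseJoin(false, false));
    }
}
```

The point of the sketch is only the shape of the change: the check becomes a pure predicate, so a plain join query is no longer rejected by window-join validation.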

[jira] [Updated] (FLINK-22719) WindowJoinUtil.containsWindowStartEqualityAndEndEquality should not throw exception

[ https://issues.apache.org/jira/browse/FLINK-22719?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-22719: --- Labels: pull-request-available (was: ) > WindowJoinUtil.containsWindowStartEqualityAndEndEquality should not throw > exception > --- > > Key: FLINK-22719 > URL: https://issues.apache.org/jira/browse/FLINK-22719 > Project: Flink > Issue Type: Bug > Components: Table SQL / Planner >Reporter: Jingsong Lee >Priority: Major > Labels: pull-request-available > Fix For: 1.14.0 > > > This will break regular join SQL. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] rmetzger commented on a change in pull request #15884: [FLINK-22266] Fix stop-with-savepoint operation in AdaptiveScheduler

rmetzger commented on a change in pull request #15884:
URL: https://github.com/apache/flink/pull/15884#discussion_r635821863

##
File path: flink-runtime/src/test/java/org/apache/flink/runtime/scheduler/adaptive/ExecutingTest.java
##
@@ -258,6 +305,23 @@ public void testFalseReportsViaUpdateTaskExecutionStateAreIgnored() throws Excep
         }
     }
+    @Test
+    public void testExecutionVertexMarkedAsFailedOnDeploymentFailure() throws Exception {
+        try (MockExecutingContext ctx = new MockExecutingContext()) {
+            MockExecutionJobVertex mejv =
+                    new MockExecutionJobVertex(FailOnDeployMockExecutionVertex::new);
+            ExecutionGraph executionGraph =
+                    new MockExecutionGraph(() -> Collections.singletonList(mejv));
+            Executing exec =
+                    new ExecutingStateBuilder().setExecutionGraph(executionGraph).build(ctx);
+
+            assertThat(
+                    ((FailOnDeployMockExecutionVertex) mejv.getMockExecutionVertex())
+                            .getMarkedFailure(),
+                    is(instanceOf(JobException.class)));

Review comment:

The error handling of markFailed is difficult to test, because so many components are involved. But in my opinion, we have good test coverage: markFailed will (through the DefaultExecutionGraph) notify the `InternalFailuresListener` about the task failure. The `UpdateSchedulerNgOnInternalFailuresListener` implementation used by adaptive scheduler will call updateTaskExecutionState on the scheduler. This chain of calls will be used for example for the failure in the `AdaptiveSchedulerITCase.testGlobalFailoverCanRecoverState()` test.

For the Executing state, we have tests that exceptions during deployment lead to a markFailed call (`testExecutionVertexMarkedAsFailedOnDeploymentFailure`), and failures reported via updateTaskExecutionState to appropriate error handling (`testFailureReportedViaUpdateTaskExecutionStateCausesFailingOnNoRestart`, `testFailureReportedViaUpdateTaskExecutionStateCausesRestart`, `testFalseReportsViaUpdateTaskExecutionStateAreIgnored`).

Adding a test that a markFailed call will notify the `InternalFailuresListener` is out of the scope of the ExecutingTest (because we are testing the ExecutionVertex and Execution classes).

Adding a test that a markFailed call will call updateTaskExecutionState will need to go through a test specific `InternalFailuresListener`: Since all the relevant calls on ExecutingState are already covered, this would only test the test specific `InternalFailuresListener`.
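
The call chain described in this review comment (markFailed, then the failures listener, then updateTaskExecutionState on the scheduler) can be sketched in isolation. The following is a simplified model, not Flink code: `FailureListener`, `ForwardingListener`, `RecordingScheduler`, and `SketchVertex` are hypothetical stand-ins for the real classes named above, and the method signatures are assumptions.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of the notification chain; not Flink classes.
class FailureChainSketch {
    public static void main(String[] args) {
        RecordingScheduler scheduler = new RecordingScheduler();
        SketchVertex vertex = new SketchVertex("vertex-0", new ForwardingListener(scheduler));
        vertex.markFailed(new IllegalStateException("deployment failed"));
        System.out.println(scheduler.updates);
    }
}

// Stand-in for InternalFailuresListener.
interface FailureListener {
    void notifyTaskFailure(String executionId, Throwable cause);
}

// Stand-in for the scheduler: records every state update it receives.
class RecordingScheduler {
    final List<String> updates = new ArrayList<>();

    void updateTaskExecutionState(String executionId, Throwable cause) {
        updates.add(executionId + " FAILED: " + cause.getMessage());
    }
}

// Stand-in for UpdateSchedulerNgOnInternalFailuresListener: forwards to the scheduler.
class ForwardingListener implements FailureListener {
    private final RecordingScheduler scheduler;

    ForwardingListener(RecordingScheduler scheduler) {
        this.scheduler = scheduler;
    }

    @Override
    public void notifyTaskFailure(String executionId, Throwable cause) {
        scheduler.updateTaskExecutionState(executionId, cause);
    }
}

// Stand-in for an execution vertex whose markFailed notifies the listener.
class SketchVertex {
    private final String id;
    private final FailureListener listener;

    SketchVertex(String id, FailureListener listener) {
        this.id = id;
        this.listener = listener;
    }

    void markFailed(Throwable cause) {
        listener.notifyTaskFailure(id, cause);
    }
}
```

In this model, a test that swaps in its own listener would only observe that listener, which is the reason the comment gives for not adding such a test to ExecutingTest.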


[GitHub] [flink] flinkbot commented on pull request #15963: [FLINK-22719][table-planner-blink] Fall back to regular join instead of thrown exception if a join does not satisfy conditions to translate

flinkbot commented on pull request #15963: URL: https://github.com/apache/flink/pull/15963#issuecomment-844772764 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit da47dc6ac7b349a1c7fccb37da5028f02203706e (Thu May 20 07:05:58 UTC 2021) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! * **This pull request references an unassigned [Jira ticket](https://issues.apache.org/jira/browse/FLINK-22719).** According to the [code contribution guide](https://flink.apache.org/contributing/contribute-code.html), tickets need to be assigned before starting with the implementation work. Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. 
For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-22693) EXPLAIN cannot be used on Hbase table when useing ROW type

[ https://issues.apache.org/jira/browse/FLINK-22693?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17348114#comment-17348114 ]

sadf commented on FLINK-22693:
--
From the exception, I guess that the types do not match, so I made the following attempt. To make the types match, I changed the class org.apache.flink.table.planner.calcite.FlinkTypeFactory to let it return StructKind.NONE, but it didn't work.

Caused by: org.apache.calcite.runtime.CalciteContextException: From line 1, column 60 to line 1, column 70: Cannot assign to target field 'f1' of type RecordType(INTEGER name) from source field 'f1' of type RecordType(INTEGER EXPR$0)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.calcite.runtime.Resources$ExInstWithCause.ex(Resources.java:467)
    at org.apache.calcite.sql.SqlUtil.newContextException(SqlUtil.java:883)
    at org.apache.calcite.sql.SqlUtil.newContextException(SqlUtil.java:868)
    at org.apache.calcite.sql.validate.SqlValidatorImpl.newValidationError(SqlValidatorImpl.java:5043)
    at org.apache.calcite.sql.validate.SqlValidatorImpl.checkTypeAssignment(SqlValidatorImpl.java:4714)
    at org.apache.calcite.sql.validate.SqlValidatorImpl.validateInsert(SqlValidatorImpl.java:4417)
    at org.apache.calcite.sql.SqlInsert.validate(SqlInsert.java:158)
    at org.apache.calcite.sql.validate.SqlValidatorImpl.validateScopedExpression(SqlValidatorImpl.java:1016)

> EXPLAIN cannot be used on Hbase table when useing ROW type
> --
>
> Key: FLINK-22693
> URL: https://issues.apache.org/jira/browse/FLINK-22693
> Project: Flink
> Issue Type: Bug
> Components: Connectors / HBase, Table SQL / API
> Affects Versions: 1.12.2
> Reporter: sadf
> Priority: Major
> Attachments: Driver.java, hbase.PNG, pom.xml
>
> We use 'EXPLAIN PLAN FOR' as a way of validating SQL.
> Calcite no longer works and throws an exception when we sink to an HBase table and use the ROW type.
> !hbase.PNG!

[GitHub] [flink] rmetzger commented on a change in pull request #15884: [FLINK-22266] Fix stop-with-savepoint operation in AdaptiveScheduler

rmetzger commented on a change in pull request #15884:
URL: https://github.com/apache/flink/pull/15884#discussion_r635823536

##
File path: flink-runtime/src/main/java/org/apache/flink/runtime/scheduler/adaptive/Executing.java
##
@@ -59,9 +69,17 @@
         super(context, executionGraph, executionGraphHandler, operatorCoordinatorHandler, logger);
         this.context = context;
         this.userCodeClassLoader = userCodeClassLoader;
+        Preconditions.checkState(
+                executionGraph.getState() == JobStatus.RUNNING, "Assuming running execution graph");
-        deploy();
-
+        if (executingStateBehavior == Behavior.DEPLOY_ON_ENTER) {
+            onAllExecutionVertexes(this::deploySafely);
+        } else if (executingStateBehavior == Behavior.EXPECT_RUNNING) {
+            onAllExecutionVertexes(this::expectRunning);
+        } else {
+            throw new IllegalStateException(
+                    "Unexpected executing state behavior " + executingStateBehavior);
+        }

Review comment:

Indeed, that's a good simplification. I pushed a commit addressing this item.


[GitHub] [flink] lixmgl closed pull request #15941: [FLINK-22659]Adding execution.checkpointing.interval to Flink Doc

lixmgl closed pull request #15941: URL: https://github.com/apache/flink/pull/15941 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-22701) "bin/flink savepoint -d :savepointpath" cannot locate savepointpath arg

[

https://issues.apache.org/jira/browse/FLINK-22701?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17348119#comment-17348119

]

Yun Tang commented on FLINK-22701:

--

Could you paste the actual command you used (do not use {{:savepointpath}} to

replace your real path)?

> "bin/flink savepoint -d :savepointpath" cannot locate savepointpath arg

> ---

>

> Key: FLINK-22701

> URL: https://issues.apache.org/jira/browse/FLINK-22701

> Project: Flink

> Issue Type: Bug

> Components: Command Line Client

>Affects Versions: 1.12.2

> Environment: Kubernetes standalone cluster

>Reporter: Jinliang Guo

>Priority: Major

>

> Hi there,
>
> When I use "bin/flink savepoint -d :savepointpath" to dispose a savepoint, I get the following exception:
> The program finished with the following exception:
> java.lang.NullPointerException: Missing required argument: savepoint path. Usage: bin/flink savepoint -d
>     at org.apache.flink.util.Preconditions.checkNotNull(Preconditions.java:76)
>     at org.apache.flink.client.cli.CliFrontend.disposeSavepoint(CliFrontend.java:785)
>     at org.apache.flink.client.cli.CliFrontend.lambda$savepoint$8(CliFrontend.java:723)
>     at org.apache.flink.client.cli.CliFrontend.runClusterAction(CliFrontend.java:1002)
>     at org.apache.flink.client.cli.CliFrontend.savepoint(CliFrontend.java:719)
>     at org.apache.flink.client.cli.CliFrontend.parseAndRun(CliFrontend.java:1072)
>     at org.apache.flink.client.cli.CliFrontend.lambda$main$10(CliFrontend.java:1132)
>     at java.base/java.security.AccessController.doPrivileged(Native Method)
>     at java.base/javax.security.auth.Subject.doAs(Subject.java:423)
>     at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)
>     at org.apache.flink.runtime.security.contexts.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41)
>     at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1132)
> However, if I use "bin/flink savepoint -dispose :savepointpath", it works fine.
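
The top frame of the trace above is a null check, so the `NullPointerException` is an argument-validation failure: the CLI ended up with no savepoint path for the `-d` form. A minimal sketch of that pattern follows, using `java.util.Objects.requireNonNull` in place of Flink's `Preconditions.checkNotNull`. The class `DisposeArgSketch`, the method `requireSavepointPath`, and the example path are hypothetical, and the sketch does not explain why `-d` parsed differently from `-dispose`.

```java
import java.util.Objects;

// Hypothetical sketch of a null check that reports a missing CLI argument.
class DisposeArgSketch {

    // Returns the path unchanged, or throws NullPointerException with a
    // usage-style message when no path was parsed from the command line.
    static String requireSavepointPath(String savepointPath) {
        return Objects.requireNonNull(
                savepointPath, "Missing required argument: savepoint path.");
    }

    public static void main(String[] args) {
        // Illustrative path only; not taken from the report.
        System.out.println(requireSavepointPath("hdfs:///savepoints/sp-1"));
        try {
            requireSavepointPath(null);
        } catch (NullPointerException e) {
            System.out.println("NullPointerException: " + e.getMessage());
        }
    }
}
```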


[GitHub] [flink] fapaul opened a new pull request #15964: [BP-1.13][FLINK-22434] Store suspended execution graphs on termination to keep them accessible

fapaul opened a new pull request #15964: URL: https://github.com/apache/flink/pull/15964 Unchanged backport of #15799 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-22437) Miss adding parallesim for filter operator in batch mode

[

https://issues.apache.org/jira/browse/FLINK-22437?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17348120#comment-17348120

]

godfrey he commented on FLINK-22437:

[~zoucao] would you like to take this ticket ?

> Miss adding parallesim for filter operator in batch mode

>

>

> Key: FLINK-22437

> URL: https://issues.apache.org/jira/browse/FLINK-22437

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Affects Versions: 1.12.2

>Reporter: zoucao

>Priority: Major

>

> When I execute the batch SQL below in flink-1.12.2, I find lots of small files in HDFS. The filesystem connector uses `GroupedPartitionWriter`, which closes the last partition if a new record does not belong to the existing partition. The problem occurs when records from more than one partition are sent to the filesystem sink at the same time. The Hive source can determine its parallelism from the number of files and partitions, and that parallelism is carried over to the sort operator. However, in `CommonPhysicalSink#createSinkTransformation` a filter operator is added to support `SinkNotNullEnforcer`, and no parallelism is set for it, so the filesystem sink operator cannot get the correct parallelism from its input stream.

> {code:java}
> CREATE CATALOG myHive with (
>   'type'='hive',
>   'property-version'='1',
>   'default-database' = 'flink_sql_online_test'
> );
> -- SET table.sql-dialect=hive;
> -- CREATE TABLE IF NOT EXISTS myHive.flink_sql_online_test.hive_sink (
> --   `timestamp` BIGINT,
> --   `time` STRING,
> --   id BIGINT,
> --   product STRING,
> --   price DOUBLE,
> --   canSell STRING,
> --   selledNum BIGINT
> -- ) PARTITIONED BY (
> --   dt STRING,
> --   `hour` STRING,
> --   `min` STRING
> -- ) TBLPROPERTIES (
> --   'partition.time-extractor.timestamp-pattern'='$dt $hr:$min:00',
> --   'sink.partition-commit.trigger'='partition-time',
> --   'sink.partition-commit.delay'='1 min',
> --   'sink.partition-commit.policy.kind'='metastore,success-file'
> -- );
> create table fs_sink (
>   `timestamp` BIGINT,
>   `time` STRING,
>   id BIGINT,
>   product STRING,
>   price DOUBLE,
>   canSell STRING,
>   selledNum BIGINT,
>   dt STRING,
>   `hour` STRING,
>   `min` STRING
> ) PARTITIONED BY (dt, `hour`, `min`) with (
>   'connector'='filesystem',
>   'path'='hdfs://',
>   'format'='csv'
> );
> insert into fs_sink
> select * from myHive.flink_sql_online_test.hive_sink;
> {code}

> I think this problem can be fixed by setting the parallelism on the filter operator, for example:
> {code:java}
> val dataStream = new DataStream(env, inputTransformation).filter(enforcer)
>   .setParallelism(inputTransformation.getParallelism)
> {code}

>
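
The small-files effect described in this report can be reproduced with a toy model. The sketch below is not Flink's `GroupedPartitionWriter`: `GroupedWriterSketch` and `countFiles` are hypothetical names, and the only behaviour modelled is the one stated in the report, namely that the writer keeps a single partition open and starts a new file whenever the incoming record belongs to a different partition.

```java
import java.util.Arrays;
import java.util.List;

// Toy model of a writer that keeps exactly one partition open at a time.
class GroupedWriterSketch {

    // Counts the files produced for a stream of records, given the partition
    // of each record in arrival order. A new file is started whenever the
    // partition differs from the one currently open.
    static int countFiles(List<String> partitionOfEachRecord) {
        int files = 0;
        String openPartition = null;
        for (String partition : partitionOfEachRecord) {
            if (!partition.equals(openPartition)) {
                files++;
                openPartition = partition;
            }
        }
        return files;
    }

    public static void main(String[] args) {
        // Records arrive grouped by partition: one file per partition.
        List<String> grouped = Arrays.asList("dt=1", "dt=1", "dt=1", "dt=2", "dt=2", "dt=2");
        // Records from two partitions arrive interleaved: one file per record.
        List<String> interleaved = Arrays.asList("dt=1", "dt=2", "dt=1", "dt=2", "dt=1", "dt=2");
        System.out.println(countFiles(grouped));     // prints 2
        System.out.println(countFiles(interleaved)); // prints 6
    }
}
```

Under this model the file count depends on arrival order rather than on the number of partitions, which matches the report's observation that interleaved input to the sink produces many small files.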


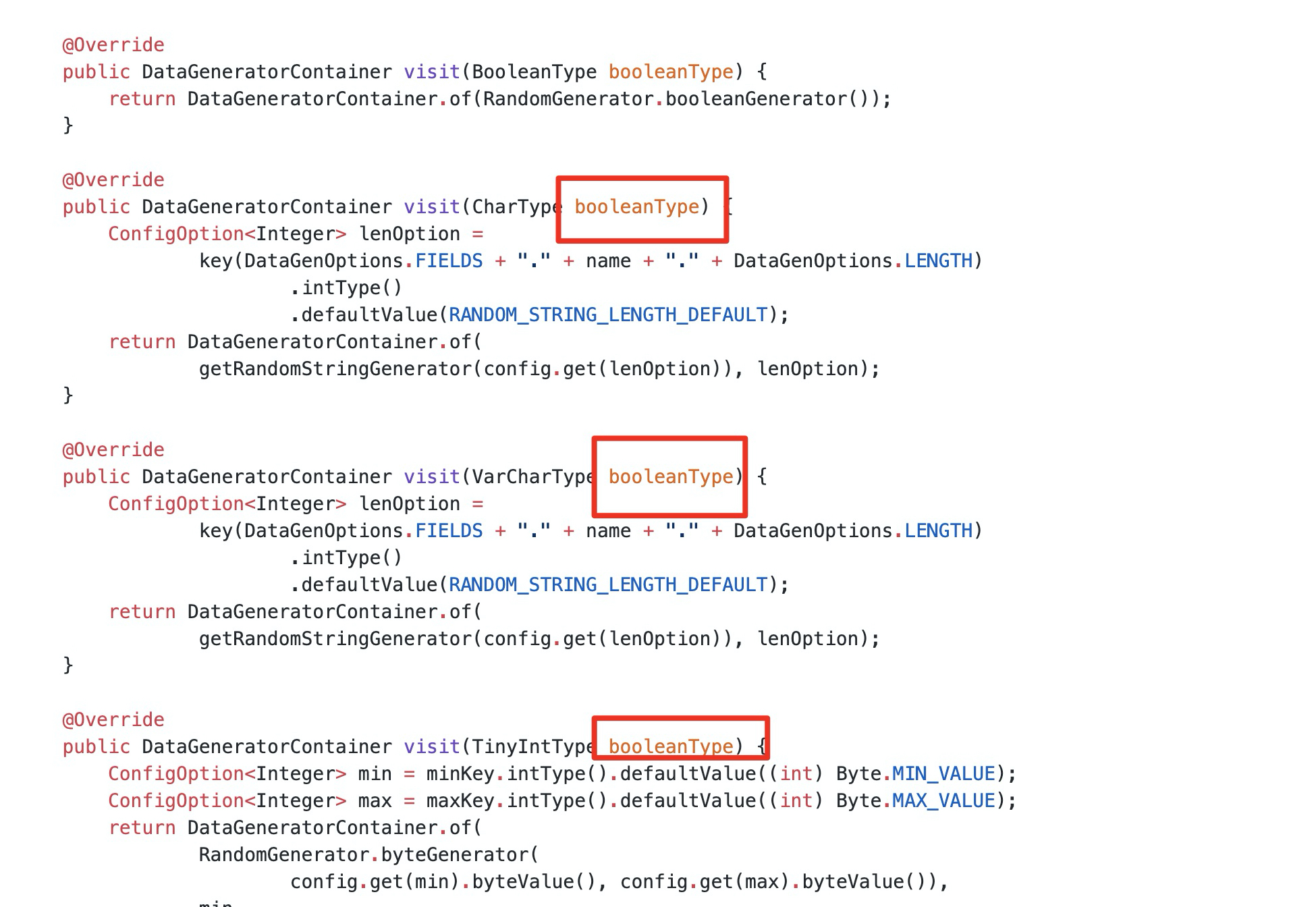

[GitHub] [flink] fhan688 opened a new pull request #15965: [datagen] Normalize parameter names in RandomGeneratorVisitor and SequenceGeneratorVisitor

fhan688 opened a new pull request #15965: URL: https://github.com/apache/flink/pull/15965 ## What is the purpose of the change This PR normalized parameter names in RandomGeneratorVisitor and SequenceGeneratorVisitor. related methods:  ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (no) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no) - The serializers: (no) - The runtime per-record code paths (performance sensitive): (no) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: (no) - The S3 file system connector: (no) ## Documentation - Does this pull request introduce a new feature? (no) - If yes, how is the feature documented? (not documented) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Assigned] (FLINK-22719) WindowJoinUtil.containsWindowStartEqualityAndEndEquality should not throw exception

[ https://issues.apache.org/jira/browse/FLINK-22719?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jingsong Lee reassigned FLINK-22719: Assignee: Andy > WindowJoinUtil.containsWindowStartEqualityAndEndEquality should not throw > exception > --- > > Key: FLINK-22719 > URL: https://issues.apache.org/jira/browse/FLINK-22719 > Project: Flink > Issue Type: Bug > Components: Table SQL / Planner >Reporter: Jingsong Lee >Assignee: Andy >Priority: Major > Labels: pull-request-available > Fix For: 1.14.0 > > > This will break regular join SQL.

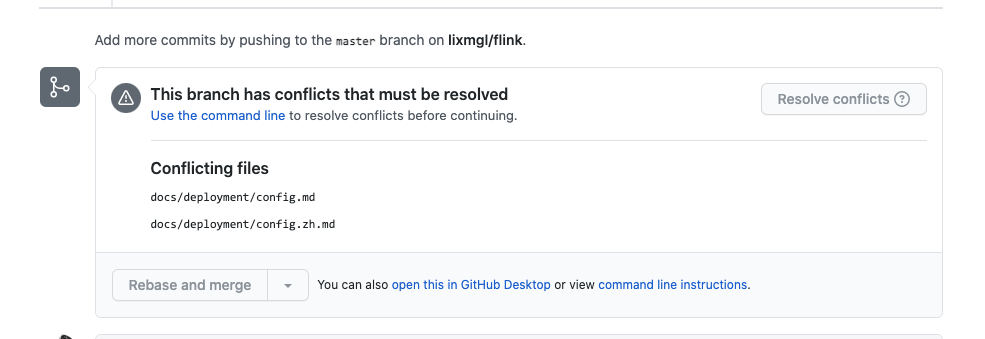

[GitHub] [flink] lixmgl opened a new pull request #15966: [FLINK-22659][docs] Add execution.checkpointing.interval to configura…

lixmgl opened a new pull request #15966: URL: https://github.com/apache/flink/pull/15966 …tion docs ## What is the purpose of the change *Adding execution.checkpointing.interval to Flink configuration docs* ## Brief change log *(for example:)* - *The TaskInfo is stored in the blob store on job creation time as a persistent artifact* - *Deployments RPC transmits only the blob storage reference* - *TaskManagers retrieve the TaskInfo from the blob cache* ## Verifying this change *(Please pick either of the following options)* **This change is a trivial rework / code cleanup without any test coverage.** *(or)* This change is already covered by existing tests, such as *(please describe tests)*. *(or)* This change added tests and can be verified as follows: *(example:)* - *Added integration tests for end-to-end deployment with large payloads (100MB)* - *Extended integration test for recovery after master (JobManager) failure* - *Added test that validates that TaskInfo is transferred only once across recoveries* - *Manually verified the change by running a 4 node cluser with 2 JobManagers and 4 TaskManagers, a stateful streaming program, and killing one JobManager and two TaskManagers during the execution, verifying that recovery happens correctly.* ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (yes / **no**) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (yes / **no**) - The serializers: (yes / **no** / don't know) - The runtime per-record code paths (performance sensitive): (yes / **no** / don't know) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: (yes / **no** / don't know) - The S3 file system connector: (yes / **no** / don't know) ## Documentation - Does this pull request introduce a new feature? (yes / **no**) - If yes, how is the feature documented? 
(**not applicable** / docs / JavaDocs / not documented) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #15965: [datagen] Normalize parameter names in RandomGeneratorVisitor and SequenceGeneratorVisitor

flinkbot commented on pull request #15965: URL: https://github.com/apache/flink/pull/15965#issuecomment-844792525 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 9ab417c63ef4d6a0f4f3b785980914395901e955 (Thu May 20 07:23:25 UTC 2021) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! * **Invalid pull request title: No valid Jira ID provided** Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #15964: [BP-1.13][FLINK-22434] Store suspended execution graphs on termination to keep them accessible

flinkbot commented on pull request #15964: URL: https://github.com/apache/flink/pull/15964#issuecomment-844792577 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit c2550460db2c0951911dd3219190d0f59e9d1ac6 (Thu May 20 07:23:28 UTC 2021) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (FLINK-22659) 'execution.checkpointing.interval' missing in Flink doc

[

https://issues.apache.org/jira/browse/FLINK-22659?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17346650#comment-17346650

]

Rainie Li edited comment on FLINK-22659 at 5/20/21, 7:23 AM:

-

Please review https://github.com/apache/flink/pull/15966

was (Author: rainieli):

Please review [https://github.com/apache/flink/pull/15941]

> 'execution.checkpointing.interval' missing in Flink doc

>

>

> Key: FLINK-22659

> URL: https://issues.apache.org/jira/browse/FLINK-22659

> Project: Flink

> Issue Type: Bug

> Components: Documentation

>Affects Versions: 1.13.0

>Reporter: wangqinghuan

>Assignee: Rainie Li

>Priority: Minor

> Labels: pull-request-available

> Time Spent: 1h

> Remaining Estimate: 0h

>

> The Flink deployment configuration page describes how to configure checkpointing in flink-conf.yaml: https://ci.apache.org/projects/flink/flink-docs-master/docs/deployment/config/

> {quote}*Checkpointing*

> You can configure checkpointing directly in code within your Flink job or

> application. Putting these values here in the configuration defines them as

> defaults in case the application does not configure anything.

> {quote}

> *

>

> {quote}{{state.backend}}: The state backend to use. This defines the data

> structure mechanism for taking snapshots. Common values are {{filesystem}} or

> {{rocksdb}}.

> {quote} *

> {quote}{{state.checkpoints.dir}}: The directory to write checkpoints to. This

> takes a path URI like _s3://mybucket/flink-app/checkpoints_ or

> _hdfs://namenode:port/flink/checkpoints_.

> {quote}

> *

> {quote}{{state.savepoints.dir}}: The default directory for savepoints. Takes

> a path URI, similar to {{state.checkpoints.dir}}.

> {quote}

> In my test with Flink 1.13.0, however, checkpointing was not enabled unless 'execution.checkpointing.interval' was set in flink-conf.yaml. To enable checkpointing when the application does not configure anything, we need to configure these values in flink-conf.yaml:

> * {{state.backend}}:

> * {{state.checkpoints.dir:}}

> * {{state.savepoints.dir:}}

> * execution.checkpointing.interval:

> {{'execution.checkpointing.interval' value missing in document.}}

>
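
For illustration, the four keys listed in this report could look as follows in flink-conf.yaml. This is a sketch, not text from the Flink docs: the checkpoint directory reuses the example URI quoted above, while the savepoint directory and the 60-second interval are assumed values chosen only to make the fragment complete.

```yaml
# Illustrative values only; adjust paths and interval to your deployment.
state.backend: rocksdb
state.checkpoints.dir: s3://mybucket/flink-app/checkpoints
state.savepoints.dir: s3://mybucket/flink-app/savepoints
# Per the report, checkpointing stays disabled when this key is absent.
execution.checkpointing.interval: 60s
```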


[GitHub] [flink] lixmgl commented on pull request #15941: [FLINK-22659]Adding execution.checkpointing.interval to Flink Doc

lixmgl commented on pull request #15941: URL: https://github.com/apache/flink/pull/15941#issuecomment-844793503 thanks @Myasuka here is the new PR: https://github.com/apache/flink/pull/15966 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #15966: [FLINK-22659][docs] Add execution.checkpointing.interval to configura…

flinkbot commented on pull request #15966: URL: https://github.com/apache/flink/pull/15966#issuecomment-844795394 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 50986672248961509dc127d1df42ec8ef96b5ecd (Thu May 20 07:25:39 UTC 2021) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier

[GitHub] [flink] flinkbot edited a comment on pull request #15808: [FLINK-21229] add confluent schema registry ssl support

flinkbot edited a comment on pull request #15808: URL: https://github.com/apache/flink/pull/15808#issuecomment-829227618 ## CI report: * c55980ce590f38d3f0cd23ac97bd331d1a1c3b15 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=18148) * 0494b5f517497eda59a8c75748c1ece64f73a0d9 UNKNOWN

[GitHub] [flink] flinkbot edited a comment on pull request #15884: [FLINK-22266] Fix stop-with-savepoint operation in AdaptiveScheduler

flinkbot edited a comment on pull request #15884: URL: https://github.com/apache/flink/pull/15884#issuecomment-836859750 ## CI report: * 94a973c0c9f5daa60afa36d7bec4ff2b811d9c7b Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=18033) * 8f0b36f54fa0e38dc83565eb2d5a8c4ce204fb82 UNKNOWN

[GitHub] [flink] flinkbot commented on pull request #15963: [FLINK-22719][table-planner-blink] Fall back to regular join instead of thrown exception if a join does not satisfy conditions to translate

flinkbot commented on pull request #15963: URL: https://github.com/apache/flink/pull/15963#issuecomment-844797710 ## CI report: * 2a5da984eedad7dcdef215e093c80e89e6658491 UNKNOWN

[GitHub] [flink] lirui-apache closed pull request #15920: [FLINK-22661][hive] HiveInputFormatPartitionReader can return invalid…

lirui-apache closed pull request #15920: URL: https://github.com/apache/flink/pull/15920

[jira] [Commented] (FLINK-22437) Miss adding parallesim for filter operator in batch mode

[

https://issues.apache.org/jira/browse/FLINK-22437?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17348127#comment-17348127

]

zoucao commented on FLINK-22437:

Hi [~godfreyhe], thanks for your reply, I am glad to take it. By the way, I

think it can be easily fixed by adding parallelism to the filter operator, WDYS?

> Miss adding parallesim for filter operator in batch mode

>

>

> Key: FLINK-22437

> URL: https://issues.apache.org/jira/browse/FLINK-22437

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Affects Versions: 1.12.2

>Reporter: zoucao

>Priority: Major

>

> When I execute the batch SQL below in flink-1.12.2, I find lots of small

> files in HDFS. In the filesystem connector, `GroupedPartitionWriter` is

> used, and it closes the last partition if a new record does not belong to the

> existing partition. The problem occurs when records from more than one

> partition are sent to the filesystem sink at the same time. The Hive source

> can determine parallelism from the number of files and partitions, and the

> parallelism will be extended by the sort operator, but in

> `CommonPhysicalSink#createSinkTransformation` a filter operator is added

> to support `SinkNotNullEnforcer`. No parallelism is set for it, so the

> filesystem sink operator cannot get the correct parallelism from the input stream.

> {code:java}

> CREATE CATALOG myHive with (

> 'type'='hive',

> 'property-version'='1',

> 'default-database' = 'flink_sql_online_test'

> );

> -- SET table.sql-dialect=hive;

> -- CREATE TABLE IF NOT EXISTS myHive.flink_sql_online_test.hive_sink (

> --`timestamp` BIGINT,

> --`time` STRING,

> --id BIGINT,

> --product STRING,

> --price DOUBLE,

> --canSell STRING,

> --selledNum BIGINT

> -- ) PARTITIONED BY (

> --dt STRING,

> --`hour` STRING,

> -- `min` STRING

> -- ) TBLPROPERTIES (

> --'partition.time-extractor.timestamp-pattern'='$dt $hr:$min:00',

> --'sink.partition-commit.trigger'='partition-time',

> --'sink.partition-commit.delay'='1 min',

> --'sink.partition-commit.policy.kind'='metastore,success-file'

> -- );

> create table fs_sink (

> `timestamp` BIGINT,

> `time` STRING,

> id BIGINT,

> product STRING,

> price DOUBLE,

> canSell STRING,

> selledNum BIGINT,

> dt STRING,

> `hour` STRING,

> `min` STRING

> ) PARTITIONED BY (dt, `hour`, `min`) with (

> 'connector'='filesystem',

> 'path'='hdfs://',

> 'format'='csv'

> );

> insert into fs_sink

> select * from myHive.flink_sql_online_test.hive_sink;

> {code}

> I think this problem can be fixed by setting a parallelism for it, like this:

> {code:java}

> val dataStream = new DataStream(env, inputTransformation).filter(enforcer)

> .setParallelism(inputTransformation.getParallelism)

> {code}

>
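The small-files effect described in this report can be illustrated with a toy model. The sketch below is not Flink's `GroupedPartitionWriter`; the class `GroupedWriterSketch` and its method `countFiles` are hypothetical names, and the only assumption carried over from the report is that the writer keeps one partition open and starts a new file whenever the incoming record belongs to a different partition.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical model, not Flink code: a writer that keeps exactly one
// partition open and rolls to a new file whenever the partition changes.
final class GroupedWriterSketch {

    /** Returns how many files are opened for the given sequence of record partitions. */
    static int countFiles(List<String> partitionOfEachRecord) {
        int files = 0;
        String openPartition = null;
        for (String partition : partitionOfEachRecord) {
            if (!partition.equals(openPartition)) {
                files++;                 // the previous file is closed, a new one starts
                openPartition = partition;
            }
        }
        return files;
    }

    public static void main(String[] args) {
        // Records arrive grouped by partition: one file per partition.
        List<String> grouped = Arrays.asList("dt=1", "dt=1", "dt=1", "dt=2", "dt=2", "dt=2");
        // Records of two partitions arrive interleaved: one file per record.
        List<String> interleaved = Arrays.asList("dt=1", "dt=2", "dt=1", "dt=2", "dt=1", "dt=2");
        System.out.println("grouped: " + countFiles(grouped));
        System.out.println("interleaved: " + countFiles(interleaved));
    }
}
```

With the same six records, the grouped order opens two files and the interleaved order opens six, which is why the sink needs its input to stay grouped by partition.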


[GitHub] [flink] flinkbot edited a comment on pull request #15004: [FLINK-21253][table-planner-blink] Support grouping set syntax for GroupWindowAggregate

flinkbot edited a comment on pull request #15004: URL: https://github.com/apache/flink/pull/15004#issuecomment-785052726 ## CI report: * b826a818fcd0d47f2454575c59d324519e2c6ad9 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=18140) * 4b01a38ffe4968511607b84c58309bf75183085a UNKNOWN

[GitHub] [flink] flinkbot edited a comment on pull request #15808: [FLINK-21229] add confluent schema registry ssl support

flinkbot edited a comment on pull request #15808: URL: https://github.com/apache/flink/pull/15808#issuecomment-829227618 ## CI report: * c55980ce590f38d3f0cd23ac97bd331d1a1c3b15 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=18148) * 0494b5f517497eda59a8c75748c1ece64f73a0d9 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=18164)

[GitHub] [flink] flinkbot edited a comment on pull request #15884: [FLINK-22266] Fix stop-with-savepoint operation in AdaptiveScheduler

flinkbot edited a comment on pull request #15884: URL: https://github.com/apache/flink/pull/15884#issuecomment-836859750 ## CI report: * 94a973c0c9f5daa60afa36d7bec4ff2b811d9c7b Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=18033) * 8f0b36f54fa0e38dc83565eb2d5a8c4ce204fb82 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=18165)

[GitHub] [flink] flinkbot edited a comment on pull request #15963: [FLINK-22719][table-planner-blink] Fall back to regular join instead of thrown exception if a join does not satisfy conditions to tra

flinkbot edited a comment on pull request #15963: URL: https://github.com/apache/flink/pull/15963#issuecomment-844797710 ## CI report: * 2a5da984eedad7dcdef215e093c80e89e6658491 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=18166)

[GitHub] [flink] flinkbot commented on pull request #15964: [BP-1.13][FLINK-22434] Store suspended execution graphs on termination to keep them accessible

flinkbot commented on pull request #15964: URL: https://github.com/apache/flink/pull/15964#issuecomment-844823190 ## CI report: * c2550460db2c0951911dd3219190d0f59e9d1ac6 UNKNOWN

[GitHub] [flink] flinkbot commented on pull request #15965: [datagen] Normalize parameter names in RandomGeneratorVisitor and SequenceGeneratorVisitor

flinkbot commented on pull request #15965: URL: https://github.com/apache/flink/pull/15965#issuecomment-844823334 ## CI report: * 9ab417c63ef4d6a0f4f3b785980914395901e955 UNKNOWN

[GitHub] [flink] flinkbot commented on pull request #15966: [FLINK-22659][docs] Add execution.checkpointing.interval to configura…

flinkbot commented on pull request #15966: URL: https://github.com/apache/flink/pull/15966#issuecomment-844823503 ## CI report: * 50986672248961509dc127d1df42ec8ef96b5ecd UNKNOWN

[jira] [Closed] (FLINK-11310) Convert predicates to IN or NOT_IN for Project

[

https://issues.apache.org/jira/browse/FLINK-11310?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

godfrey he closed FLINK-11310.

--

Resolution: Won't Fix

Currently, the or expressions will be converted to Sarg, and will be put into a

HashSet when code gen. So this issue is invalid now, I will close it.

> Convert predicates to IN or NOT_IN for Project

> --

>

> Key: FLINK-11310

> URL: https://issues.apache.org/jira/browse/FLINK-11310

> Project: Flink

> Issue Type: Improvement

> Components: Table SQL / Planner

>Reporter: Hequn Cheng

>Priority: Major

> Labels: auto-unassigned, pull-request-available

> Time Spent: 20m

> Remaining Estimate: 0h

>

> In FLINK-10474, we force translate IN into a predicate to avoid translating

> to a JOIN. In addition, we add a Rule to convert the predicates back to IN so

> that we can generate code using a HashSet for the IN.

> However, FLINK-10474 only takes Filter into consideration. It would be great

> to also convert predicates in Project to IN. It not only will improve the

> performance for the Project, but also will avoid the problem raised in

> FLINK-11308, as all predicates will be converted into one IN expression.
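The idea behind this issue, evaluating a chain of OR-ed equality predicates as a single HashSet lookup, can be sketched in plain Java. This is only an illustration of the equivalence and not code generated by the Flink planner; the class `InPredicateSketch` and the literal values 1, 2 and 3 are hypothetical.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

// Hypothetical illustration, not planner output: the predicate
// "a = 1 OR a = 2 OR a = 3" evaluated in two equivalent ways.
final class InPredicateSketch {

    // Literal set built once, then probed per record.
    private static final Set<Integer> IN_SET = new HashSet<>(Arrays.asList(1, 2, 3));

    /** Evaluates the predicate as a chain of comparisons, one per literal. */
    static boolean orChain(int a) {
        return a == 1 || a == 2 || a == 3;
    }

    /** Evaluates the same predicate as a single hash lookup, i.e. "a IN (1, 2, 3)". */
    static boolean inSet(int a) {
        return IN_SET.contains(a);
    }

    public static void main(String[] args) {
        for (int a = 0; a <= 4; a++) {
            System.out.println(a + ": " + orChain(a) + " / " + inSet(a));
        }
    }
}
```

The comparison chain grows with the number of literals, while the set lookup stays a single hash probe per record.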

[GitHub] [flink] yittg commented on pull request #15768: [FLINK-22451][table] Support (*) as parameter of UDFs in Table API

yittg commented on pull request #15768: URL: https://github.com/apache/flink/pull/15768#issuecomment-844825760 Great, really appreciate your help. @wuchong

[jira] [Commented] (FLINK-22720) UpsertKafkaTableITCase.testAggregate fail due to ConcurrentModificationException

[

https://issues.apache.org/jira/browse/FLINK-22720?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17348133#comment-17348133

]

Jark Wu commented on FLINK-22720:

-

I will take this.

> UpsertKafkaTableITCase.testAggregate fail due to

> ConcurrentModificationException

>

>

> Key: FLINK-22720

> URL: https://issues.apache.org/jira/browse/FLINK-22720

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka, Tests

>Affects Versions: 1.14.0

>Reporter: Guowei Ma

>Priority: Major

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=18151&view=logs&j=c5612577-f1f7-5977-6ff6-7432788526f7&t=53f6305f-55e6-561c-8f1e-3a1dde2c77df&l=6613

> {code:java}

> 2021-05-19T21:28:02.8689083Z May 19 21:28:02 [ERROR] testAggregate[format =

> avro](org.apache.flink.streaming.connectors.kafka.table.UpsertKafkaTableITCase)

> Time elapsed: 2.067 s <<< ERROR!

> 2021-05-19T21:28:02.8708337Z May 19 21:28:02

> java.util.ConcurrentModificationException

> 2021-05-19T21:28:02.8710333Z May 19 21:28:02 at

> java.util.HashMap$HashIterator.nextNode(HashMap.java:1445)

> 2021-05-19T21:28:02.8712083Z May 19 21:28:02 at

> java.util.HashMap$ValueIterator.next(HashMap.java:1474)

> 2021-05-19T21:28:02.8712680Z May 19 21:28:02 at

> java.util.AbstractCollection.toArray(AbstractCollection.java:141)

> 2021-05-19T21:28:02.8713142Z May 19 21:28:02 at

> java.util.ArrayList.addAll(ArrayList.java:583)

> 2021-05-19T21:28:02.8716029Z May 19 21:28:02 at

> org.apache.flink.table.planner.factories.TestValuesRuntimeFunctions.lambda$getResults$0(TestValuesRuntimeFunctions.java:114)

> 2021-05-19T21:28:02.8717007Z May 19 21:28:02 at

> java.util.HashMap$Values.forEach(HashMap.java:981)

> 2021-05-19T21:28:02.8718041Z May 19 21:28:02 at

> org.apache.flink.table.planner.factories.TestValuesRuntimeFunctions.getResults(TestValuesRuntimeFunctions.java:114)

> 2021-05-19T21:28:02.8719339Z May 19 21:28:02 at

> org.apache.flink.table.planner.factories.TestValuesTableFactory.getResults(TestValuesTableFactory.java:184)

> 2021-05-19T21:28:02.8720309Z May 19 21:28:02 at

> org.apache.flink.streaming.connectors.kafka.table.KafkaTableTestUtils.waitingExpectedResults(KafkaTableTestUtils.java:82)

> 2021-05-19T21:28:02.8721311Z May 19 21:28:02 at

> org.apache.flink.streaming.connectors.kafka.table.UpsertKafkaTableITCase.wordFreqToUpsertKafka(UpsertKafkaTableITCase.java:440)

> 2021-05-19T21:28:02.8730402Z May 19 21:28:02 at

> org.apache.flink.streaming.connectors.kafka.table.UpsertKafkaTableITCase.testAggregate(UpsertKafkaTableITCase.java:73)

> 2021-05-19T21:28:02.8731390Z May 19 21:28:02 at

> sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> 2021-05-19T21:28:02.8732095Z May 19 21:28:02 at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> 2021-05-19T21:28:02.8732935Z May 19 21:28:02 at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> 2021-05-19T21:28:02.8733726Z May 19 21:28:02 at

> java.lang.reflect.Method.invoke(Method.java:498)

> 2021-05-19T21:28:02.8734598Z May 19 21:28:02 at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

> 2021-05-19T21:28:02.8735450Z May 19 21:28:02 at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> 2021-05-19T21:28:02.8736313Z May 19 21:28:02 at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

> 2021-05-19T21:28:02.8737329Z May 19 21:28:02 at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> 2021-05-19T21:28:02.8738165Z May 19 21:28:02 at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> 2021-05-19T21:28:02.8738989Z May 19 21:28:02 at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> 2021-05-19T21:28:02.8739741Z May 19 21:28:02 at

> org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

> 2021-05-19T21:28:02.8740563Z May 19 21:28:02 at

> org.apache.flink.util.TestNameProvider$1.evaluate(TestNameProvider.java:45)

> 2021-05-19T21:28:02.8741340Z May 19 21:28:02 at

> org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:55)

> 2021-05-19T21:28:02.8742077Z May 19 21:28:02 at

> org.junit.rules.RunRules.evaluate(RunRules.java:20)

> 2021-05-19T21:28:02.8742802Z May 19 21:28:02 at

> org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)

> 2021-05-19T21:28:02.8743594Z May 19 21:28:02 at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)

> 2021-05-19T21:28:02.8744811Z May 19 21:28:02 at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassR

[jira] [Assigned] (FLINK-22720) UpsertKafkaTableITCase.testAggregate fail due to ConcurrentModificationException

[

https://issues.apache.org/jira/browse/FLINK-22720?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jark Wu reassigned FLINK-22720:

---

Assignee: Jark Wu

> UpsertKafkaTableITCase.testAggregate fail due to

> ConcurrentModificationException

>

>

> Key: FLINK-22720

> URL: https://issues.apache.org/jira/browse/FLINK-22720

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka, Tests

>Affects Versions: 1.14.0

>Reporter: Guowei Ma

>Assignee: Jark Wu

>Priority: Major

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=18151&view=logs&j=c5612577-f1f7-5977-6ff6-7432788526f7&t=53f6305f-55e6-561c-8f1e-3a1dde2c77df&l=6613

> {code:java}

> 2021-05-19T21:28:02.8689083Z May 19 21:28:02 [ERROR] testAggregate[format =

> avro](org.apache.flink.streaming.connectors.kafka.table.UpsertKafkaTableITCase)

> Time elapsed: 2.067 s <<< ERROR!

> 2021-05-19T21:28:02.8708337Z May 19 21:28:02

> java.util.ConcurrentModificationException

> 2021-05-19T21:28:02.8710333Z May 19 21:28:02 at

> java.util.HashMap$HashIterator.nextNode(HashMap.java:1445)

> 2021-05-19T21:28:02.8712083Z May 19 21:28:02 at

> java.util.HashMap$ValueIterator.next(HashMap.java:1474)

> 2021-05-19T21:28:02.8712680Z May 19 21:28:02 at

> java.util.AbstractCollection.toArray(AbstractCollection.java:141)

> 2021-05-19T21:28:02.8713142Z May 19 21:28:02 at

> java.util.ArrayList.addAll(ArrayList.java:583)

> 2021-05-19T21:28:02.8716029Z May 19 21:28:02 at

> org.apache.flink.table.planner.factories.TestValuesRuntimeFunctions.lambda$getResults$0(TestValuesRuntimeFunctions.java:114)

> 2021-05-19T21:28:02.8717007Z May 19 21:28:02 at

> java.util.HashMap$Values.forEach(HashMap.java:981)

> 2021-05-19T21:28:02.8718041Z May 19 21:28:02 at

> org.apache.flink.table.planner.factories.TestValuesRuntimeFunctions.getResults(TestValuesRuntimeFunctions.java:114)

> 2021-05-19T21:28:02.8719339Z May 19 21:28:02 at

> org.apache.flink.table.planner.factories.TestValuesTableFactory.getResults(TestValuesTableFactory.java:184)

> 2021-05-19T21:28:02.8720309Z May 19 21:28:02 at

> org.apache.flink.streaming.connectors.kafka.table.KafkaTableTestUtils.waitingExpectedResults(KafkaTableTestUtils.java:82)

> 2021-05-19T21:28:02.8721311Z May 19 21:28:02 at

> org.apache.flink.streaming.connectors.kafka.table.UpsertKafkaTableITCase.wordFreqToUpsertKafka(UpsertKafkaTableITCase.java:440)

> 2021-05-19T21:28:02.8730402Z May 19 21:28:02 at

> org.apache.flink.streaming.connectors.kafka.table.UpsertKafkaTableITCase.testAggregate(UpsertKafkaTableITCase.java:73)

> 2021-05-19T21:28:02.8731390Z May 19 21:28:02 at

> sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> 2021-05-19T21:28:02.8732095Z May 19 21:28:02 at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> 2021-05-19T21:28:02.8732935Z May 19 21:28:02 at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> 2021-05-19T21:28:02.8733726Z May 19 21:28:02 at

> java.lang.reflect.Method.invoke(Method.java:498)

> 2021-05-19T21:28:02.8734598Z May 19 21:28:02 at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

> 2021-05-19T21:28:02.8735450Z May 19 21:28:02 at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> 2021-05-19T21:28:02.8736313Z May 19 21:28:02 at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

> 2021-05-19T21:28:02.8737329Z May 19 21:28:02 at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> 2021-05-19T21:28:02.8738165Z May 19 21:28:02 at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> 2021-05-19T21:28:02.8738989Z May 19 21:28:02 at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> 2021-05-19T21:28:02.8739741Z May 19 21:28:02 at

> org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

> 2021-05-19T21:28:02.8740563Z May 19 21:28:02 at

> org.apache.flink.util.TestNameProvider$1.evaluate(TestNameProvider.java:45)

> 2021-05-19T21:28:02.8741340Z May 19 21:28:02 at

> org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:55)

> 2021-05-19T21:28:02.8742077Z May 19 21:28:02 at

> org.junit.rules.RunRules.evaluate(RunRules.java:20)

> 2021-05-19T21:28:02.8742802Z May 19 21:28:02 at

> org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)

> 2021-05-19T21:28:02.8743594Z May 19 21:28:02 at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)

> 2021-05-19T21:28:02.8744811Z May 19 21:28:02 at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)

>
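The trace above shows `ArrayList.addAll` copying the values of a `HashMap` whose contents changed during the copy. A minimal sketch of that failure mode follows, together with one common remedy. It rests on two assumptions: that the map in the test was being written by another thread while results were collected, and that guarding reads and writes with a shared lock would be acceptable. The class `CmeSketch` is hypothetical and is not necessarily the fix adopted for FLINK-22720.

```java
import java.util.ArrayList;
import java.util.ConcurrentModificationException;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch, not Flink test code.
final class CmeSketch {
    private final Map<Integer, String> results = new HashMap<>();
    private final Object lock = new Object();

    /** Writer side: every mutation happens under the lock. */
    void put(int key, String value) {
        synchronized (lock) {
            results.put(key, value);
        }
    }

    /** Reader side: copy the values under the same lock so the copy cannot race with a write. */
    List<String> snapshot() {
        synchronized (lock) {
            return new ArrayList<>(results.values());
        }
    }

    /** Reproduces the exception deterministically by modifying a map while iterating over it. */
    static boolean failsWhenModifiedDuringIteration() {
        Map<Integer, String> map = new HashMap<>();
        map.put(1, "a");
        map.put(2, "b");
        try {
            for (String ignored : map.values()) {
                map.put(3, "c"); // structural modification while the iterator is live
            }
            return false;
        } catch (ConcurrentModificationException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println("unsynchronized iteration fails: " + failsWhenModifiedDuringIteration());
        CmeSketch sketch = new CmeSketch();
        sketch.put(1, "a");
        sketch.put(2, "b");
        System.out.println("snapshot size: " + sketch.snapshot().size());
    }
}
```

The snapshot is an independent copy, so later writes do not affect a list that has already been returned.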

[jira] [Commented] (FLINK-21979) Job can be restarted from the beginning after it reached a terminal state

[

https://issues.apache.org/jira/browse/FLINK-21979?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17348137#comment-17348137

]

Till Rohrmann commented on FLINK-21979:

---

We need to fix this problem in {{1.14.0}}.

> Job can be restarted from the beginning after it reached a terminal state

> -

>

> Key: FLINK-21979

> URL: https://issues.apache.org/jira/browse/FLINK-21979

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Coordination

>Affects Versions: 1.11.3, 1.12.2, 1.13.0

>Reporter: Till Rohrmann

>Priority: Critical

> Fix For: 1.14.0

>

>

> Currently, the {{JobMaster}} removes all checkpoints after a job reaches a

> globally terminal state. Then it notifies the {{Dispatcher}} about the

> termination of the job. The {{Dispatcher}} then removes the job from the

> {{SubmittedJobGraphStore}}. If the {{Dispatcher}} process fails before doing

> that it might get restarted. In this case, the {{Dispatcher}} would still

> find the job in the {{SubmittedJobGraphStore}} and recover it. Since the

> {{CompletedCheckpointStore}} is empty, it would start executing this job from

> the beginning.

> I think we must not remove job state before the job has been marked as

> done or made inaccessible for any restarted processes. Concretely, we should

> first remove the job from the {{SubmittedJobGraphStore}} and only then delete

> the checkpoints. Ideally all the job related cleanup operations happen

> atomically.
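The effect of the cleanup order can be shown with a small in-memory model. Everything in this sketch is hypothetical: `CleanupOrderSketch` stands in for the interplay of the job graph store and the checkpoint store, a "crash" is modelled as returning after the first cleanup step, and none of it is Flink's actual `Dispatcher` or `JobMaster` code.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical in-memory model of the two stores, not Flink code.
final class CleanupOrderSketch {
    enum Recovery { NOTHING_TO_RECOVER, RESUME_FROM_CHECKPOINT, RESTART_FROM_BEGINNING }

    private final Set<String> jobGraphStore = new HashSet<>();
    private final Map<String, Long> latestCheckpoint = new HashMap<>();

    CleanupOrderSketch(String jobId, long checkpointId) {
        jobGraphStore.add(jobId);
        latestCheckpoint.put(jobId, checkpointId);
    }

    /** Runs the two cleanup steps in the given order, optionally "crashing" after the first one. */
    void cleanup(String jobId, boolean checkpointsFirst, boolean crashAfterFirstStep) {
        if (checkpointsFirst) {
            latestCheckpoint.remove(jobId);
            if (crashAfterFirstStep) {
                return;
            }
            jobGraphStore.remove(jobId);
        } else {
            jobGraphStore.remove(jobId);
            if (crashAfterFirstStep) {
                return;
            }
            latestCheckpoint.remove(jobId);
        }
    }

    /** What a restarted process would do with the state it finds. */
    Recovery recover(String jobId) {
        if (!jobGraphStore.contains(jobId)) {
            return Recovery.NOTHING_TO_RECOVER;
        }
        return latestCheckpoint.containsKey(jobId)
                ? Recovery.RESUME_FROM_CHECKPOINT
                : Recovery.RESTART_FROM_BEGINNING;
    }

    public static void main(String[] args) {
        CleanupOrderSketch current = new CleanupOrderSketch("job", 7L);
        current.cleanup("job", true, true);
        System.out.println("checkpoints first, then crash: " + current.recover("job"));

        CleanupOrderSketch proposed = new CleanupOrderSketch("job", 7L);
        proposed.cleanup("job", false, true);
        System.out.println("job graph first, then crash: " + proposed.recover("job"));
    }
}
```

In this model a crash under the proposed order leaves orphaned checkpoints behind but never re-executes the finished job, whereas the current order leads to a restart from the beginning.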


[jira] [Updated] (FLINK-21979) Job can be restarted from the beginning after it reached a terminal state

[

https://issues.apache.org/jira/browse/FLINK-21979?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Till Rohrmann updated FLINK-21979:

--

Labels: (was: stale-critical)

> Job can be restarted from the beginning after it reached a terminal state

> -

>

> Key: FLINK-21979

> URL: https://issues.apache.org/jira/browse/FLINK-21979

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Coordination

>Affects Versions: 1.11.3, 1.12.2, 1.13.0

>Reporter: Till Rohrmann

>Priority: Critical

> Fix For: 1.14.0

>

>

> Currently, the {{JobMaster}} removes all checkpoints after a job reaches a

> globally terminal state. Then it notifies the {{Dispatcher}} about the

> termination of the job. The {{Dispatcher}} then removes the job from the

> {{SubmittedJobGraphStore}}. If the {{Dispatcher}} process fails before doing

> that it might get restarted. In this case, the {{Dispatcher}} would still

> find the job in the {{SubmittedJobGraphStore}} and recover it. Since the

> {{CompletedCheckpointStore}} is empty, it would start executing this job from

> the beginning.

> I think we must not remove job state before the job has been marked as

> done or made inaccessible for any restarted processes. Concretely, we should

> first remove the job from the {{SubmittedJobGraphStore}} and only then delete

> the checkpoints. Ideally all the job related cleanup operations happen

> atomically.


[jira] [Updated] (FLINK-20427) Remove CheckpointConfig.setPreferCheckpointForRecovery because it can lead to data loss

[

https://issues.apache.org/jira/browse/FLINK-20427?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Till Rohrmann updated FLINK-20427:

--

Labels: (was: stale-critical)

> Remove CheckpointConfig.setPreferCheckpointForRecovery because it can lead to

> data loss

> ---

>

> Key: FLINK-20427

> URL: https://issues.apache.org/jira/browse/FLINK-20427

> Project: Flink

> Issue Type: Bug

> Components: API / DataStream, Runtime / Checkpointing

>Affects Versions: 1.12.0

>Reporter: Till Rohrmann

>Priority: Critical

> Fix For: 1.14.0

>

>

> The {{CheckpointConfig.setPreferCheckpointForRecovery}} setting configures

> whether Flink prefers checkpoints for recovery if the

> {{CompletedCheckpointStore}} contains savepoints and checkpoints. This is

> problematic because due to this feature, Flink might prefer older checkpoints

> over newer savepoints for recovery. Since some components expect that

> the latest checkpoint/savepoint is always used (e.g. the

> {{SourceCoordinator}}), it breaks assumptions and can lead to

> {{SourceSplits}} which are not read. This effectively means that the system

> loses data. Similarly, this behaviour can cause exactly-once sinks to

> output results multiple times, which violates the processing guarantees.

> Hence, I believe that we should remove this setting because it changes

> Flink's behaviour in some very significant way potentially w/o the user

> noticing.
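The selection behaviour described above can be modelled in a few lines. This is a hypothetical sketch: `RecoverySelectionSketch`, its `Snapshot` type and the `select` method are illustrative names, the list is assumed to be non-empty and ordered from oldest to newest, and the logic only mirrors the description in this issue, not Flink's `CompletedCheckpointStore` implementation.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical model of the recovery choice, not Flink code.
final class RecoverySelectionSketch {

    static final class Snapshot {
        final long id;
        final boolean savepoint;

        Snapshot(long id, boolean savepoint) {
            this.id = id;
            this.savepoint = savepoint;
        }
    }

    /**
     * Picks the snapshot to restore from. The list must be non-empty and
     * ordered from oldest to newest.
     */
    static Snapshot select(List<Snapshot> completed, boolean preferCheckpoint) {
        Snapshot latest = completed.get(completed.size() - 1);
        if (!preferCheckpoint || !latest.savepoint) {
            return latest;
        }
        // The latest entry is a savepoint: walk backwards to the newest checkpoint.
        for (int i = completed.size() - 1; i >= 0; i--) {
            if (!completed.get(i).savepoint) {
                return completed.get(i);
            }
        }
        return latest; // only savepoints exist
    }

    public static void main(String[] args) {
        List<Snapshot> completed = Arrays.asList(
                new Snapshot(1, false), new Snapshot(2, false), new Snapshot(3, true));
        System.out.println("preferCheckpoint=true  -> " + select(completed, true).id);
        System.out.println("preferCheckpoint=false -> " + select(completed, false).id);
    }
}
```

With the preference enabled, the model restores from checkpoint 2 although savepoint 3 is newer, so everything recorded between the two is ignored on recovery.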


[jira] [Commented] (FLINK-20427) Remove CheckpointConfig.setPreferCheckpointForRecovery because it can lead to data loss

[

https://issues.apache.org/jira/browse/FLINK-20427?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17348138#comment-17348138

]

Till Rohrmann commented on FLINK-20427:

---

I think this is still a very important issue to sort out and fix because it can

lead to unwanted data loss.

> Remove CheckpointConfig.setPreferCheckpointForRecovery because it can lead to

> data loss

> ---

>

> Key: FLINK-20427

> URL: https://issues.apache.org/jira/browse/FLINK-20427

> Project: Flink

> Issue Type: Bug

> Components: API / DataStream, Runtime / Checkpointing

>Affects Versions: 1.12.0

>Reporter: Till Rohrmann

>Priority: Critical

> Fix For: 1.14.0

>

>

> The {{CheckpointConfig.setPreferCheckpointForRecovery}} setting configures

> whether Flink prefers checkpoints for recovery if the

> {{CompletedCheckpointStore}} contains savepoints and checkpoints. This is

> problematic because, due to this feature, Flink might prefer older checkpoints

> over newer savepoints for recovery. Since some components expect that the

> latest checkpoint/savepoint is always used (e.g. the

> {{SourceCoordinator}}), it breaks assumptions and can lead to

> {{SourceSplits}} which are not read. This effectively means that the system

> loses data. Similarly, this behaviour can cause exactly-once sinks to

> output results multiple times, which violates the processing guarantees.

> Hence, I believe that we should remove this setting because it changes

> Flink's behaviour in a very significant way, potentially without the user

> noticing.
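
The selection problem described in the issue can be illustrated with a small, self-contained simulation. This is only a sketch and not Flink's implementation: the `Snapshot` class and the `selectForRecovery` method are hypothetical names invented for the illustration, and the store is modelled as a plain list ordered by snapshot ID.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

final class RestoreSelectionSketch {

    /** Hypothetical stand-in for one entry of a completed checkpoint store. */
    static final class Snapshot {
        final long id;
        final boolean isSavepoint;

        Snapshot(long id, boolean isSavepoint) {
            this.id = id;
            this.isSavepoint = isSavepoint;
        }
    }

    /**
     * Returns the snapshot to restore from. With preferCheckpoint enabled, the
     * newest checkpoint wins even if a newer savepoint exists in the store.
     */
    static Optional<Snapshot> selectForRecovery(List<Snapshot> store, boolean preferCheckpoint) {
        Comparator<Snapshot> byId = Comparator.comparingLong(s -> s.id);
        if (preferCheckpoint) {
            Optional<Snapshot> latestCheckpoint =
                    store.stream().filter(s -> !s.isSavepoint).max(byId);
            if (latestCheckpoint.isPresent()) {
                return latestCheckpoint;
            }
        }
        // Default: always take the newest snapshot, checkpoint or savepoint.
        return store.stream().max(byId);
    }

    public static void main(String[] args) {
        // Two checkpoints followed by a newer savepoint.
        List<Snapshot> store = List.of(
                new Snapshot(1, false), new Snapshot(2, false), new Snapshot(3, true));

        System.out.println(selectForRecovery(store, false).get().id); // prints 3
        System.out.println(selectForRecovery(store, true).get().id);  // prints 2
    }
}
```

With the preference enabled, recovery goes back to snapshot 2 although snapshot 3 is newer. Any component that assumes the latest snapshot was restored would then be out of sync with the restored state.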


[jira] [Updated] (FLINK-21928) DuplicateJobSubmissionException after JobManager failover

[

https://issues.apache.org/jira/browse/FLINK-21928?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Till Rohrmann updated FLINK-21928:

--

Labels: (was: stale-critical)

> DuplicateJobSubmissionException after JobManager failover

> ---

>

> Key: FLINK-21928

> URL: https://issues.apache.org/jira/browse/FLINK-21928

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Coordination

>Affects Versions: 1.10.3, 1.11.3, 1.12.2, 1.13.0

> Environment: StandaloneApplicationClusterEntryPoint using a fixed job

> ID, High Availability enabled

>Reporter: Ufuk Celebi

>Priority: Critical

> Fix For: 1.14.0

>

>

> Consider the following scenario:

> * Environment: StandaloneApplicationClusterEntryPoint using a fixed job ID,

> high availability enabled

> * Flink job reaches a globally terminal state

> * Flink job is marked as finished in the high-availability service's

> RunningJobsRegistry

> * The JobManager fails over

> On recovery, the [Dispatcher throws DuplicateJobSubmissionException, because

> the job is marked as done in the

> RunningJobsRegistry|https://github.com/apache/flink/blob/release-1.12.2/flink-runtime/src/main/java/org/apache/flink/runtime/dispatcher/Dispatcher.java#L332-L340].

> When this happens, users cannot get out of the situation without manually

> redeploying the JobManager process and changing the job ID^1^.

> The desired semantics are that we don't want to re-execute a job that has

> reached a globally terminal state. In this particular case, we know that the

> job has already reached such a state (as it has been marked in the registry).

> Therefore, we could handle this case by executing the regular termination

> sequence instead of throwing a DuplicateJobSubmission.

> ---

> ^1^ With ZooKeeper HA, the respective node is not ephemeral. In Kubernetes

> HA, there is no notion of ephemeral data that is tied to a session in the

> first place afaik.
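
The difference between the reported behaviour and the handling proposed in FLINK-21928 can be sketched as follows. This is a simplified model under stated assumptions, not the Dispatcher code: the class, the enums, and the method names are hypothetical, and the registry is reduced to an in-memory map from job ID to status.

```java
import java.util.HashMap;
import java.util.Map;

final class TerminalJobRecoverySketch {

    /** Hypothetical scheduling states kept by a running-jobs registry. */
    enum SchedulingStatus { PENDING, RUNNING, DONE }

    /** Hypothetical outcomes of a job submission during recovery. */
    enum RecoveryAction { RUN_JOB, REJECT_DUPLICATE, RUN_TERMINATION_SEQUENCE }

    // In a real HA setup this state survives the JobManager failover.
    private final Map<String, SchedulingStatus> registry = new HashMap<>();

    void markStatus(String jobId, SchedulingStatus status) {
        registry.put(jobId, status);
    }

    /** Reported behaviour: a job already marked DONE is rejected as a duplicate. */
    RecoveryAction recoverCurrent(String jobId) {
        return isDone(jobId) ? RecoveryAction.REJECT_DUPLICATE : RecoveryAction.RUN_JOB;
    }

    /** Proposed behaviour: a job already marked DONE goes through regular termination. */
    RecoveryAction recoverProposed(String jobId) {
        return isDone(jobId) ? RecoveryAction.RUN_TERMINATION_SEQUENCE : RecoveryAction.RUN_JOB;
    }

    private boolean isDone(String jobId) {
        return registry.getOrDefault(jobId, SchedulingStatus.PENDING) == SchedulingStatus.DONE;
    }

    public static void main(String[] args) {
        TerminalJobRecoverySketch sketch = new TerminalJobRecoverySketch();
        // The job with a fixed ID finished before the failover.
        sketch.markStatus("fixed-job-id", SchedulingStatus.DONE);

        System.out.println(sketch.recoverCurrent("fixed-job-id"));  // prints REJECT_DUPLICATE
        System.out.println(sketch.recoverProposed("fixed-job-id")); // prints RUN_TERMINATION_SEQUENCE
    }
}
```

In both variants the finished job is not re-executed; the proposal only changes how the cluster leaves the situation.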

[jira] [Commented] (FLINK-22437) Miss adding parallesim for filter operator in batch mode

[

https://issues.apache.org/jira/browse/FLINK-22437?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17348139#comment-17348139

]

godfrey he commented on FLINK-22437:

[~zoucao] yes, I think that is the easiest approach

> Miss adding parallesim for filter operator in batch mode

>

>

> Key: FLINK-22437

> URL: https://issues.apache.org/jira/browse/FLINK-22437

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Affects Versions: 1.12.2

>Reporter: zoucao

>Priority: Major

>

> When I execute the batch SQL below in flink-1.12.2, I find lots of small

> files in HDFS. In the filesystem connector, `GroupedPartitionWriter` is

> used, and it closes the last partition if a new record does not belong to the

> existing partition. The phenomenon occurs if records of more than one

> partition are sent to the filesystem sink at the same time. The Hive source

> can determine parallelism by the number of files and partitions, and the

> parallelism will be extended by the sort operator, but in

> `CommonPhysicalSink#createSinkTransformation`, a filter operator is added

> to support `SinkNotNullEnforcer`, and no parallelism is set for it, so the

> filesystem sink operator cannot get the correct parallelism from the input stream.

> {code:java}

> CREATE CATALOG myHive with (

> 'type'='hive',

> 'property-version'='1',

> 'default-database' = 'flink_sql_online_test'

> );

> -- SET table.sql-dialect=hive;

> -- CREATE TABLE IF NOT EXISTS myHive.flink_sql_online_test.hive_sink (

> --`timestamp` BIGINT,

> --`time` STRING,

> --id BIGINT,

> --product STRING,

> --price DOUBLE,

> --canSell STRING,

> --selledNum BIGINT

> -- ) PARTITIONED BY (

> --dt STRING,

> --`hour` STRING,

> -- `min` STRING

> -- ) TBLPROPERTIES (

> --'partition.time-extractor.timestamp-pattern'='$dt $hr:$min:00',

> --'sink.partition-commit.trigger'='partition-time',

> --'sink.partition-commit.delay'='1 min',

> --'sink.partition-commit.policy.kind'='metastore,success-file'

> -- );

> create table fs_sink (

> `timestamp` BIGINT,

> `time` STRING,

> id BIGINT,

> product STRING,

> price DOUBLE,

> canSell STRING,

> selledNum BIGINT,

> dt STRING,

> `hour` STRING,

> `min` STRING

> ) PARTITIONED BY (dt, `hour`, `min`) with (

> 'connector'='filesystem',

> 'path'='hdfs://',

> 'format'='csv'

> );

> insert into fs_sink

> select * from myHive.flink_sql_online_test.hive_sink;

> {code}

> I think this problem can be fixed by setting a parallelism for it, like this:

> {code:java}

> val dataStream = new DataStream(env, inputTransformation).filter(enforcer)

> .setParallelism(inputTransformation.getParallelism)

> {code}

>
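
Why interleaved partitions produce many small files can be seen in a small simulation of a writer that keeps only one partition open at a time. This is a sketch of the behaviour described in the report, not the connector's code: `countFilesOpened` is a hypothetical helper, and each record is represented only by its partition key.

```java
import java.util.List;

final class GroupedWriterSketch {

    /**
     * Counts the files opened by a writer that closes the current partition
     * file as soon as a record for a different partition arrives.
     */
    static int countFilesOpened(List<String> partitionKeys) {
        int filesOpened = 0;
        String currentPartition = null;
        for (String key : partitionKeys) {
            if (!key.equals(currentPartition)) {
                // Partition changed: the previous file is closed, a new one is opened.
                filesOpened++;
                currentPartition = key;
            }
        }
        return filesOpened;
    }

    public static void main(String[] args) {
        // Input grouped by partition: one file per partition.
        List<String> grouped = List.of("a", "a", "a", "b", "b", "b");
        // Same records, but partitions arrive interleaved at the sink.
        List<String> interleaved = List.of("a", "b", "a", "b", "a", "b");

        System.out.println(countFilesOpened(grouped));     // prints 2
        System.out.println(countFilesOpened(interleaved)); // prints 6
    }
}
```

The same six records yield two files when they arrive grouped and six files when they arrive interleaved, which matches the small-file symptom in the report.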


[jira] [Assigned] (FLINK-22437) Miss adding parallesim for filter operator in batch mode

[

https://issues.apache.org/jira/browse/FLINK-22437?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

godfrey he reassigned FLINK-22437:

--

Assignee: zoucao



[jira] [Comment Edited] (FLINK-22437) Miss adding parallesim for filter operator in batch mode

[

https://issues.apache.org/jira/browse/FLINK-22437?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17348139#comment-17348139

]

godfrey he edited comment on FLINK-22437 at 5/20/21, 8:03 AM:

--

[~zoucao] yes, I think that is the easiest approach. Assigned to you.

was (Author: godfreyhe):

[~zoucao] yes, I think that is the easiest approach

> Miss adding parallesim for filter operator in batch mode

>

>

> Key: FLINK-22437

> URL: https://issues.apache.org/jira/browse/FLINK-22437

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Affects Versions: 1.12.2

>Reporter: zoucao

>Assignee: zoucao

>Priority: Major

>

> When I execute the batch SQL below in flink-1.12.2, I find lots of small

> files in HDFS. In the filesystem connector, `GroupedPartitionWriter` is

> used, and it closes the last partition if a new record does not belong to the

> existing partition. The phenomenon occurs if records of more than one

> partition are sent to the filesystem sink at the same time. The Hive source

> can determine parallelism by the number of files and partitions, and the

> parallelism will be extended by the sort operator, but in

> `CommonPhysicalSink#createSinkTransformation`, a filter operator is added

> to support `SinkNotNullEnforcer`, and no parallelism is set for it, so the

> filesystem sink operator cannot get the correct parallelism from the input stream.

> {code:java}

> CREATE CATALOG myHive with (

> 'type'='hive',

> 'property-version'='1',

> 'default-database' = 'flink_sql_online_test'

> );

> -- SET table.sql-dialect=hive;

> -- CREATE TABLE IF NOT EXISTS myHive.flink_sql_online_test.hive_sink (

> --`timestamp` BIGINT,

> --`time` STRING,

> --id BIGINT,

> --product STRING,

> --price DOUBLE,

> --canSell STRING,

> --selledNum BIGINT

> -- ) PARTITIONED BY (

> --dt STRING,

> --`hour` STRING,

> -- `min` STRING

> -- ) TBLPROPERTIES (

> --'partition.time-extractor.timestamp-pattern'='$dt $hr:$min:00',

> --'sink.partition-commit.trigger'='partition-time',

> --'sink.partition-commit.delay'='1 min',

> --'sink.partition-commit.policy.kind'='metastore,success-file'

> -- );

> create table fs_sink (

> `timestamp` BIGINT,

> `time` STRING,

> id BIGINT,

> product STRING,

> price DOUBLE,

> canSell STRING,

> selledNum BIGINT,

> dt STRING,

> `hour` STRING,

> `min` STRING

> ) PARTITIONED BY (dt, `hour`, `min`) with (

> 'connector'='filesystem',

> 'path'='hdfs://',

> 'format'='csv'

> );

> insert into fs_sink

> select * from myHive.flink_sql_online_test.hive_sink;

> {code}

> I think this problem can be fixed by setting a parallelism for it, like this:

> {code:java}