[GitHub] [flink] flinkbot edited a comment on pull request #17902: [FLINK-25050][docs-zh] Translate "Metrics" page of "Operations" into Chinese

flinkbot edited a comment on pull request #17902:

URL: https://github.com/apache/flink/pull/17902#issuecomment-978895303

## CI report:

* 8a398a42490ca1ab93a23b93d9e8c3c2abf14670 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27038)
* 17af34f57d57f6e2731cab1d54a9f4765f383e74 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27250)
* 603f6ad9b9f8cf86cf63e7c6dc94dc56e3a2fd76 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27254)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

--

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] ZhijieYang commented on a change in pull request #17902: [FLINK-25050][docs-zh] Translate "Metrics" page of "Operations" into Chinese

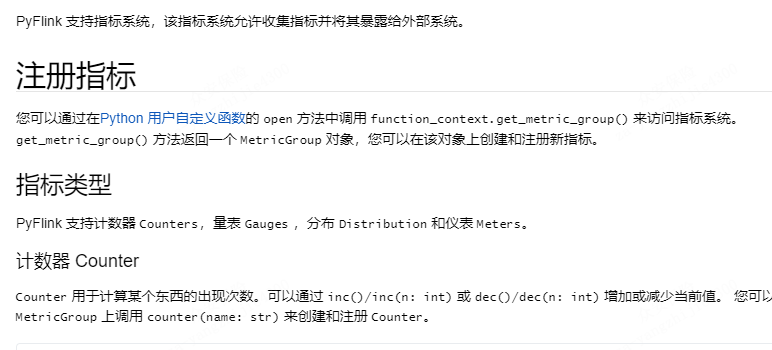

ZhijieYang commented on a change in pull request #17902:

URL: https://github.com/apache/flink/pull/17902#discussion_r759015168

##

File path: docs/content.zh/docs/ops/metrics.md

##

@@ -28,26 +28,26 @@ under the License.
 # 指标
-Flink exposes a metric system that allows gathering and exposing metrics to external systems.
+Flink 公开了一个指标系统,允许收集和公开指标给外部系统。
-## Registering metrics
+## 注册指标

Review comment:

Sorry, I can't find the link here. What do you mean?

[GitHub] [flink] flinkbot edited a comment on pull request #17902: [FLINK-25050][docs-zh] Translate "Metrics" page of "Operations" into Chinese

flinkbot edited a comment on pull request #17902:

URL: https://github.com/apache/flink/pull/17902#issuecomment-978895303

## CI report:

* 8a398a42490ca1ab93a23b93d9e8c3c2abf14670 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27038)
* 17af34f57d57f6e2731cab1d54a9f4765f383e74 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27250)
* 603f6ad9b9f8cf86cf63e7c6dc94dc56e3a2fd76 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27254)
* e11b9136c39e3ab98011fdb54b5c9e5ea32e18e8 UNKNOWN

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink-ml] zhipeng93 commented on a change in pull request #28: [Flink-24556] Add Estimator and Transformer for logistic regression

zhipeng93 commented on a change in pull request #28:

URL: https://github.com/apache/flink-ml/pull/28#discussion_r759012504

##

File path:

flink-ml-lib/src/main/java/org/apache/flink/ml/classification/linear/LogisticRegression.java

##

@@ -0,0 +1,653 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.ml.classification.linear;

+

+import org.apache.flink.api.common.functions.RichMapFunction;

+import org.apache.flink.api.common.state.ListState;

+import org.apache.flink.api.common.state.ListStateDescriptor;

+import org.apache.flink.api.common.typeinfo.BasicTypeInfo;

+import org.apache.flink.api.common.typeinfo.PrimitiveArrayTypeInfo;

+import org.apache.flink.api.common.typeinfo.TypeInformation;

+import org.apache.flink.api.common.typeutils.base.DoubleComparator;

+import org.apache.flink.api.java.tuple.Tuple2;

+import org.apache.flink.api.java.tuple.Tuple3;

+import org.apache.flink.api.java.typeutils.TupleTypeInfo;

+import org.apache.flink.iteration.DataStreamList;

+import org.apache.flink.iteration.IterationBody;

+import org.apache.flink.iteration.IterationBodyResult;

+import org.apache.flink.iteration.IterationConfig;

+import org.apache.flink.iteration.IterationConfig.OperatorLifeCycle;

+import org.apache.flink.iteration.IterationListener;

+import org.apache.flink.iteration.Iterations;

+import org.apache.flink.iteration.ReplayableDataStreamList;

+import org.apache.flink.iteration.operator.OperatorStateUtils;

+import org.apache.flink.ml.api.Estimator;

+import org.apache.flink.ml.common.broadcast.BroadcastUtils;

+import org.apache.flink.ml.common.datastream.DataStreamUtils;

+import org.apache.flink.ml.common.iteration.TerminateOnMaxIterOrTol;

+import org.apache.flink.ml.linalg.BLAS;

+import org.apache.flink.ml.param.Param;

+import org.apache.flink.ml.util.ParamUtils;

+import org.apache.flink.ml.util.ReadWriteUtils;

+import org.apache.flink.runtime.state.StateInitializationContext;

+import org.apache.flink.runtime.state.StateSnapshotContext;

+import org.apache.flink.streaming.api.datastream.DataStream;

+import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

+import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

+import org.apache.flink.streaming.api.operators.AbstractStreamOperator;

+import org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator;

+import org.apache.flink.streaming.api.operators.BoundedMultiInput;

+import org.apache.flink.streaming.api.operators.BoundedOneInput;

+import org.apache.flink.streaming.api.operators.OneInputStreamOperator;

+import org.apache.flink.streaming.api.operators.TwoInputStreamOperator;

+import org.apache.flink.streaming.runtime.streamrecord.StreamRecord;

+import org.apache.flink.table.api.Table;

+import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

+import org.apache.flink.table.api.internal.TableImpl;

+import org.apache.flink.util.Collector;

+import org.apache.flink.util.OutputTag;

+import org.apache.flink.util.Preconditions;

+

+import org.apache.commons.collections.IteratorUtils;

+

+import java.io.IOException;

+import java.util.ArrayList;

+import java.util.Arrays;

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+import java.util.Random;

+

+/**

+ * This class implements methods to train a logistic regression model. For details, see

+ * https://en.wikipedia.org/wiki/Logistic_regression.

+ */

+public class LogisticRegression

+implements Estimator,

+LogisticRegressionParams {

+

+private Map<Param<?>, Object> paramMap = new HashMap<>();

+

+private static final OutputTag> MODEL_OUTPUT =

+new OutputTag>("MODEL_OUTPUT") {};

+

+public LogisticRegression() {

+ParamUtils.initializeMapWithDefaultValues(this.paramMap, this);

+}

+

+@Override

+public Map<Param<?>, Object> getParamMap() {

+return paramMap;

+}

+

+@Override

+public void save(String path) throws IOException {

+ReadWriteUtils.saveMetadata(this, path);

+}

+

+public static LogisticRegression load(StreamExecutionEnvironment env, String path)

+throws IOException {

+return

[jira] [Commented] (FLINK-24348) Kafka ITCases (e.g. KafkaTableITCase) fail with "ContainerLaunch Container startup failed"

[

https://issues.apache.org/jira/browse/FLINK-24348?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450930#comment-17450930

]

Arvid Heise commented on FLINK-24348:

-

Okay the script "testcontainers_start.sh" is generated in KafkaContainer in

test containers 1.16.0. With

https://github.com/testcontainers/testcontainers-java/pull/2078 that was

changed and either it fixes the issue or should provide us better logs, so I'm

proposing to upgrade to 1.16.2.

> Kafka ITCases (e.g. KafkaTableITCase) fail with "ContainerLaunch Container

> startup failed"

> --

>

> Key: FLINK-24348

> URL: https://issues.apache.org/jira/browse/FLINK-24348

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.14.0, 1.15.0

>Reporter: Dawid Wysakowicz

>Assignee: Arvid Heise

>Priority: Critical

> Labels: test-stability

> Fix For: 1.15.0

>

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=24338=logs=b0097207-033c-5d9a-b48c-6d4796fbe60d=8338a7d2-16f7-52e5-f576-4b7b3071eb3d=7140

> {code}

> Sep 21 02:44:33 org.testcontainers.containers.ContainerLaunchException:

> Container startup failed

> Sep 21 02:44:33 at

> org.testcontainers.containers.GenericContainer.doStart(GenericContainer.java:334)

> Sep 21 02:44:33 at

> org.testcontainers.containers.KafkaContainer.doStart(KafkaContainer.java:97)

> Sep 21 02:44:33 at

> org.apache.flink.streaming.connectors.kafka.table.KafkaTableTestBase$1.doStart(KafkaTableTestBase.java:71)

> Sep 21 02:44:33 at

> org.testcontainers.containers.GenericContainer.start(GenericContainer.java:315)

> Sep 21 02:44:33 at

> org.testcontainers.containers.GenericContainer.starting(GenericContainer.java:1060)

> Sep 21 02:44:33 at

> org.testcontainers.containers.FailureDetectingExternalResource$1.evaluate(FailureDetectingExternalResource.java:29)

> Sep 21 02:44:33 at

> org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)

> Sep 21 02:44:33 at

> org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)

> Sep 21 02:44:33 at org.junit.rules.RunRules.evaluate(RunRules.java:20)

> Sep 21 02:44:33 at

> org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)

> Sep 21 02:44:33 at

> org.junit.runners.ParentRunner.run(ParentRunner.java:413)

> Sep 21 02:44:33 at org.junit.runner.JUnitCore.run(JUnitCore.java:137)

> Sep 21 02:44:33 at org.junit.runner.JUnitCore.run(JUnitCore.java:115)

> Sep 21 02:44:33 at

> org.junit.vintage.engine.execution.RunnerExecutor.execute(RunnerExecutor.java:43)

> Sep 21 02:44:33 at

> java.util.stream.ForEachOps$ForEachOp$OfRef.accept(ForEachOps.java:183)

> Sep 21 02:44:33 at

> java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:193)

> Sep 21 02:44:33 at

> java.util.Iterator.forEachRemaining(Iterator.java:116)

> Sep 21 02:44:33 at

> java.util.Spliterators$IteratorSpliterator.forEachRemaining(Spliterators.java:1801)

> Sep 21 02:44:33 at

> java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482)

> Sep 21 02:44:33 at

> java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472)

> Sep 21 02:44:33 at

> java.util.stream.ForEachOps$ForEachOp.evaluateSequential(ForEachOps.java:150)

> Sep 21 02:44:33 at

> java.util.stream.ForEachOps$ForEachOp$OfRef.evaluateSequential(ForEachOps.java:173)

> Sep 21 02:44:33 at

> java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234)

> Sep 21 02:44:33 at

> java.util.stream.ReferencePipeline.forEach(ReferencePipeline.java:485)

> Sep 21 02:44:33 at

> org.junit.vintage.engine.VintageTestEngine.executeAllChildren(VintageTestEngine.java:82)

> Sep 21 02:44:33 at

> org.junit.vintage.engine.VintageTestEngine.execute(VintageTestEngine.java:73)

> Sep 21 02:44:33 at

> org.junit.platform.launcher.core.DefaultLauncher.execute(DefaultLauncher.java:220)

> Sep 21 02:44:33 at

> org.junit.platform.launcher.core.DefaultLauncher.lambda$execute$6(DefaultLauncher.java:188)

> Sep 21 02:44:33 at

> org.junit.platform.launcher.core.DefaultLauncher.withInterceptedStreams(DefaultLauncher.java:202)

> Sep 21 02:44:33 at

> org.junit.platform.launcher.core.DefaultLauncher.execute(DefaultLauncher.java:181)

> Sep 21 02:44:33 at

> org.junit.platform.launcher.core.DefaultLauncher.execute(DefaultLauncher.java:128)

> Sep 21 02:44:33 at

> org.apache.maven.surefire.junitplatform.JUnitPlatformProvider.invokeAllTests(JUnitPlatformProvider.java:150)

> Sep 21 02:44:33 at

> org.apache.maven.surefire.junitplatform.JUnitPlatformProvider.invoke(JUnitPlatformProvider.java:120)

>

[jira] [Assigned] (FLINK-18634) FlinkKafkaProducerITCase.testRecoverCommittedTransaction failed with "Timeout expired after 60000milliseconds while awaiting InitProducerId"

[

https://issues.apache.org/jira/browse/FLINK-18634?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Fabian Paul reassigned FLINK-18634:

---

Assignee: (was: Fabian Paul)

> FlinkKafkaProducerITCase.testRecoverCommittedTransaction failed with "Timeout

> expired after 60000milliseconds while awaiting InitProducerId"

>

>

> Key: FLINK-18634

> URL: https://issues.apache.org/jira/browse/FLINK-18634

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka, Tests

>Affects Versions: 1.11.0, 1.12.0, 1.13.0, 1.14.0, 1.15.0

>Reporter: Dian Fu

>Priority: Major

> Labels: auto-unassigned, test-stability

> Fix For: 1.15.0, 1.14.1

>

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=4590=logs=c5f0071e-1851-543e-9a45-9ac140befc32=684b1416-4c17-504e-d5ab-97ee44e08a20

> {code}

> 2020-07-17T11:43:47.9693015Z [ERROR] Tests run: 12, Failures: 0, Errors: 1,

> Skipped: 0, Time elapsed: 269.399 s <<< FAILURE! - in

> org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducerITCase

> 2020-07-17T11:43:47.9693862Z [ERROR]

> testRecoverCommittedTransaction(org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducerITCase)

> Time elapsed: 60.679 s <<< ERROR!

> 2020-07-17T11:43:47.9694737Z org.apache.kafka.common.errors.TimeoutException:

> org.apache.kafka.common.errors.TimeoutException: Timeout expired after

> 60000milliseconds while awaiting InitProducerId

> 2020-07-17T11:43:47.9695376Z Caused by:

> org.apache.kafka.common.errors.TimeoutException: Timeout expired after

> 60000milliseconds while awaiting InitProducerId

> {code}

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Assigned] (FLINK-22342) FlinkKafkaProducerITCase fails with producer leak

[

https://issues.apache.org/jira/browse/FLINK-22342?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Fabian Paul reassigned FLINK-22342:

---

Assignee: (was: Fabian Paul)

> FlinkKafkaProducerITCase fails with producer leak

> -

>

> Key: FLINK-22342

> URL: https://issues.apache.org/jira/browse/FLINK-22342

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.14.0, 1.13.3

>Reporter: Dawid Wysakowicz

>Priority: Major

> Labels: test-stability

> Fix For: 1.15.0, 1.14.1

>

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=16732=logs=c5f0071e-1851-543e-9a45-9ac140befc32=684b1416-4c17-504e-d5ab-97ee44e08a20=6386

> {code}

> [ERROR]

> testScaleDownBeforeFirstCheckpoint(org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducerITCase)

> Time elapsed: 8.854 s <<< FAILURE!

> java.lang.AssertionError: Detected producer leak. Thread name:

> kafka-producer-network-thread |

> producer-MockTask-002a002c-11

> at org.junit.Assert.fail(Assert.java:88)

> at

> org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducerITCase.checkProducerLeak(FlinkKafkaProducerITCase.java:728)

> at

> org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducerITCase.testScaleDownBeforeFirstCheckpoint(FlinkKafkaProducerITCase.java:381)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:55)

> at org.junit.rules.RunRules.evaluate(RunRules.java:20)

> at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)

> at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)

> at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

> at org.junit.rules.RunRules.evaluate(RunRules.java:20)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:363)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.execute(JUnit4Provider.java:365)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeWithRerun(JUnit4Provider.java:273)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.executeTestSet(JUnit4Provider.java:238)

> at

> org.apache.maven.surefire.junit4.JUnit4Provider.invoke(JUnit4Provider.java:159)

> at

> org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)

> at

> org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:345)

> at

> org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:126)

> at

> org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:418)

> {code}
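For readers unfamiliar with this kind of assertion: the failure above reports a live thread whose name starts with `kafka-producer-network-thread`. The following is a minimal sketch of how such a leak check could work, assuming it simply scans the JVM's live threads by name. It is not the code of `FlinkKafkaProducerITCase.checkProducerLeak`; the class name `ProducerLeakCheck`, the method `findLeakedThreads`, and the demo thread name are hypothetical and used only for illustration.

```java
import java.util.List;
import java.util.stream.Collectors;

public class ProducerLeakCheck {
    // Thread-name marker taken from the failure message quoted above.
    public static final String KAFKA_PRODUCER_THREAD_MARKER = "kafka-producer-network-thread";

    /** Returns the sorted names of all live threads whose name contains the marker. */
    public static List<String> findLeakedThreads(String marker) {
        // Thread.getAllStackTraces() returns a snapshot keyed by every live thread.
        return Thread.getAllStackTraces().keySet().stream()
                .filter(Thread::isAlive)
                .map(Thread::getName)
                .filter(name -> name.contains(marker))
                .sorted()
                .collect(Collectors.toList());
    }

    public static void main(String[] args) throws InterruptedException {
        // Simulate a producer network thread that was never closed
        // (hypothetical name, for illustration only).
        Thread leaked = new Thread(() -> {
            try {
                Thread.sleep(Long.MAX_VALUE);
            } catch (InterruptedException ignored) {
                // Interrupt is the shutdown signal in this demo.
            }
        }, KAFKA_PRODUCER_THREAD_MARKER + " | producer-demo-1");
        leaked.setDaemon(true);
        leaked.start();

        System.out.println("While running: " + findLeakedThreads(KAFKA_PRODUCER_THREAD_MARKER));

        // Stop the thread, as closing the producer would, then check again.
        leaked.interrupt();
        leaked.join();
        System.out.println("After shutdown: " + findLeakedThreads(KAFKA_PRODUCER_THREAD_MARKER));
    }
}
```

A test using a helper like this would fail whenever the returned list is non-empty at the end of the test, which is consistent with the "Detected producer leak" message above. A real check may also need to retry for a short time, since a producer's network thread can take a moment to exit after close.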


[GitHub] [flink-ml] zhipeng93 commented on a change in pull request #28: [Flink-24556] Add Estimator and Transformer for logistic regression

zhipeng93 commented on a change in pull request #28:

URL: https://github.com/apache/flink-ml/pull/28#discussion_r759008827

##

File path:

flink-ml-lib/src/main/java/org/apache/flink/ml/classification/linear/LogisticRegression.java

##


[GitHub] [flink-ml] zhipeng93 commented on a change in pull request #28: [Flink-24556] Add Estimator and Transformer for logistic regression

zhipeng93 commented on a change in pull request #28:

URL: https://github.com/apache/flink-ml/pull/28#discussion_r759007852

##

File path:

flink-ml-lib/src/main/java/org/apache/flink/ml/classification/linear/LogisticRegression.java

##



[GitHub] [flink] flinkbot edited a comment on pull request #17902: [FLINK-25050][docs-zh] Translate "Metrics" page of "Operations" into Chinese

flinkbot edited a comment on pull request #17902:

URL: https://github.com/apache/flink/pull/17902#issuecomment-978895303

## CI report:

* 8a398a42490ca1ab93a23b93d9e8c3c2abf14670 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27038)
* 17af34f57d57f6e2731cab1d54a9f4765f383e74 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27250)
* 603f6ad9b9f8cf86cf63e7c6dc94dc56e3a2fd76 UNKNOWN

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink-ml] zhipeng93 commented on a change in pull request #28: [Flink-24556] Add Estimator and Transformer for logistic regression

zhipeng93 commented on a change in pull request #28:

URL: https://github.com/apache/flink-ml/pull/28#discussion_r759003838

##

File path:

flink-ml-lib/src/main/java/org/apache/flink/ml/classification/linear/LogisticRegression.java

##

@@ -0,0 +1,653 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.ml.classification.linear;

+

+import org.apache.flink.api.common.functions.RichMapFunction;

+import org.apache.flink.api.common.state.ListState;

+import org.apache.flink.api.common.state.ListStateDescriptor;

+import org.apache.flink.api.common.typeinfo.BasicTypeInfo;

+import org.apache.flink.api.common.typeinfo.PrimitiveArrayTypeInfo;

+import org.apache.flink.api.common.typeinfo.TypeInformation;

+import org.apache.flink.api.common.typeutils.base.DoubleComparator;

+import org.apache.flink.api.java.tuple.Tuple2;

+import org.apache.flink.api.java.tuple.Tuple3;

+import org.apache.flink.api.java.typeutils.TupleTypeInfo;

+import org.apache.flink.iteration.DataStreamList;

+import org.apache.flink.iteration.IterationBody;

+import org.apache.flink.iteration.IterationBodyResult;

+import org.apache.flink.iteration.IterationConfig;

+import org.apache.flink.iteration.IterationConfig.OperatorLifeCycle;

+import org.apache.flink.iteration.IterationListener;

+import org.apache.flink.iteration.Iterations;

+import org.apache.flink.iteration.ReplayableDataStreamList;

+import org.apache.flink.iteration.operator.OperatorStateUtils;

+import org.apache.flink.ml.api.Estimator;

+import org.apache.flink.ml.common.broadcast.BroadcastUtils;

+import org.apache.flink.ml.common.datastream.DataStreamUtils;

+import org.apache.flink.ml.common.iteration.TerminateOnMaxIterOrTol;

+import org.apache.flink.ml.linalg.BLAS;

+import org.apache.flink.ml.param.Param;

+import org.apache.flink.ml.util.ParamUtils;

+import org.apache.flink.ml.util.ReadWriteUtils;

+import org.apache.flink.runtime.state.StateInitializationContext;

+import org.apache.flink.runtime.state.StateSnapshotContext;

+import org.apache.flink.streaming.api.datastream.DataStream;

+import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

+import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

+import org.apache.flink.streaming.api.operators.AbstractStreamOperator;

+import org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator;

+import org.apache.flink.streaming.api.operators.BoundedMultiInput;

+import org.apache.flink.streaming.api.operators.BoundedOneInput;

+import org.apache.flink.streaming.api.operators.OneInputStreamOperator;

+import org.apache.flink.streaming.api.operators.TwoInputStreamOperator;

+import org.apache.flink.streaming.runtime.streamrecord.StreamRecord;

+import org.apache.flink.table.api.Table;

+import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

+import org.apache.flink.table.api.internal.TableImpl;

+import org.apache.flink.util.Collector;

+import org.apache.flink.util.OutputTag;

+import org.apache.flink.util.Preconditions;

+

+import org.apache.commons.collections.IteratorUtils;

+

+import java.io.IOException;

+import java.util.ArrayList;

+import java.util.Arrays;

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+import java.util.Random;

+

+/**

+ * This class implements methods to train a logistic regression model. For details, see

+ * https://en.wikipedia.org/wiki/Logistic_regression.

+ */

+public class LogisticRegression

+implements Estimator<LogisticRegression, LogisticRegressionModel>,

+LogisticRegressionParams<LogisticRegression> {

+

+private Map<Param<?>, Object> paramMap = new HashMap<>();

+

+private static final OutputTag> MODEL_OUTPUT =

+new OutputTag>("MODEL_OUTPUT") {};

+

+public LogisticRegression() {

+ParamUtils.initializeMapWithDefaultValues(this.paramMap, this);

+}

+

+@Override

+public Map<Param<?>, Object> getParamMap() {

+return paramMap;

+}

+

+@Override

+public void save(String path) throws IOException {

+ReadWriteUtils.saveMetadata(this, path);

+}

+

+public static LogisticRegression load(StreamExecutionEnvironment env, String path)

+throws IOException {

+return

[GitHub] [flink] flinkbot edited a comment on pull request #17902: [FLINK-25050][docs-zh] Translate "Metrics" page of "Operations" into Chinese

flinkbot edited a comment on pull request #17902: URL: https://github.com/apache/flink/pull/17902#issuecomment-978895303 ## CI report: * 8a398a42490ca1ab93a23b93d9e8c3c2abf14670 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27038) * 17af34f57d57f6e2731cab1d54a9f4765f383e74 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27250) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #17949: [hotfix][docs] Fix Scala example for MiniCluster test

flinkbot edited a comment on pull request #17949: URL: https://github.com/apache/flink/pull/17949#issuecomment-982158256 ## CI report: * a787ab271f51e7152894777d4740113b8070c011 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27242) * fce2ff2a8bf7a8cda2f1ace8bab72ceaf47a982b Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27253) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #17949: [hotfix][docs] Fix Scala example for MiniCluster test

flinkbot edited a comment on pull request #17949: URL: https://github.com/apache/flink/pull/17949#issuecomment-982158256 ## CI report: * a787ab271f51e7152894777d4740113b8070c011 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27242) * fce2ff2a8bf7a8cda2f1ace8bab72ceaf47a982b UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] twalthr commented on a change in pull request #17878: [FLINK-24507][table] Cleanup DateTimeUtils

twalthr commented on a change in pull request #17878:

URL: https://github.com/apache/flink/pull/17878#discussion_r758998441

##

File path:

flink-python/src/main/java/org/apache/flink/table/runtime/typeutils/serializers/python/DateSerializer.java

##

@@ -39,6 +41,11 @@

public static final DateSerializer INSTANCE = new DateSerializer();

+@SuppressWarnings({"unchecked", "rawtypes"})

+private static final DataStructureConverter converter =

Review comment:

@slinkydeveloper you forgot to drop the static field. This

`DateSerializer` is actually technical debt because the Python API still uses

the old type system and thus has to use `java.sql.` types. Hopefully this will

be fixed at some point.
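
To make the `java.sql.` remark concrete, here is a minimal sketch of converting between the legacy `java.sql.Date` and a plain days-since-epoch `int`. It assumes, for illustration only, that the newer type system represents a DATE as an epoch-day count; the class and method names are hypothetical and this is not the code of `DateSerializer`.

```java
import java.sql.Date;
import java.time.LocalDate;

// Hypothetical helper, not part of Flink: shows the kind of bridging needed
// while an API still exposes java.sql.Date instead of an epoch-day integer.
class SqlDateConversionSketch {

    /** Converts a legacy java.sql.Date to days since 1970-01-01 (local calendar date). */
    static int toEpochDays(Date date) {
        return (int) date.toLocalDate().toEpochDay();
    }

    /** Converts days since 1970-01-01 back to a legacy java.sql.Date. */
    static Date fromEpochDays(int days) {
        return Date.valueOf(LocalDate.ofEpochDay(days));
    }

    public static void main(String[] args) {
        Date legacy = Date.valueOf("2021-11-30");
        int days = toEpochDays(legacy);
        // Round trip: the calendar date survives the conversion.
        System.out.println(days + " -> " + fromEpochDays(days));
    }
}
```

Going through `LocalDate` keeps the conversion tied to the calendar date rather than to the millisecond value, which avoids time zone offsets leaking into the result.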

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (FLINK-22300) Why TimeEvictor of Keyed Windows evictor do not support ProcessingTime of TimeCharacteristic

[

https://issues.apache.org/jira/browse/FLINK-22300?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17450921#comment-17450921

]

Arvid Heise commented on FLINK-22300:

-

Okay I see, so Flink would never evict the data. That is indeed a bug.

What do you think is the proper fix? I have a hard time coming up with proper

semantics beyond saying that users can't use {{TimeEvictor}} with processing

time. For a window in processing time, a user probably wants to retain all

elements before the trigger and evict all elements after the trigger in all cases.

For other cases, they would use ingestion or event time.
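
The behaviour discussed here can be sketched with a small standalone model. This is not Flink's `TimeEvictor`; `Element` and `evict` are hypothetical names, and the early return is an assumption based on the issue description, where elements carry no timestamp under processing time.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Standalone model of a time-based evictor (hypothetical, not Flink code).
class TimeEvictorSketch {

    /** Window element; a null timestamp models "no timestamp", as under processing time. */
    static final class Element {
        final String value;
        final Long timestamp;

        Element(String value, Long timestamp) {
            this.value = value;
            this.timestamp = timestamp;
        }
    }

    /**
     * Removes every element whose timestamp is at or before (max timestamp - windowSize)
     * and returns the number of removed elements.
     */
    static int evict(List<Element> elements, long windowSize) {
        // Without timestamps there is no cutoff to compare against, so nothing is evicted.
        for (Element e : elements) {
            if (e.timestamp == null) {
                return 0;
            }
        }
        if (elements.isEmpty()) {
            return 0;
        }
        long max = Long.MIN_VALUE;
        for (Element e : elements) {
            max = Math.max(max, e.timestamp);
        }
        long cutoff = max - windowSize;
        int removed = 0;
        for (Iterator<Element> it = elements.iterator(); it.hasNext(); ) {
            if (it.next().timestamp <= cutoff) {
                it.remove();
                removed++;
            }
        }
        return removed;
    }

    public static void main(String[] args) {
        List<Element> eventTime = new ArrayList<>();
        eventTime.add(new Element("a", 1L));
        eventTime.add(new Element("b", 8L));
        eventTime.add(new Element("c", 10L));
        System.out.println("event time, removed: " + evict(eventTime, 5L));

        List<Element> processingTime = new ArrayList<>();
        processingTime.add(new Element("a", null));
        processingTime.add(new Element("b", null));
        System.out.println("processing time, removed: " + evict(processingTime, 5L));
    }
}
```

In this model the second call never removes anything, which mirrors the "Flink would never evict the data" observation above.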

> Why TimeEvictor of Keyed Windows evictor do not support ProcessingTime of

> TimeCharacteristic

>

>

> Key: FLINK-22300

> URL: https://issues.apache.org/jira/browse/FLINK-22300

> Project: Flink

> Issue Type: Improvement

> Components: API / DataStream

>Affects Versions: 1.11.3

>Reporter: Bo Huang

>Priority: Minor

> Labels: auto-deprioritized-major, stale-minor

>

> StreamExecutionEnvironment.setStreamTimeCharacteristic(TimeCharacteristic.ProcessingTime).

> After window processing, the data cannot be evicted by the TimeEvictor of keyed

> windows because the TimestampedValue has no timestamp value.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [flink] flinkbot edited a comment on pull request #17952: [FLINK-25011] Introduce vertex parallelism decider and the default implementation.

flinkbot edited a comment on pull request #17952: URL: https://github.com/apache/flink/pull/17952#issuecomment-982356774 ## CI report: * 53241c7762943233cafc115a929c73a9e4abd878 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27251) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #17952: [FLINK-25011] Introduce vertex parallelism decider and the default implementation.

flinkbot commented on pull request #17952:
URL: https://github.com/apache/flink/pull/17952#issuecomment-982357716

Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review.

## Automated Checks
Last check on commit 53241c7762943233cafc115a929c73a9e4abd878 (Tue Nov 30 07:29:09 UTC 2021)

**Warnings:**
* No documentation files were touched! Remember to keep the Flink docs up to date!

Mention the bot in a comment to re-run the automated checks.

## Review Progress
* ❓ 1. The [description] looks good.
* ❓ 2. There is [consensus] that the contribution should go into Flink.
* ❓ 3. Needs [attention] from.
* ❓ 4. The change fits into the overall [architecture].
* ❓ 5. Overall code [quality] is good.

Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process.

The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer or PMC member is required.

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`)
- `@flinkbot approve all` to approve all aspects
- `@flinkbot approve-until architecture` to approve everything until `architecture`
- `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention
- `@flinkbot disapprove architecture` to remove an approval you gave earlier

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.
To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org
For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

[GitHub] [flink] Airblader commented on pull request #17788: [FLINK-15826][Tabel SQL/API] Add renameFunction() to Catalog

Airblader commented on pull request #17788: URL: https://github.com/apache/flink/pull/17788#issuecomment-982357283 @shenzhu My PR was merged now. If you rebase I think those violations should not show up anymore. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #17952: [FLINK-25011] Introduce vertex parallelism decider and the default implementation.

flinkbot commented on pull request #17952: URL: https://github.com/apache/flink/pull/17952#issuecomment-982356774 ## CI report: * 53241c7762943233cafc115a929c73a9e4abd878 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Closed] (FLINK-25047) Resolve most (trivial) architectural violations in flink-table

[ https://issues.apache.org/jira/browse/FLINK-25047?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Timo Walther closed FLINK-25047.
Fix Version/s: 1.15.0
Resolution: Fixed

Fixed in master: a09cc4704433cb76b936a51b422d812e1ae57945

> Resolve most (trivial) architectural violations in flink-table
> --
>
> Key: FLINK-25047
> URL: https://issues.apache.org/jira/browse/FLINK-25047
> Project: Flink
> Issue Type: Improvement
> Components: Table SQL / API
> Reporter: Ingo Bürk
> Assignee: Ingo Bürk
> Priority: Minor
> Labels: pull-request-available
> Fix For: 1.15.0
>
>

--
This message was sent by Atlassian Jira
(v8.20.1#820001)

[jira] [Updated] (FLINK-25011) Introduce VertexParallelismDecider

[ https://issues.apache.org/jira/browse/FLINK-25011?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated FLINK-25011:
---
Labels: pull-request-available (was: )

> Introduce VertexParallelismDecider
> --
>
> Key: FLINK-25011
> URL: https://issues.apache.org/jira/browse/FLINK-25011
> Project: Flink
> Issue Type: Sub-task
> Components: Runtime / Coordination
> Reporter: Lijie Wang
> Assignee: Lijie Wang
> Priority: Major
> Labels: pull-request-available
>
> Introduce VertexParallelismDecider and provide a default implementation.

--
This message was sent by Atlassian Jira
(v8.20.1#820001)

[GitHub] [flink] twalthr closed pull request #17898: [FLINK-25047][table] Resolve architectural violations

twalthr closed pull request #17898: URL: https://github.com/apache/flink/pull/17898 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] wanglijie95 opened a new pull request #17952: [FLINK-25011] Introduce vertex parallelism decider and the default implementation.

wanglijie95 opened a new pull request #17952:
URL: https://github.com/apache/flink/pull/17952

## What is the purpose of the change
Introduce VertexParallelismDecider and default implementation for adaptive batch scheduler.

## Brief change log
Introduce VertexParallelismDecider and default implementation for adaptive batch scheduler.

## Verifying this change
Unit tests for the default implementation.

## Does this pull request potentially affect one of the following parts:
- Dependencies (does it add or upgrade a dependency): (no)
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no)
- The serializers: (no)
- The runtime per-record code paths (performance sensitive): (no)
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: (no)
- The S3 file system connector: (no)

## Documentation
- Does this pull request introduce a new feature? (no)
- If yes, how is the feature documented? (not applicable)

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.
To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org
For queries about this service, please contact Infrastructure at:
us...@infra.apache.org
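
The PR description above does not spell out how a parallelism decider would work. Purely as an illustration, the sketch below shows one plausible shape: pick a parallelism from the amount of consumed data, clamped to configured bounds. The interface, class, method and parameter names are all hypothetical and are not taken from the pull request.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical interface; the PR's actual VertexParallelismDecider signature may differ.
interface ParallelismDeciderSketch {
    int decideParallelism(List<Long> consumedBytes);
}

// Hypothetical default: roughly one task per `bytesPerTask` of input, within [min, max].
class DataVolumeBasedDecider implements ParallelismDeciderSketch {
    private final int minParallelism;
    private final int maxParallelism;
    private final long bytesPerTask;

    DataVolumeBasedDecider(int minParallelism, int maxParallelism, long bytesPerTask) {
        if (minParallelism < 1 || maxParallelism < minParallelism || bytesPerTask <= 0) {
            throw new IllegalArgumentException("invalid decider configuration");
        }
        this.minParallelism = minParallelism;
        this.maxParallelism = maxParallelism;
        this.bytesPerTask = bytesPerTask;
    }

    @Override
    public int decideParallelism(List<Long> consumedBytes) {
        long total = 0;
        for (long bytes : consumedBytes) {
            total += bytes;
        }
        // Ceiling division: a partially filled task still needs a slot.
        long wanted = (total + bytesPerTask - 1) / bytesPerTask;
        return (int) Math.max(minParallelism, Math.min(maxParallelism, wanted));
    }

    public static void main(String[] args) {
        ParallelismDeciderSketch decider = new DataVolumeBasedDecider(1, 100, 1024L);
        System.out.println(decider.decideParallelism(Arrays.asList(2048L, 1L)));
    }
}
```

Clamping to a minimum and maximum keeps the result usable when upstream results are empty or very large.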

[GitHub] [flink] flinkbot edited a comment on pull request #17749: [FLINK-24758][Connectors / FileSystem] filesystem sink: add partitiontime-extractor.formatter-pattern to allow user to speify DateTi

flinkbot edited a comment on pull request #17749: URL: https://github.com/apache/flink/pull/17749#issuecomment-965029957 ## CI report: * 2ce453d313fcea2e1fae39900e6ba97b7a129246 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27243) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-24348) Kafka ITCases (e.g. KafkaTableITCase) fail with "ContainerLaunch Container startup failed"

[

https://issues.apache.org/jira/browse/FLINK-24348?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17450912#comment-17450912

]

Arvid Heise commented on FLINK-24348:

-

I did not find out much yesterday. It is a standard Unix error, but I'm unsure

whether it is a general issue with Testcontainers or with the specific Kafka

container.

> Kafka ITCases (e.g. KafkaTableITCase) fail with "ContainerLaunch Container

> startup failed"

> --

>

> Key: FLINK-24348

> URL: https://issues.apache.org/jira/browse/FLINK-24348

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.14.0, 1.15.0

>Reporter: Dawid Wysakowicz

>Assignee: Arvid Heise

>Priority: Critical

> Labels: test-stability

> Fix For: 1.15.0

>

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=24338&view=logs&j=b0097207-033c-5d9a-b48c-6d4796fbe60d&t=8338a7d2-16f7-52e5-f576-4b7b3071eb3d&l=7140

> {code}

> Sep 21 02:44:33 org.testcontainers.containers.ContainerLaunchException:

> Container startup failed

> Sep 21 02:44:33 at

> org.testcontainers.containers.GenericContainer.doStart(GenericContainer.java:334)

> Sep 21 02:44:33 at

> org.testcontainers.containers.KafkaContainer.doStart(KafkaContainer.java:97)

> Sep 21 02:44:33 at

> org.apache.flink.streaming.connectors.kafka.table.KafkaTableTestBase$1.doStart(KafkaTableTestBase.java:71)

> Sep 21 02:44:33 at

> org.testcontainers.containers.GenericContainer.start(GenericContainer.java:315)

> Sep 21 02:44:33 at org.testcontainers.containers.GenericContainer.starting(GenericContainer.java:1060)

> Sep 21 02:44:33 at org.testcontainers.containers.FailureDetectingExternalResource$1.evaluate(FailureDetectingExternalResource.java:29)

> Sep 21 02:44:33 at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)

> Sep 21 02:44:33 at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)

> Sep 21 02:44:33 at org.junit.rules.RunRules.evaluate(RunRules.java:20)

> Sep 21 02:44:33 at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)

> Sep 21 02:44:33 at org.junit.runners.ParentRunner.run(ParentRunner.java:413)

> Sep 21 02:44:33 at org.junit.runner.JUnitCore.run(JUnitCore.java:137)

> Sep 21 02:44:33 at org.junit.runner.JUnitCore.run(JUnitCore.java:115)

> Sep 21 02:44:33 at org.junit.vintage.engine.execution.RunnerExecutor.execute(RunnerExecutor.java:43)

> Sep 21 02:44:33 at java.util.stream.ForEachOps$ForEachOp$OfRef.accept(ForEachOps.java:183)

> Sep 21 02:44:33 at java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:193)

> Sep 21 02:44:33 at java.util.Iterator.forEachRemaining(Iterator.java:116)

> Sep 21 02:44:33 at java.util.Spliterators$IteratorSpliterator.forEachRemaining(Spliterators.java:1801)

> Sep 21 02:44:33 at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482)

> Sep 21 02:44:33 at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472)

> Sep 21 02:44:33 at java.util.stream.ForEachOps$ForEachOp.evaluateSequential(ForEachOps.java:150)

> Sep 21 02:44:33 at java.util.stream.ForEachOps$ForEachOp$OfRef.evaluateSequential(ForEachOps.java:173)

> Sep 21 02:44:33 at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234)

> Sep 21 02:44:33 at java.util.stream.ReferencePipeline.forEach(ReferencePipeline.java:485)

> Sep 21 02:44:33 at org.junit.vintage.engine.VintageTestEngine.executeAllChildren(VintageTestEngine.java:82)

> Sep 21 02:44:33 at org.junit.vintage.engine.VintageTestEngine.execute(VintageTestEngine.java:73)

> Sep 21 02:44:33 at org.junit.platform.launcher.core.DefaultLauncher.execute(DefaultLauncher.java:220)

> Sep 21 02:44:33 at org.junit.platform.launcher.core.DefaultLauncher.lambda$execute$6(DefaultLauncher.java:188)

> Sep 21 02:44:33 at org.junit.platform.launcher.core.DefaultLauncher.withInterceptedStreams(DefaultLauncher.java:202)

> Sep 21 02:44:33 at org.junit.platform.launcher.core.DefaultLauncher.execute(DefaultLauncher.java:181)

> Sep 21 02:44:33 at org.junit.platform.launcher.core.DefaultLauncher.execute(DefaultLauncher.java:128)

> Sep 21 02:44:33 at org.apache.maven.surefire.junitplatform.JUnitPlatformProvider.invokeAllTests(JUnitPlatformProvider.java:150)

> Sep 21 02:44:33 at org.apache.maven.surefire.junitplatform.JUnitPlatformProvider.invoke(JUnitPlatformProvider.java:120)

> Sep 21 02:44:33 at

>

[GitHub] [flink] flinkbot edited a comment on pull request #17902: [FLINK-25050][docs-zh] Translate "Metrics" page of "Operations" into Chinese

flinkbot edited a comment on pull request #17902:

URL: https://github.com/apache/flink/pull/17902#issuecomment-978895303

## CI report:

* 8a398a42490ca1ab93a23b93d9e8c3c2abf14670 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27038)
* 17af34f57d57f6e2731cab1d54a9f4765f383e74 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27250)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #17902: [FLINK-25050][docs-zh] Translate "Metrics" page of "Operations" into Chinese

flinkbot edited a comment on pull request #17902:

URL: https://github.com/apache/flink/pull/17902#issuecomment-978895303

## CI report:

* 8a398a42490ca1ab93a23b93d9e8c3c2abf14670 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27038)
* 17af34f57d57f6e2731cab1d54a9f4765f383e74 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27250)
* 603f6ad9b9f8cf86cf63e7c6dc94dc56e3a2fd76 UNKNOWN

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #17902: [FLINK-25050][docs-zh] Translate "Metrics" page of "Operations" into Chinese

flinkbot edited a comment on pull request #17902:

URL: https://github.com/apache/flink/pull/17902#issuecomment-978895303

## CI report:

* 8a398a42490ca1ab93a23b93d9e8c3c2abf14670 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27038)
* 17af34f57d57f6e2731cab1d54a9f4765f383e74 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27250)
* c0becb684fd81c109a102859b15308d26be7f69c UNKNOWN

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] ZhijieYang commented on a change in pull request #17902: [FLINK-25050][docs-zh] Translate "Metrics" page of "Operations" into Chinese

ZhijieYang commented on a change in pull request #17902:

URL: https://github.com/apache/flink/pull/17902#discussion_r758982717

##

File path: docs/content.zh/docs/ops/metrics.md

##

@@ -28,26 +28,26 @@ under the License.

# 指标

-Flink exposes a metric system that allows gathering and exposing metrics to external systems.

+Flink 公开了一个指标系统,允许收集和公开指标给外部系统。

-## Registering metrics

+## 注册指标

-You can access the metric system from any user function that extends [RichFunction]({{< ref "docs/dev/datastream/user_defined_functions" >}}#rich-functions) by calling `getRuntimeContext().getMetricGroup()`.

-This method returns a `MetricGroup` object on which you can create and register new metrics.

+你可以通过调用从 [RichFunction]({{< ref "docs/dev/datastream/user_defined_functions" >}}#rich-functions)

+扩展的任何用户函数的 `getRuntimeContext().getMetricGroup()` 方法访问指标系统。

+此方法返回一个 `MetricGroup` 对象,你可以在该对象上创建和注册新指标。

-### Metric types

+## 指标类型

-Flink supports `Counters`, `Gauges`, `Histograms` and `Meters`.

+Flink 支持计数器 `Counters`,量表 `Gauges`,直方图 `Histogram` 和仪表 `Meters`。

Review comment:

because I saw the `https://nightlies.apache.org/flink/flink-docs-master/zh/docs/dev/python/table/metrics/`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-25104) Kafka table source cannot be used as bounded

[ https://issues.apache.org/jira/browse/FLINK-25104?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450908#comment-17450908 ]

Hang Ruan commented on FLINK-25104:
---

I will add a stoppingOffsetsInitializer setting to the table options. If stoppingOffsetsInitializer is set, the Kafka table source will be treated as bounded.

> Kafka table source cannot be used as bounded
>
> Key: FLINK-25104
> URL: https://issues.apache.org/jira/browse/FLINK-25104
> Project: Flink
> Issue Type: Improvement
> Components: Connectors / Kafka
> Reporter: Hang Ruan
> Priority: Minor
>
> In the DataStream API, a Kafka source can be used as bounded via `builder.setBounded`.
> In SQL, there is no way to set a Kafka table source as bounded.

--
This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Created] (FLINK-25105) Enables final checkpoint by default