[GitHub] [flink] flinkbot edited a comment on pull request #18119: [FLINK-24947] Support hostNetwork for native K8s integration on session mode

flinkbot edited a comment on pull request #18119: URL: https://github.com/apache/flink/pull/18119#issuecomment-994734000

## CI report:

* c15632cf1ee4b38d0060a87c3bedb5cb4d545264 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29851)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Updated] (FLINK-25740) PulsarSourceOrderedE2ECase fails on azure

[

https://issues.apache.org/jira/browse/FLINK-25740?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Till Rohrmann updated FLINK-25740:

--

Priority: Critical (was: Major)

> PulsarSourceOrderedE2ECase fails on azure

> -

>

> Key: FLINK-25740

> URL: https://issues.apache.org/jira/browse/FLINK-25740

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Pulsar

>Affects Versions: 1.15.0

>Reporter: Roman Khachatryan

>Priority: Critical

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=29789&view=logs&j=af184cdd-c6d8-5084-0b69-7e9c67b35f7a&t=160c9ae5-96fd-516e-1c91-deb81f59292a&l=16385

> {code}

> [ERROR] Errors:
> [ERROR] PulsarSourceOrderedE2ECase>SourceTestSuiteBase.testIdleReader:187->SourceTestSuiteBase.generateAndWriteTestData:315 » BrokerPersistence
> [ERROR] PulsarSourceOrderedE2ECase>SourceTestSuiteBase.testIdleReader:187->SourceTestSuiteBase.generateAndWriteTestData:315 » BrokerPersistence
> [ERROR] PulsarSourceOrderedE2ECase>SourceTestSuiteBase.testMultipleSplits:145->SourceTestSuiteBase.generateAndWriteTestData:315 » BrokerPersistence
> [ERROR] PulsarSourceOrderedE2ECase>SourceTestSuiteBase.testMultipleSplits:145->SourceTestSuiteBase.generateAndWriteTestData:315 » BrokerPersistence
> [ERROR] PulsarSourceOrderedE2ECase>SourceTestSuiteBase.testSourceSingleSplit:105->SourceTestSuiteBase.generateAndWriteTestData:315 » BrokerPersistence
> [ERROR] PulsarSourceOrderedE2ECase>SourceTestSuiteBase.testSourceSingleSplit:105->SourceTestSuiteBase.generateAndWriteTestData:315 » BrokerPersistence
> [ERROR] PulsarSourceOrderedE2ECase>SourceTestSuiteBase.testTaskManagerFailure:232 » BrokerPersistence
> [ERROR] PulsarSourceOrderedE2ECase>SourceTestSuiteBase.testTaskManagerFailure:232 » BrokerPersistence
> [ERROR] PulsarSourceUnorderedE2ECase>UnorderedSourceTestSuiteBase.testOneSplitWithMultipleConsumers:60 » BrokerPersistence
> [ERROR] PulsarSourceUnorderedE2ECase>UnorderedSourceTestSuiteBase.testOneSplitWithMultipleConsumers:60 » BrokerPersistence

> {code}

> {code}

> 2022-01-20T15:28:37.1467261Z Jan 20 15:28:37 [ERROR] org.apache.flink.tests.util.pulsar.PulsarSourceUnorderedE2ECase.testOneSplitWithMultipleConsumers(TestEnvironment, ExternalContext)[2] Time elapsed: 77.698 s <<< ERROR!
> 2022-01-20T15:28:37.1469146Z Jan 20 15:28:37 org.apache.pulsar.client.api.PulsarClientException$BrokerPersistenceException: org.apache.bookkeeper.mledger.ManagedLedgerException: Not enough non-faulty bookies available
> 2022-01-20T15:28:37.1470062Z Jan 20 15:28:37 at org.apache.pulsar.client.api.PulsarClientException.unwrap(PulsarClientException.java:985)
> 2022-01-20T15:28:37.1470802Z Jan 20 15:28:37 at org.apache.pulsar.client.impl.ProducerBuilderImpl.create(ProducerBuilderImpl.java:95)
> 2022-01-20T15:28:37.1471598Z Jan 20 15:28:37 at org.apache.flink.connector.pulsar.testutils.runtime.PulsarRuntimeOperator.sendMessages(PulsarRuntimeOperator.java:172)
> 2022-01-20T15:28:37.1472451Z Jan 20 15:28:37 at org.apache.flink.connector.pulsar.testutils.runtime.PulsarRuntimeOperator.sendMessages(PulsarRuntimeOperator.java:167)
> 2022-01-20T15:28:37.1473307Z Jan 20 15:28:37 at org.apache.flink.connector.pulsar.testutils.PulsarPartitionDataWriter.writeRecords(PulsarPartitionDataWriter.java:41)
> 2022-01-20T15:28:37.1474209Z Jan 20 15:28:37 at org.apache.flink.tests.util.pulsar.common.UnorderedSourceTestSuiteBase.testOneSplitWithMultipleConsumers(UnorderedSourceTestSuiteBase.java:60)
> 2022-01-20T15:28:37.1474949Z Jan 20 15:28:37 at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
> 2022-01-20T15:28:37.1475658Z Jan 20 15:28:37 at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
> 2022-01-20T15:28:37.1476383Z Jan 20 15:28:37 at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
> 2022-01-20T15:28:37.1477030Z Jan 20 15:28:37 at java.lang.reflect.Method.invoke(Method.java:498)
> 2022-01-20T15:28:37.1477670Z Jan 20 15:28:37 at org.junit.platform.commons.util.ReflectionUtils.invokeMethod(ReflectionUtils.java:725)
> 2022-01-20T15:28:37.1478388Z Jan 20 15:28:37 at org.junit.jupiter.engine.execution.MethodInvocation.proceed(MethodInvocation.java:60)

> {code}

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [flink] flinkbot edited a comment on pull request #18359: [FLINK-25484][connectors/filesystem] Support inactivityInterval config in table api

flinkbot edited a comment on pull request #18359: URL: https://github.com/apache/flink/pull/18359#issuecomment-1012981632

## CI report:

* 82871d3416aefbce86d6e32e3e26a51c12841534 Azure: [CANCELED](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29768)

[GitHub] [flink] slinkydeveloper commented on a change in pull request #18353: [FLINK-25129][docs]project configuation changes in docs

slinkydeveloper commented on a change in pull request #18353:

URL: https://github.com/apache/flink/pull/18353#discussion_r789423489

##

File path: docs/content/docs/dev/configuration/overview.md

##

@@ -52,46 +52,36 @@ In Maven syntax, it would look like:

{{< tabs "a49d57a4-27ee-4dd3-a2b8-a673b99b011e" >}}

{{< tab "Java" >}}

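-```xml
-<dependency>
-  <groupId>org.apache.flink</groupId>
-  <artifactId>flink-streaming-java</artifactId>
-  <version>{{< version >}}</version>
-  <scope>provided</scope>
-</dependency>
-```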

+

+{{< artifact flink-streaming-java withProvidedScope >}}

+

{{< /tab >}}

{{< tab "Scala" >}}

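-```xml
-<dependency>
-  <groupId>org.apache.flink</groupId>
-  <artifactId>flink-streaming-scala{{< scala_version >}}</artifactId>
-  <version>{{< version >}}</version>
-  <scope>provided</scope>
-</dependency>
-```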

-{{< /tab >}}

-{{< /tabs >}}

-**Important:** Note that all these dependencies have their scope set to *provided*. This means that
-they are needed to compile against, but that they should not be packaged into the project's resulting
-application JAR file. If not set to *provided*, the best case scenario is that the resulting JAR
-becomes excessively large, because it also contains all Flink core dependencies. The worst case scenario
-is that the Flink core dependencies that are added to the application's JAR file clash with some of
-your own dependency versions (which is normally avoided through inverted classloading).

+{{< artifact flink-streaming-scala withScalaVersion withProvidedScope >}}

-**Note on IntelliJ:** To make the applications run within IntelliJ IDEA, it is necessary to tick the
-`Include dependencies with "Provided" scope` box in the run configuration. If this option is not available
-(possibly due to using an older IntelliJ IDEA version), then a workaround is to create a test that
-calls the application's `main()` method.

+{{< /tab >}}

+{{< /tabs >}}

Review comment:

Please remove these tabs and replace them with simple tabs for

maven/gradle/sbt conf like here:

https://github.com/slinkydeveloper/flink/commit/5d49dd7a0c0b0b824ed72942136a1857aaea91b9#diff-0bf4db953b94c9b897e098765f0ecf359afb3954363dd8c29574dbe3548c7d01R50

Telling me the syntax for the maven dependencies is not really useful here.

##

File path: docs/content/docs/dev/table/sourcesSinks.md

##

@@ -106,6 +106,41 @@ that the planner can handle.

{{< top >}}

+

+Project Configuration

+-

+

+If you want to implement a custom format, the following dependency is usually sufficient and can be
+used for JAR files for the SQL Client:
+
+```xml
+<dependency>
+  <groupId>org.apache.flink</groupId>
+  <artifactId>flink-table-common</artifactId>
+  <version>{{< version >}}</version>
+  <scope>provided</scope>
+</dependency>
+```
+
+If you want to develop a connector that needs to bridge with DataStream APIs (i.e. if you want to adapt
+a DataStream connector to the Table API), you need to add this dependency:
+
+```xml
+<dependency>
+  <groupId>org.apache.flink</groupId>
+  <artifactId>flink-table-api-java-bridge</artifactId>
+  <version>{{< version >}}</version>
+  <scope>provided</scope>
+</dependency>
+```

Review comment:

Use the artifact docgen tag

##

File path: docs/content/docs/dev/configuration/connector.md

##

@@ -0,0 +1,72 @@

+---

+title: "Dependencies: Connectors and Formats"

+weight: 5

+type: docs

+---

+

+

+# Dependencies: Connectors and Formats

+

+Flink can read from and write to various external systems via [connectors]({{< ref "docs/connectors/table/overview" >}})
+and define the [format]({{< ref "docs/connectors/table/formats/overview" >}}) in which to store the
+data (i.e. mapping binary data onto table columns).
+
+The way that the information is serialized is represented in the external system and that system needs
+to know how to read this data in a format that can be read by Flink. This is done through format dependencies.
+
+Most applications need specific connectors to run. Flink provides a set of table formats that can be
+used with table connectors (with the dependencies for both being fairly unified). These are not part
+of Flink's core dependencies and must be added as dependencies to the application.
+
+## Adding Connector Dependencies
+
+As an example, you can add the Kafka connector as a dependency like this (Maven syntax):
+
+{{< artifact flink-connector-kafka >}}
+
+We recommend packaging the application code and all its required dependencies into one *JAR-with-dependencies*
+which we refer to as the *application JAR*. The application JAR can be submitted to an already running
+Flink cluster, or added to a Flink application container image.
+
+Projects created from the `Java Project Template`, the `Scala Project Template`, or Gradle are configured
+to automatically include the application dependencies into the application JAR when you run `mvn clean package`.
+For projects that are not set up from those templates, we recommend adding the Maven Shade Plugin to
+build the application jar with all required dependencies.
+
+**Important:** For Maven (and other build tools) to correctly package the dependencies into the application jar,
+these application dependencies must be specified in scope *compile* (unlike the core dependencies, which
+must be specified in

[GitHub] [flink] slinkydeveloper commented on pull request #13081: [FLINK-18590][json] Support json array explode to multi messages

slinkydeveloper commented on pull request #13081: URL: https://github.com/apache/flink/pull/13081#issuecomment-1018272859

@poan0508 this PR is looking for someone to finalize it. Wanna take a crack at it?

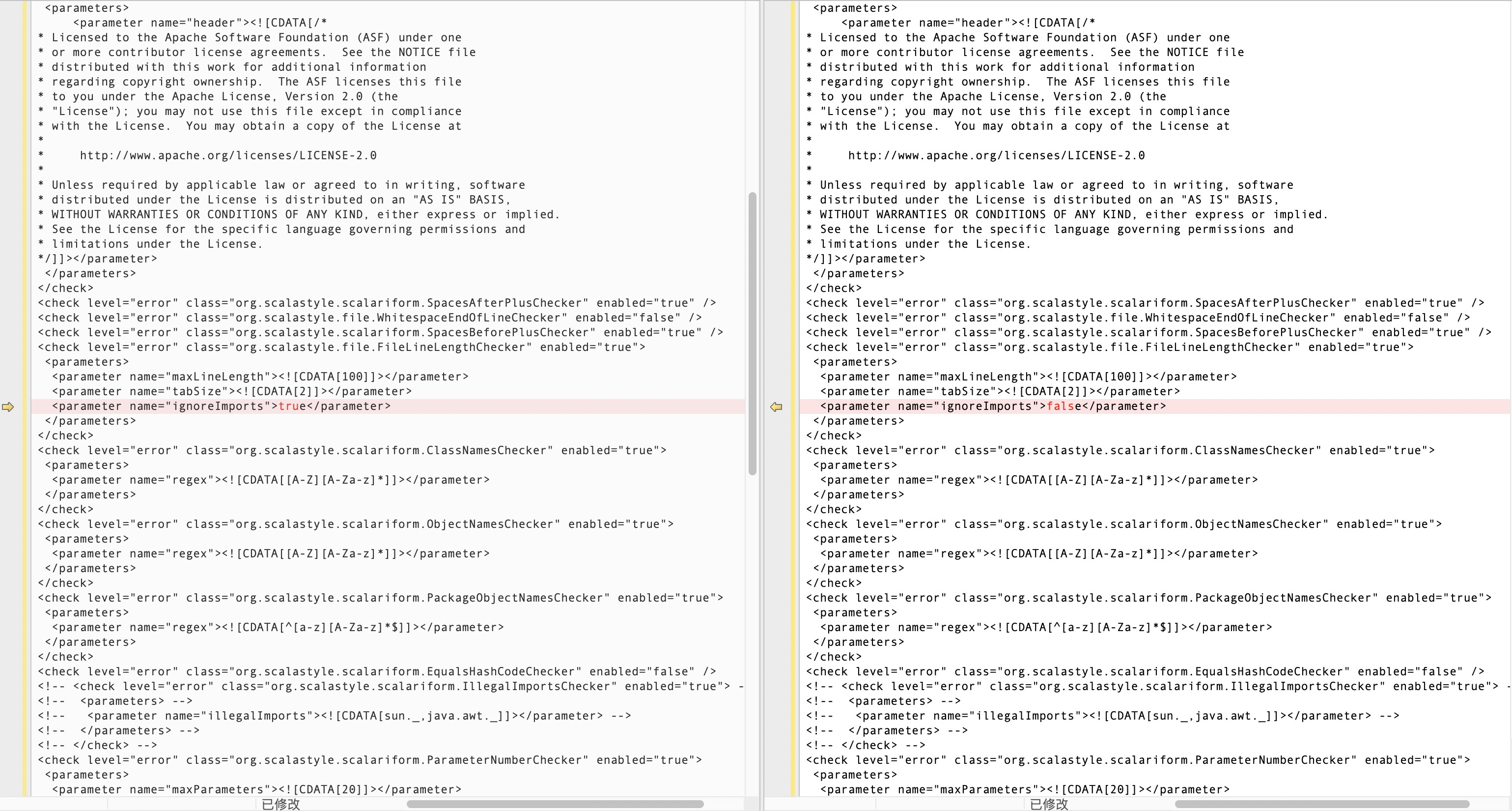

[GitHub] [flink] JingsongLi commented on a change in pull request #18394: [FLINK-25520][Table SQL/API] Implement "ALTER TABLE ... COMPACT" SQL

JingsongLi commented on a change in pull request #18394:

URL: https://github.com/apache/flink/pull/18394#discussion_r789435121

##

File path:

flink-table/flink-table-planner/src/test/scala/org/apache/flink/table/planner/utils/TableTestBase.scala

##

@@ -47,21 +53,30 @@ import org.apache.flink.table.expressions.Expression

import org.apache.flink.table.factories.{FactoryUtil, PlannerFactoryUtil, StreamTableSourceFactory}

import org.apache.flink.table.functions._

import org.apache.flink.table.module.ModuleManager

-import org.apache.flink.table.operations.{ModifyOperation, Operation, QueryOperation, SinkModifyOperation}

+import org.apache.flink.table.operations.ModifyOperation

+import org.apache.flink.table.operations.Operation

+import org.apache.flink.table.operations.QueryOperation

+import org.apache.flink.table.operations.SinkModifyOperation

Review comment:

Your Scala style settings may be wrong... can you check them against the Flink Scala style?

##

File path:

flink-table/flink-table-planner/src/main/java/org/apache/flink/table/planner/operations/SqlToOperationConverter.java

##

@@ -572,14 +574,19 @@ private Operation convertAlterTableReset(

         return new AlterTableOptionsOperation(tableIdentifier, oldTable.copy(newOptions));
     }
-    private Operation convertAlterTableCompact(
+    /**
+     * Convert `ALTER TABLE ... COMPACT` operation to {@link ModifyOperation} for Flink's managed
+     * table to trigger a compaction batch job.
+     */
+    private ModifyOperation convertAlterTableCompact(
             ObjectIdentifier tableIdentifier,
-            ResolvedCatalogTable resolvedCatalogTable,
+            ContextResolvedTable contextResolvedTable,
             SqlAlterTableCompact alterTableCompact) {
         Catalog catalog = catalogManager.getCatalog(tableIdentifier.getCatalogName()).orElse(null);
+        ResolvedCatalogTable resolvedCatalogTable = contextResolvedTable.getResolvedTable();
         if (ManagedTableListener.isManagedTable(catalog, resolvedCatalogTable)) {
-            LinkedHashMap partitionKVs = alterTableCompact.getPartitionKVs();
-            CatalogPartitionSpec partitionSpec = null;
+            Map partitionKVs = alterTableCompact.getPartitionKVs();
+            CatalogPartitionSpec partitionSpec = new CatalogPartitionSpec(Collections.emptyMap());
             if (partitionKVs != null) {
                 List orderedPartitionKeys = resolvedCatalogTable.getPartitionKeys();

Review comment:

Minor: name this `partitionKeys`; there is no need for `orderedPartitionKeys`.


[GitHub] [flink] dawidwys commented on pull request #18405: [FLINK-25683][streaming-java] wrong result if table transfrom to Data…

dawidwys commented on pull request #18405: URL: https://github.com/apache/flink/pull/18405#issuecomment-1018277879

Have you tried building Flink from the command line? Usually that helps with any auto generated files, at least that's what I do.

[jira] [Updated] (FLINK-25276) FLIP-182: Support native and incremental savepoints

[ https://issues.apache.org/jira/browse/FLINK-25276?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Piotr Nowojski updated FLINK-25276:
---
Summary: FLIP-182: Support native and incremental savepoints (was: Support native and incremental savepoints)

> FLIP-182: Support native and incremental savepoints
> ---
>
> Key: FLINK-25276
> URL: https://issues.apache.org/jira/browse/FLINK-25276
> Project: Flink
> Issue Type: New Feature
> Reporter: Piotr Nowojski
> Priority: Major
>
> Motivation. Currently, with non-incremental canonical format savepoints and very large state, both taking and recovering from savepoints can take a very long time. Providing options to take native-format and incremental savepoints would alleviate this problem.
> In the past, the main challenge lay in the ownership semantics and file cleanup of such incremental savepoints. However, with FLINK-25154 implemented, some of those concerns can be solved. Incremental savepoints could leverage the "force full snapshot" mode provided by FLINK-25192 to duplicate/copy all of the savepoint files out of Flink's ownership scope.

[jira] [Created] (FLINK-25744) Support native savepoints (w/o modifying the statebackend specific snapshot strategies)

Piotr Nowojski created FLINK-25744:
--
Summary: Support native savepoints (w/o modifying the statebackend specific snapshot strategies)
Key: FLINK-25744
URL: https://issues.apache.org/jira/browse/FLINK-25744
Project: Flink
Issue Type: Sub-task
Components: Runtime / Checkpointing
Reporter: Piotr Nowojski
Assignee: Dawid Wysakowicz

For example w/o incremental RocksDB support. But HashMap and Full RocksDB should be working out of the box w/o extra changes.

[jira] [Updated] (FLINK-25276) FLIP-182: Support native and incremental savepoints

[ https://issues.apache.org/jira/browse/FLINK-25276?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Piotr Nowojski updated FLINK-25276:
---
Affects Version/s: 1.14.3

> FLIP-182: Support native and incremental savepoints
> ---
>
> Key: FLINK-25276
> URL: https://issues.apache.org/jira/browse/FLINK-25276
> Project: Flink
> Issue Type: New Feature
> Affects Versions: 1.14.3
> Reporter: Piotr Nowojski
> Priority: Major
>
> Motivation. Currently, with non-incremental canonical format savepoints and very large state, both taking and recovering from savepoints can take a very long time. Providing options to take native-format and incremental savepoints would alleviate this problem.
> In the past, the main challenge lay in the ownership semantics and file cleanup of such incremental savepoints. However, with FLINK-25154 implemented, some of those concerns can be solved. Incremental savepoints could leverage the "force full snapshot" mode provided by FLINK-25192 to duplicate/copy all of the savepoint files out of Flink's ownership scope.

[jira] [Updated] (FLINK-25276) FLIP-182: Support native and incremental savepoints

[ https://issues.apache.org/jira/browse/FLINK-25276?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Piotr Nowojski updated FLINK-25276:
---
Component/s: Runtime / Checkpointing

> FLIP-182: Support native and incremental savepoints
> ---
>
> Key: FLINK-25276
> URL: https://issues.apache.org/jira/browse/FLINK-25276
> Project: Flink
> Issue Type: New Feature
> Components: Runtime / Checkpointing
> Affects Versions: 1.14.3
> Reporter: Piotr Nowojski
> Priority: Major
>
> Motivation. Currently, with non-incremental canonical format savepoints and very large state, both taking and recovering from savepoints can take a very long time. Providing options to take native-format and incremental savepoints would alleviate this problem.
> In the past, the main challenge lay in the ownership semantics and file cleanup of such incremental savepoints. However, with FLINK-25154 implemented, some of those concerns can be solved. Incremental savepoints could leverage the "force full snapshot" mode provided by FLINK-25192 to duplicate/copy all of the savepoint files out of Flink's ownership scope.

[jira] [Updated] (FLINK-25276) FLIP-182: Support native and incremental savepoints

[ https://issues.apache.org/jira/browse/FLINK-25276?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Piotr Nowojski updated FLINK-25276:
---
Fix Version/s: 1.15.0

> FLIP-182: Support native and incremental savepoints
> ---
>
> Key: FLINK-25276
> URL: https://issues.apache.org/jira/browse/FLINK-25276
> Project: Flink
> Issue Type: New Feature
> Components: Runtime / Checkpointing
> Affects Versions: 1.14.3
> Reporter: Piotr Nowojski
> Priority: Major
> Fix For: 1.15.0
>
> Motivation. Currently, with non-incremental canonical format savepoints and very large state, both taking and recovering from savepoints can take a very long time. Providing options to take native-format and incremental savepoints would alleviate this problem.
> In the past, the main challenge lay in the ownership semantics and file cleanup of such incremental savepoints. However, with FLINK-25154 implemented, some of those concerns can be solved. Incremental savepoints could leverage the "force full snapshot" mode provided by FLINK-25192 to duplicate/copy all of the savepoint files out of Flink's ownership scope.

[jira] [Created] (FLINK-25745) Support RocksDB incremental native savepoints

Piotr Nowojski created FLINK-25745:
--
Summary: Support RocksDB incremental native savepoints
Key: FLINK-25745
URL: https://issues.apache.org/jira/browse/FLINK-25745
Project: Flink
Issue Type: Sub-task
Components: Runtime / State Backends
Reporter: Piotr Nowojski
Fix For: 1.15.0

Respect CheckpointType.SharingFilesStrategy#NO_SHARING flag in RocksIncrementalSnapshotStrategy. We also need to make sure that RocksDBIncrementalSnapshotStrategy is creating self contained/relocatable snapshots (using CheckpointedStateScope#EXCLUSIVE for native savepoints)

[GitHub] [flink] flinkbot edited a comment on pull request #18324: [FLINK-25557][checkpoint] Introduce incremental/full checkpoint size stats

flinkbot edited a comment on pull request #18324: URL: https://github.com/apache/flink/pull/18324#issuecomment-1009752905

## CI report:

* 647c5b7e76e310ff363a31eb9de04c544f2effd9 Azure: [CANCELED](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29852)
* e53e06e0f6e692252e3e87b4fe797fb306c297ae Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29865)

[GitHub] [flink] SteNicholas closed pull request #15729: [FLINK-22234][runtime] Read savepoint before creating ExecutionGraph

SteNicholas closed pull request #15729: URL: https://github.com/apache/flink/pull/15729

[GitHub] [flink] SteNicholas closed pull request #13298: [FLINK-19038][table] It doesn't support to call Table.limit() continuously

SteNicholas closed pull request #13298: URL: https://github.com/apache/flink/pull/13298

[GitHub] [flink] SteNicholas closed pull request #14028: [FLINK-20020][client] Make UnsuccessfulExecutionException part of the JobClient.getJobExecutionResult() contract

SteNicholas closed pull request #14028: URL: https://github.com/apache/flink/pull/14028

[GitHub] [flink] flinkbot edited a comment on pull request #18137: [FLINK-25287][connector-testing-framework] Refactor interfaces of connector testing framework to support more scenarios

flinkbot edited a comment on pull request #18137: URL: https://github.com/apache/flink/pull/18137#issuecomment-996615034

## CI report:

* 66280958ba0046cbef940e575fc61a4ec6d62253 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29747)
* d3a3caf926f972fa7b83edc1d66d9883aba15376 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29853)
* f67c40496c3c04c45f07a8e49ccf7413e5854244 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29862)
* 1123a75a23288a4161b08133a6cdcc71030b384e UNKNOWN

[GitHub] [flink] fapaul commented on a change in pull request #18397: [FLINK-25702][Kafka] Use the configure feature provided by the kafka Serializer/Deserializer.

fapaul commented on a change in pull request #18397:

URL: https://github.com/apache/flink/pull/18397#discussion_r789453203

##

File path:

flink-connectors/flink-connector-kafka/src/main/java/org/apache/flink/connector/kafka/source/reader/deserializer/KafkaValueOnlyDeserializerWrapper.java

##

@@ -62,8 +75,15 @@ public void open(DeserializationSchema.InitializationContext context) throws Exc
                             deserializerClass.getName(),
                             Deserializer.class,
                             getClass().getClassLoader());
+
+            if (config.isEmpty()) {
+                return;
+            }
+
             if (deserializer instanceof Configurable) {
                 ((Configurable) deserializer).configure(config);
+            } else {

Review comment:

Currently, we have the following scenarios:

1. The `De/Serializer` implements `Configurable`: I would **only** call `Configurable.configure`.

2. It does not implement `Configurable` but a configuration is given: call `De/Serializer.configure`.

3. No configuration is given: do we call `De/Serializer.configure` with an empty map?

You probably have to write tests for all of them, although I am not fully sure about the last one. I guess calling `configure` with an empty map does not hurt.
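For illustration only, the three scenarios above could be sketched roughly as follows. This is not the PR's code: `Configurable` and `Deserializer` here are local stand-ins that only mirror the shape of Kafka's `configure` hooks, and `BothDeserializer`, `PlainDeserializer`, and `configureDeserializer` are hypothetical names. The sketch assumes the third scenario is resolved by passing the empty map through, as the comment suggests.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;

class ConfigureDispatchSketch {

    /** Local stand-in (assumption) mirroring the shape of Kafka's Configurable. */
    interface Configurable {
        void configure(Map<String, ?> configs);
    }

    /** Local stand-in (assumption) for Kafka's Deserializer; only the configure hook is modeled. */
    interface Deserializer {
        default void configure(Map<String, ?> configs, boolean isKey) {}
    }

    /** Scenario 1: implements both interfaces; only Configurable.configure should be called. */
    static class BothDeserializer implements Deserializer, Configurable {
        final List<String> calls = new ArrayList<>();

        @Override
        public void configure(Map<String, ?> configs) {
            calls.add("Configurable.configure");
        }

        @Override
        public void configure(Map<String, ?> configs, boolean isKey) {
            calls.add("Deserializer.configure");
        }
    }

    /** Scenarios 2 and 3: a deserializer that does not implement Configurable. */
    static class PlainDeserializer implements Deserializer {
        final List<String> calls = new ArrayList<>();
        Map<String, ?> lastConfig;

        @Override
        public void configure(Map<String, ?> configs, boolean isKey) {
            calls.add("Deserializer.configure");
            lastConfig = configs;
        }
    }

    /** Hypothetical helper: picks exactly one configure hook per deserializer. */
    static void configureDeserializer(Deserializer deserializer, Map<String, String> config) {
        if (deserializer instanceof Configurable) {
            // Scenario 1: prefer Configurable and skip Deserializer.configure entirely.
            ((Configurable) deserializer).configure(config);
        } else {
            // Scenarios 2 and 3: the map may be empty; isKey is false for a value-only wrapper.
            deserializer.configure(config, false);
        }
    }

    public static void main(String[] args) {
        BothDeserializer both = new BothDeserializer();
        configureDeserializer(both, Collections.singletonMap("some.key", "some.value"));
        System.out.println("scenario 1 calls: " + both.calls);

        PlainDeserializer withConfig = new PlainDeserializer();
        configureDeserializer(withConfig, Collections.singletonMap("some.key", "some.value"));
        System.out.println("scenario 2 calls: " + withConfig.calls);

        PlainDeserializer withoutConfig = new PlainDeserializer();
        configureDeserializer(withoutConfig, Collections.emptyMap());
        System.out.println("scenario 3 calls: " + withoutConfig.calls);
    }
}
```

Recording the calls in a list keeps each scenario easy to assert on in a unit test, which is the kind of coverage the comment asks for.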


[GitHub] [flink] flinkbot edited a comment on pull request #18137: [FLINK-25287][connector-testing-framework] Refactor interfaces of connector testing framework to support more scenarios

flinkbot edited a comment on pull request #18137: URL: https://github.com/apache/flink/pull/18137#issuecomment-996615034

## CI report:

* d3a3caf926f972fa7b83edc1d66d9883aba15376 Azure: [CANCELED](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29853)
* f67c40496c3c04c45f07a8e49ccf7413e5854244 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29862)
* 1123a75a23288a4161b08133a6cdcc71030b384e Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29869)

[GitHub] [flink] flinkbot edited a comment on pull request #18437: [FLINK-25712][connector/tests] Merge flink-connector-testing into flink-connector-test-utils

flinkbot edited a comment on pull request #18437: URL: https://github.com/apache/flink/pull/18437#issuecomment-1018174136

## CI report:

* be3d03d337bb7358ee949445f0530a73d02c43dc Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29854)
* 049a0ebc6535291170b03e739521344d54809682 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29863)
* 66184529bb0e4f34be6b7e3755d06cd50939f894 UNKNOWN

[GitHub] [flink] flinkbot edited a comment on pull request #18436: [BP-1.13][FLINK-24334][k8s] Set FLINK_LOG_DIR environment for JobManager and TaskManager pod if configured via options

flinkbot edited a comment on pull request #18436: URL: https://github.com/apache/flink/pull/18436#issuecomment-1018094327

## CI report:

* f87a46d760404ab5d51b2d5ed554459d3b07fde6 Azure: [CANCELED](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29845)

[GitHub] [flink] flinkbot edited a comment on pull request #18437: [FLINK-25712][connector/tests] Merge flink-connector-testing into flink-connector-test-utils

flinkbot edited a comment on pull request #18437: URL: https://github.com/apache/flink/pull/18437#issuecomment-1018174136

## CI report:

* be3d03d337bb7358ee949445f0530a73d02c43dc Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29854)
* 049a0ebc6535291170b03e739521344d54809682 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29863)
* 66184529bb0e4f34be6b7e3755d06cd50939f894 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29870)

[jira] [Commented] (FLINK-24623) Prevent usage of EventTimeWindows when EventTime is disabled

[

https://issues.apache.org/jira/browse/FLINK-24623?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17479905#comment-17479905

]

Alexander Fedulov commented on FLINK-24623:

---

[~Dario] Hi Dario, could you propose your approach in a form of a PR?

> Prevent usage of EventTimeWindows when EventTime is disabled

>

>

> Key: FLINK-24623

> URL: https://issues.apache.org/jira/browse/FLINK-24623

> Project: Flink

> Issue Type: Improvement

> Components: API / DataStream

>Reporter: Dario Heinisch

>Priority: Not a Priority

> Original Estimate: 24h

> Remaining Estimate: 24h

>

> The following stream never emits any values after the windowing step, because event-time processing has been disabled via the watermark strategy:

> {code:java}
> public class PlaygroundJob {
>     public static void main(String[] args) throws Exception {
>         StreamExecutionEnvironment env =
>                 StreamExecutionEnvironment.createLocalEnvironmentWithWebUI(new Configuration());
>
>         DataStreamSource<Tuple2<Long, Integer>> source =
>                 env.addSource(new SourceFunction<Tuple2<Long, Integer>>() {
>                     @Override
>                     public void run(SourceContext<Tuple2<Long, Integer>> sourceContext) throws Exception {
>                         int i = 0;
>                         while (true) {
>                             Tuple2<Long, Integer> tuple =
>                                     Tuple2.of(System.currentTimeMillis(), i++ % 10);
>                             sourceContext.collect(tuple);
>                         }
>                     }
>
>                     @Override
>                     public void cancel() {
>                     }
>                 });
>
>         source.assignTimestampsAndWatermarks(
>                         // Switch noWatermarks() to forMonotonousTimestamps()
>                         // and values are being printed.
>                         WatermarkStrategy.<Tuple2<Long, Integer>>noWatermarks()
>                                 .withTimestampAssigner((t, timestamp) -> t.f0)
>                 ).keyBy(t -> t.f1)
>                 .window(TumblingEventTimeWindows.of(Time.seconds(1)))
>                 .process(new ProcessWindowFunction<Tuple2<Long, Integer>, String, Integer, TimeWindow>() {
>                     @Override
>                     public void process(Integer key, Context context,
>                             Iterable<Tuple2<Long, Integer>> iterable, Collector<String> out) throws Exception {
>                         int count = 0;
>                         Iterator<Tuple2<Long, Integer>> iter = iterable.iterator();
>                         while (iter.hasNext()) {
>                             count++;
>                             iter.next();
>                         }
>                         out.collect("Key: " + key + " count: " + count);
>                     }
>                 }).print();
>
>         env.execute();
>     }
> }{code}

>

> The issue is that the stream uses _noWatermarks()_, which effectively disables any event-time windowing.
>
> Because this pipeline can never process any values, it is faulty, and Flink should throw an exception when starting up.
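A minimal, Flink-free sketch of why such a pipeline stays silent. It assumes the usual event-time rule that a tumbling window [start, end) fires once the watermark reaches end - 1, and that a monotonous-timestamp strategy keeps the watermark 1 ms behind the largest timestamp seen. The class `WatermarkSketch` and the method `firedWindows` are hypothetical names used only for this illustration; they are not Flink APIs.

```java
import java.util.ArrayList;
import java.util.List;

public class WatermarkSketch {
    /** Counts how many 1-second tumbling windows fire for the given (non-negative) timestamps. */
    static int firedWindows(long[] timestamps, boolean emitWatermarks) {
        long watermark = Long.MIN_VALUE; // no watermark has been emitted yet
        List<Long> pendingWindowEnds = new ArrayList<>();
        int fired = 0;
        for (long ts : timestamps) {
            // Assign the element to its 1-second tumbling window.
            long windowEnd = ts - (ts % 1000) + 1000;
            if (!pendingWindowEnds.contains(windowEnd)) {
                pendingWindowEnds.add(windowEnd);
            }
            if (emitWatermarks) {
                // Models a monotonous-timestamp strategy: watermark = max timestamp - 1.
                watermark = Math.max(watermark, ts - 1);
            }
            // A window fires once the watermark reaches its last timestamp (end - 1).
            final long wm = watermark;
            int before = pendingWindowEnds.size();
            pendingWindowEnds.removeIf(end -> wm >= end - 1);
            fired += before - pendingWindowEnds.size();
        }
        return fired;
    }

    public static void main(String[] args) {
        long[] events = {100, 900, 1100, 2500, 3000};
        System.out.println(firedWindows(events, true));  // 3
        System.out.println(firedWindows(events, false)); // 0
    }
}
```

With watermarks switched off, the watermark never leaves its initial value, so no window end is ever reached, which matches the behavior described above.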

>

>

> Proposed change:

> We extend the interface

> [WatermarkStrategy|https://github.com/apache/flink/blob/master/flink-core/src/main/java/org/apache/flink/api/common/eventtime/WatermarkStrategy.java#L55]

> with the method _boolean isEventTime()_.

> We create a new class named _EventTimeWindowPreconditions_ and add the

> following method to it where we make use of _isEventTime()_:

>

> {code:java}
> public static void hasPrecedingEventTimeGenerator(final List<Transformation<?>> predecessors) {
>     for (int i = predecessors.size() - 1; i >= 0; i--) {
>         final Transformation pre = predecessors.get(i);
>         if (pre instanceof TimestampsAndWatermarksTransformation) {
>             TimestampsAndWatermarksTransformation timestampsAndWatermarksTransformation =
>                     (TimestampsAndWatermarksTransformation) pre;
>             final WatermarkStrategy waStrat =
>                     timestampsAndWatermarksTransformation.getWatermarkStrategy();
>             // assert that it generates timestamps or throw exception
>             if (!waStrat.isEventTime()) {
>                 // TODO: Custom exception
>                 throw new IllegalArgumentException(
>                         "Cannot use an EventTime window with a preceding water mark generator which"
>                                 + " does not ingest event times. Did you use noWatermarks() as the WatermarkStrategy"
>                                 + " and used EventTime windows such as SlidingEventTimeWindows/SlidingEventTimeWindows ?"
>                                 + " These windows will never window any values as your stream does not support event time"
>                 );
>             }
>             // We have to terminate the check now as we have found the first most recent
>             // timestamp assigner for this window and ensured that it actually adds event
>             // time stamps. If there has been previously

[jira] [Comment Edited] (FLINK-24623) Prevent usage of EventTimeWindows when EventTime is disabled

[

https://issues.apache.org/jira/browse/FLINK-24623?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17479905#comment-17479905

]

Alexander Fedulov edited comment on FLINK-24623 at 1/21/22, 8:47 AM:

-

[~Dario] Hi Dario, could you propose your approach as a PR?

was (Author: afedulov):

[~Dario] Hi Dario, could you propose your approach in a form of a PR?

> Prevent usage of EventTimeWindows when EventTime is disabled

>

>

> Key: FLINK-24623

> URL: https://issues.apache.org/jira/browse/FLINK-24623

> Project: Flink

> Issue Type: Improvement

> Components: API / DataStream

>Reporter: Dario Heinisch

>Priority: Not a Priority

> Original Estimate: 24h

> Remaining Estimate: 24h

>


[GitHub] [flink] dannycranmer commented on pull request #18421: [FLINK-25731][connectors/kinesis] Deprecated FlinkKinesisConsumer / F…

dannycranmer commented on pull request #18421:
URL: https://github.com/apache/flink/pull/18421#issuecomment-1018302126

LGTM, merging

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

[GitHub] [flink] dannycranmer merged pull request #18421: [FLINK-25731][connectors/kinesis] Deprecated FlinkKinesisConsumer / F…

dannycranmer merged pull request #18421:
URL: https://github.com/apache/flink/pull/18421

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

[GitHub] [flink] rkhachatryan commented on a change in pull request #18324: [FLINK-25557][checkpoint] Introduce incremental/full checkpoint size stats

rkhachatryan commented on a change in pull request #18324:

URL: https://github.com/apache/flink/pull/18324#discussion_r789465033

##

File path:

flink-state-backends/flink-statebackend-changelog/src/main/java/org/apache/flink/state/changelog/ChangelogKeyedStateBackend.java

##

@@ -368,19 +372,34 @@ public boolean deregisterKeySelectionListener(KeySelectionListener listener)
         // collections don't change once started and handles are immutable
         List prevDeltaCopy =
                 new ArrayList<>(changelogStateBackendStateCopy.getRestoredNonMaterialized());
+        long incrementalMaterializeSize = 0L;
         if (delta != null && delta.getStateSize() > 0) {
             prevDeltaCopy.add(delta);
+            incrementalMaterializeSize += delta.getIncrementalStateSize();
         }

         if (prevDeltaCopy.isEmpty()
                 && changelogStateBackendStateCopy.getMaterializedSnapshot().isEmpty()) {
             return SnapshotResult.empty();
         } else {
+            List<KeyedStateHandle> materializedSnapshot =
+                    changelogStateBackendStateCopy.getMaterializedSnapshot();
+            for (KeyedStateHandle keyedStateHandle : materializedSnapshot) {
+                if (!lastCompletedHandles.contains(keyedStateHandle)) {
+                    incrementalMaterializeSize += keyedStateHandle.getStateSize();

Review comment:

The data uploaded during the async phase is (usually) created during the sync phase, so "Async Persist Checkpoint Data Size" is not very precise. The current UI does distinguish the duration of the sync and async phases; also, nothing prevents a backend from persisting everything during the sync phase.

Something like "Foreground persist data size" would be more precise, but I guess it would confuse non-changelog users. WDYT?

So maybe "Sync/async Persist Checkpoint Data Size"?
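For context, the size accounting in the hunk above can be sketched in isolation. This is a simplified model under stated assumptions, not the actual Flink types: handles are reduced to a string id and a size in bytes, and `IncrementalSizeSketch` and `incrementalSize` are hypothetical names.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

public class IncrementalSizeSketch {
    /**
     * Sums the incremental size of the changelog delta plus the sizes of all
     * materialized handles that were not part of the last completed checkpoint.
     */
    static long incrementalSize(
            long deltaIncrementalSize,
            Map<String, Long> materializedSizesById,
            Set<String> lastCompletedIds) {
        long size = deltaIncrementalSize;
        for (Map.Entry<String, Long> handle : materializedSizesById.entrySet()) {
            // Handles already known from the last completed checkpoint are not counted again.
            if (!lastCompletedIds.contains(handle.getKey())) {
                size += handle.getValue();
            }
        }
        return size;
    }

    public static void main(String[] args) {
        Map<String, Long> materialized = new LinkedHashMap<>();
        materialized.put("handle-1", 100L); // already part of the previous checkpoint
        materialized.put("handle-2", 40L);  // new since the previous checkpoint
        Set<String> lastCompleted = new HashSet<>(Arrays.asList("handle-1"));
        System.out.println(incrementalSize(7L, materialized, lastCompleted)); // 7 + 40 = 47
    }
}
```

Whatever label the UI ends up using, the number counts only bytes that are new relative to the last completed checkpoint, independent of the phase in which they were produced.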

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Created] (FLINK-25746) Failed to run ITCase locally with IDEA under flink-orc and flink-parquet module

Jane Chan created FLINK-25746:

-

Summary: Failed to run ITCase locally with IDEA under flink-orc

and flink-parquet module

Key: FLINK-25746

URL: https://issues.apache.org/jira/browse/FLINK-25746

Project: Flink

Issue Type: Bug

Components: Formats (JSON, Avro, Parquet, ORC, SequenceFile)

Reporter: Jane Chan

Recently, several integration test cases have been failing when run locally from IntelliJ IDEA, although running them from the Maven command line works.

h4. How to reproduce

{code:java}

// switch to master branch

git fetch origin

git rebase origin/master

mvn clean install -DskipTests -Dfast -Pskip-webui-build -Dscala-2.12 -T 1C

{code}

Then run the following tests from IntelliJ IDEA

h4. The affected tests

{code:java}

org.apache.flink.orc.OrcFileSystemITCase

org.apache.flink.orc.OrcFsStreamingSinkITCase

org.apache.flink.formats.parquet.ParquetFileCompactionITCase

org.apache.flink.formats.parquet.ParquetFileSystemITCase

org.apache.flink.formats.parquet.ParquetFsStreamingSinkITCase {code}

h4. The stack trace

{code:java}

java.lang.NoClassDefFoundError: com/google/common/base/MoreObjects
    at org.apache.calcite.config.CalciteSystemProperty.loadProperties(CalciteSystemProperty.java:404)
    at org.apache.calcite.config.CalciteSystemProperty.(CalciteSystemProperty.java:47)
    at org.apache.calcite.util.Util.(Util.java:152)
    at org.apache.calcite.sql.type.SqlTypeName.(SqlTypeName.java:142)
    at org.apache.calcite.sql.type.SqlTypeFamily.getTypeNames(SqlTypeFamily.java:163)
    at org.apache.calcite.sql.type.ReturnTypes.(ReturnTypes.java:127)
    at org.apache.calcite.sql.SqlSetOperator.(SqlSetOperator.java:45)
    at org.apache.calcite.sql.fun.SqlStdOperatorTable.(SqlStdOperatorTable.java:97)
    at org.apache.calcite.sql2rel.StandardConvertletTable.(StandardConvertletTable.java:101)
    at org.apache.calcite.sql2rel.StandardConvertletTable.(StandardConvertletTable.java:91)
    at org.apache.calcite.tools.Frameworks$ConfigBuilder.(Frameworks.java:234)
    at org.apache.calcite.tools.Frameworks$ConfigBuilder.(Frameworks.java:215)
    at org.apache.calcite.tools.Frameworks.newConfigBuilder(Frameworks.java:199)
    at org.apache.flink.table.planner.delegation.PlannerContext.createFrameworkConfig(PlannerContext.java:145)
    at org.apache.flink.table.planner.delegation.PlannerContext.(PlannerContext.java:129)
    at org.apache.flink.table.planner.delegation.PlannerBase.(PlannerBase.scala:118)
    at org.apache.flink.table.planner.delegation.StreamPlanner.(StreamPlanner.scala:55)
    at org.apache.flink.table.planner.delegation.DefaultPlannerFactory.create(DefaultPlannerFactory.java:62)
    at org.apache.flink.table.factories.PlannerFactoryUtil.createPlanner(PlannerFactoryUtil.java:53)
    at org.apache.flink.table.api.bridge.scala.internal.StreamTableEnvironmentImpl$.create(StreamTableEnvironmentImpl.scala:323)
    at org.apache.flink.table.api.bridge.scala.StreamTableEnvironment$.create(StreamTableEnvironment.scala:925)
    at org.apache.flink.table.planner.runtime.utils.StreamingTestBase.before(StreamingTestBase.scala:54)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)
    at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
    at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)
    at org.junit.internal.runners.statements.RunBefores.invokeMethod(RunBefores.java:33)
    at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:24)
    at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)
    at org.junit.rules.ExpectedException$ExpectedExceptionStatement.evaluate(ExpectedException.java:258)
    at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)
    at org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:299)
    at org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:293)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.ClassNotFoundException: com.google.common.base.MoreObjects
    at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    ... 38 more

java.lang.NoClassDefFoundError: Could not initialize class org.apache.cal

[jira] [Updated] (FLINK-25746) Failed to run ITCase locally with IDEA under flink-orc and flink-parquet module

[

https://issues.apache.org/jira/browse/FLINK-25746?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jane Chan updated FLINK-25746:

--

Attachment: image-2022-01-21-16-54-12-354.png

> Failed to run ITCase locally with IDEA under flink-orc and flink-parquet

> module

> ---

>

> Key: FLINK-25746

> URL: https://issues.apache.org/jira/browse/FLINK-25746

> Project: Flink

> Issue Type: Bug

> Components: Formats (JSON, Avro, Parquet, ORC, SequenceFile)

>Reporter: Jane Chan

>Priority: Major

> Attachments: image-2022-01-21-16-54-12-354.png

>

>


[jira] [Updated] (FLINK-25746) Failed to run ITCase locally with IDEA under flink-orc and flink-parquet module

[

https://issues.apache.org/jira/browse/FLINK-25746?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jane Chan updated FLINK-25746:

--

Description:

Recently, several integration test cases have been failing when run locally from IntelliJ IDEA, although running them from the Maven command line works.

h4. How to reproduce

{code:java}

// switch to master branch

git fetch origin

git rebase origin/master

mvn clean install -DskipTests -Dfast -Pskip-webui-build -Dscala-2.12 -T 1C

{code}

Then run the following tests from IntelliJ IDEA

h4. The affected tests

{code:java}

org.apache.flink.orc.OrcFileSystemITCase

org.apache.flink.orc.OrcFsStreamingSinkITCase

org.apache.flink.formats.parquet.ParquetFileCompactionITCase

org.apache.flink.formats.parquet.ParquetFileSystemITCase

org.apache.flink.formats.parquet.ParquetFsStreamingSinkITCase {code}

h4. The stack trace

!image-2022-01-21-16-54-12-354.png!

{code:java}

java.lang.NoClassDefFoundError: com/google/common/base/MoreObjects
    at org.apache.calcite.config.CalciteSystemProperty.loadProperties(CalciteSystemProperty.java:404)
    at org.apache.calcite.config.CalciteSystemProperty.(CalciteSystemProperty.java:47)
    at org.apache.calcite.util.Util.(Util.java:152)
    at org.apache.calcite.sql.type.SqlTypeName.(SqlTypeName.java:142)
    at org.apache.calcite.sql.type.SqlTypeFamily.getTypeNames(SqlTypeFamily.java:163)
    at org.apache.calcite.sql.type.ReturnTypes.(ReturnTypes.java:127)
    at org.apache.calcite.sql.SqlSetOperator.(SqlSetOperator.java:45)
    at org.apache.calcite.sql.fun.SqlStdOperatorTable.(SqlStdOperatorTable.java:97)
    at org.apache.calcite.sql2rel.StandardConvertletTable.(StandardConvertletTable.java:101)
    at org.apache.calcite.sql2rel.StandardConvertletTable.(StandardConvertletTable.java:91)
    at org.apache.calcite.tools.Frameworks$ConfigBuilder.(Frameworks.java:234)
    at org.apache.calcite.tools.Frameworks$ConfigBuilder.(Frameworks.java:215)
    at org.apache.calcite.tools.Frameworks.newConfigBuilder(Frameworks.java:199)
    at org.apache.flink.table.planner.delegation.PlannerContext.createFrameworkConfig(PlannerContext.java:145)
    at org.apache.flink.table.planner.delegation.PlannerContext.(PlannerContext.java:129)
    at org.apache.flink.table.planner.delegation.PlannerBase.(PlannerBase.scala:118)
    at org.apache.flink.table.planner.delegation.StreamPlanner.(StreamPlanner.scala:55)
    at org.apache.flink.table.planner.delegation.DefaultPlannerFactory.create(DefaultPlannerFactory.java:62)
    at org.apache.flink.table.factories.PlannerFactoryUtil.createPlanner(PlannerFactoryUtil.java:53)
    at org.apache.flink.table.api.bridge.scala.internal.StreamTableEnvironmentImpl$.create(StreamTableEnvironmentImpl.scala:323)
    at org.apache.flink.table.api.bridge.scala.StreamTableEnvironment$.create(StreamTableEnvironment.scala:925)
    at org.apache.flink.table.planner.runtime.utils.StreamingTestBase.before(StreamingTestBase.scala:54)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)
    at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
    at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)
    at org.junit.internal.runners.statements.RunBefores.invokeMethod(RunBefores.java:33)
    at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:24)
    at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)
    at org.junit.rules.ExpectedException$ExpectedExceptionStatement.evaluate(ExpectedException.java:258)
    at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)
    at org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:299)
    at org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:293)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.ClassNotFoundException: com.google.common.base.MoreObjects
    at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    ... 38 more

java.lang.NoClassDefFoundError: Could not initialize class org.apache.calcite.sql2rel.StandardConvertletTable
    at org.apache.calcite.tools.Frameworks$ConfigBuilder.(Frameworks.java:234)
    at org.apache.calcite.tools.Frameworks$ConfigBuilder.(Frameworks.ja

[GitHub] [flink] flinkbot edited a comment on pull request #18153: [FLINK-25568][connectors/elasticsearch] Add Elasticsearch 7 Source

flinkbot edited a comment on pull request #18153:
URL: https://github.com/apache/flink/pull/18153#issuecomment-997756404

## CI report:

* b29c358b51b4a803f129eab3ad8723747510d3c0 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29791)
* dc3cdf818723ec45e61511b4ed1e0b08cabbff29 UNKNOWN

Bot commands
The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #18437: [FLINK-25712][connector/tests] Merge flink-connector-testing into flink-connector-test-utils

flinkbot edited a comment on pull request #18437:
URL: https://github.com/apache/flink/pull/18437#issuecomment-1018174136

## CI report:

* be3d03d337bb7358ee949445f0530a73d02c43dc Azure: [CANCELED](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29854)
* 049a0ebc6535291170b03e739521344d54809682 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29863)
* 66184529bb0e4f34be6b7e3755d06cd50939f894 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29870)

Bot commands
The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

[jira] [Updated] (FLINK-25746) Failed to run ITCase locally with IDEA under flink-orc and flink-parquet module

[

https://issues.apache.org/jira/browse/FLINK-25746?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jane Chan updated FLINK-25746:

--

Attachment: image-2022-01-21-16-56-42-156.png

> Failed to run ITCase locally with IDEA under flink-orc and flink-parquet

> module

> ---

>

> Key: FLINK-25746

> URL: https://issues.apache.org/jira/browse/FLINK-25746

> Project: Flink

> Issue Type: Bug

> Components: Formats (JSON, Avro, Parquet, ORC, SequenceFile)

>Reporter: Jane Chan

>Priority: Major

> Attachments: image-2022-01-21-16-54-12-354.png,

> image-2022-01-21-16-56-42-156.png

>

>


[jira] [Commented] (FLINK-25746) Failed to run ITCase locally with IDEA under flink-orc and flink-parquet module

[

https://issues.apache.org/jira/browse/FLINK-25746?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17479909#comment-17479909

]

Jane Chan commented on FLINK-25746:

---

The tests pass, however, when run from the command line:

{code:java}

mvn test -Dtest=ParquetFileCompactionITCase

{code}

!image-2022-01-21-16-56-42-156.png!
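
One way to narrow down an IDE-versus-Maven difference like this is to check, from inside the failing run configuration, whether the class named in the NoClassDefFoundError resolves at all and which jar it comes from. The following is only a minimal sketch and not part of the original report; the class name `ClasspathProbe` and the helper `locate` are hypothetical, and it assumes the probe is run with the same classpath as the failing test.

```java
import java.security.CodeSource;

public class ClasspathProbe {

    /**
     * Returns the location (jar or directory) a class is loaded from,
     * "bootstrap/JDK" for classes without a code source,
     * or a "missing: ..." message if the class cannot be resolved.
     */
    static String locate(String className) {
        try {
            // initialize=false: only resolve the class, do not run static initializers
            Class<?> c = Class.forName(className, false, ClasspathProbe.class.getClassLoader());
            CodeSource src = c.getProtectionDomain().getCodeSource();
            return src == null ? "bootstrap/JDK" : src.getLocation().toString();
        } catch (ClassNotFoundException | LinkageError e) {
            return "missing: " + e;
        }
    }

    public static void main(String[] args) {
        // The class reported as missing in the stack trace of this issue
        System.out.println(locate("com.google.common.base.MoreObjects"));
    }
}
```

Running it once from the IDE and once from the Maven test classpath and comparing the two outputs would show whether Guava is absent or resolved from a different artifact in the IDE run.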

> Failed to run ITCase locally with IDEA under flink-orc and flink-parquet

> module

> ---

>

> Key: FLINK-25746

> URL: https://issues.apache.org/jira/browse/FLINK-25746

> Project: Flink

> Issue Type: Bug

> Components: Formats (JSON, Avro, Parquet, ORC, SequenceFile)

>Reporter: Jane Chan

>Priority: Major

> Attachments: image-2022-01-21-16-54-12-354.png,

> image-2022-01-21-16-56-42-156.png

>

>

> Several integration tests have recently been failing when run locally from IntelliJ IDEA, although they pass when run from the Maven command line.

> h4. How to reproduce

> {code:java}

> // switch to master branch

> git fetch origin

> git rebase origin/master

> mvn clean install -DskipTests -Dfast -Pskip-webui-build -Dscala-2.12 -T 1C

> {code}

> Then run the following tests from IntelliJ IDEA

> h4. The affected tests

> {code:java}

> org.apache.flink.orc.OrcFileSystemITCase

> org.apache.flink.orc.OrcFsStreamingSinkITCase

> org.apache.flink.formats.parquet.ParquetFileCompactionITCase

> org.apache.flink.formats.parquet.ParquetFileSystemITCase

> org.apache.flink.formats.parquet.ParquetFsStreamingSinkITCase

> {code}

> h4. The stack trace

> !image-2022-01-21-16-54-12-354.png!

> {code:java}

> java.lang.NoClassDefFoundError: com/google/common/base/MoreObjects
> at org.apache.calcite.config.CalciteSystemProperty.loadProperties(CalciteSystemProperty.java:404)
> at org.apache.calcite.config.CalciteSystemProperty.(CalciteSystemProperty.java:47)
> at org.apache.calcite.util.Util.(Util.java:152)
> at org.apache.calcite.sql.type.SqlTypeName.(SqlTypeName.java:142)
> at org.apache.calcite.sql.type.SqlTypeFamily.getTypeNames(SqlTypeFamily.java:163)
> at org.apache.calcite.sql.type.ReturnTypes.(ReturnTypes.java:127)
> at org.apache.calcite.sql.SqlSetOperator.(SqlSetOperator.java:45)
> at org.apache.calcite.sql.fun.SqlStdOperatorTable.(SqlStdOperatorTable.java:97)
> at org.apache.calcite.sql2rel.StandardConvertletTable.(StandardConvertletTable.java:101)
> at org.apache.calcite.sql2rel.StandardConvertletTable.(StandardConvertletTable.java:91)
> at org.apache.calcite.tools.Frameworks$ConfigBuilder.(Frameworks.java:234)
> at org.apache.calcite.tools.Frameworks$ConfigBuilder.(Frameworks.java:215)
> at org.apache.calcite.tools.Frameworks.newConfigBuilder(Frameworks.java:199)
> at org.apache.flink.table.planner.delegation.PlannerContext.createFrameworkConfig(PlannerContext.java:145)
> at org.apache.flink.table.planner.delegation.PlannerContext.(PlannerContext.java:129)
> at org.apache.flink.table.planner.delegation.PlannerBase.(PlannerBase.scala:118)
> at org.apache.flink.table.planner.delegation.StreamPlanner.(StreamPlanner.scala:55)
> at org.apache.flink.table.planner.delegation.DefaultPlannerFactory.create(DefaultPlannerFactory.java:62)
> at org.apache.flink.table.factories.PlannerFactoryUtil.createPlanner(PlannerFactoryUtil.java:53)
> at org.apache.flink.table.api.bridge.scala.internal.StreamTableEnvironmentImpl$.create(StreamTableEnvironmentImpl.scala:323)
> at org.apache.flink.table.api.bridge.scala.StreamTableEnvironment$.create(StreamTableEnvironment.scala:925)
> at org.apache.flink.table.planner.runtime.utils.StreamingTestBase.before(StreamingTestBase.scala:54)
> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
> at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
> at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
> at java.lang.reflect.Method.invoke(Method.java:498)
> at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)
> at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
> at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)
> at org.junit.internal.runners.statements.RunBefores.invokeMethod(RunBefores.java:33)
> at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:24)
> at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)
> at org.junit.rules.ExpectedException$ExpectedExceptionStatement.evaluate(ExpectedException.java:258)
> at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)
> at

[jira] [Updated] (FLINK-25746) Failed to run ITCase locally with IDEA under flink-orc and flink-parquet module

[

https://issues.apache.org/jira/browse/FLINK-25746?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jane Chan updated FLINK-25746:

--

Affects Version/s: 1.15.0

> Failed to run ITCase locally with IDEA under flink-orc and flink-parquet

> module

> ---

>

> Key: FLINK-25746

> URL: https://issues.apache.org/jira/browse/FLINK-25746

> Project: Flink

> Issue Type: Bug

> Components: Formats (JSON, Avro, Parquet, ORC, SequenceFile)

>Affects Versions: 1.15.0

>Reporter: Jane Chan

>Priority: Major

> Attachments: image-2022-01-21-16-54-12-354.png,

> image-2022-01-21-16-56-42-156.png

>

>

> Several integration tests have recently been failing when run locally from IntelliJ IDEA, although they pass when run from the Maven command line.

> h4. How to reproduce

> {code:java}

> // switch to master branch

> git fetch origin

> git rebase origin/master

> mvn clean install -DskipTests -Dfast -Pskip-webui-build -Dscala-2.12 -T 1C

> {code}

> Then run the following tests from IntelliJ IDEA

> h4. The affected tests

> {code:java}

> org.apache.flink.orc.OrcFileSystemITCase

> org.apache.flink.orc.OrcFsStreamingSinkITCase

> org.apache.flink.formats.parquet.ParquetFileCompactionITCase

> org.apache.flink.formats.parquet.ParquetFileSystemITCase

> org.apache.flink.formats.parquet.ParquetFsStreamingSinkITCase

> {code}

> h4. The stack trace

> !image-2022-01-21-16-54-12-354.png!

> {code:java}

> java.lang.NoClassDefFoundError: com/google/common/base/MoreObjects
> at org.apache.calcite.config.CalciteSystemProperty.loadProperties(CalciteSystemProperty.java:404)
> at org.apache.calcite.config.CalciteSystemProperty.(CalciteSystemProperty.java:47)
> at org.apache.calcite.util.Util.(Util.java:152)
> at org.apache.calcite.sql.type.SqlTypeName.(SqlTypeName.java:142)
> at org.apache.calcite.sql.type.SqlTypeFamily.getTypeNames(SqlTypeFamily.java:163)
> at org.apache.calcite.sql.type.ReturnTypes.(ReturnTypes.java:127)
> at org.apache.calcite.sql.SqlSetOperator.(SqlSetOperator.java:45)
> at org.apache.calcite.sql.fun.SqlStdOperatorTable.(SqlStdOperatorTable.java:97)
> at org.apache.calcite.sql2rel.StandardConvertletTable.(StandardConvertletTable.java:101)
> at org.apache.calcite.sql2rel.StandardConvertletTable.(StandardConvertletTable.java:91)
> at org.apache.calcite.tools.Frameworks$ConfigBuilder.(Frameworks.java:234)
> at org.apache.calcite.tools.Frameworks$ConfigBuilder.(Frameworks.java:215)
> at org.apache.calcite.tools.Frameworks.newConfigBuilder(Frameworks.java:199)
> at org.apache.flink.table.planner.delegation.PlannerContext.createFrameworkConfig(PlannerContext.java:145)
> at org.apache.flink.table.planner.delegation.PlannerContext.(PlannerContext.java:129)
> at org.apache.flink.table.planner.delegation.PlannerBase.(PlannerBase.scala:118)
> at org.apache.flink.table.planner.delegation.StreamPlanner.(StreamPlanner.scala:55)
> at org.apache.flink.table.planner.delegation.DefaultPlannerFactory.create(DefaultPlannerFactory.java:62)
> at org.apache.flink.table.factories.PlannerFactoryUtil.createPlanner(PlannerFactoryUtil.java:53)
> at org.apache.flink.table.api.bridge.scala.internal.StreamTableEnvironmentImpl$.create(StreamTableEnvironmentImpl.scala:323)
> at org.apache.flink.table.api.bridge.scala.StreamTableEnvironment$.create(StreamTableEnvironment.scala:925)
> at org.apache.flink.table.planner.runtime.utils.StreamingTestBase.before(StreamingTestBase.scala:54)
> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)