[GitHub] [flink] KarmaGYZ commented on pull request #19481: [FLINK-27256][runtime] Log the root exception in closing the task man…

KarmaGYZ commented on PR #19481: URL: https://github.com/apache/flink/pull/19481#issuecomment-1099873917 @flinkbot run azure -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #19484: [FLINK-27258] [Doc] fix chinese deploment doc malformed text

flinkbot commented on PR #19484: URL: https://github.com/apache/flink/pull/19484#issuecomment-1099832665 ## CI report: * 714c3dfc1eec638a1ec71330e31bb0dab6bbe8ed UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

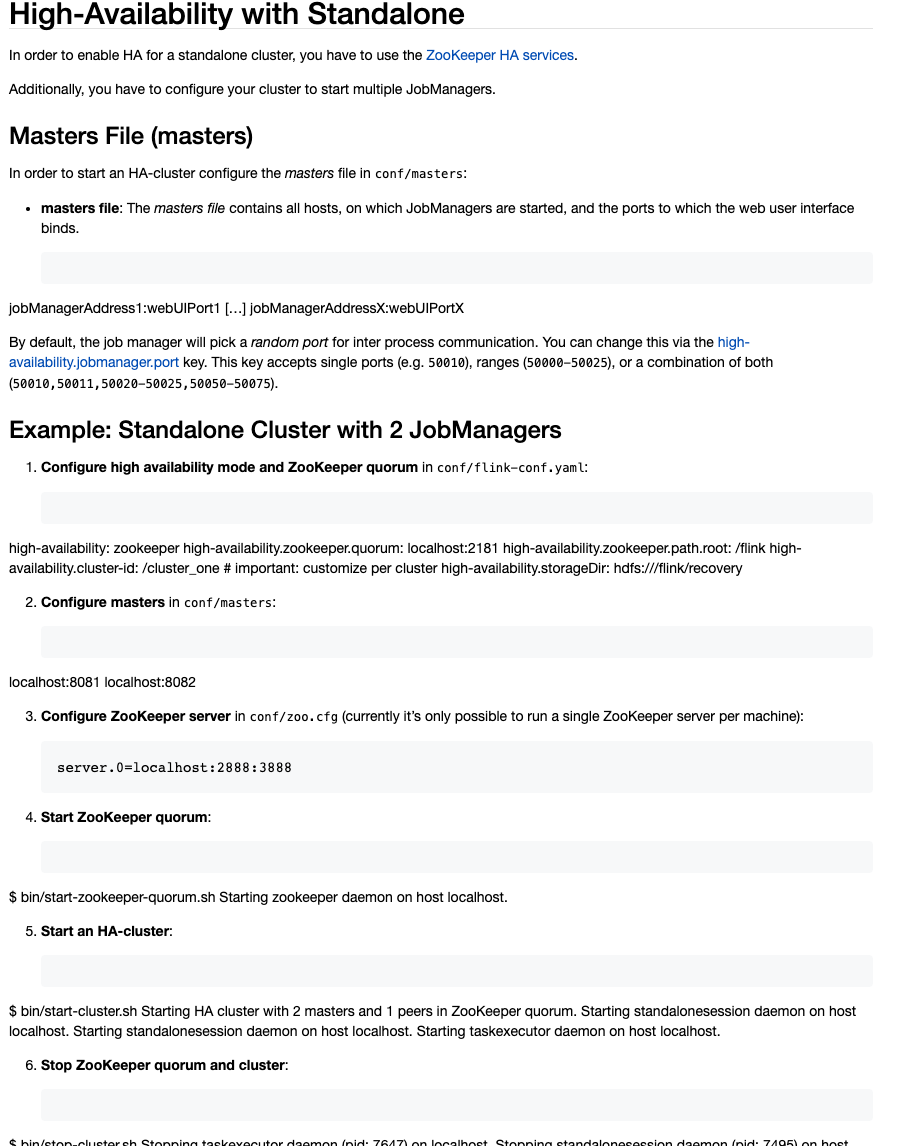

[jira] [Updated] (FLINK-27258) Deployment Chinese document malformed text

[ https://issues.apache.org/jira/browse/FLINK-27258?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-27258: --- Labels: pull-request-available (was: ) > Deployment Chinese document malformed text > -- > > Key: FLINK-27258 > URL: https://issues.apache.org/jira/browse/FLINK-27258 > Project: Flink > Issue Type: Bug > Components: Documentation >Affects Versions: 1.12.7, 1.13.6, 1.14.4 >Reporter: FanJia >Priority: Minor > Labels: pull-request-available > Attachments: image-2022-04-15-11-35-19-712.png > > > !image-2022-04-15-11-35-19-712.png! > malformed text need to be fix. -- This message was sent by Atlassian Jira (v8.20.1#820001)

[GitHub] [flink] BenJFan opened a new pull request, #19484: [FLINK-27258] [Doc] fix chinese deploment doc malformed text

BenJFan opened a new pull request, #19484: URL: https://github.com/apache/flink/pull/19484 ## What is the purpose of the change  fix chinese deploment doc malformed text ## Brief change log - fix chinese deploment doc malformed text -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] mas-chen commented on a diff in pull request #19456: [FLINK-27041][connector/kafka] Catch IllegalStateException in KafkaPartitionSplitReader.fetch() to handle no valid partition case

mas-chen commented on code in PR #19456:

URL: https://github.com/apache/flink/pull/19456#discussion_r851041663

##

flink-connectors/flink-connector-kafka/src/main/java/org/apache/flink/connector/kafka/source/reader/KafkaPartitionSplitReader.java:

##

@@ -131,12 +138,7 @@ public RecordsWithSplitIds>

fetch() throws IOExce

kafkaSourceReaderMetrics.maybeAddRecordsLagMetric(consumer, tp);

}

-// Some splits are discovered as empty when handling split additions.

These splits should be

-// added to finished splits to clean up states in split fetcher and

source reader.

-if (!emptySplits.isEmpty()) {

-recordsBySplits.finishedSplits.addAll(emptySplits);

-emptySplits.clear();

-}

+markEmptySplitsAsFinished(recordsBySplits);

Review Comment:

Good catch!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink-kubernetes-operator] tweise commented on a diff in pull request #165: [FLINK-26140] Support rollback strategies

tweise commented on code in PR #165:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/165#discussion_r851041385

##

flink-kubernetes-operator/src/main/java/org/apache/flink/kubernetes/operator/reconciler/deployment/ApplicationReconciler.java:

##

@@ -102,36 +111,84 @@ public void reconcile(FlinkDeployment flinkApp, Context

context, Configuration e

}

if (currentJobState == JobState.SUSPENDED && desiredJobState ==

JobState.RUNNING) {

if (upgradeMode == UpgradeMode.STATELESS) {

-deployFlinkJob(flinkApp, effectiveConfig,

Optional.empty());

-} else if (upgradeMode == UpgradeMode.LAST_STATE

-|| upgradeMode == UpgradeMode.SAVEPOINT) {

-restoreFromLastSavepoint(flinkApp, effectiveConfig);

+deployFlinkJob(currentJobSpec, status, effectiveConfig,

Optional.empty());

+} else {

+restoreFromLastSavepoint(currentJobSpec, status,

effectiveConfig);

}

stateAfterReconcile = JobState.RUNNING;

}

-IngressUtils.updateIngressRules(flinkApp, effectiveConfig,

kubernetesClient);

+IngressUtils.updateIngressRules(

+deployMeta, currentDeploySpec, effectiveConfig,

kubernetesClient);

ReconciliationUtils.updateForSpecReconciliationSuccess(flinkApp,

stateAfterReconcile);

-} else if (SavepointUtils.shouldTriggerSavepoint(flinkApp) &&

isJobRunning(flinkApp)) {

+} else if (ReconciliationUtils.shouldRollBack(reconciliationStatus,

effectiveConfig)) {

+rollbackApplication(flinkApp);

+} else if (SavepointUtils.shouldTriggerSavepoint(currentJobSpec,

status)

+&& isJobRunning(status)) {

triggerSavepoint(flinkApp, effectiveConfig);

ReconciliationUtils.updateSavepointReconciliationSuccess(flinkApp);

+} else {

+LOG.info("Deployment is fully reconciled, nothing to do.");

}

}

+private void rollbackApplication(FlinkDeployment flinkApp) throws

Exception {

+ReconciliationStatus reconciliationStatus =

flinkApp.getStatus().getReconciliationStatus();

+

+if (reconciliationStatus.getState() !=

ReconciliationStatus.State.ROLLING_BACK) {

+LOG.warn("Preparing to roll back to last stable spec.");

+if (flinkApp.getStatus().getError() == null) {

+flinkApp.getStatus()

+.setError(

+"Deployment is not ready within the configured

timeout, rolling-back.");

+}

+

reconciliationStatus.setState(ReconciliationStatus.State.ROLLING_BACK);

+return;

+}

+

+LOG.warn("Executing roll-back operation");

+

+FlinkDeploymentSpec rollbackSpec =

reconciliationStatus.deserializeLastStableSpec();

+Configuration rollbackConfig =

+FlinkUtils.getEffectiveConfig(flinkApp.getMetadata(),

rollbackSpec, defaultConfig);

+

+UpgradeMode upgradeMode = flinkApp.getSpec().getJob().getUpgradeMode();

+

+suspendJob(

Review Comment:

Agreed that we should not change the spec, which in some case may also not

be possible due to access control. The reason not to change

`lastReconciledSpec` is more related to the implementation as it pertains to

the status, nevertheless I think the current approach makes sense.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] dianfu commented on a diff in pull request #19480: [FLINK-27213][API/Python]Add PurgingTrigger

dianfu commented on code in PR #19480: URL: https://github.com/apache/flink/pull/19480#discussion_r851034880 ## flink-python/pyflink/datastream/window.py: ## @@ -840,6 +841,65 @@ def register_next_fire_timestamp(self, ctx.register_processing_time_timer(next_fire_timestamp) +class PurgingTrigger(Trigger[T, TimeWindow]): Review Comment: Why it's always TimeWindow? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (FLINK-27259) Observer should not clear savepoint errors even though deployment is healthy

Yang Wang created FLINK-27259:

-

Summary: Observer should not clear savepoint errors even though

deployment is healthy

Key: FLINK-27259

URL: https://issues.apache.org/jira/browse/FLINK-27259

Project: Flink

Issue Type: Bug

Components: Kubernetes Operator

Reporter: Yang Wang

Even though the deployment is healthy and job is running, triggering savepoint

still could fail with errors. See FLINK-27257 for more information. These

errors should not be cleared in {{{}AbstractDeploymentObserver{}}}.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Created] (FLINK-27258) Deployment Chinese document malformed text

FanJia created FLINK-27258: -- Summary: Deployment Chinese document malformed text Key: FLINK-27258 URL: https://issues.apache.org/jira/browse/FLINK-27258 Project: Flink Issue Type: Bug Components: Documentation Affects Versions: 1.14.4, 1.13.6, 1.12.7 Reporter: FanJia Attachments: image-2022-04-15-11-35-19-712.png !image-2022-04-15-11-35-19-712.png! malformed text need to be fix. -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Commented] (FLINK-27258) Deployment Chinese document malformed text

[ https://issues.apache.org/jira/browse/FLINK-27258?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17522637#comment-17522637 ] FanJia commented on FLINK-27258: Let me fix it for my first issue. > Deployment Chinese document malformed text > -- > > Key: FLINK-27258 > URL: https://issues.apache.org/jira/browse/FLINK-27258 > Project: Flink > Issue Type: Bug > Components: Documentation >Affects Versions: 1.12.7, 1.13.6, 1.14.4 >Reporter: FanJia >Priority: Minor > Attachments: image-2022-04-15-11-35-19-712.png > > > !image-2022-04-15-11-35-19-712.png! > malformed text need to be fix. -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Commented] (FLINK-27218) Serializer in OperatorState has not been updated when new Serializers are NOT incompatible

[

https://issues.apache.org/jira/browse/FLINK-27218?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17522634#comment-17522634

]

Yun Tang commented on FLINK-27218:

--

[~mayuehappy] I think you're right, this would affect the correctness when

executing copy during snapshot. Do you like to take this ticket to fix this bug?

> Serializer in OperatorState has not been updated when new Serializers are NOT

> incompatible

> --

>

> Key: FLINK-27218

> URL: https://issues.apache.org/jira/browse/FLINK-27218

> Project: Flink

> Issue Type: Bug

> Components: Runtime / State Backends

>Affects Versions: 1.15.1

>Reporter: Yue Ma

>Priority: Major

> Attachments: image-2022-04-13-14-50-10-921.png

>

>

> OperatorState such as *BroadcastState* or *PartitionableListState* can only

> be constructed via {*}DefaultOperatorStateBackend{*}. But when

> *BroadcastState* or *PartitionableListState* Serializer changes after we

> restart the job , it seems to have the following problems .

> As an example, we can see how PartitionableListState is initialized.

> First, RestoreOperation will construct a restored PartitionableListState

> based on the information in the snapshot.

> Then StateMetaInfo in partitionableListState will be updated as the

> following code

> {code:java}

> TypeSerializerSchemaCompatibility stateCompatibility =

>

> restoredPartitionableListStateMetaInfo.updatePartitionStateSerializer(newPartitionStateSerializer);

> partitionableListState.setStateMetaInfo(restoredPartitionableListStateMetaInfo);{code}

> The main problem is that there is also an *internalListCopySerializer* in

> *PartitionableListState* that is built using the previous Serializer and it

> has not been updated.

> Therefore, when we update the StateMetaInfo, the *internalListCopySerializer*

> also needs to be updated.

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Commented] (FLINK-27257) Flink kubernetes operator triggers savepoint failed because of not all tasks running

[

https://issues.apache.org/jira/browse/FLINK-27257?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17522632#comment-17522632

]

Yang Wang commented on FLINK-27257:

---

The expected behavior is the subsequent reconciliation should trigger the

savepoint again.

> Flink kubernetes operator triggers savepoint failed because of not all tasks

> running

>

>

> Key: FLINK-27257

> URL: https://issues.apache.org/jira/browse/FLINK-27257

> Project: Flink

> Issue Type: Bug

> Components: Kubernetes Operator

>Reporter: Yang Wang

>Priority: Major

>

> {code:java}

> 2022-04-15 02:38:56,551 o.a.f.k.o.s.FlinkService [INFO

> ][default/flink-example-statemachine] Fetching savepoint result with

> triggerId: 182d7f176496856d7b33fe2f3767da18

> 2022-04-15 02:38:56,690 o.a.f.k.o.s.FlinkService

> [ERROR][default/flink-example-statemachine] Savepoint error

> org.apache.flink.runtime.checkpoint.CheckpointException: Checkpoint

> triggering task Source: Custom Source (1/2) of job

> is not being executed at the moment.

> Aborting checkpoint. Failure reason: Not all required tasks are currently

> running.

> at

> org.apache.flink.runtime.checkpoint.DefaultCheckpointPlanCalculator.checkTasksStarted(DefaultCheckpointPlanCalculator.java:143)

> at

> org.apache.flink.runtime.checkpoint.DefaultCheckpointPlanCalculator.lambda$calculateCheckpointPlan$1(DefaultCheckpointPlanCalculator.java:105)

> at

> java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1604)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.lambda$handleRunAsync$4(AkkaRpcActor.java:455)

> at

> org.apache.flink.runtime.concurrent.akka.ClassLoadingUtils.runWithContextClassLoader(ClassLoadingUtils.java:68)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRunAsync(AkkaRpcActor.java:455)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:213)

> at

> org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:78)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:163)

> at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:24)

> at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:20)

> at scala.PartialFunction.applyOrElse(PartialFunction.scala:123)

> at scala.PartialFunction.applyOrElse$(PartialFunction.scala:122)

> at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:20)

> at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171)

> at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172)

> at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172)

> at akka.actor.Actor.aroundReceive(Actor.scala:537)

> at akka.actor.Actor.aroundReceive$(Actor.scala:535)

> at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:220)

> at akka.actor.ActorCell.receiveMessage(ActorCell.scala:580)

> at akka.actor.ActorCell.invoke(ActorCell.scala:548)

> at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:270)

> at akka.dispatch.Mailbox.run(Mailbox.scala:231)

> at akka.dispatch.Mailbox.exec(Mailbox.scala:243)

> at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)

> at

> java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1056)

> at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1692)

> at

> java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:175)

> 2022-04-15 02:38:56,693 o.a.f.k.o.o.SavepointObserver

> [ERROR][default/flink-example-statemachine] Checkpoint triggering task

> Source: Custom Source (1/2) of job is not

> being executed at the moment. Aborting checkpoint. Failure reason: Not all

> required tasks are currently running. {code}

> How to reproduce?

> Update arbitrary fields(e.g. parallelism) along with

> {{{}savepointTriggerNonce{}}}.

>

> The root cause might be the running state return by

> {{ClusterClient#listJobs()}} does not mean all the tasks are running.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] (FLINK-27257) Flink kubernetes operator triggers savepoint failed because of not all tasks running

[ https://issues.apache.org/jira/browse/FLINK-27257 ]

Yang Wang deleted comment on FLINK-27257:

---

was (Author: fly_in_gis):

It is also strange that the subsequent reconciliation does not trigger the

savepoint again. Otherwise, this should not be a problem.

> Flink kubernetes operator triggers savepoint failed because of not all tasks

> running

>

>

> Key: FLINK-27257

> URL: https://issues.apache.org/jira/browse/FLINK-27257

> Project: Flink

> Issue Type: Bug

> Components: Kubernetes Operator

>Reporter: Yang Wang

>Priority: Major

>

> {code:java}

> 2022-04-15 02:38:56,551 o.a.f.k.o.s.FlinkService [INFO

> ][default/flink-example-statemachine] Fetching savepoint result with

> triggerId: 182d7f176496856d7b33fe2f3767da18

> 2022-04-15 02:38:56,690 o.a.f.k.o.s.FlinkService

> [ERROR][default/flink-example-statemachine] Savepoint error

> org.apache.flink.runtime.checkpoint.CheckpointException: Checkpoint

> triggering task Source: Custom Source (1/2) of job

> is not being executed at the moment.

> Aborting checkpoint. Failure reason: Not all required tasks are currently

> running.

> at

> org.apache.flink.runtime.checkpoint.DefaultCheckpointPlanCalculator.checkTasksStarted(DefaultCheckpointPlanCalculator.java:143)

> at

> org.apache.flink.runtime.checkpoint.DefaultCheckpointPlanCalculator.lambda$calculateCheckpointPlan$1(DefaultCheckpointPlanCalculator.java:105)

> at

> java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1604)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.lambda$handleRunAsync$4(AkkaRpcActor.java:455)

> at

> org.apache.flink.runtime.concurrent.akka.ClassLoadingUtils.runWithContextClassLoader(ClassLoadingUtils.java:68)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRunAsync(AkkaRpcActor.java:455)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:213)

> at

> org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:78)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:163)

> at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:24)

> at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:20)

> at scala.PartialFunction.applyOrElse(PartialFunction.scala:123)

> at scala.PartialFunction.applyOrElse$(PartialFunction.scala:122)

> at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:20)

> at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171)

> at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172)

> at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172)

> at akka.actor.Actor.aroundReceive(Actor.scala:537)

> at akka.actor.Actor.aroundReceive$(Actor.scala:535)

> at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:220)

> at akka.actor.ActorCell.receiveMessage(ActorCell.scala:580)

> at akka.actor.ActorCell.invoke(ActorCell.scala:548)

> at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:270)

> at akka.dispatch.Mailbox.run(Mailbox.scala:231)

> at akka.dispatch.Mailbox.exec(Mailbox.scala:243)

> at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)

> at

> java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1056)

> at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1692)

> at

> java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:175)

> 2022-04-15 02:38:56,693 o.a.f.k.o.o.SavepointObserver

> [ERROR][default/flink-example-statemachine] Checkpoint triggering task

> Source: Custom Source (1/2) of job is not

> being executed at the moment. Aborting checkpoint. Failure reason: Not all

> required tasks are currently running. {code}

> How to reproduce?

> Update arbitrary fields(e.g. parallelism) along with

> {{{}savepointTriggerNonce{}}}.

>

> The root cause might be the running state return by

> {{ClusterClient#listJobs()}} does not mean all the tasks are running.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [flink] liuzhuang2017 commented on pull request #19483: [hotfix][docs-zh] Fix "Google Cloud PubSub" Chinese page under "DataStream Connectors"

liuzhuang2017 commented on PR #19483: URL: https://github.com/apache/flink/pull/19483#issuecomment-1099812887 @wuchong ,Thanks for your review. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (FLINK-27236) No task slot allocated for job in larege-scale job

[

https://issues.apache.org/jira/browse/FLINK-27236?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17522631#comment-17522631

]

Lijie Wang edited comment on FLINK-27236 at 4/15/22 3:15 AM:

-

[~freeke]

-> At least, the previous execution shouldn't be still allocated to the tm .

Honestly, I have no idea about how to implement it. I think it is inevitable

that the slot states in RM/TM and JM will be inconsistent for a short period of

time, because they are different akka actors and can only synchronize

information via RPC.

In a streaming job (only one pipeline region), all task deployments are

performed in the same RPC Call (but this RPC time may be very long due to slow

deployement, for example 30s). That is, during this period, if RM/TM wants to

notify JM of slot states via RPC, JM can only handle it after 30s.

Unfortunately, at that point the task deployments have completed(and

encountered the mentioned problem).

Do you have any idea about that?

was (Author: wanglijie95):

[~freeke]

-> At least, the previous execution shouldn't be still allocated to the tm .

Honestly, I have no idea about how to implement it. I think it is inevitable

that the slot states in RM/TM and JM will be inconsistent for a short period of

time, because they are different akka actors and can only synchronize

information via RPC.

In a streaming job (only one pipeline region), all task deployments are

performed in the same RPC Call (but this RPC time may be very long due to slow

deployement, for example 30s). That is, during this period, if RM/TM wants to

notify JM of slot states via RPC, JM can only handle it after 30s.

Unfortunately, at that point the task deployments have completed(and

encountered the mentioned problem).

Do you have any idea about this?

> No task slot allocated for job in larege-scale job

> --

>

> Key: FLINK-27236

> URL: https://issues.apache.org/jira/browse/FLINK-27236

> Project: Flink

> Issue Type: Bug

>Affects Versions: 1.13.3

>Reporter: yanpengshi

>Priority: Major

> Attachments: jobmanager.log.26, taskmanager.log, topology.png

>

> Original Estimate: 444h

> Remaining Estimate: 444h

>

> Hey,

>

> We run a large-scale flink job containing six vertices with 3k parallelism.

> The Topology is shown below.

> !topology.png!

> We meets the following exception in jobmanager.log:[^jobmanager.log.26]

> {code:java}

> 2022-03-02 08:01:16,601 INFO [1998]

> [org.apache.flink.runtime.executiongraph.Execution.transitionState(Execution.java:1446)]

> - Source: tdbank_exposure_wx - Flat Map (772/3000)

> (6cd18d4ead1887a4e19fd3f337a6f4f8) switched from DEPLOYING to FAILED on

> container_e03_1639558254334_10048_01_004716 @ 11.104.77.40

> (dataPort=39313).java.util.concurrent.CompletionException:

> org.apache.flink.runtime.taskexecutor.exceptions.TaskSubmissionException: No

> task slot allocated for job ID ed780087 and

> allocation ID beb058d837c09e8d5a4a6aaf2426ca99. {code}

>

> In the taskmanager.log [^taskmanager.log], the slot is freed due to timeout

> and the taskmanager receives the new allocated request. By increasing the

> value of key: taskmanager.slot.timeout, we can avoid this exception

> temporarily.

> Here are some our guesses:

> # When the job is scheduled, the slot and execution have been bound, and

> then the task is deployed to the corresponding taskmanager.

> # The slot is released after the idle interval times out and notify the

> ResouceManager the slot free. Thus, the resourceManager will assign other

> request to the slot.

> # The task is deployed to taskmanager according the previous correspondence

>

> The key problems are :

> # When the slot is free, the execution is not unassigned from the slot;

> # The slot state is not consistent in JobMaster and ResourceManager

>

> Has anyone else encountered this problem? When the slot is freed, how can we

> unassign the previous bounded execution? Or we need to update the resource

> address of the execution. @[~zhuzh] @[~wanglijie95]

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Commented] (FLINK-27236) No task slot allocated for job in larege-scale job

[

https://issues.apache.org/jira/browse/FLINK-27236?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17522631#comment-17522631

]

Lijie Wang commented on FLINK-27236:

[~freeke]

-> At least, the previous execution shouldn't be still allocated to the tm .

Honestly, I have no idea about how to implement it. I think it is inevitable

that the slot states in RM/TM and JM will be inconsistent for a short period of

time, because they are different akka actors and can only synchronize

information via RPC.

In a streaming job (only one pipeline region), all task deployments are

performed in the same RPC Call (but this RPC time may be very long due to slow

deployement, for example 30s). That is, during this period, if RM/TM wants to

notify JM of slot states via RPC, JM can only handle it after 30s.

Unfortunately, at that point the task deployments have completed(and

encountered the mentioned problem).

Do you have any idea about this?

> No task slot allocated for job in larege-scale job

> --

>

> Key: FLINK-27236

> URL: https://issues.apache.org/jira/browse/FLINK-27236

> Project: Flink

> Issue Type: Bug

>Affects Versions: 1.13.3

>Reporter: yanpengshi

>Priority: Major

> Attachments: jobmanager.log.26, taskmanager.log, topology.png

>

> Original Estimate: 444h

> Remaining Estimate: 444h

>

> Hey,

>

> We run a large-scale flink job containing six vertices with 3k parallelism.

> The Topology is shown below.

> !topology.png!

> We meets the following exception in jobmanager.log:[^jobmanager.log.26]

> {code:java}

> 2022-03-02 08:01:16,601 INFO [1998]

> [org.apache.flink.runtime.executiongraph.Execution.transitionState(Execution.java:1446)]

> - Source: tdbank_exposure_wx - Flat Map (772/3000)

> (6cd18d4ead1887a4e19fd3f337a6f4f8) switched from DEPLOYING to FAILED on

> container_e03_1639558254334_10048_01_004716 @ 11.104.77.40

> (dataPort=39313).java.util.concurrent.CompletionException:

> org.apache.flink.runtime.taskexecutor.exceptions.TaskSubmissionException: No

> task slot allocated for job ID ed780087 and

> allocation ID beb058d837c09e8d5a4a6aaf2426ca99. {code}

>

> In the taskmanager.log [^taskmanager.log], the slot is freed due to timeout

> and the taskmanager receives the new allocated request. By increasing the

> value of key: taskmanager.slot.timeout, we can avoid this exception

> temporarily.

> Here are some our guesses:

> # When the job is scheduled, the slot and execution have been bound, and

> then the task is deployed to the corresponding taskmanager.

> # The slot is released after the idle interval times out and notify the

> ResouceManager the slot free. Thus, the resourceManager will assign other

> request to the slot.

> # The task is deployed to taskmanager according the previous correspondence

>

> The key problems are :

> # When the slot is free, the execution is not unassigned from the slot;

> # The slot state is not consistent in JobMaster and ResourceManager

>

> Has anyone else encountered this problem? When the slot is freed, how can we

> unassign the previous bounded execution? Or we need to update the resource

> address of the execution. @[~zhuzh] @[~wanglijie95]

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [flink] flinkbot commented on pull request #19483: [hotfix][docs-zh] Fix "Google Cloud PubSub" Chinese page under "DataStream Connectors"

flinkbot commented on PR #19483: URL: https://github.com/apache/flink/pull/19483#issuecomment-1099811000 ## CI report: * deb4187089fc00f51f320a4983d00584e802d779 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] wuchong commented on pull request #19483: [hotfix][docs-zh] Fix "Google Cloud PubSub" Chinese page under "DataStream Connectors"

wuchong commented on PR #19483: URL: https://github.com/apache/flink/pull/19483#issuecomment-1099810162 I will merge it when the CI passed. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

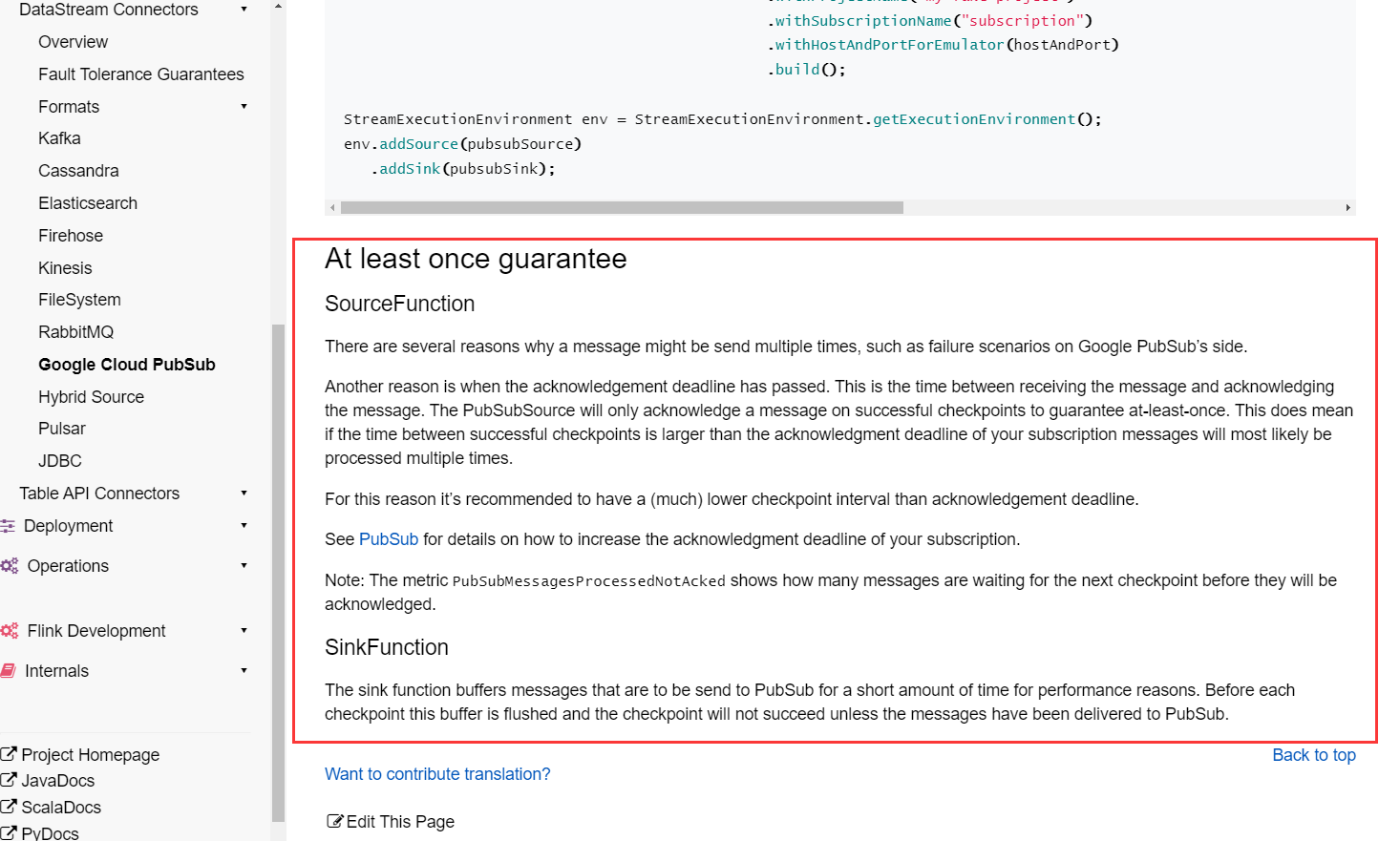

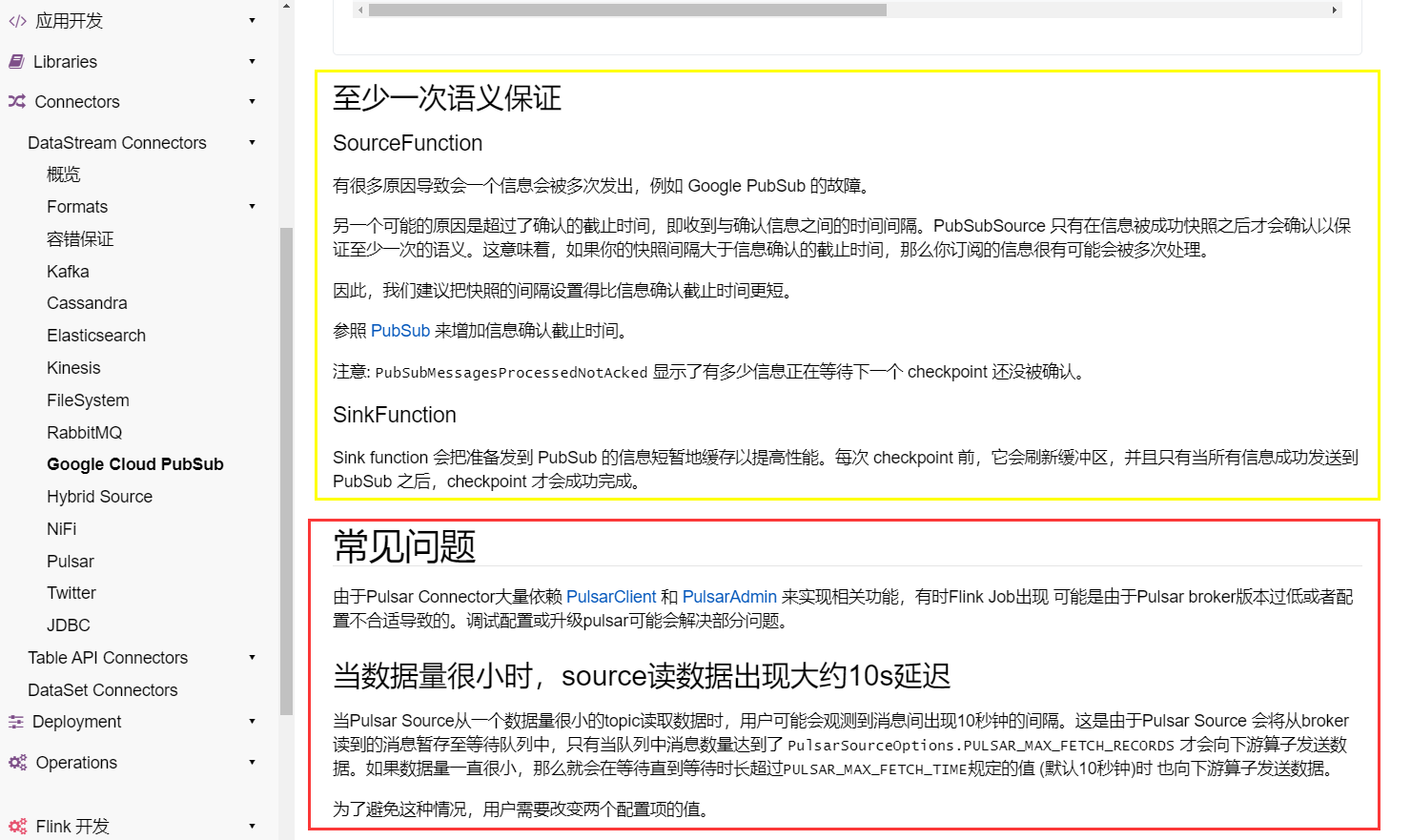

[GitHub] [flink] liuzhuang2017 opened a new pull request, #19483: [hotfix][docs-zh] Fix "Google Cloud PubSub" Chinese page under "DataStream Connectors"

liuzhuang2017 opened a new pull request, #19483: URL: https://github.com/apache/flink/pull/19483 ## What is the purpose of the change # **This is the English document:**  # **This is the Chinese document:**  We can see from the above picture that compared with the English document, the Chinese document has a part that is irrelevant to the content. ## Brief change log Fix "Google Cloud PubSub" Chinese page under "DataStream Connectors" ## Verifying this change This change is a trivial rework / code cleanup without any test coverage. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-27055) java.lang.ArrayIndexOutOfBoundsException in BinarySegmentUtils

[

https://issues.apache.org/jira/browse/FLINK-27055?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17522629#comment-17522629

]

Kenny Ma commented on FLINK-27055:

--

[~jark] do you have any suggestion? Upgrading to 1.14 would require major

refactoring in our application and it is not guaranteed that the issue doesn't

exist in the newer version.

> java.lang.ArrayIndexOutOfBoundsException in BinarySegmentUtils

> --

>

> Key: FLINK-27055

> URL: https://issues.apache.org/jira/browse/FLINK-27055

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Runtime

>Affects Versions: 1.12.0

>Reporter: Kenny Ma

>Priority: Major

>

> I am using SQL for my streaming job and the job keeps failing with the

> java.lang.ArrayIndexOutOfBoundsException thrown in BinarySegmentUtils.

> Stacktrace:

>

> {code:java}

> java.lang.ArrayIndexOutOfBoundsException: Index 1 out of bounds for length 1

> at

> org.apache.flink.table.data.binary.BinarySegmentUtils.getLongSlowly(BinarySegmentUtils.java:773)

> at

> org.apache.flink.table.data.binary.BinarySegmentUtils.getLongMultiSegments(BinarySegmentUtils.java:763)

> at

> org.apache.flink.table.data.binary.BinarySegmentUtils.getLong(BinarySegmentUtils.java:751)

> at

> org.apache.flink.table.data.binary.BinaryArrayData.getString(BinaryArrayData.java:210)

> at

> org.apache.flink.table.data.ArrayData.lambda$createElementGetter$95d74a6c$1(ArrayData.java:250)

> at

> org.apache.flink.table.data.conversion.MapMapConverter.toExternal(MapMapConverter.java:79)

> at StreamExecCalc$11860.processElement(Unknown Source)

> at

> org.apache.flink.streaming.runtime.tasks.ChainingOutput.pushToOperator(ChainingOutput.java:112)

> at

> org.apache.flink.streaming.runtime.tasks.ChainingOutput.collect(ChainingOutput.java:93)

> at

> org.apache.flink.streaming.runtime.tasks.ChainingOutput.collect(ChainingOutput.java:39)

> at

> org.apache.flink.streaming.api.operators.CountingOutput.collect(CountingOutput.java:52)

> at

> org.apache.flink.streaming.api.operators.CountingOutput.collect(CountingOutput.java:30)

> at

> org.apache.flink.streaming.api.operators.TimestampedCollector.collect(TimestampedCollector.java:53)

> at

> org.apache.flink.table.runtime.operators.window.AggregateWindowOperator.collect(AggregateWindowOperator.java:183)

> at

> org.apache.flink.table.runtime.operators.window.AggregateWindowOperator.emitWindowResult(AggregateWindowOperator.java:176)

> at

> org.apache.flink.table.runtime.operators.window.WindowOperator.onEventTime(WindowOperator.java:384)

> at

> org.apache.flink.streaming.api.operators.InternalTimerServiceImpl.advanceWatermark(InternalTimerServiceImpl.java:276)

> at

> org.apache.flink.streaming.api.operators.InternalTimeServiceManagerImpl.advanceWatermark(InternalTimeServiceManagerImpl.java:183)

> at

> org.apache.flink.streaming.api.operators.AbstractStreamOperator.processWatermark(AbstractStreamOperator.java:600)

> at

> org.apache.flink.streaming.runtime.tasks.OneInputStreamTask$StreamTaskNetworkOutput.emitWatermark(OneInputStreamTask.java:199)

> at

> org.apache.flink.streaming.runtime.streamstatus.StatusWatermarkValve.findAndOutputNewMinWatermarkAcrossAlignedChannels(StatusWatermarkValve.java:173)

> at

> org.apache.flink.streaming.runtime.streamstatus.StatusWatermarkValve.inputWatermark(StatusWatermarkValve.java:95)

> at

> org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.processElement(StreamTaskNetworkInput.java:181)

> at

> org.apache.flink.streaming.runtime.io.StreamTaskNetworkInput.emitNext(StreamTaskNetworkInput.java:152)

> at

> org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:67)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:372)

> at

> org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:186)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:575)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:539)

> at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:722)

> at org.apache.flink.runtime.taskmanager.Task.run(Task.java:547)

> {code}

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Commented] (FLINK-27257) Flink kubernetes operator triggers savepoint failed because of not all tasks running

[

https://issues.apache.org/jira/browse/FLINK-27257?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17522628#comment-17522628

]

Yang Wang commented on FLINK-27257:

---

It is also strange that the subsequent reconciliation does not trigger the

savepoint again. Otherwise, this should not be a problem.

> Flink kubernetes operator triggers savepoint failed because of not all tasks

> running

>

>

> Key: FLINK-27257

> URL: https://issues.apache.org/jira/browse/FLINK-27257

> Project: Flink

> Issue Type: Bug

> Components: Kubernetes Operator

>Reporter: Yang Wang

>Priority: Major

>

> {code:java}

> 2022-04-15 02:38:56,551 o.a.f.k.o.s.FlinkService [INFO

> ][default/flink-example-statemachine] Fetching savepoint result with

> triggerId: 182d7f176496856d7b33fe2f3767da18

> 2022-04-15 02:38:56,690 o.a.f.k.o.s.FlinkService

> [ERROR][default/flink-example-statemachine] Savepoint error

> org.apache.flink.runtime.checkpoint.CheckpointException: Checkpoint

> triggering task Source: Custom Source (1/2) of job

> is not being executed at the moment.

> Aborting checkpoint. Failure reason: Not all required tasks are currently

> running.

> at

> org.apache.flink.runtime.checkpoint.DefaultCheckpointPlanCalculator.checkTasksStarted(DefaultCheckpointPlanCalculator.java:143)

> at

> org.apache.flink.runtime.checkpoint.DefaultCheckpointPlanCalculator.lambda$calculateCheckpointPlan$1(DefaultCheckpointPlanCalculator.java:105)

> at

> java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1604)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.lambda$handleRunAsync$4(AkkaRpcActor.java:455)

> at

> org.apache.flink.runtime.concurrent.akka.ClassLoadingUtils.runWithContextClassLoader(ClassLoadingUtils.java:68)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRunAsync(AkkaRpcActor.java:455)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:213)

> at

> org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:78)

> at

> org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:163)

> at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:24)

> at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:20)

> at scala.PartialFunction.applyOrElse(PartialFunction.scala:123)

> at scala.PartialFunction.applyOrElse$(PartialFunction.scala:122)

> at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:20)

> at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171)

> at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172)

> at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172)

> at akka.actor.Actor.aroundReceive(Actor.scala:537)

> at akka.actor.Actor.aroundReceive$(Actor.scala:535)

> at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:220)

> at akka.actor.ActorCell.receiveMessage(ActorCell.scala:580)

> at akka.actor.ActorCell.invoke(ActorCell.scala:548)

> at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:270)

> at akka.dispatch.Mailbox.run(Mailbox.scala:231)

> at akka.dispatch.Mailbox.exec(Mailbox.scala:243)

> at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)

> at

> java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1056)

> at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1692)

> at

> java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:175)

> 2022-04-15 02:38:56,693 o.a.f.k.o.o.SavepointObserver

> [ERROR][default/flink-example-statemachine] Checkpoint triggering task

> Source: Custom Source (1/2) of job is not

> being executed at the moment. Aborting checkpoint. Failure reason: Not all

> required tasks are currently running. {code}

> How to reproduce?

> Update arbitrary fields(e.g. parallelism) along with

> {{{}savepointTriggerNonce{}}}.

>

> The root cause might be the running state return by

> {{ClusterClient#listJobs()}} does not mean all the tasks are running.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Commented] (FLINK-27247) ScalarOperatorGens.numericCasting is not compatible with legacy behavior

[

https://issues.apache.org/jira/browse/FLINK-27247?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17522626#comment-17522626

]

xuyang commented on FLINK-27247:

Hi, [~matriv], I think currently this is not the problem with casting. Because

this part of code gen doesn't not care of the nullable with the type and other

part of code gen will avoid the nullable exists. For example, you can see the

following code generated:

{code:java}

// the result rand(...) will be null only if the arg in rand is nullable, like

"rand(cast (null as int))"

// so if the sql is "rand() + 1", the generated code is:

isNull$3 = false;

result$4 = random$2.nextDouble();

// and if the sql is "rand(cast(null as int))":

isNull$3 = true;

result$4 = -1.0d;

if (!isNull$3) {

result$4 = random$2.nextDouble();

}{code}

This part that i fixed is only about the code : "result$4 =

random$2.nextDouble();" and this should just ignore the nullable between

DOUBLE and DOUBLE NOT NULL. And actually the logic of the legacy code does this

by pre-checking the same type not necessary to cast before casting this

different types.

I strongly agree with you that the logic in casting should throw an exception

if it meets casting a type from nullable to not nullable. But the problem of

this issue is before casting logic.

By the way, I think converting casting logic to different rules is a good

improvement but should not affect the base logic when change the code. You can

see the code before and after rewriting casting rules:

before:

{code:java}

// no casting necessary

if (isInteroperable(operandType, resultType)) {

operandTerm => s"$operandTerm"

}

// decimal to decimal, may have different precision/scale

else if (isDecimal(resultType) && isDecimal(operandType)) {

val dt = resultType.asInstanceOf[DecimalType]

operandTerm =>

s"$DECIMAL_UTIL.castToDecimal($operandTerm, ${dt.getPrecision},

${dt.getScale})"

}

// non_decimal_numeric to decimal

else if ...{code}

after:

{code:java}

// All numeric rules are assumed to be instance of

AbstractExpressionCodeGeneratorCastRule

val rule = CastRuleProvider.resolve(operandType, resultType)

rule match {

case codeGeneratorCastRule: ExpressionCodeGeneratorCastRule[_, _] =>

operandTerm =>

codeGeneratorCastRule.generateExpression(

toCodegenCastContext(ctx),

operandTerm,

operandType,

resultType

)

case _ =>

throw new CodeGenException(s"Unsupported casting from $operandType to

$resultType.")

} {code}

Looking forward to your reply :)

> ScalarOperatorGens.numericCasting is not compatible with legacy behavior

>

>

> Key: FLINK-27247

> URL: https://issues.apache.org/jira/browse/FLINK-27247

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Reporter: xuyang

>Priority: Minor

> Labels: pull-request-available

>

> Add the following test cases in ScalarFunctionsTest:

> {code:java}

> // code placeholder

> @Test

> def test(): Unit ={

> testSqlApi("rand(1) + 1","")

> } {code}

> it will throw the following exception:

> {code:java}

> // code placeholder

> org.apache.flink.table.planner.codegen.CodeGenException: Unsupported casting

> from DOUBLE to DOUBLE NOT NULL.

> at

> org.apache.flink.table.planner.codegen.calls.ScalarOperatorGens$.numericCasting(ScalarOperatorGens.scala:1734)

> at

> org.apache.flink.table.planner.codegen.calls.ScalarOperatorGens$.generateBinaryArithmeticOperator(ScalarOperatorGens.scala:85)

> at

> org.apache.flink.table.planner.codegen.ExprCodeGenerator.generateCallExpression(ExprCodeGenerator.scala:507)

> at

> org.apache.flink.table.planner.codegen.ExprCodeGenerator.visitCall(ExprCodeGenerator.scala:481)

> at

> org.apache.flink.table.planner.codegen.ExprCodeGenerator.visitCall(ExprCodeGenerator.scala:57)

> at org.apache.calcite.rex.RexCall.accept(RexCall.java:174)

> at

> org.apache.flink.table.planner.codegen.ExprCodeGenerator.$anonfun$visitCall$1(ExprCodeGenerator.scala:478)

> at

> scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:233)

> at

> scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:58)

> at

> scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:51)

> at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:47)

> at scala.collection.TraversableLike.map(TraversableLike.scala:233)

> at scala.collection.TraversableLike.map$(TraversableLike.scala:226)

> at scala.collection.AbstractTraversable.map(Traversable.scala:104)

> at

> org.apache.flink.table.planner.codegen.ExprCodeGenerator.visitCall(ExprCodeGenerator.scala:469)

> ... {code}

> This is because in ScalarOperatorGens#numericCasting, FLINK-24779

[jira] [Commented] (FLINK-27121) Translate "Configuration#overview" paragraph and the code example in "Application Development > Table API & SQL" to Chinese

[

https://issues.apache.org/jira/browse/FLINK-27121?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17522625#comment-17522625

]

LEI ZHOU commented on FLINK-27121:

--

[~martijnvisser] ok,thanks very much!!!

> Translate "Configuration#overview" paragraph and the code example in

> "Application Development > Table API & SQL" to Chinese

> ---

>

> Key: FLINK-27121

> URL: https://issues.apache.org/jira/browse/FLINK-27121

> Project: Flink

> Issue Type: Improvement

> Components: Documentation

>Reporter: Marios Trivyzas

>Assignee: LEI ZHOU

>Priority: Major

> Labels: chinese-translation, pull-request-available

>

> After [https://github.com/apache/flink/pull/19387

> |https://github.com/apache/flink/pull/19387] is merged, we need to update the

> translation for

> [https://nightlies.apache.org/flink/flink-docs-master/zh/docs/dev/table/config/#overview]

> The markdown file is located in

> {noformat}

> flink/docs/content.zh/docs/dev/table/config.md{noformat}

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [flink] flinkbot commented on pull request #19482: [FLINK-27244][hive] Support read sub-directories in partition directory with Hive tables

flinkbot commented on PR #19482: URL: https://github.com/apache/flink/pull/19482#issuecomment-1099802626 ## CI report: * e9b2b761abf6aee6415b4daec7374340015c0426 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-24932) Frocksdb cannot run on Apple M1

[

https://issues.apache.org/jira/browse/FLINK-24932?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17522624#comment-17522624

]

Yun Tang commented on FLINK-24932:

--

[~maver1ck] Thanks for your information. We actually always watch the progress.

However, since Flink community leverage FRocksDB which is based on the original

RocksDB, we need some time to bump the RocksDB version.

> Frocksdb cannot run on Apple M1

> ---

>

> Key: FLINK-24932

> URL: https://issues.apache.org/jira/browse/FLINK-24932

> Project: Flink

> Issue Type: Sub-task

> Components: Runtime / State Backends

>Reporter: Yun Tang

>Priority: Minor

>

> After we bump up RocksDB version to 6.20.3, we support to run RocksDB on

> linux arm cluster. However, according to the feedback from Robert, Apple M1

> machines cannot run FRocksDB yet:

> {code:java}

> java.lang.Exception: Exception while creating StreamOperatorStateContext.

> at

> org.apache.flink.streaming.api.operators.StreamTaskStateInitializerImpl.streamOperatorStateContext(StreamTaskStateInitializerImpl.java:255)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.streaming.api.operators.AbstractStreamOperator.initializeState(AbstractStreamOperator.java:268)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.streaming.runtime.tasks.RegularOperatorChain.initializeStateAndOpenOperators(RegularOperatorChain.java:109)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.restoreGates(StreamTask.java:711)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.streaming.runtime.tasks.StreamTaskActionExecutor$1.call(StreamTaskActionExecutor.java:55)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.restoreInternal(StreamTask.java:687)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.restore(StreamTask.java:654)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.runtime.taskmanager.Task.runWithSystemExitMonitoring(Task.java:958)

> ~[flink-runtime-1.14.0.jar:1.14.0]

> at

> org.apache.flink.runtime.taskmanager.Task.restoreAndInvoke(Task.java:927)

> ~[flink-runtime-1.14.0.jar:1.14.0]

> at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:766)

> ~[flink-runtime-1.14.0.jar:1.14.0]

> at org.apache.flink.runtime.taskmanager.Task.run(Task.java:575)

> ~[flink-runtime-1.14.0.jar:1.14.0]

> at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_312]

> Caused by: org.apache.flink.util.FlinkException: Could not restore keyed

> state backend for StreamFlatMap_c21234bcbf1e8eb4c61f1927190efebd_(1/1) from

> any of the 1 provided restore options.

> at

> org.apache.flink.streaming.api.operators.BackendRestorerProcedure.createAndRestore(BackendRestorerProcedure.java:160)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.streaming.api.operators.StreamTaskStateInitializerImpl.keyedStatedBackend(StreamTaskStateInitializerImpl.java:346)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.streaming.api.operators.StreamTaskStateInitializerImpl.streamOperatorStateContext(StreamTaskStateInitializerImpl.java:164)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> ... 11 more

> Caused by: java.io.IOException: Could not load the native RocksDB library

> at

> org.apache.flink.contrib.streaming.state.EmbeddedRocksDBStateBackend.ensureRocksDBIsLoaded(EmbeddedRocksDBStateBackend.java:882)

> ~[flink-statebackend-rocksdb_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.contrib.streaming.state.EmbeddedRocksDBStateBackend.createKeyedStateBackend(EmbeddedRocksDBStateBackend.java:402)

> ~[flink-statebackend-rocksdb_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.contrib.streaming.state.RocksDBStateBackend.createKeyedStateBackend(RocksDBStateBackend.java:345)

> ~[flink-statebackend-rocksdb_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.contrib.streaming.state.RocksDBStateBackend.createKeyedStateBackend(RocksDBStateBackend.java:87)

> ~[flink-statebackend-rocksdb_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.streaming.api.operators.StreamTaskStateInitializerImpl.lambda$keyedStatedBackend$1(StreamTaskStateInitializerImpl.java:329)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.streaming.api.operators.BackendRestorerProcedure.attemptCreateAndRestore(BackendRestorerProcedure.java:168)

> ~[flink-streaming-java_2.11-1.14.0.jar:1.14.0]

> at

> org.apache.flink.streaming.api.operators.BackendRestorerProcedure.createAndRestore(BackendRestorerProcedure.java:135)

>

[jira] [Created] (FLINK-27257) Flink kubernetes operator triggers savepoint failed because of not all tasks running

Yang Wang created FLINK-27257:

-

Summary: Flink kubernetes operator triggers savepoint failed

because of not all tasks running

Key: FLINK-27257

URL: https://issues.apache.org/jira/browse/FLINK-27257

Project: Flink

Issue Type: Bug

Components: Kubernetes Operator

Reporter: Yang Wang

{code:java}

2022-04-15 02:38:56,551 o.a.f.k.o.s.FlinkService [INFO

][default/flink-example-statemachine] Fetching savepoint result with triggerId:

182d7f176496856d7b33fe2f3767da18

2022-04-15 02:38:56,690 o.a.f.k.o.s.FlinkService

[ERROR][default/flink-example-statemachine] Savepoint error

org.apache.flink.runtime.checkpoint.CheckpointException: Checkpoint triggering

task Source: Custom Source (1/2) of job is not

being executed at the moment. Aborting checkpoint. Failure reason: Not all

required tasks are currently running.

at

org.apache.flink.runtime.checkpoint.DefaultCheckpointPlanCalculator.checkTasksStarted(DefaultCheckpointPlanCalculator.java:143)

at

org.apache.flink.runtime.checkpoint.DefaultCheckpointPlanCalculator.lambda$calculateCheckpointPlan$1(DefaultCheckpointPlanCalculator.java:105)

at

java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1604)

at

org.apache.flink.runtime.rpc.akka.AkkaRpcActor.lambda$handleRunAsync$4(AkkaRpcActor.java:455)

at

org.apache.flink.runtime.concurrent.akka.ClassLoadingUtils.runWithContextClassLoader(ClassLoadingUtils.java:68)

at

org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRunAsync(AkkaRpcActor.java:455)

at

org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:213)

at

org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:78)

at

org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:163)

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:24)

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:20)

at scala.PartialFunction.applyOrElse(PartialFunction.scala:123)

at scala.PartialFunction.applyOrElse$(PartialFunction.scala:122)

at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:20)

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171)

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172)

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172)

at akka.actor.Actor.aroundReceive(Actor.scala:537)

at akka.actor.Actor.aroundReceive$(Actor.scala:535)

at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:220)

at akka.actor.ActorCell.receiveMessage(ActorCell.scala:580)

at akka.actor.ActorCell.invoke(ActorCell.scala:548)

at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:270)

at akka.dispatch.Mailbox.run(Mailbox.scala:231)

at akka.dispatch.Mailbox.exec(Mailbox.scala:243)

at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)

at

java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1056)

at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1692)

at

java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:175)

2022-04-15 02:38:56,693 o.a.f.k.o.o.SavepointObserver

[ERROR][default/flink-example-statemachine] Checkpoint triggering task Source:

Custom Source (1/2) of job is not being

executed at the moment. Aborting checkpoint. Failure reason: Not all required

tasks are currently running. {code}

How to reproduce?

Update arbitrary fields(e.g. parallelism) along with

{{{}savepointTriggerNonce{}}}.

The root cause might be the running state return by

{{ClusterClient#listJobs()}} does not mean all the tasks are running.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [flink-kubernetes-operator] tweise commented on a diff in pull request #165: [FLINK-26140] Support rollback strategies

tweise commented on code in PR #165:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/165#discussion_r851023715

##

flink-kubernetes-operator/src/main/java/org/apache/flink/kubernetes/operator/observer/deployment/ApplicationObserver.java:

##

@@ -70,23 +70,28 @@ protected Optional updateJobStatus(

}

@Override

-public void observeIfClusterReady(

-FlinkDeployment flinkApp, Context context, Configuration

lastValidatedConfig) {

+protected boolean observeFlinkCluster(

+FlinkDeployment flinkApp, Context context, Configuration

deployedConfig) {

+

+JobStatus jobStatus = flinkApp.getStatus().getJobStatus();

+

boolean jobFound =

jobStatusObserver.observe(

-flinkApp.getStatus().getJobStatus(),

-lastValidatedConfig,

-new ApplicationObserverContext(flinkApp, context,

lastValidatedConfig));

+jobStatus,

+deployedConfig,

+new ApplicationObserverContext(flinkApp, context,

deployedConfig));

if (jobFound) {

savepointObserver

-.observe(

-

flinkApp.getStatus().getJobStatus().getSavepointInfo(),

-flinkApp.getStatus().getJobStatus().getJobId(),

-lastValidatedConfig)

+.observe(jobStatus.getSavepointInfo(),

jobStatus.getJobId(), deployedConfig)

.ifPresent(

error ->

ReconciliationUtils.updateForReconciliationError(

flinkApp, error));

}

+return isJobReady(jobStatus);

+}

+

+private boolean isJobReady(JobStatus jobStatus) {

+return

org.apache.flink.api.common.JobStatus.RUNNING.name().equals(jobStatus.getState());

Review Comment:

Can we add a TODO here? RUNNING doesn't mean that the job is executing as

expected, even a job that flip flops may intermittently have RUNNING status.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] snailHumming commented on pull request #19401: [FLINK-25716][docs-zh] Translate "Streaming Concepts" page of "Applic…

snailHumming commented on PR #19401: URL: https://github.com/apache/flink/pull/19401#issuecomment-1099800909 Should I create a new PR for applying these changes to master branch? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-27244) Support subdirectories with Hive tables

[

https://issues.apache.org/jira/browse/FLINK-27244?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated FLINK-27244:

---

Labels: pull-request-available (was: )

> Support subdirectories with Hive tables

> ---

>

> Key: FLINK-27244

> URL: https://issues.apache.org/jira/browse/FLINK-27244

> Project: Flink

> Issue Type: Sub-task

> Components: Connectors / Hive

>Reporter: luoyuxia

>Priority: Major

> Labels: pull-request-available

>

> Hive support to read recursive directory by setting the property 'set

> mapred.input.dir.recursive=true', and Spark also support [such

> behavior|[https://stackoverflow.com/questions/42026043/how-to-recursively-read-hadoop-files-from-directory-using-spark]].

> For normal case, it won't happed for reading recursive directory. But it may

> happen in the following case:

> I have a paritioned table `fact_tz` with partition day/hour

> {code:java}

> CREATE TABLE fact_tz(x int) PARTITIONED BY (ds STRING, hr STRING) {code}

> Then I want to create an external table `fact_daily` refering to `fact_tz`,

> but with a coarse-grained partition day.

> {code:java}

> create external table fact_daily(x int) PARTITIONED BY (ds STRING) location

> 'fact_tz_localtion' ;

> ALTER TABLE fact_daily ADD PARTITION (ds='1') location

> 'fact_tz_localtion/ds=1'{code}

> But it wll throw exception "Not a file: fact_tz_localtion/ds=1" when try to

> query this table `fact_daily` for it's the first level of the origin

> partition and is actually a directory .

>

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [flink] luoyuxia opened a new pull request, #19482: [FLINK-27244][hive] Support read sub-directories in partition directory with Hive tables

luoyuxia opened a new pull request, #19482: URL: https://github.com/apache/flink/pull/19482 ## What is the purpose of the change Support read sub-directories in partition directory with Hive tables ## Brief change log Add an option to read partition directory recursively with Hive tables. ## Verifying this change Newly test [...link-connector-hive/src/test/java/org/apache/flink/connectors/hive/HiveDialectITCase.java](https://github.com/apache/flink/compare/master...luoyuxia:FLINK-27244?expand=1#diff-748a9fa63f15b3bd3ea5426a5b42179cadee77ecf3359c83f846aedb27d96871)#testTableWithSubDirsInPartitionDir ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): no - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no - The serializers: no - The runtime per-record code paths (performance sensitive): no - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: no - The S3 file system connector: no ## Documentation - Does this pull request introduce a new feature? no - If yes, how is the feature documented? no -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #19481: [FLINK-27256][runtime] Log the root exception in closing the task man…

flinkbot commented on PR #19481: URL: https://github.com/apache/flink/pull/19481#issuecomment-1099795917 ## CI report: * fd30fc5691495b033a82c8289454d16fd9f6107f UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-27256) Log the root exception in closing the task manager connection

[ https://issues.apache.org/jira/browse/FLINK-27256?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-27256: --- Labels: pull-request-available (was: ) > Log the root exception in closing the task manager connection > - > > Key: FLINK-27256 > URL: https://issues.apache.org/jira/browse/FLINK-27256 > Project: Flink > Issue Type: Improvement > Components: Runtime / Coordination >Reporter: Yangze Guo >Assignee: Yangze Guo >Priority: Major > Labels: pull-request-available > Fix For: 1.16.0 > > > When close the task manager connection, we'd better log the root cause of it > for debugging. -- This message was sent by Atlassian Jira (v8.20.1#820001)

[GitHub] [flink] KarmaGYZ opened a new pull request, #19481: [FLINK-27256][runtime] Log the root exception in closing the task man…

KarmaGYZ opened a new pull request, #19481: URL: https://github.com/apache/flink/pull/19481 …ager connection ## What is the purpose of the change Log the root exception in closing the task manager connection for ease of debugging. ## Brief change log Log the root exception in closing the task manager connection. ## Verifying this change This change is a trivial rework / code cleanup without any test coverage. ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): no - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no - The serializers: no - The runtime per-record code paths (performance sensitive): no - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: no - The S3 file system connector: no ## Documentation - Does this pull request introduce a new feature? no - If yes, how is the feature documented? (not applicable -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-27212) Failed to CAST('abcde', VARBINARY)

[

https://issues.apache.org/jira/browse/FLINK-27212?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17522618#comment-17522618

]

Wenlong Lyu commented on FLINK-27212:

-

[~matriv] I think you may have some misunderstanding here. Regarding x'XXX', it

means a hexdecimal

literal(https://dev.mysql.com/doc/refman/8.0/en/hexadecimal-literals.html), it

requires even number of values, the error in calcite means that the literal is

illegal. it is irrelevant to the casting behavior I think.

BTW, we may need a FLIP for such kind of change to collect more feedbacks from

devs and users. I think it is better to keep it the same as former versions,

and make the decision later.

> Failed to CAST('abcde', VARBINARY)

> --

>

> Key: FLINK-27212

> URL: https://issues.apache.org/jira/browse/FLINK-27212

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Runtime

>Affects Versions: 1.16.0

>Reporter: Shengkai Fang

>Assignee: Marios Trivyzas

>Priority: Blocker

> Fix For: 1.16.0

>

>

> Please add test in the CalcITCase

> {code:scala}

> @Test

> def testCalc(): Unit = {

> val sql =

> """

> |SELECT CAST('abcde' AS VARBINARY(6))

> |""".stripMargin

> val result = tEnv.executeSql(sql)

> print(result.getResolvedSchema)

> result.print()

> }

> {code}

> The exception is

> {code:java}

> Caused by: org.apache.flink.table.api.TableException: Odd number of

> characters.

> at

> org.apache.flink.table.utils.EncodingUtils.decodeHex(EncodingUtils.java:203)

> at StreamExecCalc$33.processElement(Unknown Source)

> at

> org.apache.flink.streaming.runtime.tasks.ChainingOutput.pushToOperator(ChainingOutput.java:99)

> at

> org.apache.flink.streaming.runtime.tasks.ChainingOutput.collect(ChainingOutput.java:80)

> at

> org.apache.flink.streaming.runtime.tasks.ChainingOutput.collect(ChainingOutput.java:39)

> at

> org.apache.flink.streaming.api.operators.CountingOutput.collect(CountingOutput.java:56)

> at

> org.apache.flink.streaming.api.operators.CountingOutput.collect(CountingOutput.java:29)

> at

> org.apache.flink.streaming.api.operators.StreamSourceContexts$ManualWatermarkContext.processAndCollect(StreamSourceContexts.java:418)

> at

> org.apache.flink.streaming.api.operators.StreamSourceContexts$WatermarkContext.collect(StreamSourceContexts.java:513)

> at

> org.apache.flink.streaming.api.operators.StreamSourceContexts$SwitchingOnClose.collect(StreamSourceContexts.java:103)

> at

> org.apache.flink.streaming.api.functions.source.InputFormatSourceFunction.run(InputFormatSourceFunction.java:92)

> at

> org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:110)

> at

> org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:67)

> at

> org.apache.flink.streaming.runtime.tasks.SourceStreamTask$LegacySourceFunctionThread.run(SourceStreamTask.java:332)

> {code}

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [flink] flinkbot commented on pull request #19480: [FLINK-27213][API/Python]Add PurgingTrigger

flinkbot commented on PR #19480: URL: https://github.com/apache/flink/pull/19480#issuecomment-1099791847 ## CI report: * 2bb742ac2836616e82401169eed3f24a8a608315 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (FLINK-27256) Log the root exception in closing the task manager connection

Yangze Guo created FLINK-27256: -- Summary: Log the root exception in closing the task manager connection Key: FLINK-27256 URL: https://issues.apache.org/jira/browse/FLINK-27256 Project: Flink Issue Type: Improvement Components: Runtime / Coordination Reporter: Yangze Guo Assignee: Yangze Guo Fix For: 1.16.0 When close the task manager connection, we'd better log the root cause of it for debugging. -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (FLINK-27213) Add PurgingTrigger

[ https://issues.apache.org/jira/browse/FLINK-27213?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-27213: --- Labels: pull-request-available (was: ) > Add PurgingTrigger > --- > > Key: FLINK-27213 > URL: https://issues.apache.org/jira/browse/FLINK-27213 > Project: Flink > Issue Type: New Feature > Components: API / Python >Reporter: zhangjingcun >Priority: Major > Labels: pull-request-available > > Introduce PurgingTrigger which is already supported in the Java API -- This message was sent by Atlassian Jira (v8.20.1#820001)

[GitHub] [flink] cun8cun8 opened a new pull request, #19480: [FLINK-27213][API/Python]Add PurgingTrigger

cun8cun8 opened a new pull request, #19480: URL: https://github.com/apache/flink/pull/19480 ## What is the purpose of the change *(For example: This pull request makes task deployment go through the blob server, rather than through RPC. That way we avoid re-transferring them on each deployment (during recovery).)* ## Brief change log *(for example:)* - *The TaskInfo is stored in the blob store on job creation time as a persistent artifact* - *Deployments RPC transmits only the blob storage reference* - *TaskManagers retrieve the TaskInfo from the blob cache* ## Verifying this change Please make sure both new and modified tests in this PR follows the conventions defined in our code quality guide: https://flink.apache.org/contributing/code-style-and-quality-common.html#testing *(Please pick either of the following options)* This change is a trivial rework / code cleanup without any test coverage. *(or)* This change is already covered by existing tests, such as *(please describe tests)*. *(or)* This change added tests and can be verified as follows: *(example:)* - *Added integration tests for end-to-end deployment with large payloads (100MB)* - *Extended integration test for recovery after master (JobManager) failure* - *Added test that validates that TaskInfo is transferred only once across recoveries* - *Manually verified the change by running a 4 node cluster with 2 JobManagers and 4 TaskManagers, a stateful streaming program, and killing one JobManager and two TaskManagers during the execution, verifying that recovery happens correctly.* ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (yes / no) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (yes / no) - The serializers: (yes / no / don't know) - The runtime per-record code paths (performance sensitive): (yes / no / don't know) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: (yes / no / don't know) - The S3 file system connector: (yes / no / don't know) ## Documentation - Does this pull request introduce a new feature? (yes / no) - If yes, how is the feature documented? (not applicable / docs / JavaDocs / not documented) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] xuyangzhong commented on pull request #19465: [FLINK-27239][table-planner] rewrite PreValidateReWriter from scala to java

xuyangzhong commented on PR #19465: URL: https://github.com/apache/flink/pull/19465#issuecomment-1099790332 @flinkbot run azure -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] PatrickRen commented on a diff in pull request #19456: [FLINK-27041][connector/kafka] Catch IllegalStateException in KafkaPartitionSplitReader.fetch() to handle no valid partition cas

PatrickRen commented on code in PR #19456:

URL: https://github.com/apache/flink/pull/19456#discussion_r851005151

##

flink-connectors/flink-connector-kafka/src/main/java/org/apache/flink/connector/kafka/source/reader/KafkaPartitionSplitReader.java:

##

@@ -131,12 +138,7 @@ public RecordsWithSplitIds>

fetch() throws IOExce

kafkaSourceReaderMetrics.maybeAddRecordsLagMetric(consumer, tp);

}

-// Some splits are discovered as empty when handling split additions.

These splits should be

-// added to finished splits to clean up states in split fetcher and

source reader.

-if (!emptySplits.isEmpty()) {

-recordsBySplits.finishedSplits.addAll(emptySplits);

-emptySplits.clear();

-}

+markEmptySplitsAsFinished(recordsBySplits);

Review Comment:

The stopping offset of unbounded partitions is set to Long.MAX, so they

won't be treated as empty.

https://github.com/apache/flink/blob/release-1.14.4/flink-connectors/flink-connector-kafka/src/main/java/org/apache/flink/connector/kafka/source/reader/KafkaPartitionSplitReader.java#L311-L331