[jira] [Commented] (FLINK-21966) Support Kinesis connector in Python DataStream API.

[ https://issues.apache.org/jira/browse/FLINK-21966?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543794#comment-17543794 ] Dian Fu commented on FLINK-21966: - [~pemide] Thanks for the offering. I have assigned it to you~ > Support Kinesis connector in Python DataStream API. > --- > > Key: FLINK-21966 > URL: https://issues.apache.org/jira/browse/FLINK-21966 > Project: Flink > Issue Type: Sub-task > Components: API / Python >Reporter: Shuiqiang Chen >Assignee: pengmd >Priority: Major > Labels: pull-request-available > Fix For: 1.16.0 > > -- This message was sent by Atlassian Jira (v8.20.7#820007)

[jira] [Assigned] (FLINK-21966) Support Kinesis connector in Python DataStream API.

[ https://issues.apache.org/jira/browse/FLINK-21966?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Dian Fu reassigned FLINK-21966: --- Assignee: pengmd > Support Kinesis connector in Python DataStream API. > --- > > Key: FLINK-21966 > URL: https://issues.apache.org/jira/browse/FLINK-21966 > Project: Flink > Issue Type: Sub-task > Components: API / Python >Reporter: Shuiqiang Chen >Assignee: pengmd >Priority: Major > Labels: pull-request-available > Fix For: 1.16.0 > > -- This message was sent by Atlassian Jira (v8.20.7#820007)

[GitHub] [flink] chucheng92 commented on pull request #19827: [FLINK-27806][table] Support binary & varbinary types in datagen connector

chucheng92 commented on PR #19827: URL: https://github.com/apache/flink/pull/19827#issuecomment-1140769198 @flinkbot run azure -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] chucheng92 commented on pull request #19827: [FLINK-27806][table] Support binary & varbinary types in datagen connector

chucheng92 commented on PR #19827: URL: https://github.com/apache/flink/pull/19827#issuecomment-1140768985 @wuchong can u help me to review this pr? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] chucheng92 commented on pull request #19827: [FLINK-27806][table] Support binary & varbinary types in datagen connector

chucheng92 commented on PR #19827: URL: https://github.com/apache/flink/pull/19827#issuecomment-1140768703 @flinkbot attention @wuchong @lirui-apache -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-21966) Support Kinesis connector in Python DataStream API.

[ https://issues.apache.org/jira/browse/FLINK-21966?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543792#comment-17543792 ] pengmd commented on FLINK-21966: [~dianfu] I am very interested in this issue. Could you please assign this issue to me? > Support Kinesis connector in Python DataStream API. > --- > > Key: FLINK-21966 > URL: https://issues.apache.org/jira/browse/FLINK-21966 > Project: Flink > Issue Type: Sub-task > Components: API / Python >Reporter: Shuiqiang Chen >Priority: Major > Labels: pull-request-available > Fix For: 1.16.0 > > -- This message was sent by Atlassian Jira (v8.20.7#820007)

[GitHub] [flink-table-store] LadyForest commented on a diff in pull request #138: [FLINK-27707] Implement ManagedTableFactory#onCompactTable

LadyForest commented on code in PR #138:

URL: https://github.com/apache/flink-table-store/pull/138#discussion_r884472377

##

flink-table-store-connector/src/main/java/org/apache/flink/table/store/connector/TableStoreManagedFactory.java:

##

@@ -183,6 +204,84 @@ public void onDropTable(Context context, boolean

ignoreIfNotExists) {

@Override

public Map onCompactTable(

Context context, CatalogPartitionSpec catalogPartitionSpec) {

-throw new UnsupportedOperationException("Not implement yet");

+Map newOptions = new

HashMap<>(context.getCatalogTable().getOptions());

+FileStore fileStore = buildTableStore(context).buildFileStore();

+FileStoreScan.Plan plan =

+fileStore

+.newScan()

+.withPartitionFilter(

+PredicateConverter.CONVERTER.fromMap(

+

catalogPartitionSpec.getPartitionSpec(),

+fileStore.partitionType()))

+.plan();

+

+Preconditions.checkState(

+plan.snapshotId() != null && !plan.files().isEmpty(),

+"The specified %s to compact does not exist any snapshot",

+catalogPartitionSpec.getPartitionSpec().isEmpty()

+? "table"

+: String.format("partition %s",

catalogPartitionSpec.getPartitionSpec()));

+Map>> groupBy =

plan.groupByPartFiles();

+if

(!Boolean.parseBoolean(newOptions.get(COMPACTION_RESCALE_BUCKET.key( {

+groupBy =

+pickManifest(

+groupBy,

+new

FileStoreOptions(Configuration.fromMap(newOptions))

+.mergeTreeOptions(),

+new

KeyComparatorSupplier(fileStore.partitionType()).get());

+}

+try {

+newOptions.put(

+COMPACTION_SCANNED_MANIFEST.key(),

+Base64.getEncoder()

+.encodeToString(

+InstantiationUtil.serializeObject(

+new PartitionedManifestMeta(

+plan.snapshotId(),

groupBy;

+} catch (IOException e) {

+throw new RuntimeException(e);

+}

+return newOptions;

+}

+

+@VisibleForTesting

+Map>> pickManifest(

Review Comment:

> You have picked files here, but how to make sure that writer will compact

these files?

As offline discussed, the main purpose for `ALTER TABLE COMPACT` is to

squeeze those files which have key range overlapped or too small. It is not

exactly what universal compaction does. As a result, when after picking these

files at the planning phase, the runtime should not pick them again, because

they are already picked. So `FileStoreWriteImpl` should create different

compact strategies for ① the auto-compaction triggered by ordinary writes v.s.

② the manual triggered compaction. For the latter, the strategy should directly

return all the files it receives.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink-table-store] LadyForest commented on a diff in pull request #138: [FLINK-27707] Implement ManagedTableFactory#onCompactTable

LadyForest commented on code in PR #138:

URL: https://github.com/apache/flink-table-store/pull/138#discussion_r884472377

##

flink-table-store-connector/src/main/java/org/apache/flink/table/store/connector/TableStoreManagedFactory.java:

##

@@ -183,6 +204,84 @@ public void onDropTable(Context context, boolean

ignoreIfNotExists) {

@Override

public Map onCompactTable(

Context context, CatalogPartitionSpec catalogPartitionSpec) {

-throw new UnsupportedOperationException("Not implement yet");

+Map newOptions = new

HashMap<>(context.getCatalogTable().getOptions());

+FileStore fileStore = buildTableStore(context).buildFileStore();

+FileStoreScan.Plan plan =

+fileStore

+.newScan()

+.withPartitionFilter(

+PredicateConverter.CONVERTER.fromMap(

+

catalogPartitionSpec.getPartitionSpec(),

+fileStore.partitionType()))

+.plan();

+

+Preconditions.checkState(

+plan.snapshotId() != null && !plan.files().isEmpty(),

+"The specified %s to compact does not exist any snapshot",

+catalogPartitionSpec.getPartitionSpec().isEmpty()

+? "table"

+: String.format("partition %s",

catalogPartitionSpec.getPartitionSpec()));

+Map>> groupBy =

plan.groupByPartFiles();

+if

(!Boolean.parseBoolean(newOptions.get(COMPACTION_RESCALE_BUCKET.key( {

+groupBy =

+pickManifest(

+groupBy,

+new

FileStoreOptions(Configuration.fromMap(newOptions))

+.mergeTreeOptions(),

+new

KeyComparatorSupplier(fileStore.partitionType()).get());

+}

+try {

+newOptions.put(

+COMPACTION_SCANNED_MANIFEST.key(),

+Base64.getEncoder()

+.encodeToString(

+InstantiationUtil.serializeObject(

+new PartitionedManifestMeta(

+plan.snapshotId(),

groupBy;

+} catch (IOException e) {

+throw new RuntimeException(e);

+}

+return newOptions;

+}

+

+@VisibleForTesting

+Map>> pickManifest(

Review Comment:

> You have picked files here, but how to make sure that writer will compact

these files?

As offline discussed, the main purpose for `ALTER TABLE COMPACT` is to

squeeze those files which have key range overlapped or too small. It is not

exactly what universal compaction does. As a result, when after picking these

files at the planning phase, the runtime should not pick them again, because

they are already picked. So `FileStoreWriteImpl` should create different

compact strategies for the auto-compaction for normal write v.s. the manual

triggered compaction. For the latter, the strategy should directly return all

the files it receives.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink-table-store] LadyForest commented on a diff in pull request #138: [FLINK-27707] Implement ManagedTableFactory#onCompactTable

LadyForest commented on code in PR #138:

URL: https://github.com/apache/flink-table-store/pull/138#discussion_r884456680

##

flink-table-store-connector/src/test/java/org/apache/flink/table/store/connector/TableStoreManagedFactoryTest.java:

##

@@ -256,9 +284,288 @@ public void testCreateAndCheckTableStore(

}

}

+@Test

+public void testOnCompactTableForNoSnapshot() {

+RowType partType = RowType.of();

+MockTableStoreManagedFactory mockTableStoreManagedFactory =

+new MockTableStoreManagedFactory(partType,

NON_PARTITIONED_ROW_TYPE);

+prepare(

+TABLE + "_" + UUID.randomUUID(),

+partType,

+NON_PARTITIONED_ROW_TYPE,

+NON_PARTITIONED,

+true);

+assertThatThrownBy(

+() ->

+mockTableStoreManagedFactory.onCompactTable(

+context, new

CatalogPartitionSpec(emptyMap(

+.isInstanceOf(IllegalStateException.class)

+.hasMessageContaining("The specified table to compact does not

exist any snapshot");

+}

+

+@ParameterizedTest

+@ValueSource(booleans = {true, false})

+public void testOnCompactTableForNonPartitioned(boolean rescaleBucket)

throws Exception {

+RowType partType = RowType.of();

+runTest(

+new MockTableStoreManagedFactory(partType,

NON_PARTITIONED_ROW_TYPE),

+TABLE + "_" + UUID.randomUUID(),

+partType,

+NON_PARTITIONED_ROW_TYPE,

+NON_PARTITIONED,

+rescaleBucket);

+}

+

+@ParameterizedTest

+@ValueSource(booleans = {true, false})

+public void testOnCompactTableForSinglePartitioned(boolean rescaleBucket)

throws Exception {

+runTest(

+new MockTableStoreManagedFactory(

+SINGLE_PARTITIONED_PART_TYPE,

SINGLE_PARTITIONED_ROW_TYPE),

+TABLE + "_" + UUID.randomUUID(),

+SINGLE_PARTITIONED_PART_TYPE,

+SINGLE_PARTITIONED_ROW_TYPE,

+SINGLE_PARTITIONED,

+rescaleBucket);

+}

+

+@ParameterizedTest

+@ValueSource(booleans = {true, false})

+public void testOnCompactTableForMultiPartitioned(boolean rescaleBucket)

throws Exception {

+runTest(

+new MockTableStoreManagedFactory(),

+TABLE + "_" + UUID.randomUUID(),

+DEFAULT_PART_TYPE,

+DEFAULT_ROW_TYPE,

+MULTI_PARTITIONED,

+rescaleBucket);

+}

+

+@MethodSource("provideManifest")

+@ParameterizedTest

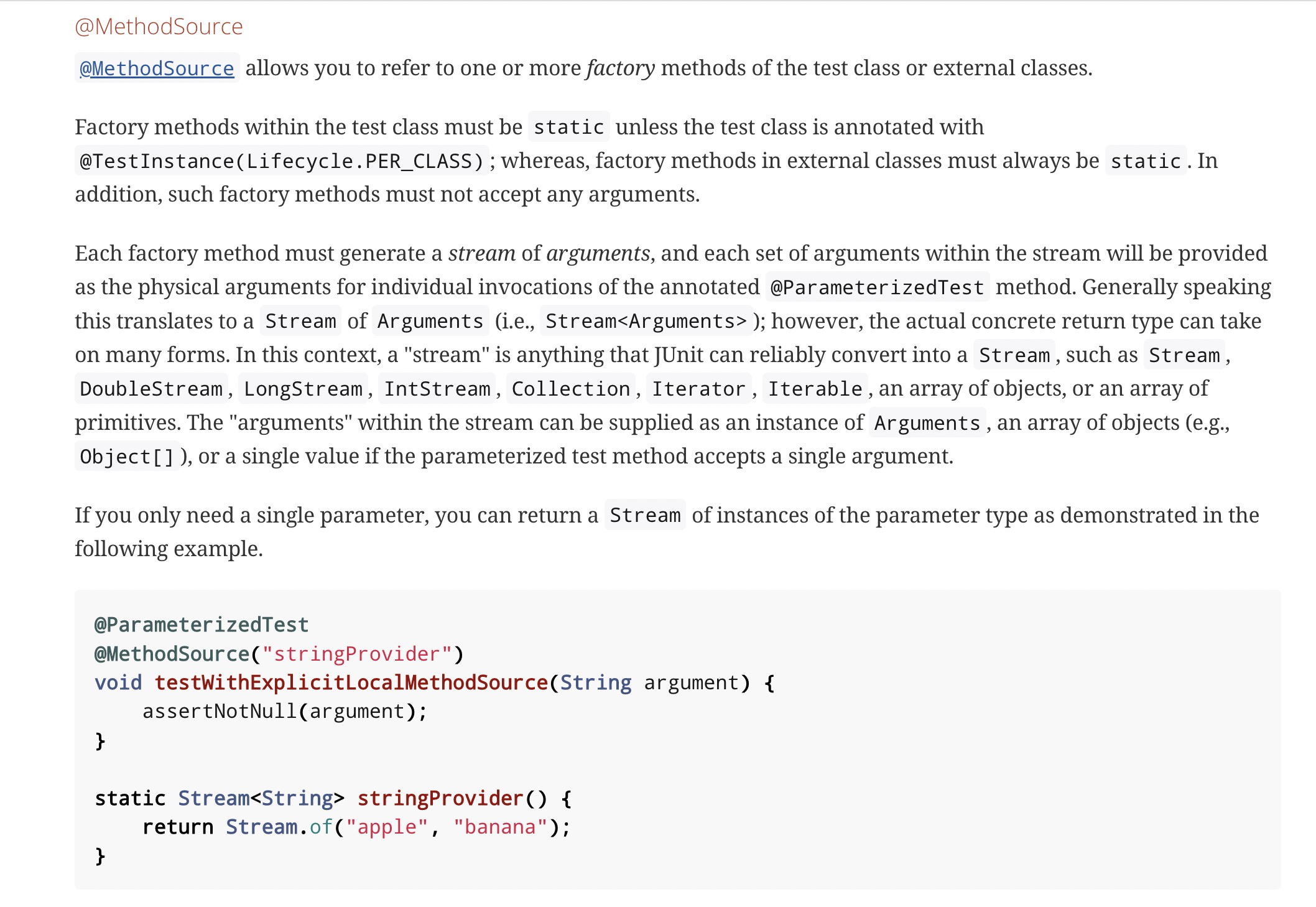

Review Comment:

> Can we have a name for each parameter? Very difficult to maintain without

a name.

I cannot understand well what you mean. Do you suggest not use Junit5

parameterized test? Method source doc illustrates the usage.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink-kubernetes-operator] morhidi commented on a diff in pull request #244: [FLINK-27520] Use admission-controller-framework in Webhook

morhidi commented on code in PR #244:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/244#discussion_r884442238

##

flink-kubernetes-webhook/pom.xml:

##

@@ -36,6 +36,19 @@ under the License.

org.apache.flink

flink-kubernetes-operator

${project.version}

+provided

+

+

+

+io.javaoperatorsdk

+operator-framework-framework-core

+${operator.sdk.admission-controller.version}

+

+

+*

Review Comment:

Had to keep the excludes in the dependency for the unit tests.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink-kubernetes-operator] wangyang0918 commented on pull request #237: [FLINK-27257] Flink kubernetes operator triggers savepoint failed because of not all tasks running

wangyang0918 commented on PR #237: URL: https://github.com/apache/flink-kubernetes-operator/pull/237#issuecomment-1140726331 For Flink batch jobs, we do not require all the tasks running to indicate that the Flink job is running. So it does not make sense when we want to use `getJobDetails` to verify whether the job is running. But I agree it could be used to check whether all the tasks are running before triggering a savepoint. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink-kubernetes-operator] morhidi commented on a diff in pull request #244: [FLINK-27520] Use admission-controller-framework in Webhook

morhidi commented on code in PR #244:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/244#discussion_r884434216

##

flink-kubernetes-webhook/pom.xml:

##

@@ -36,6 +36,19 @@ under the License.

org.apache.flink

flink-kubernetes-operator

${project.version}

+provided

+

+

+

+io.javaoperatorsdk

+operator-framework-framework-core

+${operator.sdk.admission-controller.version}

+

+

+*

Review Comment:

Thanks @gyfora for the suggestion, I like it better myself. Changed and

pushed it.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] zou-can commented on pull request #19828: [FLINK-27762][connector/kafka] KafkaPartitionSplitReader#wakeup should only unblock KafkaConsumer#poll invocation

zou-can commented on PR #19828:

URL: https://github.com/apache/flink/pull/19828#issuecomment-1140717266

As i commented on https://issues.apache.org/jira/browse/FLINK-27762

The exception we met is in method

**KafkaPartitionSplitReader#removeEmptySplits**. But i can't find any action

for handling this exception in that method.

```

// KafkaPartitionSplitReader#removeEmptySplits

private void removeEmptySplits() {

List emptyPartitions = new ArrayList<>();

for (TopicPartition tp : consumer.assignment()) {

// WakeUpException is thrown here.

// since KafkaConsumer#wakeUp is called before, if execute

KafkaConsumer#postion in 'if statement' above, it will throw WakeUpException.

if (consumer.position(tp) >= getStoppingOffset(tp)) {

emptyPartitions.add(tp);

}

}

// ignore irrelevant code...

}

```

What I'm focus is **how to handle WakeUpException** after it was thrown.

As what is done in **KafkaPartitionSplitReader#fetch**

```

// KafkaPartitionSplitReader#fetch

public RecordsWithSplitIds> fetch() throws

IOException {

ConsumerRecords consumerRecords;

try {

consumerRecords = consumer.poll(Duration.ofMillis(POLL_TIMEOUT));

} catch (WakeupException we) {

// catch exception and return empty result.

return new KafkaPartitionSplitRecords(

ConsumerRecords.empty(), kafkaSourceReaderMetrics);

}

// ignore irrelevant code...

}

```

Maybe we should catch this exception in

**KafkaPartitionSplitReader#removeEmptySplits**, and retry

**KafkaConsumer#postion** again.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (FLINK-27009) Support SQL job submission in flink kubernetes opeartor

[ https://issues.apache.org/jira/browse/FLINK-27009?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543770#comment-17543770 ] Márton Balassi commented on FLINK-27009: [~wangyang0918] I do not have well-formed thoughts yet, I was only thinking about SQLScript to this point, but I do see how that come become tedious and warrant a SQLScriptURI instead or as an option. For the sake of concise examples it would be nice to be able to drop the sql into a field, but I am not hell-bent on it. > Support SQL job submission in flink kubernetes opeartor > --- > > Key: FLINK-27009 > URL: https://issues.apache.org/jira/browse/FLINK-27009 > Project: Flink > Issue Type: New Feature > Components: Kubernetes Operator >Reporter: Biao Geng >Assignee: Biao Geng >Priority: Major > > Currently, the flink kubernetes opeartor is for jar job using application or > session cluster. For SQL job, there is no out of box solution in the > operator. > One simple and short-term solution is to wrap the SQL script into a jar job > using table API with limitation. > The long-term solution may work with > [FLINK-26541|https://issues.apache.org/jira/browse/FLINK-26541] to achieve > the full support. -- This message was sent by Atlassian Jira (v8.20.7#820007)

[jira] [Commented] (FLINK-27831) Provide example of Beam on the k8s operator

[ https://issues.apache.org/jira/browse/FLINK-27831?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543769#comment-17543769 ] Márton Balassi commented on FLINK-27831: On second thought the example has heavy dependencies on the Beam side, might make more sense for it to live in Beam instead with only some of the docs in the flink-kubernetes-operator side. [~mxm] what do you think? > Provide example of Beam on the k8s operator > --- > > Key: FLINK-27831 > URL: https://issues.apache.org/jira/browse/FLINK-27831 > Project: Flink > Issue Type: Improvement > Components: Kubernetes Operator >Reporter: Márton Balassi >Assignee: Márton Balassi >Priority: Minor > Fix For: kubernetes-operator-1.1.0 > > > Multiple users have asked for whether the operator supports Beam jobs in > different shapes. I assume that running a Beam job ultimately with the > current operator ultimately comes down to having the right jars on the > classpath / packaged into the user's fatjar. > At this stage I suggest adding one such example, providing it might attract > new users. -- This message was sent by Atlassian Jira (v8.20.7#820007)

[jira] [Comment Edited] (FLINK-27834) Flink kubernetes operator dockerfile could not work with podman

[

https://issues.apache.org/jira/browse/FLINK-27834?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543768#comment-17543768

]

Zili Chen edited comment on FLINK-27834 at 5/30/22 3:42 AM:

Thanks for your notification [~wangyang0918]. I'll take a look today.

was (Author: tison):

Thanks for your notification [~wangyang0918]. I'll try a look today.

> Flink kubernetes operator dockerfile could not work with podman

> ---

>

> Key: FLINK-27834

> URL: https://issues.apache.org/jira/browse/FLINK-27834

> Project: Flink

> Issue Type: Bug

> Components: Kubernetes Operator

>Reporter: Yang Wang

>Priority: Major

>

> [1/2] STEP 16/19: COPY *.git ./.git

> Error: error building at STEP "COPY *.git ./.git": checking on sources under

> "/root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0": Rel: can't

> make relative to

> /root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0; copier: stat:

> ["/*.git"]: no such file or directory

>

> podman version

> Client: Podman Engine

> Version: 4.0.2

> API Version: 4.0.2

>

>

> I think the root cause is "*.git" is not respected by podman. Maybe we could

> simply copy the whole directory when building the image.

>

> {code:java}

> WORKDIR /app

> COPY . .

> RUN --mount=type=cache,target=/root/.m2 mvn -ntp clean install -pl

> !flink-kubernetes-docs -DskipTests=$SKIP_TESTS {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

[jira] [Commented] (FLINK-27834) Flink kubernetes operator dockerfile could not work with podman

[

https://issues.apache.org/jira/browse/FLINK-27834?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543768#comment-17543768

]

Zili Chen commented on FLINK-27834:

---

Thanks for your notification [~wangyang0918]. I'll try a look today.

> Flink kubernetes operator dockerfile could not work with podman

> ---

>

> Key: FLINK-27834

> URL: https://issues.apache.org/jira/browse/FLINK-27834

> Project: Flink

> Issue Type: Bug

> Components: Kubernetes Operator

>Reporter: Yang Wang

>Priority: Major

>

> [1/2] STEP 16/19: COPY *.git ./.git

> Error: error building at STEP "COPY *.git ./.git": checking on sources under

> "/root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0": Rel: can't

> make relative to

> /root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0; copier: stat:

> ["/*.git"]: no such file or directory

>

> podman version

> Client: Podman Engine

> Version: 4.0.2

> API Version: 4.0.2

>

>

> I think the root cause is "*.git" is not respected by podman. Maybe we could

> simply copy the whole directory when building the image.

>

> {code:java}

> WORKDIR /app

> COPY . .

> RUN --mount=type=cache,target=/root/.m2 mvn -ntp clean install -pl

> !flink-kubernetes-docs -DskipTests=$SKIP_TESTS {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

[GitHub] [flink-table-store] LadyForest commented on a diff in pull request #138: [FLINK-27707] Implement ManagedTableFactory#onCompactTable

LadyForest commented on code in PR #138:

URL: https://github.com/apache/flink-table-store/pull/138#discussion_r884394501

##

flink-table-store-connector/src/main/java/org/apache/flink/table/store/connector/TableStoreManagedFactory.java:

##

@@ -183,6 +204,84 @@ public void onDropTable(Context context, boolean

ignoreIfNotExists) {

@Override

public Map onCompactTable(

Context context, CatalogPartitionSpec catalogPartitionSpec) {

-throw new UnsupportedOperationException("Not implement yet");

+Map newOptions = new

HashMap<>(context.getCatalogTable().getOptions());

+FileStore fileStore = buildTableStore(context).buildFileStore();

+FileStoreScan.Plan plan =

+fileStore

+.newScan()

+.withPartitionFilter(

+PredicateConverter.CONVERTER.fromMap(

+

catalogPartitionSpec.getPartitionSpec(),

+fileStore.partitionType()))

+.plan();

+

+Preconditions.checkState(

+plan.snapshotId() != null && !plan.files().isEmpty(),

+"The specified %s to compact does not exist any snapshot",

+catalogPartitionSpec.getPartitionSpec().isEmpty()

+? "table"

+: String.format("partition %s",

catalogPartitionSpec.getPartitionSpec()));

+Map>> groupBy =

plan.groupByPartFiles();

+if

(!Boolean.parseBoolean(newOptions.get(COMPACTION_RESCALE_BUCKET.key( {

+groupBy =

+pickManifest(

+groupBy,

+new

FileStoreOptions(Configuration.fromMap(newOptions))

+.mergeTreeOptions(),

+new

KeyComparatorSupplier(fileStore.partitionType()).get());

+}

+try {

+newOptions.put(

+COMPACTION_SCANNED_MANIFEST.key(),

+Base64.getEncoder()

+.encodeToString(

+InstantiationUtil.serializeObject(

+new PartitionedManifestMeta(

+plan.snapshotId(),

groupBy;

+} catch (IOException e) {

+throw new RuntimeException(e);

+}

+return newOptions;

+}

+

+@VisibleForTesting

+Map>> pickManifest(

+Map>> groupBy,

+MergeTreeOptions options,

+Comparator keyComparator) {

+Map>> filtered = new

HashMap<>();

+

+for (Map.Entry>>

partEntry :

+groupBy.entrySet()) {

+Map> manifests = new HashMap<>();

+for (Map.Entry> bucketEntry :

+partEntry.getValue().entrySet()) {

+List smallFiles =

Review Comment:

> For example:

inputs: File1(0-10) File2(10-90) File3(90-100)

merged: File4(0-100) File2(10-90)

This can lead to results with overlapping.

I agree with you that "we cannot pick small files at random". But the

example you provide cannot prove this. These three files are all overlapped.

The small file threshold will pick File1(0-10) and File3(90-100), and the

interval partition will pick all of them. So after deduplication, they all get

compacted.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (FLINK-27831) Provide example of Beam on the k8s operator

[ https://issues.apache.org/jira/browse/FLINK-27831?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543767#comment-17543767 ] Márton Balassi commented on FLINK-27831: I managed to get the Beam [WordCount|https://github.com/apache/beam/blob/master/examples/java/src/main/java/org/apache/beam/examples/WordCount.java] example based on the [quickstart|https://beam.apache.org/get-started/quickstart-java/#get-the-example-code] running with Flink 1.14 on the operator. It needs a bit of tweaking to make it pretty, will create a PR soonish. > Provide example of Beam on the k8s operator > --- > > Key: FLINK-27831 > URL: https://issues.apache.org/jira/browse/FLINK-27831 > Project: Flink > Issue Type: Improvement > Components: Kubernetes Operator >Reporter: Márton Balassi >Assignee: Márton Balassi >Priority: Minor > Fix For: kubernetes-operator-1.1.0 > > > Multiple users have asked for whether the operator supports Beam jobs in > different shapes. I assume that running a Beam job ultimately with the > current operator ultimately comes down to having the right jars on the > classpath / packaged into the user's fatjar. > At this stage I suggest adding one such example, providing it might attract > new users. -- This message was sent by Atlassian Jira (v8.20.7#820007)

[jira] [Commented] (FLINK-27762) Kafka WakeUpException during handling splits changes

[

https://issues.apache.org/jira/browse/FLINK-27762?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543766#comment-17543766

]

Qingsheng Ren commented on FLINK-27762:

---

Thanks a lot for the review [~zoucan] ! What about moving the discussion about

code and PRs to Github?

> Kafka WakeUpException during handling splits changes

>

>

> Key: FLINK-27762

> URL: https://issues.apache.org/jira/browse/FLINK-27762

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.14.3

>Reporter: zoucan

>Assignee: Qingsheng Ren

>Priority: Major

> Labels: pull-request-available

>

>

> We enable dynamic partition discovery in our flink job, but job failed when

> kafka partition is changed.

> Exception detail is shown as follows:

> {code:java}

> java.lang.RuntimeException: One or more fetchers have encountered exception

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcherManager.checkErrors(SplitFetcherManager.java:225)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.getNextFetch(SourceReaderBase.java:169)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.pollNext(SourceReaderBase.java:130)

> at

> org.apache.flink.streaming.api.operators.SourceOperator.emitNext(SourceOperator.java:350)

> at

> org.apache.flink.streaming.runtime.io.StreamTaskSourceInput.emitNext(StreamTaskSourceInput.java:68)

> at

> org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:65)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:496)

> at

> org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:203)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:809)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:761)

> at

> org.apache.flink.runtime.taskmanager.Task.runWithSystemExitMonitoring(Task.java:958)

> at

> org.apache.flink.runtime.taskmanager.Task.restoreAndInvoke(Task.java:937)

> at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:766)

> at org.apache.flink.runtime.taskmanager.Task.run(Task.java:575)

> at java.lang.Thread.run(Thread.java:748)

> Caused by: java.lang.RuntimeException: SplitFetcher thread 0 received

> unexpected exception while polling the records

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.runOnce(SplitFetcher.java:150)

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.run(SplitFetcher.java:105)

> at

> java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

> at java.util.concurrent.FutureTask.run(FutureTask.java:266)

> at

> java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

> at

> java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

> ... 1 more

> Caused by: org.apache.kafka.common.errors.WakeupException

> at

> org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.maybeTriggerWakeup(ConsumerNetworkClient.java:511)

> at

> org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:275)

> at

> org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:233)

> at

> org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:224)

> at

> org.apache.kafka.clients.consumer.KafkaConsumer.position(KafkaConsumer.java:1726)

> at

> org.apache.kafka.clients.consumer.KafkaConsumer.position(KafkaConsumer.java:1684)

> at

> org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader.removeEmptySplits(KafkaPartitionSplitReader.java:315)

> at

> org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader.handleSplitsChanges(KafkaPartitionSplitReader.java:200)

> at

> org.apache.flink.connector.base.source.reader.fetcher.AddSplitsTask.run(AddSplitsTask.java:51)

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.runOnce(SplitFetcher.java:142)

> ... 6 more {code}

>

> After preliminary investigation, according to source code of KafkaSource,

> At first:

> method *org.apache.kafka.clients.consumer.KafkaConsumer.wakeup()* will be

> called if consumer is polling data.

> Later:

> method *org.apache.kafka.clients.consumer.KafkaConsumer.position()* will be

> called during handle splits changes.

> Since consumer has been waken up, it will throw WakeUpException.

--

This message was sent

[GitHub] [flink-table-store] LadyForest commented on a diff in pull request #138: [FLINK-27707] Implement ManagedTableFactory#onCompactTable

LadyForest commented on code in PR #138: URL: https://github.com/apache/flink-table-store/pull/138#discussion_r884390344 ## flink-table-store-core/src/main/java/org/apache/flink/table/store/file/utils/FileStorePathFactory.java: ## @@ -61,20 +61,24 @@ public FileStorePathFactory(Path root, RowType partitionType, String defaultPart this.root = root; this.uuid = UUID.randomUUID().toString(); -String[] partitionColumns = partitionType.getFieldNames().toArray(new String[0]); -this.partitionComputer = -new RowDataPartitionComputer( -defaultPartValue, -partitionColumns, -partitionType.getFields().stream() -.map(f -> LogicalTypeDataTypeConverter.toDataType(f.getType())) -.toArray(DataType[]::new), -partitionColumns); +this.partitionComputer = getPartitionComputer(partitionType, defaultPartValue); this.manifestFileCount = new AtomicInteger(0); this.manifestListCount = new AtomicInteger(0); } +public static RowDataPartitionComputer getPartitionComputer( Review Comment: > Just for test? Maybe for logging too. Currently, the log does not reveal the readable partition -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink-table-store] JingsongLi commented on a diff in pull request #138: [FLINK-27707] Implement ManagedTableFactory#onCompactTable

JingsongLi commented on code in PR #138:

URL: https://github.com/apache/flink-table-store/pull/138#discussion_r884388037

##

flink-table-store-connector/src/main/java/org/apache/flink/table/store/connector/TableStoreManagedFactory.java:

##

@@ -183,6 +204,84 @@ public void onDropTable(Context context, boolean

ignoreIfNotExists) {

@Override

public Map onCompactTable(

Context context, CatalogPartitionSpec catalogPartitionSpec) {

-throw new UnsupportedOperationException("Not implement yet");

+Map newOptions = new

HashMap<>(context.getCatalogTable().getOptions());

+FileStore fileStore = buildTableStore(context).buildFileStore();

+FileStoreScan.Plan plan =

+fileStore

+.newScan()

+.withPartitionFilter(

+PredicateConverter.CONVERTER.fromMap(

+

catalogPartitionSpec.getPartitionSpec(),

+fileStore.partitionType()))

+.plan();

+

+Preconditions.checkState(

+plan.snapshotId() != null && !plan.files().isEmpty(),

+"The specified %s to compact does not exist any snapshot",

+catalogPartitionSpec.getPartitionSpec().isEmpty()

+? "table"

+: String.format("partition %s",

catalogPartitionSpec.getPartitionSpec()));

+Map>> groupBy =

plan.groupByPartFiles();

+if

(!Boolean.parseBoolean(newOptions.get(COMPACTION_RESCALE_BUCKET.key( {

+groupBy =

+pickManifest(

+groupBy,

+new

FileStoreOptions(Configuration.fromMap(newOptions))

+.mergeTreeOptions(),

+new

KeyComparatorSupplier(fileStore.partitionType()).get());

+}

+try {

+newOptions.put(

+COMPACTION_SCANNED_MANIFEST.key(),

+Base64.getEncoder()

+.encodeToString(

+InstantiationUtil.serializeObject(

+new PartitionedManifestMeta(

+plan.snapshotId(),

groupBy;

+} catch (IOException e) {

+throw new RuntimeException(e);

+}

+return newOptions;

+}

+

+@VisibleForTesting

+Map>> pickManifest(

+Map>> groupBy,

+MergeTreeOptions options,

+Comparator keyComparator) {

+Map>> filtered = new

HashMap<>();

+

+for (Map.Entry>>

partEntry :

+groupBy.entrySet()) {

+Map> manifests = new HashMap<>();

+for (Map.Entry> bucketEntry :

+partEntry.getValue().entrySet()) {

+List smallFiles =

Review Comment:

Here we cannot pick small files at random, which would cause the merged

files min and max to skip the middle data.

For example:

inputs: File1(0-10) File2(10-90) File3(90-100)

merged: File4(0-100) File2(10-90)

This can lead to results with overlapping.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (FLINK-27834) Flink kubernetes operator dockerfile could not work with podman

[

https://issues.apache.org/jira/browse/FLINK-27834?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543762#comment-17543762

]

Yang Wang commented on FLINK-27834:

---

cc [~Tison] I remember you also have the same idea when fixing FLINK-27746.

> Flink kubernetes operator dockerfile could not work with podman

> ---

>

> Key: FLINK-27834

> URL: https://issues.apache.org/jira/browse/FLINK-27834

> Project: Flink

> Issue Type: Bug

> Components: Kubernetes Operator

>Reporter: Yang Wang

>Priority: Major

>

> [1/2] STEP 16/19: COPY *.git ./.git

> Error: error building at STEP "COPY *.git ./.git": checking on sources under

> "/root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0": Rel: can't

> make relative to

> /root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0; copier: stat:

> ["/*.git"]: no such file or directory

>

> podman version

> Client: Podman Engine

> Version: 4.0.2

> API Version: 4.0.2

>

>

> I think the root cause is "*.git" is not respected by podman. Maybe we could

> simply copy the whole directory when building the image.

>

> {code:java}

> WORKDIR /app

> COPY . .

> RUN --mount=type=cache,target=/root/.m2 mvn -ntp clean install -pl

> !flink-kubernetes-docs -DskipTests=$SKIP_TESTS {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

[jira] [Commented] (FLINK-27834) Flink kubernetes operator dockerfile could not work with podman

[

https://issues.apache.org/jira/browse/FLINK-27834?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543761#comment-17543761

]

Gyula Fora commented on FLINK-27834:

Maybe this is not a big concern, would love to hear what [~matyas] or

[~jbusche] think

> Flink kubernetes operator dockerfile could not work with podman

> ---

>

> Key: FLINK-27834

> URL: https://issues.apache.org/jira/browse/FLINK-27834

> Project: Flink

> Issue Type: Bug

> Components: Kubernetes Operator

>Reporter: Yang Wang

>Priority: Major

>

> [1/2] STEP 16/19: COPY *.git ./.git

> Error: error building at STEP "COPY *.git ./.git": checking on sources under

> "/root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0": Rel: can't

> make relative to

> /root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0; copier: stat:

> ["/*.git"]: no such file or directory

>

> podman version

> Client: Podman Engine

> Version: 4.0.2

> API Version: 4.0.2

>

>

> I think the root cause is "*.git" is not respected by podman. Maybe we could

> simply copy the whole directory when building the image.

>

> {code:java}

> WORKDIR /app

> COPY . .

> RUN --mount=type=cache,target=/root/.m2 mvn -ntp clean install -pl

> !flink-kubernetes-docs -DskipTests=$SKIP_TESTS {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

[jira] [Commented] (FLINK-27834) Flink kubernetes operator dockerfile could not work with podman

[

https://issues.apache.org/jira/browse/FLINK-27834?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543760#comment-17543760

]

Gyula Fora commented on FLINK-27834:

yes, the user can have anything in their local directory by accident

> Flink kubernetes operator dockerfile could not work with podman

> ---

>

> Key: FLINK-27834

> URL: https://issues.apache.org/jira/browse/FLINK-27834

> Project: Flink

> Issue Type: Bug

> Components: Kubernetes Operator

>Reporter: Yang Wang

>Priority: Major

>

> [1/2] STEP 16/19: COPY *.git ./.git

> Error: error building at STEP "COPY *.git ./.git": checking on sources under

> "/root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0": Rel: can't

> make relative to

> /root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0; copier: stat:

> ["/*.git"]: no such file or directory

>

> podman version

> Client: Podman Engine

> Version: 4.0.2

> API Version: 4.0.2

>

>

> I think the root cause is "*.git" is not respected by podman. Maybe we could

> simply copy the whole directory when building the image.

>

> {code:java}

> WORKDIR /app

> COPY . .

> RUN --mount=type=cache,target=/root/.m2 mvn -ntp clean install -pl

> !flink-kubernetes-docs -DskipTests=$SKIP_TESTS {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

[jira] [Commented] (FLINK-27834) Flink kubernetes operator dockerfile could not work with podman

[

https://issues.apache.org/jira/browse/FLINK-27834?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543759#comment-17543759

]

Yang Wang commented on FLINK-27834:

---

Do you mean we might accidentally bundle the credentials into the image?

> Flink kubernetes operator dockerfile could not work with podman

> ---

>

> Key: FLINK-27834

> URL: https://issues.apache.org/jira/browse/FLINK-27834

> Project: Flink

> Issue Type: Bug

> Components: Kubernetes Operator

>Reporter: Yang Wang

>Priority: Major

>

> [1/2] STEP 16/19: COPY *.git ./.git

> Error: error building at STEP "COPY *.git ./.git": checking on sources under

> "/root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0": Rel: can't

> make relative to

> /root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0; copier: stat:

> ["/*.git"]: no such file or directory

>

> podman version

> Client: Podman Engine

> Version: 4.0.2

> API Version: 4.0.2

>

>

> I think the root cause is "*.git" is not respected by podman. Maybe we could

> simply copy the whole directory when building the image.

>

> {code:java}

> WORKDIR /app

> COPY . .

> RUN --mount=type=cache,target=/root/.m2 mvn -ntp clean install -pl

> !flink-kubernetes-docs -DskipTests=$SKIP_TESTS {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

[jira] [Assigned] (FLINK-25865) Support to set restart policy of TaskManager pod for native K8s integration

[

https://issues.apache.org/jira/browse/FLINK-25865?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Yang Wang reassigned FLINK-25865:

-

Assignee: Aitozi

> Support to set restart policy of TaskManager pod for native K8s integration

> ---

>

> Key: FLINK-25865

> URL: https://issues.apache.org/jira/browse/FLINK-25865

> Project: Flink

> Issue Type: Improvement

> Components: Deployment / Kubernetes

>Reporter: Yang Wang

>Assignee: Aitozi

>Priority: Major

>

> After FLIP-201, Flink's TaskManagers will be able to be restarted without

> losing its local state. So it is reasonable to make the restart policy[1] of

> TaskManager pod could be configured.

> The current restart policy is {{{}Never{}}}. Flink will always delete the

> failed TaskManager pod directly and create a new one instead. This ticket

> could help to decrease the recovery time of TaskManager failure.

>

> Please note that the working directory needs to be located in the

> emptyDir[1], which is retained in different restarts.

>

> [1].

> https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#restart-policy

> [2]. https://kubernetes.io/docs/concepts/storage/volumes/#emptydir

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

[jira] [Commented] (FLINK-27834) Flink kubernetes operator dockerfile could not work with podman

[

https://issues.apache.org/jira/browse/FLINK-27834?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543758#comment-17543758

]

Gyula Fora commented on FLINK-27834:

I have bad feelings about this, it might lead to accidentally leaking

credentials from local working directories.

> Flink kubernetes operator dockerfile could not work with podman

> ---

>

> Key: FLINK-27834

> URL: https://issues.apache.org/jira/browse/FLINK-27834

> Project: Flink

> Issue Type: Bug

> Components: Kubernetes Operator

>Reporter: Yang Wang

>Priority: Major

>

> [1/2] STEP 16/19: COPY *.git ./.git

> Error: error building at STEP "COPY *.git ./.git": checking on sources under

> "/root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0": Rel: can't

> make relative to

> /root/FLINK/release-1.0-rc2/flink-kubernetes-operator-1.0.0; copier: stat:

> ["/*.git"]: no such file or directory

>

> podman version

> Client: Podman Engine

> Version: 4.0.2

> API Version: 4.0.2

>

>

> I think the root cause is "*.git" is not respected by podman. Maybe we could

> simply copy the whole directory when building the image.

>

> {code:java}

> WORKDIR /app

> COPY . .

> RUN --mount=type=cache,target=/root/.m2 mvn -ntp clean install -pl

> !flink-kubernetes-docs -DskipTests=$SKIP_TESTS {code}

--

This message was sent by Atlassian Jira

(v8.20.7#820007)

[jira] [Comment Edited] (FLINK-27762) Kafka WakeUpException during handling splits changes

[

https://issues.apache.org/jira/browse/FLINK-27762?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543756#comment-17543756

]

zoucan edited comment on FLINK-27762 at 5/30/22 2:50 AM:

-

[~renqs]

Thanks for your effort for this issue.

I‘ve reviewed your pr and i have a question. The exception i met is in method

{*}KafkaPartitionSplitReader#removeEmptySplits{*}. But i can't find any action

for handling this exception in that method.

{code:java}

//

org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader#removeEmptySplits

private void removeEmptySplits() {

List emptyPartitions = new ArrayList<>();

for (TopicPartition tp : consumer.assignment()) {

// WakeUpException is thrown here.

// since KafkaConsumer#wakeUp is called before,if execute

KafkaConsumer#postion() in 'if statement' above, it will throw WakeUpException.

if (consumer.position(tp) >= getStoppingOffset(tp)) {

emptyPartitions.add(tp);

}

}

// ignore irrelevant code...

} {code}

Maybe we should check whether *KafkaConsumer#wakeUp* is called before, or catch

WakeUpException in *KafkaPartitionSplitReader#removeEmptySplits.*

was (Author: JIRAUSER289972):

[~renqs]

Thanks for your effort for this issue.

I‘ve reviewed your pr and i have a question. The exception i met is in method

{*}KafkaPartitionSplitReader#removeEmptySplits{*}. But i can't find any action

for handling this exception.

{code:java}

//

org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader#removeEmptySplits

private void removeEmptySplits() {

List emptyPartitions = new ArrayList<>();

for (TopicPartition tp : consumer.assignment()) {

// WakeUpException is thrown here.

// since KafkaConsumer#wakeUp is called before,if execute

KafkaConsumer#postion() in 'if statement' above, it will throw WakeUpException.

if (consumer.position(tp) >= getStoppingOffset(tp)) {

emptyPartitions.add(tp);

}

}

// ignore irrelevant code...

} {code}

Maybe we should check whether *KafkaConsumer#wakeUp* is called before, or catch

WakeUpException in *KafkaPartitionSplitReader#removeEmptySplits.*

> Kafka WakeUpException during handling splits changes

>

>

> Key: FLINK-27762

> URL: https://issues.apache.org/jira/browse/FLINK-27762

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.14.3

>Reporter: zoucan

>Assignee: Qingsheng Ren

>Priority: Major

> Labels: pull-request-available

>

>

> We enable dynamic partition discovery in our flink job, but job failed when

> kafka partition is changed.

> Exception detail is shown as follows:

> {code:java}

> java.lang.RuntimeException: One or more fetchers have encountered exception

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcherManager.checkErrors(SplitFetcherManager.java:225)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.getNextFetch(SourceReaderBase.java:169)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.pollNext(SourceReaderBase.java:130)

> at

> org.apache.flink.streaming.api.operators.SourceOperator.emitNext(SourceOperator.java:350)

> at

> org.apache.flink.streaming.runtime.io.StreamTaskSourceInput.emitNext(StreamTaskSourceInput.java:68)

> at

> org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:65)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:496)

> at

> org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:203)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:809)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:761)

> at

> org.apache.flink.runtime.taskmanager.Task.runWithSystemExitMonitoring(Task.java:958)

> at

> org.apache.flink.runtime.taskmanager.Task.restoreAndInvoke(Task.java:937)

> at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:766)

> at org.apache.flink.runtime.taskmanager.Task.run(Task.java:575)

> at java.lang.Thread.run(Thread.java:748)

> Caused by: java.lang.RuntimeException: SplitFetcher thread 0 received

> unexpected exception while polling the records

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.runOnce(SplitFetcher.java:150)

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.run(SplitFetcher.java:105)

> at

> java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

> at jav

[GitHub] [flink] flinkbot commented on pull request #19843: [hotfix] Fix comment typo in TypeExtractor class

flinkbot commented on PR #19843: URL: https://github.com/apache/flink/pull/19843#issuecomment-1140628091 ## CI report: * 056a8e7e0028e39bd5428a8b40b22454e01a0560 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (FLINK-27762) Kafka WakeUpException during handling splits changes

[

https://issues.apache.org/jira/browse/FLINK-27762?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543756#comment-17543756

]

zoucan edited comment on FLINK-27762 at 5/30/22 2:46 AM:

-

[~renqs]

Thanks for your effort for this issue.

I‘ve reviewed your pr and i have a question. The exception i met is in method

{*}KafkaPartitionSplitReader#removeEmptySplits{*}. But i can't find any action

for handling this exception.

{code:java}

//

org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader#removeEmptySplits

private void removeEmptySplits() {

List emptyPartitions = new ArrayList<>();

for (TopicPartition tp : consumer.assignment()) {

// WakeUpException is thrown here.

// since KafkaConsumer#wakeUp is called before,if execute

KafkaConsumer#postion() in 'if statement' above, it will throw WakeUpException.

if (consumer.position(tp) >= getStoppingOffset(tp)) {

emptyPartitions.add(tp);

}

}

// ignore irrelevant code...

} {code}

Maybe we should check whether *KafkaConsumer#wakeUp* is called before, or catch

WakeUpException in *KafkaPartitionSplitReader#removeEmptySplits.*

was (Author: JIRAUSER289972):

[~renqs]

Thanks for your effort for this issue.

I‘ve reviewed your pr and i have a question. The exception i met is in method

{*}KafkaPartitionSplitReader#removeEmptySplits{*}. But i can't find any action

to handling this exception.

{code:java}

//

org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader#removeEmptySplits

private void removeEmptySplits() {

List emptyPartitions = new ArrayList<>();

for (TopicPartition tp : consumer.assignment()) {

// WakeUpException is thrown here.

// since KafkaConsumer#wakeUp is called before,if execute

KafkaConsumer#postion() in 'if statement' above, it will throw WakeUpException.

if (consumer.position(tp) >= getStoppingOffset(tp)) {

emptyPartitions.add(tp);

}

}

// ignore irrelevant code...

} {code}

Maybe we should check whether *KafkaConsumer#wakeUp* is called before, or catch

WakeUpException in *KafkaPartitionSplitReader#removeEmptySplits.*

> Kafka WakeUpException during handling splits changes

>

>

> Key: FLINK-27762

> URL: https://issues.apache.org/jira/browse/FLINK-27762

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.14.3

>Reporter: zoucan

>Assignee: Qingsheng Ren

>Priority: Major

> Labels: pull-request-available

>

>

> We enable dynamic partition discovery in our flink job, but job failed when

> kafka partition is changed.

> Exception detail is shown as follows:

> {code:java}

> java.lang.RuntimeException: One or more fetchers have encountered exception

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcherManager.checkErrors(SplitFetcherManager.java:225)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.getNextFetch(SourceReaderBase.java:169)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.pollNext(SourceReaderBase.java:130)

> at

> org.apache.flink.streaming.api.operators.SourceOperator.emitNext(SourceOperator.java:350)

> at

> org.apache.flink.streaming.runtime.io.StreamTaskSourceInput.emitNext(StreamTaskSourceInput.java:68)

> at

> org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:65)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:496)

> at

> org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:203)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:809)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:761)

> at

> org.apache.flink.runtime.taskmanager.Task.runWithSystemExitMonitoring(Task.java:958)

> at

> org.apache.flink.runtime.taskmanager.Task.restoreAndInvoke(Task.java:937)

> at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:766)

> at org.apache.flink.runtime.taskmanager.Task.run(Task.java:575)

> at java.lang.Thread.run(Thread.java:748)

> Caused by: java.lang.RuntimeException: SplitFetcher thread 0 received

> unexpected exception while polling the records

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.runOnce(SplitFetcher.java:150)

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.run(SplitFetcher.java:105)

> at

> java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

> at java.util.concurren

[jira] [Comment Edited] (FLINK-27762) Kafka WakeUpException during handling splits changes

[

https://issues.apache.org/jira/browse/FLINK-27762?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543756#comment-17543756

]

zoucan edited comment on FLINK-27762 at 5/30/22 2:45 AM:

-

[~renqs]

Thanks for your effort for this issue.

I‘ve reviewed your pr and i have a question. The exception i met is in method

{*}KafkaPartitionSplitReader#removeEmptySplits{*}. But i can't find any action

to handling this exception.

{code:java}

//

org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader#removeEmptySplits

private void removeEmptySplits() {

List emptyPartitions = new ArrayList<>();

for (TopicPartition tp : consumer.assignment()) {

// WakeUpException is thrown here.

// since KafkaConsumer#wakeUp is called before,if execute

KafkaConsumer#postion() in 'if statement' above, it will throw WakeUpException.

if (consumer.position(tp) >= getStoppingOffset(tp)) {

emptyPartitions.add(tp);

}

}

// ignore irrelevant code...

} {code}

Maybe we should check whether *KafkaConsumer#wakeUp* is called before, or catch

WakeUpException in *KafkaPartitionSplitReader#removeEmptySplits.*

was (Author: JIRAUSER289972):

[~renqs]

Thanks for your effort for this issue.

I‘ve reviewed your pr and i have a question. The exception i met is in method

{*}KafkaPartitionSplitReader#removeEmptySplits{*}. But i can't find any action

to handling this exception.

{code:java}

//

org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader#removeEmptySplits

private void removeEmptySplits() {

List emptyPartitions = new ArrayList<>();

for (TopicPartition tp : consumer.assignment()) {

// WakeUpException is thrown here.

// since KafkaConsumer#wakeUp is called before,if execute

KafkaConsumer#postion() in 'if statement' above, it will throw WakeUpException.

if (consumer.position(tp) >= getStoppingOffset(tp)) {

emptyPartitions.add(tp);

}

}

// ignore irrelevant code...

} {code}

Maybe we should check whether *KafkaConsumer#wakeUp* is called before, or catch

WakeUpException in *KafkaPartitionSplitReader#removeEmptySplits.*

> Kafka WakeUpException during handling splits changes

>

>

> Key: FLINK-27762

> URL: https://issues.apache.org/jira/browse/FLINK-27762

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.14.3

>Reporter: zoucan

>Assignee: Qingsheng Ren

>Priority: Major

> Labels: pull-request-available

>

>

> We enable dynamic partition discovery in our flink job, but job failed when

> kafka partition is changed.

> Exception detail is shown as follows:

> {code:java}

> java.lang.RuntimeException: One or more fetchers have encountered exception

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcherManager.checkErrors(SplitFetcherManager.java:225)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.getNextFetch(SourceReaderBase.java:169)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.pollNext(SourceReaderBase.java:130)

> at

> org.apache.flink.streaming.api.operators.SourceOperator.emitNext(SourceOperator.java:350)

> at

> org.apache.flink.streaming.runtime.io.StreamTaskSourceInput.emitNext(StreamTaskSourceInput.java:68)

> at

> org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:65)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:496)

> at

> org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:203)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:809)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:761)

> at

> org.apache.flink.runtime.taskmanager.Task.runWithSystemExitMonitoring(Task.java:958)

> at

> org.apache.flink.runtime.taskmanager.Task.restoreAndInvoke(Task.java:937)

> at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:766)

> at org.apache.flink.runtime.taskmanager.Task.run(Task.java:575)

> at java.lang.Thread.run(Thread.java:748)

> Caused by: java.lang.RuntimeException: SplitFetcher thread 0 received

> unexpected exception while polling the records

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.runOnce(SplitFetcher.java:150)

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.run(SplitFetcher.java:105)

> at

> java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

> at java.util.conc

[GitHub] [flink] deadwind4 opened a new pull request, #19843: [hotfix] Fix comment typo in TypeExtractor class

deadwind4 opened a new pull request, #19843: URL: https://github.com/apache/flink/pull/19843 Fix comment typo in TypeExtractor class. Add a `the` before `highest`. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink-table-store] JingsongLi commented on a diff in pull request #138: [FLINK-27707] Implement ManagedTableFactory#onCompactTable

JingsongLi commented on code in PR #138:

URL: https://github.com/apache/flink-table-store/pull/138#discussion_r884378698

##

flink-table-store-connector/src/test/java/org/apache/flink/table/store/connector/TableStoreManagedFactoryTest.java:

##

@@ -256,9 +284,288 @@ public void testCreateAndCheckTableStore(

}

}

+@Test

+public void testOnCompactTableForNoSnapshot() {

+RowType partType = RowType.of();

+MockTableStoreManagedFactory mockTableStoreManagedFactory =

+new MockTableStoreManagedFactory(partType,

NON_PARTITIONED_ROW_TYPE);

+prepare(

+TABLE + "_" + UUID.randomUUID(),

+partType,

+NON_PARTITIONED_ROW_TYPE,

+NON_PARTITIONED,

+true);

+assertThatThrownBy(

+() ->

+mockTableStoreManagedFactory.onCompactTable(

+context, new

CatalogPartitionSpec(emptyMap(

+.isInstanceOf(IllegalStateException.class)

+.hasMessageContaining("The specified table to compact does not

exist any snapshot");

+}

+

+@ParameterizedTest

+@ValueSource(booleans = {true, false})

+public void testOnCompactTableForNonPartitioned(boolean rescaleBucket)

throws Exception {

+RowType partType = RowType.of();

+runTest(

+new MockTableStoreManagedFactory(partType,

NON_PARTITIONED_ROW_TYPE),

+TABLE + "_" + UUID.randomUUID(),

+partType,

+NON_PARTITIONED_ROW_TYPE,

+NON_PARTITIONED,

+rescaleBucket);

+}

+

+@ParameterizedTest

+@ValueSource(booleans = {true, false})

+public void testOnCompactTableForSinglePartitioned(boolean rescaleBucket)

throws Exception {

+runTest(

+new MockTableStoreManagedFactory(

+SINGLE_PARTITIONED_PART_TYPE,

SINGLE_PARTITIONED_ROW_TYPE),

+TABLE + "_" + UUID.randomUUID(),

+SINGLE_PARTITIONED_PART_TYPE,

+SINGLE_PARTITIONED_ROW_TYPE,

+SINGLE_PARTITIONED,

+rescaleBucket);

+}

+

+@ParameterizedTest

+@ValueSource(booleans = {true, false})

+public void testOnCompactTableForMultiPartitioned(boolean rescaleBucket)

throws Exception {

+runTest(

+new MockTableStoreManagedFactory(),

+TABLE + "_" + UUID.randomUUID(),

+DEFAULT_PART_TYPE,

+DEFAULT_ROW_TYPE,

+MULTI_PARTITIONED,

+rescaleBucket);

+}

+

+@MethodSource("provideManifest")

+@ParameterizedTest

Review Comment:

Can we have a name for each parameter?

Very difficult to maintain without a name.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (FLINK-27762) Kafka WakeUpException during handling splits changes

[

https://issues.apache.org/jira/browse/FLINK-27762?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543756#comment-17543756

]

zoucan commented on FLINK-27762:

[~renqs]

Thanks for your effort for this issue.

I‘ve reviewed your pr and i have a question. The exception i met is in method

{*}KafkaPartitionSplitReader#removeEmptySplits{*}. But i can't find any action

to handling this exception.

{code:java}

//

org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader#removeEmptySplits

private void removeEmptySplits() {

List emptyPartitions = new ArrayList<>();

for (TopicPartition tp : consumer.assignment()) {

// WakeUpException is thrown here.

// since KafkaConsumer#wakeUp is called before,if execute

KafkaConsumer#postion() in 'if statement' above, it will throw WakeUpException.

if (consumer.position(tp) >= getStoppingOffset(tp)) {

emptyPartitions.add(tp);

}

}

// ignore irrelevant code...

} {code}

Maybe we should check whether *KafkaConsumer#wakeUp* is called before, or catch

WakeUpException in *KafkaPartitionSplitReader#removeEmptySplits.*

> Kafka WakeUpException during handling splits changes

>

>

> Key: FLINK-27762

> URL: https://issues.apache.org/jira/browse/FLINK-27762

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.14.3

>Reporter: zoucan

>Assignee: Qingsheng Ren

>Priority: Major

> Labels: pull-request-available

>

>

> We enable dynamic partition discovery in our flink job, but job failed when

> kafka partition is changed.

> Exception detail is shown as follows:

> {code:java}

> java.lang.RuntimeException: One or more fetchers have encountered exception

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcherManager.checkErrors(SplitFetcherManager.java:225)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.getNextFetch(SourceReaderBase.java:169)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.pollNext(SourceReaderBase.java:130)

> at

> org.apache.flink.streaming.api.operators.SourceOperator.emitNext(SourceOperator.java:350)

> at

> org.apache.flink.streaming.runtime.io.StreamTaskSourceInput.emitNext(StreamTaskSourceInput.java:68)

> at

> org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:65)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:496)

> at

> org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:203)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:809)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:761)

> at

> org.apache.flink.runtime.taskmanager.Task.runWithSystemExitMonitoring(Task.java:958)

> at

> org.apache.flink.runtime.taskmanager.Task.restoreAndInvoke(Task.java:937)

> at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:766)

> at org.apache.flink.runtime.taskmanager.Task.run(Task.java:575)

> at java.lang.Thread.run(Thread.java:748)

> Caused by: java.lang.RuntimeException: SplitFetcher thread 0 received

> unexpected exception while polling the records

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.runOnce(SplitFetcher.java:150)

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.run(SplitFetcher.java:105)

> at

> java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

> at java.util.concurrent.FutureTask.run(FutureTask.java:266)

> at

> java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

> at

> java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

> ... 1 more

> Caused by: org.apache.kafka.common.errors.WakeupException

> at

> org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.maybeTriggerWakeup(ConsumerNetworkClient.java:511)

> at

> org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:275)

> at

> org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:233)

> at

> org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:224)

> at

> org.apache.kafka.clients.consumer.KafkaConsumer.position(KafkaConsumer.java:1726)

> at

> org.apache.kafka.clients.consumer.KafkaConsumer.position(KafkaConsumer.java:1684)

> at

> org.apache.flink.connector.kafka.source.reader.KafkaPartiti

[jira] [Comment Edited] (FLINK-27762) Kafka WakeUpException during handling splits changes

[

https://issues.apache.org/jira/browse/FLINK-27762?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17543756#comment-17543756

]

zoucan edited comment on FLINK-27762 at 5/30/22 2:44 AM:

-

[~renqs]

Thanks for your effort for this issue.

I‘ve reviewed your pr and i have a question. The exception i met is in method

{*}KafkaPartitionSplitReader#removeEmptySplits{*}. But i can't find any action

to handling this exception.

{code:java}

//

org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader#removeEmptySplits

private void removeEmptySplits() {

List emptyPartitions = new ArrayList<>();

for (TopicPartition tp : consumer.assignment()) {

// WakeUpException is thrown here.

// since KafkaConsumer#wakeUp is called before,if execute

KafkaConsumer#postion() in 'if statement' above, it will throw WakeUpException.

if (consumer.position(tp) >= getStoppingOffset(tp)) {

emptyPartitions.add(tp);

}

}

// ignore irrelevant code...

} {code}

Maybe we should check whether *KafkaConsumer#wakeUp* is called before, or catch

WakeUpException in *KafkaPartitionSplitReader#removeEmptySplits.*

was (Author: JIRAUSER289972):

[~renqs]

Thanks for your effort for this issue.

I‘ve reviewed your pr and i have a question. The exception i met is in method

{*}KafkaPartitionSplitReader#removeEmptySplits{*}. But i can't find any action

to handling this exception.

{code:java}

//

org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader#removeEmptySplits

private void removeEmptySplits() {

List emptyPartitions = new ArrayList<>();

for (TopicPartition tp : consumer.assignment()) {

// WakeUpException is thrown here.

// since KafkaConsumer#wakeUp is called before,if execute

KafkaConsumer#postion() in 'if statement' above, it will throw WakeUpException.

if (consumer.position(tp) >= getStoppingOffset(tp)) {

emptyPartitions.add(tp);

}

}

// ignore irrelevant code...

} {code}

Maybe we should check whether *KafkaConsumer#wakeUp* is called before, or catch

WakeUpException in *KafkaPartitionSplitReader#removeEmptySplits.*

> Kafka WakeUpException during handling splits changes

>

>

> Key: FLINK-27762

> URL: https://issues.apache.org/jira/browse/FLINK-27762

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.14.3

>Reporter: zoucan

>Assignee: Qingsheng Ren

>Priority: Major

> Labels: pull-request-available

>

>

> We enable dynamic partition discovery in our flink job, but job failed when

> kafka partition is changed.

> Exception detail is shown as follows:

> {code:java}

> java.lang.RuntimeException: One or more fetchers have encountered exception

> at

> org.apache.flink.connector.base.source.reader.fetcher.SplitFetcherManager.checkErrors(SplitFetcherManager.java:225)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.getNextFetch(SourceReaderBase.java:169)

> at

> org.apache.flink.connector.base.source.reader.SourceReaderBase.pollNext(SourceReaderBase.java:130)

> at

> org.apache.flink.streaming.api.operators.SourceOperator.emitNext(SourceOperator.java:350)

> at

> org.apache.flink.streaming.runtime.io.StreamTaskSourceInput.emitNext(StreamTaskSourceInput.java:68)

> at

> org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:65)

> at

> org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:496)

> at