[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r578246029

##

File path: extensions/librdkafka/rdkafka_utils.h

##

@@ -0,0 +1,105 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#pragma once

+

+#include

+#include

+#include

+#include

+#include

+#include

+#include

+

+#include "core/logging/LoggerConfiguration.h"

+#include "utils/OptionalUtils.h"

+#include "utils/gsl.h"

+#include "rdkafka.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace utils {

+

+enum class KafkaEncoding {

+ UTF8,

+ HEX

+};

+

+struct rd_kafka_conf_deleter {

+ void operator()(rd_kafka_conf_t* ptr) const noexcept {

rd_kafka_conf_destroy(ptr); }

+};

+

+struct rd_kafka_producer_deleter {

+ void operator()(rd_kafka_t* ptr) const noexcept {

+rd_kafka_resp_err_t flush_ret = rd_kafka_flush(ptr, 1 /* ms */); //

Matching the wait time of KafkaConnection.cpp

+// If concerned, we could log potential errors here:

+// if (RD_KAFKA_RESP_ERR__TIMED_OUT == flush_ret) {

+// std::cerr << "Deleting producer failed: time-out while trying to

flush" << std::endl;

+// }

+rd_kafka_destroy(ptr);

+ }

+};

+

+struct rd_kafka_consumer_deleter {

+ void operator()(rd_kafka_t* ptr) const noexcept {

+rd_kafka_consumer_close(ptr);

+rd_kafka_destroy(ptr);

+ }

+};

+

+struct rd_kafka_topic_partition_list_deleter {

+ void operator()(rd_kafka_topic_partition_list_t* ptr) const noexcept {

rd_kafka_topic_partition_list_destroy(ptr); }

+};

+

+struct rd_kafka_topic_conf_deleter {

+ void operator()(rd_kafka_topic_conf_t* ptr) const noexcept {

rd_kafka_topic_conf_destroy(ptr); }

+};

+struct rd_kafka_topic_deleter {

+ void operator()(rd_kafka_topic_t* ptr) const noexcept {

rd_kafka_topic_destroy(ptr); }

+};

+

+struct rd_kafka_message_deleter {

+ void operator()(rd_kafka_message_t* ptr) const noexcept {

rd_kafka_message_destroy(ptr); }

+};

+

+struct rd_kafka_headers_deleter {

+ void operator()(rd_kafka_headers_t* ptr) const noexcept {

rd_kafka_headers_destroy(ptr); }

+};

+

+template <typename T>

+void kafka_headers_for_each(const rd_kafka_headers_t* headers, T

key_value_handle) {

Review comment:

Updated.
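
For readers following the quoted header: the `*_deleter` structs in `rdkafka_utils.h` appear to follow the common pattern of pairing a C handle with `std::unique_ptr` through a stateless functor. The following is a minimal sketch of that pattern only. It does not use librdkafka; `fake_handle_t`, `fake_handle_new`, `fake_handle_destroy`, and `destroy_calls_after_scope` are hypothetical stand-ins introduced for illustration.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for a C library handle such as rd_kafka_conf_t.
struct fake_handle_t { int id; };

// Counts destroy calls so the effect of the deleter is observable.
static int g_destroy_calls = 0;

// Hypothetical stand-ins for a C library's create/destroy pair.
fake_handle_t* fake_handle_new(int id) { return new fake_handle_t{id}; }
void fake_handle_destroy(fake_handle_t* ptr) { ++g_destroy_calls; delete ptr; }

// Same shape as the deleters in the diff: a stateless functor
// with a const noexcept call operator.
struct fake_handle_deleter {
  void operator()(fake_handle_t* ptr) const noexcept { fake_handle_destroy(ptr); }
};

using fake_handle_ptr = std::unique_ptr<fake_handle_t, fake_handle_deleter>;

// Creates a handle in a nested scope and reports how many times
// the destroy function ran once that scope was left.
int destroy_calls_after_scope() {
  g_destroy_calls = 0;
  {
    fake_handle_ptr handle{fake_handle_new(7)};
    assert(handle->id == 7);
  }  // handle goes out of scope here and the deleter runs
  return g_destroy_calls;
}
```

With this layout the cleanup call lives in one place, and every owner of the handle gets it automatically on scope exit or on exception.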

##

File path: extensions/librdkafka/rdkafka_utils.cpp

##

@@ -0,0 +1,121 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#include

+

+#include "rdkafka_utils.h"

+

+#include "Exception.h"

+#include "utils/StringUtils.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace utils {

+

+void setKafkaConfigurationField(rd_kafka_conf_t* configuration, const

std::string& field_name, const std::string& value) {

+ static std::array errstr{};

+ rd_kafka_conf_res_t result;

+ result = rd_kafka_conf_set(configuration, field_name.c_str(), value.c_str(),

errstr.data(), errstr.size());

+ if (RD_KAFKA_CONF_OK != result) {

+const std::string error_msg { errstr.data() };

+throw Exception(PROCESS_SCHEDULE_EXCEPTION, "rd_kafka configuration error:

" + error_msg);

+ }

+}

+

+void print_topics_list(logging::Logger& logger,

rd_kafka_topic_partition_list_t* kf_topic_partition_list) {

+ for (std::size_t i = 0; i < kf_topic_partition_list->cnt; ++i) {

+logger.log_debug("kf_topic_partition_list: topic: %s, partition: %d,

offset: %" PRId64 ".",

+kf_topic_partition_list->elems[i].topic,

kf_topic_partition_list->elems[i].pa

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r578221481

##

File path: extensions/librdkafka/ConsumeKafka.cpp

##

@@ -0,0 +1,570 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#include "ConsumeKafka.h"

+

+#include

+#include

+

+#include "core/PropertyValidation.h"

+#include "utils/ProcessorConfigUtils.h"

+#include "utils/gsl.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace core {

+// The upper limit for Max Poll Time is 4 seconds. This is because Watchdog

would potentially start

+// reporting issues with the processor health otherwise

+class ConsumeKafkaMaxPollTimeValidator : public TimePeriodValidator {

+ public:

+ ConsumeKafkaMaxPollTimeValidator(const std::string &name) // NOLINT

+ : TimePeriodValidator(name) {

+ }

+ ~ConsumeKafkaMaxPollTimeValidator() override = default;

+

+ ValidationResult validate(const std::string& subject, const std::string&

input) const override {

+uint64_t value;

+TimeUnit timeUnit;

+uint64_t value_as_ms;

+return

ValidationResult::Builder::createBuilder().withSubject(subject).withInput(input).isValid(

+core::TimePeriodValue::StringToTime(input, value, timeUnit) &&

+org::apache::nifi::minifi::core::Property::ConvertTimeUnitToMS(value,

timeUnit, value_as_ms) &&

+0 < value_as_ms && value_as_ms <= 4000).build();

+ }

+};

+} // namespace core

+namespace processors {

+

+constexpr const std::size_t ConsumeKafka::DEFAULT_MAX_POLL_RECORDS;

+constexpr char const* ConsumeKafka::DEFAULT_MAX_POLL_TIME;

+

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_NAMES;

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_PATTERNS;

+

+core::Property

ConsumeKafka::KafkaBrokers(core::PropertyBuilder::createProperty("Kafka

Brokers")

+ ->withDescription("A comma-separated list of known Kafka Brokers in the

format <host>:<port>.")

+ ->withDefaultValue("localhost:9092",

core::StandardValidators::get().NON_BLANK_VALIDATOR)

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::SecurityProtocol(core::PropertyBuilder::createProperty("Security

Protocol")

+ ->withDescription("This property is currently not supported. Protocol used

to communicate with brokers. Corresponds to Kafka's 'security.protocol'

property.")

+ ->withAllowableValues({SECURITY_PROTOCOL_PLAINTEXT/*,

SECURITY_PROTOCOL_SSL, SECURITY_PROTOCOL_SASL_PLAINTEXT,

SECURITY_PROTOCOL_SASL_SSL*/ })

+ ->withDefaultValue(SECURITY_PROTOCOL_PLAINTEXT)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNames(core::PropertyBuilder::createProperty("Topic Names")

+ ->withDescription("The name of the Kafka Topic(s) to pull from. Multiple

topic names are supported as a comma separated list.")

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNameFormat(core::PropertyBuilder::createProperty("Topic Name

Format")

+ ->withDescription("Specifies whether the Topic(s) provided are a comma

separated list of names or a single regular expression.")

+ ->withAllowableValues({TOPIC_FORMAT_NAMES,

TOPIC_FORMAT_PATTERNS})

+ ->withDefaultValue(TOPIC_FORMAT_NAMES)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::HonorTransactions(core::PropertyBuilder::createProperty("Honor

Transactions")

+ ->withDescription(

+ "Specifies whether or not MiNiFi should honor transactional guarantees

when communicating with Kafka. If false, the Processor will use an \"isolation

level\" of "

+ "read_uncomitted. This means that messages will be received as soon as

they are written to Kafka but will be pulled, even if the producer cancels the

transactions. "

+ "If this value is true, MiNiFi will not receive any messages for which

the producer's transaction was canceled, but this can result in some latency

since the consumer "

+ "must wait for the producer to finish its entire transaction instead of

pulling as the messages become available.")

+ ->withDefaultValue(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::GroupID(core::Propert

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r577719214

##

File path: extensions/librdkafka/ConsumeKafka.cpp

##

@@ -0,0 +1,578 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#include "ConsumeKafka.h"

+

+#include

+#include

+

+#include "core/PropertyValidation.h"

+#include "utils/ProcessorConfigUtils.h"

+#include "utils/gsl.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace core {

+// The upper limit for Max Poll Time is 4 seconds. This is because Watchdog

would potentially start

+// reporting issues with the processor health otherwise

+class ConsumeKafkaMaxPollTimeValidator : public TimePeriodValidator {

+ public:

+ ConsumeKafkaMaxPollTimeValidator(const std::string &name) // NOLINT

+ : TimePeriodValidator(name) {

+ }

+ ~ConsumeKafkaMaxPollTimeValidator() override = default;

+

+ ValidationResult validate(const std::string& subject, const std::string&

input) const override {

+uint64_t value;

+TimeUnit timeUnit;

+uint64_t value_as_ms;

+return

ValidationResult::Builder::createBuilder().withSubject(subject).withInput(input).isValid(

+core::TimePeriodValue::StringToTime(input, value, timeUnit) &&

+org::apache::nifi::minifi::core::Property::ConvertTimeUnitToMS(value,

timeUnit, value_as_ms) &&

+0 < value_as_ms && value_as_ms <= 4000).build();

+ }

+};

+} // namespace core

+namespace processors {

+

+constexpr const std::size_t ConsumeKafka::DEFAULT_MAX_POLL_RECORDS;

+constexpr char const* ConsumeKafka::DEFAULT_MAX_POLL_TIME;

+

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_NAMES;

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_PATTERNS;

+

+core::Property

ConsumeKafka::KafkaBrokers(core::PropertyBuilder::createProperty("Kafka

Brokers")

+ ->withDescription("A comma-separated list of known Kafka Brokers in the

format <host>:<port>.")

+ ->withDefaultValue("localhost:9092",

core::StandardValidators::get().NON_BLANK_VALIDATOR)

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::SecurityProtocol(core::PropertyBuilder::createProperty("Security

Protocol")

+ ->withDescription("This property is currently not supported. Protocol used

to communicate with brokers. Corresponds to Kafka's 'security.protocol'

property.")

+ ->withAllowableValues({SECURITY_PROTOCOL_PLAINTEXT/*,

SECURITY_PROTOCOL_SSL, SECURITY_PROTOCOL_SASL_PLAINTEXT,

SECURITY_PROTOCOL_SASL_SSL*/ })

+ ->withDefaultValue(SECURITY_PROTOCOL_PLAINTEXT)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNames(core::PropertyBuilder::createProperty("Topic Names")

+ ->withDescription("The name of the Kafka Topic(s) to pull from. Multiple

topic names are supported as a comma separated list.")

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNameFormat(core::PropertyBuilder::createProperty("Topic Name

Format")

+ ->withDescription("Specifies whether the Topic(s) provided are a comma

separated list of names or a single regular expression.")

+ ->withAllowableValues({TOPIC_FORMAT_NAMES,

TOPIC_FORMAT_PATTERNS})

+ ->withDefaultValue(TOPIC_FORMAT_NAMES)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::HonorTransactions(core::PropertyBuilder::createProperty("Honor

Transactions")

+ ->withDescription(

+ "Specifies whether or not MiNiFi should honor transactional guarantees

when communicating with Kafka. If false, the Processor will use an \"isolation

level\" of "

+ "read_uncomitted. This means that messages will be received as soon as

they are written to Kafka but will be pulled, even if the producer cancels the

transactions. "

+ "If this value is true, MiNiFi will not receive any messages for which

the producer's transaction was canceled, but this can result in some latency

since the consumer "

+ "must wait for the producer to finish its entire transaction instead of

pulling as the messages become available.")

+ ->withDefaultValue(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::GroupID(core::Propert

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r577708270

##

File path: extensions/librdkafka/ConsumeKafka.h

##

@@ -124,6 +124,17 @@ class ConsumeKafka : public core::Processor {

// Initialize, overwrite by NiFi RetryFlowFile

void initialize() override;

+ class WriteCallback : public OutputStreamCallback {

Review comment:

Indeed it does not need to be, this is just where I found it on the

first match when searching similar patterns (ApplyTemplate). Changed it to

`private`.
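
As an illustration of the change described above, here is a rough sketch of a callback class nested in the `private` section of its owner. It is not the ConsumeKafka code: `OutputCallback`, `DemoProcessor`, and the `write` method are hypothetical names, and the interface is a simplified assumption rather than MiNiFi's actual `OutputStreamCallback`.

```cpp
#include <cstddef>
#include <string>
#include <utility>

// Hypothetical minimal callback interface standing in for OutputStreamCallback.
class OutputCallback {
 public:
  virtual ~OutputCallback() = default;
  virtual std::size_t process(std::string& sink) = 0;
};

// Hypothetical processor; only the access-control layout mirrors the discussion.
class DemoProcessor {
 public:
  // Appends `payload` to `sink` through the private callback
  // and returns the number of bytes written.
  std::size_t write(const std::string& payload, std::string& sink) const {
    WriteCallback callback{payload};
    return callback.process(sink);
  }

 private:
  // Implementation detail: code outside DemoProcessor cannot name this type.
  class WriteCallback : public OutputCallback {
   public:
    explicit WriteCallback(std::string data) : data_(std::move(data)) {}

    std::size_t process(std::string& sink) override {
      sink.append(data_);
      return data_.size();
    }

   private:
    std::string data_;
  };
};
```

Keeping the nested class private means it can be renamed or reshaped later without affecting users of the processor.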

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r577701277

##

File path: extensions/librdkafka/ConsumeKafka.h

##

@@ -0,0 +1,181 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#pragma once

+

+#include

+#include

+#include

+#include

+

+#include "core/Processor.h"

+#include "core/logging/LoggerConfiguration.h"

+#include "rdkafka.h"

+#include "rdkafka_utils.h"

+#include "KafkaConnection.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace processors {

+

+class ConsumeKafka : public core::Processor {

+ public:

+ static constexpr char const* ProcessorName = "ConsumeKafka";

+

+ // Supported Properties

+ static core::Property KafkaBrokers;

+ static core::Property SecurityProtocol;

+ static core::Property TopicNames;

+ static core::Property TopicNameFormat;

+ static core::Property HonorTransactions;

+ static core::Property GroupID;

+ static core::Property OffsetReset;

+ static core::Property KeyAttributeEncoding;

+ static core::Property MessageDemarcator;

+ static core::Property MessageHeaderEncoding;

+ static core::Property HeadersToAddAsAttributes;

+ static core::Property DuplicateHeaderHandling;

+ static core::Property MaxPollRecords;

+ static core::Property MaxPollTime;

+ static core::Property SessionTimeout;

+

+ // Supported Relationships

+ static const core::Relationship Success;

+

+ // Security Protocol allowable values

+ static constexpr char const* SECURITY_PROTOCOL_PLAINTEXT = "PLAINTEXT";

+ static constexpr char const* SECURITY_PROTOCOL_SSL = "SSL";

+ static constexpr char const* SECURITY_PROTOCOL_SASL_PLAINTEXT =

"SASL_PLAINTEXT";

+ static constexpr char const* SECURITY_PROTOCOL_SASL_SSL = "SASL_SSL";

+

+ // Topic Name Format allowable values

+ static constexpr char const* TOPIC_FORMAT_NAMES = "Names";

+ static constexpr char const* TOPIC_FORMAT_PATTERNS = "Patterns";

+

+ // Offset Reset allowable values

+ static constexpr char const* OFFSET_RESET_EARLIEST = "earliest";

+ static constexpr char const* OFFSET_RESET_LATEST = "latest";

+ static constexpr char const* OFFSET_RESET_NONE = "none";

+

+ // Key Attribute Encoding allowable values

+ static constexpr char const* KEY_ATTR_ENCODING_UTF_8 = "UTF-8";

+ static constexpr char const* KEY_ATTR_ENCODING_HEX = "Hex";

+

+ // Message Header Encoding allowable values

+ static constexpr char const* MSG_HEADER_ENCODING_UTF_8 = "UTF-8";

+ static constexpr char const* MSG_HEADER_ENCODING_HEX = "Hex";

+

+ // Duplicate Header Handling allowable values

+ static constexpr char const* MSG_HEADER_KEEP_FIRST = "Keep First";

+ static constexpr char const* MSG_HEADER_KEEP_LATEST = "Keep Latest";

+ static constexpr char const* MSG_HEADER_COMMA_SEPARATED_MERGE =

"Comma-separated Merge";

+

+ // Flowfile attributes written

+ static constexpr char const* KAFKA_COUNT_ATTR = "kafka.count"; // Always 1

until we start supporting merging from batches

+ static constexpr char const* KAFKA_MESSAGE_KEY_ATTR = "kafka.key";

+ static constexpr char const* KAFKA_OFFSET_ATTR = "kafka.offset";

+ static constexpr char const* KAFKA_PARTITION_ATTR = "kafka.partition";

+ static constexpr char const* KAFKA_TOPIC_ATTR = "kafka.topic";

+

+ static constexpr const std::size_t DEFAULT_MAX_POLL_RECORDS{ 1 };

+ static constexpr char const* DEFAULT_MAX_POLL_TIME = "4 seconds";

+ static constexpr const std::size_t METADATA_COMMUNICATIONS_TIMEOUT_MS{ 6

};

+

+ explicit ConsumeKafka(std::string name, utils::Identifier uuid =

utils::Identifier()) :

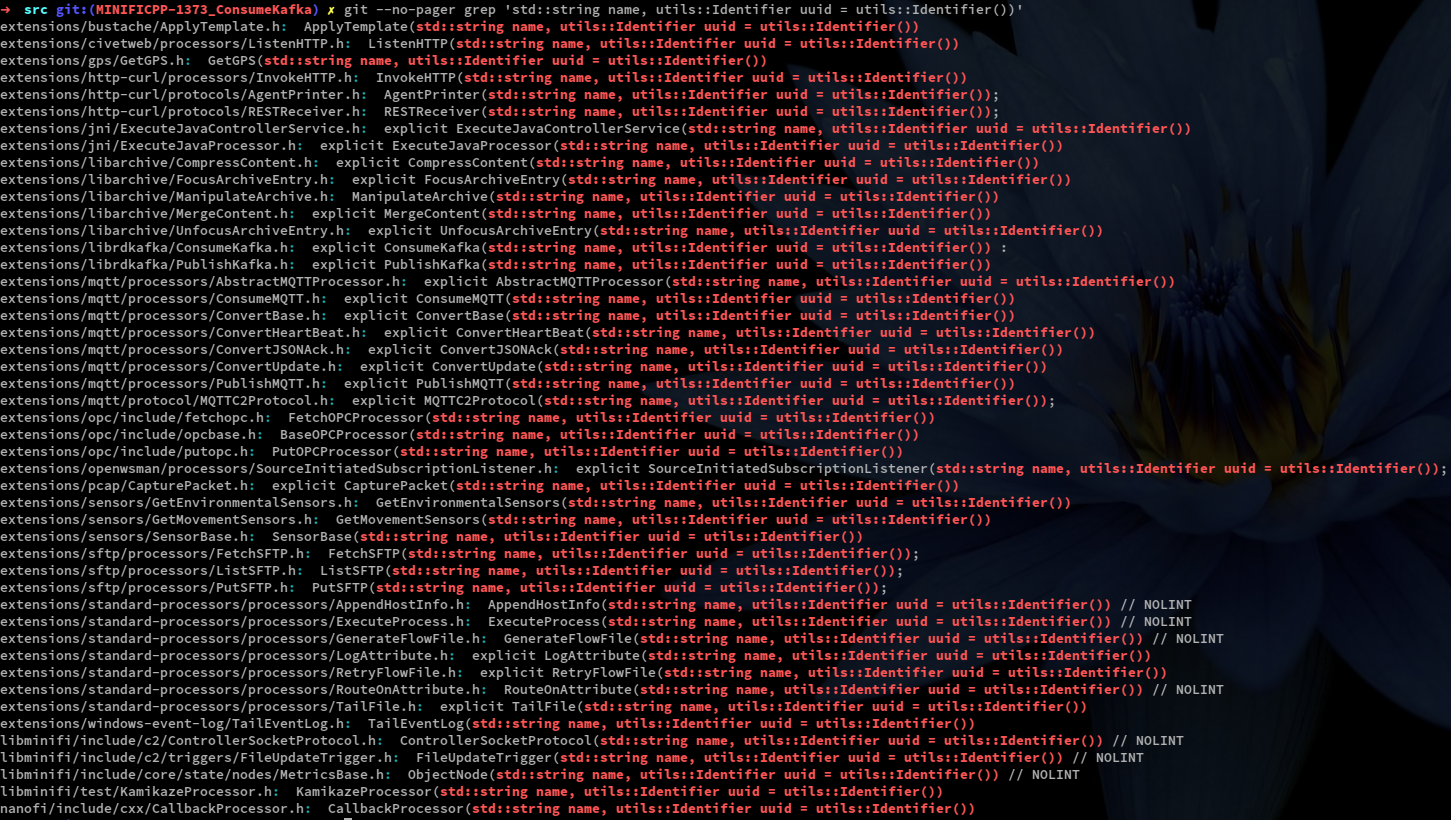

Review comment:

Changed it for this function, and added a Jira for the

search-and-replace task:

https://issues.apache.org/jira/browse/MINIFICPP-1502

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r577510561

##

File path: extensions/librdkafka/ConsumeKafka.cpp

##

@@ -0,0 +1,578 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#include "ConsumeKafka.h"

+

+#include

+#include

+

+#include "core/PropertyValidation.h"

+#include "utils/ProcessorConfigUtils.h"

+#include "utils/gsl.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace core {

+// The upper limit for Max Poll Time is 4 seconds. This is because Watchdog

would potentially start

+// reporting issues with the processor health otherwise

+class ConsumeKafkaMaxPollTimeValidator : public TimePeriodValidator {

+ public:

+ ConsumeKafkaMaxPollTimeValidator(const std::string &name) // NOLINT

+ : TimePeriodValidator(name) {

+ }

+ ~ConsumeKafkaMaxPollTimeValidator() override = default;

+

+ ValidationResult validate(const std::string& subject, const std::string&

input) const override {

+uint64_t value;

+TimeUnit timeUnit;

+uint64_t value_as_ms;

+return

ValidationResult::Builder::createBuilder().withSubject(subject).withInput(input).isValid(

+core::TimePeriodValue::StringToTime(input, value, timeUnit) &&

+org::apache::nifi::minifi::core::Property::ConvertTimeUnitToMS(value,

timeUnit, value_as_ms) &&

+0 < value_as_ms && value_as_ms <= 4000).build();

+ }

+};

+} // namespace core

+namespace processors {

+

+constexpr const std::size_t ConsumeKafka::DEFAULT_MAX_POLL_RECORDS;

+constexpr char const* ConsumeKafka::DEFAULT_MAX_POLL_TIME;

+

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_NAMES;

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_PATTERNS;

+

+core::Property

ConsumeKafka::KafkaBrokers(core::PropertyBuilder::createProperty("Kafka

Brokers")

+ ->withDescription("A comma-separated list of known Kafka Brokers in the

format <host>:<port>.")

+ ->withDefaultValue("localhost:9092",

core::StandardValidators::get().NON_BLANK_VALIDATOR)

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::SecurityProtocol(core::PropertyBuilder::createProperty("Security

Protocol")

+ ->withDescription("This property is currently not supported. Protocol used

to communicate with brokers. Corresponds to Kafka's 'security.protocol'

property.")

+ ->withAllowableValues({SECURITY_PROTOCOL_PLAINTEXT/*,

SECURITY_PROTOCOL_SSL, SECURITY_PROTOCOL_SASL_PLAINTEXT,

SECURITY_PROTOCOL_SASL_SSL*/ })

+ ->withDefaultValue(SECURITY_PROTOCOL_PLAINTEXT)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNames(core::PropertyBuilder::createProperty("Topic Names")

+ ->withDescription("The name of the Kafka Topic(s) to pull from. Multiple

topic names are supported as a comma separated list.")

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNameFormat(core::PropertyBuilder::createProperty("Topic Name

Format")

+ ->withDescription("Specifies whether the Topic(s) provided are a comma

separated list of names or a single regular expression.")

+ ->withAllowableValues({TOPIC_FORMAT_NAMES,

TOPIC_FORMAT_PATTERNS})

+ ->withDefaultValue(TOPIC_FORMAT_NAMES)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::HonorTransactions(core::PropertyBuilder::createProperty("Honor

Transactions")

+ ->withDescription(

+ "Specifies whether or not MiNiFi should honor transactional guarantees

when communicating with Kafka. If false, the Processor will use an \"isolation

level\" of "

+ "read_uncomitted. This means that messages will be received as soon as

they are written to Kafka but will be pulled, even if the producer cancels the

transactions. "

+ "If this value is true, MiNiFi will not receive any messages for which

the producer's transaction was canceled, but this can result in some latency

since the consumer "

+ "must wait for the producer to finish its entire transaction instead of

pulling as the messages become available.")

+ ->withDefaultValue(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::GroupID(core::Propert

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r577502720

##

File path: extensions/librdkafka/ConsumeKafka.cpp

##

@@ -0,0 +1,578 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#include "ConsumeKafka.h"

+

+#include

+#include

+

+#include "core/PropertyValidation.h"

+#include "utils/ProcessorConfigUtils.h"

+#include "utils/gsl.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace core {

+// The upper limit for Max Poll Time is 4 seconds. This is because Watchdog

would potentially start

+// reporting issues with the processor health otherwise

+class ConsumeKafkaMaxPollTimeValidator : public TimePeriodValidator {

+ public:

+ ConsumeKafkaMaxPollTimeValidator(const std::string &name) // NOLINT

+ : TimePeriodValidator(name) {

+ }

+ ~ConsumeKafkaMaxPollTimeValidator() override = default;

+

+ ValidationResult validate(const std::string& subject, const std::string&

input) const override {

+uint64_t value;

+TimeUnit timeUnit;

+uint64_t value_as_ms;

+return

ValidationResult::Builder::createBuilder().withSubject(subject).withInput(input).isValid(

+core::TimePeriodValue::StringToTime(input, value, timeUnit) &&

+org::apache::nifi::minifi::core::Property::ConvertTimeUnitToMS(value,

timeUnit, value_as_ms) &&

+0 < value_as_ms && value_as_ms <= 4000).build();

+ }

+};

+} // namespace core

+namespace processors {

+

+constexpr const std::size_t ConsumeKafka::DEFAULT_MAX_POLL_RECORDS;

+constexpr char const* ConsumeKafka::DEFAULT_MAX_POLL_TIME;

+

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_NAMES;

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_PATTERNS;

+

+core::Property

ConsumeKafka::KafkaBrokers(core::PropertyBuilder::createProperty("Kafka

Brokers")

+ ->withDescription("A comma-separated list of known Kafka Brokers in the

format <host>:<port>.")

+ ->withDefaultValue("localhost:9092",

core::StandardValidators::get().NON_BLANK_VALIDATOR)

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::SecurityProtocol(core::PropertyBuilder::createProperty("Security

Protocol")

+ ->withDescription("This property is currently not supported. Protocol used

to communicate with brokers. Corresponds to Kafka's 'security.protocol'

property.")

+ ->withAllowableValues({SECURITY_PROTOCOL_PLAINTEXT/*,

SECURITY_PROTOCOL_SSL, SECURITY_PROTOCOL_SASL_PLAINTEXT,

SECURITY_PROTOCOL_SASL_SSL*/ })

+ ->withDefaultValue(SECURITY_PROTOCOL_PLAINTEXT)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNames(core::PropertyBuilder::createProperty("Topic Names")

+ ->withDescription("The name of the Kafka Topic(s) to pull from. Multiple

topic names are supported as a comma separated list.")

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNameFormat(core::PropertyBuilder::createProperty("Topic Name

Format")

+ ->withDescription("Specifies whether the Topic(s) provided are a comma

separated list of names or a single regular expression.")

+ ->withAllowableValues({TOPIC_FORMAT_NAMES,

TOPIC_FORMAT_PATTERNS})

+ ->withDefaultValue(TOPIC_FORMAT_NAMES)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::HonorTransactions(core::PropertyBuilder::createProperty("Honor

Transactions")

+ ->withDescription(

+ "Specifies whether or not MiNiFi should honor transactional guarantees

when communicating with Kafka. If false, the Processor will use an \"isolation

level\" of "

+ "read_uncomitted. This means that messages will be received as soon as

they are written to Kafka but will be pulled, even if the producer cancels the

transactions. "

+ "If this value is true, MiNiFi will not receive any messages for which

the producer's transaction was canceled, but this can result in some latency

since the consumer "

+ "must wait for the producer to finish its entire transaction instead of

pulling as the messages become available.")

+ ->withDefaultValue(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::GroupID(core::Propert

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r577493724

##

File path: extensions/librdkafka/ConsumeKafka.cpp

##

@@ -0,0 +1,578 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#include "ConsumeKafka.h"

+

+#include

+#include

+

+#include "core/PropertyValidation.h"

+#include "utils/ProcessorConfigUtils.h"

+#include "utils/gsl.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace core {

+// The upper limit for Max Poll Time is 4 seconds. This is because Watchdog

would potentially start

+// reporting issues with the processor health otherwise

+class ConsumeKafkaMaxPollTimeValidator : public TimePeriodValidator {

+ public:

+ ConsumeKafkaMaxPollTimeValidator(const std::string &name) // NOLINT

+ : TimePeriodValidator(name) {

+ }

+ ~ConsumeKafkaMaxPollTimeValidator() override = default;

+

+ ValidationResult validate(const std::string& subject, const std::string&

input) const override {

+uint64_t value;

+TimeUnit timeUnit;

+uint64_t value_as_ms;

+return

ValidationResult::Builder::createBuilder().withSubject(subject).withInput(input).isValid(

+core::TimePeriodValue::StringToTime(input, value, timeUnit) &&

+org::apache::nifi::minifi::core::Property::ConvertTimeUnitToMS(value,

timeUnit, value_as_ms) &&

+0 < value_as_ms && value_as_ms <= 4000).build();

+ }

+};

+} // namespace core

+namespace processors {

+

+constexpr const std::size_t ConsumeKafka::DEFAULT_MAX_POLL_RECORDS;

+constexpr char const* ConsumeKafka::DEFAULT_MAX_POLL_TIME;

+

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_NAMES;

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_PATTERNS;

+

+core::Property

ConsumeKafka::KafkaBrokers(core::PropertyBuilder::createProperty("Kafka

Brokers")

+ ->withDescription("A comma-separated list of known Kafka Brokers in the

format <host>:<port>.")

+ ->withDefaultValue("localhost:9092",

core::StandardValidators::get().NON_BLANK_VALIDATOR)

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::SecurityProtocol(core::PropertyBuilder::createProperty("Security

Protocol")

+ ->withDescription("This property is currently not supported. Protocol used

to communicate with brokers. Corresponds to Kafka's 'security.protocol'

property.")

+ ->withAllowableValues({SECURITY_PROTOCOL_PLAINTEXT/*,

SECURITY_PROTOCOL_SSL, SECURITY_PROTOCOL_SASL_PLAINTEXT,

SECURITY_PROTOCOL_SASL_SSL*/ })

+ ->withDefaultValue(SECURITY_PROTOCOL_PLAINTEXT)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNames(core::PropertyBuilder::createProperty("Topic Names")

+ ->withDescription("The name of the Kafka Topic(s) to pull from. Multiple

topic names are supported as a comma separated list.")

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNameFormat(core::PropertyBuilder::createProperty("Topic Name

Format")

+ ->withDescription("Specifies whether the Topic(s) provided are a comma

separated list of names or a single regular expression.")

+ ->withAllowableValues({TOPIC_FORMAT_NAMES,

TOPIC_FORMAT_PATTERNS})

+ ->withDefaultValue(TOPIC_FORMAT_NAMES)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::HonorTransactions(core::PropertyBuilder::createProperty("Honor

Transactions")

+ ->withDescription(

+ "Specifies whether or not MiNiFi should honor transactional guarantees

when communicating with Kafka. If false, the Processor will use an \"isolation

level\" of "

+ "read_uncomitted. This means that messages will be received as soon as

they are written to Kafka but will be pulled, even if the producer cancels the

transactions. "

+ "If this value is true, MiNiFi will not receive any messages for which

the producer's transaction was canceled, but this can result in some latency

since the consumer "

+ "must wait for the producer to finish its entire transaction instead of

pulling as the messages become available.")

+ ->withDefaultValue(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::GroupID(core::Propert

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r577491948

##

File path: extensions/librdkafka/ConsumeKafka.h

##

@@ -0,0 +1,181 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#pragma once

+

+#include

+#include

+#include

+#include

+

+#include "core/Processor.h"

+#include "core/logging/LoggerConfiguration.h"

+#include "rdkafka.h"

+#include "rdkafka_utils.h"

+#include "KafkaConnection.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace processors {

+

+class ConsumeKafka : public core::Processor {

+ public:

+ static constexpr char const* ProcessorName = "ConsumeKafka";

+

+ // Supported Properties

+ static core::Property KafkaBrokers;

+ static core::Property SecurityProtocol;

+ static core::Property TopicNames;

+ static core::Property TopicNameFormat;

+ static core::Property HonorTransactions;

+ static core::Property GroupID;

+ static core::Property OffsetReset;

+ static core::Property KeyAttributeEncoding;

+ static core::Property MessageDemarcator;

+ static core::Property MessageHeaderEncoding;

+ static core::Property HeadersToAddAsAttributes;

+ static core::Property DuplicateHeaderHandling;

+ static core::Property MaxPollRecords;

+ static core::Property MaxPollTime;

+ static core::Property SessionTimeout;

+

+ // Supported Relationships

+ static const core::Relationship Success;

+

+ // Security Protocol allowable values

+ static constexpr char const* SECURITY_PROTOCOL_PLAINTEXT = "PLAINTEXT";

+ static constexpr char const* SECURITY_PROTOCOL_SSL = "SSL";

+ static constexpr char const* SECURITY_PROTOCOL_SASL_PLAINTEXT =

"SASL_PLAINTEXT";

+ static constexpr char const* SECURITY_PROTOCOL_SASL_SSL = "SASL_SSL";

+

+ // Topic Name Format allowable values

+ static constexpr char const* TOPIC_FORMAT_NAMES = "Names";

+ static constexpr char const* TOPIC_FORMAT_PATTERNS = "Patterns";

+

+ // Offset Reset allowable values

+ static constexpr char const* OFFSET_RESET_EARLIEST = "earliest";

+ static constexpr char const* OFFSET_RESET_LATEST = "latest";

+ static constexpr char const* OFFSET_RESET_NONE = "none";

+

+ // Key Attribute Encoding allowable values

+ static constexpr char const* KEY_ATTR_ENCODING_UTF_8 = "UTF-8";

+ static constexpr char const* KEY_ATTR_ENCODING_HEX = "Hex";

+

+ // Message Header Encoding allowable values

+ static constexpr char const* MSG_HEADER_ENCODING_UTF_8 = "UTF-8";

+ static constexpr char const* MSG_HEADER_ENCODING_HEX = "Hex";

+

+ // Duplicate Header Handling allowable values

+ static constexpr char const* MSG_HEADER_KEEP_FIRST = "Keep First";

+ static constexpr char const* MSG_HEADER_KEEP_LATEST = "Keep Latest";

+ static constexpr char const* MSG_HEADER_COMMA_SEPARATED_MERGE =

"Comma-separated Merge";

+

+ // Flowfile attributes written

+ static constexpr char const* KAFKA_COUNT_ATTR = "kafka.count"; // Always 1

until we start supporting merging from batches

+ static constexpr char const* KAFKA_MESSAGE_KEY_ATTR = "kafka.key";

+ static constexpr char const* KAFKA_OFFSET_ATTR = "kafka.offset";

+ static constexpr char const* KAFKA_PARTITION_ATTR = "kafka.partition";

+ static constexpr char const* KAFKA_TOPIC_ATTR = "kafka.topic";

+

+ static constexpr const std::size_t DEFAULT_MAX_POLL_RECORDS{ 1 };

+ static constexpr char const* DEFAULT_MAX_POLL_TIME = "4 seconds";

+ static constexpr const std::size_t METADATA_COMMUNICATIONS_TIMEOUT_MS{ 6

};

+

+ explicit ConsumeKafka(std::string name, utils::Identifier uuid =

utils::Identifier()) :

Review comment:

Agreed, I did not even notice it when copy-pasting another processor.

Maybe we should consider updating all occurrences of this usage?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r577488062

##

File path: libminifi/test/TestBase.cpp

##

@@ -61,44 +62,41 @@ TestPlan::~TestPlan() {

for (auto& processor : configured_processors_) {

processor->setScheduledState(core::ScheduledState::STOPPED);

}

+ for (auto& connection : relationships_) {

+// This is a patch solving circular references between processors and

connections

+connection->setSource(nullptr);

+connection->setDestination(nullptr);

+ }

controller_services_provider_->clearControllerServices();

}

std::shared_ptr TestPlan::addProcessor(const

std::shared_ptr &processor, const std::string& /*name*/, const

std::initializer_list& relationships,

-bool linkToPrevious) {

+bool linkToPrevious) {

if (finalized) {

return nullptr;

}

std::lock_guard guard(mutex);

-

utils::Identifier uuid = utils::IdGenerator::getIdGenerator()->generate();

-

processor->setStreamFactory(stream_factory);

// initialize the processor

processor->initialize();

processor->setFlowIdentifier(flow_version_->getFlowIdentifier());

-

processor_mapping_[processor->getUUID()] = processor;

-

if (!linkToPrevious) {

termination_ = *(relationships.begin());

} else {

std::shared_ptr last = processor_queue_.back();

-

if (last == nullptr) {

last = processor;

termination_ = *(relationships.begin());

}

-

std::stringstream connection_name;

connection_name << last->getUUIDStr() << "-to-" << processor->getUUIDStr();

-logger_->log_info("Creating %s connection for proc %d",

connection_name.str(), processor_queue_.size() + 1);

Review comment:

Restored the original. I am fine with having this log, but it seems more of
an annoyance than a useful log line. Other parts that create `minifi::Connection`
objects don't log, so seeing log lines for only some of the connections being
created could be misleading.

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r577478587

##

File path: libminifi/test/unit/StringUtilsTests.cpp

##

@@ -50,6 +50,16 @@ TEST_CASE("TestStringUtils::split4", "[test split

classname]") {

REQUIRE(expected ==

StringUtils::split(org::apache::nifi::minifi::core::getClassName(),

"::"));

}

+TEST_CASE("TestStringUtils::split5", "[test split delimiter not specified]") {

Review comment:

There is no default value for the delimiter. Updated the test

description to say `"test split with delimiter set to empty string"`.
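For illustration only, here is a minimal sketch of the case such a test would exercise. The `split_sketch` helper is a hypothetical stand-in, not MiNiFi's `StringUtils::split`, and it assumes that an empty delimiter yields one token per character; the library's actual behaviour may differ.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for a split helper; NOT MiNiFi's StringUtils::split.
// Assumption: an empty delimiter splits the input into single characters.
std::vector<std::string> split_sketch(const std::string& str, const std::string& delimiter) {
  std::vector<std::string> result;
  if (delimiter.empty()) {
    for (char c : str) {
      result.push_back(std::string(1, c));
    }
    return result;
  }
  std::size_t begin = 0;
  while (true) {
    const std::size_t end = str.find(delimiter, begin);
    if (end == std::string::npos) {
      // No further delimiter: the rest of the input is the last token.
      result.push_back(str.substr(begin));
      return result;
    }
    result.push_back(str.substr(begin, end - begin));
    begin = end + delimiter.size();
  }
}
```

Because the delimiter has to be passed explicitly, the empty string is just another argument value, which is why "delimiter set to empty string" describes the test more accurately than "delimiter not specified".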

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r577477152

##

File path: libminifi/test/TestBase.cpp

##

@@ -111,28 +109,19 @@ std::shared_ptr

TestPlan::addProcessor(const std::shared_ptr node =

std::make_shared(processor);

-

processor_nodes_.push_back(node);

-

// std::shared_ptr context =

std::make_shared(node, controller_services_provider_,

prov_repo_, flow_repo_, configuration_, content_repo_);

-

auto contextBuilder =

core::ClassLoader::getDefaultClassLoader().instantiate("ProcessContextBuilder");

-

contextBuilder =

contextBuilder->withContentRepository(content_repo_)->withFlowFileRepository(flow_repo_)->withProvider(controller_services_provider_.get())->withProvenanceRepository(prov_repo_)->withConfiguration(configuration_);

-

auto context = contextBuilder->build(node);

-

processor_contexts_.push_back(context);

-

processor_queue_.push_back(processor);

-

return processor;

}

std::shared_ptr TestPlan::addProcessor(const std::string

&processor_name, const utils::Identifier& uuid, const std::string &name,

-const

std::initializer_list& relationships, bool linkToPrevious) {

+ const std::initializer_list& relationships, bool

linkToPrevious) {

Review comment:

Updated.

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r577476694

##

File path: libminifi/test/TestBase.cpp

##

@@ -150,7 +139,7 @@ std::shared_ptr

TestPlan::addProcessor(const std::string &proce

}

std::shared_ptr TestPlan::addProcessor(const std::string

&processor_name, const std::string &name, const

std::initializer_list& relationships,

-bool linkToPrevious) {

+ bool linkToPrevious) {

Review comment:

That is correct, updated.

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r576144662

##

File path: extensions/librdkafka/rdkafka_utils.h

##

@@ -0,0 +1,104 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#pragma once

+

+#include

+#include

+#include

+#include

+#include

+#include

+#include

+

+#include "core/logging/LoggerConfiguration.h"

+#include "utils/OptionalUtils.h"

+#include "rdkafka.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace utils {

+

+enum class KafkaEncoding {

+ UTF8,

+ HEX

+};

+

+struct rd_kafka_conf_deleter {

+ void operator()(rd_kafka_conf_t* ptr) const noexcept {

rd_kafka_conf_destroy(ptr); }

+};

+

+struct rd_kafka_producer_deleter {

+ void operator()(rd_kafka_t* ptr) const noexcept {

+rd_kafka_resp_err_t flush_ret = rd_kafka_flush(ptr, 1 /* ms */); //

Matching the wait time of KafkaConnection.cpp

+// If concerned, we could log potential errors here:

+// if (RD_KAFKA_RESP_ERR__TIMED_OUT == flush_ret) {

+// std::cerr << "Deleting producer failed: time-out while trying to

flush" << std::endl;

+// }

+rd_kafka_destroy(ptr);

+ }

+};

+

+struct rd_kafka_consumer_deleter {

+ void operator()(rd_kafka_t* ptr) const noexcept {

+rd_kafka_consumer_close(ptr);

+rd_kafka_destroy(ptr);

+ }

+};

+

+struct rd_kafka_topic_partition_list_deleter {

+ void operator()(rd_kafka_topic_partition_list_t* ptr) const noexcept {

rd_kafka_topic_partition_list_destroy(ptr); }

+};

+

+struct rd_kafka_topic_conf_deleter {

+ void operator()(rd_kafka_topic_conf_t* ptr) const noexcept {

rd_kafka_topic_conf_destroy(ptr); }

+};

+struct rd_kafka_topic_deleter {

+ void operator()(rd_kafka_topic_t* ptr) const noexcept {

rd_kafka_topic_destroy(ptr); }

+};

+

+struct rd_kafka_message_deleter {

+ void operator()(rd_kafka_message_t* ptr) const noexcept {

rd_kafka_message_destroy(ptr); }

+};

+

+struct rd_kafka_headers_deleter {

+ void operator()(rd_kafka_headers_t* ptr) const noexcept {

rd_kafka_headers_destroy(ptr); }

+};

+

+template <typename T>

+void kafka_headers_for_each(const rd_kafka_headers_t* headers, T

key_value_handle) {

+ const char *key; // Null terminated, not to be freed

+ const void *value;

+ std::size_t size;

+ for (std::size_t i = 0; RD_KAFKA_RESP_ERR_NO_ERROR ==

rd_kafka_header_get_all(headers, i, &key, &value, &size); ++i) {

+key_value_handle(std::string(key), std::string(static_cast<const char*>(value), size));

Review comment:

I replaced this with a `gsl::span`, but there is still a copy - now

performed by the caller, and the function signature looks less clean.
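To make the trade-off concrete, here is a rough sketch rather than the code from the PR. `ByteView` is a hypothetical stand-in for `gsl::span<const char>`, and `RawHeader` is a hypothetical stand-in for the key/value/size triple that librdkafka hands back; neither type exists in the code base.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical non-owning view, standing in for gsl::span<const char>.
struct ByteView {
  const char* data;
  std::size_t size;
};

// Hypothetical header record, standing in for what librdkafka would return.
struct RawHeader {
  const char* key;    // null terminated
  const void* value;  // may contain embedded zero bytes
  std::size_t size;
};

// Iterates the headers and hands the handler a view: no copy is made here.
template <typename Handler>
void for_each_header_sketch(const std::vector<RawHeader>& headers, Handler handler) {
  for (const RawHeader& header : headers) {
    handler(header.key, ByteView{static_cast<const char*>(header.value), header.size});
  }
}

// A caller that needs to keep the values still has to copy them out of the view.
std::vector<std::pair<std::string, std::string>> collect_headers_sketch(const std::vector<RawHeader>& headers) {
  std::vector<std::pair<std::string, std::string>> collected;
  for_each_header_sketch(headers, [&collected](const char* key, ByteView value) {
    // The copy has moved from the iteration helper into the caller.
    collected.emplace_back(std::string(key), std::string(value.data, value.size));
  });
  return collected;
}
```

The view only pays off for handlers that inspect a value without storing it; a handler that keeps the data ends up doing the same copy as before, just one level up.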

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r576122753

##

File path: libminifi/test/TestBase.cpp

##

@@ -62,78 +63,51 @@ TestPlan::~TestPlan() {

for (auto& processor : configured_processors_) {

processor->setScheduledState(core::ScheduledState::STOPPED);

}

+ for (auto& connection : relationships_) {

+// This is a patch solving circular references between processors and

connections

+connection->setSource(nullptr);

+connection->setDestination(nullptr);

+ }

controller_services_provider_->clearControllerServices();

}

std::shared_ptr TestPlan::addProcessor(const

std::shared_ptr &processor, const std::string &name, const

std::initializer_list& relationships,

-bool linkToPrevious) {

+bool linkToPrevious) {

if (finalized) {

return nullptr;

}

std::lock_guard guard(mutex);

-

utils::Identifier uuid = utils::IdGenerator::getIdGenerator()->generate();

-

processor->setStreamFactory(stream_factory);

// initialize the processor

processor->initialize();

processor->setFlowIdentifier(flow_version_->getFlowIdentifier());

-

processor_mapping_[processor->getUUID()] = processor;

-

if (!linkToPrevious) {

termination_ = *(relationships.begin());

} else {

std::shared_ptr last = processor_queue_.back();

-

if (last == nullptr) {

last = processor;

termination_ = *(relationships.begin());

}

-

-std::stringstream connection_name;

-connection_name << last->getUUIDStr() << "-to-" << processor->getUUIDStr();

-logger_->log_info("Creating %s connection for proc %d",

connection_name.str(), processor_queue_.size() + 1);

-std::shared_ptr connection =

std::make_shared(flow_repo_, content_repo_,

connection_name.str());

-

for (const auto& relationship : relationships) {

- connection->addRelationship(relationship);

-}

-

-// link the connections so that we can test results at the end for this

-connection->setSource(last);

-connection->setDestination(processor);

-

-connection->setSourceUUID(last->getUUID());

-connection->setDestinationUUID(processor->getUUID());

-last->addConnection(connection);

-if (last != processor) {

- processor->addConnection(connection);

+ addConnection(last, relationship, processor);

Review comment:

Why do you expect behavioural differences between the two? If you want
to have more elaborate test setups, you need the flexibility of
linking up relationships on processors separately.

From a more pragmatic standpoint, this change is also exercised by all
the unit tests that are run.

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r567663765

##

File path: extensions/librdkafka/ConsumeKafka.cpp

##

@@ -0,0 +1,579 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#include "ConsumeKafka.h"

+

+#include

+#include

+

+#include "core/PropertyValidation.h"

+#include "utils/ProcessorConfigUtils.h"

+#include "utils/gsl.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace core {

+// The upper limit for Max Poll Time is 4 seconds. This is because Watchdog

would potentially start

+// reporting issues with the processor health otherwise

+class ConsumeKafkaMaxPollTimeValidator : public TimePeriodValidator {

+ public:

+ ConsumeKafkaMaxPollTimeValidator(const std::string &name) // NOLINT

+ : TimePeriodValidator(name) {

+ }

+ ~ConsumeKafkaMaxPollTimeValidator() override = default;

+

+ ValidationResult validate(const std::string& subject, const std::string&

input) const override {

+uint64_t value;

+TimeUnit timeUnit;

+uint64_t value_as_ms;

+return

ValidationResult::Builder::createBuilder().withSubject(subject).withInput(input).isValid(

+core::TimePeriodValue::StringToTime(input, value, timeUnit) &&

+org::apache::nifi::minifi::core::Property::ConvertTimeUnitToMS(value,

timeUnit, value_as_ms) &&

+0 < value_as_ms && value_as_ms <= 4000).build();

+ }

+};

+} // namespace core

+namespace processors {

+

+constexpr const std::size_t ConsumeKafka::DEFAULT_MAX_POLL_RECORDS;

+constexpr char const* ConsumeKafka::DEFAULT_MAX_POLL_TIME;

+

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_NAMES;

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_PATTERNS;

+

+core::Property

ConsumeKafka::KafkaBrokers(core::PropertyBuilder::createProperty("Kafka

Brokers")

+ ->withDescription("A comma-separated list of known Kafka Brokers in the

format <host>:<port>.")

+ ->withDefaultValue("localhost:9092",

core::StandardValidators::get().NON_BLANK_VALIDATOR)

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::SecurityProtocol(core::PropertyBuilder::createProperty("Security

Protocol")

+ ->withDescription("This property is currently not supported. Protocol used

to communicate with brokers. Corresponds to Kafka's 'security.protocol'

property.")

+ ->withAllowableValues({SECURITY_PROTOCOL_PLAINTEXT/*,

SECURITY_PROTOCOL_SSL, SECURITY_PROTOCOL_SASL_PLAINTEXT,

SECURITY_PROTOCOL_SASL_SSL*/ })

+ ->withDefaultValue(SECURITY_PROTOCOL_PLAINTEXT)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNames(core::PropertyBuilder::createProperty("Topic Names")

+ ->withDescription("The name of the Kafka Topic(s) to pull from. Multiple

topic names are supported as a comma separated list.")

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNameFormat(core::PropertyBuilder::createProperty("Topic Name

Format")

+ ->withDescription("Specifies whether the Topic(s) provided are a comma

separated list of names or a single regular expression.")

+ ->withAllowableValues({TOPIC_FORMAT_NAMES,

TOPIC_FORMAT_PATTERNS})

+ ->withDefaultValue(TOPIC_FORMAT_NAMES)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::HonorTransactions(core::PropertyBuilder::createProperty("Honor

Transactions")

+ ->withDescription(

+ "Specifies whether or not MiNiFi should honor transactional guarantees

when communicating with Kafka. If false, the Processor will use an \"isolation

level\" of "

+ "read_uncomitted. This means that messages will be received as soon as

they are written to Kafka but will be pulled, even if the producer cancels the

transactions. "

+ "If this value is true, MiNiFi will not receive any messages for which

the producer's transaction was canceled, but this can result in some latency

since the consumer "

+ "must wait for the producer to finish its entire transaction instead of

pulling as the messages become available.")

+ ->withDefaultValue(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::GroupID(core::Propert

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r567646819

##

File path: extensions/librdkafka/ConsumeKafka.cpp

##

@@ -0,0 +1,579 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#include "ConsumeKafka.h"

+

+#include

+#include

+

+#include "core/PropertyValidation.h"

+#include "utils/ProcessorConfigUtils.h"

+#include "utils/gsl.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace core {

+// The upper limit for Max Poll Time is 4 seconds. This is because Watchdog

would potentially start

+// reporting issues with the processor health otherwise

+class ConsumeKafkaMaxPollTimeValidator : public TimePeriodValidator {

+ public:

+ ConsumeKafkaMaxPollTimeValidator(const std::string &name) // NOLINT

+ : TimePeriodValidator(name) {

+ }

+ ~ConsumeKafkaMaxPollTimeValidator() override = default;

+

+ ValidationResult validate(const std::string& subject, const std::string&

input) const override {

+uint64_t value;

+TimeUnit timeUnit;

+uint64_t value_as_ms;

+return

ValidationResult::Builder::createBuilder().withSubject(subject).withInput(input).isValid(

+core::TimePeriodValue::StringToTime(input, value, timeUnit) &&

+org::apache::nifi::minifi::core::Property::ConvertTimeUnitToMS(value,

timeUnit, value_as_ms) &&

+0 < value_as_ms && value_as_ms <= 4000).build();

+ }

+};

+} // namespace core

+namespace processors {

+

+constexpr const std::size_t ConsumeKafka::DEFAULT_MAX_POLL_RECORDS;

+constexpr char const* ConsumeKafka::DEFAULT_MAX_POLL_TIME;

+

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_NAMES;

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_PATTERNS;

+

+core::Property

ConsumeKafka::KafkaBrokers(core::PropertyBuilder::createProperty("Kafka

Brokers")

+ ->withDescription("A comma-separated list of known Kafka Brokers in the

format :.")

+ ->withDefaultValue("localhost:9092",

core::StandardValidators::get().NON_BLANK_VALIDATOR)

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::SecurityProtocol(core::PropertyBuilder::createProperty("Security

Protocol")

+ ->withDescription("This property is currently not supported. Protocol used

to communicate with brokers. Corresponds to Kafka's 'security.protocol'

property.")

+ ->withAllowableValues({SECURITY_PROTOCOL_PLAINTEXT/*,

SECURITY_PROTOCOL_SSL, SECURITY_PROTOCOL_SASL_PLAINTEXT,

SECURITY_PROTOCOL_SASL_SSL*/ })

+ ->withDefaultValue(SECURITY_PROTOCOL_PLAINTEXT)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNames(core::PropertyBuilder::createProperty("Topic Names")

+ ->withDescription("The name of the Kafka Topic(s) to pull from. Multiple

topic names are supported as a comma separated list.")

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNameFormat(core::PropertyBuilder::createProperty("Topic Name

Format")

+ ->withDescription("Specifies whether the Topic(s) provided are a comma

separated list of names or a single regular expression.")

+ ->withAllowableValues({TOPIC_FORMAT_NAMES,

TOPIC_FORMAT_PATTERNS})

+ ->withDefaultValue(TOPIC_FORMAT_NAMES)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::HonorTransactions(core::PropertyBuilder::createProperty("Honor

Transactions")

+ ->withDescription(

+ "Specifies whether or not MiNiFi should honor transactional guarantees

when communicating with Kafka. If false, the Processor will use an \"isolation

level\" of "

+ "read_uncomitted. This means that messages will be received as soon as

they are written to Kafka but will be pulled, even if the producer cancels the

transactions. "

+ "If this value is true, MiNiFi will not receive any messages for which

the producer's transaction was canceled, but this can result in some latency

since the consumer "

+ "must wait for the producer to finish its entire transaction instead of

pulling as the messages become available.")

+ ->withDefaultValue(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::GroupID(core::Propert
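
The "Honor Transactions" description quoted above comes down to choosing between two values of Kafka's `isolation.level` consumer setting. A minimal sketch of that choice follows. The helper name `isolation_level_for` is hypothetical and does not appear in the quoted excerpt; how the PR actually passes the value to librdkafka is not shown here.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper, for illustration only: maps the "Honor Transactions"
// flag to the value of Kafka's "isolation.level" consumer setting.
//   true  -> "read_committed":   messages from aborted transactions are not
//                                delivered, at the cost of some latency.
//   false -> "read_uncommitted": messages are delivered as soon as they are
//                                written, even if the transaction is aborted.
inline std::string isolation_level_for(bool honor_transactions) {
  return honor_transactions ? "read_committed" : "read_uncommitted";
}
```

Since the property defaults to true, a consumer configured this way would default to `read_committed`.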

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r567646819

##

File path: extensions/librdkafka/ConsumeKafka.cpp

##

@@ -0,0 +1,579 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+#include "ConsumeKafka.h"

+

+#include

+#include

+

+#include "core/PropertyValidation.h"

+#include "utils/ProcessorConfigUtils.h"

+#include "utils/gsl.h"

+

+namespace org {

+namespace apache {

+namespace nifi {

+namespace minifi {

+namespace core {

+// The upper limit for Max Poll Time is 4 seconds. This is because Watchdog would potentially start

+// reporting issues with the processor health otherwise

+class ConsumeKafkaMaxPollTimeValidator : public TimePeriodValidator {

+ public:

+  ConsumeKafkaMaxPollTimeValidator(const std::string &name) // NOLINT

+      : TimePeriodValidator(name) {

+  }

+  ~ConsumeKafkaMaxPollTimeValidator() override = default;

+

+  ValidationResult validate(const std::string& subject, const std::string& input) const override {

+    uint64_t value;

+    TimeUnit timeUnit;

+    uint64_t value_as_ms;

+    return ValidationResult::Builder::createBuilder().withSubject(subject).withInput(input).isValid(

+        core::TimePeriodValue::StringToTime(input, value, timeUnit) &&

+        org::apache::nifi::minifi::core::Property::ConvertTimeUnitToMS(value, timeUnit, value_as_ms) &&

+        0 < value_as_ms && value_as_ms <= 4000).build();

+  }

+};

+} // namespace core

+namespace processors {

+

+constexpr const std::size_t ConsumeKafka::DEFAULT_MAX_POLL_RECORDS;

+constexpr char const* ConsumeKafka::DEFAULT_MAX_POLL_TIME;

+

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_NAMES;

+constexpr char const* ConsumeKafka::TOPIC_FORMAT_PATTERNS;

+

+core::Property

ConsumeKafka::KafkaBrokers(core::PropertyBuilder::createProperty("Kafka

Brokers")

+ ->withDescription("A comma-separated list of known Kafka Brokers in the

format <host>:<port>.")

+ ->withDefaultValue("localhost:9092",

core::StandardValidators::get().NON_BLANK_VALIDATOR)

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::SecurityProtocol(core::PropertyBuilder::createProperty("Security

Protocol")

+ ->withDescription("This property is currently not supported. Protocol used

to communicate with brokers. Corresponds to Kafka's 'security.protocol'

property.")

+ ->withAllowableValues({SECURITY_PROTOCOL_PLAINTEXT/*,

SECURITY_PROTOCOL_SSL, SECURITY_PROTOCOL_SASL_PLAINTEXT,

SECURITY_PROTOCOL_SASL_SSL*/ })

+ ->withDefaultValue(SECURITY_PROTOCOL_PLAINTEXT)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNames(core::PropertyBuilder::createProperty("Topic Names")

+ ->withDescription("The name of the Kafka Topic(s) to pull from. Multiple

topic names are supported as a comma separated list.")

+ ->supportsExpressionLanguage(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::TopicNameFormat(core::PropertyBuilder::createProperty("Topic Name

Format")

+ ->withDescription("Specifies whether the Topic(s) provided are a comma

separated list of names or a single regular expression.")

+ ->withAllowableValues({TOPIC_FORMAT_NAMES,

TOPIC_FORMAT_PATTERNS})

+ ->withDefaultValue(TOPIC_FORMAT_NAMES)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::HonorTransactions(core::PropertyBuilder::createProperty("Honor

Transactions")

+ ->withDescription(

+ "Specifies whether or not MiNiFi should honor transactional guarantees

when communicating with Kafka. If false, the Processor will use an \"isolation

level\" of "

+ "read_uncommitted. This means that messages will be received as soon as

they are written to Kafka but will be pulled, even if the producer cancels the

transactions. "

+ "If this value is true, MiNiFi will not receive any messages for which

the producer's transaction was canceled, but this can result in some latency

since the consumer "

+ "must wait for the producer to finish its entire transaction instead of

pulling as the messages become available.")

+ ->withDefaultValue(true)

+ ->isRequired(true)

+ ->build());

+

+core::Property

ConsumeKafka::GroupID(core::Propert
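
The range check performed by `ConsumeKafkaMaxPollTimeValidator` earlier in this diff (strictly positive, at most 4000 ms) can be illustrated without the MiNiFi headers. The following is a rough sketch only: `parse_period_ms` and `is_valid_max_poll_time` are hypothetical names, and the parser is a simplified stand-in that accepts just `ms`, `sec` and `min`. The project's own `TimePeriodValue::StringToTime` may accept other units and formats.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>
#include <sstream>
#include <string>

// Hypothetical, simplified stand-in for the project's time-period parsing.
// Accepts "<integer> <unit>" where unit is one of: ms, sec, min.
inline bool parse_period_ms(const std::string& input, std::uint64_t& out_ms) {
  std::istringstream stream(input);
  std::uint64_t value = 0;
  std::string unit;
  if (!(stream >> value >> unit)) {
    return false;  // not of the form "<integer> <unit>"
  }
  std::string extra;
  if (stream >> extra) {
    return false;  // trailing tokens are rejected
  }
  std::uint64_t factor = 0;
  if (unit == "ms") {
    factor = 1;
  } else if (unit == "sec") {
    factor = 1000;
  } else if (unit == "min") {
    factor = 60000;
  } else {
    return false;  // unknown unit
  }
  // Refuse values that would overflow when converted to milliseconds.
  if (value > std::numeric_limits<std::uint64_t>::max() / factor) {
    return false;
  }
  out_ms = value * factor;
  return true;
}

// Mirrors the range check in the quoted validator: 0 < value <= 4000 ms.
inline bool is_valid_max_poll_time(const std::string& input) {
  std::uint64_t ms = 0;
  return parse_period_ms(input, ms) && 0 < ms && ms <= 4000;
}
```

Under these assumptions, "4 sec" and "4000 ms" pass, while "5 sec", "0 ms" and "1 min" are rejected.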

[GitHub] [nifi-minifi-cpp] hunyadi-dev commented on a change in pull request #940: MINIFICPP-1373 - Implement ConsumeKafka

hunyadi-dev commented on a change in pull request #940:

URL: https://github.com/apache/nifi-minifi-cpp/pull/940#discussion_r567644990

##

File path: extensions/librdkafka/ConsumeKafka.cpp

##

@@ -0,0 +1,579 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0