[GitHub] [kafka] showuon commented on pull request #8623: MINOR: Update the documentations

showuon commented on pull request #8623: URL: https://github.com/apache/kafka/pull/8623#issuecomment-630618738 Thank you, @kkonstantine for many nice catches!! This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] kkonstantine commented on pull request #8623: MINOR: Update the documentations

kkonstantine commented on pull request #8623: URL: https://github.com/apache/kafka/pull/8623#issuecomment-630618055 ok to test

[GitHub] [kafka] kkonstantine commented on a change in pull request #8623: MINOR: Update the documentations

kkonstantine commented on a change in pull request #8623:

URL: https://github.com/apache/kafka/pull/8623#discussion_r427064231

##
File path: docs/ops.html
##

@@ -477,19 +477,25 @@ Limiting Bandwidth Usage during Da
Throttle was removed. The administrator can also validate the assigned configs using the kafka-configs.sh. There are two pairs of throttle
- configuration used to manage the throttling process. The throttle value itself. This is configured, at a broker
+ configuration used to manage the throttling process. First pair refers to the throttle value itself. This is configured, at a broker
level, using the dynamic properties:
- leader.replication.throttled.rate
- follower.replication.throttled.rate
+
+leader.replication.throttled.rate
+follower.replication.throttled.rate
+
+
+ Then there is the configuration pair of enumerated set of throttled replicas:

Review comment:

```suggestion
Then there is the configuration pair of enumerated sets of throttled replicas:
```
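The two pairs of throttle properties discussed in this review can be pictured with a small, dependency-free Java sketch. It only builds the key/value pairs; it does not talk to a cluster. The property names come from the quoted docs, while the class and method names are hypothetical. The `partition:broker` list format and the bytes-per-second unit are assumptions based on my reading of the Kafka configuration docs, not something this thread states.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper: illustrates the two pairs of throttle configs.
final class ThrottleConfigSketch {

    // First pair: the throttle value itself, set at broker level.
    // Assumption: the value is a rate in bytes per second.
    static Map<String, String> brokerRates(long bytesPerSecond) {
        Map<String, String> cfg = new LinkedHashMap<>();
        cfg.put("leader.replication.throttled.rate", Long.toString(bytesPerSecond));
        cfg.put("follower.replication.throttled.rate", Long.toString(bytesPerSecond));
        return cfg;
    }

    // Assumption: throttled replicas are listed as "partition:broker" pairs
    // separated by commas.
    static String replicaList(int[][] partitionBrokerPairs) {
        StringJoiner joiner = new StringJoiner(",");
        for (int[] pair : partitionBrokerPairs) {
            joiner.add(pair[0] + ":" + pair[1]);
        }
        return joiner.toString();
    }

    // Second pair: the enumerated sets of throttled replicas, set per topic.
    static Map<String, String> topicReplicas(int[][] leaders, int[][] followers) {
        Map<String, String> cfg = new LinkedHashMap<>();
        cfg.put("leader.replication.throttled.replicas", replicaList(leaders));
        cfg.put("follower.replication.throttled.replicas", replicaList(followers));
        return cfg;
    }

    public static void main(String[] args) {
        // Example values are made up for illustration.
        System.out.println(brokerRates(50_000_000L));
        System.out.println(topicReplicas(
                new int[][] {{0, 101}, {1, 102}},
                new int[][] {{0, 103}, {1, 104}}));
    }
}
```

In practice these values are assigned by the reassignment tool, as the docs under review say; the sketch is only meant to show which pair lives at which level.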

[GitHub] [kafka] showuon commented on a change in pull request #8623: MINOR: Update the documentations

showuon commented on a change in pull request #8623:

URL: https://github.com/apache/kafka/pull/8623#discussion_r427059479

##
File path: docs/ops.html
##

@@ -477,16 +477,20 @@ Limiting Bandwidth Usage during Da
Throttle was removed. The administrator can also validate the assigned configs using the kafka-configs.sh. There are two pairs of throttle
- configuration used to manage the throttling process. The throttle value itself. This is configured, at a broker
+ configuration used to manage the throttling process. This is configured, at a broker
level, using the dynamic properties:
- leader.replication.throttled.rate
- follower.replication.throttled.rate
+
+leader.replication.throttled.rate
+follower.replication.throttled.rate
+
There is also an enumerated set of throttled replicas:

Review comment:

Good suggestion! After the update, it's clearer!

[GitHub] [kafka] showuon commented on a change in pull request #8623: MINOR: Update the documentations

showuon commented on a change in pull request #8623:

URL: https://github.com/apache/kafka/pull/8623#discussion_r427054507

##
File path: docs/connect.html
##

@@ -129,9 +129,11 @@ Transformations
The file source connector reads each line as a String. We will wrap each line in a Map and then add a second field to identify the origin of the event. To do this, we use two transformations:
HoistField to place the input line inside a Map
-InsertField to add the static field. In this example we'll indicate that the record came from a file connector

Review comment:

Yes, you're right! After a second reading, it does indeed refer to the content coming from a file in `InsertField`. I'll revert this change. Thanks.
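The two-step transformation described in the quoted docs (wrap the line in a Map, then add a static field naming the origin) can be sketched in plain Java. This is not the Connect API: the methods below are hypothetical stand-ins for what `HoistField` and `InsertField` do to a record value, and the field names `line` and `data_source` are made-up examples.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical stand-ins for the two transformations, using plain Maps.
final class TransformChainSketch {

    // Mimics HoistField: place the input line inside a single-entry Map.
    static Map<String, Object> hoistField(String line, String fieldName) {
        Map<String, Object> value = new LinkedHashMap<>();
        value.put(fieldName, line);
        return value;
    }

    // Mimics InsertField with a static value: return a copy of the Map
    // with one extra field that identifies the origin of the event.
    static Map<String, Object> insertStaticField(
            Map<String, Object> value, String fieldName, String staticValue) {
        Map<String, Object> copy = new LinkedHashMap<>(value);
        copy.put(fieldName, staticValue);
        return copy;
    }

    public static void main(String[] args) {
        Map<String, Object> hoisted = hoistField("hello world", "line");
        Map<String, Object> record = insertStaticField(hoisted, "data_source", "file-connector");
        System.out.println(record);
    }
}
```

The second field is what the disputed sentence refers to: it records that the value came from a file connector.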

[GitHub] [kafka] showuon commented on a change in pull request #8623: MINOR: Update the documentations

showuon commented on a change in pull request #8623:

URL: https://github.com/apache/kafka/pull/8623#discussion_r427051965

##
File path: docs/connect.html
##

@@ -103,7 +103,7 @@ Configuring Connecto
topics.regex - A Java regular expression of topics to use as input for this connector
-For any other options, you should consult the documentation for the connector.
+For any other options, you should consult the documentation for the connector.

Review comment:

Yes, you're right. I read it again, and it does indeed refer to the docs of each individual connector. I'll revert this change. Thanks.

[GitHub] [kafka] kkonstantine commented on a change in pull request #8623: MINOR: Update the documentations

kkonstantine commented on a change in pull request #8623:

URL: https://github.com/apache/kafka/pull/8623#discussion_r427036583

##
File path: docs/connect.html
##

@@ -129,9 +129,11 @@ Transformations
The file source connector reads each line as a String. We will wrap each line in a Map and then add a second field to identify the origin of the event. To do this, we use two transformations:
HoistField to place the input line inside a Map
-InsertField to add the static field. In this example we'll indicate that the record came from a file connector

Review comment:

This is also not correct. This refers to what we say above as: _then add a second field to identify the origin of the event_ So this correctly refers to what will be added in this new field when using `InsertField`

##
File path: docs/security.html
##

@@ -398,7 +398,7 @@ Host Name Verification
ssl.keystore.password=test1234
ssl.key.password=test1234
-Other configuration settings that may also be needed depending on our requirements and the broker configuration:
+Other configuration settings that may also be needed depending on requirements and the broker configuration:

Review comment:

I'm not sure this is redundant. This refers to the user's requirements.

##
File path: docs/connect.html
##

@@ -103,7 +103,7 @@ Configuring Connecto
topics.regex - A Java regular expression of topics to use as input for this connector
-For any other options, you should consult the documentation for the connector.
+For any other options, you should consult the documentation for the connector.

Review comment:

That's not accurate. The documentation for the connector is not the same as the Worker configs. This indeed refers to the docs of each individual connector

##
File path: docs/ops.html
##

@@ -477,16 +477,20 @@ Limiting Bandwidth Usage during Da
Throttle was removed. The administrator can also validate the assigned configs using the kafka-configs.sh. There are two pairs of throttle
- configuration used to manage the throttling process. The throttle value itself. This is configured, at a broker
+ configuration used to manage the throttling process. This is configured, at a broker

Review comment:

This is not redundant. It refers to the two pairs of properties shown below. You can say instead:

```suggestion
configuration used to manage the throttling process. First pair refers to the throttle value itself. This is configured, at a broker
```

then below you could amend the sentence to say: _There is also an enumerated set of throttled replicas:_ -> _Then there is the configuration pair of enumerated set of throttled replicas:_

##
File path: docs/ops.html
##

@@ -477,16 +477,20 @@ Limiting Bandwidth Usage during Da
Throttle was removed. The administrator can also validate the assigned configs using the kafka-configs.sh. There are two pairs of throttle
- configuration used to manage the throttling process. The throttle value itself. This is configured, at a broker
+ configuration used to manage the throttling process. This is configured, at a broker
level, using the dynamic properties:
- leader.replication.throttled.rate
- follower.replication.throttled.rate
+
+leader.replication.throttled.rate
+follower.replication.throttled.rate
+
There is also an enumerated set of throttled replicas:

Review comment:

Then here you could amend the sentence to say:

```suggestion
Then there is the configuration pair of throttled replicas:
```

##
File path: docs/ops.html
##

@@ -477,16 +477,20 @@ Limiting Bandwidth Usage during Da
Throttle was removed. The administrator can also validate the assigned configs using the kafka-configs.sh. There are two pairs of throttle
- configuration used to manage the throttling process. The throttle value itself. This is configured, at a broker
+ configuration used to manage the throttling process. This is configured, at a broker
level, using the dynamic properties:
- leader.replication.throttled.rate
- follower.replication.throttled.rate
+
+leader.replication.throttled.rate
+follower.replication.throttled.rate
+
There is also an enumerated set of throttled replicas:
- leader.replication.throttled.replicas
- follower.replication.throttled.replicas
+
+leader.replication.throttled.replicas
+follower.replication.throttled.replicas
+
Which are configured per topic. All four config values are automatically assigned by kafka-reassign-partitions.sh

Review comment:

Then you can add a break here:

```suggestion
Which are configured per topic. All four config values are automatically assigned
```

[GitHub] [kafka] showuon commented on pull request #8623: MINOR: Update the documentations

showuon commented on pull request #8623: URL: https://github.com/apache/kafka/pull/8623#issuecomment-630580908 Hi @cmccabe @guozhangwang @gwenshap, this is a simple doc fix/update to connect.html/ops.html/security.html. Please help review. Thanks.

[GitHub] [kafka] showuon commented on a change in pull request #8622: MINOR: Update stream documentation

showuon commented on a change in pull request #8622:

URL: https://github.com/apache/kafka/pull/8622#discussion_r427028165

##

File path: docs/streams/upgrade-guide.html

##

@@ -35,7 +35,7 @@ Upgrade Guide and API Changes

Upgrading from any older version to {{fullDotVersion}} is possible:

you will need to do two rolling bounces, where during the first rolling bounce

phase you set the config upgrade.from="older version"

-(possible values are "0.10.0" - "2.4") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

+(possible values are "0.10.0" - "2.3") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

Review comment:

I've also updated in the kafka-site repo:

https://github.com/apache/kafka-site/pull/265/commits/513a8205e6b115ca4f876aa5d95d3756061266d5.

Thank you.


[jira] [Updated] (KAFKA-10020) KIP-616: Rename implicit Serdes instances in kafka-streams-scala

[ https://issues.apache.org/jira/browse/KAFKA-10020?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Yuriy Badalyantc updated KAFKA-10020:
-
Component/s: streams
Affects Version/s: 2.5.0
Priority: Minor (was: Major)

> KIP-616: Rename implicit Serdes instances in kafka-streams-scala
>
>
> Key: KAFKA-10020
> URL: https://issues.apache.org/jira/browse/KAFKA-10020
> Project: Kafka
> Issue Type: Improvement
> Components: streams
> Affects Versions: 2.5.0
> Reporter: Yuriy Badalyantc
> Priority: Minor
>
> To fix name clash, names of implicits in the
> org.apache.kafka.streams.scala.Serdes should be changed. Details are in the
> [KIP-616|https://cwiki.apache.org/confluence/display/KAFKA/KIP-616%3A+Rename+implicit+Serdes+instances+in+kafka-streams-scala].

--
This message was sent by Atlassian Jira
(v8.3.4#803005)

[jira] [Created] (KAFKA-10020) KIP-616: Rename implicit Serdes instances in kafka-streams-scala

Yuriy Badalyantc created KAFKA-10020:

Summary: KIP-616: Rename implicit Serdes instances in kafka-streams-scala
Key: KAFKA-10020
URL: https://issues.apache.org/jira/browse/KAFKA-10020
Project: Kafka
Issue Type: Improvement
Reporter: Yuriy Badalyantc

To fix name clash, names of implicits in the org.apache.kafka.streams.scala.Serdes should be changed. Details are in the [KIP-616|https://cwiki.apache.org/confluence/display/KAFKA/KIP-616%3A+Rename+implicit+Serdes+instances+in+kafka-streams-scala].

[GitHub] [kafka] LMnet commented on a change in pull request #8049: MINOR: Added missing default serdes to the streams.scala.Serdes

LMnet commented on a change in pull request #8049:

URL: https://github.com/apache/kafka/pull/8049#discussion_r427015288

##

File path:

streams/streams-scala/src/main/scala/org/apache/kafka/streams/scala/Serdes.scala

##

@@ -30,12 +32,15 @@ object Serdes {

implicit def JavaLong: Serde[java.lang.Long] = JSerdes.Long()

implicit def ByteArray: Serde[Array[Byte]] = JSerdes.ByteArray()

implicit def Bytes: Serde[org.apache.kafka.common.utils.Bytes] =

JSerdes.Bytes()

+ implicit def byteBufferSerde: Serde[ByteBuffer] = JSerdes.ByteBuffer()

+ implicit def shortSerde: Serde[Short] =

JSerdes.Short().asInstanceOf[Serde[Short]]

implicit def Float: Serde[Float] = JSerdes.Float().asInstanceOf[Serde[Float]]

implicit def JavaFloat: Serde[java.lang.Float] = JSerdes.Float()

implicit def Double: Serde[Double] =

JSerdes.Double().asInstanceOf[Serde[Double]]

implicit def JavaDouble: Serde[java.lang.Double] = JSerdes.Double()

implicit def Integer: Serde[Int] = JSerdes.Integer().asInstanceOf[Serde[Int]]

implicit def JavaInteger: Serde[java.lang.Integer] = JSerdes.Integer()

+ implicit def uuidSerde: Serde[UUID] = JSerdes.UUID()

Review comment:

I created the KIP:

https://cwiki.apache.org/confluence/display/KAFKA/KIP-616%3A+Rename+implicit+Serdes+instances+in+kafka-streams-scala


[jira] [Updated] (KAFKA-10019) MirrorMaker 2 did not function properly after restart (message lost, messages arriving slowly)

[ https://issues.apache.org/jira/browse/KAFKA-10019?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Kay updated KAFKA-10019: Description: MM2 did not function properly after stopping a running MM2 process then starting it again. Consumer did not receive all messages (even messages being sent after MM2 restarted). The messages arriving to the consumer were no longer at the rate as specified in "--message" --and "--timeout". To reproduce the issue # Environment: ## Region 1: one Kafka cluster, two MM2 instances, 1 producer instance ## Region 2: one Kafka cluster, two MM2 instances, 1 consumer instance # Producer (in region 1) started sending 1000 messages. ## ./bin/kafka-producer-perf-test.sh --producer.config config/producer.properties --topic topic1 --record-size 480 --num-records 1000 --throughput 17 # Consumer (in region 2) started receiving messages. ## while true; do ./bin/kafka-consumer-perf-test.sh --threads 60 *--timeout 5000* --consumer.config config/consumer.properties --topic region1.topic1 *--messages 250* --group region2-consume-region1topic1 --broker-list $KAFKA_BROKERS; done > consumer.log & # Consumer received the first 500 messages (250, 250), as "--message" specified. # Killed the MM2 process on one of two instances in both regions. # Consumer started receiving the remaining messages at a much slower "rate" (160, 29, 19, 11, 9, 6, 5, 5, 0,.. 3, 0,... 2, 0,... 1). # Restarted the MM2 processes killed at (4). # Producer sent another 1000 messages. # Still, messages no longer arrived at the "--message" rate (250 * N), but e.g. 37, 30, 23, 13, 9, 0, 1, 3... # And consumer did not receive all new 1000 messages sent after MM2 restarted. Please see the producer and consumer log files attached. In the consumer log file, you can see that after the first 2 consecutive "250" messages arrived, the message arrived differently. *Issue Summary* # MM2 does not recover from restarting its process. 
# After killing a MM2 process in the MM2 EC2 instance, a Consumer no longer received the messages at the rate of "--message" and "--timeout". # Consumer did not receive all messages even those messages were published after the mm2 process restarted. # Consumer no longer received messages at the rate of "--message" and "-timeout" even after the mm2 process restarted. was: MM2 did not function properly after stopping a running MM2 process then starting it again. Consumer did not receive all messages (even messages being sent after MM2 restarted). The messages arriving to the consumer were no longer at the rate as specified in "--message" and "--timeout". To reproduce the issue # Environment: ## Region 1: one Kafka cluster, two MM2 instances, 1 producer instance ## Region 2: one Kafka cluster, two MM2 instances, 1 consumer instance # **Producer (in region 1) started sending 1000 messages. ## ./bin/kafka-producer-perf-test.sh --producer.config config/producer.properties --topic topic1 --record-size 480 --num-records 1000 --throughput 17 # Consumer (in region 2) started receiving messages. ## while true; do ./bin/kafka-consumer-perf-test.sh --threads 60 *--timeout 5000* --consumer.config config/consumer.properties --topic region1.topic1 *--messages 250* --group region2-consume-region1topic1 --broker-list $KAFKA_BROKERS; done > consumer.log & # Consumer received the first 500 messages (250, 250), as "--message" specified. # Killed the MM2 process on one of two instances in both regions. # Consumer started receiving the remaining messages at a much slower "rate" (160, 29, 19, 11, 9, 6, 5, 5, 0,.. 3, 0,... 2, 0,... 1). # Restarted the MM2 processes killed at (4). # Producer sent another 1000 messages. # Still, messages no longer arrived at the "--message" rate (250 * N), but e.g. 37, 30, 23, 13, 9, 0, 1, 3... # And consumer did not receive all new 1000 messages sent after MM2 restarted. Please see the producer and consumer log files attached. 
In the consumer log file, you can see that after the first 2 consecutive "250" messages arrived, the message arrived differently. *Issue Summary* # MM2 does not recover from restarting its process. # After killing a MM2 process in the MM2 EC2 instance, a Consumer no longer received the messages at the rate of "--message" and "--timeout". # Consumer did not receive all messages even those messages were published after the mm2 process restarted. # Consumer no longer received messages at the rate of "--message" and "-timeout" even after the mm2 process restarted. > MirrorMaker 2 did not function properly after restart (message lost, messages > arriving slowly) > -- > > Key: KAFKA-10019 > URL: https://issues.apache.org/jira/browse/KAFKA-10019 > Project: Kafka > Issue Type: Bug >

[jira] [Created] (KAFKA-10019) MirrorMaker 2 did not function properly after restart (message lost, messages arriving slowly)

Kay created KAFKA-10019:
---

Summary: MirrorMaker 2 did not function properly after restart (message lost, messages arriving slowly)
Key: KAFKA-10019
URL: https://issues.apache.org/jira/browse/KAFKA-10019
Project: Kafka
Issue Type: Bug
Components: mirrormaker
Affects Versions: 2.4.1
Environment: Amazon Linux 2
MSK clusters: kafka.m5.large, 3 AZ, 3 brokers
MM2 instances: c5.2xlarge
Producer/Consumer instances: c5.2xlarge
Reporter: Kay
Attachments: 2a-consumer.log, 2a-producer.log

MM2 did not function properly after stopping a running MM2 process then starting it again. Consumer did not receive all messages (even messages being sent after MM2 restarted). The messages arriving to the consumer were no longer at the rate as specified in "--message" and "--timeout".

To reproduce the issue
# Environment:
## Region 1: one Kafka cluster, two MM2 instances, 1 producer instance
## Region 2: one Kafka cluster, two MM2 instances, 1 consumer instance
# Producer (in region 1) started sending 1000 messages.
## ./bin/kafka-producer-perf-test.sh --producer.config config/producer.properties --topic topic1 --record-size 480 --num-records 1000 --throughput 17
# Consumer (in region 2) started receiving messages.
## while true; do ./bin/kafka-consumer-perf-test.sh --threads 60 *--timeout 5000* --consumer.config config/consumer.properties --topic region1.topic1 *--messages 250* --group region2-consume-region1topic1 --broker-list $KAFKA_BROKERS; done > consumer.log &
# Consumer received the first 500 messages (250, 250), as "--message" specified.
# Killed the MM2 process on one of two instances in both regions.
# Consumer started receiving the remaining messages at a much slower "rate" (160, 29, 19, 11, 9, 6, 5, 5, 0,.. 3, 0,... 2, 0,... 1).
# Restarted the MM2 processes killed at (4).
# Producer sent another 1000 messages.
# Still, messages no longer arrived at the "--message" rate (250 * N), but e.g. 37, 30, 23, 13, 9, 0, 1, 3...
# And consumer did not receive all new 1000 messages sent after MM2 restarted.

Please see the producer and consumer log files attached. In the consumer log file, you can see that after the first 2 consecutive "250" messages arrived, the message arrived differently.

*Issue Summary*
# MM2 does not recover from restarting its process.
# After killing a MM2 process in the MM2 EC2 instance, a Consumer no longer received the messages at the rate of "--message" and "--timeout".
# Consumer did not receive all messages even those messages were published after the mm2 process restarted.
# Consumer no longer received messages at the rate of "--message" and "--timeout" even after the mm2 process restarted.

[GitHub] [kafka] ableegoldman commented on a change in pull request #8622: MINOR: Update stream documentation

ableegoldman commented on a change in pull request #8622:

URL: https://github.com/apache/kafka/pull/8622#discussion_r427019844

##

File path: docs/streams/upgrade-guide.html

##

@@ -35,7 +35,7 @@ Upgrade Guide and API Changes

Upgrading from any older version to {{fullDotVersion}} is possible:

you will need to do two rolling bounces, where during the first rolling bounce

phase you set the config upgrade.from="older version"

-(possible values are "0.10.0" - "2.4") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

+(possible values are "0.10.0" - "2.3") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

Review comment:

Awesome, thank you!


[GitHub] [kafka] showuon commented on a change in pull request #8622: MINOR: Update stream documentation

showuon commented on a change in pull request #8622:

URL: https://github.com/apache/kafka/pull/8622#discussion_r427018759

##

File path: docs/streams/upgrade-guide.html

##

@@ -35,7 +35,7 @@ Upgrade Guide and API Changes

Upgrading from any older version to {{fullDotVersion}} is possible:

you will need to do two rolling bounces, where during the first rolling bounce

phase you set the config upgrade.from="older version"

-(possible values are "0.10.0" - "2.4") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

+(possible values are "0.10.0" - "2.3") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

Review comment:

Thanks for the suggestion, @ableegoldman, it makes it clearer! I've

updated it in this commit

https://github.com/apache/kafka/pull/8622/commits/a3accf681e72bbba9a774a464253c1ccd7746188.

Thank you.


[jira] [Commented] (KAFKA-6520) When a Kafka Stream can't communicate with the server, it's Status stays RUNNING

[ https://issues.apache.org/jira/browse/KAFKA-6520?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17110830#comment-17110830 ]

Matthias J. Sax commented on KAFKA-6520:

[~guozhang] I think your idea about leveraging KIP-572 might not work. I dug through the code and none of the blocking calls that might throw a `TimeoutException` are on the regular processing code path. Blocking calls are made only during task initialization or restore. During normal processing, only `poll()` / `pause()` / `resume()` are called and those methods don't throw a `TimeoutException`. Thoughts?

[~VinceMu] Yes, the main purpose is to have a KafkaStreams client state DISCONNECTED. Thread state is an internal implementation detail.

> When a Kafka Stream can't communicate with the server, it's Status stays
> RUNNING
>
>
> Key: KAFKA-6520
> URL: https://issues.apache.org/jira/browse/KAFKA-6520
> Project: Kafka
> Issue Type: Improvement
> Components: streams
> Reporter: Michael Kohout
> Priority: Major
> Labels: newbie, user-experience
>
> KIP WIP:
> [https://cwiki.apache.org/confluence/display/KAFKA/KIP-457%3A+Add+DISCONNECTED+status+to+Kafka+Streams]
> When you execute the following scenario the application is always in RUNNING
> state
>
> 1)start kafka
> 2)start app, app connects to kafka and starts processing
> 3)kill kafka(stop docker container)
> 4)the application doesn't give any indication that it's no longer
> connected(Stream State is still RUNNING, and the uncaught exception handler
> isn't invoked)
>
>
> It would be useful if the Stream State had a DISCONNECTED status.
>
> See
> [this|https://groups.google.com/forum/#!topic/confluent-platform/nQh2ohgdrIQ]
> for a discussion from the google user forum. This is a link to a related
> issue.
> -
> Update: there are some discussions on the PR itself which leads me to think
> that a more general solution should be at the ClusterConnectionStates rather
> than at the Streams or even Consumer level. One proposal would be:
> * Add a new metric named `failedConnection` in SelectorMetrics which is
> recorded at `connect()` and `pollSelectionKeys()` functions, upon capture the
> IOException / RuntimeException which indicates the connection disconnected.
> * And then users of Consumer / Streams can monitor on this metric, which
> normally will only have close to zero values as we have transient
> disconnects, if it is spiking it means the brokers are consistently being
> unavailable indicating the state.
> [~Yohan123] WDYT?

[GitHub] [kafka] LMnet commented on a change in pull request #8049: MINOR: Added missing default serdes to the streams.scala.Serdes

LMnet commented on a change in pull request #8049:

URL: https://github.com/apache/kafka/pull/8049#discussion_r427015288

##

File path:

streams/streams-scala/src/main/scala/org/apache/kafka/streams/scala/Serdes.scala

##

@@ -30,12 +32,15 @@ object Serdes {

implicit def JavaLong: Serde[java.lang.Long] = JSerdes.Long()

implicit def ByteArray: Serde[Array[Byte]] = JSerdes.ByteArray()

implicit def Bytes: Serde[org.apache.kafka.common.utils.Bytes] =

JSerdes.Bytes()

+ implicit def byteBufferSerde: Serde[ByteBuffer] = JSerdes.ByteBuffer()

+ implicit def shortSerde: Serde[Short] =

JSerdes.Short().asInstanceOf[Serde[Short]]

implicit def Float: Serde[Float] = JSerdes.Float().asInstanceOf[Serde[Float]]

implicit def JavaFloat: Serde[java.lang.Float] = JSerdes.Float()

implicit def Double: Serde[Double] =

JSerdes.Double().asInstanceOf[Serde[Double]]

implicit def JavaDouble: Serde[java.lang.Double] = JSerdes.Double()

implicit def Integer: Serde[Int] = JSerdes.Integer().asInstanceOf[Serde[Int]]

implicit def JavaInteger: Serde[java.lang.Integer] = JSerdes.Integer()

+ implicit def uuidSerde: Serde[UUID] = JSerdes.UUID()

Review comment:

I created the KIP:

https://cwiki.apache.org/confluence/display/KAFKA/KIP-616+-+Rename+implicit+Serdes+instances+in+kafka-streams-scala


[jira] [Commented] (KAFKA-4327) Move Reset Tool from core to streams

[

https://issues.apache.org/jira/browse/KAFKA-4327?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17110829#comment-17110829

]

Matthias J. Sax commented on KAFKA-4327:

Thanks [~jeqo]. The original idea was to move it to the `streams` module. Why do

you want to move it to the `tools` module? Or are you referring to moving it to the

_package_ {{o.a.k.streams.tools}}?

Which parser we use is an implementation detail and does not need to be

discussed in a KIP. I am fine with your proposal.

However, moving the class to a different module/package and removing the zookeeper

parameter is a breaking change. Thus, we can work on this ticket only for the

3.0.0 release. It's unclear atm what the release number after 2.6 will be.

There was a discussion about maybe doing a major release, but as long as the decision

is not made, I would recommend holding off. Writing the KIP could be done right

now, but working on a PR would be too early, as we don't know if/when it could

get merged.

> Move Reset Tool from core to streams

>

>

> Key: KAFKA-4327

> URL: https://issues.apache.org/jira/browse/KAFKA-4327

> Project: Kafka

> Issue Type: Improvement

> Components: streams

>Reporter: Matthias J. Sax

>Assignee: Jorge Esteban Quilcate Otoya

>Priority: Blocker

> Labels: needs-kip

> Fix For: 3.0.0

>

>

> This is a follow up on https://issues.apache.org/jira/browse/KAFKA-4008

> Currently, Kafka Streams Application Reset Tool is part of {{core}} module

> due to ZK dependency. After KIP-4 got merged, this dependency can be dropped

> and the Reset Tool can be moved to {{streams}} module.

> This should also update {{InternalTopicManager#filterExistingTopics}} that

> refers to ResetTool in an exception message:

> {{"Use 'kafka.tools.StreamsResetter' tool"}}

> -> {{"Use '" + kafka.tools.StreamsResetter.class.getName() + "' tool"}}

> Doing this JIRA also requires updating the docs with regard to broker

> backward compatibility -- not all brokers support "topic delete request" and

> thus, the reset tool will not be backward compatible with all broker versions.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [kafka] ableegoldman commented on a change in pull request #8622: MINOR: Update stream documentation

ableegoldman commented on a change in pull request #8622:

URL: https://github.com/apache/kafka/pull/8622#discussion_r427013934

##

File path: docs/streams/upgrade-guide.html

##

@@ -35,7 +35,7 @@ Upgrade Guide and API Changes

Upgrading from any older version to {{fullDotVersion}} is possible:

you will need to do two rolling bounces, where during the first rolling bounce

phase you set the config upgrade.from="older version"

-(possible values are "0.10.0" - "2.4") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

+(possible values are "0.10.0" - "2.3") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

Review comment:

Can we just add a small qualifier in the first line?

`you will need to do two rolling bounces` --> `if upgrading from 2.3 or

below, you will need to do two rolling bounces`
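The two-bounce procedure under discussion can be sketched as follows. This is only an illustration, assuming a hypothetical helper class `UpgradeFromSketch` built on plain `java.util.Properties`; the `upgrade.from` key and the 2.3 cutoff come from the thread, while the version parsing and the `application.id` placeholder are assumptions made for the example.

```java
import java.util.Properties;

// Hypothetical helper for illustration; not part of Kafka Streams.
final class UpgradeFromSketch {
    static final String UPGRADE_FROM = "upgrade.from";

    // Assumes versions look like "0.10.0" or "2.3" (major.minor[.patch]).
    // Returns true when the old version is 2.3 or below.
    static boolean needsTwoBounces(String oldVersion) {
        String[] parts = oldVersion.split("\\.");
        int major = Integer.parseInt(parts[0]);
        int minor = Integer.parseInt(parts[1]);
        return major < 2 || (major == 2 && minor <= 3);
    }

    // First rolling bounce: copy the base config and set upgrade.from if needed.
    static Properties firstBounce(Properties base, String oldVersion) {
        Properties props = new Properties();
        props.putAll(base);
        if (needsTwoBounces(oldVersion)) {
            props.setProperty(UPGRADE_FROM, oldVersion);
        }
        return props;
    }

    // Second rolling bounce: the same config with upgrade.from removed.
    static Properties secondBounce(Properties firstBounceProps) {
        Properties props = new Properties();
        props.putAll(firstBounceProps);
        props.remove(UPGRADE_FROM);
        return props;
    }

    public static void main(String[] args) {
        Properties base = new Properties();
        base.setProperty("application.id", "demo-app"); // placeholder value
        Properties first = firstBounce(base, "2.3");
        Properties second = secondBounce(first);
        System.out.println("first bounce upgrade.from = " + first.getProperty(UPGRADE_FROM));
        System.out.println("second bounce has upgrade.from = " + second.containsKey(UPGRADE_FROM));
    }
}
```

With this shape, an application already on 2.4 or later gets an unchanged config on the first bounce, which is the qualifier the review comment asks the docs to spell out.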

[GitHub] [kafka] showuon commented on a change in pull request #8622: MINOR: Update stream documentation

showuon commented on a change in pull request #8622:

URL: https://github.com/apache/kafka/pull/8622#discussion_r427012797

##

File path: docs/streams/upgrade-guide.html

##

@@ -35,7 +35,7 @@ Upgrade Guide and API Changes

Upgrading from any older version to {{fullDotVersion}} is possible:

you will need to do two rolling bounces, where during the first rolling bounce

phase you set the config upgrade.from="older version"

-(possible values are "0.10.0" - "2.4") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

+(possible values are "0.10.0" - "2.3") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

Review comment:

Hi @ableegoldman, after reading the whole paragraph again, I think

you're right.

> (possible values are "0.10.0" - "2.3") and during the second you remove

it.

> This is required to safely upgrade to the new cooperative rebalancing

protocol of the embedded consumer.

> you can safely switch over to cooperative at any time once the entire

group is on 2.4+ by removing the config value and bouncing.

So, because we explicitly say that the cooperative rebalancing protocol is

available from 2.4 onward, I think keeping it as `2.3` here is fine and

correct.

Thank you.

[GitHub] [kafka] showuon commented on a change in pull request #8622: MINOR: Update stream documentation

showuon commented on a change in pull request #8622:

URL: https://github.com/apache/kafka/pull/8622#discussion_r427012797

##

File path: docs/streams/upgrade-guide.html

##

@@ -35,7 +35,7 @@ Upgrade Guide and API Changes

Upgrading from any older version to {{fullDotVersion}} is possible:

you will need to do two rolling bounces, where during the first rolling bounce

phase you set the config upgrade.from="older version"

-(possible values are "0.10.0" - "2.4") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

+(possible values are "0.10.0" - "2.3") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

Review comment:

Hi @ableegoldman, after reading the whole paragraph again, I think

you're right.

> (possible values are "0.10.0" - "2.3") and during the second you remove

it.

> This is required to safely upgrade to the new cooperative rebalancing

protocol of the embedded consumer.

> you can safely switch over to cooperative at any time once the entire

group is on 2.4+ by removing the config value and bouncing.

So, because we explicitly say that the cooperative rebalancing protocol is

available from 2.4 onward, I think keeping it as `2.3` here is fine and

correct.

Or do you have any other suggestion?

Thank you.

[GitHub] [kafka] showuon commented on a change in pull request #8622: MINOR: Update stream documentation

showuon commented on a change in pull request #8622:

URL: https://github.com/apache/kafka/pull/8622#discussion_r427012797

##

File path: docs/streams/upgrade-guide.html

##

@@ -35,7 +35,7 @@ Upgrade Guide and API Changes

Upgrading from any older version to {{fullDotVersion}} is possible:

you will need to do two rolling bounces, where during the first rolling bounce

phase you set the config upgrade.from="older version"

-(possible values are "0.10.0" - "2.4") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

+(possible values are "0.10.0" - "2.3") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

Review comment:

Hi @ableegoldman, after reading the whole paragraph again, I think

you're right.

> (possible values are "0.10.0" - "2.3") and during the second you remove

it.

> This is required to safely upgrade to the new cooperative rebalancing

protocol of the embedded consumer.

> you can safely switch over to cooperative at any time once the entire

group is on 2.4+ by removing the config value and bouncing.

So, I think keeping it as `2.3` here is fine and correct.

Thank you.

[GitHub] [kafka] showuon commented on a change in pull request #8622: MINOR: Update stream documentation

showuon commented on a change in pull request #8622:

URL: https://github.com/apache/kafka/pull/8622#discussion_r427012797

##

File path: docs/streams/upgrade-guide.html

##

@@ -35,7 +35,7 @@ Upgrade Guide and API Changes

Upgrading from any older version to {{fullDotVersion}} is possible:

you will need to do two rolling bounces, where during the first rolling bounce

phase you set the config upgrade.from="older version"

-(possible values are "0.10.0" - "2.4") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

+(possible values are "0.10.0" - "2.3") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

Review comment:

Hi @ableegoldman, after reading the whole paragraph again, I think

you're right.

> This is required to safely upgrade to the new cooperative rebalancing

protocol of the embedded consumer.

> you can safely switch over to cooperative at any time once the entire

group is on 2.4+ by removing the config value and bouncing.

So, I think keeping it as `2.3` here is fine and correct.

Thank you.

[GitHub] [kafka] ableegoldman commented on a change in pull request #8622: MINOR: Update stream documentation

ableegoldman commented on a change in pull request #8622:

URL: https://github.com/apache/kafka/pull/8622#discussion_r427009991

##

File path: docs/streams/upgrade-guide.html

##

@@ -35,7 +35,7 @@ Upgrade Guide and API Changes

Upgrading from any older version to {{fullDotVersion}} is possible:

you will need to do two rolling bounces, where during the first rolling bounce

phase you set the config upgrade.from="older version"

-(possible values are "0.10.0" - "2.4") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

+(possible values are "0.10.0" - "2.3") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

Review comment:

@showuon Can we clarify that you only need to do this if you're

upgrading from 2.3 or below? I know this seems implied by the fact that the

config's possible values stop at 2.3 but there are always creative

interpretations of seemingly obvious things 🙂

[GitHub] [kafka] showuon commented on a change in pull request #8622: MINOR: Update stream documentation

showuon commented on a change in pull request #8622:

URL: https://github.com/apache/kafka/pull/8622#discussion_r427009933

##

File path: docs/streams/upgrade-guide.html

##

@@ -35,7 +35,7 @@ Upgrade Guide and API Changes

Upgrading from any older version to {{fullDotVersion}} is possible:

you will need to do two rolling bounces, where during the first rolling bounce

phase you set the config upgrade.from="older version"

-(possible values are "0.10.0" - "2.3") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

+(possible values are "0.10.0" - "2.4") and during the

second you remove it. This is required to safely upgrade to the new cooperative

rebalancing protocol of the embedded consumer. Note that you will remain using

the old eager

Review comment:

Thank you, @ableegoldman @abbccdda @bbejeck. I've reverted this

version back to `2.3` in this commit

https://github.com/apache/kafka/pull/8622/commits/460768e71f5c7d427a6faffdded9b0478ade1db1.

Thank you very much!

[GitHub] [kafka] ableegoldman commented on a change in pull request #8681: KAFKA-10010: Should make state store registration idempotent

ableegoldman commented on a change in pull request #8681:

URL: https://github.com/apache/kafka/pull/8681#discussion_r427006280

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/ProcessorStateManager.java

##

@@ -266,7 +266,8 @@ public void registerStore(final StateStore store, final

StateRestoreCallback sta

}

if (stores.containsKey(storeName)) {

-throw new IllegalArgumentException(format("%sStore %s has already

been registered.", logPrefix, storeName));

+log.warn("State Store {} has already been registered, which could

be due to a half-way registration" +

Review comment:

Re: your concern, I don't think we can assume that a user's state

store's `init` method is idempotent. AFAIK nothing should change that's

relevant to the state store registration, but if something does (e.g.

TaskCorrupted) we'd have to wipe out everything and start it all again anyway.

[GitHub] [kafka] showuon commented on a change in pull request #8623: MINOR: Update the documentations

showuon commented on a change in pull request #8623:

URL: https://github.com/apache/kafka/pull/8623#discussion_r425522820

##

File path: docs/security.html

##

@@ -1438,7 +1438,7 @@ Examples

bin/kafka-acls.sh --authorizer-properties zookeeper.connect=localhost:2181 --add --allow-principal User:Bob --allow-principal User:Alice --allow-host 198.51.100.0 --allow-host 198.51.100.1 --operation Read --operation Write --topic Test-topic

By default, all principals that don't have an explicit acl that allows access for an operation to a resource are denied. In rare cases where an allow acl is defined that allows access to all but some principal we will have to use the --deny-principal and --deny-host option. For example, if we want to allow all users to Read from Test-topic but only deny User:BadBob from IP 198.51.100.3 we can do so using following commands:

bin/kafka-acls.sh --authorizer-properties zookeeper.connect=localhost:2181 --add --allow-principal User:* --allow-host * --deny-principal User:BadBob --deny-host 198.51.100.3 --operation Read --topic Test-topic

-Note that ``--allow-host`` and ``deny-host`` only support IP addresses (hostnames are not supported).

+Note that --allow-host and --deny-host only support IP addresses (hostnames are not supported).

Review comment:

There's no format like ` `` ` in the documentation anywhere else. Replace with `` formatting here.

[GitHub] [kafka] showuon commented on a change in pull request #8623: MINOR: Update the documentations

showuon commented on a change in pull request #8623:

URL: https://github.com/apache/kafka/pull/8623#discussion_r420534565

##

File path: docs/security.html

##

@@ -398,7 +398,7 @@ Host Name Verification

ssl.keystore.password=test1234

ssl.key.password=test1234

-Other configuration settings that may also be needed depending on our requirements and the broker configuration:

+Other configuration settings that may also be needed depending on requirements and the broker configuration:

Review comment:

Remove the redundant `our` here

[GitHub] [kafka] showuon commented on a change in pull request #8623: MINOR: Update the documentations

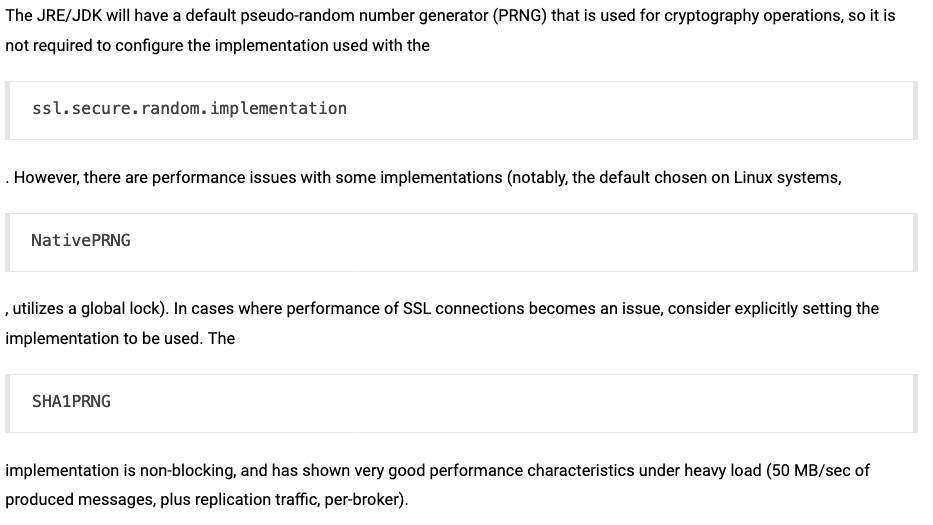

showuon commented on a change in pull request #8623:

URL: https://github.com/apache/kafka/pull/8623#discussion_r420534465

##

File path: docs/security.html

##

@@ -361,9 +361,9 @@ Host Name Verification

The JRE/JDK will have a default pseudo-random number generator (PRNG) that is used for cryptography operations, so it is not required to configure the

-implementation used with the ssl.secure.random.implementation. However, there are performance issues with some implementations (notably, the

-default chosen on Linux systems, NativePRNG, utilizes a global lock). In cases where performance of SSL connections becomes an issue,

-consider explicitly setting the implementation to be used. The SHA1PRNG implementation is non-blocking, and has shown very good performance

+implementation used with the ssl.secure.random.implementation. However, there are performance issues with some implementations (notably, the

+default chosen on Linux systems, NativePRNG, utilizes a global lock). In cases where performance of SSL connections becomes an issue,

+consider explicitly setting the implementation to be used. The SHA1PRNG implementation is non-blocking, and has shown very good performance

Review comment:

Fix wrong content format.

[GitHub] [kafka] abbccdda commented on pull request #8682: KAFKA-10011: Remove task id from lockedTaskDirectories during handleLostAll

abbccdda commented on pull request #8682:

URL: https://github.com/apache/kafka/pull/8682#issuecomment-630551559

Will attempt Sophie's suggestion here

[GitHub] [kafka] ableegoldman commented on a change in pull request #8681: KAFKA-10010: Should make state store registration idempotent

ableegoldman commented on a change in pull request #8681:

URL: https://github.com/apache/kafka/pull/8681#discussion_r427003560

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/ProcessorStateManager.java

##

@@ -266,7 +266,8 @@ public void registerStore(final StateStore store, final

StateRestoreCallback sta

}

if (stores.containsKey(storeName)) {

-throw new IllegalArgumentException(format("%sStore %s has already

been registered.", logPrefix, storeName));

+log.warn("State Store {} has already been registered, which could

be due to a half-way registration" +

Review comment:

Nah, I think we should actually keep this (although

`IllegalStateException` seems to make more sense, can we change it?) -- we

should just make sure we don't reach it
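A minimal sketch of the guard being discussed, assuming a hypothetical `StoreRegistrySketch` class in place of the real `ProcessorStateManager`: a duplicate registration keeps failing fast, but with `IllegalStateException` as suggested above. The message format mirrors the line removed in the diff; the class, its fields, and the `Object` store type are illustrative only.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for a state manager; not the actual Kafka Streams class.
final class StoreRegistrySketch {
    private final Map<String, Object> stores = new HashMap<>();
    private final String logPrefix;

    StoreRegistrySketch(String logPrefix) {
        this.logPrefix = logPrefix;
    }

    // Registers a store once; a second registration under the same name is
    // treated as a bug in the caller and reported as an illegal state.
    void registerStore(String storeName, Object store) {
        if (stores.containsKey(storeName)) {
            throw new IllegalStateException(
                String.format("%sStore %s has already been registered.", logPrefix, storeName));
        }
        stores.put(storeName, store);
    }

    boolean isRegistered(String storeName) {
        return stores.containsKey(storeName);
    }

    public static void main(String[] args) {
        StoreRegistrySketch registry = new StoreRegistrySketch("task [0_0] ");
        registry.registerStore("counts", new Object());
        try {
            registry.registerStore("counts", new Object());
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Keeping the exception means the caller is responsible for never re-registering, for example by checking `isRegistered` or by clearing state before retrying initialization.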

[jira] [Updated] (KAFKA-9989) StreamsUpgradeTest.test_metadata_upgrade could not guarantee all processor gets assigned task

[

https://issues.apache.org/jira/browse/KAFKA-9989?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Boyang Chen updated KAFKA-9989:

Attachment: 166.tgz

> StreamsUpgradeTest.test_metadata_upgrade could not guarantee all processor

> gets assigned task

>

> Key: KAFKA-9989

> URL: https://issues.apache.org/jira/browse/KAFKA-9989

> Project: Kafka

> Issue Type: Bug

> Components: streams, system tests

>Reporter: Boyang Chen

>Assignee: HaiyuanZhao

>Priority: Major

> Labels: newbie

> Attachments: 166.tgz

>

>

> System test StreamsUpgradeTest.test_metadata_upgrade could fail due to:

> "Never saw output 'processed [0-9]* records' on ubuntu@worker6"

> which if we take a closer look at, the rebalance happens but has no task

> assignment. We should fix this problem by making the rebalance result as part

> of the check, and wait for the finalized assignment (non-empty) before

> kicking off the record processing validation.

[GitHub] [kafka] abbccdda commented on a change in pull request #8681: KAFKA-10010: Should make state store registration idempotent

abbccdda commented on a change in pull request #8681:

URL: https://github.com/apache/kafka/pull/8681#discussion_r426998545

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/ProcessorStateManager.java

##

@@ -266,7 +266,8 @@ public void registerStore(final StateStore store, final

StateRestoreCallback sta

}

if (stores.containsKey(storeName)) {

-throw new IllegalArgumentException(format("%sStore %s has already

been registered.", logPrefix, storeName));

+log.warn("State Store {} has already been registered, which could

be due to a half-way registration" +

Review comment:

So are we still required to remove the illegal argument exception here?

What I'm concerned about is that the latest version of state store initialization

might be different from the previous iteration, so it's safer to just go through

the entire procedure once more.

[GitHub] [kafka] abbccdda commented on a change in pull request #8680: KAFKA-9755: Implement read path for feature versioning system (KIP-584)

abbccdda commented on a change in pull request #8680:

URL: https://github.com/apache/kafka/pull/8680#discussion_r426884892

##

File path:

clients/src/main/java/org/apache/kafka/common/feature/VersionLevelRange.java

##

@@ -0,0 +1,39 @@

+package org.apache.kafka.common.feature;

+

+import java.util.Map;

+

+/**

+ * A specialization of VersionRange representing a range of version levels.

The main specialization

+ * is that the class uses different serialization keys for min/max attributes.

+ *

+ * NOTE: This is the backing class used to define the min/max version levels

for finalized features.

+ */

+public class VersionLevelRange extends VersionRange {

Review comment:

In terms of naming, do you think `FinalizedVersionRange` is more

explicit? Also when I look closer at the class hierarchy, I feel the sharing

point between finalized version range and supported version range should be

extracted to avoid weird inheritance. What I'm proposing is to have

`VersionRange` as a super class with two subclasses: `SupportedVersionRange`

and `FinalizedVersionRange`, and make `minKeyLabel` and `maxKeyLabel` abstract

functions, WDYT?
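The hierarchy proposed above could look roughly like this. It is a sketch under stated assumptions rather than the PR's code: the class names and the abstract `minKeyLabel`/`maxKeyLabel` methods follow the comment, the validation rules and the `min_version`/`max_version` keys follow the test quoted below, and the `min_version_level`/`max_version_level` keys for the finalized range are assumed for illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Shared base: holds the range and its validation; subclasses supply only key labels.
abstract class VersionRange {
    private final long min;
    private final long max;

    protected VersionRange(long min, long max) {
        if (min < 1 || max < 1 || max < min) {
            throw new IllegalArgumentException(String.format(
                "Expected min >= 1, max >= 1 and max >= min, got min=%d, max=%d", min, max));
        }
        this.min = min;
        this.max = max;
    }

    long min() { return min; }

    long max() { return max; }

    protected abstract String minKeyLabel();

    protected abstract String maxKeyLabel();

    // Serialization is written once and picks up the subclass-specific keys.
    Map<String, Long> serialize() {
        Map<String, Long> out = new HashMap<>();
        out.put(minKeyLabel(), min);
        out.put(maxKeyLabel(), max);
        return out;
    }
}

final class SupportedVersionRange extends VersionRange {
    SupportedVersionRange(long min, long max) { super(min, max); }

    @Override protected String minKeyLabel() { return "min_version"; }

    @Override protected String maxKeyLabel() { return "max_version"; }
}

final class FinalizedVersionRange extends VersionRange {
    FinalizedVersionRange(long min, long max) { super(min, max); }

    // Key names below are assumed for this example.
    @Override protected String minKeyLabel() { return "min_version_level"; }

    @Override protected String maxKeyLabel() { return "max_version_level"; }
}
```

Neither subclass inherits from the other in this layout, which is the "weird inheritance" the comment wants to avoid.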

##

File path:

clients/src/test/java/org/apache/kafka/common/feature/VersionRangeTest.java

##

@@ -0,0 +1,150 @@

+package org.apache.kafka.common.feature;

+

+import java.util.HashMap;

+import java.util.Map;

+

+import org.junit.Test;

+

+import static org.junit.Assert.assertEquals;

+import static org.junit.Assert.assertFalse;

+import static org.junit.Assert.assertThrows;

+import static org.junit.Assert.assertTrue;

+

+public class VersionRangeTest {

+@Test

+public void testFailDueToInvalidParams() {

+// min and max can't be < 1.

+assertThrows(

+IllegalArgumentException.class,

+() -> new VersionRange(0, 0));

+assertThrows(

+IllegalArgumentException.class,

+() -> new VersionRange(-1, -1));

+// min can't be < 1.

+assertThrows(

+IllegalArgumentException.class,

+() -> new VersionRange(0, 1));

+assertThrows(

+IllegalArgumentException.class,

+() -> new VersionRange(-1, 1));

+// max can't be < 1.

+assertThrows(

+IllegalArgumentException.class,

+() -> new VersionRange(1, 0));

+assertThrows(

+IllegalArgumentException.class,

+() -> new VersionRange(1, -1));

+// min can't be > max.

+assertThrows(

+IllegalArgumentException.class,

+() -> new VersionRange(2, 1));

+}

+

+@Test

+public void testSerializeDeserializeTest() {

+VersionRange versionRange = new VersionRange(1, 2);

+assertEquals(1, versionRange.min());

+assertEquals(2, versionRange.max());

+

+Map serialized = versionRange.serialize();

+assertEquals(

+new HashMap() {

+{

+put("min_version", versionRange.min());

+put("max_version", versionRange.max());

+}

+},

+serialized

+);

+

+VersionRange deserialized = VersionRange.deserialize(serialized);

+assertEquals(1, deserialized.min());

+assertEquals(2, deserialized.max());

+assertEquals(versionRange, deserialized);

+}

+

+@Test

+public void testDeserializationFailureTest() {

+// min_version can't be < 1.

+Map invalidWithBadMinVersion = new HashMap() {

+{

+put("min_version", 0L);

+put("max_version", 1L);

+}

+};

+assertThrows(

+IllegalArgumentException.class,

+() -> VersionRange.deserialize(invalidWithBadMinVersion));

+

+// max_version can't be < 1.

+Map invalidWithBadMaxVersion = new HashMap() {

+{

+put("min_version", 1L);

+put("max_version", 0L);

+}

+};

+assertThrows(

+IllegalArgumentException.class,

+() -> VersionRange.deserialize(invalidWithBadMaxVersion));

+

+// min_version and max_version can't be < 1.

+Map invalidWithBadMinMaxVersion = new HashMap() {

+{

+put("min_version", 0L);

+put("max_version", 0L);

+}

+};

+assertThrows(

+IllegalArgumentException.class,

+() -> VersionRange.deserialize(invalidWithBadMinMaxVersion));

+

+// min_version can't be > max_version.

+Map invalidWithLowerMaxVersion = new HashMap() {

+{

+put("min_version", 2L);

+put("max_version", 1L);

+}

+};

+assertThrows(

+IllegalArgumentException.class,

+() -> VersionRange.deserialize(invalidWithLowerMaxVersion));

+

+// min_version key missing.

+Map invalidWithMinKeyMissing = n

[jira] [Commented] (KAFKA-10016) Support For Purge Topic

[

https://issues.apache.org/jira/browse/KAFKA-10016?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17110772#comment-17110772

]

Matthias J. Sax commented on KAFKA-10016:

-

Isn't this already supported via

[https://cwiki.apache.org/confluence/display/KAFKA/KIP-107%3A+Add+deleteRecordsBefore%28%29+API+in+AdminClient]?

> Support For Purge Topic

> ---

>

> Key: KAFKA-10016

> URL: https://issues.apache.org/jira/browse/KAFKA-10016

> Project: Kafka

> Issue Type: Improvement

>Reporter: David Mollitor

>Priority: Major

>

> Some discussions about how to purge a topic. Please add native support for

> this operation. Is there a "starting offset" for each topic? Such a vehicle

> would allow for this value to be easily set with the current offset and the

> brokers will skip (and clean) everything before that.

>

> [https://stackoverflow.com/questions/16284399/purge-kafka-topic]

>

> {code:none}

> kafka-topics --topic mytopic --purge

> {code}

[jira] [Updated] (KAFKA-10004) KAFKA-10004: ConfigCommand fails to find default broker configs without ZK

[

https://issues.apache.org/jira/browse/KAFKA-10004?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Colin McCabe updated KAFKA-10004:

-

Summary: KAFKA-10004: ConfigCommand fails to find default broker configs

without ZK (was: using kafka-configs.sh --describe for brokers will have error

when querying default broker)

> KAFKA-10004: ConfigCommand fails to find default broker configs without ZK

> --

>

> Key: KAFKA-10004

> URL: https://issues.apache.org/jira/browse/KAFKA-10004

> Project: Kafka

> Issue Type: Bug

>Affects Versions: 2.5.0

>Reporter: Luke Chen

>Assignee: Luke Chen

>Priority: Major

> Fix For: 2.6.0

>

>

> When running

> {code:java}

> bin/kafka-configs.sh --describe --bootstrap-server localhost:9092

> --entity-type brokers

> {code}

> the output will be:

> Dynamic configs for broker 0 are:

> Dynamic configs for broker are:

> *The entity name for brokers must be a valid integer broker id, found:

> *

>

> The default entity cannot successfully get the configs.

[GitHub] [kafka] ableegoldman commented on a change in pull request #8677: KAFKA-9999: Make internal topic creation error non-fatal

ableegoldman commented on a change in pull request #8677:

URL: https://github.com/apache/kafka/pull/8677#discussion_r426983346

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/InternalTopicManager.java

##

@@ -171,7 +173,7 @@ public InternalTopicManager(final Admin adminClient, final

StreamsConfig streams

"This can happen if the Kafka cluster is temporary not

available. " +

"You can increase admin client config `retries` to be

resilient against this error.", retries);

log.error(timeoutAndRetryError);

-throw new StreamsException(timeoutAndRetryError);

+throw new TaskMigratedException("Time out for creating internal

topics", new TimeoutException(timeoutAndRetryError));

Review comment:

Actually, I'm not sure we necessarily even _need_ to call on the

`FallbackPriorTaskAssignor`, we just need to schedule the followup and remove

the affected tasks from the assignment

[GitHub] [kafka] cmccabe commented on pull request #8675: KAFKA-10004: Fix the default broker configs cannot be displayed when using kafka-configs.sh --describe

cmccabe commented on pull request #8675:

URL: https://github.com/apache/kafka/pull/8675#issuecomment-630526017

@showuon : thanks for the fix! You might want to fix your git configuration since your email is showing up as `showuon <43372967+show...@users.noreply.github.com>`

[GitHub] [kafka] cmccabe merged pull request #8675: KAFKA-10004: Fix the default broker configs cannot be displayed when using kafka-configs.sh --describe

cmccabe merged pull request #8675: URL: https://github.com/apache/kafka/pull/8675

[GitHub] [kafka] ableegoldman commented on a change in pull request #8677: KAFKA-9999: Make internal topic creation error non-fatal

ableegoldman commented on a change in pull request #8677:
URL: https://github.com/apache/kafka/pull/8677#discussion_r426981901

## File path: streams/src/main/java/org/apache/kafka/streams/processor/internals/InternalTopicManager.java ##
@@ -171,7 +173,7 @@ public InternalTopicManager(final Admin adminClient, final StreamsConfig streams
"This can happen if the Kafka cluster is temporary not available. " +
"You can increase admin client config `retries` to be resilient against this error.", retries);
log.error(timeoutAndRetryError);
-throw new StreamsException(timeoutAndRetryError);
+throw new TaskMigratedException("Time out for creating internal topics", new TimeoutException(timeoutAndRetryError));

Review comment:
Good point. What if we just call on the `FallbackPriorTaskAssignor`, like we do when `listOffsets` fails, and then remove any tasks that involve internal topics we failed to create? And schedule the followup rebalance for "immediately".
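
The "remove any tasks that involve internal topics we failed to create" step could look roughly like the sketch below. This is only an illustration under stated assumptions: `FailedTopicTaskFilterSketch`, `assignableTasks`, the string task ids, and the topic names are all hypothetical and are not part of Kafka Streams. It does not model the `FallbackPriorTaskAssignor` or the followup rebalance.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper, not a Kafka Streams class.
class FailedTopicTaskFilterSketch {

    // Returns the ids of tasks that do not depend on any internal topic
    // whose creation failed. Task ids and topic names are plain strings
    // here purely for illustration.
    static Set<String> assignableTasks(final Map<String, Set<String>> internalTopicsByTask,
                                       final Set<String> failedTopics) {
        final Set<String> assignable = new TreeSet<>();
        for (final Map.Entry<String, Set<String>> entry : internalTopicsByTask.entrySet()) {
            // Keep the task only if none of its internal topics failed.
            if (Collections.disjoint(entry.getValue(), failedTopics)) {
                assignable.add(entry.getKey());
            }
        }
        return assignable;
    }

    public static void main(final String[] args) {
        final Map<String, Set<String>> topicsByTask = new HashMap<>();
        topicsByTask.put("0_0", new HashSet<>(Arrays.asList("app-repartition")));
        topicsByTask.put("1_0", new HashSet<>(Arrays.asList("app-changelog")));
        final Set<String> failed = Collections.singleton("app-repartition");
        System.out.println(assignableTasks(topicsByTask, failed));
    }
}
```

Tasks filtered out this way would be the ones to revisit in the followup rebalance, once topic creation has had a chance to succeed.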

[GitHub] [kafka] jiameixie commented on pull request #8692: KAFKA-10018:Change sh to bash

jiameixie commented on pull request #8692: URL: https://github.com/apache/kafka/pull/8692#issuecomment-630523741 @ijuma @abbccdda @mjsax @halorgium @astubbs @alexism @glasser PTAL, thanks

[GitHub] [kafka] cmccabe commented on pull request #8675: KAFKA-10004: Fix the default broker configs cannot be displayed when using kafka-configs.sh --describe

cmccabe commented on pull request #8675: URL: https://github.com/apache/kafka/pull/8675#issuecomment-630523110 LGTM

[GitHub] [kafka] ableegoldman edited a comment on pull request #8682: KAFKA-10011: Remove task id from lockedTaskDirectories during handleLostAll

ableegoldman edited a comment on pull request #8682: URL: https://github.com/apache/kafka/pull/8682#issuecomment-630522346 I also think we should reset/clear the set at the beginning of `tryToLockAllNonEmptyTaskDirectories`, so basically we're always dealing with the current set of actually-locked tasks and don't need to worry about removing them during `handleLostAll` or `handleCorruption/Assignment`, etc
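
A minimal sketch of the "clear the set at the start" idea, assuming a simplified model: `LockedDirectoriesSketch`, the string task ids, and the `tryLock` predicate are hypothetical stand-ins, not the actual Kafka Streams task manager or state directory API. The point is only that rebuilding the set on every call means no other handler has to remove stale entries.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Predicate;

// Hypothetical stand-in for the task manager; not the Kafka Streams class.
class LockedDirectoriesSketch {
    private final Set<String> lockedTaskDirectories = new HashSet<>();

    // Rebuilds the locked set from scratch on every call, so it always
    // reflects only the directories locked in this round.
    Set<String> tryToLockAllNonEmptyTaskDirectories(final List<String> candidateTaskIds,
                                                    final Predicate<String> tryLock) {
        lockedTaskDirectories.clear(); // drop anything left over from earlier rounds
        for (final String taskId : candidateTaskIds) {
            if (tryLock.test(taskId)) {
                lockedTaskDirectories.add(taskId);
            }
        }
        // Return a copy so callers cannot mutate the internal set.
        return new HashSet<>(lockedTaskDirectories);
    }

    public static void main(final String[] args) {
        final LockedDirectoriesSketch sketch = new LockedDirectoriesSketch();
        sketch.tryToLockAllNonEmptyTaskDirectories(Arrays.asList("0_0", "0_1"), id -> true);
        // A later round that only sees "0_1" no longer reports "0_0".
        System.out.println(sketch.tryToLockAllNonEmptyTaskDirectories(Arrays.asList("0_1"), id -> true));
    }
}
```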

[GitHub] [kafka] ableegoldman commented on pull request #8682: KAFKA-10011: Remove task id from lockedTaskDirectories during handleLostAll

ableegoldman commented on pull request #8682: URL: https://github.com/apache/kafka/pull/8682#issuecomment-630522346 I also think we should reset/clear the set at the beginning of `tryToLockAllNonEmptyTaskDirectories`

[GitHub] [kafka] jiameixie opened a new pull request #8692: KAFKA-10018:Change sh to bash

jiameixie opened a new pull request #8692: URL: https://github.com/apache/kafka/pull/8692 "#!/bin/sh" is used in kafka-server-stop.sh and zookeeper-server-stop.sh, which also use `[[`, a bash-specific construct. Modern Debian and Ubuntu systems symlink sh to dash by default, so "[[: not found" will occur. Changing "#!/bin/sh" to "#!/bin/bash" avoids this error. Modify all scripts to use bash. Change-Id: I733c6e31f76d768e71ac0e040a33da8f4bd8f005 Signed-off-by: Jiamei Xie ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes)

[GitHub] [kafka] guozhangwang commented on a change in pull request #8681: KAFKA-10010: Should make state store registration idempotent

guozhangwang commented on a change in pull request #8681:
URL: https://github.com/apache/kafka/pull/8681#discussion_r426975369

## File path: streams/src/main/java/org/apache/kafka/streams/processor/internals/ProcessorStateManager.java ##
@@ -266,7 +266,8 @@ public void registerStore(final StateStore store, final StateRestoreCallback sta
}
if (stores.containsKey(storeName)) {
-throw new IllegalArgumentException(format("%sStore %s has already been registered.", logPrefix, storeName));
+log.warn("State Store {} has already been registered, which could be due to a half-way registration" +

Review comment:
+1, we can rely on `storeManager#getStore` inside `StateManagerUtil` to check if the store is already registered.

## File path: streams/src/main/java/org/apache/kafka/streams/processor/internals/ProcessorStateManager.java ##
@@ -266,7 +266,8 @@ public void registerStore(final StateStore store, final StateRestoreCallback sta
}
if (stores.containsKey(storeName)) {
-throw new IllegalArgumentException(format("%sStore %s has already been registered.", logPrefix, storeName));
+log.warn("State Store {} has already been registered, which could be due to a half-way registration" +
+"in the previous round", storeName);

Review comment:
nit: we could make the warn log entry clearer that we did not override the registered store, e.g. "Skipped registering state store {} since it has already existed in the state manager, ..."
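
As a rough illustration of the idempotent registration being discussed, the sketch below skips a duplicate registration and warns instead of throwing. Everything here is an assumption made for the example: `IdempotentRegistrySketch` is a hypothetical class, stores are plain `Object`s, and `System.err` stands in for the logger. It is not the `ProcessorStateManager` implementation.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical registry; not the Kafka Streams ProcessorStateManager.
class IdempotentRegistrySketch {
    private final Map<String, Object> stores = new HashMap<>();

    // Registers the store unless one with the same name already exists.
    // Returns true if the store was newly registered, false if skipped.
    boolean registerStore(final String storeName, final Object store) {
        if (stores.containsKey(storeName)) {
            // Warn and keep the existing entry rather than throwing or overriding.
            System.err.printf(
                "Skipped registering state store %s since it already exists in the state manager%n",
                storeName);
            return false;
        }
        stores.put(storeName, store);
        return true;
    }

    // Lets callers check whether a store is already registered.
    Object getStore(final String storeName) {
        return stores.get(storeName);
    }

    public static void main(final String[] args) {
        final IdempotentRegistrySketch registry = new IdempotentRegistrySketch();
        registry.registerStore("counts", new Object());
        registry.registerStore("counts", new Object()); // second call is a no-op with a warning
    }
}
```

Callers that prefer to avoid the warning entirely can check `getStore` first, which mirrors the suggestion to rely on a lookup before registering.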

[jira] [Created] (KAFKA-10018) Change sh to bash

jiamei xie created KAFKA-10018: -- Summary: Change sh to bash Key: KAFKA-10018 URL: https://issues.apache.org/jira/browse/KAFKA-10018 Project: Kafka Issue Type: Bug Components: admin Reporter: jiamei xie Assignee: jiamei xie "#!/bin/sh" is used in kafka-server-stop.sh and zookeeper-server-stop.sh, which also use `[[`, a bash-specific construct. Modern Debian and Ubuntu systems symlink sh to dash by default, so "[[: not found" will occur. Changing "#!/bin/sh" to "#!/bin/bash" avoids this error. Modify all scripts to use bash. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] guozhangwang commented on pull request #8682: KAFKA-10011: Remove task id from lockedTaskDirectories during handleLostAll

guozhangwang commented on pull request #8682:
URL: https://github.com/apache/kafka/pull/8682#issuecomment-630515587

I agree with @ableegoldman here: after the `while (taskIdIterator.hasNext())` loop we can check whether there are still remaining tasks, and then log a WARN similar to the one at the end of `handleRevocation` before clearing them:

```
if (!remainingPartitions.isEmpty()) {
    log.warn("The following partitions {} are missing from the task partitions. It could potentially " +
             "due to race condition of consumer detecting the heartbeat failure, or the tasks " +
             "have been cleaned up by the handleAssignment callback.", remainingPartitions);
}
```

[jira] [Commented] (KAFKA-6520) When a Kafka Stream can't communicate with the server, it's Status stays RUNNING