[jira] [Updated] (KAFKA-10181) Redirect AlterConfig/IncrementalAlterConfig to the controller

[ https://issues.apache.org/jira/browse/KAFKA-10181?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Boyang Chen updated KAFKA-10181: Description: In the new Admin client, the AlterConfig/IncrementalAlterConfig request should be redirected to the active controller. (was: In the new Admin client, the request should always be routed towards the controller.) > Redirect AlterConfig/IncrementalAlterConfig to the controller > - > > Key: KAFKA-10181 > URL: https://issues.apache.org/jira/browse/KAFKA-10181 > Project: Kafka > Issue Type: Sub-task > Components: admin >Reporter: Boyang Chen >Assignee: Boyang Chen >Priority: Major > Fix For: 2.7.0 > > > In the new Admin client, the AlterConfig/IncrementalAlterConfig request > should be redirected to the active controller. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (KAFKA-10181) Redirect AlterConfig/IncrementalAlterConfig to the controller

[ https://issues.apache.org/jira/browse/KAFKA-10181?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Boyang Chen updated KAFKA-10181: Summary: Redirect AlterConfig/IncrementalAlterConfig to the controller (was: AlterConfig/IncrementalAlterConfig should go to controller) > Redirect AlterConfig/IncrementalAlterConfig to the controller > - > > Key: KAFKA-10181 > URL: https://issues.apache.org/jira/browse/KAFKA-10181 > Project: Kafka > Issue Type: Sub-task > Components: admin >Reporter: Boyang Chen >Assignee: Boyang Chen >Priority: Major > Fix For: 2.7.0 > > > In the new Admin client, the request should always be routed towards the > controller. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (KAFKA-10270) Add a broker to controller channel manager to redirect AlterConfig

[ https://issues.apache.org/jira/browse/KAFKA-10270?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Boyang Chen updated KAFKA-10270: Fix Version/s: 2.7.0 > Add a broker to controller channel manager to redirect AlterConfig > -- > > Key: KAFKA-10270 > URL: https://issues.apache.org/jira/browse/KAFKA-10270 > Project: Kafka > Issue Type: Sub-task >Reporter: Boyang Chen >Assignee: Boyang Chen >Priority: Major > Fix For: 2.7.0 > > > Per KIP-590 requirement, we need to have a dedicate communication channel > from broker to the controller. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (KAFKA-10270) Add a broker to controller channel manager to redirect AlterConfig

[ https://issues.apache.org/jira/browse/KAFKA-10270?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Boyang Chen resolved KAFKA-10270. - Resolution: Fixed > Add a broker to controller channel manager to redirect AlterConfig > -- > > Key: KAFKA-10270 > URL: https://issues.apache.org/jira/browse/KAFKA-10270 > Project: Kafka > Issue Type: Sub-task >Reporter: Boyang Chen >Assignee: Boyang Chen >Priority: Major > > Per KIP-590 requirement, we need to have a dedicate communication channel > from broker to the controller. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Created] (KAFKA-10326) Both serializer and deserializer should be able to see the generated client id

Chia-Ping Tsai created KAFKA-10326: -- Summary: Both serializer and deserializer should be able to see the generated client id Key: KAFKA-10326 URL: https://issues.apache.org/jira/browse/KAFKA-10326 Project: Kafka Issue Type: Bug Reporter: Chia-Ping Tsai Assignee: Chia-Ping Tsai Producer and consumer generate client id when users don't define it. the generated client id is passed to all configurable components (for example, metrics reporter) except for serializer/deseriaizer. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Created] (KAFKA-10325) Implement KIP-649: Dynamic Client Configuration

Ryan Dielhenn created KAFKA-10325: - Summary: Implement KIP-649: Dynamic Client Configuration Key: KAFKA-10325 URL: https://issues.apache.org/jira/browse/KAFKA-10325 Project: Kafka Issue Type: New Feature Reporter: Ryan Dielhenn Implement KIP-649: Dynamic Client Configuration -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167488#comment-17167488 ] Ismael Juma commented on KAFKA-10324: - Yeah, it's usually ok to have one smaller batch per segment. And in the common case where you are reading from the end of the log, there may not be another segment. Scanning ahead seems ok for down conversion, but it's not clear if it's worth it for the common case (there is a cost to scanning ahead). > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10307) Topology cycles in KTableKTableForeignKeyInnerJoinMultiIntegrationTest#shouldInnerJoinMultiPartitionQueryable

[

https://issues.apache.org/jira/browse/KAFKA-10307?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167487#comment-17167487

]

John Roesler commented on KAFKA-10307:

--

Hey [~bchen225242] , it seems like this was just a misunderstanding. Can we

close the ticket? Or have I missed something?

> Topology cycles in

> KTableKTableForeignKeyInnerJoinMultiIntegrationTest#shouldInnerJoinMultiPartitionQueryable

> -

>

> Key: KAFKA-10307

> URL: https://issues.apache.org/jira/browse/KAFKA-10307

> Project: Kafka

> Issue Type: Bug

> Components: streams

>Affects Versions: 2.4.0, 2.5.0, 2.6.0

>Reporter: Boyang Chen

>Priority: Major

>

> We have spotted a cycled topology for the foreign-key join test

> *shouldInnerJoinMultiPartitionQueryable*, not sure yet whether this is a bug

> in the algorithm or the test only. Used

> [https://zz85.github.io/kafka-streams-viz/] to visualize:

> {code:java}

> Sub-topology: 0

> Source: KTABLE-SOURCE-19 (topics:

> [KTABLE-FK-JOIN-SUBSCRIPTION-RESPONSE-17-topic])

> --> KTABLE-FK-JOIN-SUBSCRIPTION-RESPONSE-RESOLVER-PROCESSOR-20

> Source: KTABLE-SOURCE-32 (topics:

> [KTABLE-FK-JOIN-SUBSCRIPTION-RESPONSE-30-topic])

> --> KTABLE-FK-JOIN-SUBSCRIPTION-RESPONSE-RESOLVER-PROCESSOR-33

> Source: KSTREAM-SOURCE-01 (topics: [table1])

> --> KTABLE-SOURCE-02

> Processor:

> KTABLE-FK-JOIN-SUBSCRIPTION-RESPONSE-RESOLVER-PROCESSOR-20 (stores:

> [table1-STATE-STORE-00])

> --> KTABLE-FK-JOIN-OUTPUT-21

> <-- KTABLE-SOURCE-19

> Processor:

> KTABLE-FK-JOIN-SUBSCRIPTION-RESPONSE-RESOLVER-PROCESSOR-33 (stores:

> [INNER-store1])

> --> KTABLE-FK-JOIN-OUTPUT-34

> <-- KTABLE-SOURCE-32

> Processor: KTABLE-FK-JOIN-OUTPUT-21 (stores: [INNER-store1])

> --> KTABLE-FK-JOIN-SUBSCRIPTION-REGISTRATION-23

> <-- KTABLE-FK-JOIN-SUBSCRIPTION-RESPONSE-RESOLVER-PROCESSOR-20

> Processor: KTABLE-FK-JOIN-OUTPUT-34 (stores: [INNER-store2])

> --> KTABLE-TOSTREAM-35

> <-- KTABLE-FK-JOIN-SUBSCRIPTION-RESPONSE-RESOLVER-PROCESSOR-33

> Processor: KTABLE-SOURCE-02 (stores:

> [table1-STATE-STORE-00])

> --> KTABLE-FK-JOIN-SUBSCRIPTION-REGISTRATION-10

> <-- KSTREAM-SOURCE-01

> Processor: KTABLE-FK-JOIN-SUBSCRIPTION-REGISTRATION-10 (stores:

> [])

> --> KTABLE-SINK-11

> <-- KTABLE-SOURCE-02

> Processor: KTABLE-FK-JOIN-SUBSCRIPTION-REGISTRATION-23 (stores:

> [])

> --> KTABLE-SINK-24

> <-- KTABLE-FK-JOIN-OUTPUT-21

> Processor: KTABLE-TOSTREAM-35 (stores: [])

> --> KSTREAM-SINK-36

> <-- KTABLE-FK-JOIN-OUTPUT-34

> Sink: KSTREAM-SINK-36 (topic: output-)

> <-- KTABLE-TOSTREAM-35

> Sink: KTABLE-SINK-11 (topic:

> KTABLE-FK-JOIN-SUBSCRIPTION-REGISTRATION-09-topic)

> <-- KTABLE-FK-JOIN-SUBSCRIPTION-REGISTRATION-10

> Sink: KTABLE-SINK-24 (topic:

> KTABLE-FK-JOIN-SUBSCRIPTION-REGISTRATION-22-topic)

> <-- KTABLE-FK-JOIN-SUBSCRIPTION-REGISTRATION-23 Sub-topology: 1

> Source: KSTREAM-SOURCE-04 (topics: [table2])

> --> KTABLE-SOURCE-05

> Source: KTABLE-SOURCE-12 (topics:

> [KTABLE-FK-JOIN-SUBSCRIPTION-REGISTRATION-09-topic])

> --> KTABLE-FK-JOIN-SUBSCRIPTION-PROCESSOR-14

> Processor: KTABLE-FK-JOIN-SUBSCRIPTION-PROCESSOR-14 (stores:

> [KTABLE-FK-JOIN-SUBSCRIPTION-STATE-STORE-13])

> --> KTABLE-FK-JOIN-SUBSCRIPTION-PROCESSOR-15

> <-- KTABLE-SOURCE-12

> Processor: KTABLE-SOURCE-05 (stores:

> [table2-STATE-STORE-03])

> --> KTABLE-FK-JOIN-SUBSCRIPTION-PROCESSOR-16

> <-- KSTREAM-SOURCE-04

> Processor: KTABLE-FK-JOIN-SUBSCRIPTION-PROCESSOR-15 (stores:

> [table2-STATE-STORE-03])

> --> KTABLE-SINK-18

> <-- KTABLE-FK-JOIN-SUBSCRIPTION-PROCESSOR-14

> Processor: KTABLE-FK-JOIN-SUBSCRIPTION-PROCESSOR-16 (stores:

> [KTABLE-FK-JOIN-SUBSCRIPTION-STATE-STORE-13])

> --> KTABLE-SINK-18

> <-- KTABLE-SOURCE-05

> Sink: KTABLE-SINK-18 (topic:

> KTABLE-FK-JOIN-SUBSCRIPTION-RESPONSE-17-topic)

> <-- KTABLE-FK-JOIN-SUBSCRIPTION-PROCESSOR-15,

> KTABLE-FK-JOIN-SUBSCRIPTION-PROCESSOR-16 Sub-topology: 2

> Source: KSTREAM-SOURCE-07 (topics: [table3])

> --> KTABLE-SOURCE-08

>

[jira] [Updated] (KAFKA-3672) Introduce globally consistent checkpoint in Kafka Streams

[ https://issues.apache.org/jira/browse/KAFKA-3672?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] John Roesler updated KAFKA-3672: Issue Type: New Feature (was: Bug) > Introduce globally consistent checkpoint in Kafka Streams > - > > Key: KAFKA-3672 > URL: https://issues.apache.org/jira/browse/KAFKA-3672 > Project: Kafka > Issue Type: New Feature > Components: streams >Affects Versions: 0.10.0.0 >Reporter: Guozhang Wang >Priority: Major > Labels: user-experience > > This is originate from the idea of rethinking about the checkpoint file > creation condition: > Today the checkpoint file containing the checkpointed offsets is written upon > stream task clean shutdown, and is read and deleted upon stream task > (re-)construction. The rationale is that if upon task re-construction, the > checkpoint file is missing, it indicates that the underlying persistent state > store (rocksDB, for example)'s state may not be consistent with the committed > offsets, and hence we'd better to wipe-out the maybe-broken state storage and > rebuild from the beginning of the offset. > However, we may able to do better than this setting if we can fully control > the persistent store flushing time to be aligned with committing, and hence > as long as we commit, we are always guaranteed to get a clear checkpoint. > This may be generalized to a "global state checkpoint" mechanism in Kafka > Streams, which may also subsume KAFKA-3184 for non persistent stores. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167485#comment-17167485 ] Tommy Becker commented on KAFKA-10324: -- Understood but seems like as your fetch offset approaches the end of a segment you'll get a smaller batch. Just pointing out that scanning ahead to grab as many records as will fit into the max fetch size could be a general, albeit small improvement across the board. > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (KAFKA-9210) kafka stream loss data

[

https://issues.apache.org/jira/browse/KAFKA-9210?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

John Roesler resolved KAFKA-9210.

-

Resolution: Fixed

> kafka stream loss data

> --

>

> Key: KAFKA-9210

> URL: https://issues.apache.org/jira/browse/KAFKA-9210

> Project: Kafka

> Issue Type: Bug

> Components: streams

>Affects Versions: 2.0.1

>Reporter: panpan.liu

>Priority: Major

> Attachments: app.log, screenshot-1.png

>

>

> kafka broker: 2.0.1

> kafka stream client: 2.1.0

> # two applications run at the same time

> # after some days,I stop one application(in k8s)

> # The flollowing log occured and I check the data and find that value is

> less than what I expected.

> {quote}Partitions

> [flash-app-xmc-worker-share-store-minute-repartition-1]Partitions

> [flash-app-xmc-worker-share-store-minute-repartition-1] for changelog

> flash-app-xmc-worker-share-store-minute-changelog-12019-11-19

> 05:50:49.816|WARN

> |flash-client-xmc-StreamThread-3|o.a.k.s.p.i.StoreChangelogReader|101|stream-thread

> [flash-client-xmc-StreamThread-3] Restoring StreamTasks failed. Deleting

> StreamTasks stores to recreate from

> scratch.org.apache.kafka.clients.consumer.OffsetOutOfRangeException: Offsets

> out of range with no configured reset policy for partitions:

> \{flash-app-xmc-worker-share-store-minute-changelog-1=6128684} at

> org.apache.kafka.clients.consumer.internals.Fetcher.parseCompletedFetch(Fetcher.java:987)2019-11-19

> 05:50:49.817|INFO

> |flash-client-xmc-StreamThread-3|o.a.k.s.p.i.StoreChangelogReader|105|stream-thread

> [flash-client-xmc-StreamThread-3] Reinitializing StreamTask TaskId: 10_1

> ProcessorTopology: KSTREAM-SOURCE-70: topics:

> [flash-app-xmc-worker-share-store-minute-repartition] children:

> [KSTREAM-AGGREGATE-67] KSTREAM-AGGREGATE-67: states:

> [worker-share-store-minute] children: [KTABLE-TOSTREAM-71]

> KTABLE-TOSTREAM-71: children: [KSTREAM-SINK-72]

> KSTREAM-SINK-72: topic:

> StaticTopicNameExtractor(xmc-worker-share-minute)Partitions

> [flash-app-xmc-worker-share-store-minute-repartition-1] for changelog

> flash-app-xmc-worker-share-store-minute-changelog-12019-11-19

> 05:50:49.842|WARN

> |flash-client-xmc-StreamThread-3|o.a.k.s.p.i.StoreChangelogReader|101|stream-thread

> [flash-client-xmc-StreamThread-3] Restoring StreamTasks failed. Deleting

> StreamTasks stores to recreate from

> scratch.org.apache.kafka.clients.consumer.OffsetOutOfRangeException: Offsets

> out of range with no configured reset policy for partitions:

> \{flash-app-xmc-worker-share-store-minute-changelog-1=6128684} at

> org.apache.kafka.clients.consumer.internals.Fetcher.parseCompletedFetch(Fetcher.java:987)2019-11-19

> 05:50:49.842|INFO

> |flash-client-xmc-StreamThread-3|o.a.k.s.p.i.StoreChangelogReader|105|stream-thread

> [flash-client-xmc-StreamThread-3] Reinitializing StreamTask TaskId: 10_1

> ProcessorTopology: KSTREAM-SOURCE-70: topics:

> [flash-app-xmc-worker-share-store-minute-repartition] children:

> [KSTREAM-AGGREGATE-67] KSTREAM-AGGREGATE-67: states:

> [worker-share-store-minute] children: [KTABLE-TOSTREAM-71]

> KTABLE-TOSTREAM-71: children: [KSTREAM-SINK-72]

> KSTREAM-SINK-72: topic:

> StaticTopicNameExtractor(xmc-worker-share-minute)Partitions

> [flash-app-xmc-worker-share-store-minute-repartition-1] for changelog

> flash-app-xmc-worker-share-store-minute-changelog-12019-11-19

> 05:50:49.905|WARN

> |flash-client-xmc-StreamThread-3|o.a.k.s.p.i.StoreChangelogReader|101|stream-thread

> [flash-client-xmc-StreamThread-3] Restoring StreamTasks failed. Deleting

> StreamTasks stores to recreate from

> scratch.org.apache.kafka.clients.consumer.OffsetOutOfRangeException: Offsets

> out of range with no configured reset policy for partitions:

> \{flash-app-xmc-worker-share-store-minute-changelog-1=6128684} at

> org.apache.kafka.clients.consumer.internals.Fetcher.parseCompletedFetch(Fetcher.java:987)2019-11-19

> 05:50:49.906|INFO

> |flash-client-xmc-StreamThread-3|o.a.k.s.p.i.StoreChangelogReader|105|stream-thread

> [flash-client-xmc-StreamThread-3] Reinitializing StreamTask TaskId: 10_1

> ProcessorTopology: KSTREAM-SOURCE-70: topics:

> [flash-app-xmc-worker-share-store-minute-repartition] children:

> [KSTREAM-AGGREGATE-67] KSTREAM-AGGREGATE-67: states:

> [worker-share-store-minute]

> {quote}

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167481#comment-17167481 ] Ismael Juma commented on KAFKA-10324: - Segments generally are larger than the fetch response size, so it's usually not an issue. > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Comment Edited] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167481#comment-17167481 ] Ismael Juma edited comment on KAFKA-10324 at 7/29/20, 8:00 PM: --- Segments generally are typically significantly larger than the fetch response size, so it's usually not an issue. was (Author: ijuma): Segments generally are larger than the fetch response size, so it's usually not an issue. > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167480#comment-17167480 ] Tommy Becker commented on KAFKA-10324: -- So is it always the case that records returned in a FetchResponse are all from a single segment? Might that not be inefficient? > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167444#comment-17167444 ] Jason Gustafson commented on KAFKA-10324: - Thanks. Unfortunately it's not super straightforward to fix, but we have some ideas. Basically we have to modify the down-conversion logic so that it can read across multiple segments until it finds a non-empty batch to return. > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167431#comment-17167431 ] Tommy Becker commented on KAFKA-10324: -- Yes. See my comment above. > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167413#comment-17167413 ] Jason Gustafson commented on KAFKA-10324: - Aha, it is because it is at the end of the segment. Was that true in the other cases? > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] cmccabe commented on a change in pull request #9012: KAFKA-10270: A broker to controller channel manager

cmccabe commented on a change in pull request #9012:

URL: https://github.com/apache/kafka/pull/9012#discussion_r462385360

##

File path:

core/src/main/scala/kafka/server/BrokerToControllerChannelManager.scala

##

@@ -0,0 +1,188 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package kafka.server

+

+import java.util.concurrent.{LinkedBlockingDeque, TimeUnit}

+

+import kafka.common.{InterBrokerSendThread, RequestAndCompletionHandler}

+import kafka.utils.Logging

+import org.apache.kafka.clients._

+import org.apache.kafka.common.requests.AbstractRequest

+import org.apache.kafka.common.utils.{LogContext, Time}

+import org.apache.kafka.common.Node

+import org.apache.kafka.common.metrics.Metrics

+import org.apache.kafka.common.network._

+import org.apache.kafka.common.protocol.Errors

+import org.apache.kafka.common.security.JaasContext

+

+import scala.collection.mutable

+import scala.jdk.CollectionConverters._

+

+/**

+ * This class manages the connection between a broker and the controller. It

runs a single

+ * {@link BrokerToControllerRequestThread} which uses the broker's metadata

cache as its own metadata to find

+ * and connect to the controller. The channel is async and runs the network

connection in the background.

+ * The maximum number of in-flight requests are set to one to ensure orderly

response from the controller, therefore

+ * care must be taken to not block on outstanding requests for too long.

+ */

+class BrokerToControllerChannelManager(metadataCache:

kafka.server.MetadataCache,

+ time: Time,

+ metrics: Metrics,

+ config: KafkaConfig,

+ threadNamePrefix: Option[String] =

None) extends Logging {

+ private val requestQueue = new

LinkedBlockingDeque[BrokerToControllerQueueItem]

+ private val logContext = new

LogContext(s"[broker-${config.brokerId}-to-controller] ")

+ private val manualMetadataUpdater = new ManualMetadataUpdater()

+ private val requestThread = newRequestThread

+

+ def start(): Unit = {

+requestThread.start()

+ }

+

+ def shutdown(): Unit = {

+requestThread.shutdown()

+requestThread.awaitShutdown()

+ }

+

+ private[server] def newRequestThread = {

+val brokerToControllerListenerName =

config.controlPlaneListenerName.getOrElse(config.interBrokerListenerName)

+val brokerToControllerSecurityProtocol =

config.controlPlaneSecurityProtocol.getOrElse(config.interBrokerSecurityProtocol)

+

+val networkClient = {

+ val channelBuilder = ChannelBuilders.clientChannelBuilder(

+brokerToControllerSecurityProtocol,

+JaasContext.Type.SERVER,

+config,

+brokerToControllerListenerName,

+config.saslMechanismInterBrokerProtocol,

+time,

+config.saslInterBrokerHandshakeRequestEnable,

+logContext

+ )

+ val selector = new Selector(

+NetworkReceive.UNLIMITED,

+Selector.NO_IDLE_TIMEOUT_MS,

+metrics,

+time,

+"BrokerToControllerChannel",

+Map("BrokerId" -> config.brokerId.toString).asJava,

+false,

+channelBuilder,

+logContext

+ )

+ new NetworkClient(

+selector,

+manualMetadataUpdater,

+config.brokerId.toString,

+1,

+0,

+0,

+Selectable.USE_DEFAULT_BUFFER_SIZE,

+Selectable.USE_DEFAULT_BUFFER_SIZE,

+config.requestTimeoutMs,

+config.connectionSetupTimeoutMs,

+config.connectionSetupTimeoutMaxMs,

+ClientDnsLookup.USE_ALL_DNS_IPS,

+time,

+false,

+new ApiVersions,

+logContext

+ )

+}

+val threadName = threadNamePrefix match {

+ case None => s"broker-${config.brokerId}-to-controller-send-thread"

+ case Some(name) =>

s"$name:broker-${config.brokerId}-to-controller-send-thread"

+}

+

+new BrokerToControllerRequestThread(networkClient, manualMetadataUpdater,

requestQueue, metadataCache, config,

+ brokerToControllerListenerName, time, threadName)

+ }

+

+ private[server] def sendRequest(request: AbstractRequest.Builder[_ <:

[GitHub] [kafka] chia7712 commented on pull request #9097: KAFKA-10319: Skip unknown offsets when computing sum of changelog offsets

chia7712 commented on pull request #9097: URL: https://github.com/apache/kafka/pull/9097#issuecomment-665504521 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] cadonna opened a new pull request #9098: KAFKA-9924: Prepare RocksDB and metrics for RocksDB properties recording

cadonna opened a new pull request #9098: URL: https://github.com/apache/kafka/pull/9098 This PR refactors the RocksDB store and the metrics infrastructure in Streams in preparation of the recordings of the RocksDB properties specified in KIP-607. The refactoring includes: - wrapper around `BlockedBasedTableConfig` to make the cache accessible to the RocksDB metrics recorder - RocksDB metrics recorder now takes also the DB instance and the cache in addition to the statistics - The value providers for the metrics are added to the RockDB metrics recorder also if the recording level is INFO. - The creation of the RocksDB metrics recording trigger is moved to `StreamsMetricsImpl` ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] badaiaqrandista edited a comment on pull request #4807: KAFKA-6733: Support of printing additional ConsumerRecord fields in DefaultMessageFormatter

badaiaqrandista edited a comment on pull request #4807: URL: https://github.com/apache/kafka/pull/4807#issuecomment-665660018 This PR should be closed as it has been superseded by PR 9099 (https://github.com/apache/kafka/pull/9099). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167400#comment-17167400 ] Tommy Becker edited comment on KAFKA-10324 at 7/29/20, 5:54 PM: [~hachikuji] wrt why the broker does not seem to send subsequent batches, I'm not sure. But I can tell you I see this behavior even with max.partition.fetch.bytes set to Integer.MAX_VALUE. Maybe this has something to do with down conversion? Anyway, here's an excerpt from a dump of the segment containing the problematic offset, which is 13920987: baseOffset: 13920966 lastOffset: 13920987 count: 6 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 49 isTransactional: false isControl: false position: 98516844 CreateTime: 1595224747691 size: 4407 magic: 2 compresscodec: NONE crc: 1598305187 isvalid: true \| offset: 13920978 CreateTime: 1595224747691 keysize: 36 valuesize: 681 sequence: -1 headerKeys: [] \| offset: 13920979 CreateTime: 1595224747691 keysize: 36 valuesize: 677 sequence: -1 headerKeys: [] \| offset: 13920980 CreateTime: 1595224747691 keysize: 36 valuesize: 680 sequence: -1 headerKeys: [] \| offset: 13920984 CreateTime: 1595224747691 keysize: 36 valuesize: 681 sequence: -1 headerKeys: [] \| offset: 13920985 CreateTime: 1595224747691 keysize: 36 valuesize: 677 sequence: -1 headerKeys: [] \| offset: 13920986 CreateTime: 1595224747691 keysize: 36 valuesize: 680 sequence: -1 headerKeys: [] End of segment is here was (Author: twbecker): [~hachikuji] wrt why the broker does not seem to send subsequent batches, I'm not sure. But I can tell you I see this behavior even with max.partition.fetch.bytes set to Integer.MAX_VALUE. Maybe this has something to do with down conversion? Anyway, here's an excerpt from a dump of the segment containing the problematic offset, which is 13920987: baseOffset: 13920966 lastOffset: 13920987 count: 6 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 49 isTransactional: false isControl: false position: 98516844 CreateTime: 1595224747691 size: 4407 magic: 2 compresscodec: NONE crc: 1598305187 isvalid: true | offset: 13920978 CreateTime: 1595224747691 keysize: 36 valuesize: 681 sequence: -1 headerKeys: [] | offset: 13920979 CreateTime: 1595224747691 keysize: 36 valuesize: 677 sequence: -1 headerKeys: [] | offset: 13920980 CreateTime: 1595224747691 keysize: 36 valuesize: 680 sequence: -1 headerKeys: [] | offset: 13920984 CreateTime: 1595224747691 keysize: 36 valuesize: 681 sequence: -1 headerKeys: [] | offset: 13920985 CreateTime: 1595224747691 keysize: 36 valuesize: 677 sequence: -1 headerKeys: [] | offset: 13920986 CreateTime: 1595224747691 keysize: 36 valuesize: 680 sequence: -1 headerKeys: [] End of segment is here > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] cadonna commented on pull request #9087: HOTFIX: Set session timeout and heartbeat interval to default to decrease flakiness

cadonna commented on pull request #9087: URL: https://github.com/apache/kafka/pull/9087#issuecomment-665526207 I did some runs of the `RestoreIntegrationTest` and the runtime does not significantly change between runs with default values for session timeout and heartbeat interval and reduced values for session timeout and heartbeat interval. Hence, I set the two configs to their default values for the entire test. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9092: KAFKA-10163; Define `controller_mutation_rate` as a Double instead of a Long

chia7712 commented on a change in pull request #9092:

URL: https://github.com/apache/kafka/pull/9092#discussion_r462394461

##

File path: core/src/main/scala/kafka/server/DynamicConfig.scala

##

@@ -103,7 +103,7 @@ object DynamicConfig {

.define(ProducerByteRateOverrideProp, LONG, DefaultProducerOverride,

MEDIUM, ProducerOverrideDoc)

.define(ConsumerByteRateOverrideProp, LONG, DefaultConsumerOverride,

MEDIUM, ConsumerOverrideDoc)

.define(RequestPercentageOverrideProp, DOUBLE, DefaultRequestOverride,

MEDIUM, RequestOverrideDoc)

- .define(ControllerMutationOverrideProp, LONG, DefaultConsumerOverride,

MEDIUM, ControllerMutationOverrideDoc)

+ .define(ControllerMutationOverrideProp, DOUBLE, DefaultConsumerOverride,

MEDIUM, ControllerMutationOverrideDoc)

Review comment:

why not changing the type of default value from ```Long``` to

```Double```?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] dajac commented on a change in pull request #9099: KAFKA-6733: Printing additional ConsumerRecord fields in DefaultMessageFormatter

dajac commented on a change in pull request #9099:

URL: https://github.com/apache/kafka/pull/9099#discussion_r462306541

##

File path: core/src/main/scala/kafka/tools/ConsoleConsumer.scala

##

@@ -459,48 +466,32 @@ class DefaultMessageFormatter extends MessageFormatter {

var printKey = false

var printValue = true

var printPartition = false

- var keySeparator = "\t".getBytes(StandardCharsets.UTF_8)

- var lineSeparator = "\n".getBytes(StandardCharsets.UTF_8)

+ var printOffset = false

+ var printHeaders = false

+ var keySeparator = utfBytes("\t")

+ var lineSeparator = utfBytes("\n")

+ var headersSeparator = utfBytes(",")

+ var nullLiteral = utfBytes("null")

var keyDeserializer: Option[Deserializer[_]] = None

var valueDeserializer: Option[Deserializer[_]] = None

-

- override def configure(configs: Map[String, _]): Unit = {

-val props = new java.util.Properties()

-configs.asScala.foreach { case (key, value) => props.put(key,

value.toString) }

-if (props.containsKey("print.timestamp"))

- printTimestamp =

props.getProperty("print.timestamp").trim.equalsIgnoreCase("true")

-if (props.containsKey("print.key"))

- printKey = props.getProperty("print.key").trim.equalsIgnoreCase("true")

-if (props.containsKey("print.value"))

- printValue =

props.getProperty("print.value").trim.equalsIgnoreCase("true")

-if (props.containsKey("print.partition"))

- printPartition =

props.getProperty("print.partition").trim.equalsIgnoreCase("true")

-if (props.containsKey("key.separator"))

- keySeparator =

props.getProperty("key.separator").getBytes(StandardCharsets.UTF_8)

-if (props.containsKey("line.separator"))

- lineSeparator =

props.getProperty("line.separator").getBytes(StandardCharsets.UTF_8)

-// Note that `toString` will be called on the instance returned by

`Deserializer.deserialize`

-if (props.containsKey("key.deserializer")) {

- keyDeserializer =

Some(Class.forName(props.getProperty("key.deserializer")).getDeclaredConstructor()

-.newInstance().asInstanceOf[Deserializer[_]])

-

keyDeserializer.get.configure(propertiesWithKeyPrefixStripped("key.deserializer.",

props).asScala.asJava, true)

-}

-// Note that `toString` will be called on the instance returned by

`Deserializer.deserialize`

-if (props.containsKey("value.deserializer")) {

- valueDeserializer =

Some(Class.forName(props.getProperty("value.deserializer")).getDeclaredConstructor()

-.newInstance().asInstanceOf[Deserializer[_]])

-

valueDeserializer.get.configure(propertiesWithKeyPrefixStripped("value.deserializer.",

props).asScala.asJava, false)

-}

- }

-

- private def propertiesWithKeyPrefixStripped(prefix: String, props:

Properties): Properties = {

-val newProps = new Properties()

-props.asScala.foreach { case (key, value) =>

- if (key.startsWith(prefix) && key.length > prefix.length)

-newProps.put(key.substring(prefix.length), value)

-}

-newProps

+ var headersDeserializer: Option[Deserializer[_]] = None

+

+ override def init(props: Properties): Unit = {

Review comment:

`init(props: Properties)` has been deprecated. It would be great if we

could keep using `configure(configs: Map[String, _])` as before. I think that

we should also try to directly extract the values from the `Map` instead of

using a `Properties`.

##

File path: core/src/test/scala/kafka/tools/DefaultMessageFormatterTest.scala

##

@@ -0,0 +1,235 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package unit.kafka.tools

+

+import java.io.{ByteArrayOutputStream, Closeable, PrintStream}

+import java.nio.charset.StandardCharsets

+import java.util

+import java.util.Properties

+

+import kafka.tools.DefaultMessageFormatter

+import org.apache.kafka.clients.consumer.ConsumerRecord

+import org.apache.kafka.common.header.Header

+import org.apache.kafka.common.header.internals.{RecordHeader, RecordHeaders}

+import org.apache.kafka.common.record.TimestampType

+import org.apache.kafka.common.serialization.Deserializer

+import org.junit.Assert._

+import org.junit.Test

+import org.junit.runner.RunWith

+import

[GitHub] [kafka] cadonna commented on pull request #9098: KAFKA-9924: Prepare RocksDB and metrics for RocksDB properties recording

cadonna commented on pull request #9098: URL: https://github.com/apache/kafka/pull/9098#issuecomment-665616943 Call for review: @vvcephei @guozhangwang This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167400#comment-17167400 ] Tommy Becker edited comment on KAFKA-10324 at 7/29/20, 5:46 PM: [~hachikuji] wrt why the broker does not seem to send subsequent batches, I'm not sure. But I can tell you I see this behavior even with max.partition.fetch.bytes set to Integer.MAX_VALUE. Maybe this has something to do with down conversion? Anyway, here's an excerpt from a dump of the segment containing the problematic offset, which is 13920987: baseOffset: 13920966 lastOffset: 13920987 count: 6 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 49 isTransactional: false isControl: false position: 98516844 CreateTime: 1595224747691 size: 4407 magic: 2 compresscodec: NONE crc: 1598305187 isvalid: true | offset: 13920978 CreateTime: 1595224747691 keysize: 36 valuesize: 681 sequence: -1 headerKeys: [] | offset: 13920979 CreateTime: 1595224747691 keysize: 36 valuesize: 677 sequence: -1 headerKeys: [] | offset: 13920980 CreateTime: 1595224747691 keysize: 36 valuesize: 680 sequence: -1 headerKeys: [] | offset: 13920984 CreateTime: 1595224747691 keysize: 36 valuesize: 681 sequence: -1 headerKeys: [] | offset: 13920985 CreateTime: 1595224747691 keysize: 36 valuesize: 677 sequence: -1 headerKeys: [] | offset: 13920986 CreateTime: 1595224747691 keysize: 36 valuesize: 680 sequence: -1 headerKeys: [] End of segment is here was (Author: twbecker): [~hachikuji] wrt why the broker does not seem to send subsequent batches, I'm not sure. But I can tell you I see this behavior even with max.partition.fetch.bytes set to Integer.MAX_VALUE. Maybe this has something to do with down conversion? Anyway, here's an excerpt from a dump of the segment containing the problematic offset, which is 13920987: baseOffset: 13920966 lastOffset: 13920987 count: 6 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 49 isTransactional: false isControl: false position: 98516844 CreateTime: 1595224747691 size: 4407 magic: 2 compresscodec: NONE crc: 1598305187 isvalid: true | offset: 13920978 CreateTime: 1595224747691 keysize: 36 valuesize: 681 sequence: -1 headerKeys: [] | offset: 13920979 CreateTime: 1595224747691 keysize: 36 valuesize: 677 sequence: -1 headerKeys: [] | offset: 13920980 CreateTime: 1595224747691 keysize: 36 valuesize: 680 sequence: -1 headerKeys: [] | offset: 13920984 CreateTime: 1595224747691 keysize: 36 valuesize: 681 sequence: -1 headerKeys: [] | offset: 13920985 CreateTime: 1595224747691 keysize: 36 valuesize: 677 sequence: -1 headerKeys: [] | offset: 13920986 CreateTime: 1595224747691 keysize: 36 valuesize: 680 sequence: -1 headerKeys: [] ### End of segment is here > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] mumrah commented on pull request #9008: KAFKA-9629 Use generated protocol for Fetch API

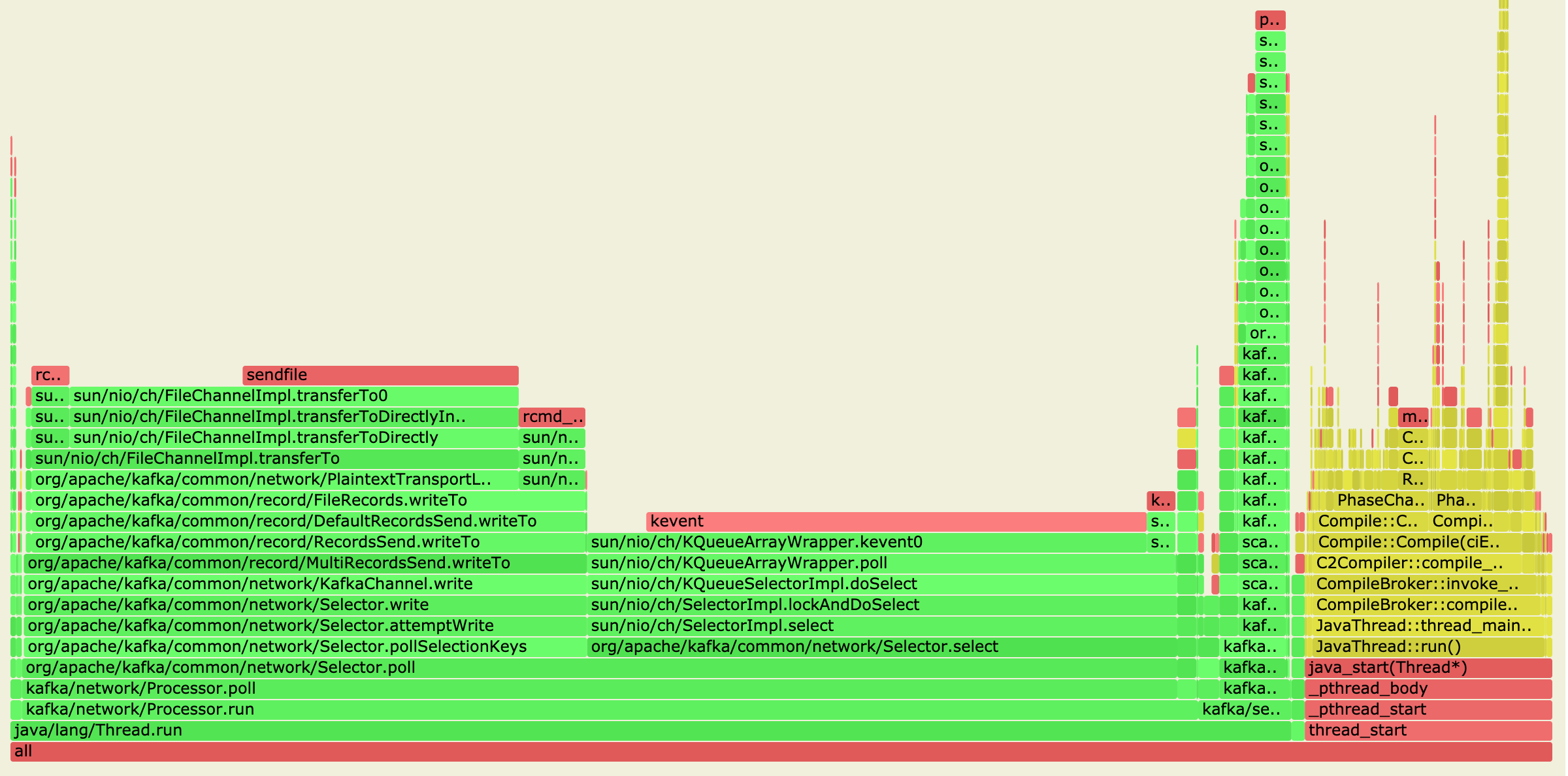

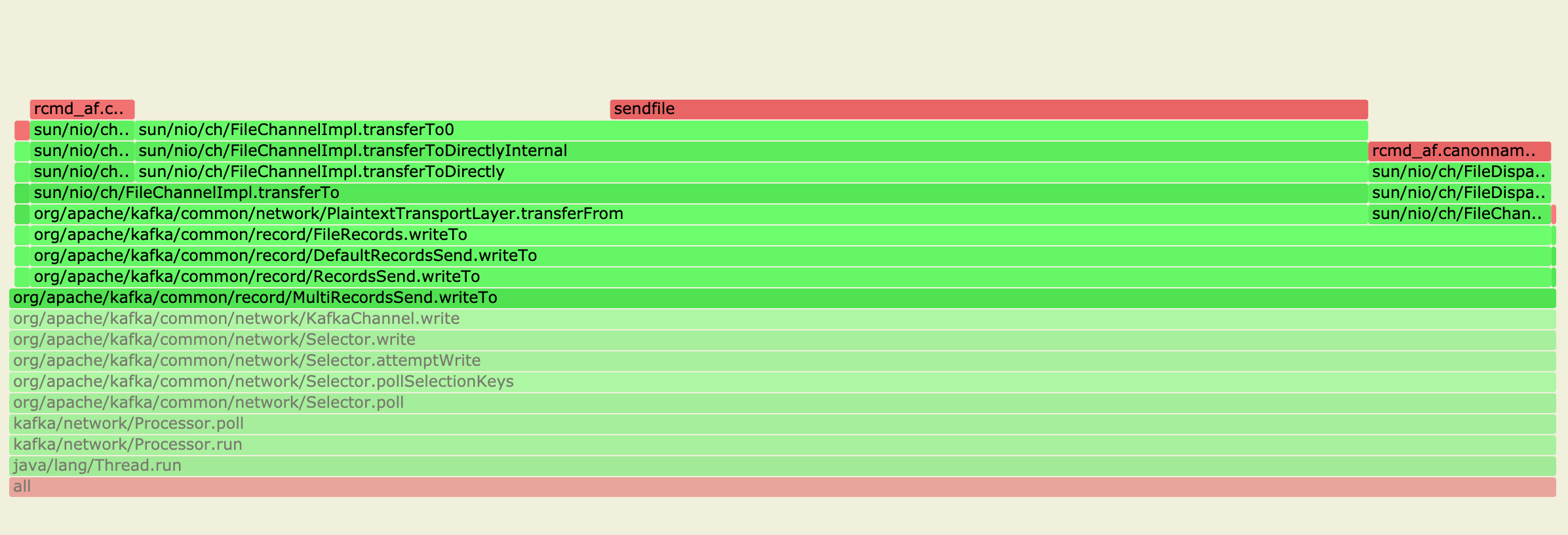

mumrah commented on pull request #9008: URL: https://github.com/apache/kafka/pull/9008#issuecomment-665795947 I ran a console consumer perf test (at @hachikuji's suggestion) and took a profile.  Zoomed in a bit on the records part:  This was with only a handful of partitions on a single broker (on my laptop), but it confirms that the new FetchResponse serialization is hitting the same sendfile path as the previous code. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167400#comment-17167400 ] Tommy Becker commented on KAFKA-10324: -- [~hachikuji] wrt why the broker does not seem to send subsequent batches, I'm not sure. But I can tell you I see this behavior even with max.partition.fetch.bytes set to Integer.MAX_VALUE. Maybe this has something to do with down conversion? Anyway, here's an excerpt from a dump of the segment containing the problematic offset, which is 13920987: baseOffset: 13920966 lastOffset: 13920987 count: 6 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 49 isTransactional: false isControl: false position: 98516844 CreateTime: 1595224747691 size: 4407 magic: 2 compresscodec: NONE crc: 1598305187 isvalid: true | offset: 13920978 CreateTime: 1595224747691 keysize: 36 valuesize: 681 sequence: -1 headerKeys: [] | offset: 13920979 CreateTime: 1595224747691 keysize: 36 valuesize: 677 sequence: -1 headerKeys: [] | offset: 13920980 CreateTime: 1595224747691 keysize: 36 valuesize: 680 sequence: -1 headerKeys: [] | offset: 13920984 CreateTime: 1595224747691 keysize: 36 valuesize: 681 sequence: -1 headerKeys: [] | offset: 13920985 CreateTime: 1595224747691 keysize: 36 valuesize: 677 sequence: -1 headerKeys: [] | offset: 13920986 CreateTime: 1595224747691 keysize: 36 valuesize: 680 sequence: -1 headerKeys: [] ### End of segment is here > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] cadonna commented on a change in pull request #9094: KAFKA-10054: KIP-613, add TRACE-level e2e latency metrics

cadonna commented on a change in pull request #9094:

URL: https://github.com/apache/kafka/pull/9094#discussion_r462327050

##

File path:

streams/src/main/java/org/apache/kafka/streams/state/internals/MeteredKeyValueStore.java

##

@@ -227,6 +234,14 @@ protected Bytes keyBytes(final K key) {

return byteEntries;

}

+private void maybeRecordE2ELatency() {

+if (e2eLatencySensor.shouldRecord() && e2eLatencySensor.hasMetrics()) {

Review comment:

I think, you do not need to check for metrics with

`e2eLatencySensor.hasMetrics()`. There should always be metrics within this

sensor.

`hasMetrics()` is used in `StreamsMetricsImpl#maybeMeasureLatency()` because

some sensors may not contain any metrics due to the built-in metrics version.

For instance, the destroy sensor exists for built-in metrics version 0.10.0-2.4

but not for latest. To avoid version checks in the record processing code, we

just create an empty sensor and call record on it effectively not recording any

metrics for this sensor for version latest.

We do not hide newly added metrics if the built-in version is set to an

older version.

Same applies to the other uses of `hasMetrics()` introduced in this PR.

##

File path:

streams/src/main/java/org/apache/kafka/streams/state/internals/MeteredSessionStore.java

##

@@ -248,4 +253,12 @@ public void close() {

private Bytes keyBytes(final K key) {

return Bytes.wrap(serdes.rawKey(key));

}

+

+private void maybeRecordE2ELatency() {

+if (e2eLatencySensor.shouldRecord() && e2eLatencySensor.hasMetrics()) {

Review comment:

Your approach makes sense to me. I agree that the latency should refer

to the update in the state store and not to record itself. If a record updates

the state more than once then latency should be measured each time.

##

File path:

streams/src/main/java/org/apache/kafka/streams/state/internals/metrics/StateStoreMetrics.java

##

@@ -443,6 +447,25 @@ public static Sensor suppressionBufferSizeSensor(final

String threadId,

);

}

+public static Sensor e2ELatencySensor(final String threadId,

+ final String taskId,

+ final String storeType,

+ final String storeName,

+ final StreamsMetricsImpl

streamsMetrics) {

+final Sensor sensor = streamsMetrics.storeLevelSensor(threadId,

taskId, storeName, RECORD_E2E_LATENCY, RecordingLevel.TRACE);

+final Map tagMap =

streamsMetrics.storeLevelTagMap(threadId, taskId, storeType, storeName);

+addAvgAndMinAndMaxToSensor(

+sensor,

+STATE_STORE_LEVEL_GROUP,

Review comment:

You need to use the `stateStoreLevelGroup()` here instead of

`STATE_STORE_LEVEL_GROUP` because the group name depends on the version and the

store type.

##

File path:

streams/src/test/java/org/apache/kafka/streams/integration/MetricsIntegrationTest.java

##

@@ -668,6 +671,9 @@ private void checkKeyValueStoreMetrics(final String

group0100To24,

checkMetricByName(listMetricStore, SUPPRESSION_BUFFER_SIZE_CURRENT, 0);

checkMetricByName(listMetricStore, SUPPRESSION_BUFFER_SIZE_AVG, 0);

checkMetricByName(listMetricStore, SUPPRESSION_BUFFER_SIZE_MAX, 0);

+checkMetricByName(listMetricStore, RECORD_E2E_LATENCY_AVG,

expectedNumberofE2ELatencyMetrics);

+checkMetricByName(listMetricStore, RECORD_E2E_LATENCY_MIN,

expectedNumberofE2ELatencyMetrics);

+checkMetricByName(listMetricStore, RECORD_E2E_LATENCY_MAX,

expectedNumberofE2ELatencyMetrics);

Review comment:

I agree with you, it should always be 1. It is the group of the metrics.

See my comment in `StateStoreMetrics`. I am glad this test served its purpose,

because I did not notice this in the unit tests!

##

File path:

streams/src/test/java/org/apache/kafka/streams/processor/internals/StreamTaskTest.java

##

@@ -468,38 +468,44 @@ public void

shouldRecordE2ELatencyOnProcessForSourceNodes() {

}

@Test

-public void shouldRecordE2ELatencyMinAndMax() {

+public void shouldRecordE2ELatencyAvgAndMinAndMax() {

time = new MockTime(0L, 0L, 0L);

metrics = new Metrics(new

MetricConfig().recordLevel(Sensor.RecordingLevel.INFO), time);

task = createStatelessTask(createConfig(false, "0"),

StreamsConfig.METRICS_LATEST);

final String sourceNode = source1.name();

-final Metric maxMetric = getProcessorMetric("record-e2e-latency",

"%s-max", task.id().toString(), sourceNode, StreamsConfig.METRICS_LATEST);

+final Metric avgMetric = getProcessorMetric("record-e2e-latency",

"%s-avg", task.id().toString(), sourceNode, StreamsConfig.METRICS_LATEST);

final Metric minMetric = getProcessorMetric("record-e2e-latency",

"%s-min",

[GitHub] [kafka] badaiaqrandista opened a new pull request #9099: KAFKA-6733: Printing additional ConsumerRecord fields in DefaultMessageFormatter

badaiaqrandista opened a new pull request #9099: URL: https://github.com/apache/kafka/pull/9099 Implementation of KIP-431 - Support of printing additional ConsumerRecord fields in DefaultMessageFormatter https://cwiki.apache.org/confluence/display/KAFKA/KIP-431%3A+Support+of+printing+additional+ConsumerRecord+fields+in+DefaultMessageFormatter *More detailed description of your change, if necessary. The PR title and PR message become the squashed commit message, so use a separate comment to ping reviewers.* *Summary of testing strategy (including rationale) for the feature or bug fix. Unit and/or integration tests are expected for any behaviour change and system tests should be considered for larger changes.* ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] guozhangwang commented on pull request #9087: HOTFIX: Set session timeout and heartbeat interval to default to decrease flakiness

guozhangwang commented on pull request #9087: URL: https://github.com/apache/kafka/pull/9087#issuecomment-665779451 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] abbccdda commented on pull request #9012: KAFKA-10270: A broker to controller channel manager

abbccdda commented on pull request #9012: URL: https://github.com/apache/kafka/pull/9012#issuecomment-665757647 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] mumrah commented on a change in pull request #9012: KAFKA-10270: A broker to controller channel manager

mumrah commented on a change in pull request #9012:

URL: https://github.com/apache/kafka/pull/9012#discussion_r462352682

##

File path:

core/src/main/scala/kafka/server/BrokerToControllerChannelManager.scala

##

@@ -0,0 +1,188 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package kafka.server

+

+import java.util.concurrent.{LinkedBlockingDeque, TimeUnit}

+

+import kafka.common.{InterBrokerSendThread, RequestAndCompletionHandler}

+import kafka.utils.Logging

+import org.apache.kafka.clients._

+import org.apache.kafka.common.requests.AbstractRequest

+import org.apache.kafka.common.utils.{LogContext, Time}

+import org.apache.kafka.common.Node

+import org.apache.kafka.common.metrics.Metrics

+import org.apache.kafka.common.network._

+import org.apache.kafka.common.protocol.Errors

+import org.apache.kafka.common.security.JaasContext

+

+import scala.collection.mutable

+import scala.jdk.CollectionConverters._

+

+/**

+ * This class manages the connection between a broker and the controller. It

runs a single

+ * {@link BrokerToControllerRequestThread} which uses the broker's metadata

cache as its own metadata to find

+ * and connect to the controller. The channel is async and runs the network

connection in the background.

+ * The maximum number of in-flight requests are set to one to ensure orderly

response from the controller, therefore

+ * care must be taken to not block on outstanding requests for too long.

+ */

+class BrokerToControllerChannelManager(metadataCache:

kafka.server.MetadataCache,

+ time: Time,

+ metrics: Metrics,

+ config: KafkaConfig,

+ threadNamePrefix: Option[String] =

None) extends Logging {

+ private val requestQueue = new

LinkedBlockingDeque[BrokerToControllerQueueItem]

+ private val logContext = new

LogContext(s"[broker-${config.brokerId}-to-controller] ")

+ private val manualMetadataUpdater = new ManualMetadataUpdater()

+ private val requestThread = newRequestThread

+

+ def start(): Unit = {

+requestThread.start()

+ }

+

+ def shutdown(): Unit = {

+requestThread.shutdown()

+requestThread.awaitShutdown()

+ }

+

+ private[server] def newRequestThread = {

+val brokerToControllerListenerName =

config.controlPlaneListenerName.getOrElse(config.interBrokerListenerName)

+val brokerToControllerSecurityProtocol =

config.controlPlaneSecurityProtocol.getOrElse(config.interBrokerSecurityProtocol)

+

+val networkClient = {

+ val channelBuilder = ChannelBuilders.clientChannelBuilder(

+brokerToControllerSecurityProtocol,

+JaasContext.Type.SERVER,

+config,

+brokerToControllerListenerName,

+config.saslMechanismInterBrokerProtocol,

+time,

+config.saslInterBrokerHandshakeRequestEnable,

+logContext

+ )

+ val selector = new Selector(

+NetworkReceive.UNLIMITED,

+Selector.NO_IDLE_TIMEOUT_MS,

+metrics,

+time,

+"BrokerToControllerChannel",

+Map("BrokerId" -> config.brokerId.toString).asJava,

+false,

+channelBuilder,

+logContext

+ )

+ new NetworkClient(

+selector,

+manualMetadataUpdater,

+config.brokerId.toString,

+1,

+0,

+0,

+Selectable.USE_DEFAULT_BUFFER_SIZE,

+Selectable.USE_DEFAULT_BUFFER_SIZE,

+config.requestTimeoutMs,

+config.connectionSetupTimeoutMs,

+config.connectionSetupTimeoutMaxMs,

+ClientDnsLookup.USE_ALL_DNS_IPS,

+time,

+false,

+new ApiVersions,

+logContext

+ )

+}

+val threadName = threadNamePrefix match {

+ case None => s"broker-${config.brokerId}-to-controller-send-thread"

+ case Some(name) =>

s"$name:broker-${config.brokerId}-to-controller-send-thread"

+}

+

+new BrokerToControllerRequestThread(networkClient, manualMetadataUpdater,

requestQueue, metadataCache, config,

+ brokerToControllerListenerName, time, threadName)

+ }

+

+ private[server] def sendRequest(request: AbstractRequest.Builder[_ <:

[GitHub] [kafka] mumrah commented on a change in pull request #9008: KAFKA-9629 Use generated protocol for Fetch API

mumrah commented on a change in pull request #9008:

URL: https://github.com/apache/kafka/pull/9008#discussion_r462364719

##

File path:

clients/src/main/java/org/apache/kafka/common/requests/FetchResponse.java

##

@@ -366,225 +255,128 @@ public FetchResponse(Errors error,

LinkedHashMap>

responseData,

int throttleTimeMs,

int sessionId) {

-this.error = error;

-this.responseData = responseData;

-this.throttleTimeMs = throttleTimeMs;

-this.sessionId = sessionId;

+this.data = toMessage(throttleTimeMs, error,

responseData.entrySet().iterator(), sessionId);

+this.responseDataMap = responseData;

}

-public static FetchResponse parse(Struct struct) {

-LinkedHashMap>

responseData = new LinkedHashMap<>();

-for (Object topicResponseObj : struct.getArray(RESPONSES_KEY_NAME)) {

-Struct topicResponse = (Struct) topicResponseObj;

-String topic = topicResponse.get(TOPIC_NAME);

-for (Object partitionResponseObj :

topicResponse.getArray(PARTITIONS_KEY_NAME)) {

-Struct partitionResponse = (Struct) partitionResponseObj;

-Struct partitionResponseHeader =

partitionResponse.getStruct(PARTITION_HEADER_KEY_NAME);

-int partition = partitionResponseHeader.get(PARTITION_ID);

-Errors error =

Errors.forCode(partitionResponseHeader.get(ERROR_CODE));

-long highWatermark =

partitionResponseHeader.get(HIGH_WATERMARK);

-long lastStableOffset =

partitionResponseHeader.getOrElse(LAST_STABLE_OFFSET,

INVALID_LAST_STABLE_OFFSET);

-long logStartOffset =

partitionResponseHeader.getOrElse(LOG_START_OFFSET, INVALID_LOG_START_OFFSET);

-Optional preferredReadReplica = Optional.of(

-partitionResponseHeader.getOrElse(PREFERRED_READ_REPLICA,

INVALID_PREFERRED_REPLICA_ID)

-

).filter(Predicate.isEqual(INVALID_PREFERRED_REPLICA_ID).negate());

-

-BaseRecords baseRecords =

partitionResponse.getRecords(RECORD_SET_KEY_NAME);

-if (!(baseRecords instanceof MemoryRecords))

-throw new IllegalStateException("Unknown records type

found: " + baseRecords.getClass());

-MemoryRecords records = (MemoryRecords) baseRecords;

-

-List abortedTransactions = null;

-if

(partitionResponseHeader.hasField(ABORTED_TRANSACTIONS_KEY_NAME)) {

-Object[] abortedTransactionsArray =

partitionResponseHeader.getArray(ABORTED_TRANSACTIONS_KEY_NAME);

-if (abortedTransactionsArray != null) {

-abortedTransactions = new

ArrayList<>(abortedTransactionsArray.length);

-for (Object abortedTransactionObj :

abortedTransactionsArray) {

-Struct abortedTransactionStruct = (Struct)

abortedTransactionObj;

-long producerId =

abortedTransactionStruct.get(PRODUCER_ID);

-long firstOffset =

abortedTransactionStruct.get(FIRST_OFFSET);

-abortedTransactions.add(new

AbortedTransaction(producerId, firstOffset));

-}

-}

-}

-

-PartitionData partitionData = new

PartitionData<>(error, highWatermark, lastStableOffset,

-logStartOffset, preferredReadReplica,

abortedTransactions, records);

-responseData.put(new TopicPartition(topic, partition),

partitionData);

-}

-}

-return new FetchResponse<>(Errors.forCode(struct.getOrElse(ERROR_CODE,

(short) 0)), responseData,

-struct.getOrElse(THROTTLE_TIME_MS, DEFAULT_THROTTLE_TIME),

struct.getOrElse(SESSION_ID, INVALID_SESSION_ID));

+public FetchResponse(FetchResponseData fetchResponseData) {

+this.data = fetchResponseData;

+this.responseDataMap = toResponseDataMap(fetchResponseData);

}

@Override

public Struct toStruct(short version) {

-return toStruct(version, throttleTimeMs, error,

responseData.entrySet().iterator(), sessionId);

+return data.toStruct(version);

}

@Override

-protected Send toSend(String dest, ResponseHeader responseHeader, short

apiVersion) {

-Struct responseHeaderStruct = responseHeader.toStruct();

-Struct responseBodyStruct = toStruct(apiVersion);

-

-// write the total size and the response header

-ByteBuffer buffer = ByteBuffer.allocate(responseHeaderStruct.sizeOf()

+ 4);

-buffer.putInt(responseHeaderStruct.sizeOf() +

responseBodyStruct.sizeOf());

-responseHeaderStruct.writeTo(buffer);

+public Send toSend(String dest, ResponseHeader responseHeader, short

apiVersion) {

+// Generate the Sends for

[GitHub] [kafka] chia7712 commented on pull request #9096: MINOR: Add comments to constrainedAssign and generalAssign method

chia7712 commented on pull request #9096: URL: https://github.com/apache/kafka/pull/9096#issuecomment-665504255 retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] badaiaqrandista commented on pull request #4807: KAFKA-6733: Support of printing additional ConsumerRecord fields in DefaultMessageFormatter

badaiaqrandista commented on pull request #4807: URL: https://github.com/apache/kafka/pull/4807#issuecomment-665660018 Closing this PR as it has been superseded by PR 9099 (https://github.com/apache/kafka/pull/9099). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] soarez commented on pull request #9000: KAFKA-10036 Improve handling and documentation of Suppliers

soarez commented on pull request #9000: URL: https://github.com/apache/kafka/pull/9000#issuecomment-665668720 @mjsax what can we do to proceed? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] gwenshap commented on pull request #9054: KAFKA-10282: Remove Log metrics immediately when deleting log

gwenshap commented on pull request #9054: URL: https://github.com/apache/kafka/pull/9054#issuecomment-665773125 retest this please This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167387#comment-17167387 ] Jason Gustafson commented on KAFKA-10324: - [~twbecker] Thanks for the report. I'm trying to understand why the fetch is not including batches beyond the one with the last offset removed. Is that because the batch itself is already satisfying the fetch max bytes? It would be helpful to include a snippet from a dump of the log with the batch that is causing problems. > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format

[ https://issues.apache.org/jira/browse/KAFKA-10324?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17167371#comment-17167371 ] Tommy Becker commented on KAFKA-10324: -- Thanks for the response [~ijuma]. Yes, these are Java consumers, and I agree it's odd that this has not been found before now. I have limited familiarity with the code base, so it's possible I'm missing something but I believe the issue is as I described. In my tests I'm trying to consume a 25GB topic and have found 2 distinct offsets which the consumer cannot advance beyond, and they are both cases where the offset: # Is the lastOffset in the last batch of its log segment # Does not actually exist, presumably due to log compaction. > Pre-0.11 consumers can get stuck when messages are downconverted from V2 > format > --- > > Key: KAFKA-10324 > URL: https://issues.apache.org/jira/browse/KAFKA-10324 > Project: Kafka > Issue Type: Bug >Reporter: Tommy Becker >Priority: Major > > As noted in KAFKA-5443, The V2 message format preserves a batch's lastOffset > even if that offset gets removed due to log compaction. If a pre-0.11 > consumer seeks to such an offset and issues a fetch, it will get an empty > batch, since offsets prior to the requested one are filtered out during > down-conversion. KAFKA-5443 added consumer-side logic to advance the fetch > offset in this case, but this leaves old consumers unable to consume these > topics. > The exact behavior varies depending on consumer version. The 0.10.0.0 > consumer throws RecordTooLargeException and dies, believing that the record > must not have been returned because it was too large. The 0.10.1.0 consumer > simply spins fetching the same empty batch over and over. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10324) Pre-0.11 consumers can get stuck when messages are downconverted from V2 format