[jira] [Updated] (KAFKA-12273) InterBrokerSendThread#pollOnce throws FatalExitError even though it is shutdown correctly

[ https://issues.apache.org/jira/browse/KAFKA-12273?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Chia-Ping Tsai updated KAFKA-12273:
---
Fix Version/s: 2.8.0

> InterBrokerSendThread#pollOnce throws FatalExitError even though it is shutdown correctly
> -
>
> Key: KAFKA-12273
> URL: https://issues.apache.org/jira/browse/KAFKA-12273
> Project: Kafka
> Issue Type: Test
> Reporter: Chia-Ping Tsai
> Assignee: Chia-Ping Tsai
> Priority: Major
> Fix For: 2.8.0
>
> Kafka tests sometimes shut down Gradle with a non-zero exit code. One root
> cause is that InterBrokerSendThread#pollOnce encounters a DisconnectException
> while the NetworkClient is closing. The DisconnectException should be treated
> as an "expected" error because we close the client ourselves. In other words,
> InterBrokerSendThread#pollOnce should swallow it.

--
This message was sent by Atlassian Jira (v8.3.4#803005)
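The behaviour proposed in KAFKA-12273 can be illustrated with a minimal sketch. This is not the actual patch: `SendLoopSketch`, its `pollOnce(Runnable)` signature, and the nested `DisconnectException` are hypothetical stand-ins, and `IllegalStateException` is used in place of `FatalExitError`. It only shows the idea of swallowing a disconnect once shutdown has been initiated while still escalating it otherwise.

```java
import java.util.concurrent.atomic.AtomicBoolean;

final class SendLoopSketch {
    /** Hypothetical stand-in for Kafka's DisconnectException. */
    static final class DisconnectException extends RuntimeException {
        DisconnectException(String message) {
            super(message);
        }
    }

    private final AtomicBoolean shutdownInitiated = new AtomicBoolean(false);

    void initiateShutdown() {
        shutdownInitiated.set(true);
    }

    /**
     * Runs one poll. Returns true if the poll completed, and false if a
     * disconnect was swallowed because shutdown had already been initiated.
     */
    boolean pollOnce(Runnable poll) {
        try {
            poll.run();
            return true;
        } catch (DisconnectException e) {
            if (shutdownInitiated.get()) {
                // Expected: we closed the client ourselves, so do not escalate.
                return false;
            }
            // Unexpected disconnect while still running: escalate
            // (stand-in for throwing FatalExitError).
            throw new IllegalStateException("fatal error in send loop", e);
        }
    }
}
```

With this shape, a disconnect raised during an orderly shutdown no longer turns into a fatal exit, while the same exception during normal operation is still treated as an error.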

[GitHub] [kafka] chia7712 commented on pull request #10124: MINOR: apply Utils.isBlank to code base

chia7712 commented on pull request #10124:
URL: https://github.com/apache/kafka/pull/10124#issuecomment-780347504

@tang7526 Thanks for your patch. Could you also fix the following code?

1. https://github.com/apache/kafka/blob/trunk/connect/runtime/src/main/java/org/apache/kafka/connect/runtime/isolation/Plugins.java#L448
2. https://github.com/apache/kafka/blob/trunk/clients/src/test/java/org/apache/kafka/common/security/oauthbearer/internals/unsecured/OAuthBearerScopeUtilsTest.java#L31
3. https://github.com/apache/kafka/blob/trunk/connect/api/src/test/java/org/apache/kafka/connect/data/ValuesTest.java#L914
4. https://github.com/apache/kafka/blob/trunk/core/src/main/scala/kafka/network/SocketServer.scala#L642
5. https://github.com/apache/kafka/blob/trunk/core/src/main/scala/kafka/server/KafkaServer.scala#L473
6. https://github.com/apache/kafka/blob/trunk/core/src/main/scala/kafka/utils/Log4jController.scala#L74
7. https://github.com/apache/kafka/blob/trunk/core/src/main/scala/kafka/utils/Log4jController.scala#L83

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org
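For readers unfamiliar with the kind of change PR #10124 makes, the following is a minimal sketch of replacing a hand-rolled blank check with a shared helper. The helper body here is an assumption about what such a utility typically looks like, not a copy of Kafka's `Utils.isBlank`, and `BlankCheckSketch`, `describeVerbose`, and `describe` are hypothetical names.

```java
final class BlankCheckSketch {
    /** Assumed semantics: null, empty, or whitespace-only strings are blank. */
    static boolean isBlank(String s) {
        return s == null || s.trim().isEmpty();
    }

    /** Before: the check is spelled out at the call site. */
    static String describeVerbose(String name) {
        if (name == null || name.trim().isEmpty()) {
            return "<unnamed>";
        }
        return name;
    }

    /** After: the same call site delegates to the shared helper. */
    static String describe(String name) {
        return isBlank(name) ? "<unnamed>" : name;
    }
}
```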

[GitHub] [kafka] cadonna commented on a change in pull request #10052: KAFKA-12289: Adding test cases for prefix scan in InMemoryKeyValueStore

cadonna commented on a change in pull request #10052:

URL: https://github.com/apache/kafka/pull/10052#discussion_r577363631

##
File path: streams/src/test/java/org/apache/kafka/streams/state/internals/InMemoryKeyValueStoreTest.java
##
@@ -60,4 +67,22 @@ public void shouldRemoveKeysWithNullValues() {
         assertThat(store.get(0), nullValue());
     }
+
+
+    @Test
+    public void shouldReturnKeysWithGivenPrefix(){
+        store = createKeyValueStore(driver.context());
+        final String value = "value";
+        final List<KeyValue<Integer, String>> entries = new ArrayList<>();
+        entries.add(new KeyValue<>(1, value));
+        entries.add(new KeyValue<>(2, value));
+        entries.add(new KeyValue<>(11, value));
+        entries.add(new KeyValue<>(13, value));
+
+        store.putAll(entries);
+        final KeyValueIterator<Integer, String> keysWithPrefix = store.prefixScan(1, new IntegerSerializer());

Review comment:

That sounds good!

[GitHub] [kafka] vamossagar12 commented on a change in pull request #10052: KAFKA-12289: Adding test cases for prefix scan in InMemoryKeyValueStore

vamossagar12 commented on a change in pull request #10052:

URL: https://github.com/apache/kafka/pull/10052#discussion_r577363103

Review comment:

> The reason we get only `1` when we scan for prefix `1` is that the
> integer serializer serializes `11` and `13` in the least significant byte
> instead of serializing `1` in the byte before the least significant byte and
> `1` and `3` in the least significant byte. With the former, the **byte**
> lexicographical order of `1 2 11 13` would be `1 2 11 13`, which corresponds to
> the natural order of integers. With the latter, the **byte** lexicographical
> order of `1 2 11 13` would be `1 11 13 2`, which corresponds to the string
> lexicographical order. So the serializer determines the order of the entries,
> and the store always returns the entries in byte lexicographical order.
>
> You will experience a similar effect when you call `range(-1, 2)` on the
> in-memory state store in the unit test. You will get back an empty result since
> `-1` is larger than `2` in byte lexicographical order when the
> `IntegerSerializer` is used. Also note the warning that is output,
> especially this part: `... or serdes that don't preserve ordering when
> lexicographically comparing the serialized bytes ...`
>
> I think we should clearly state this limitation in the javadocs of
> `prefixScan()` as we have done for `range()`, maybe with an example.
>
> Currently, to get `prefixScan()` working for all types, we would need to
> do a complete scan (i.e. `all()`) followed by a filter, right?

That is correct. That is the only way currently.

> Double checking: Is my understanding correct? @ableegoldman

I think adding a warning similar to the one for the range() query would be good.
I will do that as part of the PR. However, adding test cases for the integer
serializer in this test class won't make sense. I will probably create another
KVStore and add tests for those. Is that ok, @cadonna ?
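The ordering effect discussed in this comment can be reproduced without a state store. The sketch below is only an illustration: `PrefixOrderSketch` and its methods are hypothetical, and `intBytes` assumes the 4-byte big-endian layout that `IntegerSerializer` produces. It compares a byte-prefix match over integer keys with one over string keys, and checks the unsigned byte order of `-1` and `2`.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

final class PrefixOrderSketch {
    /** 4-byte big-endian encoding of an int (assumed serializer layout). */
    static byte[] intBytes(int v) {
        return ByteBuffer.allocate(4).putInt(v).array();
    }

    /** Unsigned lexicographic comparison of two byte arrays. */
    static int compareUnsigned(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int cmp = Integer.compare(a[i] & 0xff, b[i] & 0xff);
            if (cmp != 0) {
                return cmp;
            }
        }
        return Integer.compare(a.length, b.length);
    }

    /** True if the serialized key starts with the serialized prefix. */
    static boolean hasPrefix(byte[] key, byte[] prefix) {
        if (key.length < prefix.length) {
            return false;
        }
        for (int i = 0; i < prefix.length; i++) {
            if (key[i] != prefix[i]) {
                return false;
            }
        }
        return true;
    }

    /** Integer keys whose serialized bytes start with the serialized prefix. */
    static List<Integer> intPrefixScan(int[] keys, int prefix) {
        byte[] p = intBytes(prefix);
        List<Integer> matches = new ArrayList<>();
        for (int k : keys) {
            if (hasPrefix(intBytes(k), p)) {
                matches.add(k);
            }
        }
        return matches;
    }

    /** String keys whose UTF-8 bytes start with the UTF-8 bytes of the prefix. */
    static List<String> stringPrefixScan(String[] keys, String prefix) {
        byte[] p = prefix.getBytes(StandardCharsets.UTF_8);
        List<String> matches = new ArrayList<>();
        for (String k : keys) {
            if (hasPrefix(k.getBytes(StandardCharsets.UTF_8), p)) {
                matches.add(k);
            }
        }
        return matches;
    }
}
```

Under these assumptions, `intPrefixScan(new int[] {1, 2, 11, 13}, 1)` matches only `1`, because the four serialized bytes of `1` are a prefix of no other key, whereas `stringPrefixScan` over `"1", "2", "11", "13"` with prefix `"1"` matches `"1"`, `"11"`, and `"13"`. Likewise, `compareUnsigned(intBytes(-1), intBytes(2))` is positive, which is why `range(-1, 2)` comes back empty.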

[GitHub] [kafka] vamossagar12 commented on a change in pull request #10052: KAFKA-12289: Adding test cases for prefix scan in InMemoryKeyValueStore

vamossagar12 commented on a change in pull request #10052:

URL: https://github.com/apache/kafka/pull/10052#discussion_r577360887

Review comment:

Thanks @cadonna , @ableegoldman for the detailed explanation. I understand the
behaviour now.

[GitHub] [kafka] ijuma commented on pull request #10123: KAFKA-12327: Remove MethodHandle usage in CompressionType

ijuma commented on pull request #10123: URL: https://github.com/apache/kafka/pull/10123#issuecomment-780326554 I'm not sure it's worth it since the cost of compressing on the broker during fetches is significantly higher than compressing during produce (the data is already on the heap for the latter and there are usually multiple fetches per produce). That is not to say that it's never useful, just that the ROI seems a bit low.

[GitHub] [kafka] kamalcph opened a new pull request #10139: MINOR: Print the warning log before truncation.

kamalcph opened a new pull request #10139:
URL: https://github.com/apache/kafka/pull/10139

- After truncation, the high watermark (hw) can be moved to the truncation offset.

### Committer Checklist (excluded from commit message)
- [ ] Verify design and implementation
- [ ] Verify test coverage and CI build status
- [ ] Verify documentation (including upgrade notes)

[GitHub] [kafka] jsancio opened a new pull request #10138: KAFKA-12331: Use LEO for the base offset of LeaderChangeMessage batch

jsancio opened a new pull request #10138: URL: https://github.com/apache/kafka/pull/10138 WIP

[GitHub] [kafka] chia7712 commented on pull request #9906: KAFKA-10885 Refactor MemoryRecordsBuilderTest/MemoryRecordsTest to avoid a lot of…

chia7712 commented on pull request #9906:
URL: https://github.com/apache/kafka/pull/9906#issuecomment-780309024

@g1geordie Thanks for the update. I will merge it tomorrow :)

[GitHub] [kafka] vvcephei commented on a change in pull request #10137: KAFKA-12268: Implement task idling semantics via currentLag API

vvcephei commented on a change in pull request #10137:
URL: https://github.com/apache/kafka/pull/10137#discussion_r577333186

##
File path: clients/src/main/java/org/apache/kafka/clients/consumer/Consumer.java
##
@@ -243,6 +244,11 @@
      */
     Map<TopicPartition, Long> endOffsets(Collection<TopicPartition> partitions, Duration timeout);
+    /**
+     * @see KafkaConsumer#currentLag(TopicPartition)
+     */
+    OptionalLong currentLag(TopicPartition topicPartition);

Review comment:
Woah, you are _fast_, @chia7712 ! I just sent a message to the vote thread. I wanted to submit this PR first so that the vote thread message can have the full context available. Do you mind reading over what I said there? If it sounds good to you, then I'll update the KIP, and we can maybe put this whole mess to bed.

[GitHub] [kafka] chia7712 commented on a change in pull request #10137: KAFKA-12268: Implement task idling semantics via currentLag API

chia7712 commented on a change in pull request #10137:
URL: https://github.com/apache/kafka/pull/10137#discussion_r577332179

##
File path: clients/src/main/java/org/apache/kafka/clients/consumer/Consumer.java
##
@@ -243,6 +244,11 @@
      */
     Map<TopicPartition, Long> endOffsets(Collection<TopicPartition> partitions, Duration timeout);
+    /**
+     * @see KafkaConsumer#currentLag(TopicPartition)
+     */
+    OptionalLong currentLag(TopicPartition topicPartition);

Review comment:
Pardon me, KIP-695 does not include this change. It seems KIP-695 is still based on `metadata`? Please correct me if I misunderstand anything :)

[GitHub] [kafka] chia7712 commented on pull request #10123: KAFKA-12327: Remove MethodHandle usage in CompressionType

chia7712 commented on pull request #10123:
URL: https://github.com/apache/kafka/pull/10123#issuecomment-780303685

> Today, the solution would be to either:
> - Include the relevant native compression libraries
> - Limit topic data to the compression algorithms supported on the relevant platform
>
> Both seem doable.

@ijuma Thanks for sharing. IIRC, a Kafka producer that does not support a compression type can send uncompressed data to the server, and the data gets compressed on the server side. This is a useful feature, since compression does not prevent us from producing data in an environment that can't load the native compression libraries. In contrast to the producer, a Kafka consumer can't read data from a compressed topic if it can't load the native compression libraries. WDYT? Is it worth supporting such a scenario?

[GitHub] [kafka] vvcephei opened a new pull request #10137: KAFKA-12268: Implement task idling semantics via currentLag API

vvcephei opened a new pull request #10137:
URL: https://github.com/apache/kafka/pull/10137

Implements KIP-695

Reverts a previous behavior change to Consumer.poll and replaces it with a new Consumer.currentLag API, which returns the client's currently cached lag.

Uses this new API to implement the desired task idling semantics improvement from KIP-695.
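To make the idea of a "currently cached lag" concrete, here is a minimal sketch under stated assumptions. Only the `OptionalLong currentLag(...)` return type comes from the PR; `CachedLagSketch`, the use of plain strings for partitions, the two maps, and the clamping at zero are all hypothetical choices for illustration and do not describe the consumer's real internals.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.OptionalLong;

final class CachedLagSketch {
    // Hypothetical local caches; nothing here performs a remote call.
    private final Map<String, Long> position = new HashMap<>();
    private final Map<String, Long> cachedEndOffset = new HashMap<>();

    void updatePosition(String partition, long offset) {
        position.put(partition, offset);
    }

    void updateEndOffset(String partition, long endOffset) {
        cachedEndOffset.put(partition, endOffset);
    }

    /**
     * Returns the lag computed from cached values only, or an empty result
     * when either the position or the end offset is not yet known.
     */
    OptionalLong currentLag(String partition) {
        Long pos = position.get(partition);
        Long end = cachedEndOffset.get(partition);
        if (pos == null || end == null) {
            return OptionalLong.empty();
        }
        // Assumption: never report a negative lag.
        return OptionalLong.of(Math.max(0L, end - pos));
    }
}
```

A caller could then treat an empty result as "lag unknown" and a value of zero as "caught up", which is the kind of distinction a task-idling decision needs.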

[GitHub] [kafka] abbccdda commented on a change in pull request #10135: KAFKA-10348: Share client channel between forwarding and auto creation manager

abbccdda commented on a change in pull request #10135:
URL: https://github.com/apache/kafka/pull/10135#discussion_r577322703

##
File path: core/src/main/scala/kafka/server/KafkaServer.scala
##
@@ -134,6 +134,8 @@ class KafkaServer(
   var autoTopicCreationManager: AutoTopicCreationManager = null
+  var clientToControllerChannelManager: Option[BrokerToControllerChannelManager] = None
+
   var alterIsrManager: AlterIsrManager = null

Review comment:
We are planning to consolidate into two channels eventually:
1. broker to controller channel
2. client to controller channel

Here, auto topic creation and forwarding fall into the 2nd category, while AlterIsr would be in the 1st category.

[GitHub] [kafka] chia7712 commented on pull request #10130: MINOR: Fix typo in MirrorMaker

chia7712 commented on pull request #10130: URL: https://github.com/apache/kafka/pull/10130#issuecomment-780286687 @runom Thanks for your patch :)

[GitHub] [kafka] chia7712 merged pull request #10130: MINOR: Fix typo in MirrorMaker

chia7712 merged pull request #10130: URL: https://github.com/apache/kafka/pull/10130

[GitHub] [kafka] chia7712 commented on pull request #10130: MINOR: Fix typo in MirrorMaker

chia7712 commented on pull request #10130: URL: https://github.com/apache/kafka/pull/10130#issuecomment-780286136 the error is traced by #10024

[GitHub] [kafka] chia7712 merged pull request #10082: MINOR: use 'mapKey' to avoid unnecessary grouped data

chia7712 merged pull request #10082: URL: https://github.com/apache/kafka/pull/10082

[GitHub] [kafka] dengziming commented on a change in pull request #10135: KAFKA-10348: Share client channel between forwarding and auto creation manager

dengziming commented on a change in pull request #10135:
URL: https://github.com/apache/kafka/pull/10135#discussion_r577312082

##
File path: core/src/main/scala/kafka/server/KafkaServer.scala
##
@@ -134,6 +134,8 @@ class KafkaServer(
   var autoTopicCreationManager: AutoTopicCreationManager = null
+  var clientToControllerChannelManager: Option[BrokerToControllerChannelManager] = None
+
   var alterIsrManager: AlterIsrManager = null

Review comment:
Hello, I have a question: should we also share the channel between alterIsrManager and autoCreationManager and, furthermore, share the same one with the alterReplicaStateManager proposed in KIP-589?

[GitHub] [kafka] chia7712 commented on pull request #10082: MINOR: use 'mapKey' to avoid unnecessary grouped data

chia7712 commented on pull request #10082: URL: https://github.com/apache/kafka/pull/10082#issuecomment-780282255 the error is traced by #10024

[GitHub] [kafka] chia7712 commented on pull request #10082: MINOR: use 'mapKey' to avoid unnecessary grouped data

chia7712 commented on pull request #10082:
URL: https://github.com/apache/kafka/pull/10082#issuecomment-780282166

> Looks like ConsumerProtocolAssignment was also changed to have mapKey, so worth updating the PR to include that information.

done

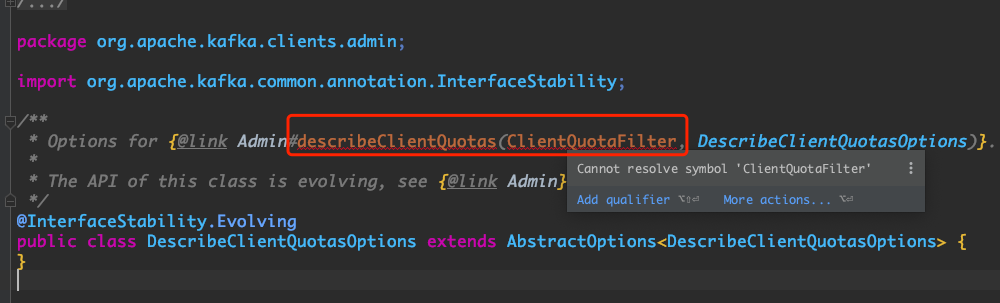

[GitHub] [kafka] dengziming opened a new pull request #10136: MINOR: Import classes that are used in docs to fix warnings.

dengziming opened a new pull request #10136:
URL: https://github.com/apache/kafka/pull/10136

*More detailed description of your change*
Fix this:

*Summary of testing strategy (including rationale)*
None

[jira] [Assigned] (KAFKA-12331) KafkaRaftClient should use the LEO when appending LeaderChangeMessage

[ https://issues.apache.org/jira/browse/KAFKA-12331?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jason Gustafson reassigned KAFKA-12331:
---
Assignee: Jose Armando Garcia Sancio

> KafkaRaftClient should use the LEO when appending LeaderChangeMessage
> -
>
> Key: KAFKA-12331
> URL: https://issues.apache.org/jira/browse/KAFKA-12331
> Project: Kafka
> Issue Type: Sub-task
> Components: replication
> Reporter: Jose Armando Garcia Sancio
> Assignee: Jose Armando Garcia Sancio
> Priority: Major
>
> KafkaMetadataLog's appendAsLeader expects the base offset to match the log
> end offset (LEO). This is enforced when KafkaRaftClient uses the
> BatchAccumulator to create batches. When creating the control batch for the
> LeaderChangeMessage, the KafkaRaftClient doesn't use the BatchAccumulator and
> instead creates the batch with the default base offset of 0.
> This causes the validation in KafkaMetadataLog to fail with the following
> exception:
> {code:java}
> kafka.common.UnexpectedAppendOffsetException: Unexpected offset in append to @metadata-0. First offset 0 is less than the next offset 5. First 10 offsets in append: ArrayBuffer(0), last offset in append: 0. Log start offset = 0
>     at kafka.log.Log.append(Log.scala:1217)
>     at kafka.log.Log.appendAsLeader(Log.scala:1092)
>     at kafka.raft.KafkaMetadataLog.appendAsLeader(KafkaMetadataLog.scala:92)
>     at org.apache.kafka.raft.KafkaRaftClient.appendAsLeader(KafkaRaftClient.java:1158)
>     at org.apache.kafka.raft.KafkaRaftClient.appendLeaderChangeMessage(KafkaRaftClient.java:449)
>     at org.apache.kafka.raft.KafkaRaftClient.onBecomeLeader(KafkaRaftClient.java:409)
>     at org.apache.kafka.raft.KafkaRaftClient.maybeTransitionToLeader(KafkaRaftClient.java:463)
>     at org.apache.kafka.raft.KafkaRaftClient.handleVoteResponse(KafkaRaftClient.java:663)
>     at org.apache.kafka.raft.KafkaRaftClient.handleResponse(KafkaRaftClient.java:1530)
>     at org.apache.kafka.raft.KafkaRaftClient.handleInboundMessage(KafkaRaftClient.java:1652)
>     at org.apache.kafka.raft.KafkaRaftClient.poll(KafkaRaftClient.java:2183)
>     at kafka.raft.KafkaRaftManager$RaftIoThread.doWork(RaftManager.scala:52)
>     at kafka.utils.ShutdownableThread.run(ShutdownableThread.scala:96)
> {code}
> We should make the following changes:
> # Fix MockLog to perform validation similar to KafkaMetadataLog::appendAsLeader
> # Use the LEO when creating the control batch for the LeaderChangeMessage
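The validation described in KAFKA-12331 can be sketched as follows. This is a simplified illustration, not the code of `KafkaMetadataLog` or `MockLog`: `LogOffsetSketch`, its `appendAsLeader(long, int)` signature, and the nested exception class are hypothetical, and a batch is reduced to a base offset plus a record count.

```java
final class LogOffsetSketch {
    /** Hypothetical stand-in for kafka.common.UnexpectedAppendOffsetException. */
    static final class UnexpectedAppendOffsetException extends RuntimeException {
        UnexpectedAppendOffsetException(String message) {
            super(message);
        }
    }

    private long logEndOffset = 0L;

    long logEndOffset() {
        return logEndOffset;
    }

    /**
     * Appends a batch of recordCount records starting at baseOffset and
     * returns the new log end offset. The base offset must equal the LEO.
     */
    long appendAsLeader(long baseOffset, int recordCount) {
        if (recordCount <= 0) {
            throw new IllegalArgumentException("recordCount must be positive");
        }
        if (baseOffset != logEndOffset) {
            throw new UnexpectedAppendOffsetException(
                "First offset " + baseOffset + " does not match the next offset " + logEndOffset);
        }
        logEndOffset += recordCount;
        return logEndOffset;
    }
}
```

In this sketch, a control batch built with the default base offset of 0 is rejected once the log already holds records, while a batch built with `logEndOffset()` as its base offset is accepted, which mirrors the second proposed change.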

[jira] [Updated] (KAFKA-12331) KafkaRaftClient should use the LEO when appending LeaderChangeMessage

[ https://issues.apache.org/jira/browse/KAFKA-12331?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jose Armando Garcia Sancio updated KAFKA-12331:
---
Description:
KafkaMetadataLog's appendAsLeader expects the base offset to match the LEO.
This is enforced when KafkaRaftClient uses the BatchAccumulator to create
batches. When creating the control batch for the LeaderChangeMessage, the
KafkaRaftClient doesn't use the BatchAccumulator and instead creates the batch
with the default base offset of 0.
This causes the validation in KafkaMetadataLog to fail with the following
exception:
{code:java}
kafka.common.UnexpectedAppendOffsetException: Unexpected offset in append to @metadata-0. First offset 0 is less than the next offset 5. First 10 offsets in append: ArrayBuffer(0), last offset in append: 0. Log start offset = 0
    at kafka.log.Log.append(Log.scala:1217)
    at kafka.log.Log.appendAsLeader(Log.scala:1092)
    at kafka.raft.KafkaMetadataLog.appendAsLeader(KafkaMetadataLog.scala:92)
    at org.apache.kafka.raft.KafkaRaftClient.appendAsLeader(KafkaRaftClient.java:1158)
    at org.apache.kafka.raft.KafkaRaftClient.appendLeaderChangeMessage(KafkaRaftClient.java:449)
    at org.apache.kafka.raft.KafkaRaftClient.onBecomeLeader(KafkaRaftClient.java:409)
    at org.apache.kafka.raft.KafkaRaftClient.maybeTransitionToLeader(KafkaRaftClient.java:463)
    at org.apache.kafka.raft.KafkaRaftClient.handleVoteResponse(KafkaRaftClient.java:663)
    at org.apache.kafka.raft.KafkaRaftClient.handleResponse(KafkaRaftClient.java:1530)
    at org.apache.kafka.raft.KafkaRaftClient.handleInboundMessage(KafkaRaftClient.java:1652)
    at org.apache.kafka.raft.KafkaRaftClient.poll(KafkaRaftClient.java:2183)
    at kafka.raft.KafkaRaftManager$RaftIoThread.doWork(RaftManager.scala:52)
    at kafka.utils.ShutdownableThread.run(ShutdownableThread.scala:96)
{code}
We should make the following changes:
# Fix MockLog to perform validation similar to KafkaMetadataLog::appendAsLeader
# Use the LEO when creating the control batch for the LeaderChangeMessage

was:
KafkaMetadataLog's appendAsLeader expects the base offset to match the LEO.
This is enforced when KafkaRaftClient uses the BatchAccumulator to create
batches. When creating the control batch for the LeaderChangeMessage the
KafkaRaftClient doesn't use the BatchAccumulator and instead creates the batch
with the default base offset of 0.
This causes the validation in KafkaMetadataLog to fail with the following
exception:
{code:java}
kafka.common.UnexpectedAppendOffsetException: Unexpected offset in append to @metadata-0. First offset 0 is less than the next offset 5. First 10 offsets in append: ArrayBuffer(0), last offset in append: 0. Log start offset = 0
    at kafka.log.Log.append(Log.scala:1217)
    at kafka.log.Log.appendAsLeader(Log.scala:1092)
    at kafka.raft.KafkaMetadataLog.appendAsLeader(KafkaMetadataLog.scala:92)
    at org.apache.kafka.raft.KafkaRaftClient.appendAsLeader(KafkaRaftClient.java:1158)
    at org.apache.kafka.raft.KafkaRaftClient.appendLeaderChangeMessage(KafkaRaftClient.java:449)
    at org.apache.kafka.raft.KafkaRaftClient.onBecomeLeader(KafkaRaftClient.java:409)
    at org.apache.kafka.raft.KafkaRaftClient.maybeTransitionToLeader(KafkaRaftClient.java:463)
    at org.apache.kafka.raft.KafkaRaftClient.handleVoteResponse(KafkaRaftClient.java:663)
    at org.apache.kafka.raft.KafkaRaftClient.handleResponse(KafkaRaftClient.java:1530)
    at org.apache.kafka.raft.KafkaRaftClient.handleInboundMessage(KafkaRaftClient.java:1652)
    at org.apache.kafka.raft.KafkaRaftClient.poll(KafkaRaftClient.java:2183)
    at kafka.raft.KafkaRaftManager$RaftIoThread.doWork(RaftManager.scala:52)
    at kafka.utils.ShutdownableThread.run(ShutdownableThread.scala:96)
{code}

> KafkaRaftClient should use the LEO when appending LeaderChangeMessage
> -
>
> Key: KAFKA-12331
> URL: https://issues.apache.org/jira/browse/KAFKA-12331
> Project: Kafka
> Issue Type: Sub-task
> Components: replication
> Reporter: Jose Armando Garcia Sancio
> Priority: Major
>
> KafkaMetadataLog's appendAsLeader expects the base offset to match the LEO.
> This is enforced when KafkaRaftClient uses the BatchAccumulator to create
> batches. When creating the control batch for the LeaderChangeMessage the
> KafkaRaftClient doesn't use the BatchAccumulator and instead creates the
> batch with the default base offset of 0.
> This causes the validation in KafkaMetadataLog to fail with the following
> exception:
> {code:java}
> kafka.common.UnexpectedAppendOffsetException: Unexpected offset in append to
> @metadata-0. First offset 0 is less than

[jira] [Created] (KAFKA-12331) KafkaRaftClient should use the LEO when appending LeaderChangeMessage

Jose Armando Garcia Sancio created KAFKA-12331:
--
Summary: KafkaRaftClient should use the LEO when appending LeaderChangeMessage
Key: KAFKA-12331
URL: https://issues.apache.org/jira/browse/KAFKA-12331
Project: Kafka
Issue Type: Sub-task
Components: replication
Reporter: Jose Armando Garcia Sancio

KafkaMetadataLog's appendAsLeader expects the base offset to match the LEO.
This is enforced when KafkaRaftClient uses the BatchAccumulator to create
batches. When creating the control batch for the LeaderChangeMessage the
KafkaRaftClient doesn't use the BatchAccumulator and instead creates the batch
with the default base offset of 0.
This causes the validation in KafkaMetadataLog to fail with the following
exception:
{code:java}
kafka.common.UnexpectedAppendOffsetException: Unexpected offset in append to @metadata-0. First offset 0 is less than the next offset 5. First 10 offsets in append: ArrayBuffer(0), last offset in append: 0. Log start offset = 0
    at kafka.log.Log.append(Log.scala:1217)
    at kafka.log.Log.appendAsLeader(Log.scala:1092)
    at kafka.raft.KafkaMetadataLog.appendAsLeader(KafkaMetadataLog.scala:92)
    at org.apache.kafka.raft.KafkaRaftClient.appendAsLeader(KafkaRaftClient.java:1158)
    at org.apache.kafka.raft.KafkaRaftClient.appendLeaderChangeMessage(KafkaRaftClient.java:449)
    at org.apache.kafka.raft.KafkaRaftClient.onBecomeLeader(KafkaRaftClient.java:409)
    at org.apache.kafka.raft.KafkaRaftClient.maybeTransitionToLeader(KafkaRaftClient.java:463)
    at org.apache.kafka.raft.KafkaRaftClient.handleVoteResponse(KafkaRaftClient.java:663)
    at org.apache.kafka.raft.KafkaRaftClient.handleResponse(KafkaRaftClient.java:1530)
    at org.apache.kafka.raft.KafkaRaftClient.handleInboundMessage(KafkaRaftClient.java:1652)
    at org.apache.kafka.raft.KafkaRaftClient.poll(KafkaRaftClient.java:2183)
    at kafka.raft.KafkaRaftManager$RaftIoThread.doWork(RaftManager.scala:52)
    at kafka.utils.ShutdownableThread.run(ShutdownableThread.scala:96)
{code}

[GitHub] [kafka] abbccdda opened a new pull request #10135: KAFKA-10348: Share client channel between forwarding and auto creation manager

abbccdda opened a new pull request #10135:
URL: https://github.com/apache/kafka/pull/10135

We want to consolidate the forwarding and auto creation channels into one channel to reduce the unnecessary connections maintained between brokers and the controller.

[GitHub] [kafka] junrao commented on a change in pull request #10070: KAFKA-12276: Add the quorum controller code

junrao commented on a change in pull request #10070:

URL: https://github.com/apache/kafka/pull/10070#discussion_r577278241

##

File path:

metadata/src/main/java/org/apache/kafka/controller/QuorumController.java

##

@@ -0,0 +1,920 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.kafka.controller;

+

+import org.apache.kafka.clients.admin.AlterConfigOp.OpType;

+import org.apache.kafka.common.config.ConfigDef;

+import org.apache.kafka.common.config.ConfigResource;

+import org.apache.kafka.common.errors.ApiException;

+import org.apache.kafka.common.errors.NotControllerException;

+import org.apache.kafka.common.errors.UnknownServerException;

+import org.apache.kafka.common.message.AlterIsrRequestData;

+import org.apache.kafka.common.message.AlterIsrResponseData;

+import org.apache.kafka.common.message.BrokerHeartbeatRequestData;

+import org.apache.kafka.common.message.BrokerRegistrationRequestData;

+import org.apache.kafka.common.message.CreateTopicsRequestData;

+import org.apache.kafka.common.message.CreateTopicsResponseData;

+import org.apache.kafka.common.message.ElectLeadersRequestData;

+import org.apache.kafka.common.message.ElectLeadersResponseData;

+import org.apache.kafka.common.metadata.ConfigRecord;

+import org.apache.kafka.common.metadata.FenceBrokerRecord;

+import org.apache.kafka.common.metadata.IsrChangeRecord;

+import org.apache.kafka.common.metadata.MetadataRecordType;

+import org.apache.kafka.common.metadata.PartitionRecord;

+import org.apache.kafka.common.metadata.QuotaRecord;

+import org.apache.kafka.common.metadata.RegisterBrokerRecord;

+import org.apache.kafka.common.metadata.TopicRecord;

+import org.apache.kafka.common.metadata.UnfenceBrokerRecord;

+import org.apache.kafka.common.metadata.UnregisterBrokerRecord;

+import org.apache.kafka.common.protocol.ApiMessage;

+import org.apache.kafka.common.quota.ClientQuotaAlteration;

+import org.apache.kafka.common.quota.ClientQuotaEntity;

+import org.apache.kafka.common.requests.ApiError;

+import org.apache.kafka.common.utils.LogContext;

+import org.apache.kafka.common.utils.Time;

+import org.apache.kafka.common.utils.Utils;

+import org.apache.kafka.metadata.ApiMessageAndVersion;

+import org.apache.kafka.metadata.BrokerHeartbeatReply;

+import org.apache.kafka.metadata.BrokerRegistrationReply;

+import org.apache.kafka.metadata.FeatureManager;

+import org.apache.kafka.metadata.VersionRange;

+import org.apache.kafka.metalog.MetaLogLeader;

+import org.apache.kafka.metalog.MetaLogListener;

+import org.apache.kafka.metalog.MetaLogManager;

+import org.apache.kafka.queue.EventQueue.EarliestDeadlineFunction;

+import org.apache.kafka.queue.EventQueue;

+import org.apache.kafka.queue.KafkaEventQueue;

+import org.apache.kafka.timeline.SnapshotRegistry;

+import org.slf4j.Logger;

+

+import java.util.ArrayList;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.List;

+import java.util.Map.Entry;

+import java.util.Map;

+import java.util.Optional;

+import java.util.Random;

+import java.util.Set;

+import java.util.concurrent.CompletableFuture;

+import java.util.concurrent.RejectedExecutionException;

+import java.util.concurrent.TimeUnit;

+import java.util.function.Supplier;

+import java.util.stream.Collectors;

+

+

+public final class QuorumController implements Controller {

+/**

+ * A builder class which creates the QuorumController.

+ */

+static public class Builder {

+private final int nodeId;

+private Time time = Time.SYSTEM;

+private String threadNamePrefix = null;

+private LogContext logContext = null;

+private Map configDefs = Collections.emptyMap();

+private MetaLogManager logManager = null;

+private Map supportedFeatures = Collections.emptyMap();

+private short defaultReplicationFactor = 3;

+private int defaultNumPartitions = 1;

+private ReplicaPlacementPolicy replicaPlacementPolicy =

+new SimpleReplicaPlacementPolicy(new Random());

+private long sessionTimeoutNs = TimeUnit.NANOSECONDS.convert(18, TimeUnit.SECONDS);

+

+public Builder(int nodeId) {

+this.nodeId = nodeId;

+}

+

+public

[GitHub] [kafka] junrao commented on a change in pull request #10070: KAFKA-12276: Add the quorum controller code

junrao commented on a change in pull request #10070:

URL: https://github.com/apache/kafka/pull/10070#discussion_r577268593

##

File path:

metadata/src/main/java/org/apache/kafka/controller/ClusterControlManager.java

##

@@ -0,0 +1,456 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.kafka.controller;

+

+import org.apache.kafka.common.Endpoint;

+import org.apache.kafka.common.errors.DuplicateBrokerRegistrationException;

+import org.apache.kafka.common.errors.StaleBrokerEpochException;

+import org.apache.kafka.common.errors.UnsupportedVersionException;

+import org.apache.kafka.common.message.BrokerHeartbeatRequestData;

+import org.apache.kafka.common.message.BrokerRegistrationRequestData;

+import org.apache.kafka.common.metadata.FenceBrokerRecord;

+import org.apache.kafka.common.metadata.RegisterBrokerRecord;

+import org.apache.kafka.common.metadata.UnfenceBrokerRecord;

+import org.apache.kafka.common.metadata.UnregisterBrokerRecord;

+import org.apache.kafka.common.security.auth.SecurityProtocol;

+import org.apache.kafka.common.utils.LogContext;

+import org.apache.kafka.common.utils.Time;

+import org.apache.kafka.metadata.ApiMessageAndVersion;

+import org.apache.kafka.metadata.BrokerHeartbeatReply;

+import org.apache.kafka.metadata.BrokerRegistration;

+import org.apache.kafka.metadata.BrokerRegistrationReply;

+import org.apache.kafka.metadata.FeatureManager;

+import org.apache.kafka.metadata.VersionRange;

+import org.apache.kafka.timeline.SnapshotRegistry;

+import org.apache.kafka.timeline.TimelineHashMap;

+import org.slf4j.Logger;

+

+import java.util.ArrayList;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Map;

+import java.util.Optional;

+import java.util.Set;

+import java.util.concurrent.CompletableFuture;

+

+

+public class ClusterControlManager {

+class ReadyBrokersFuture {

+private final CompletableFuture future;

+private final int minBrokers;

+

+ReadyBrokersFuture(CompletableFuture future, int minBrokers) {

+this.future = future;

+this.minBrokers = minBrokers;

+}

+

+boolean check() {

+int numUnfenced = 0;

+for (BrokerRegistration registration : brokerRegistrations.values()) {

+if (!registration.fenced()) {

+numUnfenced++;

+}

+if (numUnfenced >= minBrokers) {

+return true;

+}

+}

+return false;

+}

+}

+

+/**

+ * The SLF4J log context.

+ */

+private final LogContext logContext;

+

+/**

+ * The SLF4J log object.

+ */

+private final Logger log;

+

+/**

+ * The Kafka clock object to use.

+ */

+private final Time time;

+

+/**

+ * How long sessions should last, in nanoseconds.

+ */

+private final long sessionTimeoutNs;

+

+/**

+ * The replica placement policy to use.

+ */

+private final ReplicaPlacementPolicy placementPolicy;

+

+/**

+ * Maps broker IDs to broker registrations.

+ */

+private final TimelineHashMap brokerRegistrations;

+

+/**

+ * The broker heartbeat manager, or null if this controller is on standby.

+ */

+private BrokerHeartbeatManager heartbeatManager;

+

+/**

+ * A future which is completed as soon as we have the given number of brokers

+ * ready.

+ */

+private Optional readyBrokersFuture;

+

+ClusterControlManager(LogContext logContext,

+ Time time,

+ SnapshotRegistry snapshotRegistry,

+ long sessionTimeoutNs,

+ ReplicaPlacementPolicy placementPolicy) {

+this.logContext = logContext;

+this.log = logContext.logger(ClusterControlManager.class);

+this.time = time;

+this.sessionTimeoutNs = sessionTimeoutNs;

+this.placementPolicy = placementPolicy;

+this.brokerRegistrations = new TimelineHashMap<>(snapshotRegistry, 0);

+this.heartbeatManager = null;

+this.readyBrokersFuture = Optional.empty();

+}

+

+/**

+ * Transition

[GitHub] [kafka] junrao commented on a change in pull request #10070: KAFKA-12276: Add the quorum controller code

junrao commented on a change in pull request #10070:

URL: https://github.com/apache/kafka/pull/10070#discussion_r577263866

##

File path:

metadata/src/main/java/org/apache/kafka/controller/QuorumController.java

##

@@ -0,0 +1,920 @@


[GitHub] [kafka] junrao commented on a change in pull request #10070: KAFKA-12276: Add the quorum controller code

junrao commented on a change in pull request #10070:

URL: https://github.com/apache/kafka/pull/10070#discussion_r577262652

##

File path:

metadata/src/main/java/org/apache/kafka/controller/ReplicationControlManager.java

##

@@ -0,0 +1,712 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.kafka.controller;

+

+import org.apache.kafka.clients.admin.AlterConfigOp.OpType;

+import org.apache.kafka.common.ElectionType;

+import org.apache.kafka.common.Uuid;

+import org.apache.kafka.common.config.ConfigResource;

+import org.apache.kafka.common.errors.InvalidReplicationFactorException;

+import org.apache.kafka.common.errors.InvalidRequestException;

+import org.apache.kafka.common.errors.InvalidTopicException;

+import org.apache.kafka.common.internals.Topic;

+import org.apache.kafka.common.message.AlterIsrRequestData;

+import org.apache.kafka.common.message.AlterIsrResponseData;

+import org.apache.kafka.common.message.CreateTopicsRequestData.CreatableReplicaAssignment;

+import org.apache.kafka.common.message.CreateTopicsRequestData.CreatableTopic;

+import org.apache.kafka.common.message.CreateTopicsRequestData.CreatableTopicCollection;

+import org.apache.kafka.common.message.CreateTopicsRequestData;

+import org.apache.kafka.common.message.CreateTopicsResponseData.CreatableTopicResult;

+import org.apache.kafka.common.message.CreateTopicsResponseData;

+import org.apache.kafka.common.message.ElectLeadersRequestData.TopicPartitions;

+import org.apache.kafka.common.message.ElectLeadersRequestData;

+import org.apache.kafka.common.message.ElectLeadersResponseData.PartitionResult;

+import org.apache.kafka.common.message.ElectLeadersResponseData.ReplicaElectionResult;

+import org.apache.kafka.common.message.ElectLeadersResponseData;

+import org.apache.kafka.common.metadata.IsrChangeRecord;

+import org.apache.kafka.common.metadata.PartitionRecord;

+import org.apache.kafka.common.metadata.TopicRecord;

+import org.apache.kafka.common.protocol.Errors;

+import org.apache.kafka.common.requests.ApiError;

+import org.apache.kafka.common.utils.LogContext;

+import org.apache.kafka.controller.BrokersToIsrs.TopicPartition;

+import org.apache.kafka.metadata.ApiMessageAndVersion;

+import org.apache.kafka.timeline.SnapshotRegistry;

+import org.apache.kafka.timeline.TimelineHashMap;

+import org.slf4j.Logger;

+

+import java.util.AbstractMap.SimpleImmutableEntry;

+import java.util.ArrayList;

+import java.util.Arrays;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.Iterator;

+import java.util.List;

+import java.util.Map;

+import java.util.Map.Entry;

+import java.util.Objects;

+import java.util.Random;

+

+import static org.apache.kafka.clients.admin.AlterConfigOp.OpType.SET;

+import static org.apache.kafka.common.config.ConfigResource.Type.TOPIC;

+

+

+public class ReplicationControlManager {

+static class TopicControlInfo {

+private final Uuid id;

+private final TimelineHashMap parts;

+

+TopicControlInfo(SnapshotRegistry snapshotRegistry, Uuid id) {

+this.id = id;

+this.parts = new TimelineHashMap<>(snapshotRegistry, 0);

+}

+}

+

+static class PartitionControlInfo {

+private final int[] replicas;

+private final int[] isr;

+private final int[] removingReplicas;

+private final int[] addingReplicas;

+private final int leader;

+private final int leaderEpoch;

+private final int partitionEpoch;

+

+PartitionControlInfo(PartitionRecord record) {

+this(Replicas.toArray(record.replicas()),

+Replicas.toArray(record.isr()),

+Replicas.toArray(record.removingReplicas()),

+Replicas.toArray(record.addingReplicas()),

+record.leader(),

+record.leaderEpoch(),

+record.partitionEpoch());

+}

+

+PartitionControlInfo(int[] replicas, int[] isr, int[] removingReplicas,

+ int[] addingReplicas, int leader, int leaderEpoch,

+ int partitionEpoch) {

+this.replicas = replicas;

+this.isr = isr;

+

[GitHub] [kafka] junrao commented on a change in pull request #10070: KAFKA-12276: Add the quorum controller code

junrao commented on a change in pull request #10070:

URL: https://github.com/apache/kafka/pull/10070#discussion_r577259797

##

File path:

metadata/src/main/java/org/apache/kafka/controller/QuorumController.java

##

@@ -0,0 +1,920 @@


[GitHub] [kafka] mjsax commented on pull request #9670: MINOR: Clarify config names for EOS versions 1 and 2

mjsax commented on pull request #9670: URL: https://github.com/apache/kafka/pull/9670#issuecomment-780225430 Thanks @JimGalasyn! Merged to `trunk` and cherry-picked to `2.8`, `2.7`, and `2.6` branches.

[GitHub] [kafka] mjsax merged pull request #9670: MINOR: Clarify config names for EOS versions 1 and 2

mjsax merged pull request #9670: URL: https://github.com/apache/kafka/pull/9670

[jira] [Commented] (KAFKA-12328) Find out partition of a store iterator

[

https://issues.apache.org/jira/browse/KAFKA-12328?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17285569#comment-17285569

]

Matthias J. Sax commented on KAFKA-12328:

-

You should be able to use `ProcessorContext.taskId()` – task-ids are encoded as

"<subtopologyId>_<partition>", so with some string parsing you should

be able to get the partition number.

Because no record is processed in a punctuation, there is not really a

partition number... (`ProcessorContext.partition()` returns the partition of

the currently processed record). – I guess, strictly speaking (given the

current design), the partition number is fixed (tied to the task), so we could

actually always return it regardless of whether we have a record at hand or not.
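
The string parsing suggested above could look roughly like the following. This is only a sketch, not code from Kafka itself: the class name `TaskIdPartition` and the method `partitionOf` are hypothetical, and it assumes the task id string ends in an underscore followed by the partition number. Inside a punctuator, the input would presumably come from `context.taskId().toString()`.

```java
public final class TaskIdPartition {
    private TaskIdPartition() { }

    /**
     * Returns the number that follows the last underscore of a task id string.
     * Assumption: the task id is rendered as "<something>_<partition>".
     */
    public static int partitionOf(String taskId) {
        int sep = taskId.lastIndexOf('_');
        if (sep < 0 || sep == taskId.length() - 1) {
            throw new IllegalArgumentException("Unexpected task id format: " + taskId);
        }
        // Integer.parseInt throws NumberFormatException (an IllegalArgumentException)
        // if the suffix is not a number.
        return Integer.parseInt(taskId.substring(sep + 1));
    }

    public static void main(String[] args) {
        // Hypothetical task id string, used only for illustration.
        System.out.println(partitionOf("0_3"));
    }
}
```

Splitting on the last underscore rather than the first keeps the sketch tolerant of prefixes that may themselves contain underscores; whether that matters depends on the Kafka Streams version in use.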

> Find out partition of a store iterator

> --

>

> Key: KAFKA-12328

> URL: https://issues.apache.org/jira/browse/KAFKA-12328

> Project: Kafka

> Issue Type: Wish

> Components: streams

>Reporter: fml2

>Priority: Major

>

> This question was posted [on

> stackoverflow|https://stackoverflow.com/questions/66032099/kafka-streams-how-to-get-the-partition-an-iterartor-is-iterating-over]

> and got an answer but the solution is quite complicated hence this ticket.

>

> In my Kafka Streams application, I have a task that sets up a scheduled (by

> the wall time) punctuator. The punctuator iterates over the entries of a

> store and does something with them. Like this:

> {code:java}

> var store = context().getStateStore("MyStore");

> var iter = store.all();

> while (iter.hasNext()) {

>   var entry = iter.next();

>   // ... do something with the entry

> }

> // Print a summary (now): N entries processed

> // Print a summary (wish): N entries processed in partition P

> {code}

> Is it possible to find out which partition the punctuator operates on? The

> Javadoc for {{ProcessorContext.partition()}} states that this method

> returns {{-1}} within punctuators.

> I've read [Kafka Streams: Punctuate vs

> Process|https://stackoverflow.com/questions/50776987/kafka-streams-punctuate-vs-process]

> and the answers there. I can understand that a task is, in general, not tied

> to a particular partition. But an iterator should be tied IMO.

> How can I find out the partition?

> Or is my assumption that a particular instance of a store iterator is tied to

> a partition wrong?

> What I need it for: I'd like to include the partition number in some log

> messages. For now, I have several nearly identical log messages stating that

> the punctuator does this and that. In order to make those messages "unique"

> I'd like to include the partition number into them.

> Since I'm working with a single store here (which might be partitioned), I

> assume that every single execution of the punctuator is bound to a single

> partition of that store.

>

> It would be cool if there were a method {{iterator.partition}} (or similar)

> to get this information.


[jira] [Updated] (KAFKA-12328) Find out partition of a store iterator

[

https://issues.apache.org/jira/browse/KAFKA-12328?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Matthias J. Sax updated KAFKA-12328:

Component/s: streams



[GitHub] [kafka] mjsax opened a new pull request #10134: TRIVIAL: fix JavaDocs formatting

mjsax opened a new pull request #10134: URL: https://github.com/apache/kafka/pull/10134 Should be cherry-picked to `2.8` branch.

[GitHub] [kafka] hachikuji commented on a change in pull request #10063: KAFKA-12258: Add support for splitting appending records

hachikuji commented on a change in pull request #10063:

URL: https://github.com/apache/kafka/pull/10063#discussion_r577225891

##

File path:

raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -79,67 +80,110 @@ public BatchAccumulator(

}

/**

- * Append a list of records into an atomic batch. We guarantee all records

- * are included in the same underlying record batch so that either all of

- * the records become committed or none of them do.

+ * Append a list of records into as many batches as necessary.

*

- * @param epoch the expected leader epoch. If this does not match, then

- * {@link Long#MAX_VALUE} will be returned as an offset which

- * cannot become committed.

- * @param records the list of records to include in a batch

- * @return the expected offset of the last record (which will be

- * {@link Long#MAX_VALUE} if the epoch does not match), or null if

- * no memory could be allocated for the batch at this time

+ * The order of the elements in the records argument will match the order

in the batches.

+ * This method will use as many batches as necessary to serialize all of

the records. Since

+ * this method can split the records into multiple batches it is possible

that some of the

+ * records will get committed while others will not when the leader fails.

+ *

+ * @param epoch the expected leader epoch. If this does not match, then

{@link Long#MAX_VALUE}

+ * will be returned as an offset which cannot become committed

+ * @param records the list of records to include in the batches

+ * @return the expected offset of the last record; {@link Long#MAX_VALUE}

if the epoch does not

+ * match; null if no memory could be allocated for the batch at

this time

+ * @throws RecordBatchTooLargeException if the size of one record T is

greater than the maximum

+ * batch size; if this exception is thrown, some of the elements in

records may have

+ * been committed

*/

public Long append(int epoch, List<T> records) {

+return append(epoch, records, false);

+}

+

+/**

+ * Append a list of records into an atomic batch. We guarantee all records

are included in the

+ * same underlying record batch so that either all of the records become

committed or none of

+ * them do.

+ *

+ * @param epoch the expected leader epoch. If this does not match, then

{@link Long#MAX_VALUE}

+ * will be returned as an offset which cannot become committed

+ * @param records the list of records to include in a batch

+ * @return the expected offset of the last record; {@link Long#MAX_VALUE}

if the epoch does not

+ * match; null if no memory could be allocated for the batch at

this time

+ * @throws RecordBatchTooLargeException if the size of the records is

greater than the maximum

+ * batch size; if this exception is thrown, none of the elements in

records were

+ * committed

+ */

+public Long appendAtomic(int epoch, List<T> records) {

+return append(epoch, records, true);

+}

+

+private Long append(int epoch, List<T> records, boolean isAtomic) {

if (epoch != this.epoch) {

-// If the epoch does not match, then the state machine probably

-// has not gotten the notification about the latest epoch change.

-// In this case, ignore the append and return a large offset value

-// which will never be committed.

return Long.MAX_VALUE;

}

ObjectSerializationCache serializationCache = new

ObjectSerializationCache();

-int batchSize = 0;

-for (T record : records) {

-batchSize += serde.recordSize(record, serializationCache);

-}

-

-if (batchSize > maxBatchSize) {

-throw new IllegalArgumentException("The total size of " + records

+ " is " + batchSize +

-", which exceeds the maximum allowed batch size of " +

maxBatchSize);

-}

+int[] recordSizes = records

+.stream()

+.mapToInt(record -> serde.recordSize(record, serializationCache))

+.toArray();

appendLock.lock();

try {

maybeCompleteDrain();

-BatchBuilder<T> batch = maybeAllocateBatch(batchSize);

-if (batch == null) {

-return null;

-}

-

-// Restart the linger timer if necessary

-if (!lingerTimer.isRunning()) {

-lingerTimer.reset(time.milliseconds() + lingerMs);

+BatchBuilder<T> batch = null;

+if (isAtomic) {

+batch = maybeAllocateBatch(recordSizes);

}

for (T record : records) {

+if (!isAtomic) {

+batch =

[GitHub] [kafka] hachikuji commented on a change in pull request #10063: KAFKA-12258: Add support for splitting appending records

hachikuji commented on a change in pull request #10063:

URL: https://github.com/apache/kafka/pull/10063#discussion_r577216920

##

File path: raft/src/main/java/org/apache/kafka/raft/RaftClient.java

##

@@ -77,6 +79,29 @@ default void handleResign() {}

*/

void register(Listener<T> listener);

+/**

+ * Append a list of records to the log. The write will be scheduled for

some time

+ * in the future. There is no guarantee that appended records will be

written to

+ * the log and eventually committed. While the order of the records is

preserved, they can

+ * be appended to the log using one or more batches. This means that each

record could

+ * be committed independently.

Review comment:

It might already be clear enough given the previous sentence, but maybe

we could emphasize that if any record becomes committed, then all records

ordered before it are guaranteed to be committed as well.
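
To make the ordering guarantee discussed above concrete, here is a minimal, self-contained sketch. It is not the BatchAccumulator code from the pull request: the class `BatchSplitSketch` and its methods `split` and `committedRecords` are hypothetical names made up for illustration, records are represented only by their serialized sizes, and the greedy packing strategy is an assumption. It shows that when records are split across batches in order and batches commit in order, the committed records always form a prefix of the input.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration only; not Kafka's BatchAccumulator.
final class BatchSplitSketch {

    /**
     * Greedily packs records (given by their serialized sizes) into batches
     * of at most maxBatchSize bytes, preserving the input order.
     */
    static List<List<Integer>> split(List<Integer> recordSizes, int maxBatchSize) {
        List<List<Integer>> batches = new ArrayList<>();
        List<Integer> current = new ArrayList<>();
        int currentSize = 0;
        for (int size : recordSizes) {
            if (size > maxBatchSize) {
                // A single record that cannot fit in any batch is an error.
                throw new IllegalArgumentException(
                    "Record of size " + size + " exceeds max batch size " + maxBatchSize);
            }
            if (currentSize + size > maxBatchSize) {
                // Close the current batch and start a new one.
                batches.add(current);
                current = new ArrayList<>();
                currentSize = 0;
            }
            current.add(size);
            currentSize += size;
        }
        if (!current.isEmpty()) {
            batches.add(current);
        }
        return batches;
    }

    /**
     * Number of records committed when exactly the first committedBatches
     * batches commit. Because batches commit in order, this count always
     * describes a prefix of the original record list.
     */
    static int committedRecords(List<List<Integer>> batches, int committedBatches) {
        int count = 0;
        for (int i = 0; i < committedBatches && i < batches.size(); i++) {
            count += batches.get(i).size();
        }
        return count;
    }

    public static void main(String[] args) {
        List<List<Integer>> batches = split(List.of(40, 40, 40, 90, 10), 100);
        System.out.println("batches = " + batches);
        // If the leader fails after the second batch commits, only the
        // first three records (a prefix of the input) are committed.
        System.out.println("committed = " + committedRecords(batches, 2));
    }
}
```

In this sketch an atomic append would correspond to requiring that `split` return a single batch, so that either every record commits or none does.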

##

File path:

raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -79,67 +80,110 @@ public BatchAccumulator(

}

/**

- * Append a list of records into an atomic batch. We guarantee all records

- * are included in the same underlying record batch so that either all of

- * the records become committed or none of them do.

+ * Append a list of records into as many batches as necessary.

*

- * @param epoch the expected leader epoch. If this does not match, then

- * {@link Long#MAX_VALUE} will be returned as an offset which

- * cannot become committed.

- * @param records the list of records to include in a batch

- * @return the expected offset of the last record (which will be

- * {@link Long#MAX_VALUE} if the epoch does not match), or null if

- * no memory could be allocated for the batch at this time

+ * The order of the elements in the records argument will match the order

in the batches.

+ * This method will use as many batches as necessary to serialize all of

the records. Since

+ * this method can split the records into multiple batches it is possible

that some of the

+ * records will get committed while others will not when the leader fails.

+ *

+ * @param epoch the expected leader epoch. If this does not match, then

{@link Long#MAX_VALUE}

+ * will be returned as an offset which cannot become committed

+ * @param records the list of records to include in the batches

+ * @return the expected offset of the last record; {@link Long#MAX_VALUE}

if the epoch does not

+ * match; null if no memory could be allocated for the batch at

this time

+ * @throws RecordBatchTooLargeException if the size of one record T is

greater than the maximum

+ * batch size; if this exception is thrown, some of the elements in

records may have

+ * been committed

*/

public Long append(int epoch, List<T> records) {

+return append(epoch, records, false);

+}

+

+/**

+ * Append a list of records into an atomic batch. We guarantee all records

are included in the

+ * same underlying record batch so that either all of the records become

committed or none of

+ * them do.

+ *

+ * @param epoch the expected leader epoch. If this does not match, then

{@link Long#MAX_VALUE}

+ * will be returned as an offset which cannot become committed

+ * @param records the list of records to include in a batch

+ * @return the expected offset of the last record; {@link Long#MAX_VALUE}

if the epoch does not

+ * match; null if no memory could be allocated for the batch at

this time

+ * @throws RecordBatchTooLargeException if the size of the records is

greater than the maximum

+ * batch size; if this exception is thrown, none of the elements in

records were

+ * committed

+ */

+public Long appendAtomic(int epoch, List<T> records) {

+return append(epoch, records, true);

+}

+

+private Long append(int epoch, List<T> records, boolean isAtomic) {

if (epoch != this.epoch) {

-// If the epoch does not match, then the state machine probably

-// has not gotten the notification about the latest epoch change.

-// In this case, ignore the append and return a large offset value

-// which will never be committed.

return Long.MAX_VALUE;

}

ObjectSerializationCache serializationCache = new

ObjectSerializationCache();

-int batchSize = 0;

-for (T record : records) {

-batchSize += serde.recordSize(record, serializationCache);

-}

-

-if (batchSize > maxBatchSize) {

-throw new IllegalArgumentException("The total size of " + records

+ " is " + batchSize +

-", which exceeds the maximum allowed batch size of " +

maxBatchSize);

[GitHub] [kafka] cmccabe commented on a change in pull request #10070: KAFKA-12276: Add the quorum controller code

cmccabe commented on a change in pull request #10070:

URL: https://github.com/apache/kafka/pull/10070#discussion_r577217605

##

File path:

metadata/src/main/java/org/apache/kafka/controller/QuorumController.java

##

@@ -0,0 +1,920 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with