[GitHub] [kafka] ItherNiT commented on pull request #6329: KAFKA-1194: Fix renaming open files on Windows

ItherNiT commented on pull request #6329: URL: https://github.com/apache/kafka/pull/6329#issuecomment-799172904 Any update when this will be integrated into kafka? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (KAFKA-7870) Error sending fetch request (sessionId=1578860481, epoch=INITIAL) to node 2: java.io.IOException: Connection to 2 was disconnected before the response was read.

[

https://issues.apache.org/jira/browse/KAFKA-7870?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17301470#comment-17301470

]

ShiminHuang commented on KAFKA-7870:

We actually found this in version 2.4.1 as well

> Error sending fetch request (sessionId=1578860481, epoch=INITIAL) to node 2:

> java.io.IOException: Connection to 2 was disconnected before the response was

> read.

>

>

> Key: KAFKA-7870

> URL: https://issues.apache.org/jira/browse/KAFKA-7870

> Project: Kafka

> Issue Type: Bug

> Components: clients

>Affects Versions: 2.1.0

>Reporter: Chakhsu Lau

>Priority: Blocker

>

> We built a Kafka cluster with 5 brokers, but one of the brokers suddenly stopped

> running during operation. This happened twice on the same broker. Here is the

> log. Is this a bug in Kafka?

> {code:java}

> [2019-01-25 12:57:14,686] INFO [ReplicaFetcher replicaId=3, leaderId=2,

> fetcherId=0] Error sending fetch request (sessionId=1578860481,

> epoch=INITIAL) to node 2: java.io.IOException: Connection to 2 was

> disconnected before the response was read.

> (org.apache.kafka.clients.FetchSessionHandler)

> [2019-01-25 12:57:14,687] WARN [ReplicaFetcher replicaId=3, leaderId=2,

> fetcherId=0] Error in response for fetch request (type=FetchRequest,

> replicaId=3, maxWait=500, minBytes=1, maxBytes=10485760,

> fetchData={api-result-bi-heatmap-8=(offset=0, logStartOffset=0,

> maxBytes=1048576, currentLeaderEpoch=Optional[4]),

> api-result-bi-heatmap-save-12=(offset=0, logStartOffset=0, maxBytes=1048576,

> currentLeaderEpoch=Optional[4]), api-result-bi-heatmap-task-2=(offset=2,

> logStartOffset=0, maxBytes=1048576, currentLeaderEpoch=Optional[4]),

> api-result-bi-flow-39=(offset=1883206, logStartOffset=0, maxBytes=1048576,

> currentLeaderEpoch=Optional[4]), __consumer_offsets-47=(offset=349437,

> logStartOffset=0, maxBytes=1048576, currentLeaderEpoch=Optional[4]),

> api-result-bi-heatmap-track-6=(offset=1039889, logStartOffset=0,

> maxBytes=1048576, currentLeaderEpoch=Optional[4]),

> api-result-bi-heatmap-task-17=(offset=0, logStartOffset=0, maxBytes=1048576,

> currentLeaderEpoch=Optional[4]), __consumer_offsets-2=(offset=0,

> logStartOffset=0, maxBytes=1048576, currentLeaderEpoch=Optional[4]),

> api-result-bi-heatmap-aggs-19=(offset=1255056, logStartOffset=0,

> maxBytes=1048576, currentLeaderEpoch=Optional[4])},

> isolationLevel=READ_UNCOMMITTED, toForget=, metadata=(sessionId=1578860481,

> epoch=INITIAL)) (kafka.server.ReplicaFetcherThread)

> java.io.IOException: Connection to 2 was disconnected before the response was read

> at org.apache.kafka.clients.NetworkClientUtils.sendAndReceive(NetworkClientUtils.java:97)

> at kafka.server.ReplicaFetcherBlockingSend.sendRequest(ReplicaFetcherBlockingSend.scala:97)

> at kafka.server.ReplicaFetcherThread.fetchFromLeader(ReplicaFetcherThread.scala:190)

> at kafka.server.AbstractFetcherThread.kafka$server$AbstractFetcherThread$$processFetchRequest(AbstractFetcherThread.scala:241)

> at kafka.server.AbstractFetcherThread$$anonfun$maybeFetch$1.apply(AbstractFetcherThread.scala:130)

> at kafka.server.AbstractFetcherThread$$anonfun$maybeFetch$1.apply(AbstractFetcherThread.scala:129)

> at scala.Option.foreach(Option.scala:257)

> at kafka.server.AbstractFetcherThread.maybeFetch(AbstractFetcherThread.scala:129)

> at kafka.server.AbstractFetcherThread.doWork(AbstractFetcherThread.scala:111)

> at kafka.utils.ShutdownableThread.run(ShutdownableThread.scala:82)

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Created] (KAFKA-12465) Decide whether inconsistent cluster id error are fatal

dengziming created KAFKA-12465:

--

Summary: Decide whether inconsistent cluster id error are fatal
Key: KAFKA-12465
URL: https://issues.apache.org/jira/browse/KAFKA-12465
Project: Kafka
Issue Type: Sub-task
Reporter: dengziming

Currently, we just log an error when an inconsistent cluster ID is detected. We should define a window during startup in which these errors are fatal; after that window, we no longer treat them as fatal.
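One way to picture the proposed startup window is the minimal sketch below. It assumes the simplest possible reading, a fixed-length window measured from process start; `ClusterIdMismatchPolicy` and its parameters are hypothetical names for illustration and are not part of Kafka. How the window length should actually be chosen is exactly the open question this ticket tracks.

```java
import java.time.Duration;

/** Hypothetical helper, not Kafka code: decides whether a cluster ID mismatch is fatal. */
final class ClusterIdMismatchPolicy {
    private final long startupNanos;      // monotonic timestamp taken at startup
    private final long fatalWindowNanos;  // assumed fixed window length

    ClusterIdMismatchPolicy(long startupNanos, Duration fatalWindow) {
        this.startupNanos = startupNanos;
        this.fatalWindowNanos = fatalWindow.toNanos();
    }

    /** True while a mismatch should be treated as fatal; afterwards it is only logged. */
    boolean isFatal(long nowNanos) {
        return nowNanos - startupNanos < fatalWindowNanos;
    }

    public static void main(String[] args) {
        ClusterIdMismatchPolicy policy =
            new ClusterIdMismatchPolicy(System.nanoTime(), Duration.ofSeconds(30));
        // Right after startup the mismatch falls inside the window.
        System.out.println("fatal now? " + policy.isFatal(System.nanoTime()));
    }
}
```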

[GitHub] [kafka] dengziming commented on a change in pull request #10289: KAFKA-12440: ClusterId validation for Vote, BeginQuorum, EndQuorum and FetchSnapshot

dengziming commented on a change in pull request #10289:

URL: https://github.com/apache/kafka/pull/10289#discussion_r594127628

##

File path: raft/src/main/java/org/apache/kafka/raft/KafkaRaftClient.java

##

@@ -939,12 +951,27 @@ private FetchResponseData buildEmptyFetchResponse(

);

}

-private boolean hasValidClusterId(FetchRequestData request) {

+private boolean hasValidClusterId(ApiMessage request) {

Review comment:

It's a bit difficult to figure out how to add the window, since we cannot

simply rely on a fixed configuration. I have filed a ticket to track this problem:

https://issues.apache.org/jira/browse/KAFKA-12465.

[jira] [Updated] (KAFKA-12465) Decide whether inconsistent cluster id error are fatal

[ https://issues.apache.org/jira/browse/KAFKA-12465?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

dengziming updated KAFKA-12465:

---

Description:

Currently, we just log an error when an inconsistent cluster ID is detected. We should define a window during startup in which these errors are fatal; after that window, we no longer treat them as fatal. See https://github.com/apache/kafka/pull/10289#discussion_r592853088

(was: Currently, we just log an error when an inconsistent cluster ID is detected. We should define a window during startup in which these errors are fatal; after that window, we no longer treat them as fatal.)

> Decide whether inconsistent cluster id error are fatal
> --
>
> Key: KAFKA-12465
> URL: https://issues.apache.org/jira/browse/KAFKA-12465
> Project: Kafka
> Issue Type: Sub-task
> Reporter: dengziming
> Priority: Major
>
> Currently, we just log an error when an inconsistent cluster ID is detected. We
> should define a window during startup in which these errors are fatal; after that
> window, we no longer treat them as fatal. See
> https://github.com/apache/kafka/pull/10289#discussion_r592853088

[GitHub] [kafka] dajac commented on a change in pull request #10304: KAFKA-12454:Add ERROR logging on kafka-log-dirs when given brokerIds do not exist in current kafka cluster

dajac commented on a change in pull request #10304:

URL: https://github.com/apache/kafka/pull/10304#discussion_r594184162

##

File path: core/src/main/scala/kafka/admin/LogDirsCommand.scala

##

@@ -39,19 +39,31 @@ object LogDirsCommand {

def describe(args: Array[String], out: PrintStream): Unit = {

val opts = new LogDirsCommandOptions(args)

val adminClient = createAdminClient(opts)

-val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(!_.isEmpty)

-val brokerList = Option(opts.options.valueOf(opts.brokerListOpt))

match {

-case Some(brokerListStr) =>

brokerListStr.split(',').filter(!_.isEmpty).map(_.toInt)

-case None =>

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toArray

-}

+val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(_.nonEmpty)

+var nonExistBrokers: Set[Int] = Set.empty

+try {

+val clusterBrokers: Set[Int] =

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toSet

Review comment:

nit: We can drop the explicit `Set[Int]` type annotation.

##

File path: core/src/main/scala/kafka/admin/LogDirsCommand.scala

##

@@ -39,19 +39,31 @@ object LogDirsCommand {

def describe(args: Array[String], out: PrintStream): Unit = {

val opts = new LogDirsCommandOptions(args)

val adminClient = createAdminClient(opts)

-val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(!_.isEmpty)

-val brokerList = Option(opts.options.valueOf(opts.brokerListOpt))

match {

-case Some(brokerListStr) =>

brokerListStr.split(',').filter(!_.isEmpty).map(_.toInt)

-case None =>

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toArray

-}

+val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(_.nonEmpty)

+var nonExistBrokers: Set[Int] = Set.empty

+try {

+val clusterBrokers: Set[Int] =

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toSet

+val brokerList = Option(opts.options.valueOf(opts.brokerListOpt))

match {

+case Some(brokerListStr) =>

+val inputBrokers: Set[Int] =

brokerListStr.split(',').filter(_.nonEmpty).map(_.toInt).toSet

Review comment:

ditto.

##

File path: core/src/main/scala/kafka/admin/LogDirsCommand.scala

##

@@ -39,19 +39,31 @@ object LogDirsCommand {

def describe(args: Array[String], out: PrintStream): Unit = {

val opts = new LogDirsCommandOptions(args)

val adminClient = createAdminClient(opts)

-val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(!_.isEmpty)

-val brokerList = Option(opts.options.valueOf(opts.brokerListOpt))

match {

-case Some(brokerListStr) =>

brokerListStr.split(',').filter(!_.isEmpty).map(_.toInt)

-case None =>

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toArray

-}

+val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(_.nonEmpty)

+var nonExistBrokers: Set[Int] = Set.empty

+try {

+val clusterBrokers: Set[Int] =

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toSet

+val brokerList = Option(opts.options.valueOf(opts.brokerListOpt))

match {

+case Some(brokerListStr) =>

+val inputBrokers: Set[Int] =

brokerListStr.split(',').filter(_.nonEmpty).map(_.toInt).toSet

+nonExistBrokers = inputBrokers.diff(clusterBrokers)

+inputBrokers

+case None => clusterBrokers

+}

Review comment:

nit: We usually avoid mutable variables unless they are really

necessary. In this case, I would rather return the `nonExistingBrokers` when

the argument is processed. Something like this:

```
val (existingBrokers, nonExistingBrokers) =
  Option(opts.options.valueOf(opts.brokerListOpt)) match {
    case Some(brokerListStr) =>
      val inputBrokers: Set[Int] =
        brokerListStr.split(',').filter(_.nonEmpty).map(_.toInt).toSet
      (inputBrokers, inputBrokers.diff(clusterBrokers))
    case None => (clusterBrokers, Set.empty[Int])
  }
```
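For readers more at home in Java, the same idea (derive the non-existing brokers as a value instead of mutating a variable) might look roughly like the sketch below. It is only an illustration: `BrokerListSketch` and its method names are hypothetical, the cluster broker set is hard-coded rather than fetched from an admin client, and since Java has no tuple destructuring the two sets are computed by separate pure functions.

```java
import java.util.Arrays;
import java.util.Set;
import java.util.TreeSet;
import java.util.stream.Collectors;

/** Hypothetical illustration, not the LogDirsCommand implementation. */
final class BrokerListSketch {
    /** Parses a comma-separated list such as "1,2,,5", skipping empty entries. */
    static Set<Integer> parseBrokerList(String brokerListStr) {
        return Arrays.stream(brokerListStr.split(","))
                .filter(s -> !s.isEmpty())
                .map(Integer::parseInt)
                .collect(Collectors.toCollection(TreeSet::new));
    }

    /** Returns the requested broker ids that are absent from the cluster. */
    static Set<Integer> nonExisting(Set<Integer> requested, Set<Integer> clusterBrokers) {
        Set<Integer> missing = new TreeSet<>(requested);
        missing.removeAll(clusterBrokers);
        return missing;
    }

    public static void main(String[] args) {
        // Assumed cluster membership, for the example only.
        Set<Integer> cluster = new TreeSet<>(Arrays.asList(0, 1, 2));
        Set<Integer> requested = parseBrokerList("1,2,,5");
        // prints: requested=[1, 2, 5] missing=[5]
        System.out.println("requested=" + requested + " missing=" + nonExisting(requested, cluster));
    }
}
```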

##

File path: core/src/main/scala/kafka/admin/LogDirsCommand.scala

##

@@ -39,19 +39,31 @@ object LogDirsCommand {

def describe(args: Array[String], out: PrintStream): Unit = {

val opts = new LogDirsCommandOptions(args)

val adminClient = createAdminClient(opts)

-val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(!_.isEmpty)

-val brokerList = Option(opts.options.valueOf(opts.brokerListOpt))

match {

-case Some(brokerListStr) =>

brokerListStr.split(',').filter(!_.isEmpty).map(_.toInt)

-case None =>

adminClient.descr

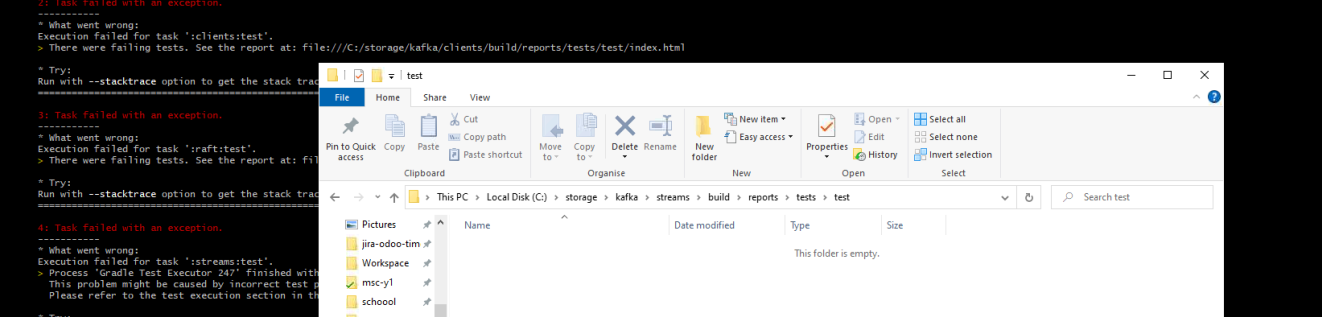

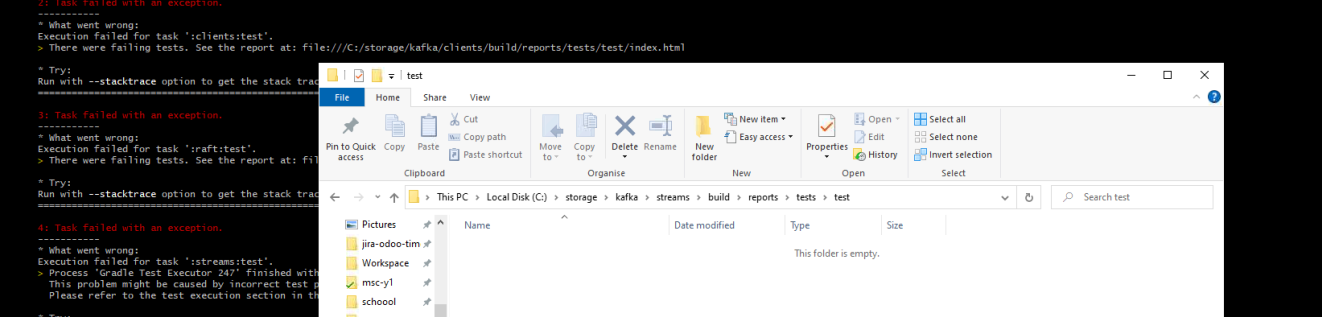

[GitHub] [kafka] ikdekker edited a comment on pull request #10313: KAFKA-12456: Log "Listeners are not identical across brokers" message at WARN/INFO instead of ERROR

ikdekker edited a comment on pull request #10313:

URL: https://github.com/apache/kafka/pull/10313#issuecomment-798355603

Hello Kafka Committers,

this contribution is part of a university course. We would appreciate any kind of feedback. This initial PR is purposefully a very simple one for us to get familiar with the process of PRs in this repo.

We attempted running tests (by executing `./gradlew tests`), but reports were not generated. The test output did indicate a report was generated into the builds directory. Is this a known issue, or expected behaviour? We followed the Readme as stated in the contributing page (https://github.com/apache/kafka/blob/trunk/README.md).

On the [PR guideline page](https://cwiki.apache.org/confluence/display/KAFKA/Contributing+Code+Changes), it says we should update the status of https://issues.apache.org/jira/browse/KAFKA-12456 to submit a patch. It seems we do not have the rights to do this, is this right?

Thanks for your time!

[GitHub] [kafka] mimaison merged pull request #10308: MINOR: Update year in NOTICE

mimaison merged pull request #10308: URL: https://github.com/apache/kafka/pull/10308

[GitHub] [kafka] cadonna opened a new pull request #10317: KAFKA-10357: Add setup method to internal topics

cadonna opened a new pull request #10317:

URL: https://github.com/apache/kafka/pull/10317

For KIP-698, we need a way to set up internal topics without validating them. This PR adds a setup method to the InternalTopicManager for that purpose.

### Committer Checklist (excluded from commit message)

- [ ] Verify design and implementation
- [ ] Verify test coverage and CI build status
- [ ] Verify documentation (including upgrade notes)

[GitHub] [kafka] cadonna commented on a change in pull request #10317: KAFKA-10357: Add setup method to internal topics

cadonna commented on a change in pull request #10317:

URL: https://github.com/apache/kafka/pull/10317#discussion_r594287719

##

File path:

streams/src/test/java/org/apache/kafka/streams/processor/internals/InternalTopicManagerTest.java

##

@@ -653,56 +798,22 @@ public void

shouldReportMisconfigurationsOfCleanupPolicyForWindowedChangelogTopi

@Test

public void

shouldReportMisconfigurationsOfCleanupPolicyForRepartitionTopics() {

final long retentionMs = 1000;

-mockAdminClient.addTopic(

Review comment:

Just refactorings from here to the end.

[GitHub] [kafka] cadonna commented on pull request #10317: KAFKA-10357: Add setup method to internal topics

cadonna commented on pull request #10317: URL: https://github.com/apache/kafka/pull/10317#issuecomment-799376130 Call for review: @rodesai

[jira] [Updated] (KAFKA-12464) Enhance constrained sticky Assign algorithm

[

https://issues.apache.org/jira/browse/KAFKA-12464?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Luke Chen updated KAFKA-12464:

--

Description:

In KAFKA-9987, we made a significant improvement for the case where all consumers are

subscribed to the same set of topics. The algorithm contains 4 phases:

# Reassign as many previously owned partitions as possible, up to the maxQuota

# Fill remaining members up to minQuota

# If we ran out of unassigned partitions before filling all consumers, we need

to start stealing partitions from the over-full consumers at max capacity

# Otherwise we may have run out of unfilled consumers before assigning all

partitions, in which case we should just distribute one partition each to all

consumers at min capacity

Take an example for better understanding:

*example:*

Current status: 2 consumers (C0, C1), and 10 topic partitions: t1p0, t1p1, ...

t1p9

Suppose, current assignment is:

_C0: t1p0, t1p1, t1p2, t1p3, t1p4_

_C1: t1p5, t1p6, t1p7, t1p8, t1p9_

Now, new consumer added: C2, so we'll do:

# Reassign as many previously owned partitions as possible, up to the maxQuota

After this phase, the assignment will be: (maxQuota will be 4)

_C0: t1p0, t1p1, t1p2, t1p3_

_C1: t1p5, t1p6, t1p7, t1p8_

# Fill remaining members up to minQuota

After this phase, the assignment will be:

_C0: t1p0, t1p1, t1p2, t1p3_

_C1: t1p5, t1p6, t1p7, t1p8_

_C2: t1p4, t1p9_

# If we ran out of unassigned partitions before filling all consumers, we need

to start stealing partitions from the over-full consumers at max capacity

After this phase, the assignment will be:

_C0: t1p0, t1p1, t1p2_

_C1: t1p5, t1p6, t1p7, t1p8_

_C2: t1p4, t1p9,_ _t1p3_

# Otherwise we may have run out of unfilled consumers before assigning all

partitions, in which case we should just distribute one partition each to all

consumers at min capacity

As we can see, we need 3 phases to complete the assignment. But we can actually

complete it in 2 phases. Here's the updated algorithm:

# Reassign as many previously owned partitions as possible, up to the

maxQuota, and also considering the numMaxQuota by the remainder of (Partitions

/ Consumers)

# Fill remaining members up to maxQuota if current maxQuotaMember <

numMaxQuota, otherwise, to minQuota

By considering the numMaxQuota, the original step 1 won't be so aggressive that it

assigns too many partitions to consumers, and step 2 won't be so

conservative that it assigns too few partitions to consumers, so we don't

need step 3 and step 4 to balance them.
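To make the quotas concrete, here is a minimal sketch that computes minQuota, maxQuota and numMaxQuota and applies the updated first step to the example above (10 partitions, with C0 and C1 each owning 5 and the new C2 owning none). `StickyQuotaSketch` and `keptCounts` are hypothetical names for illustration; the sketch only tracks how many owned partitions each member keeps, not which ones, and assumes at least one consumer.

```java
import java.util.Arrays;

/** Hypothetical illustration of the quota arithmetic, not the assignor implementation. */
final class StickyQuotaSketch {
    /** minQuota: floor(P / N). */
    static int minQuota(int partitions, int consumers) {
        return partitions / consumers;
    }

    /** maxQuota: ceil(P / N). */
    static int maxQuota(int partitions, int consumers) {
        return (partitions + consumers - 1) / consumers;
    }

    /** numMaxQuota: P % N, the number of members allowed to hold maxQuota partitions. */
    static int numMaxQuota(int partitions, int consumers) {
        return partitions % consumers;
    }

    /** Updated step 1: how many previously owned partitions each member keeps. */
    static int[] keptCounts(int[] ownedSizes, int partitions) {
        int n = ownedSizes.length;
        int floor = minQuota(partitions, n);
        int ceil = maxQuota(partitions, n);
        int allowedAtMax = numMaxQuota(partitions, n);
        int membersAtMax = 0;
        int[] kept = new int[n];
        for (int i = 0; i < n; i++) {
            if (ownedSizes[i] < floor) {
                kept[i] = ownedSizes[i];      // keeps everything, still unfilled
            } else if (ownedSizes[i] >= ceil && membersAtMax < allowedAtMax) {
                kept[i] = ceil;               // one of the few members allowed maxQuota
                membersAtMax++;
            } else {
                kept[i] = floor;              // capped at minQuota
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // prints: [4, 3, 0]
        System.out.println(Arrays.toString(keptCounts(new int[] {5, 5, 0}, 10)));
    }
}
```

With these counts, 3 partitions remain unassigned after step 1, which is exactly what C2 needs to reach minQuota in step 2, so no stealing phase is required.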

{{So, the updated Pseudo-code sketch of the algorithm:}}

C_f := (P/N)_floor, the floor capacity

C_c := (P/N)_ceil, the ceiling capacity

*C_r := (P%N) the allowed number of members with C_c partitions assigned*

*num_max_capacity_members := current number of members with C_c partitions

assigned (default to 0)*

members := the sorted set of all consumers

partitions := the set of all partitions

unassigned_partitions := the set of partitions not yet assigned, initialized

to be all partitions

unfilled_members := the set of consumers not yet at capacity, initialized to

empty

-max_capacity_members := the set of members with exactly C_c partitions

assigned, initialized to empty-

member.owned_partitions := the set of previously owned partitions encoded in

the Subscription

// Reassign as many previously owned partitions as possible, *by considering

the num_max_capacity_members*

for member : members

remove any partitions that are no longer in the subscription from its

owned partitions

remove all owned_partitions if the generation is old

if member.owned_partitions.size < C_f

assign all owned partitions to member and remove from

unassigned_partitions

add member to unfilled_members

-else if member.owned_partitions.size == C_f-

-assign first C_f owned_partitions to member and remove from

unassigned_partitions-

else if member.owned_partitions.size >= C_c *&& num_max_capacity_members

< C_r*

*assign first C_c owned_partitions to member and remove from

unassigned_partitions*

*num_max_capacity_members++*

-add member to max_capacity_members-

*else*

*assign first C_f owned_partitions to member and remove from

unassigned_partitions*

sort unassigned_partitions in partition order, i.e. t0_p0, t1_p0, t2_p0, t0_p1,

t1_p1 (for data parallelism)

sort unfilled_members by memberId (for determinism)

// Fill remaining members *up to the C_r numbers of C_c, otherwise, to C_f*

for member : unfilled_members

compute the remaining capacity as -C = C_f - num_assigned_partitions-

if num_max_capacity_members < C_r:

C = C_c - num_assigned_partitions

num_max_capacity_members++

else

C = C_f - num_assigned_partitions

pop the first C partitions from unassig

[jira] [Commented] (KAFKA-10582) Mirror Maker 2 not replicating new topics until restart

[ https://issues.apache.org/jira/browse/KAFKA-10582?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17301648#comment-17301648 ]

Ravishankar S R commented on KAFKA-10582:

-

Hi,

I'm facing the same problem. I'm using Kafka 2.6.1. In my case, restarting one instance works if the restart is done within a few minutes of creating the new topic. However, if the restart happens later, restarting one instance doesn't help and both instances need to be restarted together (stop both instances, then start both instances).

Please let me know if there is any workaround for this problem.

Best Regards,
Ravi

> Mirror Maker 2 not replicating new topics until restart
> ---
>
> Key: KAFKA-10582
> URL: https://issues.apache.org/jira/browse/KAFKA-10582
> Project: Kafka
> Issue Type: Bug
> Components: mirrormaker
> Affects Versions: 2.5.1
> Environment: RHEL 7 Linux.
> Reporter: Robert Martin
> Priority: Minor
>
> We are using Mirror Maker 2 from the 2.5.1 release for replication on some
> clusters. Replication is working as expected for existing topics. When we
> create a new topic, however, Mirror Maker 2 creates the replicated topic as
> expected but never starts replicating it. If we restart Mirror Maker 2
> within 2-3 minutes the topic starts replicating as expected. From the
> documentation we have seen, it appears this should start replicating without
> a restart based on the settings we have.
> *Example:*
> Create topic "mytesttopic" on source cluster
> MirrorMaker 2 creates "source.mytesttopic" on target cluster with no issue
> MirrorMaker 2 does not replicate "mytesttopic" -> "source.mytesttopic"
> Restart MirrorMaker 2 and now replication works for "mytesttopic" ->
> "source.mytesttopic"
> *Example config:*
> name = source->target
> group.id = source-to-target
> clusters = source, target
> source.bootstrap.servers = sourcehosts:9092
> target.bootstrap.servers = targethosts:9092
> source->target.enabled = true
> source->target.topics = .*
> target->source = false
> target->source.topics = .*
> replication.factor=3
> checkpoints.topic.replication.factor=3
> heartbeats.topic.replication.factor=3
> offset-syncs.topic.replication.factor=3
> offset.storage.replication.factor=3
> status.storage.replication.factor=3
> config.storage.replication.factor=3
> tasks.max = 16
> refresh.topics.enabled = true
> sync.topic.configs.enabled = true
> refresh.topics.interval.seconds = 300
> refresh.groups.interval.seconds = 300
> readahead.queue.capacity = 100
> emit.checkpoints.enabled = true
> emit.checkpoints.interval.seconds = 5

[GitHub] [kafka] dajac opened a new pull request #10318: KAFKA-12330; FetchSessionCache may cause starvation for partitions when FetchResponse is full

dajac opened a new pull request #10318:

URL: https://github.com/apache/kafka/pull/10318

Incremental FetchSessionCache sessions deprioritize partitions for which a response is returned. This may happen if log metadata such as the log start offset, high watermark (hwm), etc. is returned, or if data for that partition is returned.

When a fetch response fills to maxBytes, data may not be returned for partitions even if the fetch offset is lower than the fetch upper bound. However, the fetch response will still contain updates to metadata such as hwm if that metadata has changed. This can lead to degenerate behavior where a partition's hwm or log start offset is updated, resulting in the next fetch being unnecessarily skipped for that partition. At first this appeared to be worse, as hwm updates occur frequently, but starvation should result in hwm movement becoming blocked, allowing a fetch to go through and the partition to become unstuck. However, it will still require one more fetch request than necessary to do so. Consumers may be affected more than replica fetchers; however, they often remove partitions with fetched data from the next fetch request, and this may be helping prevent starvation.

[GitHub] [kafka] dajac opened a new pull request #10319: MINOR; Various code cleanups

dajac opened a new pull request #10319: URL: https://github.com/apache/kafka/pull/10319

[GitHub] [kafka] wenbingshen commented on pull request #10304: KAFKA-12454:Add ERROR logging on kafka-log-dirs when given brokerIds do not exist in current kafka cluster

wenbingshen commented on pull request #10304:

URL: https://github.com/apache/kafka/pull/10304#issuecomment-799498049

> @wenbingshen Thanks for the updates. I have left a few more minor comments. Also, it seems that the build failed. Could you check it?

Thank you for your review and suggestions. I have submitted the latest code, and the code has been tested and compiled successfully. Please help review it again, thank you.

[jira] [Comment Edited] (KAFKA-12463) Update default consumer partition assignor for sink tasks

[

https://issues.apache.org/jira/browse/KAFKA-12463?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17301327#comment-17301327

]

Chris Egerton edited comment on KAFKA-12463 at 3/15/21, 3:26 PM:

-

Ah, thanks [~ableegoldman], I'd misread the javadocs for the cooperative sticky

assignor. Updated the description to point to 2.4 instead of 2.3.

Regarding the clarity of the upgrade section in KIP-429: I didn't see a specific section

for Connect, and both of the sections that were there ("Consumer" and

"Streams") provided a different procedure than the one I proposed here. It

seems like an implicit goal of both of them is to arrive at an end state where

all consumers only provide the cooperative assignor in their list of supported

assignors, instead of the cooperative assignor first and with the other, older

assignor behind it. I'm wondering if the lack of that goal is why this

different approach (which only requires one-step rolling as opposed to two) is

viable here but not necessarily for other applications?

was (Author: chrisegerton):

Ah, thanks [~ableegoldman], I'd misread the javadocs for the cooperative sticky

assignor. Updated the description to point to 2.4 instead of 2.3.

RE clearness on the upgrade section in KIP-429–I didn't see a specific section

for Connect, and both of the sections that were provided ("Consumer" and

"Streams") provided a different procedure than the one I proposed here. It

seems like an implicit goal of both of them is to arrive at an end state where

all consumers only provide the cooperative assignor in their list of supported

assignors, instead of the cooperative assignor first and with the other, older

assignor behind it. I'm wondering if the lack of that goal is why this

different approach (which only requires one-step rolling as opposed to two) is

viable here but not necessarily for other applications?
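As an illustration of "the cooperative assignor first and with the other, older assignor behind it", a consumer override could be built as in the sketch below. The property name and assignor class names are the ones referenced in the issue; the choice of `RangeAssignor` as the older assignor is an assumption made here because it is the consumer default, and `SinkAssignorConfigSketch` is a hypothetical name. The sketch only builds the properties and does not create a consumer.

```java
import java.util.Properties;

/** Hypothetical illustration of an ordered assignor list, not Connect's actual code. */
final class SinkAssignorConfigSketch {
    static Properties consumerOverrides() {
        Properties props = new Properties();
        // Preferred assignor first; the second entry is an assumed fallback for
        // group members that have not been upgraded yet.
        props.setProperty(
            "partition.assignment.strategy",
            "org.apache.kafka.clients.consumer.CooperativeStickyAssignor,"
                + "org.apache.kafka.clients.consumer.RangeAssignor");
        return props;
    }

    public static void main(String[] args) {
        System.out.println(consumerOverrides().getProperty("partition.assignment.strategy"));
    }
}
```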

> Update default consumer partition assignor for sink tasks

> -

>

> Key: KAFKA-12463

> URL: https://issues.apache.org/jira/browse/KAFKA-12463

> Project: Kafka

> Issue Type: Improvement

> Components: KafkaConnect

>Reporter: Chris Egerton

>Assignee: Chris Egerton

>Priority: Major

>

> Kafka consumers have a pluggable [partition assignment

> interface|https://kafka.apache.org/27/javadoc/org/apache/kafka/clients/consumer/ConsumerPartitionAssignor.html]

> that comes with several out-of-the-box implementations including the

> [RangeAssignor|https://kafka.apache.org/27/javadoc/org/apache/kafka/clients/consumer/RangeAssignor.html],

>

> [RoundRobinAssignor|https://kafka.apache.org/27/javadoc/org/apache/kafka/clients/consumer/RoundRobinAssignor.html],

>

> [StickyAssignor|https://kafka.apache.org/27/javadoc/org/apache/kafka/clients/consumer/StickyAssignor.html],

> and

> [CooperativeStickyAssignor|https://kafka.apache.org/27/javadoc/org/apache/kafka/clients/consumer/CooperativeStickyAssignor.html].

> If no partition assignor is configured with a consumer, the {{RangeAssignor}}

> is used by default. Although there are some benefits to this assignor

> including stability of assignment across generations and simplicity of

> design, it comes with a major drawback: the number of active consumers in a

> group is limited to the number of partitions in the topic(s) with the most

> partitions. For an example of the worst case, in a consumer group where every

> member is subscribed to ten topics that each have one partition, only one

> member of that group will be assigned any topic partitions.

> This can end up producing counterintuitive and even frustrating behavior when

> a sink connector is brought up with N tasks to read from some collection of

> topics with a total of N topic partitions, but some tasks end up idling and

> not processing any data.

>

> [KIP-429|https://cwiki.apache.org/confluence/display/KAFKA/KIP-429%3A+Kafka+Consumer+Incremental+Rebalance+Protocol]

> introduced the {{CooperativeStickyAssignor}}, which seeks to provide a

> stable assignment across generations wherever possible, provide the most even

> assignment possible (taking into account possible differences in

> subscriptions across consumers in the group), and allow consumers to continue

> processing data during rebalance. The documentation for the assignor states

> that "Users should prefer this assignor for newer clusters."

> We should alter the default consumer configuration for sink tasks to use the

> new {{CooperativeStickyAssignor}}. In order to do this in a

> backwards-compatible fashion that also enables rolling upgrades, this should

> be implemented by setting the {{partition.assignment.strategy}} property of

> sink task consumers to the list

> {{org.apache.kafka.clients.consumer.CooperativeStickyAssigno

[jira] [Commented] (KAFKA-12463) Update default consumer partition assignor for sink tasks

[

https://issues.apache.org/jira/browse/KAFKA-12463?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17301706#comment-17301706

]

Chris Egerton commented on KAFKA-12463:

---

cc [~rhauch] what do you think about this?

> Update default consumer partition assignor for sink tasks

> -

>

> Key: KAFKA-12463

> URL: https://issues.apache.org/jira/browse/KAFKA-12463

> Project: Kafka

> Issue Type: Improvement

> Components: KafkaConnect

>Reporter: Chris Egerton

>Assignee: Chris Egerton

>Priority: Major

>

> Kafka consumers have a pluggable [partition assignment

> interface|https://kafka.apache.org/27/javadoc/org/apache/kafka/clients/consumer/ConsumerPartitionAssignor.html]

> that comes with several out-of-the-box implementations including the

> [RangeAssignor|https://kafka.apache.org/27/javadoc/org/apache/kafka/clients/consumer/RangeAssignor.html],

>

> [RoundRobinAssignor|https://kafka.apache.org/27/javadoc/org/apache/kafka/clients/consumer/RoundRobinAssignor.html],

>

> [StickyAssignor|https://kafka.apache.org/27/javadoc/org/apache/kafka/clients/consumer/StickyAssignor.html],

> and

> [CooperativeStickyAssignor|https://kafka.apache.org/27/javadoc/org/apache/kafka/clients/consumer/CooperativeStickyAssignor.html].

> If no partition assignor is configured with a consumer, the {{RangeAssignor}}

> is used by default. Although there are some benefits to this assignor

> including stability of assignment across generations and simplicity of

> design, it comes with a major drawback: the number of active consumers in a

> group is limited to the number of partitions in the topic(s) with the most

> partitions. For an example of the worst case, in a consumer group where every

> member is subscribed to ten topics that each have one partition, only one

> member of that group will be assigned any topic partitions.

> This can end up producing counterintuitive and even frustrating behavior when

> a sink connector is brought up with N tasks to read from some collection of

> topics with a total of N topic partitions, but some tasks end up idling and

> not processing any data.

>

> [KIP-429|https://cwiki.apache.org/confluence/display/KAFKA/KIP-429%3A+Kafka+Consumer+Incremental+Rebalance+Protocol]

> introduced the {{CooperativeStickyAssignor}}, which seeks to provide a

> stable assignment across generations wherever possible, provide the most even

> assignment possible (taking into account possible differences in

> subscriptions across consumers in the group), and allow consumers to continue

> processing data during rebalance. The documentation for the assignor states

> that "Users should prefer this assignor for newer clusters."

> We should alter the default consumer configuration for sink tasks to use the

> new {{CooperativeStickyAssignor}}. In order to do this in a

> backwards-compatible fashion that also enables rolling upgrades, this should

> be implemented by setting the {{partition.assignment.strategy}} property of

> sink task consumers to the list

> {{org.apache.kafka.clients.consumer.CooperativeStickyAssignor,

> org.apache.kafka.clients.consumer.RangeAssignor}} when no worker-level or

> connector-level override is present.

> This way, consumer groups for sink connectors on Connect clusters in the

> process of being upgraded will continue to use the {{RangeAssignor}} until

> all workers in the cluster have been upgraded, and then will switch over to

> the new {{CooperativeStickyAssignor}} automatically.

>

> This improvement is viable as far back as 2.4, when the

> {{CooperativeStickyAssignor}} was introduced, but given that it is not a bug

> fix, should only be applied to the Connect framework in an upcoming minor

> release. This does not preclude users from following the steps outlined here

> to improve sink connector behavior on existing clusters by modifying their

> worker configs to use {{consumer.partition.assignment.strategy =}}

> {{org.apache.kafka.clients.consumer.CooperativeStickyAssignor,

> org.apache.kafka.clients.consumer.RangeAssignor}}, or doing the same on a

> per-connector basis using the

> {{consumer.override.partition.assignment.strategy}} property.
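To make the worst case described in the ticket concrete, here is a minimal Java sketch. It is a simplified model of per-topic range assignment, not Kafka's `RangeAssignor` class itself; the class name `RangeAssignmentSketch` and the task and topic names are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified model of range assignment (assumption: every member subscribes to every topic).
class RangeAssignmentSketch {

    // Splits each topic's partitions across the members independently, topic by topic.
    static Map<String, List<String>> assign(List<String> members, Map<String, Integer> partitionsPerTopic) {
        Map<String, List<String>> assignment = new LinkedHashMap<>();
        for (String member : members) {
            assignment.put(member, new ArrayList<>());
        }
        for (Map.Entry<String, Integer> topic : partitionsPerTopic.entrySet()) {
            int numPartitions = topic.getValue();
            int perMember = numPartitions / members.size();
            int extra = numPartitions % members.size();
            for (int i = 0; i < members.size(); i++) {
                // The first `extra` members get one additional partition of this topic.
                int start = perMember * i + Math.min(i, extra);
                int length = perMember + (i < extra ? 1 : 0);
                for (int p = start; p < start + length; p++) {
                    assignment.get(members.get(i)).add(topic.getKey() + "-" + p);
                }
            }
        }
        return assignment;
    }

    // Counts the members that received at least one partition.
    static long activeMembers(Map<String, List<String>> assignment) {
        return assignment.values().stream().filter(partitions -> !partitions.isEmpty()).count();
    }

    public static void main(String[] args) {
        Map<String, Integer> topics = new LinkedHashMap<>();
        for (int t = 0; t < 10; t++) {
            topics.put("topic" + t, 1); // ten topics, one partition each
        }
        Map<String, List<String>> assignment = assign(Arrays.asList("task-0", "task-1", "task-2"), topics);
        System.out.println(assignment);
        System.out.println("active members: " + activeMembers(assignment));
    }
}
```

In this model, three tasks reading ten single-partition topics leave `task-1` and `task-2` idle, because each topic is split on its own and its single partition always goes to the first member.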

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [kafka] abbccdda merged pull request #10135: KAFKA-10348: Share client channel between forwarding and auto creation manager

abbccdda merged pull request #10135: URL: https://github.com/apache/kafka/pull/10135

[GitHub] [kafka] abbccdda opened a new pull request #10320: MINOR: revert stream logging level back to ERROR

abbccdda opened a new pull request #10320:

URL: https://github.com/apache/kafka/pull/10320

This corrects an accidental change to the streams logging level made in https://github.com/apache/kafka/pull/9579.

### Committer Checklist (excluded from commit message)

- [ ] Verify design and implementation

- [ ] Verify test coverage and CI build status

- [ ] Verify documentation (including upgrade notes)

[GitHub] [kafka] dajac commented on a change in pull request #10304: KAFKA-12454:Add ERROR logging on kafka-log-dirs when given brokerIds do not exist in current kafka cluster

dajac commented on a change in pull request #10304:

URL: https://github.com/apache/kafka/pull/10304#discussion_r594476389

##

File path: core/src/test/scala/unit/kafka/admin/LogDirsCommandTest.scala

##

@@ -0,0 +1,52 @@

+package unit.kafka.admin

Review comment:

We must add the licence header here. You can copy it from another file.

##

File path: core/src/main/scala/kafka/admin/LogDirsCommand.scala

##

@@ -39,19 +39,29 @@ object LogDirsCommand {

def describe(args: Array[String], out: PrintStream): Unit = {

val opts = new LogDirsCommandOptions(args)

val adminClient = createAdminClient(opts)

-val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(!_.isEmpty)

-val brokerList = Option(opts.options.valueOf(opts.brokerListOpt))

match {

-case Some(brokerListStr) =>

brokerListStr.split(',').filter(!_.isEmpty).map(_.toInt)

-case None =>

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toArray

-}

+val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(_.nonEmpty)

+try {

+val clusterBrokers =

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toSet

+val (existingBrokers, nonExistingBrokers) =

Option(opts.options.valueOf(opts.brokerListOpt)) match {

+case Some(brokerListStr) =>

+val inputBrokers =

brokerListStr.split(',').filter(_.nonEmpty).map(_.toInt).toSet

+(inputBrokers, inputBrokers.diff(clusterBrokers))

+case None => (clusterBrokers, Set.empty)

+}

-out.println("Querying brokers for log directories information")

-val describeLogDirsResult: DescribeLogDirsResult =

adminClient.describeLogDirs(brokerList.map(Integer.valueOf).toSeq.asJava)

-val logDirInfosByBroker =

describeLogDirsResult.allDescriptions.get().asScala.map { case (k, v) => k ->

v.asScala }

+if (nonExistingBrokers.nonEmpty) {

+out.println(s"ERROR: The given node(s) does not exist from

broker-list: ${nonExistingBrokers.mkString(",")}. Current cluster exist

node(s): ${clusterBrokers.mkString(",")}")

Review comment:

nit: Should we say `--broker-list` instead of `broker-list`? Also,

should we say `broker(s)` instead of `node(s)` to be consistent with the

message below?
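The check under review splits the requested broker ids into those the cluster knows and those it does not. A minimal standalone Java sketch of that idea follows; the class `BrokerListCheckSketch`, its method names, and the exact message wording are hypothetical and only illustrate the reviewer's suggestion to say `--broker-list` and `broker(s)`.

```java
import java.util.Arrays;
import java.util.Set;
import java.util.TreeSet;
import java.util.stream.Collectors;

// Hypothetical helper, not part of LogDirsCommand.
class BrokerListCheckSketch {

    // Parses a comma-separated list of broker ids, skipping empty entries.
    static Set<Integer> parseBrokerList(String brokerList) {
        Set<Integer> ids = new TreeSet<>();
        for (String token : brokerList.split(",")) {
            if (!token.isEmpty()) {
                ids.add(Integer.parseInt(token));
            }
        }
        return ids;
    }

    // Returns the requested ids that are not present in the cluster (set difference).
    static Set<Integer> missingBrokers(Set<Integer> requested, Set<Integer> clusterBrokers) {
        Set<Integer> missing = new TreeSet<>(requested);
        missing.removeAll(clusterBrokers);
        return missing;
    }

    // Illustrative wording only; the final message text is up to the PR author.
    static String errorMessage(Set<Integer> missing, Set<Integer> clusterBrokers) {
        return "ERROR: The given broker(s) do not exist from --broker-list: " + join(missing)
            + ". Current existent broker(s): " + join(clusterBrokers);
    }

    private static String join(Set<Integer> ids) {
        return ids.stream().map(String::valueOf).collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        Set<Integer> cluster = new TreeSet<>(Arrays.asList(0, 1, 2));
        Set<Integer> missing = missingBrokers(parseBrokerList("0,1,,5"), cluster);
        if (!missing.isEmpty()) {
            System.out.println(errorMessage(missing, cluster));
        }
    }
}
```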

##

File path: core/src/main/scala/kafka/admin/LogDirsCommand.scala

##

@@ -39,19 +39,29 @@ object LogDirsCommand {

def describe(args: Array[String], out: PrintStream): Unit = {

val opts = new LogDirsCommandOptions(args)

val adminClient = createAdminClient(opts)

-val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(!_.isEmpty)

-val brokerList = Option(opts.options.valueOf(opts.brokerListOpt))

match {

-case Some(brokerListStr) =>

brokerListStr.split(',').filter(!_.isEmpty).map(_.toInt)

-case None =>

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toArray

-}

+val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(_.nonEmpty)

+try {

+val clusterBrokers =

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toSet

+val (existingBrokers, nonExistingBrokers) =

Option(opts.options.valueOf(opts.brokerListOpt)) match {

+case Some(brokerListStr) =>

+val inputBrokers =

brokerListStr.split(',').filter(_.nonEmpty).map(_.toInt).toSet

+(inputBrokers, inputBrokers.diff(clusterBrokers))

+case None => (clusterBrokers, Set.empty)

+}

-out.println("Querying brokers for log directories information")

-val describeLogDirsResult: DescribeLogDirsResult =

adminClient.describeLogDirs(brokerList.map(Integer.valueOf).toSeq.asJava)

-val logDirInfosByBroker =

describeLogDirsResult.allDescriptions.get().asScala.map { case (k, v) => k ->

v.asScala }

+if (nonExistingBrokers.nonEmpty) {

+out.println(s"ERROR: The given node(s) does not exist from

broker-list: ${nonExistingBrokers.mkString(",")}. Current cluster exist

node(s): ${clusterBrokers.mkString(",")}")

+} else {

+out.println("Querying brokers for log directories information")

+val describeLogDirsResult: DescribeLogDirsResult =

adminClient.describeLogDirs(existingBrokers.map(Integer.valueOf).toSeq.asJava)

Review comment:

nit: `DescribeLogDirsResult` can be removed.

[GitHub] [kafka] wenbingshen commented on a change in pull request #10304: KAFKA-12454:Add ERROR logging on kafka-log-dirs when given brokerIds do not exist in current kafka cluster

wenbingshen commented on a change in pull request #10304:

URL: https://github.com/apache/kafka/pull/10304#discussion_r594482210

##

File path: core/src/test/scala/unit/kafka/admin/LogDirsCommandTest.scala

##

@@ -0,0 +1,52 @@

+package unit.kafka.admin

Review comment:

Sorry, after checking the compilation report, I noticed this problem and have made the changes.

[GitHub] [kafka] wenbingshen commented on a change in pull request #10304: KAFKA-12454:Add ERROR logging on kafka-log-dirs when given brokerIds do not exist in current kafka cluster

wenbingshen commented on a change in pull request #10304:

URL: https://github.com/apache/kafka/pull/10304#discussion_r594483345

##

File path: core/src/main/scala/kafka/admin/LogDirsCommand.scala

##

@@ -39,19 +39,29 @@ object LogDirsCommand {

def describe(args: Array[String], out: PrintStream): Unit = {

val opts = new LogDirsCommandOptions(args)

val adminClient = createAdminClient(opts)

-val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(!_.isEmpty)

-val brokerList = Option(opts.options.valueOf(opts.brokerListOpt))

match {

-case Some(brokerListStr) =>

brokerListStr.split(',').filter(!_.isEmpty).map(_.toInt)

-case None =>

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toArray

-}

+val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(_.nonEmpty)

+try {

+val clusterBrokers =

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toSet

+val (existingBrokers, nonExistingBrokers) =

Option(opts.options.valueOf(opts.brokerListOpt)) match {

+case Some(brokerListStr) =>

+val inputBrokers =

brokerListStr.split(',').filter(_.nonEmpty).map(_.toInt).toSet

+(inputBrokers, inputBrokers.diff(clusterBrokers))

+case None => (clusterBrokers, Set.empty)

+}

-out.println("Querying brokers for log directories information")

-val describeLogDirsResult: DescribeLogDirsResult =

adminClient.describeLogDirs(brokerList.map(Integer.valueOf).toSeq.asJava)

-val logDirInfosByBroker =

describeLogDirsResult.allDescriptions.get().asScala.map { case (k, v) => k ->

v.asScala }

+if (nonExistingBrokers.nonEmpty) {

+out.println(s"ERROR: The given node(s) does not exist from

broker-list: ${nonExistingBrokers.mkString(",")}. Current cluster exist

node(s): ${clusterBrokers.mkString(",")}")

Review comment:

Good idea. I will act on it right away.

[GitHub] [kafka] wenbingshen commented on a change in pull request #10304: KAFKA-12454:Add ERROR logging on kafka-log-dirs when given brokerIds do not exist in current kafka cluster

wenbingshen commented on a change in pull request #10304:

URL: https://github.com/apache/kafka/pull/10304#discussion_r594484169

##

File path: core/src/main/scala/kafka/admin/LogDirsCommand.scala

##

@@ -39,19 +39,29 @@ object LogDirsCommand {

def describe(args: Array[String], out: PrintStream): Unit = {

val opts = new LogDirsCommandOptions(args)

val adminClient = createAdminClient(opts)

-val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(!_.isEmpty)

-val brokerList = Option(opts.options.valueOf(opts.brokerListOpt))

match {

-case Some(brokerListStr) =>

brokerListStr.split(',').filter(!_.isEmpty).map(_.toInt)

-case None =>

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toArray

-}

+val topicList =

opts.options.valueOf(opts.topicListOpt).split(",").filter(_.nonEmpty)

+try {

+val clusterBrokers =

adminClient.describeCluster().nodes().get().asScala.map(_.id()).toSet

+val (existingBrokers, nonExistingBrokers) =

Option(opts.options.valueOf(opts.brokerListOpt)) match {

+case Some(brokerListStr) =>

+val inputBrokers =

brokerListStr.split(',').filter(_.nonEmpty).map(_.toInt).toSet

+(inputBrokers, inputBrokers.diff(clusterBrokers))

+case None => (clusterBrokers, Set.empty)

+}

-out.println("Querying brokers for log directories information")

-val describeLogDirsResult: DescribeLogDirsResult =

adminClient.describeLogDirs(brokerList.map(Integer.valueOf).toSeq.asJava)

-val logDirInfosByBroker =

describeLogDirsResult.allDescriptions.get().asScala.map { case (k, v) => k ->

v.asScala }

+if (nonExistingBrokers.nonEmpty) {

+out.println(s"ERROR: The given node(s) does not exist from

broker-list: ${nonExistingBrokers.mkString(",")}. Current cluster exist

node(s): ${clusterBrokers.mkString(",")}")

+} else {

+out.println("Querying brokers for log directories information")

+val describeLogDirsResult: DescribeLogDirsResult =

adminClient.describeLogDirs(existingBrokers.map(Integer.valueOf).toSeq.asJava)

Review comment:

Sorry, I forgot this. I will change it right away.

[GitHub] [kafka] guozhangwang merged pull request #10232: KAFKA-12352: Make sure all rejoin group and reset state has a reason

guozhangwang merged pull request #10232: URL: https://github.com/apache/kafka/pull/10232

[GitHub] [kafka] jsancio commented on a change in pull request #10289: KAFKA-12440: ClusterId validation for Vote, BeginQuorum, EndQuorum and FetchSnapshot

jsancio commented on a change in pull request #10289:

URL: https://github.com/apache/kafka/pull/10289#discussion_r594490953

##

File path: raft/src/main/java/org/apache/kafka/raft/KafkaRaftClient.java

##

@@ -939,12 +951,27 @@ private FetchResponseData buildEmptyFetchResponse(

);

}

-private boolean hasValidClusterId(FetchRequestData request) {

+private boolean hasValidClusterId(ApiMessage request) {

Review comment:

We can implement it so that, when handling a response, an invalid cluster id

is fatal unless a previous response contained a valid cluster id.

[GitHub] [kafka] wenbingshen commented on pull request #10304: KAFKA-12454:Add ERROR logging on kafka-log-dirs when given brokerIds do not exist in current kafka cluster

wenbingshen commented on pull request #10304: URL: https://github.com/apache/kafka/pull/10304#issuecomment-799585108

> @wenbingshen Thanks for the updates. Left a few more minor comments.

Thank you for your comments. I submitted the latest code, please review it, thank you!

[GitHub] [kafka] jsancio commented on a change in pull request #10276: KAFKA-12253: Add tests that cover all of the cases for ReplicatedLog's validateOffsetAndEpoch

jsancio commented on a change in pull request #10276:

URL: https://github.com/apache/kafka/pull/10276#discussion_r594509680

##

File path: raft/src/test/java/org/apache/kafka/raft/MockLogTest.java

##

@@ -732,13 +760,17 @@ public int hashCode() {

}

private void appendAsLeader(Collection records, int epoch) {

+appendAsLeader(records, epoch, log.endOffset().offset);

+}

+

+private void appendAsLeader(Collection records, int epoch,

long initialOffset) {

log.appendAsLeader(

-MemoryRecords.withRecords(

-log.endOffset().offset,

-CompressionType.NONE,

-records.toArray(new SimpleRecord[records.size()])

-),

-epoch

+MemoryRecords.withRecords(

+initialOffset,

+CompressionType.NONE,

+records.toArray(new SimpleRecord[records.size()])

+),

+epoch

Review comment:

Indentation looks off. We indent 4 spaces.

##

File path: core/src/test/scala/kafka/raft/KafkaMetadataLogTest.scala

##

@@ -413,22 +413,53 @@ final class KafkaMetadataLogTest {

assertTrue(log.deleteBeforeSnapshot(snapshotId))

val resultOffsetAndEpoch = log.validateOffsetAndEpoch(offset, epoch)

-assertEquals(ValidOffsetAndEpoch.Type.VALID,

resultOffsetAndEpoch.getType())

+assertEquals(ValidOffsetAndEpoch.Type.VALID, resultOffsetAndEpoch.getType)

assertEquals(new OffsetAndEpoch(offset, epoch),

resultOffsetAndEpoch.offsetAndEpoch())

}

@Test

- def testValidateEpochUnknown(): Unit = {

+ def testValidateUnknownEpochLessThanLastKnownGreaterThanOldestSnapshot():

Unit = {

+val offset = 10

+val numOfRecords = 5

+

val log = buildMetadataLog(tempDir, mockTime)

+log.updateHighWatermark(new LogOffsetMetadata(offset))

+val snapshotId = new OffsetAndEpoch(offset, 1)

+TestUtils.resource(log.createSnapshot(snapshotId)) { snapshot =>

+ snapshot.freeze()

+}

+log.truncateToLatestSnapshot()

-val numberOfRecords = 1

-val epoch = 1

-append(log, numberOfRecords, epoch)

+append(log, numOfRecords, epoch = 1, initialOffset = 10)

+append(log, numOfRecords, epoch = 2, initialOffset = 15)

+append(log, numOfRecords, epoch = 4, initialOffset = 20)

-val resultOffsetAndEpoch = log.validateOffsetAndEpoch(numberOfRecords,

epoch + 10)

-assertEquals(ValidOffsetAndEpoch.Type.DIVERGING,

resultOffsetAndEpoch.getType())

-assertEquals(new OffsetAndEpoch(log.endOffset.offset, epoch),

resultOffsetAndEpoch.offsetAndEpoch())

+// offset is not equal to oldest snapshot's offset

+val resultOffsetAndEpoch = log.validateOffsetAndEpoch(100, 3)

+assertEquals(ValidOffsetAndEpoch.Type.DIVERGING,

resultOffsetAndEpoch.getType)

+assertEquals(new OffsetAndEpoch(20, 2),

resultOffsetAndEpoch.offsetAndEpoch())

+ }

+

+ @Test

+ def testValidateUnknownEpochLessThanLeaderGreaterThanOldestSnapshot(): Unit

= {

Review comment:

How about `testValidateEpochLessThanFirstEpochInLog`? If you agree,

let's change it in `MockLogTest` also.

##

File path: raft/src/main/java/org/apache/kafka/raft/ValidOffsetAndEpoch.java

##

@@ -25,7 +27,7 @@

this.offsetAndEpoch = offsetAndEpoch;

}

-public Type type() {

+public Type getType() {

Review comment:

By the way we can also just change the name of the type and field to

something like `public Kind kind()`

##

File path: raft/src/test/java/org/apache/kafka/raft/MockLogTest.java

##

@@ -750,4 +782,13 @@ private void appendBatch(int numRecords, int epoch) {

appendAsLeader(records, epoch);

}

+

+private void appendBatch(int numRecords, int epoch, long initialOffset) {

Review comment:

How about `private void appendBatch(int numberOfRecords, int epoch)` and

always use the LEO like `appendAsLeader(Collection records, int

epoch)`?

##

File path: core/src/test/scala/kafka/raft/KafkaMetadataLogTest.scala

##

@@ -413,22 +413,53 @@ final class KafkaMetadataLogTest {

assertTrue(log.deleteBeforeSnapshot(snapshotId))

val resultOffsetAndEpoch = log.validateOffsetAndEpoch(offset, epoch)

-assertEquals(ValidOffsetAndEpoch.Type.VALID,

resultOffsetAndEpoch.getType())

+assertEquals(ValidOffsetAndEpoch.Type.VALID, resultOffsetAndEpoch.getType)

assertEquals(new OffsetAndEpoch(offset, epoch),

resultOffsetAndEpoch.offsetAndEpoch())

}

@Test

- def testValidateEpochUnknown(): Unit = {

+ def testValidateUnknownEpochLessThanLastKnownGreaterThanOldestSnapshot():

Unit = {

+val offset = 10

+val numOfRecords = 5

+

val log = buildMetadataLog(tempDir, mockTime)

+log.updateHighWatermark(new LogOffsetMetadata(offset))

+val snapshotId = new OffsetAndEpoch(offset, 1)

+TestUtils.resource(log.createSnapshot(snapshotId)) { snapshot =>

+ snapshot.

[GitHub] [kafka] andrewegel commented on pull request #10056: KAFKA-12293: remove JCenter and Bintray repositories mentions out of build gradle scripts (sunset is announced for those repositories)

andrewegel commented on pull request #10056: URL: https://github.com/apache/kafka/pull/10056#issuecomment-799593438

At the time of my comment here, jcenter is throwing 500s to my kafka build processes: https://status.bintray.com/incidents/ctv4bdfz08bg

Now is as good a time as any to take this change to remove this project's dependence on the jcenter service.

[GitHub] [kafka] jolshan commented on a change in pull request #10282: KAFKA-12426: Missing logic to create partition.metadata files in RaftReplicaManager

jolshan commented on a change in pull request #10282:

URL: https://github.com/apache/kafka/pull/10282#discussion_r594552602

##

File path: core/src/main/scala/kafka/cluster/Partition.scala

##

@@ -308,27 +308,29 @@ class Partition(val topicPartition: TopicPartition,

s"different from the requested log dir $logDir")

false

case None =>

-createLogIfNotExists(isNew = false, isFutureReplica = true, highWatermarkCheckpoints)

+// not sure if topic ID should be none here, but not sure if we have access in ReplicaManager where this is called.

Review comment:

TODO: remove this when we decide if we want to pass in None or a topicID

[GitHub] [kafka] jolshan commented on a change in pull request #10282: KAFKA-12426: Missing logic to create partition.metadata files in RaftReplicaManager

jolshan commented on a change in pull request #10282:

URL: https://github.com/apache/kafka/pull/10282#discussion_r594553183

##

File path:

jmh-benchmarks/src/main/java/org/apache/kafka/jmh/server/CheckpointBench.java

##

@@ -145,7 +145,7 @@ public void setup() {

OffsetCheckpoints checkpoints = (logDir, topicPartition) ->

Option.apply(0L);

for (TopicPartition topicPartition : topicPartitions) {

final Partition partition =

this.replicaManager.createPartition(topicPartition);

-partition.createLogIfNotExists(true, false, checkpoints);

+partition.createLogIfNotExists(true, false, checkpoints,

Option.empty());

Review comment:

I think we can just not set the topic ID here, but want to confirm.

[GitHub] [kafka] jolshan commented on a change in pull request #10282: KAFKA-12426: Missing logic to create partition.metadata files in RaftReplicaManager

jolshan commented on a change in pull request #10282:

URL: https://github.com/apache/kafka/pull/10282#discussion_r594558352

##

File path: core/src/main/scala/kafka/log/Log.scala

##

@@ -349,11 +352,20 @@ class Log(@volatile private var _dir: File,

// Delete partition metadata file if the version does not support topic

IDs.

// Recover topic ID if present and topic IDs are supported

+// If we were provided a topic ID when creating the log, partition

metadata files are supported, and one does not yet exist

+// write to the partition metadata file.

+// Ensure we do not try to assign a provided topicId that is inconsistent

with the ID on file.

if (partitionMetadataFile.exists()) {

if (!keepPartitionMetadataFile)

partitionMetadataFile.delete()

-else

- topicId = partitionMetadataFile.read().topicId

+else {

+ val fileTopicId = partitionMetadataFile.read().topicId

+ if (topicId.isDefined && fileTopicId != topicId.get)

+throw new IllegalStateException(s"Tried to assign topic ID

$topicId to log, but log already contained topicId $fileTopicId")

Review comment:

I don't know if it is possible to get to this error message. I think in

most cases, the log should be grabbed if it already exists in the

makeLeader/makeFollower path. In the log loading path, the topicId should be

None. I thought it would be good to throw this error to know that something was

wrong with the code, but maybe there is a better way. (Like maybe if topicId is

defined in general)
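The consistency rule discussed in this comment can be sketched in isolation. The Java snippet below is a hypothetical helper (`TopicIdCheckSketch.resolve` is not a Kafka method) that models only the decision between a provided topic ID and the one recovered from the partition metadata file; it does not read or write any file.

```java
import java.util.Optional;
import java.util.UUID;

// Hypothetical helper: models the in-memory decision only, not Kafka's Log class.
class TopicIdCheckSketch {

    // provided: topic ID passed in when creating the log (may be empty)
    // onFile:   topic ID read from the partition metadata file (may be empty)
    static Optional<UUID> resolve(Optional<UUID> provided, Optional<UUID> onFile) {
        if (provided.isPresent() && onFile.isPresent() && !provided.get().equals(onFile.get())) {
            throw new IllegalStateException("Tried to assign topic ID " + provided.get()
                + " to log, but log already contained topic ID " + onFile.get());
        }
        // Prefer the ID recovered from the file; otherwise fall back to the provided one.
        return onFile.isPresent() ? onFile : provided;
    }

    public static void main(String[] args) {
        UUID id = UUID.randomUUID();
        // Log loading path: nothing provided, ID recovered from the file.
        System.out.println(resolve(Optional.empty(), Optional.of(id)).isPresent());
        // New log: ID provided, no file yet.
        System.out.println(resolve(Optional.of(id), Optional.empty()).isPresent());
    }
}
```

A mismatch between the two IDs raises `IllegalStateException` in this sketch, which corresponds to the "something is wrong with the code" signal the comment describes.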

[GitHub] [kafka] C0urante commented on pull request #10014: KAFKA-12252 and KAFKA-12262: Fix session key rotation when leadership changes

C0urante commented on pull request #10014: URL: https://github.com/apache/kafka/pull/10014#issuecomment-799623900

@rhauch ping 🙂 This has been waiting for a while and the only concern that's been raised is unrelated to the fix at hand. Can you take another look?

[GitHub] [kafka] abbccdda merged pull request #10320: MINOR: revert stream logging level back to ERROR

abbccdda merged pull request #10320: URL: https://github.com/apache/kafka/pull/10320

[jira] [Commented] (KAFKA-12464) Enhance constrained sticky Assign algorithm

[

https://issues.apache.org/jira/browse/KAFKA-12464?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17301824#comment-17301824

]

A. Sophie Blee-Goldman commented on KAFKA-12464:

Thanks for the proposal – I think the basic idea makes sense. There is an

existing scale test in AbstractStickyAssignorTest called

testLargeAssignmentAndGroupWithUniformSubscription() which you can use to

measure the improvement. It already has pretty good performance but further

optimizations are welcome! If this has a significant impact we should also

scale up the number of consumers and/or partitions in that test. The aim is to

have it complete in a few seconds when running locally. Right now I believe we

run it with 1mil partitions and 2,000 consumers; the 1mil partitions is

probably already close (or beyond) the limits of what kafka can handle for a

single consumer group in general, but I'd be interested in seeing how much we

can push the size of the consumer group with this enhancement.

Some quick notes about the second enhancement: (1) we need to track the revoked

partitions as well (this is for the CooperativeStickyAssignor, needed for

cooperative rebalancing), so we should also have something like

allRevokedPartitions.removeAll(ownedPartitions.subList(maxQuota,ownedPartitions.size()));

and (2), I think the second line should be

unassignedPartitions.removeAll(ownedPartitions.subList(0, maxQuota));

since we assigned the first sublist of the ownedPartitions list, not the second.

(also (3), I'm guessing you just left this out for brevity but we still need to

assign partitions in the case ownedPartitions.size() < maxQuota. Might be good

to include this branch in the ticket description for clarity)

Looking forward to the PR!
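The three notes above can be read against a small Java sketch of the "keep up to maxQuota" step for a single member. The class `StickyPhaseOneSketch` and the method `reassignOwned` are hypothetical names, and the sketch assumes the revoked set collects the tail of the owned list; the bookkeeping in the real assignor may differ.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch, not the AbstractStickyAssignor implementation.
class StickyPhaseOneSketch {

    // Keeps at most maxQuota of the member's owned partitions and returns them.
    // Kept partitions leave the unassigned pool; the trimmed tail is recorded as revoked.
    static List<String> reassignOwned(List<String> owned, int maxQuota,
                                      Set<String> unassigned, Set<String> revoked) {
        if (owned.size() <= maxQuota) {
            // Covers ownedPartitions.size() < maxQuota: keep everything,
            // a later step still has to fill this member up.
            unassigned.removeAll(owned);
            return new ArrayList<>(owned);
        }
        List<String> kept = new ArrayList<>(owned.subList(0, maxQuota));
        unassigned.removeAll(kept);                            // first sublist is what was assigned
        revoked.addAll(owned.subList(maxQuota, owned.size())); // tail must be given up
        return kept;
    }

    public static void main(String[] args) {
        List<String> c0 = Arrays.asList("t1p0", "t1p1", "t1p2", "t1p3", "t1p4");
        Set<String> unassigned = new LinkedHashSet<>(Arrays.asList(
            "t1p0", "t1p1", "t1p2", "t1p3", "t1p4", "t1p5", "t1p6", "t1p7", "t1p8", "t1p9"));
        Set<String> revoked = new LinkedHashSet<>();
        System.out.println("kept: " + reassignOwned(c0, 4, unassigned, revoked));
        System.out.println("revoked: " + revoked);
        System.out.println("unassigned: " + unassigned);
    }
}
```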

> Enhance constrained sticky Assign algorithm

> ---

>

> Key: KAFKA-12464

> URL: https://issues.apache.org/jira/browse/KAFKA-12464

> Project: Kafka

> Issue Type: Improvement

>Affects Versions: 2.7.0

>Reporter: Luke Chen

>Assignee: Luke Chen

>Priority: Major

>

> In KAFKA-9987, we made a great improvement for the case when all consumers

> were subscribed to the same set of topics. The algorithm contains 4 phases:

> # Reassign as many previously owned partitions as possible, up to the

> maxQuota

> # Fill remaining members up to minQuota

> # If we ran out of unassigned partitions before filling all consumers, we

> need to start stealing partitions from the over-full consumers at max capacity

> # Otherwise we may have run out of unfilled consumers before assigning all

> partitions, in which case we should just distribute one partition each to all

> consumers at min capacity

>

> Take an example for better understanding:

> *example:*

> Current status: 2 consumers (C0, C1), and 10 topic partitions: t1p0, t1p1,

> ... t1p9

> Suppose, current assignment is:

> _C0: t1p0, t1p1, t1p2, t1p3, t1p4_

> _C1: t1p5, t1p6, t1p7, t1p8, t1p9_

> Now, new consumer added: C2, so we'll do:

> # Reassign as many previously owned partitions as possible, up to the

> maxQuota

> After this phase, the assignment will be: (maxQuota will be 4)

> _C0: t1p0, t1p1, t1p2, t1p3_

> _C1: t1p5, t1p6, t1p7, t1p8_

> # Fill remaining members up to minQuota

> After this phase, the assignment will be:

> _C0: t1p0, t1p1, t1p2, t1p3_

> _C1: t1p5, t1p6, t1p7, t1p8_

> _C2: t1p4, t1p9_

> # If we ran out of unassigned partitions before filling all consumers, we

> need to start stealing partitions from the over-full consumers at max capacity

> After this phase, the assignment will be:

> _C0: t1p0, t1p1, t1p2_

> _C1: t1p5, t1p6, t1p7, t1p8_

> _C2: t1p4, t1p9,_ _t1p3_

> # Otherwise we may have run out of unfilled consumers before assigning all

> partitions, in which case we should just distribute one partition each to all

> consumers at min capacity

>

>

> As we can see, we need 3 phases to complete the assignment. But we can

> actually complete it in 2 phases. Here's the updated algorithm:

> # Reassign as many previously owned partitions as possible, up to the

> maxQuota, while also considering numMaxQuota, given by the remainder of

> (Partitions / Consumers)

> # Fill remaining members up to maxQuota if current maxQuotaMember <

> numMaxQuota, otherwise, to minQuota

>

> By considering numMaxQuota, the original step 1 no longer assigns too many

> partitions to consumers, and step 2 no longer assigns too few, so we don't

> need step 3 and step 4 to balance them.

>
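
The two-phase idea described above can be sketched in Java as follows. This is only an illustrative sketch, not the code from Kafka's AbstractStickyAssignor: the class name `TwoPhaseQuotaSketch`, the method `assign`, and the string-based member and partition names are all hypothetical. It assumes every member subscribes to the same partitions and that previously owned partitions are disjoint across members.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class TwoPhaseQuotaSketch {

    // Hypothetical helper (not a Kafka API): `owned` maps each member to the
    // partitions it previously owned; `partitions` lists all partitions.
    public static Map<String, List<String>> assign(Map<String, List<String>> owned,
                                                   List<String> partitions) {
        int n = owned.size();
        int minQuota = partitions.size() / n;                       // floor capacity
        int numMaxQuota = partitions.size() % n;                    // members allowed at maxQuota
        int maxQuota = numMaxQuota == 0 ? minQuota : minQuota + 1;  // ceiling capacity

        Map<String, List<String>> result = new LinkedHashMap<>();
        Deque<String> unassigned = new ArrayDeque<>(partitions);
        int membersAtMax = 0;

        // Phase 1: keep previously owned partitions. A member may keep maxQuota
        // only while fewer than numMaxQuota members already hold maxQuota;
        // otherwise it is capped at minQuota.
        for (Map.Entry<String, List<String>> e : owned.entrySet()) {
            List<String> prev = e.getValue();
            int limit = minQuota;
            if (membersAtMax < numMaxQuota && prev.size() >= maxQuota) {
                limit = maxQuota;
                membersAtMax++;
            }
            List<String> kept = new ArrayList<>(prev.subList(0, Math.min(limit, prev.size())));
            unassigned.removeAll(kept);
            result.put(e.getKey(), kept);
        }

        // Phase 2: fill the remaining members, up to maxQuota while maxQuota
        // slots are still available, otherwise up to minQuota.
        for (List<String> assigned : result.values()) {
            int target = minQuota;
            if (assigned.size() < maxQuota && membersAtMax < numMaxQuota) {
                target = maxQuota;
                membersAtMax++;
            }
            while (assigned.size() < target) {
                assigned.add(unassigned.poll());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, List<String>> owned = new LinkedHashMap<>();
        owned.put("C0", List.of("t1p0", "t1p1", "t1p2", "t1p3", "t1p4"));
        owned.put("C1", List.of("t1p5", "t1p6", "t1p7", "t1p8", "t1p9"));
        owned.put("C2", List.of());
        List<String> partitions = new ArrayList<>();
        for (int i = 0; i < 10; i++) {
            partitions.add("t1p" + i);
        }
        System.out.println(assign(owned, partitions));
    }
}
```

For the example above (10 partitions, C2 newly added), this sketch leaves C0 with t1p0 to t1p3, C1 with t1p5 to t1p7, and gives C2 t1p4, t1p8 and t1p9. No partition has to be stolen back from an over-full member, because phase 1 already limits how many members may stay at maxQuota.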

> {{So, the updated Pseudo-code sketch of the algorithm:}}

> C_f := (P/N)_floor, the floor capacity

> C_c := (P/N)_ceil, the ceiling capacity

> *C_r := (P%N) the allowed number of members with C_c partitions assigned*

> *num_max_capacity_members :=

[GitHub] [kafka] junrao commented on a change in pull request #10218: KAFKA-12368: Added inmemory implementations for RemoteStorageManager and RemoteLogMetadataManager.

junrao commented on a change in pull request #10218:

URL: https://github.com/apache/kafka/pull/10218#discussion_r594509836

##

File path:

clients/src/main/java/org/apache/kafka/server/log/remote/storage/RemoteLogSegmentState.java

##

@@ -87,4 +89,27 @@ public byte id() {

public static RemoteLogSegmentState forId(byte id) {

return STATE_TYPES.get(id);

}

+

+public static boolean isValidTransition(RemoteLogSegmentState srcState,

RemoteLogSegmentState targetState) {

+Objects.requireNonNull(targetState, "targetState can not be null");

+

+if (srcState == null) {

+// If the source state is null, check the target state as the

initial state viz DELETE_PARTITION_MARKED

Review comment:

DELETE_PARTITION_MARKED is not part of RemoteLogSegmentState.

##

File path:

remote-storage/src/main/java/org/apache/kafka/server/log/remote/storage/RemoteLogMetadataCache.java

##

@@ -0,0 +1,172 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.server.log.remote.storage;

+

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.util.ArrayList;

+import java.util.Collections;

+import java.util.Comparator;

+import java.util.HashSet;

+import java.util.Iterator;

+import java.util.Map;

+import java.util.NavigableMap;

+import java.util.Optional;

+import java.util.Set;

+import java.util.concurrent.ConcurrentHashMap;

+import java.util.concurrent.ConcurrentMap;

+import java.util.concurrent.ConcurrentSkipListMap;

+import java.util.stream.Collectors;

+

+/**

+ * This class provides an inmemory cache of remote log segment metadata. This

maintains the lineage of segments

+ * with respect to epoch evolution. It also keeps track of segments which are

not considered to be copied to remote

+ * storage.

+ */

+public class RemoteLogMetadataCache {

+private static final Logger log =

LoggerFactory.getLogger(RemoteLogMetadataCache.class);

+

+private final ConcurrentMap<RemoteLogSegmentId, RemoteLogSegmentMetadata> idToSegmentMetadata = new ConcurrentHashMap<>();

+

+// It keeps the segments which are not yet reached to

COPY_SEGMENT_FINISHED state.

+private final Set<RemoteLogSegmentId> remoteLogSegmentIdInProgress = new HashSet<>();

+

+// It will have all the segments except with state as COPY_SEGMENT_STARTED.

+private final ConcurrentMap<Integer, NavigableMap<Long, RemoteLogSegmentId>> leaderEpochToOffsetToId = new ConcurrentHashMap<>();

+

+private void addRemoteLogSegmentMetadata(RemoteLogSegmentMetadata

remoteLogSegmentMetadata) {

+log.debug("Adding remote log segment metadata: [{}]",

remoteLogSegmentMetadata);

+idToSegmentMetadata.put(remoteLogSegmentMetadata.remoteLogSegmentId(),

remoteLogSegmentMetadata);

+Map<Integer, Long> leaderEpochToOffset = remoteLogSegmentMetadata.segmentLeaderEpochs();

+for (Map.Entry<Integer, Long> entry : leaderEpochToOffset.entrySet()) {

+leaderEpochToOffsetToId.computeIfAbsent(entry.getKey(), k -> new

ConcurrentSkipListMap<>())

+.put(entry.getValue(),

remoteLogSegmentMetadata.remoteLogSegmentId());

+}

+}

+

+public Optional<RemoteLogSegmentMetadata> remoteLogSegmentMetadata(int leaderEpoch, long offset) {

+NavigableMap<Long, RemoteLogSegmentId> offsetToId = leaderEpochToOffsetToId.get(leaderEpoch);

+if (offsetToId == null || offsetToId.isEmpty()) {

+return Optional.empty();

+}

+

+// look for floor entry as the given offset may exist in this entry.

+Map.Entry<Long, RemoteLogSegmentId> entry = offsetToId.floorEntry(offset);

+if (entry == null) {

+// if the offset is lower than the minimum offset available in

metadata then return empty.

+return Optional.empty();

+}

+

+RemoteLogSegmentMetadata metadata =

idToSegmentMetadata.get(entry.getValue());

+// check whether the given offset with leaderEpoch exists in this

segment.

+// check for epoch's offset boundaries with in this segment.

+// 1. get the next epoch's start offset -1 if exists

+// 2. if no next epoch exists, then segment end offset can be

considered as epoch's relative end offset.

+Map.Entry<Integer, Long> nextEntry = metadata.segmentLeaderEpochs().higherEntry(leaderEpoch);

+long epochEndOf

[GitHub] [kafka] hachikuji commented on a change in pull request #10309: KAFKA-12181; Loosen raft fetch offset validation of remote replicas

hachikuji commented on a change in pull request #10309:

URL: https://github.com/apache/kafka/pull/10309#discussion_r594599970

##

File path: raft/src/test/java/org/apache/kafka/raft/RaftEventSimulationTest.java

##

@@ -401,6 +401,51 @@ private void checkBackToBackLeaderFailures(QuorumConfig

config) {

}

}

+@Test

+public void checkSingleNodeCommittedDataLossQuorumSizeThree() {

+checkSingleNodeCommittedDataLoss(new QuorumConfig(3, 0));

+}

+

+private void checkSingleNodeCommittedDataLoss(QuorumConfig config) {

+assertTrue(config.numVoters > 2,

+"This test requires the cluster to be able to recover from one

failed node");

+

+for (int seed = 0; seed < 100; seed++) {

+// We run this test without the `MonotonicEpoch` and

`MajorityReachedHighWatermark`

+// invariants since the loss of committed data on one node can

violate them.

+

+Cluster cluster = new Cluster(config, seed);

+EventScheduler scheduler = new EventScheduler(cluster.random,

cluster.time);

+scheduler.addInvariant(new MonotonicHighWatermark(cluster));

+scheduler.addInvariant(new SingleLeader(cluster));

+scheduler.addValidation(new ConsistentCommittedData(cluster));

+

+MessageRouter router = new MessageRouter(cluster);

+

+cluster.startAll();

+schedulePolling(scheduler, cluster, 3, 5);

+scheduler.schedule(router::deliverAll, 0, 2, 5);

+scheduler.schedule(new SequentialAppendAction(cluster), 0, 2, 3);

+scheduler.runUntil(() -> cluster.anyReachedHighWatermark(10));

+

+RaftNode node = cluster.randomRunning().orElseThrow(() ->

+new AssertionError("Failed to find running node")

+);

+

+// Kill a random node and drop all of its persistent state. The

Raft

+// protocol guarantees should still ensure we lose no committed

data

+// as long as a new leader is elected before the failed node is

restarted.

+cluster.kill(node.nodeId);

+cluster.deletePersistentState(node.nodeId);

+scheduler.runUntil(() -> !cluster.hasLeader(node.nodeId) &&

cluster.hasConsistentLeader());

Review comment:

It is checking consistent `ElectionState`, which is basically the same

as verifying all `quorum-state` files match.


[GitHub] [kafka] wcarlson5 opened a new pull request #10321: Minor:timeout issue in Remove thread

wcarlson5 opened a new pull request #10321: URL: https://github.com/apache/kafka/pull/10321 timeout is a duration not a point in time ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes)

[GitHub] [kafka] wcarlson5 commented on pull request #10321: Minor:timeout issue in Remove thread

wcarlson5 commented on pull request #10321: URL: https://github.com/apache/kafka/pull/10321#issuecomment-799685448 @cadonna @ableegoldman Can I get a look at this? @vvcephei This will need to be picked to 2.8 as well

[jira] [Commented] (KAFKA-12313) Consider deprecating the default.windowed.serde.inner.class configs

[