[GitHub] [hadoop-ozone] aryangupta1998 commented on pull request #868: HDDS-3457. Fix ozonefs put and mkdir KEY_NOT_FOUND issue when ACL enable

aryangupta1998 commented on pull request #868: URL: https://github.com/apache/hadoop-ozone/pull/868#issuecomment-684394220 Hi @captainzmc, I enabled ACLs and ran mkdir through o3fs, but the 'checkAccess' function is never reached. I put some System.out.println statements in 'checkAccess', but nothing is printed. Can you please help? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] runzhiwang commented on a change in pull request #1370: HDDS-4176. Fix failed UT: test2WayCommitForTimeoutException

runzhiwang commented on a change in pull request #1370:

URL: https://github.com/apache/hadoop-ozone/pull/1370#discussion_r480831186

##

File path:

hadoop-ozone/integration-test/src/test/java/org/apache/hadoop/ozone/client/rpc/TestWatchForCommit.java

##

@@ -297,9 +298,11 @@ public void test2WayCommitForTimeoutException() throws

Exception {

xceiverClient.getPipeline()));

reply.getResponse().get();

Assert.assertEquals(3, ratisClient.getCommitInfoMap().size());

+List datanodeDetails = pipeline.getNodes();

for (HddsDatanodeService dn : cluster.getHddsDatanodes()) {

// shutdown the ratis follower

- if (ContainerTestHelper.isRatisFollower(dn, pipeline)) {

+ if (datanodeDetails.contains(dn.getDatanodeDetails())

+ && ContainerTestHelper.isRatisFollower(dn, pipeline)) {

Review comment:

@amaliujia Thanks for your review. TestWatchForCommit already

starts the cluster with 9 nodes, so that may be enough.

https://github.com/apache/hadoop-ozone/blob/master/hadoop-ozone/integration-test/src/test/java/org/apache/hadoop/ozone/client/rpc/TestWatchForCommit.java#L124

[GitHub] [hadoop-ozone] flirmnave commented on a change in pull request #1368: HDDS-4156. add hierarchical layout to Chinese doc

flirmnave commented on a change in pull request #1368:

URL: https://github.com/apache/hadoop-ozone/pull/1368#discussion_r480821571

##

File path:

hadoop-hdds/docs/content/concept/_index.zh.md

##

@@ -2,7 +2,7 @@

title: 概念

date: "2017-10-10"

menu: main

-weight: 6

+weight: 3

Review comment:

Hi, I see that the weight is 3 in the English version:

https://github.com/apache/hadoop-ozone/blame/master/hadoop-hdds/docs/content/concept/_index.md#L5

So I changed it. Should I change it back?

[GitHub] [hadoop-ozone] amaliujia commented on a change in pull request #1370: HDDS-4176. Fix failed UT: test2WayCommitForTimeoutException

amaliujia commented on a change in pull request #1370:

URL: https://github.com/apache/hadoop-ozone/pull/1370#discussion_r480794854

##

File path:

hadoop-ozone/integration-test/src/test/java/org/apache/hadoop/ozone/client/rpc/TestWatchForCommit.java

##

@@ -297,9 +298,11 @@ public void test2WayCommitForTimeoutException() throws

Exception {

xceiverClient.getPipeline()));

reply.getResponse().get();

Assert.assertEquals(3, ratisClient.getCommitInfoMap().size());

+List datanodeDetails = pipeline.getNodes();

for (HddsDatanodeService dn : cluster.getHddsDatanodes()) {

// shutdown the ratis follower

- if (ContainerTestHelper.isRatisFollower(dn, pipeline)) {

+ if (datanodeDetails.contains(dn.getDatanodeDetails())

+ && ContainerTestHelper.isRatisFollower(dn, pipeline)) {

Review comment:

This logic looks right to me.

Can you bump up the number of DNs so that the tests run against a larger cluster? See:

https://github.com/apache/hadoop-ozone/blob/34ee8311b0d0a37878fe1fd2e5d8c1b91aa8cc8f/hadoop-ozone/integration-test/src/test/java/org/apache/hadoop/ozone/client/rpc/Test2WayCommitInRatis.java#L109

That seems like it would cover your change.
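
The condition under review tests pipeline membership before the follower check. A minimal, self-contained sketch of that ordering follows. `Node`, `Pipeline`, `isFollower` and `followersToShutDown` are hypothetical stand-ins, not Ozone classes, and the assumption that the follower check rejects non-members is made purely for illustration; the thread does not say how `ContainerTestHelper.isRatisFollower` behaves for datanodes outside the pipeline.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-ins for illustration; none of these are Ozone classes.
final class FollowerSelectionSketch {

  /** A datanode identified by name. */
  static final class Node {
    final String name;

    Node(String name) {
      this.name = name;
    }
  }

  /** A pipeline with one leader and a fixed member list. */
  static final class Pipeline {
    final String leader;
    final List<String> members;

    Pipeline(String leader, String... members) {
      this.leader = leader;
      this.members = Arrays.asList(members);
    }
  }

  // Assumed behaviour, for illustration only: the check rejects non-members.
  static boolean isFollower(Node node, Pipeline pipeline) {
    if (!pipeline.members.contains(node.name)) {
      throw new IllegalStateException(node.name + " is not in the pipeline");
    }
    return !pipeline.leader.equals(node.name);
  }

  // Membership is tested first, so && short-circuits before isFollower
  // is ever called for a node outside the pipeline.
  static List<String> followersToShutDown(List<Node> cluster, Pipeline pipeline) {
    List<String> result = new ArrayList<>();
    for (Node node : cluster) {
      if (pipeline.members.contains(node.name) && isFollower(node, pipeline)) {
        result.add(node.name);
      }
    }
    return result;
  }
}
```

With a 9-node cluster and a 3-node pipeline, only the two followers inside the pipeline are selected, and the six outsiders never reach the follower check.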

[jira] [Updated] (HDDS-3698) Ozone Non-Rolling upgrades

[ https://issues.apache.org/jira/browse/HDDS-3698?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aravindan Vijayan updated HDDS-3698: Summary: Ozone Non-Rolling upgrades (was: Ozone Non-Rolling upgrades.) > Ozone Non-Rolling upgrades > -- > > Key: HDDS-3698 > URL: https://issues.apache.org/jira/browse/HDDS-3698 > Project: Hadoop Distributed Data Store > Issue Type: New Feature >Reporter: Aravindan Vijayan >Assignee: Aravindan Vijayan >Priority: Major > Attachments: Ozone Non-Rolling Upgrades (Presentation).pdf, Ozone > Non-Rolling Upgrades Doc v1.1.pdf, Ozone Non-Rolling Upgrades.pdf > > > Support for Non-rolling upgrades in Ozone. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-4183) Support backward compatible upgrade to a version with key prefix management.

[ https://issues.apache.org/jira/browse/HDDS-4183?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aravindan Vijayan updated HDDS-4183: Description: HDDS-2939 makes fundamental changes to how OM writes & reads its metadata. Since this is about as big a layout change as can be, we have to make sure this is properly managed through the upgrade flow. In addition to upgrades, we should support downgrades from pre-finalized state of OM. cc [~rakeshr] / [~msingh] > Support backward compatible upgrade to a version with key prefix management. > > > Key: HDDS-4183 > URL: https://issues.apache.org/jira/browse/HDDS-4183 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: Ozone Manager >Reporter: Aravindan Vijayan >Assignee: Aravindan Vijayan >Priority: Major > Fix For: 1.1.0 > > > HDDS-2939 makes fundamental changes to how OM writes & reads its metadata. > Since this is about as big a layout change as can be, we have to make sure > this is properly managed through the upgrade flow. In addition to upgrades, > we should support downgrades from pre-finalized state of OM. > cc [~rakeshr] / [~msingh] -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Assigned] (HDDS-4183) Support backward compatible upgrade to a version with key prefix management

[ https://issues.apache.org/jira/browse/HDDS-4183?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aravindan Vijayan reassigned HDDS-4183: --- Assignee: (was: Aravindan Vijayan) > Support backward compatible upgrade to a version with key prefix management > --- > > Key: HDDS-4183 > URL: https://issues.apache.org/jira/browse/HDDS-4183 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: Ozone Manager >Reporter: Aravindan Vijayan >Priority: Major > Fix For: 1.1.0 > > > HDDS-2939 makes fundamental changes to how OM writes & reads its metadata. > Since this is about as big a layout change as can be, we have to make sure > this is properly managed through the upgrade flow. In addition to upgrades, > we should support downgrades from pre-finalized state of OM. > cc [~rakeshr] / [~msingh] -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-4183) Support backward compatible upgrade to a version with key prefix management

[ https://issues.apache.org/jira/browse/HDDS-4183?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aravindan Vijayan updated HDDS-4183: Summary: Support backward compatible upgrade to a version with key prefix management (was: Support backward compatible upgrade to a version with key prefix management.) > Support backward compatible upgrade to a version with key prefix management > --- > > Key: HDDS-4183 > URL: https://issues.apache.org/jira/browse/HDDS-4183 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: Ozone Manager >Reporter: Aravindan Vijayan >Assignee: Aravindan Vijayan >Priority: Major > Fix For: 1.1.0 > > > HDDS-2939 makes fundamental changes to how OM writes & reads its metadata. > Since this is about as big a layout change as can be, we have to make sure > this is properly managed through the upgrade flow. In addition to upgrades, > we should support downgrades from pre-finalized state of OM. > cc [~rakeshr] / [~msingh] -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] amaliujia commented on a change in pull request #1340: HDDS-3188 Enable SCM group with failover proxy for SCM block location.

amaliujia commented on a change in pull request #1340:

URL: https://github.com/apache/hadoop-ozone/pull/1340#discussion_r480720338

##

File path:

hadoop-hdds/framework/src/main/java/org/apache/hadoop/hdds/scm/proxy/SCMBlockLocationFailoverProxyProvider.java

##

@@ -0,0 +1,281 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hadoop.hdds.scm.proxy;

+

+import com.google.common.annotations.VisibleForTesting;

+import org.apache.hadoop.conf.Configuration;

+import org.apache.hadoop.hdds.conf.ConfigurationSource;

+import org.apache.hadoop.hdds.scm.ScmConfigKeys;

+import org.apache.hadoop.hdds.scm.protocol.ScmBlockLocationProtocol;

+import org.apache.hadoop.hdds.scm.protocolPB.ScmBlockLocationProtocolPB;

+import org.apache.hadoop.hdds.utils.LegacyHadoopConfigurationSource;

+import org.apache.hadoop.io.retry.FailoverProxyProvider;

+import org.apache.hadoop.io.retry.RetryPolicy;

+import org.apache.hadoop.io.retry.RetryPolicy.RetryAction;

+import org.apache.hadoop.ipc.ProtobufRpcEngine;

+import org.apache.hadoop.ipc.RPC;

+import org.apache.hadoop.net.NetUtils;

+import org.apache.hadoop.security.UserGroupInformation;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.Closeable;

+import java.io.IOException;

+import java.net.InetSocketAddress;

+import java.util.ArrayList;

+import java.util.Collection;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+

+import static org.apache.hadoop.hdds.scm.ScmConfigKeys.OZONE_SCM_NAMES;

+import static

org.apache.hadoop.hdds.scm.ScmConfigKeys.OZONE_SCM_SERVICE_IDS_KEY;

+import static org.apache.hadoop.hdds.HddsUtils.getScmAddressForBlockClients;

+import static org.apache.hadoop.hdds.HddsUtils.getPortNumberFromConfigKeys;

+

+/**

+ * Failover proxy provider for SCM.

+ */

+public class SCMBlockLocationFailoverProxyProvider implements

+FailoverProxyProvider, Closeable {

+ public static final Logger LOG =

+ LoggerFactory.getLogger(SCMBlockLocationFailoverProxyProvider.class);

+

+ private Map> scmProxies;

+ private Map scmProxyInfoMap;

+ private List scmNodeIDList;

+

+ private String currentProxySCMNodeId;

+ private int currentProxyIndex;

+

+ private final ConfigurationSource conf;

+ private final long scmVersion;

+

+ private final String scmServiceId;

+

+ private String lastAttemptedLeader;

+

+ private final int maxRetryCount;

+ private final long retryInterval;

+

+ public static final String SCM_DUMMY_NODEID_PREFIX = "scm";

+

+ public SCMBlockLocationFailoverProxyProvider(ConfigurationSource conf) {

+this.conf = conf;

+this.scmVersion = RPC.getProtocolVersion(ScmBlockLocationProtocol.class);

+this.scmServiceId = conf.getTrimmed(OZONE_SCM_SERVICE_IDS_KEY);

+this.scmProxies = new HashMap<>();

+this.scmProxyInfoMap = new HashMap<>();

+this.scmNodeIDList = new ArrayList<>();

+loadConfigs();

+

+this.currentProxyIndex = 0;

+currentProxySCMNodeId = scmNodeIDList.get(currentProxyIndex);

+

+this.maxRetryCount = conf.getObject(SCMBlockClientConfig.class)

+.getRetryCount();

+this.retryInterval = conf.getObject(SCMBlockClientConfig.class)

+.getRetryInterval();

+ }

+

+ @VisibleForTesting

+ protected Collection getSCMAddressList() {

+Collection scmAddressList =

+conf.getTrimmedStringCollection(OZONE_SCM_NAMES);

+Collection resultList = new ArrayList<>();

+if (scmAddressList.isEmpty()) {

+ // fall back

+ resultList.add(getScmAddressForBlockClients(conf));

+} else {

+ for (String scmAddress : scmAddressList) {

+LOG.info("SCM Address for proxy is {}", scmAddress);

+int indexOfComma = scmAddress.lastIndexOf(":");

Review comment:

The default implementation of `getTrimmedStringCollection` does not seem to

validate the format of the IP address in the string:

https://github.com/apache/hadoop-ozone/blob/34ee8311b0d0a37878fe1fd2e5d8c1b91aa8cc8f/hadoop-hdds/config/src/main/java/org/apache/hadoop/hdds/conf/ConfigurationSource.java#L99

Is the format already validated somewhere else? If not, this

implementation will be fragile.
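
One way to address the concern above is to validate each entry before using the index of the last colon. The following is a minimal sketch, assuming every entry must have the form `host:port`; the quoted diff is cut off right after the `lastIndexOf(":")` call, so the PR's code may well accept entries without a port. `ScmAddressSketch`, `HostPort` and `parse` are hypothetical names, not part of Ozone.

```java
// Hypothetical helper for illustration; not part of Ozone.
final class ScmAddressSketch {

  /** Parsed result of a host:port string. */
  static final class HostPort {
    final String host;
    final int port;

    HostPort(String host, int port) {
      this.host = host;
      this.port = port;
    }
  }

  // Assumption: an entry without an explicit port is invalid.
  static HostPort parse(String address) {
    if (address == null) {
      throw new IllegalArgumentException("address is null");
    }
    String trimmed = address.trim();
    int colon = trimmed.lastIndexOf(':');
    // Reject a missing colon, an empty host, and an empty port.
    if (colon <= 0 || colon == trimmed.length() - 1) {
      throw new IllegalArgumentException(
          "Expected host:port but got '" + address + "'");
    }
    int port;
    try {
      port = Integer.parseInt(trimmed.substring(colon + 1));
    } catch (NumberFormatException e) {
      throw new IllegalArgumentException(
          "Port is not a number in '" + address + "'", e);
    }
    if (port < 1 || port > 65535) {
      throw new IllegalArgumentException(
          "Port out of range in '" + address + "'");
    }
    return new HostPort(trimmed.substring(0, colon), port);
  }
}
```

Failing fast with a message that names the offending entry makes a misconfigured address list easier to diagnose than an index error further down.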

[jira] [Assigned] (HDDS-4183) Support backward compatible upgrade to a version with key prefix management.

[ https://issues.apache.org/jira/browse/HDDS-4183?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aravindan Vijayan reassigned HDDS-4183: --- Assignee: Aravindan Vijayan > Support backward compatible upgrade to a version with key prefix management. > > > Key: HDDS-4183 > URL: https://issues.apache.org/jira/browse/HDDS-4183 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: Ozone Manager >Reporter: Aravindan Vijayan >Assignee: Aravindan Vijayan >Priority: Major > Fix For: 1.1.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Created] (HDDS-4183) Support backward compatible upgrade to a version with key prefix management.

Aravindan Vijayan created HDDS-4183:

Summary: Support backward compatible upgrade to a version with key prefix management.

Key: HDDS-4183

URL: https://issues.apache.org/jira/browse/HDDS-4183

Project: Hadoop Distributed Data Store

Issue Type: Sub-task

Components: Ozone Manager

Reporter: Aravindan Vijayan

Fix For: 1.1.0

[jira] [Updated] (HDDS-4182) Onboard HDDS-3869 into Layout version management

[ https://issues.apache.org/jira/browse/HDDS-4182?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aravindan Vijayan updated HDDS-4182: Parent: HDDS-3698 Issue Type: Sub-task (was: Bug) > Onboard HDDS-3869 into Layout version management > > > Key: HDDS-4182 > URL: https://issues.apache.org/jira/browse/HDDS-4182 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task >Reporter: Aravindan Vijayan >Assignee: Aravindan Vijayan >Priority: Major > > In HDDS-3869 (Use different column families for datanode block and metadata), > there was a backward compatible change made in the Ozone datanode RocksDB. > This JIRA tracks the effort to use a "Layout Version" to track this change > such that it is NOT used before finalizing the cluster. > cc [~erose], [~hkoneru] -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Created] (HDDS-4182) Onboard HDDS-3869 into Layout version management

Aravindan Vijayan created HDDS-4182:

Summary: Onboard HDDS-3869 into Layout version management

Key: HDDS-4182

URL: https://issues.apache.org/jira/browse/HDDS-4182

Project: Hadoop Distributed Data Store

Issue Type: Bug

Reporter: Aravindan Vijayan

In HDDS-3869 (Use different column families for datanode block and metadata), there was a backward compatible change made in the Ozone datanode RocksDB. This JIRA tracks the effort to use a "Layout Version" to track this change such that it is NOT used before finalizing the cluster.

cc [~erose], [~hkoneru]

[jira] [Assigned] (HDDS-4182) Onboard HDDS-3869 into Layout version management

[ https://issues.apache.org/jira/browse/HDDS-4182?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aravindan Vijayan reassigned HDDS-4182: --- Assignee: Aravindan Vijayan > Onboard HDDS-3869 into Layout version management > > > Key: HDDS-4182 > URL: https://issues.apache.org/jira/browse/HDDS-4182 > Project: Hadoop Distributed Data Store > Issue Type: Bug >Reporter: Aravindan Vijayan >Assignee: Aravindan Vijayan >Priority: Major > > In HDDS-3869 (Use different column families for datanode block and metadata), > there was a backward compatible change made in the Ozone datanode RocksDB. > This JIRA tracks the effort to use a "Layout Version" to track this change > such that it is NOT used before finalizing the cluster. > cc [~erose], [~hkoneru] -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] amaliujia commented on a change in pull request #1340: HDDS-3188 Enable SCM group with failover proxy for SCM block location.

amaliujia commented on a change in pull request #1340:

URL: https://github.com/apache/hadoop-ozone/pull/1340#discussion_r480712834

##

File path:

hadoop-hdds/server-scm/src/main/java/org/apache/hadoop/hdds/scm/protocol/ScmBlockLocationProtocolServerSideTranslatorPB.java

##

@@ -94,9 +95,33 @@ public ScmBlockLocationProtocolServerSideTranslatorPB(

.setTraceID(traceID);

}

+ private boolean isLeader() throws ServiceException {

+if (!(impl instanceof SCMBlockProtocolServer)) {

+ throw new ServiceException("Should be SCMBlockProtocolServer");

Review comment:

nit: it might be useful to print which class the current `impl` is.
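
The suggestion above can be sketched as follows. This is only an illustration: `BlockProtocol`, `ExpectedServer`, `OtherImpl` and `requireExpected` are hypothetical stand-ins, and `IllegalStateException` replaces the `ServiceException` from the diff so that the example needs no external library.

```java
// Hypothetical types for illustration; not the classes from the pull request.
final class ImplTypeCheckSketch {

  interface BlockProtocol { }

  static final class ExpectedServer implements BlockProtocol { }

  static final class OtherImpl implements BlockProtocol { }

  // Includes the actual runtime class in the message so that a
  // misconfiguration can be diagnosed from the log alone.
  static void requireExpected(BlockProtocol impl) {
    if (!(impl instanceof ExpectedServer)) {
      String actual = (impl == null) ? "null" : impl.getClass().getName();
      throw new IllegalStateException(
          "Should be " + ExpectedServer.class.getSimpleName()
              + " but was " + actual);
    }
  }
}
```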

[jira] [Created] (HDDS-4181) Add acceptance tests for upgrade, finalization and downgrade

Aravindan Vijayan created HDDS-4181:

Summary: Add acceptance tests for upgrade, finalization and downgrade

Key: HDDS-4181

URL: https://issues.apache.org/jira/browse/HDDS-4181

Project: Hadoop Distributed Data Store

Issue Type: Sub-task

Reporter: Aravindan Vijayan

Fix For: 1.1.0

[jira] [Created] (HDDS-4180) Container Layout Version Management in Datanode

Aravindan Vijayan created HDDS-4180:

Summary: Container Layout Version Management in Datanode

Key: HDDS-4180

URL: https://issues.apache.org/jira/browse/HDDS-4180

Project: Hadoop Distributed Data Store

Issue Type: Sub-task

Reporter: Aravindan Vijayan

* Chunk Layout Version persistence in Datanode

* Add versioned read of Container Metadata File in Datanode

[jira] [Created] (HDDS-4179) Implement post-finalize SCM logic to allow nodes of only new version to participate in pipelines.

Aravindan Vijayan created HDDS-4179:

Summary: Implement post-finalize SCM logic to allow nodes of only new version to participate in pipelines.

Key: HDDS-4179

URL: https://issues.apache.org/jira/browse/HDDS-4179

Project: Hadoop Distributed Data Store

Issue Type: Sub-task

Components: Ozone Datanode, SCM

Reporter: Aravindan Vijayan

Fix For: 1.1.0

[jira] [Created] (HDDS-4178) SCM Finalize command implementation.

Aravindan Vijayan created HDDS-4178:

Summary: SCM Finalize command implementation.

Key: HDDS-4178

URL: https://issues.apache.org/jira/browse/HDDS-4178

Project: Hadoop Distributed Data Store

Issue Type: Sub-task

Components: SCM

Reporter: Aravindan Vijayan

Fix For: 1.1.0

* RPC endpoint implementation

* Ratis request to persist MLV, Trigger DN Finalize, Pipeline close. (WHEN MLV changes)

[jira] [Commented] (HDDS-3188) Enable Multiple SCMs

[ https://issues.apache.org/jira/browse/HDDS-3188?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188114#comment-17188114 ] Rui Wang commented on HDDS-3188: Update JIRA description to say support "2N + 1" SCMs. (The original text was "2N"). > Enable Multiple SCMs > > > Key: HDDS-3188 > URL: https://issues.apache.org/jira/browse/HDDS-3188 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: SCM >Reporter: Li Cheng >Assignee: Li Cheng >Priority: Major > Labels: pull-request-available > > Need to supports 2N + 1 SCMs. Add configs and logic to support multiple SCMs. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] runzhiwang commented on pull request #1370: HDDS-4176. Fix failed UT: test2WayCommitForTimeoutException

runzhiwang commented on pull request #1370: URL: https://github.com/apache/hadoop-ozone/pull/1370#issuecomment-684175039 @elek Could you help review this patch? Thank you very much.

[jira] [Updated] (HDDS-3188) Enable Multiple SCMs

[ https://issues.apache.org/jira/browse/HDDS-3188?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Rui Wang updated HDDS-3188: --- Description: Need to supports 2N + 1 SCMs. Add configs and logic to support multiple SCMs. (was: Need to supports 2N SCMs. Add configs and logic to support multiple SCMs.) > Enable Multiple SCMs > > > Key: HDDS-3188 > URL: https://issues.apache.org/jira/browse/HDDS-3188 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: SCM >Reporter: Li Cheng >Assignee: Li Cheng >Priority: Major > Labels: pull-request-available > > Need to supports 2N + 1 SCMs. Add configs and logic to support multiple SCMs. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org
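
The change from "2N" to "2N + 1" in the description above is consistent with majority-quorum arithmetic. The sketch below assumes that multiple SCMs would agree through a strict-majority protocol; the notifications in this digest mention Ratis but do not spell out the SCM quorum rule, so treat this as background arithmetic rather than a statement about the implementation. `QuorumSketch` is a hypothetical name.

```java
// Hypothetical helper for illustration; not part of Ozone.
final class QuorumSketch {

  // Smallest number of members that forms a strict majority.
  static int majority(int members) {
    return members / 2 + 1;
  }

  // Number of members that can fail while a strict majority remains.
  static int toleratedFailures(int members) {
    return members - majority(members);
  }
}
```

Under that assumption, 2N + 1 members tolerate N failures while 2N members tolerate only N - 1, so an even member count adds a node without adding fault tolerance.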

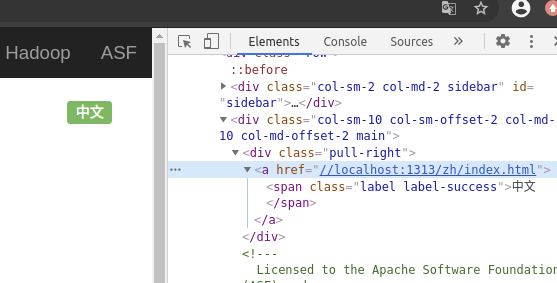

[jira] [Assigned] (HDDS-4166) Documentation index page redirects to the wrong address

[ https://issues.apache.org/jira/browse/HDDS-4166?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Neo Yang reassigned HDDS-4166: -- Assignee: Neo Yang > Documentation index page redirects to the wrong address > --- > > Key: HDDS-4166 > URL: https://issues.apache.org/jira/browse/HDDS-4166 > Project: Hadoop Distributed Data Store > Issue Type: Bug > Components: documentation >Reporter: Yiqun Lin >Assignee: Neo Yang >Priority: Minor > Labels: pull-request-available > Attachments: image-2020-08-29-10-35-34-633.png > > > Reading Chinese doc of Ozone that introduced in HDDS-2708. I find a page > error that index page redirects a wrong page. > The steps: > 1. Jump into > [https://ci-hadoop.apache.org/view/Hadoop%20Ozone/job/ozone-doc-master/lastSuccessfulBuild/artifact/hadoop-hdds/docs/public/index.html] > 2. Click the page switch button. > 3. The wrong page we will jumped into: > > [https://ci-hadoop.apache.org/view/Hadoop%20Ozone/job/ozone-doc-master/lastSuccessfulBuild/artifact/hadoop-hdds/docs/public/zh/] > !image-2020-08-29-10-35-34-633.png! > It missed the 'index.html' at the end of the address, actually this address > is expected to > [https://ci-hadoop.apache.org/view/Hadoop%20Ozone/job/ozone-doc-master/lastSuccessfulBuild/artifact/hadoop-hdds/docs/public/zh/index.html] > The same error happened when I switch the index page from Chinese doc to > English doc. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-4148) Create servlet to get SCM DB checkpoint

[ https://issues.apache.org/jira/browse/HDDS-4148?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Siddharth Wagle updated HDDS-4148: -- Summary: Create servlet to get SCM DB checkpoint (was: create servlet to get SCM DB checkpoint) > Create servlet to get SCM DB checkpoint > --- > > Key: HDDS-4148 > URL: https://issues.apache.org/jira/browse/HDDS-4148 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: SCM >Reporter: Prashant Pogde >Assignee: Prashant Pogde >Priority: Major > Labels: pull-request-available > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-4177) Bootstrap Recon SCM Container DB

[ https://issues.apache.org/jira/browse/HDDS-4177?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Siddharth Wagle updated HDDS-4177: -- Summary: Bootstrap Recon SCM Container DB (was: get container DB from SCM servlet and bootstrap recon container DB ) > Bootstrap Recon SCM Container DB > > > Key: HDDS-4177 > URL: https://issues.apache.org/jira/browse/HDDS-4177 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task >Reporter: Prashant Pogde >Assignee: Prashant Pogde >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Created] (HDDS-4177) get container DB from SCM servlet and bootstrap recon container DB

Prashant Pogde created HDDS-4177:

Summary: get container DB from SCM servlet and bootstrap recon container DB

Key: HDDS-4177

URL: https://issues.apache.org/jira/browse/HDDS-4177

Project: Hadoop Distributed Data Store

Issue Type: Sub-task

Reporter: Prashant Pogde

Assignee: Prashant Pogde

[jira] [Updated] (HDDS-4166) Documentation index page redirects to the wrong address

[ https://issues.apache.org/jira/browse/HDDS-4166?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HDDS-4166: - Labels: pull-request-available (was: ) > Documentation index page redirects to the wrong address > --- > > Key: HDDS-4166 > URL: https://issues.apache.org/jira/browse/HDDS-4166 > Project: Hadoop Distributed Data Store > Issue Type: Bug > Components: documentation >Reporter: Yiqun Lin >Priority: Minor > Labels: pull-request-available > Attachments: image-2020-08-29-10-35-34-633.png > > > Reading Chinese doc of Ozone that introduced in HDDS-2708. I find a page > error that index page redirects a wrong page. > The steps: > 1. Jump into > [https://ci-hadoop.apache.org/view/Hadoop%20Ozone/job/ozone-doc-master/lastSuccessfulBuild/artifact/hadoop-hdds/docs/public/index.html] > 2. Click the page switch button. > 3. The wrong page we will jumped into: > > [https://ci-hadoop.apache.org/view/Hadoop%20Ozone/job/ozone-doc-master/lastSuccessfulBuild/artifact/hadoop-hdds/docs/public/zh/] > !image-2020-08-29-10-35-34-633.png! > It missed the 'index.html' at the end of the address, actually this address > is expected to > [https://ci-hadoop.apache.org/view/Hadoop%20Ozone/job/ozone-doc-master/lastSuccessfulBuild/artifact/hadoop-hdds/docs/public/zh/index.html] > The same error happened when I switch the index page from Chinese doc to > English doc. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] cku328 opened a new pull request #1372: HDDS-4166. Documentation index page redirects to the wrong address

cku328 opened a new pull request #1372:

URL: https://github.com/apache/hadoop-ozone/pull/1372

## What changes were proposed in this pull request?

The server for the edge version of the documentation doesn't appear to use `index.html` as the default index page, but instead displays a list of directories.

If the file name of the page is `index.html`, it doesn't add `index.html` to the `Permalink` parameters, which seems to be Hugo's style. I found similar cases on the internet (ref. [https://discourse.gohugo.io/t/ugly-urls-with-page-resources/10037](https://discourse.gohugo.io/t/ugly-urls-with-page-resources/10037)).

The easiest solution is to check the permalink suffix and, if it's not `html`, print `index.html` at the end.

## What is the link to the Apache JIRA

https://issues.apache.org/jira/browse/HDDS-4166

## How was this patch tested?

Tested locally using `hugo serve`
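
The suffix check described in the pull request above can be sketched in Java. The actual change is presumably made in a Hugo template, so this is only a stand-in for the logic. `PermalinkSketch` and `withIndexPage` are hypothetical names, and inserting a `/` when the permalink lacks a trailing slash is an added assumption that the pull request does not mention.

```java
// Hypothetical helper for illustration; the real fix lives in the docs theme.
final class PermalinkSketch {

  // Leaves links that already end in "html" untouched and appends
  // "index.html" to everything else.
  static String withIndexPage(String permalink) {
    if (permalink.endsWith("html")) {
      return permalink;
    }
    // Assumption: add the separator if the permalink has no trailing slash.
    String separator = permalink.endsWith("/") ? "" : "/";
    return permalink + separator + "index.html";
  }
}
```

For example, a permalink ending in `/docs/public/zh/` becomes `/docs/public/zh/index.html`, which matches the address HDDS-4166 says the language switch should reach.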

[GitHub] [hadoop-ozone] amaliujia commented on pull request #1367: HDDS-4169. Fix some minor errors in StorageContainerManager.md

amaliujia commented on pull request #1367: URL: https://github.com/apache/hadoop-ozone/pull/1367#issuecomment-684161934 Thanks! LGTM

[GitHub] [hadoop-ozone] runzhiwang commented on pull request #1371: HDDS-2922. Balance ratis leader distribution in datanodes

runzhiwang commented on pull request #1371: URL: https://github.com/apache/hadoop-ozone/pull/1371#issuecomment-684160456 @bshashikant @lokeshj1703 @mukul1987 @elek @xiaoyuyao Could you help review this patch? Thank you very much.

[jira] [Updated] (HDDS-2922) Balance ratis leader distribution in datanodes

[ https://issues.apache.org/jira/browse/HDDS-2922?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HDDS-2922: - Labels: pull-request-available (was: ) > Balance ratis leader distribution in datanodes > -- > > Key: HDDS-2922 > URL: https://issues.apache.org/jira/browse/HDDS-2922 > Project: Hadoop Distributed Data Store > Issue Type: Improvement >Reporter: Li Cheng >Assignee: runzhiwang >Priority: Major > Labels: pull-request-available > > Ozone should be able to recommend leader host to Ratis via pipeline creation. > The leader host can be recommended based on rack awareness and load balance. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

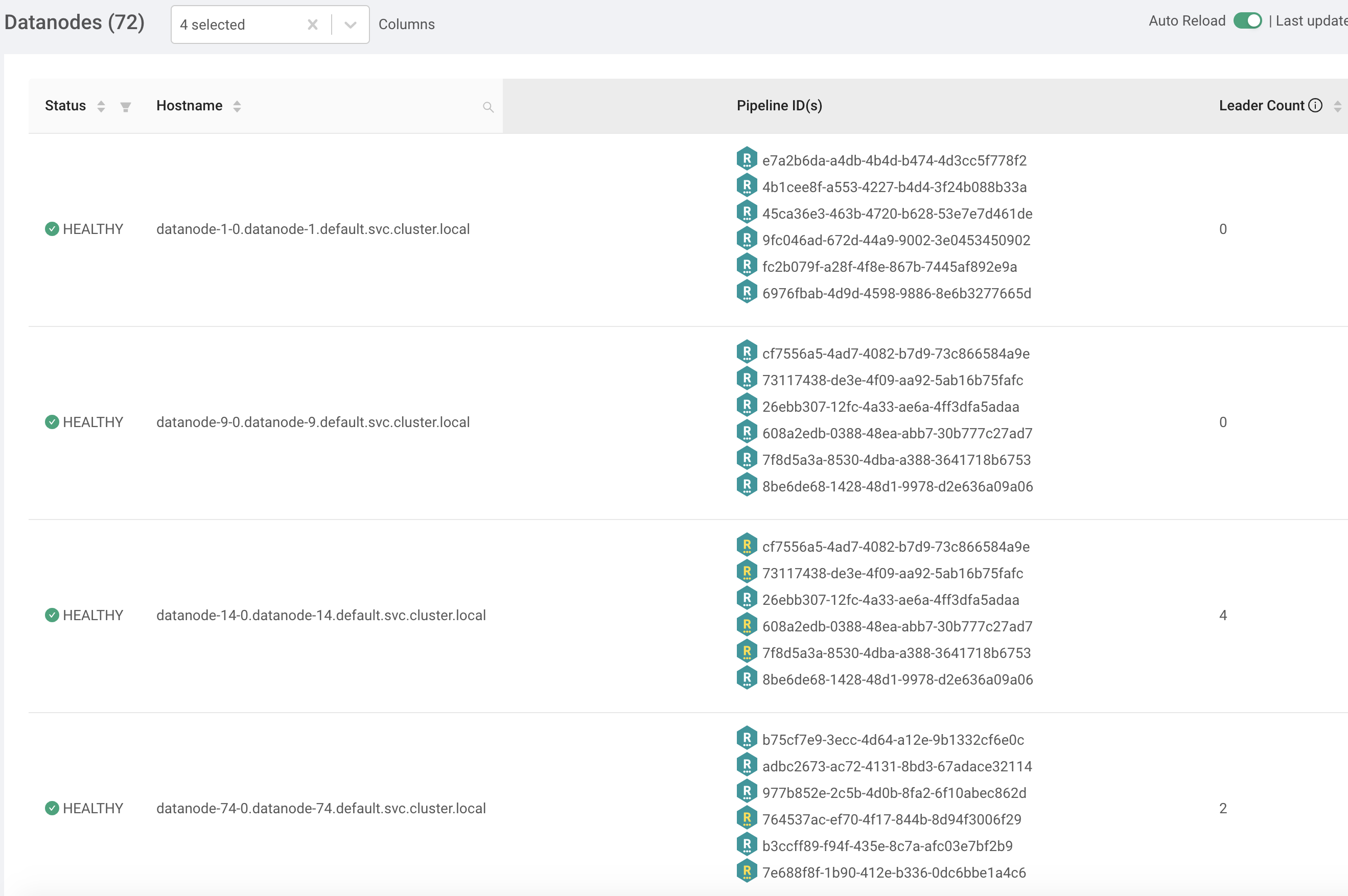

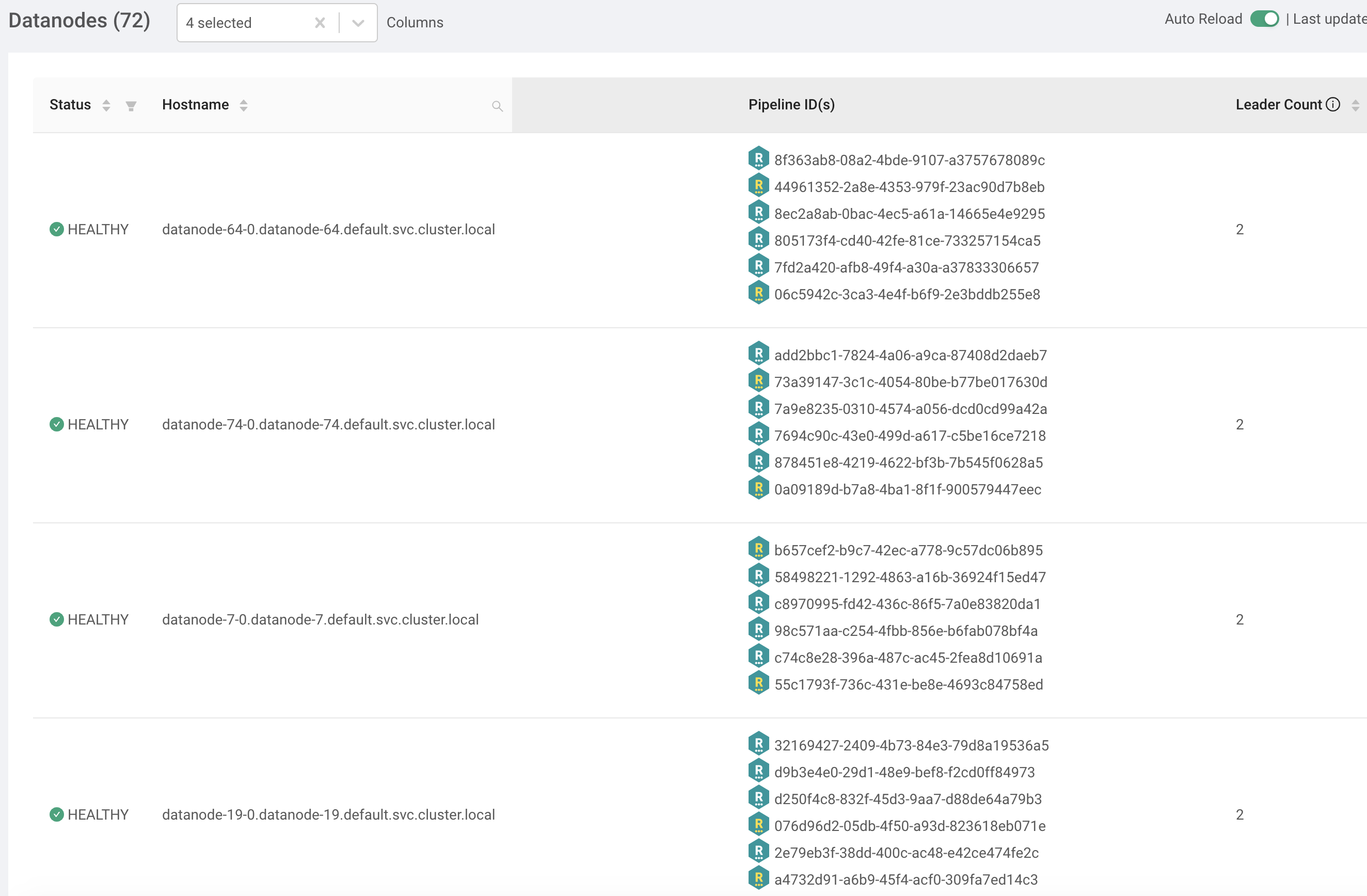

[GitHub] [hadoop-ozone] runzhiwang opened a new pull request #1371: HDDS-2922. Balance ratis leader distribution in datanodes

runzhiwang opened a new pull request #1371:

URL: https://github.com/apache/hadoop-ozone/pull/1371

## What changes were proposed in this pull request?

**What's the problem?**

When multi-raft is enabled, the leader distribution across datanodes is not balanced. In my test there are 72 datanodes and each datanode engages in 6 pipelines, so there are 144 pipelines. As the image shows, the leader counts of 4 of the datanodes are 0, 0, 4 and 2, which is not balanced. A Ratis leader not only accepts client requests but also replicates the log to 2 followers, while a follower only replicates the log from the leader, so the leader's load is at least 3 times that of a follower. So we need to balance the leaders.

**How to improve?**

With the guidance of @szetszwo, [RATIS-967](https://issues.apache.org/jira/browse/RATIS-967) not only supports priority in leader election, but also lets a lower-priority leader try to yield leadership to a higher-priority peer once the higher-priority peer's log catches up. So in Ozone:

1. Assign the suggested leader a higher priority and the 2 followers a lower priority, so that the leader distribution becomes balanced.
2. Record the suggested leader count in DatanodeDetails; when creating a pipeline, choose the datanode with the smallest suggested leader count as the suggested leader.
3. To avoid losing the suggested leader count in SCM when SCM restarts, also record it in the datanode; when SCM restarts, the datanode reports the suggested leader count to SCM.

As the following image shows, there are 72 datanodes and each datanode engages in 6 pipelines, so there are 144 pipelines. The leader count of every datanode is 2, without exception, so the leader distribution is balanced.

## What is the link to the Apache JIRA

https://issues.apache.org/jira/browse/HDDS-2922

## How was this patch tested?

Added a new UT.
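The selection rule in step 2 of this pull request (pick the datanode with the smallest suggested leader count) can be sketched roughly as follows. This is a minimal illustration, not the code from the patch: the class `SuggestedLeaderSketch`, its methods, and the use of plain strings for datanodes are all assumptions, and persisting the count on the datanode (step 3) is left out.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class SuggestedLeaderSketch {
  // Hypothetical stand-in for the suggested leader count kept per datanode.
  private final Map<String, Integer> suggestedLeaderCount = new HashMap<>();

  // Returns the pipeline member with the smallest suggested leader count
  // and records the choice; ties go to the earliest node in the list.
  String chooseSuggestedLeader(List<String> pipelineNodes) {
    if (pipelineNodes.isEmpty()) {
      throw new IllegalArgumentException("pipeline has no nodes");
    }
    String best = pipelineNodes.get(0);
    for (String node : pipelineNodes) {
      if (leaderCount(node) < leaderCount(best)) {
        best = node;
      }
    }
    suggestedLeaderCount.merge(best, 1, Integer::sum);
    return best;
  }

  int leaderCount(String node) {
    return suggestedLeaderCount.getOrDefault(node, 0);
  }

  public static void main(String[] args) {
    SuggestedLeaderSketch scm = new SuggestedLeaderSketch();
    List<String> nodes = Arrays.asList("dn1", "dn2", "dn3");
    // Three pipelines over the same three nodes: each node leads once.
    for (int i = 0; i < 3; i++) {
      System.out.println(scm.chooseSuggestedLeader(nodes));
    }
  }
}
```

The same arithmetic explains the numbers in the description: 72 datanodes in 6 pipelines each, with 3 nodes per pipeline, give 72 × 6 / 3 = 144 pipelines, and 144 leaders spread evenly over 72 datanodes is 2 per datanode.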

[jira] [Updated] (HDDS-2922) Balance ratis leader distribution in datanodes

[ https://issues.apache.org/jira/browse/HDDS-2922?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] runzhiwang updated HDDS-2922: - Summary: Balance ratis leader distribution in datanodes (was: Recommend leader host to Ratis via pipeline creation) > Balance ratis leader distribution in datanodes > -- > > Key: HDDS-2922 > URL: https://issues.apache.org/jira/browse/HDDS-2922 > Project: Hadoop Distributed Data Store > Issue Type: Improvement >Reporter: Li Cheng >Assignee: runzhiwang >Priority: Major > > Ozone should be able to recommend leader host to Ratis via pipeline creation. > The leader host can be recommended based on rack awareness and load balance. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

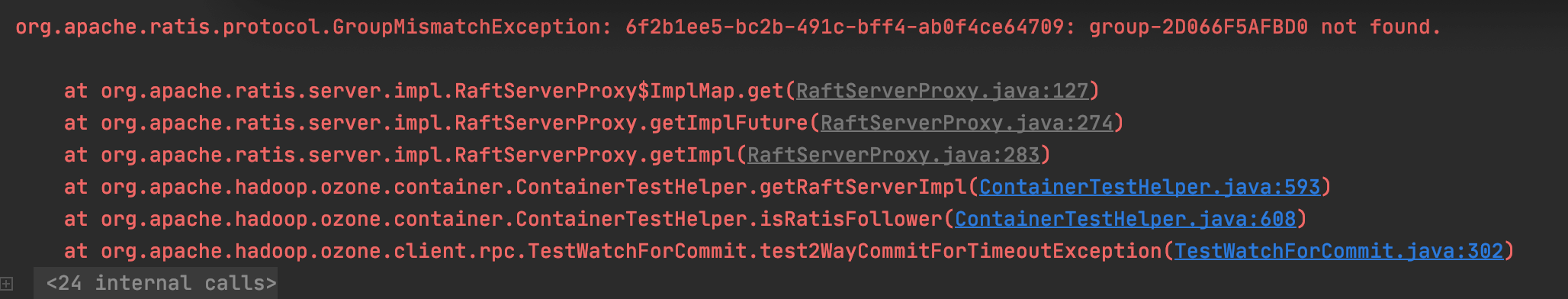

[jira] [Updated] (HDDS-4176) Fix failed UT: test2WayCommitForTimeoutException

[ https://issues.apache.org/jira/browse/HDDS-4176?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HDDS-4176: - Labels: pull-request-available (was: ) > Fix failed UT: test2WayCommitForTimeoutException > > > Key: HDDS-4176 > URL: https://issues.apache.org/jira/browse/HDDS-4176 > Project: Hadoop Distributed Data Store > Issue Type: Bug >Reporter: runzhiwang >Assignee: runzhiwang >Priority: Major > Labels: pull-request-available > > org.apache.ratis.protocol.GroupMismatchException: > 6f2b1ee5-bc2b-491c-bff4-ab0f4ce64709: group-2D066F5AFBD0 not found. > at > org.apache.ratis.server.impl.RaftServerProxy$ImplMap.get(RaftServerProxy.java:127) > at > org.apache.ratis.server.impl.RaftServerProxy.getImplFuture(RaftServerProxy.java:274) > at > org.apache.ratis.server.impl.RaftServerProxy.getImpl(RaftServerProxy.java:283) > at > org.apache.hadoop.ozone.container.ContainerTestHelper.getRaftServerImpl(ContainerTestHelper.java:593) > at > org.apache.hadoop.ozone.container.ContainerTestHelper.isRatisFollower(ContainerTestHelper.java:608) > at > org.apache.hadoop.ozone.client.rpc.TestWatchForCommit.test2WayCommitForTimeoutException(TestWatchForCommit.java:302) -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] runzhiwang opened a new pull request #1370: HDDS-4176. Fix failed UT: test2WayCommitForTimeoutException

runzhiwang opened a new pull request #1370:

URL: https://github.com/apache/hadoop-ozone/pull/1370

## What changes were proposed in this pull request?

What's the problem?

What's the reason for the failed UT?

The cluster is created with 9 nodes, and the following code tries to shut down a follower of the pipeline. The pipeline only exists on 3 nodes, but the code checks all 9 nodes, so the pipeline cannot be found on the other 6 nodes.

```
for (HddsDatanodeService dn : cluster.getHddsDatanodes()) {
  // shutdown the ratis follower
  if (ContainerTestHelper.isRatisFollower(dn, pipeline)) {
    cluster.shutdownHddsDatanode(dn.getDatanodeDetails());
    break;
  }
}
```
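The failure mode and the guard that avoids it can be reproduced in a small self-contained sketch. Everything here is a stand-in: `FollowerLookupSketch`, string node names, and the `IllegalStateException` are assumptions that imitate a follower lookup which fails for nodes outside the pipeline (as in the `GroupMismatchException` from the JIRA). The guard checks pipeline membership before asking whether a node is a follower.

```java
import java.util.Arrays;
import java.util.List;

final class FollowerLookupSketch {
  // Stand-in for the follower check: it fails for nodes that are not
  // members of the pipeline, like the "group not found" error in the UT.
  static boolean isFollower(String node, List<String> pipelineNodes,
      String leader) {
    if (!pipelineNodes.contains(node)) {
      throw new IllegalStateException(node + ": group not found");
    }
    return !node.equals(leader);
  }

  // Walks every node in the cluster but only queries pipeline members.
  static String firstFollower(List<String> clusterNodes,
      List<String> pipelineNodes, String leader) {
    for (String node : clusterNodes) {
      if (pipelineNodes.contains(node)
          && isFollower(node, pipelineNodes, leader)) {
        return node;
      }
    }
    return null; // no follower found
  }

  public static void main(String[] args) {
    List<String> cluster = Arrays.asList(
        "dn0", "dn1", "dn2", "dn3", "dn4", "dn5", "dn6", "dn7", "dn8");
    List<String> pipeline = Arrays.asList("dn3", "dn4", "dn5");
    System.out.println(firstFollower(cluster, pipeline, "dn3"));
  }
}
```

Without the membership check, the loop would call `isFollower` on `dn0` first and fail before reaching any pipeline member.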

## What is the link to the Apache JIRA

https://issues.apache.org/jira/browse/HDDS-4176

## How was this patch tested?

Existing UT.


[GitHub] [hadoop-ozone] cku328 commented on a change in pull request #1367: HDDS-4169. Fix some minor errors in StorageContainerManager.md

cku328 commented on a change in pull request #1367: URL: https://github.com/apache/hadoop-ozone/pull/1367#discussion_r480582833 ## File path: hadoop-hdds/docs/content/concept/StorageContainerManager.md ## @@ -46,7 +46,7 @@ replicas. If there is a loss of data node or a disk, SCM detects it and instructs data nodes make copies of the missing blocks to ensure high availability. - 3. **SCM's Ceritificate authority** is in + 3. **SCM's Certificate authority** is in Review comment: Thanks @amaliujia for the suggestion. Both names sound good to me. I'll update the patch based on your suggestion. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Commented] (HDDS-4157) doc/content/concept does not match the title in doc/content/concept/index.md

[ https://issues.apache.org/jira/browse/HDDS-4157?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188091#comment-17188091 ] Xiang Zhang commented on HDDS-4157: --- cc [~cxorm] [~cq19430213] [~xyao] > doc/content/concept does not match the title in doc/content/concept/index.md > > > Key: HDDS-4157 > URL: https://issues.apache.org/jira/browse/HDDS-4157 > Project: Hadoop Distributed Data Store > Issue Type: Improvement > Components: documentation >Reporter: Xiang Zhang >Priority: Major > > According to my understanding, the title in index.md should be the same as > the directory name. With that being said, the title in > doc/content/concept/index.md is updated to architecture, should the directory > name also be updated to architecture (now is concept) ? -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] iamabug commented on a change in pull request #1368: HDDS-4156. add hierarchical layout to Chinese doc

iamabug commented on a change in pull request #1368: URL: https://github.com/apache/hadoop-ozone/pull/1368#discussion_r480564649 ## File path: hadoop-hdds/docs/content/concept/_index.zh.md ## @@ -2,7 +2,7 @@ title: 概念 date: "2017-10-10" menu: main -weight: 6 +weight: 3 Review comment: Could you please explain the reason why `weight` is changed here? @flirmnave This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-4176) Fix failed UT: test2WayCommitForTimeoutException

[ https://issues.apache.org/jira/browse/HDDS-4176?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] runzhiwang updated HDDS-4176: - Description: org.apache.ratis.protocol.GroupMismatchException: 6f2b1ee5-bc2b-491c-bff4-ab0f4ce64709: group-2D066F5AFBD0 not found. at org.apache.ratis.server.impl.RaftServerProxy$ImplMap.get(RaftServerProxy.java:127) at org.apache.ratis.server.impl.RaftServerProxy.getImplFuture(RaftServerProxy.java:274) at org.apache.ratis.server.impl.RaftServerProxy.getImpl(RaftServerProxy.java:283) at org.apache.hadoop.ozone.container.ContainerTestHelper.getRaftServerImpl(ContainerTestHelper.java:593) at org.apache.hadoop.ozone.container.ContainerTestHelper.isRatisFollower(ContainerTestHelper.java:608) at org.apache.hadoop.ozone.client.rpc.TestWatchForCommit.test2WayCommitForTimeoutException(TestWatchForCommit.java:302) > Fix failed UT: test2WayCommitForTimeoutException > > > Key: HDDS-4176 > URL: https://issues.apache.org/jira/browse/HDDS-4176 > Project: Hadoop Distributed Data Store > Issue Type: Bug >Reporter: runzhiwang >Assignee: runzhiwang >Priority: Major > > org.apache.ratis.protocol.GroupMismatchException: > 6f2b1ee5-bc2b-491c-bff4-ab0f4ce64709: group-2D066F5AFBD0 not found. 
> at > org.apache.ratis.server.impl.RaftServerProxy$ImplMap.get(RaftServerProxy.java:127) > at > org.apache.ratis.server.impl.RaftServerProxy.getImplFuture(RaftServerProxy.java:274) > at > org.apache.ratis.server.impl.RaftServerProxy.getImpl(RaftServerProxy.java:283) > at > org.apache.hadoop.ozone.container.ContainerTestHelper.getRaftServerImpl(ContainerTestHelper.java:593) > at > org.apache.hadoop.ozone.container.ContainerTestHelper.isRatisFollower(ContainerTestHelper.java:608) > at > org.apache.hadoop.ozone.client.rpc.TestWatchForCommit.test2WayCommitForTimeoutException(TestWatchForCommit.java:302) -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Created] (HDDS-4176) Fix failed UT: test2WayCommitForTimeoutException

runzhiwang created HDDS-4176: Summary: Fix failed UT: test2WayCommitForTimeoutException Key: HDDS-4176 URL: https://issues.apache.org/jira/browse/HDDS-4176 Project: Hadoop Distributed Data Store Issue Type: Bug Reporter: runzhiwang Assignee: runzhiwang -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-4156) add hierarchical layout to Chinese doc

[ https://issues.apache.org/jira/browse/HDDS-4156?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Xiang Zhang updated HDDS-4156: -- Description: English doc is updated a lot in https://issues.apache.org/jira/browse/HDDS-4042, and its flat structure becomes more hierarchical, to keep the consistency, we need to update the Chinese doc too. (was: English doc is updated a lot in https://issues.apache.org/jira/browse/HDDS-4042, and its structure turns into more ) > add hierarchical layout to Chinese doc > -- > > Key: HDDS-4156 > URL: https://issues.apache.org/jira/browse/HDDS-4156 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task >Reporter: Xiang Zhang >Assignee: Zheng Huang-Mu >Priority: Major > Labels: pull-request-available > > English doc is updated a lot in > https://issues.apache.org/jira/browse/HDDS-4042, and its flat structure > becomes more hierarchical, to keep the consistency, we need to update the > Chinese doc too. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Commented] (HDDS-4156) add hierarchical layout to Chinese doc

[ https://issues.apache.org/jira/browse/HDDS-4156?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188089#comment-17188089 ] Xiang Zhang commented on HDDS-4156: --- Thanks for the reminder [~cxorm], the description is added. > add hierarchical layout to Chinese doc > -- > > Key: HDDS-4156 > URL: https://issues.apache.org/jira/browse/HDDS-4156 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task >Reporter: Xiang Zhang >Assignee: Zheng Huang-Mu >Priority: Major > Labels: pull-request-available > > English doc is updated a lot in > https://issues.apache.org/jira/browse/HDDS-4042, and its flat structure > becomes more hierarchical, to keep the consistency, we need to update the > Chinese doc too. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-4156) add hierarchical layout to Chinese doc

[ https://issues.apache.org/jira/browse/HDDS-4156?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Xiang Zhang updated HDDS-4156: -- Description: English doc is updated a lot in https://issues.apache.org/jira/browse/HDDS-4042, and its structure turns into more (was: English doc is updated a lot in https://issues.apache.org/jira/browse/HDDS-4042, and the doc ) > add hierarchical layout to Chinese doc > -- > > Key: HDDS-4156 > URL: https://issues.apache.org/jira/browse/HDDS-4156 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task >Reporter: Xiang Zhang >Assignee: Zheng Huang-Mu >Priority: Major > Labels: pull-request-available > > English doc is updated a lot in > https://issues.apache.org/jira/browse/HDDS-4042, and its structure turns into > more -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-4156) add hierarchical layout to Chinese doc

[ https://issues.apache.org/jira/browse/HDDS-4156?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Xiang Zhang updated HDDS-4156: -- Description: English doc is updated a lot in https://issues.apache.org/jira/browse/HDDS-4042, and the doc > add hierarchical layout to Chinese doc > -- > > Key: HDDS-4156 > URL: https://issues.apache.org/jira/browse/HDDS-4156 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task >Reporter: Xiang Zhang >Assignee: Zheng Huang-Mu >Priority: Major > Labels: pull-request-available > > English doc is updated a lot in > https://issues.apache.org/jira/browse/HDDS-4042, and the doc -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Created] (HDDS-4175) Implement Datanode Finalization

Aravindan Vijayan created HDDS-4175: --- Summary: Implement Datanode Finalization Key: HDDS-4175 URL: https://issues.apache.org/jira/browse/HDDS-4175 Project: Hadoop Distributed Data Store Issue Type: Sub-task Components: Ozone Datanode Reporter: Aravindan Vijayan Fix For: 1.1.0 * Create FinalizeCommand in SCM and Datanode protocol. * Create FinalizeCommand Handler in Datanode. * Datanode Finalization should FAIL if there are open containers on it. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Created] (HDDS-4174) Add current HDDS layout version to Datanode heartbeat and registration.

Aravindan Vijayan created HDDS-4174: --- Summary: Add current HDDS layout version to Datanode heartbeat and registration. Key: HDDS-4174 URL: https://issues.apache.org/jira/browse/HDDS-4174 Project: Hadoop Distributed Data Store Issue Type: Sub-task Components: Ozone Datanode Reporter: Aravindan Vijayan Fix For: 1.1.0 Add the layout version as a field to proto. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-4104) Provide a way to get the default value and key of java-based-configuration easily

[ https://issues.apache.org/jira/browse/HDDS-4104?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HDDS-4104: - Labels: pull-request-available (was: ) > Provide a way to get the default value and key of java-based-configuration > easily > - > > Key: HDDS-4104 > URL: https://issues.apache.org/jira/browse/HDDS-4104 > Project: Hadoop Distributed Data Store > Issue Type: Improvement >Affects Versions: 0.6.0 >Reporter: maobaolong >Priority: Minor > Labels: pull-request-available > > - getDefaultValue > - getKeyName -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Created] (HDDS-4173) Implement HDDS Version management using the LayoutVersionManager interface.

Aravindan Vijayan created HDDS-4173: --- Summary: Implement HDDS Version management using the LayoutVersionManager interface. Key: HDDS-4173 URL: https://issues.apache.org/jira/browse/HDDS-4173 Project: Hadoop Distributed Data Store Issue Type: Sub-task Components: Ozone Datanode, SCM Affects Versions: 1.1.0 Reporter: Aravindan Vijayan Fix For: 1.1.0 * Create HDDS Layout Feature Catalog similar to the OM Layout Feature Catalog. * Any layout change to SCM and Datanode needs to be recorded here as a Layout Feature. * This includes new SCM HA requests, new container layouts in DN etc. * Create a HDDSLayoutVersionManager similar to OMLayoutVersionManager. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] maobaolong opened a new pull request #1369: HDDS-4104. Provide a way to get the default value and key of java-based-configuration easily

maobaolong opened a new pull request #1369: URL: https://github.com/apache/hadoop-ozone/pull/1369 ## What changes were proposed in this pull request? Provide a way to get the default value and key of java-based-configuration easily. ## What is the link to the Apache JIRA HDDS-4104 ## How was this patch tested? This PR with the related unit tests. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org
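As a rough illustration of what looking up a key name and default value from an annotated configuration class could look like, here is a reflection-based sketch. It does not reproduce the API added by this pull request: the `Setting` annotation, the `ExampleConfig` class, the key `example.retry.count`, and both helper methods are hypothetical.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;

final class ConfigLookupSketch {
  // Hypothetical annotation carrying a key and a default value.
  @Retention(RetentionPolicy.RUNTIME)
  @Target(ElementType.FIELD)
  @interface Setting {
    String key();
    String defaultValue();
  }

  // Hypothetical configuration class with one annotated field.
  static final class ExampleConfig {
    @Setting(key = "example.retry.count", defaultValue = "3")
    private int retryCount;
  }

  static String getKeyName(Class<?> type, String fieldName) {
    return settingOf(type, fieldName).key();
  }

  static String getDefaultValue(Class<?> type, String fieldName) {
    return settingOf(type, fieldName).defaultValue();
  }

  // Reads the annotation from the named field, failing loudly if the
  // field does not exist or is not annotated.
  private static Setting settingOf(Class<?> type, String fieldName) {
    try {
      Field field = type.getDeclaredField(fieldName);
      Setting setting = field.getAnnotation(Setting.class);
      if (setting == null) {
        throw new IllegalArgumentException(fieldName + " is not annotated");
      }
      return setting;
    } catch (NoSuchFieldException e) {
      throw new IllegalArgumentException("no such field: " + fieldName, e);
    }
  }

  public static void main(String[] args) {
    System.out.println(getKeyName(ExampleConfig.class, "retryCount"));
    System.out.println(getDefaultValue(ExampleConfig.class, "retryCount"));
  }
}
```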

[jira] [Updated] (HDDS-4104) Provide a way to get the default value and key of java-based-configuration easily

[ https://issues.apache.org/jira/browse/HDDS-4104?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] maobaolong updated HDDS-4104: - Description: - getDefaultValue - getKeyName was: - getDefaultValue - getKeyName - getValue > Provide a way to get the default value and key of java-based-configuration > easily > - > > Key: HDDS-4104 > URL: https://issues.apache.org/jira/browse/HDDS-4104 > Project: Hadoop Distributed Data Store > Issue Type: Improvement >Affects Versions: 0.6.0 >Reporter: maobaolong >Priority: Minor > > - getDefaultValue > - getKeyName -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Created] (HDDS-4172) Implement Finalize command in Ozone Manager server.

Aravindan Vijayan created HDDS-4172: --- Summary: Implement Finalize command in Ozone Manager server. Key: HDDS-4172 URL: https://issues.apache.org/jira/browse/HDDS-4172 Project: Hadoop Distributed Data Store Issue Type: Sub-task Components: Ozone Manager Affects Versions: 0.7.0 Reporter: Aravindan Vijayan Assignee: Istvan Fajth Fix For: 0.7.0 Using changes from HDDS-4141 and HDDS-3829, we can finish the OM finalization logic by implementing the Ratis request to Finalize. On the server side, this finalize command should update the internal Upgrade state to "Finalized". This operation can be a No-Op if there are no layout changes across an upgrade. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-4141) Implement Finalize command in Ozone Manager client.

[ https://issues.apache.org/jira/browse/HDDS-4141?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aravindan Vijayan updated HDDS-4141: Description: * On the client side, add a new command to finalize OM through CLI. (was: * On the client side, add a new command to finalize OM through CLI. * On the server side, this finalize command should update the internal Upgrade state to "Finalized". This operation can be a No-Op if there are no layout changes across an upgrade.) > Implement Finalize command in Ozone Manager client. > --- > > Key: HDDS-4141 > URL: https://issues.apache.org/jira/browse/HDDS-4141 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: Ozone Manager >Reporter: Aravindan Vijayan >Assignee: Istvan Fajth >Priority: Major > Fix For: 0.7.0 > > > * On the client side, add a new command to finalize OM through CLI. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-4141) Implement Finalize command in Ozone Manager client.

[ https://issues.apache.org/jira/browse/HDDS-4141?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aravindan Vijayan updated HDDS-4141: Summary: Implement Finalize command in Ozone Manager client. (was: Implement Finalize command in Ozone Manager) > Implement Finalize command in Ozone Manager client. > --- > > Key: HDDS-4141 > URL: https://issues.apache.org/jira/browse/HDDS-4141 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: Ozone Manager >Reporter: Aravindan Vijayan >Assignee: Istvan Fajth >Priority: Major > Fix For: 0.7.0 > > > * On the client side, add a new command to finalize OM through CLI. > * On the server side, this finalize command should update the internal > Upgrade state to "Finalized". This operation can be a No-Op if there are no > layout changes across an upgrade. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[GitHub] [hadoop-ozone] avijayanhwx commented on a change in pull request #1322: HDDS-3829. Introduce Layout Feature interface in Ozone.

avijayanhwx commented on a change in pull request #1322:

URL: https://github.com/apache/hadoop-ozone/pull/1322#discussion_r480488966

##

File path:

hadoop-hdds/common/src/main/java/org/apache/hadoop/ozone/upgrade/AbstractLayoutVersionManager.java

##

@@ -0,0 +1,110 @@
+/**
+ * Licensed to the Apache Software Foundation (ASF) under one
+ * or more contributor license agreements. See the NOTICE file
+ * distributed with this work for additional information
+ * regarding copyright ownership. The ASF licenses this file
+ * to you under the Apache License, Version 2.0 (the
+ * "License"); you may not use this file except in compliance
+ * with the License. You may obtain a copy of the License at
+ *
+ * http://www.apache.org/licenses/LICENSE-2.0
+ *
+ * Unless required by applicable law or agreed to in writing, software
+ * distributed under the License is distributed on an "AS IS" BASIS,
+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ * See the License for the specific language governing permissions and
+ * limitations under the License.
+ */
+
+package org.apache.hadoop.ozone.upgrade;
+
+import java.util.Arrays;
+import java.util.HashMap;
+import java.util.Iterator;
+import java.util.Map;
+import java.util.Optional;
+import java.util.TreeMap;
+
+import com.google.common.base.Preconditions;
+
+/**
+ * Layout Version Manager containing generic method implementations.
+ */
+@SuppressWarnings("visibilitymodifier")
+public abstract class AbstractLayoutVersionManager implements
+    LayoutVersionManager {
+
+  protected int metadataLayoutVersion; // MLV.
+  protected int softwareLayoutVersion; // SLV.
+  protected TreeMap<Integer, LayoutFeature> features = new TreeMap<>();
+  protected Map<String, LayoutFeature> featureMap = new HashMap<>();
+  protected volatile boolean isInitialized = false;
+
+  protected void init(int version, LayoutFeature[] lfs) {
+    if (!isInitialized) {
+      metadataLayoutVersion = version;
+      initializeFeatures(lfs);
+      softwareLayoutVersion = features.lastKey();
+      isInitialized = true;
+    }
+  }
+
+  protected void initializeFeatures(LayoutFeature[] lfs) {
+    Arrays.stream(lfs).forEach(f -> {
+      Preconditions.checkArgument(!featureMap.containsKey(f.name()));
+      Preconditions.checkArgument(!features.containsKey(f.layoutVersion()));
+      features.put(f.layoutVersion(), f);
+      featureMap.put(f.name(), f);
+    });
+  }
+
+  public int getMetadataLayoutVersion() {
+    return metadataLayoutVersion;
+  }
+
+  public int getSoftwareLayoutVersion() {
+    return softwareLayoutVersion;
+  }
+
+  public boolean needsFinalization() {
+    return metadataLayoutVersion < softwareLayoutVersion;
+  }
+
+  public boolean isAllowed(LayoutFeature layoutFeature) {
+    return layoutFeature.layoutVersion() <= metadataLayoutVersion;

Review comment:

@linyiqun It is by design that newer "layout" features cannot work in

Ozone until finalize. We will have a concept of Minimum Compatible layout

version in the future, which is used to denote the minimum Layout version from

which you can upgrade to the current layout version.
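The gating behavior discussed in this review comment can be shown with a reduced sketch. `needsFinalization` and `isAllowed` follow the comparisons in the quoted diff; the class `LayoutGateSketch`, the integer feature argument, and in particular `finalizeUpgrade` (which simply raises the metadata layout version to the software layout version) are assumptions made for illustration, not the project's finalization logic.

```java
final class LayoutGateSketch {
  private int metadataLayoutVersion;        // MLV: layout currently on disk
  private final int softwareLayoutVersion;  // SLV: newest layout the software knows

  LayoutGateSketch(int metadataLayoutVersion, int softwareLayoutVersion) {
    this.metadataLayoutVersion = metadataLayoutVersion;
    this.softwareLayoutVersion = softwareLayoutVersion;
  }

  // Same comparison as in the quoted diff.
  boolean needsFinalization() {
    return metadataLayoutVersion < softwareLayoutVersion;
  }

  // A feature is usable only if its layout version is already finalized.
  boolean isAllowed(int featureLayoutVersion) {
    return featureLayoutVersion <= metadataLayoutVersion;
  }

  // Assumption: finalization moves the MLV up to the SLV.
  void finalizeUpgrade() {
    metadataLayoutVersion = softwareLayoutVersion;
  }

  public static void main(String[] args) {
    LayoutGateSketch manager = new LayoutGateSketch(1, 2);
    System.out.println(manager.isAllowed(2)); // false: not finalized yet
    manager.finalizeUpgrade();
    System.out.println(manager.isAllowed(2)); // true: MLV now equals SLV
  }
}
```

This matches the design stated in the comment: a feature introduced at a newer layout version stays blocked until finalize, even though the software already ships it.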


[jira] [Updated] (HDDS-3829) Introduce Layout Feature interface in Ozone

[ https://issues.apache.org/jira/browse/HDDS-3829?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aravindan Vijayan updated HDDS-3829: Resolution: Fixed Status: Resolved (was: Patch Available) > Introduce Layout Feature interface in Ozone > --- > > Key: HDDS-3829 > URL: https://issues.apache.org/jira/browse/HDDS-3829 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: Ozone Manager >Reporter: Aravindan Vijayan >Assignee: Aravindan Vijayan >Priority: Major > Labels: pull-request-available > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: ozone-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: ozone-issues-h...@hadoop.apache.org

[jira] [Updated] (HDDS-3829) Introduce Layout Feature interface in Ozone

[ https://issues.apache.org/jira/browse/HDDS-3829?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aravindan Vijayan updated HDDS-3829: Description: * Implement the concept of a 'Layout Feature' in Ozone (with sample usage in Ozone Manager), which defines a specific change in on-disk layout in Ozone. * Every feature is associated with a layout version, and an API corresponding to the feature cannot be invoked (throws NOT_SUPPORTED_OPERATION) before finalization. * Created an annotation based 'aspect' for "guarding" new APIs that are introduced by Layout Features. Check out TestOMLayoutFeatureAspect#testCheckLayoutFeature. * Added sample features and tests for ease of review (To be removed before commit). * Created an abstract VersionManager and an inherited OM Version manager to initialize features, check if feature is allowed, check need to finalize, do finalization. > Introduce Layout Feature interface in Ozone > --- > > Key: HDDS-3829 > URL: https://issues.apache.org/jira/browse/HDDS-3829 > Project: Hadoop Distributed Data Store > Issue Type: Sub-task > Components: Ozone Manager >Reporter: Aravindan Vijayan >Assignee: Aravindan Vijayan >Priority: Major > Labels: pull-request-available > > * Implement the concept of a 'Layout Feature' in Ozone (with sample usage in > Ozone Manager), which defines a specific change in on-disk layout in Ozone. > * Every feature is associated with a layout version, and an API corresponding > to the feature cannot be invoked (throws NOT_SUPPORTED_OPERATION) before > finalization. > * Created an annotation based 'aspect' for "guarding" new APIs that are > introduced by Layout Features. Check out > TestOMLayoutFeatureAspect#testCheckLayoutFeature. > * Added sample features and tests for ease of review (To be removed before > commit). > * Created an abstract VersionManager and an inherited OM Version manager to > initialize features, check if feature is allowed, check need to finalize, do > finalization. 

[GitHub] [hadoop-ozone] amaliujia commented on a change in pull request #1342: HDDS-4135: In ContainerStateManagerV2, modification of RocksDB should be consistent with that of memory state.

amaliujia commented on a change in pull request #1342:

URL: https://github.com/apache/hadoop-ozone/pull/1342#discussion_r480481261

##
File path: hadoop-hdds/server-scm/src/main/java/org/apache/hadoop/hdds/scm/container/ContainerStateManagerImpl.java
##

@@ -334,8 +347,12 @@ ContainerInfo getMatchingContainer(final long size, String owner,
     throw new UnsupportedOperationException("Not yet implemented!");
   }
-  public void removeContainer(final HddsProtos.ContainerID id) {
-    containers.removeContainer(ContainerID.getFromProtobuf(id));
+  @Override
+  public void removeContainer(final HddsProtos.ContainerID id)

Review comment:

So there is no easy way to test such changes?

[GitHub] [hadoop-ozone] amaliujia commented on a change in pull request #1367: HDDS-4169. Fix some minor errors in StorageContainerManager.md

amaliujia commented on a change in pull request #1367:

URL: https://github.com/apache/hadoop-ozone/pull/1367#discussion_r480480468

##
File path: hadoop-hdds/docs/content/concept/StorageContainerManager.md
##

@@ -46,7 +46,7 @@ replicas. If there is a loss of data node or a disk, SCM
 detects it and instructs data nodes make copies of the
 missing blocks to ensure high availability.
- 3. **SCM's Ceritificate authority** is in
+ 3. **SCM's Certificate authority** is in

Review comment:

Should it be both upper case or lower case for the first character? E.g. either `Certificate Authority` or `certificate authority`?

[jira] [Updated] (HDDS-4121) Implement OmMetadataMangerImpl#getExpiredOpenKeys

[ https://issues.apache.org/jira/browse/HDDS-4121?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Bharat Viswanadham updated HDDS-4121:

Component/s: Ozone Manager (was: OM HA)

> Implement OmMetadataMangerImpl#getExpiredOpenKeys
>
> Key: HDDS-4121
> URL: https://issues.apache.org/jira/browse/HDDS-4121
> Project: Hadoop Distributed Data Store
> Issue Type: Sub-task
> Components: Ozone Manager
> Reporter: Ethan Rose
> Assignee: Ethan Rose
> Priority: Major
> Labels: pull-request-available
> Fix For: 1.1.0
>
> Implement the getExpiredOpenKeys method in OmMetadataMangerImpl to return
> keys in the open key table that are older than a configurable time interval.
> The method will be modified to take a parameter limiting how many keys are
> returned. The time interval will be configurable with the existing
> ozone.open.key.expire.threshold setting, which currently has a default value
> of 1 day.
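The behaviour described for getExpiredOpenKeys (return open keys older than a time threshold, capped by a limit) might look roughly like the sketch below. It is an assumption-laden illustration, not the merged implementation: the open key table is modelled as a plain in-memory map from key name to creation time, and the class name and parameter names (`ExpiredOpenKeysSketch`, `threshold`, `now`, `limit`) are hypothetical.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch; the real open key table is not an in-memory map. */
public class ExpiredOpenKeysSketch {

  /**
   * Returns at most {@code limit} key names whose creation time is older than
   * {@code threshold} relative to {@code now}, in table iteration order.
   */
  static List<String> getExpiredOpenKeys(Map<String, Instant> openKeyTable,
      Duration threshold, Instant now, int limit) {
    List<String> expired = new ArrayList<>();
    Instant cutoff = now.minus(threshold);
    for (Map.Entry<String, Instant> entry : openKeyTable.entrySet()) {
      if (expired.size() >= limit) {
        break; // stop scanning once the cap is reached
      }
      if (entry.getValue().isBefore(cutoff)) {
        expired.add(entry.getKey());
      }
    }
    return expired;
  }

  public static void main(String[] args) {
    Instant now = Instant.parse("2020-09-01T00:00:00Z");
    Map<String, Instant> table = new LinkedHashMap<>();
    table.put("/vol/bucket/a", now.minus(Duration.ofDays(2)));
    table.put("/vol/bucket/b", now.minus(Duration.ofHours(1)));
    table.put("/vol/bucket/c", now.minus(Duration.ofDays(3)));

    // One-day threshold, mirroring the default mentioned in the issue.
    System.out.println(getExpiredOpenKeys(table, Duration.ofDays(1), now, 10));
  }
}
```

Passing `now` explicitly rather than reading the system clock inside the method is a design choice of this sketch; it keeps the expiry check deterministic in tests.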

[jira] [Resolved] (HDDS-4121) Implement OmMetadataMangerImpl#getExpiredOpenKeys

[ https://issues.apache.org/jira/browse/HDDS-4121?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Bharat Viswanadham resolved HDDS-4121.

Fix Version/s: 1.1.0
Resolution: Fixed

> Implement OmMetadataMangerImpl#getExpiredOpenKeys
>
> Key: HDDS-4121
> URL: https://issues.apache.org/jira/browse/HDDS-4121
> Project: Hadoop Distributed Data Store
> Issue Type: Sub-task
> Components: OM HA
> Reporter: Ethan Rose
> Assignee: Ethan Rose
> Priority: Minor
> Labels: pull-request-available
> Fix For: 1.1.0

[jira] [Updated] (HDDS-4121) Implement OmMetadataMangerImpl#getExpiredOpenKeys

[ https://issues.apache.org/jira/browse/HDDS-4121?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Bharat Viswanadham updated HDDS-4121:

Affects Version/s: 1.0.0 (was: 0.6.0)

> Implement OmMetadataMangerImpl#getExpiredOpenKeys
>
> Key: HDDS-4121
> URL: https://issues.apache.org/jira/browse/HDDS-4121
> Project: Hadoop Distributed Data Store
> Issue Type: Sub-task
> Components: Ozone Manager
> Affects Versions: 1.0.0
> Reporter: Ethan Rose
> Assignee: Ethan Rose
> Priority: Major
> Labels: pull-request-available
> Fix For: 1.1.0

[jira] [Updated] (HDDS-4121) Implement OmMetadataMangerImpl#getExpiredOpenKeys

[ https://issues.apache.org/jira/browse/HDDS-4121?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Bharat Viswanadham updated HDDS-4121:

Priority: Major (was: Minor)

> Implement OmMetadataMangerImpl#getExpiredOpenKeys
>
> Key: HDDS-4121
> URL: https://issues.apache.org/jira/browse/HDDS-4121
> Project: Hadoop Distributed Data Store
> Issue Type: Sub-task
> Components: OM HA
> Reporter: Ethan Rose
> Assignee: Ethan Rose
> Priority: Major
> Labels: pull-request-available
> Fix For: 1.1.0

[jira] [Updated] (HDDS-4121) Implement OmMetadataMangerImpl#getExpiredOpenKeys

[ https://issues.apache.org/jira/browse/HDDS-4121?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Bharat Viswanadham updated HDDS-4121:

Affects Version/s: 0.6.0

> Implement OmMetadataMangerImpl#getExpiredOpenKeys
>
> Key: HDDS-4121
> URL: https://issues.apache.org/jira/browse/HDDS-4121
> Project: Hadoop Distributed Data Store
> Issue Type: Sub-task
> Components: Ozone Manager
> Affects Versions: 0.6.0
> Reporter: Ethan Rose
> Assignee: Ethan Rose
> Priority: Major
> Labels: pull-request-available
> Fix For: 1.1.0

[GitHub] [hadoop-ozone] bharatviswa504 commented on pull request #1351: HDDS-4121. Implement OmMetadataMangerImpl#getExpiredOpenKeys.

bharatviswa504 commented on pull request #1351:

URL: https://github.com/apache/hadoop-ozone/pull/1351#issuecomment-684063895

Thank you @errose28 for the contribution.

[GitHub] [hadoop-ozone] bharatviswa504 merged pull request #1351: HDDS-4121. Implement OmMetadataMangerImpl#getExpiredOpenKeys.

bharatviswa504 merged pull request #1351:

URL: https://github.com/apache/hadoop-ozone/pull/1351

[jira] [Updated] (HDDS-4150) recon.api.TestEndpoints is flaky

[ https://issues.apache.org/jira/browse/HDDS-4150?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Aravindan Vijayan updated HDDS-4150:

Fix Version/s: (was: 0.7.0)

> recon.api.TestEndpoints is flaky
>
> Key: HDDS-4150
> URL: https://issues.apache.org/jira/browse/HDDS-4150
> Project: Hadoop Distributed Data Store
> Issue Type: Bug
> Affects Versions: 0.7.0
> Reporter: Marton Elek
> Assignee: Vivek Ratnavel Subramanian
> Priority: Blocker
>
> Failed on the PR:
> https://github.com/apache/hadoop-ozone/pull/1349
> And on the master:
> https://github.com/elek/ozone-build-results/blob/master/2020/08/25/2533/unit/hadoop-ozone/recon/org.apache.hadoop.ozone.recon.api.TestEndpoints.txt
> and here:
> https://github.com/elek/ozone-build-results/blob/master/2020/08/22/2499/unit/hadoop-ozone/recon/org.apache.hadoop.ozone.recon.api.TestEndpoints.txt

[jira] [Updated] (HDDS-4150) recon.api.TestEndpoints is flaky