[GitHub] spark pull request #20812: [SPARK-23669] Executors fetch jars and name the j...

Github user jinxing64 commented on a diff in the pull request:

https://github.com/apache/spark/pull/20812#discussion_r175010008

--- Diff: core/src/main/scala/org/apache/spark/executor/Executor.scala ---

@@ -752,11 +752,10 @@ private[spark] class Executor(

if (currentTimeStamp < timestamp) {

logInfo("Fetching " + name + " with timestamp " + timestamp)

// Fetch file with useCache mode, close cache for local mode.

- Utils.fetchFile(name, new File(SparkFiles.getRootDirectory()),

conf,

-env.securityManager, hadoopConf, timestamp, useCache =

!isLocal)

+ val url = Utils.fetchFile(name, new

File(SparkFiles.getRootDirectory()), conf,

+env.securityManager, hadoopConf, timestamp, useCache =

!isLocal,

+conf.getBoolean("spark.jars.withDecoratedName",

false)).toURI.toURL

currentJars(name) = timestamp

- // Add it to our class loader

- val url = new File(SparkFiles.getRootDirectory(),

localName).toURI.toURL

--- End diff --

Well, I think we don't need it . The `File` returned by `Utils.fetchFile`

is the local file. We don't need `localName` to initialize the local file here.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20794: [SPARK-23644][CORE][UI] Use absolute path for REST call ...

Github user jerryshao commented on the issue: https://github.com/apache/spark/pull/20794 Merging to master and brach 2.3. Thanks! --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20659: [DO-NOT-MERGE] Try to update Hive to 2.3.2

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20659 **[Test build #88299 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88299/testReport)** for PR 20659 at commit [`b92918b`](https://github.com/apache/spark/commit/b92918b0da63afaae0a8f22971df004186360953). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20795: [SPARK-23486]cache the function name from the catalog fo...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20795 **[Test build #88292 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88292/testReport)** for PR 20795 at commit [`1e5ba02`](https://github.com/apache/spark/commit/1e5ba02f942f4aa7d6a24d76c2123700663f401f). * This patch **fails due to an unknown error code, -9**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20841: [SPARK-23706][PYTHON] spark.conf.get(value, default=None...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20841 **[Test build #88298 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88298/testReport)** for PR 20841 at commit [`d8ee18f`](https://github.com/apache/spark/commit/d8ee18fab1a6183dfffa6e070e852fda67e1d809). * This patch **fails due to an unknown error code, -9**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20817: [SPARK-23599][SQL] Add a UUID generator from Pseudo-Rand...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20817 **[Test build #88290 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88290/testReport)** for PR 20817 at commit [`75b80a2`](https://github.com/apache/spark/commit/75b80a249c9e5492e6fe159ac527feaea4f46c5a). * This patch **fails due to an unknown error code, -9**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20841: [SPARK-23706][PYTHON] spark.conf.get(value, default=None...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20841 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/88298/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19222: [SPARK-10399][CORE][SQL] Introduce multiple MemoryBlocks...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/19222 **[Test build #88293 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88293/testReport)** for PR 19222 at commit [`c6d45ea`](https://github.com/apache/spark/commit/c6d45ea3eed791dbd67246068d77b9b239c209e6). * This patch **fails due to an unknown error code, -9**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19222: [SPARK-10399][CORE][SQL] Introduce multiple MemoryBlocks...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/19222 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/88293/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20795: [SPARK-23486]cache the function name from the catalog fo...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20795 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/88292/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20841: [SPARK-23706][PYTHON] spark.conf.get(value, default=None...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20841 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20817: [SPARK-23599][SQL] Add a UUID generator from Pseudo-Rand...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20817 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/88290/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20817: [SPARK-23599][SQL] Add a UUID generator from Pseudo-Rand...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20817 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20795: [SPARK-23486]cache the function name from the catalog fo...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20795 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19222: [SPARK-10399][CORE][SQL] Introduce multiple MemoryBlocks...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/19222 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20659: [DO-NOT-MERGE] Try to update Hive to 2.3.2

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20659 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20659: [DO-NOT-MERGE] Try to update Hive to 2.3.2

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20659 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/1554/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20841: [SPARK-23706][PYTHON] spark.conf.get(value, default=None...

Github user viirya commented on the issue: https://github.com/apache/spark/pull/20841 retest this please. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20794: [SPARK-23644][CORE][UI] Use absolute path for RES...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/20794 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20817: [SPARK-23599][SQL] Add a UUID generator from Pseudo-Rand...

Github user viirya commented on the issue: https://github.com/apache/spark/pull/20817 retest this please. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20841: [SPARK-23706][PYTHON] spark.conf.get(value, default=None...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20841 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/1555/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20841: [SPARK-23706][PYTHON] spark.conf.get(value, default=None...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20841 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20841: [SPARK-23706][PYTHON] spark.conf.get(value, default=None...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20841 **[Test build #88300 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88300/testReport)** for PR 20841 at commit [`d8ee18f`](https://github.com/apache/spark/commit/d8ee18fab1a6183dfffa6e070e852fda67e1d809). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20817: [SPARK-23599][SQL] Add a UUID generator from Pseudo-Rand...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20817 **[Test build #88301 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88301/testReport)** for PR 20817 at commit [`75b80a2`](https://github.com/apache/spark/commit/75b80a249c9e5492e6fe159ac527feaea4f46c5a). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #17234: [SPARK-19892][MLlib] Implement findAnalogies method for ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/17234 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20817: [SPARK-23599][SQL] Add a UUID generator from Pseudo-Rand...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20817 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/1556/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20817: [SPARK-23599][SQL] Add a UUID generator from Pseudo-Rand...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20817 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20794: [SPARK-23644][CORE][UI] Use absolute path for REST call ...

Github user jerryshao commented on the issue: https://github.com/apache/spark/pull/20794 @mgaido91 the PR has conflict with branch 2.3, so I don't cherry-pick it to 2.3. If you want to backport, please create another backport PR. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20579: [SPARK-23372][SQL] Writing empty struct in parque...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/20579#discussion_r175013773

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DataSource.scala

---

@@ -542,6 +542,11 @@ case class DataSource(

throw new AnalysisException("Cannot save interval data type into

external storage.")

}

+if (data.schema.size == 0) {

--- End diff --

Is it required? This is a behavior change. Can we exclude it from this PR?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20806: [SPARK-23661][SQL] Implement treeAggregate on Dataset AP...

Github user viirya commented on the issue: https://github.com/apache/spark/pull/20806 @WeichenXu123 The `seqOp`/`comOp` can be arbitrary and works on domain objects, I'm not sure if built-in agg functions can satisfy all the use of it. For example, it seems hard to express `IDF.DocumentFrequencyAggregator` in built-in agg functions if any. One possible way is to use `Aggregator` and developers can write their aggregation function when doing treeAggregate. One advantage of `seqOp`/`comOp` is that ML developers don't need to learn how to write `Aggregator`. It may let them exposed to some concepts like `Encoder`. I have concerned that ML developer should know this or not. Anyway, to work with built-in agg functions or `Aggregator`, because it uses SQL aggregation system, we may need to overhaul the current aggregation system to support tree-style aggregation. Although it can benefit more situations not just ML, it needs more thinking and design. You can think of this as a workaround for now. Thus it is only for private use. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20812 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/1557/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20812 **[Test build #88302 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88302/testReport)** for PR 20812 at commit [`4ac1e8e`](https://github.com/apache/spark/commit/4ac1e8ecb5af688bc341e6256e9003244130fd25). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20812 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20812 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/1558/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20812 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20841: [SPARK-23706][PYTHON] spark.conf.get(value, default=None...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20841 **[Test build #88300 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88300/testReport)** for PR 20841 at commit [`d8ee18f`](https://github.com/apache/spark/commit/d8ee18fab1a6183dfffa6e070e852fda67e1d809). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20812 **[Test build #88303 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88303/testReport)** for PR 20812 at commit [`4473878`](https://github.com/apache/spark/commit/4473878d4bfc457a10522248798429465310adaa). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20841: [SPARK-23706][PYTHON] spark.conf.get(value, default=None...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20841 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20841: [SPARK-23706][PYTHON] spark.conf.get(value, default=None...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20841 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/88300/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user jerryshao commented on the issue: https://github.com/apache/spark/pull/20812 Does it only fix the jars added by `sc.addJar` or using non-yarn mode? Because yarn uses distributed cache at start, so it has a different code path, right? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20808: [SPARK-23662][SQL] Support selective tests in SQL...

Github user gatorsmile commented on a diff in the pull request: https://github.com/apache/spark/pull/20808#discussion_r175017634 --- Diff: sql/core/src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala --- @@ -51,6 +51,11 @@ import org.apache.spark.sql.types.StructType * SPARK_GENERATE_GOLDEN_FILES=1 build/sbt "sql/test-only *SQLQueryTestSuite" * }}} * + * For selective tests, run: --- End diff -- Why not directly using something like > build/sbt "~sql/test-only *SQLQueryTestSuite -- -z inline-table.sql -z random.sql" --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20800: [SPARK-23627][SQL] Provide isEmpty in Dataset

Github user gatorsmile commented on a diff in the pull request: https://github.com/apache/spark/pull/20800#discussion_r175018339 --- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala --- @@ -511,6 +511,14 @@ class Dataset[T] private[sql]( */ def isLocal: Boolean = logicalPlan.isInstanceOf[LocalRelation] + /** + * Returns true if the `Dataset` is empty. + * + * @group basic + * @since 2.4.0 + */ + def isEmpty: Boolean = rdd.isEmpty() --- End diff -- Building a rdd is not cheap. The current impl does not perform well. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20809: [SPARK-23667][CORE] Better scala version check

Github user viirya commented on the issue: https://github.com/apache/spark/pull/20809 For the case, shouldn't we just set `SPARK_SCALA_VERSION`? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20579: [SPARK-23372][SQL] Writing empty struct in parque...

Github user dilipbiswal commented on a diff in the pull request:

https://github.com/apache/spark/pull/20579#discussion_r175019613

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DataSource.scala

---

@@ -542,6 +542,11 @@ case class DataSource(

throw new AnalysisException("Cannot save interval data type into

external storage.")

}

+if (data.schema.size == 0) {

--- End diff --

@gatorsmile May i request you to please quickly go through Wenchen's and

Ryan's comments above ? My understanding is that , we want to consistently

rejecting writing empty schema for all the data sources ? Please let me know.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20827: [SPARK-23666][SQL] Do not display exprIds of Alia...

Github user viirya commented on a diff in the pull request: https://github.com/apache/spark/pull/20827#discussion_r175019770 --- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/namedExpressions.scala --- @@ -324,14 +324,14 @@ case class AttributeReference( * A place holder used when printing expressions without debugging information such as the * expression id or the unresolved indicator. */ -case class PrettyAttribute( +case class PrettyNamedExpression( --- End diff -- This rename looks a bit weird to me because now `PrettyNamedExpression` extends `Attribute`. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20844: [SPARK-23707][SQL] Fresh 'initRange' name to avoi...

GitHub user ConeyLiu opened a pull request: https://github.com/apache/spark/pull/20844 [SPARK-23707][SQL] Fresh 'initRange' name to avoid method name conflicts ## What changes were proposed in this pull request? We should call `ctx.freshName` to get the `initRange` to avoid name conflicts. ## How was this patch tested? Existing UT. You can merge this pull request into a Git repository by running: $ git pull https://github.com/ConeyLiu/spark range Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/20844.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #20844 commit d69f32ca49e3b2fa730d0520f48403eeebce60e4 Author: Xianyang Liu Date: 2018-03-16T07:56:52Z Fresh 'initRange' name to avoid method name conflicts --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20844: [SPARK-23707][SQL] Fresh 'initRange' name to avoid metho...

Github user ConeyLiu commented on the issue: https://github.com/apache/spark/pull/20844 @cloud-fan pls take a look, this is a small change. Thanks a lot. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20844: [SPARK-23707][SQL] Fresh 'initRange' name to avoid metho...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20844 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20683: [SPARK-8605] Exclude files in StreamingContext. textFile...

Github user gaborgsomogyi commented on the issue: https://github.com/apache/spark/pull/20683 Don't really understand the issue itself. Which filesystem used this case? Why is it not possible to use Hadoop-compatible filesystem like HDFS for instance? This supports atomic rename. [See here](https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/filesystem/introduction.html#Atomicity) --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20844: [SPARK-23707][SQL] Fresh 'initRange' name to avoid metho...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20844 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20799: [SPARK-23635][YARN] AM env variable should not overwrite...

Github user jerryshao commented on the issue: https://github.com/apache/spark/pull/20799 Thanks for the review, let me merge to master. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20799: [SPARK-23635][YARN] AM env variable should not ov...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/20799 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20795: [SPARK-23486]cache the function name from the cat...

Github user dilipbiswal commented on a diff in the pull request:

https://github.com/apache/spark/pull/20795#discussion_r175022409

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

---

@@ -1192,11 +1195,23 @@ class Analyzer(

* @see https://issues.apache.org/jira/browse/SPARK-19737

*/

object LookupFunctions extends Rule[LogicalPlan] {

-override def apply(plan: LogicalPlan): LogicalPlan =

plan.transformAllExpressions {

- case f: UnresolvedFunction if !catalog.functionExists(f.name) =>

-withPosition(f) {

- throw new

NoSuchFunctionException(f.name.database.getOrElse("default"), f.name.funcName)

-}

+override def apply(plan: LogicalPlan): LogicalPlan = {

+ val catalogFunctionNameSet = new

mutable.HashSet[FunctionIdentifier]()

+ plan.transformAllExpressions {

+case f: UnresolvedFunction if

catalogFunctionNameSet.contains(f.name) => f

+case f: UnresolvedFunction if catalog.functionExists(f.name) =>

+ catalogFunctionNameSet.add(normalizeFuncName(f.name))

+ f

+case f: UnresolvedFunction =>

+ withPosition(f) {

+throw new

NoSuchFunctionException(f.name.database.getOrElse("default"),

+ f.name.funcName)

+ }

+ }

+}

+

+private def normalizeFuncName(name: FunctionIdentifier):

FunctionIdentifier = {

+ FunctionIdentifier(name.funcName.toLowerCase(Locale.ROOT),

name.database)

--- End diff --

@viirya Shouldn't we be using the current database if database is not

specified ? I am trying to understand why we should use "default" here ?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20795: [SPARK-23486]cache the function name from the cat...

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/20795#discussion_r175023480

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

---

@@ -1192,11 +1195,23 @@ class Analyzer(

* @see https://issues.apache.org/jira/browse/SPARK-19737

*/

object LookupFunctions extends Rule[LogicalPlan] {

-override def apply(plan: LogicalPlan): LogicalPlan =

plan.transformAllExpressions {

- case f: UnresolvedFunction if !catalog.functionExists(f.name) =>

-withPosition(f) {

- throw new

NoSuchFunctionException(f.name.database.getOrElse("default"), f.name.funcName)

-}

+override def apply(plan: LogicalPlan): LogicalPlan = {

+ val catalogFunctionNameSet = new

mutable.HashSet[FunctionIdentifier]()

+ plan.transformAllExpressions {

+case f: UnresolvedFunction if

catalogFunctionNameSet.contains(f.name) => f

+case f: UnresolvedFunction if catalog.functionExists(f.name) =>

+ catalogFunctionNameSet.add(normalizeFuncName(f.name))

+ f

+case f: UnresolvedFunction =>

+ withPosition(f) {

+throw new

NoSuchFunctionException(f.name.database.getOrElse("default"),

+ f.name.funcName)

+ }

+ }

+}

+

+private def normalizeFuncName(name: FunctionIdentifier):

FunctionIdentifier = {

+ FunctionIdentifier(name.funcName.toLowerCase(Locale.ROOT),

name.database)

--- End diff --

@dilipbiswal Ah, right. Sorry it should be normalized as

`name.database.orElse(Some(catalog.getCurrentDatabase))`.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20795: [SPARK-23486]cache the function name from the cat...

Github user dilipbiswal commented on a diff in the pull request:

https://github.com/apache/spark/pull/20795#discussion_r175025479

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

---

@@ -1192,11 +1195,23 @@ class Analyzer(

* @see https://issues.apache.org/jira/browse/SPARK-19737

*/

object LookupFunctions extends Rule[LogicalPlan] {

-override def apply(plan: LogicalPlan): LogicalPlan =

plan.transformAllExpressions {

- case f: UnresolvedFunction if !catalog.functionExists(f.name) =>

-withPosition(f) {

- throw new

NoSuchFunctionException(f.name.database.getOrElse("default"), f.name.funcName)

-}

+override def apply(plan: LogicalPlan): LogicalPlan = {

+ val catalogFunctionNameSet = new

mutable.HashSet[FunctionIdentifier]()

+ plan.transformAllExpressions {

+case f: UnresolvedFunction if

catalogFunctionNameSet.contains(f.name) => f

+case f: UnresolvedFunction if catalog.functionExists(f.name) =>

+ catalogFunctionNameSet.add(normalizeFuncName(f.name))

+ f

+case f: UnresolvedFunction =>

+ withPosition(f) {

+throw new

NoSuchFunctionException(f.name.database.getOrElse("default"),

+ f.name.funcName)

+ }

+ }

+}

+

+private def normalizeFuncName(name: FunctionIdentifier):

FunctionIdentifier = {

+ FunctionIdentifier(name.funcName.toLowerCase(Locale.ROOT),

name.database)

--- End diff --

@viirya Thank you.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20795: [SPARK-23486]cache the function name from the cat...

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/20795#discussion_r175026010

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

---

@@ -1192,11 +1195,24 @@ class Analyzer(

* @see https://issues.apache.org/jira/browse/SPARK-19737

*/

object LookupFunctions extends Rule[LogicalPlan] {

-override def apply(plan: LogicalPlan): LogicalPlan =

plan.transformAllExpressions {

- case f: UnresolvedFunction if !catalog.functionExists(f.name) =>

-withPosition(f) {

- throw new

NoSuchFunctionException(f.name.database.getOrElse("default"), f.name.funcName)

-}

+override def apply(plan: LogicalPlan): LogicalPlan = {

+ val catalogFunctionNameSet = new

mutable.HashSet[FunctionIdentifier]()

+ plan.transformAllExpressions {

+case f: UnresolvedFunction

+ if catalogFunctionNameSet.contains(normalizeFuncName(f.name)) =>

f

+case f: UnresolvedFunction if catalog.functionExists(f.name) =>

+ catalogFunctionNameSet.add(normalizeFuncName(f.name))

+ f

+case f: UnresolvedFunction =>

+ withPosition(f) {

+throw new

NoSuchFunctionException(f.name.database.getOrElse("default"),

--- End diff --

Then I think this should be current database instead of default.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20795: [SPARK-23486]cache the function name from the cat...

Github user dilipbiswal commented on a diff in the pull request:

https://github.com/apache/spark/pull/20795#discussion_r175026674

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

---

@@ -1192,11 +1195,24 @@ class Analyzer(

* @see https://issues.apache.org/jira/browse/SPARK-19737

*/

object LookupFunctions extends Rule[LogicalPlan] {

-override def apply(plan: LogicalPlan): LogicalPlan =

plan.transformAllExpressions {

- case f: UnresolvedFunction if !catalog.functionExists(f.name) =>

-withPosition(f) {

- throw new

NoSuchFunctionException(f.name.database.getOrElse("default"), f.name.funcName)

-}

+override def apply(plan: LogicalPlan): LogicalPlan = {

+ val catalogFunctionNameSet = new

mutable.HashSet[FunctionIdentifier]()

+ plan.transformAllExpressions {

+case f: UnresolvedFunction

+ if catalogFunctionNameSet.contains(normalizeFuncName(f.name)) =>

f

+case f: UnresolvedFunction if catalog.functionExists(f.name) =>

+ catalogFunctionNameSet.add(normalizeFuncName(f.name))

+ f

+case f: UnresolvedFunction =>

+ withPosition(f) {

+throw new

NoSuchFunctionException(f.name.database.getOrElse("default"),

--- End diff --

@viirya Yeah..

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20795: [SPARK-23486]cache the function name from the cat...

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/20795#discussion_r175029024

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

---

@@ -1192,11 +1195,23 @@ class Analyzer(

* @see https://issues.apache.org/jira/browse/SPARK-19737

*/

object LookupFunctions extends Rule[LogicalPlan] {

-override def apply(plan: LogicalPlan): LogicalPlan =

plan.transformAllExpressions {

- case f: UnresolvedFunction if !catalog.functionExists(f.name) =>

-withPosition(f) {

- throw new

NoSuchFunctionException(f.name.database.getOrElse("default"), f.name.funcName)

-}

+override def apply(plan: LogicalPlan): LogicalPlan = {

+ val catalogFunctionNameSet = new

mutable.HashSet[FunctionIdentifier]()

+ plan.transformAllExpressions {

+case f: UnresolvedFunction if

catalogFunctionNameSet.contains(f.name) => f

+case f: UnresolvedFunction if catalog.functionExists(f.name) =>

+ catalogFunctionNameSet.add(normalizeFuncName(f.name))

+ f

+case f: UnresolvedFunction =>

+ withPosition(f) {

+throw new

NoSuchFunctionException(f.name.database.getOrElse("default"),

+ f.name.funcName)

+ }

+ }

+}

+

+private def normalizeFuncName(name: FunctionIdentifier):

FunctionIdentifier = {

+ FunctionIdentifier(name.funcName.toLowerCase(Locale.ROOT),

name.database)

--- End diff --

@kevinyu98 For built-in functions, we don't need to normalize their

database name.

Rethink about it, actually here it is not for function resolution, I think

it is OK to leave it as `name.database`.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20808: [SPARK-23662][SQL] Support selective tests in SQL...

Github user maropu closed the pull request at: https://github.com/apache/spark/pull/20808 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20808: [SPARK-23662][SQL] Support selective tests in SQL...

Github user maropu commented on a diff in the pull request: https://github.com/apache/spark/pull/20808#discussion_r175030155 --- Diff: sql/core/src/test/scala/org/apache/spark/sql/SQLQueryTestSuite.scala --- @@ -51,6 +51,11 @@ import org.apache.spark.sql.types.StructType * SPARK_GENERATE_GOLDEN_FILES=1 build/sbt "sql/test-only *SQLQueryTestSuite" * }}} * + * For selective tests, run: --- End diff -- oh, I didn't know `sbt` can do. I usually use `maven` and it seems `maven` cannot do that. Anyway, it's okay to use `sbt for this case. Thanks! --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20831: [SPARK-23614][SQL] Fix incorrect reuse exchange w...

Github user maropu commented on a diff in the pull request: https://github.com/apache/spark/pull/20831#discussion_r175030682 --- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/columnar/InMemoryRelation.scala --- @@ -68,6 +69,15 @@ case class InMemoryRelation( override protected def innerChildren: Seq[SparkPlan] = Seq(child) + override def doCanonicalize(): logical.LogicalPlan = +copy(output = output.map(QueryPlan.normalizeExprId(_, child.output)), + storageLevel = StorageLevel.NONE, + child = child.canonicalized, + tableName = None)( + _cachedColumnBuffers, + sizeInBytesStats, + statsOfPlanToCache) --- End diff -- aha, ok. Thanks! --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19573: [SPARK-22350][SQL] select grouping__id from subquery

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/19573 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/1559/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19573: [SPARK-22350][SQL] select grouping__id from subquery

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/19573 Build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20845: [SPARK-23708][CORE] Correct comment for function ...

GitHub user caneGuy opened a pull request:

https://github.com/apache/spark/pull/20845

[SPARK-23708][CORE] Correct comment for function addShutDownHook in

ShutdownHookManager

## What changes were proposed in this pull request?

Comment below is not right.

```

/**

* Adds a shutdown hook with the given priority. Hooks with lower

priority values run

* first.

*

* @param hook The code to run during shutdown.

* @return A handle that can be used to unregister the shutdown hook.

*/

def addShutdownHook(priority: Int)(hook: () => Unit): AnyRef = {

shutdownHooks.add(priority, hook)

}

```

## How was this patch tested?

UT

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/caneGuy/spark zhoukang/fix-shutdowncomment

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/20845.patch

To close this pull request, make a commit to your master/trunk branch

with (at least) the following in the commit message:

This closes #20845

commit 0199a30783dcef86c5e643fdbcf8b31d4675326b

Author: zhoukang

Date: 2018-03-16T09:21:58Z

Correct comment for function addShutDownHook in ShutdownHookManager

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20845: [SPARK-23708][CORE] Correct comment for function addShut...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20845 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20845: [SPARK-23708][CORE] Correct comment for function addShut...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20845 **[Test build #88304 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88304/testReport)** for PR 20845 at commit [`0199a30`](https://github.com/apache/spark/commit/0199a30783dcef86c5e643fdbcf8b31d4675326b). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20800: [SPARK-23627][SQL] Provide isEmpty in Dataset

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/20800#discussion_r175039379

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -511,6 +511,14 @@ class Dataset[T] private[sql](

*/

def isLocal: Boolean = logicalPlan.isInstanceOf[LocalRelation]

+ /**

+ * Returns true if the `Dataset` is empty.

+ *

+ * @group basic

+ * @since 2.4.0

+ */

+ def isEmpty: Boolean = rdd.isEmpty()

--- End diff --

How about this?

```

def isEmpty: Boolean = withAction("isEmpty",

groupBy().count().queryExecution) { plan =>

plan.executeCollect().head.getLong(0) == 0

}

```

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20809: [SPARK-23667][CORE] Better scala version check

Github user gczsjdy commented on the issue: https://github.com/apache/spark/pull/20809 @viirya Yes, but this is only for people who will investigate on Spark code, and it also requires manual efforts. Isn't it better if we get this automatically? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20827: [SPARK-23666][SQL] Do not display exprIds of Alias in us...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20827 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/1560/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20827: [SPARK-23666][SQL] Do not display exprIds of Alias in us...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20827 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20827: [SPARK-23666][SQL] Do not display exprIds of Alias in us...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20827 **[Test build #88305 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88305/testReport)** for PR 20827 at commit [`57c3633`](https://github.com/apache/spark/commit/57c3633dee4f79883d3bb8907fc5d7bfa4d44262). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20846: [SPARK-5498][SQL][FOLLOW] add schema to table par...

GitHub user liutang123 opened a pull request: https://github.com/apache/spark/pull/20846 [SPARK-5498][SQL][FOLLOW] add schema to table partition ## What changes were proposed in this pull request? When query a orc table witch some partition schemas are different from table schema, ClassCastException will occured. reproduction: `create table test_par(a string) PARTITIONED BY (`b` bigint) ROW FORMAT SERDE 'org.apache.hadoop.hive.ql.io.orc.OrcSerde' STORED AS INPUTFORMAT 'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat' OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat'; ALTER TABLE test_par CHANGE a a bigint restrict; -- in hive select * from test_par;` ## How was this patch tested? manual test. You can merge this pull request into a Git repository by running: $ git pull https://github.com/liutang123/spark SPARK-5498 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/20846.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #20846 commit 2317bfdf18fc1a7b21cd43e0ec12f5e957fb1895 Author: liutang123 Date: 2017-06-21T04:27:42Z Merge pull request #1 from apache/master 20170521 pull request commit 821b1f88e15bbe2bf7147f9cfa2e39ce7cb52b12 Author: liutang123 Date: 2017-11-24T08:54:11Z Merge branch 'master' of https://github.com/liutang123/spark commit 1841f60861a96fb1508257c84e8703ca1ffb57de Author: liutang123 Date: 2017-11-24T08:54:59Z Merge branch 'master' of https://github.com/apache/spark commit 16f4a52aa556cdc5182570979bad9b4cf6f092d5 Author: liutang123 Date: 2018-03-16T10:10:57Z Merge branch 'master' of https://github.com/apache/spark --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20846: [SPARK-5498][SQL][FOLLOW] add schema to table par...

Github user liutang123 closed the pull request at: https://github.com/apache/spark/pull/20846 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20846: [SPARK-5498][SQL][FOLLOW] add schema to table par...

GitHub user liutang123 reopened a pull request: https://github.com/apache/spark/pull/20846 [SPARK-5498][SQL][FOLLOW] add schema to table partition ## What changes were proposed in this pull request? When query a orc table witch some partition schemas are different from table schema, ClassCastException will occured. reproduction: `create table test_par(a string) PARTITIONED BY (`b` bigint) ROW FORMAT SERDE 'org.apache.hadoop.hive.ql.io.orc.OrcSerde' STORED AS INPUTFORMAT 'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat' OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat';` `ALTER TABLE test_par CHANGE a a bigint restrict; -- in hive `select * from test_par;` ## How was this patch tested? manual test. You can merge this pull request into a Git repository by running: $ git pull https://github.com/liutang123/spark SPARK-5498 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/20846.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #20846 commit 2317bfdf18fc1a7b21cd43e0ec12f5e957fb1895 Author: liutang123 Date: 2017-06-21T04:27:42Z Merge pull request #1 from apache/master 20170521 pull request commit 821b1f88e15bbe2bf7147f9cfa2e39ce7cb52b12 Author: liutang123 Date: 2017-11-24T08:54:11Z Merge branch 'master' of https://github.com/liutang123/spark commit 1841f60861a96fb1508257c84e8703ca1ffb57de Author: liutang123 Date: 2017-11-24T08:54:59Z Merge branch 'master' of https://github.com/apache/spark commit 16f4a52aa556cdc5182570979bad9b4cf6f092d5 Author: liutang123 Date: 2018-03-16T10:10:57Z Merge branch 'master' of https://github.com/apache/spark commit cdd5987178280f0424e9a828dd348df11e62758a Author: liutang123 Date: 2018-03-16T10:29:23Z [SPARK-5498][SQL][FOLLOW] add schema to table partition. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20846: [SPARK-5498][SQL][FOLLOW] add schema to table partition

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20846 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20846: [SPARK-5498][SQL][FOLLOW] add schema to table partition

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20846 Can one of the admins verify this patch? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20824: [SPARK-23683][SQL][FOLLOW-UP] FileCommitProtocol....

Github user steveloughran commented on a diff in the pull request:

https://github.com/apache/spark/pull/20824#discussion_r175050086

--- Diff:

core/src/main/scala/org/apache/spark/internal/io/FileCommitProtocol.scala ---

@@ -145,15 +146,23 @@ object FileCommitProtocol {

jobId: String,

outputPath: String,

dynamicPartitionOverwrite: Boolean = false): FileCommitProtocol = {

+

+logDebug(s"Creating committer $className; job $jobId;

output=$outputPath;" +

+ s" dynamic=$dynamicPartitionOverwrite")

val clazz =

Utils.classForName(className).asInstanceOf[Class[FileCommitProtocol]]

// First try the constructor with arguments (jobId: String,

outputPath: String,

// dynamicPartitionOverwrite: Boolean).

// If that doesn't exist, try the one with (jobId: string, outputPath:

String).

try {

val ctor = clazz.getDeclaredConstructor(classOf[String],

classOf[String], classOf[Boolean])

+ logDebug("Using (String, String, Boolean) constructor")

ctor.newInstance(jobId, outputPath,

dynamicPartitionOverwrite.asInstanceOf[java.lang.Boolean])

} catch {

case _: NoSuchMethodException =>

+logDebug("Falling back to (String, String) constructor")

+require(!dynamicPartitionOverwrite,

+ "Dynamic Partition Overwrite is enabled but" +

+s" the committer ${className} does not have the appropriate

constructor")

--- End diff --

something like that would work, though it'd be bit more

convoluted...InsertIntoFSRelation would have to check, and then handle the

situation of missing support.

One thing to consider in any form is: all implementations of

FileCommitProtocol should be aware of the new Dynamic Partition overwrite

feature...adding a new 3-arg constructor is an implicit way of saying "I

understand this". Where it's weak is there's no way for for it to say "I

understand this and will handle it myself" Because essentially that's what

being done in the [Netflix Partioned

committer(https://github.com/apache/hadoop/blob/trunk/hadoop-tools/hadoop-aws/src/main/java/org/apache/hadoop/fs/s3a/commit/staging/PartitionedStagingCommitter.java#L142),

which purges all parts for which the new job has data. With that committer, if

the insert asks for the feature then the FileCommitProtocol binding to it could

(somehow) turn this on and so handle everything internally.

Like I said, a more complex model. It'd need changes a fair way through

things and then the usual complexity of getting commit logic.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user jinxing64 commented on the issue: https://github.com/apache/spark/pull/20812 @jerryshao Thanks for comment; Yes, this change is only for `sc.addJar` and the jars will be named with a prefix when executor `updateDependencies`. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20817: [SPARK-23599][SQL] Add a UUID generator from Pseudo-Rand...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20817 **[Test build #88301 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88301/testReport)** for PR 20817 at commit [`75b80a2`](https://github.com/apache/spark/commit/75b80a249c9e5492e6fe159ac527feaea4f46c5a). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20817: [SPARK-23599][SQL] Add a UUID generator from Pseudo-Rand...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20817 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/88301/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20817: [SPARK-23599][SQL] Add a UUID generator from Pseudo-Rand...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20817 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20812 **[Test build #88303 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88303/testReport)** for PR 20812 at commit [`4473878`](https://github.com/apache/spark/commit/4473878d4bfc457a10522248798429465310adaa). * This patch **fails Spark unit tests**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20812 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/88303/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20812 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20701: [SPARK-23528][ML] Add numIter to ClusteringSummary

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20701 **[Test build #88306 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88306/testReport)** for PR 20701 at commit [`8b16af6`](https://github.com/apache/spark/commit/8b16af6b790cc014302636a56a74b3ec8bf37892). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20701: [SPARK-23528][ML] Add numIter to ClusteringSummary

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20701 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20701: [SPARK-23528][ML] Add numIter to ClusteringSummary

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20701 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/1561/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20659: [DO-NOT-MERGE] Try to update Hive to 2.3.2

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20659 **[Test build #88299 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88299/testReport)** for PR 20659 at commit [`b92918b`](https://github.com/apache/spark/commit/b92918b0da63afaae0a8f22971df004186360953). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20659: [DO-NOT-MERGE] Try to update Hive to 2.3.2

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20659 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20659: [DO-NOT-MERGE] Try to update Hive to 2.3.2

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20659 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/88299/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20629: [SPARK-23451][ML] Deprecate KMeans.computeCost

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20629 **[Test build #88307 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88307/testReport)** for PR 20629 at commit [`05680ea`](https://github.com/apache/spark/commit/05680ea59495a8a2c5ba15f18f77559b0cc81b98). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20629: [SPARK-23451][ML] Deprecate KMeans.computeCost

Github user mgaido91 commented on the issue: https://github.com/apache/spark/pull/20629 @MLnick I checked and adding the `computeCosts` to `ClusteringEvaluator` has a small drawback: we have to compute the centers for each cluster and then we can compute the costs, which involved two passes on the dataset. Since this and that this evaluation looks not very useful in practice, is it worth according to you to add it nonetheless? Thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20629: [SPARK-23451][ML] Deprecate KMeans.computeCost

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20629 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20629: [SPARK-23451][ML] Deprecate KMeans.computeCost

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20629 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/1562/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

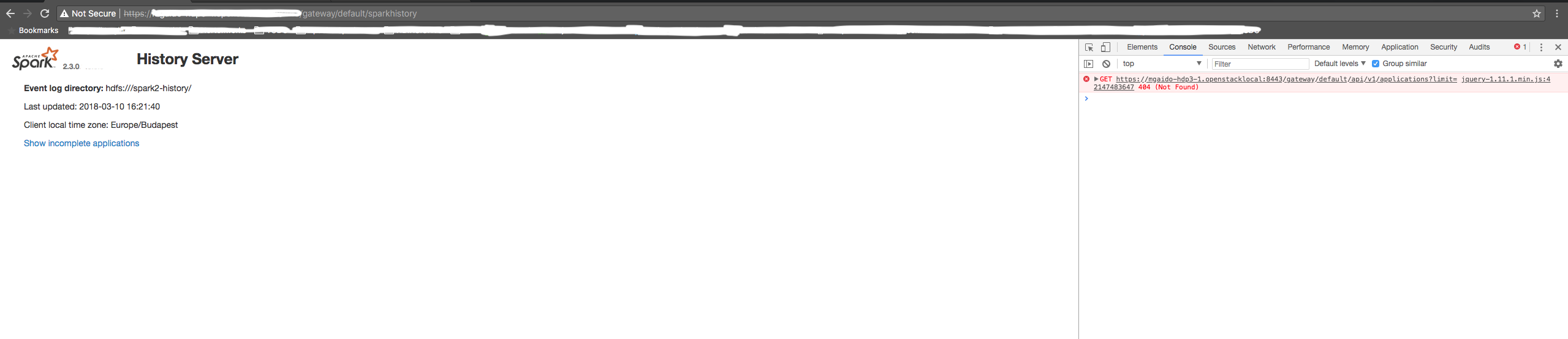

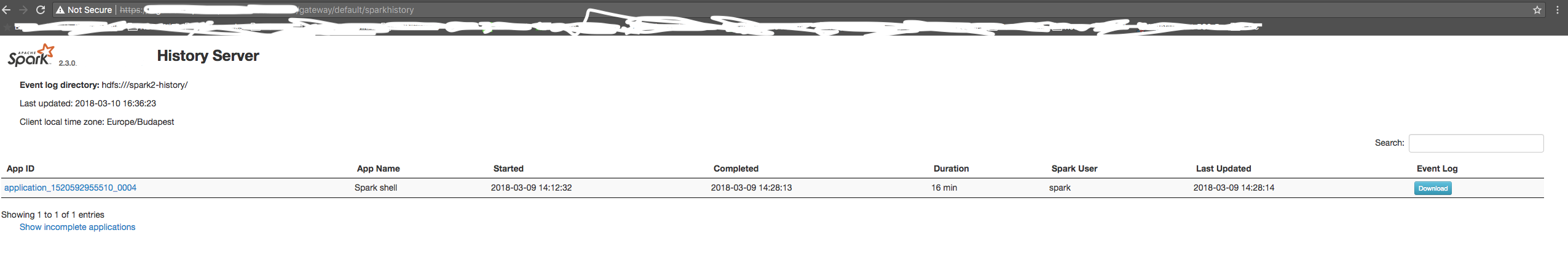

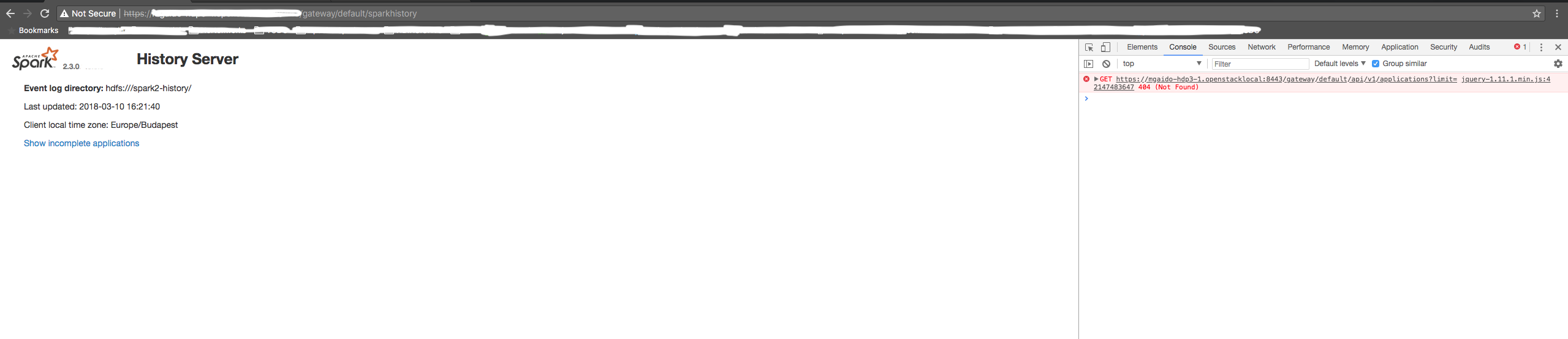

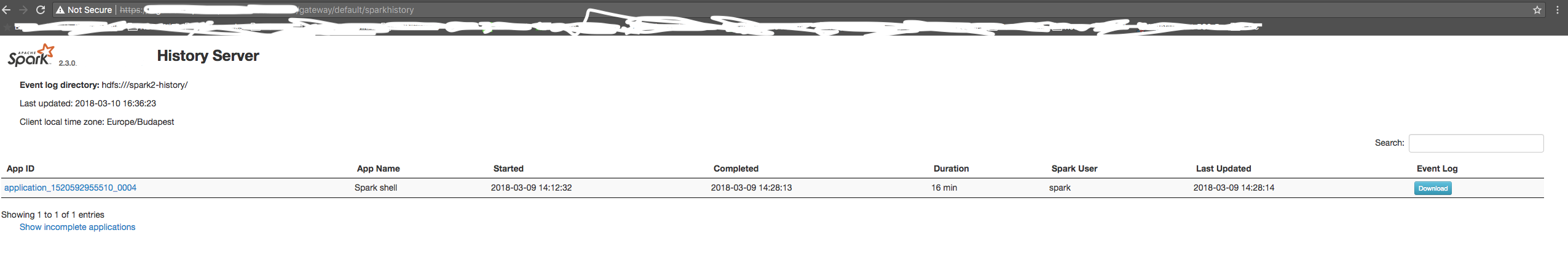

[GitHub] spark pull request #20847: [SPARK-23644][CORE][UI] Use absolute path for RES...

GitHub user mgaido91 opened a pull request: https://github.com/apache/spark/pull/20847 [SPARK-23644][CORE][UI] Use absolute path for REST call in SHS SHS is using a relative path for the REST API call to get the list of the application is a relative path call. In case of the SHS being consumed through a proxy, it can be an issue if the path doesn't end with a "/". Therefore, we should use an absolute path for the REST call as it is done for all the other resources. manual tests Before the change:  After the change:  Author: Marco Gaido Closes #20794 from mgaido91/SPARK-23644. ## What changes were proposed in this pull request? (Please fill in changes proposed in this fix) ## How was this patch tested? (Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests) (If this patch involves UI changes, please attach a screenshot; otherwise, remove this) Please review http://spark.apache.org/contributing.html before opening a pull request. You can merge this pull request into a Git repository by running: $ git pull https://github.com/mgaido91/spark SPARK-23644_2.3 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/20847.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #20847 commit f130a3603386acee670788d545a9d38969fcf39f Author: Marco Gaido Date: 2018-03-16T07:12:26Z [SPARK-23644][CORE][UI] Use absolute path for REST call in SHS SHS is using a relative path for the REST API call to get the list of the application is a relative path call. In case of the SHS being consumed through a proxy, it can be an issue if the path doesn't end with a "/". Therefore, we should use an absolute path for the REST call as it is done for all the other resources. manual tests Before the change:  After the change:  Author: Marco Gaido Closes #20794 from mgaido91/SPARK-23644. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20812 **[Test build #88302 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88302/testReport)** for PR 20812 at commit [`4ac1e8e`](https://github.com/apache/spark/commit/4ac1e8ecb5af688bc341e6256e9003244130fd25). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20847: [SPARK-23644][CORE][UI] Use absolute path for REST call ...

Github user mgaido91 commented on the issue: https://github.com/apache/spark/pull/20847 cc @jerryshao --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20812 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20812: [SPARK-23669] Executors fetch jars and name the jars wit...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20812 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/88302/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #20847: [SPARK-23644][CORE][UI] Use absolute path for REST call ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/20847 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org