[GitHub] spark issue #21821: [SPARK-24867] [SQL] Add AnalysisBarrier to DataFrameWrit...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21821 **[Test build #93319 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93319/testReport)** for PR 21821 at commit [`9edc28f`](https://github.com/apache/spark/commit/9edc28fdcb7261f01db716f65e723668a493327e). * This patch **fails Spark unit tests**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21813: [SPARK-24424][SQL] Support ANSI-SQL compliant syn...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/21813

[GitHub] spark issue #21813: [SPARK-24424][SQL] Support ANSI-SQL compliant syntax for...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21813 Thanks! Merged to master.

[GitHub] spark issue #21823: [SPARK-24870][SQL]Cache can't work normally if there are...

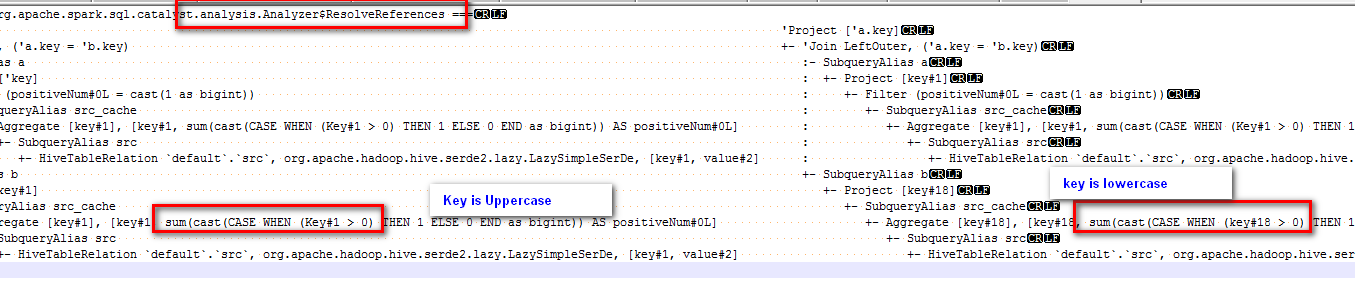

Github user eatoncys commented on the issue: https://github.com/apache/spark/pull/21823 @cloud-fan Converting 'Key' to lower case is done by the ResolveReferences rule:

[GitHub] spark issue #21049: [SPARK-23957][SQL] Remove redundant sort operators from ...

Github user dilipbiswal commented on the issue: https://github.com/apache/spark/pull/21049 @gatorsmile Sure.

[GitHub] spark issue #20057: [SPARK-22880][SQL] Add cascadeTruncate option to JDBC da...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/20057 **[Test build #93322 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93322/testReport)** for PR 20057 at commit [`bc75051`](https://github.com/apache/spark/commit/bc75051f5f4a47ef045c93bd933b2f95635100ad).

[GitHub] spark issue #21813: [SPARK-24424][SQL] Support ANSI-SQL compliant syntax for...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21813 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/93313/ Test PASSed.

[GitHub] spark issue #21813: [SPARK-24424][SQL] Support ANSI-SQL compliant syntax for...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21813 Merged build finished. Test PASSed.

[GitHub] spark issue #21823: [SPARK-24870][SQL]Cache can't work normally if there are...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/21823 > so the SQL analyzer gets the field 'key' from the original table 'src', which is lowercase. Shouldn't we always do it?
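The question above is whether column references should always be resolved to the spelling stored in the source table. Below is a minimal, hypothetical illustration in plain Scala, not Spark's `ResolveReferences` rule (`CaseInsensitiveResolution` is an invented name). It performs a case-insensitive lookup that always returns the table's own spelling, so `Key` and `key` normalize to the same name:

```scala
// Hypothetical sketch: resolve user-written column names case-insensitively,
// always returning the name exactly as the table schema spells it.
object CaseInsensitiveResolution {
  /** Returns the schema's spelling of `requested`, or None if no column matches. */
  def resolve(schema: Seq[String], requested: String): Option[String] =
    schema.find(_.equalsIgnoreCase(requested))

  /** Rewrites every requested name to its canonical spelling, failing on unknown names. */
  def canonicalize(schema: Seq[String], requested: Seq[String]): Seq[String] =
    requested.map { name =>
      resolve(schema, name).getOrElse(
        throw new IllegalArgumentException(s"cannot resolve column '$name'"))
    }
}
```

Because every reference is rewritten to the schema's spelling, two references that differ only in case become identical after resolution.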

[GitHub] spark issue #21813: [SPARK-24424][SQL] Support ANSI-SQL compliant syntax for...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21813 **[Test build #93313 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93313/testReport)** for PR 21813 at commit [`2ecf3e1`](https://github.com/apache/spark/commit/2ecf3e183e51f13ec9c92692d29854f01eb327c5). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #21049: [SPARK-23957][SQL] Remove redundant sort operators from ...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21049 @dilipbiswal Can you take this over?

[GitHub] spark issue #20057: [SPARK-22880][SQL] Add cascadeTruncate option to JDBC da...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/20057 retest this please

[GitHub] spark pull request #20057: [SPARK-22880][SQL] Add cascadeTruncate option to ...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/20057#discussion_r203949498

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/jdbc/JdbcUtils.scala ---

@@ -105,7 +105,12 @@ object JdbcUtils extends Logging {

val statement = conn.createStatement

try {

statement.setQueryTimeout(options.queryTimeout)

- statement.executeUpdate(dialect.getTruncateQuery(options.table))

+ if (options.isCascadeTruncate.isDefined) {

+statement.executeUpdate(dialect.getTruncateQuery(options.table,

+ options.isCascadeTruncate))

+ } else {

+statement.executeUpdate(dialect.getTruncateQuery(options.table))

+ }

--- End diff --

+1 to the above comment of @dongjoon-hyun


[GitHub] spark pull request #21805: [SPARK-24850][SQL] fix str representation of Cach...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/21805#discussion_r203949905

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/DatasetCacheSuite.scala ---

@@ -206,4 +206,19 @@ class DatasetCacheSuite extends QueryTest with SharedSQLContext with TimeLimits

// first time use, load cache

checkDataset(df5, Row(10))

}

+

+ test("SPARK-24850 InMemoryRelation string representation does not include cached plan") {

+val dummyQueryExecution = spark.range(0, 1).toDF().queryExecution

+val inMemoryRelation = InMemoryRelation(

+ true,

+ 1000,

+ StorageLevel.MEMORY_ONLY,

+ dummyQueryExecution.sparkPlan,

+ Some("test-relation"),

+ dummyQueryExecution.logical)

+

+assert(!inMemoryRelation.simpleString.contains(dummyQueryExecution.sparkPlan.toString))

+assert(inMemoryRelation.simpleString.contains(

+ "CachedRDDBuilder(true, 1000, StorageLevel(memory, deserialized, 1 replicas))"))

--- End diff --

How about just comparing the explain output, as in the query in this PR description?


[GitHub] spark pull request #20057: [SPARK-22880][SQL] Add cascadeTruncate option to ...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/20057#discussion_r203948972

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/jdbc/JdbcDialects.scala ---

@@ -120,11 +121,26 @@ abstract class JdbcDialect extends Serializable {

* The SQL query that should be used to truncate a table. Dialects can override this method to

* return a query that is suitable for a particular database. For PostgreSQL, for instance,

* a different query is used to prevent "TRUNCATE" affecting other tables.

- * @param table The name of the table.

+ * @param table The table to truncate

* @return The SQL query to use for truncating a table

*/

@Since("2.3.0")

def getTruncateQuery(table: String): String = {

+getTruncateQuery(table, isCascadingTruncateTable)

+ }

+

+ /**

+ * The SQL query that should be used to truncate a table. Dialects can override this method to

+ * return a query that is suitable for a particular database. For PostgreSQL, for instance,

+ * a different query is used to prevent "TRUNCATE" affecting other tables.

+ * @param table The table to truncate

+ * @param cascade Whether or not to cascade the truncation

+ * @return The SQL query to use for truncating a table

+ */

+ @Since("2.4.0")

+ def getTruncateQuery(

+table: String,

+cascade: Option[Boolean] = isCascadingTruncateTable): String = {

--- End diff --

Nit: indent.
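The diffs above add a `getTruncateQuery(table, cascade: Option[Boolean])` variant alongside the existing one-argument method, and `JdbcUtils` branches on `isCascadeTruncate.isDefined` to choose between them. The sketch below models the same delegation pattern in a small, self-contained form. `TruncateDialect`, `PostgresLikeDialect`, `GenericDialect` and `TruncateCaller` are hypothetical names, and the PostgreSQL-style `TRUNCATE TABLE ONLY ... CASCADE` strings are illustrative assumptions, not Spark's dialect code:

```scala
// Hypothetical model of a JDBC dialect's truncate-query hook; not Spark's JdbcDialect.
abstract class TruncateDialect {
  /** Whether TRUNCATE cascades by default: Some(true/false), or None if unknown. */
  def isCascadingTruncateTable: Option[Boolean] = None

  /** One-argument form: delegates with the dialect's own default. */
  def getTruncateQuery(table: String): String =
    getTruncateQuery(table, isCascadingTruncateTable)

  /** Generic form: ignores `cascade`, since plain TRUNCATE has no portable CASCADE clause. */
  def getTruncateQuery(table: String, cascade: Option[Boolean]): String =
    s"TRUNCATE TABLE $table"
}

/** PostgreSQL-flavoured variant: ONLY leaves descendant tables alone; CASCADE is opt-in. */
object PostgresLikeDialect extends TruncateDialect {
  override def isCascadingTruncateTable: Option[Boolean] = Some(false)

  override def getTruncateQuery(table: String, cascade: Option[Boolean]): String =
    cascade match {
      case Some(true) => s"TRUNCATE TABLE ONLY $table CASCADE"
      case _          => s"TRUNCATE TABLE ONLY $table"
    }
}

object GenericDialect extends TruncateDialect

object TruncateCaller {
  /** A user-supplied setting wins; otherwise fall back to the dialect default. */
  def truncateSql(dialect: TruncateDialect, table: String, userCascade: Option[Boolean]): String =
    dialect.getTruncateQuery(table, userCascade.orElse(dialect.isCascadingTruncateTable))
}
```

In this model, the one-argument overload only supplies the dialect default. A caller that already holds an `Option[Boolean]` can therefore fall back with `orElse` instead of branching on `isDefined`.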


[GitHub] spark pull request #21804: [SPARK-24268][SQL] Use datatype.catalogString in ...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/21804

[GitHub] spark issue #21804: [SPARK-24268][SQL] Use datatype.catalogString in error m...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21804 LGTM. Thanks! Merged to master.

[GitHub] spark issue #21815: [SPARK-23731][SQL] Make FileSourceScanExec canonicalizab...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/21815 LGTM

[GitHub] spark pull request #21815: [SPARK-23731][SQL] Make FileSourceScanExec canoni...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21815#discussion_r203947912

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/execution/FileSourceScanExecSuite.scala ---

@@ -0,0 +1,36 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution

+

+import org.apache.spark.sql.test.SharedSQLContext

+

+class FileSourceScanExecSuite extends SharedSQLContext {

+ test("FileSourceScanExec should be canonicalizable on executor side") {

--- End diff --

I'd like to put this test in `QueryPlanSuite`, with name `SPARK-: query plans can be serialized and deserialized`.

In the test we don't need to trigger a job; just call `spark.env.serializer` to serialize and deserialize the `FileSourceScanExec`.
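The following is a rough sketch of a serialization round trip that needs no Spark job. It uses plain Java serialization from `java.io` as a stand-in for the serializer mentioned in the comment, so it only shows the shape of such a test. `RoundTrip`, `roundTrip` and `isSerializable` are hypothetical helpers; a real plan node would go through Spark's configured serializer instead:

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream, NotSerializableException, ObjectInputStream, ObjectOutputStream}

// Hypothetical helpers: Java serialization stands in for Spark's configured serializer.
object RoundTrip {
  /** Serializes `value` to bytes and reads it back, as a driver/executor hop would. */
  def roundTrip[T <: AnyRef](value: T): T = {
    val bytes = new ByteArrayOutputStream()
    val out = new ObjectOutputStream(bytes)
    try out.writeObject(value) finally out.close()
    val in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray))
    try in.readObject().asInstanceOf[T] finally in.close()
  }

  /** True if `value` survives the round trip; false if some part of it is not serializable. */
  def isSerializable(value: AnyRef): Boolean =
    try { roundTrip(value); true }
    catch { case _: NotSerializableException => false }
}
```

A test built this way runs entirely in the driver JVM. It catches non-serializable fields early, although it cannot reproduce executor-only state such as missing driver-side contexts.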


[GitHub] spark pull request #21804: [SPARK-24268][SQL] Use datatype.catalogString in ...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21804#discussion_r203947486

--- Diff: mllib/src/main/scala/org/apache/spark/ml/feature/HashingTF.scala ---

@@ -104,7 +104,7 @@ class HashingTF @Since("1.4.0") (@Since("1.4.0") override val uid: String)

override def transformSchema(schema: StructType): StructType = {

val inputType = schema($(inputCol)).dataType

require(inputType.isInstanceOf[ArrayType],

- s"The input column must be ArrayType, but got $inputType.")

+ s"The input column must be ${ArrayType.simpleString}, but got ${inputType.catalogString}.")

--- End diff --

Shall we change all of them?


[GitHub] spark pull request #21804: [SPARK-24268][SQL] Use datatype.catalogString in ...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21804#discussion_r203947449

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/feature/FeatureHasher.scala ---

@@ -208,8 +208,9 @@ class FeatureHasher(@Since("2.3.0") override val uid: String) extends Transforme

require(dataType.isInstanceOf[NumericType] ||

dataType.isInstanceOf[StringType] ||

dataType.isInstanceOf[BooleanType],

-s"FeatureHasher requires columns to be of NumericType, BooleanType or StringType. " +

- s"Column $fieldName was $dataType")

+s"FeatureHasher requires columns to be of ${NumericType.simpleString}, " +

--- End diff --

Shall we change all of them?


[GitHub] spark issue #21798: [SPARK-24836][SQL] New option for Avro datasource - igno...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21798 LGTM except for one comment.

[GitHub] spark issue #21823: [SPARK-24870][SQL]Cache can't work normally if there are...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21823 Merged build finished. Test PASSed.

[GitHub] spark issue #21823: [SPARK-24870][SQL]Cache can't work normally if there are...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21823 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/93312/ Test PASSed.

[GitHub] spark issue #21823: [SPARK-24870][SQL]Cache can't work normally if there are...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21823 **[Test build #93312 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93312/testReport)** for PR 21823 at commit [`2b2a5a3`](https://github.com/apache/spark/commit/2b2a5a33ed58ce07fd2515eb01e80acbedeb8b2a). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #21772: [SPARK-24809] [SQL] Serializing LongHashedRelation in ex...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21772 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/93314/ Test PASSed.

[GitHub] spark issue #21772: [SPARK-24809] [SQL] Serializing LongHashedRelation in ex...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21772 Merged build finished. Test PASSed.

[GitHub] spark pull request #21798: [SPARK-24836][SQL] New option for Avro datasource...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21798#discussion_r203946680

--- Diff:

external/avro/src/main/scala/org/apache/spark/sql/avro/AvroFileFormat.scala ---

@@ -275,10 +273,12 @@ private[avro] object AvroFileFormat {

}

}

- def ignoreFilesWithoutExtensions(conf: Configuration): Boolean = {

-// Files without .avro extensions are not ignored by default

-val defaultValue = false

+ def ignoreExtension(conf: Configuration, options: AvroOptions): Boolean = {

--- End diff --

Can we move this to `object AvroOptions`?
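For reference, here is a sketch of how option resolution of this kind could look once it lives in one place. It is a hypothetical model: `Map`s stand in for Hadoop's `Configuration` and for `AvroOptions`. The legacy property name `avro.mapred.ignore.inputs.without.extension` and the precedence are assumptions based on the removed default shown in the diff: an explicit `ignoreExtension` option wins; otherwise the negated legacy flag applies, and that flag defaults to `false`.

```scala
// Hypothetical sketch; key names and precedence are assumptions, not Spark's AvroOptions.
object AvroExtensionSetting {
  // Assumed legacy Hadoop property (a Map stands in for Configuration).
  val LegacyConfKey = "avro.mapred.ignore.inputs.without.extension"
  // Assumed per-read data source option name.
  val OptionKey = "ignoreExtension"

  /** An explicit option wins; otherwise use the negation of the legacy conf flag. */
  def ignoreExtension(conf: Map[String, String], options: Map[String, String]): Boolean = {
    // Files without .avro extensions are not ignored by default.
    val ignoreFilesWithoutExtension =
      conf.get(LegacyConfKey).map(_.toBoolean).getOrElse(false)
    options.get(OptionKey).map(_.toBoolean).getOrElse(!ignoreFilesWithoutExtension)
  }
}
```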


[GitHub] spark issue #21815: [SPARK-23731][SQL] Make FileSourceScanExec canonicalizab...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21815 cc @gengliangwang @cloud-fan This needs a careful review.

[GitHub] spark pull request #21774: [SPARK-24811][SQL]Avro: add new function from_avr...

Github user gengliangwang commented on a diff in the pull request:

https://github.com/apache/spark/pull/21774#discussion_r203945945

--- Diff:

external/avro/src/main/scala/org/apache/spark/sql/avro/package.scala ---

@@ -36,4 +40,27 @@ package object avro {

@scala.annotation.varargs

def avro(sources: String*): DataFrame =

reader.format("avro").load(sources: _*)

}

+

+ /**

+ * Converts a binary column of avro format into its corresponding catalyst value. The specified

+ * schema must match the read data, otherwise the behavior is undefined: it may fail or return

+ * arbitrary result.

+ *

+ * @param data the binary column.

+ * @param avroType the avro type.

+ */

+ @Experimental

+ def from_avro(data: Column, avroType: Schema): Column = {

--- End diff --

I am actually +1 with @HyukjinKwon.

To make everyone happy, I will add another overloaded API, but I don't think we should have more.


[GitHub] spark issue #21775: [SPARK-24812][SQL] Last Access Time in the table descrip...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21775 Merged build finished. Test PASSed.

[GitHub] spark issue #21775: [SPARK-24812][SQL] Last Access Time in the table descrip...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21775 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/93311/ Test PASSed.

[GitHub] spark pull request #21805: [SPARK-24850][SQL] fix str representation of Cach...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21805#discussion_r203945646

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/DatasetCacheSuite.scala ---

@@ -206,4 +206,19 @@ class DatasetCacheSuite extends QueryTest with SharedSQLContext with TimeLimits

// first time use, load cache

checkDataset(df5, Row(10))

}

+

+ test("SPARK-24850 InMemoryRelation string representation does not include cached plan") {

+val dummyQueryExecution = spark.range(0, 1).toDF().queryExecution

+val inMemoryRelation = InMemoryRelation(

+ true,

+ 1000,

+ StorageLevel.MEMORY_ONLY,

+ dummyQueryExecution.sparkPlan,

+ Some("test-relation"),

+ dummyQueryExecution.logical)

+

+assert(!inMemoryRelation.simpleString.contains(dummyQueryExecution.sparkPlan.toString))

+assert(inMemoryRelation.simpleString.contains(

+ "CachedRDDBuilder(true, 1000, StorageLevel(memory, deserialized, 1 replicas))"))

--- End diff --

Or we might not need the batch size in the plan.


[GitHub] spark pull request #21805: [SPARK-24850][SQL] fix str representation of Cach...

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21805#discussion_r203945605

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/DatasetCacheSuite.scala ---

@@ -206,4 +206,19 @@ class DatasetCacheSuite extends QueryTest with SharedSQLContext with TimeLimits

// first time use, load cache

checkDataset(df5, Row(10))

}

+

+ test("SPARK-24850 InMemoryRelation string representation does not include cached plan") {

+val dummyQueryExecution = spark.range(0, 1).toDF().queryExecution

+val inMemoryRelation = InMemoryRelation(

+ true,

+ 1000,

+ StorageLevel.MEMORY_ONLY,

+ dummyQueryExecution.sparkPlan,

+ Some("test-relation"),

+ dummyQueryExecution.logical)

+

+assert(!inMemoryRelation.simpleString.contains(dummyQueryExecution.sparkPlan.toString))

+assert(inMemoryRelation.simpleString.contains(

+ "CachedRDDBuilder(true, 1000, StorageLevel(memory, deserialized, 1 replicas))"))

--- End diff --

`true` and `1000` look confusing to end users. Can we improve it?
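One way to make the string form readable is to label each constructor argument. Here is a minimal sketch using a hypothetical `CacheBuilderInfo` case class (the field names are illustrative, not Spark's `CachedRDDBuilder` fields):

```scala
// Hypothetical stand-in for a cache-builder node; field names are illustrative only.
case class CacheBuilderInfo(useCompression: Boolean, batchSize: Int, storageLevel: String) {
  // Label each argument so values like `true` and `1000` are self-describing in plan output.
  override def toString: String =
    s"CacheBuilderInfo(useCompression=$useCompression, batchSize=$batchSize, " +
      s"storageLevel=$storageLevel)"
}
```

Dropping `batchSize` from the rendered string, as the other comment suggests, would simply mean omitting that field from `toString`.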


[GitHub] spark issue #21775: [SPARK-24812][SQL] Last Access Time in the table descrip...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21775 **[Test build #93311 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93311/testReport)** for PR 21775 at commit [`b527fdc`](https://github.com/apache/spark/commit/b527fdc5919296ffa12e1be54367b9132ecee61e). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark pull request #21774: [SPARK-24811][SQL]Avro: add new function from_avr...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21774#discussion_r203945436

--- Diff:

external/avro/src/main/scala/org/apache/spark/sql/avro/package.scala ---

@@ -36,4 +40,27 @@ package object avro {

@scala.annotation.varargs

def avro(sources: String*): DataFrame =

reader.format("avro").load(sources: _*)

}

+

+ /**

+ * Converts a binary column of avro format into its corresponding catalyst value. The specified

+ * schema must match the read data, otherwise the behavior is undefined: it may fail or return

+ * arbitrary result.

+ *

+ * @param data the binary column.

+ * @param avroType the avro type.

+ */

+ @Experimental

+ def from_avro(data: Column, avroType: Schema): Column = {

--- End diff --

Ah, sorry, I thought you were talking about the `data` parameter.

Yes, for the `avroType` parameter, we should have a string version.
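Below is a sketch of the overload pattern under discussion. The string version parses the schema and delegates to the schema-object version, so only one code path builds the expression. `Schema`, `Column`, `AvroFunctions` and the toy `parseSchema` (which accepts only a quoted primitive type name) are hypothetical stand-ins, not the Avro or Spark classes; a real implementation would use Avro's own schema parser:

```scala
// Hypothetical stand-ins, not org.apache.avro.Schema or org.apache.spark.sql.Column.
final case class Schema(typeName: String)
final case class Column(expr: String)

object AvroFunctions {
  // Toy parser: accepts only a JSON string literal naming a type, e.g. "\"long\"".
  private def parseSchema(json: String): Schema = {
    val trimmed = json.trim
    require(trimmed.length >= 2 && trimmed.startsWith("\"") && trimmed.endsWith("\""),
      s"unsupported schema JSON: $json")
    Schema(trimmed.substring(1, trimmed.length - 1))
  }

  /** Schema-object form: the single place that builds the expression. */
  def from_avro(data: Column, avroType: Schema): Column =
    Column(s"from_avro(${data.expr}, ${avroType.typeName})")

  /** String form: parse the JSON schema, then delegate, so the two forms cannot diverge. */
  def from_avro(data: Column, jsonFormatSchema: String): Column =
    from_avro(data, parseSchema(jsonFormatSchema))
}
```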


[GitHub] spark issue #21805: [SPARK-24850][SQL] fix str representation of CachedRDDBu...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21805 ok to test

[GitHub] spark pull request #21774: [SPARK-24811][SQL]Avro: add new function from_avr...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21774#discussion_r203943047

--- Diff:

external/avro/src/main/scala/org/apache/spark/sql/avro/AvroDataToCatalyst.scala ---

@@ -0,0 +1,64 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql

+

+import org.apache.avro.generic.GenericDatumReader

+import org.apache.avro.io.{BinaryDecoder, DecoderFactory}

+

+import org.apache.spark.sql.avro.{AvroDeserializer, SchemaConverters, SerializableSchema}

+import org.apache.spark.sql.catalyst.expressions.{ExpectsInputTypes, Expression, UnaryExpression}

+import org.apache.spark.sql.catalyst.expressions.codegen.{CodegenContext, CodeGenerator, ExprCode}

+import org.apache.spark.sql.types.{AbstractDataType, BinaryType, DataType}

+

+case class AvroDataToCatalyst(child: Expression, avroType: SerializableSchema)

+ extends UnaryExpression with ExpectsInputTypes {

+

+ override def inputTypes: Seq[AbstractDataType] = Seq(BinaryType)

+

+ override lazy val dataType: DataType =

+SchemaConverters.toSqlType(avroType.value).dataType

+

+ override def nullable: Boolean = true

+

+ @transient private lazy val reader = new GenericDatumReader[Any](avroType.value)

+

+ @transient private lazy val deserializer = new AvroDeserializer(avroType.value, dataType)

+

+ @transient private var decoder: BinaryDecoder = _

+

+ @transient private var result: Any = _

+

+ override def nullSafeEval(input: Any): Any = {

+val binary = input.asInstanceOf[Array[Byte]]

+decoder = DecoderFactory.get().binaryDecoder(binary, 0, binary.length, decoder)

+result = reader.read(result, decoder)

+deserializer.deserialize(result)

+ }

+

+ override def simpleString: String = {

+s"from_avro(${child.sql}, ${dataType.simpleString})"

--- End diff --

It's not used in `sql`; we can override `sql` here and use the untruncated version.
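The distinction here is that a display string may be truncated for readability, but `sql` output must be complete. A hypothetical sketch (`FromAvroExpr` and `ExprStrings` are invented names; the truncation format is only similar in spirit to Spark's plan-string truncation):

```scala
// Hypothetical sketch; not Catalyst's Expression API.
object ExprStrings {
  /** Shortens a long field list for display, noting how many fields were dropped. */
  def truncatedFields(fields: Seq[String], maxFields: Int): String =
    if (fields.length <= maxFields) fields.mkString(", ")
    else (fields.take(maxFields) :+ s"... ${fields.length - maxFields} more fields").mkString(", ")
}

final case class FromAvroExpr(childSql: String, resultFields: Seq[String]) {
  private def typeString(maxFields: Int): String =
    s"struct<${ExprStrings.truncatedFields(resultFields, maxFields)}>"

  /** For explain output: may be truncated for readability. */
  def simpleString: String = s"from_avro($childSql, ${typeString(maxFields = 2)})"

  /** For SQL text: must round-trip, so it is never truncated. */
  def sql: String = s"from_avro($childSql, ${typeString(Int.MaxValue)})"
}
```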


[GitHub] spark pull request #21817: [SPARK-24861][SS][test] create corrected temp dir...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/21817

[GitHub] spark issue #21817: [SPARK-24861][SS][test] create corrected temp directorie...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/21817 thanks, merging to master!

[GitHub] spark issue #21822: [SPARK-24865] Remove AnalysisBarrier - WIP

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21822 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1156/ Test PASSed.

[GitHub] spark issue #21822: [SPARK-24865] Remove AnalysisBarrier - WIP

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21822 **[Test build #93320 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93320/testReport)** for PR 21822 at commit [`83ffa51`](https://github.com/apache/spark/commit/83ffa51f4b165152dea214be4d73dd518d742a56).

[GitHub] spark issue #20146: [SPARK-11215][ML] Add multiple columns support to String...

Github user viirya commented on the issue: https://github.com/apache/spark/pull/20146 @HyukjinKwon Thanks. Closed and re-opened now.

[GitHub] spark pull request #20146: [SPARK-11215][ML] Add multiple columns support to...

GitHub user viirya reopened a pull request: https://github.com/apache/spark/pull/20146 [SPARK-11215][ML] Add multiple columns support to StringIndexer

## What changes were proposed in this pull request?

This takes over #19621 to add multi-column support to StringIndexer.

## How was this patch tested?

Added tests.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/viirya/spark-1 SPARK-11215

Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/20146.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #20146

* commit bb990f1a6511d8ce20f4fff254dfe0ff43262a10 Author: Liang-Chi Hsieh Date: 2018-01-03T03:51:59Z Add multi-column support to StringIndexer.
* commit 26cc94bb335cf0ba3bcdbc2b78effd447026792c Author: Liang-Chi Hsieh Date: 2018-01-07T01:42:28Z Fix glm test.
* commit 540c364d2a70ecd6ee5b92fadedc5e9b85026d2c Author: Liang-Chi Hsieh Date: 2018-01-16T08:20:19Z Merge remote-tracking branch 'upstream/master' into SPARK-11215
* commit 18acbbf7b70b87c75ba62be863580fe9accc23b4 Author: Liang-Chi Hsieh Date: 2018-01-24T12:03:26Z Improve test cases.
* commit b884fb5c0ce1e627390d08d8425721ea8e4d Author: Liang-Chi Hsieh Date: 2018-01-27T00:58:06Z Merge remote-tracking branch 'upstream/master' into SPARK-11215
* commit 76ff7bf6054a687abd9fc16c8044020a5454d95f Author: Liang-Chi Hsieh Date: 2018-04-19T11:27:21Z Merge remote-tracking branch 'upstream/master' into SPARK-11215
* commit 50af02eaccce7cecb7c3093d5bc14675ca860c22 Author: Liang-Chi Hsieh Date: 2018-04-19T11:30:46Z Change from 2.3 to 2.4.
* commit c1be2c7e28ebdfed580577a108d2f254834caed7 Author: Liang-Chi Hsieh Date: 2018-04-23T10:15:49Z Address comments.
* commit ed35d875414ba3cf8751a77463f61665e9c373b0 Author: Liang-Chi Hsieh Date: 2018-04-23T14:00:16Z Address comment.
* commit a1dcfda85243a1e2210177f2acfb78821c539b17 Author: Liang-Chi Hsieh Date: 2018-04-24T06:41:07Z Use SQL Aggregator for counting string labels.
* commit c1685228f7ec7f4904bca67efaee70498b9894c8 Author: Liang-Chi Hsieh Date: 2018-04-25T13:28:24Z Merge remote-tracking branch 'upstream/master' into SPARK-11215
* commit a6551b02a10428d66e0dadcfcb5a8da3798ec814 Author: Liang-Chi Hsieh Date: 2018-04-26T04:13:09Z Drop NA values for both frequency and alphabet order types.
* commit c003bd3d6c58cf19249ff0ba9dd10140971d655c Author: Liang-Chi Hsieh Date: 2018-07-18T18:58:21Z Merge remote-tracking branch 'upstream/master' into SPARK-11215
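The commit history mentions a frequency order type and an alphabet order type, with NA values dropped for both. Below is a plain-Scala sketch of that per-column indexing. `MultiColumnIndexer` is a hypothetical name, and counting uses a local `groupBy` rather than a SQL aggregator. Breaking frequency ties alphabetically is an assumption made here for determinism:

```scala
// Hypothetical sketch of per-column label indexing; not Spark ML's StringIndexer.
object MultiColumnIndexer {
  sealed trait OrderType
  case object FrequencyDesc extends OrderType
  case object AlphabetAsc extends OrderType

  /** Builds a label -> index map for one column, dropping nulls (NA) first. */
  def fitColumn(values: Seq[String], order: OrderType): Map[String, Int] = {
    val present = values.filter(_ != null)
    val labels = order match {
      case FrequencyDesc =>
        present.groupBy(identity).toSeq
          .map { case (label, occurrences) => (label, occurrences.size) }
          // Most frequent first; ties broken alphabetically (an assumption).
          .sortBy { case (label, count) => (-count, label) }
          .map(_._1)
      case AlphabetAsc => present.distinct.sorted
    }
    labels.zipWithIndex.toMap
  }

  /** Fits every column independently with the same order type. */
  def fit(columns: Map[String, Seq[String]], order: OrderType): Map[String, Map[String, Int]] =
    columns.map { case (name, values) => name -> fitColumn(values, order) }
}
```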

[GitHub] spark pull request #20146: [SPARK-11215][ML] Add multiple columns support to...

Github user viirya closed the pull request at: https://github.com/apache/spark/pull/20146

[GitHub] spark issue #21821: [SPARK-24867] [SQL] Add AnalysisBarrier to DataFrameWrit...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21821 Merged build finished. Test PASSed.

[GitHub] spark pull request #21774: [SPARK-24811][SQL]Avro: add new function from_avr...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21774#discussion_r203938957

--- Diff: external/avro/src/main/scala/org/apache/spark/sql/avro/package.scala ---

@@ -36,4 +40,27 @@ package object avro {

@scala.annotation.varargs

def avro(sources: String*): DataFrame =

reader.format("avro").load(sources: _*)

}

+

+ /**

+ * Converts a binary column of avro format into its corresponding catalyst value. The specified

+ * schema must match the read data, otherwise the behavior is undefined: it may fail or return

+ * arbitrary result.

+ *

--- End diff --

+1


[GitHub] spark pull request #21774: [SPARK-24811][SQL]Avro: add new function from_avr...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21774#discussion_r203938964

--- Diff: external/avro/src/main/scala/org/apache/spark/sql/avro/AvroDataToCatalyst.scala ---

@@ -0,0 +1,64 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql

+

+import org.apache.avro.generic.GenericDatumReader

+import org.apache.avro.io.{BinaryDecoder, DecoderFactory}

+

+import org.apache.spark.sql.avro.{AvroDeserializer, SchemaConverters, SerializableSchema}

+import org.apache.spark.sql.catalyst.expressions.{ExpectsInputTypes, Expression, UnaryExpression}

+import org.apache.spark.sql.catalyst.expressions.codegen.{CodegenContext, CodeGenerator, ExprCode}

+import org.apache.spark.sql.types.{AbstractDataType, BinaryType, DataType}

+

+case class AvroDataToCatalyst(child: Expression, avroType: SerializableSchema)

+ extends UnaryExpression with ExpectsInputTypes {

+

+ override def inputTypes: Seq[AbstractDataType] = Seq(BinaryType)

+

+ override lazy val dataType: DataType =

+SchemaConverters.toSqlType(avroType.value).dataType

+

+ override def nullable: Boolean = true

+

+ @transient private lazy val reader = new GenericDatumReader[Any](avroType.value)

+

+ @transient private lazy val deserializer = new AvroDeserializer(avroType.value, dataType)

+

+ @transient private var decoder: BinaryDecoder = _

+

+ @transient private var result: Any = _

+

+ override def nullSafeEval(input: Any): Any = {

+val binary = input.asInstanceOf[Array[Byte]]

+decoder = DecoderFactory.get().binaryDecoder(binary, 0, binary.length, decoder)

+result = reader.read(result, decoder)

+deserializer.deserialize(result)

+ }

+

+ override def simpleString: String = {

+s"from_avro(${child.sql}, ${dataType.simpleString})"

--- End diff --

But this is also used in `sql`. Do we want the truncated string form in `sql` too?


[GitHub] spark pull request #21774: [SPARK-24811][SQL]Avro: add new function from_avr...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21774#discussion_r203938936

--- Diff: external/avro/src/main/scala/org/apache/spark/sql/avro/package.scala ---

@@ -36,4 +40,27 @@ package object avro {

@scala.annotation.varargs

def avro(sources: String*): DataFrame =

reader.format("avro").load(sources: _*)

}

+

+ /**

+ * Converts a binary column of avro format into its corresponding catalyst value. The specified

+ * schema must match the read data, otherwise the behavior is undefined: it may fail or return

+ * arbitrary result.

+ *

+ * @param data the binary column.

+ * @param avroType the avro type.

+ */

+ @Experimental

+ def from_avro(data: Column, avroType: Schema): Column = {

--- End diff --

`Column` can support arbitrary expressions. Personally, I don't like these string-based overloads...

Since it's in an external module, I think we can only add Python/R/SQL versions once we have a persistent UDF API.


[GitHub] spark issue #21821: [SPARK-24867] [SQL] Add AnalysisBarrier to DataFrameWrit...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21821 **[Test build #93319 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93319/testReport)** for PR 21821 at commit [`9edc28f`](https://github.com/apache/spark/commit/9edc28fdcb7261f01db716f65e723668a493327e).

[GitHub] spark pull request #21774: [SPARK-24811][SQL]Avro: add new function from_avr...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21774#discussion_r203938730

--- Diff: external/avro/src/main/scala/org/apache/spark/sql/avro/AvroDataToCatalyst.scala ---

@@ -0,0 +1,64 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql

+

+import org.apache.avro.generic.GenericDatumReader

+import org.apache.avro.io.{BinaryDecoder, DecoderFactory}

+

+import org.apache.spark.sql.avro.{AvroDeserializer, SchemaConverters, SerializableSchema}

+import org.apache.spark.sql.catalyst.expressions.{ExpectsInputTypes, Expression, UnaryExpression}

+import org.apache.spark.sql.catalyst.expressions.codegen.{CodegenContext, CodeGenerator, ExprCode}

+import org.apache.spark.sql.types.{AbstractDataType, BinaryType, DataType}

+

+case class AvroDataToCatalyst(child: Expression, avroType: SerializableSchema)

+ extends UnaryExpression with ExpectsInputTypes {

+

+ override def inputTypes: Seq[AbstractDataType] = Seq(BinaryType)

+

+ override lazy val dataType: DataType =

+SchemaConverters.toSqlType(avroType.value).dataType

+

+ override def nullable: Boolean = true

+

+ @transient private lazy val reader = new GenericDatumReader[Any](avroType.value)

+

+ @transient private lazy val deserializer = new AvroDeserializer(avroType.value, dataType)

+

+ @transient private var decoder: BinaryDecoder = _

+

+ @transient private var result: Any = _

+

+ override def nullSafeEval(input: Any): Any = {

+val binary = input.asInstanceOf[Array[Byte]]

+decoder = DecoderFactory.get().binaryDecoder(binary, 0, binary.length, decoder)

+result = reader.read(result, decoder)

+deserializer.deserialize(result)

+ }

+

+ override def simpleString: String = {

+s"from_avro(${child.sql}, ${dataType.simpleString})"

--- End diff --

IIRC `simpleString` will be used in the plan string and should not be too long.


[GitHub] spark issue #21823: [SPARK-24870][SQL]Cache can't work normally if there are...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/21823 Do you know why this is happening? It's super weird that `select key from src_cache where positiveNum = 1` can hit the cache but `select key from src_cache` cannot.

[GitHub] spark issue #21533: [SPARK-24195][Core] Ignore the files with "local" scheme...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21533 Thanks everyone for your help!

[GitHub] spark issue #21320: [SPARK-4502][SQL] Parquet nested column pruning - founda...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21320 @HyukjinKwon We plan to merge this highly desirable feature into the Spark 2.4 release.

[GitHub] spark issue #21733: [SPARK-24763][SS] Remove redundant key data from value i...

Github user HeartSaVioR commented on the issue: https://github.com/apache/spark/pull/21733 I'd now like to propose changing the default behavior to use the new path while keeping backward compatibility, so I applied that to the patch. I'm still open to the first approach of exposing it as an advanced option, and happy to roll back if we decide to go that way.

[GitHub] spark issue #21820: [SPARK-24868][PYTHON]add sequence function in Python

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21820 Merged build finished. Test PASSed.

[GitHub] spark issue #21820: [SPARK-24868][PYTHON]add sequence function in Python

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21820 **[Test build #93317 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93317/testReport)** for PR 21820 at commit [`725c1b7`](https://github.com/apache/spark/commit/725c1b74d13309a567698b24150d1e7e06662769). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #21824: [SPARK-24871][SQL] Refactor Concat and MapConcat to avoi...

Github user ueshin commented on the issue: https://github.com/apache/spark/pull/21824 also cc @cloud-fan @gatorsmile

[GitHub] spark issue #21802: [SPARK-23928][SQL] Add shuffle collection function.

Github user ueshin commented on the issue: https://github.com/apache/spark/pull/21802 cc @cloud-fan @gatorsmile

[GitHub] spark pull request #21809: [SPARK-24851] : Map a Stage ID to its Associated...

Github user jiangxb1987 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21809#discussion_r203936074

--- Diff: core/src/main/scala/org/apache/spark/status/AppStatusStore.scala ---

@@ -94,6 +94,13 @@ private[spark] class AppStatusStore(

}.toSeq

}

+ def getJobIdsAssociatedWithStage(stageId: Int): Seq[Set[Int]] = {

--- End diff --

We don't need to fetch all the stage attempts; the first one is enough to get all the jobIds.
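
Here is a small model of this suggestion. It looks up only the earliest attempt of the stage and returns that attempt's job IDs as a single set, rather than one set per attempt. `StageAttempt` and `job_ids_for_stage` are hypothetical stand-ins for the status store's records. The sketch assumes, as the comment does, that all attempts of a stage share the same job IDs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageAttempt:
    """Hypothetical stand-in for one stored attempt of a stage."""
    stage_id: int
    attempt_id: int
    job_ids: frozenset


def job_ids_for_stage(attempts, stage_id):
    """Return a stage's job IDs by reading only its earliest attempt.

    Assumes every attempt of a stage carries the same job IDs.
    """
    first = min(
        (a for a in attempts if a.stage_id == stage_id),
        key=lambda a: a.attempt_id,
        default=None,
    )
    return set(first.job_ids) if first is not None else set()
```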


[GitHub] spark pull request #21818: [SPARK-24860][SQL] Support setting of partitionOv...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21818#discussion_r203934987

--- Diff: sql/core/src/test/scala/org/apache/spark/sql/sources/InsertSuite.scala ---

@@ -486,6 +486,25 @@ class InsertSuite extends DataSourceTest with SharedSQLContext {

}

}

}

+ test("SPARK-24860: dynamic partition overwrite specified per source without catalog table") {

+withTempPath { path =>

+ Seq((1, 1, 1)).toDF("i", "part1", "part2")

--- End diff --

Can we simplify the test? Ideally we only need one partition column. Write some initial data to the table, then do one overwrite with partitionOverwriteMode=dynamic and another with partitionOverwriteMode=static.
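
The simplified test needs to distinguish two outcomes for a single partition column. The plain-Python model below makes both concrete. It illustrates expected results under stated assumptions and is not Spark code. `overwrite` is a hypothetical helper, and static mode is modeled as replacing every existing partition, which is the behavior when no static partition values are given.

```python
def overwrite(table, new_data, mode):
    """Model an overwrite of a table partitioned by one column.

    table, new_data: dict mapping partition value -> list of rows.
    "static": every existing partition is dropped before writing.
    "dynamic": only partitions present in new_data are replaced.
    """
    mode = mode.lower()
    if mode == "static":
        result = {}
    elif mode == "dynamic":
        result = {part: list(rows) for part, rows in table.items()}
    else:
        raise ValueError(f"unknown partitionOverwriteMode: {mode}")
    for part, rows in new_data.items():
        result[part] = list(rows)
    return result


initial = {1: ["a"], 2: ["b"]}
after_dynamic = overwrite(initial, {2: ["c"]}, "dynamic")  # {1: ["a"], 2: ["c"]}
after_static = overwrite(initial, {2: ["c"]}, "static")    # {2: ["c"]}
```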


[GitHub] spark issue #21824: [SPARK-24871][SQL] Refactor Concat and MapConcat to avoi...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21824 **[Test build #93316 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93316/testReport)** for PR 21824 at commit [`644a30d`](https://github.com/apache/spark/commit/644a30d32e390f39f25515bb251e120e69502f27).

[GitHub] spark issue #21820: [SPARK-24868][PYTHON]add sequence function in Python

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21820 **[Test build #93317 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93317/testReport)** for PR 21820 at commit [`725c1b7`](https://github.com/apache/spark/commit/725c1b74d13309a567698b24150d1e7e06662769).

[GitHub] spark pull request #21818: [SPARK-24860][SQL] Support setting of partitionOv...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21818#discussion_r203934743

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/InsertIntoHadoopFsRelationCommand.scala ---

@@ -91,8 +92,12 @@ case class InsertIntoHadoopFsRelationCommand(

val pathExists = fs.exists(qualifiedOutputPath)

-val enableDynamicOverwrite =

- sparkSession.sessionState.conf.partitionOverwriteMode == PartitionOverwriteMode.DYNAMIC

+val parameters = CaseInsensitiveMap(options)

+

+val enableDynamicOverwrite = parameters.get("partitionOverwriteMode")

+ .map(mode => PartitionOverwriteMode.withName(mode.toUpperCase))

+ .getOrElse(sparkSession.sessionState.conf.partitionOverwriteMode) ==

+ PartitionOverwriteMode.DYNAMIC

--- End diff --

nit: this is too long

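```scala
// Prefer the per-write option and fall back to the session-level setting.
val partitionOverwriteMode = parameters.get("partitionOverwriteMode")
  .map(mode => PartitionOverwriteMode.withName(mode.toUpperCase))
  .getOrElse(sparkSession.sessionState.conf.partitionOverwriteMode)
val enableDynamicOverwrite = partitionOverwriteMode == PartitionOverwriteMode.DYNAMIC
```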
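
The diff resolves the mode in a fixed order: the per-write option first, then the session conf as a fallback, with option keys matched case-insensitively. That order can be modeled in plain Python as follows.

- The enum mirrors `PartitionOverwriteMode`.
- Lowercasing the keys stands in for `CaseInsensitiveMap`.
- The function names are hypothetical.
- An unknown mode raises `KeyError` here, whereas Scala's `Enumeration.withName` throws `NoSuchElementException`.

```python
from enum import Enum


class PartitionOverwriteMode(Enum):
    STATIC = "STATIC"
    DYNAMIC = "DYNAMIC"


def resolve_overwrite_mode(options, session_default):
    """Per-write option wins over the session default; option keys are case-insensitive."""
    lowered = {key.lower(): value for key, value in options.items()}
    raw = lowered.get("partitionoverwritemode")
    if raw is None:
        return session_default
    # Enum lookup by member name raises KeyError for an unknown mode.
    return PartitionOverwriteMode[raw.upper()]


def enable_dynamic_overwrite(options, session_default):
    mode = resolve_overwrite_mode(options, session_default)
    return mode is PartitionOverwriteMode.DYNAMIC
```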


[GitHub] spark issue #21824: [SPARK-24871][SQL] Refactor Concat and MapConcat to avoi...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21824 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1153/ Test PASSed.

[GitHub] spark pull request #21818: [SPARK-24860][SQL] Support setting of partitionOv...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21818#discussion_r203934766

--- Diff: sql/core/src/test/scala/org/apache/spark/sql/sources/InsertSuite.scala ---

@@ -486,6 +486,25 @@ class InsertSuite extends DataSourceTest with SharedSQLContext {

}

}

}

+ test("SPARK-24860: dynamic partition overwrite specified per source without catalog table") {

--- End diff --

nit: add a blank line before this new test case


[GitHub] spark issue #21824: [SPARK-24871][SQL] Refactor Concat and MapConcat to avoi...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21824 Merged build finished. Test PASSed.

[GitHub] spark issue #21824: [SPARK-24871][SQL] Refactor Concat and MapConcat to avoi...

Github user ueshin commented on the issue: https://github.com/apache/spark/pull/21824 cc @mn-mikke @bersprockets

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r203934633

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetReadSupport.scala ---

@@ -288,6 +310,27 @@ private[parquet] object ParquetReadSupport {

}

}

+ /**

+ * Computes the structural intersection between two Parquet group types.

+ */

+ private def intersectParquetGroups(

+ groupType1: GroupType, groupType2: GroupType): Option[GroupType] = {

+val fields =

+ groupType1.getFields.asScala

+.filter(field => groupType2.containsField(field.getName))

+.flatMap {

+ case field1: GroupType =>

--- End diff --

`field1` -> `field`.
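
To build intuition for what `intersectParquetGroups` computes, here is a plain-Python model that works over nested dicts rather than Parquet `GroupType`s. It follows the filter/flatMap shape visible in the diff:

- Fields of the first group are kept only if the second group has a field with the same name.
- Nested groups are intersected recursively.
- An empty result becomes `None`.

Two details are assumptions of this sketch. Dropping a group whose counterpart is a primitive is inferred, because that branch is not visible in the excerpt. The loop variable is named `field`, following the review.

```python
def intersect_groups(group1, group2):
    """Structural intersection of two nested schemas.

    A group is a dict mapping field name -> primitive type name (str)
    or nested group (dict). Returns None when no field survives.
    """
    fields = {}
    for name, field in group1.items():
        if name not in group2:
            continue  # keep only fields present in both groups
        if isinstance(field, dict):
            other = group2[name]
            if not isinstance(other, dict):
                continue  # group vs. primitive: no structural overlap
            nested = intersect_groups(field, other)
            if nested is not None:
                fields[name] = nested
        else:
            fields[name] = field
    return fields or None
```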


[GitHub] spark pull request #21824: [SPARK-24871][SQL] Refactor Concat and MapConcat ...

GitHub user ueshin opened a pull request: https://github.com/apache/spark/pull/21824

[SPARK-24871][SQL] Refactor Concat and MapConcat to avoid creating concatenator object for each row.

## What changes were proposed in this pull request?

Refactor `Concat` and `MapConcat` to:

- avoid creating concatenator object for each row.
- make `Concat` handle `containsNull` properly.
- make `Concat` shortcut if `null` child is found.

## How was this patch tested?

Added some tests and existing tests.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/ueshin/apache-spark issues/SPARK-24871/refactor_concat_mapconcat

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21824.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #21824

commit c4d4b28756d93ecc5c1e9a50e705e2a9cb4b8460 Author: Takuya UESHIN Date: 2018-07-19T08:32:15Z Refactor `Concat` and `MapConcat` to avoid creating concatenator for each row.

commit 644a30d32e390f39f25515bb251e120e69502f27 Author: Takuya UESHIN Date: 2018-07-19T10:38:20Z Make `Concat` shortcut if null child is found.
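
The first and third points can be sketched in plain Python. The concatenation strategy is chosen once, outside the per-row path, and evaluation stops at the first null child. This is a hypothetical model of the idea, not the patch's code. Children are passed as zero-argument callables so that the shortcut is observable, and `MapConcat` and `containsNull` handling are not modeled.

```python
def build_concat(kind):
    """Build the concatenation function once, outside any per-row loop.

    kind: "string" or "array" (hypothetical; MapConcat is not modeled).
    """
    if kind == "string":
        def join(parts):
            return "".join(parts)
    elif kind == "array":
        def join(parts):
            out = []
            for part in parts:
                out.extend(part)
            return out
    else:
        raise ValueError(f"unsupported kind: {kind}")

    def concat(child_evaluators):
        parts = []
        for evaluate in child_evaluators:
            value = evaluate()
            if value is None:
                # Shortcut: a null child makes the result null, so the
                # remaining children are never evaluated.
                return None
            parts.append(value)
        return join(parts)

    return concat
```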

[GitHub] spark pull request #21820: [SPARK-24868][PYTHON]add sequence function in Pyt...

Github user huaxingao commented on a diff in the pull request:

https://github.com/apache/spark/pull/21820#discussion_r203934505

--- Diff: python/pyspark/sql/functions.py ---

@@ -2551,6 +2551,27 @@ def map_concat(*cols):

return Column(jc)

+@since(2.4)

+def sequence(start, stop, step=None):

+"""

+Generate a sequence of integers from start to stop, incrementing by step.

+If step is not set, incrementing by 1 if start is less than or equal to stop, otherwise -1.

+

+>>> df1 = spark.createDataFrame([(-2, 2)], ('C1', 'C2'))

+>>> df1.select(sequence('C1', 'C2').alias('r')).collect()

+[Row(r=[-2, -1, 0, 1, 2])]

+>>> df2 = spark.createDataFrame([(4, -4, -2)], ('C1', 'C2', 'C3'))

--- End diff --

It will throw `java.lang.IllegalArgumentException`.

There is a check in `collectionOperations.scala`:

```scala
require(
  (step > num.zero && start <= stop)
    || (step < num.zero && start >= stop)
    || (step == num.zero && start == stop),
  s"Illegal sequence boundaries: $start to $stop by $step")
```
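
The semantics described in the docstring and the `require` check can be modeled in plain Python. This is a sketch, not the PySpark implementation. `ValueError` stands in for `java.lang.IllegalArgumentException`, and returning `[start]` for a zero step with equal bounds is this sketch's reading of the third clause.

```python
def sequence(start, stop, step=None):
    """Model of sequence(start, stop, step) for integers."""
    if step is None:
        # Default step: 1 when counting up (or equal bounds), -1 when counting down.
        step = 1 if start <= stop else -1
    # Same three admissible cases as the Scala `require` check.
    if not ((step > 0 and start <= stop)
            or (step < 0 and start >= stop)
            or (step == 0 and start == stop)):
        raise ValueError(f"Illegal sequence boundaries: {start} to {stop} by {step}")
    if step == 0:
        return [start]
    # range() excludes its end, so extend it one unit past stop.
    end = stop + 1 if step > 0 else stop - 1
    return list(range(start, end, step))
```

With these rules, `sequence(4, -4, 2)` is rejected, because a positive step can never reach a smaller stop.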


[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r203934281

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetReadSupport.scala ---

@@ -47,16 +47,25 @@ import org.apache.spark.sql.types._

*

* Due to this reason, we no longer rely on [[ReadContext]] to pass requested schema from [[init()]]

* to [[prepareForRead()]], but use a private `var` for simplicity.

+ *

+ * @param parquetMrCompatibility support reading with parquet-mr or Spark's built-in Parquet reader

*/

-private[parquet] class ParquetReadSupport(val convertTz: Option[TimeZone])

+private[parquet] class ParquetReadSupport(val convertTz: Option[TimeZone],

+parquetMrCompatibility: Boolean)

extends ReadSupport[UnsafeRow] with Logging {

private var catalystRequestedSchema: StructType = _

+ /**

+ * Construct a [[ParquetReadSupport]] with [[convertTz]] set to [[None]] and

+ * [[parquetMrCompatibility]] set to [[false]].

+ *

+ * We need a zero-arg constructor for SpecificParquetRecordReaderBase. But that is only

+ * used in the vectorized reader, where we get the convertTz value directly, and the value here

+ * is ignored. Further, we set [[parquetMrCompatibility]] to [[false]] as this constructor is only

+ * called by the Spark reader.

--- End diff --

re:

https://github.com/apache/spark/pull/21320/files/cb858f202e49d69f2044681e37f982dc10676296#r199631341

Actually, it doesn't look clear to me either. What does the flag indicate? Do you mean the normal Parquet reader vs. the vectorized Parquet reader?


[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r203933423

--- Diff: sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/planning/SelectedFieldSuite.scala ---

@@ -0,0 +1,387 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.catalyst.planning

+

+import org.scalatest.BeforeAndAfterAll

+import org.scalatest.exceptions.TestFailedException

+

+import org.apache.spark.SparkFunSuite

+import org.apache.spark.sql.catalyst.dsl.plans._

+import org.apache.spark.sql.catalyst.expressions.NamedExpression

+import org.apache.spark.sql.catalyst.parser.CatalystSqlParser

+import org.apache.spark.sql.catalyst.plans.logical.LocalRelation

+import org.apache.spark.sql.types._

+

+// scalastyle:off line.size.limit

+class SelectedFieldSuite extends SparkFunSuite with BeforeAndAfterAll {

+  // The test schema as a tree string, i.e. `schema.treeString`

+  // root

+  //  |-- col1: string (nullable = false)

+  //  |-- col2: struct (nullable = true)

+  //  |    |-- field1: integer (nullable = true)

+  //  |    |-- field2: array (nullable = true)

+  //  |    |    |-- element: integer (containsNull = false)

+  //  |    |-- field3: array (nullable = false)

+  //  |    |    |-- element: struct (containsNull = true)

+  //  |    |    |    |-- subfield1: integer (nullable = true)

+  //  |    |    |    |-- subfield2: integer (nullable = true)

+  //  |    |    |    |-- subfield3: array (nullable = true)

+  //  |    |    |    |    |-- element: integer (containsNull = true)

+  //  |    |-- field4: map (nullable = true)

+  //  |    |    |-- key: string

+  //  |    |    |-- value: struct (valueContainsNull = false)

+  //  |    |    |    |-- subfield1: integer (nullable = true)

+  //  |    |    |    |-- subfield2: array (nullable = true)

+  //  |    |    |    |    |-- element: integer (containsNull = false)

+  //  |    |-- field5: array (nullable = false)

+  //  |    |    |-- element: struct (containsNull = true)

+  //  |    |    |    |-- subfield1: struct (nullable = false)

+  //  |    |    |    |    |-- subsubfield1: integer (nullable = true)

+  //  |    |    |    |    |-- subsubfield2: integer (nullable = true)

+  //  |    |    |    |-- subfield2: struct (nullable = true)

+  //  |    |    |    |    |-- subsubfield1: struct (nullable = true)

+  //  |    |    |    |    |    |-- subsubsubfield1: string (nullable = true)

+  //  |    |    |    |    |-- subsubfield2: integer (nullable = true)

+  //  |    |-- field6: struct (nullable = true)

+  //  |    |    |-- subfield1: string (nullable = false)

+  //  |    |    |-- subfield2: string (nullable = true)

+  //  |    |-- field7: struct (nullable = true)

+  //  |    |    |-- subfield1: struct (nullable = true)

+  //  |    |    |    |-- subsubfield1: integer (nullable = true)

+  //  |    |    |    |-- subsubfield2: integer (nullable = true)

+  //  |    |-- field8: map (nullable = true)

+  //  |    |    |-- key: string

+  //  |    |    |-- value: array (valueContainsNull = false)

+  //  |    |    |    |-- element: struct (containsNull = true)

+  //  |    |    |    |    |-- subfield1: integer (nullable = true)

+  //  |    |    |    |    |-- subfield2: array (nullable = true)

+  //  |    |    |    |    |    |-- element: integer (containsNull = false)

+  //  |    |-- field9: map (nullable = true)

+  //  |    |    |-- key: string

+  //  |    |    |-- value: integer (valueContainsNull = false)

+  //  |-- col3: array (nullable = false)

+  //  |    |-- element: struct (containsNull = false)

+  //  |    |    |-- field1: struct (nullable = true)

+  //  |    |    |    |-- subfield1: integer (nullable = false)

+  //  |    |    |    |-- subfield2: integer (nullable = true)

+  //  |    |    |-- field2: map (nullable = true)

+  //  |    |    |    |-- key: string

+  //  |    |    |    |-- value: integer (valueConta

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r203933307

--- Diff: sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetSchemaPruningSuite.scala ---

@@ -0,0 +1,156 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.datasources.parquet

+

+import java.io.File

+

+import org.apache.spark.sql.{QueryTest, Row}

+import org.apache.spark.sql.execution.FileSchemaPruningTest

+import org.apache.spark.sql.internal.SQLConf

+import org.apache.spark.sql.test.SharedSQLContext

+

+class ParquetSchemaPruningSuite

+extends QueryTest

+with ParquetTest

+with FileSchemaPruningTest

+with SharedSQLContext {

--- End diff --

Can we simply do:

```scala
override def beforeEach(): Unit = {
  super.beforeEach()
  spark.conf.set(SQLConf.NESTED_SCHEMA_PRUNING_ENABLED.key, "true")
}

override def afterEach(): Unit = {
  try {
    spark.conf.unset(SQLConf.NESTED_SCHEMA_PRUNING_ENABLED.key)
  } finally {
    super.afterEach()
  }
}
```

without the complicated hierarchy?


[GitHub] spark issue #21653: [SPARK-13343] speculative tasks that didn't commit shoul...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21653 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/93302/ Test PASSed.

[GitHub] spark issue #21733: [SPARK-24763][SS] Remove redundant key data from value i...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21733 **[Test build #93315 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93315/testReport)** for PR 21733 at commit [`977428c`](https://github.com/apache/spark/commit/977428cb35a6fc0a9fa7a0ca1a51e39a94447a01).

[GitHub] spark issue #21653: [SPARK-13343] speculative tasks that didn't commit shoul...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21653 Merged build finished. Test PASSed.

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r203931755

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/planning/ProjectionOverSchema.scala ---

@@ -0,0 +1,62 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *     http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.catalyst.planning

+

+import org.apache.spark.sql.catalyst.expressions._

+import org.apache.spark.sql.types._

+

+/**

+ * A Scala extractor that projects an expression over a given schema. Data types,

+ * field indexes and field counts of complex type extractors and attributes

+ * are adjusted to fit the schema. All other expressions are left as-is. This

+ * class is motivated by columnar nested schema pruning.

+ */

+case class ProjectionOverSchema(schema: StructType) {

--- End diff --

It still looks weird that we place this under `catalyst`, since we currently only use it under `execution`.
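The docstring quoted above describes `ProjectionOverSchema` as a Scala extractor that adjusts data types, field indexes and field counts to fit a schema. The following is a minimal sketch of that idea under heavy simplification, not the PR's implementation: `Expr`, `Attr`, `GetField`, `Literal` and `ProjectOver` are hypothetical, a struct type is modelled as an ordered list of field names, and only one level of nesting is handled.

```scala
// Hypothetical, simplified expression model; these are NOT Catalyst classes.
sealed trait Expr
// An attribute whose struct type is modelled as an ordered list of field names.
final case class Attr(name: String, fields: Vector[String]) extends Expr
// Reads field `name` from a struct-typed attribute by position;
// `numFields` records how many fields that struct has.
final case class GetField(child: Attr, name: String, ordinal: Int, numFields: Int) extends Expr
final case class Literal(value: Int) extends Expr

// Extractor: rewrites an expression to fit a (possibly pruned) schema.
// Returns None for expressions it does not know how to project.
final case class ProjectOver(schema: Map[String, Vector[String]]) {
  def unapply(e: Expr): Option[Expr] = e match {
    case Attr(name, _) if schema.contains(name) =>
      // Replace the attribute's type with the pruned one.
      Some(Attr(name, schema(name)))
    case GetField(child, name, _, _) =>
      // Project the child first, then recompute ordinal and field count
      // from the pruned struct.
      unapply(child).collect {
        case p @ Attr(_, fields) if fields.contains(name) =>
          GetField(p, name, fields.indexOf(name), fields.size)
      }
    case _ => None
  }
}

object ProjectOverDemo {
  def main(args: Array[String]): Unit = {
    // Full struct `name` is (first, middle, last); `last` is ordinal 2 of 3.
    val getLast = GetField(Attr("name", Vector("first", "middle", "last")), "last", 2, 3)
    // Pruned schema keeps only `name.last`.
    val pruned = ProjectOver(Map("name" -> Vector("last")))

    getLast match {
      case pruned(projected) => println(projected) // GetField(Attr(name,Vector(last)),last,0,1)
      case other             => println(s"left as-is: $other")
    }
  }
}
```

Because `unapply` is an instance method, a configured `ProjectOver` value can be used directly in a pattern (`case pruned(projected) =>`); expressions it cannot project yield `None` and fall through to the next case.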

[GitHub] spark issue #21813: [SPARK-24424][SQL] Support ANSI-SQL compliant syntax for...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21813 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/93304/ Test PASSed.

[GitHub] spark issue #21813: [SPARK-24424][SQL] Support ANSI-SQL compliant syntax for...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21813 **[Test build #93304 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93304/testReport)** for PR 21813 at commit [`e0c57f7`](https://github.com/apache/spark/commit/e0c57f73ec4c3e24e4af107cb457c9b1c13f4174). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r203931060

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/planning/ProjectionOverSchema.scala

---

@@ -0,0 +1,62 @@

+package org.apache.spark.sql.catalyst.planning

+

+import org.apache.spark.sql.catalyst.expressions._

+import org.apache.spark.sql.types._

+

+/**

+ * A Scala extractor that projects an expression over a given schema. Data types,

+ * field indexes and field counts of complex type extractors and attributes

+ * are adjusted to fit the schema. All other expressions are left as-is. This

+ * class is motivated by columnar nested schema pruning.

+ */

+case class ProjectionOverSchema(schema: StructType) {

+  private val fieldNames = schema.fieldNames.toSet

+

+  def unapply(expr: Expression): Option[Expression] = getProjection(expr)

+

+  private def getProjection(expr: Expression): Option[Expression] =

+    expr match {

+      case a @ AttributeReference(name, _, _, _) if (fieldNames.contains(name)) =>

+        Some(a.copy(dataType = schema(name).dataType)(a.exprId, a.qualifier))

+      case GetArrayItem(child, arrayItemOrdinal) =>

+        getProjection(child).map {

+          case projection =>

+            GetArrayItem(projection, arrayItemOrdinal)

--- End diff --

nit:

```scala

getProjection(child).map { projection => GetArrayItem(projection, arrayItemOrdinal) }

```
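Both forms in this nit behave the same: a pattern-matching anonymous function whose only case is a bare variable (`case projection =>`) matches every input, so it is equivalent to an ordinary lambda and the `case` only adds noise. A minimal sketch, using a hypothetical `ArrayItem` case class in place of Catalyst's `GetArrayItem`:

```scala
// Hypothetical stand-in for Catalyst's GetArrayItem(child, ordinal).
final case class ArrayItem(child: String, ordinal: Int)

object MapStyleDemo {
  // Form in the diff: a pattern-matching anonymous function whose only case
  // is a bare variable pattern, which always matches.
  def withCase(projected: Option[String]): Option[ArrayItem] =
    projected.map { case projection => ArrayItem(projection, 0) }

  // Form suggested in the nit: an ordinary lambda.
  def withLambda(projected: Option[String]): Option[ArrayItem] =
    projected.map { projection => ArrayItem(projection, 0) }

  def main(args: Array[String]): Unit = {
    for (input <- Seq(Some("prunedChild"), None))
      println(withCase(input) == withLambda(input)) // true, then true
  }
}
```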

[GitHub] spark pull request #21320: [SPARK-4502][SQL] Parquet nested column pruning -...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21320#discussion_r203931030

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/planning/ProjectionOverSchema.scala

---

@@ -0,0 +1,62 @@

+package org.apache.spark.sql.catalyst.planning

+

+import org.apache.spark.sql.catalyst.expressions._

+import org.apache.spark.sql.types._

+

+/**

+ * A Scala extractor that projects an expression over a given schema. Data types,

+ * field indexes and field counts of complex type extractors and attributes

+ * are adjusted to fit the schema. All other expressions are left as-is. This

+ * class is motivated by columnar nested schema pruning.

+ */

+case class ProjectionOverSchema(schema: StructType) {

+  private val fieldNames = schema.fieldNames.toSet

+

+  def unapply(expr: Expression): Option[Expression] = getProjection(expr)

+

+  private def getProjection(expr: Expression): Option[Expression] =

+    expr match {

+      case a @ AttributeReference(name, _, _, _) if (fieldNames.contains(name)) =>

+        Some(a.copy(dataType = schema(name).dataType)(a.exprId, a.qualifier))

+      case GetArrayItem(child, arrayItemOrdinal) =>

+        getProjection(child).map {

+          case projection =>

+            GetArrayItem(projection, arrayItemOrdinal)

+        }

+      case GetArrayStructFields(child, StructField(name, _, _, _), _, numFields, containsNull) =>

+        getProjection(child).map(p => (p, p.dataType)).map {

+          case (projection, ArrayType(projSchema @ StructType(_), _)) =>

+            GetArrayStructFields(projection,

+              projSchema(name), projSchema.fieldIndex(name), projSchema.size, containsNull)

+        }

+      case GetMapValue(child, key) =>

+        getProjection(child).map {

+          case projection =>

--- End diff --

nit:

```scala

getProjection(child).map { projection => GetMapValue(projection, key) }

```
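The nit works here because `case projection =>` binds a bare variable and so can never fail to match. The quoted `GetArrayStructFields` branch is different: its `case (projection, ArrayType(projSchema @ StructType(_), _))` destructures the value, so it cannot be rewritten as a plain lambda. As a general Scala point (not something raised in this review), `Option.map` with such a case throws `scala.MatchError` when the value does not match, while `Option.collect` returns `None`. A small sketch with hypothetical types (`DType`, `ArrayOf` and `IntT` are invented for illustration and are not Spark's `DataType` hierarchy):

```scala
object RefutablePatternDemo {
  // Hypothetical, simplified data types; not Spark's DataType hierarchy.
  sealed trait DType
  final case class ArrayOf(element: DType) extends DType
  case object IntT extends DType

  // Destructuring case inside `map`, in the shape of the GetArrayStructFields
  // branch: if the value does not match, scala.MatchError is thrown.
  def elementViaMap(t: Option[DType]): Option[DType] =
    t.map { case ArrayOf(e) => e }

  // `collect` takes the same partial function but returns None on no match.
  def elementViaCollect(t: Option[DType]): Option[DType] =
    t.collect { case ArrayOf(e) => e }

  def main(args: Array[String]): Unit = {
    println(elementViaMap(Some(ArrayOf(IntT))))                  // Some(IntT)
    println(elementViaCollect(Some(IntT)))                       // None
    println(scala.util.Try(elementViaMap(Some(IntT))).isFailure) // true
  }
}
```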

[GitHub] spark issue #21822: [SPARK-24865] Remove AnalysisBarrier - WIP

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21822 Merged build finished. Test FAILed.

[GitHub] spark issue #21822: [SPARK-24865] Remove AnalysisBarrier - WIP

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21822 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/93310/ Test FAILed.

[GitHub] spark issue #21822: [SPARK-24865] Remove AnalysisBarrier - WIP

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21822 **[Test build #93310 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/93310/testReport)** for PR 21822 at commit [`738e99c`](https://github.com/apache/spark/commit/738e99c615f45cfb6e5882a822dafcb8472b78ea). * This patch **fails Spark unit tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #21474: [SPARK-24297][CORE] Fetch-to-disk by default for > 2gb

Github user jerryshao commented on the issue: https://github.com/apache/spark/pull/21474 I will take a look at this sometime today, but don't block on me if it is urgent.