[GitHub] [spark] SparkQA commented on issue #23531: [SPARK-24497][SQL] Support recursive SQL query

SparkQA commented on issue #23531: [SPARK-24497][SQL] Support recursive SQL query URL: https://github.com/apache/spark/pull/23531#issuecomment-526573618 **[Test build #109944 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/109944/testReport)** for PR 23531 at commit [`f35a784`](https://github.com/apache/spark/commit/f35a78495732d03baf671cb9465ed4b00c2d05a3). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #23531: [SPARK-24497][SQL] Support recursive SQL query

AmplabJenkins commented on issue #23531: [SPARK-24497][SQL] Support recursive SQL query URL: https://github.com/apache/spark/pull/23531#issuecomment-526575217 Merged build finished. Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #23531: [SPARK-24497][SQL] Support recursive SQL query

AmplabJenkins commented on issue #23531: [SPARK-24497][SQL] Support recursive SQL query URL: https://github.com/apache/spark/pull/23531#issuecomment-526575225 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/14970/ Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #23531: [SPARK-24497][SQL] Support recursive SQL query

AmplabJenkins removed a comment on issue #23531: [SPARK-24497][SQL] Support recursive SQL query URL: https://github.com/apache/spark/pull/23531#issuecomment-526575217 Merged build finished. Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #23531: [SPARK-24497][SQL] Support recursive SQL query

AmplabJenkins removed a comment on issue #23531: [SPARK-24497][SQL] Support recursive SQL query URL: https://github.com/apache/spark/pull/23531#issuecomment-526575225 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/14970/ Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #25299: [SPARK-27651][Core] Avoid the network when shuffle blocks are fetched from the same host

cloud-fan commented on a change in pull request #25299: [SPARK-27651][Core] Avoid the network when shuffle blocks are fetched from the same host URL: https://github.com/apache/spark/pull/25299#discussion_r319474398 ## File path: core/src/main/scala/org/apache/spark/storage/BlockManager.scala ## @@ -206,6 +206,8 @@ private[spark] class BlockManager( new BlockManager.RemoteBlockDownloadFileManager(this) private val maxRemoteBlockToMem = conf.get(config.MAX_REMOTE_BLOCK_SIZE_FETCH_TO_MEM) + private val executorIdToLocalDirsCache = new mutable.HashMap[String, Array[String]]() Review comment: when do we update it? e.g. what if an executor is down. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #25299: [SPARK-27651][Core] Avoid the network when shuffle blocks are fetched from the same host

cloud-fan commented on a change in pull request #25299: [SPARK-27651][Core]

Avoid the network when shuffle blocks are fetched from the same host

URL: https://github.com/apache/spark/pull/25299#discussion_r319469667

##

File path: core/src/main/scala/org/apache/spark/SparkContext.scala

##

@@ -2851,6 +2851,9 @@ object SparkContext extends Logging {

memoryPerSlaveInt, sc.executorMemory))

}

+// for local cluster mode the SHUFFLE_HOST_LOCAL_DISK_READING_ENABLED

defaults to false

+sc.conf.setIfMissing(config.SHUFFLE_HOST_LOCAL_DISK_READING_ENABLED,

false)

Review comment:

why is this necessary?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #25299: [SPARK-27651][Core] Avoid the network when shuffle blocks are fetched from the same host

cloud-fan commented on a change in pull request #25299: [SPARK-27651][Core]

Avoid the network when shuffle blocks are fetched from the same host

URL: https://github.com/apache/spark/pull/25299#discussion_r319473468

##

File path: core/src/main/scala/org/apache/spark/network/BlockDataManager.scala

##

@@ -22,16 +22,22 @@ import scala.reflect.ClassTag

import org.apache.spark.TaskContext

import org.apache.spark.network.buffer.ManagedBuffer

import org.apache.spark.network.client.StreamCallbackWithID

-import org.apache.spark.storage.{BlockId, StorageLevel}

+import org.apache.spark.storage.{BlockId, ShuffleBlockId, StorageLevel}

private[spark]

trait BlockDataManager {

+ /**

+ * Interface to get host-local shuffle block data. Throws an exception if

the block cannot be

Review comment:

The block manager keeps RDD blocks as well, shall we support it? I think

it's better to have a `def getHostLocalBlockData(blockId: BlockId, dirs:

Array[String])`, to be consistent with `getLocalBlockData`. We can add an

assert in `getHostLocalBlockData` to make sure the `blockId` is

`ShuffleBlockId`, if we don't want to support RDD blocks now.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] SparkQA commented on issue #23531: [SPARK-24497][SQL] Support recursive SQL query

SparkQA commented on issue #23531: [SPARK-24497][SQL] Support recursive SQL query URL: https://github.com/apache/spark/pull/23531#issuecomment-526575750 **[Test build #109945 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/109945/testReport)** for PR 23531 at commit [`1def9fa`](https://github.com/apache/spark/commit/1def9fa7078948d50fa9ff4a80fe0321ce948ada). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #25299: [SPARK-27651][Core] Avoid the network when shuffle blocks are fetched from the same host

cloud-fan commented on a change in pull request #25299: [SPARK-27651][Core] Avoid the network when shuffle blocks are fetched from the same host URL: https://github.com/apache/spark/pull/25299#discussion_r319477754 ## File path: core/src/main/scala/org/apache/spark/storage/BlockManagerMasterEndpoint.scala ## @@ -51,6 +53,13 @@ class BlockManagerMasterEndpoint( // Mapping from block manager id to the block manager's information. private val blockManagerInfo = new mutable.HashMap[BlockManagerId, BlockManagerInfo] + // Mapping from executor id to the block manager's local disk directories. + private val executorIdToLocalDirs = Review comment: shall we update it in `removeBlockManager`? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] gaborgsomogyi opened a new pull request #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks

gaborgsomogyi opened a new pull request #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks URL: https://github.com/apache/spark/pull/25631 ### What changes were proposed in this pull request? At the moment there are 3 places where communication protocol with Kafka cluster has to be set when delegation token used: * On delegation token * On source * On sink Most of the time users are using the same protocol on all these places (within one Kafka cluster). It would be better to declare it in one place (delegation token side) and Kafka sources/sinks can take this config over. In this PR I've I've modified the code in a way that Kafka sources/sinks are taking over delegation token side `security.protocol` configuration when the token and the source/sink matches in `bootstrap.servers` configuration. This default configuration can be overwritten on each source/sink independently by using `kafka.security.protocol` configuration. ### Why are the changes needed? The actual configuration's default behavior represents the minority of the use-cases and inconvenient. ### Does this PR introduce any user-facing change? Yes, with this change users need to provide less configuration parameters by default. ### How was this patch tested? Existing + additional unit tests. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #25628: [SPARK-28897][Core]'coalesce' error when executing dataframe.na.fill

HyukjinKwon commented on a change in pull request #25628:

[SPARK-28897][Core]'coalesce' error when executing dataframe.na.fill

URL: https://github.com/apache/spark/pull/25628#discussion_r319482514

##

File path:

sql/core/src/main/scala/org/apache/spark/sql/DataFrameNaFunctions.scala

##

@@ -435,11 +435,10 @@ final class DataFrameNaFunctions private[sql](df:

DataFrame) {

* Returns a [[Column]] expression that replaces null value in `col` with

`replacement`.

*/

private def fillCol[T](col: StructField, replacement: T): Column = {

-val quotedColName = "`" + col.name + "`"

Review comment:

Does `.` work too?

```scala

scala> val df = spark.range(1).selectExpr("1 as `a.b`")

df: org.apache.spark.sql.DataFrame = [a.b: int]

scala> df.col("a.b")

org.apache.spark.sql.AnalysisException: Cannot resolve column name "a.b"

among (a.b);

at org.apache.spark.sql.Dataset.$anonfun$resolve$1(Dataset.scala:259)

at scala.Option.getOrElse(Option.scala:138)

at org.apache.spark.sql.Dataset.resolve(Dataset.scala:259)

at org.apache.spark.sql.Dataset.col(Dataset.scala:1340)

... 47 elided

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks

AmplabJenkins commented on issue #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks URL: https://github.com/apache/spark/pull/25631#issuecomment-526577073 Can one of the admins verify this patch? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks

AmplabJenkins commented on issue #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks URL: https://github.com/apache/spark/pull/25631#issuecomment-526577253 Can one of the admins verify this patch? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks

AmplabJenkins removed a comment on issue #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks URL: https://github.com/apache/spark/pull/25631#issuecomment-526577073 Can one of the admins verify this patch? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] SparkQA commented on issue #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks

SparkQA commented on issue #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks URL: https://github.com/apache/spark/pull/25631#issuecomment-526578034 **[Test build #109946 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/109946/testReport)** for PR 25631 at commit [`a79d77f`](https://github.com/apache/spark/commit/a79d77fbf793a37752912a8d84d9caf5d906b187). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] SparkQA commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

SparkQA commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526578038 **[Test build #109947 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/109947/testReport)** for PR 25497 at commit [`1068514`](https://github.com/apache/spark/commit/1068514162cc9f27e57d0342d3a953967aaf76e2). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks

AmplabJenkins removed a comment on issue #25631: [SPARK-28928][SS] Take over Kafka delegation token protocol on sources/sinks URL: https://github.com/apache/spark/pull/25631#issuecomment-526577253 Can one of the admins verify this patch? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

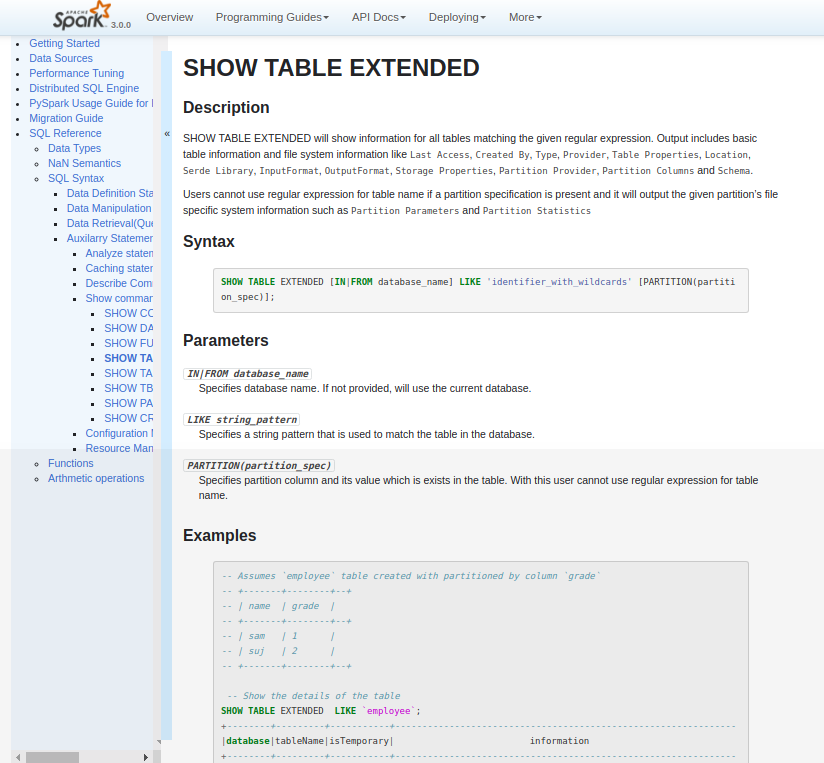

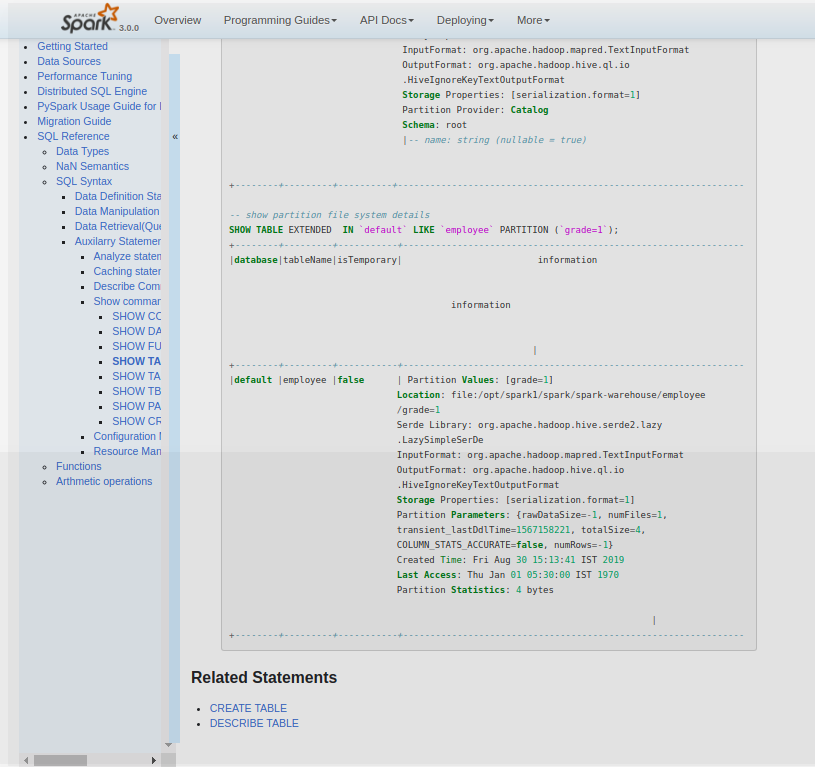

[GitHub] [spark] shivusondur opened a new pull request #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference

shivusondur opened a new pull request #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference URL: https://github.com/apache/spark/pull/25632 ### What changes were proposed in this pull request? Added the document reference for SHOW TABLE EXTENDED sql command ### Why are the changes needed? For User reference ### Does this PR introduce any user-facing change? yes, it provides document reference for SHOW TABLE EXTENDED sql command ### How was this patch tested? verified in snap Attached the Snap     This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] shivusondur commented on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference

shivusondur commented on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference URL: https://github.com/apache/spark/pull/25632#issuecomment-526580336 @dilipbiswal @gatorsmile plz review This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference

AmplabJenkins commented on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference URL: https://github.com/apache/spark/pull/25632#issuecomment-526580405 Can one of the admins verify this patch? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] SparkQA commented on issue #25104: [SPARK-28341][SQL] create a public API for V2SessionCatalog

SparkQA commented on issue #25104: [SPARK-28341][SQL] create a public API for V2SessionCatalog URL: https://github.com/apache/spark/pull/25104#issuecomment-526580515 **[Test build #109948 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/109948/testReport)** for PR 25104 at commit [`8cd5cde`](https://github.com/apache/spark/commit/8cd5cde53c12ba363e2ec556ce03ba4544d76cf2). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference

AmplabJenkins commented on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference URL: https://github.com/apache/spark/pull/25632#issuecomment-526582100 Can one of the admins verify this patch? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference

AmplabJenkins removed a comment on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference URL: https://github.com/apache/spark/pull/25632#issuecomment-526580405 Can one of the admins verify this patch? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference

AmplabJenkins commented on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference URL: https://github.com/apache/spark/pull/25632#issuecomment-526582292 Can one of the admins verify this patch? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #25104: [SPARK-28341][SQL] create a public API for V2SessionCatalog

AmplabJenkins commented on issue #25104: [SPARK-28341][SQL] create a public API for V2SessionCatalog URL: https://github.com/apache/spark/pull/25104#issuecomment-526582495 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/14972/ Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

AmplabJenkins removed a comment on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526582463 Merged build finished. Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

AmplabJenkins commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526582463 Merged build finished. Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

AmplabJenkins commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526582474 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/14971/ Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

AmplabJenkins removed a comment on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526582474 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/14971/ Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #25104: [SPARK-28341][SQL] create a public API for V2SessionCatalog

AmplabJenkins removed a comment on issue #25104: [SPARK-28341][SQL] create a public API for V2SessionCatalog URL: https://github.com/apache/spark/pull/25104#issuecomment-526582495 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/14972/ Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #25104: [SPARK-28341][SQL] create a public API for V2SessionCatalog

AmplabJenkins removed a comment on issue #25104: [SPARK-28341][SQL] create a public API for V2SessionCatalog URL: https://github.com/apache/spark/pull/25104#issuecomment-526582490 Merged build finished. Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference

AmplabJenkins removed a comment on issue #25632: [SPARK-28809][DOC][SQL]Document SHOW TABLE in SQL Reference URL: https://github.com/apache/spark/pull/25632#issuecomment-526582100 Can one of the admins verify this patch? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #25104: [SPARK-28341][SQL] create a public API for V2SessionCatalog

AmplabJenkins commented on issue #25104: [SPARK-28341][SQL] create a public API for V2SessionCatalog URL: https://github.com/apache/spark/pull/25104#issuecomment-526582490 Merged build finished. Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] SparkQA commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

SparkQA commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526583180 **[Test build #109949 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/109949/testReport)** for PR 25497 at commit [`806d443`](https://github.com/apache/spark/commit/806d443ab71dc34ed555b9d3fa7f894fe660eacc). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] gaborgsomogyi commented on a change in pull request #25477: [SPARK-28760][SS][TESTS] Add Kafka delegation token end-to-end test with mini KDC

gaborgsomogyi commented on a change in pull request #25477:

[SPARK-28760][SS][TESTS] Add Kafka delegation token end-to-end test with mini

KDC

URL: https://github.com/apache/spark/pull/25477#discussion_r319491077

##

File path:

external/kafka-0-10-sql/src/test/scala/org/apache/spark/sql/kafka010/KafkaDelegationTokenSuite.scala

##

@@ -0,0 +1,120 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.kafka010

+

+import java.util.UUID

+

+import org.apache.hadoop.conf.Configuration

+import org.apache.hadoop.security.{Credentials, UserGroupInformation}

+import org.apache.kafka.common.security.auth.SecurityProtocol.SASL_PLAINTEXT

+

+import org.apache.spark.deploy.SparkHadoopUtil

+import org.apache.spark.deploy.security.HadoopDelegationTokenManager

+import org.apache.spark.internal.config.{KEYTAB, PRINCIPAL}

+import org.apache.spark.sql.execution.streaming.MemoryStream

+import org.apache.spark.sql.streaming.{OutputMode, StreamTest}

+import org.apache.spark.sql.test.SharedSQLContext

+

+class KafkaDelegationTokenSuite extends StreamTest with SharedSQLContext with

KafkaTest {

+

+ import testImplicits._

+

+ protected var testUtils: KafkaTestUtils = _

+

+ protected override def sparkConf = super.sparkConf

+.set("spark.security.credentials.hadoopfs.enabled", "false")

+.set("spark.security.credentials.hbase.enabled", "false")

+.set(KEYTAB, testUtils.clientKeytab)

+.set(PRINCIPAL, testUtils.clientPrincipal)

+.set("spark.kafka.clusters.cluster1.auth.bootstrap.servers",

testUtils.brokerAddress)

+.set("spark.kafka.clusters.cluster1.security.protocol",

SASL_PLAINTEXT.name)

+

+ override def beforeAll(): Unit = {

+testUtils = new KafkaTestUtils(Map.empty, true)

+testUtils.setup()

+super.beforeAll()

+ }

+

+ override def afterAll(): Unit = {

+try {

+ if (testUtils != null) {

+testUtils.teardown()

+testUtils = null

+ }

+ UserGroupInformation.reset()

+} finally {

+ super.afterAll()

+}

+ }

+

+ test("Roundtrip") {

+val hadoopConf = new Configuration()

+val manager = new HadoopDelegationTokenManager(spark.sparkContext.conf,

hadoopConf, null)

+val credentials = new Credentials()

+manager.obtainDelegationTokens(credentials)

+val serializedCredentials = SparkHadoopUtil.get.serialize(credentials)

+SparkHadoopUtil.get.addDelegationTokens(serializedCredentials,

spark.sparkContext.conf)

+

+val topic = "topic-" + UUID.randomUUID().toString

+testUtils.createTopic(topic, partitions = 5)

+

+withTempDir { checkpointDir =>

+ val input = MemoryStream[String]

+

+ val df = input.toDF()

+ val writer = df.writeStream

+.outputMode(OutputMode.Append)

+.format("kafka")

+.option("checkpointLocation", checkpointDir.getCanonicalPath)

+.option("kafka.bootstrap.servers", testUtils.brokerAddress)

+.option("kafka.security.protocol", SASL_PLAINTEXT.name)

Review comment:

For tracking purposes I've filed SPARK-28928.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] gaborgsomogyi commented on a change in pull request #25477: [SPARK-28760][SS][TESTS] Add Kafka delegation token end-to-end test with mini KDC

gaborgsomogyi commented on a change in pull request #25477:

[SPARK-28760][SS][TESTS] Add Kafka delegation token end-to-end test with mini

KDC

URL: https://github.com/apache/spark/pull/25477#discussion_r319491077

##

File path:

external/kafka-0-10-sql/src/test/scala/org/apache/spark/sql/kafka010/KafkaDelegationTokenSuite.scala

##

@@ -0,0 +1,120 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.kafka010

+

+import java.util.UUID

+

+import org.apache.hadoop.conf.Configuration

+import org.apache.hadoop.security.{Credentials, UserGroupInformation}

+import org.apache.kafka.common.security.auth.SecurityProtocol.SASL_PLAINTEXT

+

+import org.apache.spark.deploy.SparkHadoopUtil

+import org.apache.spark.deploy.security.HadoopDelegationTokenManager

+import org.apache.spark.internal.config.{KEYTAB, PRINCIPAL}

+import org.apache.spark.sql.execution.streaming.MemoryStream

+import org.apache.spark.sql.streaming.{OutputMode, StreamTest}

+import org.apache.spark.sql.test.SharedSQLContext

+

+class KafkaDelegationTokenSuite extends StreamTest with SharedSQLContext with

KafkaTest {

+

+ import testImplicits._

+

+ protected var testUtils: KafkaTestUtils = _

+

+ protected override def sparkConf = super.sparkConf

+.set("spark.security.credentials.hadoopfs.enabled", "false")

+.set("spark.security.credentials.hbase.enabled", "false")

+.set(KEYTAB, testUtils.clientKeytab)

+.set(PRINCIPAL, testUtils.clientPrincipal)

+.set("spark.kafka.clusters.cluster1.auth.bootstrap.servers",

testUtils.brokerAddress)

+.set("spark.kafka.clusters.cluster1.security.protocol",

SASL_PLAINTEXT.name)

+

+ override def beforeAll(): Unit = {

+testUtils = new KafkaTestUtils(Map.empty, true)

+testUtils.setup()

+super.beforeAll()

+ }

+

+ override def afterAll(): Unit = {

+try {

+ if (testUtils != null) {

+testUtils.teardown()

+testUtils = null

+ }

+ UserGroupInformation.reset()

+} finally {

+ super.afterAll()

+}

+ }

+

+ test("Roundtrip") {

+val hadoopConf = new Configuration()

+val manager = new HadoopDelegationTokenManager(spark.sparkContext.conf,

hadoopConf, null)

+val credentials = new Credentials()

+manager.obtainDelegationTokens(credentials)

+val serializedCredentials = SparkHadoopUtil.get.serialize(credentials)

+SparkHadoopUtil.get.addDelegationTokens(serializedCredentials,

spark.sparkContext.conf)

+

+val topic = "topic-" + UUID.randomUUID().toString

+testUtils.createTopic(topic, partitions = 5)

+

+withTempDir { checkpointDir =>

+ val input = MemoryStream[String]

+

+ val df = input.toDF()

+ val writer = df.writeStream

+.outputMode(OutputMode.Append)

+.format("kafka")

+.option("checkpointLocation", checkpointDir.getCanonicalPath)

+.option("kafka.bootstrap.servers", testUtils.brokerAddress)

+.option("kafka.security.protocol", SASL_PLAINTEXT.name)

Review comment:

For tracking purposes I've filed

[SPARK-28928](https://issues.apache.org/jira/browse/SPARK-28928).

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cxzl25 commented on a change in pull request #23516: [SPARK-26598] Fix HiveThriftServer2 set hiveconf and hivevar in every sql

cxzl25 commented on a change in pull request #23516: [SPARK-26598] Fix

HiveThriftServer2 set hiveconf and hivevar in every sql

URL: https://github.com/apache/spark/pull/23516#discussion_r319491134

##

File path:

sql/hive-thriftserver/src/main/scala/org/apache/spark/sql/hive/thriftserver/server/SparkSQLOperationManager.scala

##

@@ -51,9 +50,6 @@ private[thriftserver] class SparkSQLOperationManager()

require(sqlContext != null, s"Session handle:

${parentSession.getSessionHandle} has not been" +

s" initialized or had already closed.")

val conf = sqlContext.sessionState.conf

-val hiveSessionState = parentSession.getSessionState

-setConfMap(conf, hiveSessionState.getOverriddenConfigurations)

-setConfMap(conf, hiveSessionState.getHiveVariables)

Review comment:

```

cat < test.sql

select '\${a}', '\${b}';

set b=MOD_VALUE;

set b;

EOF

beeline -u jdbc:hive2://localhost:1 --hiveconf a=avalue --hivevar

b=bvalue -f test.sql

```

Result:

```

+-+-+--+

| key | value |

+-+-+--+

| b | bvalue |

+-+-+--+

1 row selected (0.022 seconds)

```

It is wrong to set the hivevar/hiveconf variable in every operation, which

prevents variable updates.

The intention is just an initialized value, so setting it once in

SparkSQLSessionManager#openSession is enough.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

AmplabJenkins commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526584963 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/14973/ Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

AmplabJenkins commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526584956 Merged build finished. Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

AmplabJenkins removed a comment on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526584963 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/14973/ Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

AmplabJenkins removed a comment on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526584956 Merged build finished. Test PASSed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #25620: [SPARK-25341][Core] Support rolling back a shuffle map stage and re-generate the shuffle files

cloud-fan commented on a change in pull request #25620: [SPARK-25341][Core]

Support rolling back a shuffle map stage and re-generate the shuffle files

URL: https://github.com/apache/spark/pull/25620#discussion_r319495883

##

File path:

common/network-shuffle/src/main/java/org/apache/spark/network/shuffle/ExternalBlockHandler.java

##

@@ -106,7 +106,7 @@ protected void handleMessage(

numBlockIds += ids.length;

}

streamId = streamManager.registerStream(client.getClientId(),

-new ManagedBufferIterator(msg, numBlockIds), client.getChannel());

+new ShuffleManagedBufferIterator(msg), client.getChannel());

Review comment:

we can also remove

```

numBlockIds = 0;

for (int[] ids: msg.reduceIds) {

numBlockIds += ids.length;

}

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] gaborgsomogyi commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer

gaborgsomogyi commented on a change in pull request #22138: [SPARK-25151][SS]

Apply Apache Commons Pool to KafkaDataConsumer

URL: https://github.com/apache/spark/pull/22138#discussion_r319496507

##

File path:

external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/InternalKafkaConsumerPool.scala

##

@@ -0,0 +1,221 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.kafka010

+

+import java.{util => ju}

+import java.util.concurrent.ConcurrentHashMap

+

+import org.apache.commons.pool2.{BaseKeyedPooledObjectFactory, PooledObject,

SwallowedExceptionListener}

+import org.apache.commons.pool2.impl.{DefaultEvictionPolicy,

DefaultPooledObject, GenericKeyedObjectPool, GenericKeyedObjectPoolConfig}

+

+import org.apache.spark.SparkConf

+import org.apache.spark.internal.Logging

+import org.apache.spark.sql.kafka010.InternalKafkaConsumerPool._

+import org.apache.spark.sql.kafka010.KafkaDataConsumer.CacheKey

+

+/**

+ * Provides object pool for [[InternalKafkaConsumer]] which is grouped by

[[CacheKey]].

+ *

+ * This class leverages [[GenericKeyedObjectPool]] internally, hence providing

methods based on

+ * the class, and same contract applies: after using the borrowed object, you

must either call

+ * returnObject() if the object is healthy to return to pool, or

invalidateObject() if the object

+ * should be destroyed.

+ *

+ * The soft capacity of pool is determined by

"spark.kafka.consumer.cache.capacity" config value,

+ * and the pool will have reasonable default value if the value is not

provided.

+ * (The instance will do its best effort to respect soft capacity but it can

exceed when there's

+ * a borrowing request and there's neither free space nor idle object to

clear.)

+ *

+ * This class guarantees that no caller will get pooled object once the object

is borrowed and

+ * not yet returned, hence provide thread-safety usage of non-thread-safe

[[InternalKafkaConsumer]]

+ * unless caller shares the object to multiple threads.

+ */

+private[kafka010] class InternalKafkaConsumerPool(

+objectFactory: ObjectFactory,

+poolConfig: PoolConfig) extends Logging {

+

+ def this(conf: SparkConf) = {

+this(new ObjectFactory, new PoolConfig(conf))

+ }

+

+ // the class is intended to have only soft capacity

+ assert(poolConfig.getMaxTotal < 0)

+

+ private val pool = {

+val internalPool = new GenericKeyedObjectPool[CacheKey,

InternalKafkaConsumer](

+ objectFactory, poolConfig)

+

internalPool.setSwallowedExceptionListener(CustomSwallowedExceptionListener)

+internalPool

+ }

+

+ /**

+ * Borrows [[InternalKafkaConsumer]] object from the pool. If there's no

idle object for the key,

+ * the pool will create the [[InternalKafkaConsumer]] object.

+ *

+ * If the pool doesn't have idle object for the key and also exceeds the

soft capacity,

+ * pool will try to clear some of idle objects.

+ *

+ * Borrowed object must be returned by either calling returnObject or

invalidateObject, otherwise

+ * the object will be kept in pool as active object.

+ */

+ def borrowObject(key: CacheKey, kafkaParams: ju.Map[String, Object]):

InternalKafkaConsumer = {

+updateKafkaParamForKey(key, kafkaParams)

+

+if (size >= poolConfig.softMaxSize) {

+ logWarning("Pool exceeds its soft max size, cleaning up idle objects...")

+ pool.clearOldest()

+}

+

+pool.borrowObject(key)

+ }

+

+ /** Returns borrowed object to the pool. */

+ def returnObject(consumer: InternalKafkaConsumer): Unit = {

+pool.returnObject(extractCacheKey(consumer), consumer)

+ }

+

+ /** Invalidates (destroy) borrowed object to the pool. */

+ def invalidateObject(consumer: InternalKafkaConsumer): Unit = {

+pool.invalidateObject(extractCacheKey(consumer), consumer)

+ }

+

+ /** Invalidates all idle consumers for the key */

+ def invalidateKey(key: CacheKey): Unit = {

+pool.clear(key)

+ }

+

+ /**

+ * Closes the keyed object pool. Once the pool is closed,

+ * borrowObject will fail with [[IllegalStateException]], but returnObject

and invalidateObject

+ * will continue to work, with returned objects destroyed on return.

+ *

+ * Also destroys idle instances in

[GitHub] [spark] cloud-fan commented on a change in pull request #25620: [SPARK-25341][Core] Support rolling back a shuffle map stage and re-generate the shuffle files

cloud-fan commented on a change in pull request #25620: [SPARK-25341][Core] Support rolling back a shuffle map stage and re-generate the shuffle files URL: https://github.com/apache/spark/pull/25620#discussion_r319498094 ## File path: core/src/main/java/org/apache/spark/shuffle/api/ShuffleExecutorComponents.java ## @@ -39,17 +39,15 @@ /** * Called once per map task to create a writer that will be responsible for persisting all the * partitioned bytes written by that map task. - * @param shuffleId Unique identifier for the shuffle the map task is a part of - * @param mapId Within the shuffle, the identifier of the map task - * @param mapTaskAttemptId Identifier of the task attempt. Multiple attempts of the same map task - * with the same (shuffleId, mapId) pair can be distinguished by the - * different values of mapTaskAttemptId. + * @param shuffleId Unique identifier for the shuffle the map task is a part of + * @param mapId Identifier of the task attempt. Multiple attempts of the same map task with the Review comment: let's rephrase it. How about `An id of the map task which is unique within this Spark application.` This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #25620: [SPARK-25341][Core] Support rolling back a shuffle map stage and re-generate the shuffle files

cloud-fan commented on a change in pull request #25620: [SPARK-25341][Core] Support rolling back a shuffle map stage and re-generate the shuffle files URL: https://github.com/apache/spark/pull/25620#discussion_r319498094 ## File path: core/src/main/java/org/apache/spark/shuffle/api/ShuffleExecutorComponents.java ## @@ -39,17 +39,15 @@ /** * Called once per map task to create a writer that will be responsible for persisting all the * partitioned bytes written by that map task. - * @param shuffleId Unique identifier for the shuffle the map task is a part of - * @param mapId Within the shuffle, the identifier of the map task - * @param mapTaskAttemptId Identifier of the task attempt. Multiple attempts of the same map task - * with the same (shuffleId, mapId) pair can be distinguished by the - * different values of mapTaskAttemptId. + * @param shuffleId Unique identifier for the shuffle the map task is a part of + * @param mapId Identifier of the task attempt. Multiple attempts of the same map task with the Review comment: let's rephrase it. How about `An ID of the map task. The ID is unique within this Spark application.` This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] gaborgsomogyi commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer

gaborgsomogyi commented on a change in pull request #22138: [SPARK-25151][SS]

Apply Apache Commons Pool to KafkaDataConsumer

URL: https://github.com/apache/spark/pull/22138#discussion_r319498159

##

File path:

external/kafka-0-10-sql/src/test/scala/org/apache/spark/sql/kafka010/KafkaSparkConfSuite.scala

##

@@ -1,30 +0,0 @@

-/*

- * Licensed to the Apache Software Foundation (ASF) under one or more

- * contributor license agreements. See the NOTICE file distributed with

- * this work for additional information regarding copyright ownership.

- * The ASF licenses this file to You under the Apache License, Version 2.0

- * (the "License"); you may not use this file except in compliance with

- * the License. You may obtain a copy of the License at

- *

- *http://www.apache.org/licenses/LICENSE-2.0

- *

- * Unless required by applicable law or agreed to in writing, software

- * distributed under the License is distributed on an "AS IS" BASIS,

- * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

- * See the License for the specific language governing permissions and

- * limitations under the License.

- */

-

-package org.apache.spark.sql.kafka010

-

-import org.apache.spark.{LocalSparkContext, SparkConf, SparkFunSuite}

-import org.apache.spark.util.ResetSystemProperties

-

-class KafkaSparkConfSuite extends SparkFunSuite with LocalSparkContext with

ResetSystemProperties {

Review comment:

Hmm, what has happened with this test?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] gaborgsomogyi commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer

gaborgsomogyi commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer URL: https://github.com/apache/spark/pull/22138#discussion_r319500037 ## File path: external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaDataConsumer.scala ## @@ -269,9 +300,12 @@ private[kafka010] case class InternalKafkaConsumer( // When there is some error thrown, it's better to use a new consumer to drop all cached // states in the old consumer. We don't need to worry about the performance because this // is not a common path. - resetConsumer() - reportDataLoss(failOnDataLoss, s"Cannot fetch offset $toFetchOffset", e) - toFetchOffset = getEarliestAvailableOffsetBetween(toFetchOffset, untilOffset) + releaseConsumer() + fetchedData.reset() Review comment: Don't we need `releaseFetchedData` here? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer

HeartSaVioR commented on a change in pull request #22138: [SPARK-25151][SS]

Apply Apache Commons Pool to KafkaDataConsumer

URL: https://github.com/apache/spark/pull/22138#discussion_r319500084

##

File path:

external/kafka-0-10-sql/src/test/scala/org/apache/spark/sql/kafka010/KafkaSparkConfSuite.scala

##

@@ -1,30 +0,0 @@

-/*

- * Licensed to the Apache Software Foundation (ASF) under one or more

- * contributor license agreements. See the NOTICE file distributed with

- * this work for additional information regarding copyright ownership.

- * The ASF licenses this file to You under the Apache License, Version 2.0

- * (the "License"); you may not use this file except in compliance with

- * the License. You may obtain a copy of the License at

- *

- *http://www.apache.org/licenses/LICENSE-2.0

- *

- * Unless required by applicable law or agreed to in writing, software

- * distributed under the License is distributed on an "AS IS" BASIS,

- * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

- * See the License for the specific language governing permissions and

- * limitations under the License.

- */

-

-package org.apache.spark.sql.kafka010

-

-import org.apache.spark.{LocalSparkContext, SparkConf, SparkFunSuite}

-import org.apache.spark.util.ResetSystemProperties

-

-class KafkaSparkConfSuite extends SparkFunSuite with LocalSparkContext with

ResetSystemProperties {

Review comment:

I have renamed the config to old one per feedback, so no longer need this

test.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer

HeartSaVioR commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer URL: https://github.com/apache/spark/pull/22138#discussion_r319500695 ## File path: external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaDataConsumer.scala ## @@ -269,9 +300,12 @@ private[kafka010] case class InternalKafkaConsumer( // When there is some error thrown, it's better to use a new consumer to drop all cached // states in the old consumer. We don't need to worry about the performance because this // is not a common path. - resetConsumer() - reportDataLoss(failOnDataLoss, s"Cannot fetch offset $toFetchOffset", e) - toFetchOffset = getEarliestAvailableOffsetBetween(toFetchOffset, untilOffset) + releaseConsumer() + fetchedData.reset() Review comment: Yes, as FetchedData is designed to be modified per task. Once you get the one for that task, you can just modify it, and also reset if necessary. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer

HeartSaVioR commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer URL: https://github.com/apache/spark/pull/22138#discussion_r319500695 ## File path: external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaDataConsumer.scala ## @@ -269,9 +300,12 @@ private[kafka010] case class InternalKafkaConsumer( // When there is some error thrown, it's better to use a new consumer to drop all cached // states in the old consumer. We don't need to worry about the performance because this // is not a common path. - resetConsumer() - reportDataLoss(failOnDataLoss, s"Cannot fetch offset $toFetchOffset", e) - toFetchOffset = getEarliestAvailableOffsetBetween(toFetchOffset, untilOffset) + releaseConsumer() + fetchedData.reset() Review comment: Yes, as FetchedData is designed to be modified per task. (So based on the desired offset, in most cases pool will provide same FetchedData. Once you get the one for that task, you can just modify it, and also reset if necessary. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer

HeartSaVioR commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer URL: https://github.com/apache/spark/pull/22138#discussion_r319500695 ## File path: external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaDataConsumer.scala ## @@ -269,9 +300,12 @@ private[kafka010] case class InternalKafkaConsumer( // When there is some error thrown, it's better to use a new consumer to drop all cached // states in the old consumer. We don't need to worry about the performance because this // is not a common path. - resetConsumer() - reportDataLoss(failOnDataLoss, s"Cannot fetch offset $toFetchOffset", e) - toFetchOffset = getEarliestAvailableOffsetBetween(toFetchOffset, untilOffset) + releaseConsumer() + fetchedData.reset() Review comment: Yes, as FetchedData is designed to be modified per task. So based on the desired offset, in most cases pool will provide same FetchedData. Once you get the one for that task, you can just modify it, and also reset if necessary. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer

HeartSaVioR commented on a change in pull request #22138: [SPARK-25151][SS] Apply Apache Commons Pool to KafkaDataConsumer URL: https://github.com/apache/spark/pull/22138#discussion_r319500695 ## File path: external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaDataConsumer.scala ## @@ -269,9 +300,12 @@ private[kafka010] case class InternalKafkaConsumer( // When there is some error thrown, it's better to use a new consumer to drop all cached // states in the old consumer. We don't need to worry about the performance because this // is not a common path. - resetConsumer() - reportDataLoss(failOnDataLoss, s"Cannot fetch offset $toFetchOffset", e) - toFetchOffset = getEarliestAvailableOffsetBetween(toFetchOffset, untilOffset) + releaseConsumer() + fetchedData.reset() Review comment: No, as FetchedData is designed to be modified per task. So based on the desired offset, in most cases pool will provide same FetchedData. Once you get the one for that task, you can just modify it, and also reset if necessary. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on issue #25618: [SPARK-28908][SS]Implement Kafka EOS sink for Structured Streaming

HeartSaVioR commented on issue #25618: [SPARK-28908][SS]Implement Kafka EOS sink for Structured Streaming URL: https://github.com/apache/spark/pull/25618#issuecomment-526593592 Well, someone could say it as 2PC since the behavior is similar, but generally 2PC assumes coordinator and participants. In second phase, coordinator "ask" for commit/abort to participants, not committing/aborting things participants just did in first phase by itself. Based on that, driver should request tasks to commit their outputs, but Spark doesn't provide such flow. So that's pretty simplified version of 2PC and also pretty limited. I think the point is whether we are feeling OK to have exactly-once with some restrictions end users need to be aware of. Could you please initiate discussion on this in Spark dev mailing list? That would be good to hear others' voices. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #25620: [SPARK-25341][Core] Support rolling back a shuffle map stage and re-generate the shuffle files

cloud-fan commented on a change in pull request #25620: [SPARK-25341][Core]

Support rolling back a shuffle map stage and re-generate the shuffle files

URL: https://github.com/apache/spark/pull/25620#discussion_r319502863

##

File path: core/src/main/scala/org/apache/spark/scheduler/MapStatus.scala

##

@@ -100,16 +108,19 @@ private[spark] object MapStatus {

*

* @param loc location where the task is being executed.

* @param compressedSizes size of the blocks, indexed by reduce partition id.

+ * @param mapTaskId unique task id for the task

Review comment:

let's call it `mapId`

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #25620: [SPARK-25341][Core] Support rolling back a shuffle map stage and re-generate the shuffle files

cloud-fan commented on a change in pull request #25620: [SPARK-25341][Core]

Support rolling back a shuffle map stage and re-generate the shuffle files

URL: https://github.com/apache/spark/pull/25620#discussion_r319502863

##

File path: core/src/main/scala/org/apache/spark/scheduler/MapStatus.scala

##

@@ -100,16 +108,19 @@ private[spark] object MapStatus {

*

* @param loc location where the task is being executed.

* @param compressedSizes size of the blocks, indexed by reduce partition id.

+ * @param mapTaskId unique task id for the task

Review comment:

let's call it `mapId`

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] SparkQA commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

SparkQA commented on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526594832 **[Test build #109943 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/109943/testReport)** for PR 25497 at commit [`99a2182`](https://github.com/apache/spark/commit/99a21824580349e9f0524573e1c7ce5a739360e9). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] SparkQA removed a comment on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0

SparkQA removed a comment on issue #25497: [BUILD][DO-NOT-MERGE] Investigate the detla in scala-maven-plugin 3.4.6 <> 4.0.0 URL: https://github.com/apache/spark/pull/25497#issuecomment-526556962 **[Test build #109943 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/109943/testReport)** for PR 25497 at commit [`99a2182`](https://github.com/apache/spark/commit/99a21824580349e9f0524573e1c7ce5a739360e9). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon opened a new pull request #25633: [SPARK-28759][BUILD] Upgrade scala-maven-plugin to 4.2.0 and fix build profile on AppVeyor