[GitHub] [spark] SparkQA commented on pull request #34821: [SPARK-37558][DOC] Improve spark sql cli document

SparkQA commented on pull request #34821: URL: https://github.com/apache/spark/pull/34821#issuecomment-992199941 Kubernetes integration test status failure URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/50596/ -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #34821: [SPARK-37558][DOC] Improve spark sql cli document

SparkQA commented on pull request #34821: URL: https://github.com/apache/spark/pull/34821#issuecomment-992199502 Kubernetes integration test starting URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/50598/

[GitHub] [spark] SparkQA commented on pull request #34825: [SPARK-37563][PYTHON] Implement days, seconds, microseconds properties of TimedeltaIndex

SparkQA commented on pull request #34825: URL: https://github.com/apache/spark/pull/34825#issuecomment-992199116 Kubernetes integration test starting URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/50597/

[GitHub] [spark] LuciferYang removed a comment on pull request #34870: [SPARK-37615][BUILD][FOLLOWUP] Upgrade SBT to 1.5.6 in AppVeyor

LuciferYang removed a comment on pull request #34870: URL: https://github.com/apache/spark/pull/34870#issuecomment-992177373

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #34821: [SPARK-37558][DOC] Improve spark sql cli document

AngersZh commented on a change in pull request #34821:

URL: https://github.com/apache/spark/pull/34821#discussion_r767479297

##

File path: docs/sql-distributed-sql-engine-spark-sql-cli.md

##

@@ -0,0 +1,193 @@

+---

+layout: global

+title: Spark SQL CLI

+displayTitle: Spark SQL CLI

+license: |

+ Licensed to the Apache Software Foundation (ASF) under one or more

+ contributor license agreements. See the NOTICE file distributed with

+ this work for additional information regarding copyright ownership.

+ The ASF licenses this file to You under the Apache License, Version 2.0

+ (the "License"); you may not use this file except in compliance with

+ the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

+---

+

+* Table of contents

+{:toc}

+

+

+The Spark SQL CLI is a convenient tool to run the Hive metastore service in local mode and execute SQL

+queries input from the command line. Note that the Spark SQL CLI cannot talk to the Thrift JDBC server.

+

+To start the Spark SQL CLI, run the following in the Spark directory:

+

+./bin/spark-sql

+

+Configuration of Hive is done by placing your `hive-site.xml`, `core-site.xml` and `hdfs-site.xml` files in `conf/`.

+

+## Spark SQL Command Line Options

+

+You may run `./bin/spark-sql --help` for a complete list of all available options.

+

+CLI options:

+ -d,--define Variable substitution to apply to Hive

+ commands. e.g. -d A=B or --define A=B

+--database Specify the database to use

+ -e SQL from command line

+ -f SQL from files

+ -H,--help Print help information

+--hiveconf Use value for given property

+--hivevar Variable substitution to apply to Hive

+ commands. e.g. --hivevar A=B

+ -i Initialization SQL file

+ -S,--silent Silent mode in interactive shell

+ -v,--verbose Verbose mode (echo executed SQL to the

+ console)

+

+## The hiverc File

+

+When invoked without the `-i`, the Spark SQL CLI will attempt to load `$HIVE_HOME/bin/.hiverc` and `$HOME/.hiverc` as initialization files.

+

+## Supported comment types

+

+

+CommentExample

+

+ simple comment

+

+

+ -- This is a simple comment.

+

+ SELECT 1;

+

+

+

+

+ bracketed comment

+

+

+/* This is a bracketed comment. */

+

+SELECT 1;

+

+

+

+

+ nested bracketed comment

+

+

+/* This is a /* nested bracketed comment*/ .*/

+

+SELECT 1;

+

+

+

+

+

+## Spark SQL CLI Interactive Shell Commands

+

+When `./bin/spark-sql` is run without either the `-e` or `-f` option, it enters interactive shell mode.

+Use `;` (semicolon) to terminate commands. Notice:

+1. The CLI use `;` to terminate commands only when it's at the end of line, and it's not escaped by `\\;`.

+2. `;` is the only way to terminate commands. If the user types `SELECT 1` and presses enter, the console will just wait for input.

+3. If the user types multiple commands in one line like `SELECT 1; SELECT 2;`, the commands `SELECT 1` and `SELECT 2` will be executed separatly.

+4. If `;` appears within a SQL statement (not the end of the line), then it has no special meanings:

+ ```sql

+ -- This is a ; comment

+ SELECT ';' as a;

+ ```

+ This is just a comment line followed by a SQL query which returns a string literal.

+ ```sql

+ /* This is a comment contains ;

+ */ SELECT 1;

+ ```

+ However, if ';' is the end of the line, it terminates the SQL statement. The example above will be terminated into `/* This is a comment contains ` and `*/ SELECT 1`, Spark will submit these two command and throw parser error (unclosed bracketed comment).

+

+

+

+CommandDescription

+

+ quit or exit

+ Exits the interactive shell.

+

+

+ !

+ Executes a shell command from the Spark SQL CLI shell.

+

+

+ dfs

+ Executes a HDFS <a href="https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-hdfs/HDFSCommands.html#dfs">dfs</a> command from the Spark SQL CLI shell.

+

+

+

+ Executes a Spark SQL query and prints results to standard output.

+

+

+ source

+ Executes a script file inside the CLI.

+

+

+

+## Examples

+

+Example of running a query from the command line:

+

+./bin/spark-sql -e 'SELECT COL FROM TBL'

+

+Example of setting Hive configuration variables:

+

+./bin/spark-sql -e 'SELECT COL FROM TBL' --hiveconf
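The statement-termination rules in the quoted document (a `;` ends a command only at the end of a line and only when it is not escaped; one submitted buffer may hold several statements; a `;` inside a quote or comment has no special meaning) can be sketched as below. This is a minimal illustration, not `SparkSQLCLIDriver`'s code: `CliStatementSplitter` and its methods are hypothetical, the quoted `\\;` is assumed to mean a backslash followed by `;`, and single/double quotes, `--` line comments and nestable `/* */` comments are assumed to be the only contexts that shield a `;`.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical sketch of the CLI's statement handling; not SparkSQLCLIDriver code.
object CliStatementSplitter {

  // A line completes the buffered command when, once trimmed, it ends with ';'
  // that is not escaped as "\;".
  def endsCommand(line: String): Boolean = {
    val t = line.trim
    t.endsWith(";") && !t.endsWith("\\;")
  }

  // Splits a submitted buffer on ';' found outside quotes and comments.
  def splitStatements(buffer: String): List[String] = {
    val out = ListBuffer.empty[String]
    val cur = new StringBuilder
    var inSingle = false
    var inDouble = false
    var inLineComment = false
    var blockDepth = 0 // bracketed comments may nest
    var i = 0
    while (i < buffer.length) {
      val c = buffer.charAt(i)
      val next = if (i + 1 < buffer.length) buffer.charAt(i + 1) else ' '
      if (inLineComment) {
        if (c == '\n') inLineComment = false
        cur.append(c)
      } else if (blockDepth > 0) {
        if (c == '/' && next == '*') { blockDepth += 1; cur.append("/*"); i += 1 }
        else if (c == '*' && next == '/') { blockDepth -= 1; cur.append("*/"); i += 1 }
        else cur.append(c)
      } else if (inSingle) {
        if (c == '\'') inSingle = false
        cur.append(c)
      } else if (inDouble) {
        if (c == '"') inDouble = false
        cur.append(c)
      } else if (c == '\'') { inSingle = true; cur.append(c) }
      else if (c == '"') { inDouble = true; cur.append(c) }
      else if (c == '-' && next == '-') { inLineComment = true; cur.append("--"); i += 1 }
      else if (c == '/' && next == '*') { blockDepth = 1; cur.append("/*"); i += 1 }
      else if (c == ';') {
        val stmt = cur.toString.trim
        if (stmt.nonEmpty) out += stmt
        cur.clear()
      } else cur.append(c)
      i += 1
    }
    val rest = cur.toString.trim
    if (rest.nonEmpty) out += rest
    out.toList
  }

  // Feeds lines one at a time, as the interactive shell would. Lines are
  // buffered until one ends the command; an unterminated buffer keeps waiting.
  def commands(lines: Seq[String]): List[String] = {
    val out = ListBuffer.empty[String]
    val buf = new StringBuilder
    for (line <- lines) {
      if (buf.length > 0) buf.append('\n')
      buf.append(line)
      if (endsCommand(line)) {
        out ++= splitStatements(buf.toString)
        buf.clear()
      }
    }
    out.toList
  }
}
```

Because the end-of-line check runs before any comment tracking, a line such as `/* This is a comment contains ;` is still submitted on its own, which is the premature split behind the unclosed-comment parser error described above.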

[GitHub] [spark] cloud-fan commented on a change in pull request #34821: [SPARK-37558][DOC] Improve spark sql cli document

cloud-fan commented on a change in pull request #34821:

URL: https://github.com/apache/spark/pull/34821#discussion_r767478374

##

File path: docs/sql-distributed-sql-engine-spark-sql-cli.md

##

@@ -0,0 +1,193 @@

+---

+layout: global

+title: Spark SQL CLI

+displayTitle: Spark SQL CLI

+license: |

+ Licensed to the Apache Software Foundation (ASF) under one or more

+ contributor license agreements. See the NOTICE file distributed with

+ this work for additional information regarding copyright ownership.

+ The ASF licenses this file to You under the Apache License, Version 2.0

+ (the "License"); you may not use this file except in compliance with

+ the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

+---

+

+* Table of contents

+{:toc}

+

+

+The Spark SQL CLI is a convenient tool to run the Hive metastore service in local mode and execute SQL

+queries input from the command line. Note that the Spark SQL CLI cannot talk to the Thrift JDBC server.

+

+To start the Spark SQL CLI, run the following in the Spark directory:

+

+./bin/spark-sql

+

+Configuration of Hive is done by placing your `hive-site.xml`, `core-site.xml` and `hdfs-site.xml` files in `conf/`.

+

+## Spark SQL Command Line Options

+

+You may run `./bin/spark-sql --help` for a complete list of all available options.

+

+CLI options:

+ -d,--define Variable substitution to apply to Hive

+ commands. e.g. -d A=B or --define A=B

+--database Specify the database to use

+ -e SQL from command line

+ -f SQL from files

+ -H,--help Print help information

+--hiveconf Use value for given property

+--hivevar Variable substitution to apply to Hive

+ commands. e.g. --hivevar A=B

+ -i Initialization SQL file

+ -S,--silent Silent mode in interactive shell

+ -v,--verbose Verbose mode (echo executed SQL to the

+ console)

+

+## The hiverc File

+

+When invoked without the `-i`, the Spark SQL CLI will attempt to load `$HIVE_HOME/bin/.hiverc` and `$HOME/.hiverc` as initialization files.

+

+## Supported comment types

+

+

+CommentExample

+

+ simple comment

+

+

+ -- This is a simple comment.

+

+ SELECT 1;

+

+

+

+

+ bracketed comment

+

+

+/* This is a bracketed comment. */

+

+SELECT 1;

+

+

+

+

+ nested bracketed comment

+

+

+/* This is a /* nested bracketed comment*/ .*/

+

+SELECT 1;

+

+

+

+

+

+## Spark SQL CLI Interactive Shell Commands

+

+When `./bin/spark-sql` is run without either the `-e` or `-f` option, it enters interactive shell mode.

+Use `;` (semicolon) to terminate commands. Notice:

+1. The CLI use `;` to terminate commands only when it's at the end of line, and it's not escaped by `\\;`.

+2. `;` is the only way to terminate commands. If the user types `SELECT 1` and presses enter, the console will just wait for input.

+3. If the user types multiple commands in one line like `SELECT 1; SELECT 2;`, the commands `SELECT 1` and `SELECT 2` will be executed separatly.

+4. If `;` appears within a SQL statement (not the end of the line), then it has no special meanings:

+ ```sql

+ -- This is a ; comment

+ SELECT ';' as a;

+ ```

+ This is just a comment line followed by a SQL query which returns a string literal.

+ ```sql

+ /* This is a comment contains ;

+ */ SELECT 1;

+ ```

+ However, if ';' is the end of the line, it terminates the SQL statement. The example above will be terminated into `/* This is a comment contains ` and `*/ SELECT 1`, Spark will submit these two command and throw parser error (unclosed bracketed comment).

+

+

+

+CommandDescription

+

+ quit or exit

+ Exits the interactive shell.

+

+

+ !

+ Executes a shell command from the Spark SQL CLI shell.

+

+

+ dfs

+ Executes a HDFS <a href="https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-hdfs/HDFSCommands.html#dfs">dfs</a> command from the Spark SQL CLI shell.

+

+

+

+ Executes a Spark SQL query and prints results to standard output.

+

+

+ source

+ Executes a script file inside the CLI.

+

+

+

+## Examples

+

+Example of running a query from the command line:

+

+./bin/spark-sql -e 'SELECT COL FROM TBL'

+

+Example of setting Hive configuration variables:

+

+./bin/spark-sql -e 'SELECT COL FROM TBL' --hiveconf

hiv
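The `.hiverc` lookup described in the quoted section can be illustrated with a small resolver. `HivercResolver` is a hypothetical helper, not Spark or Hive code; it takes the environment and an existence check as parameters so the logic can be exercised without touching the file system, and it assumes that missing default files are simply skipped.

```scala
import java.nio.file.{Path, Paths}

// Hypothetical helper illustrating the .hiverc lookup; not Spark or Hive code.
object HivercResolver {
  // Explicit -i files win. Otherwise try $HIVE_HOME/bin/.hiverc and then
  // $HOME/.hiverc, keeping only the candidates that exist.
  def initFiles(
      explicit: Seq[String],
      env: Map[String, String],
      exists: Path => Boolean): Seq[Path] =
    if (explicit.nonEmpty) explicit.map(p => Paths.get(p))
    else {
      val candidates =
        env.get("HIVE_HOME").map(home => Paths.get(home, "bin", ".hiverc")).toSeq ++
          env.get("HOME").map(home => Paths.get(home, ".hiverc")).toSeq
      candidates.filter(exists)
    }
}
```

Passing `exists` in, rather than calling `Files.exists` directly, keeps the lookup order testable on any machine.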

[GitHub] [spark] dongjoon-hyun commented on a change in pull request #34874: [SPARK-37622][K8S] Support K8s executor rolling policy

dongjoon-hyun commented on a change in pull request #34874:

URL: https://github.com/apache/spark/pull/34874#discussion_r767477976

##

File path: resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala

##

@@ -145,6 +146,20 @@ private[spark] object Config extends Logging {

.checkValue(_ >= 0, "Interval should be non-negative")

.createWithDefault(0)

+ object ExecutorRollPolicy extends Enumeration {

+val ID, ADD_TIME, TOTAL_GC_TIME, TOTAL_DURATION = Value

+ }

+

+ val EXECUTOR_ROLL_POLICY =

+ConfigBuilder("spark.kubernetes.executor.rollPolicy")

+ .doc("Executor roll policy: Valid values are ID, ADD_TIME, TOTAL_GC_TIME (default), " +

+"and TOTAL_DURATION")

Review comment:

Thank you for review. Sure! I'll add.
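Regarding the request to describe each policy, the sketch below shows one way the `ExecutorRollPolicy` values from the diff could be parsed case-insensitively, with an error that lists every valid value, and mapped to a selection rule. The `ExecutorSummary` type, its fields, and the per-policy rules (smallest ID, earliest add time, largest total GC time, largest total task duration) are assumptions for illustration, not the PR's implementation.

```scala
import java.util.Locale

object ExecutorRollPolicy extends Enumeration {
  val ID, ADD_TIME, TOTAL_GC_TIME, TOTAL_DURATION = Value
}

// Hypothetical per-executor summary; the field names are illustrative only.
final case class ExecutorSummary(id: Int, addTime: Long, totalGcTime: Long, totalDuration: Long)

object RollPolicySketch {
  // Case-insensitive parsing with an error message that lists every valid value.
  def parse(raw: String): ExecutorRollPolicy.Value = {
    val normalized = raw.trim.toUpperCase(Locale.ROOT)
    ExecutorRollPolicy.values.find(_.toString == normalized).getOrElse {
      throw new IllegalArgumentException(
        s"Invalid executor roll policy '$raw'. Valid values are: " +
          ExecutorRollPolicy.values.mkString(", "))
    }
  }

  // One plausible reading of each policy (an assumption, not the PR's logic).
  def choose(
      policy: ExecutorRollPolicy.Value,
      executors: Seq[ExecutorSummary]): Option[ExecutorSummary] =
    if (executors.isEmpty) None
    else Some(policy match {
      case ExecutorRollPolicy.ID => executors.minBy(_.id)
      case ExecutorRollPolicy.ADD_TIME => executors.minBy(_.addTime)
      case ExecutorRollPolicy.TOTAL_GC_TIME => executors.maxBy(_.totalGcTime)
      case ExecutorRollPolicy.TOTAL_DURATION => executors.maxBy(_.totalDuration)
    })
}
```

Keeping the rule for each value in one `match` puts the policy semantics next to the enumeration, which is also where a per-value description in `.doc(...)` would be easiest to keep in sync.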


[GitHub] [spark] SparkQA commented on pull request #34878: [SPARK-37626][BUILD] Upgrade libthrift to 0.15.0

SparkQA commented on pull request #34878: URL: https://github.com/apache/spark/pull/34878#issuecomment-992195550 Kubernetes integration test unable to build dist. exiting with code: 1 URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/50600/

[GitHub] [spark] cloud-fan commented on a change in pull request #34852: [SPARK-37591][SQL] Support the GCM mode by `aes_encrypt()`/`aes_decrypt()`

cloud-fan commented on a change in pull request #34852:

URL: https://github.com/apache/spark/pull/34852#discussion_r767477561

##

File path: sql/core/src/test/scala/org/apache/spark/sql/DataFrameFunctionsSuite.scala

##

@@ -386,7 +386,7 @@ class DataFrameFunctionsSuite extends QueryTest with SharedSparkSession {

}

// Unsupported AES mode and padding in decrypt

-checkUnsupportedMode(df2.selectExpr(s"aes_decrypt(value16, '$key16', 'GSM')"))

+checkUnsupportedMode(df2.selectExpr(s"aes_decrypt(value16, '$key16', 'GSM', 'PKCS')"))

Review comment:

shall we test `GCM`?
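For reference while reviewing the test, the round trip below shows what AES in GCM mode looks like with the standard JCA `Cipher` API (`AES/GCM/NoPadding`, a 12-byte IV and a 128-bit tag). It is not Spark's `aes_encrypt()`/`aes_decrypt()` implementation; `AesGcmSketch` is hypothetical, and prefixing the IV to the ciphertext is an assumption of this sketch.

```scala
import java.security.SecureRandom
import javax.crypto.Cipher
import javax.crypto.spec.{GCMParameterSpec, SecretKeySpec}

object AesGcmSketch {
  private val IvLength = 12 // 96-bit IV, the usual choice for GCM
  private val TagBits = 128 // authentication tag length in bits
  private val random = new SecureRandom()

  // Encrypts with a fresh random IV; the output is IV ++ (ciphertext ++ tag).
  def encrypt(plain: Array[Byte], key: Array[Byte]): Array[Byte] = {
    val iv = new Array[Byte](IvLength)
    random.nextBytes(iv)
    val cipher = Cipher.getInstance("AES/GCM/NoPadding")
    cipher.init(Cipher.ENCRYPT_MODE, new SecretKeySpec(key, "AES"), new GCMParameterSpec(TagBits, iv))
    iv ++ cipher.doFinal(plain)
  }

  // Reads the IV back from the prefix; doFinal verifies the tag and throws
  // AEADBadTagException if the input was modified.
  def decrypt(input: Array[Byte], key: Array[Byte]): Array[Byte] = {
    val cipher = Cipher.getInstance("AES/GCM/NoPadding")
    cipher.init(Cipher.DECRYPT_MODE, new SecretKeySpec(key, "AES"),
      new GCMParameterSpec(TagBits, input, 0, IvLength))
    cipher.doFinal(input, IvLength, input.length - IvLength)
  }
}
```

GCM is a stream-style mode, so it is used with `NoPadding`; a padding argument such as `'PKCS'` has no role there. Requesting `AES/GSM/NoPadding` from `Cipher.getInstance` fails on the default providers, since no mode has that name.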


[GitHub] [spark] SparkQA removed a comment on pull request #34875: [SPARK-37624][PYTHON][DOCS] Suppress warnings for live pandas-on-Spark quickstart notebooks

SparkQA removed a comment on pull request #34875: URL: https://github.com/apache/spark/pull/34875#issuecomment-992124560 **[Test build #146118 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/146118/testReport)** for PR 34875 at commit [`9578511`](https://github.com/apache/spark/commit/9578511d4a7bec1e05ca4ab2bee14bce21cbd45e).

[GitHub] [spark] SparkQA commented on pull request #34875: [SPARK-37624][PYTHON][DOCS] Suppress warnings for live pandas-on-Spark quickstart notebooks

SparkQA commented on pull request #34875: URL: https://github.com/apache/spark/pull/34875#issuecomment-992194460 **[Test build #146118 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/146118/testReport)** for PR 34875 at commit [`9578511`](https://github.com/apache/spark/commit/9578511d4a7bec1e05ca4ab2bee14bce21cbd45e). * This patch **fails PySpark pip packaging tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] [spark] viirya commented on a change in pull request #34874: [SPARK-37622][K8S] Support K8s executor rolling policy

viirya commented on a change in pull request #34874:

URL: https://github.com/apache/spark/pull/34874#discussion_r767476322

##

File path: resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala

##

@@ -145,6 +146,20 @@ private[spark] object Config extends Logging {

.checkValue(_ >= 0, "Interval should be non-negative")

.createWithDefault(0)

+ object ExecutorRollPolicy extends Enumeration {

+val ID, ADD_TIME, TOTAL_GC_TIME, TOTAL_DURATION = Value

+ }

+

+ val EXECUTOR_ROLL_POLICY =

+ConfigBuilder("spark.kubernetes.executor.rollPolicy")

+ .doc("Executor roll policy: Valid values are ID, ADD_TIME, TOTAL_GC_TIME (default), " +

+"and TOTAL_DURATION")

Review comment:

Do we need to describe the meanings for each policy like the description does?


[GitHub] [spark] Ngone51 commented on a change in pull request #34629: [SPARK-37355][CORE]Avoid Block Manager registrations when Executor is shutting down

Ngone51 commented on a change in pull request #34629:

URL: https://github.com/apache/spark/pull/34629#discussion_r767476270

##

File path: core/src/main/scala/org/apache/spark/storage/BlockManager.scala

##

@@ -620,11 +620,13 @@ private[spark] class BlockManager(

* Note that this method must be called without any BlockInfo locks held.

*/

def reregister(): Unit = {

-// TODO: We might need to rate limit re-registering.

-logInfo(s"BlockManager $blockManagerId re-registering with master")

-master.registerBlockManager(blockManagerId, diskBlockManager.localDirsString, maxOnHeapMemory,

- maxOffHeapMemory, storageEndpoint)

-reportAllBlocks()

+if (!SparkEnv.get.isStopped) {

Review comment:

Sorry, I mean you can update the fix with `HeartbeatReceiver` in this PR.
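The guard added in the diff follows a check-then-act pattern: skip re-registration once the environment is stopping. A minimal, self-contained sketch of that pattern follows; `ReregisterSketch` and its `register` callback are hypothetical stand-ins for `BlockManager` and the `SparkEnv.get.isStopped` check, not Spark code.

```scala
import java.util.concurrent.atomic.AtomicBoolean

// Hypothetical stand-in for BlockManager.reregister() guarded by a "stopped" flag.
final class ReregisterSketch(register: () => Unit) {
  private val stopped = new AtomicBoolean(false)

  // Called when shutdown begins (the point at which isStopped would become true).
  def stop(): Unit = stopped.set(true)

  // Returns true if a re-registration was attempted, false if it was skipped.
  def reregister(): Boolean =
    if (stopped.get()) {
      false // shutting down: do not re-register with the master
    } else {
      register() // re-register (in Spark, followed by reporting all blocks)
      true
    }
}
```

A check like this narrows the window but does not close it: a stop that happens between the check and the `register()` call can still let one registration through, so the receiving side may need its own guard.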


[GitHub] [spark] viirya commented on pull request #34845: [SPARK-37577][SQL] Fix ClassCastException: ArrayType cannot be cast to StructType for Generate Pruning

viirya commented on pull request #34845: URL: https://github.com/apache/spark/pull/34845#issuecomment-992193200 Thanks. I will make a backport to branch-3.2.

[GitHub] [spark] cloud-fan commented on a change in pull request #34673: [SPARK-37343][SQL] Implement createIndex, IndexExists and dropIndex in JDBC (Postgres dialect)

cloud-fan commented on a change in pull request #34673:

URL: https://github.com/apache/spark/pull/34673#discussion_r767474830

##

File path: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/jdbc/JdbcUtils.scala

##

@@ -1073,6 +1075,73 @@ object JdbcUtils extends Logging with SQLConfHelper {

}

}

+ /**

+ * Check if index exists in a table

+ */

+ def checkIfIndexExists(

+ conn: Connection,

+ sql: String,

+ options: JDBCOptions): Boolean = {

+val statement = conn.createStatement

+try {

+ statement.setQueryTimeout(options.queryTimeout)

+ val rs = statement.executeQuery(sql)

+ rs.next

+} catch {

+ case _: Exception =>

+logWarning("Cannot retrieved index info.")

+false

+} finally {

+ statement.close()

+}

+ }

+

+ /**

+ * Process index properties and return tuple of indexType and list of the other index properties.

+ */

+ def processIndexProperties(

+ properties: util.Map[String, String],

+ catalogName: String

+): (String, Array[String]) = {

+var indexType = ""

+val indexPropertyList: ArrayBuffer[String] = ArrayBuffer[String]()

+val supportedIndexTypeList = getSupportedIndexTypeList(catalogName)

+

+if (!properties.isEmpty) {

+ properties.asScala.foreach { case (k, v) =>

+if (k.equals(SupportsIndex.PROP_TYPE)) {

+ if (containsIndexTypeIgnoreCase(supportedIndexTypeList, v)) {

+indexType = s"USING $v"

+ } else {

+throw new UnsupportedOperationException(s"Index Type $v is not supported." +

+ s" The supported Index Types are: ${supportedIndexTypeList.mkString(" AND ")}")

+ }

+} else {

+ indexPropertyList.append(s"$k = $v")

+}

+ }

+}

+(indexType, indexPropertyList.toArray)

+ }

+

+ def containsIndexTypeIgnoreCase(supportedIndexTypeList: Array[String], value: String): Boolean = {

+if (supportedIndexTypeList.isEmpty) {

+ throw new UnsupportedOperationException(s"None of index type is supported.")

Review comment:

to be more user-facing: `Cannot specify 'USING index_type' in 'CREATE INDEX'`


[GitHub] [spark] huaxingao opened a new pull request #34879: [SPARK-37627][SQL] Add sorted column in BucketTransform

huaxingao opened a new pull request #34879:

URL: https://github.com/apache/spark/pull/34879

### What changes were proposed in this pull request?

In V1, we can create a table with sorted buckets as follows:

```

sql("CREATE TABLE tbl(a INT, b INT) USING parquet " +

"CLUSTERED BY (a) SORTED BY (b) INTO 5 BUCKETS")

```

However, creating a table with sorted buckets in V2 fails with the exception `org.apache.spark.sql.AnalysisException: Cannot convert bucketing with sort columns to a transform.`

### Why are the changes needed?

This PR adds sorted columns to BucketTransform so that we can create a table with sorted buckets in V2.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

new UT
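To make concrete what the V2 transform has to carry, the sketch below models a bucket spec with sort columns and renders the clause from the V1 example. `SortedBucketSketch` is hypothetical; its three fields mirror those of V1's `BucketSpec` (number of buckets, bucket column names, sort column names), and it is not the `BucketTransform` change in this PR.

```scala
// Hypothetical model of a sorted bucket spec; not Spark's BucketTransform.
final case class SortedBucketSketch(
    numBuckets: Int,
    bucketColumns: Seq[String],
    sortColumns: Seq[String]) {
  require(numBuckets > 0, "number of buckets must be positive")
  require(bucketColumns.nonEmpty, "at least one bucket column is required")

  // Renders the clause used in the V1 example; SORTED BY is omitted when empty.
  def toSql: String = {
    val clustered = s"CLUSTERED BY (${bucketColumns.mkString(", ")})"
    val sorted =
      if (sortColumns.isEmpty) "" else s" SORTED BY (${sortColumns.mkString(", ")})"
    s"$clustered$sorted INTO $numBuckets BUCKETS"
  }
}
```

If the sort columns are dropped on the way from this shape to a transform, the `SORTED BY` part cannot be reconstructed, which is the information loss the exception above reports.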


[GitHub] [spark] cloud-fan commented on a change in pull request #34673: [SPARK-37343][SQL] Implement createIndex, IndexExists and dropIndex in JDBC (Postgres dialect)

cloud-fan commented on a change in pull request #34673:

URL: https://github.com/apache/spark/pull/34673#discussion_r767474830

##

File path: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/jdbc/JdbcUtils.scala

##

@@ -1073,6 +1075,73 @@ object JdbcUtils extends Logging with SQLConfHelper {

}

}

+ /**

+ * Check if index exists in a table

+ */

+ def checkIfIndexExists(

+ conn: Connection,

+ sql: String,

+ options: JDBCOptions): Boolean = {

+val statement = conn.createStatement

+try {

+ statement.setQueryTimeout(options.queryTimeout)

+ val rs = statement.executeQuery(sql)

+ rs.next

+} catch {

+ case _: Exception =>

+logWarning("Cannot retrieved index info.")

+false

+} finally {

+ statement.close()

+}

+ }

+

+ /**

+ * Process index properties and return tuple of indexType and list of the other index properties.

+ */

+ def processIndexProperties(

+ properties: util.Map[String, String],

+ catalogName: String

+): (String, Array[String]) = {

+var indexType = ""

+val indexPropertyList: ArrayBuffer[String] = ArrayBuffer[String]()

+val supportedIndexTypeList = getSupportedIndexTypeList(catalogName)

+

+if (!properties.isEmpty) {

+ properties.asScala.foreach { case (k, v) =>

+if (k.equals(SupportsIndex.PROP_TYPE)) {

+ if (containsIndexTypeIgnoreCase(supportedIndexTypeList, v)) {

+indexType = s"USING $v"

+ } else {

+throw new UnsupportedOperationException(s"Index Type $v is not supported." +

+ s" The supported Index Types are: ${supportedIndexTypeList.mkString(" AND ")}")

+ }

+} else {

+ indexPropertyList.append(s"$k = $v")

+}

+ }

+}

+(indexType, indexPropertyList.toArray)

+ }

+

+ def containsIndexTypeIgnoreCase(supportedIndexTypeList: Array[String], value: String): Boolean = {

+if (supportedIndexTypeList.isEmpty) {

+ throw new UnsupportedOperationException(s"None of index type is supported.")

Review comment:

to be more user-facing: `Cannot specify index type in CREATE INDEX`


[GitHub] [spark] wankunde commented on a change in pull request #34629: [SPARK-37355][CORE]Avoid Block Manager registrations when Executor is shutting down

wankunde commented on a change in pull request #34629:

URL: https://github.com/apache/spark/pull/34629#discussion_r767474069

##

File path: core/src/main/scala/org/apache/spark/storage/BlockManager.scala

##

@@ -620,11 +620,13 @@ private[spark] class BlockManager(

* Note that this method must be called without any BlockInfo locks held.

*/

def reregister(): Unit = {

-// TODO: We might need to rate limit re-registering.

-logInfo(s"BlockManager $blockManagerId re-registering with master")

-master.registerBlockManager(blockManagerId, diskBlockManager.localDirsString, maxOnHeapMemory,

- maxOffHeapMemory, storageEndpoint)

-reportAllBlocks()

+if (!SparkEnv.get.isStopped) {

Review comment:

I have updated the PR description.


[GitHub] [spark] cloud-fan commented on a change in pull request #34673: [SPARK-37343][SQL] Implement createIndex, IndexExists and dropIndex in JDBC (Postgres dialect)

cloud-fan commented on a change in pull request #34673:

URL: https://github.com/apache/spark/pull/34673#discussion_r767473789

##

File path: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/jdbc/JdbcUtils.scala

##

@@ -1073,6 +1075,73 @@ object JdbcUtils extends Logging with SQLConfHelper {

}

}

+ /**

+ * Check if index exists in a table

+ */

+ def checkIfIndexExists(

+ conn: Connection,

+ sql: String,

+ options: JDBCOptions): Boolean = {

+val statement = conn.createStatement

+try {

+ statement.setQueryTimeout(options.queryTimeout)

+ val rs = statement.executeQuery(sql)

+ rs.next

+} catch {

+ case _: Exception =>

+logWarning("Cannot retrieved index info.")

+false

+} finally {

+ statement.close()

+}

+ }

+

+ /**

+ * Process index properties and return tuple of indexType and list of the other index properties.

+ */

+ def processIndexProperties(

+ properties: util.Map[String, String],

+ catalogName: String

+): (String, Array[String]) = {

Review comment:

```suggestion

catalogName: String): (String, Array[String]) = {

```
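A condensed sketch of the index-property handling in the diff, using immutable Scala collections instead of a mutable `ArrayBuffer` and the user-facing message suggested in review. `IndexPropertiesSketch` is hypothetical, `PropType = "type"` is an assumed stand-in for `SupportsIndex.PROP_TYPE`, and the supported-type list is passed in directly rather than looked up by catalog name.

```scala
// Hypothetical sketch of the JdbcUtils index-property helpers; not the PR's code.
object IndexPropertiesSketch {
  // Assumed stand-in for SupportsIndex.PROP_TYPE.
  val PropType = "type"

  // Case-insensitive membership test; with no supported types at all, any
  // explicit index type is rejected with a user-facing message.
  def containsIndexTypeIgnoreCase(supported: Seq[String], value: String): Boolean = {
    if (supported.isEmpty) {
      throw new UnsupportedOperationException(
        "Cannot specify 'USING index_type' in 'CREATE INDEX'")
    }
    supported.exists(_.equalsIgnoreCase(value))
  }

  // Returns the USING clause (or "" when no type is given) and the remaining
  // properties rendered as "key = value".
  def processIndexProperties(
      properties: Map[String, String],
      supported: Seq[String]): (String, Seq[String]) = {
    val (typeProps, others) = properties.partition { case (k, _) => k == PropType }
    val usingClause = typeProps.get(PropType) match {
      case Some(v) if containsIndexTypeIgnoreCase(supported, v) => s"USING $v"
      case Some(v) =>
        throw new UnsupportedOperationException(
          s"Index type $v is not supported. Supported index types are: " +
            supported.mkString(", "))
      case None => ""
    }
    (usingClause, others.map { case (k, v) => s"$k = $v" }.toSeq)
  }
}
```

Partitioning the map once replaces the `var indexType` plus `ArrayBuffer` accumulation, and the signature keeps the closing `): (String, Seq[String]) = {` on the last parameter line, as the suggestion above recommends.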


[GitHub] [spark] Ngone51 commented on a change in pull request #34831: [SPARK-37574][CORE][SHUFFLE] Simplify fetchBlocks w/o retry

Ngone51 commented on a change in pull request #34831:

URL: https://github.com/apache/spark/pull/34831#discussion_r767473622

##

File path: core/src/main/scala/org/apache/spark/network/netty/NettyBlockTransferService.scala

##

@@ -139,14 +139,7 @@ private[spark] class NettyBlockTransferService(

}

}

}

-

- if (maxRetries > 0) {

-// Note this Fetcher will correctly handle maxRetries == 0; we avoid it just in case there's

-// a bug in this code. We should remove the if statement once we're sure of the stability.

Review comment:

When `spark.shuffle.io.maxRetries=0`, it tests `OneForOneBlockFetcher`

rather than `RetryingBlockTransferor`, right?
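The question turns on whether wrapping a fetch in a retrying transferor with zero retries behaves like calling the fetcher directly. A generic sketch follows; `RetrySketch.withRetries` is a hypothetical helper, not `RetryingBlockTransferor`'s API. With `maxRetries = 0` it makes exactly one attempt, so the attempt count matches a direct call, but the wrapper is still a different code path.

```scala
import scala.util.Try

// Hypothetical retry wrapper; not RetryingBlockTransferor's API.
object RetrySketch {
  // Makes one attempt, then retries up to `maxRetries` more times while the
  // attempt keeps failing with a non-fatal exception.
  def withRetries[T](maxRetries: Int)(attempt: () => T): Try[T] = {
    require(maxRetries >= 0, "maxRetries must be non-negative")
    var result = Try(attempt())
    var retries = 0
    while (result.isFailure && retries < maxRetries) {
      retries += 1
      result = Try(attempt())
    }
    result
  }
}
```

Once the `if (maxRetries > 0)` branch is removed, a run with `spark.shuffle.io.maxRetries=0` would presumably go through the wrapper as well, so such a run would no longer exercise the fetcher on its own.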


[GitHub] [spark] AngersZhuuuu commented on a change in pull request #34821: [SPARK-37558][DOC] Improve spark sql cli document

AngersZh commented on a change in pull request #34821:

URL: https://github.com/apache/spark/pull/34821#discussion_r767473011

##

File path: docs/sql-distributed-sql-engine-spark-sql-cli.md

##

@@ -0,0 +1,193 @@

+---

+layout: global

+title: Spark SQL CLI

+displayTitle: Spark SQL CLI

+license: |

+ Licensed to the Apache Software Foundation (ASF) under one or more

+ contributor license agreements. See the NOTICE file distributed with

+ this work for additional information regarding copyright ownership.

+ The ASF licenses this file to You under the Apache License, Version 2.0

+ (the "License"); you may not use this file except in compliance with

+ the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

+---

+

+* Table of contents

+{:toc}

+

+

+The Spark SQL CLI is a convenient tool to run the Hive metastore service in local mode and execute SQL

+queries input from the command line. Note that the Spark SQL CLI cannot talk to the Thrift JDBC server.

+

+To start the Spark SQL CLI, run the following in the Spark directory:

+

+./bin/spark-sql

+

+Configuration of Hive is done by placing your `hive-site.xml`, `core-site.xml` and `hdfs-site.xml` files in `conf/`.

+

+## Spark SQL Command Line Options

+

+You may run `./bin/spark-sql --help` for a complete list of all available options.

+

+CLI options:

+ -d,--define <key=value>          Variable substitution to apply to Hive

+                                  commands. e.g. -d A=B or --define A=B

+    --database <databasename>     Specify the database to use

+ -e <quoted-query-string>         SQL from command line

+ -f <filename>                    SQL from files

+ -H,--help                        Print help information

+    --hiveconf <property=value>   Use value for given property

+    --hivevar <key=value>         Variable substitution to apply to Hive

+                                  commands. e.g. --hivevar A=B

+ -i <filename>                    Initialization SQL file

+ -S,--silent                      Silent mode in interactive shell

+ -v,--verbose                     Verbose mode (echo executed SQL to the

+                                  console)

+

+## The hiverc File

+

+When invoked without the `-i` option, the Spark SQL CLI will attempt to load `$HIVE_HOME/bin/.hiverc` and `$HOME/.hiverc` as initialization files.
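Here is a minimal sketch of the lookup described above. It assumes the CLI tries the two files in the order listed and silently skips any file that does not exist; the text states neither detail. `HivercSketch` and its parameters are hypothetical. The environment and the file-existence check are passed in so the logic can be exercised without a filesystem.

```scala
// Hypothetical sketch of init-file selection; not the Spark SQL CLI driver code.
object HivercSketch {
  def initFiles(
      initOption: Seq[String],          // files given with -i (empty if the option was absent)
      env: Map[String, String],         // environment variables, e.g. HIVE_HOME and HOME
      exists: String => Boolean): Seq[String] = {
    if (initOption.nonEmpty) {
      initOption                        // -i given: the .hiverc fallbacks are not consulted
    } else {
      val candidates = Seq(
        env.get("HIVE_HOME").map(h => s"$h/bin/.hiverc"),
        env.get("HOME").map(h => s"$h/.hiverc")).flatten
      candidates.filter(exists)         // assumption: missing files are skipped silently
    }
  }
}
```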

+

+## Supported comment types

+

+

+| Comment | Example |

+| --- | --- |

+| simple comment | `-- This is a simple comment.`<br>`SELECT 1;` |

+| bracketed comment | `/* This is a bracketed comment. */`<br>`SELECT 1;` |

+| nested bracketed comment | `/* This is a /* nested bracketed comment*/ .*/`<br>`SELECT 1;` |
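Because bracketed comments can nest, a scanner cannot simply stop at the first `*/`. In the nested example above, doing so would leave ` .*/` behind as SQL text. The following is a minimal sketch of depth counting. It assumes only `/* */` comments matter and ignores quotes and `--` comments. `CommentSketch.stripBracketed` is hypothetical and is not how Spark's parser is implemented.

```scala
// Hypothetical helper; not Spark's SQL parser.
object CommentSketch {
  // Removes (possibly nested) bracketed comments, or returns Left if one is never closed.
  def stripBracketed(sql: String): Either[String, String] = {
    val out = new StringBuilder
    var depth = 0                                   // current nesting level of /* ... */
    var i = 0
    while (i < sql.length) {
      if (sql.startsWith("/*", i)) { depth += 1; i += 2 }                 // open (nests)
      else if (depth > 0 && sql.startsWith("*/", i)) { depth -= 1; i += 2 } // close one level
      else {
        if (depth == 0) out.append(sql.charAt(i))   // keep text outside any comment
        i += 1
      }
    }
    if (depth > 0) Left("unclosed bracketed comment") else Right(out.toString)
  }
}
```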

+

+## Spark SQL CLI Interactive Shell Commands

+

+When `./bin/spark-sql` is run without either the `-e` or `-f` option, it enters interactive shell mode.

+Use `;` (semicolon) to terminate commands. Notice:

+1. The CLI uses `;` to terminate commands only when it is at the end of the line and is not escaped by `\\;`.

+2. `;` is the only way to terminate commands. If the user types `SELECT 1` and presses enter, the console will just wait for input.

+3. If the user types multiple commands in one line like `SELECT 1; SELECT 2;`, the commands `SELECT 1` and `SELECT 2` will be executed separately.

+4. If `;` appears within a SQL statement (not at the end of the line), then it has no special meaning:

+ ```sql

+ -- This is a ; comment

+ SELECT ';' as a;

+ ```

+ This is just a comment line followed by a SQL query which returns a string literal.

+ ```sql

+ /* This is a comment contains ;

+ */ SELECT 1;

+ ```

+ However, if `;` is at the end of the line, it terminates the SQL statement. The example above will be split into `/* This is a comment contains ` and `*/ SELECT 1`; Spark will submit these as two separate commands and throw a parser error (unclosed bracketed comment).
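The rules above can be modelled in two phases. First, lines are accumulated until one ends with an unescaped `;`. Second, the accumulated text is split on `;` characters that are outside quotes and `--` comments.

The sketch below is hypothetical and simplified, and it is not the actual CLI driver code:

* It does not handle escapes inside quotes.
* The splitter is not aware of bracketed comments.
* It takes the escape to be a single backslash before the final `;`.

It does reproduce the numbered behaviours and the bracketed-comment example.

```scala
// Hypothetical model of the interactive-shell rules; not SparkSQLCLIDriver.
object ShellSketch {
  // Phase 1: group raw input lines into commands. A command ends at a line whose
  // trimmed text ends with ';' but not with the escaped form backslash-semicolon.
  // Returns the completed commands plus any text still waiting for a ';'.
  def groupLines(lines: Seq[String]): (Seq[String], String) = {
    val commands = Seq.newBuilder[String]
    var pending = ""
    for (line <- lines) {
      val joined = if (pending.isEmpty) line else pending + "\n" + line
      val t = line.trim
      if (t.endsWith(";") && !t.endsWith("\\;")) {
        commands += joined
        pending = ""
      } else {
        pending = joined // rule 2: keep waiting for a terminating ';'
      }
    }
    (commands.result(), pending)
  }

  // Phase 2: split one command on ';' outside single/double quotes and '--' comments.
  def splitStatements(command: String): Seq[String] = {
    val out = Seq.newBuilder[String]
    val cur = new StringBuilder
    var quote: Option[Char] = None
    var inLineComment = false
    var i = 0
    while (i < command.length) {
      val c = command.charAt(i)
      if (inLineComment) { if (c == '\n') inLineComment = false; cur.append(c) }
      else if (quote.nonEmpty) { if (quote.contains(c)) quote = None; cur.append(c) }
      else if (c == '\'' || c == '"') { quote = Some(c); cur.append(c) }
      else if (command.startsWith("--", i)) { inLineComment = true; cur.append(c) }
      else if (c == ';') { if (cur.toString.trim.nonEmpty) out += cur.toString.trim; cur.clear() }
      else cur.append(c)
      i += 1
    }
    if (cur.toString.trim.nonEmpty) out += cur.toString.trim
    out.result()
  }
}
```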

+

+

+

+| Command | Description |

+| --- | --- |

+| `quit` or `exit` | Exits the interactive shell. |

+| `!<command>` | Executes a shell command from the Spark SQL CLI shell. |

+| `dfs <HDFS dfs command>` | Executes an HDFS [dfs command](https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-hdfs/HDFSCommands.html#dfs) from the Spark SQL CLI shell. |

+| `<query string>` | Executes a Spark SQL query and prints results to standard output. |

+| `source <filepath>` | Executes a script file inside the CLI. |

+

+## Examples

+

+Example of running a query from the command line:

+

+./bin/spark-sql -e 'SELECT COL FROM TBL'

+

+Example of setting Hive configuration variables:

+

+./bin/spark-sql -e 'SELECT COL FROM TBL' --hiveconf

[GitHub] [spark] cloud-fan commented on a change in pull request #34673: [SPARK-37343][SQL] Implement createIndex, IndexExists and dropIndex in JDBC (Postgres dialect)

cloud-fan commented on a change in pull request #34673:

URL: https://github.com/apache/spark/pull/34673#discussion_r767472919

##

File path:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/jdbc/JdbcUtils.scala

##

@@ -1073,6 +1076,81 @@ object JdbcUtils extends Logging with SQLConfHelper {

}

}

+ /**

+ * Check if index exists in a table

+ */

+ def checkIfIndexExists(

+ conn: Connection,

+ sql: String,

+ options: JDBCOptions): Boolean = {

+val statement = conn.createStatement

+try {

+ statement.setQueryTimeout(options.queryTimeout)

+ val rs = statement.executeQuery(sql)

+ rs.next

+} catch {

+ case _: Exception =>

+logWarning("Cannot retrieved index info.")

+false

+} finally {

+ statement.close()

+}

+ }

+

+ /**

+ * Process index properties and return tuple of indexType and list of the other index properties.

+ */

+ def processIndexProperties(

+ properties: util.Map[String, String],

+ catalogName: String

+): (String, Array[String]) = {

+var indexType = ""

+val indexPropertyList: ArrayBuffer[String] = ArrayBuffer[String]()

+val supportedIndexTypeList = getSupportedIndexTypeList(catalogName)

+

+if (!properties.isEmpty) {

+ properties.asScala.foreach { case (k, v) =>

+if (k.equals(SupportsIndex.PROP_TYPE)) {

+ if (containsIndexTypeIgnoreCase(supportedIndexTypeList, v)) {

+indexType = s"USING $v"

+ } else {

+throw new UnsupportedOperationException(s"Index Type $v is not supported." +

+ s" The supported Index Types are: ${supportedIndexTypeList.mkString(" AND ")}")

+ }

+} else {

+ indexPropertyList.append(convertPropertyPairToString(catalogName, k, v))

+}

+ }

+}

+(indexType, indexPropertyList.toArray)

+ }

+

+ def containsIndexTypeIgnoreCase(supportedIndexTypeList: Array[String], value: String): Boolean = {

+if (supportedIndexTypeList.isEmpty) {

+ throw new UnsupportedOperationException(s"None of index type is supported.")

+}

+for (indexType <- supportedIndexTypeList) {

+ if (value.equalsIgnoreCase(indexType)) return true

+}

+false

+ }

+

+ def getSupportedIndexTypeList(catalogName: String): Array[String] = {

+catalogName match {

+ case "mysql" => Array("BTREE", "HASH")

+ case "postgresql" => Array("BTREE", "HASH", "BRIN")

+ case _ => Array.empty

+}

+ }

Review comment:

So the only benefit of adding this API in `JdbcDialect` is to share the code that checks for unsupported index types earlier, which I don't think is worthwhile.
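For reference, the type check in the hunk amounts to two things: a case-insensitive membership test and a split of the properties map. A compact, hypothetical standalone version follows, with these assumptions:

* `PropType` is a placeholder for `SupportsIndex.PROP_TYPE`; its real value is not assumed.
* The `k = v` rendering is a placeholder for the dialect-specific `convertPropertyPairToString`.
* Where the supported list comes from is what this thread is debating, so it is simply passed in.

```scala
// Hypothetical standalone rewrite of the hunk's helpers; not the PR's code.
object IndexPropsSketch {
  val PropType = "type" // placeholder standing in for SupportsIndex.PROP_TYPE

  // Idiomatic replacement for the explicit loop with early return.
  def containsIgnoreCase(supported: Seq[String], value: String): Boolean =
    supported.exists(_.equalsIgnoreCase(value))

  // Returns ("USING <type>" or "", the remaining properties rendered as strings).
  def process(properties: Map[String, String], supported: Seq[String]): (String, Seq[String]) = {
    val (typeProps, others) = properties.toSeq.partition { case (k, _) => k == PropType }
    val indexType = typeProps.headOption match {
      case Some((_, v)) if containsIgnoreCase(supported, v) => s"USING $v"
      case Some((_, v)) =>
        throw new UnsupportedOperationException(
          s"Index Type $v is not supported. The supported Index Types are: ${supported.mkString(", ")}")
      case None => ""
    }
    // Placeholder rendering; the PR delegates this to convertPropertyPairToString.
    (indexType, others.map { case (k, v) => s"$k = $v" })
  }
}
```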


[GitHub] [spark] cloud-fan commented on a change in pull request #34673: [SPARK-37343][SQL] Implement createIndex, IndexExists and dropIndex in JDBC (Postgres dialect)

cloud-fan commented on a change in pull request #34673:

URL: https://github.com/apache/spark/pull/34673#discussion_r767472272

##

File path:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/jdbc/JdbcUtils.scala

##

@@ -1073,6 +1076,81 @@ object JdbcUtils extends Logging with SQLConfHelper {

}

}

+ /**

+ * Check if index exists in a table

+ */

+ def checkIfIndexExists(

+ conn: Connection,

+ sql: String,

+ options: JDBCOptions): Boolean = {

+val statement = conn.createStatement

+try {

+ statement.setQueryTimeout(options.queryTimeout)

+ val rs = statement.executeQuery(sql)

+ rs.next

+} catch {

+ case _: Exception =>

+logWarning("Cannot retrieved index info.")

+false

+} finally {

+ statement.close()

+}

+ }

+

+ /**

+ * Process index properties and return tuple of indexType and list of the other index properties.

+ */

+ def processIndexProperties(

+ properties: util.Map[String, String],

+ catalogName: String

+): (String, Array[String]) = {

+var indexType = ""

+val indexPropertyList: ArrayBuffer[String] = ArrayBuffer[String]()

+val supportedIndexTypeList = getSupportedIndexTypeList(catalogName)

+

+if (!properties.isEmpty) {

+ properties.asScala.foreach { case (k, v) =>

+if (k.equals(SupportsIndex.PROP_TYPE)) {

+ if (containsIndexTypeIgnoreCase(supportedIndexTypeList, v)) {

+indexType = s"USING $v"

+ } else {

+throw new UnsupportedOperationException(s"Index Type $v is not supported." +

+ s" The supported Index Types are: ${supportedIndexTypeList.mkString(" AND ")}")

+ }

+} else {

+ indexPropertyList.append(convertPropertyPairToString(catalogName, k, v))

+}

+ }

+}

+(indexType, indexPropertyList.toArray)

+ }

+

+ def containsIndexTypeIgnoreCase(supportedIndexTypeList: Array[String], value: String): Boolean = {

+if (supportedIndexTypeList.isEmpty) {

+ throw new UnsupportedOperationException(s"None of index type is supported.")

+}

+for (indexType <- supportedIndexTypeList) {

+ if (value.equalsIgnoreCase(indexType)) return true

+}

+false

+ }

+

+ def getSupportedIndexTypeList(catalogName: String): Array[String] = {

+catalogName match {

+ case "mysql" => Array("BTREE", "HASH")

+ case "postgresql" => Array("BTREE", "HASH", "BRIN")

+ case _ => Array.empty

+}

+ }

Review comment:

I don't think so. This is for failing earlier in `createIndex`, where the JDBC dialect implementation already has full control.
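One way to read this suggestion: the dialect validates the type while building its own `CREATE INDEX` statement, so no shared list keyed by dialect name is needed. The sketch below is hypothetical. `IndexDialect` and `createIndexSql` are illustrative names, not Spark's `JdbcDialect` API. The type list is copied from the hunk rather than from PostgreSQL's documentation.

```scala
// Hypothetical sketch: validation lives inside the dialect's own createIndex path.
object DialectValidationSketch {
  trait IndexDialect {
    def createIndexSql(index: String, table: String, columns: Seq[String], indexType: Option[String]): String
  }

  object PostgresLike extends IndexDialect {
    private val supported = Set("BTREE", "HASH", "BRIN") // mirrors the hunk's list

    def createIndexSql(index: String, table: String, columns: Seq[String], indexType: Option[String]): String = {
      val using = indexType match {
        case Some(t) if supported.contains(t.toUpperCase(java.util.Locale.ROOT)) => s" USING $t"
        case Some(t) => throw new UnsupportedOperationException(s"Index type $t is not supported")
        case None => ""
      }
      // Fails before any SQL is sent, because the dialect itself builds the statement.
      s"CREATE INDEX $index ON $table$using (${columns.mkString(", ")})"
    }
  }
}
```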


[GitHub] [spark] SparkQA removed a comment on pull request #34878: [SPARK-37626][BUILD] Upgrade libthrift to 0.15.0

SparkQA removed a comment on pull request #34878: URL: https://github.com/apache/spark/pull/34878#issuecomment-992181842 **[Test build #146125 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/146125/testReport)** for PR 34878 at commit [`1b7eb59`](https://github.com/apache/spark/commit/1b7eb598e4361a11e7e89f3d9a53af1b14610ade).

[GitHub] [spark] SparkQA commented on pull request #34878: [SPARK-37626][BUILD] Upgrade libthrift to 0.15.0

SparkQA commented on pull request #34878: URL: https://github.com/apache/spark/pull/34878#issuecomment-992187435 **[Test build #146125 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/146125/testReport)** for PR 34878 at commit [`1b7eb59`](https://github.com/apache/spark/commit/1b7eb598e4361a11e7e89f3d9a53af1b14610ade). * This patch **fails to build**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] [spark] sarutak edited a comment on pull request #34875: [SPARK-37624][PYTHON][DOCS] Suppress warnings for live pandas-on-Spark quickstart notebooks

sarutak edited a comment on pull request #34875: URL: https://github.com/apache/spark/pull/34875#issuecomment-992185125 @xinrong-databricks I've modified the URL. I just cherry-picked the two commits in this change (9578511 and dd84175) to `branch-3.2`.

[GitHub] [spark] xinrong-databricks commented on pull request #34875: [SPARK-37624][PYTHON][DOCS] Suppress warnings for live pandas-on-Spark quickstart notebooks

xinrong-databricks commented on pull request #34875: URL: https://github.com/apache/spark/pull/34875#issuecomment-992186363 Got it, thanks @sarutak

[GitHub] [spark] Kwafoor commented on pull request #34862: [SPARK-30471][SQL]Fix issue when comparing String and IntegerType

Kwafoor commented on pull request #34862: URL: https://github.com/apache/spark/pull/34862#issuecomment-992185361 > > But I still think SparkSQL should remind user where you wrong. > > This doesn't answer the question of why you only fix string integer comparison here. I think string/integer comparison is common and easier to run into; I hadn't encountered the other cases. I have just tried, and I also hit the problem with other types (long, short, etc.). And I agree with you that it's better not to introduce inconsistency into the system. As for other operations such as arithmetic (+-*/), string and integer work correctly (Spark SQL casts the string to double). Maybe my title is confusing. I found the issue when comparing String and IntegerType, and the cause is overflow in the string-to-int cast, so I fixed the issue by throwing an exception in the cast code.
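The following is a minimal illustration, in plain Scala (2.13+ for `toIntOption`), of why an overflowing string-to-int conversion can make a comparison wrong. It does not reproduce Spark's `Cast`. `CastSketch` is hypothetical and only contrasts three behaviours: silent wrapping, an `Option` result, and failing fast as the comment proposes.

```scala
// Hypothetical illustration; not Spark's Cast implementation.
object CastSketch {
  // Parses as Long, then narrows: values outside Int range silently wrap around.
  def wrappingToInt(s: String): Int = s.trim.toLong.toInt

  // Checked parse: None if the text is not a number or does not fit in an Int.
  def checkedToInt(s: String): Option[Int] = s.trim.toIntOption

  // Fail-fast variant: throw instead of producing a wrapped (wrong) value.
  def strictToInt(s: String): Int =
    checkedToInt(s).getOrElse(throw new NumberFormatException(s"'$s' does not fit in an Int"))
}
```

Here `"2147483648"` wraps to `Int.MinValue`, so a comparison such as `value > 1` silently flips.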

[GitHub] [spark] sarutak commented on pull request #34875: [SPARK-37624][PYTHON][DOCS] Suppress warnings for live pandas-on-Spark quickstart notebooks

sarutak commented on pull request #34875: URL: https://github.com/apache/spark/pull/34875#issuecomment-992185125 @xinrong-databricks I've modified the URL.

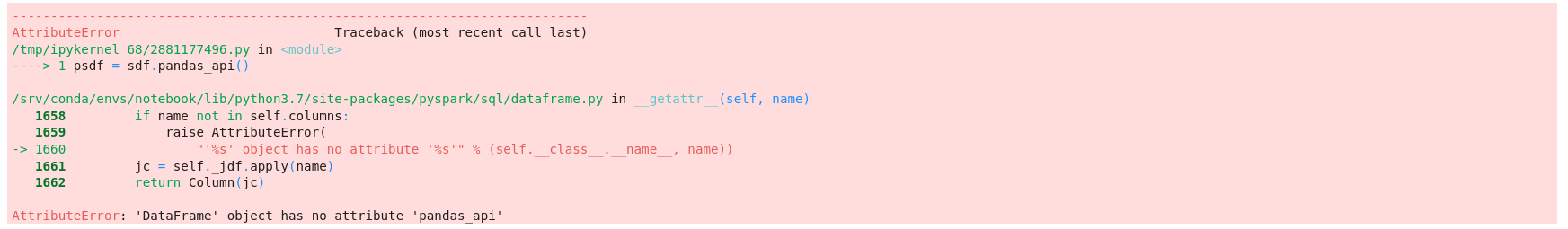

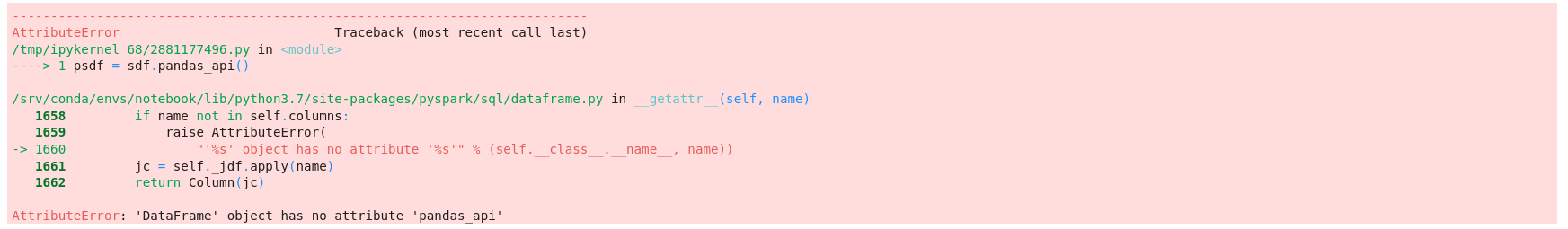

[GitHub] [spark] sarutak edited a comment on pull request #34875: [SPARK-37624][PYTHON][DOCS] Suppress warnings for live pandas-on-Spark quickstart notebooks

sarutak edited a comment on pull request #34875: URL: https://github.com/apache/spark/pull/34875#issuecomment-992172755 @HyukjinKwon LGTM. BTW, the notebook raises an error which is not relevant to this change (the runtime is 3.2.0, while `pandas_api` is not in this version). So, I applied this change to `branch-3.2` and confirmed it works. https://mybinder.org/v2/gh/sarutak/spark/SPARK-37624-branch-3.2?labpath=python%2Fdocs%2Fsource%2Fgetting_started%2Fquickstart_ps.ipynb

[GitHub] [spark] cloud-fan commented on a change in pull request #34673: [SPARK-37343][SQL] Implement createIndex, IndexExists and dropIndex in JDBC (Postgres dialect)

cloud-fan commented on a change in pull request #34673:

URL: https://github.com/apache/spark/pull/34673#discussion_r767468635

##

File path:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/jdbc/JdbcUtils.scala

##

@@ -1073,6 +1076,81 @@ object JdbcUtils extends Logging with SQLConfHelper {

}

}

+ /**

+ * Check if index exists in a table

+ */

+ def checkIfIndexExists(

+ conn: Connection,

+ sql: String,

+ options: JDBCOptions): Boolean = {

+val statement = conn.createStatement

+try {

+ statement.setQueryTimeout(options.queryTimeout)

+ val rs = statement.executeQuery(sql)

+ rs.next

+} catch {

+ case _: Exception =>

+logWarning("Cannot retrieved index info.")

+false

+} finally {

+ statement.close()

+}

+ }

+

+ /**

+ * Process index properties and return tuple of indexType and list of the other index properties.

+ */

+ def processIndexProperties(

+ properties: util.Map[String, String],

+ catalogName: String

+): (String, Array[String]) = {

+var indexType = ""

+val indexPropertyList: ArrayBuffer[String] = ArrayBuffer[String]()

+val supportedIndexTypeList = getSupportedIndexTypeList(catalogName)

+

+if (!properties.isEmpty) {

+ properties.asScala.foreach { case (k, v) =>

+if (k.equals(SupportsIndex.PROP_TYPE)) {

+ if (containsIndexTypeIgnoreCase(supportedIndexTypeList, v)) {

+indexType = s"USING $v"

+ } else {

+throw new UnsupportedOperationException(s"Index Type $v is not supported." +

+ s" The supported Index Types are: ${supportedIndexTypeList.mkString(" AND ")}")

+ }

+} else {

+ indexPropertyList.append(convertPropertyPairToString(catalogName, k, v))

+}

+ }

+}

+(indexType, indexPropertyList.toArray)

+ }

+

+ def containsIndexTypeIgnoreCase(supportedIndexTypeList: Array[String], value: String): Boolean = {

+if (supportedIndexTypeList.isEmpty) {

+ throw new UnsupportedOperationException(s"None of index type is supported.")

+}

+for (indexType <- supportedIndexTypeList) {

+ if (value.equalsIgnoreCase(indexType)) return true

+}

+false

+ }

+

+ def getSupportedIndexTypeList(catalogName: String): Array[String] = {

+catalogName match {

+ case "mysql" => Array("BTREE", "HASH")

+ case "postgresql" => Array("BTREE", "HASH", "BRIN")

+ case _ => Array.empty

+}

+ }

Review comment:

Yea +1 to put it in `JdbcDialect`, instead of hardcoding the dialect names here.
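Here is a minimal sketch of the alternative being +1'd. Each dialect overrides a member listing its index types, and the shared check no longer matches on catalog names. The names are hypothetical (`IndexDialect`, `supportedIndexTypes` and `checkIndexType` are not Spark APIs). The lists mirror the hunk's `getSupportedIndexTypeList`.

```scala
// Hypothetical sketch: supported index types are declared by each dialect.
object DialectListSketch {
  trait IndexDialect {
    def supportedIndexTypes: Seq[String] = Nil // default: no index types supported
  }
  object MySqlLike extends IndexDialect { override val supportedIndexTypes = Seq("BTREE", "HASH") }
  object PostgresLike extends IndexDialect { override val supportedIndexTypes = Seq("BTREE", "HASH", "BRIN") }
  object OtherDialect extends IndexDialect

  // Shared check with no `catalogName match { ... }`: it only asks the dialect.
  def checkIndexType(dialect: IndexDialect, value: String): Unit = {
    val supported = dialect.supportedIndexTypes
    if (supported.isEmpty) throw new UnsupportedOperationException("No index types are supported")
    if (!supported.exists(_.equalsIgnoreCase(value)))
      throw new UnsupportedOperationException(
        s"Index type $value is not supported. Supported types: ${supported.mkString(", ")}")
  }
}
```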


[GitHub] [spark] AmplabJenkins commented on pull request #34821: [SPARK-37558][DOC] Improve spark sql cli document

AmplabJenkins commented on pull request #34821: URL: https://github.com/apache/spark/pull/34821#issuecomment-992182882 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/146123/

[GitHub] [spark] SparkQA removed a comment on pull request #34821: [SPARK-37558][DOC] Improve spark sql cli document

SparkQA removed a comment on pull request #34821: URL: https://github.com/apache/spark/pull/34821#issuecomment-992174522 **[Test build #146123 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/146123/testReport)** for PR 34821 at commit [`a06b166`](https://github.com/apache/spark/commit/a06b1662c61639a5b2babc9f37f2e82dd3789607).

[GitHub] [spark] zhengruifeng commented on a change in pull request #34367: [SPARK-37099][SQL] Impl a rank-based filter to optimize top-k computation

zhengruifeng commented on a change in pull request #34367:

URL: https://github.com/apache/spark/pull/34367#discussion_r767467761

##

File path:

sql/core/src/main/scala/org/apache/spark/sql/execution/window/RankLimitExec.scala

##

@@ -0,0 +1,280 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+

+package org.apache.spark.sql.execution.window

+

+import org.apache.spark.rdd.RDD

+import org.apache.spark.sql.catalyst.InternalRow

+import org.apache.spark.sql.catalyst.expressions._

+import org.apache.spark.sql.catalyst.expressions.codegen._

+import org.apache.spark.sql.catalyst.plans.physical._

+import org.apache.spark.sql.execution._

+import org.apache.spark.sql.execution.metric.SQLMetrics

+import org.apache.spark.util.collection.Utils

+

+

+sealed trait RankLimitMode

+

+case object Partial extends RankLimitMode

+

+case object Final extends RankLimitMode

+

+

+

+/**

+ * This operator is designed to filter out unnecessary rows before WindowExec,

+ * for top-k computation.

+ * @param partitionSpec Should be the same as [[WindowExec#partitionSpec]]

+ * @param orderSpec Should be the same as [[WindowExec#orderSpec]]

+ * @param rankFunction The function to compute row rank, should be RowNumber/Rank/DenseRank.

+ */

+case class RankLimitExec(

+partitionSpec: Seq[Expression],

+orderSpec: Seq[SortOrder],

+rankFunction: Expression,

+limit: Int,

+mode: RankLimitMode,

+child: SparkPlan) extends UnaryExecNode {

+ assert(orderSpec.nonEmpty && limit > 0)

+

+ private val shouldPass = child match {

+case r: RankLimitExec =>

+ partitionSpec.size == r.partitionSpec.size &&

+partitionSpec.zip(r.partitionSpec).forall(p => p._1.semanticEquals(p._2)) &&

+orderSpec.size == r.orderSpec.size &&

+orderSpec.zip(r.orderSpec).forall(o => o._1.semanticEquals(o._2)) &&

+rankFunction.semanticEquals(r.rankFunction) &&

+mode == Final && r.mode == Partial && limit == r.limit

+case _ => false

+ }

+

+ private val shouldApplyTakeOrdered: Boolean = {

+rankFunction match {

+ case _: RowNumber => limit < conf.topKSortFallbackThreshold

+ case _: Rank => false

+ case _: DenseRank => false

+ case f => throw new IllegalArgumentException(s"Unsupported rank function: $f")

+}

+ }

+

+ override def output: Seq[Attribute] = child.output

+

+ override def requiredChildOrdering: Seq[Seq[SortOrder]] = {

+if (shouldApplyTakeOrdered) {

+ Seq(partitionSpec.map(SortOrder(_, Ascending)))

+} else {

+ // Should be the same as [[WindowExec#requiredChildOrdering]]

+ Seq(partitionSpec.map(SortOrder(_, Ascending)) ++ orderSpec)

+}

+ }

+

+ override def outputOrdering: Seq[SortOrder] = {

+partitionSpec.map(SortOrder(_, Ascending)) ++ orderSpec

+ }

+

+ override def requiredChildDistribution: Seq[Distribution] = mode match {

+case Partial => super.requiredChildDistribution

+case Final =>

+ // Should be the same as [[WindowExec#requiredChildDistribution]]

+ if (partitionSpec.isEmpty) {

+AllTuples :: Nil

+ } else ClusteredDistribution(partitionSpec) :: Nil

+ }

+

+ override def outputPartitioning: Partitioning = child.outputPartitioning

+

+ override lazy val metrics = Map(

+"numOutputRows" -> SQLMetrics.createMetric(sparkContext, "number of output rows"))

+

+ private lazy val ordering = GenerateOrdering.generate(orderSpec, output)

+

+ private lazy val limitFunction = rankFunction match {

+case _: RowNumber if shouldApplyTakeOrdered =>

+ (stream: Iterator[InternalRow]) =>

+Utils.takeOrdered(stream.map(_.copy()), limit)(ordering)

Review comment:

```

val TOP_K_SORT_FALLBACK_THRESHOLD =

buildConf("spark.sql.execution.topKSortFallbackThreshold")

.doc("In SQL queries with a SORT followed by a LIMIT like " +

"'SELECT x FROM t ORDER BY y LIMIT m', if m is under this threshold, do a top-K sort" +

" in memory, otherwise do a global sort which spills to disk if necessary.")

.version("2.4.0")

.intConf

.createWithDefault(ByteArrayMethods.MAX_ROUNDED_ARRAY_LENGTH)

```

I think we can still share the same threshold. The problem
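The following sketch makes the three rank functions in the `RankLimitExec` hunk concrete. Within a partition, row_number keeps exactly `limit` rows. Rank and dense_rank may keep more rows because of ties.

This is a plain-collection model, not `RankLimitExec`. It assumes integer sort keys and a single partition. The bounded heap in `topKRowNumber` illustrates the kind of top-k selection a `takeOrdered`-style path can use, since row_number only needs the first `limit` rows. The tie handling for rank and dense_rank is presumably why the hunk's `shouldApplyTakeOrdered` enables that path only for `RowNumber`, but that rationale is an assumption.

```scala
// Hypothetical plain-collection model; not RankLimitExec. One partition, Int sort keys.
object RankLimitSketch {
  sealed trait RankFn
  case object RowNumber extends RankFn
  case object Rank extends RankFn
  case object DenseRank extends RankFn

  // Keeps the rows of one partition whose rank under `fn` is <= limit, in sort order.
  def limitPartition(rows: Seq[Int], fn: RankFn, limit: Int): Seq[Int] = {
    require(limit > 0)
    val sorted = rows.sorted
    fn match {
      case RowNumber => sorted.take(limit)
      // rank = 1 + number of strictly smaller keys = 1 + first index of the key
      case Rank => sorted.filter(v => sorted.indexOf(v) < limit)
      // dense rank counts distinct keys, so keep the first `limit` distinct keys
      case DenseRank =>
        val keep = sorted.distinct.take(limit).toSet
        sorted.filter(keep.contains)
    }
  }

  // row_number only needs the k smallest rows: a bounded max-heap avoids a full sort.
  def topKRowNumber(rows: Iterator[Int], k: Int): Seq[Int] = {
    require(k > 0)
    val heap = scala.collection.mutable.PriorityQueue.empty[Int] // max-heap by default
    rows.foreach { r =>
      if (heap.size < k) heap.enqueue(r)
      else if (r < heap.head) { heap.dequeue(); heap.enqueue(r) } // evict current largest
    }
    heap.toSeq.sorted
  }
}
```

With keys `5, 1, 3, 3, 2` and a limit of 3, row_number keeps `1, 2, 3` while rank keeps `1, 2, 3, 3`.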

[GitHub] [spark] AmplabJenkins removed a comment on pull request #34821: [SPARK-37558][DOC] Improve spark sql cli document

AmplabJenkins removed a comment on pull request #34821: URL: https://github.com/apache/spark/pull/34821#issuecomment-992182882 Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/146123/

[GitHub] [spark] sarutak commented on pull request #34875: [SPARK-37624][PYTHON][DOCS] Suppress warnings for live pandas-on-Spark quickstart notebooks

sarutak commented on pull request #34875: URL: https://github.com/apache/spark/pull/34875#issuecomment-992182720 @xinrong-databricks Oops. Let me check it.

[GitHub] [spark] SparkQA commented on pull request #34821: [SPARK-37558][DOC] Improve spark sql cli document

SparkQA commented on pull request #34821: URL: https://github.com/apache/spark/pull/34821#issuecomment-992182620

**[Test build #146123 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/146123/testReport)** for PR 34821 at commit [`a06b166`](https://github.com/apache/spark/commit/a06b1662c61639a5b2babc9f37f2e82dd3789607).

* This patch passes all tests.
* This patch merges cleanly.
* This patch adds no public classes.

[GitHub] [spark] xinrong-databricks commented on pull request #34875: [SPARK-37624][PYTHON][DOCS] Suppress warnings for live pandas-on-Spark quickstart notebooks

xinrong-databricks commented on pull request #34875: URL: https://github.com/apache/spark/pull/34875#issuecomment-992182008 Hi @sarutak "Binder not found" after clicking the link attached. May I ask which change you applied?

[GitHub] [spark] SparkQA commented on pull request #34878: [SPARK-37626][BUILD] Upgrade libthrift to 0.15.0

SparkQA commented on pull request #34878: URL: https://github.com/apache/spark/pull/34878#issuecomment-992181842 **[Test build #146125 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/146125/testReport)** for PR 34878 at commit [`1b7eb59`](https://github.com/apache/spark/commit/1b7eb598e4361a11e7e89f3d9a53af1b14610ade).

[GitHub] [spark] cloud-fan commented on a change in pull request #34738: [SPARK-37483][SQL] Support push down top N to JDBC data source V2

cloud-fan commented on a change in pull request #34738:

URL: https://github.com/apache/spark/pull/34738#discussion_r767464502

##

File path: sql/core/src/test/scala/org/apache/spark/sql/jdbc/JDBCV2Suite.scala

##

@@ -120,52 +122,124 @@ class JDBCV2Suite extends QueryTest with SharedSparkSession with ExplainSuiteHel
       .table("h2.test.employee")
       .filter($"dept" > 1)
       .limit(1)
-    checkPushedLimit(df2, true, 1)
+    checkPushedLimit(df2, Some(1))
     checkAnswer(df2, Seq(Row(2, "alex", 12000.00, 1200.0)))
     val df3 = sql("SELECT name FROM h2.test.employee WHERE dept > 1 LIMIT 1")
     val scan = df3.queryExecution.optimizedPlan.collectFirst {
       case s: DataSourceV2ScanRelation => s
     }.get
     assert(scan.schema.names.sameElements(Seq("NAME")))
-    checkPushedLimit(df3, true, 1)
+    checkPushedLimit(df3, Some(1))
     checkAnswer(df3, Seq(Row("alex")))
     val df4 = spark.read
       .table("h2.test.employee")
       .groupBy("DEPT").sum("SALARY")
       .limit(1)
-    checkPushedLimit(df4, false, 0)
+    checkPushedLimit(df4, None)
     checkAnswer(df4, Seq(Row(1, 19000.00)))
+    val name = udf { (x: String) => x.matches("cat|dav|amy") }
+    val sub = udf { (x: String) => x.substring(0, 3) }
     val df5 = spark.read
       .table("h2.test.employee")
-      .sort("SALARY")
+      .select($"SALARY", $"BONUS", sub($"NAME").as("shortName"))
+      .filter(name($"shortName"))
+      .limit(1)
+    // LIMIT is pushed down only if all the filters are pushed down
+    checkPushedLimit(df5, None)
+    checkAnswer(df5, Seq(Row(1.00, 1000.0, "amy")))
+  }
+
+  private def checkPushedLimit(df: DataFrame, limit: Option[Int]): Unit = {

Review comment:

Shall we merge `checkPushedTopN` into this? `def checkPushedLimit(df: DataFrame, limit: Option[Int], sortValues: Seq[SortValue] = Nil)`
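The test diff above expects a pushed limit for `df2` and `df3`, but `None` for the aggregate (`df4`) and for the UDF-filtered `df5`, and the review proposes a single checker with a defaulted `sortValues` parameter. A toy model of both ideas follows. Every name in it (`Plan`, `Scan`, `remainingFilters`, `findScan`, `LimitPushdownSketch`) is a hypothetical simplification rather than Spark's `LogicalPlan`, `ScanBuilderHolder` or `SortValue`; sort keys are plain strings, and the sketch assumes the source always accepts a pushed limit or top-N, whereas the `pushDownLimit` rule in PR #34738 only records the limit when `PushDownUtils.pushLimit` or `pushTopN` reports success.

```scala
// Hypothetical, heavily simplified plan nodes; not Spark's classes.
sealed trait Plan
// `remainingFilters` models filters the source could NOT accept (e.g. UDF predicates).
final case class Scan(
    table: String,
    remainingFilters: Seq[String] = Nil,
    pushedLimit: Option[Int] = None,
    pushedSort: Seq[String] = Nil) extends Plan
final case class Sort(keys: Seq[String], child: Plan) extends Plan
final case class Project(columns: Seq[String], child: Plan) extends Plan
final case class Aggregate(groupBy: Seq[String], child: Plan) extends Plan

object LimitPushdownSketch {
  // `plan` is the child of a LIMIT node; returns it with the limit pushed where possible.
  def pushDownLimit(plan: Plan, limit: Int): Plan = plan match {
    // A scan with no leftover filters: push the limit into it.
    case s: Scan if s.remainingFilters.isEmpty =>
      s.copy(pushedLimit = Some(limit))
    // Sort over such a scan: push limit and sort keys together as a top-N.
    case Sort(keys, s: Scan) if s.remainingFilters.isEmpty =>
      s.copy(pushedLimit = Some(limit), pushedSort = keys)
    // A projection does not change the row count, so look through it.
    case p: Project =>
      p.copy(child = pushDownLimit(p.child, limit))
    // Leftover filters, aggregates, etc. block the pushdown.
    case other => other
  }

  // Locate the scan underneath the simplified nodes.
  def findScan(plan: Plan): Option[Scan] = plan match {
    case s: Scan         => Some(s)
    case Sort(_, c)      => findScan(c)
    case Project(_, c)   => findScan(c)
    case Aggregate(_, c) => findScan(c)
  }

  // One checker for both cases: limit-only pushdown leaves `sortValues` at its default Nil.
  def checkPushedLimit(plan: Plan, limit: Option[Int], sortValues: Seq[String] = Nil): Unit = {
    val scan = findScan(plan).getOrElse(throw new AssertionError("no scan in plan"))
    assert(scan.pushedLimit == limit, s"pushed limit: expected $limit, got ${scan.pushedLimit}")
    assert(scan.pushedSort == sortValues, s"pushed sort: expected $sortValues, got ${scan.pushedSort}")
  }
}
```

The default argument keeps existing limit-only call sites such as `checkPushedLimit(df4, None)` unchanged while top-N tests pass the expected sort order explicitly.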


[GitHub] [spark] bozhang2820 opened a new pull request #34878: [SPARK-37626][BUILD] Upgrade libthrift to 0.15.0

bozhang2820 opened a new pull request #34878: URL: https://github.com/apache/spark/pull/34878

### What changes were proposed in this pull request?

This PR upgrades libthrift from 0.12.0 to 0.15.0.

### Why are the changes needed?

This is to avoid https://nvd.nist.gov/vuln/detail/CVE-2020-13949.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Will rely on PR testing.

[GitHub] [spark] cloud-fan commented on a change in pull request #34738: [SPARK-37483][SQL] Support push down top N to JDBC data source V2

cloud-fan commented on a change in pull request #34738:

URL: https://github.com/apache/spark/pull/34738#discussion_r767463616

##

File path: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/v2/V2ScanRelationPushDown.scala

##

@@ -246,16 +247,42 @@ object V2ScanRelationPushDown extends Rule[LogicalPlan] with PredicateHelper {
     }
   }
+  private def pushDownLimit(plan: LogicalPlan, limit: Int): LogicalPlan = plan match {
+    case operation @ ScanOperation(_, filter, sHolder: ScanBuilderHolder) if filter.isEmpty =>
+      val limitPushed = PushDownUtils.pushLimit(sHolder.builder, limit)
+      if (limitPushed) {
+        sHolder.pushedLimit = Some(limit)
+      }
+      operation
+    case s @ Sort(order, _, operation @ ScanOperation(_, filter, sHolder: ScanBuilderHolder))
+      if filter.isEmpty =>
+      val orders = DataSourceStrategy.translateSortOrders(order)
+      val topNPushed = PushDownUtils.pushTopN(sHolder.builder, orders.toArray, limit)
+      if (topNPushed) {
+        sHolder.pushedLimit = Some(limit)
+        sHolder.sortValues = orders
+        operation
+      } else {
+        s
+      }
+    case p: Project =>
+      val newChild = pushDownLimit(p.child, limit)
+      if (newChild == p.child) {
+        p
+      } else {
+        p.copy(child = newChild)
+      }
+    case other => other