[GitHub] [spark] HyukjinKwon commented on pull request #39947: [SPARK-40453][SPARK-41715][CONNECT] Take super class into account when throwing an exception

HyukjinKwon commented on PR #39947: URL: https://github.com/apache/spark/pull/39947#issuecomment-1423774647 cc @xinrong-meng @grundprinzip @ueshin @zhengruifeng PTAL -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a diff in pull request #39947: [SPARK-40453][SPARK-41715][CONNECT] Take super class into account when throwing an exception

HyukjinKwon commented on code in PR #39947:

URL: https://github.com/apache/spark/pull/39947#discussion_r1101076905

##

python/pyspark/errors/exceptions/connect.py:

##

@@ -61,41 +97,34 @@ class AnalysisException(SparkConnectGrpcException,

BaseAnalysisException):

Failed to analyze a SQL query plan from Spark Connect server.

"""

-def __init__(

-self,

-message: Optional[str] = None,

-error_class: Optional[str] = None,

-message_parameters: Optional[Dict[str, str]] = None,

-plan: Optional[str] = None,

-reason: Optional[str] = None,

-) -> None:

-self.message = message # type: ignore[assignment]

-if plan is not None:

-self.message = f"{self.message}\nPlan: {plan}"

Review Comment:

Example:

```python

spark.range(1).select("a").show()

```

```

Traceback (most recent call last):

File "", line 1, in

File "/.../spark/python/pyspark/sql/connect/dataframe.py", line 776, in

show

print(self._show_string(n, truncate, vertical))

File "/.../spark/python/pyspark/sql/connect/dataframe.py", line 619, in

_show_string

pdf = DataFrame.withPlan(

File "/.../spark/python/pyspark/sql/connect/dataframe.py", line 1325, in

toPandas

return self._session.client.to_pandas(query)

File "/.../spark/python/pyspark/sql/connect/client.py", line 449, in

to_pandas

table, metrics = self._execute_and_fetch(req)

File "/.../spark/python/pyspark/sql/connect/client.py", line 636, in

_execute_and_fetch

self._handle_error(rpc_error)

File "/.../spark/python/pyspark/sql/connect/client.py", line 670, in

_handle_error

raise convert_exception(info, status.message) from None

pyspark.errors.exceptions.connect.AnalysisException:

[UNRESOLVED_COLUMN.WITH_SUGGESTION] A column or function parameter with name

`a` cannot be resolved. Did you mean one of the following? [`id`].;

'Project ['a]

+- Range (0, 1, step=1, splits=Some(16))

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a diff in pull request #39947: [SPARK-40453][SPARK-41715][CONNECT] Take super class into account when throwing an exception

HyukjinKwon commented on code in PR #39947:

URL: https://github.com/apache/spark/pull/39947#discussion_r1101075312

##

connector/connect/server/src/main/scala/org/apache/spark/sql/connect/service/SparkConnectService.scala:

##

@@ -83,27 +107,19 @@ class SparkConnectService(debug: Boolean)

private def handleError[V](

opType: String,

observer: StreamObserver[V]): PartialFunction[Throwable, Unit] = {

-case ae: AnalysisException =>

- logError(s"Error during: $opType", ae)

- val status = RPCStatus

-.newBuilder()

-.setCode(RPCCode.INTERNAL_VALUE)

-.addDetails(

- ProtoAny.pack(

-ErrorInfo

- .newBuilder()

- .setReason(ae.getClass.getName)

- .setDomain("org.apache.spark")

- .putMetadata("message", ae.getSimpleMessage)

- .putMetadata("plan", Option(ae.plan).flatten.map(p =>

s"$p").getOrElse(""))

- .build()))

-.setMessage(ae.getLocalizedMessage)

-.build()

- observer.onError(StatusProto.toStatusRuntimeException(status))

+case se: SparkException

+if se.getCause != null && se.getCause

+ .isInstanceOf[PythonException] && se.getCause.getStackTrace

+

.exists(_.toString.contains("org.apache.spark.sql.execution.python")) =>

+ // Python UDF execution

+ logError(s"Error during: $opType", se)

+ observer.onError(

+

StatusProto.toStatusRuntimeException(buildStatusFromThrowable(se.getCause)))

Review Comment:

Example:

```python

from pyspark.sql.functions import udf

@udf

def aa(a):

1/0

spark.range(1).select(aa("id")).show()

```

```

Traceback (most recent call last):

File "", line 1, in

File "/.../spark/python/pyspark/sql/connect/dataframe.py", line 776, in

show

print(self._show_string(n, truncate, vertical))

File "/.../spark/python/pyspark/sql/connect/dataframe.py", line 619, in

_show_string

pdf = DataFrame.withPlan(

File "/.../spark/python/pyspark/sql/connect/dataframe.py", line 1325, in

toPandas

return self._session.client.to_pandas(query)

File "/.../spark/python/pyspark/sql/connect/client.py", line 449, in

to_pandas

table, metrics = self._execute_and_fetch(req)

File "/.../spark/python/pyspark/sql/connect/client.py", line 636, in

_execute_and_fetch

self._handle_error(rpc_error)

File "/.../spark/python/pyspark/sql/connect/client.py", line 670, in

_handle_error

raise convert_exception(info, status.message) from None

pyspark.errors.exceptions.connect.PythonException:

An exception was thrown from the Python worker. Please see the stack trace

below.

Traceback (most recent call last):

File "", line 3, in aa

ZeroDivisionError: division by zero

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a diff in pull request #39947: [SPARK-40453][SPARK-41715][CONNECT] Take super class into account when throwing an exception

HyukjinKwon commented on code in PR #39947:

URL: https://github.com/apache/spark/pull/39947#discussion_r1101075312

##

connector/connect/server/src/main/scala/org/apache/spark/sql/connect/service/SparkConnectService.scala:

##

@@ -83,27 +107,19 @@ class SparkConnectService(debug: Boolean)

private def handleError[V](

opType: String,

observer: StreamObserver[V]): PartialFunction[Throwable, Unit] = {

-case ae: AnalysisException =>

- logError(s"Error during: $opType", ae)

- val status = RPCStatus

-.newBuilder()

-.setCode(RPCCode.INTERNAL_VALUE)

-.addDetails(

- ProtoAny.pack(

-ErrorInfo

- .newBuilder()

- .setReason(ae.getClass.getName)

- .setDomain("org.apache.spark")

- .putMetadata("message", ae.getSimpleMessage)

- .putMetadata("plan", Option(ae.plan).flatten.map(p =>

s"$p").getOrElse(""))

- .build()))

-.setMessage(ae.getLocalizedMessage)

-.build()

- observer.onError(StatusProto.toStatusRuntimeException(status))

+case se: SparkException

+if se.getCause != null && se.getCause

+ .isInstanceOf[PythonException] && se.getCause.getStackTrace

+

.exists(_.toString.contains("org.apache.spark.sql.execution.python")) =>

+ // Python UDF execution

+ logError(s"Error during: $opType", se)

+ observer.onError(

+

StatusProto.toStatusRuntimeException(buildStatusFromThrowable(se.getCause)))

Review Comment:

Example:

```

Traceback (most recent call last):

File "", line 1, in

File "/.../spark/python/pyspark/sql/connect/dataframe.py", line 776, in

show

print(self._show_string(n, truncate, vertical))

File "/.../spark/python/pyspark/sql/connect/dataframe.py", line 619, in

_show_string

pdf = DataFrame.withPlan(

File "/.../spark/python/pyspark/sql/connect/dataframe.py", line 1325, in

toPandas

return self._session.client.to_pandas(query)

File "/.../spark/python/pyspark/sql/connect/client.py", line 449, in

to_pandas

table, metrics = self._execute_and_fetch(req)

File "/.../spark/python/pyspark/sql/connect/client.py", line 636, in

_execute_and_fetch

self._handle_error(rpc_error)

File "/.../spark/python/pyspark/sql/connect/client.py", line 670, in

_handle_error

raise convert_exception(info, status.message) from None

pyspark.errors.exceptions.connect.PythonException:

An exception was thrown from the Python worker. Please see the stack trace

below.

Traceback (most recent call last):

File "", line 3, in aa

ZeroDivisionError: division by zero

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] panbingkun commented on pull request #39865: [SPARK-42052][SQL] Codegen Support for HiveSimpleUDF

panbingkun commented on PR #39865: URL: https://github.com/apache/spark/pull/39865#issuecomment-1423716592 > Hm, I think we should better go and figure out to resolve this by using `Invoke` so we don't have to manually implement the codegen logic. I was fine with #39555 as a one time thing but seems like there are some more to fix. As the first step, I have submitted a new Pr(https://github.com/apache/spark/pull/39949) to rewrite HiveGenericUDF with Invoke. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] panbingkun opened a new pull request, #39949: [SPARK-42386][SQL] Rewrite HiveGenericUDF with Invoke

panbingkun opened a new pull request, #39949: URL: https://github.com/apache/spark/pull/39949 ### What changes were proposed in this pull request? ### Why are the changes needed? ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on a diff in pull request #39941: [MINOR][DOCS] Add link to Hadoop docs

itholic commented on code in PR #39941: URL: https://github.com/apache/spark/pull/39941#discussion_r1101026968 ## docs/rdd-programming-guide.md: ## @@ -442,7 +442,7 @@ Apart from text files, Spark's Python API also supports several other data forma **Writable Support** -PySpark SequenceFile support loads an RDD of key-value pairs within Java, converts Writables to base Java types, and pickles the +PySpark SequenceFile support loads an RDD of key-value pairs within Java, converts [Writables](https://hadoop.apache.org/docs/current/api/org/apache/hadoop/io/Writable.html) to base Java types, and pickles the Review Comment: Sounds good! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] chaoqin-li1123 commented on pull request #39897: [WIP][SPARK-42353][SS] Cleanup orphan sst and log files in RocksDB checkpoint directory

chaoqin-li1123 commented on PR #39897: URL: https://github.com/apache/spark/pull/39897#issuecomment-1423698887 @rangadi -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] chaoqin-li1123 commented on a diff in pull request #39897: [WIP][SPARK-42353][SS] Cleanup orphan sst and log files in RocksDB checkpoint directory

chaoqin-li1123 commented on code in PR #39897:

URL: https://github.com/apache/spark/pull/39897#discussion_r1101025106

##

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/state/RocksDBFileManager.scala:

##

@@ -269,29 +306,46 @@ class RocksDBFileManager(

s"$numVersionsToRetain versions")

// Resolve RocksDB files for all the versions and find the max version

each file is used

-val fileToMaxUsedVersion = new mutable.HashMap[RocksDBImmutableFile, Long]

+val fileToMaxUsedVersion = new mutable.HashMap[String, Long]

sortedVersions.foreach { version =>

val files = Option(versionToRocksDBFiles.get(version)).getOrElse {

val newResolvedFiles = getImmutableFilesFromVersionZip(version)

versionToRocksDBFiles.put(version, newResolvedFiles)

newResolvedFiles

}

- files.foreach(f => fileToMaxUsedVersion(f) = version)

+ files.foreach(f => fileToMaxUsedVersion(f.dfsFileName) =

+math.max(version, fileToMaxUsedVersion.getOrElse(f.dfsFileName,

version)))

}

// Best effort attempt to delete SST files that were last used in

to-be-deleted versions

val filesToDelete = fileToMaxUsedVersion.filter { case (_, v) =>

versionsToDelete.contains(v) }

+

+val sstDir = new Path(dfsRootDir,

RocksDBImmutableFile.SST_FILES_DFS_SUBDIR)

+val logDir = new Path(dfsRootDir,

RocksDBImmutableFile.LOG_FILES_DFS_SUBDIR)

+val allSstFiles = if (fm.exists(sstDir)) fm.list(sstDir).toSeq else

Seq.empty

+val allLogFiles = if (fm.exists(logDir)) fm.list(logDir).toSeq else

Seq.empty

+filesToDelete ++= findOrphanFiles(fileToMaxUsedVersion.keys.toSeq,

allSstFiles ++ allLogFiles)

+ .map(_ -> -1L)

logInfo(s"Deleting ${filesToDelete.size} files not used in versions >=

$minVersionToRetain")

var failedToDelete = 0

-filesToDelete.foreach { case (file, maxUsedVersion) =>

+filesToDelete.foreach { case (dfsFileName, maxUsedVersion) =>

try {

-val dfsFile = dfsFilePath(file.dfsFileName)

+val dfsFile = dfsFilePath(dfsFileName)

fm.delete(dfsFile)

-logDebug(s"Deleted file $file that was last used in version

$maxUsedVersion")

+if (maxUsedVersion == -1) {

+ logDebug(s"Deleted orphan file $dfsFileName")

+} else {

+ logDebug(s"Deleted file $dfsFileName that was last used in version

$maxUsedVersion")

+}

+logDebug(s"Deleted file $dfsFileName that was last used in version

$maxUsedVersion")

Review Comment:

Thanks, fixed.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on pull request #39937: [SPARK-42309][SQL] Assign name to _LEGACY_ERROR_TEMP_1204

itholic commented on PR #39937: URL: https://github.com/apache/spark/pull/39937#issuecomment-1423666280 @MaxGekk Just updated the structure to apply sub-error classes per each `addError`. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] db-scnakandala commented on a diff in pull request #39722: [SPARK-42162] Introduce MultiCommutativeOp expression as a memory optimization for canonicalizing large trees of commutative

db-scnakandala commented on code in PR #39722:

URL: https://github.com/apache/spark/pull/39722#discussion_r1101002739

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/arithmetic.scala:

##

@@ -632,7 +636,12 @@ case class Multiply(

override lazy val canonicalized: Expression = {

// TODO: do not reorder consecutive `Multiply`s with different `evalMode`

-orderCommutative({ case Multiply(l, r, _) => Seq(l, r)

}).reduce(Multiply(_, _, evalMode))

+val reorderResult = buildCanonicalizedPlan(

+ { case Multiply(l, r, _) => Seq(l, r) }

+ { case (l: Expression, r: Expression) => Multiply(l, r, evalMode)},

+ Some(evalMode)

+)

+reorderResult

Review Comment:

done.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #39722: [SPARK-42162] Introduce MultiCommutativeOp expression as a memory optimization for canonicalizing large trees of commutative expr

cloud-fan commented on code in PR #39722:

URL: https://github.com/apache/spark/pull/39722#discussion_r1101001554

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/arithmetic.scala:

##

@@ -479,8 +480,11 @@ case class Add(

override lazy val canonicalized: Expression = {

// TODO: do not reorder consecutive `Add`s with different `evalMode`

-val reorderResult =

- orderCommutative({ case Add(l, r, _) => Seq(l, r) }).reduce(Add(_, _,

evalMode))

+val reorderResult = buildCanonicalizedPlan(

+ { case Add(l, r, _) => Seq(l, r) },

+ { case (l: Expression, r: Expression) => Add(l, r, evalMode)},

+ Some(evalMode)

+)

if (resolved && reorderResult.resolved && reorderResult.dataType ==

dataType) {

Review Comment:

not related to this PR, @gengliangwang do we have a followup to apply this

fix for multiply?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #39722: [SPARK-42162] Introduce MultiCommutativeOp expression as a memory optimization for canonicalizing large trees of commutative expr

cloud-fan commented on code in PR #39722:

URL: https://github.com/apache/spark/pull/39722#discussion_r1101000846

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/arithmetic.scala:

##

@@ -632,7 +636,12 @@ case class Multiply(

override lazy val canonicalized: Expression = {

// TODO: do not reorder consecutive `Multiply`s with different `evalMode`

-orderCommutative({ case Multiply(l, r, _) => Seq(l, r)

}).reduce(Multiply(_, _, evalMode))

+val reorderResult = buildCanonicalizedPlan(

+ { case Multiply(l, r, _) => Seq(l, r) }

+ { case (l: Expression, r: Expression) => Multiply(l, r, evalMode)},

+ Some(evalMode)

+)

+reorderResult

Review Comment:

```suggestion

buildCanonicalizedPlan(

{ case Multiply(l, r, _) => Seq(l, r) }

{ case (l: Expression, r: Expression) => Multiply(l, r, evalMode)},

Some(evalMode)

)

```

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/arithmetic.scala:

##

@@ -632,7 +636,12 @@ case class Multiply(

override lazy val canonicalized: Expression = {

// TODO: do not reorder consecutive `Multiply`s with different `evalMode`

-orderCommutative({ case Multiply(l, r, _) => Seq(l, r)

}).reduce(Multiply(_, _, evalMode))

+val reorderResult = buildCanonicalizedPlan(

+ { case Multiply(l, r, _) => Seq(l, r) }

+ { case (l: Expression, r: Expression) => Multiply(l, r, evalMode)},

+ Some(evalMode)

+)

+reorderResult

Review Comment:

same for other places.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang opened a new pull request, #39948: [SPARK-42385][BUILD] Upgrade RoaringBitmap to 0.9.39

LuciferYang opened a new pull request, #39948: URL: https://github.com/apache/spark/pull/39948 ### What changes were proposed in this pull request? This pr aims upgrade RoaringBitmap 0.9.39 ### Why are the changes needed? This version bring a bug fix: - https://github.com/RoaringBitmap/RoaringBitmap/pull/614 other changes as follows: https://github.com/RoaringBitmap/RoaringBitmap/compare/0.9.38...0.9.39 ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Pass GitHub Actions -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a diff in pull request #39947: [SPARK-40453][SPARK-41715][CONNECT] Take super class into account when throwing an exception

HyukjinKwon commented on code in PR #39947:

URL: https://github.com/apache/spark/pull/39947#discussion_r1100994819

##

python/pyspark/errors/exceptions/connect.py:

##

@@ -61,41 +97,34 @@ class AnalysisException(SparkConnectGrpcException,

BaseAnalysisException):

Failed to analyze a SQL query plan from Spark Connect server.

"""

-def __init__(

-self,

-message: Optional[str] = None,

-error_class: Optional[str] = None,

-message_parameters: Optional[Dict[str, str]] = None,

-plan: Optional[str] = None,

-reason: Optional[str] = None,

-) -> None:

-self.message = message # type: ignore[assignment]

-if plan is not None:

-self.message = f"{self.message}\nPlan: {plan}"

Review Comment:

The original `AnalysisException.getMessage` contains the string

representation of the underlying plan.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a diff in pull request #39947: [SPARK-40453][SPARK-41715][CONNECT] Take super class into account when throwing an exception

HyukjinKwon commented on code in PR #39947:

URL: https://github.com/apache/spark/pull/39947#discussion_r1100994134

##

connector/connect/server/src/main/scala/org/apache/spark/sql/connect/service/SparkConnectService.scala:

##

@@ -53,19 +59,37 @@ class SparkConnectService(debug: Boolean)

extends SparkConnectServiceGrpc.SparkConnectServiceImplBase

with Logging {

- private def buildStatusFromThrowable[A <: Throwable with SparkThrowable](st:

A): RPCStatus = {

-val t = Option(st.getCause).getOrElse(st)

+ private def allClasses(cl: Class[_]): Seq[Class[_]] = {

+val classes = ArrayBuffer.empty[Class[_]]

+if (cl != null && cl != classOf[java.lang.Object]) {

+ classes.append(cl) // Includes itself.

+}

+

+@tailrec

+def appendSuperClasses(clazz: Class[_]): Unit = {

+ if (clazz == null || clazz == classOf[java.lang.Object]) return

+ classes.append(clazz.getSuperclass)

+ appendSuperClasses(clazz.getSuperclass)

+}

+

+appendSuperClasses(cl)

+classes

+ }

+

+ private def buildStatusFromThrowable(st: Throwable): RPCStatus = {

RPCStatus

.newBuilder()

.setCode(RPCCode.INTERNAL_VALUE)

.addDetails(

ProtoAny.pack(

ErrorInfo

.newBuilder()

-.setReason(t.getClass.getName)

+.setReason(st.getClass.getName)

.setDomain("org.apache.spark")

+.putMetadata("message", StringUtils.abbreviate(st.getMessage,

2048))

Review Comment:

Otherwise, it complains the header length (8KiB limit). It can be configured

below via `NettyChannelBuilder.maxInboundMessageSize` but I didn't change it

here, see also https://stackoverflow.com/a/686243

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a diff in pull request #39897: [WIP][SPARK-42353][SS] Cleanup orphan sst and log files in RocksDB checkpoint directory

HeartSaVioR commented on code in PR #39897:

URL: https://github.com/apache/spark/pull/39897#discussion_r1100978171

##

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/state/RocksDBFileManager.scala:

##

@@ -269,29 +306,46 @@ class RocksDBFileManager(

s"$numVersionsToRetain versions")

// Resolve RocksDB files for all the versions and find the max version

each file is used

-val fileToMaxUsedVersion = new mutable.HashMap[RocksDBImmutableFile, Long]

+val fileToMaxUsedVersion = new mutable.HashMap[String, Long]

sortedVersions.foreach { version =>

val files = Option(versionToRocksDBFiles.get(version)).getOrElse {

val newResolvedFiles = getImmutableFilesFromVersionZip(version)

versionToRocksDBFiles.put(version, newResolvedFiles)

newResolvedFiles

}

- files.foreach(f => fileToMaxUsedVersion(f) = version)

+ files.foreach(f => fileToMaxUsedVersion(f.dfsFileName) =

+math.max(version, fileToMaxUsedVersion.getOrElse(f.dfsFileName,

version)))

}

// Best effort attempt to delete SST files that were last used in

to-be-deleted versions

val filesToDelete = fileToMaxUsedVersion.filter { case (_, v) =>

versionsToDelete.contains(v) }

+

+val sstDir = new Path(dfsRootDir,

RocksDBImmutableFile.SST_FILES_DFS_SUBDIR)

+val logDir = new Path(dfsRootDir,

RocksDBImmutableFile.LOG_FILES_DFS_SUBDIR)

+val allSstFiles = if (fm.exists(sstDir)) fm.list(sstDir).toSeq else

Seq.empty

+val allLogFiles = if (fm.exists(logDir)) fm.list(logDir).toSeq else

Seq.empty

+filesToDelete ++= findOrphanFiles(fileToMaxUsedVersion.keys.toSeq,

allSstFiles ++ allLogFiles)

+ .map(_ -> -1L)

logInfo(s"Deleting ${filesToDelete.size} files not used in versions >=

$minVersionToRetain")

var failedToDelete = 0

-filesToDelete.foreach { case (file, maxUsedVersion) =>

+filesToDelete.foreach { case (dfsFileName, maxUsedVersion) =>

try {

-val dfsFile = dfsFilePath(file.dfsFileName)

+val dfsFile = dfsFilePath(dfsFileName)

fm.delete(dfsFile)

-logDebug(s"Deleted file $file that was last used in version

$maxUsedVersion")

+if (maxUsedVersion == -1) {

+ logDebug(s"Deleted orphan file $dfsFileName")

+} else {

+ logDebug(s"Deleted file $dfsFileName that was last used in version

$maxUsedVersion")

+}

+logDebug(s"Deleted file $dfsFileName that was last used in version

$maxUsedVersion")

Review Comment:

nit: remove?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] db-scnakandala commented on a diff in pull request #39722: [SPARK-42162] Introduce MultiCommutativeOp expression as a memory optimization for canonicalizing large trees of commutative

db-scnakandala commented on code in PR #39722:

URL: https://github.com/apache/spark/pull/39722#discussion_r1100974436

##

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala:

##

@@ -240,6 +240,15 @@ object SQLConf {

.intConf

.createWithDefault(100)

+ val MULTI_COMMUTATIVE_OP_OPT_THRESHOLD =

+

buildConf("spark.sql.analyzer.canonicalization.multiCommutativeOpMemoryOptThreshold")

+ .internal()

+ .doc("The minimum number of consecutive non-commutative operands to" +

Review Comment:

Changed it to `The minimum number of operands in a commutative expression

tree`

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #39722: [SPARK-42162] Introduce MultiCommutativeOp expression as a memory optimization for canonicalizing large trees of commutative expr

cloud-fan commented on code in PR #39722:

URL: https://github.com/apache/spark/pull/39722#discussion_r1100956662

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/Expression.scala:

##

@@ -1335,3 +1335,72 @@ trait CommutativeExpression extends Expression {

f: PartialFunction[CommutativeExpression, Seq[Expression]]):

Seq[Expression] =

gatherCommutative(this, f).sortBy(_.hashCode())

}

+

+/**

+ * A helper class used by the Commutative expressions during canonicalization.

During

+ * canonicalization, when we have a long tree of commutative operations, we

use the MultiCommutative

+ * expression to represent that tree instead of creating new commutative

objects.

+ * This class is added as a memory optimization for processing large

commutative operation trees

+ * without creating a large number of new intermediate objects.

+ * The MultiCommutativeOp memory optimization is applied to the following

commutative

+ * expressions:

+ * Add, Multiply, And, Or, BitwiseAnd, BitwiseOr, BitwiseXor.

+ * @param operands A sequence of operands that produces a commutative

expression tree.

+ * @param opCls The class of the root operator of the expression tree.

+ * @param evalMode The optional expression evaluation mode.

+ * @param originalRoot Root operator of the commutative expression tree before

canonicalization.

+ * This object reference is used to deduce the return

dataType of Add and

+ * Multiply operations when the input datatype is decimal.

+ */

+case class MultiCommutativeOp(

+operands: Seq[Expression],

+opCls: Class[_],

+evalMode: Option[EvalMode.Value])(originalRoot: Expression) extends

Unevaluable {

+ // Helper method to deduce the data type of a single operation.

+ private def singleOpDataType(lType: DataType, rType: DataType): DataType = {

+originalRoot match {

+ case add: Add =>

+(lType, rType) match {

+ case (DecimalType.Fixed(p1, s1), DecimalType.Fixed(p2, s2)) =>

+add.resultDecimalType(p1, s1, p2, s2)

+ case _ => lType

+}

+ case multiply: Multiply =>

+(lType, rType) match {

+ case (DecimalType.Fixed(p1, s1), DecimalType.Fixed(p2, s2)) =>

+multiply.resultDecimalType(p1, s1, p2, s2)

+ case _ => lType

+}

+}

+ }

+

+ /**

+ * Returns the [[DataType]] of the result of evaluating this expression. It

is

+ * invalid to query the dataType of an unresolved expression (i.e., when

`resolved` == false).

+ */

+ override def dataType: DataType = {

+originalRoot match {

+ case _: Add | _: Multiply =>

+operands.map(_.dataType).reduce((l, r) => singleOpDataType(l, r))

+ case other => other.dataType

+}

+ }

+

+ /**

+ * Returns whether this node is nullable. This node is nullable if any of

its children is

+ * nullable.

+ */

+ override def nullable: Boolean = operands.exists(_.nullable)

+

+ /**

+ * Returns a Seq of the children of this node.

+ * Children should not change. Immutability required for containsChild

optimization

+ */

+ override def children: Seq[Expression] = operands

+

Review Comment:

how about providing a util function in this trait so that sub-classes is

easier to do canonicalization?

```

def buildCanonicalizedPlan(collectOperands: PartialFunction[Expression,

Seq[Expression]], buildBinaryOp: (Expression, Expression) => Expression) = ...

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #39722: [SPARK-42162] Introduce MultiCommutativeOp expression as a memory optimization for canonicalizing large trees of commutative expr

cloud-fan commented on code in PR #39722:

URL: https://github.com/apache/spark/pull/39722#discussion_r1100955001

##

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala:

##

@@ -240,6 +240,15 @@ object SQLConf {

.intConf

.createWithDefault(100)

+ val MULTI_COMMUTATIVE_OP_OPT_THRESHOLD =

+

buildConf("spark.sql.analyzer.canonicalization.multiCommutativeOpMemoryOptThreshold")

+ .internal()

+ .doc("The minimum number of consecutive non-commutative operands to" +

Review Comment:

non-commutative?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #39722: [SPARK-42162] Introduce MultiCommutativeOp expression as a memory optimization for canonicalizing large trees of commutative expr

cloud-fan commented on code in PR #39722:

URL: https://github.com/apache/spark/pull/39722#discussion_r1100954235

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/Expression.scala:

##

@@ -1335,3 +1335,72 @@ trait CommutativeExpression extends Expression {

f: PartialFunction[CommutativeExpression, Seq[Expression]]):

Seq[Expression] =

gatherCommutative(this, f).sortBy(_.hashCode())

}

+

+/**

+ * A helper class used by the Commutative expressions during canonicalization.

During

+ * canonicalization, when we have a long tree of commutative operations, we

use the MultiCommutative

+ * expression to represent that tree instead of creating new commutative

objects.

+ * This class is added as a memory optimization for processing large

commutative operation trees

+ * without creating a large number of new intermediate objects.

+ * The MultiCommutativeOp memory optimization is applied to the following

commutative

+ * expressions:

+ * Add, Multiply, And, Or, BitwiseAnd, BitwiseOr, BitwiseXor.

+ * @param operands A sequence of operands that produces a commutative

expression tree.

+ * @param opCls The class of the root operator of the expression tree.

+ * @param evalMode The optional expression evaluation mode.

+ * @param originalRoot Root operator of the commutative expression tree before

canonicalization.

+ * This object reference is used to deduce the return

dataType of Add and

+ * Multiply operations when the input datatype is decimal.

+ */

+case class MultiCommutativeOp(

+operands: Seq[Expression],

+opCls: Class[_],

+evalMode: Option[EvalMode.Value])(originalRoot: Expression) extends

Unevaluable {

+ // Helper method to deduce the data type of a single operation.

+ private def singleOpDataType(lType: DataType, rType: DataType): DataType = {

+originalRoot match {

+ case add: Add =>

+(lType, rType) match {

+ case (DecimalType.Fixed(p1, s1), DecimalType.Fixed(p2, s2)) =>

+add.resultDecimalType(p1, s1, p2, s2)

+ case _ => lType

+}

+ case multiply: Multiply =>

+(lType, rType) match {

+ case (DecimalType.Fixed(p1, s1), DecimalType.Fixed(p2, s2)) =>

+multiply.resultDecimalType(p1, s1, p2, s2)

+ case _ => lType

+}

+}

+ }

+

+ /**

+ * Returns the [[DataType]] of the result of evaluating this expression. It

is

+ * invalid to query the dataType of an unresolved expression (i.e., when

`resolved` == false).

Review Comment:

nit: if you look at other sub-classes of `Expression`, we don't repeat the

api doc in the override methods.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon opened a new pull request, #39947: [SPARK-40453][SPARK-41715][CONNECT] Take super class into account when throwing an exception

HyukjinKwon opened a new pull request, #39947: URL: https://github.com/apache/spark/pull/39947 ### What changes were proposed in this pull request? This PR proposes to take the super classes into account when throwing an exception from the server to Python side by adding more metadata of classes, causes and traceback in JVM. In addition, this PR matches the exceptions being thrown to the regular PySpark exceptions defined: https://github.com/apache/spark/blob/04550edd49ee587656d215e59d6a072772d7d5ec/python/pyspark/errors/exceptions/captured.py#L108-L147 ### Why are the changes needed? Right now, many exceptions cannot be handled (e.g., `NoSuchDatabaseException` that inherits `AnalysisException`) in Python side. ### Does this PR introduce _any_ user-facing change? No to end users. Yes, it matches the exceptions to the regular PySpark exceptions. ### How was this patch tested? Unittests fixed. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

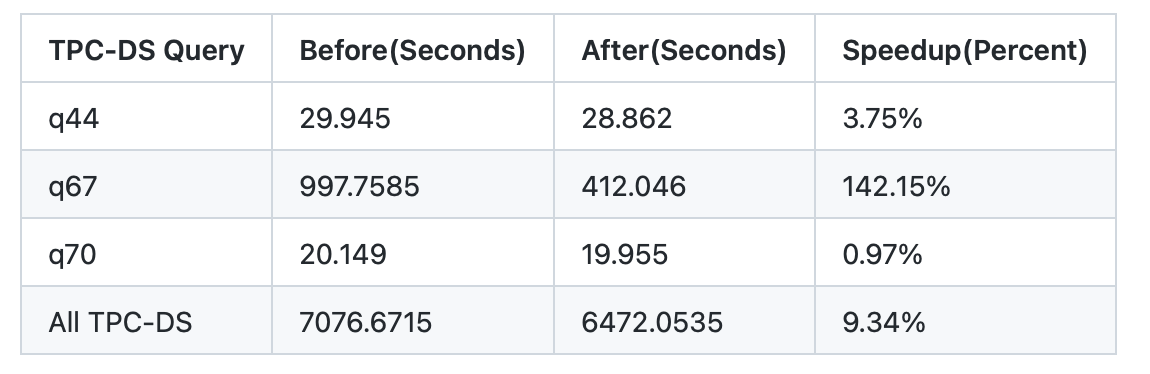

beliefer commented on PR #38799: URL: https://github.com/apache/spark/pull/38799#issuecomment-1423565384 > there are 3 tpc queries having plan change, what's their perf result?  -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #39893: [SPARK-42350][SQL][K8S][SS] Replcace `get().getOrElse` with `getOrElse`

LuciferYang commented on PR #39893: URL: https://github.com/apache/spark/pull/39893#issuecomment-1423562100 Thanks @srowen -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #39899: [SPARK-42355][BUILD] Upgrade some maven-plugins

LuciferYang commented on PR #39899: URL: https://github.com/apache/spark/pull/39899#issuecomment-1423561970 Thanks @srowen @HyukjinKwon -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on a diff in pull request #39946: [SPARK-42310][SQL] Assign name to _LEGACY_ERROR_TEMP_1289

itholic commented on code in PR #39946:

URL: https://github.com/apache/spark/pull/39946#discussion_r1100935378

##

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DataSourceUtils.scala:

##

@@ -73,7 +73,8 @@ object DataSourceUtils extends PredicateHelper {

def checkFieldNames(format: FileFormat, schema: StructType): Unit = {

schema.foreach { field =>

if (!format.supportFieldName(field.name)) {

-throw

QueryCompilationErrors.columnNameContainsInvalidCharactersError(field.name)

+throw QueryCompilationErrors.invalidColumnNameAsPathError(

+ format.getClass.getSimpleName, field.name)

Review Comment:

FYI: If we don't use `getSimpleName` here, it displays the format with full

package path something like:

`org.apache.spark.sql.hive.execution.HiveFileFormat`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on pull request #39946: [SPARK-42310][SQL] Assign name to _LEGACY_ERROR_TEMP_1289

itholic commented on PR #39946: URL: https://github.com/apache/spark/pull/39946#issuecomment-1423557987 Thanks for the review, @MaxGekk ! Just adjusted the comments. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

beliefer commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1100921709

##

sql/core/src/main/scala/org/apache/spark/sql/execution/window/WindowGroupLimitExec.scala:

##

@@ -0,0 +1,245 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.window

+

+import org.apache.spark.rdd.RDD

+import org.apache.spark.sql.catalyst.InternalRow

+import org.apache.spark.sql.catalyst.expressions.{Ascending, Attribute,

DenseRank, Expression, Rank, RowNumber, SortOrder, UnsafeProjection, UnsafeRow}

+import org.apache.spark.sql.catalyst.expressions.codegen.GenerateOrdering

+import org.apache.spark.sql.catalyst.plans.physical.{AllTuples,

ClusteredDistribution, Distribution, Partitioning}

+import org.apache.spark.sql.execution.{SparkPlan, UnaryExecNode}

+

+sealed trait WindowGroupLimitMode

+

+case object Partial extends WindowGroupLimitMode

+

+case object Final extends WindowGroupLimitMode

+

+/**

+ * This operator is designed to filter out unnecessary rows before WindowExec

+ * for top-k computation.

+ * @param partitionSpec Should be the same as [[WindowExec#partitionSpec]].

+ * @param orderSpec Should be the same as [[WindowExec#orderSpec]].

+ * @param rankLikeFunction The function to compute row rank, should be

RowNumber/Rank/DenseRank.

+ * @param limit The limit for rank value.

+ * @param mode The mode describes [[WindowGroupLimitExec]] before or after

shuffle.

+ * @param child The child spark plan.

+ */

+case class WindowGroupLimitExec(

+partitionSpec: Seq[Expression],

+orderSpec: Seq[SortOrder],

+rankLikeFunction: Expression,

+limit: Int,

+mode: WindowGroupLimitMode,

+child: SparkPlan) extends UnaryExecNode {

+

+ override def output: Seq[Attribute] = child.output

+

+ override def requiredChildDistribution: Seq[Distribution] = mode match {

+case Partial => super.requiredChildDistribution

+case Final =>

+ if (partitionSpec.isEmpty) {

+AllTuples :: Nil

+ } else {

+ClusteredDistribution(partitionSpec) :: Nil

+ }

+ }

+

+ override def requiredChildOrdering: Seq[Seq[SortOrder]] =

+Seq(partitionSpec.map(SortOrder(_, Ascending)) ++ orderSpec)

+

+ override def outputOrdering: Seq[SortOrder] = child.outputOrdering

+

+ override def outputPartitioning: Partitioning = child.outputPartitioning

+

+ protected override def doExecute(): RDD[InternalRow] = rankLikeFunction

match {

+case _: RowNumber =>

+ child.execute().mapPartitionsInternal(

+SimpleGroupLimitIterator(partitionSpec, output, _, limit))

+case _: Rank =>

+ child.execute().mapPartitionsInternal(

+RankGroupLimitIterator(partitionSpec, output, _, orderSpec, limit))

+case _: DenseRank =>

+ child.execute().mapPartitionsInternal(

+DenseRankGroupLimitIterator(partitionSpec, output, _, orderSpec,

limit))

+ }

+

+ override protected def withNewChildInternal(newChild: SparkPlan):

WindowGroupLimitExec =

+copy(child = newChild)

+}

+

+abstract class WindowIterator extends Iterator[InternalRow] {

+

+ def partitionSpec: Seq[Expression]

+

+ def output: Seq[Attribute]

+

+ def input: Iterator[InternalRow]

+

+ def limit: Int

+

+ val grouping = UnsafeProjection.create(partitionSpec, output)

Review Comment:

Yes. I have another PR do the work.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] srowen commented on a diff in pull request #39941: [MINOR][DOCS] Add link to Hadoop docs

srowen commented on code in PR #39941: URL: https://github.com/apache/spark/pull/39941#discussion_r1100921708 ## docs/rdd-programming-guide.md: ## @@ -442,7 +442,7 @@ Apart from text files, Spark's Python API also supports several other data forma **Writable Support** -PySpark SequenceFile support loads an RDD of key-value pairs within Java, converts Writables to base Java types, and pickles the +PySpark SequenceFile support loads an RDD of key-value pairs within Java, converts [Writables](https://hadoop.apache.org/docs/current/api/org/apache/hadoop/io/Writable.html) to base Java types, and pickles the Review Comment: Yeah I might write `[Writable](..)s` but that's awkward. `[Writable](...) objects`? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] srowen commented on pull request #39899: [SPARK-42355][BUILD] Upgrade some maven-plugins

srowen commented on PR #39899: URL: https://github.com/apache/spark/pull/39899#issuecomment-1423539058 Merged to master -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] srowen closed pull request #39899: [SPARK-42355][BUILD] Upgrade some maven-plugins

srowen closed pull request #39899: [SPARK-42355][BUILD] Upgrade some maven-plugins URL: https://github.com/apache/spark/pull/39899 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] srowen closed pull request #39893: [SPARK-42350][SQL][K8S][SS] Replcace `get().getOrElse` with `getOrElse`

srowen closed pull request #39893: [SPARK-42350][SQL][K8S][SS] Replcace `get().getOrElse` with `getOrElse` URL: https://github.com/apache/spark/pull/39893 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] srowen commented on pull request #39893: [SPARK-42350][SQL][K8S][SS] Replcace `get().getOrElse` with `getOrElse`

srowen commented on PR #39893: URL: https://github.com/apache/spark/pull/39893#issuecomment-1423538496 Merged to master -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

beliefer commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1100920458

##

sql/core/src/test/scala/org/apache/spark/sql/DataFrameWindowFunctionsSuite.scala:

##

@@ -1265,4 +1265,168 @@ class DataFrameWindowFunctionsSuite extends QueryTest

)

)

}

+

+ test("SPARK-37099: Insert window group limit node for top-k computation") {

+

+val nullStr: String = null

+val df = Seq(

+ ("a", 0, "c", 1.0),

+ ("a", 1, "x", 2.0),

+ ("a", 2, "y", 3.0),

+ ("a", 3, "z", -1.0),

+ ("a", 4, "", 2.0),

+ ("a", 4, "", 2.0),

+ ("b", 1, "h", Double.NaN),

+ ("b", 1, "n", Double.PositiveInfinity),

+ ("c", 1, "z", -2.0),

+ ("c", 1, "a", -4.0),

+ ("c", 2, nullStr, 5.0)).toDF("key", "value", "order", "value2")

+

+val window = Window.partitionBy($"key").orderBy($"order".asc_nulls_first)

+val window2 = Window.partitionBy($"key").orderBy($"order".desc_nulls_first)

+

+Seq(-1, 100).foreach { threshold =>

+ withSQLConf(SQLConf.WINDOW_GROUP_LIMIT_THRESHOLD.key ->

threshold.toString) {

+Seq($"rn" === 0, $"rn" < 1, $"rn" <= 0).foreach { condition =>

+ checkAnswer(df.withColumn("rn",

row_number().over(window)).where(condition),

+Seq.empty[Row]

+ )

+}

+

+Seq($"rn" === 1, $"rn" < 2, $"rn" <= 1).foreach { condition =>

+ checkAnswer(df.withColumn("rn",

row_number().over(window)).where(condition),

+Seq(

+ Row("a", 4, "", 2.0, 1),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("c", 2, null, 5.0, 1)

+)

+ )

+

+ checkAnswer(df.withColumn("rn",

rank().over(window)).where(condition),

+Seq(

+ Row("a", 4, "", 2.0, 1),

+ Row("a", 4, "", 2.0, 1),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("c", 2, null, 5.0, 1)

+)

+ )

+

+ checkAnswer(df.withColumn("rn",

dense_rank().over(window)).where(condition),

+Seq(

+ Row("a", 4, "", 2.0, 1),

+ Row("a", 4, "", 2.0, 1),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("c", 2, null, 5.0, 1)

+)

+ )

+}

+

+Seq($"rn" < 3, $"rn" <= 2).foreach { condition =>

+ checkAnswer(df.withColumn("rn",

row_number().over(window)).where(condition),

+Seq(

+ Row("a", 4, "", 2.0, 1),

+ Row("a", 4, "", 2.0, 2),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("b", 1, "n", Double.PositiveInfinity, 2),

+ Row("c", 1, "a", -4.0, 2),

+ Row("c", 2, null, 5.0, 1)

+)

+ )

+

+ checkAnswer(df.withColumn("rn",

rank().over(window)).where(condition),

+Seq(

+ Row("a", 4, "", 2.0, 1),

+ Row("a", 4, "", 2.0, 1),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("b", 1, "n", Double.PositiveInfinity, 2),

+ Row("c", 1, "a", -4.0, 2),

+ Row("c", 2, null, 5.0, 1)

+)

+ )

+

+ checkAnswer(df.withColumn("rn",

dense_rank().over(window)).where(condition),

+Seq(

+ Row("a", 0, "c", 1.0, 2),

+ Row("a", 4, "", 2.0, 1),

+ Row("a", 4, "", 2.0, 1),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("b", 1, "n", Double.PositiveInfinity, 2),

+ Row("c", 1, "a", -4.0, 2),

+ Row("c", 2, null, 5.0, 1)

+)

+ )

+}

+

+val condition = $"rn" === 2 && $"value2" > 0.5

+checkAnswer(df.withColumn("rn",

row_number().over(window)).where(condition),

+ Seq(

+Row("a", 4, "", 2.0, 2),

+Row("b", 1, "n", Double.PositiveInfinity, 2)

+ )

+)

+

+checkAnswer(df.withColumn("rn", rank().over(window)).where(condition),

+ Seq(

+Row("b", 1, "n", Double.PositiveInfinity, 2)

+ )

+)

+

+checkAnswer(df.withColumn("rn",

dense_rank().over(window)).where(condition),

+ Seq(

+Row("a", 0, "c", 1.0, 2),

+Row("b", 1, "n", Double.PositiveInfinity, 2)

+ )

+)

+

+val multipleRowNumbers = df

+ .withColumn("rn", row_number().over(window))

+ .withColumn("rn2", row_number().over(window))

+ .where('rn < 2 && 'rn2 < 3)

+checkAnswer(multipleRowNumbers,

+ Seq(

+Row("a", 4, "", 2.0, 1, 1),

+Row("b", 1, "h", Double.NaN, 1, 1),

+Row("c", 2, null, 5.0, 1, 1)

+ )

+)

+

+val multipleRanks = df

+ .withColumn("rn", rank().over(window))

+ .withColumn("rn2", rank().over(window))

+ .where('rn < 2 && 'rn2 < 3)

+checkAnswer(multipleRanks,

+ Seq(

+Row("a", 4, "", 2.0, 1, 1),

+Row("a", 4, "",

[GitHub] [spark] beliefer commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

beliefer commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1100919419

##

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala:

##

@@ -2580,6 +2580,18 @@ object SQLConf {

.intConf

.createWithDefault(SHUFFLE_SPILL_NUM_ELEMENTS_FORCE_SPILL_THRESHOLD.defaultValue.get)

+ val WINDOW_GROUP_LIMIT_THRESHOLD =

+buildConf("spark.sql.optimizer.windowGroupLimitThreshold")

+ .internal()

+ .doc("Threshold for filter the dataset by the window group limit before"

+

Review Comment:

0 means the output results is empty.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #39933: [SPARK-42377][CONNECT][TESTS] Test framework for Spark Connect Scala Client

LuciferYang commented on PR #39933: URL: https://github.com/apache/spark/pull/39933#issuecomment-1423533123 https://user-images.githubusercontent.com/1475305/217703128-b0cb8d3e-a103-4595-80b1-db3e4a9da72a.png";> license test failed, need add new rule to `.rat-excludes`? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #39933: [SPARK-42377][CONNECT][TESTS] Test framework for Spark Connect Scala Client

LuciferYang commented on PR #39933: URL: https://github.com/apache/spark/pull/39933#issuecomment-1423528903 @hvanhovell checked, local maven test passed -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

beliefer commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1100909992

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/InsertWindowGroupLimit.scala:

##

@@ -0,0 +1,96 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.catalyst.optimizer

+

+import org.apache.spark.sql.catalyst.expressions.{Alias, Attribute,

CurrentRow, DenseRank, EqualTo, Expression, GreaterThan, GreaterThanOrEqual,

IntegerLiteral, LessThan, LessThanOrEqual, NamedExpression, PredicateHelper,

Rank, RowFrame, RowNumber, SpecifiedWindowFrame, UnboundedPreceding,

WindowExpression, WindowSpecDefinition}

+import org.apache.spark.sql.catalyst.plans.logical.{Filter, LocalRelation,

LogicalPlan, Window, WindowGroupLimit}

+import org.apache.spark.sql.catalyst.rules.Rule

+import org.apache.spark.sql.catalyst.trees.TreePattern.{FILTER, WINDOW}

+

+/**

+ * Optimize the filter based on rank-like window function by reduce not

required rows.

+ * This rule optimizes the following cases:

+ * {{{

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1

WHERE rn = 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1

WHERE 5 = rn

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1

WHERE rn < 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1

WHERE 5 > rn

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1

WHERE rn <= 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1

WHERE 5 >= rn

+ * }}}

+ */

+object InsertWindowGroupLimit extends Rule[LogicalPlan] with PredicateHelper {

+

+ /**

+ * Extract all the limit values from predicates.

+ */

+ def extractLimits(condition: Expression, attr: Attribute): Option[Int] = {

+val limits = splitConjunctivePredicates(condition).collect {

+ case EqualTo(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit

+ case EqualTo(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit

+ case LessThan(e, IntegerLiteral(limit)) if e.semanticEquals(attr) =>

limit - 1

+ case GreaterThan(IntegerLiteral(limit), e) if e.semanticEquals(attr) =>

limit - 1

+ case LessThanOrEqual(e, IntegerLiteral(limit)) if e.semanticEquals(attr)

=> limit

+ case GreaterThanOrEqual(IntegerLiteral(limit), e) if

e.semanticEquals(attr) => limit

+}

+

+if (limits.nonEmpty) Some(limits.min) else None

+ }

+

+ private def support(

+ windowExpression: NamedExpression): Boolean = windowExpression match {

+case Alias(WindowExpression(_: Rank | _: DenseRank | _: RowNumber,

WindowSpecDefinition(_, _,

+SpecifiedWindowFrame(RowFrame, UnboundedPreceding, CurrentRow))), _) =>

true

+case _ => false

+ }

+

+ def apply(plan: LogicalPlan): LogicalPlan = {

+if (conf.windowGroupLimitThreshold == -1) return plan

+

+plan.transformWithPruning(_.containsAllPatterns(FILTER, WINDOW), ruleId) {

+ case filter @ Filter(condition,

+window @ Window(windowExpressions, partitionSpec, orderSpec, child))

+if !child.isInstanceOf[WindowGroupLimit] &&

windowExpressions.exists(support) &&

+ orderSpec.nonEmpty =>

+val limits = windowExpressions.collect {

+ case alias @ Alias(WindowExpression(rankLikeFunction, _), _) if

support(alias) =>

+extractLimits(condition, alias.toAttribute).map((_,

rankLikeFunction))

+}.flatten

+

+// multiple different rank-like functions unsupported.

+if (limits.isEmpty) {

+ filter

+} else {

+ limits.minBy(_._1) match {

Review Comment:

Good idea.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.or

[GitHub] [spark] LuciferYang commented on pull request #39893: [SPARK-42350][SQL][K8S][SS] Replcace `get().getOrElse` with `getOrElse`

LuciferYang commented on PR #39893: URL: https://github.com/apache/spark/pull/39893#issuecomment-1423517707 should be no more -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng commented on a diff in pull request #39925: [SPARK-41812][SPARK-41823][CONNECT][SQL][PYTHON] Resolve ambiguous columns issue in `Join`

zhengruifeng commented on code in PR #39925:

URL: https://github.com/apache/spark/pull/39925#discussion_r1100882974

##

connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala:

##

@@ -123,6 +123,11 @@ class SparkConnectPlanner(val session: SparkSession) {

transformRelationPlugin(rel.getExtension)

case _ => throw InvalidPlanInput(s"${rel.getUnknown} not supported.")

}

+

+if (rel.hasCommon) {

+ plan.setTagValue(LogicalPlan.PLAN_ID_TAG, rel.getCommon.getPlanId)

Review Comment:

ok, let us make it optional.

~~the Commands and Catalogs like `CreateView` and `WriteOperation` will not

have a plan id~~

Let me also add plan_id for Commands, maybe useful in the future

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng commented on a diff in pull request #39925: [SPARK-41812][SPARK-41823][CONNECT][SQL][PYTHON] Resolve ambiguous columns issue in `Join`

zhengruifeng commented on code in PR #39925: URL: https://github.com/apache/spark/pull/39925#discussion_r1100886574 ## python/pyspark/sql/connect/plan.py: ## @@ -259,6 +272,8 @@ def __init__( def plan(self, session: "SparkConnectClient") -> proto.Relation: plan = proto.Relation() Review Comment: nice, will add a helper method -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng commented on a diff in pull request #39925: [SPARK-41812][SPARK-41823][CONNECT][SQL][PYTHON] Resolve ambiguous columns issue in `Join`

zhengruifeng commented on code in PR #39925:

URL: https://github.com/apache/spark/pull/39925#discussion_r1100882974

##

connector/connect/server/src/main/scala/org/apache/spark/sql/connect/planner/SparkConnectPlanner.scala:

##

@@ -123,6 +123,11 @@ class SparkConnectPlanner(val session: SparkSession) {

transformRelationPlugin(rel.getExtension)

case _ => throw InvalidPlanInput(s"${rel.getUnknown} not supported.")

}

+

+if (rel.hasCommon) {

+ plan.setTagValue(LogicalPlan.PLAN_ID_TAG, rel.getCommon.getPlanId)

Review Comment:

ok, let us make it optional.

the Commands and Catalogs like `CreateView` and `WriteOperation` will not

have a plan id

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng commented on a diff in pull request #39925: [SPARK-41812][SPARK-41823][CONNECT][SQL][PYTHON] Resolve ambiguous columns issue in `Join`

zhengruifeng commented on code in PR #39925:

URL: https://github.com/apache/spark/pull/39925#discussion_r1100881330

##

connector/connect/common/src/main/protobuf/spark/connect/relations.proto:

##

@@ -91,8 +91,11 @@ message Unknown {}

// Common metadata of all relations.

message RelationCommon {

+ // (Required) A globally unique id for a given connect plan.

+ int64 plan_id = 1;

+

// (Required) Shared relation metadata.

- string source_info = 1;

+ string source_info = 2;

Review Comment:

ok

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng commented on a diff in pull request #39925: [SPARK-41812][SPARK-41823][CONNECT][SQL][PYTHON] Resolve ambiguous columns issue in `Join`

zhengruifeng commented on code in PR #39925:

URL: https://github.com/apache/spark/pull/39925#discussion_r1100881164

##

connector/connect/common/src/main/protobuf/spark/connect/relations.proto:

##

@@ -91,8 +91,11 @@ message Unknown {}

// Common metadata of all relations.

message RelationCommon {

+ // (Required) A globally unique id for a given connect plan.

+ int64 plan_id = 1;

Review Comment:

will update

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng commented on a diff in pull request #39925: [SPARK-41812][SPARK-41823][CONNECT][SQL][PYTHON] Resolve ambiguous columns issue in `Join`