[GitHub] spark pull request #21648: [SPARK-24665][PySpark] Use SQLConf in PySpark to ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21648#discussion_r199316817 --- Diff: python/pyspark/sql/context.py --- @@ -93,6 +93,10 @@ def _ssql_ctx(self): """ return self._jsqlContext +def conf(self): --- End diff -- Thanks, done in 4fc0ae4. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #20930: [SPARK-23811][Core] FetchFailed comes before Succ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/20930#discussion_r184276403

--- Diff:

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala ---

@@ -2399,6 +2399,84 @@ class DAGSchedulerSuite extends SparkFunSuite with

LocalSparkContext with TimeLi

}

}

+ /**

+ * This tests the case where origin task success after speculative task

got FetchFailed

+ * before.

+ */

+ test("SPARK-23811: ShuffleMapStage failed by FetchFailed should ignore

following " +

+"successful tasks") {

+// Create 3 RDDs with shuffle dependencies on each other: rddA <---

rddB <--- rddC

+val rddA = new MyRDD(sc, 2, Nil)

+val shuffleDepA = new ShuffleDependency(rddA, new HashPartitioner(2))

+val shuffleIdA = shuffleDepA.shuffleId

+

+val rddB = new MyRDD(sc, 2, List(shuffleDepA), tracker =

mapOutputTracker)

+val shuffleDepB = new ShuffleDependency(rddB, new HashPartitioner(2))

+

+val rddC = new MyRDD(sc, 2, List(shuffleDepB), tracker =

mapOutputTracker)

+

+submit(rddC, Array(0, 1))

+

+// Complete both tasks in rddA.

+assert(taskSets(0).stageId === 0 && taskSets(0).stageAttemptId === 0)

+complete(taskSets(0), Seq(

+ (Success, makeMapStatus("hostA", 2)),

+ (Success, makeMapStatus("hostB", 2

+

+// The first task success

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(0), Success, makeMapStatus("hostB", 2)))

+

+// The second task's speculative attempt fails first, but task self

still running.

+// This may caused by ExecutorLost.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1),

+ FetchFailed(makeBlockManagerId("hostA"), shuffleIdA, 0, 0,

"ignored"),

+ null))

+// Check currently missing partition.

+

assert(mapOutputTracker.findMissingPartitions(shuffleDepB.shuffleId).get.size

=== 1)

+// The second result task self success soon.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1), Success, makeMapStatus("hostB", 2)))

+// Missing partition number should not change, otherwise it will cause

child stage

+// never succeed.

+

assert(mapOutputTracker.findMissingPartitions(shuffleDepB.shuffleId).get.size

=== 1)

+ }

+

+ test("SPARK-23811: check ResultStage failed by FetchFailed can ignore

following " +

+"successful tasks") {

+val rddA = new MyRDD(sc, 2, Nil)

+val shuffleDepA = new ShuffleDependency(rddA, new HashPartitioner(2))

+val shuffleIdA = shuffleDepA.shuffleId

+val rddB = new MyRDD(sc, 2, List(shuffleDepA), tracker =

mapOutputTracker)

+submit(rddB, Array(0, 1))

+

+// Complete both tasks in rddA.

+assert(taskSets(0).stageId === 0 && taskSets(0).stageAttemptId === 0)

+complete(taskSets(0), Seq(

+ (Success, makeMapStatus("hostA", 2)),

+ (Success, makeMapStatus("hostB", 2

+

+// The first task of rddB success

+assert(taskSets(1).tasks(0).isInstanceOf[ResultTask[_, _]])

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(0), Success, makeMapStatus("hostB", 2)))

+

+// The second task's speculative attempt fails first, but task self

still running.

+// This may caused by ExecutorLost.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1),

+ FetchFailed(makeBlockManagerId("hostA"), shuffleIdA, 0, 0,

"ignored"),

+ null))

+// Make sure failedStage is not empty now

+assert(scheduler.failedStages.nonEmpty)

+// The second result task self success soon.

+assert(taskSets(1).tasks(1).isInstanceOf[ResultTask[_, _]])

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1), Success, makeMapStatus("hostB", 2)))

--- End diff --

Yep, you're right. The successful completion event in the UT was treated as a

normal successful task. I fixed this by ignoring this event at the beginning of

handleTaskCompletion.
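
The idea of dropping a late success event for a stage that has already failed can be illustrated with a toy model. This is only a sketch under assumptions: `ToyScheduler`, `CompletionEvent`, and `missingPartitions` are hypothetical names invented for illustration, and the real `handleTaskCompletion` in DAGScheduler is considerably more involved.

```scala
import scala.collection.mutable

// Toy model only: none of these types are Spark's real classes.
object ToySchedulerDemo {
  sealed trait Reason
  case object Success extends Reason
  case object FetchFailed extends Reason

  final case class CompletionEvent(stageId: Int, partition: Int, reason: Reason)

  class ToyScheduler(numPartitions: Int) {
    // Stages that have seen a fetch failure and are waiting to be resubmitted.
    private val failedStages = mutable.Set.empty[Int]
    // Partitions whose output has been registered, keyed by stage id.
    private val finished = mutable.Map.empty[Int, mutable.Set[Int]]

    def handleTaskCompletion(event: CompletionEvent): Unit = {
      // A success that arrives after the stage was marked failed is ignored up front.
      val lateSuccess = event.reason == Success && failedStages.contains(event.stageId)
      if (!lateSuccess) {
        event.reason match {
          case Success =>
            finished.getOrElseUpdate(event.stageId, mutable.Set.empty[Int]) += event.partition
          case FetchFailed =>
            failedStages += event.stageId
        }
      }
    }

    def missingPartitions(stageId: Int): Int =
      numPartitions - finished.get(stageId).map(_.size).getOrElse(0)
  }
}
```

Replaying the sequence from the test (first task succeeds, speculative attempt hits FetchFailed, original attempt succeeds afterwards) leaves the missing-partition count unchanged in this model, which is the property the new assertions check.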

[GitHub] spark pull request #20930: [SPARK-23811][Core] FetchFailed comes before Succ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/20930#discussion_r184260597

--- Diff:

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala ---

@@ -2399,6 +2399,84 @@ class DAGSchedulerSuite extends SparkFunSuite with

LocalSparkContext with TimeLi

}

}

+ /**

+ * This tests the case where origin task success after speculative task

got FetchFailed

+ * before.

+ */

+ test("SPARK-23811: ShuffleMapStage failed by FetchFailed should ignore

following " +

+"successful tasks") {

+// Create 3 RDDs with shuffle dependencies on each other: rddA <---

rddB <--- rddC

+val rddA = new MyRDD(sc, 2, Nil)

+val shuffleDepA = new ShuffleDependency(rddA, new HashPartitioner(2))

+val shuffleIdA = shuffleDepA.shuffleId

+

+val rddB = new MyRDD(sc, 2, List(shuffleDepA), tracker =

mapOutputTracker)

+val shuffleDepB = new ShuffleDependency(rddB, new HashPartitioner(2))

+

+val rddC = new MyRDD(sc, 2, List(shuffleDepB), tracker =

mapOutputTracker)

+

+submit(rddC, Array(0, 1))

+

+// Complete both tasks in rddA.

+assert(taskSets(0).stageId === 0 && taskSets(0).stageAttemptId === 0)

+complete(taskSets(0), Seq(

+ (Success, makeMapStatus("hostA", 2)),

+ (Success, makeMapStatus("hostB", 2

+

+// The first task success

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(0), Success, makeMapStatus("hostB", 2)))

+

+// The second task's speculative attempt fails first, but task self

still running.

+// This may caused by ExecutorLost.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1),

+ FetchFailed(makeBlockManagerId("hostA"), shuffleIdA, 0, 0,

"ignored"),

+ null))

+// Check currently missing partition.

+

assert(mapOutputTracker.findMissingPartitions(shuffleDepB.shuffleId).get.size

=== 1)

+// The second result task self success soon.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1), Success, makeMapStatus("hostB", 2)))

+// Missing partition number should not change, otherwise it will cause

child stage

+// never succeed.

+

assert(mapOutputTracker.findMissingPartitions(shuffleDepB.shuffleId).get.size

=== 1)

+ }

+

+ test("SPARK-23811: check ResultStage failed by FetchFailed can ignore

following " +

+"successful tasks") {

+val rddA = new MyRDD(sc, 2, Nil)

+val shuffleDepA = new ShuffleDependency(rddA, new HashPartitioner(2))

+val shuffleIdA = shuffleDepA.shuffleId

+val rddB = new MyRDD(sc, 2, List(shuffleDepA), tracker =

mapOutputTracker)

+submit(rddB, Array(0, 1))

+

+// Complete both tasks in rddA.

+assert(taskSets(0).stageId === 0 && taskSets(0).stageAttemptId === 0)

+complete(taskSets(0), Seq(

+ (Success, makeMapStatus("hostA", 2)),

+ (Success, makeMapStatus("hostB", 2

+

+// The first task of rddB success

+assert(taskSets(1).tasks(0).isInstanceOf[ResultTask[_, _]])

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(0), Success, makeMapStatus("hostB", 2)))

+

+// The second task's speculative attempt fails first, but task self

still running.

+// This may caused by ExecutorLost.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1),

+ FetchFailed(makeBlockManagerId("hostA"), shuffleIdA, 0, 0,

"ignored"),

+ null))

+// Make sure failedStage is not empty now

+assert(scheduler.failedStages.nonEmpty)

+// The second result task self success soon.

+assert(taskSets(1).tasks(1).isInstanceOf[ResultTask[_, _]])

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1), Success, makeMapStatus("hostB", 2)))

--- End diff --

The successful task will be ignored by

`OutputCommitCoordinator.taskCompleted`: in the taskCompleted logic,

`stageStates.getOrElse` returns early because the current stage is in the failed set.

The detailed log is provided below:

```
18/04/26 10:50:24.524 ScalaTest-run-running-DAGSchedulerSuite INFO DAGScheduler: Resubmitting ShuffleMapStage 0 (RDD at DAGSchedulerSuite.scala:74) and ResultStage 1 () due to fetch failure
18/04/26 10:50:24.535 ScalaTest-run-running-DAGSchedulerSuite DEBUG DAGSchedulerSuite$$anon$6: Increasing epoch to 2
18/04/26 10:50:24.538 ScalaTest-run-running-DAGSchedulerSuite INFO DAGScheduler: Executor lost: exec-hostA (epoch 1)
18/04/26 10:50:24.540 ScalaTest-run-running-DAGSchedulerSuite INFO DAGScheduler: Shuffle files lost for execut
```

[GitHub] spark pull request #20930: [SPARK-23811][Core] FetchFailed comes before Succ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/20930#discussion_r184274946

--- Diff:

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala ---

@@ -2399,6 +2399,84 @@ class DAGSchedulerSuite extends SparkFunSuite with

LocalSparkContext with TimeLi

}

}

+ /**

+ * This tests the case where origin task success after speculative task

got FetchFailed

+ * before.

+ */

+ test("SPARK-23811: ShuffleMapStage failed by FetchFailed should ignore

following " +

+"successful tasks") {

+// Create 3 RDDs with shuffle dependencies on each other: rddA <---

rddB <--- rddC

+val rddA = new MyRDD(sc, 2, Nil)

+val shuffleDepA = new ShuffleDependency(rddA, new HashPartitioner(2))

+val shuffleIdA = shuffleDepA.shuffleId

+

+val rddB = new MyRDD(sc, 2, List(shuffleDepA), tracker =

mapOutputTracker)

+val shuffleDepB = new ShuffleDependency(rddB, new HashPartitioner(2))

+

+val rddC = new MyRDD(sc, 2, List(shuffleDepB), tracker =

mapOutputTracker)

+

+submit(rddC, Array(0, 1))

+

+// Complete both tasks in rddA.

+assert(taskSets(0).stageId === 0 && taskSets(0).stageAttemptId === 0)

+complete(taskSets(0), Seq(

+ (Success, makeMapStatus("hostA", 2)),

+ (Success, makeMapStatus("hostB", 2

+

+// The first task success

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(0), Success, makeMapStatus("hostB", 2)))

+

+// The second task's speculative attempt fails first, but task self

still running.

+// This may caused by ExecutorLost.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1),

+ FetchFailed(makeBlockManagerId("hostA"), shuffleIdA, 0, 0,

"ignored"),

+ null))

+// Check currently missing partition.

+

assert(mapOutputTracker.findMissingPartitions(shuffleDepB.shuffleId).get.size

=== 1)

+// The second result task self success soon.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1), Success, makeMapStatus("hostB", 2)))

+// Missing partition number should not change, otherwise it will cause

child stage

+// never succeed.

+

assert(mapOutputTracker.findMissingPartitions(shuffleDepB.shuffleId).get.size

=== 1)

+ }

+

+ test("SPARK-23811: check ResultStage failed by FetchFailed can ignore

following " +

+"successful tasks") {

+val rddA = new MyRDD(sc, 2, Nil)

+val shuffleDepA = new ShuffleDependency(rddA, new HashPartitioner(2))

+val shuffleIdA = shuffleDepA.shuffleId

+val rddB = new MyRDD(sc, 2, List(shuffleDepA), tracker =

mapOutputTracker)

+submit(rddB, Array(0, 1))

+

+// Complete both tasks in rddA.

+assert(taskSets(0).stageId === 0 && taskSets(0).stageAttemptId === 0)

+complete(taskSets(0), Seq(

+ (Success, makeMapStatus("hostA", 2)),

+ (Success, makeMapStatus("hostB", 2

+

+// The first task of rddB success

+assert(taskSets(1).tasks(0).isInstanceOf[ResultTask[_, _]])

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(0), Success, makeMapStatus("hostB", 2)))

+

+// The second task's speculative attempt fails first, but task self

still running.

+// This may caused by ExecutorLost.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1),

+ FetchFailed(makeBlockManagerId("hostA"), shuffleIdA, 0, 0,

"ignored"),

+ null))

+// Make sure failedStage is not empty now

+assert(scheduler.failedStages.nonEmpty)

+// The second result task self success soon.

+assert(taskSets(1).tasks(1).isInstanceOf[ResultTask[_, _]])

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1), Success, makeMapStatus("hostB", 2)))

+assertDataStructuresEmpty()

--- End diff --

Ah, it's used to check that the job completed successfully and that all

temporary data structures are empty.

[GitHub] spark pull request #20930: [SPARK-23811][Core] FetchFailed comes before Succ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/20930#discussion_r184260210

--- Diff:

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala ---

@@ -2399,6 +2399,84 @@ class DAGSchedulerSuite extends SparkFunSuite with

LocalSparkContext with TimeLi

}

}

+ /**

+ * This tests the case where origin task success after speculative task

got FetchFailed

+ * before.

+ */

+ test("SPARK-23811: ShuffleMapStage failed by FetchFailed should ignore

following " +

+"successful tasks") {

+// Create 3 RDDs with shuffle dependencies on each other: rddA <---

rddB <--- rddC

+val rddA = new MyRDD(sc, 2, Nil)

+val shuffleDepA = new ShuffleDependency(rddA, new HashPartitioner(2))

+val shuffleIdA = shuffleDepA.shuffleId

+

+val rddB = new MyRDD(sc, 2, List(shuffleDepA), tracker =

mapOutputTracker)

+val shuffleDepB = new ShuffleDependency(rddB, new HashPartitioner(2))

+

+val rddC = new MyRDD(sc, 2, List(shuffleDepB), tracker =

mapOutputTracker)

+

+submit(rddC, Array(0, 1))

+

+// Complete both tasks in rddA.

+assert(taskSets(0).stageId === 0 && taskSets(0).stageAttemptId === 0)

+complete(taskSets(0), Seq(

+ (Success, makeMapStatus("hostA", 2)),

+ (Success, makeMapStatus("hostB", 2

+

+// The first task success

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(0), Success, makeMapStatus("hostB", 2)))

+

+// The second task's speculative attempt fails first, but task self

still running.

+// This may caused by ExecutorLost.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1),

+ FetchFailed(makeBlockManagerId("hostA"), shuffleIdA, 0, 0,

"ignored"),

+ null))

+// Check currently missing partition.

+

assert(mapOutputTracker.findMissingPartitions(shuffleDepB.shuffleId).get.size

=== 1)

+// The second result task self success soon.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1), Success, makeMapStatus("hostB", 2)))

+// Missing partition number should not change, otherwise it will cause

child stage

+// never succeed.

+

assert(mapOutputTracker.findMissingPartitions(shuffleDepB.shuffleId).get.size

=== 1)

+ }

+

+ test("SPARK-23811: check ResultStage failed by FetchFailed can ignore

following " +

+"successful tasks") {

+val rddA = new MyRDD(sc, 2, Nil)

+val shuffleDepA = new ShuffleDependency(rddA, new HashPartitioner(2))

+val shuffleIdA = shuffleDepA.shuffleId

+val rddB = new MyRDD(sc, 2, List(shuffleDepA), tracker =

mapOutputTracker)

+submit(rddB, Array(0, 1))

+

+// Complete both tasks in rddA.

+assert(taskSets(0).stageId === 0 && taskSets(0).stageAttemptId === 0)

+complete(taskSets(0), Seq(

+ (Success, makeMapStatus("hostA", 2)),

+ (Success, makeMapStatus("hostB", 2

+

+// The first task of rddB success

+assert(taskSets(1).tasks(0).isInstanceOf[ResultTask[_, _]])

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(0), Success, makeMapStatus("hostB", 2)))

+

+// The second task's speculative attempt fails first, but task self

still running.

+// This may caused by ExecutorLost.

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1),

+ FetchFailed(makeBlockManagerId("hostA"), shuffleIdA, 0, 0,

"ignored"),

+ null))

+// Make sure failedStage is not empty now

+assert(scheduler.failedStages.nonEmpty)

+// The second result task self success soon.

+assert(taskSets(1).tasks(1).isInstanceOf[ResultTask[_, _]])

+runEvent(makeCompletionEvent(

+ taskSets(1).tasks(1), Success, makeMapStatus("hostB", 2)))

+assertDataStructuresEmpty()

--- End diff --

I added this test to answer your previous question, "Can you simulate what

happens to result task if FetchFailed comes before task success?". This test

passes even without my code change in DAGScheduler.

[GitHub] spark pull request #21175: [SPARK-24107][CORE] ChunkedByteBuffer.writeFully ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21175#discussion_r184882396

--- Diff:

core/src/test/scala/org/apache/spark/io/ChunkedByteBufferSuite.scala ---

@@ -20,12 +20,12 @@ package org.apache.spark.io

import java.nio.ByteBuffer

import com.google.common.io.ByteStreams

--- End diff --

Add an empty line after line 22 to separate the Spark and third-party import groups.

[GitHub] spark pull request #21175: [SPARK-24107][CORE] ChunkedByteBuffer.writeFully ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21175#discussion_r184882338

--- Diff:

core/src/test/scala/org/apache/spark/io/ChunkedByteBufferSuite.scala ---

@@ -20,12 +20,12 @@ package org.apache.spark.io

import java.nio.ByteBuffer

import com.google.common.io.ByteStreams

-

-import org.apache.spark.SparkFunSuite

+import org.apache.spark.{SparkFunSuite, SharedSparkContext}

--- End diff --

move SharedSparkContext before SparkFunSuite

[GitHub] spark pull request #21194: [SPARK-24046][SS] Fix rate source when rowsPerSec...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21194#discussion_r185252544

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/sources/RateStreamProvider.scala

---

@@ -101,25 +101,10 @@ object RateStreamProvider {
/** Calculate the end value we will emit at the time `seconds`. */
def valueAtSecond(seconds: Long, rowsPerSecond: Long, rampUpTimeSeconds: Long): Long = {
-// E.g., rampUpTimeSeconds = 4, rowsPerSecond = 10
-// Then speedDeltaPerSecond = 2
-//
-// seconds = 0 1 2 3 4 5 6
-// speed = 0 2 4 6 8 10 10 (speedDeltaPerSecond * seconds)
-// end value = 0 2 6 12 20 30 40 (0 + speedDeltaPerSecond * seconds) * (seconds + 1) / 2
-val speedDeltaPerSecond = rowsPerSecond / (rampUpTimeSeconds + 1)
-if (seconds <= rampUpTimeSeconds) {
- // Calculate "(0 + speedDeltaPerSecond * seconds) * (seconds + 1) / 2" in a special way to
- // avoid overflow
- if (seconds % 2 == 1) {
-(seconds + 1) / 2 * speedDeltaPerSecond * seconds
- } else {
-seconds / 2 * speedDeltaPerSecond * (seconds + 1)
- }
-} else {
- // rampUpPart is just a special case of the above formula: rampUpTimeSeconds == seconds
- val rampUpPart = valueAtSecond(rampUpTimeSeconds, rowsPerSecond, rampUpTimeSeconds)
- rampUpPart + (seconds - rampUpTimeSeconds) * rowsPerSecond
-}
+val delta = rowsPerSecond.toDouble / rampUpTimeSeconds
+val rampUpSeconds = if (seconds <= rampUpTimeSeconds) seconds else rampUpTimeSeconds
+val afterRampUpSeconds = if (seconds > rampUpTimeSeconds ) seconds - rampUpTimeSeconds else 0
+// Use classic distance formula based on accelaration: ut + ½at2
+Math.floor(rampUpSeconds * rampUpSeconds * delta / 2).toLong + afterRampUpSeconds * rowsPerSecond

--- End diff --

nit: >100 characters

[GitHub] spark pull request #21194: [SPARK-24046][SS] Fix rate source when rowsPerSec...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21194#discussion_r185252360

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/sources/RateStreamProvider.scala

---

@@ -101,25 +101,10 @@ object RateStreamProvider {
/** Calculate the end value we will emit at the time `seconds`. */
def valueAtSecond(seconds: Long, rowsPerSecond: Long, rampUpTimeSeconds: Long): Long = {
-// E.g., rampUpTimeSeconds = 4, rowsPerSecond = 10
-// Then speedDeltaPerSecond = 2
-//
-// seconds = 0 1 2 3 4 5 6
-// speed = 0 2 4 6 8 10 10 (speedDeltaPerSecond * seconds)
-// end value = 0 2 6 12 20 30 40 (0 + speedDeltaPerSecond * seconds) * (seconds + 1) / 2
-val speedDeltaPerSecond = rowsPerSecond / (rampUpTimeSeconds + 1)
-if (seconds <= rampUpTimeSeconds) {
- // Calculate "(0 + speedDeltaPerSecond * seconds) * (seconds + 1) / 2" in a special way to
- // avoid overflow
- if (seconds % 2 == 1) {
-(seconds + 1) / 2 * speedDeltaPerSecond * seconds
- } else {
-seconds / 2 * speedDeltaPerSecond * (seconds + 1)
- }
-} else {
- // rampUpPart is just a special case of the above formula: rampUpTimeSeconds == seconds
- val rampUpPart = valueAtSecond(rampUpTimeSeconds, rowsPerSecond, rampUpTimeSeconds)
- rampUpPart + (seconds - rampUpTimeSeconds) * rowsPerSecond
-}
+val delta = rowsPerSecond.toDouble / rampUpTimeSeconds
+val rampUpSeconds = if (seconds <= rampUpTimeSeconds) seconds else rampUpTimeSeconds
+val afterRampUpSeconds = if (seconds > rampUpTimeSeconds ) seconds - rampUpTimeSeconds else 0
+// Use classic distance formula based on accelaration: ut + ½at2

--- End diff --

nit: acceleration

[GitHub] spark issue #21188: [SPARK-24046][SS] Fix rate source rowsPerSecond <= rampU...

Github user xuanyuanking commented on the issue:

https://github.com/apache/spark/pull/21188

@maasg As I commented in #21194, I think we should not change the behavior when `seconds > rampUpTimeSeconds`. Keeping that behavior is probably more important than a smooth ramp-up.

[GitHub] spark pull request #21194: [SPARK-24046][SS] Fix rate source when rowsPerSec...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21194#discussion_r185851172

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/execution/streaming/sources/RateStreamProviderSuite.scala

---

@@ -173,55 +173,154 @@ class RateSourceSuite extends StreamTest {

assert(readData.map(_.getLong(1)).sorted == Range(0, 33))

}

- test("valueAtSecond") {

+ test("valueAtSecond without ramp-up") {

import RateStreamProvider._

+val rowsPerSec = Seq(1,10,50,100,1000,1)

+val secs = Seq(1, 10, 100, 1000, 1, 10)

+for {

+ sec <- secs

+ rps <- rowsPerSec

+} yield {

+ assert(valueAtSecond(seconds = sec, rowsPerSecond = rps,

rampUpTimeSeconds = 0) === sec * rps)

+}

+ }

-assert(valueAtSecond(seconds = 0, rowsPerSecond = 5, rampUpTimeSeconds

= 0) === 0)

-assert(valueAtSecond(seconds = 1, rowsPerSecond = 5, rampUpTimeSeconds

= 0) === 5)

+ test("valueAtSecond with ramp-up") {

+import RateStreamProvider._

+val rowsPerSec = Seq(1, 5, 10, 50, 100, 1000, 1)

+val rampUpSec = Seq(10, 100, 1000)

+

+// for any combination, value at zero = 0

+for {

+ rps <- rowsPerSec

+ rampUp <- rampUpSec

+} yield {

+ assert(valueAtSecond(seconds = 0, rowsPerSecond = rps,

rampUpTimeSeconds = rampUp) === 0)

+}

-assert(valueAtSecond(seconds = 0, rowsPerSecond = 5, rampUpTimeSeconds

= 2) === 0)

-assert(valueAtSecond(seconds = 1, rowsPerSecond = 5, rampUpTimeSeconds

= 2) === 1)

-assert(valueAtSecond(seconds = 2, rowsPerSecond = 5, rampUpTimeSeconds

= 2) === 3)

-assert(valueAtSecond(seconds = 3, rowsPerSecond = 5, rampUpTimeSeconds

= 2) === 8)

--- End diff --

I tried your implementation locally, and it changes the original behavior:

```
valueAtSecond(seconds = 1, rowsPerSecond = 5, rampUpTimeSeconds = 2) = 1
valueAtSecond(seconds = 2, rowsPerSecond = 5, rampUpTimeSeconds = 2) = 5
valueAtSecond(seconds = 3, rowsPerSecond = 5, rampUpTimeSeconds = 2) = 10
valueAtSecond(seconds = 4, rowsPerSecond = 5, rampUpTimeSeconds = 2) = 15
```

I think the bug fix should not change the value when `seconds >

rampUpTimeSeconds`. That's just my opinion; you can ping other committers to review.
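
The behavior change can be reproduced outside Spark by placing the two formulas from the RateStreamProvider diff side by side. This is a minimal sketch: the object name `RampUpComparison` and the `Old`/`New` suffixes are made up here for illustration; the function bodies follow the removed and added lines of that diff.

```scala
object RampUpComparison {
  // Formula from the removed ("-") lines: integer speed delta, triangular sum during ramp-up.
  def valueAtSecondOld(seconds: Long, rowsPerSecond: Long, rampUpTimeSeconds: Long): Long = {
    val speedDeltaPerSecond = rowsPerSecond / (rampUpTimeSeconds + 1)
    if (seconds <= rampUpTimeSeconds) {
      // Divide the even factor by 2 first to avoid overflow.
      if (seconds % 2 == 1) (seconds + 1) / 2 * speedDeltaPerSecond * seconds
      else seconds / 2 * speedDeltaPerSecond * (seconds + 1)
    } else {
      val rampUpPart = valueAtSecondOld(rampUpTimeSeconds, rowsPerSecond, rampUpTimeSeconds)
      rampUpPart + (seconds - rampUpTimeSeconds) * rowsPerSecond
    }
  }

  // Formula from the added ("+") lines: fractional delta, distance under constant acceleration.
  def valueAtSecondNew(seconds: Long, rowsPerSecond: Long, rampUpTimeSeconds: Long): Long = {
    val delta = rowsPerSecond.toDouble / rampUpTimeSeconds
    val rampUpSeconds = math.min(seconds, rampUpTimeSeconds)
    val afterRampUpSeconds = math.max(seconds - rampUpTimeSeconds, 0L)
    math.floor(rampUpSeconds * rampUpSeconds * delta / 2).toLong +
      afterRampUpSeconds * rowsPerSecond
  }
}
```

With `rowsPerSecond = 5` and `rampUpTimeSeconds = 2`, the old formula yields 1, 3, 8 for seconds 1 to 3 (matching the assertions removed from the test), while the new one yields 1, 5, 10, so the values after the ramp-up period differ as well.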

[GitHub] spark pull request #21188: [SPARK-24046][SS] Fix rate source rowsPerSecond <...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21188#discussion_r185852663

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/sources/RateStreamProvider.scala

---

@@ -107,14 +107,25 @@ object RateStreamProvider {
// seconds = 0 1 2 3 4 5 6
// speed = 0 2 4 6 8 10 10 (speedDeltaPerSecond * seconds)
// end value = 0 2 6 12 20 30 40 (0 + speedDeltaPerSecond * seconds) * (seconds + 1) / 2
-val speedDeltaPerSecond = rowsPerSecond / (rampUpTimeSeconds + 1)
+val speedDeltaPerSecond = math.max(1, rowsPerSecond / (rampUpTimeSeconds + 1))

--- End diff --

Keeping at least 1 per second and leaving the other seconds at 0 is OK, in my opinion.
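
The effect of the `math.max(1, ...)` clamp in this diff can be checked in isolation. A minimal sketch: `SpeedDeltaSketch`, `originalDelta`, and `clampedDelta` are names made up here; only the two expressions come from the removed and added lines of the diff.

```scala
object SpeedDeltaSketch {
  // Removed line: integer division truncates to 0 when rowsPerSecond <= rampUpTimeSeconds.
  def originalDelta(rowsPerSecond: Long, rampUpTimeSeconds: Long): Long =
    rowsPerSecond / (rampUpTimeSeconds + 1)

  // Added line: never let the per-second speed increase drop below 1.
  def clampedDelta(rowsPerSecond: Long, rampUpTimeSeconds: Long): Long =
    math.max(1, rowsPerSecond / (rampUpTimeSeconds + 1))
}
```

For example, with `rowsPerSecond = 5` and `rampUpTimeSeconds = 10` the original expression evaluates to 0, so no rows would be emitted during ramp-up, whereas the clamped one evaluates to 1. When the division already gives a positive result (say 10 rows over a 4-second ramp-up, giving 2), both expressions agree.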

[GitHub] spark issue #20150: [SPARK-22956][SS] Bug fix for 2 streams union failover s...

Github user xuanyuanking commented on the issue:

https://github.com/apache/spark/pull/20150

cc @zsxwing

[GitHub] spark issue #20150: [SPARK-22956][SS] Bug fix for 2 streams union failover s...

Github user xuanyuanking commented on the issue:

https://github.com/apache/spark/pull/20150

cc @gatorsmile @cloud-fan

[GitHub] spark issue #20150: [SPARK-22956][SS] Bug fix for 2 streams union failover s...

Github user xuanyuanking commented on the issue:

https://github.com/apache/spark/pull/20150

Thanks for your review, Shixiong!

[GitHub] spark pull request #17702: [SPARK-20408][SQL] Get the glob path in parallel ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/17702#discussion_r163156332

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DataSource.scala

---

@@ -668,4 +672,31 @@ object DataSource extends Logging {

}

globPath

}

+

+ /**

+ * Return all paths represented by the wildcard string.

+ * Follow [[InMemoryFileIndex]].bulkListLeafFile and reuse the conf.

+ */

+ private def getGlobbedPaths(

+ sparkSession: SparkSession,

+ fs: FileSystem,

+ hadoopConf: SerializableConfiguration,

+ qualified: Path): Seq[Path] = {

+val paths = SparkHadoopUtil.get.expandGlobPath(fs, qualified)

+if (paths.size <=

sparkSession.sessionState.conf.parallelPartitionDiscoveryThreshold) {

+ SparkHadoopUtil.get.globPathIfNecessary(fs, qualified)

+} else {

+ val parallelPartitionDiscoveryParallelism =

+

sparkSession.sessionState.conf.parallelPartitionDiscoveryParallelism

+ val numParallelism = Math.min(paths.size,

parallelPartitionDiscoveryParallelism)

+ val expanded = sparkSession.sparkContext

--- End diff --

Sorry for the late reply; this is done in the next commit.

[GitHub] spark pull request #20150: [SPARK-22956][SS] Bug fix for 2 streams union fai...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/20150#discussion_r161426641

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/memory.scala

---

@@ -122,6 +122,11 @@ case class MemoryStream[A : Encoder](id: Int,

sqlContext: SQLContext)

batches.slice(sliceStart, sliceEnd)

}

+if (newBlocks.isEmpty) {

--- End diff --

DONE

[GitHub] spark pull request #20150: [SPARK-22956][SS] Bug fix for 2 streams union fai...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/20150#discussion_r161426622

--- Diff:

external/kafka-0-10-sql/src/test/scala/org/apache/spark/sql/kafka010/KafkaSourceSuite.scala

---

@@ -318,6 +318,84 @@ class KafkaSourceSuite extends KafkaSourceTest {

)

}

+ test("union bug in failover") {

+def getSpecificDF(range: Range.Inclusive):

org.apache.spark.sql.Dataset[Int] = {

+ val topic = newTopic()

+ testUtils.createTopic(topic, partitions = 1)

+ testUtils.sendMessages(topic, range.map(_.toString).toArray, Some(0))

+

+ val reader = spark

+.readStream

+.format("kafka")

+.option("kafka.bootstrap.servers", testUtils.brokerAddress)

+.option("kafka.metadata.max.age.ms", "1")

+.option("maxOffsetsPerTrigger", 5)

+.option("subscribe", topic)

+.option("startingOffsets", "earliest")

+

+ reader.load()

+.selectExpr("CAST(value AS STRING)")

+.as[String]

+.map(k => k.toInt)

+}

+

+val df1 = getSpecificDF(0 to 9)

+val df2 = getSpecificDF(100 to 199)

+

+val kafka = df1.union(df2)

+

+val clock = new StreamManualClock

+

+val waitUntilBatchProcessed = AssertOnQuery { q =>

+ eventually(Timeout(streamingTimeout)) {

+if (!q.exception.isDefined) {

+ assert(clock.isStreamWaitingAt(clock.getTimeMillis()))

+}

+ }

+ if (q.exception.isDefined) {

+throw q.exception.get

+ }

+ true

+}

+

+testStream(kafka)(

+ StartStream(ProcessingTime(100), clock),

+ waitUntilBatchProcessed,

+ // 5 from smaller topic, 5 from bigger one

+ CheckAnswer(0, 1, 2, 3, 4, 100, 101, 102, 103, 104),

--- End diff --

Cool, this makes the code cleaner.

[GitHub] spark pull request #20150: [SPARK-22956][SS] Bug fix for 2 streams union fai...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/20150#discussion_r161426632

--- Diff:

external/kafka-0-10-sql/src/test/scala/org/apache/spark/sql/kafka010/KafkaSourceSuite.scala

---

@@ -318,6 +318,84 @@ class KafkaSourceSuite extends KafkaSourceTest {

)

}

+ test("union bug in failover") {

--- End diff --

DONE

[GitHub] spark issue #17702: [SPARK-20408][SQL] Get the glob path in parallel to redu...

Github user xuanyuanking commented on the issue:

https://github.com/apache/spark/pull/17702

ping @vanzin

[GitHub] spark issue #20150: [SPARK-22956][SS] Bug fix for 2 streams union failover s...

Github user xuanyuanking commented on the issue:

https://github.com/apache/spark/pull/20150

Hi Shixiong, thanks a lot for your reply. The full stack trace below can be reproduced by running the added UT against the original code base.

```
Assert on query failed: : Query [id = 3421db21-652e-47af-9d54-2b74a222abed, runId = cd8d7c94-1286-44a5-b000-a8d870aef6fa] terminated with exception: Partition topic-0-0's offset was changed from 10 to 5, some data may have been missed. Some data may have been lost because they are not available in Kafka any more; either the data was aged out by Kafka or the topic may have been deleted before all the data in the topic was processed. If you don't want your streaming query to fail on such cases, set the source option "failOnDataLoss" to "false".
org.apache.spark.sql.execution.streaming.StreamExecution.org$apache$spark$sql$execution$streaming$StreamExecution$$runStream(StreamExecution.scala:295)
org.apache.spark.sql.execution.streaming.StreamExecution$$anon$1.run(StreamExecution.scala:189)
Caused by: Partition topic-0-0's offset was changed from 10 to 5, some data may have been missed. Some data may have been lost because they are not available in Kafka any more; either the data was aged out by Kafka or the topic may have been deleted before all the data in the topic was processed. If you don't want your streaming query to fail on such cases, set the source option "failOnDataLoss" to "false".
org.apache.spark.sql.kafka010.KafkaSource.org$apache$spark$sql$kafka010$KafkaSource$$reportDataLoss(KafkaSource.scala:332)
org.apache.spark.sql.kafka010.KafkaSource$$anonfun$8.apply(KafkaSource.scala:291)
org.apache.spark.sql.kafka010.KafkaSource$$anonfun$8.apply(KafkaSource.scala:289)
scala.collection.TraversableLike$$anonfun$filterImpl$1.apply(TraversableLike.scala:248)
scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)
scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)
scala.collection.TraversableLike$class.filterImpl(TraversableLike.scala:247)
scala.collection.TraversableLike$class.filter(TraversableLike.scala:259)
scala.collection.AbstractTraversable.filter(Traversable.scala:104)
org.apache.spark.sql.kafka010.KafkaSource.getBatch(KafkaSource.scala:289)
```

[GitHub] spark issue #20244: [SPARK-23053][CORE] taskBinarySerialization and task par...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/20244 reopen this...

[GitHub] spark pull request #20244: [SPARK-23053][CORE] taskBinarySerialization and t...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/20244#discussion_r161141499

--- Diff:

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala ---

@@ -96,6 +98,22 @@ class MyRDD(

override def toString: String = "DAGSchedulerSuiteRDD " + id

}

+/** Wrapped rdd partition. */

+class WrappedPartition(val partition: Partition) extends Partition {

+ def index: Int = partition.index

+}

+

+/** Wrapped rdd with WrappedPartition. */

+class WrappedRDD(parent: RDD[Int]) extends RDD[Int](parent) {

+ protected def getPartitions: Array[Partition] = {

+parent.partitions.map(p => new WrappedPartition(p))

+ }

+

+ def compute(split: Partition, context: TaskContext): Iterator[Int] = {

+parent.compute(split.asInstanceOf[WrappedPartition].partition, context)

--- End diff --

I think this line is the key point of `WrappedPartition` and `WrappedRDD`; please add a comment explaining your intention.
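To make the point about the cast concrete, here is a minimal sketch that uses stand-in types rather than Spark's own `Partition` and `RDD` classes. `PlainPartition`, `unwrap` and `castFails` are hypothetical names introduced only for this illustration; the sketch assumes that the cast in `compute` is what fails when a partition of another type is handed in.

```scala
// Stand-ins for Spark's Partition types; illustrative only, not Spark classes.
object WrappedPartitionDemo {
  trait Partition { def index: Int }

  // Hypothetical "other" partition type, e.g. what an rdd exposes after checkpointing.
  final case class PlainPartition(index: Int) extends Partition

  // Mirrors the WrappedPartition in the diff: `index` is delegated to the inner partition.
  final class WrappedPartition(val partition: Partition) extends Partition {
    def index: Int = partition.index
  }

  // Mirrors the cast in WrappedRDD.compute: only a WrappedPartition can be unwrapped.
  def unwrap(split: Partition): Partition =
    split.asInstanceOf[WrappedPartition].partition

  // Returns true when unwrapping `split` throws a ClassCastException.
  def castFails(split: Partition): Boolean =
    try { unwrap(split); false }
    catch { case _: ClassCastException => true }
}
```

Passing a `WrappedPartition` unwraps cleanly, while passing a `PlainPartition` trips the cast, which is the behaviour the wrapper classes appear to be designed to expose.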


[GitHub] spark pull request #20244: [SPARK-23053][CORE] taskBinarySerialization and t...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/20244#discussion_r161144809

--- Diff:

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala ---

@@ -2399,6 +2417,93 @@ class DAGSchedulerSuite extends SparkFunSuite with

LocalSparkContext with TimeLi

}

}

+  /**
+   * In this test, we simply simulate the scene in concurrent jobs using the same
+   * rdd which is marked to do checkpoint:
+   * Job one has already finished the spark job, and start the process of doCheckpoint;
+   * Job two is submitted, and submitMissingTasks is called.
+   * In submitMissingTasks, if taskSerialization is called before doCheckpoint is done,
+   * while part calculates from stage.rdd.partitions is called after doCheckpoint is done,
+   * we may get a ClassCastException when execute the task because of some rdd will do
+   * Partition cast.
+   *
+   * With this test case, just want to indicate that we should do taskSerialization and
+   * part calculate in submitMissingTasks with the same rdd checkpoint status.
+   */
+  test("task part misType with checkpoint rdd in concurrent execution scenes") {

+// set checkpointDir.

+val tempDir = Utils.createTempDir()

+val checkpointDir = File.createTempFile("temp", "", tempDir)

+checkpointDir.delete()

+sc.setCheckpointDir(checkpointDir.toString)

+

+val latch = new CountDownLatch(2)

+val semaphore1 = new Semaphore(0)

+val semaphore2 = new Semaphore(0)

+

+val rdd = new WrappedRDD(sc.makeRDD(1 to 100, 4))

+rdd.checkpoint()

+

+val checkpointRunnable = new Runnable {

+ override def run() = {

+// Simply simulate what RDD.doCheckpoint() do here.

+rdd.doCheckpointCalled = true

+val checkpointData = rdd.checkpointData.get

+RDDCheckpointData.synchronized {

+ if (checkpointData.cpState == CheckpointState.Initialized) {

+checkpointData.cpState =

CheckpointState.CheckpointingInProgress

+ }

+}

+

+val newRDD = checkpointData.doCheckpoint()

+

+// Release semaphore1 after job triggered in checkpoint finished.

+semaphore1.release()

+semaphore2.acquire()

+// Update our state and truncate the RDD lineage.

+RDDCheckpointData.synchronized {

+ checkpointData.cpRDD = Some(newRDD)

+ checkpointData.cpState = CheckpointState.Checkpointed

+ rdd.markCheckpointed()

+}

+semaphore1.release()

+

+latch.countDown()

+ }

+}

+

+val submitMissingTasksRunnable = new Runnable {

+ override def run() = {

+// Simply simulate the process of submitMissingTasks.

+val ser = SparkEnv.get.closureSerializer.newInstance()

+semaphore1.acquire()

+// Simulate task serialization while submitMissingTasks.

+// Task serialized with rdd checkpoint not finished.

+val cleanedFunc = sc.clean(Utils.getIteratorSize _)

+val func = (ctx: TaskContext, it: Iterator[Int]) => cleanedFunc(it)

+val taskBinaryBytes = JavaUtils.bufferToArray(

+ ser.serialize((rdd, func): AnyRef))

+semaphore2.release()

+semaphore1.acquire()

+// Part calculated with rdd checkpoint already finished.

+val (taskRdd, taskFunc) = ser.deserialize[(RDD[Int], (TaskContext,

Iterator[Int]) => Unit)](

+ ByteBuffer.wrap(taskBinaryBytes),

Thread.currentThread.getContextClassLoader)

+val part = rdd.partitions(0)

+intercept[ClassCastException] {

--- End diff --

I don't think this is a "test"; it is just a "reproduction" of the problem you want to fix. We should prove that the code you added in `DAGScheduler.scala` fixes the problem, and that a `ClassCastException` is raised with the original code base.
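One way to picture the two-sided check being asked for is the sketch below. It is not the `DAGScheduler` code: `ToyRdd`, `snapshot`, `runUnsynchronized` and `runConsistent` are hypothetical names, and the checkpoint race is simulated deterministically on a single thread. The assumption is that the fix amounts to reading the serialized task and the partitions under the same checkpoint state.

```scala
object CheckpointRaceDemo {
  trait Partition { def index: Int }
  final case class CheckpointPartition(index: Int) extends Partition
  final class WrappedPartition(val partition: Partition) extends Partition {
    def index: Int = partition.index
  }

  // A toy "rdd": its partitions and its compute function switch together on checkpoint.
  final class ToyRdd {
    private var checkpointed = false
    def markCheckpointed(): Unit = synchronized { checkpointed = true }

    // A consistent (partitions, compute) pair taken under one lock.
    def snapshot(): (Seq[Partition], Partition => Int) = synchronized {
      if (checkpointed) {
        (Seq[Partition](CheckpointPartition(0)), (p: Partition) => p.index)
      } else {
        (Seq[Partition](new WrappedPartition(CheckpointPartition(0))),
          (p: Partition) => p.asInstanceOf[WrappedPartition].partition.index)
      }
    }
  }

  // Old behaviour: compute is captured before the checkpoint, partitions are read after it.
  def runUnsynchronized(rdd: ToyRdd): Int = {
    val (_, compute) = rdd.snapshot()
    rdd.markCheckpointed() // the concurrent checkpoint lands in between
    val (parts, _) = rdd.snapshot()
    compute(parts.head)
  }

  // Intended behaviour: both pieces come from the same snapshot.
  def runConsistent(rdd: ToyRdd): Int = {
    val (parts, compute) = rdd.snapshot()
    rdd.markCheckpointed()
    compute(parts.head)
  }
}
```

A regression test in this style would assert both halves: `runUnsynchronized` raises a `ClassCastException`, and `runConsistent` completes normally.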


[GitHub] spark issue #20244: [SPARK-23053][CORE] taskBinarySerialization and task par...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/20244 ok to test

[GitHub] spark pull request #20244: [SPARK-23053][CORE] taskBinarySerialization and t...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/20244#discussion_r161141879

--- Diff:

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala ---

@@ -2399,6 +2417,93 @@ class DAGSchedulerSuite extends SparkFunSuite with

LocalSparkContext with TimeLi

}

}

+  /**
+   * In this test, we simply simulate the scene in concurrent jobs using the same
+   * rdd which is marked to do checkpoint:
+   * Job one has already finished the spark job, and start the process of doCheckpoint;
+   * Job two is submitted, and submitMissingTasks is called.
+   * In submitMissingTasks, if taskSerialization is called before doCheckpoint is done,
+   * while part calculates from stage.rdd.partitions is called after doCheckpoint is done,
+   * we may get a ClassCastException when execute the task because of some rdd will do
+   * Partition cast.
+   *
+   * With this test case, just want to indicate that we should do taskSerialization and
+   * part calculate in submitMissingTasks with the same rdd checkpoint status.
+   */
+  test("task part misType with checkpoint rdd in concurrent execution scenes") {

--- End diff --

Maybe "SPARK-23053: avoid CastException in concurrent execution with checkpoint" would be better?


[GitHub] spark issue #20244: [SPARK-23053][CORE] taskBinarySerialization and task par...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/20244 test this please

[GitHub] spark issue #20244: [SPARK-23053][CORE] taskBinarySerialization and task par...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/20244 @ivoson Tengfei, please post the full stack trace of the `ClassCastException`.

[GitHub] spark issue #20675: [SPARK-23033][SS][Follow Up] Task level retry for contin...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/20675 retest this please

[GitHub] spark pull request #20675: [SPARK-23033][SS][Follow Up] Task level retry for...

GitHub user xuanyuanking opened a pull request: https://github.com/apache/spark/pull/20675

[SPARK-23033][SS][Follow Up] Task level retry for continuous processing

## What changes were proposed in this pull request?

This PR reimplements task-level retry for continuous processing. The changes include:

1. Add a new `EpochCoordinatorMessage` named `GetLastEpochAndOffset`, used to fetch the last epoch and offset of a particular partition when a task is restarted.
2. Add a `setOffset` function to `ContinuousDataReader`, so that a BaseReader can restart from a given offset.

## How was this patch tested?

Added a new UT in `ContinuousSuite` and a new `StreamAction` named `CheckAnswerRowsContainsOnlyOnce` for more accurate result checking.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/xuanyuanking/spark SPARK-23033

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/20675.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #20675

commit 21f574e2a3ad3c8e68b92776d2a141d7fcb90502
Author: Yuanjian Li <xyliyuanjian@...>
Date: 2018-02-26T07:27:10Z

[SPARK-23033][SS][Follow Up] Task level retry for continuous processing
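The bookkeeping behind a `GetLastEpochAndOffset` style lookup could look roughly like the sketch below. This is not the PR's implementation and does not use Spark's RPC layer: `EpochOffsetTracker`, `EpochAndOffset`, `report` and `lastEpochAndOffset` are hypothetical names, and the sketch assumes that the coordinator only needs to remember the newest epoch and offset reported for each partition.

```scala
object EpochTrackerDemo {
  // Hypothetical stand-in for the state a coordinator would keep per partition.
  final case class EpochAndOffset(epoch: Long, offset: Long)

  final class EpochOffsetTracker {
    private val last = scala.collection.mutable.Map.empty[Int, EpochAndOffset]

    // Record the offset a partition reached in an epoch,
    // keeping only the newest epoch seen for that partition.
    def report(partition: Int, epoch: Long, offset: Long): Unit = synchronized {
      if (last.get(partition).forall(_.epoch <= epoch)) {
        last(partition) = EpochAndOffset(epoch, offset)
      }
    }

    // What a restarted task would ask for; None means nothing was reported yet.
    def lastEpochAndOffset(partition: Int): Option[EpochAndOffset] = synchronized {
      last.get(partition)
    }
  }
}
```

A restarted task would then query its partition and, if a value comes back, hand the offset to the reader's `setOffset` before resuming.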

[GitHub] spark issue #20675: [SPARK-23033][SS][Follow Up] Task level retry for contin...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/20675

cc @tdas and @jose-torres. #20225 gives a quick fix for task-level retry; this is an attempt at a possibly better implementation. Please let me know if I have done something wrong or misunderstood Continuous Processing. Thanks :)

[GitHub] spark pull request #20150: [SPARK-22956][SS] Bug fix for 2 streams union fai...

GitHub user xuanyuanking opened a pull request: https://github.com/apache/spark/pull/20150

[SPARK-22956][SS] Bug fix for 2 streams union failover scenario

## What changes were proposed in this pull request?

This problem was reported by @yanlin-Lynn, @ivoson and @LiangchangZ. Thanks!

When we union 2 streams from Kafka or other sources, and one of them has no continuous data coming in while a task restarts at the same time, an `IllegalStateException` is raised. The main cause is the code in [MicroBatchExecution](https://github.com/apache/spark/blob/master/sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/MicroBatchExecution.scala#L190): when one stream has no continuous data, its committedOffset is the same as its availableOffset during `populateStartOffsets`, and `currentPartitionOffsets` is not properly handled in KafkaSource. Maybe we should also consider this scenario in other Sources.

## How was this patch tested?

Added a UT in KafkaSourceSuite.scala

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/xuanyuanking/spark SPARK-22956

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/20150.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #20150

commit aa3d7b73ed5221bdc2aee9dea1f6db45b4a626d7
Author: Yuanjian Li <xyliyuanjian@...>
Date: 2018-01-04T11:52:23Z

SPARK-22956: Bug fix for 2 streams union failover scenario

[GitHub] spark pull request #20675: [SPARK-23033][SS][Follow Up] Task level retry for...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/20675#discussion_r170830121

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/streaming/continuous/ContinuousSuite.scala

---

@@ -219,18 +219,59 @@ class ContinuousSuite extends ContinuousSuiteBase {

spark.sparkContext.addSparkListener(listener)

try {

testStream(df, useV2Sink = true)(

-StartStream(Trigger.Continuous(100)),

+StartStream(longContinuousTrigger),

+AwaitEpoch(0),

Execute(waitForRateSourceTriggers(_, 2)),

+IncrementEpoch(),

Execute { _ =>

// Wait until a task is started, then kill its first attempt.

eventually(timeout(streamingTimeout)) {

assert(taskId != -1)

}

spark.sparkContext.killTaskAttempt(taskId)

},

-ExpectFailure[SparkException] { e =>

- e.getCause != null &&

e.getCause.getCause.isInstanceOf[ContinuousTaskRetryException]

-})

+Execute(waitForRateSourceTriggers(_, 4)),

+IncrementEpoch(),

+// Check the answer exactly, if there's duplicated result,

CheckAnserRowsContains

+// will also return true.

+CheckAnswerRowsContainsOnlyOnce(scala.Range(0, 20).map(Row(_))),

--- End diff --

Actually, I first used `CheckAnswer(0 to 19: _*)` here, but the test case failed, probably because CP may not stop exactly within Range(0, 20) every time. See the logs below:

```

== Plan ==

== Parsed Logical Plan ==

WriteToDataSourceV2

org.apache.spark.sql.execution.streaming.sources.MemoryStreamWriter@6435422d

+- Project [value#13L]

+- StreamingDataSourceV2Relation [timestamp#12, value#13L],

org.apache.spark.sql.execution.streaming.continuous.RateStreamContinuousReader@5c5d9c45

== Analyzed Logical Plan ==

WriteToDataSourceV2

org.apache.spark.sql.execution.streaming.sources.MemoryStreamWriter@6435422d

+- Project [value#13L]

+- StreamingDataSourceV2Relation [timestamp#12, value#13L],

org.apache.spark.sql.execution.streaming.continuous.RateStreamContinuousReader@5c5d9c45

== Optimized Logical Plan ==

WriteToDataSourceV2

org.apache.spark.sql.execution.streaming.sources.MemoryStreamWriter@6435422d

+- Project [value#13L]

+- StreamingDataSourceV2Relation [timestamp#12, value#13L],

org.apache.spark.sql.execution.streaming.continuous.RateStreamContinuousReader@5c5d9c45

== Physical Plan ==

WriteToDataSourceV2

org.apache.spark.sql.execution.streaming.sources.MemoryStreamWriter@6435422d

+- *(1) Project [value#13L]

+- *(1) DataSourceV2Scan [timestamp#12, value#13L],

org.apache.spark.sql.execution.streaming.continuous.RateStreamContinuousReader@5c5d9c45

ScalaTestFailureLocation: org.apache.spark.sql.streaming.StreamTest$class

at (StreamTest.scala:436)

org.scalatest.exceptions.TestFailedException:

== Results ==

!== Correct Answer - 20 ==   == Spark Answer - 25 ==
!struct                      struct
 [0]                         [0]
 [10]                        [10]
 [11]                        [11]
 [12]                        [12]
 [13]                        [13]
 [14]                        [14]
 [15]                        [15]
 [16]                        [16]
 [17]                        [17]
 [18]                        [18]
 [19]                        [19]
 [1]                         [1]
![2]                         [20]
![3]                         [21]
![4]                         [22]
![5]                         [23]
![6]                         [24]
![7]                         [2]
![8]                         [3]
![9]                         [4]
!                            [5]
!                            [6]
!                            [7]
!                            [8]
!                            [9]

== Progress ==

StartStream(ContinuousTrigger(360),org.apache.spark.util.SystemClock@343e225a,Map(),null)

AssertOnQuery(, )

AssertOnQuery(, )

AssertOnQuery(, )

AssertOnQuery(, )

AssertOnQuery(, )

AssertOnQuery(, )

=> CheckAnswer:

[0],[1],[2],[3],[4],[5],[6],[7],[8],[9],[10],[11],[12],[13],[14],[15],[16],[17],[18],[19]

StopStream

```
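The difference between a plain "contains" check and an "only once" check can be sketched on bare integers, as below. This is not the `StreamAction` added in the PR: `containsAll` and `containsOnlyOnce` are hypothetical helpers, and the sketch assumes that rows outside the expected range (such as 20 to 24 in the log above) should be tolerated while duplicates of expected rows should not.

```scala
object OnlyOnceDemo {
  // True when every expected value occurs at least once in `actual`.
  def containsAll(actual: Seq[Int], expected: Seq[Int]): Boolean =
    expected.forall(v => actual.contains(v))

  // True when every expected value occurs exactly once in `actual`;
  // values outside `expected` are ignored.
  def containsOnlyOnce(actual: Seq[Int], expected: Seq[Int]): Boolean = {
    val counts = actual.groupBy(identity).map { case (v, vs) => v -> vs.size }
    expected.forall(v => counts.getOrElse(v, 0) == 1)
  }
}
```

With the 25 rows from the log, both checks accept the result for the expected range 0 to 19. If a retried task re-emitted a row, `containsAll` would still pass, while `containsOnlyOnce` would reject the result.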


[GitHub] spark issue #20675: [SPARK-23033][SS][Follow Up] Task level retry for contin...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/20675

Many thanks for your detailed reply!

> The semantics aren't quite right. Task-level retry can happen a fixed number of times for the lifetime of the task, which is the lifetime of the query - even if it runs for days after, the attempt number will never be reset.

- I think the attempt number never being reset is not a problem, as long as the task starts with the right epoch and offset. Maybe I don't understand what you mean by the semantics; could you please explain further?
- As far as I'm concerned, with a larger degree of parallelism, a whole-stage restart is too heavy an operation and will cause the data flow to fluctuate.
- One further thought: after CP supports shuffle and more complex scenarios, task-level retry will need more work to ensure data correctness. But it may still be a useful feature? I just want to leave this patch here and start a discussion about it :)

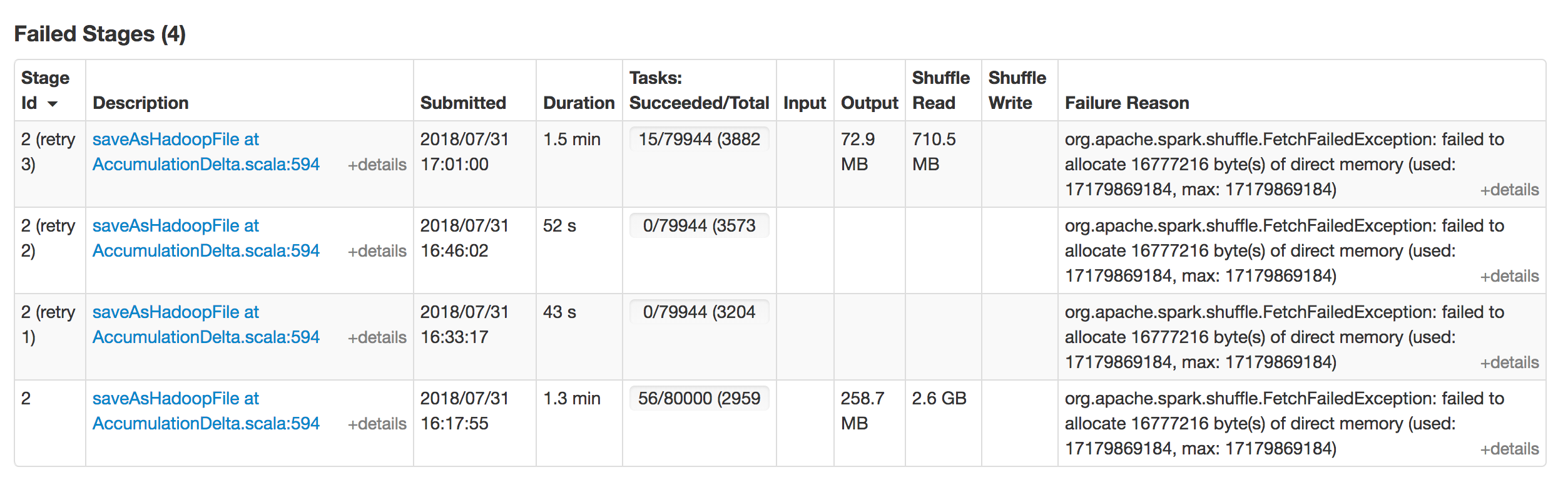

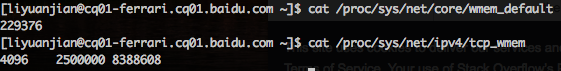

[GitHub] spark pull request #21945: [SPARK-24989][Core] Add retrying support for OutO...

GitHub user xuanyuanking opened a pull request: https://github.com/apache/spark/pull/21945

[SPARK-24989][Core] Add retrying support for OutOfDirectMemoryError

## What changes were proposed in this pull request?

As described in detail in [SPARK-24989](https://issues.apache.org/jira/browse/SPARK-24989), add retry support in RetryingBlockFetcher for when io.netty.maxDirectMemory is reached. The details of the failed stages are attached below:

## How was this patch tested?

Added a UT in RetryingBlockFetcherSuite.java and tested with the job mentioned above.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/xuanyuanking/spark SPARK-24989

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21945.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #21945

commit bb6841b3a7a160e252fe35dab82f4ddeb0032591
Author: Yuanjian Li
Date: 2018-08-01T16:15:09Z

[SPARK-24989][Core] Add retry support for OutOfDirectMemoryError

[GitHub] spark issue #21945: [SPARK-24989][Core] Add retrying support for OutOfDirect...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21945

Closing this; the parameter `spark.reducer.maxBlocksInFlightPerAddress`, added after version 2.2, solves my problem.

[GitHub] spark pull request #21945: [SPARK-24989][Core] Add retrying support for OutO...

Github user xuanyuanking closed the pull request at: https://github.com/apache/spark/pull/21945

[GitHub] spark pull request #21893: [SPARK-24965][SQL] Support selecting from partiti...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21893#discussion_r206188190

--- Diff:

sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/MultiFormatTableSuite.scala

---

@@ -0,0 +1,514 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.hive.execution

+

+import java.io.File

+import java.net.URI

+

+import org.scalatest.BeforeAndAfterEach

+import org.scalatest.Matchers

+

+import org.apache.spark.sql.{DataFrame, QueryTest, Row}

+import org.apache.spark.sql.catalyst.TableIdentifier

+import org.apache.spark.sql.catalyst.catalog.CatalogTableType

+import org.apache.spark.sql.execution._

+import org.apache.spark.sql.execution.command.DDLUtils

+import org.apache.spark.sql.hive.test.TestHiveSingleton

+import org.apache.spark.sql.internal.SQLConf

+import org.apache.spark.sql.test.SQLTestUtils

+

+class MultiFormatTableSuite

+ extends QueryTest with SQLTestUtils with TestHiveSingleton with

BeforeAndAfterEach with Matchers {

+ import testImplicits._

+

+ val parser = new SparkSqlParser(new SQLConf())

+

+ override def afterEach(): Unit = {

+try {

+ // drop all databases, tables and functions after each test

+ spark.sessionState.catalog.reset()

+} finally {

+ super.afterEach()

+}

+ }

+

+ val partitionCol = "dt"

+ val partitionVal1 = "2018-01-26"

+ val partitionVal2 = "2018-01-27"

+

+ private case class PartitionDefinition(

+ column: String,

+ value: String,

+ location: URI,

+ format: Option[String] = None

+ ) {

+

+def toSpec: String = {

+ s"($column='$value')"

+}

+def toSpecAsMap: Map[String, String] = {

+ Map(column -> value)

+}

+ }

+

+ test("create hive table with multi format partitions") {

+val catalog = spark.sessionState.catalog

+withTempDir { baseDir =>

+

+ val partitionedTable = "ext_multiformat_partition_table"

+ withTable(partitionedTable) {

+assert(baseDir.listFiles.isEmpty)

+

+val partitions = createMultiformatPartitionDefinitions(baseDir)

+

+createTableWithPartitions(partitionedTable, baseDir, partitions)

+

+// Check table storage type is PARQUET

+val hiveResultTable =

+ catalog.getTableMetadata(TableIdentifier(partitionedTable,

Some("default")))

+assert(DDLUtils.isHiveTable(hiveResultTable))

+assert(hiveResultTable.tableType == CatalogTableType.EXTERNAL)

+assert(hiveResultTable.storage.inputFormat

+

.contains("org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat")

+)

+assert(hiveResultTable.storage.outputFormat

+

.contains("org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat")

+)

+assert(hiveResultTable.storage.serde

+

.contains("org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe")

+)

+

+// Check table has correct partitions

+assert(

+ catalog.listPartitions(TableIdentifier(partitionedTable,

+Some("default"))).map(_.spec).toSet ==

partitions.map(_.toSpecAsMap).toSet

+)

+

+// Check first table partition storage type is PARQUET

+val parquetPartition = catalog.getPartition(

+ TableIdentifier(partitionedTable, Some("default")),

+ partitions.head.toSpecAsMap

+)

+assert(

+ parquetPartition.storage.serde

+

.contains("org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe")

+)

+

+// Check second table partition storage type is AVRO

+

[GitHub] spark pull request #21893: [SPARK-24965][SQL] Support selecting from partiti...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21893#discussion_r206182473

--- Diff:

sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/MultiFormatTableSuite.scala

---


[GitHub] spark pull request #21893: [SPARK-24965][SQL] Support selecting from partiti...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21893#discussion_r206188295

--- Diff:

sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/MultiFormatTableSuite.scala

---

@@ -0,0 +1,514 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.hive.execution

+

+import java.io.File

+import java.net.URI

+

+import org.scalatest.BeforeAndAfterEach

+import org.scalatest.Matchers

+

+import org.apache.spark.sql.{DataFrame, QueryTest, Row}

+import org.apache.spark.sql.catalyst.TableIdentifier

+import org.apache.spark.sql.catalyst.catalog.CatalogTableType

+import org.apache.spark.sql.execution._

+import org.apache.spark.sql.execution.command.DDLUtils

+import org.apache.spark.sql.hive.test.TestHiveSingleton

+import org.apache.spark.sql.internal.SQLConf

+import org.apache.spark.sql.test.SQLTestUtils

+

+class MultiFormatTableSuite

+ extends QueryTest with SQLTestUtils with TestHiveSingleton with

BeforeAndAfterEach with Matchers {

+ import testImplicits._

+

+ val parser = new SparkSqlParser(new SQLConf())

+

+ override def afterEach(): Unit = {

+try {

+ // drop all databases, tables and functions after each test

+ spark.sessionState.catalog.reset()

+} finally {

+ super.afterEach()

+}

+ }

+

+ val partitionCol = "dt"

+ val partitionVal1 = "2018-01-26"

+ val partitionVal2 = "2018-01-27"

+

+ private case class PartitionDefinition(

+ column: String,

+ value: String,

+ location: URI,

+ format: Option[String] = None

+ ) {

+

+def toSpec: String = {

+ s"($column='$value')"

+}

+def toSpecAsMap: Map[String, String] = {

+ Map(column -> value)

+}

+ }

+

+ test("create hive table with multi format partitions") {

+val catalog = spark.sessionState.catalog

+withTempDir { baseDir =>

+

+ val partitionedTable = "ext_multiformat_partition_table"

+ withTable(partitionedTable) {

+assert(baseDir.listFiles.isEmpty)

+

+val partitions = createMultiformatPartitionDefinitions(baseDir)

+

+createTableWithPartitions(partitionedTable, baseDir, partitions)

+

+// Check table storage type is PARQUET

+val hiveResultTable =

+ catalog.getTableMetadata(TableIdentifier(partitionedTable,

Some("default")))

+assert(DDLUtils.isHiveTable(hiveResultTable))

+assert(hiveResultTable.tableType == CatalogTableType.EXTERNAL)

+assert(hiveResultTable.storage.inputFormat

+

.contains("org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat")

+)

+assert(hiveResultTable.storage.outputFormat

+

.contains("org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat")

+)

+assert(hiveResultTable.storage.serde

+

.contains("org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe")

+)

+

+// Check table has correct partititons

+assert(

+ catalog.listPartitions(TableIdentifier(partitionedTable,

+Some("default"))).map(_.spec).toSet ==

partitions.map(_.toSpecAsMap).toSet

+)

+

+// Check first table partition storage type is PARQUET

+val parquetPartition = catalog.getPartition(

+ TableIdentifier(partitionedTable, Some("default")),

+ partitions.head.toSpecAsMap

+)

+assert(

+ parquetPartition.storage.serde

+ .contains("org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe")

+)

+

+// Check second table partition storage type is AVRO

+

[GitHub] spark pull request #21893: [SPARK-24965][SQL] Support selecting from partiti...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21893#discussion_r206190334

--- Diff: sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/MultiFormatTableSuite.scala ---

@@ -0,0 +1,514 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.hive.execution

+

+import java.io.File

+import java.net.URI

+

+import org.scalatest.BeforeAndAfterEach

+import org.scalatest.Matchers

+

+import org.apache.spark.sql.{DataFrame, QueryTest, Row}

+import org.apache.spark.sql.catalyst.TableIdentifier

+import org.apache.spark.sql.catalyst.catalog.CatalogTableType

+import org.apache.spark.sql.execution._

+import org.apache.spark.sql.execution.command.DDLUtils

+import org.apache.spark.sql.hive.test.TestHiveSingleton

+import org.apache.spark.sql.internal.SQLConf

+import org.apache.spark.sql.test.SQLTestUtils

+

+class MultiFormatTableSuite

+ extends QueryTest with SQLTestUtils with TestHiveSingleton with BeforeAndAfterEach with Matchers {

+ import testImplicits._

+

+ val parser = new SparkSqlParser(new SQLConf())

+

+ override def afterEach(): Unit = {

+try {

+ // drop all databases, tables and functions after each test

+ spark.sessionState.catalog.reset()

+} finally {

+ super.afterEach()

+}

+ }

+

+ val partitionCol = "dt"

+ val partitionVal1 = "2018-01-26"

+ val partitionVal2 = "2018-01-27"

+

+ private case class PartitionDefinition(

+ column: String,

+ value: String,

+ location: URI,

+ format: Option[String] = None

+ ) {

--- End diff --

There is no need to start a new line here.
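
For illustration, one reading of this comment is that the closing `) {` can sit on the same line as the last parameter instead of on its own line. The sketch below is a standalone, hypothetical copy of the helper from the diff (made non-private so it compiles outside the suite; the `PartitionDefinitionDemo` object and the example URI are assumptions added only to exercise it), not the final code from the PR:

```scala
import java.net.URI

// Hypothetical standalone copy of the helper from the diff above.
// The closing parenthesis and opening brace share the line with the
// last parameter, so no extra line is started.
final case class PartitionDefinition(
    column: String,
    value: String,
    location: URI,
    format: Option[String] = None) {

  // Renders the partition as a SQL partition spec, e.g. (dt='2018-01-26').
  def toSpec: String = s"($column='$value')"

  // Renders the partition as the Map form compared against catalog specs.
  def toSpecAsMap: Map[String, String] = Map(column -> value)
}

// Hypothetical driver, only to show the two helper methods in use.
object PartitionDefinitionDemo {
  def main(args: Array[String]): Unit = {
    val p = PartitionDefinition("dt", "2018-01-26", new URI("file:/tmp/dt=2018-01-26"))
    println(p.toSpec)      // prints (dt='2018-01-26')
    println(p.toSpecAsMap) // prints Map(dt -> 2018-01-26)
  }
}
```

Behaviour is unchanged by the layout; only the placement of `) {` differs from the quoted diff.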


[GitHub] spark pull request #21893: [SPARK-24965][SQL] Support selecting from partiti...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21893#discussion_r206184350

--- Diff: sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/MultiFormatTableSuite.scala ---

@@ -0,0 +1,514 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.hive.execution

+

+import java.io.File

+import java.net.URI

+

+import org.scalatest.BeforeAndAfterEach

+import org.scalatest.Matchers

+

+import org.apache.spark.sql.{DataFrame, QueryTest, Row}

+import org.apache.spark.sql.catalyst.TableIdentifier

+import org.apache.spark.sql.catalyst.catalog.CatalogTableType

+import org.apache.spark.sql.execution._

+import org.apache.spark.sql.execution.command.DDLUtils

+import org.apache.spark.sql.hive.test.TestHiveSingleton

+import org.apache.spark.sql.internal.SQLConf

+import org.apache.spark.sql.test.SQLTestUtils

+

+class MultiFormatTableSuite

+ extends QueryTest with SQLTestUtils with TestHiveSingleton with BeforeAndAfterEach with Matchers {

+ import testImplicits._

+

+ val parser = new SparkSqlParser(new SQLConf())

+

+ override def afterEach(): Unit = {

+try {

+ // drop all databases, tables and functions after each test

+ spark.sessionState.catalog.reset()

+} finally {

+ super.afterEach()

+}

+ }

+

+ val partitionCol = "dt"

+ val partitionVal1 = "2018-01-26"

+ val partitionVal2 = "2018-01-27"

+

+ private case class PartitionDefinition(

+ column: String,

+ value: String,

+ location: URI,

+ format: Option[String] = None

+ ) {

+

+def toSpec: String = {

+ s"($column='$value')"

+}

+def toSpecAsMap: Map[String, String] = {

+ Map(column -> value)

+}

+ }

+

+ test("create hive table with multi format partitions") {

+val catalog = spark.sessionState.catalog

+withTempDir { baseDir =>

+

+ val partitionedTable = "ext_multiformat_partition_table"

+ withTable(partitionedTable) {

+assert(baseDir.listFiles.isEmpty)

+

+val partitions = createMultiformatPartitionDefinitions(baseDir)

+

+createTableWithPartitions(partitionedTable, baseDir, partitions)

+

+// Check table storage type is PARQUET

+val hiveResultTable =

+ catalog.getTableMetadata(TableIdentifier(partitionedTable, Some("default")))

+assert(DDLUtils.isHiveTable(hiveResultTable))

+assert(hiveResultTable.tableType == CatalogTableType.EXTERNAL)

+assert(hiveResultTable.storage.inputFormat

+ .contains("org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat")

+)