[spark] branch master updated: [SPARK-43334][UI] Fix error while serializing ExecutorPeakMetricsDistributions into API response

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 5ec13854620 [SPARK-43334][UI] Fix error while serializing

ExecutorPeakMetricsDistributions into API response

5ec13854620 is described below

commit 5ec138546205ba4248cc9ec72c3b7baf60f2fede

Author: Thejdeep Gudivada

AuthorDate: Wed May 24 18:25:36 2023 -0500

[SPARK-43334][UI] Fix error while serializing

ExecutorPeakMetricsDistributions into API response

When we calculate the quantile information from the peak executor metrics

values for the distribution, there is a possibility of running into an

`ArrayIndexOutOfBounds` exception when the metric values are empty. This PR

addresses that and fixes it by returning an empty array if the values are empty.

### Why are the changes needed?

Without these changes, when the withDetails query parameter is used to

query the stages REST API, we encounter a partial JSON response since the peak

executor metrics distribution cannot be serialized due to the above index error.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added a unit test to test this behavior

Closes #41017 from thejdeep/SPARK-43334.

Authored-by: Thejdeep Gudivada

Signed-off-by: Sean Owen

---

.../main/scala/org/apache/spark/status/AppStatusStore.scala | 9 +

.../main/scala/org/apache/spark/status/AppStatusUtils.scala | 12

core/src/main/scala/org/apache/spark/status/api/v1/api.scala | 7 +++

.../scala/org/apache/spark/status/AppStatusUtilsSuite.scala | 11 +++

4 files changed, 27 insertions(+), 12 deletions(-)

diff --git a/core/src/main/scala/org/apache/spark/status/AppStatusStore.scala

b/core/src/main/scala/org/apache/spark/status/AppStatusStore.scala

index d02d4b2507a..eaa7b7b9873 100644

--- a/core/src/main/scala/org/apache/spark/status/AppStatusStore.scala

+++ b/core/src/main/scala/org/apache/spark/status/AppStatusStore.scala

@@ -27,6 +27,7 @@ import scala.collection.mutable.HashMap

import org.apache.spark.{JobExecutionStatus, SparkConf, SparkContext}

import org.apache.spark.internal.Logging

import org.apache.spark.internal.config.Status.LIVE_UI_LOCAL_STORE_DIR

+import org.apache.spark.status.AppStatusUtils.getQuantilesValue

import org.apache.spark.status.api.v1

import org.apache.spark.storage.FallbackStorage.FALLBACK_BLOCK_MANAGER_ID

import org.apache.spark.ui.scope._

@@ -770,14 +771,6 @@ private[spark] class AppStatusStore(

}

}

- def getQuantilesValue(

-values: IndexedSeq[Double],

-quantiles: Array[Double]): IndexedSeq[Double] = {

-val count = values.size

-val indices = quantiles.map { q => math.min((q * count).toLong, count - 1)

}

-indices.map(i => values(i.toInt)).toIndexedSeq

- }

-

def rdd(rddId: Int): v1.RDDStorageInfo = {

store.read(classOf[RDDStorageInfoWrapper], rddId).info

}

diff --git a/core/src/main/scala/org/apache/spark/status/AppStatusUtils.scala

b/core/src/main/scala/org/apache/spark/status/AppStatusUtils.scala

index 87f434daf48..04918ccbd57 100644

--- a/core/src/main/scala/org/apache/spark/status/AppStatusUtils.scala

+++ b/core/src/main/scala/org/apache/spark/status/AppStatusUtils.scala

@@ -72,4 +72,16 @@ private[spark] object AppStatusUtils {

-1

}

}

+

+ def getQuantilesValue(

+values: IndexedSeq[Double],

+quantiles: Array[Double]): IndexedSeq[Double] = {

+val count = values.size

+if (count > 0) {

+ val indices = quantiles.map { q => math.min((q * count).toLong, count -

1) }

+ indices.map(i => values(i.toInt)).toIndexedSeq

+} else {

+ IndexedSeq.fill(quantiles.length)(0.0)

+}

+ }

}

diff --git a/core/src/main/scala/org/apache/spark/status/api/v1/api.scala

b/core/src/main/scala/org/apache/spark/status/api/v1/api.scala

index e272cf04dc7..f436d16ca47 100644

--- a/core/src/main/scala/org/apache/spark/status/api/v1/api.scala

+++ b/core/src/main/scala/org/apache/spark/status/api/v1/api.scala

@@ -31,6 +31,7 @@ import org.apache.spark.JobExecutionStatus

import org.apache.spark.executor.ExecutorMetrics

import org.apache.spark.metrics.ExecutorMetricType

import org.apache.spark.resource.{ExecutorResourceRequest,

ResourceInformation, TaskResourceRequest}

+import org.apache.spark.status.AppStatusUtils.getQuantilesValue

case class ApplicationInfo private[spark](

id: String,

@@ -454,13 +455,11 @@ class ExecutorMetricsDistributions private[spark](

class ExecutorPeakMetricsDistributions private[spark](

val quantiles: IndexedSeq[Double],

val executorMetrics: IndexedSeq[ExecutorMetrics]) {

- private lazy val count = executorMetrics.length

- private lazy val indices = quantiles.map { q => math.min((q * count).toLong,

count - 1) }

/** Returns

[spark] branch master updated (1c6b5382051 -> f2b4ff2769b)

This is an automated email from the ASF dual-hosted git repository. srowen pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from 1c6b5382051 [SPARK-43771][BUILD][CONNECT] Upgrade mima-core from 1.1.0 to 1.1.2 add f2b4ff2769b [SPARK-43573][BUILD] Make SparkBuilder could config the heap size of test JVM No new revisions were added by this update. Summary of changes: project/SparkBuild.scala | 6 -- 1 file changed, 4 insertions(+), 2 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (afc508722e0 -> 5d03950b358)

This is an automated email from the ASF dual-hosted git repository. srowen pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from afc508722e0 [SPARK-43595][BUILD] Update some maven plugins to newest version add 5d03950b358 [SPARK-43534][BUILD] Add log4j-1.2-api and log4j-slf4j2-impl to classpath if active hadoop-provided No new revisions were added by this update. Summary of changes: pom.xml | 2 -- 1 file changed, 2 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-43595][BUILD] Update some maven plugins to newest version

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new afc508722e0 [SPARK-43595][BUILD] Update some maven plugins to newest

version

afc508722e0 is described below

commit afc508722e07cf8fceb24204f538e51c6192c3e4

Author: panbingkun

AuthorDate: Sat May 20 08:50:16 2023 -0500

[SPARK-43595][BUILD] Update some maven plugins to newest version

### What changes were proposed in this pull request?

The pr aims to update some maven plugins to newest version. include:

- exec-maven-plugin from 1.6.0 to 3.1.0

- scala-maven-plugin from 4.8.0 to 4.8.1

- maven-antrun-plugin from 1.8 to 3.1.0

- maven-enforcer-plugin from 3.2.1 to 3.3.0

- build-helper-maven-plugin from 3.3.0 to 3.4.0

- maven-surefire-plugin from 3.0.0 to 3.1.0

- maven-assembly-plugin from 3.1.0 to 3.6.0

- maven-install-plugin from 3.1.0 to 3.1.1

- maven-deploy-plugin from 3.1.0 to 3.1.1

- maven-checkstyle-plugin from 3.2.1 to 3.2.2

### Why are the changes needed?

Routine upgrade.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass GA.

Closes #41228 from panbingkun/maven_plugin_upgrade.

Authored-by: panbingkun

Signed-off-by: Sean Owen

---

pom.xml | 20 ++--

1 file changed, 10 insertions(+), 10 deletions(-)

diff --git a/pom.xml b/pom.xml

index dfc54b25705..1c4c4eb0fa6 100644

--- a/pom.xml

+++ b/pom.xml

@@ -115,7 +115,7 @@

${java.version}

${java.version}

3.8.8

-1.6.0

+3.1.0

spark

9.5

2.0.7

@@ -175,7 +175,7 @@

errors building different Hadoop versions.

See: SPARK-36547, SPARK-38394.

-->

-4.8.0

+4.8.1

false

2.15.0

@@ -210,7 +210,7 @@

4.7.2

4.7.2

2.67.0

-1.8

+3.1.0

1.1.0

1.5.0

1.60

@@ -2744,7 +2744,7 @@

org.apache.maven.plugins

maven-enforcer-plugin

- 3.2.1

+ 3.3.0

enforce-versions

@@ -2787,7 +2787,7 @@

org.codehaus.mojo

build-helper-maven-plugin

- 3.3.0

+ 3.4.0

module-timestamp-property

@@ -2907,7 +2907,7 @@

org.apache.maven.plugins

maven-surefire-plugin

- 3.0.0

+ 3.1.0

@@ -3118,7 +3118,7 @@

org.apache.maven.plugins

maven-assembly-plugin

- 3.1.0

+ 3.6.0

posix

@@ -3143,12 +3143,12 @@

org.apache.maven.plugins

maven-install-plugin

- 3.1.0

+ 3.1.1

org.apache.maven.plugins

maven-deploy-plugin

- 3.1.0

+ 3.1.1

org.apache.maven.plugins

@@ -3293,7 +3293,7 @@

org.apache.maven.plugins

maven-checkstyle-plugin

-3.2.1

+3.2.2

false

true

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (37b9c532d69 -> f55fdca10b1)

This is an automated email from the ASF dual-hosted git repository. srowen pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from 37b9c532d69 [SPARK-43542][SS] Define a new error class and apply for the case where streaming query fails due to concurrent run of streaming query with same checkpoint add f55fdca10b1 [MINOR][INFRA] Deduplicate `scikit-learn` in Dockerfile No new revisions were added by this update. Summary of changes: dev/infra/Dockerfile | 2 +- 1 file changed, 1 insertion(+), 1 deletion(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-43537][INFA][BUILD] Upgrading the ASM dependencies used in the `tools` module to 9.4

This is an automated email from the ASF dual-hosted git repository. srowen pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/master by this push: new 9785353684b [SPARK-43537][INFA][BUILD] Upgrading the ASM dependencies used in the `tools` module to 9.4 9785353684b is described below commit 9785353684bdc2a2c7445b7e6b9ab85154f6933f Author: yangjie01 AuthorDate: Wed May 17 11:18:14 2023 -0500 [SPARK-43537][INFA][BUILD] Upgrading the ASM dependencies used in the `tools` module to 9.4 ### What changes were proposed in this pull request? This pr aims upgrade ASM related dependencies in the `tools` module from version 7.1 to version 9.4 to make `GenerateMIMAIgnore` can process Java 17+ compiled code. Additionally, this pr defines `asm.version` to manage versions of ASM. ### Why are the changes needed? The classpath processed by `GenerateMIMAIgnore` cannot contain Java 17+ compiled code now due to the ASM version use by `tools` module is too low, but https://github.com/bmc/classutil has not been updated for a long time, we can't solve the problem by upgrading `classutil`, so this pr make the `tools` module explicitly rely on ASM 9.4 for workaround. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? - Pass GitHub Action - Manual checked `dev/mima` due to this pr upgrade the dependency of tools module ``` dev/mima ``` and ``` dev/change-scala-version.sh 2.13 dev/mima -Pscala-2.13 ``` - A case that can reproduce the problem: run following script with master branch: ``` set -o pipefail set -e FWDIR="$(cd "`dirname "$0"`"/..; pwd)" cd "$FWDIR" export SPARK_HOME=$FWDIR echo $SPARK_HOME if [[ -x "$JAVA_HOME/bin/java" ]]; then JAVA_CMD="$JAVA_HOME/bin/java" else JAVA_CMD=java fi TOOLS_CLASSPATH="$(build/sbt -DcopyDependencies=false "export tools/fullClasspath" | grep jar | tail -n1)" ASSEMBLY_CLASSPATH="$(build/sbt -DcopyDependencies=false "export assembly/fullClasspath" | grep jar | tail -n1)" rm -f .generated-mima* $JAVA_CMD \ -Xmx2g \ -XX:+IgnoreUnrecognizedVMOptions --add-opens=java.base/java.util.jar=ALL-UNNAMED \ -cp "$TOOLS_CLASSPATH:$ASSEMBLY_CLASSPATH" \ org.apache.spark.tools.GenerateMIMAIgnore rm -f .generated-mima* ``` **Before** ``` Exception in thread "main" java.lang.IllegalArgumentException: Unsupported class file major version 61 at org.objectweb.asm.ClassReader.(ClassReader.java:195) at org.objectweb.asm.ClassReader.(ClassReader.java:176) at org.objectweb.asm.ClassReader.(ClassReader.java:162) at org.objectweb.asm.ClassReader.(ClassReader.java:283) at org.clapper.classutil.asm.ClassFile$.load(ClassFinderImpl.scala:222) at org.clapper.classutil.ClassFinder.classData(ClassFinder.scala:404) at org.clapper.classutil.ClassFinder.$anonfun$processOpenZip$2(ClassFinder.scala:359) at scala.collection.Iterator$$anon$10.next(Iterator.scala:461) at scala.collection.Iterator$$anon$11.nextCur(Iterator.scala:486) at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:492) at scala.collection.Iterator.toStream(Iterator.scala:1417) at scala.collection.Iterator.toStream$(Iterator.scala:1416) at scala.collection.AbstractIterator.toStream(Iterator.scala:1431) at scala.collection.Iterator.$anonfun$toStream$1(Iterator.scala:1417) at scala.collection.immutable.Stream$Cons.tail(Stream.scala:1173) at scala.collection.immutable.Stream$Cons.tail(Stream.scala:1163) at scala.collection.immutable.Stream.$anonfun$$plus$plus$1(Stream.scala:372) at scala.collection.immutable.Stream$Cons.tail(Stream.scala:1173) at scala.collection.immutable.Stream$Cons.tail(Stream.scala:1163) at scala.collection.immutable.Stream.$anonfun$$plus$plus$1(Stream.scala:372) at scala.collection.immutable.Stream$Cons.tail(Stream.scala:1173) at scala.collection.immutable.Stream$Cons.tail(Stream.scala:1163) at scala.collection.immutable.Stream.$anonfun$map$1(Stream.scala:418) at scala.collection.immutable.Stream$Cons.tail(Stream.scala:1173) at scala.collection.immutable.Stream$Cons.tail(Stream.scala:1163) at scala.collection.immutable.Stream.filterImpl(Stream.scala:506) at scala.collection.immutable.Stream$.$anonfun$filteredTail$1(Stream.scala:1260) at scala.collection.immutable.Stream$Cons.tail(Stream.scala:1173) at scala.collection.immutable.Stream$Cons.tail(Stream.scala:1163) at scala.collection.immutable.S

[spark] branch master updated: [MINOR] Remove redundant character escape "\\" and add UT

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new b8f22f33308 [MINOR] Remove redundant character escape "\\" and add UT

b8f22f33308 is described below

commit b8f22f33308ab51b93052457dba17b04c2daeb4a

Author: panbingkun

AuthorDate: Mon May 15 18:04:31 2023 -0500

[MINOR] Remove redundant character escape "\\" and add UT

### What changes were proposed in this pull request?

The pr aims to remove redundant character escape "\\" and add UT for

SparkHadoopUtil.substituteHadoopVariables.

### Why are the changes needed?

Make code clean & remove warning.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass GA & Add new UT.

Closes #41170 from panbingkun/SparkHadoopUtil_fix.

Authored-by: panbingkun

Signed-off-by: Sean Owen

---

.../org/apache/spark/deploy/SparkHadoopUtil.scala | 4 +-

.../apache/spark/deploy/SparkHadoopUtilSuite.scala | 52 ++

2 files changed, 54 insertions(+), 2 deletions(-)

diff --git a/core/src/main/scala/org/apache/spark/deploy/SparkHadoopUtil.scala

b/core/src/main/scala/org/apache/spark/deploy/SparkHadoopUtil.scala

index 4908a081367..9ff2621b791 100644

--- a/core/src/main/scala/org/apache/spark/deploy/SparkHadoopUtil.scala

+++ b/core/src/main/scala/org/apache/spark/deploy/SparkHadoopUtil.scala

@@ -174,7 +174,7 @@ private[spark] class SparkHadoopUtil extends Logging {

* So we need a map to track the bytes read from the child threads and

parent thread,

* summing them together to get the bytes read of this task.

*/

-new Function0[Long] {

+new (() => Long) {

private val bytesReadMap = new mutable.HashMap[Long, Long]()

override def apply(): Long = {

@@ -248,7 +248,7 @@ private[spark] class SparkHadoopUtil extends Logging {

if (isGlobPath(pattern)) globPath(fs, pattern) else Seq(pattern)

}

- private val HADOOP_CONF_PATTERN =

"(\\$\\{hadoopconf-[^\\}\\$\\s]+\\})".r.unanchored

+ private val HADOOP_CONF_PATTERN =

"(\\$\\{hadoopconf-[^}$\\s]+})".r.unanchored

/**

* Substitute variables by looking them up in Hadoop configs. Only variables

that match the

diff --git

a/core/src/test/scala/org/apache/spark/deploy/SparkHadoopUtilSuite.scala

b/core/src/test/scala/org/apache/spark/deploy/SparkHadoopUtilSuite.scala

index 17f1476cd8d..6250b7d0ed2 100644

--- a/core/src/test/scala/org/apache/spark/deploy/SparkHadoopUtilSuite.scala

+++ b/core/src/test/scala/org/apache/spark/deploy/SparkHadoopUtilSuite.scala

@@ -123,6 +123,58 @@ class SparkHadoopUtilSuite extends SparkFunSuite {

assertConfigValue(hadoopConf, "fs.s3a.session.token", null)

}

+ test("substituteHadoopVariables") {

+val hadoopConf = new Configuration(false)

+hadoopConf.set("xxx", "yyy")

+

+val text1 = "${hadoopconf-xxx}"

+val result1 = new SparkHadoopUtil().substituteHadoopVariables(text1,

hadoopConf)

+assert(result1 == "yyy")

+

+val text2 = "${hadoopconf-xxx"

+val result2 = new SparkHadoopUtil().substituteHadoopVariables(text2,

hadoopConf)

+assert(result2 == "${hadoopconf-xxx")

+

+val text3 = "${hadoopconf-xxx}zzz"

+val result3 = new SparkHadoopUtil().substituteHadoopVariables(text3,

hadoopConf)

+assert(result3 == "yyyzzz")

+

+val text4 = "www${hadoopconf-xxx}zzz"

+val result4 = new SparkHadoopUtil().substituteHadoopVariables(text4,

hadoopConf)

+assert(result4 == "wwwyyyzzz")

+

+val text5 = "www${hadoopconf-xxx}"

+val result5 = new SparkHadoopUtil().substituteHadoopVariables(text5,

hadoopConf)

+assert(result5 == "wwwyyy")

+

+val text6 = "www${hadoopconf-xxx"

+val result6 = new SparkHadoopUtil().substituteHadoopVariables(text6,

hadoopConf)

+assert(result6 == "www${hadoopconf-xxx")

+

+val text7 = "www$hadoopconf-xxx}"

+val result7 = new SparkHadoopUtil().substituteHadoopVariables(text7,

hadoopConf)

+assert(result7 == "www$hadoopconf-xxx}")

+

+val text8 = "www{hadoopconf-xxx}"

+val result8 = new SparkHadoopUtil().substituteHadoopVariables(text8,

hadoopConf)

+assert(result8 == "www{hadoopconf-xxx}")

+ }

+

+ test("Redundant character escape '\\}' in RegExp ") {

+val HADOOP_CONF_PATTERN_1 = "(\\$\\{hadoopconf-[^}$\\s]+})".r.unanchored

+val HADOOP_CONF_PATTERN_2 = "(\\$\\{hadoopconf-[^}$\\s]+\\})".r.unanchored

+

+val text = "www${hadoopconf-xxx}zzz"

+val target1 = text match {

+ case HADOOP_CONF_PATTERN_1(matched) =&

[spark] branch master updated: [SPARK-43508][DOC] Replace the link related to hadoop version 2 with hadoop version 3

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new cadfef6f807 [SPARK-43508][DOC] Replace the link related to hadoop

version 2 with hadoop version 3

cadfef6f807 is described below

commit cadfef6f807a75ff403f6dd9234a3996ec7c691c

Author: panbingkun

AuthorDate: Mon May 15 09:44:03 2023 -0500

[SPARK-43508][DOC] Replace the link related to hadoop version 2 with hadoop

version 3

### What changes were proposed in this pull request?

The pr aims to replace the link related to hadoop version 2 with hadoop

version 3

### Why are the changes needed?

Because [SPARK-40651](https://issues.apache.org/jira/browse/SPARK-40651)

Drop Hadoop2 binary distribtuion from release process and

[SPARK-42447](https://issues.apache.org/jira/browse/SPARK-42447) Remove Hadoop

2 GitHub Action job.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manual test.

Closes #41171 from panbingkun/SPARK-43508.

Authored-by: panbingkun

Signed-off-by: Sean Owen

---

docs/streaming-programming-guide.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/docs/streaming-programming-guide.md

b/docs/streaming-programming-guide.md

index 5ed66eab348..f8f98ca5442 100644

--- a/docs/streaming-programming-guide.md

+++ b/docs/streaming-programming-guide.md

@@ -748,7 +748,7 @@ of the store is consistent with that expected by Spark

Streaming. It may be

that writing directly into a destination directory is the appropriate strategy

for

streaming data via the chosen object store.

-For more details on this topic, consult the [Hadoop Filesystem

Specification](https://hadoop.apache.org/docs/stable2/hadoop-project-dist/hadoop-common/filesystem/introduction.html).

+For more details on this topic, consult the [Hadoop Filesystem

Specification](https://hadoop.apache.org/docs/stable3/hadoop-project-dist/hadoop-common/filesystem/introduction.html).

Streams based on Custom Receivers

{:.no_toc}

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-43495][BUILD] Upgrade RoaringBitmap to 0.9.44

This is an automated email from the ASF dual-hosted git repository. srowen pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/master by this push: new bee8187d731 [SPARK-43495][BUILD] Upgrade RoaringBitmap to 0.9.44 bee8187d731 is described below commit bee8187d7319ededf82701b4fd2a2928cd56c7f8 Author: yangjie01 AuthorDate: Mon May 15 08:46:20 2023 -0500 [SPARK-43495][BUILD] Upgrade RoaringBitmap to 0.9.44 ### What changes were proposed in this pull request? This pr aims upgrade `RoaringBitmap` from 0.9.39 to 0.9.44. ### Why are the changes needed? The new version brings 2 bug fix: - https://github.com/RoaringBitmap/RoaringBitmap/issues/619 | https://github.com/RoaringBitmap/RoaringBitmap/pull/620 - https://github.com/RoaringBitmap/RoaringBitmap/issues/623 | https://github.com/RoaringBitmap/RoaringBitmap/pull/624 The full release notes as follows: - https://github.com/RoaringBitmap/RoaringBitmap/releases/tag/0.9.40 - https://github.com/RoaringBitmap/RoaringBitmap/releases/tag/0.9.41 - https://github.com/RoaringBitmap/RoaringBitmap/releases/tag/0.9.44 ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Pass GitHub Actions Closes #41165 from LuciferYang/SPARK-43495. Authored-by: yangjie01 Signed-off-by: Sean Owen --- core/benchmarks/MapStatusesConvertBenchmark-jdk11-results.txt | 10 +- core/benchmarks/MapStatusesConvertBenchmark-jdk17-results.txt | 10 +- core/benchmarks/MapStatusesConvertBenchmark-results.txt | 10 +- dev/deps/spark-deps-hadoop-3-hive-2.3 | 4 ++-- pom.xml | 2 +- 5 files changed, 18 insertions(+), 18 deletions(-) diff --git a/core/benchmarks/MapStatusesConvertBenchmark-jdk11-results.txt b/core/benchmarks/MapStatusesConvertBenchmark-jdk11-results.txt index ef9dd139ff2..f42b95e8d4c 100644 --- a/core/benchmarks/MapStatusesConvertBenchmark-jdk11-results.txt +++ b/core/benchmarks/MapStatusesConvertBenchmark-jdk11-results.txt @@ -2,12 +2,12 @@ MapStatuses Convert Benchmark -OpenJDK 64-Bit Server VM 11.0.18+10 on Linux 5.15.0-1031-azure -Intel(R) Xeon(R) Platinum 8370C CPU @ 2.80GHz +OpenJDK 64-Bit Server VM 11.0.19+7 on Linux 5.15.0-1037-azure +Intel(R) Xeon(R) CPU E5-2673 v4 @ 2.30GHz MapStatuses Convert: Best Time(ms) Avg Time(ms) Stdev(ms)Rate(M/s) Per Row(ns) Relative -Num Maps: 5 Fetch partitions:500 1288 1317 38 0.0 1288194389.0 1.0X -Num Maps: 5 Fetch partitions:1000 2608 2671 65 0.0 2607771122.0 0.5X -Num Maps: 5 Fetch partitions:1500 3985 4026 64 0.0 3984885770.0 0.3X +Num Maps: 5 Fetch partitions:500 1346 1367 28 0.0 1345826909.0 1.0X +Num Maps: 5 Fetch partitions:1000 2807 2818 11 0.0 2806866333.0 0.5X +Num Maps: 5 Fetch partitions:1500 4287 4308 19 0.0 4286688536.0 0.3X diff --git a/core/benchmarks/MapStatusesConvertBenchmark-jdk17-results.txt b/core/benchmarks/MapStatusesConvertBenchmark-jdk17-results.txt index 12af87d9689..b0b61cc11ef 100644 --- a/core/benchmarks/MapStatusesConvertBenchmark-jdk17-results.txt +++ b/core/benchmarks/MapStatusesConvertBenchmark-jdk17-results.txt @@ -2,12 +2,12 @@ MapStatuses Convert Benchmark -OpenJDK 64-Bit Server VM 17.0.6+10 on Linux 5.15.0-1031-azure -Intel(R) Xeon(R) Platinum 8370C CPU @ 2.80GHz +OpenJDK 64-Bit Server VM 17.0.7+7 on Linux 5.15.0-1037-azure +Intel(R) Xeon(R) Platinum 8272CL CPU @ 2.60GHz MapStatuses Convert: Best Time(ms) Avg Time(ms) Stdev(ms)Rate(M/s) Per Row(ns) Relative -Num Maps: 5 Fetch partitions:500 1052 1061 12 0.0 1051946292.0 1.0X -Num Maps: 5 Fetch partitions:1000 1888 2007 109 0.0 1888235523.0 0.6X -Num Maps: 5 Fetch partitions:1500 3070 3149 81 0.0 3070386448.0 0.3X +Num Maps: 5 Fetch partitions:500 1041 1050 16 0.0

[spark] branch master updated: [SPARK-43489][BUILD] Remove protobuf 2.5.0

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new b23185080cc [SPARK-43489][BUILD] Remove protobuf 2.5.0

b23185080cc is described below

commit b23185080cc3e5a00b88496cec70c2b3cd7019f5

Author: Cheng Pan

AuthorDate: Sun May 14 08:09:37 2023 -0500

[SPARK-43489][BUILD] Remove protobuf 2.5.0

### What changes were proposed in this pull request?

Spark does not use protobuf 2.5.0 directly, instead, it comes from other

dependencies, with the following changes, now, Spark does not require protobuf

2.5.0 (please let me know if I miss something),

- SPARK-40323 upgraded ORC 1.8.0, which moved from protobuf 2.5.0 to a

shaded protobuf 3

- SPARK-33212 switched from Hadoop vanilla client to Hadoop shaded client,

also removed the protobuf 2 dependency. SPARK-42452 removed the support for

Hadoop 2.

- SPARK-14421 shaded and relocated protobuf 2.6.1, which is required by the

kinesis client, into the kinesis assembly jar

- Spark itself's core/connect/protobuf modules use protobuf 3, also shaded

and relocated all protobuf 3 deps.

### Why are the changes needed?

Remove the obsolete dependency, which is EOL long ago, and has CVEs

[CVE-2022-3510](https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2022-3510)

[CVE-2022-3509](https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2022-3509)

[CVE-2022-3171](https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2022-3171)

[CVE-2021-22569](https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2021-22569)

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass GA.

Closes #41153 from pan3793/remove-protobuf-2.

Authored-by: Cheng Pan

Signed-off-by: Sean Owen

---

connector/connect/client/jvm/pom.xml | 1 -

connector/connect/common/pom.xml | 1 -

connector/connect/server/pom.xml | 2 --

connector/protobuf/pom.xml| 2 --

core/pom.xml | 3 +--

dev/deps/spark-deps-hadoop-3-hive-2.3 | 1 -

pom.xml | 18 --

sql/core/pom.xml | 1 -

sql/hive/pom.xml | 11 ---

9 files changed, 9 insertions(+), 31 deletions(-)

diff --git a/connector/connect/client/jvm/pom.xml

b/connector/connect/client/jvm/pom.xml

index 8543057d0c0..413764d0ea2 100644

--- a/connector/connect/client/jvm/pom.xml

+++ b/connector/connect/client/jvm/pom.xml

@@ -65,7 +65,6 @@

com.google.protobuf

protobuf-java

- ${protobuf.version}

compile

diff --git a/connector/connect/common/pom.xml b/connector/connect/common/pom.xml

index e457620e593..06076646df7 100644

--- a/connector/connect/common/pom.xml

+++ b/connector/connect/common/pom.xml

@@ -57,7 +57,6 @@

com.google.protobuf

protobuf-java

-${protobuf.version}

compile

diff --git a/connector/connect/server/pom.xml b/connector/connect/server/pom.xml

index a62c420bcc0..8313f21f4ba 100644

--- a/connector/connect/server/pom.xml

+++ b/connector/connect/server/pom.xml

@@ -170,13 +170,11 @@

com.google.protobuf

protobuf-java

- ${protobuf.version}

compile

com.google.protobuf

protobuf-java-util

- ${protobuf.version}

compile

diff --git a/connector/protobuf/pom.xml b/connector/protobuf/pom.xml

index 6feef54ce71..e85f07841df 100644

--- a/connector/protobuf/pom.xml

+++ b/connector/protobuf/pom.xml

@@ -79,13 +79,11 @@

com.google.protobuf

protobuf-java

- ${protobuf.version}

compile

com.google.protobuf

protobuf-java-util

- ${protobuf.version}

compile

diff --git a/core/pom.xml b/core/pom.xml

index 66e41837d52..09b0a2af96f 100644

--- a/core/pom.xml

+++ b/core/pom.xml

@@ -536,7 +536,6 @@

com.google.protobuf

protobuf-java

- ${protobuf.version}

compile

@@ -627,7 +626,7 @@

true

true

-

guava,jetty-io,jetty-servlet,jetty-servlets,jetty-continuation,jetty-http,jetty-plus,jetty-util,jetty-server,jetty-security,jetty-proxy,jetty-client

+

guava,protobuf-java,jetty-io,jetty-servlet,jetty-servlets,jetty-continuation,jetty-http,jetty-plus,jetty-util,jetty-server,jetty-security,jetty-proxy,jetty-client

true

diff --git a/dev/deps/spark-deps-hadoop-3-hive-2.3

b/dev/deps/spark-deps-hadoop-3-hive-2.3

index c23bb89c983..7e702e44c40 100644

--- a/dev/deps/spark-deps-hadoop-3-hive-2.3

+++ b/dev/deps/spark-deps-hadoop-3-hive-2.3

@@ -221,7 +221,6 @@

parquet-format-structures/1.13.0

[spark] branch master updated: [SPARK-43138][CORE] Fix ClassNotFoundException during migration

This is an automated email from the ASF dual-hosted git repository. srowen pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/master by this push: new 37a0ae3511c [SPARK-43138][CORE] Fix ClassNotFoundException during migration 37a0ae3511c is described below commit 37a0ae3511c9f153537d5928e9938f72763f5464 Author: Emil Ejbyfeldt AuthorDate: Thu May 11 08:25:45 2023 -0500 [SPARK-43138][CORE] Fix ClassNotFoundException during migration ### What changes were proposed in this pull request? This PR fixes an unhandled ClassNotFoundException during RDD block decommissions migrations. ``` 2023-04-08 04:15:11,791 ERROR server.TransportRequestHandler: Error while invoking RpcHandler#receive() on RPC id 6425687122551756860 java.lang.ClassNotFoundException: com.class.from.user.jar.ClassName at java.base/jdk.internal.loader.BuiltinClassLoader.loadClass(BuiltinClassLoader.java:581) at java.base/jdk.internal.loader.ClassLoaders$AppClassLoader.loadClass(ClassLoaders.java:178) at java.base/java.lang.ClassLoader.loadClass(ClassLoader.java:522) at java.base/java.lang.Class.forName0(Native Method) at java.base/java.lang.Class.forName(Class.java:398) at org.apache.spark.serializer.JavaDeserializationStream$$anon$1.resolveClass(JavaSerializer.scala:71) at java.base/java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:2003) at java.base/java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1870) at java.base/java.io.ObjectInputStream.readClass(ObjectInputStream.java:1833) at java.base/java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1658) at java.base/java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2496) at java.base/java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2390) at java.base/java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2228) at java.base/java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1687) at java.base/java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2496) at java.base/java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2390) at java.base/java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2228) at java.base/java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1687) at java.base/java.io.ObjectInputStream.readObject(ObjectInputStream.java:489) at java.base/java.io.ObjectInputStream.readObject(ObjectInputStream.java:447) at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:87) at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:123) at org.apache.spark.network.netty.NettyBlockRpcServer.deserializeMetadata(NettyBlockRpcServer.scala:180) at org.apache.spark.network.netty.NettyBlockRpcServer.receive(NettyBlockRpcServer.scala:119) at org.apache.spark.network.server.TransportRequestHandler.processRpcRequest(TransportRequestHandler.java:163) at org.apache.spark.network.server.TransportRequestHandler.handle(TransportRequestHandler.java:109) at org.apache.spark.network.server.TransportChannelHandler.channelRead0(TransportChannelHandler.java:140) at org.apache.spark.network.server.TransportChannelHandler.channelRead0(TransportChannelHandler.java:53) at io.netty.channel.SimpleChannelInboundHandler.channelRead(SimpleChannelInboundHandler.java:99) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) at io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:103) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357

[spark] branch master updated: [SPARK-40912][CORE] Overhead of Exceptions in KryoDeserializationStream

This is an automated email from the ASF dual-hosted git repository. srowen pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/master by this push: new 4def99d54fc [SPARK-40912][CORE] Overhead of Exceptions in KryoDeserializationStream 4def99d54fc is described below commit 4def99d54fcb55e80fb4f5f9558af1739b385e6c Author: Emil Ejbyfeldt AuthorDate: Wed May 10 08:23:07 2023 -0500 [SPARK-40912][CORE] Overhead of Exceptions in KryoDeserializationStream ### What changes were proposed in this pull request? This PR avoid exceptions in the implementation of KryoDeserializationStream. ### Why are the changes needed? Using an exceptions for end of stream is slow, especially for small streams. It also problematic as it the exception caught in the KryoDeserializationStream could also be caused by corrupt data which would just be ignored in the current implementation. ### Does this PR introduce _any_ user-facing change? Yes, it changes so some method on KryoDeserializationStream no longer raises EOFException. ### How was this patch tested? Existing tests. This PR only changes KryoDeserializationStream as a proof of concept. If this is the direction we want to go we should probably change DerserializationStream isntead so that the interface is consistent. Closes #38428 from eejbyfeldt/SPARK-40912. Authored-by: Emil Ejbyfeldt Signed-off-by: Sean Owen --- core/benchmarks/KryoBenchmark-jdk11-results.txt| 40 +++ core/benchmarks/KryoBenchmark-jdk17-results.txt| 36 +++ core/benchmarks/KryoBenchmark-results.txt | 40 +++ .../KryoIteratorBenchmark-jdk11-results.txt| 28 + .../KryoIteratorBenchmark-jdk17-results.txt| 28 + core/benchmarks/KryoIteratorBenchmark-results.txt | 28 + .../KryoSerializerBenchmark-jdk11-results.txt | 8 +- .../KryoSerializerBenchmark-jdk17-results.txt | 6 +- .../benchmarks/KryoSerializerBenchmark-results.txt | 8 +- .../apache/spark/serializer/KryoSerializer.scala | 48 - .../util/collection/ExternalAppendOnlyMap.scala| 46 +++- .../spark/serializer/KryoIteratorBenchmark.scala | 120 + .../spark/serializer/KryoSerializerSuite.scala | 24 - 13 files changed, 360 insertions(+), 100 deletions(-) diff --git a/core/benchmarks/KryoBenchmark-jdk11-results.txt b/core/benchmarks/KryoBenchmark-jdk11-results.txt index 73e7f15ba22..01269b496e0 100644 --- a/core/benchmarks/KryoBenchmark-jdk11-results.txt +++ b/core/benchmarks/KryoBenchmark-jdk11-results.txt @@ -2,27 +2,27 @@ Benchmark Kryo Unsafe vs safe Serialization -OpenJDK 64-Bit Server VM 11.0.18+10 on Linux 5.15.0-1031-azure -Intel(R) Xeon(R) CPU E5-2673 v3 @ 2.40GHz +OpenJDK 64-Bit Server VM 11.0.18+10 on Linux 5.15.0-1036-azure +Intel(R) Xeon(R) Platinum 8370C CPU @ 2.80GHz Benchmark Kryo Unsafe vs safe Serialization: Best Time(ms) Avg Time(ms) Stdev(ms)Rate(M/s) Per Row(ns) Relative --- -basicTypes: Int with unsafe:true 301319 11 3.3 301.5 1.0X -basicTypes: Long with unsafe:true 337351 9 3.0 337.2 0.9X -basicTypes: Float with unsafe:true 327335 6 3.1 327.5 0.9X -basicTypes: Double with unsafe:true321336 10 3.1 321.0 0.9X -Array: Int with unsafe:true 4 5 1245.2 4.1 73.9X -Array: Long with unsafe:true 7 8 1147.6 6.8 44.5X -Array: Float with unsafe:true4 5 1250.4 4.0 75.5X -Array: Double with unsafe:true 7 8 1144.1 6.9 43.4X -Map of string->Double with unsafe:true 42 46 4 23.8 42.0 7.2X -basicTypes: Int with unsafe:false 347357 10 2.9 347.4 0.9X -basicTypes: Long with unsafe:false 378394 10 2.6 378.1 0.8X -basicTypes: Float with unsafe:false346359 9 2.9 345.6 0.9X -basicTypes: Double with unsafe:false 350372 20 2.9 350.3 0

[spark] branch master updated: [SPARK-43394][BUILD] Upgrade maven to 3.8.8

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 04ef3d5d0f2 [SPARK-43394][BUILD] Upgrade maven to 3.8.8

04ef3d5d0f2 is described below

commit 04ef3d5d0f2bfebce8dd3b48b9861a2aa5ba1c3a

Author: Cheng Pan

AuthorDate: Sun May 7 08:24:12 2023 -0500

[SPARK-43394][BUILD] Upgrade maven to 3.8.8

### What changes were proposed in this pull request?

Upgrade Maven from 3.8.7 to 3.8.8.

### Why are the changes needed?

Maven 3.8.8 is the latest patched version of 3.8.x

https://maven.apache.org/docs/3.8.8/release-notes.html

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass GA.

Closes #41073 from pan3793/SPARK-43394.

Authored-by: Cheng Pan

Signed-off-by: Sean Owen

---

dev/appveyor-install-dependencies.ps1 | 2 +-

docs/building-spark.md| 2 +-

pom.xml | 2 +-

3 files changed, 3 insertions(+), 3 deletions(-)

diff --git a/dev/appveyor-install-dependencies.ps1

b/dev/appveyor-install-dependencies.ps1

index 7f4f027c820..88090149f5c 100644

--- a/dev/appveyor-install-dependencies.ps1

+++ b/dev/appveyor-install-dependencies.ps1

@@ -81,7 +81,7 @@ if (!(Test-Path $tools)) {

# == Maven

# Push-Location $tools

#

-# $mavenVer = "3.8.7"

+# $mavenVer = "3.8.8"

# Start-FileDownload

"https://archive.apache.org/dist/maven/maven-3/$mavenVer/binaries/apache-maven-$mavenVer-bin.zip;

"maven.zip"

#

# # extract

diff --git a/docs/building-spark.md b/docs/building-spark.md

index ba8dddbf6b1..4b8e70655d5 100644

--- a/docs/building-spark.md

+++ b/docs/building-spark.md

@@ -27,7 +27,7 @@ license: |

## Apache Maven

The Maven-based build is the build of reference for Apache Spark.

-Building Spark using Maven requires Maven 3.8.7 and Java 8.

+Building Spark using Maven requires Maven 3.8.8 and Java 8/11/17.

Spark requires Scala 2.12/2.13; support for Scala 2.11 was removed in Spark

3.0.0.

### Setting up Maven's Memory Usage

diff --git a/pom.xml b/pom.xml

index c760eaf0cbb..96ee3fb5ed9 100644

--- a/pom.xml

+++ b/pom.xml

@@ -114,7 +114,7 @@

1.8

${java.version}

${java.version}

-3.8.7

+3.8.8

1.6.0

spark

2.0.7

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.2 updated: [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh

This is an automated email from the ASF dual-hosted git repository. srowen pushed a commit to branch branch-3.2 in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/branch-3.2 by this push: new 37c27451a2d [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh 37c27451a2d is described below commit 37c27451a2dbb4668c2793c1fcae4759c845d3ad Author: Cheng Pan AuthorDate: Sat May 6 09:37:44 2023 -0500 [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh ### What changes were proposed in this pull request? Add args `--no-mac-metadata --no-xattrs --no-fflags` to `tar` on macOS in `dev/make-distribution.sh` to exclude macOS-specific extended metadata. ### Why are the changes needed? The binary tarball created on macOS includes extended macOS-specific metadata and xattrs, which causes warnings when unarchiving it on Linux. Step to reproduce 1. create tarball on macOS (13.3.1) ``` ➜ apache-spark git:(master) tar --version bsdtar 3.5.3 - libarchive 3.5.3 zlib/1.2.11 liblzma/5.0.5 bz2lib/1.0.8 ``` ``` ➜ apache-spark git:(master) dev/make-distribution.sh --tgz ``` 2. unarchive the binary tarball on Linux (CentOS-7) ``` ➜ ~ tar --version tar (GNU tar) 1.26 Copyright (C) 2011 Free Software Foundation, Inc. License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>. This is free software: you are free to change and redistribute it. There is NO WARRANTY, to the extent permitted by law. Written by John Gilmore and Jay Fenlason. ``` ``` ➜ ~ tar -xzf spark-3.5.0-SNAPSHOT-bin-3.3.5.tgz tar: Ignoring unknown extended header keyword `SCHILY.fflags' tar: Ignoring unknown extended header keyword `LIBARCHIVE.xattr.com.apple.FinderInfo' ``` ### Does this PR introduce _any_ user-facing change? No, dev only. ### How was this patch tested? Create binary tarball on macOS then unarchive on Linux, warnings disappear after this change. Closes #41074 from pan3793/SPARK-43395. Authored-by: Cheng Pan Signed-off-by: Sean Owen (cherry picked from commit 2d0240df3c474902e263f67b93fb497ca13da00f) Signed-off-by: Sean Owen --- dev/make-distribution.sh | 6 +- 1 file changed, 5 insertions(+), 1 deletion(-) diff --git a/dev/make-distribution.sh b/dev/make-distribution.sh index 571059be6fd..e92f445f046 100755 --- a/dev/make-distribution.sh +++ b/dev/make-distribution.sh @@ -287,6 +287,10 @@ if [ "$MAKE_TGZ" == "true" ]; then TARDIR="$SPARK_HOME/$TARDIR_NAME" rm -rf "$TARDIR" cp -r "$DISTDIR" "$TARDIR" - tar czf "spark-$VERSION-bin-$NAME.tgz" -C "$SPARK_HOME" "$TARDIR_NAME" + TAR="tar" + if [ "$(uname -s)" = "Darwin" ]; then +TAR="tar --no-mac-metadata --no-xattrs --no-fflags" + fi + $TAR -czf "spark-$VERSION-bin-$NAME.tgz" -C "$SPARK_HOME" "$TARDIR_NAME" rm -rf "$TARDIR" fi - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.3 updated: [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh

This is an automated email from the ASF dual-hosted git repository. srowen pushed a commit to branch branch-3.3 in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/branch-3.3 by this push: new 85ff71f9459 [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh 85ff71f9459 is described below commit 85ff71f94593dd8ede9a0ea3278f5026da10c46f Author: Cheng Pan AuthorDate: Sat May 6 09:37:44 2023 -0500 [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh ### What changes were proposed in this pull request? Add args `--no-mac-metadata --no-xattrs --no-fflags` to `tar` on macOS in `dev/make-distribution.sh` to exclude macOS-specific extended metadata. ### Why are the changes needed? The binary tarball created on macOS includes extended macOS-specific metadata and xattrs, which causes warnings when unarchiving it on Linux. Step to reproduce 1. create tarball on macOS (13.3.1) ``` ➜ apache-spark git:(master) tar --version bsdtar 3.5.3 - libarchive 3.5.3 zlib/1.2.11 liblzma/5.0.5 bz2lib/1.0.8 ``` ``` ➜ apache-spark git:(master) dev/make-distribution.sh --tgz ``` 2. unarchive the binary tarball on Linux (CentOS-7) ``` ➜ ~ tar --version tar (GNU tar) 1.26 Copyright (C) 2011 Free Software Foundation, Inc. License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>. This is free software: you are free to change and redistribute it. There is NO WARRANTY, to the extent permitted by law. Written by John Gilmore and Jay Fenlason. ``` ``` ➜ ~ tar -xzf spark-3.5.0-SNAPSHOT-bin-3.3.5.tgz tar: Ignoring unknown extended header keyword `SCHILY.fflags' tar: Ignoring unknown extended header keyword `LIBARCHIVE.xattr.com.apple.FinderInfo' ``` ### Does this PR introduce _any_ user-facing change? No, dev only. ### How was this patch tested? Create binary tarball on macOS then unarchive on Linux, warnings disappear after this change. Closes #41074 from pan3793/SPARK-43395. Authored-by: Cheng Pan Signed-off-by: Sean Owen (cherry picked from commit 2d0240df3c474902e263f67b93fb497ca13da00f) Signed-off-by: Sean Owen --- dev/make-distribution.sh | 6 +- 1 file changed, 5 insertions(+), 1 deletion(-) diff --git a/dev/make-distribution.sh b/dev/make-distribution.sh index 571059be6fd..e92f445f046 100755 --- a/dev/make-distribution.sh +++ b/dev/make-distribution.sh @@ -287,6 +287,10 @@ if [ "$MAKE_TGZ" == "true" ]; then TARDIR="$SPARK_HOME/$TARDIR_NAME" rm -rf "$TARDIR" cp -r "$DISTDIR" "$TARDIR" - tar czf "spark-$VERSION-bin-$NAME.tgz" -C "$SPARK_HOME" "$TARDIR_NAME" + TAR="tar" + if [ "$(uname -s)" = "Darwin" ]; then +TAR="tar --no-mac-metadata --no-xattrs --no-fflags" + fi + $TAR -czf "spark-$VERSION-bin-$NAME.tgz" -C "$SPARK_HOME" "$TARDIR_NAME" rm -rf "$TARDIR" fi - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.4 updated: [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh

This is an automated email from the ASF dual-hosted git repository. srowen pushed a commit to branch branch-3.4 in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/branch-3.4 by this push: new cd2a6f38e0c [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh cd2a6f38e0c is described below commit cd2a6f38e0cceff68493918fe7cd6498a7f4119d Author: Cheng Pan AuthorDate: Sat May 6 09:37:44 2023 -0500 [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh ### What changes were proposed in this pull request? Add args `--no-mac-metadata --no-xattrs --no-fflags` to `tar` on macOS in `dev/make-distribution.sh` to exclude macOS-specific extended metadata. ### Why are the changes needed? The binary tarball created on macOS includes extended macOS-specific metadata and xattrs, which causes warnings when unarchiving it on Linux. Step to reproduce 1. create tarball on macOS (13.3.1) ``` ➜ apache-spark git:(master) tar --version bsdtar 3.5.3 - libarchive 3.5.3 zlib/1.2.11 liblzma/5.0.5 bz2lib/1.0.8 ``` ``` ➜ apache-spark git:(master) dev/make-distribution.sh --tgz ``` 2. unarchive the binary tarball on Linux (CentOS-7) ``` ➜ ~ tar --version tar (GNU tar) 1.26 Copyright (C) 2011 Free Software Foundation, Inc. License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>. This is free software: you are free to change and redistribute it. There is NO WARRANTY, to the extent permitted by law. Written by John Gilmore and Jay Fenlason. ``` ``` ➜ ~ tar -xzf spark-3.5.0-SNAPSHOT-bin-3.3.5.tgz tar: Ignoring unknown extended header keyword `SCHILY.fflags' tar: Ignoring unknown extended header keyword `LIBARCHIVE.xattr.com.apple.FinderInfo' ``` ### Does this PR introduce _any_ user-facing change? No, dev only. ### How was this patch tested? Create binary tarball on macOS then unarchive on Linux, warnings disappear after this change. Closes #41074 from pan3793/SPARK-43395. Authored-by: Cheng Pan Signed-off-by: Sean Owen (cherry picked from commit 2d0240df3c474902e263f67b93fb497ca13da00f) Signed-off-by: Sean Owen --- dev/make-distribution.sh | 6 +- 1 file changed, 5 insertions(+), 1 deletion(-) diff --git a/dev/make-distribution.sh b/dev/make-distribution.sh index d4c8559fd4a..948ee19fbac 100755 --- a/dev/make-distribution.sh +++ b/dev/make-distribution.sh @@ -287,6 +287,10 @@ if [ "$MAKE_TGZ" == "true" ]; then TARDIR="$SPARK_HOME/$TARDIR_NAME" rm -rf "$TARDIR" cp -r "$DISTDIR" "$TARDIR" - tar czf "spark-$VERSION-bin-$NAME.tgz" -C "$SPARK_HOME" "$TARDIR_NAME" + TAR="tar" + if [ "$(uname -s)" = "Darwin" ]; then +TAR="tar --no-mac-metadata --no-xattrs --no-fflags" + fi + $TAR -czf "spark-$VERSION-bin-$NAME.tgz" -C "$SPARK_HOME" "$TARDIR_NAME" rm -rf "$TARDIR" fi - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (566980fba1c -> 2d0240df3c4)

This is an automated email from the ASF dual-hosted git repository. srowen pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from 566980fba1c [SPARK-38462][CORE] Add error class INTERNAL_ERROR_EXECUTOR add 2d0240df3c4 [SPARK-43395][BUILD] Exclude macOS tar extended metadata in make-distribution.sh No new revisions were added by this update. Summary of changes: dev/make-distribution.sh | 6 +- 1 file changed, 5 insertions(+), 1 deletion(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.3 updated (e9aab411ca8 -> bc2c9553805)

This is an automated email from the ASF dual-hosted git repository. srowen pushed a change to branch branch-3.3 in repository https://gitbox.apache.org/repos/asf/spark.git from e9aab411ca8 [SPARK-43293][SQL] `__qualified_access_only` should be ignored in normal columns add bc2c9553805 [SPARK-43337][UI][3.3] Asc/desc arrow icons for sorting column does not get displayed in the table column No new revisions were added by this update. Summary of changes: .../org/apache/spark/ui/static/jquery.dataTables.1.10.25.min.css| 2 +- 1 file changed, 1 insertion(+), 1 deletion(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (d65a0ce996b -> 1b54b014543)

This is an automated email from the ASF dual-hosted git repository. srowen pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from d65a0ce996b [SPARK-43379][DOCS] Deprecate old Java 8 versions prior to 8u371 add 1b54b014543 [SPARK-43185][BUILD] Inline `hadoop-client` related properties in `pom.xml` No new revisions were added by this update. Summary of changes: common/network-yarn/pom.xml | 4 ++-- connector/kafka-0-10-assembly/pom.xml | 4 ++-- connector/kafka-0-10-token-provider/pom.xml | 2 +- connector/kinesis-asl-assembly/pom.xml | 4 ++-- core/pom.xml| 4 ++-- hadoop-cloud/pom.xml| 4 ++-- launcher/pom.xml| 4 ++-- pom.xml | 27 +++ resource-managers/yarn/pom.xml | 6 +++--- sql/hive/pom.xml| 2 +- 10 files changed, 20 insertions(+), 41 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-43379][DOCS] Deprecate old Java 8 versions prior to 8u371

This is an automated email from the ASF dual-hosted git repository. srowen pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/master by this push: new d65a0ce996b [SPARK-43379][DOCS] Deprecate old Java 8 versions prior to 8u371 d65a0ce996b is described below commit d65a0ce996b776392e6be8fef37ee2754ebc4dfd Author: Dongjoon Hyun AuthorDate: Fri May 5 10:37:46 2023 -0500 [SPARK-43379][DOCS] Deprecate old Java 8 versions prior to 8u371 ### What changes were proposed in this pull request? This PR aims to deprecate old Java 8 versions prior to 8u371. Specifically, it's fixed at Java SE 8u371, 11.0.19, 17.0.7, 20.0.1. ### Why are the changes needed? To avoid TLS issue - [OpenJDK: improper connection handling during TLS handshake](https://bugzilla.redhat.com/show_bug.cgi?id=2187435) - https://www.oracle.com/security-alerts/cpuapr2023.html#AppendixJAVA Release notes: - https://www.oracle.com/java/technologies/javase/8u371-relnotes.html - https://www.oracle.com/java/technologies/javase/11-0-19-relnotes.html - https://www.oracle.com/java/technologies/javase/17-0-7-relnotes.html - https://www.oracle.com/java/technologies/javase/20-0-1-relnotes.html ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Manual review. Closes #41051 from dongjoon-hyun/SPARK-43379. Authored-by: Dongjoon Hyun Signed-off-by: Sean Owen --- docs/index.md | 2 +- 1 file changed, 1 insertion(+), 1 deletion(-) diff --git a/docs/index.md b/docs/index.md index 81311b3442d..c673537d214 100644 --- a/docs/index.md +++ b/docs/index.md @@ -35,7 +35,7 @@ source, visit [Building Spark](building-spark.html). Spark runs on both Windows and UNIX-like systems (e.g. Linux, Mac OS), and it should run on any platform that runs a supported version of Java. This should include JVMs on x86_64 and ARM64. It's easy to run locally on one machine --- all you need is to have `java` installed on your system `PATH`, or the `JAVA_HOME` environment variable pointing to a Java installation. Spark runs on Java 8/11/17, Scala 2.12/2.13, Python 3.8+, and R 3.5+. -Java 8 prior to version 8u362 support is deprecated as of Spark 3.4.0. +Java 8 prior to version 8u371 support is deprecated as of Spark 3.5.0. When using the Scala API, it is necessary for applications to use the same version of Scala that Spark was compiled for. For example, when using Scala 2.13, use Spark compiled for 2.13, and compile code/applications for Scala 2.13 as well. - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (79d5d908e5d -> 44e68599228)

This is an automated email from the ASF dual-hosted git repository. srowen pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from 79d5d908e5d [SPARK-43284][SQL][FOLLOWUP] Return URI encoded path, and add a test add 44e68599228 [SPARK-43279][CORE] Cleanup unused members from `SparkHadoopUtil` No new revisions were added by this update. Summary of changes: .../org/apache/spark/deploy/SparkHadoopUtil.scala | 59 +- 1 file changed, 1 insertion(+), 58 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

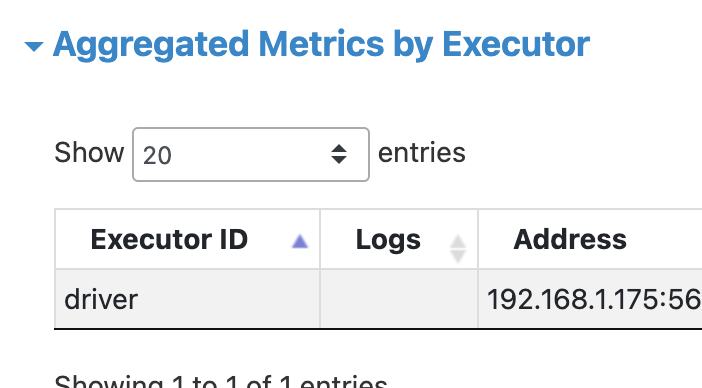

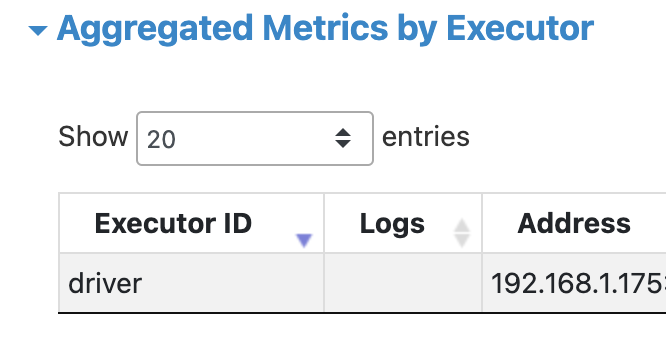

[spark] branch branch-3.4 updated: [SPARK-43337][UI][3.4] Asc/desc arrow icons for sorting column does not get displayed in the table column

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch branch-3.4

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.4 by this push:

new e04025e359f [SPARK-43337][UI][3.4] Asc/desc arrow icons for sorting

column does not get displayed in the table column

e04025e359f is described below

commit e04025e359fe3d8bbaa7b695b491319f050adeb1

Author: Maytas Monsereenusorn

AuthorDate: Fri May 5 10:25:46 2023 -0500

[SPARK-43337][UI][3.4] Asc/desc arrow icons for sorting column does not get

displayed in the table column

### What changes were proposed in this pull request? Remove css

`!important` tag for asc/desc arrow icons in jquery.dataTables.1.10.25.min.css

### Why are the changes needed?

Upgrading to DataTables 1.10.25 broke asc/desc arrow icons for sorting

column. The sorting icon is not displayed when the column is clicked to sort by

asc/desc. This is because the new DataTables 1.10.25's

jquery.dataTables.1.10.25.min.css file added `!important` rule preventing the

override set in webui-dataTables.css

### Does this PR introduce _any_ user-facing change? No.

### How was this patch tested?

Manual test.

Closes #41061 from maytasm/fix-arrow-4.

Authored-by: Maytas Monsereenusorn

Signed-off-by: Sean Owen

---

.../org/apache/spark/ui/static/jquery.dataTables.1.10.25.min.css| 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git

a/core/src/main/resources/org/apache/spark/ui/static/jquery.dataTables.1.10.25.min.css

b/core/src/main/resources/org/apache/spark/ui/static/jquery.dataTables.1.10.25.min.css

index 6e60559741c..4df81e13a75 100644

---

a/core/src/main/resources/org/apache/spark/ui/static/jquery.dataTables.1.10.25.min.css

+++

b/core/src/main/resources/org/apache/spark/ui/static/jquery.dataTables.1.10.25.min.css

@@ -1 +1 @@

-table.dataTable{width:100%;margin:0

auto;clear:both;border-collapse:separate;border-spacing:0}table.dataTable thead

th,table.dataTable tfoot th{font-weight:bold}table.dataTable thead

th,table.dataTable thead td{padding:10px 18px;border-bottom:1px solid

#111}table.dataTable thead th:active,table.dataTable thead

td:active{outline:none}table.dataTable tfoot th,table.dataTable tfoot

td{padding:10px 18px 6px 18px;border-top:1px solid #111}table.dataTable thead

.sorting,table.dataTable thead . [...]

\ No newline at end of file

+table.dataTable{width:100%;margin:0

auto;clear:both;border-collapse:separate;border-spacing:0}table.dataTable thead

th,table.dataTable tfoot th{font-weight:bold}table.dataTable thead

th,table.dataTable thead td{padding:10px 18px;border-bottom:1px solid

#111}table.dataTable thead th:active,table.dataTable thead

td:active{outline:none}table.dataTable tfoot th,table.dataTable tfoot

td{padding:10px 18px 6px 18px;border-top:1px solid #111}table.dataTable thead

.sorting,table.dataTable thead . [...]

\ No newline at end of file

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.4 updated: [SPARK-43378][CORE] Properly close stream objects in deserializeFromChunkedBuffer

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch branch-3.4

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.4 by this push:

new b51e860cbfd [SPARK-43378][CORE] Properly close stream objects in

deserializeFromChunkedBuffer

b51e860cbfd is described below

commit b51e860cbfdc03c0b085dc6e7dcb11fd1579113b

Author: Emil Ejbyfeldt

AuthorDate: Thu May 4 19:34:14 2023 -0500

[SPARK-43378][CORE] Properly close stream objects in

deserializeFromChunkedBuffer

### What changes were proposed in this pull request?

Fixes a that SerializerHelper.deserializeFromChunkedBuffer does not call

close on the deserialization stream. For some serializers like Kryo this

creates a performance regressions as the kryo instances are not returned to the

pool.

### Why are the changes needed?

This causes a performance regression in Spark 3.4.0 for some workloads.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests.

Closes #41049 from eejbyfeldt/SPARK-43378.

Authored-by: Emil Ejbyfeldt

Signed-off-by: Sean Owen

(cherry picked from commit cb26ad88c522070c66e979ab1ab0f040cd1bdbe7)

Signed-off-by: Sean Owen

---

.../src/main/scala/org/apache/spark/serializer/SerializerHelper.scala | 4 +++-

1 file changed, 3 insertions(+), 1 deletion(-)

diff --git

a/core/src/main/scala/org/apache/spark/serializer/SerializerHelper.scala

b/core/src/main/scala/org/apache/spark/serializer/SerializerHelper.scala

index 2cff87990a4..54a0b2e339e 100644

--- a/core/src/main/scala/org/apache/spark/serializer/SerializerHelper.scala

+++ b/core/src/main/scala/org/apache/spark/serializer/SerializerHelper.scala

@@ -49,6 +49,8 @@ private[spark] object SerializerHelper extends Logging {

serializerInstance: SerializerInstance,

bytes: ChunkedByteBuffer): T = {

val in = serializerInstance.deserializeStream(bytes.toInputStream())

-in.readObject()

+val res = in.readObject()

+in.close()

+res

}

}

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (05df8c472b4 -> cb26ad88c52)

This is an automated email from the ASF dual-hosted git repository. srowen pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from 05df8c472b4 [SPARK-43312][PROTOBUF] Option to convert Any fields into JSON add cb26ad88c52 [SPARK-43378][CORE] Properly close stream objects in deserializeFromChunkedBuffer No new revisions were added by this update. Summary of changes: .../src/main/scala/org/apache/spark/serializer/SerializerHelper.scala | 4 +++- 1 file changed, 3 insertions(+), 1 deletion(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark-website] branch asf-site updated: Update for CVE-2023-32007

This is an automated email from the ASF dual-hosted git repository. srowen pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/spark-website.git The following commit(s) were added to refs/heads/asf-site by this push: new 54ff7efc3b Update for CVE-2023-32007 54ff7efc3b is described below commit 54ff7efc3bc512a57abc99325896bcaeb674d9b4 Author: Sean Owen AuthorDate: Tue May 2 08:57:30 2023 -0500 Update for CVE-2023-32007 --- security.md| 7 ++- site/security.html | 7 ++- 2 files changed, 12 insertions(+), 2 deletions(-) diff --git a/security.md b/security.md index 805e400fa4..182b1e8ef7 100644 --- a/security.md +++ b/security.md @@ -18,6 +18,11 @@ non-public list that will reach the Apache Security team, as well as the Spark P Known security issues +CVE-2023-32007: Apache Spark shell command injection vulnerability via Spark UI + +This CVE is only an update to [CVE-2022-33891](#CVE-2022-33891) to clarify that version 3.1.3 is also +affected. It is otherwise not a new vulnerability. Note that Apache Spark 3.1.x is EOL now. + CVE-2023-22946: Apache Spark proxy-user privilege escalation from malicious configuration class Severity: Medium @@ -81,7 +86,7 @@ Vendor: The Apache Software Foundation Versions Affected: -- 3.1.3 and earlier +- 3.1.3 and earlier (previously, this was marked as fixed in 3.1.3; this change is tracked as [CVE-2023-32007](#CVE-2023-32007)) - 3.2.0 to 3.2.1 Description: diff --git a/site/security.html b/site/security.html index 57b3def5b5..959e474d80 100644 --- a/site/security.html +++ b/site/security.html @@ -133,6 +133,11 @@ non-public list that will reach the Apache Security team, as well as the Spark P Known security issues +CVE-2023-32007: Apache Spark shell command injection vulnerability via Spark UI + +This CVE is only an update to CVE-2022-33891 to clarify that version 3.1.3 is also +affected. It is otherwise not a new vulnerability. Note that Apache Spark 3.1.x is EOL now. + CVE-2023-22946: Apache Spark proxy-user privilege escalation from malicious configuration class Severity: Medium @@ -207,7 +212,7 @@ the logs which would be returned in logs rendered in the UI. Versions Affected: - 3.1.3 and earlier + 3.1.3 and earlier (previously, this was marked as fixed in 3.1.3; this change is tracked as CVE-2023-32007) 3.2.0 to 3.2.1 - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-43320][SQL][HIVE] Directly call Hive 2.3.9 API

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new aed6a47580e [SPARK-43320][SQL][HIVE] Directly call Hive 2.3.9 API

aed6a47580e is described below

commit aed6a47580e66f92b0641d5bc08ad833be4724f4

Author: Cheng Pan

AuthorDate: Sat Apr 29 09:38:14 2023 -0500

[SPARK-43320][SQL][HIVE] Directly call Hive 2.3.9 API

### What changes were proposed in this pull request?

Call Hive 2.3.9 API directly instead of reflection, basically reverts

SPARK-37446.

### Why are the changes needed?

Switch to direct calling to achieve compile time check.

Spark does not officially support building against Hive other than 2.3.9,

for cases listed in SPARK-37446, it's the vendor's responsibility to port

HIVE-21563 into their maintained Hive 2.3.8-[vender-custom-version].

See full discussion in https://github.com/apache/spark/pull/40893.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass GA.

Closes #40995 from pan3793/SPARK-43320.

Authored-by: Cheng Pan

Signed-off-by: Sean Owen

---

.../main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala | 5 ++---

1 file changed, 2 insertions(+), 3 deletions(-)

diff --git

a/sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala

b/sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala

index becca8eae5e..5b0309813fc 100644

---

a/sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala

+++

b/sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala

@@ -1347,12 +1347,11 @@ private[hive] object HiveClientImpl extends Logging {

new HiveConf(conf, classOf[HiveConf])

}

try {

- classOf[Hive].getMethod("getWithoutRegisterFns", classOf[HiveConf])

-.invoke(null, hiveConf).asInstanceOf[Hive]

+ Hive.getWithoutRegisterFns(hiveConf)

} catch {

// SPARK-37069: not all Hive versions have the above method (e.g., Hive

2.3.9 has it but

// 2.3.8 don't), therefore here we fallback when encountering the

exception.

- case _: NoSuchMethodException =>

+ case _: NoSuchMethodError =>

Hive.get(hiveConf)

}

}

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-43263][BUILD] Upgrade `FasterXML jackson` to 2.15.0

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new a4a274c4e4f [SPARK-43263][BUILD] Upgrade `FasterXML jackson` to 2.15.0

a4a274c4e4f is described below

commit a4a274c4e4f709765e7a8c687347816d8951a681

Author: bjornjorgensen

AuthorDate: Fri Apr 28 08:29:59 2023 -0500

[SPARK-43263][BUILD] Upgrade `FasterXML jackson` to 2.15.0

### What changes were proposed in this pull request?

Upgrade FasterXML jackson from 2.14.2 to 2.15.0

### Why are the changes needed?

Upgrade Snakeyaml to 2.0 (resolves CVE-2022-1471 [CVE-2022-1471 at

nist](https://nvd.nist.gov/vuln/detail/CVE-2022-1471)

### Does this PR introduce _any_ user-facing change?

This PR introduces user-facing changes by implementing streaming read

constraints in the JSONOptions class. The constraints limit the size of input

constructs, improving security and efficiency when processing input data.

Users working with JSON data larger than the following default settings may

need to adjust the constraints accordingly:

Maximum Number value length: 1000 characters (`DEFAULT_MAX_NUM_LEN`)

Maximum String value length: 5,000,000 characters (`DEFAULT_MAX_STRING_LEN`)

Maximum Nesting depth: 1000 levels (`DEFAULT_MAX_DEPTH`)

Additionally, the maximum magnitude of scale for BigDecimal to BigInteger

conversion is set to 100,000 digits (`MAX_BIGINT_SCALE_MAGNITUDE`) and cannot

be changed.

Users can customize the constraints as needed by providing the

corresponding options in the parameters object. If not explicitly specified,

default settings will be applied.

### How was this patch tested?

Pass GA

Closes #40933 from bjornjorgensen/test_jacon.

Authored-by: bjornjorgensen

Signed-off-by: Sean Owen

---

dev/deps/spark-deps-hadoop-3-hive-2.3 | 16 +++---

pom.xml| 4 ++--

.../spark/sql/catalyst/json/JSONOptions.scala | 25 +-

3 files changed, 34 insertions(+), 11 deletions(-)

diff --git a/dev/deps/spark-deps-hadoop-3-hive-2.3

b/dev/deps/spark-deps-hadoop-3-hive-2.3

index a6c41cdd726..bd689f9e913 100644

--- a/dev/deps/spark-deps-hadoop-3-hive-2.3

+++ b/dev/deps/spark-deps-hadoop-3-hive-2.3

@@ -97,13 +97,13 @@ httpcore/4.4.16//httpcore-4.4.16.jar

ini4j/0.5.4//ini4j-0.5.4.jar

istack-commons-runtime/3.0.8//istack-commons-runtime-3.0.8.jar

ivy/2.5.1//ivy-2.5.1.jar

-jackson-annotations/2.14.2//jackson-annotations-2.14.2.jar

-jackson-core/2.14.2//jackson-core-2.14.2.jar

-jackson-databind/2.14.2//jackson-databind-2.14.2.jar

-jackson-dataformat-cbor/2.14.2//jackson-dataformat-cbor-2.14.2.jar

-jackson-dataformat-yaml/2.14.2//jackson-dataformat-yaml-2.14.2.jar

-jackson-datatype-jsr310/2.14.2//jackson-datatype-jsr310-2.14.2.jar

-jackson-module-scala_2.12/2.14.2//jackson-module-scala_2.12-2.14.2.jar

+jackson-annotations/2.15.0//jackson-annotations-2.15.0.jar

+jackson-core/2.15.0//jackson-core-2.15.0.jar

+jackson-databind/2.15.0//jackson-databind-2.15.0.jar

+jackson-dataformat-cbor/2.15.0//jackson-dataformat-cbor-2.15.0.jar

+jackson-dataformat-yaml/2.15.0//jackson-dataformat-yaml-2.15.0.jar

+jackson-datatype-jsr310/2.15.0//jackson-datatype-jsr310-2.15.0.jar

+jackson-module-scala_2.12/2.15.0//jackson-module-scala_2.12-2.15.0.jar

jakarta.annotation-api/1.3.5//jakarta.annotation-api-1.3.5.jar

jakarta.inject/2.6.1//jakarta.inject-2.6.1.jar

jakarta.servlet-api/4.0.3//jakarta.servlet-api-4.0.3.jar

@@ -233,7 +233,7 @@ scala-xml_2.12/2.1.0//scala-xml_2.12-2.1.0.jar

shims/0.9.39//shims-0.9.39.jar

slf4j-api/2.0.7//slf4j-api-2.0.7.jar

snakeyaml-engine/2.6//snakeyaml-engine-2.6.jar

-snakeyaml/1.33//snakeyaml-1.33.jar

+snakeyaml/2.0//snakeyaml-2.0.jar

snappy-java/1.1.9.1//snappy-java-1.1.9.1.jar

spire-macros_2.12/0.17.0//spire-macros_2.12-0.17.0.jar

spire-platform_2.12/0.17.0//spire-platform_2.12-0.17.0.jar

diff --git a/pom.xml b/pom.xml

index c74da8e3ace..df7fef1cf79 100644

--- a/pom.xml

+++ b/pom.xml

@@ -184,8 +184,8 @@

true

true

1.9.13

-2.14.2

-

2.14.2

+2.15.0

+

2.15.0

1.1.9.1

3.0.3

1.15

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/json/JSONOptions.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/json/JSONOptions.scala

index bf5b83e9df0..c06f411c505 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/json/JSONOptions.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/json/JSONOptions.scala

@@ -21,7 +21,7 @@ import java.nio.charset.{Charset, StandardCharsets}

import java.time.ZoneId

import java.util.Locale

-import com.fasterxml.jackson.core.{JsonFactory, JsonFactoryBuilder}

+import com.fasterxml.jackson.core.{JsonFactory, JsonFactoryBuilder

[spark] branch master updated: [SPARK-43277][YARN] Clean up deprecation hadoop api usage in `yarn` module

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 014685c41e4 [SPARK-43277][YARN] Clean up deprecation hadoop api usage

in `yarn` module

014685c41e4 is described below

commit 014685c41e4741f83570d8a2a6a253e48967919a

Author: yangjie01

AuthorDate: Tue Apr 25 22:12:35 2023 -0500

[SPARK-43277][YARN] Clean up deprecation hadoop api usage in `yarn` module

### What changes were proposed in this pull request?

`yarn` module has the following compilation warnings related to the Hadoop

API:

```

[WARNING] [Warn]

/${spark-source-dir}/resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/ApplicationMaster.scala:157:

[deprecation

org.apache.spark.deploy.yarn.ApplicationMaster.prepareLocalResources.setupDistributedCache

| origin=org.apache.hadoop.yarn.util.ConverterUtils.getYarnUrlFromURI |

version=] method getYarnUrlFromURI in class ConverterUtils is deprecated

[WARNING] [Warn]

/${spark-source-dir}/resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/Client.scala:292:

[deprecation

org.apache.spark.deploy.yarn.Client.createApplicationSubmissionContext |

origin=org.apache.hadoop.yarn.api.records.Resource.setMemory | version=] method

setMemory in class Resource is deprecated

[WARNING] [Warn]

/${spark-source-dir}/resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/Client.scala:307:

[deprecation

org.apache.spark.deploy.yarn.Client.createApplicationSubmissionContext |

origin=org.apache.hadoop.yarn.api.records.ApplicationSubmissionContext.setAMContainerResourceRequest

| version=] method setAMContainerResourceRequest in class

ApplicationSubmissionContext is deprecated

[WARNING] [Warn]

/${spark-source-dir}/resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/Client.scala:392:

[deprecation

org.apache.spark.deploy.yarn.Client.verifyClusterResources.maxMem |

origin=org.apache.hadoop.yarn.api.records.Resource.getMemory | version=] method

getMemory in class Resource is deprecated

[WARNING] [Warn]

/${spark-source-dir}/resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/ClientDistributedCacheManager.scala:76:

[deprecation

org.apache.spark.deploy.yarn.ClientDistributedCacheManager.addResource |

origin=org.apache.hadoop.yarn.util.ConverterUtils.getYarnUrlFromPath |

version=] method getYarnUrlFromPath in class ConverterUtils is deprecated

[WARNING] [Warn]

/${spark-source-dir}/resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnAllocator.scala:510:

[deprecation

org.apache.spark.deploy.yarn.YarnAllocator.updateResourceRequests.$anonfun.requestContainerMessage

| origin=org.apache.hadoop.yarn.api.records.Resource.getMemory | version=]

method getMemory in class Resource is deprecated

[WARNING] [Warn]

/${spark-source-dir}/resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnAllocator.scala:737:

[deprecation

org.apache.spark.deploy.yarn.YarnAllocator.runAllocatedContainers.$anonfun |

origin=org.apache.hadoop.yarn.api.records.Resource.getMemory | version=] method

getMemory in class Resource is deprecated

[WARNING] [Warn]

/${spark-source-dir}/resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnAllocator.scala:737:

[deprecation

org.apache.spark.deploy.yarn.YarnAllocator.runAllocatedContainers.$anonfun |

origin=org.apache.hadoop.yarn.api.records.Resource.getMemory | version=] method

getMemory in class Resource is deprecated

[WARNING] [Warn]

/${spark-source-dir}/resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/YarnSparkHadoopUtil.scala:202: