soumilshah1995 opened a new issue, #8031:

URL: https://github.com/apache/hudi/issues/8031

Hello Good Evening

i am trying to experiment with Timestamp based key generator following docx

on hudi websites

### Code

```

try:

import os

import sys

import uuid

import pyspark

from pyspark.sql import SparkSession

from pyspark import SparkConf, SparkContext

from pyspark.sql.functions import col, asc, desc

from pyspark.sql.functions import col, to_timestamp,

monotonically_increasing_id, to_date, when

from pyspark.sql.functions import *

from pyspark.sql.types import *

from datetime import datetime

from functools import reduce

from faker import Faker

except Exception as e:

pass

SUBMIT_ARGS = "--packages org.apache.hudi:hudi-spark3.3-bundle_2.12:0.12.1

pyspark-shell"

os.environ["PYSPARK_SUBMIT_ARGS"] = SUBMIT_ARGS

os.environ['PYSPARK_PYTHON'] = sys.executable

os.environ['PYSPARK_DRIVER_PYTHON'] = sys.executable

spark = SparkSession.builder \

.config('spark.serializer',

'org.apache.spark.serializer.KryoSerializer') \

.config('className', 'org.apache.hudi') \

.config('spark.sql.hive.convertMetastoreParquet', 'false') \

.getOrCreate()

db_name = "hudidb"

table_name = "hudi_table"

recordkey = 'uuid'

precombine = 'date'

path = f"file:///C:/tmp/{db_name}/{table_name}"

method = 'upsert'

table_type = "COPY_ON_WRITE" # COPY_ON_WRITE | MERGE_ON_READ

hudi_options = {

'hoodie.table.name': table_name,

'hoodie.datasource.write.recordkey.field': recordkey,

'hoodie.datasource.write.table.name': table_name,

'hoodie.datasource.write.operation': method,

'hoodie.datasource.write.precombine.field': precombine,

'hoodie.datasource.write.partitionpath.field':

'year:SIMPLE,month:SIMPLE,day:SIMPLE',

"hoodie-conf hoodie.datasource.write.partitionpath.field":"date",

'hoodie.datasource.write.keygenerator.class':

'org.apache.hudi.keygen.TimestampBasedKeyGenerator',

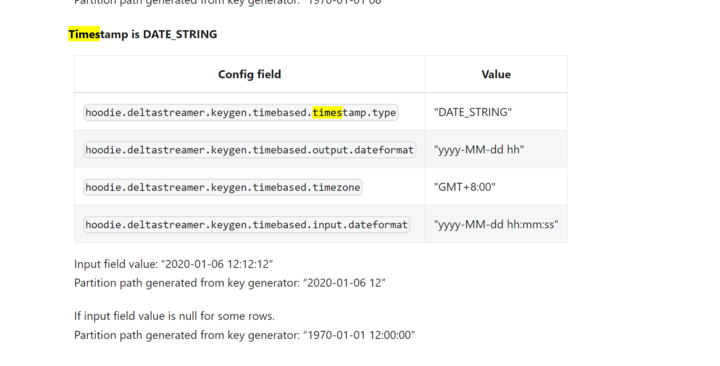

'hoodie.deltastreamer.keygen.timebased.timestamp.type': 'DATE_STRING',

'hoodie.deltastreamer.keygen.timebased.timezone':"GMT+8:00",

'hoodie.deltastreamer.keygen.timebased.input.dateformat': 'yyyy-MM-dd

hh:mm:ss',

'hoodie.deltastreamer.keygen.timebased.output.dateformat': 'yyyy/MM/dd'

}

#Input field value: “2020-01-06 12:12:12”

# Partition path generated from key generator: “2020-01-06 12”

data_items = [

(1, "mess 1", 111, "2020-01-06 12:12:12"),

(2, "mes 2", 22, "2020-01-06 12:12:12"),

]

columns = ["uuid", "message", "precomb", "date"]

spark_df = spark.createDataFrame(data=data_items, schema=columns)

spark_df.show()

spark_df.printSchema()

spark_df.write.format("hudi"). \

options(**hudi_options). \

mode("append"). \

save(path)

```

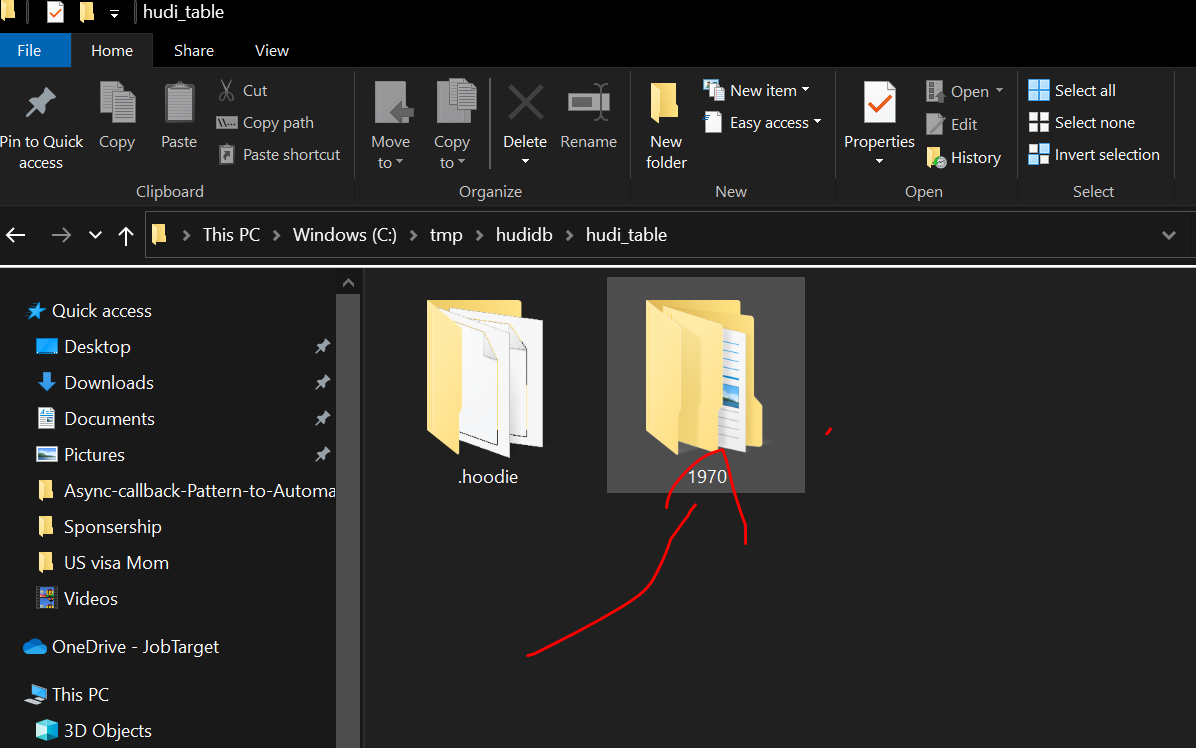

## Expectation was to see partition 2020/01/06/ hudi files inside that

* Maybe i am missing something help from community to point out missing conf

would be great :D

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org