easonwood opened a new issue, #8540:

URL: https://github.com/apache/hudi/issues/8540

**Describe the problem you faced**

We have a hudi table created in 20221130, and we did column change by adding

some columns.

Now when we do DELETE on whole partitions, it will get error

**To Reproduce**

Steps to reproduce the behavior:

1. We have old data ::

>>> old_df =

spark.read.parquet("s3://our-bucket/data-lake-hudi/ods_meeting_mmr_log/ods_mmr_conf_join/cluster=aw1/year=2022/month=11/day=30")

>>> old_df.printSchema()

root

|-- _hoodie_commit_time: string (nullable = true)

|-- _hoodie_commit_seqno: string (nullable = true)

|-- _hoodie_record_key: string (nullable = true)

|-- _hoodie_partition_path: string (nullable = true)

|-- _hoodie_file_name: string (nullable = true)

|-- _hoodie_is_deleted: boolean (nullable = true)

|-- account_id: string (nullable = true)

|-- cluster: string (nullable = true)

|-- day: string (nullable = true)

|-- error_fields: string (nullable = true)

|-- host_name: string (nullable = true)

|-- instance_id: string (nullable = true)

|-- log_time: string (nullable = true)

|-- meeting_uuid: string (nullable = true)

|-- month: string (nullable = true)

|-- region: string (nullable = true)

|-- rowkey: string (nullable = true)

|-- year: string (nullable = true)

|-- mmr_addr: string (nullable = true)

|-- top_mmr_addr: string (nullable = true)

|-- zone_name: string (nullable = true)

|-- client_addr: string (nullable = true)

|-- user_id: long (nullable = true)

|-- user_type: long (nullable = true)

|-- result: long (nullable = true)

|-- failover: long (nullable = true)

|-- name: string (nullable = true)

|-- viewonly_user: long (nullable = true)

|-- db_user_id: string (nullable = true)

|-- host_account: string (nullable = true)

|-- time_cost: long (nullable = true)

|-- device_id: string (nullable = true)

|-- index: long (nullable = true)

|-- persist_index: long (nullable = true)

|-- email: string (nullable = true)

|-- cluster_info: string (nullable = true)

|-- kms_enable: long (nullable = true)

|-- need_holding: long (nullable = true)

|-- not_guest: long (nullable = true)

|-- need_hide_pii: long (nullable = true)

|-- acct_id: string (nullable = true)

**|-- rest_fields: string (nullable = true)**

2. >>> new_df =

spark.read.parquet("s3://our-bucket/data-lake-hudi/ods_meeting_mmr_log/ods_mmr_conf_join/cluster=aw1/year=2023/month=04/day=16")

>>> new_df.printSchema()

root

|-- _hoodie_commit_time: string (nullable = true)

|-- _hoodie_commit_seqno: string (nullable = true)

|-- _hoodie_record_key: string (nullable = true)

|-- _hoodie_partition_path: string (nullable = true)

|-- _hoodie_file_name: string (nullable = true)

|-- _hoodie_is_deleted: boolean (nullable = true)

|-- account_id: string (nullable = true)

|-- cluster: string (nullable = true)

|-- day: string (nullable = true)

|-- error_fields: string (nullable = true)

|-- host_name: string (nullable = true)

|-- instance_id: string (nullable = true)

|-- log_time: string (nullable = true)

|-- meeting_uuid: string (nullable = true)

|-- month: string (nullable = true)

|-- region: string (nullable = true)

|-- rowkey: string (nullable = true)

|-- year: string (nullable = true)

|-- mmr_addr: string (nullable = true)

|-- top_mmr_addr: string (nullable = true)

|-- zone_name: string (nullable = true)

|-- client_addr: string (nullable = true)

|-- user_id: long (nullable = true)

|-- user_type: long (nullable = true)

|-- result: long (nullable = true)

|-- failover: long (nullable = true)

|-- name: string (nullable = true)

|-- viewonly_user: long (nullable = true)

|-- db_user_id: string (nullable = true)

|-- host_account: string (nullable = true)

|-- time_cost: long (nullable = true)

|-- device_id: string (nullable = true)

|-- index: long (nullable = true)

|-- persist_index: long (nullable = true)

|-- email: string (nullable = true)

|-- cluster_info: string (nullable = true)

|-- kms_enable: long (nullable = true)

|-- need_holding: long (nullable = true)

|-- not_guest: long (nullable = true)

|-- need_hide_pii: long (nullable = true)

|-- acct_id: string (nullable = true)

**|-- customer_key: string (nullable = true)

|-- phone_id: long (nullable = true)

|-- bind_number: long (nullable = true)

|-- tracking_id: string (nullable = true)

|-- reg_user_id: string (nullable = true)

|-- user_sn: string (nullable = true)

|-- gateways: string (nullable = true)

|-- jid: string (nullable = true)

|-- options2: string (nullable = true)

|-- attendee_option: long (nullable = true)

|-- is_user_failover: long (nullable = true)

|-- attendee_account_id: string (nullable = true)

|-- token_uuid: string (nullable = true)

|-- restrict_join_status_sharing: long (nullable = true)

|-- rest_fields: string (nullable = true)**

3.

when we do DELETE by these config:

"hoodie.fail.on.timeline.archiving": "false",

"hoodie.datasource.write.table.type": "COPY_ON_WRITE",

"hoodie.datasource.write.hive_style_partitioning": "true",

"hoodie.datasource.write.keygenerator.class":

"org.apache.hudi.keygen.CustomKeyGenerator",

"hoodie.datasource.hive_sync.enable": "false",

"hoodie.archive.automatic": "false",

"hoodie.metadata.enable": "false",

"hoodie.metadata.enable.full.scan.log.files": "false",

"hoodie.index.type": "SIMPLE",

"hoodie.embed.timeline.server": "false",

"hoodie.avro.schema.external.transformation": "true",

"hoodie.schema.on.read.enable": "true",

"hoodie.datasource.write.reconcile.schema": "true"

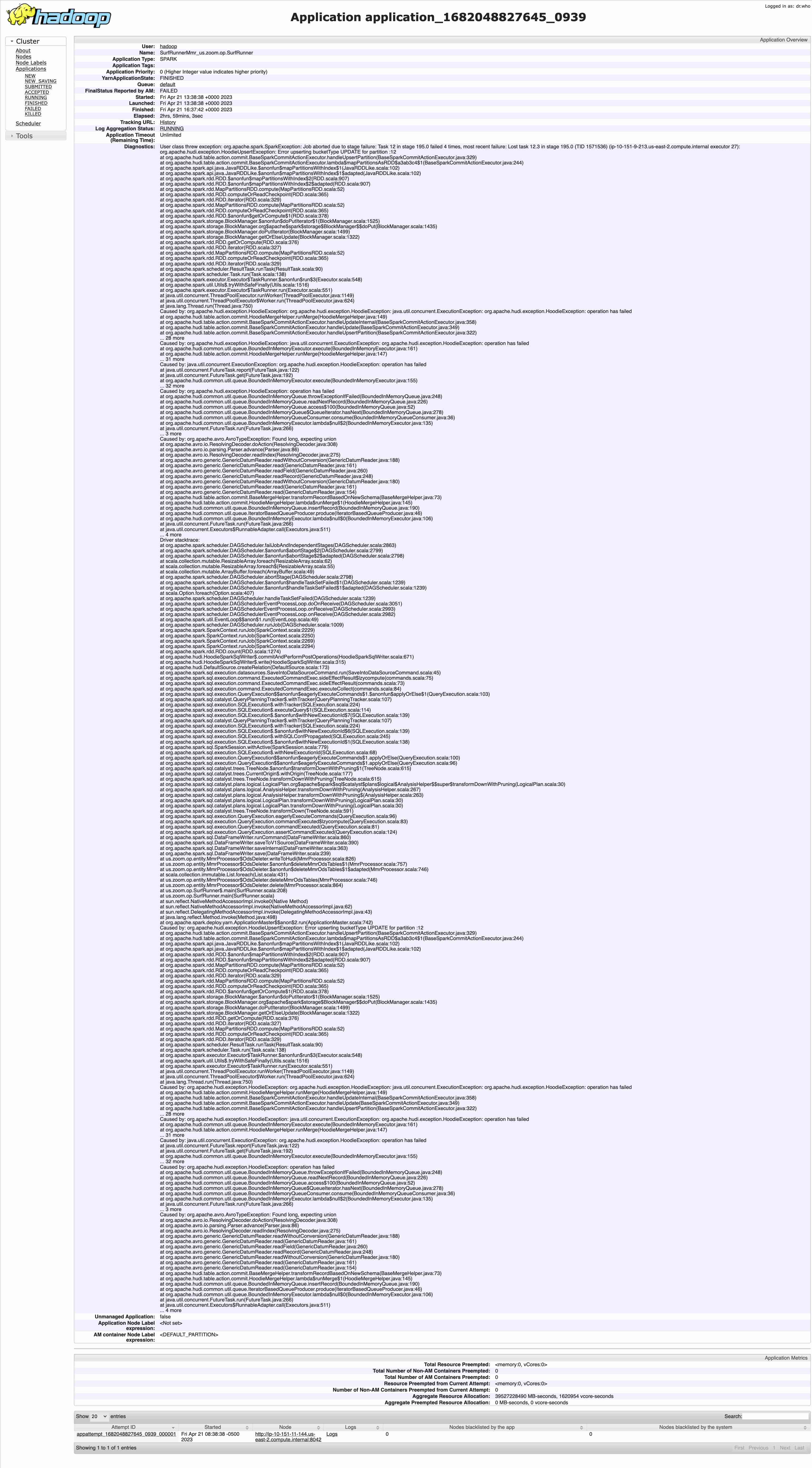

4. we got the error :

AvroTypeException: Found long, expecting union

**Environment Description**

* Hudi version :

* Spark version :

* Storage (HDFS/S3/GCS..) : S3

* Running on Docker? (yes/no) : no

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org