zuyanton opened a new issue #1847:

URL: https://github.com/apache/hudi/issues/1847

We are noticing that a Hudi MoR table on S3 starts to perform slowly as the
number of files grows. Although it may sound similar to #1829, it's a different
issue: this time we tested on a table with relatively few partitions (100), and
the logs indicate a different bottleneck. We can keep write and read times
within acceptable limits if we keep the number of files small (compacting
every 10 delta commits, setting the cleaner to keep only one commit). However,
if we increase the number of historical commits to 30-40, that's when we start
noticing an increase in upsert and read time. Specifically, for reading the
table:

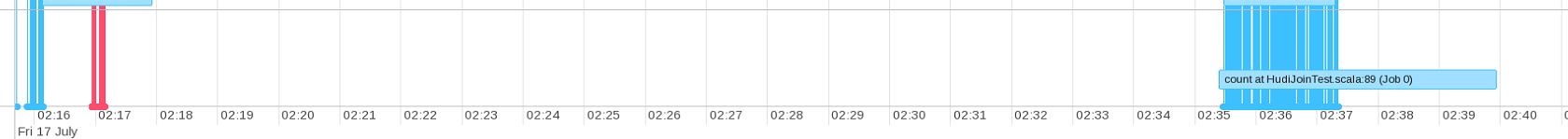

We run a simple count query. In the Spark UI we can see that the cluster is
idle for the first 20 minutes and only the master node does any work; after
that 20-minute pause, Spark starts running the count job.

When checking the logs, we observe that the first 20 minutes are spent by the
master node loading all the necessary metadata from S3. More specifically, we
see a lot of lines like the following:

```
20/07/17 02:16:45 INFO HoodieTableFileSystemView: Adding file-groups for partition :11, #FileGroups=17
20/07/17 02:16:45 INFO AbstractTableFileSystemView: addFilesToView: NumFiles=120, NumFileGroups=17, FileGroupsCreationTime=13226, StoreTimeTaken=0
20/07/17 02:16:45 INFO AbstractTableFileSystemView: Time to load partition (11) =13285
```

We observe that the FileGroupsCreationTime value is all over the place, from
less than a second for small partitions to 4 minutes for a partition
containing 1000+ files. I placed a bunch of timer log lines in the Hudi code to
narrow down the bottleneck, and my findings are as follows. The most
time-consuming lines are this one
https://github.com/apache/hudi/blob/bf1d36fa639cae558aa388d8d547e58ad2e67aba/hudi-common/src/main/java/org/apache/hudi/common/table/view/AbstractTableFileSystemView.java#L254
and this one
https://github.com/apache/hudi/blob/master/hudi-common/src/main/java/org/apache/hudi/common/table/view/AbstractTableFileSystemView.java#L265
More specifically, the time goes into the instantiation of HoodieLogFile and
HoodieBaseFile, and within that, into grabbing the file length value from
FileStatus here
https://github.com/apache/hudi/blob/bf1d36fa639cae558aa388d8d547e58ad2e67aba/hudi-common/src/main/java/org/apache/hudi/common/model/HoodieBaseFile.java#L42
and here
https://github.com/apache/hudi/blob/bf1d36fa639cae558aa388d8d547e58ad2e67aba/hudi-common/src/main/java/org/apache/hudi/common/model/HoodieLogFile.java#L51

So Hudi pretty much iterates over all partitions and, within each partition,
sequentially traverses all files and grabs their length. The mentioned lines
of code in the HoodieLogFile and HoodieBaseFile constructors take on average
100 milliseconds, so every time Hudi processes a partition with 600+ files, it
takes 600 * 100 milliseconds = 1 minute+ per partition.
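As a quick sanity check on that arithmetic, the sketch below recomputes the per-partition estimate and derives the per-file cost implied by the log excerpt above (NumFiles=120, FileGroupsCreationTime=13226). It assumes FileGroupsCreationTime is reported in milliseconds and that the cost scales linearly with file count; the function names are made up for illustration.

```python
def estimated_partition_load_ms(num_files: int, per_file_ms: float = 100.0) -> float:
    """Sequential estimate: every file pays the same fixed lookup cost."""
    return num_files * per_file_ms


def implied_per_file_ms(creation_time_ms: float, num_files: int) -> float:
    """Average per-file cost implied by one addFilesToView log line."""
    return creation_time_ms / num_files


if __name__ == "__main__":
    # A 600-file partition at ~100 ms per file, expressed in seconds.
    print(estimated_partition_load_ms(600) / 1000.0)
    # Per-file cost implied by NumFiles=120, FileGroupsCreationTime=13226.
    print(round(implied_per_file_ms(13226, 120), 1))
```

Under these assumptions a 600-file partition comes out at 60 seconds, and the log line implies roughly 110 ms per file, which is in line with the ~100 ms measured with the timers.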

**Possible ways this process may be sped up**

These are based on my not-very-deep understanding of Hudi functionality; I
could be grossly wrong about them.

From my understanding, `HoodieParquetInputFormat.listStatus`, which gets
executed for each partition and eventually triggers file group creation, is
run by multiple threads. So Hudi does not process one partition at a time;
there is some multithreading going on. However, from my observations this
parallelism is pretty small, maybe just a handful of threads at a time, and I
don't know what parameter controls it. Theoretically, increasing this number
may improve performance.
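To illustrate why more threads should help when each file costs a fixed round trip, here is a minimal sketch; it is not Hudi code. `fetch_length` is a hypothetical stand-in that sleeps to simulate a latency-bound lookup, and `max_workers` is an illustrative knob, not an actual Hudi or Hadoop parameter.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def modeled_wall_time_ms(num_files: int, per_file_ms: int, threads: int) -> int:
    """Idealized model: files are handled in waves of `threads` concurrent calls."""
    return math.ceil(num_files / threads) * per_file_ms


def fetch_length(path: str, latency_s: float = 0.005) -> int:
    """Hypothetical stand-in for a latency-bound file-length lookup."""
    time.sleep(latency_s)
    return len(path)  # dummy value; a real lookup would return the file size


def fetch_all_lengths(paths, max_workers: int = 8):
    """Run the lookups on a thread pool and map each path to its result."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(zip(paths, pool.map(fetch_length, paths)))


if __name__ == "__main__":
    paths = [f"partition=11/file-{i}.parquet" for i in range(40)]
    start = time.perf_counter()
    lengths = fetch_all_lengths(paths, max_workers=8)
    print(len(lengths), f"{time.perf_counter() - start:.3f}s")
    # 600 files at 100 ms each: one thread versus eight threads.
    print(modeled_wall_time_ms(600, 100, 1), modeled_wall_time_ms(600, 100, 8))
```

Under this idealized model, 600 files at 100 ms each drop from 60 s on one thread to 7.5 s on eight. Real gains would depend on S3 request throttling and on where the thread pool is actually configured.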

It looks like Hudi grabs file sizes for all files in the partition folder,
which may be unnecessary: if, let's say, we configured the cleaner to keep the
last N commits, then only 1/N-th of the files in the folder are parquet files
that need to be queried (plus log files, of course); the rest are just
historical commits. Grabbing the file length for only 1/N-th of the total
files should make the file group creation process faster.
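The idea of skipping older file versions can be sketched as follows. This is only an illustration under assumptions: it treats each base file as a hypothetical (file group id, commit time, path) record that is already known from the directory listing, and picks the newest base file per file group so that only those would need a length lookup. Whether incremental or point-in-time queries still need the older versions is not addressed here.

```python
from typing import Dict, Iterable, NamedTuple


class BaseFile(NamedTuple):
    """Hypothetical record for one base file version (not a Hudi class)."""
    file_group_id: str
    commit_time: str  # assumed to sort lexicographically, e.g. "20200717021645"
    path: str


def latest_base_files(files: Iterable[BaseFile]) -> Dict[str, BaseFile]:
    """Keep only the newest base file of each file group."""
    latest: Dict[str, BaseFile] = {}
    for f in files:
        current = latest.get(f.file_group_id)
        if current is None or f.commit_time > current.commit_time:
            latest[f.file_group_id] = f
    return latest


if __name__ == "__main__":
    listing = [
        BaseFile("fg-1", "20200717010000", "p/fg-1_c1.parquet"),
        BaseFile("fg-1", "20200717020000", "p/fg-1_c2.parquet"),
        BaseFile("fg-2", "20200717010000", "p/fg-2_c1.parquet"),
    ]
    needed = latest_base_files(listing)
    # Only these paths would need a file-length lookup for a snapshot read.
    print(sorted(f.path for f in needed.values()))
```

With N retained versions per file group, this reduces the number of length lookups on base files by roughly a factor of N, which matches the 1/N-th estimate above.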

**Environment Description**

* Hudi version : master branch

* Spark version : 2.4.4

* Hive version : 2.3.6

* Hadoop version : 2.8.5

* Storage (HDFS/S3/GCS..) : S3

* Running on Docker? (yes/no) : no

**Table Info**

We observe read time increasing for all the test tables; however, it becomes
obvious pretty quickly on tables where every incremental update touches data
in 50+ out of 100 partitions. The initial state of the test table was 100
partitions, an initial size of 100 GB, and an initial file count of 6k.
Compaction is set to run every 10 delta commits, and the cleaner is set to
keep the last 50 commits. We started observing 20+ minute table loading times
after the table grew to 27k files and 400 GB.
