[jira] [Created] (FLINK-18069) Scaladocs not building since inner Java interfaces cannot be recognized

Tzu-Li (Gordon) Tai created FLINK-18069:

---

Summary: Scaladocs not building since inner Java interfaces cannot be recognized

Key: FLINK-18069

URL: https://issues.apache.org/jira/browse/FLINK-18069

Project: Flink

Issue Type: Bug

Components: API / Scala, Documentation

Affects Versions: 1.11.0

Reporter: Tzu-Li (Gordon) Tai

Assignee: Tzu-Li (Gordon) Tai

Error:

{code}

/home/buildslave/slave/flink-docs-master/build/flink-scala/src/main/java/org/apache/flink/api/scala/typeutils/Tuple2CaseClassSerializerSnapshot.java:98:

error: not found: type OuterSchemaCompatibility

protected OuterSchemaCompatibility

resolveOuterSchemaCompatibility(ScalaCaseClassSerializer>

newSerializer) {

^

/home/buildslave/slave/flink-docs-master/build/flink-scala/src/main/java/org/apache/flink/api/scala/typeutils/TraversableSerializerSnapshot.java:101:

error: not found: type OuterSchemaCompatibility

protected OuterSchemaCompatibility

resolveOuterSchemaCompatibility(TraversableSerializer newSerializer) {

^

/home/buildslave/slave/flink-docs-master/build/flink-scala/src/main/java/org/apache/flink/api/scala/typeutils/ScalaCaseClassSerializerSnapshot.java:106:

error: not found: type OuterSchemaCompatibility

protected OuterSchemaCompatibility

resolveOuterSchemaCompatibility(ScalaCaseClassSerializer newSerializer) {

^

{code}

This is similar to the issue reported here:

https://github.com/scala/bug/issues/10509.

This seems to be a problem with Scala 2.12.x. The only workaround is to redundantly spell out the fully qualified names of such interfaces.
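As a rough illustration of that workaround, here is a minimal sketch with made-up classes. `BaseSnapshot`, `Compatibility` and the two subclasses are hypothetical names, not Flink's actual classes, and the nested type is an enum only for brevity. Both subclasses compile with javac; going by the report above, only the qualified spelling would be expected to get through Scaladoc under Scala 2.12.x.

```java
public class QualifiedNestedTypeDemo {

    /** Hypothetical base class that declares a nested type. */
    public abstract static class BaseSnapshot {
        public enum Compatibility { COMPATIBLE, INCOMPATIBLE }

        protected abstract Compatibility resolve(String newSerializerName);
    }

    /** Short name: javac resolves the inherited nested type without a qualifier. */
    public static class ShortNameSnapshot extends BaseSnapshot {
        @Override
        protected Compatibility resolve(String newSerializerName) {
            return Compatibility.COMPATIBLE;
        }
    }

    /** Workaround: qualify the nested type with its enclosing class. */
    public static class QualifiedSnapshot extends BaseSnapshot {
        @Override
        protected BaseSnapshot.Compatibility resolve(String newSerializerName) {
            return BaseSnapshot.Compatibility.COMPATIBLE;
        }
    }

    /** Both spellings denote the same type, so the two overrides return the same value. */
    public static boolean bothAgree() {
        return new ShortNameSnapshot().resolve("s") == new QualifiedSnapshot().resolve("s");
    }

    public static void main(String[] args) {
        System.out.println(bothAgree());
    }
}
```

The qualifier is redundant as far as javac is concerned, which is why the change is safe for the regular Java build.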

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Comment Edited] (FLINK-18035) Executors#newCachedThreadPool could not work as expected

[

https://issues.apache.org/jira/browse/FLINK-18035?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17120644#comment-17120644

]

Chesnay Schepler edited comment on FLINK-18035 at 6/2/20, 12:35 PM:

master: 092a9553efb70dcf19f6f93b1afa94c4d496beb3

1.11: 87afb9e08b7a3fb846eaa406a139646096450f50

1.10: d4f940f4bf247f47b0304401b064317de981a724

was (Author: zentol):

master: 092a9553efb70dcf19f6f93b1afa94c4d496beb3

1.11: bd552c6f9c92600e6ce7297feda5b9494dfe15ec

1.10: d4f940f4bf247f47b0304401b064317de981a724

> Executors#newCachedThreadPool could not work as expected

>

>

> Key: FLINK-18035

> URL: https://issues.apache.org/jira/browse/FLINK-18035

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Coordination

>Affects Versions: 1.11.0, 1.10.2

>Reporter: Yang Wang

>Assignee: Chesnay Schepler

>Priority: Blocker

> Labels: pull-request-available

> Fix For: 1.11.0, 1.10.2

>

>

> In FLINK-17558, we introduced {{Executors#newCachedThreadPool}} to create a

> dedicated thread pool for TaskManager IO. However, it does not work as

> expected.

> The root cause lies in the following constructor of {{ThreadPoolExecutor}}.

> Beyond the core pool size, a new thread is started only when the workQueue

> is full. So if we set a {{LinkedBlockingQueue}} with {{Integer.MAX_VALUE}}

> capacity, only one thread will be started. The pool never grows.

>

> {code:java}

> public ThreadPoolExecutor(int corePoolSize,

> int maximumPoolSize,

> long keepAliveTime,

> TimeUnit unit,

> BlockingQueue workQueue,

> ThreadFactory threadFactory)

> {code}
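The queueing behaviour described in the quoted report can be observed with plain JDK classes. The following is a minimal sketch, not the Flink code in question: the class name `QueueCapacityDemo`, the helper `poolSizeAfterSubmitting` and the pool sizes (core 1, maximum 8) are assumptions chosen for illustration. The `SynchronousQueue` variant is included only for contrast and is not claimed to be the fix that was committed.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class QueueCapacityDemo {

    /**
     * Submits {@code tasks} blocking tasks to a pool with corePoolSize 1 and
     * maximumPoolSize 8, and returns how many threads the pool created.
     * Keep {@code tasks} at 8 or fewer: with a SynchronousQueue, further tasks would be rejected.
     */
    public static int poolSizeAfterSubmitting(boolean unboundedQueue, int tasks) {
        BlockingQueue<Runnable> workQueue = unboundedQueue
                ? new LinkedBlockingQueue<Runnable>()   // capacity Integer.MAX_VALUE, never full
                : new SynchronousQueue<Runnable>();     // holds nothing, so every offer "fails"
        ThreadPoolExecutor pool =
                new ThreadPoolExecutor(1, 8, 60L, TimeUnit.SECONDS, workQueue);
        CountDownLatch release = new CountDownLatch(1);
        try {
            for (int i = 0; i < tasks; i++) {
                pool.execute(() -> {
                    try {
                        release.await(); // keep the worker busy until we have measured
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            return pool.getPoolSize();
        } finally {
            release.countDown();
            pool.shutdown();
            try {
                pool.awaitTermination(10, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
    }

    public static void main(String[] args) {
        System.out.println("unbounded queue: " + poolSizeAfterSubmitting(true, 4));
        System.out.println("synchronous queue: " + poolSizeAfterSubmitting(false, 4));
    }
}
```

With the unbounded queue, every task after the first is queued rather than handed to a new thread, so the pool stays at its core size no matter how large `maximumPoolSize` is.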


[GitHub] [flink] flinkbot commented on pull request #12441: [FLINK-17918][table-blink] Fix AppendOnlyTopNFunction shouldn't mutate list value of MapState

flinkbot commented on pull request #12441:
URL: https://github.com/apache/flink/pull/12441#issuecomment-637512303

Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review.

## Automated Checks
Last check on commit 73b38c8ef83b559c54c66308b3b923e108e2ed86 (Tue Jun 02 12:34:19 UTC 2020)

**Warnings:**
* No documentation files were touched! Remember to keep the Flink docs up to date!

Mention the bot in a comment to re-run the automated checks.

## Review Progress
* ❓ 1. The [description] looks good.
* ❓ 2. There is [consensus] that the contribution should go into Flink.
* ❓ 3. Needs [attention] from.
* ❓ 4. The change fits into the overall [architecture].
* ❓ 5. Overall code [quality] is good.

Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process.

The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer or PMC member is required.

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`)
- `@flinkbot approve all` to approve all aspects
- `@flinkbot approve-until architecture` to approve everything until `architecture`
- `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention
- `@flinkbot disapprove architecture` to remove an approval you gave earlier

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] liyubin117 opened a new pull request #12442: [FLINK-12428][docs-zh] Translate the "Event Time" page into Chinese

liyubin117 opened a new pull request #12442:
URL: https://github.com/apache/flink/pull/12442

## What is the purpose of the change
Translate the "Event Time" page into Chinese.
The file is located at flink/docs/dev/event_time.zh.md
https://ci.apache.org/projects/flink/flink-docs-master/dev/event_time.html

## Brief change log
* translate `flink/docs/dev/event_time.zh.md`

## Verifying this change
This change is a trivial rework / code cleanup without any test coverage.

## Does this pull request potentially affect one of the following parts:
- Dependencies (does it add or upgrade a dependency): no
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no
- The serializers: no
- The runtime per-record code paths (performance sensitive): no
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: no
- The S3 file system connector: no

## Documentation
- Does this pull request introduce a new feature? no
- If yes, how is the feature documented? no

[GitHub] [flink] flinkbot edited a comment on pull request #11186: [FLINK-16200][sql] Support JSON_EXISTS for blink planner

flinkbot edited a comment on pull request #11186:
URL: https://github.com/apache/flink/pull/11186#issuecomment-589950295

## CI report:
* a0bba3877b6baecbbb5800bfe3d47f77436f4c80 UNKNOWN
* 3859e02d712ba2d889d479d697bd064a040befe6 UNKNOWN
* a74fdce8cbb740e2c2acc3e5758c634da42cb0a6 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2550)
* 13334d6b6e944dbb3d373def06daf601d4c307b3 UNKNOWN
* 6704cef1a83c753a142dcf4fb9349cb413ce Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2554)
* f4bd79920e37641f4c6537dda71836a6bafe4171 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2560)

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink] zentol merged pull request #12384: [FLINK-18010][runtime] Expand HistoryServer logging

zentol merged pull request #12384:
URL: https://github.com/apache/flink/pull/12384

[jira] [Commented] (FLINK-9900) Fix unstable test ZooKeeperHighAvailabilityITCase#testRestoreBehaviourWithFaultyStateHandles

[ https://issues.apache.org/jira/browse/FLINK-9900?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17123719#comment-17123719 ]

Chesnay Schepler commented on FLINK-9900:
-
I would ignore the recent failure for the time being since it occurred in the legacy scheduler. I could not reproduce the failure locally after ~1000 runs.

> Fix unstable test ZooKeeperHighAvailabilityITCase#testRestoreBehaviourWithFaultyStateHandles
>
>
> Key: FLINK-9900
> URL: https://issues.apache.org/jira/browse/FLINK-9900
> Project: Flink
> Issue Type: Bug
> Components: Runtime / Coordination, Tests
> Affects Versions: 1.5.1, 1.6.0, 1.9.0
> Reporter: zhangminglei
> Assignee: Chesnay Schepler
> Priority: Critical
> Labels: pull-request-available, test-stability
> Fix For: 1.9.1, 1.10.0
>
> Attachments: mvn-2.log
>
> Time Spent: 40m
> Remaining Estimate: 0h
>
> https://api.travis-ci.org/v3/job/405843617/log.txt
> Tests run: 1, Failures: 0, Errors: 1, Skipped: 0, Time elapsed: 124.598 sec <<< FAILURE! - in org.apache.flink.test.checkpointing.ZooKeeperHighAvailabilityITCase
> testRestoreBehaviourWithFaultyStateHandles(org.apache.flink.test.checkpointing.ZooKeeperHighAvailabilityITCase) Time elapsed: 120.036 sec <<< ERROR!
> org.junit.runners.model.TestTimedOutException: test timed out after 12 milliseconds
> at sun.misc.Unsafe.park(Native Method)
> at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)
> at java.util.concurrent.CompletableFuture$Signaller.block(CompletableFuture.java:1693)
> at java.util.concurrent.ForkJoinPool.managedBlock(ForkJoinPool.java:3323)
> at java.util.concurrent.CompletableFuture.waitingGet(CompletableFuture.java:1729)
> at java.util.concurrent.CompletableFuture.get(CompletableFuture.java:1895)
> at org.apache.flink.test.checkpointing.ZooKeeperHighAvailabilityITCase.testRestoreBehaviourWithFaultyStateHandles(ZooKeeperHighAvailabilityITCase.java:244)
> Results :
> Tests in error:
>
> ZooKeeperHighAvailabilityITCase.testRestoreBehaviourWithFaultyStateHandles:244 » TestTimedOut
> Tests run: 1453, Failures: 0, Errors: 1, Skipped: 29

[GitHub] [flink] zentol merged pull request #12383: [FLINK-18008][runtime] HistoryServer logs environment info

zentol merged pull request #12383:
URL: https://github.com/apache/flink/pull/12383

[GitHub] [flink] liyubin117 closed pull request #12440: [FLINK-16137][docs-zh] Translate the "Event Time" page into Chinese

liyubin117 closed pull request #12440:
URL: https://github.com/apache/flink/pull/12440

[jira] [Updated] (FLINK-17918) Blink Jobs are loosing data on recovery

[

https://issues.apache.org/jira/browse/FLINK-17918?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated FLINK-17918:

---

Labels: pull-request-available (was: )

> Blink Jobs are loosing data on recovery

> ---

>

> Key: FLINK-17918

> URL: https://issues.apache.org/jira/browse/FLINK-17918

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Checkpointing, Table SQL / Runtime

>Affects Versions: 1.11.0

>Reporter: Piotr Nowojski

>Assignee: Jark Wu

>Priority: Blocker

> Labels: pull-request-available

> Fix For: 1.11.0

>

>

> After trying to enable unaligned checkpoints by default, a lot of Blink

> streaming SQL/Table API tests containing joins or set operations are throwing

> errors indicating that we are losing some data (full records, without

> deserialisation errors). Example errors:

> {noformat}

> [ERROR] Failures:

> [ERROR] JoinITCase.testFullJoinWithEqualPk:775 expected: 3,3, null,4, null,5)> but was:

> [ERROR] JoinITCase.testStreamJoinWithSameRecord:391 expected: 1,1,1,1, 2,2,2,2, 2,2,2,2, 3,3,3,3, 3,3,3,3, 4,4,4,4, 4,4,4,4, 5,5,5,5,

> 5,5,5,5)> but was:

> [ERROR] SemiAntiJoinStreamITCase.testAntiJoin:352 expected:<0> but was:<1>

> [ERROR] SetOperatorsITCase.testIntersect:55 expected: 2,2,Hello, 3,2,Hello world)> but was:

> [ERROR] JoinITCase.testJoinPushThroughJoin:1272 expected: 2,1,Hello, 2,1,Hello world)> but was:

> {noformat}

>


[GitHub] [flink] wuchong opened a new pull request #12441: [FLINK-17918][table-blink] Fix AppendOnlyTopNFunction shouldn't mutate list value of MapState

wuchong opened a new pull request #12441:
URL: https://github.com/apache/flink/pull/12441

## What is the purpose of the change
As discussed in the JIRA issue, operators shouldn't update the value object of a state in another processElement call. AppendOnlyTopNFunction doesn't follow this rule. I have checked all the operators in the table module, and this should be the only one. All others either never update the value object of a state, or follow the get-update-put operation within a single processElement call.

## Brief change log
- Always shallow copy the list value when putting it into `dataState`.
- Update `FailingCollectionSource` to fail after at least one element was checkpointed. This may uncover more failures like this one (but can't reproduce this problem all the time).

## Verifying this change
It is hard to reproduce using existing tests. I reproduced the problem using @AHeise's [commit](https://github.com/apache/flink/commit/c05a0d865989c9959047cebcf2cd68b3838cc699), and verified this fix manually.

## Does this pull request potentially affect one of the following parts:
- Dependencies (does it add or upgrade a dependency): (yes / **no**)
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (yes / **no**)
- The serializers: (yes / **no** / don't know)
- The runtime per-record code paths (performance sensitive): (yes / **no** / don't know)
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: (yes / **no** / don't know)
- The S3 file system connector: (yes / **no** / don't know)

## Documentation
- Does this pull request introduce a new feature? (yes / **no**)
- If yes, how is the feature documented? (**not applicable** / docs / JavaDocs / not documented)
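To make the "shallow copy on put" item from the change log concrete, here is a minimal sketch that uses a plain `HashMap` as a stand-in for the state. It is not Flink's `MapState` and not the code of `AppendOnlyTopNFunction`; the class `ShallowCopyOnPutDemo` and its method are hypothetical. It only shows the aliasing hazard: if the stored list is the same object the operator keeps mutating, later calls silently change what the state holds.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class ShallowCopyOnPutDemo {

    /**
     * Puts a two-element buffer into a map standing in for state, mutates the
     * buffer afterwards (as a later processElement call might), and returns the
     * size of the list that the "state" now holds.
     */
    public static int sizeSeenByStateAfterLaterMutation(boolean copyOnPut) {
        // Stand-in for keyed map state; a plain HashMap, NOT Flink's MapState.
        Map<String, List<Integer>> dataState = new HashMap<>();
        List<Integer> buffer = new ArrayList<>(Arrays.asList(1, 2));

        if (copyOnPut) {
            dataState.put("key", new ArrayList<>(buffer)); // shallow copy: state owns its own list
        } else {
            dataState.put("key", buffer);                  // state and operator share one list
        }

        buffer.add(3); // later mutation outside of any put

        return dataState.get("key").size();
    }

    public static void main(String[] args) {
        System.out.println("shared list: " + sizeSeenByStateAfterLaterMutation(false));
        System.out.println("copied list: " + sizeSeenByStateAfterLaterMutation(true));
    }
}
```

The copy is shallow on purpose: the list elements are still shared, which is fine as long as the elements themselves are not mutated.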

[GitHub] [flink] flinkbot commented on pull request #12440: [FLINK-16137][docs-zh] Translate the "Event Time" page into Chinese

flinkbot commented on pull request #12440:
URL: https://github.com/apache/flink/pull/12440#issuecomment-637500901

Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review.

## Automated Checks
Last check on commit e19f4c519c1ccdae4000d95378884e1e6d7861c1 (Tue Jun 02 12:12:29 UTC 2020)

✅no warnings

Mention the bot in a comment to re-run the automated checks.

## Review Progress
* ❓ 1. The [description] looks good.
* ❓ 2. There is [consensus] that the contribution should go into Flink.
* ❓ 3. Needs [attention] from.
* ❓ 4. The change fits into the overall [architecture].
* ❓ 5. Overall code [quality] is good.

Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process.

The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer or PMC member is required.

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`)
- `@flinkbot approve all` to approve all aspects
- `@flinkbot approve-until architecture` to approve everything until `architecture`
- `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention
- `@flinkbot disapprove architecture` to remove an approval you gave earlier

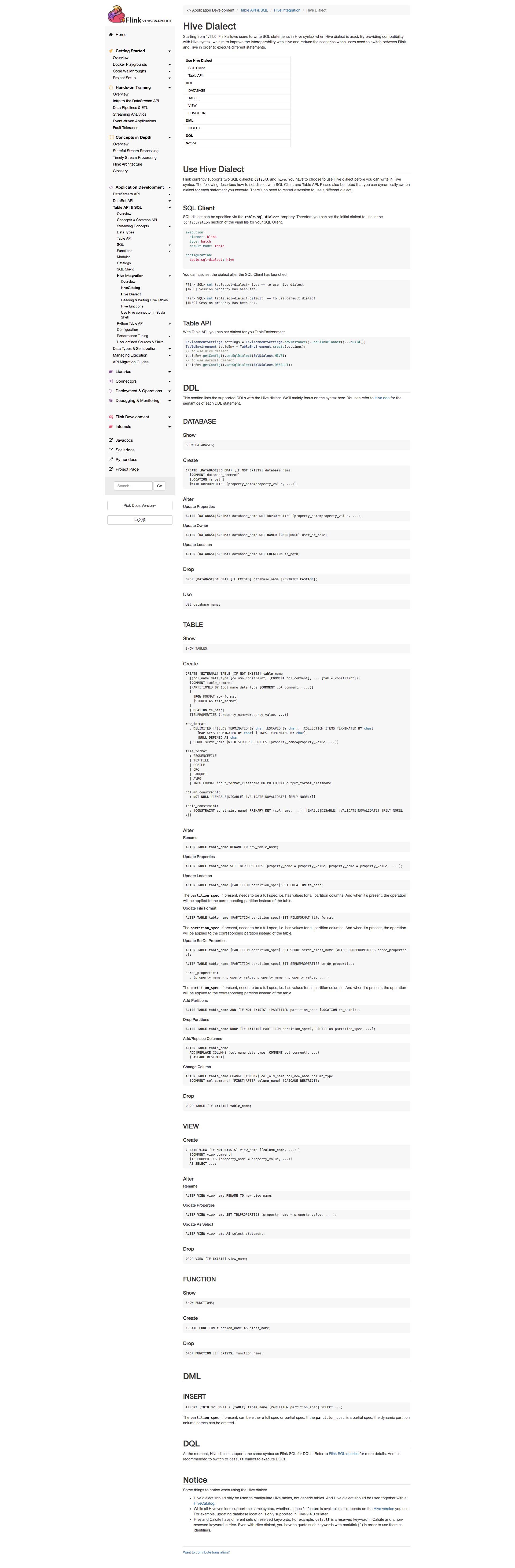

[GitHub] [flink] lirui-apache commented on pull request #12439: [FLINK-17776][hive][doc] Add documentation for DDL in hive dialect

lirui-apache commented on pull request #12439:
URL: https://github.com/apache/flink/pull/12439#issuecomment-637499989

[GitHub] [flink] lirui-apache commented on pull request #12439: [FLINK-17776][hive][doc] Add documentation for DDL in hive dialect

lirui-apache commented on pull request #12439:
URL: https://github.com/apache/flink/pull/12439#issuecomment-637500275

@JingsongLi Please take a look. Thanks.

[jira] [Updated] (FLINK-16137) Translate all DataStream API related pages into Chinese

[ https://issues.apache.org/jira/browse/FLINK-16137?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated FLINK-16137:
---
Labels: pull-request-available (was: )

> Translate all DataStream API related pages into Chinese
>
>
> Key: FLINK-16137
> URL: https://issues.apache.org/jira/browse/FLINK-16137
> Project: Flink
> Issue Type: Improvement
> Components: chinese-translation, Documentation
> Reporter: Yun Gao
> Assignee: Yun Gao
> Priority: Major
> Labels: pull-request-available
> Fix For: 1.11.0
>
>
> Translate data stream related pages into Chinese, including the pages under
> the section "Application Development/Streaming(DataStream API)" in the
> document site.

[GitHub] [flink] liyubin117 opened a new pull request #12440: [FLINK-16137][docs-zh] Translate the "Event Time" page into Chinese

liyubin117 opened a new pull request #12440:
URL: https://github.com/apache/flink/pull/12440

## What is the purpose of the change
Translate the "Event Time" page into Chinese.
The file is located at flink/docs/dev/event_time.zh.md
https://ci.apache.org/projects/flink/flink-docs-master/dev/event_time.html

## Brief change log
* translate `flink/docs/dev/event_time.zh.md`

## Verifying this change
This change is a trivial rework / code cleanup without any test coverage.

## Does this pull request potentially affect one of the following parts:
- Dependencies (does it add or upgrade a dependency): no
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no
- The serializers: no
- The runtime per-record code paths (performance sensitive): no
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: no
- The S3 file system connector: no

## Documentation
- Does this pull request introduce a new feature? no
- If yes, how is the feature documented? no

[GitHub] [flink] flinkbot commented on pull request #12439: [FLINK-17776][hive][doc] Add documentation for DDL in hive dialect

flinkbot commented on pull request #12439:
URL: https://github.com/apache/flink/pull/12439#issuecomment-637496929

Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review.

## Automated Checks
Last check on commit a8e8962ad90e67b78240a2a9023e15dec1df (Tue Jun 02 12:05:08 UTC 2020)

**Warnings:**
* Documentation files were touched, but no `.zh.md` files: Update Chinese documentation or file Jira ticket.

Mention the bot in a comment to re-run the automated checks.

## Review Progress
* ❓ 1. The [description] looks good.
* ❓ 2. There is [consensus] that the contribution should go into Flink.
* ❓ 3. Needs [attention] from.
* ❓ 4. The change fits into the overall [architecture].
* ❓ 5. Overall code [quality] is good.

Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process.

The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer or PMC member is required.

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`)
- `@flinkbot approve all` to approve all aspects
- `@flinkbot approve-until architecture` to approve everything until `architecture`
- `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention
- `@flinkbot disapprove architecture` to remove an approval you gave earlier

[jira] [Commented] (FLINK-17918) Blink Jobs are loosing data on recovery

[

https://issues.apache.org/jira/browse/FLINK-17918?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17123696#comment-17123696

]

Jark Wu commented on FLINK-17918:

-

No, all the failed join tests do not use watermarks.

> Blink Jobs are loosing data on recovery

> ---

>

> Key: FLINK-17918

> URL: https://issues.apache.org/jira/browse/FLINK-17918

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Checkpointing, Table SQL / Runtime

>Affects Versions: 1.11.0

>Reporter: Piotr Nowojski

>Assignee: Jark Wu

>Priority: Blocker

> Fix For: 1.11.0

>

>

> After trying to enable unaligned checkpoints by default, a lot of Blink

> streaming SQL/Table API tests containing joins or set operations are throwing

> errors indicating that we are losing some data (full records, without

> deserialisation errors). Example errors:

> {noformat}

> [ERROR] Failures:

> [ERROR] JoinITCase.testFullJoinWithEqualPk:775 expected: 3,3, null,4, null,5)> but was:

> [ERROR] JoinITCase.testStreamJoinWithSameRecord:391 expected: 1,1,1,1, 2,2,2,2, 2,2,2,2, 3,3,3,3, 3,3,3,3, 4,4,4,4, 4,4,4,4, 5,5,5,5,

> 5,5,5,5)> but was:

> [ERROR] SemiAntiJoinStreamITCase.testAntiJoin:352 expected:<0> but was:<1>

> [ERROR] SetOperatorsITCase.testIntersect:55 expected: 2,2,Hello, 3,2,Hello world)> but was:

> [ERROR] JoinITCase.testJoinPushThroughJoin:1272 expected: 2,1,Hello, 2,1,Hello world)> but was:

> {noformat}

>


[jira] [Updated] (FLINK-17776) Add documentation for DDL in hive dialect

[ https://issues.apache.org/jira/browse/FLINK-17776?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated FLINK-17776:
---
Labels: pull-request-available (was: )

> Add documentation for DDL in hive dialect
> -
>
> Key: FLINK-17776
> URL: https://issues.apache.org/jira/browse/FLINK-17776
> Project: Flink
> Issue Type: Sub-task
> Components: Documentation
> Reporter: Jingsong Lee
> Assignee: Rui Li
> Priority: Major
> Labels: pull-request-available
> Fix For: 1.11.0
>
>

[GitHub] [flink] lirui-apache opened a new pull request #12439: [FLINK-17776][hive][doc] Add documentation for DDL in hive dialect

lirui-apache opened a new pull request #12439:
URL: https://github.com/apache/flink/pull/12439

## What is the purpose of the change
Add doc for Hive dialect.

## Brief change log
- Add a new page for Hive dialect

## Verifying this change
Manually built the page and everything looks fine.

## Does this pull request potentially affect one of the following parts:
NA

## Documentation
NA

[GitHub] [flink] leonardBang commented on pull request #12437: [FLINK-18055] [sql-client] Fix catalog/database does not exist in sql client

leonardBang commented on pull request #12437:
URL: https://github.com/apache/flink/pull/12437#issuecomment-637493457

> > Hi, @jxeditor this issue has a opened PR #12431
>
> Please, How should I submit code under the existing pr?

Hi @jxeditor, the community usually prefers a single PR per issue. But you can help review the PR; that is one way of contributing too. To avoid conflicts with others' PRs, you can simply leave a comment below the JIRA issue saying that you want to address it before opening a PR.

[GitHub] [flink] flinkbot edited a comment on pull request #12438: [FLINK-17992][checkpointing] Exception from RemoteInputChannel#onBuffer should not fail the whole NetworkClientHandler

flinkbot edited a comment on pull request #12438:
URL: https://github.com/apache/flink/pull/12438#issuecomment-637482992

## CI report:
* 2e5eee9964e83d15c823b3865f8d15bd295d Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2562)

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink] flinkbot edited a comment on pull request #12189: [FLINK-17376][API/DataStream]Deprecated methods and related code updated

flinkbot edited a comment on pull request #12189:
URL: https://github.com/apache/flink/pull/12189#issuecomment-629635252

## CI report:
* 6ec41f21bd41a1ad6e3530acdd92d5f14d264236 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2434)
* a3afaaf29b9966ae3d0af63b8b6fcdb11ee7342c Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2561)

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink] flinkbot edited a comment on pull request #12355: [FLINK-17893] [sql-client] SQL CLI should print the root cause if the statement is invalid

flinkbot edited a comment on pull request #12355:
URL: https://github.com/apache/flink/pull/12355#issuecomment-634548726

## CI report:
* 18758d007d909ad3427c384140bf36a71e06ea1b Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2548)

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot run travis` re-run the last Travis build
- `@flinkbot run azure` re-run the last Azure build

[jira] [Commented] (FLINK-18009) Select an invalid column crashes the SQL CLI

[ https://issues.apache.org/jira/browse/FLINK-18009?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17123687#comment-17123687 ]

Nicholas Jiang commented on FLINK-18009:

[~godfreyhe] This issue is related to [SQL-CLI no exception stack|https://issues.apache.org/jira/browse/FLINK-17893].

> Select an invalid column crashes the SQL CLI
>
>
> Key: FLINK-18009
> URL: https://issues.apache.org/jira/browse/FLINK-18009
> Project: Flink
> Issue Type: Bug
> Components: Table SQL / Client
> Reporter: Rui Li
> Assignee: Nicholas Jiang
> Priority: Blocker
> Fix For: 1.11.0
>
>
> To reproduce: just select a non-existing column from table in SQL CLI

[GitHub] [flink-web] klion26 commented on pull request #342: [FLINK-17926] Fix the build problem of docker image

klion26 commented on pull request #342:
URL: https://github.com/apache/flink-web/pull/342#issuecomment-637490706

@rmetzger Hmm, it seems there is some problem after applying this patch (not sure whether it was there before applying this patch), but I can't reproduce it using this patch now.

When trying to use the base image from the main repo as @sjwiesman suggested, I encountered some errors. I'm trying to fix them on my side (using the Dockerfile from the main repo).

```
Configuration file: /Users/congxianqiu/klion26/flink-web/_config.yml
Dependency Error: Yikes! It looks like you don't have /Users/congxianqiu/klion26/flink-web/_plugins/highlightCode.rb or one of its dependencies installed. In order to use Jekyll as currently configured, you'll need to install this gem. If you've run Jekyll with `bundle exec`, ensure that you have included the /Users/congxianqiu/klion26/flink-web/_plugins/highlightCode.rb gem in your Gemfile as well. The full error message from Ruby is: 'cannot load such file -- pygments' If you run into trouble, you can find helpful resources at https://jekyllrb.com/help/!
bundler: failed to load command: jekyll (/Users/congxianqiu/klion26/flink-web/.rubydeps/ruby/2.5.0/bin/jekyll)
J
```

PS: the Dockerfile in the main repo can run on my laptop successfully.

[GitHub] [flink] yangyichao-mango commented on a change in pull request #12417: [hotfix][docs] Remove the redundant punctuation in dev/training/index.md

yangyichao-mango commented on a change in pull request #12417:
URL: https://github.com/apache/flink/pull/12417#discussion_r433816986

##
File path: docs/training/index.md
##

@@ -130,7 +130,7 @@ Streams can transport data between two operators in a *one-to-one* (or
 ## Timely Stream Processing
 For most streaming applications it is very valuable to be able re-process historic data with the
-same code that is used to process live data -- and to produce deterministic, consistent results,
+same code that is used to process live data and to produce deterministic, consistent results,

Review comment:

> A comma might be better than a dash, but some punctuation is needed to create a pause after "data".

Thanks for your review. I've applied the suggestion in a new commit.

[GitHub] [flink] leonardBang commented on a change in pull request #12436: [FLINK-17847][table sql / planner] ArrayIndexOutOfBoundsException happens in StreamExecCalc operator

leonardBang commented on a change in pull request #12436:

URL: https://github.com/apache/flink/pull/12436#discussion_r433815328

##

File path:

flink-table/flink-table-planner-blink/src/main/scala/org/apache/flink/table/planner/codegen/calls/ScalarOperatorGens.scala

##

@@ -1643,19 +1644,35 @@ object ScalarOperatorGens {

val resultTypeTerm = primitiveTypeTermForType(componentInfo)

val defaultTerm = primitiveDefaultValue(componentInfo)

+if (index.literalValue.isDefined &&

+index.literalValue.get.isInstanceOf[Int] &&

+index.literalValue.get.asInstanceOf[Int] < 1) {

+ throw new ValidationException(s"Array element access needs an index starting at 1 but was " +

Review comment:

> "Array element reference requires an index starts from 1, but was"

This text is the same as the [existing Table API validation](https://github.com/apache/flink/blob/master/flink-table/flink-table-planner/src/main/scala/org/apache/flink/table/expressions/collection.scala#L212),

so using it here keeps the two messages aligned.
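The check under discussion can be sketched in plain Java as follows. This is only an illustration under stated assumptions, not the planner's code: `ArrayIndexCheck`, its nested `ValidationException`, and `validateLiteralIndex` are hypothetical names, and the message is the one from the diff with an assumed suffix.

```java
import java.util.Optional;

/** Hypothetical stand-in for the planner-side check; not Flink's actual code. */
final class ArrayIndexCheck {

    /** Hypothetical exception type used only by this sketch. */
    static final class ValidationException extends RuntimeException {
        ValidationException(String message) {
            super(message);
        }
    }

    /**
     * Rejects a literal integer index below 1, since SQL array indices start at 1.
     * A non-literal index (empty Optional) cannot be checked here and passes through.
     */
    static void validateLiteralIndex(Optional<?> literalValue) {
        if (literalValue.isPresent() && literalValue.get() instanceof Integer) {
            int index = (Integer) literalValue.get();
            if (index < 1) {
                throw new ValidationException(
                    "Array element access needs an index starting at 1 but was " + index + ".");
            }
        }
    }
}
```

Failing on the literal before any code is generated turns a runtime `ArrayIndexOutOfBoundsException` into an early, readable validation error.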

[GitHub] [flink] godfreyhe commented on pull request #12326: [FLINK-16577] [table-planner-blink] Fix numeric type mismatch error in column interval relmetadata

godfreyhe commented on pull request #12326: URL: https://github.com/apache/flink/pull/12326#issuecomment-637486962 cc @cshuo This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #12438: [FLINK-17992][checkpointing] Exception from RemoteInputChannel#onBuffer should not fail the whole NetworkClientHandler

flinkbot commented on pull request #12438: URL: https://github.com/apache/flink/pull/12438#issuecomment-637482992 ## CI report: * 2e5eee9964e83d15c823b3865f8d15bd295d UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #12434: [FLINK-18052] Increase timeout for ES Search API in IT Cases

flinkbot edited a comment on pull request #12434: URL: https://github.com/apache/flink/pull/12434#issuecomment-637351443 ## CI report: * 0719d0f449d33d02986c4c84d9f2377e9a96bae9 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2546) * a96c2e5f943f4467332071089eaa6fd81ec155a2 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2553) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #12401: [FLINK-16057] Optimize ContinuousFileReaderOperator

flinkbot edited a comment on pull request #12401: URL: https://github.com/apache/flink/pull/12401#issuecomment-635845764 ## CI report: * 9ee16834faff9045e1f356f4696b7b3e7834527c Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2547) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #12189: [FLINK-17376][API/DataStream]Deprecated methods and related code updated

flinkbot edited a comment on pull request #12189: URL: https://github.com/apache/flink/pull/12189#issuecomment-629635252 ## CI report: * 6ec41f21bd41a1ad6e3530acdd92d5f14d264236 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2434) * a3afaaf29b9966ae3d0af63b8b6fcdb11ee7342c UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #11186: [FLINK-16200][sql] Support JSON_EXISTS for blink planner

flinkbot edited a comment on pull request #11186: URL: https://github.com/apache/flink/pull/11186#issuecomment-589950295 ## CI report: * a0bba3877b6baecbbb5800bfe3d47f77436f4c80 UNKNOWN * c3610799982dd4e4474f4414a078461e06648b7c Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2522) * 3859e02d712ba2d889d479d697bd064a040befe6 UNKNOWN * a74fdce8cbb740e2c2acc3e5758c634da42cb0a6 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2550) * 13334d6b6e944dbb3d373def06daf601d4c307b3 UNKNOWN * 6704cef1a83c753a142dcf4fb9349cb413ce Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2554) * f4bd79920e37641f4c6537dda71836a6bafe4171 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2560) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] zentol commented on a change in pull request #11586: [FLINK-5552][runtime] make JMXServer static per JVM

zentol commented on a change in pull request #11586:

URL: https://github.com/apache/flink/pull/11586#discussion_r433801486

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/management/JMXServer.java

##

@@ -0,0 +1,239 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.management;

+

+import org.apache.flink.configuration.JMXServerOptions;

+import org.apache.flink.util.NetUtils;

+

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import javax.management.remote.JMXConnectorServer;

+import javax.management.remote.JMXServiceURL;

+import javax.management.remote.rmi.RMIConnectorServer;

+import javax.management.remote.rmi.RMIJRMPServerImpl;

+

+import java.io.IOException;

+import java.lang.management.ManagementFactory;

+import java.net.MalformedURLException;

+import java.rmi.NoSuchObjectException;

+import java.rmi.NotBoundException;

+import java.rmi.Remote;

+import java.rmi.RemoteException;

+import java.rmi.registry.Registry;

+import java.rmi.server.UnicastRemoteObject;

+import java.util.Iterator;

+import java.util.concurrent.atomic.AtomicReference;

+

+

+/**

+ * JMX Server implementation that JMX clients can connect to.

+ *

+ * Heavily based on j256 simplejmx project

+ *

+ * https://github.com/j256/simplejmx/blob/master/src/main/java/com/j256/simplejmx/server/JmxServer.java

+ */

+public class JMXServer {

+ private static final Logger LOG = LoggerFactory.getLogger(JMXServer.class);

+

+ private static JMXServer instance = null;

+

+ private final AtomicReference rmiServerReference = new AtomicReference<>();

+

+ private Registry rmiRegistry;

+ private JMXConnectorServer connector;

+ private int port;

+

+ /**

+* Construct a new JMV-wide JMX server or acquire existing JMX server.

+*

+* If JMXServer static instance is already constructed, it will not be

+* reconstruct again. Instead a warning sign will be posted if the desired

+* port configuration doesn't match the existing JMXServer static instance.

+*

+* @param portsConfig port configuration of the JMX server.

+* @return JMXServer static instance.

+*/

+ public static JMXServer startInstance(String portsConfig) {

+ if (instance == null) {

+ if (!portsConfig.equals(JMXServerOptions.JMX_SERVER_PORT.defaultValue())) {

+ instance = startJMXServerWithPortRanges(portsConfig);

+ } else {

+ LOG.warn("JMX Server start failed. No explicit JMX port is configured.");

Review comment:

This should not be logged as a warning (since it is completely fine to

not have a JMXServer); probably only at debug.

[GitHub] [flink] zentol commented on a change in pull request #11586: [FLINK-5552][runtime] make JMXServer static per JVM

zentol commented on a change in pull request #11586:

URL: https://github.com/apache/flink/pull/11586#discussion_r433800992

##

File path:

flink-core/src/main/java/org/apache/flink/configuration/JMXServerOptions.java

##

@@ -0,0 +1,46 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.configuration;

+

+import org.apache.flink.annotation.PublicEvolving;

+import org.apache.flink.annotation.docs.Documentation;

+

+import static org.apache.flink.configuration.ConfigOptions.key;

+

+/**

+ * The set of configuration options relating to heartbeat manager settings.

+ */

+@PublicEvolving

+public class JMXServerOptions {

+

+ /** Port configured to enable JMX server for metrics and debugging. */

+ @Documentation.Section(Documentation.Sections.EXPERT_DEBUGGING_AND_TUNING)

+ public static final ConfigOption<String> JMX_SERVER_PORT =

+ key("jmx.server.port")

+ .defaultValue("-1")

Review comment:

But the current code handles this fine though, right?

You just have to replace

```

portsConfig.equals(JMXServerOptions.JMX_SERVER_PORT.defaultValue())

```

with

```

portsConfig == null

```

in `JMXServer#startInstance(String)`. That's ultimately all I was suggesting.
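A minimal sketch of what that suggestion could look like, assuming the option has no default value so that an unset port arrives as `null`. `JmxStartSketch` is a hypothetical stand-in for `JMXServer`; it uses `java.util.logging` instead of SLF4J to stay self-contained and returns an `Optional`, neither of which is taken from the PR. The "not configured" case is logged at a debug-like level, as requested in the other review comment on this PR.

```java
import java.util.Optional;
import java.util.logging.Level;
import java.util.logging.Logger;

/** Hypothetical stand-in for JMXServer; illustrates only the null check. */
final class JmxStartSketch {
    private static final Logger LOG = Logger.getLogger(JmxStartSketch.class.getName());

    // JVM-wide singleton, created at most once.
    private static JmxStartSketch instance;

    private final String portsConfig;

    private JmxStartSketch(String portsConfig) {
        this.portsConfig = portsConfig;
    }

    /** Starts the singleton if a port range is configured; null means "not configured". */
    static synchronized Optional<JmxStartSketch> startInstance(String portsConfig) {
        if (instance == null) {
            if (portsConfig != null) {
                instance = new JmxStartSketch(portsConfig);
            } else {
                // Running without a JMX server is fine, so this is not a warning.
                LOG.log(Level.FINE, "No JMX port configured; JMX server not started.");
            }
        } else if (portsConfig != null && !portsConfig.equals(instance.portsConfig)) {
            LOG.warning("JMX server already started with ports " + instance.portsConfig
                + "; ignoring requested ports " + portsConfig);
        }
        return Optional.ofNullable(instance);
    }
}
```

Comparing against `null` removes the need for a sentinel default such as `"-1"`, which a user could otherwise configure by accident.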

[GitHub] [flink] rmetzger commented on pull request #11923: [FLINK-17401] Add Labels to the mesos task info

rmetzger commented on pull request #11923: URL: https://github.com/apache/flink/pull/11923#issuecomment-637469526 I'm closing this PR now. Please reopen if you want to continue working on it. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] rmetzger closed pull request #11923: [FLINK-17401] Add Labels to the mesos task info

rmetzger closed pull request #11923: URL: https://github.com/apache/flink/pull/11923 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #12360: [FLINK-17970] Increase default value of cluster.io-pool.size from #cores to 4 * #cores

flinkbot edited a comment on pull request #12360: URL: https://github.com/apache/flink/pull/12360#issuecomment-63426 ## CI report: * f738742d985b130dbd5aa457fe0ad86ac6a49062 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2544) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #12393: [BP-1.11][FLINK-17970] Increase default value of cluster.io-pool.size from #cores to 4 * #cores

flinkbot edited a comment on pull request #12393: URL: https://github.com/apache/flink/pull/12393#issuecomment-635472978 ## CI report: * 16ac28ab5d2ab2e12ef6a5ad39c067dca3e85cd7 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2545) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] aljoscha commented on pull request #12189: [FLINK-17376][API/DataStream]Deprecated methods and related code updated

aljoscha commented on pull request #12189: URL: https://github.com/apache/flink/pull/12189#issuecomment-637466858 pushed changes This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #11186: [FLINK-16200][sql] Support JSON_EXISTS for blink planner

flinkbot edited a comment on pull request #11186: URL: https://github.com/apache/flink/pull/11186#issuecomment-589950295 ## CI report: * a0bba3877b6baecbbb5800bfe3d47f77436f4c80 UNKNOWN * c3610799982dd4e4474f4414a078461e06648b7c Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2522) * 3859e02d712ba2d889d479d697bd064a040befe6 UNKNOWN * a74fdce8cbb740e2c2acc3e5758c634da42cb0a6 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2550) * 13334d6b6e944dbb3d373def06daf601d4c307b3 UNKNOWN * 6704cef1a83c753a142dcf4fb9349cb413ce Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2554) * f4bd79920e37641f4c6537dda71836a6bafe4171 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #12438: [FLINK-17992][checkpointing] Exception from RemoteInputChannel#onBuffer should not fail the whole NetworkClientHandler

flinkbot commented on pull request #12438: URL: https://github.com/apache/flink/pull/12438#issuecomment-637464223 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 2e5eee9964e83d15c823b3865f8d15bd295d (Tue Jun 02 11:06:08 UTC 2020) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] zhijiangW commented on pull request #12438: [FLINK-17992][checkpointing] Exception from RemoteInputChannel#onBuffer should not fail the whole NetworkClientHandler

zhijiangW commented on pull request #12438:
URL: https://github.com/apache/flink/pull/12438#issuecomment-637463375

Cherry-picked from #12374, which was already approved and merged into release-1.11. I will merge this into master after Azure passes.

[GitHub] [flink] zhijiangW opened a new pull request #12438: [FLINK-17992][checkpointing] Exception from RemoteInputChannel#onBuffer should not fail the whole NetworkClientHandler

zhijiangW opened a new pull request #12438:
URL: https://github.com/apache/flink/pull/12438

## What is the purpose of the change

`RemoteInputChannel#onBuffer` is invoked by `CreditBasedPartitionRequestClientHandler` while receiving and decoding network data. `#onBuffer` can throw exceptions, which currently tag the error in the client handler and fail all input channels added to that handler. This can lead to the following subtle issue.

If the RemoteInputChannel is being canceled by the canceler thread, the task thread might exit earlier than the canceler thread terminates. That means the PartitionRequestClient might not yet be closed (closing is triggered by the canceler thread) when the new task attempt is already deployed to the same TaskManager. The new task might therefore reuse the previous PartitionRequestClient when requesting partitions, even though the respective client handler was already tagged with an error during the earlier `RemoteInputChannel#onBuffer` call. This causes an unnecessary second failover.

The solution is to fail only the respective task when its `RemoteInputChannel#onBuffer` throws an exception, instead of failing all channels in the client handler. The client then stays healthy and can be reused by other input channels as long as it has not been released.

## Brief change log

Do not fail the whole network client handler on an exception in `RemoteInputChannel#onBuffer`.

## Verifying this change

Added new unit test `CreditBasedPartitionRequestClientHandlerTest#testRemoteInputChannelOnBufferException`

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): (yes / **no**)
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (yes / **no**)
- The serializers: (yes / **no** / don't know)
- The runtime per-record code paths (performance sensitive): (yes / **no** / don't know)
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: (yes / **no** / don't know)
- The S3 file system connector: (yes / **no** / don't know)

## Documentation

- Does this pull request introduce a new feature? (yes / **no**)
- If yes, how is the feature documented? (**not applicable** / docs / JavaDocs / not documented)
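The idea described in this pull request, failing only the affected channel rather than the shared handler, can be sketched roughly as below. All names here (`PerChannelFailureSketch`, `Channel`, `ClientHandler`, `decode`) are hypothetical simplifications and do not reflect Flink's actual classes or signatures.

```java
/** Hypothetical, heavily simplified model of a shared client handler and its channels. */
final class PerChannelFailureSketch {

    /** Stand-in for an input channel that may throw while consuming a buffer. */
    static final class Channel {
        private final boolean failOnBuffer;
        private Throwable error;

        Channel(boolean failOnBuffer) {
            this.failOnBuffer = failOnBuffer;
        }

        void onBuffer(String buffer) {
            if (failOnBuffer) {
                throw new IllegalStateException("cannot process " + buffer);
            }
        }

        /** Records the failure on this channel only. */
        void onError(Throwable cause) {
            this.error = cause;
        }

        boolean hasFailed() {
            return error != null;
        }
    }

    /** Stand-in for the handler shared by all channels of one connection. */
    static final class ClientHandler {
        // The behaviour being replaced would set this on any channel failure,
        // poisoning the handler for every channel that reuses the connection.
        private Throwable handlerError;

        void decode(Channel target, String buffer) {
            try {
                target.onBuffer(buffer);
            } catch (Throwable t) {
                // Fail only the channel whose onBuffer threw; the handler stays usable.
                target.onError(t);
            }
        }

        boolean isHealthy() {
            return handlerError == null;
        }
    }
}
```

In this model a later task attempt that reuses the same handler does not inherit an error left behind by a channel that has since been canceled.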

[jira] [Reopened] (FLINK-9900) Fix unstable test ZooKeeperHighAvailabilityITCase#testRestoreBehaviourWithFaultyStateHandles

[ https://issues.apache.org/jira/browse/FLINK-9900?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Chesnay Schepler reopened FLINK-9900: - Assignee: Chesnay Schepler (was: Biao Liu) > Fix unstable test > ZooKeeperHighAvailabilityITCase#testRestoreBehaviourWithFaultyStateHandles > > > Key: FLINK-9900 > URL: https://issues.apache.org/jira/browse/FLINK-9900 > Project: Flink > Issue Type: Bug > Components: Runtime / Coordination, Tests >Affects Versions: 1.5.1, 1.6.0, 1.9.0 >Reporter: zhangminglei >Assignee: Chesnay Schepler >Priority: Critical > Labels: pull-request-available, test-stability > Fix For: 1.9.1, 1.10.0 > > Attachments: mvn-2.log > > Time Spent: 40m > Remaining Estimate: 0h > > https://api.travis-ci.org/v3/job/405843617/log.txt > Tests run: 1, Failures: 0, Errors: 1, Skipped: 0, Time elapsed: 124.598 sec > <<< FAILURE! - in > org.apache.flink.test.checkpointing.ZooKeeperHighAvailabilityITCase > > testRestoreBehaviourWithFaultyStateHandles(org.apache.flink.test.checkpointing.ZooKeeperHighAvailabilityITCase) > Time elapsed: 120.036 sec <<< ERROR! 
> org.junit.runners.model.TestTimedOutException: test timed out after 12 > milliseconds > at sun.misc.Unsafe.park(Native Method) > at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175) > at > java.util.concurrent.CompletableFuture$Signaller.block(CompletableFuture.java:1693) > at java.util.concurrent.ForkJoinPool.managedBlock(ForkJoinPool.java:3323) > at > java.util.concurrent.CompletableFuture.waitingGet(CompletableFuture.java:1729) > at java.util.concurrent.CompletableFuture.get(CompletableFuture.java:1895) > at > org.apache.flink.test.checkpointing.ZooKeeperHighAvailabilityITCase.testRestoreBehaviourWithFaultyStateHandles(ZooKeeperHighAvailabilityITCase.java:244) > Results : > Tests in error: > > ZooKeeperHighAvailabilityITCase.testRestoreBehaviourWithFaultyStateHandles:244 > » TestTimedOut > Tests run: 1453, Failures: 0, Errors: 1, Skipped: 29 -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] zentol commented on pull request #12169: [FLINK-17495][metrics][prometheus]Add custom labels on PrometheusReporter like PrometheusPushGatewayReporter's groupingKey

zentol commented on pull request #12169: URL: https://github.com/apache/flink/pull/12169#issuecomment-637460924 This can be implemented more generically by configuring these for the entire metric system and adding these labels as variables into the root metric groups. This would then _just work_ for all reporters. I'd suggest to close this PR and get back to the drawing board. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] XuQianJin-Stars commented on pull request #11186: [FLINK-16200][sql] Support JSON_EXISTS for blink planner

XuQianJin-Stars commented on pull request #11186:
URL: https://github.com/apache/flink/pull/11186#issuecomment-637459807

> @XuQianJin-Stars Thanks for the updating. The changes LGTM now.
> CC @wuchong

Hi @libenchao, thank you very much for reviewing this PR.

[GitHub] [flink] flinkbot edited a comment on pull request #12144: [FLINK-17384][flink-dist] support read hbase conf dir from flink.conf and change HBaseConfiguration construction.

flinkbot edited a comment on pull request #12144: URL: https://github.com/apache/flink/pull/12144#issuecomment-628475376 ## CI report: * d36b959fb16a91c15babda10dd884cbbdec58420 UNKNOWN * 3df97a6fdb130416fa447295f4ac7eb174be9cc4 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2557) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #12436: [FLINK-17847][table sql / planner] ArrayIndexOutOfBoundsException happens in StreamExecCalc operator

flinkbot edited a comment on pull request #12436: URL: https://github.com/apache/flink/pull/12436#issuecomment-637399261 ## CI report: * 3078f1dc40f7f79e96270051eec8bf22e3b90a6b Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2552) * e98155d0d16e4c8aff16f018a349587cdadadede Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2558) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #12433: [hotfix] Disable Log4j's JMX for (integration)tests

flinkbot edited a comment on pull request #12433: URL: https://github.com/apache/flink/pull/12433#issuecomment-637330294 ## CI report: * 2ed64c84d8df75737306ba2fe4f50a0e4c5856e1 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2541) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #12431: [FLINK-18055] [sql-client] Fix catalog/database does not exist in sql client

flinkbot edited a comment on pull request #12431: URL: https://github.com/apache/flink/pull/12431#issuecomment-637329823 ## CI report: * b04883a2d9156cc6827d7de1aa2db5831eb1ff56 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=2539) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] XuQianJin-Stars commented on a change in pull request #11186: [FLINK-16200][sql] Support JSON_EXISTS for blink planner

XuQianJin-Stars commented on a change in pull request #11186:

URL: https://github.com/apache/flink/pull/11186#discussion_r433786840

##

File path:

flink-table/flink-table-planner-blink/src/test/scala/org/apache/flink/table/planner/expressions/utils/ExpressionTestBase.scala

##

@@ -77,7 +77,7 @@ abstract class ExpressionTestBase {

protected val notNullable = "not null"

// used for accurate exception information checking.

- val expectedException: ExpectedException = ExpectedException.none()

+ var expectedException: ExpectedException = ExpectedException.none()

Review comment:

> do we still need to change this to `var`?

I have changed it.


[GitHub] [flink] nielsbasjes commented on a change in pull request #11245: [FLINK-15794][Kubernetes] Generate the Kubernetes default image version

nielsbasjes commented on a change in pull request #11245:

URL: https://github.com/apache/flink/pull/11245#discussion_r433786745

##

File path: docs/ops/deployment/kubernetes.md

##

@@ -262,7 +262,7 @@ spec:

spec:

containers:

- name: jobmanager

-image: flink:{% if site.is_stable %}{{site.version}}-scala{{site.scala_version_suffix}}{% else %}latest{% endif %}

+image: flink:{% if site.is_stable %}{{site.version}}-scala{{site.scala_version_suffix}}{% else %}latest # The 'latest' tag contains the latest released version of Flink for a specific Scala version which will mismatch with your application over time.{% endif %}

Review comment:

In my experience: Yes, the versions will mismatch at some point.

With a Scala mismatch the problem is big, whereas with a Flink version mismatch I suspect more subtle problems will occur.

Also, I consider Scala 2.12 being the version used for "latest" a choice for today which may change. (As I mentioned on the mailing list, I am actually against having a "latest" for something like Flink.)

Note that this comment is ONLY shown when the documentation is generated for a non-stable "SNAPSHOT" build, so the normal documentation should not show it.

I duplicated it in all of those places because these are Kubernetes config files that I expect to be used by operations people who will have limited knowledge of the application at hand.


[GitHub] [flink] jxeditor closed pull request #12437: [FLINK-18055] [sql-client] Fix catalog/database does not exist in sql client

jxeditor closed pull request #12437: URL: https://github.com/apache/flink/pull/12437

[jira] [Commented] (FLINK-16497) Improve default flush strategy for JDBC sink to make it work out-of-box

[

https://issues.apache.org/jira/browse/FLINK-16497?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17123622#comment-17123622

]

Leonard Xu commented on FLINK-16497:

Thanks [~sunjincheng121] and [~libenchao] for the detailed comments.

I want to add that the two parameters are just an out-of-the-box configuration, and users may need to tune them for their specific scenarios.

I'd like to suggest setting *interval to less than 1s*, because we have always claimed that the Flink framework can offer sub-second latency. In most use cases, users send small amounts of data to the DB and use other sinks for large-scale data. So we can set *max-rows to less than 10/50* as a basic tuning, which works together with *interval* to reduce the pressure on the DB efficiently.

WDYT?

> Improve default flush strategy for JDBC sink to make it work out-of-box

> ---

>

> Key: FLINK-16497

> URL: https://issues.apache.org/jira/browse/FLINK-16497

> Project: Flink

> Issue Type: Improvement

> Components: Connectors / JDBC, Table SQL / Ecosystem

>Reporter: Jark Wu

>Priority: Critical

> Fix For: 1.11.0

>

>

> Currently, JDBC sink provides 2 flush options:

> {code}

> 'connector.write.flush.max-rows' = '5000', -- default is 5000

> 'connector.write.flush.interval' = '2s', -- no default value

> {code}

> That means if the flush interval is not set, the buffered output rows may not be

> flushed to the database for a long time. That is surprising behavior because no

> results are output by default.

> So I propose a default flush interval of '1s' for the JDBC sink, or a default

> flush size of 1 row.
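
As a rough illustration of the two flush triggers described in the issue, here is a minimal Java sketch of a buffer that flushes when either a row-count threshold or a time interval is reached. `FlushBuffer`, its constructor arguments, and `onTimer` are hypothetical names used only for illustration; they are not the JDBC sink's actual classes. The clock value is passed in explicitly to keep the example deterministic, and an interval of 0 stands in for the "no default value" case.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical buffer (not Flink's JDBC sink): flushes on row count or elapsed time. */
final class FlushBuffer<T> {
    private final int maxRows;
    private final long intervalMillis; // 0 means "no interval configured"
    private final Consumer<List<T>> writer;
    private final List<T> rows = new ArrayList<>();
    private long lastFlushMillis;

    FlushBuffer(int maxRows, long intervalMillis, long nowMillis, Consumer<List<T>> writer) {
        this.maxRows = maxRows;
        this.intervalMillis = intervalMillis;
        this.lastFlushMillis = nowMillis;
        this.writer = writer;
    }

    /** Buffers a row and flushes once the size threshold is reached. */
    void add(T row, long nowMillis) {
        rows.add(row);
        if (rows.size() >= maxRows) {
            flush(nowMillis);
        }
    }

    /** Meant to be called periodically; flushes if an interval is set and has elapsed. */
    void onTimer(long nowMillis) {
        if (intervalMillis > 0 && !rows.isEmpty()
                && nowMillis - lastFlushMillis >= intervalMillis) {
            flush(nowMillis);
        }
    }

    /** Hands a copy of the buffered rows to the writer and resets the buffer. */
    void flush(long nowMillis) {
        if (!rows.isEmpty()) {
            writer.accept(new ArrayList<>(rows));
            rows.clear();
        }
        lastFlushMillis = nowMillis;
    }
}
```

In this sketch, a buffer created with `maxRows = 5000` and no interval holds a single row until 4999 more arrive, which is the surprising behavior the issue describes. Setting either a small interval or a small row threshold bounds that delay.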


[GitHub] [flink] wuchong commented on a change in pull request #12436: [FLINK-17847][table sql / planner] ArrayIndexOutOfBoundsException happens in StreamExecCalc operator

wuchong commented on a change in pull request #12436:

URL: https://github.com/apache/flink/pull/12436#discussion_r433776867

##

File path:

flink-table/flink-table-planner-blink/src/main/scala/org/apache/flink/table/planner/codegen/calls/ScalarOperatorGens.scala

##

@@ -1643,19 +1644,35 @@ object ScalarOperatorGens {

val resultTypeTerm = primitiveTypeTermForType(componentInfo)

val defaultTerm = primitiveDefaultValue(componentInfo)

+if (index.literalValue.isDefined &&

+index.literalValue.get.isInstanceOf[Int] &&

+index.literalValue.get.asInstanceOf[Int] < 1) {

+ throw new ValidationException(s"Array element access needs an index starting at 1 but was " +

+s"${index.literalValue.get.asInstanceOf[Int]}.")

+}

val idxStr = s"${index.resultTerm} - 1"

val arrayIsNull = s"${array.resultTerm}.isNullAt($idxStr)"

val arrayGet =

rowFieldReadAccess(ctx, idxStr, array.resultTerm, componentInfo)

+/**

+ * Return null when array index out of bounds which follows Calcite's behaviour.

+ * @see org.apache.calcite.sql.fun.SqlStdOperatorTable

+ */

val arrayAccessCode =

- s"""

- |${array.code}

- |${index.code}

- |boolean $nullTerm = ${array.nullTerm} || ${index.nullTerm} || $arrayIsNull;

- |$resultTypeTerm $resultTerm = $nullTerm ? $defaultTerm : $arrayGet;

- |""".stripMargin

-

+s"""

+|${array.code}

+|${index.code}

+|$resultTypeTerm $resultTerm;

+|boolean $nullTerm;

+|if (${idxStr} < 0 || ${idxStr} >= ${array.resultTerm}.size()) {

Review comment:

I think it's better to return null than to throw an exception, because streaming is an online service. Besides, Calcite's definition of element access also returns null when the array index is out of bounds:

`org.apache.calcite.sql.fun.SqlStdOperatorTable#ITEM`

But could you add a description of this to the documentation? @leonardBang
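
The runtime behaviour proposed here can be sketched in plain Java as follows. This is only an illustration of "1-based index, null when out of bounds"; `ArrayItem` and `itemOrNull` are hypothetical names and this is not the code that `ScalarOperatorGens` generates.

```java
/** Hypothetical helper illustrating the discussed semantics; not Flink's generated code. */
final class ArrayItem {
    private ArrayItem() {}

    /**
     * Returns the element at a 1-based index, or null when the array is null,
     * the index is null, or the index falls outside the array.
     */
    static <T> T itemOrNull(T[] array, Integer index) {
        if (array == null || index == null) {
            return null;
        }
        int zeroBased = index - 1; // SQL array indexes start at 1
        if (zeroBased < 0 || zeroBased >= array.length) {
            return null; // out of bounds: yield null instead of throwing
        }
        return array[zeroBased];
    }
}
```

In the diff above, a literal index below 1 is still rejected early with a `ValidationException`. A null result like the one sketched here only matters for indexes that are not known until runtime, such as an index read from a column.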


[GitHub] [flink] libenchao commented on a change in pull request #12436: [FLINK-17847][table sql / planner] ArrayIndexOutOfBoundsException happens in StreamExecCalc operator

libenchao commented on a change in pull request #12436:

URL: https://github.com/apache/flink/pull/12436#discussion_r433776897

##

File path:

flink-table/flink-table-planner-blink/src/main/scala/org/apache/flink/table/planner/codegen/calls/ScalarOperatorGens.scala

##

@@ -1643,19 +1644,35 @@ object ScalarOperatorGens {

val resultTypeTerm = primitiveTypeTermForType(componentInfo)

val defaultTerm = primitiveDefaultValue(componentInfo)

+if (index.literalValue.isDefined &&

+index.literalValue.get.isInstanceOf[Int] &&

+index.literalValue.get.asInstanceOf[Int] < 1) {

+ throw new ValidationException(s"Array element access needs an index starting at 1 but was " +

+s"${index.literalValue.get.asInstanceOf[Int]}.")

+}

val idxStr = s"${index.resultTerm} - 1"

val arrayIsNull = s"${array.resultTerm}.isNullAt($idxStr)"

val arrayGet =

rowFieldReadAccess(ctx, idxStr, array.resultTerm, componentInfo)

+/**

+ * Return null when array index out of bounds which follows Calcite's behaviour.

+ * @see org.apache.calcite.sql.fun.SqlStdOperatorTable

+ */

val arrayAccessCode =

- s"""

- |${array.code}

- |${index.code}

- |boolean $nullTerm = ${array.nullTerm} || ${index.nullTerm} || $arrayIsNull;

- |$resultTypeTerm $resultTerm = $nullTerm ? $defaultTerm : $arrayGet;

- |""".stripMargin

-

+s"""

+|${array.code}

+|${index.code}

+|$resultTypeTerm $resultTerm;

+|boolean $nullTerm;

+|if (${idxStr} < 0 || ${idxStr} >= ${array.resultTerm}.size()) {

Review comment:

Consider this case, where the index is a column rather than a literal:

```sql

CREATE TABLE my_source (

idx int,

my_arr array

) WITH (...);

SELECT my_arr[idx] FROM my_source;

```


[jira] [Commented] (FLINK-17708) ScalarFunction has different classloader after submit task jar to cluster。

[

https://issues.apache.org/jira/browse/FLINK-17708?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17123604#comment-17123604

]

xiemeilong commented on FLINK-17708:

Yes, this error happened on 1.10.1. This issue is very similar to FLINK-16662,

except that it happens with a ScalarFunction. The local mode is the standalone

mode. It only happens when I submit the jar to the cluster.

> ScalarFunction has different classloader after submit task jar to cluster。

> --

>

> Key: FLINK-17708

> URL: https://issues.apache.org/jira/browse/FLINK-17708

> Project: Flink

> Issue Type: Bug

> Components: Table SQL / Planner

>Affects Versions: 1.10.1

>Reporter: xiemeilong

>Priority: Major

>

> The issue is similar to FLINK-16662

> It only occurs when submitting the task to a cluster, not in local mode.

>

> {code:java}

> Caused by: org.apache.flink.table.api.ValidationException: Given parameters

> of function 'generateDecoder' do not match any signature.

> Actual: (com.yunmo.iot.schema.RecordFormat, com.yunmo.iot.schema.Schema)

> Expected: (com.yunmo.iot.schema.RecordFormat, com.yunmo.iot.schema.Schema)

> {code}

>

>


[GitHub] [flink] wuchong commented on a change in pull request #12436: [FLINK-17847][table sql / planner] ArrayIndexOutOfBoundsException happens in StreamExecCalc operator

wuchong commented on a change in pull request #12436:

URL: https://github.com/apache/flink/pull/12436#discussion_r433775688

##

File path:

flink-table/flink-table-planner-blink/src/main/scala/org/apache/flink/table/planner/codegen/calls/ScalarOperatorGens.scala

##

@@ -1643,19 +1644,35 @@ object ScalarOperatorGens {

val resultTypeTerm = primitiveTypeTermForType(componentInfo)

val defaultTerm = primitiveDefaultValue(componentInfo)

+if (index.literalValue.isDefined &&

+index.literalValue.get.isInstanceOf[Int] &&

+index.literalValue.get.asInstanceOf[Int] < 1) {

+ throw new ValidationException(s"Array element access needs an index starting at 1 but was " +

Review comment:

"Array element reference requires an index starts from 1, but was"


[GitHub] [flink] jxeditor commented on pull request #12437: [FLINK-18055] [sql-client] Fix catalog/database does not exist in sql client

jxeditor commented on pull request #12437:

URL: https://github.com/apache/flink/pull/12437#issuecomment-637444390

> Hi, @jxeditor this issue has an opened PR #12431

How should I submit code under the existing PR, please?

[jira] [Updated] (FLINK-18068) Job scheduling stops but not exits after throwing non-fatal exception

[