[GitHub] [hudi] SteNicholas opened a new pull request, #7940: [HUDI-5787] HoodieHiveCatalog should not delete data for dropping external table

SteNicholas opened a new pull request, #7940:

URL: https://github.com/apache/hudi/pull/7940

### Change Logs

`HoodieHiveCatalog` should not delete data when dropping a Hive external table, for example, when the value of the `hoodie.datasource.hive_sync.create_managed_table` config is false.

### Impact

`HoodieHiveCatalog` drops the external table without deleting the data.

### Risk level (write none, low medium or high below)

_If medium or high, explain what verification was done to mitigate the risks._

### Documentation Update

_Describe any necessary documentation update if there is any new feature, config, or user-facing change_

- _The config description must be updated if new configs are added or the default value of the configs are changed_

- _Any new feature or user-facing change requires updating the Hudi website. Please create a Jira ticket, attach the ticket number here and follow the [instruction](https://hudi.apache.org/contribute/developer-setup#website) to make changes to the website._

### Contributor's checklist

- [x] Read through [contributor's guide](https://hudi.apache.org/contribute/how-to-contribute)

- [x] Change Logs and Impact were stated clearly

- [x] Adequate tests were added if applicable

- [x] CI passed

--

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HUDI-5787) HoodieHiveCatalog should not delete data for dropping external table

[ https://issues.apache.org/jira/browse/HUDI-5787?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated HUDI-5787:

Labels: pull-request-available (was: )

> HoodieHiveCatalog should not delete data for dropping external table
>
> Key: HUDI-5787
> URL: https://issues.apache.org/jira/browse/HUDI-5787
> Project: Apache Hudi
> Issue Type: Improvement
> Components: flink
> Reporter: Nicholas Jiang
> Assignee: Nicholas Jiang
> Priority: Major
> Labels: pull-request-available
> Fix For: 0.13.1
>
> HoodieHiveCatalog should not delete data when dropping the Hive external table, for example, the value of the 'hoodie.datasource.hive_sync.create_managed_table' config is false.

--

This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [hudi] eric9204 closed pull request #7205: [HUDI-5094] modify avro schema for drop partition fields case

eric9204 closed pull request #7205: [HUDI-5094] modify avro schema for drop partition fields case URL: https://github.com/apache/hudi/pull/7205

[GitHub] [hudi] hudi-bot commented on pull request #7940: [HUDI-5787] HoodieHiveCatalog should not delete data for dropping external table

hudi-bot commented on PR #7940: URL: https://github.com/apache/hudi/pull/7940#issuecomment-1429334086 ## CI report: * 5e9308f176e728950ddfe41a931b54eae4e6f40a UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] hudi-bot commented on pull request #7868: [HUDI-1593] Add support for "show restores" and "show restore" commands in hudi-cli

hudi-bot commented on PR #7868: URL: https://github.com/apache/hudi/pull/7868#issuecomment-1429341996 ## CI report: * 943c91a266397e07f0aa10289ed78ef277ce42f9 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=15149)

[GitHub] [hudi] hudi-bot commented on pull request #7940: [HUDI-5787] HoodieHiveCatalog should not delete data for dropping external table

hudi-bot commented on PR #7940: URL: https://github.com/apache/hudi/pull/7940#issuecomment-1429342471 ## CI report: * 5e9308f176e728950ddfe41a931b54eae4e6f40a Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=15154)

[GitHub] [hudi] stream2000 closed pull request #7918: [MINOR] Fix spark sql run clean do not exit

stream2000 closed pull request #7918: [MINOR] Fix spark sql run clean do not exit URL: https://github.com/apache/hudi/pull/7918

[GitHub] [hudi] gaoshihang opened a new pull request, #7941: [HUDI-5786] Add a new config to specific spark write rdd storage level

gaoshihang opened a new pull request, #7941:

URL: https://github.com/apache/hudi/pull/7941

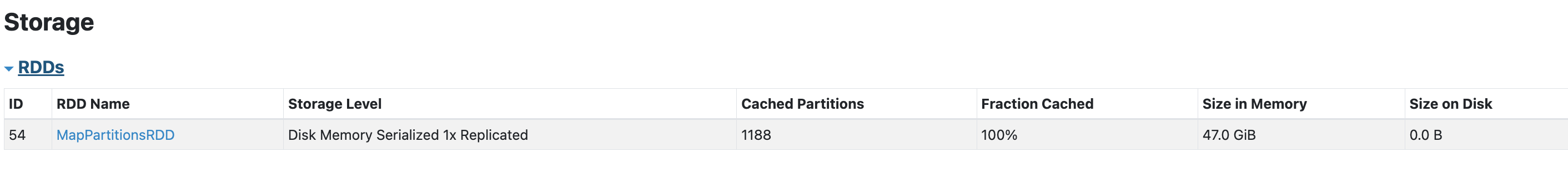

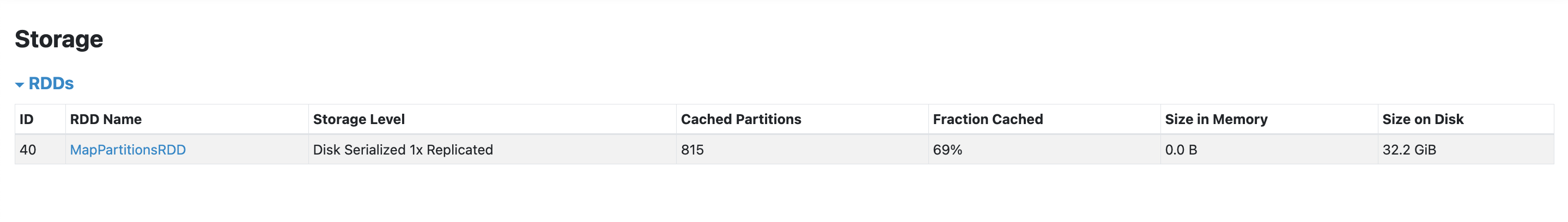

### Change Logs

In `BaseSparkCommitActionExecutor.java`, the storage level of this RDD is hardcoded as `persist(MEMORY_AND_DISK_SER)`:

`// TODO: Consistent contract in HoodieWriteClient regarding preppedRecord storage level handling
JavaRDD<HoodieRecord<T>> inputRDD = HoodieJavaRDD.getJavaRDD(inputRecords);
if (inputRDD.getStorageLevel() == StorageLevel.NONE()) {
  inputRDD.persist(StorageLevel.MEMORY_AND_DISK_SER());
} else {
  LOG.info("RDD PreppedRecords was persisted at: " + inputRDD.getStorageLevel());
}`

However, there is currently no parameter for changing this storage level.

### Impact

Adds a new config, `hoodie.spark.write.storage.level`, that specifies the storage level of this RDD.

1. The config's default value is `MEMORY_AND_DISK_SER`.

2. To use another storage level, set the config accordingly, for example `"hoodie.spark.write.storage.level": "DISK_ONLY"`.

3. If the storage level is invalid, an exception is thrown. For example, setting `"hoodie.spark.write.storage.level": "DISKE_ONLY"` fails with:

Caused by: java.lang.IllegalArgumentException: Invalid StorageLevel: DISKE_ONLY
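The three cases above (default value, valid override, invalid value) can be sketched in plain Java. This is only an illustration under stated assumptions, not the code from the PR: the class `StorageLevelConfigSketch`, the enum `WriteStorageLevel`, and the helper `resolve` are hypothetical names, and the enum lists only a subset of storage level names as a stand-in for Spark's `StorageLevel`. The PR itself presumably delegates to Spark's `StorageLevel.fromString`, which produces the `Invalid StorageLevel` message quoted above.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, Spark-free sketch of resolving the storage level config.
final class StorageLevelConfigSketch {
  // Config key and default value as described in the PR.
  static final String CONFIG_KEY = "hoodie.spark.write.storage.level";
  static final String DEFAULT_LEVEL = "MEMORY_AND_DISK_SER";

  // Assumption: a subset of storage level names, standing in for Spark's StorageLevel.
  enum WriteStorageLevel {
    NONE, DISK_ONLY, MEMORY_ONLY, MEMORY_ONLY_SER, MEMORY_AND_DISK, MEMORY_AND_DISK_SER
  }

  // Falls back to the default when the key is absent; rejects unknown names.
  static WriteStorageLevel resolve(Map<String, String> props) {
    String raw = props.getOrDefault(CONFIG_KEY, DEFAULT_LEVEL);
    try {
      return WriteStorageLevel.valueOf(raw);
    } catch (IllegalArgumentException e) {
      throw new IllegalArgumentException("Invalid StorageLevel: " + raw, e);
    }
  }

  public static void main(String[] args) {
    Map<String, String> props = new HashMap<>();
    System.out.println(resolve(props));            // default applies

    props.put(CONFIG_KEY, "DISK_ONLY");
    System.out.println(resolve(props));            // explicit override

    props.put(CONFIG_KEY, "DISKE_ONLY");           // typo in the level name
    try {
      resolve(props);
    } catch (IllegalArgumentException e) {
      System.out.println(e.getMessage());
    }
  }
}
```

Failing fast on an unknown name, rather than silently falling back to the default, keeps a misspelled level from going unnoticed until a job runs with an unintended caching strategy.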

### Risk level (write none, low medium or high below)

Low

### Documentation Update

_Describe any necessary documentation update if there is any new feature, config, or user-facing change_

- _The config description must be updated if new configs are added or the default value of the configs are changed_

- _Any new feature or user-facing change requires updating the Hudi website. Please create a Jira ticket, attach the ticket number here and follow the [instruction](https://hudi.apache.org/contribute/developer-setup#website) to make changes to the website._

### Contributor's checklist

- [ ] Read through [contributor's guide](https://hudi.apache.org/contribute/how-to-contribute)

- [ ] Change Logs and Impact were stated clearly

- [ ] Adequate tests were added if applicable

- [ ] CI passed

[jira] [Updated] (HUDI-5786) Add a new config to specifies the cache level for the rdd spark write to hudi

[ https://issues.apache.org/jira/browse/HUDI-5786?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated HUDI-5786:

Labels: pull-request-available (was: )

> Add a new config to specifies the cache level for the rdd spark write to hudi
>
> Key: HUDI-5786
> URL: https://issues.apache.org/jira/browse/HUDI-5786
> Project: Apache Hudi
> Issue Type: Improvement
> Components: spark
> Reporter: ShiHang Gao
> Priority: Major
> Labels: pull-request-available
>
> Before building the workload profile, partitioning, and writing data, the cache level of the Spark RDD is hard-coded to MEMORY_AND_DISK_SER; a new configuration is added to set this storage level.

[GitHub] [hudi] Zouxxyy commented on a diff in pull request #7872: [HUDI-5716] Cleaning up `Partitioner`s hierarchy

Zouxxyy commented on code in PR #7872:

URL: https://github.com/apache/hudi/pull/7872#discussion_r1105531548

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/RowCustomColumnsSortPartitioner.java:

##

@@ -19,43 +19,70 @@

package org.apache.hudi.execution.bulkinsert;

import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.common.table.HoodieTableConfig;

import org.apache.hudi.config.HoodieWriteConfig;

-import org.apache.hudi.table.BulkInsertPartitioner;

-

+import org.apache.spark.sql.Column;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

+import scala.collection.JavaConverters;

import java.util.Arrays;

+import java.util.stream.Collectors;

+

+import static org.apache.hudi.common.util.ValidationUtils.checkState;

+import static org.apache.hudi.execution.bulkinsert.RDDCustomColumnsSortPartitioner.getOrderByColumnNames;

 /**

- * A partitioner that does sorting based on specified column values for each spark partitions.

+ * A partitioner that does local sorting for each RDD partition based on the tuple of

+ * values of the columns configured for ordering.

  */

-public class RowCustomColumnsSortPartitioner implements BulkInsertPartitioner<Dataset<Row>> {

+public class RowCustomColumnsSortPartitioner extends RepartitioningBulkInsertPartitionerBase<Dataset<Row>> {

-  private final String[] sortColumnNames;

+  private final String[] orderByColumnNames;

-  public RowCustomColumnsSortPartitioner(HoodieWriteConfig config) {

-    this.sortColumnNames = getSortColumnName(config);

+  public RowCustomColumnsSortPartitioner(HoodieWriteConfig config, HoodieTableConfig tableConfig) {

+    super(tableConfig);

+    this.orderByColumnNames = getOrderByColumnNames(config);

+

+    checkState(orderByColumnNames.length > 0);

   }

-  public RowCustomColumnsSortPartitioner(String[] columnNames) {

-    this.sortColumnNames = columnNames;

+  public RowCustomColumnsSortPartitioner(String[] columnNames, HoodieTableConfig tableConfig) {

+    super(tableConfig);

+    this.orderByColumnNames = columnNames;

+

+    checkState(orderByColumnNames.length > 0);

   }

   @Override

-  public Dataset<Row> repartitionRecords(Dataset<Row> records, int outputSparkPartitions) {

-    final String[] sortColumns = this.sortColumnNames;

-    return records.sort(HoodieRecord.PARTITION_PATH_METADATA_FIELD, sortColumns)

-        .coalesce(outputSparkPartitions);

+  public Dataset<Row> repartitionRecords(Dataset<Row> dataset, int targetPartitionNumHint) {

+    Dataset<Row> repartitionedDataset;

+

+    // NOTE: In case of partitioned table even "global" ordering (across all RDD partitions) could

+    //       not change table's partitioning and therefore there's no point in doing global sorting

+    //       across "physical" partitions, and instead we can reduce total amount of data being

+    //       shuffled by doing do "local" sorting:

Review Comment:

As far as I know, `RowCustomColumnsSortPartitioner` is only used in `cluster`. At that point, the files in the same file group (FG) should already be in one physical partition.

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/NonSortPartitioner.java:

##

@@ -31,38 +29,15 @@

*

Review Comment:

`enforceNumOutputPartitions` has been deleted, so the wording here should probably be updated.

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/SparkBulkInsertPartitionerBase.java:

##

@@ -0,0 +1,62 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.execution.bulkinsert;

+

+import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.table.BulkInsertPartitioner;

+import org.apache.spark.api.java.JavaRDD;

+import org.apache.spark.sql.Dataset;

+import org.apache.spark.sql.HoodieUnsafeUtils$;

+import org.apache.spark.sql.Row;

+import org.apache.spark.sql.SparkSession$;

+

+public abstract class SparkBulkInsertPartitionerBase<T> implements BulkInsertPartitioner<T> {

+

+  protected static Dataset<Row> tryCoalesce(Dataset<Row> dataset, int targetPartitionNumHint) {

+    // NOTE: In case incoming [[Dataset]]'s partition count matches the target one,

+// we short

[GitHub] [hudi] kazdy commented on pull request #7935: [DRAFT] Add maven-build-cache-extension

kazdy commented on PR #7935: URL: https://github.com/apache/hudi/pull/7935#issuecomment-1429415229 @xushiyan would you be interested in introducing this extension? Although just adding the extension works, it comes with some configuration worth reviewing (excluding files, etc.). It will require changes in CI, like bumping mvn to 3.9. It also depends on whether you would prefer a remote cache to speed up builds, or a clean build with no cache in CI. A remote cache could reduce CI time a bit and leave more time for running tests. I also see that mvn install failed in CI with OOM, so that could be an issue. Anyway, it seems like a pretty big change, and it feels like something for Hudi committers/maintainers. What do you think? I'm willing to help, of course :)

[GitHub] [hudi] Zouxxyy commented on a diff in pull request #7872: [HUDI-5716] Cleaning up `Partitioner`s hierarchy

Zouxxyy commented on code in PR #7872:

URL: https://github.com/apache/hudi/pull/7872#discussion_r1105505809

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/SparkBulkInsertPartitionerBase.java:

##

@@ -0,0 +1,62 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.execution.bulkinsert;

+

+import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.table.BulkInsertPartitioner;

+import org.apache.spark.api.java.JavaRDD;

+import org.apache.spark.sql.Dataset;

+import org.apache.spark.sql.HoodieUnsafeUtils$;

+import org.apache.spark.sql.Row;

+import org.apache.spark.sql.SparkSession$;

+

+public abstract class SparkBulkInsertPartitionerBase<T> implements BulkInsertPartitioner<T> {

+

+  protected static Dataset<Row> tryCoalesce(Dataset<Row> dataset, int targetPartitionNumHint) {

+    // NOTE: In case incoming [[Dataset]]'s partition count matches the target one,

+    //       we short-circuit coalescing altogether (since this isn't done by Spark itself)

+    if (targetPartitionNumHint > 0 && targetPartitionNumHint != HoodieUnsafeUtils$.MODULE$.getNumPartitions(dataset)) {

+      return dataset.coalesce(targetPartitionNumHint);

+    }

+

+    return dataset;

+  }

+

+  protected static <T> JavaRDD<HoodieRecord<T>> tryCoalesce(JavaRDD<HoodieRecord<T>> records,

+                                                            int targetPartitionNumHint) {

+    // NOTE: In case incoming [[RDD]]'s partition count matches the target one,

+    //       we short-circuit coalescing altogether (since this isn't done by Spark itself)

+    if (targetPartitionNumHint > 0 && targetPartitionNumHint != records.getNumPartitions()) {

Review Comment:

When `targetPartitionNumHint > records.getNumPartitions()`, `coalesce` is meaningless, so maybe we can use `targetPartitionNumHint < records.getNumPartitions()` as the condition.

In addition, I have a question: if `targetPartitionNumHint > records.getNumPartitions()`, should we use `repartition` instead of `coalesce`?
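The decision the reviewer is asking about can be sketched without Spark. Without a shuffle, Spark's `coalesce` can only reduce the partition count, so a larger target needs `repartition`, which shuffles. The class `PartitionAdjustmentSketch`, the enum `Action`, and the method `choose` below are hypothetical names used only for illustration. They model the reviewer's suggestion, not the behavior of the code in the PR.

```java
// Hypothetical, Spark-free sketch of choosing between coalesce and repartition.
final class PartitionAdjustmentSketch {
  enum Action { NONE, COALESCE, REPARTITION }

  // Picks the operation needed to move from currentPartitions to the hinted target.
  static Action choose(int currentPartitions, int targetPartitionNumHint) {
    // A non-positive hint means "no preference"; an equal count needs no work.
    if (targetPartitionNumHint <= 0 || targetPartitionNumHint == currentPartitions) {
      return Action.NONE;
    }
    // Shrinking can merge existing partitions without a shuffle.
    if (targetPartitionNumHint < currentPartitions) {
      return Action.COALESCE;
    }
    // Growing requires redistributing records, which implies a shuffle.
    return Action.REPARTITION;
  }

  public static void main(String[] args) {
    System.out.println(choose(10, 4));   // fewer partitions requested
    System.out.println(choose(4, 10));   // more partitions requested
    System.out.println(choose(4, 4));    // already at the target
    System.out.println(choose(4, 0));    // no hint given
  }
}
```

Whether paying for a shuffle in the growing case is worthwhile depends on the workload, which is the open question raised in the review comment.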

[GitHub] [hudi] hudi-bot commented on pull request #7941: [HUDI-5786] Add a new config to specific spark write rdd storage level

hudi-bot commented on PR #7941: URL: https://github.com/apache/hudi/pull/7941#issuecomment-1429418042 ## CI report: * 42e12ad1b6bebdcc3dc9d985e5be661b198f3f5c UNKNOWN

[GitHub] [hudi] Zouxxyy commented on a diff in pull request #7872: [HUDI-5716] Cleaning up `Partitioner`s hierarchy

Zouxxyy commented on code in PR #7872:

URL: https://github.com/apache/hudi/pull/7872#discussion_r1105540207

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/RowCustomColumnsSortPartitioner.java:

##

@@ -19,43 +19,70 @@

package org.apache.hudi.execution.bulkinsert;

import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.common.table.HoodieTableConfig;

import org.apache.hudi.config.HoodieWriteConfig;

-import org.apache.hudi.table.BulkInsertPartitioner;

-

+import org.apache.spark.sql.Column;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

+import scala.collection.JavaConverters;

import java.util.Arrays;

+import java.util.stream.Collectors;

+

+import static org.apache.hudi.common.util.ValidationUtils.checkState;

+import static org.apache.hudi.execution.bulkinsert.RDDCustomColumnsSortPartitioner.getOrderByColumnNames;

 /**

- * A partitioner that does sorting based on specified column values for each spark partitions.

+ * A partitioner that does local sorting for each RDD partition based on the tuple of

+ * values of the columns configured for ordering.

  */

-public class RowCustomColumnsSortPartitioner implements BulkInsertPartitioner<Dataset<Row>> {

+public class RowCustomColumnsSortPartitioner extends RepartitioningBulkInsertPartitionerBase<Dataset<Row>> {

-  private final String[] sortColumnNames;

+  private final String[] orderByColumnNames;

-  public RowCustomColumnsSortPartitioner(HoodieWriteConfig config) {

-    this.sortColumnNames = getSortColumnName(config);

+  public RowCustomColumnsSortPartitioner(HoodieWriteConfig config, HoodieTableConfig tableConfig) {

+    super(tableConfig);

+    this.orderByColumnNames = getOrderByColumnNames(config);

+

+    checkState(orderByColumnNames.length > 0);

   }

-  public RowCustomColumnsSortPartitioner(String[] columnNames) {

-    this.sortColumnNames = columnNames;

+  public RowCustomColumnsSortPartitioner(String[] columnNames, HoodieTableConfig tableConfig) {

+    super(tableConfig);

+    this.orderByColumnNames = columnNames;

+

+    checkState(orderByColumnNames.length > 0);

   }

   @Override

-  public Dataset<Row> repartitionRecords(Dataset<Row> records, int outputSparkPartitions) {

-    final String[] sortColumns = this.sortColumnNames;

-    return records.sort(HoodieRecord.PARTITION_PATH_METADATA_FIELD, sortColumns)

-        .coalesce(outputSparkPartitions);

+  public Dataset<Row> repartitionRecords(Dataset<Row> dataset, int targetPartitionNumHint) {

+    Dataset<Row> repartitionedDataset;

+

+    // NOTE: In case of partitioned table even "global" ordering (across all RDD partitions) could

+    //       not change table's partitioning and therefore there's no point in doing global sorting

+    //       across "physical" partitions, and instead we can reduce total amount of data being

+    //       shuffled by doing do "local" sorting:

+    //          - First, re-partitioning dataset such that "logical" partitions are aligned w/

+    //          "physical" ones

+    //          - Sorting locally w/in RDD ("logical") partitions

+    //

+    //       Non-partitioned tables will be globally sorted.

+    if (isPartitionedTable) {

+      repartitionedDataset = dataset.repartition(handleTargetPartitionNumHint(targetPartitionNumHint),

+          new Column(HoodieRecord.PARTITION_PATH_METADATA_FIELD));

+    } else {

+      repartitionedDataset = tryCoalesce(dataset, targetPartitionNumHint);

Review Comment:

In addition, I wonder whether `coalesce` can meet our needs. For example, if we want to rewrite a file group (FG) containing N files into M files (M > N) using `cluster`, a shuffle needs to happen anyway.

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/RowCustomColumnsSortPartitioner.java:

##

@@ -19,43 +19,70 @@

package org.apache.hudi.execution.bulkinsert;

import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.common.table.HoodieTableConfig;

import org.apache.hudi.config.HoodieWriteConfig;

-import org.apache.hudi.table.BulkInsertPartitioner;

-

+import org.apache.spark.sql.Column;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

+import scala.collection.JavaConverters;

import java.util.Arrays;

+import java.util.stream.Collectors;

+

+import static org.apache.hudi.common.util.ValidationUtils.checkState;

+import static org.apache.hudi.execution.bulkinsert.RDDCustomColumnsSortPartitioner.getOrderByColumnNames;

 /**

- * A partitioner that does sorting based on specified column values for each spark partitions.

+ * A partitioner that does local sorting for each RDD partition based on the tuple of

+ * values of the columns configured for ordering.

  */

-public class RowCustomColumnsSortPartitioner implements BulkInsertPartitioner<Dataset<Row>> {

+public class RowCustomColumnsSortPartitioner extends RepartitioningBulkInsertPartitionerBase<Dataset<Row>> {

- private final String[] sortColumnNames;

+ private final String[] ord

[GitHub] [hudi] Zouxxyy commented on a diff in pull request #7872: [HUDI-5716] Cleaning up `Partitioner`s hierarchy

Zouxxyy commented on code in PR #7872:

URL: https://github.com/apache/hudi/pull/7872#discussion_r1105540267

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/RowCustomColumnsSortPartitioner.java:

##

@@ -19,43 +19,70 @@

package org.apache.hudi.execution.bulkinsert;

import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.common.table.HoodieTableConfig;

import org.apache.hudi.config.HoodieWriteConfig;

-import org.apache.hudi.table.BulkInsertPartitioner;

-

+import org.apache.spark.sql.Column;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

+import scala.collection.JavaConverters;

import java.util.Arrays;

+import java.util.stream.Collectors;

+

+import static org.apache.hudi.common.util.ValidationUtils.checkState;

+import static org.apache.hudi.execution.bulkinsert.RDDCustomColumnsSortPartitioner.getOrderByColumnNames;

 /**

- * A partitioner that does sorting based on specified column values for each spark partitions.

+ * A partitioner that does local sorting for each RDD partition based on the tuple of

+ * values of the columns configured for ordering.

  */

-public class RowCustomColumnsSortPartitioner implements BulkInsertPartitioner<Dataset<Row>> {

+public class RowCustomColumnsSortPartitioner extends RepartitioningBulkInsertPartitionerBase<Dataset<Row>> {

-  private final String[] sortColumnNames;

+  private final String[] orderByColumnNames;

-  public RowCustomColumnsSortPartitioner(HoodieWriteConfig config) {

-    this.sortColumnNames = getSortColumnName(config);

+  public RowCustomColumnsSortPartitioner(HoodieWriteConfig config, HoodieTableConfig tableConfig) {

+    super(tableConfig);

+    this.orderByColumnNames = getOrderByColumnNames(config);

+

+    checkState(orderByColumnNames.length > 0);

   }

-  public RowCustomColumnsSortPartitioner(String[] columnNames) {

-    this.sortColumnNames = columnNames;

+  public RowCustomColumnsSortPartitioner(String[] columnNames, HoodieTableConfig tableConfig) {

+    super(tableConfig);

+    this.orderByColumnNames = columnNames;

+

+    checkState(orderByColumnNames.length > 0);

   }

   @Override

-  public Dataset<Row> repartitionRecords(Dataset<Row> records, int outputSparkPartitions) {

-    final String[] sortColumns = this.sortColumnNames;

-    return records.sort(HoodieRecord.PARTITION_PATH_METADATA_FIELD, sortColumns)

-        .coalesce(outputSparkPartitions);

+  public Dataset<Row> repartitionRecords(Dataset<Row> dataset, int targetPartitionNumHint) {

+    Dataset<Row> repartitionedDataset;

+

+    // NOTE: In case of partitioned table even "global" ordering (across all RDD partitions) could

+    //       not change table's partitioning and therefore there's no point in doing global sorting

+    //       across "physical" partitions, and instead we can reduce total amount of data being

+    //       shuffled by doing do "local" sorting:

+    //          - First, re-partitioning dataset such that "logical" partitions are aligned w/

+    //          "physical" ones

+    //          - Sorting locally w/in RDD ("logical") partitions

+    //

+    //       Non-partitioned tables will be globally sorted.

+    if (isPartitionedTable) {

+      repartitionedDataset = dataset.repartition(handleTargetPartitionNumHint(targetPartitionNumHint),

+          new Column(HoodieRecord.PARTITION_PATH_METADATA_FIELD));

+    } else {

+      repartitionedDataset = tryCoalesce(dataset, targetPartitionNumHint);

Review Comment:

In addition, I wonder whether `coalesce` can meet our needs. For example, if we want to rewrite a file group (FG) containing N files into M files (M > N) using `cluster`, a shuffle needs to happen anyway.

[GitHub] [hudi] hudi-bot commented on pull request #7941: [HUDI-5786] Add a new config to specific spark write rdd storage level

hudi-bot commented on PR #7941: URL: https://github.com/apache/hudi/pull/7941#issuecomment-1429430076 ## CI report: * 42e12ad1b6bebdcc3dc9d985e5be661b198f3f5c Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=15155)

[GitHub] [hudi] Zouxxyy commented on a diff in pull request #7872: [HUDI-5716] Cleaning up `Partitioner`s hierarchy

Zouxxyy commented on code in PR #7872:

URL: https://github.com/apache/hudi/pull/7872#discussion_r1105531548

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/RowCustomColumnsSortPartitioner.java:

##

@@ -19,43 +19,70 @@

package org.apache.hudi.execution.bulkinsert;

import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.common.table.HoodieTableConfig;

import org.apache.hudi.config.HoodieWriteConfig;

-import org.apache.hudi.table.BulkInsertPartitioner;

-

+import org.apache.spark.sql.Column;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

+import scala.collection.JavaConverters;

import java.util.Arrays;

+import java.util.stream.Collectors;

+

+import static org.apache.hudi.common.util.ValidationUtils.checkState;

+import static

org.apache.hudi.execution.bulkinsert.RDDCustomColumnsSortPartitioner.getOrderByColumnNames;

/**

- * A partitioner that does sorting based on specified column values for each

spark partitions.

+ * A partitioner that does local sorting for each RDD partition based on the

tuple of

+ * values of the columns configured for ordering.

*/

-public class RowCustomColumnsSortPartitioner implements BulkInsertPartitioner<Dataset<Row>> {

+public class RowCustomColumnsSortPartitioner extends RepartitioningBulkInsertPartitionerBase<Dataset<Row>> {

- private final String[] sortColumnNames;

+ private final String[] orderByColumnNames;

- public RowCustomColumnsSortPartitioner(HoodieWriteConfig config) {

-this.sortColumnNames = getSortColumnName(config);

+ public RowCustomColumnsSortPartitioner(HoodieWriteConfig config,

HoodieTableConfig tableConfig) {

+super(tableConfig);

+this.orderByColumnNames = getOrderByColumnNames(config);

+

+checkState(orderByColumnNames.length > 0);

}

- public RowCustomColumnsSortPartitioner(String[] columnNames) {

-this.sortColumnNames = columnNames;

+ public RowCustomColumnsSortPartitioner(String[] columnNames,

HoodieTableConfig tableConfig) {

+super(tableConfig);

+this.orderByColumnNames = columnNames;

+

+checkState(orderByColumnNames.length > 0);

}

@Override

- public Dataset repartitionRecords(Dataset records, int

outputSparkPartitions) {

-final String[] sortColumns = this.sortColumnNames;

-return records.sort(HoodieRecord.PARTITION_PATH_METADATA_FIELD,

sortColumns)

-.coalesce(outputSparkPartitions);

+ public Dataset repartitionRecords(Dataset dataset, int

targetPartitionNumHint) {

+Dataset repartitionedDataset;

+

+// NOTE: In case of partitioned table even "global" ordering (across all

RDD partitions) could

+// not change table's partitioning and therefore there's no point in

doing global sorting

+// across "physical" partitions, and instead we can reduce total

amount of data being

+// shuffled by doing do "local" sorting:

Review Comment:

As far as I know, `RowCustomColumnsSortPartitioner` is only used in `cluster`. At that point, the files in the same `clusteringGroup` should already be in one physical partition.
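
For reference, the "local" sorting described in the quoted NOTE can be sketched without Spark. This is a toy model under stated assumptions: `LocalSortSketch`, `Rec` and `repartitionAndSortLocally` are hypothetical names, a `Map` entry stands in for one RDD partition, and a single integer stands in for the tuple of order-by columns.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy model of "repartition by partition path, then sort locally"; not Hudi code. */
final class LocalSortSketch {

  /** Hypothetical record: a table partition path plus one order-by value. */
  static final class Rec {
    final String partitionPath;
    final int orderByValue;

    Rec(String partitionPath, int orderByValue) {
      this.partitionPath = partitionPath;
      this.orderByValue = orderByValue;
    }
  }

  static Map<String, List<Rec>> repartitionAndSortLocally(List<Rec> records) {
    // Step 1: align "logical" (table) partitions with "physical" ones by grouping on the path.
    Map<String, List<Rec>> byPartition = new LinkedHashMap<>();
    for (Rec r : records) {
      byPartition.computeIfAbsent(r.partitionPath, k -> new ArrayList<>()).add(r);
    }
    // Step 2: sort each group on its own; no ordering across groups is established.
    for (List<Rec> group : byPartition.values()) {
      group.sort(Comparator.comparingInt((Rec r) -> r.orderByValue));
    }
    return byPartition;
  }

  public static void main(String[] args) {
    List<Rec> input = List.of(
        new Rec("2023/02/14", 3), new Rec("2023/02/13", 9), new Rec("2023/02/14", 1));
    repartitionAndSortLocally(input).forEach((path, group) -> {
      List<Integer> values = new ArrayList<>();
      for (Rec r : group) {
        values.add(r.orderByValue);
      }
      System.out.println(path + " -> " + values);
    });
  }
}
```

If every file of a `clusteringGroup` already sits in one physical partition, as suggested above, step 1 has nothing left to move and only the per-group sort does real work.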


[GitHub] [hudi] Zouxxyy commented on a diff in pull request #7872: [HUDI-5716] Cleaning up `Partitioner`s hierarchy

Zouxxyy commented on code in PR #7872:

URL: https://github.com/apache/hudi/pull/7872#discussion_r1105540267

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/RowCustomColumnsSortPartitioner.java:

##

@@ -19,43 +19,70 @@

package org.apache.hudi.execution.bulkinsert;

import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.common.table.HoodieTableConfig;

import org.apache.hudi.config.HoodieWriteConfig;

-import org.apache.hudi.table.BulkInsertPartitioner;

-

+import org.apache.spark.sql.Column;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

+import scala.collection.JavaConverters;

import java.util.Arrays;

+import java.util.stream.Collectors;

+

+import static org.apache.hudi.common.util.ValidationUtils.checkState;

+import static

org.apache.hudi.execution.bulkinsert.RDDCustomColumnsSortPartitioner.getOrderByColumnNames;

/**

- * A partitioner that does sorting based on specified column values for each

spark partitions.

+ * A partitioner that does local sorting for each RDD partition based on the

tuple of

+ * values of the columns configured for ordering.

*/

-public class RowCustomColumnsSortPartitioner implements BulkInsertPartitioner<Dataset<Row>> {

+public class RowCustomColumnsSortPartitioner extends RepartitioningBulkInsertPartitionerBase<Dataset<Row>> {

- private final String[] sortColumnNames;

+ private final String[] orderByColumnNames;

- public RowCustomColumnsSortPartitioner(HoodieWriteConfig config) {

-this.sortColumnNames = getSortColumnName(config);

+ public RowCustomColumnsSortPartitioner(HoodieWriteConfig config,

HoodieTableConfig tableConfig) {

+super(tableConfig);

+this.orderByColumnNames = getOrderByColumnNames(config);

+

+checkState(orderByColumnNames.length > 0);

}

- public RowCustomColumnsSortPartitioner(String[] columnNames) {

-this.sortColumnNames = columnNames;

+ public RowCustomColumnsSortPartitioner(String[] columnNames,

HoodieTableConfig tableConfig) {

+super(tableConfig);

+this.orderByColumnNames = columnNames;

+

+checkState(orderByColumnNames.length > 0);

}

@Override

- public Dataset repartitionRecords(Dataset records, int

outputSparkPartitions) {

-final String[] sortColumns = this.sortColumnNames;

-return records.sort(HoodieRecord.PARTITION_PATH_METADATA_FIELD,

sortColumns)

-.coalesce(outputSparkPartitions);

+ public Dataset repartitionRecords(Dataset dataset, int

targetPartitionNumHint) {

+Dataset repartitionedDataset;

+

+// NOTE: In case of partitioned table even "global" ordering (across all

RDD partitions) could

+// not change table's partitioning and therefore there's no point in

doing global sorting

+// across "physical" partitions, and instead we can reduce total

amount of data being

+// shuffled by doing do "local" sorting:

+// - First, re-partitioning dataset such that "logical"

partitions are aligned w/

+// "physical" ones

+// - Sorting locally w/in RDD ("logical") partitions

+//

+// Non-partitioned tables will be globally sorted.

+if (isPartitionedTable) {

+ repartitionedDataset =

dataset.repartition(handleTargetPartitionNumHint(targetPartitionNumHint),

+ new Column(HoodieRecord.PARTITION_PATH_METADATA_FIELD));

+} else {

+ repartitionedDataset = tryCoalesce(dataset, targetPartitionNumHint);

Review Comment:

In addition, I wonder whether `coalesce` can meet our needs. For example, if we want to rewrite a `clusteringGroup` containing N partitions into M files (M > N) using `cluster`, a shuffle has to happen anyway.


[GitHub] [hudi] Zouxxyy commented on a diff in pull request #7872: [HUDI-5716] Cleaning up `Partitioner`s hierarchy

Zouxxyy commented on code in PR #7872:

URL: https://github.com/apache/hudi/pull/7872#discussion_r1105540267

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/RowCustomColumnsSortPartitioner.java:

##

@@ -19,43 +19,70 @@

package org.apache.hudi.execution.bulkinsert;

import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.common.table.HoodieTableConfig;

import org.apache.hudi.config.HoodieWriteConfig;

-import org.apache.hudi.table.BulkInsertPartitioner;

-

+import org.apache.spark.sql.Column;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

+import scala.collection.JavaConverters;

import java.util.Arrays;

+import java.util.stream.Collectors;

+

+import static org.apache.hudi.common.util.ValidationUtils.checkState;

+import static

org.apache.hudi.execution.bulkinsert.RDDCustomColumnsSortPartitioner.getOrderByColumnNames;

/**

- * A partitioner that does sorting based on specified column values for each

spark partitions.

+ * A partitioner that does local sorting for each RDD partition based on the

tuple of

+ * values of the columns configured for ordering.

*/

-public class RowCustomColumnsSortPartitioner implements BulkInsertPartitioner<Dataset<Row>> {

+public class RowCustomColumnsSortPartitioner extends RepartitioningBulkInsertPartitionerBase<Dataset<Row>> {

- private final String[] sortColumnNames;

+ private final String[] orderByColumnNames;

- public RowCustomColumnsSortPartitioner(HoodieWriteConfig config) {

-this.sortColumnNames = getSortColumnName(config);

+ public RowCustomColumnsSortPartitioner(HoodieWriteConfig config,

HoodieTableConfig tableConfig) {

+super(tableConfig);

+this.orderByColumnNames = getOrderByColumnNames(config);

+

+checkState(orderByColumnNames.length > 0);

}

- public RowCustomColumnsSortPartitioner(String[] columnNames) {

-this.sortColumnNames = columnNames;

+ public RowCustomColumnsSortPartitioner(String[] columnNames,

HoodieTableConfig tableConfig) {

+super(tableConfig);

+this.orderByColumnNames = columnNames;

+

+checkState(orderByColumnNames.length > 0);

}

@Override

- public Dataset repartitionRecords(Dataset records, int

outputSparkPartitions) {

-final String[] sortColumns = this.sortColumnNames;

-return records.sort(HoodieRecord.PARTITION_PATH_METADATA_FIELD,

sortColumns)

-.coalesce(outputSparkPartitions);

+ public Dataset repartitionRecords(Dataset dataset, int

targetPartitionNumHint) {

+Dataset repartitionedDataset;

+

+// NOTE: In case of partitioned table even "global" ordering (across all

RDD partitions) could

+// not change table's partitioning and therefore there's no point in

doing global sorting

+// across "physical" partitions, and instead we can reduce total

amount of data being

+// shuffled by doing do "local" sorting:

+// - First, re-partitioning dataset such that "logical"

partitions are aligned w/

+// "physical" ones

+// - Sorting locally w/in RDD ("logical") partitions

+//

+// Non-partitioned tables will be globally sorted.

+if (isPartitionedTable) {

+ repartitionedDataset =

dataset.repartition(handleTargetPartitionNumHint(targetPartitionNumHint),

+ new Column(HoodieRecord.PARTITION_PATH_METADATA_FIELD));

+} else {

+ repartitionedDataset = tryCoalesce(dataset, targetPartitionNumHint);

Review Comment:

In addition, I wonder whether `coalesce` can meet our needs. For example, if we want to rewrite a `clusteringGroup` containing N logical partitions into M files (M > N) using `cluster`, a shuffle has to happen anyway.


[GitHub] [hudi] Zouxxyy commented on a diff in pull request #7872: [HUDI-5716] Cleaning up `Partitioner`s hierarchy

Zouxxyy commented on code in PR #7872:

URL: https://github.com/apache/hudi/pull/7872#discussion_r1105540267

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/RowCustomColumnsSortPartitioner.java:

##

@@ -19,43 +19,70 @@

package org.apache.hudi.execution.bulkinsert;

import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.common.table.HoodieTableConfig;

import org.apache.hudi.config.HoodieWriteConfig;

-import org.apache.hudi.table.BulkInsertPartitioner;

-

+import org.apache.spark.sql.Column;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

+import scala.collection.JavaConverters;

import java.util.Arrays;

+import java.util.stream.Collectors;

+

+import static org.apache.hudi.common.util.ValidationUtils.checkState;

+import static

org.apache.hudi.execution.bulkinsert.RDDCustomColumnsSortPartitioner.getOrderByColumnNames;

/**

- * A partitioner that does sorting based on specified column values for each

spark partitions.

+ * A partitioner that does local sorting for each RDD partition based on the

tuple of

+ * values of the columns configured for ordering.

*/

-public class RowCustomColumnsSortPartitioner implements BulkInsertPartitioner<Dataset<Row>> {

+public class RowCustomColumnsSortPartitioner extends RepartitioningBulkInsertPartitionerBase<Dataset<Row>> {

- private final String[] sortColumnNames;

+ private final String[] orderByColumnNames;

- public RowCustomColumnsSortPartitioner(HoodieWriteConfig config) {

-this.sortColumnNames = getSortColumnName(config);

+ public RowCustomColumnsSortPartitioner(HoodieWriteConfig config,

HoodieTableConfig tableConfig) {

+super(tableConfig);

+this.orderByColumnNames = getOrderByColumnNames(config);

+

+checkState(orderByColumnNames.length > 0);

}

- public RowCustomColumnsSortPartitioner(String[] columnNames) {

-this.sortColumnNames = columnNames;

+ public RowCustomColumnsSortPartitioner(String[] columnNames,

HoodieTableConfig tableConfig) {

+super(tableConfig);

+this.orderByColumnNames = columnNames;

+

+checkState(orderByColumnNames.length > 0);

}

@Override

- public Dataset repartitionRecords(Dataset records, int

outputSparkPartitions) {

-final String[] sortColumns = this.sortColumnNames;

-return records.sort(HoodieRecord.PARTITION_PATH_METADATA_FIELD,

sortColumns)

-.coalesce(outputSparkPartitions);

+ public Dataset repartitionRecords(Dataset dataset, int

targetPartitionNumHint) {

+Dataset repartitionedDataset;

+

+// NOTE: In case of partitioned table even "global" ordering (across all

RDD partitions) could

+// not change table's partitioning and therefore there's no point in

doing global sorting

+// across "physical" partitions, and instead we can reduce total

amount of data being

+// shuffled by doing do "local" sorting:

+// - First, re-partitioning dataset such that "logical"

partitions are aligned w/

+// "physical" ones

+// - Sorting locally w/in RDD ("logical") partitions

+//

+// Non-partitioned tables will be globally sorted.

+if (isPartitionedTable) {

+ repartitionedDataset =

dataset.repartition(handleTargetPartitionNumHint(targetPartitionNumHint),

+ new Column(HoodieRecord.PARTITION_PATH_METADATA_FIELD));

+} else {

+ repartitionedDataset = tryCoalesce(dataset, targetPartitionNumHint);

Review Comment:

In addition, I wonder whether `coalesce` can meet our needs. For example, if we want to rewrite a `clusteringGroup` containing N logical partitions into M (M > N) using `cluster`, a shuffle has to happen anyway.


[GitHub] [hudi] danny0405 commented on pull request #6121: [HUDI-4406] Support Flink compaction/clustering write error resolvement to avoid data loss

danny0405 commented on PR #6121:

URL: https://github.com/apache/hudi/pull/6121#issuecomment-1429471911

The failed test case `TestHoodieTableFactory#testTableTypeCheck` is unrelated to this patch and passes when I run it locally. I will merge the PR soon.

[GitHub] [hudi] danny0405 merged pull request #6121: [HUDI-4406] Support Flink compaction/clustering write error resolvement to avoid data loss

danny0405 merged PR #6121: URL: https://github.com/apache/hudi/pull/6121 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[hudi] branch master updated: [HUDI-4406] Support Flink compaction/clustering write error resolvement to avoid data loss (#6121)

This is an automated email from the ASF dual-hosted git repository.

danny0405 pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/hudi.git

The following commit(s) were added to refs/heads/master by this push:

new ed6b7f6aedc [HUDI-4406] Support Flink compaction/clustering write

error resolvement to avoid data loss (#6121)

ed6b7f6aedc is described below

commit ed6b7f6aedc2cba0f753a4ee130cef860ecb0801

Author: Chenshizhi <107476116+chens...@users.noreply.github.com>

AuthorDate: Tue Feb 14 18:15:18 2023 +0800

[HUDI-4406] Support Flink compaction/clustering write error resolvement to

avoid data loss (#6121)

---

.../main/java/org/apache/hudi/configuration/FlinkOptions.java | 6 +++---

.../org/apache/hudi/sink/clustering/ClusteringCommitSink.java | 11 +++

.../org/apache/hudi/sink/compact/CompactionCommitSink.java| 11 +++

3 files changed, 25 insertions(+), 3 deletions(-)

diff --git

a/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/configuration/FlinkOptions.java

b/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/configuration/FlinkOptions.java

index e447692fc98..9cdeb963d53 100644

---

a/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/configuration/FlinkOptions.java

+++

b/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/configuration/FlinkOptions.java

@@ -382,9 +382,9 @@ public class FlinkOptions extends HoodieConfig {

.key("write.ignore.failed")

.booleanType()

.defaultValue(false)

- .withDescription("Flag to indicate whether to ignore any non exception

error (e.g. writestatus error). within a checkpoint batch.\n"

- + "By default false. Turning this on, could hide the write status

errors while the spark checkpoint moves ahead. \n"

- + " So, would recommend users to use this with caution.");

+ .withDescription("Flag to indicate whether to ignore any non exception

error (e.g. writestatus error). within a checkpoint batch. \n"

+ + "By default false. Turning this on, could hide the write status

errors while the flink checkpoint moves ahead. \n"

+ + "So, would recommend users to use this with caution.");

public static final ConfigOption RECORD_KEY_FIELD = ConfigOptions

.key(KeyGeneratorOptions.RECORDKEY_FIELD_NAME.key())

diff --git

a/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/clustering/ClusteringCommitSink.java

b/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/clustering/ClusteringCommitSink.java

index eb567d89f18..3f392de1527 100644

---

a/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/clustering/ClusteringCommitSink.java

+++

b/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/clustering/ClusteringCommitSink.java

@@ -147,6 +147,17 @@ public class ClusteringCommitSink extends

CleanFunction {

.flatMap(Collection::stream)

.collect(Collectors.toList());

+long numErrorRecords =

statuses.stream().map(WriteStatus::getTotalErrorRecords).reduce(Long::sum).orElse(0L);

+

+if (numErrorRecords > 0 &&

!this.conf.getBoolean(FlinkOptions.IGNORE_FAILED)) {

+ // handle failure case

+ LOG.error("Got {} error records during clustering of instant {},\n"

+ + "option '{}' is configured as false,"

+ + "rolls back the clustering", numErrorRecords, instant,

FlinkOptions.IGNORE_FAILED.key());

+ ClusteringUtil.rollbackClustering(table, writeClient, instant);

+ return;

+}

+

HoodieWriteMetadata<List<WriteStatus>> writeMetadata = new HoodieWriteMetadata<>();

writeMetadata.setWriteStatuses(statuses);

writeMetadata.setWriteStats(statuses.stream().map(WriteStatus::getStat).collect(Collectors.toList()));

diff --git

a/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitSink.java

b/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitSink.java

index 1e05dce6076..0e9bc54f8fb 100644

---

a/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitSink.java

+++

b/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitSink.java

@@ -157,6 +157,17 @@ public class CompactionCommitSink extends

CleanFunction {

.flatMap(Collection::stream)

.collect(Collectors.toList());

+long numErrorRecords =

statuses.stream().map(WriteStatus::getTotalErrorRecords).reduce(Long::sum).orElse(0L);

+

+if (numErrorRecords > 0 &&

!this.conf.getBoolean(FlinkOptions.IGNORE_FAILED)) {

+ // handle failure case

+ LOG.error("Got {} error records during compaction of instant {},\n"

+ + "option '{}' is configured as false,"

+ + "rolls back the compaction", numErrorRecords, instant,

FlinkOptions.IGNORE_FAILED.key());

+ CompactionUtil.rollbackCompaction(table, instant);

+ return;

+}

+

HoodieCo
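
The check this commit adds to both sinks can be sketched in isolation. This is a minimal illustration, not the Hudi code itself: `Status` is a hypothetical stand-in for `WriteStatus`, the `ignoreFailed` flag stands for the value of `FlinkOptions.IGNORE_FAILED`, and `Action` and `decide` are names invented for the example.

```java
import java.util.List;

/** Isolated sketch of the "roll back on error records" gate; names are illustrative. */
final class ErrorRecordGateSketch {

  /** Hypothetical stand-in for a write status; only the error count matters here. */
  static final class Status {
    private final long totalErrorRecords;

    Status(long totalErrorRecords) {
      this.totalErrorRecords = totalErrorRecords;
    }

    long getTotalErrorRecords() {
      return totalErrorRecords;
    }
  }

  enum Action { COMMIT, ROLLBACK }

  static Action decide(List<Status> statuses, boolean ignoreFailed) {
    // Sum the error records reported by all write statuses of the instant.
    long numErrorRecords = statuses.stream().mapToLong(Status::getTotalErrorRecords).sum();
    // Roll back only when errors exist and the user has not opted into ignoring them.
    return (numErrorRecords > 0 && !ignoreFailed) ? Action.ROLLBACK : Action.COMMIT;
  }

  public static void main(String[] args) {
    List<Status> statuses = List.of(new Status(0), new Status(2));
    System.out.println(decide(statuses, false)); // prints ROLLBACK
    System.out.println(decide(statuses, true));  // prints COMMIT
  }
}
```

With the flag left at `false`, a single error record is enough to roll the instant back instead of committing it.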

[jira] [Closed] (HUDI-4406) Support compaction commit write error resolvement to avoid data loss

[

https://issues.apache.org/jira/browse/HUDI-4406?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Danny Chen closed HUDI-4406.

Resolution: Fixed

Fixed via master branch: ed6b7f6aedc2cba0f753a4ee130cef860ecb0801

> Support compaction commit write error resolvement to avoid data loss

>

>

> Key: HUDI-4406

> URL: https://issues.apache.org/jira/browse/HUDI-4406

> Project: Apache Hudi

> Issue Type: Bug

> Components: flink

>Affects Versions: 0.12.0

>Reporter: Shizhi Chen

>Assignee: Shizhi Chen

>Priority: Blocker

> Labels: pull-request-available

> Fix For: 0.13.1, 0.14.0

>

>

> Currently, the CompactionCommitSink commit/rollback logic does not take write status errors into consideration (it only checks for a null write status), which can cause data loss when compacting the delta commit log files into the new versioned data files.

> For example, an exception in org.apache.hudi.io.HoodieMergeHandle#writeRecord leads to loss of data from the log files.

> {code:java}

> ```java

> protected boolean writeRecord(HoodieRecord hoodieRecord,

> Option indexedRecord, boolean isDelete) {

> Option recordMetadata = hoodieRecord.getData().getMetadata();

> if (!partitionPath.equals(hoodieRecord.getPartitionPath())) {

> HoodieUpsertException failureEx = new HoodieUpsertException("mismatched

> partition path, record partition: "

> + hoodieRecord.getPartitionPath() + " but trying to insert into

> partition: " + partitionPath);

> writeStatus.markFailure(hoodieRecord, failureEx, recordMetadata);

> return false;

> }

> try {

> if (indexedRecord.isPresent() && !isDelete) {

> writeToFile(hoodieRecord.getKey(), (GenericRecord)

> indexedRecord.get(), preserveMetadata && useWriterSchemaForCompaction);

> recordsWritten++;

> } else {

> recordsDeleted++;

> }

> writeStatus.markSuccess(hoodieRecord, recordMetadata);

> // deflate record payload after recording success. This will help users

> access payload as a

> // part of marking

> // record successful.

> hoodieRecord.deflate();

> return true;

> } catch (Exception e) {

> LOG.error("Error writing record " + hoodieRecord, e);

> writeStatus.markFailure(hoodieRecord, e, recordMetadata);

> }

> return false;

> }{code}

> StreamWriteOperatorCoordinator is known to have a related commit/rollback handling process.

> So this PR will:

> a) Also treat write status errors as a rollback reason in CompactionCommitSink, to avoid data loss.

> b) Unify the handling procedure for the write commit policy with its implementations, as described in org.apache.hudi.commit.policy.WriteCommitPolicy, consolidating it with that of StreamWriteOperatorCoordinator.

> c) Control whether data quality or ingestion stability takes priority through FlinkOptions#IGNORE_FAILED.

> And we suggest that FlinkOptions#IGNORE_FAILED be true by default to avoid data loss.

> d) Optimize and fix some tiny bugs in log traces when committing on error or rolling back.

>

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[jira] [Updated] (HUDI-4406) Support compaction commit write error resolvement to avoid data loss

[

https://issues.apache.org/jira/browse/HUDI-4406?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Danny Chen updated HUDI-4406:

-

Fix Version/s: 0.13.1

0.14.0

> Support compaction commit write error resolvement to avoid data loss

>

>

> Key: HUDI-4406

> URL: https://issues.apache.org/jira/browse/HUDI-4406

> Project: Apache Hudi

> Issue Type: Bug

> Components: flink

>Affects Versions: 0.12.0

>Reporter: Shizhi Chen

>Assignee: Shizhi Chen

>Priority: Blocker

> Labels: pull-request-available

> Fix For: 0.13.1, 0.14.0

>

>

> Currently, the CompactionCommitSink commit/rollback logic does not take write status errors into consideration (it only checks for a null write status), which can cause data loss when compacting the delta commit log files into the new versioned data files.

> For example, an exception in org.apache.hudi.io.HoodieMergeHandle#writeRecord leads to loss of data from the log files.

> {code:java}

> ```java

> protected boolean writeRecord(HoodieRecord hoodieRecord,

> Option indexedRecord, boolean isDelete) {

> Option recordMetadata = hoodieRecord.getData().getMetadata();

> if (!partitionPath.equals(hoodieRecord.getPartitionPath())) {

> HoodieUpsertException failureEx = new HoodieUpsertException("mismatched

> partition path, record partition: "

> + hoodieRecord.getPartitionPath() + " but trying to insert into

> partition: " + partitionPath);

> writeStatus.markFailure(hoodieRecord, failureEx, recordMetadata);

> return false;

> }

> try {

> if (indexedRecord.isPresent() && !isDelete) {

> writeToFile(hoodieRecord.getKey(), (GenericRecord)

> indexedRecord.get(), preserveMetadata && useWriterSchemaForCompaction);

> recordsWritten++;

> } else {

> recordsDeleted++;

> }

> writeStatus.markSuccess(hoodieRecord, recordMetadata);

> // deflate record payload after recording success. This will help users

> access payload as a

> // part of marking

> // record successful.

> hoodieRecord.deflate();

> return true;

> } catch (Exception e) {

> LOG.error("Error writing record " + hoodieRecord, e);

> writeStatus.markFailure(hoodieRecord, e, recordMetadata);

> }

> return false;

> }{code}

> StreamWriteOperatorCoordinator is known to have a related commit/rollback handling process.

> So this PR will:

> a) Also treat write status errors as a rollback reason in CompactionCommitSink, to avoid data loss.

> b) Unify the handling procedure for the write commit policy with its implementations, as described in org.apache.hudi.commit.policy.WriteCommitPolicy, consolidating it with that of StreamWriteOperatorCoordinator.

> c) Control whether data quality or ingestion stability takes priority through FlinkOptions#IGNORE_FAILED.

> And we suggest that FlinkOptions#IGNORE_FAILED be true by default to avoid data loss.

> d) Optimize and fix some tiny bugs in log traces when committing on error or rolling back.

>


[GitHub] [hudi] danny0405 commented on a diff in pull request #7940: [HUDI-5787] HoodieHiveCatalog should not delete data for dropping external table

danny0405 commented on code in PR #7940:

URL: https://github.com/apache/hudi/pull/7940#discussion_r1105583908

##

hudi-sync/hudi-hive-sync/src/main/java/org/apache/hudi/hive/ddl/HMSDDLExecutor.java:

##

@@ -137,12 +137,12 @@ public void createTable(String tableName, MessageType

storageSchema, String inpu

if (!syncConfig.getBoolean(HIVE_CREATE_MANAGED_TABLE)) {

newTb.putToParameters("EXTERNAL", "TRUE");

+newTb.setTableType(TableType.EXTERNAL_TABLE.toString());

}

for (Map.Entry entry : tableProperties.entrySet()) {

newTb.putToParameters(entry.getKey(), entry.getValue());

}

- newTb.setTableType(TableType.EXTERNAL_TABLE.toString());

client.createTable(newTb);

Review Comment:

Nice catch. Can we add a test case for it?


[GitHub] [hudi] danny0405 commented on a diff in pull request #7940: [HUDI-5787] HoodieHiveCatalog should not delete data for dropping external table

danny0405 commented on code in PR #7940:

URL: https://github.com/apache/hudi/pull/7940#discussion_r1105584401

##

hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/table/catalog/HoodieHiveCatalog.java:

##

@@ -656,12 +657,10 @@ public void dropTable(ObjectPath tablePath, boolean ignoreIfNotExists)

 client.dropTable(

 tablePath.getDatabaseName(),

 tablePath.getObjectName(),

- // Indicate whether associated data should be deleted.

- // Set to 'true' for now because Flink tables shouldn't have data in Hive. Can

- // be changed later if necessary

- true,

+ // External table drops only the metadata, should not delete the underlying data.

+ !TableType.EXTERNAL_TABLE.name().equals(getHiveTable(tablePath).getTableType().toUpperCase(Locale.ROOT)),

Review Comment:

Can we also add a test case in `TestHoodieHiveCatalog`?
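
As a starting point for such a test, the decision the patch makes can be isolated in plain Java. This is a sketch only: `DropTableSketch` and `shouldDeleteData` are hypothetical names, and the table type is passed in as a string instead of being read from the metastore.

```java
import java.util.Locale;

/** Isolated sketch of the "delete data on drop?" decision; names are illustrative. */
final class DropTableSketch {

  /**
   * Returns the value to pass as the "delete data" argument of a drop-table call:
   * false for external tables (only the metadata is dropped), true otherwise.
   */
  static boolean shouldDeleteData(String hiveTableType) {
    // Normalise the case first, mirroring the toUpperCase(Locale.ROOT) call in the diff.
    return !"EXTERNAL_TABLE".equals(hiveTableType.toUpperCase(Locale.ROOT));
  }

  public static void main(String[] args) {
    System.out.println(shouldDeleteData("EXTERNAL_TABLE")); // prints false
    System.out.println(shouldDeleteData("MANAGED_TABLE"));  // prints true
  }
}
```

A test in `TestHoodieHiveCatalog` would additionally need to check that the table's files still exist on storage after the drop, which this sketch does not cover.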

[GitHub] [hudi] danny0405 commented on pull request #7894: [HUDI-5729] Fix RowDataKeyGen method getRecordKey

danny0405 commented on PR #7894: URL: https://github.com/apache/hudi/pull/7894#issuecomment-1429484584 There is a test failure: `TestRowDataKeyGen.testRecoredKeyContainsTimestamp:201` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on issue #7936: [SUPPORT]Flink HiveCatalog should respect 'managed_table' options to avoid deleting data unexpectable.

danny0405 commented on issue #7936: URL: https://github.com/apache/hudi/issues/7936#issuecomment-1429487379 I see a fix patch: https://github.com/apache/hudi/pull/7940 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 merged pull request #7633: [HUDI-5737] Fix Deletes issued without any prior commits

danny0405 merged PR #7633: URL: https://github.com/apache/hudi/pull/7633 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[hudi] branch master updated (ed6b7f6aedc -> 4f8f2d8dc5c)

This is an automated email from the ASF dual-hosted git repository.

danny0405 pushed a change to branch master
in repository https://gitbox.apache.org/repos/asf/hudi.git

    from ed6b7f6aedc [HUDI-4406] Support Flink compaction/clustering write error resolvement to avoid data loss (#6121)
     add 4f8f2d8dc5c [HUDI-5737] Fix Deletes issued without any prior commits (#7633)

No new revisions were added by this update.

Summary of changes:
 .../src/main/java/org/apache/hudi/client/BaseHoodieWriteClient.java | 2 +-
 .../hudi/client/functional/TestHoodieClientOnCopyOnWriteStorage.java | 4 +---
 2 files changed, 2 insertions(+), 4 deletions(-)

[jira] [Closed] (HUDI-5737) Fix Deletes issued without any prior commits

[ https://issues.apache.org/jira/browse/HUDI-5737?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Danny Chen closed HUDI-5737.
Resolution: Fixed

Fixed via master branch: 4f8f2d8dc5c449f70562f0a629a1765dbdac7235

> Fix Deletes issued without any prior commits
>
> Key: HUDI-5737
> URL: https://issues.apache.org/jira/browse/HUDI-5737
> Project: Apache Hudi
> Issue Type: Bug
> Components: spark-sql
> Reporter: Danny Chen
> Priority: Major
> Labels: pull-request-available
> Fix For: 0.13.1, 0.14.0

-- 
This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Assigned] (HUDI-5737) Fix Deletes issued without any prior commits

[ https://issues.apache.org/jira/browse/HUDI-5737?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Danny Chen reassigned HUDI-5737:
Assignee: Danny Chen

> Fix Deletes issued without any prior commits
>
> Key: HUDI-5737
> URL: https://issues.apache.org/jira/browse/HUDI-5737
> Project: Apache Hudi
> Issue Type: Bug
> Components: spark-sql
> Reporter: Danny Chen
> Assignee: Danny Chen
> Priority: Major
> Labels: pull-request-available
> Fix For: 0.13.1, 0.14.0

-- 
This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [hudi] LinMingQiang commented on a diff in pull request #7907: [HUDI-5672][RFC-61] Lockless multi writer support

LinMingQiang commented on code in PR #7907:
URL: https://github.com/apache/hudi/pull/7907#discussion_r1101638612

## rfc/rfc-61/rfc-61.md: ##
@@ -0,0 +1,98 @@
+# RFC-61: Lockless Multi Writer
+
+## Proposers
+- @danny0405
+- @ForwardXu
+- @SteNicholas
+
+## Approvers
+-
+
+## Status
+
+JIRA: [Lockless multi writer support](https://issues.apache.org/jira/browse/HUDI-5672)
+
+## Abstract
+As you know, Hudi already supports basic OCC with abundant lock providers.
+But for multiple streaming ingestion writers, OCC does not work well because conflicts happen at very high frequency.
+To expand on this a little: with the hashing index, all the writers use a deterministic hashing algorithm to distribute records by primary key,
+so the keys are evenly distributed across all the data buckets. For a single data flush in one writer, almost all the data buckets are appended with new inputs,
+so conflicts are very likely with multiple writers, because almost all the data buckets are being written by multiple writers at the same time.
+For the bloom filter index, things are different, but remember that we have a small-file load rebalance strategy that writes into the **small** buckets at higher priority;
+that means multiple writers are prone to write into the same **small** buckets at the same time, and that is how conflicts happen.
+
+In general, for ingestion with multiple streaming writers, explicit locking is not well suited to production use. In this RFC, we propose a lockless solution for streaming ingestion.
+
+## Background
+
+Streaming jobs are naturally suitable for data ingestion: they have no pipeline orchestration complexity and have a smoother write workload.
+Most of the raw data sets we handle today are generated continuously, in a streaming fashion.
+
+Based on that, many requests for multi-writer ingestion have emerged. With multi-writer ingestion, several streams of events with the same schema can be drained into one Hudi table,
+so the Hudi table effectively becomes a UNION view of all the input data sets. This is a very common use case because, in reality, data sets are usually scattered across many data sources.
+
+Another very useful use case we want to unlock is the real-time data set join. One of the biggest pain points in streaming computation is the data set join:
+an engine like Flink has basic support for all kinds of SQL JOINs, but it stores the input records in its internal state backend, which is a huge cost for a pure data join with no additional computation.
+In [HUDI-3304](https://issues.apache.org/jira/browse/HUDI-3304), we introduced a `PartialUpdateAvroPayload`; in combination with the lockless multi-writer,
+we can implement N-way data source joins in real time! Hudi would take care of the payload join during the compaction service procedure.
+
+## Design
+
+### The Precondition
+
+ MOR Table Type Is Required
+
+The table type must be `MERGE_ON_READ`, so that we can defer conflict resolution to the compaction phase. The compaction service would resolve conflicts between the same keys by respecting the event-time sequence of the events.
+
+ Deterministic Bucketing Strategy
+
+A deterministic bucketing strategy is required, because the same record keys from different writers should be distributed into the same bucket, not only for UPSERTs, but also for all the new INSERTs.

Review Comment:
If we are using a MOR table but not a bucket index layout, can we support lockless multi writer for INSERT? Will there be any problem? It will cause multiple writers to write to the same file and cause conflicts.
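The two preconditions quoted from the RFC can be illustrated with a short sketch: a deterministic key-to-bucket mapping that every writer computes identically, and a compaction-style merge that keeps, per key, the event with the greatest event time. This is only an illustration under stated assumptions, not Hudi's implementation: `LocklessWriteSketch`, `Event`, `bucketFor`, and `compact` are hypothetical names, the hash is plain `String.hashCode()` (which the Java specification defines deterministically), and ties on event time are broken in favor of the event seen later.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Illustrative sketch only; all names here are hypothetical and not part of Hudi. */
final class LocklessWriteSketch {

  /** Hypothetical record: a key, an event time, and an opaque value. */
  static final class Event {
    final String key;
    final long eventTime;
    final String value;

    Event(String key, long eventTime, String value) {
      this.key = key;
      this.eventTime = eventTime;
      this.value = value;
    }
  }

  private LocklessWriteSketch() {
  }

  /**
   * Deterministic bucketing: the same record key always maps to the same bucket,
   * regardless of which writer computes it. Masking with Integer.MAX_VALUE clears
   * the sign bit so the modulo result is never negative.
   */
  static int bucketFor(String recordKey, int numBuckets) {
    if (numBuckets <= 0) {
      throw new IllegalArgumentException("numBuckets must be positive");
    }
    return (recordKey.hashCode() & Integer.MAX_VALUE) % numBuckets;
  }

  /**
   * Compaction-style conflict resolution: merge the logs of all writers and keep,
   * for each key, the event with the greatest event time. On equal event times the
   * event encountered later wins (an assumption made for this sketch).
   */
  static Map<String, Event> compact(List<List<Event>> writerLogs) {
    Map<String, Event> latest = new TreeMap<>();
    for (List<Event> log : writerLogs) {
      for (Event e : log) {
        latest.merge(e.key, e, (oldE, newE) -> newE.eventTime >= oldE.eventTime ? newE : oldE);
      }
    }
    return latest;
  }
}
```

Because every writer derives the bucket from the key alone, two writers that receive the same key append to the same bucket without coordinating, and the merge step decides the winner afterwards by event time rather than by write order.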

[GitHub] [hudi] danny0405 commented on a diff in pull request #7907: [HUDI-5672][RFC-61] Lockless multi writer support