[GitHub] [hudi] bvaradar commented on pull request #7680: [HUDI-5548] spark sql show | update hudi's table properties

bvaradar commented on PR #7680: URL: https://github.com/apache/hudi/pull/7680#issuecomment-1539440046 @XuQianJin-Stars : Checking to see if you can take this to finish line ? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] ad1happy2go commented on issue #8159: [SUPPORT] - Debezium PostgreSQL

ad1happy2go commented on issue #8159: URL: https://github.com/apache/hudi/issues/8159#issuecomment-1539434631 @lenhardtx Did you got a chance to test out with the patch? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] bvaradar commented on a diff in pull request #8452: [HUDI-6077] Add more partition push down filters

bvaradar commented on code in PR #8452:

URL: https://github.com/apache/hudi/pull/8452#discussion_r1188099833

##

hudi-common/src/main/java/org/apache/hudi/metadata/FileSystemBackedTableMetadata.java:

##

@@ -58,13 +62,21 @@ public class FileSystemBackedTableMetadata implements

HoodieTableMetadata {

private final SerializableConfiguration hadoopConf;

private final String datasetBasePath;

private final boolean assumeDatePartitioning;

+ private final boolean hiveStylePartitioningEnabled;

+ private final boolean urlEncodePartitioningEnabled;

public FileSystemBackedTableMetadata(HoodieEngineContext engineContext,

SerializableConfiguration conf, String datasetBasePath,

boolean assumeDatePartitioning) {

this.engineContext = engineContext;

this.hadoopConf = conf;

this.datasetBasePath = datasetBasePath;

this.assumeDatePartitioning = assumeDatePartitioning;

+HoodieTableMetaClient metaClient = HoodieTableMetaClient.builder()

Review Comment:

The super class already instantiates metaclient. Please move the members

hiveStylePartitioningEnabled and urlEncodePartitioningEnabled there so that

they can be reused for HoodieBackedTableMetadata

##

hudi-sync/hudi-hive-sync/src/main/java/org/apache/hudi/hive/util/FilterGenVisitor.java:

##

@@ -42,9 +43,10 @@ private String quoteStringLiteral(String value) {

}

}

- private String visitAnd(Expression left, Expression right) {

-String leftResult = left.accept(this);

-String rightResult = right.accept(this);

+ @Override

+ public String visitAnd(Predicates.And and) {

Review Comment:

Is case sensitivity same between hive-sync and spark integration ?

##

hudi-spark-datasource/hudi-spark2/src/main/scala/org/apache/spark/sql/adapter/Spark2Adapter.scala:

##

@@ -186,4 +186,13 @@ class Spark2Adapter extends SparkAdapter {

case OFF_HEAP => "OFF_HEAP"

case _ => throw new IllegalArgumentException(s"Invalid StorageLevel:

$level")

}

+

+ override def translateFilter(predicate: Expression,

+ supportNestedPredicatePushdown: Boolean =

false): Option[Filter] = {

+if (supportNestedPredicatePushdown) {

Review Comment:

Is this expected to fail any spark 2 queries ?

##

hudi-common/src/main/java/org/apache/hudi/metadata/HoodieBackedTableMetadata.java:

##

@@ -153,6 +156,20 @@ protected Option>

getRecordByKey(String key,

return recordsByKeys.size() == 0 ? Option.empty() :

recordsByKeys.get(0).getValue();

}

+ @Override

+ public List getPartitionPathByExpression(List

relativePathPrefixes,

+ Types.RecordType

partitionFields,

+ Expression expression)

throws IOException {

+Expression boundedExpr = expression.accept(new

BindVisitor(partitionFields, false));

+boolean hiveStylePartitioningEnabled =

Boolean.parseBoolean(dataMetaClient.getTableConfig().getHiveStylePartitioningEnable());

Review Comment:

Once we move hiveStylePartitioningEnabled and urlEncodePartitioningEnabled

to base class, reuse them instead of creating this each time.

##

hudi-spark-datasource/hudi-spark-common/src/main/scala/org/apache/hudi/SparkHoodieTableFileIndex.scala:

##

@@ -307,8 +318,20 @@ class SparkHoodieTableFileIndex(spark: SparkSession,

Seq(new PartitionPath(relativePartitionPathPrefix,

staticPartitionColumnNameValuePairs.map(_._2._2.asInstanceOf[AnyRef]).toArray))

} else {

// Otherwise, compile extracted partition values (from query

predicates) into a sub-path which is a prefix

-// of the complete partition path, do listing for this prefix-path only

-

listPartitionPaths(Seq(relativePartitionPathPrefix).toList.asJava).asScala

+// of the complete partition path, do listing for this prefix-path and

filter them with partitionPredicates

+Try {

+

SparkFilterHelper.convertDataType(partitionSchema).asInstanceOf[RecordType]

+} match {

+ case Success(partitionRecordType) if

partitionRecordType.fields().size() == _partitionSchemaFromProperties.size =>

+val convertedFilters = SparkFilterHelper.convertFilters(

+ partitionColumnPredicates.flatMap {

+expr => sparkAdapter.translateFilter(expr)

+ })

+listPartitionPaths(Seq(relativePartitionPathPrefix).toList.asJava,

partitionRecordType, convertedFilters).asScala

Review Comment:

If we encounter exception such as in Conversions.fromPartitionString

default case, we should revert to list by prefix without filtering.

##

hudi-common/src/main/java/org/apache/hudi/metadata/FileSystemBackedTableMetadata.java:

##

@@ -84,6 +96,19 @@ public List getAllPartitionPaths() throws

IOException {

return

[GitHub] [hudi] ad1happy2go commented on issue #8614: [SUPPORT] Exception in thread "main" java.lang.NoSuchMethodError: org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDown(Lscala/Par

ad1happy2go commented on issue #8614: URL: https://github.com/apache/hudi/issues/8614#issuecomment-1539432004 @abdkumar Were you able to test out with this patch. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] ad1happy2go commented on issue #8532: [SUPPORT]org.apache.spark.shuffle.MetadataFetchFailedException: Missing an output location for shuffle 11 partition 1

ad1happy2go commented on issue #8532: URL: https://github.com/apache/hudi/issues/8532#issuecomment-1539432545 @gtwuser Did the tuning guide helped? Were you Able to resolve the issue? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] codope closed issue #8340: [SUPPORT] cannot assign instance of java.lang.invoke.SerializedLambda

codope closed issue #8340: [SUPPORT] cannot assign instance of java.lang.invoke.SerializedLambda URL: https://github.com/apache/hudi/issues/8340 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] ad1happy2go commented on issue #8340: [SUPPORT] cannot assign instance of java.lang.invoke.SerializedLambda

ad1happy2go commented on issue #8340: URL: https://github.com/apache/hudi/issues/8340#issuecomment-1539430091 Thanks @TranHuyTiep. Closing the issue as you are able to fix. Please reopen if you see issue again. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8659: [HUDI-6155] Fix cleaner based on hours for earliest commit to retain

hudi-bot commented on PR #8659: URL: https://github.com/apache/hudi/pull/8659#issuecomment-1539417125 ## CI report: * 4173ee7fd4dda6e1791b5356a4ca0d09df207f27 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16958) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Closed] (HUDI-6031) checkpoint lost after changing COW to MOR, when using deltastreamer

[ https://issues.apache.org/jira/browse/HUDI-6031?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Kong Wei closed HUDI-6031. -- Resolution: Fixed > checkpoint lost after changing COW to MOR, when using deltastreamer > --- > > Key: HUDI-6031 > URL: https://issues.apache.org/jira/browse/HUDI-6031 > Project: Apache Hudi > Issue Type: Bug > Components: deltastreamer >Reporter: Kong Wei >Assignee: Kong Wei >Priority: Major > Labels: pull-request-available > > after changing existing COW table to MOR (follow the > [FAQ|#how-to-convert-an-existing-cow-table-to-mor]), then continue the > deltastreamer on the MOR table, the checkpoint from COW (saved in commit > file) will lost, cause the dataloss issue in this case. > > -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Resolved] (HUDI-6031) checkpoint lost after changing COW to MOR, when using deltastreamer

[ https://issues.apache.org/jira/browse/HUDI-6031?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Kong Wei resolved HUDI-6031. > checkpoint lost after changing COW to MOR, when using deltastreamer > --- > > Key: HUDI-6031 > URL: https://issues.apache.org/jira/browse/HUDI-6031 > Project: Apache Hudi > Issue Type: Bug > Components: deltastreamer >Reporter: Kong Wei >Assignee: Kong Wei >Priority: Major > Labels: pull-request-available > > after changing existing COW table to MOR (follow the > [FAQ|#how-to-convert-an-existing-cow-table-to-mor]), then continue the > deltastreamer on the MOR table, the checkpoint from COW (saved in commit > file) will lost, cause the dataloss issue in this case. > > -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Closed] (HUDI-6019) Kafka source support split by count

[

https://issues.apache.org/jira/browse/HUDI-6019?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Kong Wei closed HUDI-6019.

--

Resolution: Fixed

> Kafka source support split by count

> ---

>

> Key: HUDI-6019

> URL: https://issues.apache.org/jira/browse/HUDI-6019

> Project: Apache Hudi

> Issue Type: New Feature

> Components: deltastreamer, hudi-utilities

>Reporter: Kong Wei

>Assignee: Kong Wei

>Priority: Major

> Labels: pull-request-available

>

> For the kafka source, when pulling data from kafka, the default parallelism

> is the number of kafka partitions, and the only way to increase the

> parallelism (to speed up) is to add more kafka partitions.

> There are cases:

> # Pulling large amount of data from kafka (eg. maxEvents=1), but the

> # of kafka partition is not enough, the procedure of the pulling will cost

> too much of time, even worse can cause the executor OOM

> # There is huge data skew between kafka partitions, the procedure of the

> pulling will be blocked by the slowest partition

> to solve those cases, I want to add a parameter

> {{*hoodie.deltastreamer.source.kafka.per.partition.maxEvents*}} to control

> the maxEvents in one kafka partition, default Long.MAX_VALUE means not trun

> this feature on.

>

> For example, given hoodie.deltastreamer.kafka.source.maxEvents=1000, 2

> kafka partitions:

> the best case is pulling 500 events from each kafka partition, which may

> take minutes to finish;

> while worse case may be pulling 900 event from one partition, and pulling

> 100 events from another one, which will take more time to finish due to

> data skew.

>

> In this example, we set

> {{hoodie.deltastreamer.source.kafka.per.partition.maxEvents=100, then we

> will split the kafka source into at least 10 parts, each executor will

> pulling at most 100 events from kafka, which will take the advantage of

> parallelism.}}

> {{}}

> {{}}

> {{**}}

> 3 benefits of this feature:

> # Avoid a single executor pulling a large amount of data and taking too long

> ({*}avoid data skew{*})

> # Avoid a single executor pulling a large amount of data, use too much

> memory or even OOM ({*}avoid OOM{*})

> # A single executor pulls a small amount of data, which can make full use of

> the number of cores to improve concurrency, then reduce the time of the

> pulling procedure ({*}increase parallelism{*})

>

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[jira] [Resolved] (HUDI-6019) Kafka source support split by count

[

https://issues.apache.org/jira/browse/HUDI-6019?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Kong Wei resolved HUDI-6019.

> Kafka source support split by count

> ---

>

> Key: HUDI-6019

> URL: https://issues.apache.org/jira/browse/HUDI-6019

> Project: Apache Hudi

> Issue Type: New Feature

> Components: deltastreamer, hudi-utilities

>Reporter: Kong Wei

>Assignee: Kong Wei

>Priority: Major

> Labels: pull-request-available

>

> For the kafka source, when pulling data from kafka, the default parallelism

> is the number of kafka partitions, and the only way to increase the

> parallelism (to speed up) is to add more kafka partitions.

> There are cases:

> # Pulling large amount of data from kafka (eg. maxEvents=1), but the

> # of kafka partition is not enough, the procedure of the pulling will cost

> too much of time, even worse can cause the executor OOM

> # There is huge data skew between kafka partitions, the procedure of the

> pulling will be blocked by the slowest partition

> to solve those cases, I want to add a parameter

> {{*hoodie.deltastreamer.source.kafka.per.partition.maxEvents*}} to control

> the maxEvents in one kafka partition, default Long.MAX_VALUE means not trun

> this feature on.

>

> For example, given hoodie.deltastreamer.kafka.source.maxEvents=1000, 2

> kafka partitions:

> the best case is pulling 500 events from each kafka partition, which may

> take minutes to finish;

> while worse case may be pulling 900 event from one partition, and pulling

> 100 events from another one, which will take more time to finish due to

> data skew.

>

> In this example, we set

> {{hoodie.deltastreamer.source.kafka.per.partition.maxEvents=100, then we

> will split the kafka source into at least 10 parts, each executor will

> pulling at most 100 events from kafka, which will take the advantage of

> parallelism.}}

> {{}}

> {{}}

> {{**}}

> 3 benefits of this feature:

> # Avoid a single executor pulling a large amount of data and taking too long

> ({*}avoid data skew{*})

> # Avoid a single executor pulling a large amount of data, use too much

> memory or even OOM ({*}avoid OOM{*})

> # A single executor pulls a small amount of data, which can make full use of

> the number of cores to improve concurrency, then reduce the time of the

> pulling procedure ({*}increase parallelism{*})

>

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[hudi] branch master updated: [MINOR] Claim RFC-69 for Hudi 1.x (#8671)

This is an automated email from the ASF dual-hosted git repository. vinoth pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/hudi.git The following commit(s) were added to refs/heads/master by this push: new a71d3e49fe6 [MINOR] Claim RFC-69 for Hudi 1.x (#8671) a71d3e49fe6 is described below commit a71d3e49fe6fb1c7d3dbbed846eb81d97464768b Author: vinoth chandar AuthorDate: Mon May 8 21:39:27 2023 -0700 [MINOR] Claim RFC-69 for Hudi 1.x (#8671) --- rfc/README.md | 1 + 1 file changed, 1 insertion(+) diff --git a/rfc/README.md b/rfc/README.md index d894ccf0d22..9218b0e71b6 100644 --- a/rfc/README.md +++ b/rfc/README.md @@ -104,3 +104,4 @@ The list of all RFCs can be found here. | 66 | [Lockless Multi-Writer Support](./rfc-66/rfc-66.md) | `UNDER REVIEW` | | 67 | [Hudi Bundle Standards](./rfc-67/rfc-67.md) | `UNDER REVIEW` | | 68 | [A More Effective HoodieMergeHandler for COW Table with Parquet](./rfc-68/rfc-68.md) | `UNDER REVIEW` | +| 69 | [Hudi 1.x](./rfc-69/rfc-69.md) | `UNDER REVIEW` |

[GitHub] [hudi] vinothchandar merged pull request #8671: [MINOR] Claim RFC-69 for Hudi 1.x

vinothchandar merged PR #8671: URL: https://github.com/apache/hudi/pull/8671 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on a diff in pull request #8503: [HUDI-6047] Clustering operation on consistent hashing index resulting in duplicate data

danny0405 commented on code in PR #8503:

URL: https://github.com/apache/hudi/pull/8503#discussion_r1188118551

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/index/HoodieIndex.java:

##

@@ -154,6 +154,14 @@ public boolean requiresTagging(WriteOperationType

operationType) {

public void close() {

}

+ /***

+ * Updates index metadata of the given table and instant if needed.

+ * @param table The committed table.

+ * @param hoodieInstant The instant to commit.

+ */

+ public void commitIndexMetadataIfNeeded(HoodieTable table, String

hoodieInstant) {

+ }

+

Review Comment:

Cool, then we can get rid of the in-consistency and also the method

`commitIndexMetadataIfNeeded`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8669: [HUDI-5362] Rebase IncrementalRelation over HoodieBaseRelation

hudi-bot commented on PR #8669: URL: https://github.com/apache/hudi/pull/8669#issuecomment-1539383881 ## CI report: * 9b8fd1cd5d56d58fc52d334a54e326c405fadf53 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16966) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8660: [MINOR] Fix RunBootstrapProcedure doesn't has database default value

hudi-bot commented on PR #8660: URL: https://github.com/apache/hudi/pull/8660#issuecomment-1539383737 ## CI report: * a8c869a89e0382f1d82eab51a73dac7b180b766a Azure: [CANCELED](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16961) * 07fff1ff35fd19d4abb39a184e17cc0683db770e Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16965) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] vinothchandar opened a new pull request, #8671: [MINOR] Claim RFC-69 for Hudi 1.x

vinothchandar opened a new pull request, #8671: URL: https://github.com/apache/hudi/pull/8671 ### Change Logs _Describe context and summary for this change. Highlight if any code was copied._ ### Impact _Describe any public API or user-facing feature change or any performance impact._ ### Risk level (write none, low medium or high below) _If medium or high, explain what verification was done to mitigate the risks._ ### Documentation Update _Describe any necessary documentation update if there is any new feature, config, or user-facing change_ - _The config description must be updated if new configs are added or the default value of the configs are changed_ - _Any new feature or user-facing change requires updating the Hudi website. Please create a Jira ticket, attach the ticket number here and follow the [instruction](https://hudi.apache.org/contribute/developer-setup#website) to make changes to the website._ ### Contributor's checklist - [ ] Read through [contributor's guide](https://hudi.apache.org/contribute/how-to-contribute) - [ ] Change Logs and Impact were stated clearly - [ ] Adequate tests were added if applicable - [ ] CI passed -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8452: [HUDI-6077] Add more partition push down filters

hudi-bot commented on PR #8452: URL: https://github.com/apache/hudi/pull/8452#issuecomment-1539382645 ## CI report: * 6526a12287cc85865da640d23a9266d887e82eba Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16864) Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16877) * 38071fbfee977489b4997fd386e6d183435a6cbe Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16964) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8669: [HUDI-5362] Rebase IncrementalRelation over HoodieBaseRelation

hudi-bot commented on PR #8669: URL: https://github.com/apache/hudi/pull/8669#issuecomment-1539373231 ## CI report: * 9b8fd1cd5d56d58fc52d334a54e326c405fadf53 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8668: [HUDI-3639] Add Proper Incremental Records FIltering support into Hudi's custom RDD

hudi-bot commented on PR #8668: URL: https://github.com/apache/hudi/pull/8668#issuecomment-1539373202 ## CI report: * f13c6675399e5ef6c4a64b276251ef7cbd7a7c84 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16963) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8660: [MINOR] Fix RunBootstrapProcedure doesn't has database default value

hudi-bot commented on PR #8660: URL: https://github.com/apache/hudi/pull/8660#issuecomment-1539373174 ## CI report: * b0f6290c6294d4857e4781dc83de2e626ed68f3a Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16930) * a8c869a89e0382f1d82eab51a73dac7b180b766a Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16961) * 07fff1ff35fd19d4abb39a184e17cc0683db770e UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8452: [HUDI-6077] Add more partition push down filters

hudi-bot commented on PR #8452: URL: https://github.com/apache/hudi/pull/8452#issuecomment-1539372872 ## CI report: * 6526a12287cc85865da640d23a9266d887e82eba Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16864) Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16877) * 38071fbfee977489b4997fd386e6d183435a6cbe UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8668: [HUDI-3639] Add Proper Incremental Records FIltering support into Hudi's custom RDD

hudi-bot commented on PR #8668: URL: https://github.com/apache/hudi/pull/8668#issuecomment-1539369241 ## CI report: * f13c6675399e5ef6c4a64b276251ef7cbd7a7c84 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8666: [HUDI-915] Add missing partititonpath to records COW

hudi-bot commented on PR #8666: URL: https://github.com/apache/hudi/pull/8666#issuecomment-1539369207 ## CI report: * 5d1b90a6e91fbfe1229556377831d0c52d9c7613 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16956) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] bvaradar commented on a diff in pull request #8452: [HUDI-6077] Add more partition push down filters

bvaradar commented on code in PR #8452:

URL: https://github.com/apache/hudi/pull/8452#discussion_r1186777672

##

hudi-common/src/main/java/org/apache/hudi/expression/Expression.java:

##

@@ -40,14 +51,19 @@ public enum Operator {

}

}

- private final List children;

+ List getChildren();

Review Comment:

Make this and getDataType protected

##

hudi-common/src/main/java/org/apache/hudi/metadata/FileSystemBackedTableMetadata.java:

##

@@ -112,6 +164,17 @@ private List getPartitionPathWithPathPrefix(String

relativePathPrefix) t

}, listingParallelism);

pathsToList.clear();

+ Expression boundedExpr;

Review Comment:

Please add descriptive comment for this block.

##

hudi-common/src/main/java/org/apache/hudi/metadata/FileSystemBackedTableMetadata.java:

##

@@ -95,11 +120,38 @@ public List

getPartitionPathWithPathPrefixes(List relativePathPr

}).collect(Collectors.toList());

}

+ private int getRelativePathPartitionLevel(Types.RecordType partitionFields,

String relativePathPrefix) {

+if (StringUtils.isNullOrEmpty(relativePathPrefix) || partitionFields ==

null || partitionFields.fields().size() == 1) {

+ return 0;

+}

+

+int level = 0;

+for (int i = 1; i < relativePathPrefix.length() - 1; i++) {

Review Comment:

Can we use partitionFields.size to find the level ?

##

hudi-common/src/main/java/org/apache/hudi/expression/BindVisitor.java:

##

@@ -0,0 +1,179 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.expression;

+

+import org.apache.hudi.internal.schema.Types;

+

+import java.util.List;

+import java.util.stream.Collectors;

+

+public class BindVisitor implements ExpressionVisitor {

+

+ protected final Types.RecordType recordType;

+ protected final boolean caseSensitive;

+

+ public BindVisitor(Types.RecordType recordType, boolean caseSensitive) {

+this.recordType = recordType;

+this.caseSensitive = caseSensitive;

+ }

+

+ @Override

+ public Expression alwaysTrue() {

+return Predicates.True.get();

+ }

+

+ @Override

+ public Expression alwaysFalse() {

+return Predicates.False.get();

+ }

+

+ @Override

+ public Expression visitAnd(Predicates.And and) {

+if (and.getLeft() instanceof Predicates.False

+|| and.getRight() instanceof Predicates.False) {

+ return alwaysFalse();

+}

+

+Expression left = and.getLeft().accept(this);

+Expression right = and.getRight().accept(this);

+if (left instanceof Predicates.False

+|| right instanceof Predicates.False) {

+ return alwaysFalse();

+}

+

+if (left instanceof Predicates.True

+&& right instanceof Predicates.True) {

+ return alwaysTrue();

+}

+

+if (left instanceof Predicates.True) {

+ return right;

+}

+

+if (right instanceof Predicates.True) {

+ return left;

+}

+

+return Predicates.and(left, right);

+ }

+

+ @Override

+ public Expression visitOr(Predicates.Or or) {

+if (or.getLeft() instanceof Predicates.True

+|| or.getRight() instanceof Predicates.True) {

+ return alwaysTrue();

+}

+

+Expression left = or.getLeft().accept(this);

+Expression right = or.getRight().accept(this);

+if (left instanceof Predicates.True

+|| right instanceof Predicates.True) {

+ return alwaysTrue();

+}

+

+if (left instanceof Predicates.False

+&& right instanceof Predicates.False) {

+ return alwaysFalse();

+}

+

+if (left instanceof Predicates.False) {

+ return right;

+}

+

+if (right instanceof Predicates.False) {

+ return left;

+}

+

+return Predicates.or(left, right);

+ }

+

+ @Override

+ public Expression visitLiteral(Literal literal) {

+return literal;

+ }

+

+ @Override

+ public Expression visitNameReference(NameReference attribute) {

+// TODO Should consider caseSensitive?

Review Comment:

Yes, case insensitive by default would make it consistent with spark sql.

For this , I think it would be ok to introduce a config for case sensitivity

and align it with spark.sql.caseSensitive config in the hudi-spark integration.

[GitHub] [hudi] boneanxs commented on pull request #8076: [HUDI-5884] Support bulk_insert for insert_overwrite and insert_overwrite_table

boneanxs commented on PR #8076: URL: https://github.com/apache/hudi/pull/8076#issuecomment-1539365438 Hi @codope @stream2000 Gentle ping... Could you please take a look again? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] boneanxs commented on a diff in pull request #8669: [HUDI-5362] Rebase IncrementalRelation over HoodieBaseRelation

boneanxs commented on code in PR #8669:

URL: https://github.com/apache/hudi/pull/8669#discussion_r1188092438

##

hudi-spark-datasource/hudi-spark-common/src/main/scala/org/apache/hudi/IncrementalRelation.scala:

##

@@ -17,281 +17,82 @@

package org.apache.hudi

-import org.apache.avro.Schema

-import org.apache.hadoop.fs.{GlobPattern, Path}

-import org.apache.hudi.HoodieBaseRelation.isSchemaEvolutionEnabledOnRead

-import org.apache.hudi.client.common.HoodieSparkEngineContext

-import org.apache.hudi.client.utils.SparkInternalSchemaConverter

-import org.apache.hudi.common.fs.FSUtils

-import org.apache.hudi.common.model.{HoodieCommitMetadata, HoodieFileFormat,

HoodieRecord, HoodieReplaceCommitMetadata}

-import org.apache.hudi.common.table.timeline.{HoodieInstant, HoodieTimeline}

-import org.apache.hudi.common.table.{HoodieTableMetaClient,

TableSchemaResolver}

-import org.apache.hudi.common.util.{HoodieTimer, InternalSchemaCache}

-import org.apache.hudi.config.HoodieWriteConfig

-import org.apache.hudi.exception.HoodieException

-import org.apache.hudi.internal.schema.InternalSchema

-import org.apache.hudi.internal.schema.utils.SerDeHelper

-import org.apache.hudi.table.HoodieSparkTable

-import org.apache.spark.api.java.JavaSparkContext

+import org.apache.hudi.common.table.timeline.HoodieTimeline

+import org.apache.hudi.common.table.view.HoodieTableFileSystemView

+import org.apache.hudi.common.table.HoodieTableMetaClient

+import

org.apache.hudi.hadoop.utils.HoodieInputFormatUtils.getWritePartitionPaths

import org.apache.spark.rdd.RDD

-import

org.apache.spark.sql.execution.datasources.parquet.HoodieParquetFileFormat

-import org.apache.spark.sql.sources.{BaseRelation, TableScan}

+import org.apache.spark.sql.catalyst.InternalRow

+import org.apache.spark.sql.catalyst.expressions.Expression

+import org.apache.spark.sql.sources.Filter

import org.apache.spark.sql.types.StructType

-import org.apache.spark.sql.{DataFrame, Row, SQLContext}

-import org.slf4j.LoggerFactory

+import org.apache.spark.sql.SQLContext

-import scala.collection.JavaConversions._

-import scala.collection.mutable

+import scala.collection.JavaConverters._

/**

* Relation, that implements the Hoodie incremental view.

*

* Implemented for Copy_on_write storage.

- * TODO: rebase w/ HoodieBaseRelation HUDI-5362

*

*/

-class IncrementalRelation(val sqlContext: SQLContext,

- val optParams: Map[String, String],

- val userSchema: Option[StructType],

- val metaClient: HoodieTableMetaClient) extends

BaseRelation with TableScan {

-

- private val log = LoggerFactory.getLogger(classOf[IncrementalRelation])

-

- val skeletonSchema: StructType = HoodieSparkUtils.getMetaSchema

- private val basePath = metaClient.getBasePathV2

- // TODO : Figure out a valid HoodieWriteConfig

- private val hoodieTable =

HoodieSparkTable.create(HoodieWriteConfig.newBuilder().withPath(basePath.toString).build(),

-new HoodieSparkEngineContext(new

JavaSparkContext(sqlContext.sparkContext)),

-metaClient)

- private val commitTimeline =

hoodieTable.getMetaClient.getCommitTimeline.filterCompletedInstants()

-

- private val useStateTransitionTime =

optParams.get(DataSourceReadOptions.READ_BY_STATE_TRANSITION_TIME.key)

-.map(_.toBoolean)

-

.getOrElse(DataSourceReadOptions.READ_BY_STATE_TRANSITION_TIME.defaultValue)

-

- if (commitTimeline.empty()) {

-throw new HoodieException("No instants to incrementally pull")

- }

- if (!optParams.contains(DataSourceReadOptions.BEGIN_INSTANTTIME.key)) {

-throw new HoodieException(s"Specify the begin instant time to pull from

using " +

- s"option ${DataSourceReadOptions.BEGIN_INSTANTTIME.key}")

+case class IncrementalRelation(override val sqlContext: SQLContext,

+ override val optParams: Map[String, String],

+ private val userSchema: Option[StructType],

+ override val metaClient: HoodieTableMetaClient,

+ private val prunedDataSchema:

Option[StructType] = None)

+ extends AbstractBaseFileOnlyRelation(sqlContext, metaClient, optParams,

userSchema, Seq(), prunedDataSchema)

+with HoodieIncrementalRelationTrait {

+

+ override type Relation = IncrementalRelation

+

+ override def imbueConfigs(sqlContext: SQLContext): Unit = {

+super.imbueConfigs(sqlContext)

+// TODO(HUDI-3639) vectorized reader has to be disabled to make sure

IncrementalRelation is working properly

+

sqlContext.sparkSession.sessionState.conf.setConfString("spark.sql.parquet.enableVectorizedReader",

"false")

}

-

- if (!metaClient.getTableConfig.populateMetaFields()) {

-throw new HoodieException("Incremental queries are not supported when meta

fields are disabled")

- }

-

- val useEndInstantSchema =

optParams.getOrElse(DataSourceReadOptions.INCREMENTAL_READ_SCHEMA_USE_END_INSTANTTIME.key,

-

[GitHub] [hudi] boneanxs commented on a diff in pull request #8669: [HUDI-5362] Rebase IncrementalRelation over HoodieBaseRelation

boneanxs commented on code in PR #8669:

URL: https://github.com/apache/hudi/pull/8669#discussion_r1188092438

##

hudi-spark-datasource/hudi-spark-common/src/main/scala/org/apache/hudi/IncrementalRelation.scala:

##

@@ -17,281 +17,82 @@

package org.apache.hudi

-import org.apache.avro.Schema

-import org.apache.hadoop.fs.{GlobPattern, Path}

-import org.apache.hudi.HoodieBaseRelation.isSchemaEvolutionEnabledOnRead

-import org.apache.hudi.client.common.HoodieSparkEngineContext

-import org.apache.hudi.client.utils.SparkInternalSchemaConverter

-import org.apache.hudi.common.fs.FSUtils

-import org.apache.hudi.common.model.{HoodieCommitMetadata, HoodieFileFormat,

HoodieRecord, HoodieReplaceCommitMetadata}

-import org.apache.hudi.common.table.timeline.{HoodieInstant, HoodieTimeline}

-import org.apache.hudi.common.table.{HoodieTableMetaClient,

TableSchemaResolver}

-import org.apache.hudi.common.util.{HoodieTimer, InternalSchemaCache}

-import org.apache.hudi.config.HoodieWriteConfig

-import org.apache.hudi.exception.HoodieException

-import org.apache.hudi.internal.schema.InternalSchema

-import org.apache.hudi.internal.schema.utils.SerDeHelper

-import org.apache.hudi.table.HoodieSparkTable

-import org.apache.spark.api.java.JavaSparkContext

+import org.apache.hudi.common.table.timeline.HoodieTimeline

+import org.apache.hudi.common.table.view.HoodieTableFileSystemView

+import org.apache.hudi.common.table.HoodieTableMetaClient

+import

org.apache.hudi.hadoop.utils.HoodieInputFormatUtils.getWritePartitionPaths

import org.apache.spark.rdd.RDD

-import

org.apache.spark.sql.execution.datasources.parquet.HoodieParquetFileFormat

-import org.apache.spark.sql.sources.{BaseRelation, TableScan}

+import org.apache.spark.sql.catalyst.InternalRow

+import org.apache.spark.sql.catalyst.expressions.Expression

+import org.apache.spark.sql.sources.Filter

import org.apache.spark.sql.types.StructType

-import org.apache.spark.sql.{DataFrame, Row, SQLContext}

-import org.slf4j.LoggerFactory

+import org.apache.spark.sql.SQLContext

-import scala.collection.JavaConversions._

-import scala.collection.mutable

+import scala.collection.JavaConverters._

/**

* Relation, that implements the Hoodie incremental view.

*

* Implemented for Copy_on_write storage.

- * TODO: rebase w/ HoodieBaseRelation HUDI-5362

*

*/

-class IncrementalRelation(val sqlContext: SQLContext,

- val optParams: Map[String, String],

- val userSchema: Option[StructType],

- val metaClient: HoodieTableMetaClient) extends

BaseRelation with TableScan {

-

- private val log = LoggerFactory.getLogger(classOf[IncrementalRelation])

-

- val skeletonSchema: StructType = HoodieSparkUtils.getMetaSchema

- private val basePath = metaClient.getBasePathV2

- // TODO : Figure out a valid HoodieWriteConfig

- private val hoodieTable =

HoodieSparkTable.create(HoodieWriteConfig.newBuilder().withPath(basePath.toString).build(),

-new HoodieSparkEngineContext(new

JavaSparkContext(sqlContext.sparkContext)),

-metaClient)

- private val commitTimeline =

hoodieTable.getMetaClient.getCommitTimeline.filterCompletedInstants()

-

- private val useStateTransitionTime =

optParams.get(DataSourceReadOptions.READ_BY_STATE_TRANSITION_TIME.key)

-.map(_.toBoolean)

-

.getOrElse(DataSourceReadOptions.READ_BY_STATE_TRANSITION_TIME.defaultValue)

-

- if (commitTimeline.empty()) {

-throw new HoodieException("No instants to incrementally pull")

- }

- if (!optParams.contains(DataSourceReadOptions.BEGIN_INSTANTTIME.key)) {

-throw new HoodieException(s"Specify the begin instant time to pull from

using " +

- s"option ${DataSourceReadOptions.BEGIN_INSTANTTIME.key}")

+case class IncrementalRelation(override val sqlContext: SQLContext,

+ override val optParams: Map[String, String],

+ private val userSchema: Option[StructType],

+ override val metaClient: HoodieTableMetaClient,

+ private val prunedDataSchema:

Option[StructType] = None)

+ extends AbstractBaseFileOnlyRelation(sqlContext, metaClient, optParams,

userSchema, Seq(), prunedDataSchema)

+with HoodieIncrementalRelationTrait {

+

+ override type Relation = IncrementalRelation

+

+ override def imbueConfigs(sqlContext: SQLContext): Unit = {

+super.imbueConfigs(sqlContext)

+// TODO(HUDI-3639) vectorized reader has to be disabled to make sure

IncrementalRelation is working properly

+

sqlContext.sparkSession.sessionState.conf.setConfString("spark.sql.parquet.enableVectorizedReader",

"false")

}

-

- if (!metaClient.getTableConfig.populateMetaFields()) {

-throw new HoodieException("Incremental queries are not supported when meta

fields are disabled")

- }

-

- val useEndInstantSchema =

optParams.getOrElse(DataSourceReadOptions.INCREMENTAL_READ_SCHEMA_USE_END_INSTANTTIME.key,

-

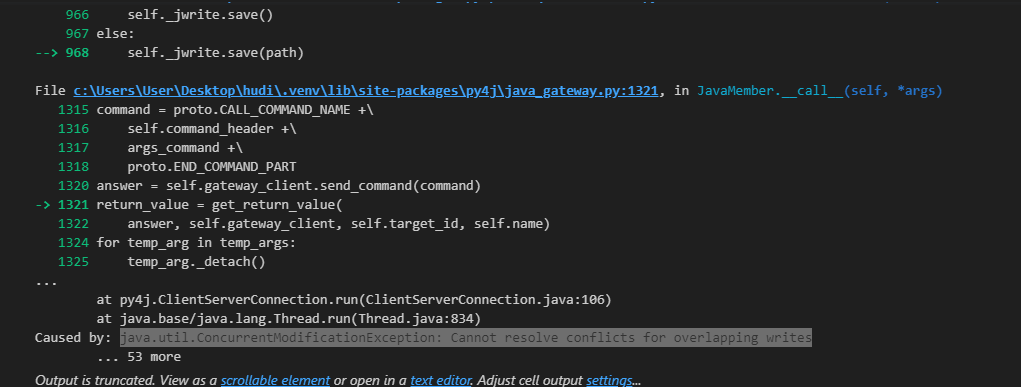

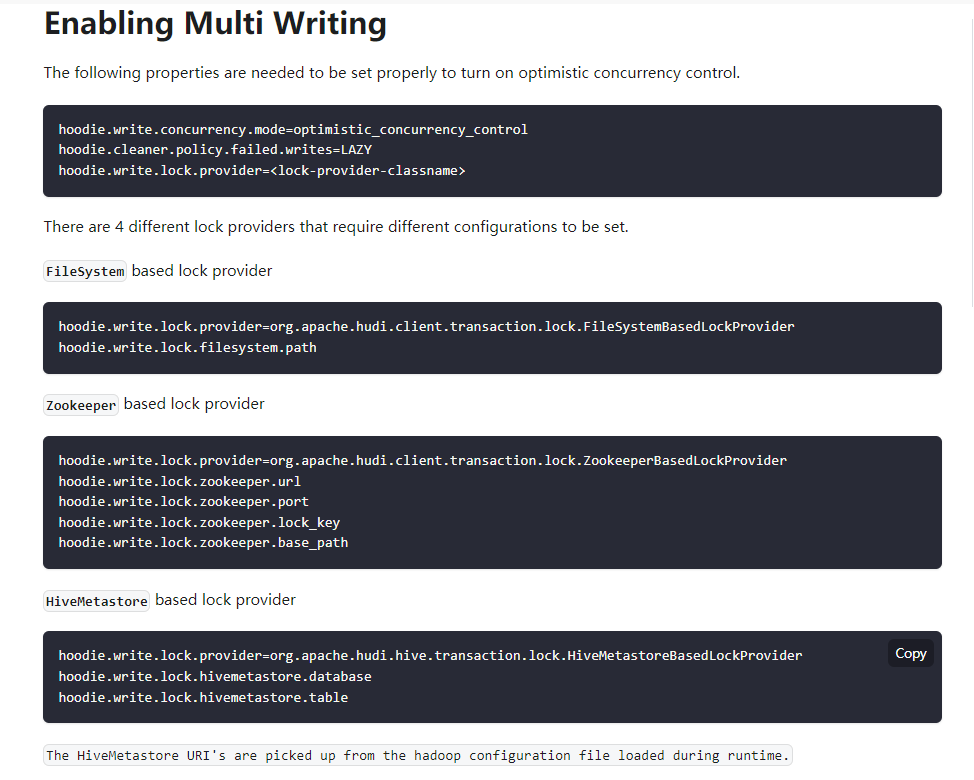

[GitHub] [hudi] tomyanth opened a new issue, #8670: [SUPPORT] Hudi cannot multi-write referring to case #7653

tomyanth opened a new issue, #8670:

URL: https://github.com/apache/hudi/issues/8670

**Describe the problem you faced**

Actually I raise my issues under case #7653 because I have almost the same

issue with that original question which is

java.util.ConcurrentModificationException: Cannot resolve conflicts for

overlapping writes

**To Reproduce**

Steps to reproduce the behavior:

1. Run 2 hudi job to write the same location to simulate the process of

multi write

2. If set to overwrite, both job fails

3. If set to append, at most one job succeed.

4. With or without the multi-write setting suggest below, at most only one

job succeed but the error message is different

hudi_options = {

'hoodie.table.name': table_name,

'hoodie.datasource.write.recordkey.field': 'emp_id',

'hoodie.datasource.write.table.name': table_name,

'hoodie.datasource.write.operation': 'upsert',

'hoodie.datasource.write.precombine.field': 'ts',

'hoodie.upsert.shuffle.parallelism': 2,

'hoodie.insert.shuffle.parallelism': 2,

'hoodie.schema.on.read.enable' : 'true', # for changing column names

'hoodie.write.concurrency.mode':'optimistic_concurrency_control',

#added for zookeeper to deal with multiple source writes

'hoodie.cleaner.policy.failed.writes':'LAZY',

#

'hoodie.write.lock.provider':'org.apache.hudi.client.transaction.lock.FileSystemBasedLockProvider',

'hoodie.write.lock.provider':'org.apache.hudi.client.transaction.lock.ZookeeperBasedLockProvider',

'hoodie.write.lock.zookeeper.url':'localhost',

'hoodie.write.lock.zookeeper.port':'2181',

'hoodie.write.lock.zookeeper.lock_key':'my_lock',

'hoodie.write.lock.zookeeper.base_path':'/hudi_locks',

}

**Expected behavior**

I expect at least FileSystemBasedLockProvider wll be able to perform

multi-write but unfortunately the same error message

java.util.ConcurrentModificationException: Cannot resolve conflicts for

overlapping writes always pops up.

Code run

"""

Install

https://dlcdn.apache.org/spark/spark-3.3.1/spark-3.3.1-bin-hadoop2.tgz

hadoop2.7

https://github.com/soumilshah1995/winutils/blob/master/hadoop-2.7.7/bin/winutils.exe

pyspark --packages org.apache.hudi:hudi-spark3.3-bundle_2.12:0.12.1 --conf

'spark.serializer=org.apache.spark.serializer.KryoSerializer'

VAR

SPARK_HOME

HADOOP_HOME

PATH

`%HAPOOP_HOME%\bin`

`%SPARK_HOME%\bin`

Complete Tutorials on HUDI

https://github.com/soumilshah1995/Insert-Update-Read-Write-SnapShot-Time-Travel-incremental-Query-on-APache-Hudi-transacti/blob/main/hudi%20(1).ipynb

"""

import os

import sys

import uuid

import pyspark

from pyspark.sql import SparkSession

from pyspark import SparkConf, SparkContext

from pyspark.sql.functions import col, asc, desc

from pyspark.sql.functions import col, to_timestamp,

monotonically_increasing_id, to_date, when

from pyspark.sql.functions import *

from pyspark.sql.types import *

from datetime import datetime

from functools import reduce

from faker import Faker

from faker import Faker

import findspark

import datetime

time = datetime.datetime.now()

time = time.strftime("YMD%Y%m%dHHMMSSms%H%M%S%f")

SUBMIT_ARGS = "--packages org.apache.hudi:hudi-spark3.3-bundle_2.12:0.12.1

pyspark-shell"

os.environ["PYSPARK_SUBMIT_ARGS"] = SUBMIT_ARGS

os.environ['PYSPARK_PYTHON'] = sys.executable

os.environ['PYSPARK_DRIVER_PYTHON'] = sys.executable

findspark.init()

spark = SparkSession.builder\

.config('spark.serializer',

'org.apache.spark.serializer.KryoSerializer') \

.config('className', 'org.apache.hudi') \

.config('spark.sql.hive.convertMetastoreParquet', 'false') \

.config('spark.sql.extensions',

'org.apache.spark.sql.hudi.HoodieSparkSessionExtension') \

.config('spark.sql.warehouse.dir', 'file:///C:/tmp/spark_warehouse') \

.getOrCreate()

global faker

faker = Faker()

class DataGenerator(object):

@staticmethod

def get_data():

return [

(

x,

faker.name(),

faker.random_element(elements=('IT', 'HR', 'Sales',

'Marketing')),

faker.random_element(elements=('CA', 'NY', 'TX', 'FL', 'IL',

'RJ')),

faker.random_int(min=1, max=15),

faker.random_int(min=18, max=60),

faker.random_int(min=0, max=10),

faker.unix_time()

) for x in range(5)

]

data = DataGenerator.get_data()

columns = ["emp_id", "employee_name",

[jira] [Updated] (HUDI-5362) Rebase IncrementalRelation over HoodieBaseRelation

[ https://issues.apache.org/jira/browse/HUDI-5362?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HUDI-5362: - Labels: pull-request-available (was: ) > Rebase IncrementalRelation over HoodieBaseRelation > -- > > Key: HUDI-5362 > URL: https://issues.apache.org/jira/browse/HUDI-5362 > Project: Apache Hudi > Issue Type: Improvement > Components: reader-core >Reporter: sivabalan narayanan >Priority: Major > Labels: pull-request-available > > We need to rebase IncrementalRelation over HoodieBaseRelation. As of now, its > based of of BaseRelation. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [hudi] boneanxs opened a new pull request, #8669: [HUDI-5362] Rebase IncrementalRelation over HoodieBaseRelation

boneanxs opened a new pull request, #8669: URL: https://github.com/apache/hudi/pull/8669 ### Change Logs _Describe context and summary for this change. Highlight if any code was copied._ Rebase IncrementalRelation over HoodieBaseRelation ### Impact _Describe any public API or user-facing feature change or any performance impact._ None. ### Risk level (write none, low medium or high below) _If medium or high, explain what verification was done to mitigate the risks._ none. ### Documentation Update _Describe any necessary documentation update if there is any new feature, config, or user-facing change_ - _The config description must be updated if new configs are added or the default value of the configs are changed_ - _Any new feature or user-facing change requires updating the Hudi website. Please create a Jira ticket, attach the ticket number here and follow the [instruction](https://hudi.apache.org/contribute/developer-setup#website) to make changes to the website._ ### Contributor's checklist - [ ] Read through [contributor's guide](https://hudi.apache.org/contribute/how-to-contribute) - [ ] Change Logs and Impact were stated clearly - [ ] Adequate tests were added if applicable - [ ] CI passed -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on a diff in pull request #8082: [HUDI-5868] Upgrade Spark to 3.3.2

danny0405 commented on code in PR #8082:

URL: https://github.com/apache/hudi/pull/8082#discussion_r1188080016

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/io/storage/HoodieSparkFileReaderFactory.java:

##

@@ -33,6 +33,8 @@ protected HoodieFileReader newParquetFileReader(Configuration

conf, Path path) {

conf.setIfUnset(SQLConf.PARQUET_BINARY_AS_STRING().key(),

SQLConf.PARQUET_BINARY_AS_STRING().defaultValueString());

conf.setIfUnset(SQLConf.PARQUET_INT96_AS_TIMESTAMP().key(),

SQLConf.PARQUET_INT96_AS_TIMESTAMP().defaultValueString());

conf.setIfUnset(SQLConf.CASE_SENSITIVE().key(),

SQLConf.CASE_SENSITIVE().defaultValueString());

+// Using string value of this conf to preserve compatibility across spark

versions.

+conf.setIfUnset("spark.sql.legacy.parquet.nanosAsLong", "false");

Review Comment:

No need to do that.

##

hudi-spark-datasource/hudi-spark3.2plus-common/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/Spark32PlusHoodieParquetFileFormat.scala:

##

@@ -95,7 +95,11 @@ class Spark32PlusHoodieParquetFileFormat(private val

shouldAppendPartitionValues

hadoopConf.setBoolean(

SQLConf.PARQUET_INT96_AS_TIMESTAMP.key,

sparkSession.sessionState.conf.isParquetINT96AsTimestamp)

-

+// Using string value of this conf to preserve compatibility across spark

versions.

+hadoopConf.setBoolean(

+ "spark.sql.legacy.parquet.nanosAsLong",

+

sparkSession.sessionState.conf.getConfString("spark.sql.legacy.parquet.nanosAsLong",

"false").toBoolean

Review Comment:

No need to do that.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on a diff in pull request #8082: [HUDI-5868] Upgrade Spark to 3.3.2

danny0405 commented on code in PR #8082:

URL: https://github.com/apache/hudi/pull/8082#discussion_r1188080242

##

hudi-client/hudi-spark-client/src/main/scala/org/apache/hudi/HoodieSparkUtils.scala:

##

@@ -58,6 +58,7 @@ private[hudi] trait SparkVersionsSupport {

def gteqSpark3_2_1: Boolean = getSparkVersion >= "3.2.1"

def gteqSpark3_2_2: Boolean = getSparkVersion >= "3.2.2"

def gteqSpark3_3: Boolean = getSparkVersion >= "3.3"

+ def gteqSpark3_3_2: Boolean = getSparkVersion >= "3.3.2"

Review Comment:

Should be runtime, I think.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on pull request #8082: [HUDI-5868] Upgrade Spark to 3.3.2

danny0405 commented on PR #8082: URL: https://github.com/apache/hudi/pull/8082#issuecomment-1539339102 There was one failure in the CI: TestAvroSchemaResolutionSupport.testDataTypePromotions -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on a diff in pull request #8659: [HUDI-6155] Fix cleaner based on hours for earliest commit to retain

danny0405 commented on code in PR #8659: URL: https://github.com/apache/hudi/pull/8659#discussion_r1188076995 ## hudi-common/src/main/java/org/apache/hudi/common/table/timeline/HoodieInstantTimeGenerator.java: ## @@ -94,7 +96,9 @@ public static Date parseDateFromInstantTime(String timestamp) throws ParseExcept } LocalDateTime dt = LocalDateTime.parse(timestampInMillis, MILLIS_INSTANT_TIME_FORMATTER); - return Date.from(dt.atZone(ZoneId.systemDefault()).toInstant()); + Instant instant = dt.atZone(getZoneId()).toInstant(); + TimeZone.setDefault(TimeZone.getTimeZone(getZoneId())); + return Date.from(instant); Review Comment: It is risky to set up timezone per JVM process: `TimeZone.setDefault(`, this could impact all the threads in the JVM. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HUDI-3639) [Incremental] Add Proper Incremental Records FIltering support into Hudi's custom RDD

[ https://issues.apache.org/jira/browse/HUDI-3639?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HUDI-3639: - Labels: pull-request-available (was: ) > [Incremental] Add Proper Incremental Records FIltering support into Hudi's > custom RDD > - > > Key: HUDI-3639 > URL: https://issues.apache.org/jira/browse/HUDI-3639 > Project: Apache Hudi > Issue Type: Bug >Reporter: Alexey Kudinkin >Priority: Critical > Labels: pull-request-available > Fix For: 0.13.1 > > > Currently, Hudi's `MergeOnReadIncrementalRelation` solely relies on > `ParquetFileReader` to do record-level filtering of the records that don't > belong to a timeline span being queried. > As a side-effect, Hudi actually have to disable the use of > [VectorizedParquetReader|https://jaceklaskowski.gitbooks.io/mastering-spark-sql/content/spark-sql-vectorized-parquet-reader.html] > (since using one would prevent records from being filtered by the Reader) > > Instead, we should make sure that proper record-level filtering is performed > w/in the returned RDD, instead of squarely relying on FileReader to do that. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [hudi] cxzl25 opened a new pull request, #8668: [HUDI-3639] [Incremental] Add Proper Incremental Records FIltering support into Hudi's custom RDD

cxzl25 opened a new pull request, #8668: URL: https://github.com/apache/hudi/pull/8668 ### Change Logs Add the filter operator in `HoodieMergeOnReadRDD`, and ensure the accuracy of incremental query results when `spark.sql.parquet.recordLevelFilter.enabled=false` or `spark.sql.parquet.enableVectorizedReader=true` ### Impact Fix the scenario where the incremental query data may be wrong. https://github.com/apache/hudi/pull/5168#discussion_r1186728549 ### Risk level (write none, low medium or high below) ### Documentation Update ### Contributor's checklist - [ ] Read through [contributor's guide](https://hudi.apache.org/contribute/how-to-contribute) - [ ] Change Logs and Impact were stated clearly - [ ] Adequate tests were added if applicable - [ ] CI passed -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8660: [MINOR] Fix RunBootstrapProcedure doesn't has database default value

hudi-bot commented on PR #8660: URL: https://github.com/apache/hudi/pull/8660#issuecomment-1539325879 ## CI report: * b0f6290c6294d4857e4781dc83de2e626ed68f3a Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16930) * a8c869a89e0382f1d82eab51a73dac7b180b766a Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16961) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] xushiyan commented on a diff in pull request #6176: [HUDI-4445] S3 Incremental source improvements

xushiyan commented on code in PR #6176:

URL: https://github.com/apache/hudi/pull/6176#discussion_r1188070807

##

hudi-common/src/test/java/org/apache/hudi/common/testutils/S3EventTestPayload.java:

##

@@ -0,0 +1,53 @@

+package org.apache.hudi.common.testutils;

+

+import org.apache.hudi.avro.MercifulJsonConverter;

+import org.apache.hudi.common.model.HoodieRecordPayload;

+import org.apache.hudi.common.util.Option;

+

+import org.apache.avro.Schema;

+import org.apache.avro.generic.IndexedRecord;

+

+import java.io.IOException;

+import java.util.Map;

+

+/**

+ * Test payload for S3 event here

(https://docs.aws.amazon.com/AmazonS3/latest/userguide/notification-content-structure.html).

+ */

+public class S3EventTestPayload extends GenericTestPayload implements

HoodieRecordPayload {

Review Comment:

there is a lot of existing misused with the RawTripTestPayload see

https://issues.apache.org/jira/browse/HUDI-6164

so you may want to decouple the improvement changes from payload changes.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8107: [HUDI-5514][HUDI-5574][HUDI-5604][HUDI-5535] Adding auto generation of record keys support to Hudi/Spark

hudi-bot commented on PR #8107: URL: https://github.com/apache/hudi/pull/8107#issuecomment-1539325235 ## CI report: * 780318c5f048c4bf69980ac47d10d5e23994a21b Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=16954) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] xushiyan commented on a diff in pull request #6176: [HUDI-4445] S3 Incremental source improvements

xushiyan commented on code in PR #6176:

URL: https://github.com/apache/hudi/pull/6176#discussion_r1188069077

##

hudi-common/src/test/java/org/apache/hudi/common/testutils/S3EventTestPayload.java:

##

@@ -0,0 +1,53 @@

+package org.apache.hudi.common.testutils;

+

+import org.apache.hudi.avro.MercifulJsonConverter;

+import org.apache.hudi.common.model.HoodieRecordPayload;

+import org.apache.hudi.common.util.Option;

+

+import org.apache.avro.Schema;

+import org.apache.avro.generic.IndexedRecord;

+

+import java.io.IOException;

+import java.util.Map;

+

+/**

+ * Test payload for S3 event here

(https://docs.aws.amazon.com/AmazonS3/latest/userguide/notification-content-structure.html).

+ */

+public class S3EventTestPayload extends GenericTestPayload implements

HoodieRecordPayload {

Review Comment:

I'd suggest just test with DefaultHoodieRecordPayload with a specific S3

event schema, instead of creating a new test payload, as we want to test as

close as the real scenario. Besides, we don't couple payload with schema, as

payload is just responsible for how to merge

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] xushiyan commented on a diff in pull request #6176: [HUDI-4445] S3 Incremental source improvements

xushiyan commented on code in PR #6176:

URL: https://github.com/apache/hudi/pull/6176#discussion_r1188065909

##

hudi-utilities/src/main/java/org/apache/hudi/utilities/sources/S3EventsHoodieIncrSource.java:

##

@@ -224,6 +232,6 @@ public Pair>, String>

fetchNextBatch(Option lastCkpt

}

LOG.debug("Extracted distinct files " + cloudFiles.size()

+ " and some samples " +

cloudFiles.stream().limit(10).collect(Collectors.toList()));

-return Pair.of(dataset, queryTypeAndInstantEndpts.getRight().getRight());

+return Pair.of(dataset, sourceMetadata.getRight());

}

-}

+}

Review Comment:

we should have the EOL

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] xushiyan commented on a diff in pull request #6176: [HUDI-4445] S3 Incremental source improvements

xushiyan commented on code in PR #6176:

URL: https://github.com/apache/hudi/pull/6176#discussion_r1188065742

##

hudi-utilities/src/main/java/org/apache/hudi/utilities/sources/S3EventsHoodieIncrSource.java:

##

@@ -189,33 +194,36 @@ public Pair>, String>

fetchNextBatch(Option lastCkpt

.filter(filter)

.select("s3.bucket.name", "s3.object.key")

.distinct()

-.mapPartitions((MapPartitionsFunction) fileListIterator

-> {

+.rdd()

+// JavaRDD simplifies coding with collect and suitable mapPartitions

signature. check if this can be avoided.

+.toJavaRDD()

+.mapPartitions(fileListIterator -> {

Review Comment:

we usually prefer high level dataframe apis. how is it actually beneficial

to convert to rdd here? don't quite get the comment

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] xushiyan commented on a diff in pull request #6176: [HUDI-4445] S3 Incremental source improvements

xushiyan commented on code in PR #6176:

URL: https://github.com/apache/hudi/pull/6176#discussion_r1188064051

##

hudi-common/src/test/java/org/apache/hudi/common/testutils/GenericTestPayload.java:

##

@@ -0,0 +1,110 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.hudi.common.testutils;

+

+import org.apache.hudi.avro.MercifulJsonConverter;

+import org.apache.hudi.common.util.FileIOUtils;

+import org.apache.hudi.common.util.Option;

+

+import com.fasterxml.jackson.databind.ObjectMapper;

+import org.apache.avro.Schema;

+import org.apache.avro.generic.IndexedRecord;

+

+import java.io.ByteArrayInputStream;

+import java.io.ByteArrayOutputStream;

+import java.io.IOException;

+import java.util.Map;

+import java.util.zip.Deflater;

+import java.util.zip.DeflaterOutputStream;

+import java.util.zip.InflaterInputStream;

+

+/**

+ * Generic class for specific payload implementations to inherit from.

+ */

+public abstract class GenericTestPayload {

Review Comment:

unsure about the necessity of creating a parent payload in tests. we need

just different types of payload to use directly, be it json, avro, spark, etc.

we should make test utils/models more straightforward

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on a diff in pull request #8631: [HUDI-6170] Use correct zone id while calculating earliestTimeToRetain

danny0405 commented on code in PR #8631:

URL: https://github.com/apache/hudi/pull/8631#discussion_r1188063597

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/table/action/clean/CleanPlanner.java:

##

@@ -510,7 +510,7 @@ public Option getEarliestCommitToRetain() {

}

} else if (config.getCleanerPolicy() ==

HoodieCleaningPolicy.KEEP_LATEST_BY_HOURS) {

Instant instant = Instant.now();

- ZonedDateTime currentDateTime = ZonedDateTime.ofInstant(instant,

ZoneId.systemDefault());

+ ZonedDateTime currentDateTime = ZonedDateTime.ofInstant(instant,

HoodieInstantTimeGenerator.getTimelineTimeZone().getZoneId());

String earliestTimeToRetain =

HoodieActiveTimeline.formatDate(Date.from(currentDateTime.minusHours(hoursRetained).toInstant()));

Review Comment:

Yeah, that means the code is prone to making misusages, let's fix all those

test falures by initialzing the zoneId manually.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on a diff in pull request #8631: [HUDI-6170] Use correct zone id while calculating earliestTimeToRetain

danny0405 commented on code in PR #8631:

URL: https://github.com/apache/hudi/pull/8631#discussion_r1188063597

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/table/action/clean/CleanPlanner.java:

##

@@ -510,7 +510,7 @@ public Option getEarliestCommitToRetain() {

}

} else if (config.getCleanerPolicy() ==

HoodieCleaningPolicy.KEEP_LATEST_BY_HOURS) {

Instant instant = Instant.now();

- ZonedDateTime currentDateTime = ZonedDateTime.ofInstant(instant,

ZoneId.systemDefault());

+ ZonedDateTime currentDateTime = ZonedDateTime.ofInstant(instant,

HoodieInstantTimeGenerator.getTimelineTimeZone().getZoneId());

String earliestTimeToRetain =

HoodieActiveTimeline.formatDate(Date.from(currentDateTime.minusHours(hoursRetained).toInstant()));

Review Comment:

Yeah, that means the code is prune to making misusages, let's fix all those

test falures by initialzing the zoneId manually.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] xushiyan commented on a diff in pull request #6176: [HUDI-4445] S3 Incremental source improvements

xushiyan commented on code in PR #6176:

URL: https://github.com/apache/hudi/pull/6176#discussion_r1188063109

##

hudi-common/src/test/java/org/apache/hudi/common/testutils/GenericTestPayload.java:

##

@@ -0,0 +1,110 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.hudi.common.testutils;

+

+import org.apache.hudi.avro.MercifulJsonConverter;

+import org.apache.hudi.common.util.FileIOUtils;

+import org.apache.hudi.common.util.Option;

+

+import com.fasterxml.jackson.databind.ObjectMapper;

+import org.apache.avro.Schema;

+import org.apache.avro.generic.IndexedRecord;

+

+import java.io.ByteArrayInputStream;

+import java.io.ByteArrayOutputStream;

+import java.io.IOException;

+import java.util.Map;

+import java.util.zip.Deflater;

+import java.util.zip.DeflaterOutputStream;

+import java.util.zip.InflaterInputStream;

+

+/**

+ * Generic class for specific payload implementations to inherit from.

+ */

+public abstract class GenericTestPayload {

+

+ protected static final transient ObjectMapper OBJECT_MAPPER = new

ObjectMapper();

+ protected String partitionPath;

+ protected String rowKey;

+ protected byte[] jsonDataCompressed;

+ protected int dataSize;

+ protected boolean isDeleted;

+ protected Comparable orderingVal;

+

+ public GenericTestPayload(Option jsonData, String rowKey, String

partitionPath, String schemaStr,

+Boolean isDeleted, Comparable orderingVal) throws

IOException {

+if (jsonData.isPresent()) {

+ this.jsonDataCompressed = compressData(jsonData.get());

+ this.dataSize = jsonData.get().length();

+}

+this.rowKey = rowKey;

+this.partitionPath = partitionPath;

+this.isDeleted = isDeleted;

+this.orderingVal = orderingVal;

+ }

+

+ public GenericTestPayload(String jsonData, String rowKey, String

partitionPath, String schemaStr) throws IOException {

+this(Option.of(jsonData), rowKey, partitionPath, schemaStr, false, 0L);

+ }

+

+ public GenericTestPayload(String jsonData) throws IOException {

+this.jsonDataCompressed = compressData(jsonData);

+this.dataSize = jsonData.length();

+Map jsonRecordMap = OBJECT_MAPPER.readValue(jsonData,

Map.class);

+this.rowKey = jsonRecordMap.get("_row_key").toString();

+this.partitionPath =

jsonRecordMap.get("time").toString().split("T")[0].replace("-", "/");

Review Comment:

i recall this logic has been refactored in the current `RawTripTestPayload`

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org