[GitHub] [hadoop] hfutatzhanghb commented on pull request #3976: HDFS-16452. msync RPC should send to active namenode directly

hfutatzhanghb commented on pull request #3976: URL: https://github.com/apache/hadoop/pull/3976#issuecomment-1050618080 Hi @xkrogen, thanks a lot. I agree with you. I will figure out a better solution as soon as I can, and we can discuss it later. Many thanks. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] virajjasani commented on pull request #4028: HDFS-16481. Provide support to set Http and Rpc ports in MiniJournalCluster

virajjasani commented on pull request #4028: URL: https://github.com/apache/hadoop/pull/4028#issuecomment-1050610723 @ferhui @goiri could you please review this PR?

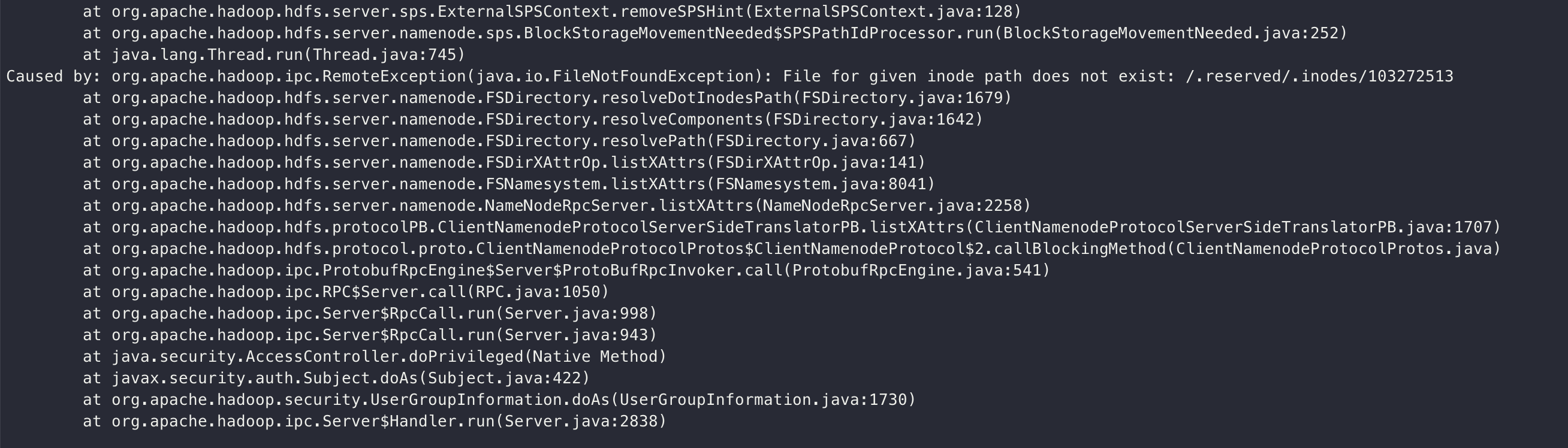

[GitHub] [hadoop] liubingxing opened a new pull request #4032: HDFS-16484. [SPS]: Fix an infinite loop bug in SPSPathIdProcessor thread

liubingxing opened a new pull request #4032: URL: https://github.com/apache/hadoop/pull/4032 JIRA: [HDFS-16484](https://issues.apache.org/jira/browse/HDFS-16484) In the SPSPathIdProcessor thread, if it gets an inodeId whose path does not exist, the thread enters an infinite loop and can no longer work normally.
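The report describes a loop that never gets past an inode ID whose path no longer exists. The Java sketch below is a simplified, self-contained model of that failure mode, not the actual `SPSPathIdProcessor` code or the fix in PR #4032: the class `PathIdLoopSketch`, its methods, and the queue-of-IDs design are all hypothetical. It contrasts a loop that peeks at the head of a queue and `continue`s without removing an unresolvable ID with one that always removes the head and skips stale IDs.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical model of a path-ID processing loop (NOT the real Hadoop code).
 * It only illustrates how an unresolvable inode ID can stall a loop that
 * looks at the head of a queue without ever removing it.
 */
public class PathIdLoopSketch {

    /** Buggy shape: peek() without poll() when the path is missing. */
    static int processBuggy(Deque<Long> queue, Map<Long, String> inodeToPath, int maxIterations) {
        int processed = 0;
        for (int i = 0; i < maxIterations && !queue.isEmpty(); i++) {
            long inodeId = queue.peek();
            String path = inodeToPath.get(inodeId);
            if (path == null) {
                // Bug: the same ID stays at the head of the queue, so every
                // later iteration sees it again. Only maxIterations stops us.
                continue;
            }
            queue.poll();
            processed++;
        }
        return processed;
    }

    /** Fixed shape: always remove the head, and skip IDs whose path is gone. */
    static int processFixed(Deque<Long> queue, Map<Long, String> inodeToPath) {
        int processed = 0;
        while (!queue.isEmpty()) {
            long inodeId = queue.poll();
            if (inodeToPath.get(inodeId) == null) {
                continue; // path was deleted: drop the stale ID and move on
            }
            processed++;
        }
        return processed;
    }

    public static void main(String[] args) {
        Map<Long, String> paths = Map.of(1L, "/a", 3L, "/c"); // ID 2 has no path
        System.out.println(processBuggy(new ArrayDeque<>(List.of(1L, 2L, 3L)), paths, 1000));
        System.out.println(processFixed(new ArrayDeque<>(List.of(1L, 2L, 3L)), paths));
    }
}
```

In the buggy shape, the artificial `maxIterations` bound is the only thing that ends the loop (it returns 1 and leaves IDs 2 and 3 queued); a worker thread without such a bound would spin indefinitely, which is consistent with the symptom reported in HDFS-16484.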

[GitHub] [hadoop] tomscut opened a new pull request #4031: HDFS-16371. Exclude slow disks when choosing volume (#3753)

tomscut opened a new pull request #4031: URL: https://github.com/apache/hadoop/pull/4031 Cherry-pick [#3753](https://github.com/apache/hadoop/pull/3753) to branch-3.3.

[jira] [Work logged] (HADOOP-15566) Support OpenTelemetry

[ https://issues.apache.org/jira/browse/HADOOP-15566?focusedWorklogId=732858&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-732858 ] ASF GitHub Bot logged work on HADOOP-15566: --- Author: ASF GitHub Bot Created on: 25/Feb/22 04:56 Start Date: 25/Feb/22 04:56 Worklog Time Spent: 10m Work Description: kiran-maturi commented on pull request #3445: URL: https://github.com/apache/hadoop/pull/3445#issuecomment-1050521577 @ndimiduk @cijothomas @iwasakims can you please help with the review? Issue Time Tracking --- Worklog Id: (was: 732858) Time Spent: 9.5h (was: 9h 20m) > Support OpenTelemetry > - > > Key: HADOOP-15566 > URL: https://issues.apache.org/jira/browse/HADOOP-15566 > Project: Hadoop Common > Issue Type: New Feature > Components: metrics, tracing >Affects Versions: 3.1.0 >Reporter: Todd Lipcon >Assignee: Siyao Meng >Priority: Major > Labels: pull-request-available, security > Attachments: HADOOP-15566-WIP.1.patch, HADOOP-15566.000.WIP.patch, > OpenTelemetry Support Scope Doc v2.pdf, OpenTracing Support Scope Doc.pdf, > Screen Shot 2018-06-29 at 11.59.16 AM.png, ss-trace-s3a.png > > Time Spent: 9.5h > Remaining Estimate: 0h > > The HTrace incubator project has voted to retire itself and won't be making > further releases. The Hadoop project currently has various hooks with HTrace. > It seems in some cases (e.g. HDFS-13702) these hooks have had measurable > performance overhead. Given these two factors, I think we should consider > removing the HTrace integration. If there is someone willing to do the work, > replacing it with OpenTracing might be a better choice since there is an > active community. 
-- This message was sent by Atlassian Jira (v8.20.1#820001) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] kiran-maturi commented on pull request #3445: HADOOP-15566 Opentelemetry changes using java agent

kiran-maturi commented on pull request #3445: URL: https://github.com/apache/hadoop/pull/3445#issuecomment-1050521577 @ndimiduk @cijothomas @iwasakims can you please help with the review?

[GitHub] [hadoop] hadoop-yetus commented on pull request #4028: HDFS-16481. Provide support to set Http and Rpc ports in MiniJournalCluster

hadoop-yetus commented on pull request #4028:

URL: https://github.com/apache/hadoop/pull/4028#issuecomment-1050482214

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 51s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 2s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 41m 47s | | trunk passed |
| +1 :green_heart: | compile | 2m 6s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 1m 55s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 16s | | trunk passed |
| +1 :green_heart: | mvnsite | 2m 1s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 27s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 1m 54s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 4m 16s | | trunk passed |
| +1 :green_heart: | shadedclient | 32m 39s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 52s | | the patch passed |
| +1 :green_heart: | compile | 1m 59s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 1m 59s | | the patch passed |
| +1 :green_heart: | compile | 1m 40s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 1m 40s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 3s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 35s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 2s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 1m 30s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 3m 35s | | the patch passed |
| +1 :green_heart: | shadedclient | 25m 38s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 356m 37s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 39s | | The patch does not generate ASF License warnings. |
| | | 485m 9s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/3/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4028 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux a8a1c7074c87 4.15.0-163-generic #171-Ubuntu SMP Fri Nov 5 11:55:11 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 5833956b6f9d4c3116897d027efe6d4cabdbd1c9 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/3/testReport/ |
| Max. process+thread count | 2083 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/3/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] tomscut commented on pull request #3828: HDFS-16397. Reconfig slow disk parameters for datanode

tomscut commented on pull request #3828: URL: https://github.com/apache/hadoop/pull/3828#issuecomment-1050392857 Thanks @tasanuma for reviewing and merging it.

[jira] [Commented] (HADOOP-16254) Add proxy address in IPC connection

[ https://issues.apache.org/jira/browse/HADOOP-16254?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17497809#comment-17497809 ] Owen O'Malley commented on HADOOP-16254: [~ayushtkn], yeah, I hadn't found that jira, so thank you. Of course, using the caller context will work, the only major downside being that if the user sets a caller context that is close to the limit, it could cause bytes to get dropped. We might want to pick a shorter lead string (4 bytes?) to minimize that chance. (Or bump up the default limit by 50 bytes?) > Add proxy address in IPC connection > --- > > Key: HADOOP-16254 > URL: https://issues.apache.org/jira/browse/HADOOP-16254 > Project: Hadoop Common > Issue Type: New Feature > Components: ipc >Reporter: Xiaoqiao He >Assignee: Xiaoqiao He >Priority: Blocker > Attachments: HADOOP-16254.001.patch, HADOOP-16254.002.patch, > HADOOP-16254.004.patch, HADOOP-16254.005.patch, HADOOP-16254.006.patch, > HADOOP-16254.007.patch > > > In order to support data locality of RBF, we need to add a new field for the > client hostname in the RPC headers of Router protocol calls. > clientHostname represents the hostname of the client and is forwarded by the Router to > the NameNode to support data locality. See more in [RBF Data Locality > Design|https://issues.apache.org/jira/secure/attachment/12965092/RBF%20Data%20Locality%20Design.pdf] > in HDFS-13248 and [maillist > vote|http://mail-archives.apache.org/mod_mbox/hadoop-common-dev/201904.mbox/%3CCAF3Ajax7hGxvowg4K_HVTZeDqC5H=3bfb7mv5sz5mgvadhv...@mail.gmail.com%3E].
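Owen's concern is a byte budget: whatever the Router adds to the caller context consumes the same fixed limit as the user's own context. The Java sketch below makes that arithmetic concrete. It is an illustration only: the 128-byte limit, the two lead strings, and the class `CallerContextBudget` are assumptions chosen for the example, not Hadoop's actual `CallerContext` implementation, and the lead string is prepended here purely for simplicity.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/**
 * Hypothetical model of a caller-context byte budget (not Hadoop code).
 * The lead strings and the limit are illustrative assumptions.
 */
public class CallerContextBudget {

    /** Prepends the lead string, then keeps at most maxBytes bytes. */
    static byte[] combineAndTruncate(String lead, String userContext, int maxBytes) {
        byte[] combined = (lead + userContext).getBytes(StandardCharsets.UTF_8);
        return combined.length <= maxBytes ? combined : Arrays.copyOf(combined, maxBytes);
    }

    /** How many bytes of the combined context would be cut off by the limit. */
    static int droppedBytes(String lead, String userContext, int maxBytes) {
        int total = (lead + userContext).getBytes(StandardCharsets.UTF_8).length;
        return Math.max(0, total - maxBytes);
    }

    public static void main(String[] args) {
        int limit = 128;                          // assumed limit for this example
        String user = "x".repeat(120);            // a user context close to the limit
        String longLead = "clientIp:10.0.0.1,";   // hypothetical 18-byte lead string
        String shortLead = "cip:";                // hypothetical 4-byte lead string

        System.out.println(droppedBytes(longLead, user, limit));      // tail of user context lost
        System.out.println(droppedBytes(shortLead, user, limit));     // fits within the limit
        System.out.println(droppedBytes(longLead, user, limit + 50)); // fits with a larger limit
        System.out.println(combineAndTruncate(longLead, user, limit).length);
    }
}
```

With these assumed values, the 18-byte lead string pushes a 120-byte user context 10 bytes over the limit, while either a 4-byte lead string or a limit raised by 50 bytes avoids any loss, which mirrors the two options raised in the comment. The sketch uses ASCII only; truncating raw UTF-8 bytes could otherwise split a multi-byte character.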

[jira] [Resolved] (HADOOP-18139) Allow configuration of zookeeper server principal

[ https://issues.apache.org/jira/browse/HADOOP-18139?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Owen O'Malley resolved HADOOP-18139. Fix Version/s: 3.4.0 3.3.3 Resolution: Fixed Thanks for the review, Íñigo! > Allow configuration of zookeeper server principal > - > > Key: HADOOP-18139 > URL: https://issues.apache.org/jira/browse/HADOOP-18139 > Project: Hadoop Common > Issue Type: Improvement > Components: auth >Reporter: Owen O'Malley >Assignee: Owen O'Malley >Priority: Major > Labels: pull-request-available > Fix For: 3.4.0, 3.3.3 > > Time Spent: 20m > Remaining Estimate: 0h > > Allow configuration of zookeeper server principal. > This would allow the Router to specify the principal.

[jira] [Work logged] (HADOOP-18139) Allow configuration of zookeeper server principal

[ https://issues.apache.org/jira/browse/HADOOP-18139?focusedWorklogId=732759&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-732759 ] ASF GitHub Bot logged work on HADOOP-18139: --- Author: ASF GitHub Bot Created on: 24/Feb/22 23:02 Start Date: 24/Feb/22 23:02 Worklog Time Spent: 10m Work Description: omalley closed pull request #4024: URL: https://github.com/apache/hadoop/pull/4024 Issue Time Tracking --- Worklog Id: (was: 732759) Time Spent: 20m (was: 10m) > Allow configuration of zookeeper server principal > - > > Key: HADOOP-18139 > URL: https://issues.apache.org/jira/browse/HADOOP-18139 > Project: Hadoop Common > Issue Type: Improvement > Components: auth >Reporter: Owen O'Malley >Assignee: Owen O'Malley >Priority: Major > Labels: pull-request-available > Time Spent: 20m > Remaining Estimate: 0h > > Allow configuration of zookeeper server principal. > This would allow the Router to specify the principal.

[GitHub] [hadoop] omalley closed pull request #4024: HADOOP-18139. Allow configuration of zookeeper server principal.

omalley closed pull request #4024: URL: https://github.com/apache/hadoop/pull/4024

[jira] [Commented] (HADOOP-8521) Port StreamInputFormat to new Map Reduce API

[ https://issues.apache.org/jira/browse/HADOOP-8521?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17497774#comment-17497774 ] Giuseppe Valente commented on HADOOP-8521: -- I'm getting this stack trace when trying to use an input format based on the new API. Is it supposed to work? Exception in thread "main" java.lang.RuntimeException: class org.apache.hadoop.hbase.mapreduce.HFileInputFormat not org.apache.hadoop.mapred.InputFormat at org.apache.hadoop.conf.Configuration.setClass(Configuration.java:2712) at org.apache.hadoop.mapred.JobConf.setInputFormat(JobConf.java:700) at org.apache.hadoop.streaming.StreamJob.setJobConf(StreamJob.java:798) at org.apache.hadoop.streaming.StreamJob.run(StreamJob.java:126) at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76) at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90) at org.apache.hadoop.streaming.HadoopStreaming.main(HadoopStreaming.java:50) at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.base/java.lang.reflect.Method.invoke(Method.java:564) at org.apache.hadoop.util.RunJar.run(RunJar.java:318) at org.apache.hadoop.util.RunJar.main(RunJar.java:232) > Port StreamInputFormat to new Map Reduce API > > > Key: HADOOP-8521 > URL: https://issues.apache.org/jira/browse/HADOOP-8521 > Project: Hadoop Common > Issue Type: Improvement > Components: streaming >Affects Versions: 0.23.0 >Reporter: madhukara phatak >Assignee: madhukara phatak >Priority: Major > Fix For: 3.0.0-alpha1 > > Attachments: HADOOP-8521-1.patch, HADOOP-8521-2.patch, > HADOOP-8521-3.patch, HADOOP-8521.patch > > > As of now, Hadoop Streaming uses the old Hadoop M/R API. This JIRA ports it to > the new M/R API.
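The stack trace is consistent with a plain type check: `JobConf.setInputFormat` passes the class to `Configuration.setClass`, and the resulting message ("class ... not org.apache.hadoop.mapred.InputFormat") indicates the class was rejected for not being assignable to the old-API `org.apache.hadoop.mapred.InputFormat` interface. `HFileInputFormat` lives in an `hbase.mapreduce` package, which suggests it targets the new `org.apache.hadoop.mapreduce` API instead. The self-contained Java sketch below reproduces that kind of check with stand-in types; `OldInputFormat`, `NewInputFormat`, `LegacyFormat`, `ModernFormat`, and this `setClass` are hypothetical placeholders, not the real Hadoop classes or method.

```java
/**
 * Self-contained model of the type check behind the exception above.
 * All nested types are stand-ins, not real Hadoop classes:
 *   OldInputFormat ~ org.apache.hadoop.mapred.InputFormat (old API, an interface)
 *   NewInputFormat ~ org.apache.hadoop.mapreduce.InputFormat (new API, an abstract class)
 */
public class InputFormatCheckSketch {
    interface OldInputFormat {}
    abstract static class NewInputFormat {}

    static class LegacyFormat implements OldInputFormat {}
    static class ModernFormat extends NewInputFormat {} // plays the HFileInputFormat role

    /** Mimics a setClass(name, theClass, xface)-style check; returns the stored class name. */
    static String setClass(Class<?> theClass, Class<?> xface) {
        if (!xface.isAssignableFrom(theClass)) {
            // Class.toString() yields "class X", matching the shape of the reported message.
            throw new RuntimeException(theClass + " not " + xface.getName());
        }
        return theClass.getName();
    }

    public static void main(String[] args) {
        System.out.println(setClass(LegacyFormat.class, OldInputFormat.class)); // accepted
        try {
            setClass(ModernFormat.class, OldInputFormat.class);                 // rejected
        } catch (RuntimeException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Since `StreamJob.setJobConf` configures the input format through the old `JobConf` API in this code path, an input format used there apparently has to implement the old `org.apache.hadoop.mapred.InputFormat` interface; a new-API class fails the check regardless of what it does at runtime.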

[jira] [Updated] (HADOOP-18139) Allow configuration of zookeeper server principal

[ https://issues.apache.org/jira/browse/HADOOP-18139?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HADOOP-18139: Labels: pull-request-available (was: ) > Allow configuration of zookeeper server principal > - > > Key: HADOOP-18139 > URL: https://issues.apache.org/jira/browse/HADOOP-18139 > Project: Hadoop Common > Issue Type: Improvement > Components: auth >Reporter: Owen O'Malley >Assignee: Owen O'Malley >Priority: Major > Labels: pull-request-available > Time Spent: 10m > Remaining Estimate: 0h > > Allow configuration of zookeeper server principal. > This would allow the Router to specify the principal.

[jira] [Work logged] (HADOOP-18139) Allow configuration of zookeeper server principal

[ https://issues.apache.org/jira/browse/HADOOP-18139?focusedWorklogId=732734&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-732734 ]

ASF GitHub Bot logged work on HADOOP-18139:

---

Author: ASF GitHub Bot

Created on: 24/Feb/22 22:14

Start Date: 24/Feb/22 22:14

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #4024:

URL: https://github.com/apache/hadoop/pull/4024#issuecomment-1050315985

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 32s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
| | | | | _ branch-3.3 Compile Tests _ |
| +1 :green_heart: | mvninstall | 40m 37s | | branch-3.3 passed |
| +1 :green_heart: | compile | 24m 1s | | branch-3.3 passed |
| +1 :green_heart: | checkstyle | 1m 4s | | branch-3.3 passed |
| +1 :green_heart: | mvnsite | 2m 9s | | branch-3.3 passed |
| +1 :green_heart: | javadoc | 2m 2s | | branch-3.3 passed |
| +1 :green_heart: | spotbugs | 3m 7s | | branch-3.3 passed |
| +1 :green_heart: | shadedclient | 31m 36s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 9s | | the patch passed |
| +1 :green_heart: | compile | 23m 21s | | the patch passed |
| +1 :green_heart: | javac | 23m 21s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 58s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 52s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 51s | | the patch passed |
| +1 :green_heart: | spotbugs | 3m 4s | | the patch passed |
| +1 :green_heart: | shadedclient | 30m 14s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 17m 10s | | hadoop-common in the patch passed. |
| +1 :green_heart: | asflicense | 0m 48s | | The patch does not generate ASF License warnings. |
| | | 184m 55s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4024/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4024 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 79363f83193d 4.15.0-153-generic #160-Ubuntu SMP Thu Jul 29 06:54:29 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | branch-3.3 / fc4bf4a7d00b7b719cdc876a7932d824f5e28a7f |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~18.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4024/4/testReport/ |
| Max. process+thread count | 2575 (vs. ulimit of 5500) |
| modules | C: hadoop-common-project/hadoop-common U: hadoop-common-project/hadoop-common |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4024/4/console |
| versions | git=2.17.1 maven=3.6.0 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


Issue Time Tracking

---

Worklog Id: (was: 732734)

Remaining Estimate: 0h

Time Spent: 10m

> Allow configuration of zookeeper server principal

> -

>

> Key: HADOOP-18139

> URL: https://issues.apache.org/jira/browse/HADOOP-18139

> Project: Hadoop Common

> Issue Type: Improvement

>

[GitHub] [hadoop] hadoop-yetus commented on pull request #4024: HADOOP-18139. Allow configuration of zookeeper server principal.

hadoop-yetus commented on pull request #4024:

URL: https://github.com/apache/hadoop/pull/4024#issuecomment-1050315985


[jira] [Work logged] (HADOOP-18143) toString method of RpcCall throws IllegalArgumentException

[ https://issues.apache.org/jira/browse/HADOOP-18143?focusedWorklogId=732720&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-732720 ]

ASF GitHub Bot logged work on HADOOP-18143:

---

Author: ASF GitHub Bot

Created on: 24/Feb/22 21:59

Start Date: 24/Feb/22 21:59

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #4030:

URL: https://github.com/apache/hadoop/pull/4030#issuecomment-1050305846

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 47s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 33m 43s | | trunk passed |
| +1 :green_heart: | compile | 23m 53s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 20m 46s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 8s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 40s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 14s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 1m 42s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 2m 28s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 39s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 0s | | the patch passed |
| +1 :green_heart: | compile | 23m 1s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 23m 1s | | the patch passed |
| +1 :green_heart: | compile | 20m 37s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 20m 37s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 4s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 39s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 9s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 1m 40s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 2m 43s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 44s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 18m 6s | | hadoop-common in the patch passed. |
| +1 :green_heart: | asflicense | 0m 55s | | The patch does not generate ASF License warnings. |
| | | 204m 43s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4030/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4030 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux ecc3a7dae72f 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / efdfab807f4dffd48dfd1c1e4d0af5ffbdc64136 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4030/1/testReport/ |
| Max. process+thread count | 1251 (vs. ulimit of 5500) |
| modules | C: hadoop-common-project/hadoop-common U: hadoop-common-project/hadoop-common |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4030/1/console |

| v

[GitHub] [hadoop] hadoop-yetus commented on pull request #4030: HADOOP-18143. toString method of RpcCall throws IllegalArgumentException

hadoop-yetus commented on pull request #4030:

URL: https://github.com/apache/hadoop/pull/4030#issuecomment-1050305846

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 47s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 33m 43s | | trunk passed |
| +1 :green_heart: | compile | 23m 53s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 20m 46s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 8s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 40s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 14s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 1m 42s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 2m 28s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 39s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 0s | | the patch passed |
| +1 :green_heart: | compile | 23m 1s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 23m 1s | | the patch passed |
| +1 :green_heart: | compile | 20m 37s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 20m 37s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 4s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 39s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 9s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 1m 40s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 2m 43s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 44s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 18m 6s | | hadoop-common in the patch passed. |
| +1 :green_heart: | asflicense | 0m 55s | | The patch does not generate ASF License warnings. |
| | | 204m 43s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4030/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/4030 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux ecc3a7dae72f 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / efdfab807f4dffd48dfd1c1e4d0af5ffbdc64136 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4030/1/testReport/ |
| Max. process+thread count | 1251 (vs. ulimit of 5500) |
| modules | C: hadoop-common-project/hadoop-common U: hadoop-common-project/hadoop-common |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4030/1/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] hadoop-yetus commented on pull request #4028: HDFS-16481. Provide support to set Http and Rpc ports in MiniJournalCluster

hadoop-yetus commented on pull request #4028:

URL: https://github.com/apache/hadoop/pull/4028#issuecomment-1050170528

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 50s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| -1 :x: | mvninstall | 20m 36s | [/branch-mvninstall-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/branch-mvninstall-root.txt) | root in trunk failed. |
| -1 :x: | compile | 0m 27s | [/branch-compile-hadoop-hdfs-project_hadoop-hdfs-jdkUbuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/branch-compile-hadoop-hdfs-project_hadoop-hdfs-jdkUbuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04.txt) | hadoop-hdfs in trunk failed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04. |
| -1 :x: | compile | 0m 27s | [/branch-compile-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/branch-compile-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07.txt) | hadoop-hdfs in trunk failed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07. |
| -0 :warning: | checkstyle | 0m 26s | [/buildtool-branch-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/buildtool-branch-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt) | The patch fails to run checkstyle in hadoop-hdfs |
| -1 :x: | mvnsite | 0m 28s | [/branch-mvnsite-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/branch-mvnsite-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in trunk failed. |
| -1 :x: | javadoc | 0m 28s | [/branch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkUbuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/branch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkUbuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04.txt) | hadoop-hdfs in trunk failed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04. |
| -1 :x: | javadoc | 0m 27s | [/branch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/branch-javadoc-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07.txt) | hadoop-hdfs in trunk failed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07. |
| -1 :x: | spotbugs | 0m 28s | [/branch-spotbugs-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/branch-spotbugs-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in trunk failed. |
| +1 :green_heart: | shadedclient | 3m 17s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| -1 :x: | mvninstall | 0m 22s | [/patch-mvninstall-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/patch-mvninstall-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch failed. |
| -1 :x: | compile | 0m 22s | [/patch-compile-hadoop-hdfs-project_hadoop-hdfs-jdkUbuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/patch-compile-hadoop-hdfs-project_hadoop-hdfs-jdkUbuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04.txt) | hadoop-hdfs in the patch failed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04. |
| -1 :x: | javac | 0m 22s | [/patch-compile-hadoop-hdfs-project_hadoop-hdfs-jdkUbuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/patch-compile-hadoop-hdfs-project_hadoop-hdfs-jdkUbuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04.txt) | hadoop-hdfs in the patch failed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04. |
| -1 :x: | compile | 0m 23s | [/patch-compile-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-4028/2/artifact/out/patch-compile-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-1.8.0_31

[jira] [Updated] (HADOOP-18139) Allow configuration of zookeeper server principal

[ https://issues.apache.org/jira/browse/HADOOP-18139?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Íñigo Goiri updated HADOOP-18139: - Description: Allow configuration of zookeeper server principal. This would allow the Router to specify the principal. > Allow configuration of zookeeper server principal > - > > Key: HADOOP-18139 > URL: https://issues.apache.org/jira/browse/HADOOP-18139 > Project: Hadoop Common > Issue Type: Improvement > Components: auth >Reporter: Owen O'Malley >Assignee: Owen O'Malley >Priority: Major > > Allow configuration of zookeeper server principal. > This would allow the Router to specify the principal.

[jira] [Updated] (HADOOP-18139) Allow configuration of zookeeper server principal

[ https://issues.apache.org/jira/browse/HADOOP-18139?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Íñigo Goiri updated HADOOP-18139: - Summary: Allow configuration of zookeeper server principal (was: RBF: Allow configuration of zookeeper server principal in router) > Allow configuration of zookeeper server principal > - > > Key: HADOOP-18139 > URL: https://issues.apache.org/jira/browse/HADOOP-18139 > Project: Hadoop Common > Issue Type: Improvement > Components: auth >Reporter: Owen O'Malley >Assignee: Owen O'Malley >Priority: Major

[jira] [Commented] (HADOOP-18142) Increase precommit job timeout from 24 hr to 30 hr

[ https://issues.apache.org/jira/browse/HADOOP-18142?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17497613#comment-17497613 ] Viraj Jasani commented on HADOOP-18142: --- Thanks [~kgyrtkirk] for the nice suggestions! If you would like to take up this work, please feel free to go ahead and create a PR. > Increase precommit job timeout from 24 hr to 30 hr > -- > > Key: HADOOP-18142 > URL: https://issues.apache.org/jira/browse/HADOOP-18142 > Project: Hadoop Common > Issue Type: Task >Reporter: Viraj Jasani >Assignee: Viraj Jasani >Priority: Major > > As per some recent precommit build results, full build QA is not getting > completed in 24 hr (recent example > [here|https://github.com/apache/hadoop/pull/4000] where more than 5 builds > timed out after 24 hr). We should increase it to 30 hr.

[jira] [Work logged] (HADOOP-18131) Upgrade maven enforcer plugin and relevant dependencies

[ https://issues.apache.org/jira/browse/HADOOP-18131?focusedWorklogId=732556&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-732556 ]

ASF GitHub Bot logged work on HADOOP-18131:

---

Author: ASF GitHub Bot

Created on: 24/Feb/22 18:11

Start Date: 24/Feb/22 18:11

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #4000:

URL: https://github.com/apache/hadoop/pull/4000#issuecomment-1050125099

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 53s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +0 :ok: | shelldocs | 0m 1s | | Shelldocs was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
| | | | | _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 56s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 26m 8s | | trunk passed |
| +1 :green_heart: | compile | 27m 0s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 22m 23s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 3m 56s | | trunk passed |
| +1 :green_heart: | mvnsite | 28m 13s | | trunk passed |
| +1 :green_heart: | javadoc | 9m 12s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 9m 43s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +0 :ok: | spotbugs | 0m 25s | | branch/hadoop-minicluster no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 26s | | branch/hadoop-tools/hadoop-tools-dist no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 27s | | branch/hadoop-client-modules/hadoop-client-api no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 26s | | branch/hadoop-client-modules/hadoop-client-runtime no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 27s | | branch/hadoop-client-modules/hadoop-client-check-invariants no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 25s | | branch/hadoop-client-modules/hadoop-client-minicluster no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 28s | | branch/hadoop-client-modules/hadoop-client-check-test-invariants no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 27s | | branch/hadoop-client-modules/hadoop-client-integration-tests no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 26s | | branch/hadoop-cloud-storage-project/hadoop-cloud-storage no spotbugs output file (spotbugsXml.xml) |
| +1 :green_heart: | shadedclient | 63m 58s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 40s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 79m 31s | | the patch passed |
| +1 :green_heart: | compile | 28m 49s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 28m 49s | | the patch passed |
| +1 :green_heart: | compile | 25m 42s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 25m 42s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 4m 13s | | the patch passed |
| +1 :green_heart: | mvnsite | 25m 16s | | the patch passed |
| +1 :green_heart: | shellcheck | 0m 0s | | No new issues. |
| +1 :green_heart: | xml | 2m 0s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | javadoc | 10m 7s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 9m 42s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +0 :ok: | spotbugs | 0m 20s | | hadoop-minicluster has no data from spotbugs |
| +0 :ok: | spotbugs | 0m 28s | | hadoop-tools/hadoop-tools-dis

[GitHub] [hadoop] hadoop-yetus commented on pull request #4000: HADOOP-18131. Upgrade maven enforcer plugin and relevant dependencies

hadoop-yetus commented on pull request #4000:

URL: https://github.com/apache/hadoop/pull/4000#issuecomment-1050125099

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 53s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +0 :ok: | shelldocs | 0m 1s | | Shelldocs was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
| | | | | _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 56s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 26m 8s | | trunk passed |
| +1 :green_heart: | compile | 27m 0s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 22m 23s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 3m 56s | | trunk passed |
| +1 :green_heart: | mvnsite | 28m 13s | | trunk passed |
| +1 :green_heart: | javadoc | 9m 12s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 9m 43s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +0 :ok: | spotbugs | 0m 25s | | branch/hadoop-minicluster no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 26s | | branch/hadoop-tools/hadoop-tools-dist no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 27s | | branch/hadoop-client-modules/hadoop-client-api no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 26s | | branch/hadoop-client-modules/hadoop-client-runtime no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 27s | | branch/hadoop-client-modules/hadoop-client-check-invariants no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 25s | | branch/hadoop-client-modules/hadoop-client-minicluster no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 28s | | branch/hadoop-client-modules/hadoop-client-check-test-invariants no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 27s | | branch/hadoop-client-modules/hadoop-client-integration-tests no spotbugs output file (spotbugsXml.xml) |
| +0 :ok: | spotbugs | 0m 26s | | branch/hadoop-cloud-storage-project/hadoop-cloud-storage no spotbugs output file (spotbugsXml.xml) |
| +1 :green_heart: | shadedclient | 63m 58s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 40s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 79m 31s | | the patch passed |
| +1 :green_heart: | compile | 28m 49s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 28m 49s | | the patch passed |
| +1 :green_heart: | compile | 25m 42s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 25m 42s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 4m 13s | | the patch passed |
| +1 :green_heart: | mvnsite | 25m 16s | | the patch passed |
| +1 :green_heart: | shellcheck | 0m 0s | | No new issues. |
| +1 :green_heart: | xml | 2m 0s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | javadoc | 10m 7s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 9m 42s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +0 :ok: | spotbugs | 0m 20s | | hadoop-minicluster has no data from spotbugs |
| +0 :ok: | spotbugs | 0m 28s | | hadoop-tools/hadoop-tools-dist has no data from spotbugs |
| +0 :ok: | spotbugs | 0m 28s | | hadoop-client-modules/hadoop-client-api has no data from spotbugs |
| +0 :ok: | spotbugs | 0m 25s | | hadoop-client-modules/hadoop-client-runtime has no data from spotbugs |
| +0 :ok: | spotbugs | 0m 24s | | hadoop-client-modules/hadoop-client-check-invariants has no data from spotbugs |
| +0 :ok: | spotbugs | 0m 31s | |

[GitHub] [hadoop] xkrogen commented on pull request #3976: HDFS-16452. msync RPC should send to acitve namenode directly

xkrogen commented on pull request #3976: URL: https://github.com/apache/hadoop/pull/3976#issuecomment-1050121895 I agree with you that the current logic has RPCs that are theoretically unnecessary. I would be very open to some approach that allows `ObserverReadProxyProvider` to share information with its `failoverProxy` instance (either direction, from ORPP to `failoverProxy` or from `failoverProxy` to ORPP, could be useful) to eliminate unnecessary work. But we need to make sure it is layered cleanly, so that `failoverProxy` and ORPP both continue to respect their duties: `failoverProxy` is responsible for finding/contacting the Active NN (including for `msync`), and ORPP is responsible for finding/contacting Observer NNs.
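One way to picture the layering described above, as a hedged sketch only: every name here (`ActiveHint`, `FailoverLayer`, `ObserverLayer`, `demo`) is hypothetical and is not Hadoop's `ObserverReadProxyProvider` or failover proxy API, and the HA-state map stands in for real RPCs. The failover layer alone decides where `msync` goes; the observer layer only publishes what it happens to learn into a shared hint, which lets the failover layer skip probes without either layer taking over the other's duty.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical names throughout; an illustration of the layering idea only.
class ProxyLayeringSketch {

  // Small shared object through which the two layers exchange what they learn.
  static final class ActiveHint {
    private final AtomicReference<String> lastKnownActive = new AtomicReference<>();
    String get() { return lastKnownActive.get(); }
    void set(String nn) { lastKnownActive.set(nn); }
  }

  // Owns finding and contacting the Active NN, including msync.
  static final class FailoverLayer {
    private final List<String> namenodes;
    private final Map<String, String> haStates; // stands in for real HA-state RPCs
    private final ActiveHint hint;
    int probes = 0; // number of simulated RPCs spent looking for the Active NN

    FailoverLayer(List<String> namenodes, Map<String, String> haStates, ActiveHint hint) {
      this.namenodes = namenodes;
      this.haStates = haStates;
      this.hint = hint;
    }

    private boolean isActive(String nn) {
      probes++;
      return "ACTIVE".equals(haStates.get(nn));
    }

    // Tries the shared hint first, then falls back to scanning every NameNode,
    // so a stale hint costs one extra probe but never breaks correctness.
    String msync() {
      String hinted = hint.get();
      if (hinted != null && isActive(hinted)) {
        return hinted;
      }
      for (String nn : namenodes) {
        if (isActive(nn)) {
          hint.set(nn);
          return nn;
        }
      }
      throw new IllegalStateException("no Active NameNode found");
    }
  }

  // Owns finding Observer NNs; it only publishes states it happens to see.
  static final class ObserverLayer {
    private final ActiveHint hint;

    ObserverLayer(ActiveHint hint) {
      this.hint = hint;
    }

    void onStateObserved(String nn, String state) {
      if ("ACTIVE".equals(state)) {
        hint.set(nn);
      }
    }
  }

  // Builds a three-NameNode setup, optionally lets the observer layer share
  // what it saw, then performs one msync and returns the failover layer.
  static FailoverLayer demo(boolean shareHint) {
    List<String> nns = Arrays.asList("nn1", "nn2", "nn3");
    Map<String, String> states = new HashMap<>();
    states.put("nn1", "OBSERVER");
    states.put("nn2", "STANDBY");
    states.put("nn3", "ACTIVE");
    ActiveHint hint = new ActiveHint();
    if (shareHint) {
      new ObserverLayer(hint).onStateObserved("nn3", "ACTIVE");
    }
    FailoverLayer failover = new FailoverLayer(nns, states, hint);
    failover.msync();
    return failover;
  }

  public static void main(String[] args) {
    System.out.println("probes without hint: " + demo(false).probes);
    System.out.println("probes with hint: " + demo(true).probes);
  }
}
```

In this toy setup, `demo(false)` spends three probes locating the Active NN and `demo(true)` spends one, because the hint is tried first.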

[GitHub] [hadoop] omalley commented on pull request #4024: Hadoop 18139

omalley commented on pull request #4024: URL: https://github.com/apache/hadoop/pull/4024#issuecomment-1050121255 I looked into adding unit tests, but the test zookeeper instance that the unit tests use doesn't support security, so it can't test this patch, which only matters if the zookeeper service is running with Kerberos authentication.

[GitHub] [hadoop] hadoop-yetus commented on pull request #3828: HDFS-16397. Reconfig slow disk parameters for datanode

hadoop-yetus commented on pull request #3828:

URL: https://github.com/apache/hadoop/pull/3828#issuecomment-1050094467

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 49s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 32m 2s | | trunk passed |
| +1 :green_heart: | compile | 1m 30s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 1m 19s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 1m 2s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 31s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 2s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 1m 31s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 3m 14s | | trunk passed |
| +1 :green_heart: | shadedclient | 23m 52s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 27s | | the patch passed |
| +1 :green_heart: | compile | 1m 35s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 1m 35s | | the patch passed |
| +1 :green_heart: | compile | 1m 22s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 1m 22s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 0m 59s | [/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3828/8/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs-project/hadoop-hdfs: The patch generated 2 new + 136 unchanged - 2 fixed = 138 total (was 138) |
| +1 :green_heart: | mvnsite | 1m 30s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 1s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 1m 32s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 3m 44s | | the patch passed |
| +1 :green_heart: | shadedclient | 25m 43s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| -1 :x: | unit | 35m 43s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3828/8/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +0 :ok: | asflicense | 0m 28s | | ASF License check generated no output? |
| | | 140m 22s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestDFSStorageStateRecovery |
| | hadoop.hdfs.TestReadStripedFileWithMissingBlocks |
| | hadoop.hdfs.TestGetBlocks |
| | hadoop.hdfs.TestReadStripedFileWithDNFailure |
| | hadoop.hdfs.client.impl.TestBlockReaderLocal |
| | hadoop.hdfs.web.TestWebHDFSForHA |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3828/8/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3828 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux c937a0d6c41b 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 9acc63a94679eafb9ae8e57108890794d0293685 |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3828/8/testReport/ |
| Max. process+thr

[jira] [Commented] (HADOOP-18143) toString method of RpcCall throws IllegalArgumentException

[ https://issues.apache.org/jira/browse/HADOOP-18143?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17497588#comment-17497588 ]

András Győri commented on HADOOP-18143:

---

Created a PR for it, in which I wrap the affected functions with a mutex. As getRequestHeader behaves more like getOrCreateRequestHeader, I did not see the benefit of splitting the lock into separate read/write locks (in both methods we would end up using the write lock anyway). I am not sure whether this is acceptable in terms of performance.

cc [~vinayakumarb]
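A minimal sketch of the single-mutex approach described above, under stated assumptions: `LazyRequest`, `encode`, and the length-prefixed header layout are hypothetical stand-ins, not the real `ProtobufRpcEngine2.RpcProtobufRequest`, which decodes protobuf. The point it illustrates is that a lazily decoding getter mutates shared buffer state on its first call, so one lock has to cover both the "get" and the "create" path.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical request whose header is decoded lazily from a shared buffer.
class LazyRequest {
  private final ByteBuffer buffer; // layout: 4-byte length, then UTF-8 header bytes
  private String header;           // cached after the first decode

  LazyRequest(ByteBuffer buffer) {
    this.buffer = buffer;
  }

  // Behaves like "getOrCreateRequestHeader": the first caller decodes and
  // caches, later callers return the cache. One mutex guards both paths,
  // because the create path moves buffer.position().
  synchronized String getRequestHeader() {
    if (header == null) {
      int start = buffer.position();
      byte[] bytes = new byte[buffer.getInt()];
      buffer.get(bytes);
      header = new String(bytes, StandardCharsets.UTF_8);
      buffer.position(start); // leave the buffer as we found it
    }
    return header;
  }

  @Override
  public String toString() {
    // Logging goes through the same synchronized accessor.
    return "LazyRequest{header=" + getRequestHeader() + "}";
  }

  static ByteBuffer encode(String header) {
    byte[] b = header.getBytes(StandardCharsets.UTF_8);
    ByteBuffer buf = ByteBuffer.allocate(4 + b.length);
    buf.putInt(b.length).put(b);
    buf.flip(); // switch from writing to reading
    return buf;
  }

  public static void main(String[] args) throws Exception {
    LazyRequest req = new LazyRequest(encode("getBlockLocations"));
    ExecutorService pool = Executors.newFixedThreadPool(8);
    List<Future<String>> results = new ArrayList<>();
    Callable<String> task = req::toString;
    for (int i = 0; i < 100; i++) {
      results.add(pool.submit(task));
    }
    for (Future<String> f : results) {
      // Every caller sees the same, fully decoded header.
      if (!f.get().equals("LazyRequest{header=getBlockLocations}")) {
        throw new AssertionError(f.get());
      }
    }
    pool.shutdown();
    System.out.println("ok");
  }
}
```

Because the first call to `getRequestHeader` writes the cache, a read/write lock split would still take the write lock on that path, which matches the reasoning in the comment; once the header is cached, each call holds the lock only long enough to return the field.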

> toString method of RpcCall throws IllegalArgumentException
> --
>
> Key: HADOOP-18143
> URL: https://issues.apache.org/jira/browse/HADOOP-18143
> Project: Hadoop Common
> Issue Type: Bug
> Reporter: András Győri
> Assignee: András Győri
> Priority: Critical
> Labels: pull-request-available
> Time Spent: 10m
> Remaining Estimate: 0h
>
> We have observed breaking tests such as TestApplicationACLs. We have located the root cause, which is HADOOP-18082. It seems that there is a concurrency issue within ProtobufRpcEngine2. When using a debugger, the missing fields are there, hence the suspicion of a concurrency problem. The stack trace:
> {noformat}
> java.lang.IllegalArgumentException
> at java.nio.Buffer.position(Buffer.java:244)
> at org.apache.hadoop.ipc.RpcWritable$ProtobufWrapper.readFrom(RpcWritable.java:131)
> at org.apache.hadoop.ipc.RpcWritable$Buffer.getValue(RpcWritable.java:232)
> at org.apache.hadoop.ipc.ProtobufRpcEngine2$RpcProtobufRequest.getRequestHeader(ProtobufRpcEngine2.java:645)
> at org.apache.hadoop.ipc.ProtobufRpcEngine2$RpcProtobufRequest.toString(ProtobufRpcEngine2.java:663)
> at java.lang.String.valueOf(String.java:3425)
> at java.lang.StringBuilder.append(StringBuilder.java:516)
> at org.apache.hadoop.ipc.Server$RpcCall.toString(Server.java:1328)
> at java.lang.String.valueOf(String.java:3425)
> at java.lang.StringBuilder.append(StringBuilder.java:516)
> at org.apache.hadoop.ipc.Server$Handler.run(Server.java:3097){noformat}
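The trace above bottoms out in `java.nio.Buffer.position`, which throws `IllegalArgumentException` when the requested position is negative or past the buffer's limit. A minimal sketch of how an unsynchronized shared buffer can hit that, assuming (not verified against the Hadoop source) that the reader behind `RpcWritable$ProtobufWrapper.readFrom` advances the shared buffer with `bb.position(bb.position() + bytesRead)` after parsing: if two threads both parse from the same starting position, the second advance overshoots the limit. `SharedBufferRace` and its methods are hypothetical and replay the interleaving deterministically instead of using real threads.

```java
import java.nio.ByteBuffer;

// Hypothetical, deterministic replay of two readers sharing one buffer.
class SharedBufferRace {
  // A reader records where its message starts without moving the buffer.
  static int snapshotStart(ByteBuffer bb) {
    return bb.position();
  }

  // After parsing, the reader advances the shared position by what it read.
  // Buffer.position(int) throws IllegalArgumentException past the limit.
  static void advance(ByteBuffer bb, int bytesRead) {
    bb.position(bb.position() + bytesRead);
  }

  // One reader at a time: the advance lands exactly on the limit.
  static String replaySerial(int messageLength) {
    ByteBuffer bb = ByteBuffer.allocate(messageLength); // limit == messageLength
    int start = snapshotStart(bb);
    advance(bb, messageLength - start);
    return "position=" + bb.position() + ", limit=" + bb.limit();
  }

  // Two readers observe the same start before either one advances.
  static String replayRace(int messageLength) {
    ByteBuffer bb = ByteBuffer.allocate(messageLength);
    int startA = snapshotStart(bb);
    int startB = snapshotStart(bb);
    advance(bb, messageLength - startA); // position == limit: still legal
    try {
      advance(bb, messageLength - startB); // would move to 2 * limit
      return "no error";
    } catch (IllegalArgumentException e) {
      return "IllegalArgumentException";
    }
  }

  public static void main(String[] args) {
    System.out.println(replaySerial(16)); // prints "position=16, limit=16"
    System.out.println(replayRace(16));   // prints "IllegalArgumentException"
  }
}
```

Stepping through in a debugger tends to serialize the two readers into the `replaySerial` ordering, which would be consistent with the report that the fields look correct there.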


[jira] [Updated] (HADOOP-18143) toString method of RpcCall throws IllegalArgumentException

[ https://issues.apache.org/jira/browse/HADOOP-18143?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated HADOOP-18143:

Labels: pull-request-available (was: )


[jira] [Work logged] (HADOOP-18143) toString method of RpcCall throws IllegalArgumentException

[ https://issues.apache.org/jira/browse/HADOOP-18143?focusedWorklogId=732519&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-732519 ]

ASF GitHub Bot logged work on HADOOP-18143:

---

Author: ASF GitHub Bot

Created on: 24/Feb/22 17:29

Start Date: 24/Feb/22 17:29

Worklog Time Spent: 10m

Work Description: 9uapaw opened a new pull request #4030:

URL: https://github.com/apache/hadoop/pull/4030

### Description of PR

### How was this patch tested?

### For code changes:

- [ ] Does the title of this PR start with the corresponding JIRA issue id (e.g. 'HADOOP-17799. Your PR title ...')?
- [ ] Object storage: have the integration tests been executed and the endpoint declared according to the connector-specific documentation?
- [ ] If adding new dependencies to the code, are these dependencies licensed in a way that is compatible for inclusion under [ASF 2.0](http://www.apache.org/legal/resolved.html#category-a)?
- [ ] If applicable, have you updated the `LICENSE`, `LICENSE-binary`, `NOTICE-binary` files?


Issue Time Tracking

---

Worklog Id: (was: 732519)

Remaining Estimate: 0h

Time Spent: 10m


[GitHub] [hadoop] 9uapaw opened a new pull request #4030: HADOOP-18143. toString method of RpcCall throws IllegalArgumentException

9uapaw opened a new pull request #4030: URL: https://github.com/apache/hadoop/pull/4030

[GitHub] [hadoop] simbadzina commented on a change in pull request #4024: Hadoop 18139

simbadzina commented on a change in pull request #4024:

URL: https://github.com/apache/hadoop/pull/4024#discussion_r814105513

##

File path:

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/curator/ZKCuratorManager.java

##

@@ -428,4 +434,26 @@ public void setData(String path, byte[] data, int version)
           .forPath(path, data));
     }
   }
+
+  public static class HadoopZookeeperFactory implements ZookeeperFactory {
+    private final String zkPrincipal;
+
+    public HadoopZookeeperFactory(String zkPrincipal) {
+      this.zkPrincipal = zkPrincipal;
+    }
+
+    @Override
+    public ZooKeeper newZooKeeper(String connectString, int sessionTimeout,
+        Watcher watcher, boolean canBeReadOnly
+    ) throws Exception {
+      ZKClientConfig zkClientConfig = new ZKClientConfig();
+      if (zkPrincipal != null) {
+        LOG.info("Configuring zookeeper client to use {}", zkPrincipal);

Review comment:

Can you add "as the server principal" at the end of the log message so

that it's clear what the value is being used for.


[GitHub] [hadoop] simbadzina commented on a change in pull request #4024: Hadoop 18139

simbadzina commented on a change in pull request #4024:

URL: https://github.com/apache/hadoop/pull/4024#discussion_r814105002

##

File path:

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/security/token/delegation/ZKDelegationTokenSecretManager.java

##

@@ -52,9 +52,11 @@ import org.apache.hadoop.classification.InterfaceAudience.Private;
 import org.apache.hadoop.classification.InterfaceStability.Unstable;
 import org.apache.hadoop.conf.Configuration;
+import org.apache.hadoop.fs.CommonConfigurationKeys;

Review comment:

Unused import.

[jira] [Work logged] (HADOOP-15245) S3AInputStream.skip() to use lazy seek

[ https://issues.apache.org/jira/browse/HADOOP-15245?focusedWorklogId=732507&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-732507 ]

ASF GitHub Bot logged work on HADOOP-15245:

---

Author: ASF GitHub Bot

Created on: 24/Feb/22 17:13

Start Date: 24/Feb/22 17:13

Worklog Time Spent: 10m

Work Description: dannycjones commented on a change in pull request #3927:

URL: https://github.com/apache/hadoop/pull/3927#discussion_r814094303

##

File path:

hadoop-tools/hadoop-aws/src/main/java/org/apache/hadoop/fs/s3a/statistics/impl/EmptyS3AStatisticsContext.java

##

@@ -317,6 +322,9 @@ public long getInputPolicy() {
       return 0;
     }
+    @Override
+    public long getSkipOperations() { return 0; }

Review comment:

This line introduces a new checkstyle violation; can you move the return onto a new line?

```
./hadoop-tools/hadoop-aws/src/main/java/org/apache/hadoop/fs/s3a/statistics/impl/EmptyS3AStatisticsContext.java:326:    public long getSkipOperations() { return 0; }:37: '{' at column 37 should have line break after. [LeftCurly]
```
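For reference, a minimal sketch of the layout the reviewer is asking for: the opening brace ends its line and `return 0;` sits on a line of its own, which is the form Checkstyle's LeftCurly check accepts under its default `eol` option. The class `EmptySkipStatistics` and the interface `SkipStatistics` are hypothetical stand-ins; in the PR the method lives in EmptyS3AStatisticsContext, and only the method name comes from the diff.

```java
/** No-op implementation formatted so that '{' is followed by a line break. */
final class EmptySkipStatistics implements SkipStatistics {
  @Override
  public long getSkipOperations() {
    return 0;
  }

  public static void main(String[] args) {
    System.out.println(new EmptySkipStatistics().getSkipOperations());
  }
}

/** Hypothetical stand-in for the statistics interface; not the real S3A type. */
interface SkipStatistics {
  long getSkipOperations();
}
```

Behavior is unchanged from the one-line version (the method still returns 0); only the brace placement differs.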

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org