[GitHub] weijietong commented on a change in pull request #1504: DRILL-6792: Find the right probe side fragment wrapper & fix DrillBuf…

weijietong commented on a change in pull request #1504: DRILL-6792: Find the

right probe side fragment wrapper & fix DrillBuf…

URL: https://github.com/apache/drill/pull/1504#discussion_r232918785

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/work/filter/RuntimeFilterSink.java

##

@@ -17,206 +17,217 @@

*/

package org.apache.drill.exec.work.filter;

-import org.apache.drill.exec.memory.BufferAllocator;

+import io.netty.buffer.DrillBuf;

+import org.apache.drill.exec.ops.AccountingDataTunnel;

+import org.apache.drill.exec.ops.Consumer;

+import org.apache.drill.exec.ops.SendingAccountor;

+import org.apache.drill.exec.ops.StatusHandler;

+import org.apache.drill.exec.proto.BitData;

+import org.apache.drill.exec.proto.CoordinationProtos;

+import org.apache.drill.exec.proto.GeneralRPCProtos;

+import org.apache.drill.exec.proto.UserBitShared;

+import org.apache.drill.exec.rpc.RpcException;

+import org.apache.drill.exec.rpc.RpcOutcomeListener;

+import org.apache.drill.exec.rpc.data.DataTunnel;

+import org.apache.drill.exec.server.DrillbitContext;

+import org.apache.drill.shaded.guava.com.google.common.base.Stopwatch;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

+

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

import java.util.concurrent.BlockingQueue;

-import java.util.concurrent.ExecutorService;

-import java.util.concurrent.Future;

import java.util.concurrent.LinkedBlockingQueue;

-import java.util.concurrent.atomic.AtomicBoolean;

-import java.util.concurrent.atomic.AtomicInteger;

+import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.ReentrantLock;

/**

* This sink receives the RuntimeFilters from the netty thread,

- * aggregates them in an async thread, supplies the aggregated

- * one to the fragment running thread.

+ * aggregates them in an async thread, broadcast the final aggregated

+ * one to the RuntimeFilterRecordBatch.

*/

-public class RuntimeFilterSink implements AutoCloseable {

-

- private AtomicInteger currentBookId = new AtomicInteger(0);

-

- private int staleBookId = 0;

-

- /**

- * RuntimeFilterWritable holding the aggregated version of all the received

filter

- */

- private RuntimeFilterWritable aggregated = null;

+public class RuntimeFilterSink

+{

private BlockingQueue rfQueue = new

LinkedBlockingQueue<>();

- /**

- * Flag used by Minor Fragment thread to indicate it has encountered error

- */

- private AtomicBoolean running = new AtomicBoolean(true);

-

- /**

- * Lock used to synchronize between producer (Netty Thread) and consumer

(AsyncAggregateThread) of elements of this

- * queue. This is needed because in error condition running flag can be

consumed by producer and consumer thread at

- * different times. Whoever sees it first will take this lock and clear all

elements and set the queue to null to

- * indicate producer not to put any new elements in it.

- */

private ReentrantLock queueLock = new ReentrantLock();

private Condition notEmpty = queueLock.newCondition();

- private ReentrantLock aggregatedRFLock = new ReentrantLock();

+ private Map joinMjId2rfNumber;

+

+ //HashJoin node's major fragment id to its corresponding probe side nodes's

endpoints

+ private Map>

joinMjId2probeScanEps = new HashMap<>();

- private BufferAllocator bufferAllocator;

+ //HashJoin node's major fragment id to its corresponding probe side scan

node's belonging major fragment id

+ private Map joinMjId2ScanMjId = new HashMap<>();

- private Future future;

+ //HashJoin node's major fragment id to its aggregated RuntimeFilterWritable

+ private Map joinMjId2AggregatedRF = new

HashMap<>();

+ //for debug usage

+ private Map joinMjId2Stopwatch = new HashMap<>();

+

+ private DrillbitContext drillbitContext;

+

+ private SendingAccountor sendingAccountor;

private static final Logger logger =

LoggerFactory.getLogger(RuntimeFilterSink.class);

- public RuntimeFilterSink(BufferAllocator bufferAllocator, ExecutorService

executorService) {

-this.bufferAllocator = bufferAllocator;

+ public RuntimeFilterSink(DrillbitContext drillbitContext, SendingAccountor

sendingAccountor)

+ {

+this.drillbitContext = drillbitContext;

+this.sendingAccountor = sendingAccountor;

AsyncAggregateWorker asyncAggregateWorker = new AsyncAggregateWorker();

-future = executorService.submit(asyncAggregateWorker);

+drillbitContext.getExecutor().submit(asyncAggregateWorker);

}

- public void aggregate(RuntimeFilterWritable runtimeFilterWritable) {

-if (running.get()) {

- try {

-aggregatedRFLock.lock();

-if (containOne()) {

- boolean same = aggregated.equals(runtimeFilterWritable);

- if (!same) {

-// This is to solve the only one fragment case that two

RuntimeFilterRecordBatchs

-// share the same FragmentContext.

-aggreg

[GitHub] weijietong commented on a change in pull request #1504: DRILL-6792: Find the right probe side fragment wrapper & fix DrillBuf…

weijietong commented on a change in pull request #1504: DRILL-6792: Find the

right probe side fragment wrapper & fix DrillBuf…

URL: https://github.com/apache/drill/pull/1504#discussion_r232918469

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/ops/FragmentContextImpl.java

##

@@ -136,8 +143,8 @@ public void interrupt(final InterruptedException e) {

private final AccountingUserConnection accountingUserConnection;

/** Stores constants and their holders by type */

private final Map>

constantValueHolderCache;

-

- private RuntimeFilterSink runtimeFilterSink;

+ private Map rfIdentifier2RFW = new

ConcurrentHashMap<>();

+ private Map rfIdentifier2fetched = new ConcurrentHashMap<>();

Review comment:

It can't be replaced by setting the value in `rfIdentifier2RFW` to null. We need to decrement the reference count of the received RFW's DrillBuf to keep the reference count balanced, so we must keep the RFW object reference in order to do the decrement in `closeReceivedRFWs`. Further, we need to do the decrement even if the RFW has already been consumed, again to keep the reference count balanced.
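The bookkeeping argued for in this comment can be sketched roughly as follows. This is only an illustration under assumptions, not the code from the PR: `CountedFilter` is a hypothetical stand-in for a `RuntimeFilterWritable` whose buffer is reference counted, and the method names `add`, `fetch`, `isFetched` and `closeReceived` are invented for the example. The point it shows is that a consumed filter stays in the first map, so the final release reaches it too.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

public final class FetchedFlagSketch {

  /** Hypothetical stand-in for a RuntimeFilterWritable whose buffer is reference counted. */
  static final class CountedFilter implements AutoCloseable {
    // Starts at 1: the reference taken when the filter was received.
    private final AtomicInteger refCount = new AtomicInteger(1);

    int refCount() {
      return refCount.get();
    }

    @Override
    public void close() {
      refCount.decrementAndGet();
    }
  }

  // Two maps, mirroring the two fields discussed in the review (names are illustrative).
  private final Map<Long, CountedFilter> received = new ConcurrentHashMap<>();
  private final Map<Long, Boolean> fetched = new ConcurrentHashMap<>();

  void add(long id, CountedFilter filter) {
    received.put(id, filter);
    fetched.put(id, Boolean.FALSE);
  }

  /** Hands the filter to a consumer but keeps the reference for the final release. */
  CountedFilter fetch(long id) {
    CountedFilter filter = received.get(id);
    if (filter != null) {
      fetched.put(id, Boolean.TRUE);
    }
    return filter;
  }

  boolean isFetched(long id) {
    return fetched.getOrDefault(id, Boolean.FALSE);
  }

  /** Releases every received filter exactly once, whether it was consumed or not. */
  void closeReceived() {
    received.values().forEach(CountedFilter::close);
    received.clear();
    fetched.clear();
  }

  public static void main(String[] args) {
    FetchedFlagSketch context = new FetchedFlagSketch();
    CountedFilter consumed = new CountedFilter();
    CountedFilter unconsumed = new CountedFilter();
    context.add(1L, consumed);
    context.add(2L, unconsumed);
    context.fetch(1L);
    context.closeReceived();
    System.out.println(consumed.refCount() + " " + unconsumed.refCount());
  }
}
```

In this sketch the second map only records consumption; ownership of the release never leaves the context, which is the property the comment relies on.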

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

Re: Hangout Discussion Topics

Works for me. Then let's skip the hangout tomorrow. If there are any objections, please feel free to respond to this thread.

Thanks,
Tim

On Mon, Nov 12, 2018 at 5:35 PM Aman Sinha wrote:

> Since we are having the Drill Developer Day on Wednesday, perhaps we can
> skip the hangout tomorrow?
>
> Aman
>
> On Mon, Nov 12, 2018 at 10:13 AM Timothy Farkas wrote:
>
> > Hi All,
> >
> > Does anyone have any topics to discuss during the hangout tomorrow?
> >
> > Thanks,
> > Tim

[jira] [Created] (DRILL-6846) Store test results

Vitalii Diravka created DRILL-6846:

Summary: Store test results
Key: DRILL-6846
URL: https://issues.apache.org/jira/browse/DRILL-6846
Project: Apache Drill
Issue Type: Sub-task
Components: Tools, Build & Test
Reporter: Vitalii Diravka

Currently, to see the result of a build you have to download a text file with that information. A special step can be used to upload test results so that they are displayed in the build's Test Summary section and can be used for timing analysis.

See more: https://circleci.com/docs/2.0/configuration-reference/#store_test_results

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[GitHub] Ben-Zvi commented on issue #1522: Drill 6735: Implement Semi-Join for the Hash-Join operator

Ben-Zvi commented on issue #1522: Drill 6735: Implement Semi-Join for the Hash-Join operator

URL: https://github.com/apache/drill/pull/1522#issuecomment-438119015

Good suggestions. The PR was changed - removed the 2nd and 3rd commits, and added a new commit with the Unused Hash Join Helper returning size zero. Also created DRILL-6845 to handle the duplicates (i.e., eventually the 2nd + 3rd commits would go there).

[GitHub] Ben-Zvi edited a comment on issue #1522: Drill 6735: Implement Semi-Join for the Hash-Join operator

Ben-Zvi edited a comment on issue #1522: Drill 6735: Implement Semi-Join for the Hash-Join operator

URL: https://github.com/apache/drill/pull/1522#issuecomment-438119015

Good suggestions. The PR was changed - removed the 2nd and 3rd commits, and added a new commit with the Unused Hash Join Helper returning size zero. Also created (as a sub-task) DRILL-6845 to handle the duplicates (i.e., eventually the 2nd + 3rd commits would go there).

[GitHub] sohami commented on a change in pull request #1504: DRILL-6792: Find the right probe side fragment wrapper & fix DrillBuf…

sohami commented on a change in pull request #1504: DRILL-6792: Find the right

probe side fragment wrapper & fix DrillBuf…

URL: https://github.com/apache/drill/pull/1504#discussion_r232866925

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/ops/FragmentContextImpl.java

##

@@ -136,8 +143,8 @@ public void interrupt(final InterruptedException e) {

private final AccountingUserConnection accountingUserConnection;

/** Stores constants and their holders by type */

private final Map>

constantValueHolderCache;

-

- private RuntimeFilterSink runtimeFilterSink;

+ private Map rfIdentifier2RFW = new

ConcurrentHashMap<>();

+ private Map rfIdentifier2fetched = new ConcurrentHashMap<>();

Review comment:

The information that the `rfIdentifier2fetched` map stores can be derived implicitly from the first map, `rfIdentifier2RFW`. Once a `RuntimeFilterWritable` is consumed, just remove it from the first map. Then in `closeReceivedRFWs` just call `close` on each `RuntimeFilterWritable` that is still present.
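The single-map alternative suggested here might look like the sketch below. It is a hypothetical illustration, not code from the PR: `CountedFilter` stands in for a reference-counted `RuntimeFilterWritable`, and `consume`, `isPending` and `closeReceived` are invented names. The sketch assumes that whoever consumes a filter also takes over the duty of closing it, which is the assumption the other reviewer in this thread questions.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

public final class SingleMapSketch {

  /** Hypothetical stand-in for a RuntimeFilterWritable whose buffer is reference counted. */
  static final class CountedFilter implements AutoCloseable {
    // Starts at 1: the reference taken when the filter was received.
    private final AtomicInteger refCount = new AtomicInteger(1);

    int refCount() {
      return refCount.get();
    }

    @Override
    public void close() {
      refCount.decrementAndGet();
    }
  }

  // Presence in this map means "received but not yet consumed".
  private final Map<Long, CountedFilter> received = new ConcurrentHashMap<>();

  void add(long id, CountedFilter filter) {
    received.put(id, filter);
  }

  /** Removes and returns the filter; the caller now owns it and must close it (assumption). */
  CountedFilter consume(long id) {
    return received.remove(id);
  }

  boolean isPending(long id) {
    return received.containsKey(id);
  }

  /** Closes only the filters that were never consumed. */
  void closeReceived() {
    received.values().forEach(CountedFilter::close);
    received.clear();
  }

  public static void main(String[] args) {
    SingleMapSketch context = new SingleMapSketch();
    CountedFilter consumed = new CountedFilter();
    CountedFilter unconsumed = new CountedFilter();
    context.add(1L, consumed);
    context.add(2L, unconsumed);
    // try-with-resources makes the consumer release what it took.
    try (CountedFilter taken = context.consume(1L)) {
      System.out.println("consumed filter still pending: " + context.isPending(1L));
    }
    context.closeReceived();
    System.out.println(consumed.refCount() + " " + unconsumed.refCount());
  }
}
```

The trade-off is visible in `consume`: the map stays simple, but the reference count only balances if every consumer really closes what it removed.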


[GitHub] sohami commented on a change in pull request #1504: DRILL-6792: Find the right probe side fragment wrapper & fix DrillBuf…

sohami commented on a change in pull request #1504: DRILL-6792: Find the right

probe side fragment wrapper & fix DrillBuf…

URL: https://github.com/apache/drill/pull/1504#discussion_r232881982

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/work/filter/RuntimeFilterSink.java

##

@@ -17,206 +17,217 @@

*/

package org.apache.drill.exec.work.filter;

-import org.apache.drill.exec.memory.BufferAllocator;

+import io.netty.buffer.DrillBuf;

+import org.apache.drill.exec.ops.AccountingDataTunnel;

+import org.apache.drill.exec.ops.Consumer;

+import org.apache.drill.exec.ops.SendingAccountor;

+import org.apache.drill.exec.ops.StatusHandler;

+import org.apache.drill.exec.proto.BitData;

+import org.apache.drill.exec.proto.CoordinationProtos;

+import org.apache.drill.exec.proto.GeneralRPCProtos;

+import org.apache.drill.exec.proto.UserBitShared;

+import org.apache.drill.exec.rpc.RpcException;

+import org.apache.drill.exec.rpc.RpcOutcomeListener;

+import org.apache.drill.exec.rpc.data.DataTunnel;

+import org.apache.drill.exec.server.DrillbitContext;

+import org.apache.drill.shaded.guava.com.google.common.base.Stopwatch;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

+

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

import java.util.concurrent.BlockingQueue;

-import java.util.concurrent.ExecutorService;

-import java.util.concurrent.Future;

import java.util.concurrent.LinkedBlockingQueue;

-import java.util.concurrent.atomic.AtomicBoolean;

-import java.util.concurrent.atomic.AtomicInteger;

+import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.ReentrantLock;

/**

* This sink receives the RuntimeFilters from the netty thread,

- * aggregates them in an async thread, supplies the aggregated

- * one to the fragment running thread.

+ * aggregates them in an async thread, broadcast the final aggregated

+ * one to the RuntimeFilterRecordBatch.

*/

-public class RuntimeFilterSink implements AutoCloseable {

-

- private AtomicInteger currentBookId = new AtomicInteger(0);

-

- private int staleBookId = 0;

-

- /**

- * RuntimeFilterWritable holding the aggregated version of all the received

filter

- */

- private RuntimeFilterWritable aggregated = null;

+public class RuntimeFilterSink

+{

private BlockingQueue rfQueue = new

LinkedBlockingQueue<>();

- /**

- * Flag used by Minor Fragment thread to indicate it has encountered error

- */

- private AtomicBoolean running = new AtomicBoolean(true);

-

- /**

- * Lock used to synchronize between producer (Netty Thread) and consumer

(AsyncAggregateThread) of elements of this

- * queue. This is needed because in error condition running flag can be

consumed by producer and consumer thread at

- * different times. Whoever sees it first will take this lock and clear all

elements and set the queue to null to

- * indicate producer not to put any new elements in it.

- */

private ReentrantLock queueLock = new ReentrantLock();

private Condition notEmpty = queueLock.newCondition();

- private ReentrantLock aggregatedRFLock = new ReentrantLock();

+ private Map joinMjId2rfNumber;

+

+ //HashJoin node's major fragment id to its corresponding probe side nodes's

endpoints

+ private Map>

joinMjId2probeScanEps = new HashMap<>();

- private BufferAllocator bufferAllocator;

+ //HashJoin node's major fragment id to its corresponding probe side scan

node's belonging major fragment id

+ private Map joinMjId2ScanMjId = new HashMap<>();

- private Future future;

+ //HashJoin node's major fragment id to its aggregated RuntimeFilterWritable

+ private Map joinMjId2AggregatedRF = new

HashMap<>();

+ //for debug usage

+ private Map joinMjId2Stopwatch = new HashMap<>();

+

+ private DrillbitContext drillbitContext;

+

+ private SendingAccountor sendingAccountor;

private static final Logger logger =

LoggerFactory.getLogger(RuntimeFilterSink.class);

- public RuntimeFilterSink(BufferAllocator bufferAllocator, ExecutorService

executorService) {

-this.bufferAllocator = bufferAllocator;

+ public RuntimeFilterSink(DrillbitContext drillbitContext, SendingAccountor

sendingAccountor)

+ {

+this.drillbitContext = drillbitContext;

+this.sendingAccountor = sendingAccountor;

AsyncAggregateWorker asyncAggregateWorker = new AsyncAggregateWorker();

-future = executorService.submit(asyncAggregateWorker);

+drillbitContext.getExecutor().submit(asyncAggregateWorker);

}

- public void aggregate(RuntimeFilterWritable runtimeFilterWritable) {

-if (running.get()) {

- try {

-aggregatedRFLock.lock();

-if (containOne()) {

- boolean same = aggregated.equals(runtimeFilterWritable);

- if (!same) {

-// This is to solve the only one fragment case that two

RuntimeFilterRecordBatchs

-// share the same FragmentContext.

-aggregated

[jira] [Created] (DRILL-6845) Eliminate duplicates for Semi Hash Join

Boaz Ben-Zvi created DRILL-6845:

Summary: Eliminate duplicates for Semi Hash Join
Key: DRILL-6845
URL: https://issues.apache.org/jira/browse/DRILL-6845
Project: Apache Drill
Issue Type: Sub-task
Components: Execution - Relational Operators
Affects Versions: 1.14.0
Reporter: Boaz Ben-Zvi
Assignee: Boaz Ben-Zvi
Fix For: 1.15.0

Following DRILL-6735: The performance of the new Semi Hash Join may degrade if the build side contains an excessive number of join-key duplicate rows; this is mainly a result of the need to store all those rows first, before the hash table is built.

Proposed solution: For Semi, the Hash Agg would create a Hash-Table initially, and use it to eliminate key-duplicate rows as they arrive.

Proposed extra: That Hash-Table has an added cost (e.g. resizing). So perform "runtime stats": check an initial number of incoming rows (e.g. 32k), and if the number of duplicates is less than some threshold (e.g. 20%), cancel that "early" hash table.

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)
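The proposal in DRILL-6845 can be sketched in a few lines. This is only a toy model under assumptions, not Drill's operator code: keys are plain `long` values, a `HashSet` plays the role of the "early" hash table, and the class name, `sampleSize` and `minDuplicateRatio` are hypothetical names chosen for the example. It shows duplicates being dropped on arrival, and the early table being cancelled when the first `sampleSize` rows contain too few duplicates to justify its cost.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public final class EarlyDedupSketch {

  private final int sampleSize;            // rows to observe before deciding (e.g. 32k)
  private final double minDuplicateRatio;  // keep deduplicating only at or above this ratio
  private final Set<Long> seen = new HashSet<>();   // the "early" hash table
  private final List<Long> kept = new ArrayList<>(); // rows passed on to the build side
  private long incoming = 0;
  private long duplicates = 0;
  private boolean dedupActive = true;

  EarlyDedupSketch(int sampleSize, double minDuplicateRatio) {
    this.sampleSize = sampleSize;
    this.minDuplicateRatio = minDuplicateRatio;
  }

  void accept(long key) {
    incoming++;
    if (!dedupActive) {
      kept.add(key); // early table was cancelled: store every row as before
      return;
    }
    if (seen.add(key)) {
      kept.add(key); // first time this key is seen
    } else {
      duplicates++;  // duplicate key: a semi join does not need it
    }
    // "Runtime stats": decide once, after the initial sample of rows.
    if (incoming == sampleSize && duplicates < minDuplicateRatio * sampleSize) {
      dedupActive = false;
      seen.clear(); // give the memory back
    }
  }

  boolean isDedupActive() {
    return dedupActive;
  }

  List<Long> kept() {
    return kept;
  }

  public static void main(String[] args) {
    EarlyDedupSketch dense = new EarlyDedupSketch(4, 0.2);
    for (long key : new long[] {1, 1, 1, 2, 2, 3}) {
      dense.accept(key);
    }
    System.out.println(dense.kept() + " dedupActive=" + dense.isDedupActive());
  }
}
```

A real implementation would have to weigh the table's resizing cost and memory against the rows saved; the fixed threshold here only mirrors the example numbers in the issue.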

Re: Hangout Discussion Topics

Since we are having the Drill Developer Day on Wednesday, perhaps we can skip the hangout tomorrow?

Aman

On Mon, Nov 12, 2018 at 10:13 AM Timothy Farkas wrote:

> Hi All,
>
> Does anyone have any topics to discuss during the hangout tomorrow?
>
> Thanks,
> Tim

[GitHub] vdiravka commented on issue #1530: DRILL-6582: SYSLOG (RFC-5424) Format Plugin

vdiravka commented on issue #1530: DRILL-6582: SYSLOG (RFC-5424) Format Plugin

URL: https://github.com/apache/drill/pull/1530#issuecomment-438072903

@cgivre Please squash the commits into one and rebase the branch onto the latest master branch. Please also add a description in the Jira or here in the PR; it can be taken from your [README](https://github.com/apache/drill/pull/1530/files#diff-c3c1e6700cf903f281eef810595cdc4bR2) file.

[GitHub] gparai commented on a change in pull request #1532: DRILL-6833: Support for pushdown of rowkey based joins

gparai commented on a change in pull request #1532: DRILL-6833: Support for

pushdown of rowkey based joins

URL: https://github.com/apache/drill/pull/1532#discussion_r232836231

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/planner/logical/DrillPushRowKeyJoinToScanRule.java

##

@@ -0,0 +1,520 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.drill.exec.planner.logical;

+

+

+import org.apache.calcite.plan.RelOptPlanner;

+import org.apache.calcite.plan.RelOptRule;

+import org.apache.calcite.plan.RelOptRuleCall;

+import org.apache.calcite.plan.RelOptRuleOperand;

+import org.apache.calcite.plan.RelOptUtil;

+import org.apache.calcite.plan.hep.HepRelVertex;

+import org.apache.calcite.plan.volcano.RelSubset;

+import org.apache.calcite.rel.RelNode;

+import org.apache.calcite.rel.core.JoinRelType;

+import org.apache.calcite.rel.metadata.RelMetadataQuery;

+import org.apache.calcite.rex.RexCall;

+import org.apache.calcite.rex.RexInputRef;

+import org.apache.calcite.rex.RexNode;

+import org.apache.calcite.sql.SqlKind;

+import org.apache.calcite.util.Pair;

+import org.apache.drill.exec.planner.logical.RowKeyJoinCallContext.RowKey;

+import org.apache.drill.exec.physical.base.DbGroupScan;

+import org.apache.drill.exec.planner.index.rules.MatchFunction;

+import org.apache.drill.exec.planner.physical.PrelUtil;

+

+import org.apache.drill.shaded.guava.com.google.common.collect.ImmutableList;

+

+import java.util.ArrayList;

+import java.util.List;

+

+public class DrillPushRowKeyJoinToScanRule extends RelOptRule {

Review comment:

@amansinha100 I have addressed your review comments. Please take a look and

let me know your thoughts.


Re: Unit Test Question

Hi Vitalii,
I did run all the tests on my machine and it completed without error after 42 min. Is the PR ok to proceed, or what do I need to do in order for the PR to be reviewed here and approved?
- C

> On Nov 12, 2018, at 15:18, Vitalii Diravka wrote:
>
> Hi Charles,
>
> Actually 45-50 mins is the Travis time limit for the job, but anyway you
> can see the output and find the result of the run.
> Also you can try to enable CircleCI for your own Drill repo. It can run
> tests for you similar to Travis, but without time limits.
> But neither TravisCI nor CircleCI runs all the Drill unit tests.
> Therefore it is good to run unit tests on your PC before every PR via
> *mvn clean install*
>
> Kind regards
> Vitalii
>
>
> On Mon, Nov 12, 2018 at 10:36 AM Charles Givre wrote:
>
>> Hi Paul,
>> I fixed the issue with the unit test, updated the PR but now Travis is
>> timing out when building Kudu or something not related to my PR. Is there
>> anything I can do about that?
>>
>> The job exceeded the maximum time limit for jobs, and has been terminated.
>>
>>
>>> On Nov 11, 2018, at 14:32, Paul Rogers wrote:
>>>
>>> Hi Charles,
>>>
>>> This error is Drill's long-winded way of saying you have a direct memory
>>> (value vector) memory leak: you allocated a vector (or buffer) which was
>>> never passed downstream or freed. Looks like quite a few leaked so should
>>> be fairly easy to track down.
>>>
>>> On your last batch, did you allocate some vectors that ended up not
>>> being used, maybe?
>>>
>>> Thanks,
>>> - Paul
>>>
>>>
>>>
>>> On Sunday, November 11, 2018, 6:49:21 AM PST, Charles Givre <cgi...@gmail.com> wrote:
>>>
>>> Hi Paul,
>>> Thanks for this. I updated the test and I think I’m close but now I’m
>>> getting different errors. It looks like it isn’t closing the cluster
>>> properly, but I’m not quite sure what to do about this.
>>> - C
>>>
>>>
>>>
>>> Tests run: 2, Failures: 0, Errors: 1, Skipped: 0, Time elapsed: 1.817 sec <<< FAILURE! - in org.apache.drill.exec.store.syslog.TestSyslogFormat
>>> org.apache.drill.exec.store.syslog.TestSyslogFormat Time elapsed: 1.817 sec <<< ERROR!
>>> java.lang.RuntimeException: Exception while closing
>>>    at org.apache.drill.common.DrillAutoCloseables.closeNoChecked(DrillAutoCloseables.java:46)
>>>    at org.apache.drill.exec.client.DrillClient.close(DrillClient.java:475)
>>>    at org.apache.drill.test.ClientFixture.close(ClientFixture.java:242)
>>>    at org.apache.drill.common.AutoCloseables.close(AutoCloseables.java:81)
>>>    at org.apache.drill.common.AutoCloseables.close(AutoCloseables.java:69)
>>>    at org.apache.drill.test.ClusterTest.shutdown(ClusterTest.java:89)
>>> Caused by: java.lang.IllegalStateException: Allocator[ROOT] closed with outstanding buffers allocated (1).
>>> Allocator(ROOT) 0/2048/6176/4294967296 (res/actual/peak/limit)
>>>  child allocators: 0
>>>  ledgers: 1
>>>    ledger[251] allocator: ROOT), isOwning: true, size: 2048, references: 33, life: 811185821181057..0, allocatorManager: [177, life: 811185821154333..0] holds 59 buffers.
>>>      DrillBuf[447], udle: [178 1229..1237]
>>>      DrillBuf[470], udle: [178 1366..1367]
>>>      DrillBuf[457], udle: [178 1282..1290]
>>>      DrillBuf[442], udle: [178 1207..1215]
>>>      DrillBuf[315], udle: [178 11..1126]
>>>      DrillBuf[443], udle: [178 1215..1228]
>>>      DrillBuf[465], udle: [178 1350..1351]
>>>      DrillBuf[456], udle: [178 1282..1319]
>>>      DrillBuf[480], udle: [178 1402..1403]
>>>      DrillBuf[452], udle: [178 1262..1270]
>>>      DrillBuf[453], udle: [178 1270..1281]
>>>      DrillBuf[460], udle: [178 1319..1320]
>>>      DrillBuf[473], udle: [178 1375..1380]
>>>      DrillBuf[485], udle: [178 1416..1417]
>>>      DrillBuf[471], udle: [178 1367..1380]
>>>      DrillBuf[463], udle: [178 1328..1350]
>>>      DrillBuf[474], udle: [178 1380..1402]
>>>      DrillBuf[459], udle: [178 1319..1350]
>>>      DrillBuf[461], udle: [178 1320..1350]
>>>      DrillBuf[468], udle: [178 1359..1366]
>>>      DrillBuf[441], udle: [178 1207..1228]
>>>      DrillBuf[488], udle: [178 1425..1426]
>>>      DrillBuf[313], udle: [178 0..2048]
>>>      DrillBuf[433], udle: [178 1129..1426]
>>>      DrillBuf[486], udle: [178 1417..1426]
>>>      DrillBuf[436], udle: [178 1130..1206]
>>>      DrillBuf[464], udle: [178 1350..1366]
>>>      DrillBuf[444], udle: [178 1228..1261]
>>>      DrillBuf[466], udle: [178 1351..1366]
>>>      DrillBuf[469], udle: [178 1366..1380]
>>>      DrillBuf[435], udle: [178 1129..1130]
>>>      DrillBuf[449], udle: [178 1261..1281]
>>>      DrillBuf[458], udle: [178 1290..1319]
>>>      DrillBuf[484], udle: [178 1416..1426]
>>>      DrillBuf[454], udle: [178 1281..1319]
>>>      DrillBuf[434], udle: [178 1129..1206]
>>>      DrillBuf[467], udle: [178 1351..1359]
>>>      DrillBuf[317], udle: [178 1129..1426]
>>>      DrillBuf[

[GitHub] dvjyothsna opened a new pull request #1536: DRILL-6039: Fixed drillbit.sh script to do graceful shutdown

dvjyothsna opened a new pull request #1536: DRILL-6039: Fixed drillbit.sh script to do graceful shutdown

URL: https://github.com/apache/drill/pull/1536

@sohami please review

[GitHub] bitblender commented on issue #1467: DRILL-5671: Set secure ACLs (Access Control List) for Drill ZK nodes in a secure cluster

bitblender commented on issue #1467: DRILL-5671: Set secure ACLs (Access Control List) for Drill ZK nodes in a secure cluster

URL: https://github.com/apache/drill/pull/1467#issuecomment-438017349

@arina-ielchiieva @sohami Thanks for the reviews. The commits are squashed now.

Re: Unit Test Question

Hi Charles,

Actually 45-50 mins is the Travis time limit for the job, but anyway you can see the output and find the result of the run.
Also you can try to enable CircleCI for your own Drill repo. It can run tests for you similar to Travis, but without time limits.
But neither TravisCI nor CircleCI runs all the Drill unit tests. Therefore it is good to run unit tests on your PC before every PR via *mvn clean install*

Kind regards
Vitalii

On Mon, Nov 12, 2018 at 10:36 AM Charles Givre wrote:

> Hi Paul,
> I fixed the issue with the unit test, updated the PR but now Travis is
> timing out when building Kudu or something not related to my PR. Is there
> anything I can do about that?
>
> The job exceeded the maximum time limit for jobs, and has been terminated.
>
>
> > On Nov 11, 2018, at 14:32, Paul Rogers wrote:
> >
> > Hi Charles,
> >
> > This error is Drill's long-winded way of saying you have a direct memory
> > (value vector) memory leak: you allocated a vector (or buffer) which was
> > never passed downstream or freed. Looks like quite a few leaked so should
> > be fairly easy to track down.
> >
> > On your last batch, did you allocate some vectors that ended up not
> > being used, maybe?
> >
> > Thanks,
> > - Paul
> >
> >
> >
> > On Sunday, November 11, 2018, 6:49:21 AM PST, Charles Givre <cgi...@gmail.com> wrote:
> >
> > Hi Paul,
> > Thanks for this. I updated the test and I think I’m close but now I’m
> > getting different errors. It looks like it isn’t closing the cluster
> > properly, but I’m not quite sure what to do about this.
> > - C
> >
> >
> >
> > Tests run: 2, Failures: 0, Errors: 1, Skipped: 0, Time elapsed: 1.817 sec <<< FAILURE! - in org.apache.drill.exec.store.syslog.TestSyslogFormat
> > org.apache.drill.exec.store.syslog.TestSyslogFormat Time elapsed: 1.817 sec <<< ERROR!
> > java.lang.RuntimeException: Exception while closing
> >    at org.apache.drill.common.DrillAutoCloseables.closeNoChecked(DrillAutoCloseables.java:46)
> >    at org.apache.drill.exec.client.DrillClient.close(DrillClient.java:475)
> >    at org.apache.drill.test.ClientFixture.close(ClientFixture.java:242)
> >    at org.apache.drill.common.AutoCloseables.close(AutoCloseables.java:81)
> >    at org.apache.drill.common.AutoCloseables.close(AutoCloseables.java:69)
> >    at org.apache.drill.test.ClusterTest.shutdown(ClusterTest.java:89)
> > Caused by: java.lang.IllegalStateException: Allocator[ROOT] closed with outstanding buffers allocated (1).
> > Allocator(ROOT) 0/2048/6176/4294967296 (res/actual/peak/limit)
> >  child allocators: 0
> >  ledgers: 1
> >    ledger[251] allocator: ROOT), isOwning: true, size: 2048, references: 33, life: 811185821181057..0, allocatorManager: [177, life: 811185821154333..0] holds 59 buffers.
> >      DrillBuf[447], udle: [178 1229..1237]
> >      DrillBuf[470], udle: [178 1366..1367]
> >      DrillBuf[457], udle: [178 1282..1290]
> >      DrillBuf[442], udle: [178 1207..1215]
> >      DrillBuf[315], udle: [178 11..1126]
> >      DrillBuf[443], udle: [178 1215..1228]
> >      DrillBuf[465], udle: [178 1350..1351]
> >      DrillBuf[456], udle: [178 1282..1319]
> >      DrillBuf[480], udle: [178 1402..1403]
> >      DrillBuf[452], udle: [178 1262..1270]
> >      DrillBuf[453], udle: [178 1270..1281]
> >      DrillBuf[460], udle: [178 1319..1320]
> >      DrillBuf[473], udle: [178 1375..1380]
> >      DrillBuf[485], udle: [178 1416..1417]
> >      DrillBuf[471], udle: [178 1367..1380]
> >      DrillBuf[463], udle: [178 1328..1350]
> >      DrillBuf[474], udle: [178 1380..1402]
> >      DrillBuf[459], udle: [178 1319..1350]
> >      DrillBuf[461], udle: [178 1320..1350]
> >      DrillBuf[468], udle: [178 1359..1366]
> >      DrillBuf[441], udle: [178 1207..1228]
> >      DrillBuf[488], udle: [178 1425..1426]
> >      DrillBuf[313], udle: [178 0..2048]
> >      DrillBuf[433], udle: [178 1129..1426]
> >      DrillBuf[486], udle: [178 1417..1426]
> >      DrillBuf[436], udle: [178 1130..1206]
> >      DrillBuf[464], udle: [178 1350..1366]
> >      DrillBuf[444], udle: [178 1228..1261]
> >      DrillBuf[466], udle: [178 1351..1366]
> >      DrillBuf[469], udle: [178 1366..1380]
> >      DrillBuf[435], udle: [178 1129..1130]
> >      DrillBuf[449], udle: [178 1261..1281]
> >      DrillBuf[458], udle: [178 1290..1319]
> >      DrillBuf[484], udle: [178 1416..1426]
> >      DrillBuf[454], udle: [178 1281..1319]
> >      DrillBuf[434], udle: [178 1129..1206]
> >      DrillBuf[467], udle: [178 1351..1359]
> >      DrillBuf[317], udle: [178 1129..1426]
> >      DrillBuf[438], udle: [178 1138..1206]
> >      DrillBuf[448], udle: [178 1237..1261]
> >      DrillBuf[462], udle: [178 1320..1328]
> >      DrillBuf[450], udle: [178 1261..1262]
> >      DrillBuf[476], udle: [178 1381..1402]
> >      DrillBuf[478], udle: [178 1389..1402]
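The kind of check behind the "closed with outstanding buffers" error quoted in this thread can be modelled with a small sketch. It is a simplified illustration under assumptions, not Drill's allocator: `TrackingAllocator` and `Buf` are hypothetical classes, and the real allocator tracks ledgers and reference counts rather than a plain set. It shows the mechanism Paul describes: a buffer that is allocated but never freed or handed on makes the allocator fail when it is closed.

```java
import java.util.HashSet;
import java.util.Set;

public final class LeakCheckSketch {

  /** Hypothetical buffer that reports back to its allocator when released. */
  static final class Buf implements AutoCloseable {
    private final TrackingAllocator owner;
    final int size;

    Buf(TrackingAllocator owner, int size) {
      this.owner = owner;
      this.size = size;
    }

    @Override
    public void close() {
      owner.release(this);
    }
  }

  /** Hypothetical allocator that remembers every buffer it has handed out. */
  static final class TrackingAllocator implements AutoCloseable {
    private final Set<Buf> outstanding = new HashSet<>();

    Buf buffer(int size) {
      Buf buf = new Buf(this, size);
      outstanding.add(buf);
      return buf;
    }

    void release(Buf buf) {
      outstanding.remove(buf);
    }

    int outstanding() {
      return outstanding.size();
    }

    @Override
    public void close() {
      // Any buffer still registered at this point was leaked by its user.
      if (!outstanding.isEmpty()) {
        throw new IllegalStateException(
            "Allocator closed with outstanding buffers allocated (" + outstanding.size() + ").");
      }
    }
  }

  public static void main(String[] args) {
    TrackingAllocator allocator = new TrackingAllocator();
    Buf freed = allocator.buffer(16);
    Buf leaked = allocator.buffer(32); // never closed: this is the leak
    freed.close();
    try {
      allocator.close();
    } catch (IllegalStateException e) {
      System.out.println(e.getMessage());
    }
  }
}
```

In a test, closing every buffer (for example with try-with-resources) before shutting down the cluster fixture is what keeps a check like this from firing.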

[GitHub] kkhatua opened a new pull request #1535: DRILL-2035: Add ability to cancel multiple queries

kkhatua opened a new pull request #1535: DRILL-2035: Add ability to cancel multiple queries

URL: https://github.com/apache/drill/pull/1535

Currently Drill UI allows canceling one query at a time. This commit (on lines of DRILL-5571 / PR #1531) allows for cancelling multiple `running` queries.

[GitHub] amansinha100 commented on a change in pull request #1532: DRILL-6833: Support for pushdown of rowkey based joins

amansinha100 commented on a change in pull request #1532: DRILL-6833: Support

for pushdown of rowkey based joins

URL: https://github.com/apache/drill/pull/1532#discussion_r232773609

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/planner/logical/DrillPushRowKeyJoinToScanRule.java

##

@@ -0,0 +1,520 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.drill.exec.planner.logical;

+

+

+import org.apache.calcite.plan.RelOptPlanner;

+import org.apache.calcite.plan.RelOptRule;

+import org.apache.calcite.plan.RelOptRuleCall;

+import org.apache.calcite.plan.RelOptRuleOperand;

+import org.apache.calcite.plan.RelOptUtil;

+import org.apache.calcite.plan.hep.HepRelVertex;

+import org.apache.calcite.plan.volcano.RelSubset;

+import org.apache.calcite.rel.RelNode;

+import org.apache.calcite.rel.core.JoinRelType;

+import org.apache.calcite.rel.metadata.RelMetadataQuery;

+import org.apache.calcite.rex.RexCall;

+import org.apache.calcite.rex.RexInputRef;

+import org.apache.calcite.rex.RexNode;

+import org.apache.calcite.sql.SqlKind;

+import org.apache.calcite.util.Pair;

+import org.apache.drill.exec.planner.logical.RowKeyJoinCallContext.RowKey;

+import org.apache.drill.exec.physical.base.DbGroupScan;

+import org.apache.drill.exec.planner.index.rules.MatchFunction;

+import org.apache.drill.exec.planner.physical.PrelUtil;

+

+import org.apache.drill.shaded.guava.com.google.common.collect.ImmutableList;

+

+import java.util.ArrayList;

+import java.util.List;

+

+public class DrillPushRowKeyJoinToScanRule extends RelOptRule {

Review comment:

Can you add a brief Javadoc describing which types of query patterns are eligible

for this rule's transformation and the nature of the transformed output?


Re: Unit Test Question

Hi Paul,
I fixed the issue with the unit test and updated the PR, but now Travis is timing out when building Kudu or something not related to my PR. Is there anything I can do about that?

The job exceeded the maximum time limit for jobs, and has been terminated.

> On Nov 11, 2018, at 14:32, Paul Rogers wrote:
>
> Hi Charles,
>
> This error is Drill's long-winded way of saying you have a direct memory
> (value vector) memory leak: you allocated a vector (or buffer) which was
> never passed downstream or freed. Looks like quite a few leaked so should be
> fairly easy to track down.
>
> On your last batch, did you allocate some vectors that ended up not being
> used, maybe?
>
> Thanks,
> - Paul
>
> On Sunday, November 11, 2018, 6:49:21 AM PST, Charles Givre wrote:
>
> Hi Paul,
> Thanks for this. I updated the test and I think I’m close but now I’m
> getting different errors. It looks like it isn’t closing the cluster
> properly, but I’m not quite sure what to do about this.
> - C
>
> Tests run: 2, Failures: 0, Errors: 1, Skipped: 0, Time elapsed: 1.817 sec <<< FAILURE! - in org.apache.drill.exec.store.syslog.TestSyslogFormat
> org.apache.drill.exec.store.syslog.TestSyslogFormat Time elapsed: 1.817 sec <<< ERROR!
> java.lang.RuntimeException: Exception while closing
> at org.apache.drill.common.DrillAutoCloseables.closeNoChecked(DrillAutoCloseables.java:46)
> at org.apache.drill.exec.client.DrillClient.close(DrillClient.java:475)
> at org.apache.drill.test.ClientFixture.close(ClientFixture.java:242)
> at org.apache.drill.common.AutoCloseables.close(AutoCloseables.java:81)
> at org.apache.drill.common.AutoCloseables.close(AutoCloseables.java:69)
> at org.apache.drill.test.ClusterTest.shutdown(ClusterTest.java:89)
> Caused by: java.lang.IllegalStateException: Allocator[ROOT] closed with outstanding buffers allocated (1).
> Allocator(ROOT) 0/2048/6176/4294967296 (res/actual/peak/limit) > child allocators: 0 > ledgers: 1 > ledger[251] allocator: ROOT), isOwning: true, size: 2048, references: 33, > life: 811185821181057..0, allocatorManager: [177, life: 811185821154333..0] > holds 59 buffers. > DrillBuf[447], udle: [178 1229..1237] > DrillBuf[470], udle: [178 1366..1367] > DrillBuf[457], udle: [178 1282..1290] > DrillBuf[442], udle: [178 1207..1215] > DrillBuf[315], udle: [178 11..1126] > DrillBuf[443], udle: [178 1215..1228] > DrillBuf[465], udle: [178 1350..1351] > DrillBuf[456], udle: [178 1282..1319] > DrillBuf[480], udle: [178 1402..1403] > DrillBuf[452], udle: [178 1262..1270] > DrillBuf[453], udle: [178 1270..1281] > DrillBuf[460], udle: [178 1319..1320] > DrillBuf[473], udle: [178 1375..1380] > DrillBuf[485], udle: [178 1416..1417] > DrillBuf[471], udle: [178 1367..1380] > DrillBuf[463], udle: [178 1328..1350] > DrillBuf[474], udle: [178 1380..1402] > DrillBuf[459], udle: [178 1319..1350] > DrillBuf[461], udle: [178 1320..1350] > DrillBuf[468], udle: [178 1359..1366] > DrillBuf[441], udle: [178 1207..1228] > DrillBuf[488], udle: [178 1425..1426] > DrillBuf[313], udle: [178 0..2048] > DrillBuf[433], udle: [178 1129..1426] > DrillBuf[486], udle: [178 1417..1426] > DrillBuf[436], udle: [178 1130..1206] > DrillBuf[464], udle: [178 1350..1366] > DrillBuf[444], udle: [178 1228..1261] > DrillBuf[466], udle: [178 1351..1366] > DrillBuf[469], udle: [178 1366..1380] > DrillBuf[435], udle: [178 1129..1130] > DrillBuf[449], udle: [178 1261..1281] > DrillBuf[458], udle: [178 1290..1319] > DrillBuf[484], udle: [178 1416..1426] > DrillBuf[454], udle: [178 1281..1319] > DrillBuf[434], udle: [178 1129..1206] > DrillBuf[467], udle: [178 1351..1359] > DrillBuf[317], udle: [178 1129..1426] > DrillBuf[438], udle: [178 1138..1206] > DrillBuf[448], udle: [178 1237..1261] > DrillBuf[462], udle: [178 1320..1328] > DrillBuf[450], udle: [178 1261..1262] > DrillBuf[476], udle: [178 1381..1402] > 
DrillBuf[478], udle: [178 1389..1402] > DrillBuf[479], udle: [178 1402..1416] > DrillBuf[455], udle: [178 1281..1282] > DrillBuf[475], udle: [178 1380..1381] > DrillBuf[439], udle: [178 1206..1228] > DrillBuf[440], udle: [178 1206..1207] > DrillBuf[477], udle: [178 1381..1389] > DrillBuf[437], udle: [178 1130..1138] > DrillBuf[446], udle: [178 1229..1261] > DrillBuf[451], udle: [178 1262..1281] > DrillBuf[487], udle: [178 1417..1425] > DrillBuf[472], udle: [178 1367..1375] > DrillBuf[482], udle: [178 1403..1411] > DrillBuf[483], udle: [178 1411..1416] > DrillBuf[481], udle: [178 1403..1416] >
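
The leak Paul describes in the quoted reply (a buffer that is allocated but never passed downstream or freed, so the allocator refuses to close cleanly) can be illustrated with a toy model. This is only a sketch under stated assumptions: `ToyAllocator`, `LeakDemo` and `closeMessage` are hypothetical names invented for illustration and are not Drill's `BufferAllocator` API; the exception text merely imitates the shape of the message in the trace.

```java
import java.util.HashSet;
import java.util.Set;

final class LeakDemo {

  /** Hypothetical toy allocator that tracks outstanding buffers; NOT Drill's API. */
  static final class ToyAllocator implements AutoCloseable {
    private final Set<Integer> outstanding = new HashSet<>();
    private int nextId = 0;

    /** Hands out a buffer id and records it as outstanding. */
    int allocate() {
      int id = nextId++;
      outstanding.add(id);
      return id;
    }

    /** Marks a buffer as freed (or handed downstream). */
    void release(int id) {
      outstanding.remove(id);
    }

    /** Refuses to close while any buffer is still outstanding. */
    @Override
    public void close() {
      if (!outstanding.isEmpty()) {
        throw new IllegalStateException(
            "Allocator closed with outstanding buffers allocated (" + outstanding.size() + ").");
      }
    }
  }

  /** Returns the message raised on close, or null if the allocator closed cleanly. */
  static String closeMessage(boolean releaseSpareBuffer) {
    ToyAllocator allocator = new ToyAllocator();
    int used = allocator.allocate();
    int spare = allocator.allocate(); // allocated for a last batch that is never produced
    allocator.release(used);
    if (releaseSpareBuffer) {
      allocator.release(spare);
    }
    try {
      allocator.close();
      return null;
    } catch (IllegalStateException e) {
      return e.getMessage();
    }
  }

  public static void main(String[] args) {
    System.out.println("spare buffer leaked:   " + closeMessage(false));
    System.out.println("spare buffer released: " + closeMessage(true));
  }
}
```

In this model the fix is the same one the reply hints at: every buffer allocated for a batch must either be released or handed on before the allocator is closed.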

Hangout Discussion Topics

Hi All, Does anyone have any topics to discuss during the hangout tomorrow? Thanks, Tim

[GitHub] kkhatua commented on issue #1531: DRILL-5571: Cancel running query from its Web UI

kkhatua commented on issue #1531: DRILL-5571: Cancel running query from its Web UI URL: https://github.com/apache/drill/pull/1531#issuecomment-437973137 @arina-ielchiieva that is my Drillbit's hostname.

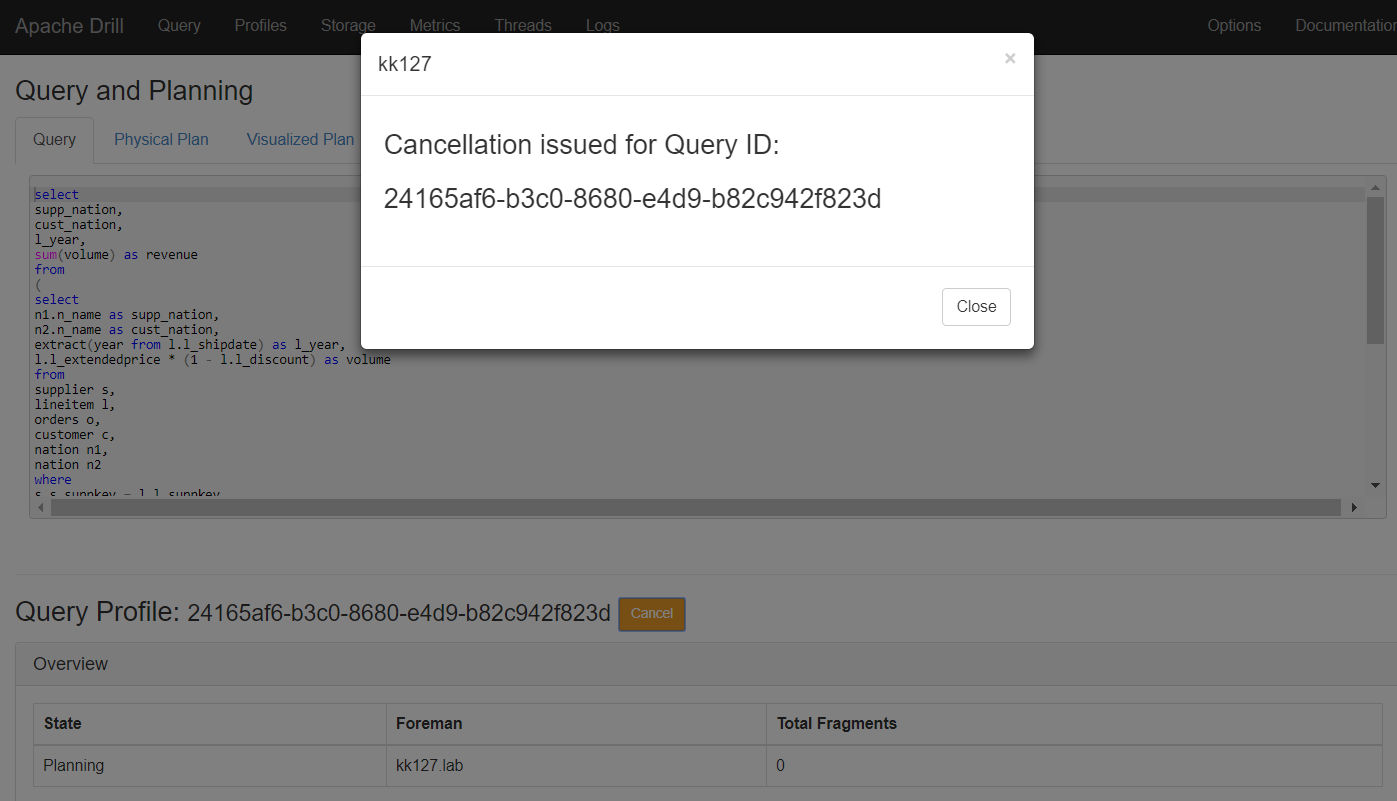

[GitHub] arina-ielchiieva edited a comment on issue #1531: DRILL-5571: Cancel running query from its Web UI

arina-ielchiieva edited a comment on issue #1531: DRILL-5571: Cancel running query from its Web UI URL: https://github.com/apache/drill/pull/1531#issuecomment-437949964 @kkhatua what does kk127 mean on the screenshot with the modal window?

[GitHub] arina-ielchiieva commented on issue #1531: DRILL-5571: Cancel running query from its Web UI

arina-ielchiieva commented on issue #1531: DRILL-5571: Cancel running query from its Web UI URL: https://github.com/apache/drill/pull/1531#issuecomment-437949964 @kkhatua what is kk127 on the screenshot with the modal window?

[GitHub] kkhatua commented on a change in pull request #1531: DRILL-5571: Cancel running query from its Web UI

kkhatua commented on a change in pull request #1531: DRILL-5571: Cancel running

query from its Web UI

URL: https://github.com/apache/drill/pull/1531#discussion_r232722408

##

File path: exec/java-exec/src/main/resources/rest/profile/profile.ftl

##

@@ -171,7 +188,30 @@ table.sortable thead .sorting_desc { background-image:

url("/static/img/black-de

<#assign queued = queueName != "" && queueName != "-" />

- Query Profile : ${model.getQueryId()}

+ Query Profile: ${model.getQueryId()}

+ <#if model.getQueryStateDisplayName() == "Running" ||

model.getQueryStateDisplayName() == "Planning">

Review comment:

Actually, on second thought, I can add STARTING, etc. as well. If the query

ID doesn't exist, the page wouldn't exist, so we should be fine. I'll add the

change.


[GitHub] kkhatua commented on a change in pull request #1531: DRILL-5571: Cancel running query from its Web UI

kkhatua commented on a change in pull request #1531: DRILL-5571: Cancel running

query from its Web UI

URL: https://github.com/apache/drill/pull/1531#discussion_r232718757

##

File path: exec/java-exec/src/main/resources/rest/profile/profile.ftl

##

@@ -171,7 +188,30 @@ table.sortable thead .sorting_desc { background-image:

url("/static/img/black-de

<#assign queued = queueName != "" && queueName != "-" />

- Query Profile : ${model.getQueryId()}

+ Query Profile: ${model.getQueryId()}

+ <#if model.getQueryStateDisplayName() == "Running" ||

model.getQueryStateDisplayName() == "Planning">

Review comment:

I believe a QueryId is not created during STARTING. Will need to check for

other states... as I'm not sure what PREPARING does


[GitHub] kkhatua commented on a change in pull request #1531: DRILL-5571: Cancel running query from its Web UI

kkhatua commented on a change in pull request #1531: DRILL-5571: Cancel running

query from its Web UI

URL: https://github.com/apache/drill/pull/1531#discussion_r232718140

##

File path: exec/java-exec/src/main/resources/rest/profile/profile.ftl

##

@@ -50,6 +50,22 @@

"info": false

}

);} );

+

+//Close the cancellation status popup

+function refreshStatus() {

+ //Close PopUp Modal

+ $("#queryCancelModal").modal("hide");

+ location.reload(true);

+}

+

+//Cancel query & show cancellation status

+function cancelQuery() {

+ document.getElementById("cancelTitle").innerHTML = location.hostname;

Review comment:

Indicating the host to which the cancelation was issued.


[GitHub] kkhatua commented on issue #1531: DRILL-5571: Cancel running query from its Web UI

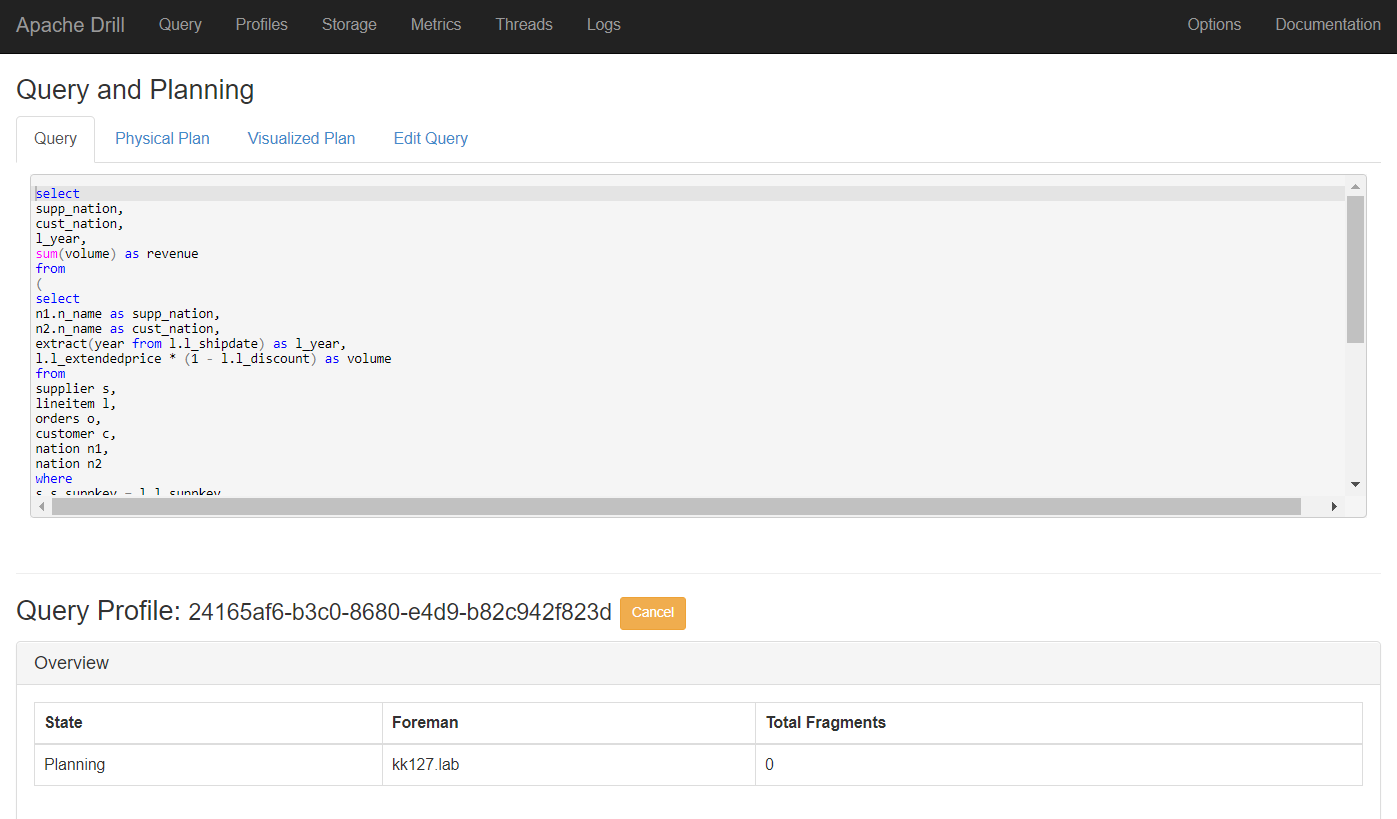

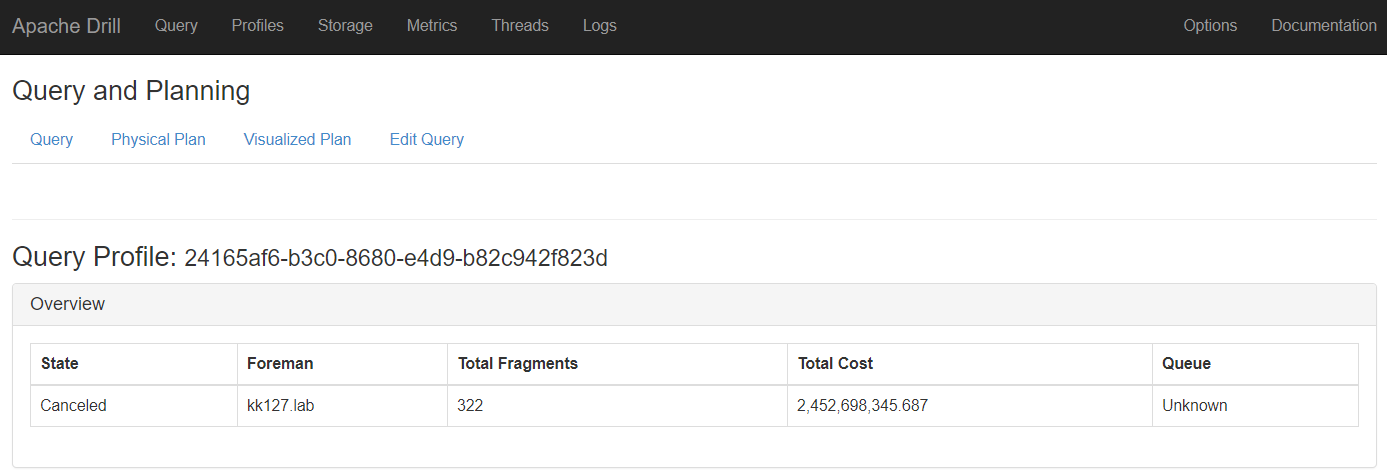

kkhatua commented on issue #1531: DRILL-5571: Cancel running query from its Web UI URL: https://github.com/apache/drill/pull/1531#issuecomment-437937304 Running Query: During Cancellation: Post Cancellation Status:

[GitHub] arina-ielchiieva commented on a change in pull request #1531: DRILL-5571: Cancel running query from its Web UI

arina-ielchiieva commented on a change in pull request #1531: DRILL-5571:

Cancel running query from its Web UI

URL: https://github.com/apache/drill/pull/1531#discussion_r232642539

##

File path: exec/java-exec/src/main/resources/rest/profile/profile.ftl

##

@@ -171,7 +188,30 @@ table.sortable thead .sorting_desc { background-image:

url("/static/img/black-de

<#assign queued = queueName != "" && queueName != "-" />

- Query Profile : ${model.getQueryId()}

+ Query Profile: ${model.getQueryId()}

+ <#if model.getQueryStateDisplayName() == "Running" ||

model.getQueryStateDisplayName() == "Planning">

Review comment:

What about other states when query can be cancelled?

STARTING

ENQUEUED

PREPARING
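
The question above is about which query states should expose the cancel control. As a rough sketch only, the check could be expressed as a set-membership test. Everything here is assumed for illustration: `CancelButtonSketch`, its `QueryState` enum and `isCancellable` are hypothetical and do not reflect the template's actual logic, which compares display names; the terminal states listed are likewise assumptions rather than taken from this thread.

```java
import java.util.EnumSet;
import java.util.Set;

final class CancelButtonSketch {

  /** Hypothetical state list; only the first five names are mentioned in the thread. */
  enum QueryState { STARTING, ENQUEUED, PREPARING, PLANNING, RUNNING, COMPLETED, CANCELED, FAILED }

  /** States in which a cancel control would be shown, under the assumptions above. */
  private static final Set<QueryState> CANCELLABLE = EnumSet.of(
      QueryState.STARTING,
      QueryState.ENQUEUED,
      QueryState.PREPARING,
      QueryState.PLANNING,
      QueryState.RUNNING);

  static boolean isCancellable(QueryState state) {
    return CANCELLABLE.contains(state);
  }

  public static void main(String[] args) {
    // Print the decision for every state so the table can be checked by eye.
    for (QueryState state : QueryState.values()) {
      System.out.println(state + " -> " + isCancellable(state));
    }
  }
}
```

Keeping the states in one set means adding or removing a state is a single-line change, rather than another `||` clause in the template condition.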


[GitHub] arina-ielchiieva commented on a change in pull request #1531: DRILL-5571: Cancel running query from its Web UI

arina-ielchiieva commented on a change in pull request #1531: DRILL-5571:

Cancel running query from its Web UI

URL: https://github.com/apache/drill/pull/1531#discussion_r232642178

##

File path: exec/java-exec/src/main/resources/rest/profile/profile.ftl

##

@@ -50,6 +50,22 @@

"info": false

}

);} );

+

+//Close the cancellation status popup

+function refreshStatus() {

+ //Close PopUp Modal

+ $("#queryCancelModal").modal("hide");

+ location.reload(true);

+}

+

+//Cancel query & show cancellation status

+function cancelQuery() {

+ document.getElementById("cancelTitle").innerHTML = location.hostname;

Review comment:

Why is this needed?


[GitHub] arina-ielchiieva commented on issue #1533: DRILL-6843: Update SchemaBuilder comment to match implementation

arina-ielchiieva commented on issue #1533: DRILL-6843: Update SchemaBuilder comment to match implementation URL: https://github.com/apache/drill/pull/1533#issuecomment-437864001 +1

[GitHub] oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM

oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM URL: https://github.com/apache/drill/pull/1446#issuecomment-437862742 @vvysotskyi I was able to remove the warning on startup by adding `--add-opens` for all accessed modules. But there is no guarantee that this warning will not be shown later. What about the Visual C++ Runtime library?

[GitHub] oleg-zinovev commented on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM

oleg-zinovev commented on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM URL: https://github.com/apache/drill/pull/1446#issuecomment-437862742 @vvysotskyi I was able to remove the warning on startup by adding `--add-opens` for all accessed modules. But there is no guarantee that this warning will not be shown later.

[GitHub] oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM

oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM URL: https://github.com/apache/drill/pull/1446#issuecomment-437848102 @vvysotskyi, `2.7.1` is the latest version of `hadoop-winutils` (in central.maven.org) > Also, could you please hide warnings which are displayed after starting Drill in embedded mode for JDK 9+ for both Linux and Windows? According to http://mail.openjdk.java.net/pipermail/jigsaw-dev/2017-May/012673.html there is no official way to remove these warnings.

[GitHub] oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM

oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM URL: https://github.com/apache/drill/pull/1446#issuecomment-437848102 @vvysotskyi, `2.7.1` is the latest version of `hadoop-winutils`. > Also, could you please hide warnings which are displayed after starting Drill in embedded mode for JDK 9+ for both Linux and Windows? According to http://mail.openjdk.java.net/pipermail/jigsaw-dev/2017-May/012673.html there is no official way to remove these warnings.

[GitHub] oleg-zinovev commented on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM

oleg-zinovev commented on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM URL: https://github.com/apache/drill/pull/1446#issuecomment-437848102 @vvysotskyi, `2.7.1` is the latest version of `hadoop-winutils`

[GitHub] vvysotskyi commented on a change in pull request #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM

vvysotskyi commented on a change in pull request #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM URL: https://github.com/apache/drill/pull/1446#discussion_r232586065 ## File path: distribution/src/resources/sqlline.bat ## @@ -1,226 +1,234 @@ -@REM -@REM Licensed to the Apache Software Foundation (ASF) under one -@REM or more contributor license agreements. See the NOTICE file -@REM distributed with this work for additional information -@REM regarding copyright ownership. The ASF licenses this file -@REM to you under the Apache License, Version 2.0 (the -@REM "License"); you may not use this file except in compliance -@REM with the License. You may obtain a copy of the License at -@REM -@REM http://www.apache.org/licenses/LICENSE-2.0 -@REM -@REM Unless required by applicable law or agreed to in writing, software -@REM distributed under the License is distributed on an "AS IS" BASIS, -@REM WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. -@REM See the License for the specific language governing permissions and -@REM limitations under the License. -@REM - -@echo off -@rem/* -@rem * Licensed to the Apache Software Foundation (ASF) under one -@rem * or more contributor license agreements. See the NOTICE file -@rem * distributed with this work for additional information -@rem * regarding copyright ownership. The ASF licenses this file -@rem * to you under the Apache License, Version 2.0 (the -@rem * "License"); you may not use this file except in compliance -@rem * with the License. You may obtain a copy of the License at -@rem * -@rem * http://www.apache.org/licenses/LICENSE-2.0 -@rem * -@rem * Unless required by applicable law or agreed to in writing, software -@rem * distributed under the License is distributed on an "AS IS" BASIS, -@rem * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. 
-@rem * See the License for the specific language governing permissions and -@rem * limitations under the License. -@rem */ -@rem -setlocal EnableExtensions EnableDelayedExpansion - -rem -rem In order to pass in arguments with an equals symbol, use quotation marks. -rem For example -rem sqlline -u "jdbc:drill:zk=local" -n admin -p admin -rem - -rem -rem Deal with command-line arguments -rem - -:argactionstart -if -%1-==-- goto argactionend - -set atleastonearg=0 - -if x%1 == x-q ( - set QUERY=%2 - set atleastonearg=1 - shift - shift -) - -if x%1 == x-e ( - set QUERY=%2 - set atleastonearg=1 - shift - shift -) - -if x%1 == x-f ( - set FILE=%2 - set atleastonearg=1 - shift - shift -) - -if x%1 == x--config ( - set confdir=%2 - set DRILL_CONF_DIR=%2 - set atleastonearg=1 - shift - shift -) - -if x%1 == x--jvm ( - set DRILL_SHELL_JAVA_OPTS=!DRILL_SHELL_JAVA_OPTS! %2 - set DRILL_SHELL_JAVA_OPTS=!DRILL_SHELL_JAVA_OPTS:"=! - set atleastonearg=1 - shift - shift -) - -if "!atleastonearg!"=="0" ( - set DRILL_ARGS=!DRILL_ARGS! %~1 - shift -) - -goto argactionstart -:argactionend - -rem -rem Validate JAVA_HOME -rem -if "%JAVA_EXE%" == "" (set JAVA_EXE=java.exe) - -if not "%JAVA_HOME%" == "" goto javaHomeSet -echo. -echo WARN: JAVA_HOME not found in your environment. -echo Please set the JAVA_HOME variable in your environment to match the -echo location of your Java installation -echo. -goto initDrillEnv - -:javaHomeSet -if exist "%JAVA_HOME%\bin\%JAVA_EXE%" goto initDrillEnv -echo. -echo ERROR: JAVA_HOME is set to an invalid directory. -echo JAVA_HOME = %JAVA_HOME% -echo Please set the JAVA_HOME variable in your environment to match the -echo location of your Java installation -echo. -goto error - -:initDrillEnv -echo DRILL_ARGS - "%DRILL_ARGS%" - -rem -rem Deal with Drill variables -rem - -set DRILL_BIN_DIR=%~dp0 -pushd %DRILL_BIN_DIR%.. 
-set DRILL_HOME=%cd% -popd - -if "test%DRILL_CONF_DIR%" == "test" ( - set DRILL_CONF_DIR=%DRILL_HOME%\conf -) - -if "test%DRILL_LOG_DIR%" == "test" ( - set DRILL_LOG_DIR=%DRILL_HOME%\log -) - -@rem Drill temporary directory is used as base for temporary storage of Dynamic UDF jars. -if "test%DRILL_TMP_DIR%" == "test" ( - set DRILL_TMP_DIR=%TEMP% -) - -rem -rem Deal with Hadoop JARs, if HADOOP_HOME was specified -rem - -if "test%HADOOP_HOME%" == "test" ( - echo HADOOP_HOME not detected... - set USE_HADOOP_CP=0 - set HADOOP_HOME=%DRILL_HOME%\winutils -) else ( - echo Calculating HADOOP_CLASSPATH ... - for %%i in (%HADOOP_HOME%\lib\*.jar) do ( -set IGNOREJAR=0 -for /F "tokens=*" %%A in (%DRILL_BIN_DIR%\hadoop-excludes.txt) do ( - echo.%%~ni|findstr /C:"%%A" >nul 2>&1 - if not errorlevel 1 set IGNOREJAR=1 -) -if "!IGNOREJAR!"=="0" set HADOOP_CLASSPATH=%%i;!HADOOP_CLASSPATH! - ) - set HADOOP_CLASSPATH=%HADOOP_HOME%\conf;!HADOOP_CLASSPATH! - set USE_HADOOP_CP=1 -) - -rem -rem Deal with HBase JARs, if HBASE_HOME was specified -rem - -if "test%HBASE_HOME%" == "t

[GitHub] vvysotskyi commented on a change in pull request #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM

vvysotskyi commented on a change in pull request #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM URL: https://github.com/apache/drill/pull/1446#discussion_r232587070 ## File path: distribution/src/resources/sqlline.bat ## @@ -1,226 +1,234 @@ -@REM -@REM Licensed to the Apache Software Foundation (ASF) under one -@REM or more contributor license agreements. See the NOTICE file -@REM distributed with this work for additional information -@REM regarding copyright ownership. The ASF licenses this file -@REM to you under the Apache License, Version 2.0 (the -@REM "License"); you may not use this file except in compliance -@REM with the License. You may obtain a copy of the License at -@REM -@REM http://www.apache.org/licenses/LICENSE-2.0 -@REM -@REM Unless required by applicable law or agreed to in writing, software -@REM distributed under the License is distributed on an "AS IS" BASIS, -@REM WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. -@REM See the License for the specific language governing permissions and -@REM limitations under the License. -@REM - -@echo off -@rem/* -@rem * Licensed to the Apache Software Foundation (ASF) under one -@rem * or more contributor license agreements. See the NOTICE file -@rem * distributed with this work for additional information -@rem * regarding copyright ownership. The ASF licenses this file -@rem * to you under the Apache License, Version 2.0 (the -@rem * "License"); you may not use this file except in compliance -@rem * with the License. You may obtain a copy of the License at -@rem * -@rem * http://www.apache.org/licenses/LICENSE-2.0 -@rem * -@rem * Unless required by applicable law or agreed to in writing, software -@rem * distributed under the License is distributed on an "AS IS" BASIS, -@rem * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. 
-@rem * See the License for the specific language governing permissions and -@rem * limitations under the License. -@rem */ -@rem -setlocal EnableExtensions EnableDelayedExpansion - -rem -rem In order to pass in arguments with an equals symbol, use quotation marks. -rem For example -rem sqlline -u "jdbc:drill:zk=local" -n admin -p admin -rem - -rem -rem Deal with command-line arguments -rem - -:argactionstart -if -%1-==-- goto argactionend - -set atleastonearg=0 - -if x%1 == x-q ( - set QUERY=%2 - set atleastonearg=1 - shift - shift -) - -if x%1 == x-e ( - set QUERY=%2 - set atleastonearg=1 - shift - shift -) - -if x%1 == x-f ( - set FILE=%2 - set atleastonearg=1 - shift - shift -) - -if x%1 == x--config ( - set confdir=%2 - set DRILL_CONF_DIR=%2 - set atleastonearg=1 - shift - shift -) - -if x%1 == x--jvm ( - set DRILL_SHELL_JAVA_OPTS=!DRILL_SHELL_JAVA_OPTS! %2 - set DRILL_SHELL_JAVA_OPTS=!DRILL_SHELL_JAVA_OPTS:"=! - set atleastonearg=1 - shift - shift -) - -if "!atleastonearg!"=="0" ( - set DRILL_ARGS=!DRILL_ARGS! %~1 - shift -) - -goto argactionstart -:argactionend - -rem -rem Validate JAVA_HOME -rem -if "%JAVA_EXE%" == "" (set JAVA_EXE=java.exe) - -if not "%JAVA_HOME%" == "" goto javaHomeSet -echo. -echo WARN: JAVA_HOME not found in your environment. -echo Please set the JAVA_HOME variable in your environment to match the -echo location of your Java installation -echo. -goto initDrillEnv - -:javaHomeSet -if exist "%JAVA_HOME%\bin\%JAVA_EXE%" goto initDrillEnv -echo. -echo ERROR: JAVA_HOME is set to an invalid directory. -echo JAVA_HOME = %JAVA_HOME% -echo Please set the JAVA_HOME variable in your environment to match the -echo location of your Java installation -echo. -goto error - -:initDrillEnv -echo DRILL_ARGS - "%DRILL_ARGS%" - -rem -rem Deal with Drill variables -rem - -set DRILL_BIN_DIR=%~dp0 -pushd %DRILL_BIN_DIR%.. 
-set DRILL_HOME=%cd% -popd - -if "test%DRILL_CONF_DIR%" == "test" ( - set DRILL_CONF_DIR=%DRILL_HOME%\conf -) - -if "test%DRILL_LOG_DIR%" == "test" ( - set DRILL_LOG_DIR=%DRILL_HOME%\log -) - -@rem Drill temporary directory is used as base for temporary storage of Dynamic UDF jars. -if "test%DRILL_TMP_DIR%" == "test" ( - set DRILL_TMP_DIR=%TEMP% -) - -rem -rem Deal with Hadoop JARs, if HADOOP_HOME was specified -rem - -if "test%HADOOP_HOME%" == "test" ( - echo HADOOP_HOME not detected... - set USE_HADOOP_CP=0 - set HADOOP_HOME=%DRILL_HOME%\winutils -) else ( - echo Calculating HADOOP_CLASSPATH ... - for %%i in (%HADOOP_HOME%\lib\*.jar) do ( -set IGNOREJAR=0 -for /F "tokens=*" %%A in (%DRILL_BIN_DIR%\hadoop-excludes.txt) do ( - echo.%%~ni|findstr /C:"%%A" >nul 2>&1 - if not errorlevel 1 set IGNOREJAR=1 -) -if "!IGNOREJAR!"=="0" set HADOOP_CLASSPATH=%%i;!HADOOP_CLASSPATH! - ) - set HADOOP_CLASSPATH=%HADOOP_HOME%\conf;!HADOOP_CLASSPATH! - set USE_HADOOP_CP=1 -) - -rem -rem Deal with HBase JARs, if HBASE_HOME was specified -rem - -if "test%HBASE_HOME%" == "t

[GitHub] vvysotskyi commented on issue #1488: DRILL-786: Allow CROSS JOIN syntax

vvysotskyi commented on issue #1488: DRILL-786: Allow CROSS JOIN syntax URL: https://github.com/apache/drill/pull/1488#issuecomment-437826528 +1 for this PR and for the proposal to fix this issue in the scope of the separate Jira.

[GitHub] oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM

oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM URL: https://github.com/apache/drill/pull/1446#issuecomment-437823097 @vvysotskyi I finally managed to reproduce the error. The `winutils.exe` (Hadoop WinUtils) requires a `visual c ++ redistributable` (msvcr100.dll). There are 2 options: 1) Require `visual c ++ redistributable` to be installed by the user (via documentation?) 2) Add msvcr100.dll for Windows x64 into `winutils/bin`

[GitHub] ihuzenko commented on issue #1488: DRILL-786: Allow CROSS JOIN syntax

ihuzenko commented on issue #1488: DRILL-786: Allow CROSS JOIN syntax
URL: https://github.com/apache/drill/pull/1488#issuecomment-437823918

Hi @amansinha100, thanks for approving the PR, and sorry for the confusing comment about rule ordering. I've added a documentation note under the Jira issue.

[GitHub] oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM

oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM
URL: https://github.com/apache/drill/pull/1446#issuecomment-437823097

I finally managed to reproduce the error.
The `winutils.exe` (Hadoop WinUtils) requires a `visual c ++ redistributable` (msvcr100.dll).

There are two options:
1) Require the user to install the `visual c ++ redistributable` (via documentation?)
2) Add msvcr100.dll for Windows x64 into `winutils/bin`

[GitHub] oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM

oleg-zinovev edited a comment on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM
URL: https://github.com/apache/drill/pull/1446#issuecomment-437823097

I finally managed to reproduce the error.
The `winutils.exe` (Hadoop WinUtils) requires a `visual c ++ redistributable` (msvcr100.dll).

There are two options:
1) Require the user to install the `visual c ++ redistributable` (via documentation, maybe?)
2) Add msvcr100.dll for Windows x64 into `winutils/bin`

[GitHub] oleg-zinovev commented on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM

oleg-zinovev commented on issue #1446: DRILL-6349: Drill JDBC driver fails on Java 1.9+ with NoClassDefFoundError: sun/misc/VM
URL: https://github.com/apache/drill/pull/1446#issuecomment-437823097

I finally managed to reproduce the error.
The `winutils.exe` (Hadoop WinUtils) requires a `visual c ++ redistributable` (msvcr100.dll).

There are two options:
1) Require the user to install the `visual c ++ redistributable`
2) Add msvcr100.dll for Windows x64 into `winutils/bin`

[jira] [Resolved] (DRILL-6222) Drill fails on Schema changes

[ https://issues.apache.org/jira/browse/DRILL-6222?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Vitalii Diravka resolved DRILL-6222.

Resolution: Duplicate
Assignee: (was: salim achouche)

> Drill fails on Schema changes
> --
>
> Key: DRILL-6222
> URL: https://issues.apache.org/jira/browse/DRILL-6222
> Project: Apache Drill
> Issue Type: Improvement
> Components: Execution - Relational Operators
> Affects Versions: 1.10.0, 1.12.0
> Reporter: salim achouche
> Priority: Major
>
> A Drill query fails when selecting all columns from a complex nested data
> file (Parquet) set. There are differences in schema among the files:
> * The Parquet files exhibit differences both at the first level and within
> nested data types
> * A select * will not cause an exception, but using a limit clause will
> * Note also that this issue seems to happen only when multiple Drillbit minor
> fragments are involved (concurrency higher than one)

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)