[jira] [Work logged] (HDFS-16378) Add datanode address to BlockReportLeaseManager logs

[

https://issues.apache.org/jira/browse/HDFS-16378?focusedWorklogId=694392&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694392

]

ASF GitHub Bot logged work on HDFS-16378:

-

Author: ASF GitHub Bot

Created on: 11/Dec/21 07:28

Start Date: 11/Dec/21 07:28

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3786:

URL: https://github.com/apache/hadoop/pull/3786#issuecomment-991510370

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:-------:|:-------:|
| +0 :ok: | reexec | 0m 45s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 32m 31s | | trunk passed |
| +1 :green_heart: | compile | 1m 25s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 20s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 1s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 27s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 4s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 32s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 12s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 25s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 17s | | the patch passed |
| +1 :green_heart: | compile | 1m 17s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 17s | | the patch passed |
| +1 :green_heart: | compile | 1m 8s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 8s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 50s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 17s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 52s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 23s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 12s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 8s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 225m 28s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 45s | | The patch does not generate ASF License warnings. |
| | | 324m 24s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3786/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3786 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 38d895cb22a1 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / a2ab15b759c5bfec18875ce9c2cb75c95d105d76 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3786/1/testReport/ |
| Max. process+thread count | 2993 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3786/1/console |

| versions | git=2.2

[jira] [Work logged] (HDFS-16378) Add datanode address to BlockReportLeaseManager logs

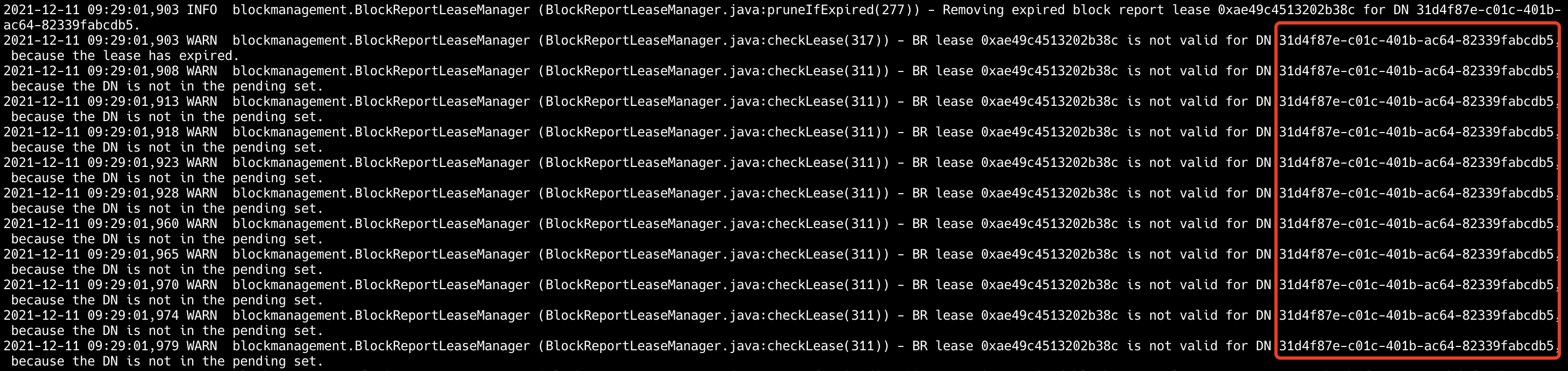

[

https://issues.apache.org/jira/browse/HDFS-16378?focusedWorklogId=694389&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694389

]

ASF GitHub Bot logged work on HDFS-16378:

-

Author: ASF GitHub Bot

Created on: 11/Dec/21 06:45

Start Date: 11/Dec/21 06:45

Worklog Time Spent: 10m

Work Description: Hexiaoqiao commented on pull request #3786:

URL: https://github.com/apache/hadoop/pull/3786#issuecomment-991491441

I prefer to keep `getXferAddr` here. IMO, IP and hostname are equivalent for maintainers. But in an environment with multiple NICs bound and multiple DataNode instances deployed on one node, it is easier to tell them apart using the IP information. Also, `getXferAddr` saves a string concatenation compared with `getXferAddrWithHostname` (not a key point).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 694389)

Time Spent: 40m (was: 0.5h)

> Add datanode address to BlockReportLeaseManager logs

>

> Key: HDFS-16378

> URL: https://issues.apache.org/jira/browse/HDFS-16378

> Project: Hadoop HDFS

> Issue Type: Improvement

> Reporter: tomscut

> Assignee: tomscut

> Priority: Minor

> Labels: pull-request-available

> Attachments: image-2021-12-11-09-58-59-494.png

>

> Time Spent: 40m

> Remaining Estimate: 0h

>

> We should add the datanode address to BlockReportLeaseManager logs, because the

> DataNode UUID alone is not convenient for tracking.

> !image-2021-12-11-09-58-59-494.png|width=643,height=152!

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Commented] (HDFS-16379) Reset fullBlockReportLeaseId after any exceptions

[

https://issues.apache.org/jira/browse/HDFS-16379?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17457548#comment-17457548

]

tomscut commented on HDFS-16379:

The problem we had was solved by introducing HDFS-12914 and HDFS-14314. But

when I looked at the code, I found that the following problem might occur, so I

filed this issue. We haven't encountered this situation yet, but I think it's a

possibility.

> Reset fullBlockReportLeaseId after any exceptions

> -

>

> Key: HDFS-16379

> URL: https://issues.apache.org/jira/browse/HDFS-16379

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: tomscut

>Assignee: tomscut

>Priority: Major

> Labels: pull-request-available

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Recently we encountered FBR-related problems in the production environment,

> which were solved by introducing HDFS-12914 and HDFS-14314.

> But a situation like the following may still occur:

> 1. The DN gets a *fullBlockReportLeaseId* via heartbeat.

> 2. The DN triggers a blockReport, but an exception occurs (this may be rare, but

> it can happen), and the DN then retries multiple times *without resetting

> fullBlockReportLeaseId*, because fullBlockReportLeaseId is currently reset

> only on success.

> 3. After a while, the exception clears, but the fullBlockReportLeaseId has

> expired. *Since the NN does not throw an exception when the lease has expired,

> the DN considers the blockReport successful.* So the blockReport is not

> actually processed this time and has to wait until the next one.

> Therefore, *should we consider resetting the fullBlockReportLeaseId in the

> finally block*? The advantage is that lease expiration can be

> avoided. The downside is that each heartbeat will apply for a new

> fullBlockReportLeaseId while the exception persists, but I think this cost is

> negligible.
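
The proposal in the description above can be sketched roughly as follows. This is a minimal illustration only, not the actual DataNode code: the class `LeaseResetSketch`, the interface `NameNodeRpc`, and all method names are hypothetical stand-ins, and the value `0` for "no lease held" is an assumption made for the example.

```java
import java.io.IOException;

/** Hypothetical stand-in for the DataNode side of the full block report (FBR) flow. */
public class LeaseResetSketch {

    /** Hypothetical RPC interface; the real NameNode protocol is not modeled here. */
    public interface NameNodeRpc {
        void blockReport(long leaseId) throws IOException;
    }

    // Assumption for this sketch: 0 means "no lease held".
    private long fullBlockReportLeaseId = 0L;

    /** Called when a heartbeat response carries a lease ID. */
    public void onHeartbeatLease(long leaseId) {
        fullBlockReportLeaseId = leaseId;
    }

    public long currentLeaseId() {
        return fullBlockReportLeaseId;
    }

    /** Sends one full block report; returns true if the RPC completed without an exception. */
    public boolean sendFullBlockReport(NameNodeRpc nn) {
        try {
            nn.blockReport(fullBlockReportLeaseId);
            return true;
        } catch (IOException e) {
            return false; // the caller retries later
        } finally {
            // Proposed behavior: drop the lease on success and on failure alike,
            // so a retry has to obtain a fresh lease through the next heartbeat.
            fullBlockReportLeaseId = 0L;
        }
    }
}
```

With the reset in `finally`, a failed report can no longer be retried with a lease that may have expired in the meantime; the cost is one extra lease request per failed attempt, which the description argues is negligible.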


[jira] [Work logged] (HDFS-16352) return the real datanode numBlocks in #getDatanodeStorageReport

[

https://issues.apache.org/jira/browse/HDFS-16352?focusedWorklogId=694387&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694387

]

ASF GitHub Bot logged work on HDFS-16352:

-

Author: ASF GitHub Bot

Created on: 11/Dec/21 06:19

Start Date: 11/Dec/21 06:19

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3714:

URL: https://github.com/apache/hadoop/pull/3714#issuecomment-991477784

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:-------:|:-------:|
| +0 :ok: | reexec | 0m 43s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 32m 44s | | trunk passed |
| +1 :green_heart: | compile | 1m 26s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 18s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 1s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 28s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 2s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 34s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 9s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 33s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 17s | | the patch passed |
| +1 :green_heart: | compile | 1m 16s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 16s | | the patch passed |
| +1 :green_heart: | compile | 1m 13s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 13s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 52s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 20s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 51s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 26s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 16s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 9s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 225m 23s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 46s | | The patch does not generate ASF License warnings. |
| | | 324m 37s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3714/3/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3714 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 556e003fd6ee 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 81d01fffbe6c590e9cbaa4b2b31801cfe7ab9a2b |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3714/3/testReport/ |
| Max. process+thread count | 3131 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3714/3/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This mes

[jira] [Work logged] (HDFS-16378) Add datanode address to BlockReportLeaseManager logs

[

https://issues.apache.org/jira/browse/HDFS-16378?focusedWorklogId=694386&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694386

]

ASF GitHub Bot logged work on HDFS-16378:

-

Author: ASF GitHub Bot

Created on: 11/Dec/21 06:18

Start Date: 11/Dec/21 06:18

Worklog Time Spent: 10m

Work Description: tomscut commented on pull request #3786:

URL: https://github.com/apache/hadoop/pull/3786#issuecomment-991477452

> Thanks @tomscut for your report. It is almost LGTM. Some nits: a. There

> are also some other logs which only print the DataNode UUID; do you mind

> completing them with the address as well? b. I suggest invoking

> `dn.getXferAddr()` to keep the log style consistent, e.g. `200 LOG.info("Registered

> DN {} ({}).", dn.getDatanodeUuid(), dn.getXferAddr());`

Thank you very much for your review and suggestion. Can we change

`getXferAddr` to `getXferAddrWithHostname`, since a hostname may be more

intuitive than an IP? What do you think? I will fix the omissions as well.
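
To make the trade-off between the two accessors concrete, here is a small sketch. The class `XferAddrLogSketch.Dn` is a simplified, hypothetical stand-in for Hadoop's datanode identity class, assuming that `getXferAddr` yields `ip:port` and `getXferAddrWithHostname` yields `hostname:port`; the host name, IP addresses, port and UUIDs are made up for illustration, and `String.format` replaces the logger so the example has no dependencies.

```java
/** Simplified stand-in for a datanode identity; not the real Hadoop class. */
public class XferAddrLogSketch {

    public static final class Dn {
        private final String uuid;
        private final String ipAddr;
        private final String hostName;
        private final int xferPort;

        public Dn(String uuid, String ipAddr, String hostName, int xferPort) {
            this.uuid = uuid;
            this.ipAddr = ipAddr;
            this.hostName = hostName;
            this.xferPort = xferPort;
        }

        public String getDatanodeUuid() { return uuid; }

        /** Assumed form: "ip:port". */
        public String getXferAddr() { return ipAddr + ":" + xferPort; }

        /** Assumed form: "hostname:port". */
        public String getXferAddrWithHostname() { return hostName + ":" + xferPort; }
    }

    /** Builds the log line in the style suggested in the review. */
    public static String registeredMessage(Dn dn) {
        return String.format("Registered DN %s (%s).", dn.getDatanodeUuid(), dn.getXferAddr());
    }

    public static void main(String[] args) {
        // Two DataNode instances on one multi-NIC host, same port, different IPs.
        Dn a = new Dn("uuid-a", "10.0.0.1", "host-a", 9866);
        Dn b = new Dn("uuid-b", "10.0.1.1", "host-a", 9866);
        System.out.println(registeredMessage(a));
        System.out.println(registeredMessage(b));
        // The hostname form cannot tell the two instances apart; the IP form can.
        System.out.println(a.getXferAddrWithHostname().equals(b.getXferAddrWithHostname()));
    }
}
```

In this made-up setup the hostname-based address is identical for both instances, which is the multi-NIC case raised in the review as the reason to keep the IP form.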


Issue Time Tracking

---

Worklog Id: (was: 694386)

Time Spent: 0.5h (was: 20m)

> Add datanode address to BlockReportLeaseManager logs

>

>

> Key: HDFS-16378

> URL: https://issues.apache.org/jira/browse/HDFS-16378

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: tomscut

>Assignee: tomscut

>Priority: Minor

> Labels: pull-request-available

> Attachments: image-2021-12-11-09-58-59-494.png

>

> Time Spent: 0.5h

> Remaining Estimate: 0h

>

> We should add the datanode address to BlockReportLeaseManager logs, because the

> DataNode UUID alone is not convenient for tracking.

> !image-2021-12-11-09-58-59-494.png|width=643,height=152!


[jira] [Commented] (HDFS-16261) Configurable grace period around invalidation of replaced blocks

[

https://issues.apache.org/jira/browse/HDFS-16261?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17457543#comment-17457543

]

Xiaoqiao He commented on HDFS-16261:

Thanks [~bbeaudreault] for your detailed analysis and continued, solid work. IMO, I prefer to solve this issue on the DataNode side. Of course, it has pros and cons compared to handling it on the NameNode side, as you mentioned above.

a. The NameNode could postpone sending the invalidate command back to the DataNode to avoid exceptions and retries. The delay would probably be a single configuration value. But different blocks and access patterns take different amounts of time to read, so a static delay can hardly cover all cases.

b. IMO, the DataNode has all the information about which blocks are being read locally, and we could use that to decide whether to execute `DNA_INVALIDATE` immediately or postpone it. For every block to invalidate, first check whether it is in the set of active BlockSenders (between receiving op_read and closing the stream); if so, wait until all corresponding streams are closed before executing.

This is just one option; comments and discussion are welcome. Thanks again.

> Configurable grace period around invalidation of replaced blocks

>

> Key: HDFS-16261

> URL: https://issues.apache.org/jira/browse/HDFS-16261

> Project: Hadoop HDFS

> Issue Type: New Feature

> Reporter: Bryan Beaudreault

> Assignee: Bryan Beaudreault

> Priority: Major

> Labels: pull-request-available

> Time Spent: 20m

> Remaining Estimate: 0h

>

> When a block is moved with REPLACE_BLOCK, the new location is recorded in the NameNode and the NameNode instructs the old host to invalidate the block using DNA_INVALIDATE. As it stands today, this invalidation is async but tends to happen relatively quickly.

> I'm working on a feature for HBase which enables efficient healing of locality through Balancer-style low level block moves (HBASE-26250). One issue is that HBase tends to keep open long running DFSInputStreams and moving blocks from under them causes lots of warns in the RegionServer and increases long tail latencies due to the necessary retries in the DFSClient. One way I'd like to fix this is to provide a configurable grace period on async invalidations. This would give the DFSClient enough time to refresh block locations before hitting any errors.
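
Option (b) from the comment on HDFS-16261, deferring invalidation on the DataNode while a block is still being read, could look roughly like the sketch below. Everything here is hypothetical: `DeferredInvalidationSketch` and its methods do not exist in Hadoop, blocks are reduced to `long` IDs, and "deleting" a block just records the ID in a list.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch: postpone block invalidation until all local readers have closed. */
public class DeferredInvalidationSketch {
    private final Map<Long, Integer> activeReaders = new HashMap<>();
    private final Set<Long> pendingInvalidation = new HashSet<>();
    private final List<Long> deleted = new ArrayList<>();

    /** A read stream for the block was opened (op_read received). */
    public synchronized void readerOpened(long blockId) {
        activeReaders.merge(blockId, 1, Integer::sum);
    }

    /** A read stream for the block was closed; run any deferred invalidation. */
    public synchronized void readerClosed(long blockId) {
        // Decrement the reader count; the entry is removed when it reaches zero.
        Integer remaining =
            activeReaders.computeIfPresent(blockId, (id, n) -> n > 1 ? n - 1 : null);
        if (remaining == null && pendingInvalidation.remove(blockId)) {
            deleted.add(blockId);
        }
    }

    /** An invalidate command arrived: delete now, or defer while readers exist. */
    public synchronized void invalidate(long blockId) {
        if (activeReaders.containsKey(blockId)) {
            pendingInvalidation.add(blockId);
        } else {
            deleted.add(blockId);
        }
    }

    /** Returns a copy of the IDs of blocks that have actually been deleted. */
    public synchronized List<Long> deletedBlocks() {
        return new ArrayList<>(deleted);
    }
}
```

Unlike a fixed grace period, this approach adapts to how long each read actually takes, but a long-lived stream could delay deletion indefinitely, so a real design would presumably still want an upper bound on the wait.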

[jira] [Work logged] (HDFS-16352) return the real datanode numBlocks in #getDatanodeStorageReport

[

https://issues.apache.org/jira/browse/HDFS-16352?focusedWorklogId=694383&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694383

]

ASF GitHub Bot logged work on HDFS-16352:

-

Author: ASF GitHub Bot

Created on: 11/Dec/21 04:58

Start Date: 11/Dec/21 04:58

Worklog Time Spent: 10m

Work Description: Hexiaoqiao commented on a change in pull request #3714:

URL: https://github.com/apache/hadoop/pull/3714#discussion_r767089872

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/blockmanagement/DatanodeManager.java

##

@@ -2172,8 +2172,9 @@ public String getSlowDisksReport() {

.size()];

for (int i = 0; i < reports.length; i++) {

final DatanodeDescriptor d = datanodes.get(i);

- reports[i] = new DatanodeStorageReport(

- new DatanodeInfoBuilder().setFrom(d).build(), d.getStorageReports());

+ DatanodeInfo dnInfo = new DatanodeInfoBuilder().setFrom(d).build();

+ dnInfo.setNumBlocks(d.numBlocks());

Review comment:

`new DatanodeInfoBuilder().setFrom(d).build()` has already set `numBlocks`. Is

there any difference in setting it again here, or some other reason?


Issue Time Tracking

---

Worklog Id: (was: 694383)

Time Spent: 1h 20m (was: 1h 10m)

> return the real datanode numBlocks in #getDatanodeStorageReport

> ---

>

> Key: HDFS-16352

> URL: https://issues.apache.org/jira/browse/HDFS-16352

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: qinyuren

>Assignee: qinyuren

>Priority: Major

> Labels: pull-request-available

> Attachments: image-2021-11-23-22-04-06-131.png

>

> Time Spent: 1h 20m

> Remaining Estimate: 0h

>

> #getDatanodeStorageReport will return the array of DatanodeStorageReport

> which contains the DatanodeInfo in each DatanodeStorageReport, but the

> numBlocks in DatanodeInfo is always zero, which is confusing

> !image-2021-11-23-22-04-06-131.png|width=683,height=338!

> Or we can return the real numBlocks in DatanodeInfo when we call

> #getDatanodeStorageReport
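
One way a builder can copy `numBlocks` and the result can still be zero is sketched below. This is only a possible reading, not something the thread confirms about the real classes: `NumBlocksSketch.Info`, `Descriptor` and `copyOf` are hypothetical stand-ins, and the assumption is that the descriptor computes its block count on demand while the inherited field that the copy reads is never updated.

```java
/** Hypothetical model of a copied field that lags behind a computed value. */
public class NumBlocksSketch {

    /** Stand-in for the info object returned to callers. */
    public static class Info {
        private int numBlocks; // plain field, defaults to 0

        public int getNumBlocks() { return numBlocks; }

        public void setNumBlocks(int n) { numBlocks = n; }
    }

    /** Stand-in for the server-side descriptor, which derives the count on demand. */
    public static class Descriptor extends Info {
        private final int[] blocksPerStorage;

        public Descriptor(int[] blocksPerStorage) {
            this.blocksPerStorage = blocksPerStorage.clone();
        }

        /** Live count, summed over storages; the inherited field is never written. */
        public int numBlocks() {
            int sum = 0;
            for (int n : blocksPerStorage) {
                sum += n;
            }
            return sum;
        }
    }

    /** Stand-in for a builder-style copy: it copies the field, not the live count. */
    public static Info copyOf(Info from) {
        Info copy = new Info();
        copy.setNumBlocks(from.getNumBlocks());
        return copy;
    }
}
```

Under that assumption, the copy reports zero until the caller sets the live count explicitly, which is what the extra `setNumBlocks(d.numBlocks())` call in the patch does.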


[jira] [Commented] (HDFS-16379) Reset fullBlockReportLeaseId after any exceptions

[

https://issues.apache.org/jira/browse/HDFS-16379?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17457537#comment-17457537

]

Xiaoqiao He commented on HDFS-16379:

Thanks [~tomscut] for involving me here. I am not sure whether this is an issue for

BlockReport. Have you met the same case on your prod cluster? If so, attaching

logs would be more helpful. Thanks.


[jira] [Work logged] (HDFS-16378) Add datanode address to BlockReportLeaseManager logs

[

https://issues.apache.org/jira/browse/HDFS-16378?focusedWorklogId=694381&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694381

]

ASF GitHub Bot logged work on HDFS-16378:

-

Author: ASF GitHub Bot

Created on: 11/Dec/21 04:44

Start Date: 11/Dec/21 04:44

Worklog Time Spent: 10m

Work Description: Hexiaoqiao commented on pull request #3786:

URL: https://github.com/apache/hadoop/pull/3786#issuecomment-991458334

Thanks @tomscut for your report. It is almost LGTM. Some nits:

a. There are also some other logs which only print the DataNode UUID; do you

mind completing them with the address as well?

b. I suggest invoking `dn.getXferAddr()` to keep the log style consistent, e.g.

`200 LOG.info("Registered DN {} ({}).", dn.getDatanodeUuid(),

dn.getXferAddr());`


Issue Time Tracking

---

Worklog Id: (was: 694381)

Time Spent: 20m (was: 10m)

> Add datanode address to BlockReportLeaseManager logs

>

>

> Key: HDFS-16378

> URL: https://issues.apache.org/jira/browse/HDFS-16378

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: tomscut

>Assignee: tomscut

>Priority: Minor

> Labels: pull-request-available

> Attachments: image-2021-12-11-09-58-59-494.png

>

> Time Spent: 20m

> Remaining Estimate: 0h

>

> We should add the datanode address to BlockReportLeaseManager logs, because the

> DataNode UUID alone is not convenient for tracking.

> !image-2021-12-11-09-58-59-494.png|width=643,height=152!


[jira] [Work logged] (HDFS-16303) Losing over 100 datanodes in state decommissioning results in full blockage of all datanode decommissioning

[

https://issues.apache.org/jira/browse/HDFS-16303?focusedWorklogId=694362&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694362

]

ASF GitHub Bot logged work on HDFS-16303:

-

Author: ASF GitHub Bot

Created on: 11/Dec/21 03:35

Start Date: 11/Dec/21 03:35

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3675:

URL: https://github.com/apache/hadoop/pull/3675#issuecomment-991435605

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:-------:|:-------:|
| +0 :ok: | reexec | 0m 41s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 4 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 31m 6s | | trunk passed |
| +1 :green_heart: | compile | 1m 29s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 20s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 0s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 25s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 1s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 28s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 9s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 17s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 15s | | the patch passed |
| +1 :green_heart: | compile | 1m 18s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 18s | | the patch passed |
| +1 :green_heart: | compile | 1m 13s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 13s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 52s | | hadoop-hdfs-project/hadoop-hdfs: The patch generated 0 new + 45 unchanged - 1 fixed = 45 total (was 46) |
| +1 :green_heart: | mvnsite | 1m 18s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 51s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 22s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 9s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 4s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 226m 20s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 47s | | The patch does not generate ASF License warnings. |
| | | 323m 13s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3675/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3675 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 6f28e8ecb417 4.15.0-156-generic #163-Ubuntu SMP Thu Aug 19 23:31:58 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 54ba1cb54ae6bd66c126412933ec859007edd273 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3675/2/testReport/ |
| Max. process+thread count | 3374 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3675/2/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |

[jira] [Commented] (HDFS-16379) Reset fullBlockReportLeaseId after any exceptions

[

https://issues.apache.org/jira/browse/HDFS-16379?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17457520#comment-17457520

]

tomscut commented on HDFS-16379:

[~weichiu] [~hexiaoqiao] [~starphin] , could you please take a look at this?

Thank you very much.


[jira] [Updated] (HDFS-16379) Reset fullBlockReportLeaseId after any exceptions

[

https://issues.apache.org/jira/browse/HDFS-16379?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

tomscut updated HDFS-16379:

---

Description:

Recently we encountered FBR-related problems in the production environment,

which were solved by introducing HDFS-12914 and HDFS-14314.

But there may be situations like this:

1 DN got *fullBlockReportLeaseId* via heartbeat.

2 DN triggers a blockReport, but some exception occurs (this may be rare, but it

can happen), and then the DN retries multiple times *without resetting

fullBlockReportLeaseId*, because fullBlockReportLeaseId is currently reset only

if the report succeeds.

3 After a while, the exception is cleared, but the fullBlockReportLeaseId has

expired. *Since NN did not throw an exception after the lease expired, the DN

considered that the blockReport was successful.* So the blockReport was not

actually executed this time and needs to wait until the next time.

Therefore, {*}should we consider resetting the fullBlockReportLeaseId in the

finally block{*}? The advantage of this is that lease expiration can be

avoided. The downside is that each heartbeat will apply for a new

fullBlockReportLeaseId during the exception, but I think this cost is

negligible.

was:

Recently we encountered FBR-related problems in the production environment,

which were solved by introducing HDFS-12914 and HDFS-14314.

But there may be situations like this:

1 DN got *fullBlockReportLeaseId* via heartbeat.

2 DN trigger a blockReport, but some exception occurs (this may be rare, but it

may exist), and then DN does multiple retries {*}without resetting leaseID{*}.

Because leaseID is reset only if it succeeds currently.

3 After a while, the exception is cleared, but the LeaseID has expired. *Since

NN did not throw an exception after the lease expired, the DN considered that

the blockReport was successful.* So the blockReport was not actually executed

this time and needs to wait until the next time.

Therefore, {*}should we consider resetting the fullBlockReportLeaseId in the

finally block{*}? The advantage of this is that lease expiration can be

avoided. The downside is that each heartbeat will apply for a new

fullBlockReportLeaseId during the exception, but I think this cost is

negligible.

> Reset fullBlockReportLeaseId after any exceptions

> -

>

> Key: HDFS-16379

> URL: https://issues.apache.org/jira/browse/HDFS-16379

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: tomscut

>Assignee: tomscut

>Priority: Major

> Labels: pull-request-available

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Recently we encountered FBR-related problems in the production environment,

> which were solved by introducing HDFS-12914 and HDFS-14314.

> But there may be situations like this:

> 1 DN got *fullBlockReportLeaseId* via heartbeat.

> 2 DN triggers a blockReport, but some exception occurs (this may be rare, but

> it can happen), and then the DN retries multiple times *without resetting

> fullBlockReportLeaseId*, because fullBlockReportLeaseId is currently reset

> only if the report succeeds.

> 3 After a while, the exception is cleared, but the fullBlockReportLeaseId has

> expired. *Since NN did not throw an exception after the lease expired, the DN

> considered that the blockReport was successful.* So the blockReport was not

> actually executed this time and needs to wait until the next time.

> Therefore, {*}should we consider resetting the fullBlockReportLeaseId in the

> finally block{*}? The advantage of this is that lease expiration can be

> avoided. The downside is that each heartbeat will apply for a new

> fullBlockReportLeaseId during the exception, but I think this cost is

> negligible.


[jira] [Updated] (HDFS-16379) Reset fullBlockReportLeaseId after any exceptions

[

https://issues.apache.org/jira/browse/HDFS-16379?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated HDFS-16379:

--

Labels: pull-request-available (was: )

> Reset fullBlockReportLeaseId after any exceptions

> -

>

> Key: HDFS-16379

> URL: https://issues.apache.org/jira/browse/HDFS-16379

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: tomscut

>Assignee: tomscut

>Priority: Major

> Labels: pull-request-available

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Recently we encountered FBR-related problems in the production environment,

> which were solved by introducing HDFS-12914 and HDFS-14314.

> But there may be situations like this:

> 1 DN got *fullBlockReportLeaseId* via heartbeat.

> 2 DN triggers a blockReport, but some exception occurs (this may be rare, but

> it can happen), and then the DN retries multiple times {*}without resetting

> leaseID{*}, because leaseID is currently reset only if the report succeeds.

> 3 After a while, the exception is cleared, but the LeaseID has expired.

> *Since NN did not throw an exception after the lease expired, the DN

> considered that the blockReport was successful.* So the blockReport was not

> actually executed this time and needs to wait until the next time.

> Therefore, {*}should we consider resetting the fullBlockReportLeaseId in the

> finally block{*}? The advantage of this is that lease expiration can be

> avoided. The downside is that each heartbeat will apply for a new

> fullBlockReportLeaseId during the exception, but I think this cost is

> negligible.


[jira] [Work logged] (HDFS-16379) Reset fullBlockReportLeaseId after any exceptions

[

https://issues.apache.org/jira/browse/HDFS-16379?focusedWorklogId=694359&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694359

]

ASF GitHub Bot logged work on HDFS-16379:

-

Author: ASF GitHub Bot

Created on: 11/Dec/21 02:50

Start Date: 11/Dec/21 02:50

Worklog Time Spent: 10m

Work Description: tomscut opened a new pull request #3787:

URL: https://github.com/apache/hadoop/pull/3787

JIRA: [HDFS-16379](https://issues.apache.org/jira/browse/HDFS-16379).

Recently we encountered FBR-related problems in the production environment,

which were solved by introducing HDFS-12914 and HDFS-14314.

But there may be situations like this:

1 DN got `fullBlockReportLeaseId` via heartbeat.

2 DN triggers a blockReport, but some exception occurs (this may be rare, but

it can happen), and then the DN retries multiple times without resetting

`fullBlockReportLeaseId`, because `fullBlockReportLeaseId` is currently reset

only if the report succeeds.

3 After a while, the exception is cleared, but the `fullBlockReportLeaseId`

has expired. Since NN did not throw an exception after the lease expired, the

DN considered that the blockReport was successful. So the blockReport was not

actually executed this time and needs to wait until the next time.

Therefore, should we consider resetting the `fullBlockReportLeaseId` in the

finally block? The advantage of this is that lease expiration can be avoided.

The downside is that each heartbeat will apply for a new

`fullBlockReportLeaseId` during the exception, but I think this cost is

negligible.
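
The proposal above can be sketched as follows. This is a minimal illustration, not the actual DataNode code: the class `LeaseResetSketch`, the `Reporter` interface, and the methods `onHeartbeat` and `tryBlockReport` are hypothetical stand-ins, and only the field name `fullBlockReportLeaseId` comes from the description. It assumes a lease ID of 0 means "no lease held".

```java
import java.io.IOException;

/** Hypothetical stand-in for the DataNode's block report logic; not Hadoop code. */
class LeaseResetSketch {
  /** Hypothetical NameNode call that may fail with an exception. */
  interface Reporter {
    void blockReport(long leaseId) throws IOException;
  }

  // Assumption: 0 means that no lease is currently held.
  private long fullBlockReportLeaseId = 0;

  /** A heartbeat response hands out a lease only when none is held. */
  void onHeartbeat(long leaseIdFromNameNode) {
    if (fullBlockReportLeaseId == 0) {
      fullBlockReportLeaseId = leaseIdFromNameNode;
    }
  }

  /** Sends one full block report; returns true only if it succeeded. */
  boolean tryBlockReport(Reporter nameNode) {
    if (fullBlockReportLeaseId == 0) {
      return false; // no lease yet, wait for the next heartbeat
    }
    try {
      nameNode.blockReport(fullBlockReportLeaseId);
      return true;
    } catch (IOException e) {
      return false; // the caller retries later
    } finally {
      // Reset on success and on failure, so that a retry never reuses a
      // lease that may have expired in the meantime.
      fullBlockReportLeaseId = 0;
    }
  }

  long currentLeaseId() {
    return fullBlockReportLeaseId;
  }
}
```

With the reset in the finally block, a failed report leaves the DN without a lease, so the next heartbeat requests a fresh one. That extra request is the cost mentioned above.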

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 694359)

Remaining Estimate: 0h

Time Spent: 10m

> Reset fullBlockReportLeaseId after any exceptions

> -

>

> Key: HDFS-16379

> URL: https://issues.apache.org/jira/browse/HDFS-16379

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: tomscut

>Assignee: tomscut

>Priority: Major

> Labels: pull-request-available

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Recently we encountered FBR-related problems in the production environment,

> which were solved by introducing HDFS-12914 and HDFS-14314.

> But there may be situations like this:

> 1 DN got *fullBlockReportLeaseId* via heartbeat.

> 2 DN triggers a blockReport, but some exception occurs (this may be rare, but

> it can happen), and then the DN retries multiple times {*}without resetting

> leaseID{*}, because leaseID is currently reset only if the report succeeds.

> 3 After a while, the exception is cleared, but the LeaseID has expired.

> *Since NN did not throw an exception after the lease expired, the DN

> considered that the blockReport was successful.* So the blockReport was not

> actually executed this time and needs to wait until the next time.

> Therefore, {*}should we consider resetting the fullBlockReportLeaseId in the

> finally block{*}? The advantage of this is that lease expiration can be

> avoided. The downside is that each heartbeat will apply for a new

> fullBlockReportLeaseId during the exception, but I think this cost is

> negligible.


[jira] [Created] (HDFS-16379) Reset fullBlockReportLeaseId after any exceptions

tomscut created HDFS-16379:

--

Summary: Reset fullBlockReportLeaseId after any exceptions

Key: HDFS-16379

URL: https://issues.apache.org/jira/browse/HDFS-16379

Project: Hadoop HDFS

Issue Type: Bug

Reporter: tomscut

Assignee: tomscut

Recently we encountered FBR-related problems in the production environment,

which were solved by introducing HDFS-12914 and HDFS-14314.

But there may be situations like this:

1 DN got *fullBlockReportLeaseId* via heartbeat.

2 DN triggers a blockReport, but some exception occurs (this may be rare, but it

can happen), and then the DN retries multiple times {*}without resetting

leaseID{*}, because leaseID is currently reset only if the report succeeds.

3 After a while, the exception is cleared, but the LeaseID has expired. *Since

NN did not throw an exception after the lease expired, the DN considered that

the blockReport was successful.* So the blockReport was not actually executed

this time and needs to wait until the next time.

Therefore, {*}should we consider resetting the fullBlockReportLeaseId in the

finally block{*}? The advantage of this is that lease expiration can be

avoided. The downside is that each heartbeat will apply for a new

fullBlockReportLeaseId during the exception, but I think this cost is

negligible.


[jira] [Work logged] (HDFS-16378) Add datanode address to BlockReportLeaseManager logs

[

https://issues.apache.org/jira/browse/HDFS-16378?focusedWorklogId=694354&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694354

]

ASF GitHub Bot logged work on HDFS-16378:

-

Author: ASF GitHub Bot

Created on: 11/Dec/21 02:02

Start Date: 11/Dec/21 02:02

Worklog Time Spent: 10m

Work Description: tomscut opened a new pull request #3786:

URL: https://github.com/apache/hadoop/pull/3786

JIRA: [HDFS-16378](https://issues.apache.org/jira/browse/HDFS-16378).

We should add the datanode address to `BlockReportLeaseManager` logs, because

the `datanodeuuid` is not convenient for tracking.

Issue Time Tracking

---

Worklog Id: (was: 694354)

Remaining Estimate: 0h

Time Spent: 10m

> Add datanode address to BlockReportLeaseManager logs

>

>

> Key: HDFS-16378

> URL: https://issues.apache.org/jira/browse/HDFS-16378

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: tomscut

>Assignee: tomscut

>Priority: Minor

> Labels: pull-request-available

> Attachments: image-2021-12-11-09-58-59-494.png

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> We should add the datanode address to BlockReportLeaseManager logs, because

> the datanodeuuid is not convenient for tracking.

> !image-2021-12-11-09-58-59-494.png|width=643,height=152!

[jira] [Updated] (HDFS-16378) Add datanode address to BlockReportLeaseManager logs

[

https://issues.apache.org/jira/browse/HDFS-16378?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated HDFS-16378:

--

Labels: pull-request-available (was: )

> Add datanode address to BlockReportLeaseManager logs

>

>

> Key: HDFS-16378

> URL: https://issues.apache.org/jira/browse/HDFS-16378

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: tomscut

>Assignee: tomscut

>Priority: Minor

> Labels: pull-request-available

> Attachments: image-2021-12-11-09-58-59-494.png

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> We should add the datanode address to BlockReportLeaseManager logs, because

> the datanodeuuid is not convenient for tracking.

> !image-2021-12-11-09-58-59-494.png|width=643,height=152!

[jira] [Created] (HDFS-16378) Add datanode address to BlockReportLeaseManager logs

tomscut created HDFS-16378:

--

Summary: Add datanode address to BlockReportLeaseManager logs

Key: HDFS-16378

URL: https://issues.apache.org/jira/browse/HDFS-16378

Project: Hadoop HDFS

Issue Type: Improvement

Reporter: tomscut

Assignee: tomscut

Attachments: image-2021-12-11-09-58-59-494.png

We should add the datanode address to BlockReportLeaseManager logs, because the

datanodeuuid is not convenient for tracking.

!image-2021-12-11-09-58-59-494.png|width=643,height=152!

[jira] [Work logged] (HDFS-16352) return the real datanode numBlocks in #getDatanodeStorageReport

[

https://issues.apache.org/jira/browse/HDFS-16352?focusedWorklogId=694349&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694349

]

ASF GitHub Bot logged work on HDFS-16352:

-

Author: ASF GitHub Bot

Created on: 11/Dec/21 01:07

Start Date: 11/Dec/21 01:07

Worklog Time Spent: 10m

Work Description: liubingxing commented on pull request #3714:

URL: https://github.com/apache/hadoop/pull/3714#issuecomment-991399254

@virajjasani Thanks for your review. I have fixed the checkstyle warning and

updated the PR.

Issue Time Tracking

---

Worklog Id: (was: 694349)

Time Spent: 1h 10m (was: 1h)

> return the real datanode numBlocks in #getDatanodeStorageReport

> ---

>

> Key: HDFS-16352

> URL: https://issues.apache.org/jira/browse/HDFS-16352

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: qinyuren

>Assignee: qinyuren

>Priority: Major

> Labels: pull-request-available

> Attachments: image-2021-11-23-22-04-06-131.png

>

> Time Spent: 1h 10m

> Remaining Estimate: 0h

>

> #getDatanodeStorageReport will return the array of DatanodeStorageReport

> which contains the DatanodeInfo in each DatanodeStorageReport, but the

> numBlocks in DatanodeInfo is always zero, which is confusing

> !image-2021-11-23-22-04-06-131.png|width=683,height=338!

> Or we can return the real numBlocks in DatanodeInfo when we call

> #getDatanodeStorageReport

[jira] [Work logged] (HDFS-16377) Should CheckNotNull before access FsDatasetSpi

[

https://issues.apache.org/jira/browse/HDFS-16377?focusedWorklogId=694342&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694342

]

ASF GitHub Bot logged work on HDFS-16377:

-

Author: ASF GitHub Bot

Created on: 11/Dec/21 00:04

Start Date: 11/Dec/21 00:04

Worklog Time Spent: 10m

Work Description: tomscut commented on pull request #3784:

URL: https://github.com/apache/hadoop/pull/3784#issuecomment-991383599

Thanks @virajjasani for your review.


Issue Time Tracking

---

Worklog Id: (was: 694342)

Time Spent: 0.5h (was: 20m)

> Should CheckNotNull before access FsDatasetSpi

> --

>

> Key: HDFS-16377

> URL: https://issues.apache.org/jira/browse/HDFS-16377

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: tomscut

>Assignee: tomscut

>Priority: Major

> Labels: pull-request-available

> Attachments: image-2021-12-10-19-19-22-957.png,

> image-2021-12-10-19-20-58-022.png

>

> Time Spent: 0.5h

> Remaining Estimate: 0h

>

> When starting the DN, we found an NPE in the starting DN's log, as follows:

> !image-2021-12-10-19-19-22-957.png|width=909,height=126!

> The logs of the upstream DN are as follows:

> !image-2021-12-10-19-20-58-022.png|width=905,height=239!

> This is mainly because *FsDatasetSpi* has not been initialized at the time of

> access.

> I noticed that checkNotNull is already done in these two

> methods ({*}DataNode#getBlockLocalPathInfo{*} and

> {*}DataNode#getVolumeInfo{*}). So we should add it to other places (interfaces

> that clients and other DNs can access directly) so that we can add a message

> when throwing exceptions.

> Therefore, the client and the upstream DN know that FsDatasetSpi has not been

> initialized, rather than being unaware of the specific cause of the NPE.
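
A minimal sketch of the idea described above, assuming a null check with a message is placed in front of every externally reachable accessor. The class `DatasetGuardSketch` and the `Dataset` interface are hypothetical stand-ins for DataNode and FsDatasetSpi, the message text is only illustrative, and the JDK's `Objects.requireNonNull` is used here in place of a checkNotNull utility so that the example stays self-contained.

```java
import java.util.Objects;

/** Hypothetical stand-in for a DataNode that exposes its dataset to callers. */
class DatasetGuardSketch {
  /** Hypothetical stand-in for FsDatasetSpi. */
  interface Dataset {
    String getVolumeInfo();
  }

  // Stays null until initialization has finished.
  private volatile Dataset data;

  void initialize(Dataset dataset) {
    this.data = dataset;
  }

  /** Fails with a descriptive message instead of a bare NPE. */
  String getVolumeInfo() {
    Dataset d = Objects.requireNonNull(data, "Storage not yet initialized");
    return d.getVolumeInfo();
  }
}
```

A caller that arrives before initialization still receives a NullPointerException, but its message now names the cause.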


[jira] [Work logged] (HDFS-16348) Mark slownode as badnode to recover pipeline

[

https://issues.apache.org/jira/browse/HDFS-16348?focusedWorklogId=694124&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694124

]

ASF GitHub Bot logged work on HDFS-16348:

-

Author: ASF GitHub Bot

Created on: 10/Dec/21 17:32

Start Date: 10/Dec/21 17:32

Worklog Time Spent: 10m

Work Description: tasanuma commented on a change in pull request #3704:

URL: https://github.com/apache/hadoop/pull/3704#discussion_r766860075

##

File path:

hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/DataStreamer.java

##

@@ -1188,6 +1198,43 @@ public void run() {

}

}

+ if (slowNodesFromAck.isEmpty()) {

+if (!slowNodeMap.isEmpty()) {

+ slowNodeMap.clear();

+}

+ } else {

+Map<DatanodeInfo, Integer> newSlowNodeMap = new HashMap<>();

+for (DatanodeInfo slowNode : slowNodesFromAck) {

+ if (!slowNodeMap.containsKey(slowNode)) {

+newSlowNodeMap.put(slowNode, 1);

+ } else {

+int oldCount = slowNodeMap.get(slowNode);

+newSlowNodeMap.put(slowNode, ++oldCount);

+ }

+}

+slowNodeMap = newSlowNodeMap;

Review comment:

Sorry, I didn't realize that `slowNodeMap` has discontinuous nodes, so

my suggestion is wrong.

> Could you help to check the implementation in

https://github.com/symious/hadoop/commit/973af5e7a959f86f8b8807472a063c60193a2cdc

?

It seems more readable than the previous one. Please use it. Thanks.


Issue Time Tracking

---

Worklog Id: (was: 694124)

Time Spent: 1h 50m (was: 1h 40m)

> Mark slownode as badnode to recover pipeline

>

>

> Key: HDFS-16348

> URL: https://issues.apache.org/jira/browse/HDFS-16348

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Janus Chow

>Assignee: Janus Chow

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h 50m

> Remaining Estimate: 0h

>

> In HDFS-16320, the DataNode can retrieve the SLOW status from each NameNode.

> This ticket is to send this information back to clients that are writing

> blocks. If a client notices that the pipeline is built on a slownode, it can

> choose to mark the slownode as a badnode to exclude the node or rebuild the

> pipeline.

> In order to avoid false positives, we added a "threshold" config: only when a

> client continuously receives slownode replies from the same node will the node

> be marked as SLOW.
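
The threshold rule described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the code from the pull request: the class `SlowNodeTrackerSketch`, the method `onAck`, and the use of plain strings as node identifiers are all hypothetical. A node's count grows only while it appears in consecutive acks, and any ack that omits the node resets its count.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical client-side tracker of consecutive slownode replies. */
class SlowNodeTrackerSketch {
  private final int threshold;
  private Map<String, Integer> slowNodeCounts = new HashMap<>();

  SlowNodeTrackerSketch(int threshold) {
    this.threshold = threshold;
  }

  /**
   * Processes the slow nodes reported in one pipeline ack and returns the
   * nodes whose consecutive count has reached the threshold.
   */
  List<String> onAck(Collection<String> slowNodesFromAck) {
    Map<String, Integer> next = new HashMap<>();
    List<String> bad = new ArrayList<>();
    for (String node : slowNodesFromAck) {
      int count = slowNodeCounts.getOrDefault(node, 0) + 1;
      next.put(node, count);
      if (count >= threshold) {
        bad.add(node);
      }
    }
    // Nodes missing from this ack are dropped, which resets their count.
    slowNodeCounts = next;
    return bad;
  }
}
```

Building a new map for every ack keeps the reset implicit: a node that is not reported again simply does not carry over.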


[jira] [Work logged] (HDFS-16348) Mark slownode as badnode to recover pipeline

[

https://issues.apache.org/jira/browse/HDFS-16348?focusedWorklogId=694122&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694122

]

ASF GitHub Bot logged work on HDFS-16348:

-

Author: ASF GitHub Bot

Created on: 10/Dec/21 17:29

Start Date: 10/Dec/21 17:29

Worklog Time Spent: 10m

Work Description: tasanuma commented on pull request #3704:

URL: https://github.com/apache/hadoop/pull/3704#issuecomment-991159188

@symious Thanks for your explanation.

So my question is: where do you think it is better to implement the

configuration, on the client side (`dfs.client.mark.slownode.as.badnode`) or on

the datanode side (`dfs.pipeline.reply_slownode_in_pipeline_ack.enable`)?

If this feature is similar to ECN, it would be better for this ticket to

implement `dfs.pipeline.reply_slownode_in_pipeline_ack.enable`. Or do you think

`dfs.client.mark.slownode.as.badnode` is better?

Issue Time Tracking

---

Worklog Id: (was: 694122)

Time Spent: 1h 40m (was: 1.5h)

> Mark slownode as badnode to recover pipeline

>

>

> Key: HDFS-16348

> URL: https://issues.apache.org/jira/browse/HDFS-16348

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Janus Chow

>Assignee: Janus Chow

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h 40m

> Remaining Estimate: 0h

>

> In HDFS-16320, the DataNode can retrieve the SLOW status from each NameNode.

> This ticket is to send this information back to clients that are writing

> blocks. If a client notices that the pipeline is built on a slownode, it can

> choose to mark the slownode as a badnode to exclude the node or rebuild the

> pipeline.

> In order to avoid false positives, we added a "threshold" config: only when a

> client continuously receives slownode replies from the same node will the node

> be marked as SLOW.

[jira] [Work logged] (HDFS-16377) Should CheckNotNull before access FsDatasetSpi

[

https://issues.apache.org/jira/browse/HDFS-16377?focusedWorklogId=694106&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694106

]

ASF GitHub Bot logged work on HDFS-16377:

-

Author: ASF GitHub Bot

Created on: 10/Dec/21 16:58

Start Date: 10/Dec/21 16:58

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3784:

URL: https://github.com/apache/hadoop/pull/3784#issuecomment-991136164

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 55s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 32m 16s | | trunk passed |
| +1 :green_heart: | compile | 1m 24s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 19s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 1s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 30s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 2s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 33s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 13s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 31s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 14s | | the patch passed |
| +1 :green_heart: | compile | 1m 18s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 18s | | the patch passed |
| +1 :green_heart: | compile | 1m 12s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 12s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 51s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 17s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 50s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 20s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 11s | | the patch passed |
| +1 :green_heart: | shadedclient | 21m 47s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 223m 56s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 47s | | The patch does not generate ASF License warnings. |
| | | 322m 34s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3784/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3784 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 12fcebf030bd 4.15.0-156-generic #163-Ubuntu SMP Thu Aug 19 23:31:58 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 8d3842d95bf909f5f514675ad28ff8081a44e515 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3784/1/testReport/ |
| Max. process+thread count | 2987 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3784/1/console |
| versions | git=

[jira] [Commented] (HDFS-16317) Backport HDFS-14729 for branch-3.2

[

https://issues.apache.org/jira/browse/HDFS-16317?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17457206#comment-17457206

]

Ananya Singh commented on HDFS-16317:

-

[~brahmareddy] raised PR : [GitHub Pull Request

#3780|https://github.com/apache/hadoop/pull/3780] for branch-3.2.3 also, please

review.

> Backport HDFS-14729 for branch-3.2

> --

>

> Key: HDFS-16317

> URL: https://issues.apache.org/jira/browse/HDFS-16317

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: security

>Affects Versions: 3.2.2

>Reporter: Ananya Singh

>Assignee: Ananya Singh

>Priority: Major

> Labels: pull-request-available

> Time Spent: 2h

> Remaining Estimate: 0h

>

> Our security tool raised the following security flaw on Hadoop 3.2.2:

> +[CVE-2015-9251 :

> |http://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2015-9251]

> [https://nvd.nist.gov/vuln/detail/|https://nvd.nist.gov/vuln/detail/CVE-2021-29425]

>

> [CVE-2015-9251|http://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2015-9251]+

> +[CVE-2019-11358|http://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2019-11358]

> :

> [https://nvd.nist.gov/vuln/detail/|https://nvd.nist.gov/vuln/detail/CVE-2021-29425]

>

> [CVE-2019-11358|http://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2019-11358]+

> +[CVE-2020-11022

> |http://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2020-11022] :

> [https://nvd.nist.gov/vuln/detail/|https://nvd.nist.gov/vuln/detail/CVE-2021-29425]

>

> [CVE-2020-11022|http://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2020-11022]+

>

> +[CVE-2020-11023

> |http://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2020-11023] [

> |http://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2020-11022] :

> [https://nvd.nist.gov/vuln/detail/|https://nvd.nist.gov/vuln/detail/CVE-2021-29425]

>

> [CVE-2020-11023|http://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-2020-11023]+

[jira] [Work logged] (HDFS-16352) return the real datanode numBlocks in #getDatanodeStorageReport

[

https://issues.apache.org/jira/browse/HDFS-16352?focusedWorklogId=694008&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-694008

]

ASF GitHub Bot logged work on HDFS-16352:

-

Author: ASF GitHub Bot

Created on: 10/Dec/21 14:43

Start Date: 10/Dec/21 14:43

Worklog Time Spent: 10m

Work Description: virajjasani commented on pull request #3714:

URL: https://github.com/apache/hadoop/pull/3714#issuecomment-991030458

Sorry somehow I missed QA result from Jenkins. @liubingxing could you take

care of checkstyle warning?

Issue Time Tracking

---

Worklog Id: (was: 694008)

Time Spent: 1h (was: 50m)

> return the real datanode numBlocks in #getDatanodeStorageReport

> ---

>

> Key: HDFS-16352

> URL: https://issues.apache.org/jira/browse/HDFS-16352

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: qinyuren

>Assignee: qinyuren

>Priority: Major

> Labels: pull-request-available

> Attachments: image-2021-11-23-22-04-06-131.png

>

> Time Spent: 1h

> Remaining Estimate: 0h

>

> #getDatanodeStorageReport will return the array of DatanodeStorageReport

> which contains the DatanodeInfo in each DatanodeStorageReport, but the

> numBlocks in DatanodeInfo is always zero, which is confusing

> !image-2021-11-23-22-04-06-131.png|width=683,height=338!

> Or we can return the real numBlocks in DatanodeInfo when we call

> #getDatanodeStorageReport

[jira] [Work logged] (HDFS-16317) Backport HDFS-14729 for branch-3.2

[

https://issues.apache.org/jira/browse/HDFS-16317?focusedWorklogId=693940&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-693940

]

ASF GitHub Bot logged work on HDFS-16317:

-

Author: ASF GitHub Bot

Created on: 10/Dec/21 12:59

Start Date: 10/Dec/21 12:59

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3780:

URL: https://github.com/apache/hadoop/pull/3780#issuecomment-990953213

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|::|--:|:|::|:---:|

| +0 :ok: | reexec | 0m 59s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |

| +0 :ok: | codespell | 0m 1s | | codespell was not available. |

| +0 :ok: | jshint | 0m 0s | | jshint was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |

| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |

_ branch-3.2.3 Compile Tests _ |

| +0 :ok: | mvndep | 3m 21s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 25m 49s | | branch-3.2.3 passed |

| +1 :green_heart: | compile | 15m 42s | | branch-3.2.3 passed |

| +1 :green_heart: | mvnsite | 17m 41s | | branch-3.2.3 passed |

| +1 :green_heart: | javadoc | 6m 19s | | branch-3.2.3 passed |

| +1 :green_heart: | shadedclient | 84m 32s | | branch has no errors when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +0 :ok: | mvndep | 0m 23s | | Maven dependency ordering for patch |

| +1 :green_heart: | mvninstall | 24m 33s | | the patch passed |

| +1 :green_heart: | compile | 16m 51s | | the patch passed |

| +1 :green_heart: | javac | 16m 51s | | the patch passed |

| -1 :x: | blanks | 0m 0s | [/blanks-tabs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3780/1/artifact/out/blanks-tabs.txt) | The patch 2 line(s) with tabs. |

| +1 :green_heart: | mvnsite | 13m 42s | | the patch passed |

| +1 :green_heart: | xml | 0m 2s | | The patch has no ill-formed XML file. |

| +1 :green_heart: | javadoc | 6m 23s | | the patch passed |

| +1 :green_heart: | shadedclient | 23m 6s | | patch has no errors when building and testing our client artifacts. |

_ Other Tests _ |

| -1 :x: | unit | 262m 51s | [/patch-unit-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3780/1/artifact/out/patch-unit-root.txt) | root in the patch failed. |

| +1 :green_heart: | asflicense | 0m 56s | | The patch does not generate ASF License warnings. |

| | | 430m 13s | | |

| Reason | Tests |

|---:|:--|

| Failed junit tests | hadoop.hdfs.server.namenode.TestFsck |

| | hadoop.hdfs.TestReconstructStripedFileWithValidator |

| Subsystem | Report/Notes |

|--:|:-|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3780/1/artifact/out/Dockerfile |

| GITHUB PR | https://github.com/apache/hadoop/pull/3780 |

| Optional Tests | dupname asflicense codespell shadedclient compile javac javadoc mvninstall mvnsite unit xml jshint |

| uname | Linux 6a2f2c7d86cf 4.15.0-153-generic #160-Ubuntu SMP Thu Jul 29 06:54:29 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |

| Build tool | maven |

| Personality | dev-support/bin/hadoop.sh |

| git revision | branch-3.2.3 / 5caeb21be49535b30d386c3893f1a01f57f99518 |

| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~18.04-b10 |

| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3780/1/testReport/ |

| Max. process+thread count | 2396 (vs. ulimit of 5500) |

| modules | C: hadoop-hdfs-project/hadoop-hdfs hadoop-hdfs-project/hadoop-hdfs-rbf . U: . |

| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3780/1/console |

| versions | git=2.17.1 maven=3.6.0 |

| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

-

[jira] [Updated] (HDFS-16377) Should CheckNotNull before access FsDatasetSpi

[

https://issues.apache.org/jira/browse/HDFS-16377?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated HDFS-16377:

--

Labels: pull-request-available (was: )

> Should CheckNotNull before access FsDatasetSpi

> --

>

> Key: HDFS-16377

> URL: https://issues.apache.org/jira/browse/HDFS-16377

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: tomscut

>Assignee: tomscut

>Priority: Major

> Labels: pull-request-available

> Attachments: image-2021-12-10-19-19-22-957.png,

> image-2021-12-10-19-20-58-022.png

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> When starting the DN, we found an NPE in the starting DN's log, as follows:

> !image-2021-12-10-19-19-22-957.png|width=909,height=126!

> The logs of the upstream DN are as follows:

> !image-2021-12-10-19-20-58-022.png|width=905,height=239!

> This is mainly because *FsDatasetSpi* has not been initialized at the time of

> access.

> I noticed that checkNotNull is already done in these two

> methods ({*}DataNode#getBlockLocalPathInfo{*} and

> {*}DataNode#getVolumeInfo{*}). So we should add it to other places (interfaces

> that clients and other DNs can access directly) so that we can add a message

> when throwing exceptions.

> Therefore, the client and the upstream DN know that FsDatasetSpi has not been

> initialized, rather than being left unaware of the specific cause of the NPE.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16377) Should CheckNotNull before access FsDatasetSpi

[

https://issues.apache.org/jira/browse/HDFS-16377?focusedWorklogId=693859&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-693859

]

ASF GitHub Bot logged work on HDFS-16377:

-

Author: ASF GitHub Bot

Created on: 10/Dec/21 11:34

Start Date: 10/Dec/21 11:34

Worklog Time Spent: 10m

Work Description: tomscut opened a new pull request #3784:

URL: https://github.com/apache/hadoop/pull/3784

JIRA: [HDFS-16377](https://issues.apache.org/jira/browse/HDFS-16377).

When starting the DN, we found an NPE in the **starting DN's log**, as follows:

The logs of the **upstream DN** are as follows:

This is mainly because `FsDatasetSpi` has not been initialized at the time

of access.

I noticed that checkNotNull is already done in these two

methods (`DataNode#getBlockLocalPathInfo` and `DataNode#getVolumeInfo`). So we

should add it to other places (interfaces that clients and other DNs can access

directly) so that we can add a message (`Storage not yet initialized`) when

throwing exceptions.

Therefore, the client and the upstream DN know that `FsDatasetSpi` has not

been initialized, rather than being left unaware of the specific cause of the NPE.
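
For illustration only, the guard described above might look roughly like the following sketch. It is not the code from the pull request: `StorageGuardSketch`, `Dataset`, `initStorage` and `getBlockCount` are hypothetical stand-ins for `DataNode` and `FsDatasetSpi`, and the JDK's `Objects.requireNonNull` is used here in place of the `checkNotNull` call mentioned in the description so that the example has no dependencies.

```java
import java.util.Objects;

/** Hypothetical stand-in for a DataNode; not the real Hadoop class. */
public class StorageGuardSketch {

    /** Hypothetical stand-in for FsDatasetSpi. */
    public interface Dataset {
        long getBlockCount();
    }

    // Stays null until storage initialization has completed.
    private volatile Dataset data;

    /** Called once storage is ready (assumed lifecycle hook). */
    public void initStorage(Dataset dataset) {
        this.data = dataset;
    }

    /** A remotely reachable method that checks the dataset before using it. */
    public long getBlockCount() {
        // Still throws a NullPointerException, but one that carries a message
        // naming the cause instead of a bare NPE.
        Dataset d = Objects.requireNonNull(data, "Storage not yet initialized");
        return d.getBlockCount();
    }

    public static void main(String[] args) {
        StorageGuardSketch dn = new StorageGuardSketch();
        try {
            dn.getBlockCount();
        } catch (NullPointerException e) {
            // The caller sees the descriptive message.
            System.out.println(e.getMessage());
        }
        dn.initStorage(() -> 42L);
        System.out.println(dn.getBlockCount());
    }
}
```

With a guard like this, a caller that arrives before initialization receives an exception whose message states that storage is not ready, which is the behaviour the description asks for.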

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 693859)

Remaining Estimate: 0h

Time Spent: 10m

> Should CheckNotNull before access FsDatasetSpi

> --

>

> Key: HDFS-16377

> URL: https://issues.apache.org/jira/browse/HDFS-16377

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: tomscut

>Assignee: tomscut

>Priority: Major

> Labels: pull-request-available

> Attachments: image-2021-12-10-19-19-22-957.png,

> image-2021-12-10-19-20-58-022.png

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> When starting the DN, we found an NPE in the starting DN's log, as follows:

> !image-2021-12-10-19-19-22-957.png|width=909,height=126!

> The logs of the upstream DN are as follows:

> !image-2021-12-10-19-20-58-022.png|width=905,height=239!

> This is mainly because *FsDatasetSpi* has not been initialized at the time of

> access.