[jira] [Commented] (DRILL-3640) Drill JDBC driver support Statement.setQueryTimeout(int)

[

https://issues.apache.org/jira/browse/DRILL-3640?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16247062#comment-16247062

]

ASF GitHub Bot commented on DRILL-3640:

---

Github user kkhatua commented on a diff in the pull request:

https://github.com/apache/drill/pull/1024#discussion_r150160362

--- Diff:

exec/jdbc/src/main/java/org/apache/drill/jdbc/impl/DrillResultSetImpl.java ---

@@ -96,6 +105,14 @@ private void throwIfClosed() throws

AlreadyClosedSqlException,

throw new AlreadyClosedSqlException( "ResultSet is already

closed." );

}

}

+

+//Implicit check for whether timeout is set

+if (elapsedTimer != null) {

--- End diff --

Ok, so I think I see how you've been trying to help me test the server side

timeout.

You are hoping to have a unit test force the awaiteFirstMessage() throw the

exception by preventing the server from sending back any batch of data, since

the sample test data doesn't allow for any query to run sufficiently long. All

the current tests I've added essentially have already delivered the data from

the 'Drill Server' to the 'DrillClient', but the application downstream has not

consumed it.

Your suggestion of putting a `pause` before the `execute()` call got me

thinking that the timer had already begun after Statement initialization. My

understanding now is that you're simply asking to block any SCREEN operator

from sending back any batches. So, the DrillCursor should time out waiting for

the first batch. In fact, I'm thinking that I don't even need a pause. The

DrillCursor awaits all the time for something from the SCREEN operator that

never comes and eventually times out.

However, since the control injection is essentially applying to the

Connection (`alter session ...`, any other unit tests in parallel execution on

the same connection, would be affected by this. So, I would need to also undo

this at the end of the test, if the connection is reused. Or fork off a

connection exclusively for this.

Was that what you've been suggesting all along?

> Drill JDBC driver support Statement.setQueryTimeout(int)

>

>

> Key: DRILL-3640

> URL: https://issues.apache.org/jira/browse/DRILL-3640

> Project: Apache Drill

> Issue Type: New Feature

> Components: Client - JDBC

>Affects Versions: 1.2.0

>Reporter: Chun Chang

>Assignee: Kunal Khatua

> Fix For: 1.12.0

>

>

> It would be nice if we have this implemented. Run away queries can be

> automatically canceled by setting the timeout.

> java.sql.SQLFeatureNotSupportedException: Setting network timeout is not

> supported.

> at

> org.apache.drill.jdbc.impl.DrillStatementImpl.setQueryTimeout(DrillStatementImpl.java:152)

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-3640) Drill JDBC driver support Statement.setQueryTimeout(int)

[

https://issues.apache.org/jira/browse/DRILL-3640?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16247043#comment-16247043

]

ASF GitHub Bot commented on DRILL-3640:

---

Github user kkhatua commented on a diff in the pull request:

https://github.com/apache/drill/pull/1024#discussion_r150157923

--- Diff:

exec/jdbc/src/main/java/org/apache/drill/jdbc/impl/DrillResultSetImpl.java ---

@@ -96,6 +105,14 @@ private void throwIfClosed() throws

AlreadyClosedSqlException,

throw new AlreadyClosedSqlException( "ResultSet is already

closed." );

}

}

+

+//Implicit check for whether timeout is set

+if (elapsedTimer != null) {

--- End diff --

I don't think you are wrong, but I think the interpretation of the timeout

is ambiguous. My understanding based on what drivers like Oracle do is to start

the timeout only when the execute call is made. So, for a regular Statement

object, just initialization (or even setting the timeout) should not be the

basis of starting the timer.

With regards to whether we are testing for the time when only the

DrillCursor is in operation, we'd need a query that is running sufficiently

long to timeout before the server can send back anything for the very first

time. The `awaitFirstMessage()` already has the timeout applied there and

worked in some of my longer running sample queries. If you're hinting towards

this, then yes.. it is certainly doesn't hurt to have the test, although the

timeout does guarantee exactly that.

I'm not familiar with the Drillbit Injection feature, so let me tinker a

bit to confirm it before I update the PR.

> Drill JDBC driver support Statement.setQueryTimeout(int)

>

>

> Key: DRILL-3640

> URL: https://issues.apache.org/jira/browse/DRILL-3640

> Project: Apache Drill

> Issue Type: New Feature

> Components: Client - JDBC

>Affects Versions: 1.2.0

>Reporter: Chun Chang

>Assignee: Kunal Khatua

> Fix For: 1.12.0

>

>

> It would be nice if we have this implemented. Run away queries can be

> automatically canceled by setting the timeout.

> java.sql.SQLFeatureNotSupportedException: Setting network timeout is not

> supported.

> at

> org.apache.drill.jdbc.impl.DrillStatementImpl.setQueryTimeout(DrillStatementImpl.java:152)

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-5691) multiple count distinct query planning error at physical phase

[ https://issues.apache.org/jira/browse/DRILL-5691?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16247040#comment-16247040 ] ASF GitHub Bot commented on DRILL-5691: --- Github user weijietong commented on the issue: https://github.com/apache/drill/pull/889 @amansinha100 thanks for sharing the information. Got your point. I think your propose on [CALCITE-1048](https://issues.apache.org/jira/browse/CALCITE-1048) is possible. Since [CALCITE-794](https://issues.apache.org/jira/browse/CALCITE-794) has completed at version 1.6 ,it seems there's a more perfect solution( to get the least max number of all the rels of the RelSubSet). But due to Drill's Caclite version is still based on 1.4 , I support your current temp solution. Only wonder that whether the explicitly searched RelNode's (such as DrillAggregateRel) maxRowCount can represent the best RelNode's maxRowCount ? > multiple count distinct query planning error at physical phase > --- > > Key: DRILL-5691 > URL: https://issues.apache.org/jira/browse/DRILL-5691 > Project: Apache Drill > Issue Type: Bug > Components: Execution - Relational Operators >Affects Versions: 1.9.0, 1.10.0 >Reporter: weijie.tong > > I materialized the count distinct query result in a cache , added a plugin > rule to translate the (Aggregate、Aggregate、Project、Scan) or > (Aggregate、Aggregate、Scan) to (Project、Scan) at the PARTITION_PRUNING phase. > Then ,once user issue count distinct queries , it will be translated to query > the cache to get the result. > eg1: " select count(*),sum(a) ,count(distinct b) from t where dt=xx " > eg2:"select count(*),sum(a) ,count(distinct b) ,count(distinct c) from t > where dt=xxx " > eg3:"select count(distinct b), count(distinct c) from t where dt=xxx" > eg1 will be right and have a query result as I expected , but eg2 will be > wrong at the physical phase.The error info is here: > https://gist.github.com/weijietong/1b8ed12db9490bf006e8b3fe0ee52269. > eg3 will also get the similar error. -- This message was sent by Atlassian JIRA (v6.4.14#64029)

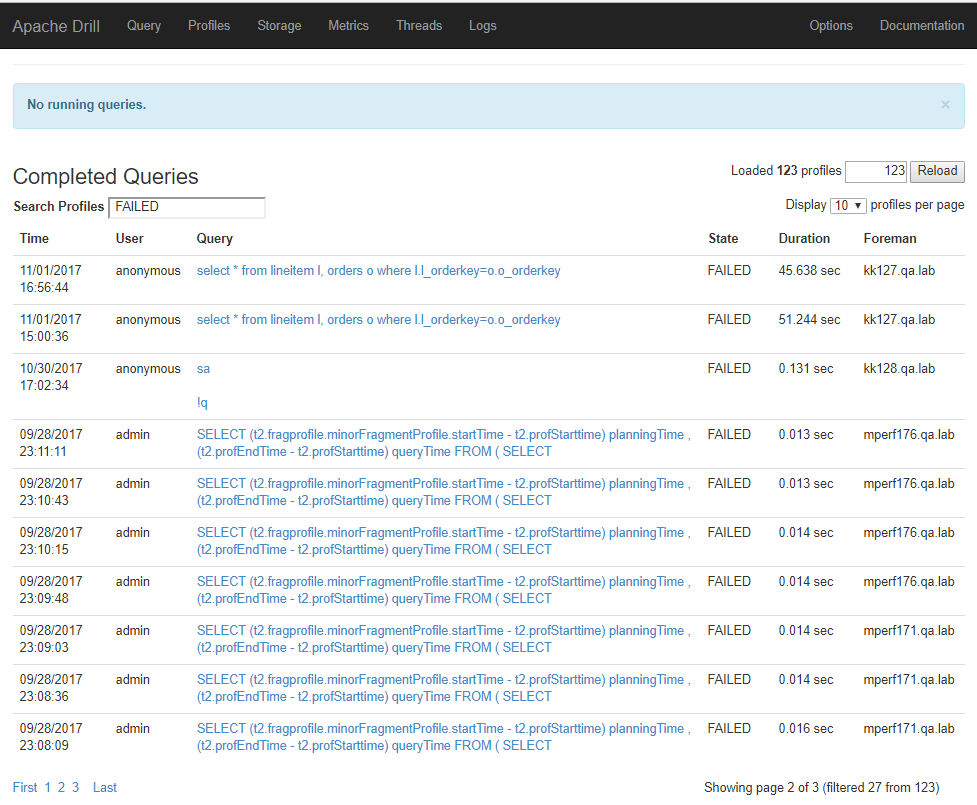

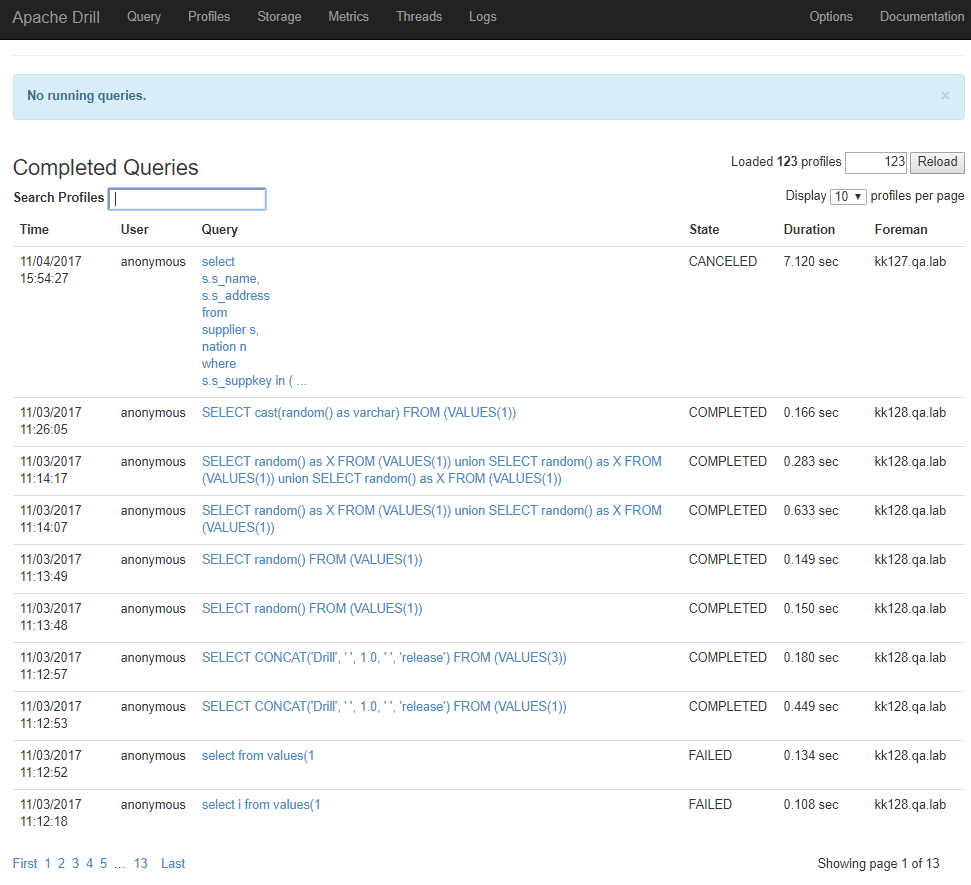

[jira] [Commented] (DRILL-5923) State of a successfully completed query shown as "COMPLETED"

[ https://issues.apache.org/jira/browse/DRILL-5923?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246967#comment-16246967 ] ASF GitHub Bot commented on DRILL-5923: --- Github user prasadns14 commented on the issue: https://github.com/apache/drill/pull/1021 @arina-ielchiieva, @paul-rogers Reverted to the array approach, also added documentation. > State of a successfully completed query shown as "COMPLETED" > > > Key: DRILL-5923 > URL: https://issues.apache.org/jira/browse/DRILL-5923 > Project: Apache Drill > Issue Type: Bug > Components: Client - HTTP >Affects Versions: 1.11.0 >Reporter: Prasad Nagaraj Subramanya >Assignee: Prasad Nagaraj Subramanya > Fix For: 1.12.0 > > > Drill UI currently lists a successfully completed query as "COMPLETED". > Successfully completed, failed and canceled queries are all grouped as > Completed queries. > It would be better to list the state of a successfully completed query as > "Succeeded" to avoid confusion. -- This message was sent by Atlassian JIRA (v6.4.14#64029)

[jira] [Updated] (DRILL-5917) Ban org.json:json library in Drill

[

https://issues.apache.org/jira/browse/DRILL-5917?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Vlad Rozov updated DRILL-5917:

--

Reviewer: Arina Ielchiieva

> Ban org.json:json library in Drill

> --

>

> Key: DRILL-5917

> URL: https://issues.apache.org/jira/browse/DRILL-5917

> Project: Apache Drill

> Issue Type: Task

>Affects Versions: 1.11.0

>Reporter: Arina Ielchiieva

>Assignee: Vlad Rozov

> Fix For: 1.12.0

>

>

> Apache Drill has dependencies on json.org lib indirectly from two libraries:

> com.mapr.hadoop:maprfs:jar:5.2.1-mapr

> com.mapr.fs:mapr-hbase:jar:5.2.1-mapr

> {noformat}

> [INFO] org.apache.drill.contrib:drill-format-mapr:jar:1.12.0-SNAPSHOT

> [INFO] +- com.mapr.hadoop:maprfs:jar:5.2.1-mapr:compile

> [INFO] | \- org.json:json:jar:20080701:compile

> [INFO] \- com.mapr.fs:mapr-hbase:jar:5.2.1-mapr:compile

> [INFO]\- (org.json:json:jar:20080701:compile - omitted for duplicate)

> {noformat}

> Need to make sure we won't have any dependencies from these libs to json.org

> lib and ban this lib in main pom.xml file.

> Issue is critical since Apache release won't happen until we make sure

> json.org lib is not used (https://www.apache.org/legal/resolved.html).

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-5926) TestValueVector tests fail sporadically

[ https://issues.apache.org/jira/browse/DRILL-5926?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246936#comment-16246936 ] Vlad Rozov commented on DRILL-5926: --- It may be OK to increase MaxDirectMemorySize from 3GB to 4GB as a short-term workaround to avoid unit test failure for unrelated PRs. In a long-term, it is necessary to investigate if memory can be reclaimed from the Pooled Allocator and whether tests indeed require more than 3 GB of memory. [~timothyfarkas] Can you create a separate PR for the workaround. > TestValueVector tests fail sporadically > --- > > Key: DRILL-5926 > URL: https://issues.apache.org/jira/browse/DRILL-5926 > Project: Apache Drill > Issue Type: Bug >Reporter: Timothy Farkas >Assignee: Timothy Farkas >Priority: Trivial > > As reported by [~Paul.Rogers]. The following tests fail sporadically with out > of memory exception: > * TestValueVector.testFixedVectorReallocation > * TestValueVector.testVariableVectorReallocation -- This message was sent by Atlassian JIRA (v6.4.14#64029)

[jira] [Updated] (DRILL-5943) Avoid the strong check introduced by DRILL-5582 for PLAIN mechanism

[ https://issues.apache.org/jira/browse/DRILL-5943?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Sorabh Hamirwasia updated DRILL-5943: - Labels: ready-to-commit (was: ) Reviewer: Laurent Goujon (was: Parth Chandra) > Avoid the strong check introduced by DRILL-5582 for PLAIN mechanism > --- > > Key: DRILL-5943 > URL: https://issues.apache.org/jira/browse/DRILL-5943 > Project: Apache Drill > Issue Type: Improvement >Affects Versions: 1.12.0 >Reporter: Sorabh Hamirwasia >Assignee: Sorabh Hamirwasia > Labels: ready-to-commit > Fix For: 1.12.0 > > > For PLAIN mechanism we will weaken the strong check introduced with > DRILL-5582 to keep the forward compatibility between Drill 1.12 client and > Drill 1.9 server. This is fine since with and without this strong check PLAIN > mechanism is still vulnerable to MITM during handshake itself unlike mutual > authentication protocols like Kerberos. > Also for keeping forward compatibility with respect to SASL we will treat > UNKNOWN_SASL_SUPPORT as valid value. For handshake message received from a > client which is running on later version (let say 1.13) then Drillbit (1.12) > and having a new value for SaslSupport field which is unknown to server, this > field will be decoded as UNKNOWN_SASL_SUPPORT. In this scenario client will > be treated as one aware about SASL protocol but server doesn't know exact > capabilities of client. Hence the SASL handshake will still be required from > server side. -- This message was sent by Atlassian JIRA (v6.4.14#64029)

[jira] [Commented] (DRILL-5771) Fix serDe errors for format plugins

[ https://issues.apache.org/jira/browse/DRILL-5771?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246879#comment-16246879 ] ASF GitHub Bot commented on DRILL-5771: --- Github user paul-rogers commented on the issue: https://github.com/apache/drill/pull/1014 Not sure the description here is entirely correct. Let's separate two concepts: the plugin (code) and the plugin definition (the stuff in JSON.) Plugin definitions are stored in ZK and retrieved by the Foreman. There may be some form of race condition in the Foreman, but that's not my focus here. The plugin *definition* is read by the Forman and serialized into the physical plan. Each worker reads the definition from the physical plan. For this reason, the worker's definition can never be out of date: it is the definition used when serializing the plan. Further, Drill allows table functions which provide query-time name/value pair settings for format plugin properties. The only way these can work is to be serialized along with the query. So, the actual serialized format plugin definition, included with the query, includes both the ZK information and the table function information. > Fix serDe errors for format plugins > --- > > Key: DRILL-5771 > URL: https://issues.apache.org/jira/browse/DRILL-5771 > Project: Apache Drill > Issue Type: Bug >Affects Versions: 1.11.0 >Reporter: Arina Ielchiieva >Assignee: Arina Ielchiieva >Priority: Minor > Fix For: 1.12.0 > > > Create unit tests to check that all storage format plugins can be > successfully serialized / deserialized. > Usually this happens when query has several major fragments. > One way to check serde is to generate physical plan (generated as json) and > then submit it back to Drill. > One example of found errors is described in the first comment. Another > example is described in DRILL-5166. > *Serde issues:* > 1. Could not obtain format plugin during deserialization > Format plugin is created based on format plugin configuration or its name. > On Drill start up we load information about available plugins (its reloaded > each time storage plugin is updated, can be done only by admin). > When query is parsed, we try to get plugin from the available ones, it we can > not find one we try to [create > one|https://github.com/apache/drill/blob/3e8b01d5b0d3013e3811913f0fd6028b22c1ac3f/exec/java-exec/src/main/java/org/apache/drill/exec/store/dfs/FileSystemPlugin.java#L136-L144] > but on other query execution stages we always assume that [plugin exists > based on > configuration|https://github.com/apache/drill/blob/3e8b01d5b0d3013e3811913f0fd6028b22c1ac3f/exec/java-exec/src/main/java/org/apache/drill/exec/store/dfs/FileSystemPlugin.java#L156-L162]. > For example, during query parsing we had to create format plugin on one node > based on format configuration. > Then we have sent major fragment to the different node where we used this > format configuration we could not get format plugin based on it and > deserialization has failed. > To fix this problem we need to create format plugin during query > deserialization if it's absent. > > 2. Absent hash code and equals. > Format plugins are stored in hash map where key is format plugin config. > Since some format plugin configs did not have overridden hash code and > equals, we could not find format plugin based on its configuration. > 3. Named format plugin usage > Named format plugins configs allow to get format plugin by its name for > configuration shared among all drillbits. > They are used as alias for pre-configured format plugiins. User with admin > priliges can modify them at runtime. > Named format plugins configs are used instead of sending all non-default > parameters of format plugin config, in this case only name is sent. > Their usage in distributed system may cause raise conditions. > For example, > 1. Query is submitted. > 2. Parquet format plugin is created with the following configuration > (autoCorrectCorruptDates=>true). > 3. Seralized named format plugin config with name as parquet. > 4. Major fragment is sent to the different node. > 5. Admin has changed parquet configuration for the alias 'parquet' on all > nodes to autoCorrectCorruptDates=>false. > 6. Named format is deserialized on the different node into parquet format > plugin with configuration (autoCorrectCorruptDates=>false). -- This message was sent by Atlassian JIRA (v6.4.14#64029)

[jira] [Commented] (DRILL-5936) Refactor MergingRecordBatch based on code review

[

https://issues.apache.org/jira/browse/DRILL-5936?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246876#comment-16246876

]

Vlad Rozov commented on DRILL-5936:

---

[~amansinha100] It is based on my code walkthrough completed as part of

exchange operator analysis. The PR is mostly self-explanatory and the goal is

to address two deficiencies mentioned in the JIRA description.

> Refactor MergingRecordBatch based on code review

>

>

> Key: DRILL-5936

> URL: https://issues.apache.org/jira/browse/DRILL-5936

> Project: Apache Drill

> Issue Type: Improvement

> Components: Tools, Build & Test

>Reporter: Vlad Rozov

>Assignee: Vlad Rozov

>Priority: Minor

>

> * Reorganize code to remove unnecessary {{pqueue.peek()}}

> * Reuse Node

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-5936) Refactor MergingRecordBatch based on code review

[

https://issues.apache.org/jira/browse/DRILL-5936?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246872#comment-16246872

]

Aman Sinha commented on DRILL-5936:

---

[~vrozov] the title says 'based on code review'..which code review are you

referring to ? can you point me to the other JIRA or PR ? thanks.

> Refactor MergingRecordBatch based on code review

>

>

> Key: DRILL-5936

> URL: https://issues.apache.org/jira/browse/DRILL-5936

> Project: Apache Drill

> Issue Type: Improvement

> Components: Tools, Build & Test

>Reporter: Vlad Rozov

>Assignee: Vlad Rozov

>Priority: Minor

>

> * Reorganize code to remove unnecessary {{pqueue.peek()}}

> * Reuse Node

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Created] (DRILL-5950) Allow JSON files to be splittable - for sequence of objects format

Paul Rogers created DRILL-5950:

--

Summary: Allow JSON files to be splittable - for sequence of

objects format

Key: DRILL-5950

URL: https://issues.apache.org/jira/browse/DRILL-5950

Project: Apache Drill

Issue Type: Improvement

Affects Versions: 1.12.0

Reporter: Paul Rogers

The JSON plugin format is not currently splittable. This means that every JSON

file must be read by only a single thread. By contrast, text files are

splittable.

The key barrier to allowing JSON files to be splittable is the lack of a good

mechanism to find the start of a record at some arbitrary point in the file.

Text readers handle this by scanning forward looking for (say) the newline that

separates records. (Though this process can be thrown off if a newline appears

in a quoted value, and the start quote appears before the split point.)

However, as was discovered in a previous JSON fix, Drill's form of JSON does

provide the tools. In standard JSON, a list of records must be stuctured as a

list:

{code}

[ { text: "first record"},

{ text: "second record"},

...

{ text: "final record" }

]

{code}

In this form, it is impossible to find the start of a record without parsing

from the first character onwards.

But, Drill uses a common, but non-standard, JSON structure that dispenses with

the array and the commas between records:

{code}

{ text: "first record" }

{ text: "second record" }

...

{ text: "last record" }

{code}

This form does unambiguously allow finding the start of the record. Simply scan

until we find the tokens: , , possibly separated by white space.

That sequence is not valid JSON and only occurs between records in the

sequence-of-records format.

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-5771) Fix serDe errors for format plugins

[ https://issues.apache.org/jira/browse/DRILL-5771?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246871#comment-16246871 ] ASF GitHub Bot commented on DRILL-5771: --- Github user ilooner commented on the issue: https://github.com/apache/drill/pull/1014 @arina-ielchiieva - The parts addressing DRILL-4640 and DRILL-5166 LGTM - I think the fix for DRILL-5771 LGTM but I would like write down what I think is happening and confirm with you that my understanding is correct. This is mostly just a learning exercise for me since I am not very familiar with this part of the code :). In DRILL-5771 there were two issues. ## Race Conditions With Format Plugins ### Issue The following used to happen before the fix: 1. When using an existing format plugin, the **FormatPlugin** would create a **DrillTable** with a **NamedFormatPluginConfig** which only contains the name of the format plugin to use. 1. The **ScanOperator** created for a **DrillTable** will contain the **NamedFormatPluginConfig** 1. When the **ScanOperators** are serialized in to the physical plan the serialized **ScanOperator** will only contain the name of the format plugin to use. 1. When a worker deserializes the physical plan to do a scan, he gets the name of the **FormatPluginConfig** to use. 1. The worker then looks up the correct **FormatPlugin** in the **FormatCreator** using the name he has. 1. The worker can get into trouble if the **FormatPlugins** he has cached in his **FormatCreator** is out of sync with the rest of the cluster. ### Fix Race conditions are eliminated because the **DrillTables** returned by the **FormatPlugins** no longer contain a **NamedFormatPluginConfig**, they contain the full **FormatPluginConfig** not just a name alias. So when a query is executed: 1. The ScanOperator contains the complete **FormatPluginConfig** 1. When the physical plan is serialized it contains the complete **FormatPluginConfig** for each scan operator. 1. When a worker node deserializes the ScanOperator it also has the complete **FormatPluginConfig** so it can reconstruct the **FormatPlugin** correctly, whereas previously the worker would have to do a lookup using the **FormatPlugin** name in the **FormatCreator** when the cache in the **FormatCreator** may be out of sync with the rest of the cluster. ## FormatPluginConfig Equals and HashCode ### Issue The **FileSystemPlugin** looks up **FormatPlugins** corresponding to a **FormatPluginConfig** in formatPluginsByConfig. However, the **FormatPluginConfig** implementations didn't override equals and hashCode. > Fix serDe errors for format plugins > --- > > Key: DRILL-5771 > URL: https://issues.apache.org/jira/browse/DRILL-5771 > Project: Apache Drill > Issue Type: Bug >Affects Versions: 1.11.0 >Reporter: Arina Ielchiieva >Assignee: Arina Ielchiieva >Priority: Minor > Fix For: 1.12.0 > > > Create unit tests to check that all storage format plugins can be > successfully serialized / deserialized. > Usually this happens when query has several major fragments. > One way to check serde is to generate physical plan (generated as json) and > then submit it back to Drill. > One example of found errors is described in the first comment. Another > example is described in DRILL-5166. > *Serde issues:* > 1. Could not obtain format plugin during deserialization > Format plugin is created based on format plugin configuration or its name. > On Drill start up we load information about available plugins (its reloaded > each time storage plugin is updated, can be done only by admin). > When query is parsed, we try to get plugin from the available ones, it we can > not find one we try to [create > one|https://github.com/apache/drill/blob/3e8b01d5b0d3013e3811913f0fd6028b22c1ac3f/exec/java-exec/src/main/java/org/apache/drill/exec/store/dfs/FileSystemPlugin.java#L136-L144] > but on other query execution stages we always assume that [plugin exists > based on > configuration|https://github.com/apache/drill/blob/3e8b01d5b0d3013e3811913f0fd6028b22c1ac3f/exec/java-exec/src/main/java/org/apache/drill/exec/store/dfs/FileSystemPlugin.java#L156-L162]. > For example, during query parsing we had to create format plugin on one node > based on format configuration. > Then we have sent major fragment to the different node where we used this > format configuration we could not get format plugin based on it and > deserialization has failed. > To fix this problem we need to create format plugin during query > deserialization if it's absent. > > 2. Absent hash code and equals. > Format plugins are stored in hash map where key

[jira] [Updated] (DRILL-5936) Refactor MergingRecordBatch based on code review

[

https://issues.apache.org/jira/browse/DRILL-5936?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Vlad Rozov updated DRILL-5936:

--

Description:

* Reorganize code to remove unnecessary {{pqueue.peek()}}

* Reuse Node

was:*

> Refactor MergingRecordBatch based on code review

>

>

> Key: DRILL-5936

> URL: https://issues.apache.org/jira/browse/DRILL-5936

> Project: Apache Drill

> Issue Type: Improvement

> Components: Tools, Build & Test

>Reporter: Vlad Rozov

>Assignee: Vlad Rozov

>Priority: Minor

>

> * Reorganize code to remove unnecessary {{pqueue.peek()}}

> * Reuse Node

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Updated] (DRILL-5936) Refactor MergingRecordBatch based on code review

[ https://issues.apache.org/jira/browse/DRILL-5936?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Vlad Rozov updated DRILL-5936: -- Description: * > Refactor MergingRecordBatch based on code review > > > Key: DRILL-5936 > URL: https://issues.apache.org/jira/browse/DRILL-5936 > Project: Apache Drill > Issue Type: Improvement > Components: Tools, Build & Test >Reporter: Vlad Rozov >Assignee: Vlad Rozov >Priority: Minor > > * -- This message was sent by Atlassian JIRA (v6.4.14#64029)

[jira] [Commented] (DRILL-5949) JSON format options should be part of plugin config; not session options

[

https://issues.apache.org/jira/browse/DRILL-5949?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246851#comment-16246851

]

Paul Rogers commented on DRILL-5949:

Although, technically, it is quite easy to make this change; backward

compatibility is a challenge because Drill has no mechanism to assist. Here are

two possibilities.

First, assign a priority to the settings as follows:

* Table function options (highest priority)

* Session options

* Plugin options

* System options (lowest priority)

That is, if session options are set, use them. Else, use the plugin options, if

set. Else use the system options (and the system option defaults.) This is

possible because options now identify the scope in which they are set, so we

can differentiate session from system options. The problem here is that the

reader can't actually tell if a setting comes from a table function or from the

plugin definition, so some work may be required to support this pattern.

Second, modify the system/session options to have three values:

{{true}}/{{false}}/{{unset}}. If the value is set to {{unset}}, use the plugin

options. The default option value becomes {{unset}}. If the user changes the

session (or system) option, this is used. So, if a user has changed the system

option, and stored the value in ZK, then that setting will be {{true}} or

{{false}} and will take precedence over the plugin options.

> JSON format options should be part of plugin config; not session options

>

>

> Key: DRILL-5949

> URL: https://issues.apache.org/jira/browse/DRILL-5949

> Project: Apache Drill

> Issue Type: Improvement

>Affects Versions: 1.12.0

>Reporter: Paul Rogers

>

> Drill provides a JSON record reader. Drill provides two ways to configure

> this reader:

> * Using the JSON plugin configuration.

> * Using a set of session options.

> The plugin configuration defines the file suffix associated with JSON files.

> The session options are:

> * {{store.json.all_text_mode}}

> * {{store.json.read_numbers_as_double}}

> * {{store.json.reader.skip_invalid_records}}

> * {{store.json.reader.print_skipped_invalid_record_number}}

> Suppose I have to JSON files from different sources (and keep them in

> distinct directories.) For the one, I want to use {{all_text_mode}} off as

> the data is nicely formatted. Also, my numbers are fine, so I want

> {{read_numbers_as_double}} off.

> But, the other file is a mess and uses a rather ad-hoc format. So, I want

> these two options turned on.

> As it turns out I often query both files. Today, I must set the session

> options one way to query my "clean" file, then reverse them to query the

> "dirty" file.

> Next, I want to join the two files. How do I set the options one way for the

> "clean" file, and the other for the "dirty" file within the *same query*?

> Can't.

> Now, consider the text format plugin that can read CSV, TSV, PSV and so on.

> It has a variety of options. But, the are *not* session options; they are

> instead options in the plugin definition. This allows me to, say, have a

> plugin config for CSV-with-headers files that I get from source A, and a

> different plugin config for my CSV-without-headers files from source B.

> Suppose we applied the text reader technique to the JSON reader. We'd move

> the session options listed above into the JSON format plugin. Then, I can

> define one plugin for my "clean" files, and a different plugin config for my

> "dirty" files.

> What's more, I can then use table functions to adjust the format for each

> file as needed within a single query. Since table functions are part of a

> query, I can add them to a view that I define for the various JSON files.

> The result is a far simpler user experience than the tedium of resetting

> session options for every query.

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-3640) Drill JDBC driver support Statement.setQueryTimeout(int)

[

https://issues.apache.org/jira/browse/DRILL-3640?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246849#comment-16246849

]

ASF GitHub Bot commented on DRILL-3640:

---

Github user laurentgo commented on a diff in the pull request:

https://github.com/apache/drill/pull/1024#discussion_r150131105

--- Diff:

exec/jdbc/src/main/java/org/apache/drill/jdbc/impl/DrillResultSetImpl.java ---

@@ -96,6 +105,14 @@ private void throwIfClosed() throws

AlreadyClosedSqlException,

throw new AlreadyClosedSqlException( "ResultSet is already

closed." );

}

}

+

+//Implicit check for whether timeout is set

+if (elapsedTimer != null) {

--- End diff --

Yes, I'm wrong? (asking because the rest of the sentence suggest I was

right in my interpretation of the test). Maybe we can/should test both? I would

have like to test for the first batch, but it's not possible to access the

query id until `statement.execute()`, and I'd need it to unpause the request.

> Drill JDBC driver support Statement.setQueryTimeout(int)

>

>

> Key: DRILL-3640

> URL: https://issues.apache.org/jira/browse/DRILL-3640

> Project: Apache Drill

> Issue Type: New Feature

> Components: Client - JDBC

>Affects Versions: 1.2.0

>Reporter: Chun Chang

>Assignee: Kunal Khatua

> Fix For: 1.12.0

>

>

> It would be nice if we have this implemented. Run away queries can be

> automatically canceled by setting the timeout.

> java.sql.SQLFeatureNotSupportedException: Setting network timeout is not

> supported.

> at

> org.apache.drill.jdbc.impl.DrillStatementImpl.setQueryTimeout(DrillStatementImpl.java:152)

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-5936) Refactor MergingRecordBatch based on code review

[ https://issues.apache.org/jira/browse/DRILL-5936?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246828#comment-16246828 ] ASF GitHub Bot commented on DRILL-5936: --- Github user priteshm commented on the issue: https://github.com/apache/drill/pull/1025 @amansinha100 can you review this change? > Refactor MergingRecordBatch based on code review > > > Key: DRILL-5936 > URL: https://issues.apache.org/jira/browse/DRILL-5936 > Project: Apache Drill > Issue Type: Improvement > Components: Tools, Build & Test >Reporter: Vlad Rozov >Assignee: Vlad Rozov >Priority: Minor > -- This message was sent by Atlassian JIRA (v6.4.14#64029)

[jira] [Commented] (DRILL-3640) Drill JDBC driver support Statement.setQueryTimeout(int)

[

https://issues.apache.org/jira/browse/DRILL-3640?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246827#comment-16246827

]

ASF GitHub Bot commented on DRILL-3640:

---

Github user kkhatua commented on a diff in the pull request:

https://github.com/apache/drill/pull/1024#discussion_r150127658

--- Diff:

exec/jdbc/src/main/java/org/apache/drill/jdbc/impl/DrillResultSetImpl.java ---

@@ -96,6 +105,14 @@ private void throwIfClosed() throws

AlreadyClosedSqlException,

throw new AlreadyClosedSqlException( "ResultSet is already

closed." );

}

}

+

+//Implicit check for whether timeout is set

+if (elapsedTimer != null) {

--- End diff --

Yes. So I'm testing for the part when the batch has been fetched byt

DrillCursor but not consumed via the DrillResultSetImpl. That's why I found the

need for pausing the Screen operator odd and, hence, the question.

> Drill JDBC driver support Statement.setQueryTimeout(int)

>

>

> Key: DRILL-3640

> URL: https://issues.apache.org/jira/browse/DRILL-3640

> Project: Apache Drill

> Issue Type: New Feature

> Components: Client - JDBC

>Affects Versions: 1.2.0

>Reporter: Chun Chang

>Assignee: Kunal Khatua

> Fix For: 1.12.0

>

>

> It would be nice if we have this implemented. Run away queries can be

> automatically canceled by setting the timeout.

> java.sql.SQLFeatureNotSupportedException: Setting network timeout is not

> supported.

> at

> org.apache.drill.jdbc.impl.DrillStatementImpl.setQueryTimeout(DrillStatementImpl.java:152)

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Comment Edited] (DRILL-5926) TestValueVector tests fail sporadically

[ https://issues.apache.org/jira/browse/DRILL-5926?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16238479#comment-16238479 ] Pritesh Maker edited comment on DRILL-5926 at 11/10/17 12:33 AM: - Is this the PR? https://github.com/apache/drill/pull/1023 Should we create a separate PR for this issue? cc [~vrozov] was (Author: priteshm): Is this the PR? https://github.com/apache/drill/pull/1023 Should we create a separate PR for this issue? > TestValueVector tests fail sporadically > --- > > Key: DRILL-5926 > URL: https://issues.apache.org/jira/browse/DRILL-5926 > Project: Apache Drill > Issue Type: Bug >Reporter: Timothy Farkas >Assignee: Timothy Farkas >Priority: Trivial > > As reported by [~Paul.Rogers]. The following tests fail sporadically with out > of memory exception: > * TestValueVector.testFixedVectorReallocation > * TestValueVector.testVariableVectorReallocation -- This message was sent by Atlassian JIRA (v6.4.14#64029)

[jira] [Commented] (DRILL-3640) Drill JDBC driver support Statement.setQueryTimeout(int)

[

https://issues.apache.org/jira/browse/DRILL-3640?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246775#comment-16246775

]

ASF GitHub Bot commented on DRILL-3640:

---

Github user laurentgo commented on a diff in the pull request:

https://github.com/apache/drill/pull/1024#discussion_r150119338

--- Diff:

exec/jdbc/src/main/java/org/apache/drill/jdbc/impl/DrillResultSetImpl.java ---

@@ -96,6 +105,14 @@ private void throwIfClosed() throws

AlreadyClosedSqlException,

throw new AlreadyClosedSqlException( "ResultSet is already

closed." );

}

}

+

+//Implicit check for whether timeout is set

+if (elapsedTimer != null) {

--- End diff --

I wonder if we actually test timeout during DrillCursor operations. It

seems your test relies on the user being slow to read data from the result set

although the data has already been fetched by the client. Am I wrong?

> Drill JDBC driver support Statement.setQueryTimeout(int)

>

>

> Key: DRILL-3640

> URL: https://issues.apache.org/jira/browse/DRILL-3640

> Project: Apache Drill

> Issue Type: New Feature

> Components: Client - JDBC

>Affects Versions: 1.2.0

>Reporter: Chun Chang

>Assignee: Kunal Khatua

> Fix For: 1.12.0

>

>

> It would be nice if we have this implemented. Run away queries can be

> automatically canceled by setting the timeout.

> java.sql.SQLFeatureNotSupportedException: Setting network timeout is not

> supported.

> at

> org.apache.drill.jdbc.impl.DrillStatementImpl.setQueryTimeout(DrillStatementImpl.java:152)

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-5943) Avoid the strong check introduced by DRILL-5582 for PLAIN mechanism

[

https://issues.apache.org/jira/browse/DRILL-5943?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246769#comment-16246769

]

ASF GitHub Bot commented on DRILL-5943:

---

Github user laurentgo commented on a diff in the pull request:

https://github.com/apache/drill/pull/1028#discussion_r150118518

--- Diff: contrib/native/client/src/clientlib/saslAuthenticatorImpl.hpp ---

@@ -59,6 +59,12 @@ class SaslAuthenticatorImpl {

const char *getErrorMessage(int errorCode);

+static const std::string KERBEROS_SIMPLE_NAME;

+

+static const std::string KERBEROS_SASL_NAME;

--- End diff --

do we need to expose it? (it looks like we only look for the keys)

> Avoid the strong check introduced by DRILL-5582 for PLAIN mechanism

> ---

>

> Key: DRILL-5943

> URL: https://issues.apache.org/jira/browse/DRILL-5943

> Project: Apache Drill

> Issue Type: Improvement

>Affects Versions: 1.12.0

>Reporter: Sorabh Hamirwasia

>Assignee: Sorabh Hamirwasia

> Fix For: 1.12.0

>

>

> For PLAIN mechanism we will weaken the strong check introduced with

> DRILL-5582 to keep the forward compatibility between Drill 1.12 client and

> Drill 1.9 server. This is fine since with and without this strong check PLAIN

> mechanism is still vulnerable to MITM during handshake itself unlike mutual

> authentication protocols like Kerberos.

> Also for keeping forward compatibility with respect to SASL we will treat

> UNKNOWN_SASL_SUPPORT as valid value. For handshake message received from a

> client which is running on later version (let say 1.13) then Drillbit (1.12)

> and having a new value for SaslSupport field which is unknown to server, this

> field will be decoded as UNKNOWN_SASL_SUPPORT. In this scenario client will

> be treated as one aware about SASL protocol but server doesn't know exact

> capabilities of client. Hence the SASL handshake will still be required from

> server side.

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Updated] (DRILL-5863) Sortable table incorrectly sorts minor fragments and time elements lexically instead of sorting by implicit value

[

https://issues.apache.org/jira/browse/DRILL-5863?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Pritesh Maker updated DRILL-5863:

-

Reviewer: Paul Rogers (was: Paul Rogers)

> Sortable table incorrectly sorts minor fragments and time elements lexically

> instead of sorting by implicit value

> -

>

> Key: DRILL-5863

> URL: https://issues.apache.org/jira/browse/DRILL-5863

> Project: Apache Drill

> Issue Type: Bug

> Components: Web Server

>Affects Versions: 1.11.0

>Reporter: Kunal Khatua

>Assignee: Kunal Khatua

>Priority: Minor

> Labels: ready-to-commit

> Fix For: 1.12.0

>

>

> The fix for this is to use dataTable library's {{data-order}} attribute for

> the data elements that need to sort by an implicit value.

> ||Old order of Minor Fragment||New order of Minor Fragment||

> |...|...|

> |01-09-01 | 01-09-01|

> |01-10-01 | 01-10-01|

> |01-100-01 | 01-11-01|

> |01-101-01 | 01-12-01|

> |... | ... |

> ||Old order of Duration||New order of Duration|||

> |...|...|

> |1m15s | 55.03s|

> |55s | 1m15s|

> |...|...|

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Assigned] (DRILL-5717) change some date time unit cases with specific timezone or Local

[ https://issues.apache.org/jira/browse/DRILL-5717?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Pritesh Maker reassigned DRILL-5717: Assignee: weijie.tong > change some date time unit cases with specific timezone or Local > > > Key: DRILL-5717 > URL: https://issues.apache.org/jira/browse/DRILL-5717 > Project: Apache Drill > Issue Type: Bug > Components: Tools, Build & Test >Affects Versions: 1.9.0, 1.11.0 >Reporter: weijie.tong >Assignee: weijie.tong > Labels: ready-to-commit > > Some date time test cases like JodaDateValidatorTest is not Local > independent .This will cause other Local's users's test phase to fail. We > should let these test cases to be Local env independent. -- This message was sent by Atlassian JIRA (v6.4.14#64029)

[jira] [Commented] (DRILL-5783) Make code generation in the TopN operator more modular and test it

[

https://issues.apache.org/jira/browse/DRILL-5783?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246617#comment-16246617

]

ASF GitHub Bot commented on DRILL-5783:

---

Github user paul-rogers commented on a diff in the pull request:

https://github.com/apache/drill/pull/984#discussion_r150097140

--- Diff:

exec/java-exec/src/test/java/org/apache/drill/test/rowSet/RowSet.java ---

@@ -85,8 +85,7 @@

* new row set with the updated columns, then merge the new

* and old row sets to create a new immutable row set.

*/

-

- public interface RowSetWriter extends TupleWriter {

+ interface RowSetWriter extends TupleWriter {

--- End diff --

Ah, forgot that the file defines an interface, not a class. (The situation

I described was an interface nested inside a class.) So, you're good.

> Make code generation in the TopN operator more modular and test it

> --

>

> Key: DRILL-5783

> URL: https://issues.apache.org/jira/browse/DRILL-5783

> Project: Apache Drill

> Issue Type: Improvement

>Reporter: Timothy Farkas

>Assignee: Timothy Farkas

>

> The work for this PR has had several other PRs batched together with it. The

> full description of work is the following:

> DRILL-5783

> * A unit test is created for the priority queue in the TopN operator

> * The code generation classes passed around a completely unused function

> registry reference in some places so I removed it.

> * The priority queue had unused parameters for some of its methods so I

> removed them.

> DRILL-5841

> * There were many many ways in which temporary folders were created in unit

> tests. I have unified the way these folders are created with the

> DirTestWatcher, SubDirTestWatcher, and BaseDirTestWatcher. All the unit tests

> have been updated to use these. The test watchers create temp directories in

> ./target//. So all the files generated and used in the context of a test can

> easily be found in the same consistent location.

> * This change should fix the sporadic hashagg test failures, as well as

> failures caused by stray files in /tmp

> DRILL-5894

> * dfs_test is used as a storage plugin throughout the unit tests. This is

> highly confusing and we can just use dfs instead.

> *Misc*

> * General code cleanup.

> * There are many places where String.format is used unnecessarily. The test

> builder methods already use String.format for you when you pass them args. I

> cleaned some of these up.

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Created] (DRILL-5949) JSON format options should be part of plugin config; not session options

Paul Rogers created DRILL-5949:

--

Summary: JSON format options should be part of plugin config; not

session options

Key: DRILL-5949

URL: https://issues.apache.org/jira/browse/DRILL-5949

Project: Apache Drill

Issue Type: Improvement

Affects Versions: 1.12.0

Reporter: Paul Rogers

Drill provides a JSON record reader. Drill provides two ways to configure this

reader:

* Using the JSON plugin configuration.

* Using a set of session options.

The plugin configuration defines the file suffix associated with JSON files.

The session options are:

* {{store.json.all_text_mode}}

* {{store.json.read_numbers_as_double}}

* {{store.json.reader.skip_invalid_records}}

* {{store.json.reader.print_skipped_invalid_record_number}}

Suppose I have to JSON files from different sources (and keep them in distinct

directories.) For the one, I want to use {{all_text_mode}} off as the data is

nicely formatted. Also, my numbers are fine, so I want

{{read_numbers_as_double}} off.

But, the other file is a mess and uses a rather ad-hoc format. So, I want these

two options turned on.

As it turns out I often query both files. Today, I must set the session options

one way to query my "clean" file, then reverse them to query the "dirty" file.

Next, I want to join the two files. How do I set the options one way for the

"clean" file, and the other for the "dirty" file within the *same query*? Can't.

Now, consider the text format plugin that can read CSV, TSV, PSV and so on. It

has a variety of options. But, the are *not* session options; they are instead

options in the plugin definition. This allows me to, say, have a plugin config

for CSV-with-headers files that I get from source A, and a different plugin

config for my CSV-without-headers files from source B.

Suppose we applied the text reader technique to the JSON reader. We'd move the

session options listed above into the JSON format plugin. Then, I can define

one plugin for my "clean" files, and a different plugin config for my "dirty"

files.

What's more, I can then use table functions to adjust the format for each file

as needed within a single query. Since table functions are part of a query, I

can add them to a view that I define for the various JSON files.

The result is a far simpler user experience than the tedium of resetting

session options for every query.

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-5783) Make code generation in the TopN operator more modular and test it

[

https://issues.apache.org/jira/browse/DRILL-5783?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246608#comment-16246608

]

ASF GitHub Bot commented on DRILL-5783:

---

Github user ilooner commented on a diff in the pull request:

https://github.com/apache/drill/pull/984#discussion_r150096261

--- Diff:

exec/java-exec/src/test/java/org/apache/drill/test/rowSet/RowSet.java ---

@@ -85,8 +85,7 @@

* new row set with the updated columns, then merge the new

* and old row sets to create a new immutable row set.

*/

-

- public interface RowSetWriter extends TupleWriter {

+ interface RowSetWriter extends TupleWriter {

--- End diff --

IntelliJ gave a warning that the modifier is redundant. Also an interface

nested inside another interface is public by default.

https://beginnersbook.com/2016/03/nested-or-inner-interfaces-in-java/

> Make code generation in the TopN operator more modular and test it

> --

>

> Key: DRILL-5783

> URL: https://issues.apache.org/jira/browse/DRILL-5783

> Project: Apache Drill

> Issue Type: Improvement

>Reporter: Timothy Farkas

>Assignee: Timothy Farkas

>

> The work for this PR has had several other PRs batched together with it. The

> full description of work is the following:

> DRILL-5783

> * A unit test is created for the priority queue in the TopN operator

> * The code generation classes passed around a completely unused function

> registry reference in some places so I removed it.

> * The priority queue had unused parameters for some of its methods so I

> removed them.

> DRILL-5841

> * There were many many ways in which temporary folders were created in unit

> tests. I have unified the way these folders are created with the

> DirTestWatcher, SubDirTestWatcher, and BaseDirTestWatcher. All the unit tests

> have been updated to use these. The test watchers create temp directories in

> ./target//. So all the files generated and used in the context of a test can

> easily be found in the same consistent location.

> * This change should fix the sporadic hashagg test failures, as well as

> failures caused by stray files in /tmp

> DRILL-5894

> * dfs_test is used as a storage plugin throughout the unit tests. This is

> highly confusing and we can just use dfs instead.

> *Misc*

> * General code cleanup.

> * There are many places where String.format is used unnecessarily. The test

> builder methods already use String.format for you when you pass them args. I

> cleaned some of these up.

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-5783) Make code generation in the TopN operator more modular and test it

[

https://issues.apache.org/jira/browse/DRILL-5783?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246639#comment-16246639

]

ASF GitHub Bot commented on DRILL-5783:

---

Github user ilooner commented on a diff in the pull request:

https://github.com/apache/drill/pull/984#discussion_r15009

--- Diff:

exec/java-exec/src/test/java/org/apache/drill/test/rowSet/RowSetComparison.java

---

@@ -255,4 +257,39 @@ private void verifyArray(String colLabel, ArrayReader

ea,

}

}

}

+

+ // TODO make a native RowSetComparison comparator

+ public static class ObjectComparator implements Comparator {

--- End diff --

This is used in the DrillTestWrapper to verify the ordering of results. I

agree this is not suitable for equality tests, but it's intended to be used

only for ordering tests. I didn't add support for all the supported RowSet

types because we would first have to move DrillTestWrapper to use RowSets

(currently it uses Maps and Lists to represent data). Currently it is not used

by RowSets, but the intention is to move DrillTestWrapper to use RowSets and

then make this comparator operate on RowSets, but that will be an incremental

process.

> Make code generation in the TopN operator more modular and test it

> --

>

> Key: DRILL-5783

> URL: https://issues.apache.org/jira/browse/DRILL-5783

> Project: Apache Drill

> Issue Type: Improvement

>Reporter: Timothy Farkas

>Assignee: Timothy Farkas

>

> The work for this PR has had several other PRs batched together with it. The

> full description of work is the following:

> DRILL-5783

> * A unit test is created for the priority queue in the TopN operator

> * The code generation classes passed around a completely unused function

> registry reference in some places so I removed it.

> * The priority queue had unused parameters for some of its methods so I

> removed them.

> DRILL-5841

> * There were many many ways in which temporary folders were created in unit

> tests. I have unified the way these folders are created with the

> DirTestWatcher, SubDirTestWatcher, and BaseDirTestWatcher. All the unit tests

> have been updated to use these. The test watchers create temp directories in

> ./target//. So all the files generated and used in the context of a test can

> easily be found in the same consistent location.

> * This change should fix the sporadic hashagg test failures, as well as

> failures caused by stray files in /tmp

> DRILL-5894

> * dfs_test is used as a storage plugin throughout the unit tests. This is

> highly confusing and we can just use dfs instead.

> *Misc*

> * General code cleanup.

> * There are many places where String.format is used unnecessarily. The test

> builder methods already use String.format for you when you pass them args. I

> cleaned some of these up.

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-5783) Make code generation in the TopN operator more modular and test it

[

https://issues.apache.org/jira/browse/DRILL-5783?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246609#comment-16246609

]

ASF GitHub Bot commented on DRILL-5783:

---

Github user ilooner commented on a diff in the pull request:

https://github.com/apache/drill/pull/984#discussion_r150096444

--- Diff:

exec/java-exec/src/test/java/org/apache/drill/test/rowSet/file/JsonFileBuilder.java

---

@@ -0,0 +1,159 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.drill.test.rowSet.file;

+

+import com.google.common.base.Preconditions;

+import com.google.common.collect.ImmutableMap;

+import com.google.common.collect.Lists;

+import com.google.common.collect.Maps;

+import org.apache.drill.exec.record.MaterializedField;

+import org.apache.drill.exec.vector.accessor.ColumnAccessor;

+import org.apache.drill.exec.vector.accessor.ColumnReader;

+import org.apache.drill.test.rowSet.RowSet;

+

+import java.io.BufferedOutputStream;

+import java.io.File;

+import java.io.FileOutputStream;

+import java.io.IOException;

+import java.util.Iterator;

+import java.util.List;

+import java.util.Map;

+

+public class JsonFileBuilder

+{

+ public static final String DEFAULT_DOUBLE_FORMATTER = "%f";

+ public static final String DEFAULT_INTEGER_FORMATTER = "%d";

+ public static final String DEFAULT_LONG_FORMATTER = "%d";

+ public static final String DEFAULT_STRING_FORMATTER = "\"%s\"";

+ public static final String DEFAULT_DECIMAL_FORMATTER = "%s";

+ public static final String DEFAULT_PERIOD_FORMATTER = "%s";

+

+ public static final Map DEFAULT_FORMATTERS = new

ImmutableMap.Builder()

+.put(ColumnAccessor.ValueType.DOUBLE, DEFAULT_DOUBLE_FORMATTER)

+.put(ColumnAccessor.ValueType.INTEGER, DEFAULT_INTEGER_FORMATTER)

+.put(ColumnAccessor.ValueType.LONG, DEFAULT_LONG_FORMATTER)

+.put(ColumnAccessor.ValueType.STRING, DEFAULT_STRING_FORMATTER)

+.put(ColumnAccessor.ValueType.DECIMAL, DEFAULT_DECIMAL_FORMATTER)

+.put(ColumnAccessor.ValueType.PERIOD, DEFAULT_PERIOD_FORMATTER)

+.build();

+

+ private final RowSet rowSet;

+ private final Map customFormatters = Maps.newHashMap();

+

+ public JsonFileBuilder(RowSet rowSet) {

+this.rowSet = Preconditions.checkNotNull(rowSet);

+Preconditions.checkArgument(rowSet.rowCount() > 0, "The given rowset

is empty.");

+ }

+

+ public JsonFileBuilder setCustomFormatter(final String columnName, final

String columnFormatter) {

+Preconditions.checkNotNull(columnName);

+Preconditions.checkNotNull(columnFormatter);

+

+Iterator fields = rowSet

+ .schema()

+ .batch()

+ .iterator();

+

+boolean hasColumn = false;

+

+while (!hasColumn && fields.hasNext()) {

+ hasColumn = fields.next()

+.getName()

+.equals(columnName);

+}

+

+final String message = String.format("(%s) is not a valid column",

columnName);

+Preconditions.checkArgument(hasColumn, message);

+

+customFormatters.put(columnName, columnFormatter);

+

+return this;

+ }

+

+ public void build(File tableFile) throws IOException {

--- End diff --

Sounds Good

> Make code generation in the TopN operator more modular and test it

> --

>

> Key: DRILL-5783

> URL: https://issues.apache.org/jira/browse/DRILL-5783

> Project: Apache Drill

> Issue Type: Improvement

>Reporter: Timothy Farkas

>Assignee: Timothy Farkas

>

> The work for this PR has had several other PRs batched together with it. The

> full description of work is the following:

> DRILL-5783

> * A unit test is created for the priority queue in the TopN operator

> * The code generation classes passed around a

[jira] [Commented] (DRILL-4779) Kafka storage plugin support

[

https://issues.apache.org/jira/browse/DRILL-4779?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246547#comment-16246547

]

ASF GitHub Bot commented on DRILL-4779:

---

Github user paul-rogers commented on a diff in the pull request:

https://github.com/apache/drill/pull/1027#discussion_r150087815

--- Diff:

contrib/storage-kafka/src/main/java/org/apache/drill/exec/store/kafka/KafkaScanBatchCreator.java

---

@@ -0,0 +1,61 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.drill.exec.store.kafka;

+

+import java.util.List;

+

+import org.apache.drill.common.exceptions.ExecutionSetupException;

+import org.apache.drill.common.expression.SchemaPath;

+import org.apache.drill.exec.ops.FragmentContext;

+import org.apache.drill.exec.physical.base.GroupScan;

+import org.apache.drill.exec.physical.impl.BatchCreator;

+import org.apache.drill.exec.physical.impl.ScanBatch;

+import org.apache.drill.exec.record.CloseableRecordBatch;

+import org.apache.drill.exec.record.RecordBatch;

+import org.apache.drill.exec.store.RecordReader;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import com.google.common.base.Preconditions;

+import com.google.common.collect.Lists;

+

+public class KafkaScanBatchCreator implements BatchCreator {

+ static final Logger logger =

LoggerFactory.getLogger(KafkaScanBatchCreator.class);

+

+ @Override

+ public CloseableRecordBatch getBatch(FragmentContext context,

KafkaSubScan subScan, List children)

+ throws ExecutionSetupException {

+Preconditions.checkArgument(children.isEmpty());

+List readers = Lists.newArrayList();

+List columns = null;

+for (KafkaSubScan.KafkaSubScanSpec scanSpec :

subScan.getPartitionSubScanSpecList()) {

+ try {

+if ((columns = subScan.getCoulmns()) == null) {

+ columns = GroupScan.ALL_COLUMNS;

+}

--- End diff --

When will the columns be null? Not sure this is a valid state. However, as

noted above, an empty list is a valid state (used for `COUNT(*)` queries.)

> Kafka storage plugin support

>

>

> Key: DRILL-4779

> URL: https://issues.apache.org/jira/browse/DRILL-4779

> Project: Apache Drill

> Issue Type: New Feature

> Components: Storage - Other

>Affects Versions: 1.11.0

>Reporter: B Anil Kumar

>Assignee: B Anil Kumar

> Labels: doc-impacting

> Fix For: 1.12.0

>

>

> Implement Kafka storage plugin will enable the strong SQL support for Kafka.

> Initially implementation can target for supporting json and avro message types

--

This message was sent by Atlassian JIRA

(v6.4.14#64029)

[jira] [Commented] (DRILL-4779) Kafka storage plugin support

[

https://issues.apache.org/jira/browse/DRILL-4779?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246550#comment-16246550

]

ASF GitHub Bot commented on DRILL-4779:

---

Github user paul-rogers commented on a diff in the pull request:

https://github.com/apache/drill/pull/1027#discussion_r150086335

--- Diff:

contrib/storage-kafka/src/main/java/org/apache/drill/exec/store/kafka/KafkaRecordReader.java

---

@@ -0,0 +1,178 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.drill.exec.store.kafka;

+

+import static

org.apache.drill.exec.store.kafka.DrillKafkaConfig.DRILL_KAFKA_POLL_TIMEOUT;

+

+import java.util.Collection;

+import java.util.Iterator;

+import java.util.List;

+import java.util.Set;

+import java.util.concurrent.TimeUnit;

+

+import org.apache.drill.common.exceptions.ExecutionSetupException;

+import org.apache.drill.common.expression.SchemaPath;

+import org.apache.drill.exec.ExecConstants;

+import org.apache.drill.exec.ops.FragmentContext;

+import org.apache.drill.exec.ops.OperatorContext;

+import org.apache.drill.exec.physical.impl.OutputMutator;

+import org.apache.drill.exec.store.AbstractRecordReader;

+import org.apache.drill.exec.store.kafka.KafkaSubScan.KafkaSubScanSpec;

+import org.apache.drill.exec.store.kafka.decoders.MessageReader;

+import org.apache.drill.exec.store.kafka.decoders.MessageReaderFactory;

+import org.apache.drill.exec.util.Utilities;

+import org.apache.drill.exec.vector.complex.impl.VectorContainerWriter;

+import org.apache.kafka.clients.consumer.ConsumerRecord;

+import org.apache.kafka.clients.consumer.ConsumerRecords;

+import org.apache.kafka.clients.consumer.KafkaConsumer;

+import org.apache.kafka.common.TopicPartition;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import com.google.common.base.Stopwatch;

+import com.google.common.collect.Lists;

+import com.google.common.collect.Sets;

+public class KafkaRecordReader extends AbstractRecordReader {

+ private static final Logger logger =

LoggerFactory.getLogger(KafkaRecordReader.class);

+ public static final long DEFAULT_MESSAGES_PER_BATCH = 4000;

+

+ private VectorContainerWriter writer;

+ private MessageReader messageReader;

+

+ private boolean unionEnabled;

+ private KafkaConsumer kafkaConsumer;

+ private KafkaStoragePlugin plugin;

+ private KafkaSubScanSpec subScanSpec;

+ private long kafkaPollTimeOut;

+ private long endOffset;

+

+ private long currentOffset;

+ private long totalFetchTime = 0;

+

+ private List partitions;

+ private final boolean enableAllTextMode;

+ private final boolean readNumbersAsDouble;

+

+ private Iterator> messageIter;

+

+ public KafkaRecordReader(KafkaSubScan.KafkaSubScanSpec subScanSpec,

List projectedColumns,

+ FragmentContext context, KafkaStoragePlugin plugin) {

+setColumns(projectedColumns);

+this.enableAllTextMode =

context.getOptions().getOption(ExecConstants.KAFKA_ALL_TEXT_MODE).bool_val;

+this.readNumbersAsDouble = context.getOptions()

+

.getOption(ExecConstants.KAFKA_READER_READ_NUMBERS_AS_DOUBLE).bool_val;

+this.unionEnabled =

context.getOptions().getOption(ExecConstants.ENABLE_UNION_TYPE);

+this.plugin = plugin;

+this.subScanSpec = subScanSpec;

+this.endOffset = subScanSpec.getEndOffset();

+this.kafkaPollTimeOut =

Long.valueOf(plugin.getConfig().getDrillKafkaProps().getProperty(DRILL_KAFKA_POLL_TIMEOUT));

+ }

+

+ @Override

+ protected Collection transformColumns(Collection

projectedColumns) {

+Set transformed = Sets.newLinkedHashSet();

+if (!isStarQuery()) {

+ for (SchemaPath column : projectedColumns) {

+transformed.add(column);

+ }

+} else {

+ transformed.add(Utilities.STAR_COLUMN);

+

[jira] [Commented] (DRILL-4779) Kafka storage plugin support

[

https://issues.apache.org/jira/browse/DRILL-4779?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246553#comment-16246553

]

ASF GitHub Bot commented on DRILL-4779:

---

Github user paul-rogers commented on a diff in the pull request:

https://github.com/apache/drill/pull/1027#discussion_r150086292

--- Diff:

contrib/storage-kafka/src/main/java/org/apache/drill/exec/store/kafka/KafkaRecordReader.java

---

@@ -0,0 +1,178 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.drill.exec.store.kafka;

+

+import static

org.apache.drill.exec.store.kafka.DrillKafkaConfig.DRILL_KAFKA_POLL_TIMEOUT;

+

+import java.util.Collection;

+import java.util.Iterator;

+import java.util.List;

+import java.util.Set;

+import java.util.concurrent.TimeUnit;

+

+import org.apache.drill.common.exceptions.ExecutionSetupException;

+import org.apache.drill.common.expression.SchemaPath;

+import org.apache.drill.exec.ExecConstants;

+import org.apache.drill.exec.ops.FragmentContext;

+import org.apache.drill.exec.ops.OperatorContext;

+import org.apache.drill.exec.physical.impl.OutputMutator;

+import org.apache.drill.exec.store.AbstractRecordReader;

+import org.apache.drill.exec.store.kafka.KafkaSubScan.KafkaSubScanSpec;

+import org.apache.drill.exec.store.kafka.decoders.MessageReader;

+import org.apache.drill.exec.store.kafka.decoders.MessageReaderFactory;

+import org.apache.drill.exec.util.Utilities;

+import org.apache.drill.exec.vector.complex.impl.VectorContainerWriter;

+import org.apache.kafka.clients.consumer.ConsumerRecord;

+import org.apache.kafka.clients.consumer.ConsumerRecords;

+import org.apache.kafka.clients.consumer.KafkaConsumer;

+import org.apache.kafka.common.TopicPartition;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import com.google.common.base.Stopwatch;

+import com.google.common.collect.Lists;

+import com.google.common.collect.Sets;

+public class KafkaRecordReader extends AbstractRecordReader {

+ private static final Logger logger =

LoggerFactory.getLogger(KafkaRecordReader.class);

+ public static final long DEFAULT_MESSAGES_PER_BATCH = 4000;

+

+ private VectorContainerWriter writer;

+ private MessageReader messageReader;

+

+ private boolean unionEnabled;

+ private KafkaConsumer kafkaConsumer;

+ private KafkaStoragePlugin plugin;

+ private KafkaSubScanSpec subScanSpec;

+ private long kafkaPollTimeOut;

+ private long endOffset;

+

+ private long currentOffset;

+ private long totalFetchTime = 0;

+

+ private List partitions;

+ private final boolean enableAllTextMode;

+ private final boolean readNumbersAsDouble;

+

+ private Iterator> messageIter;

+

+ public KafkaRecordReader(KafkaSubScan.KafkaSubScanSpec subScanSpec,

List projectedColumns,

+ FragmentContext context, KafkaStoragePlugin plugin) {

+setColumns(projectedColumns);

+this.enableAllTextMode =

context.getOptions().getOption(ExecConstants.KAFKA_ALL_TEXT_MODE).bool_val;

+this.readNumbersAsDouble = context.getOptions()

+

.getOption(ExecConstants.KAFKA_READER_READ_NUMBERS_AS_DOUBLE).bool_val;

+this.unionEnabled =

context.getOptions().getOption(ExecConstants.ENABLE_UNION_TYPE);

+this.plugin = plugin;

+this.subScanSpec = subScanSpec;

+this.endOffset = subScanSpec.getEndOffset();

+this.kafkaPollTimeOut =

Long.valueOf(plugin.getConfig().getDrillKafkaProps().getProperty(DRILL_KAFKA_POLL_TIMEOUT));

+ }

+

+ @Override

+ protected Collection transformColumns(Collection

projectedColumns) {

+Set transformed = Sets.newLinkedHashSet();

+if (!isStarQuery()) {

+ for (SchemaPath column : projectedColumns) {

+transformed.add(column);

+ }

+} else {

+ transformed.add(Utilities.STAR_COLUMN);

+

[jira] [Commented] (DRILL-4779) Kafka storage plugin support

[

https://issues.apache.org/jira/browse/DRILL-4779?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16246551#comment-16246551

]

ASF GitHub Bot commented on DRILL-4779:

---

Github user paul-rogers commented on a diff in the pull request:

https://github.com/apache/drill/pull/1027#discussion_r150087650

--- Diff:

contrib/storage-kafka/src/main/java/org/apache/drill/exec/store/kafka/KafkaScanBatchCreator.java

---

@@ -0,0 +1,61 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.drill.exec.store.kafka;

+

+import java.util.List;

+

+import org.apache.drill.common.exceptions.ExecutionSetupException;

+import org.apache.drill.common.expression.SchemaPath;

+import org.apache.drill.exec.ops.FragmentContext;

+import org.apache.drill.exec.physical.base.GroupScan;

+import org.apache.drill.exec.physical.impl.BatchCreator;

+import org.apache.drill.exec.physical.impl.ScanBatch;

+import org.apache.drill.exec.record.CloseableRecordBatch;

+import org.apache.drill.exec.record.RecordBatch;

+import org.apache.drill.exec.store.RecordReader;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import com.google.common.base.Preconditions;

+import com.google.common.collect.Lists;

+

+public class KafkaScanBatchCreator implements BatchCreator {

+ static final Logger logger =

LoggerFactory.getLogger(KafkaScanBatchCreator.class);

+

+ @Override

+ public CloseableRecordBatch getBatch(FragmentContext context,

KafkaSubScan subScan, List children)

+ throws ExecutionSetupException {

+Preconditions.checkArgument(children.isEmpty());

+List readers = Lists.newArrayList();

+List columns = null;

+for (KafkaSubScan.KafkaSubScanSpec scanSpec :

subScan.getPartitionSubScanSpecList()) {

+ try {

+if ((columns = subScan.getCoulmns()) == null) {

+ columns = GroupScan.ALL_COLUMNS;

+}

--- End diff --

The column list can be shared by all readers, and so can be created outside

of the loop over scan specs.

> Kafka storage plugin support

>

>

> Key: DRILL-4779

> URL: https://issues.apache.org/jira/browse/DRILL-4779

> Project: Apache Drill

> Issue Type: New Feature

> Components: Storage - Other

>Affects Versions: 1.11.0

>Reporter: B Anil Kumar

>Assignee: B Anil Kumar

> Labels: doc-impacting

> Fix For: 1.12.0

>