[jira] [Commented] (FLINK-11779) CLI ignores -m parameter if high-availability is ZOOKEEPER

[

https://issues.apache.org/jira/browse/FLINK-11779?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16784201#comment-16784201

]

TisonKun commented on FLINK-11779:

--

[~gjy]

Isn't "-m" ignored because it is run in a yarn-cluster? If so, what we need to

do is excluding "-m" option from yarn-cluster mode document.

>From {{FlinkYarnSessionCli#applyCommandLineOptionsToConfiguration}}.

{code:java}

@Override

protected Configuration

applyCommandLineOptionsToConfiguration(CommandLine commandLine) throws

FlinkException {

// we ignore the addressOption because it can only contain

"yarn-cluster"

...

{code}

> CLI ignores -m parameter if high-availability is ZOOKEEPER

> ---

>

> Key: FLINK-11779

> URL: https://issues.apache.org/jira/browse/FLINK-11779

> Project: Flink

> Issue Type: Bug

> Components: Command Line Client

>Affects Versions: 1.7.2, 1.8.0

>Reporter: Gary Yao

>Assignee: leesf

>Priority: Major

> Labels: pull-request-available

> Time Spent: 10m

> Remaining Estimate: 0h

>

> *Description*

> The CLI will ignores the host/port provided by the {{-m}} parameter if

> {{high-availability: ZOOKEEPER}} is configured in {{flink-conf.yaml}}

> *Expected behavior*

> * TBD: either document this behavior or give precedence to {{-m}}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Assigned] (FLINK-7129) Support dynamically changing CEP patterns

[ https://issues.apache.org/jira/browse/FLINK-7129?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Dawid Wysakowicz reassigned FLINK-7129: --- Assignee: (was: Dawid Wysakowicz) > Support dynamically changing CEP patterns > - > > Key: FLINK-7129 > URL: https://issues.apache.org/jira/browse/FLINK-7129 > Project: Flink > Issue Type: New Feature > Components: Library / CEP >Reporter: Dawid Wysakowicz >Priority: Major > > An umbrella task for introducing mechanism for injecting patterns through > coStream -- This message was sent by Atlassian JIRA (v7.6.3#76005)

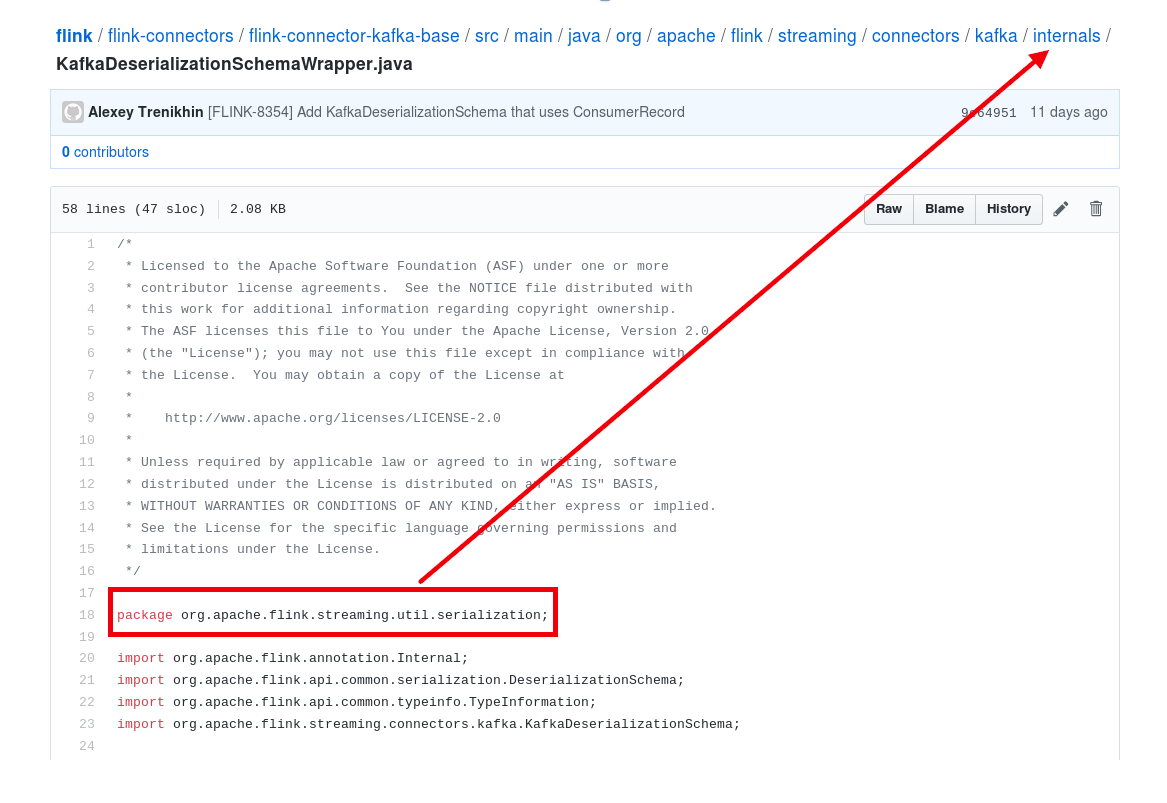

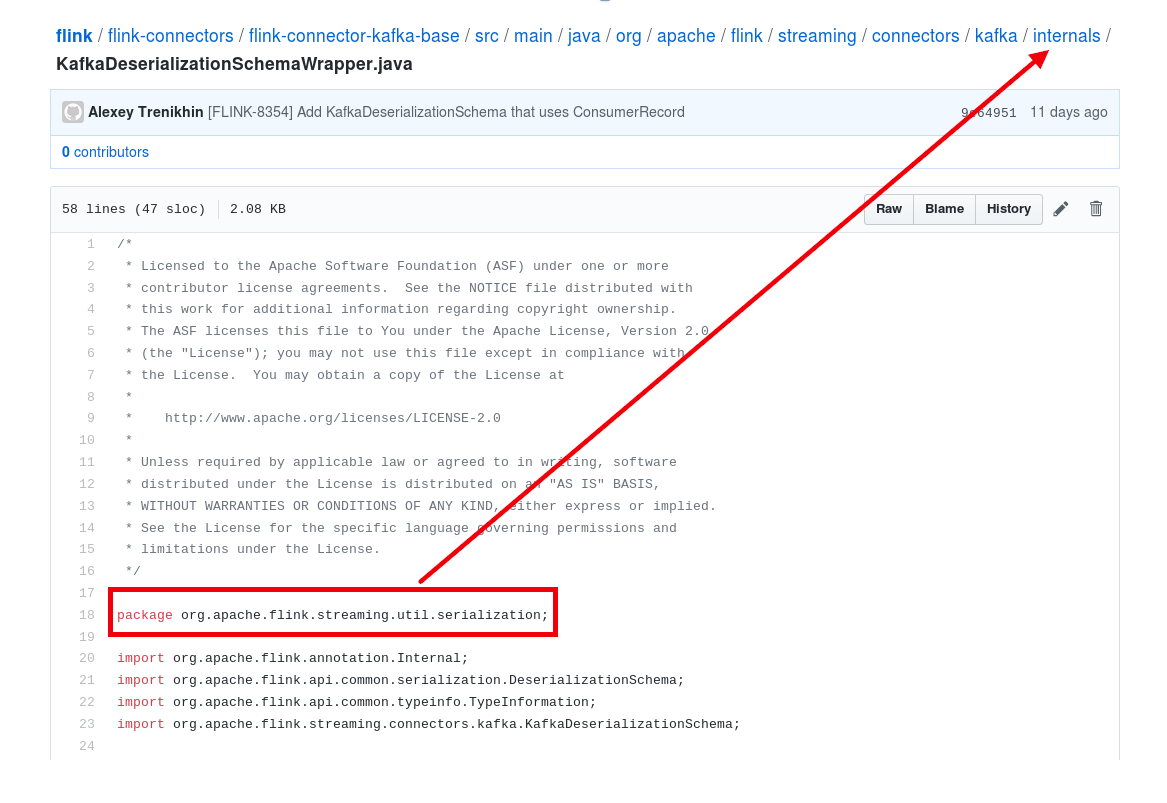

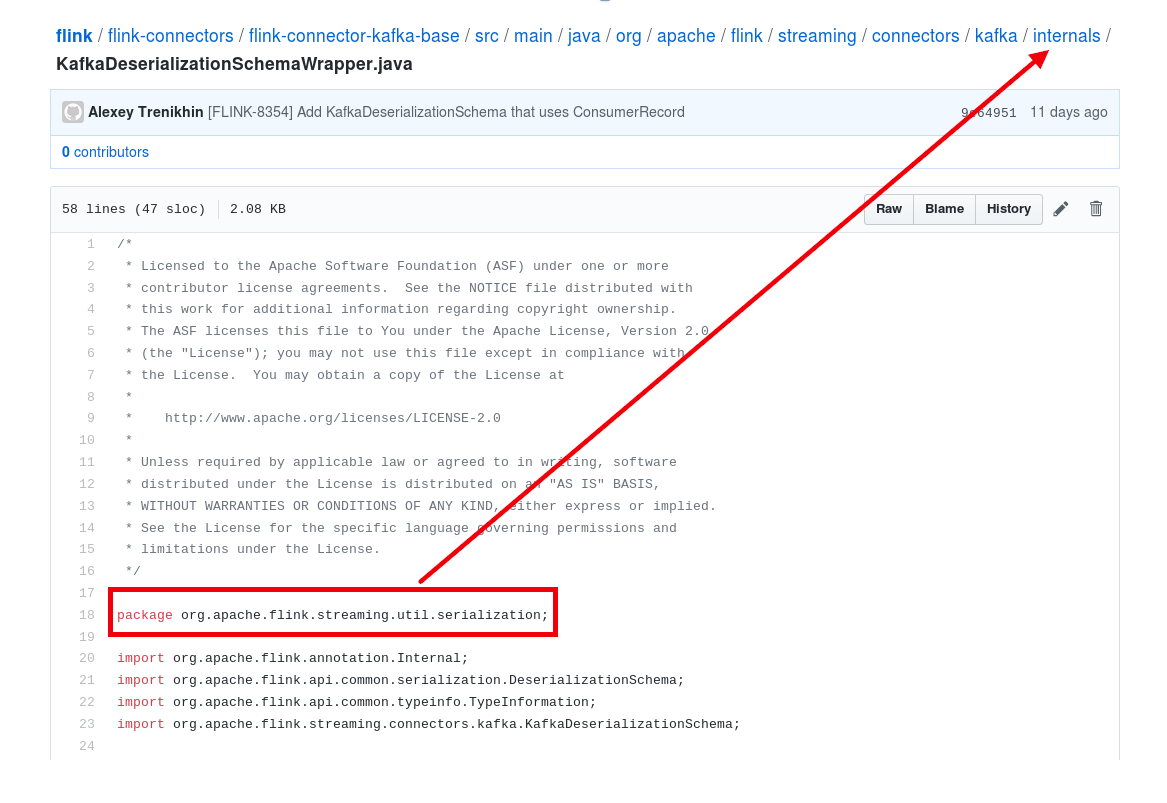

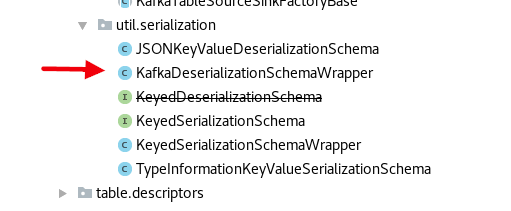

[jira] [Created] (FLINK-11821) fix the error package location of KafkaDeserializationSchemaWrapper.java file

lamber-ken created FLINK-11821: -- Summary: fix the error package location of KafkaDeserializationSchemaWrapper.java file Key: FLINK-11821 URL: https://issues.apache.org/jira/browse/FLINK-11821 Project: Flink Issue Type: Bug Components: Connectors / Kafka Reporter: lamber-ken Assignee: lamber-ken fix the error package location of KafkaDeserializationSchemaWrapper.java -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[GitHub] [flink] lamber-ken opened a new pull request #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java

lamber-ken opened a new pull request #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java URL: https://github.com/apache/flink/pull/7902 ## What is the purpose of the change fix the error package location of KafkaDeserializationSchemaWrapper.java ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (no) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no) - The serializers: (yes) - The runtime per-record code paths (performance sensitive): (no) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: (no) - The S3 file system connector: (no) ## Documentation - Does this pull request introduce a new feature? (no) - If yes, how is the feature documented? (not documented) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] flinkbot commented on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java

flinkbot commented on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java URL: https://github.com/apache/flink/pull/7902#issuecomment-469587374 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Updated] (FLINK-11821) fix the error package location of KafkaDeserializationSchemaWrapper.java file

[ https://issues.apache.org/jira/browse/FLINK-11821?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-11821: --- Labels: pull-request-available (was: ) > fix the error package location of KafkaDeserializationSchemaWrapper.java file > -- > > Key: FLINK-11821 > URL: https://issues.apache.org/jira/browse/FLINK-11821 > Project: Flink > Issue Type: Bug > Components: Connectors / Kafka >Reporter: lamber-ken >Assignee: lamber-ken >Priority: Blocker > Labels: pull-request-available > > fix the error package location of KafkaDeserializationSchemaWrapper.java -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[GitHub] [flink] aljoscha commented on issue #7781: [FLINK-8354] Add KafkaDeserializationSchema that directly uses ConsumerRecord

aljoscha commented on issue #7781: [FLINK-8354] Add KafkaDeserializationSchema that directly uses ConsumerRecord URL: https://github.com/apache/flink/pull/7781#issuecomment-469591040 @lamber-ken How do you mean? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] lamber-ken commented on issue #7781: [FLINK-8354] Add KafkaDeserializationSchema that directly uses ConsumerRecord

lamber-ken commented on issue #7781: [FLINK-8354] Add KafkaDeserializationSchema that directly uses ConsumerRecord URL: https://github.com/apache/flink/pull/7781#issuecomment-469594239 @aljoscha, hi, the mismatch between KafkaDeserializationSchemaWrapper class's package name and file's location. #7902 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Commented] (FLINK-11779) CLI ignores -m parameter if high-availability is ZOOKEEPER

[

https://issues.apache.org/jira/browse/FLINK-11779?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16784246#comment-16784246

]

Gary Yao commented on FLINK-11779:

--

[~Tison] I am not sure what you are proposing. It does not make sense to

specify a host:port when submitting to a per-job cluster but if the user

submits to a yarn session cluster with the {{-m host:port}} option, the client

could respect the specified host:port.

> CLI ignores -m parameter if high-availability is ZOOKEEPER

> ---

>

> Key: FLINK-11779

> URL: https://issues.apache.org/jira/browse/FLINK-11779

> Project: Flink

> Issue Type: Bug

> Components: Command Line Client

>Affects Versions: 1.7.2, 1.8.0

>Reporter: Gary Yao

>Assignee: leesf

>Priority: Major

> Labels: pull-request-available

> Time Spent: 10m

> Remaining Estimate: 0h

>

> *Description*

> The CLI will ignores the host/port provided by the {{-m}} parameter if

> {{high-availability: ZOOKEEPER}} is configured in {{flink-conf.yaml}}

> *Expected behavior*

> * TBD: either document this behavior or give precedence to {{-m}}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Created] (FLINK-11822) Introduce Flink metadata handler

godfrey he created FLINK-11822: -- Summary: Introduce Flink metadata handler Key: FLINK-11822 URL: https://issues.apache.org/jira/browse/FLINK-11822 Project: Flink Issue Type: New Feature Components: API / Table SQL Reporter: godfrey he Assignee: godfrey he Calcite has defined various metadata handlers(e.g. `RowCount`, `Selectivity`) and provided default implementation(e.g. `RelMdRowCount`, `RelMdSelectivity`). However, the default implementation can't completely meet our requirements, e.g. some of its logic is incomplete,and some `RelNode`s are not considered. There are two options to meet our requirements: option 1. Extends from default implementation, overrides method to improve its logic, add new methods for new `RelNode`. The advantage of this option is we just need to focus on the additions and modifications. However, its shortcomings are also obvious: we have no control over the code of non-override methods in default implementation classes especially when upgrading the Calcite version. option 2. Extends from metadata handler interfaces, reimplement all the logic. Its shortcomings are very obvious, however we can control all the code logic that's what we want. so we choose option 2! In this jira, only basic metadata handles will be introduce, including: `FlinkRelMdPercentageOriginalRow`, `FlinkRelMdNonCumulativeCost`, `FlinkRelMdCumulativeCost`, `FlinkRelMdRowCount`, `FlinkRelMdSize`, `FlinkRelMdSelectivity`, `FlinkRelMdDistinctRowCoun`, `FlinkRelMdPopulationSize`, `FlinkRelMdColumnUniqueness`, `FlinkRelMdUniqueKeys` -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (FLINK-11822) Introduce Flink metadata handlers

[ https://issues.apache.org/jira/browse/FLINK-11822?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] godfrey he updated FLINK-11822: --- Summary: Introduce Flink metadata handlers (was: Introduce Flink metadata handler) > Introduce Flink metadata handlers > - > > Key: FLINK-11822 > URL: https://issues.apache.org/jira/browse/FLINK-11822 > Project: Flink > Issue Type: New Feature > Components: API / Table SQL >Reporter: godfrey he >Assignee: godfrey he >Priority: Major > > Calcite has defined various metadata handlers(e.g. `RowCount`, `Selectivity`) > and provided default implementation(e.g. `RelMdRowCount`, > `RelMdSelectivity`). However, the default implementation can't completely > meet our requirements, e.g. some of its logic is incomplete,and some > `RelNode`s are not considered. > There are two options to meet our requirements: > option 1. Extends from default implementation, overrides method to improve > its logic, add new methods for new `RelNode`. The advantage of this option is > we just need to focus on the additions and modifications. However, its > shortcomings are also obvious: we have no control over the code of > non-override methods in default implementation classes especially when > upgrading the Calcite version. > option 2. Extends from metadata handler interfaces, reimplement all the > logic. Its shortcomings are very obvious, however we can control all the code > logic that's what we want. > so we choose option 2! > In this jira, only basic metadata handles will be introduce, including: > `FlinkRelMdPercentageOriginalRow`, > `FlinkRelMdNonCumulativeCost`, > `FlinkRelMdCumulativeCost`, > `FlinkRelMdRowCount`, > `FlinkRelMdSize`, > `FlinkRelMdSelectivity`, > `FlinkRelMdDistinctRowCoun`, > `FlinkRelMdPopulationSize`, > `FlinkRelMdColumnUniqueness`, > `FlinkRelMdUniqueKeys` -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (FLINK-11822) Introduce Flink metadata handlers

[

https://issues.apache.org/jira/browse/FLINK-11822?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

godfrey he updated FLINK-11822:

---

Description:

Calcite has defined various metadata handlers(e.g. {{RowCoun}}, {{Selectivity}}

and provided default implementation(e.g. {{RelMdRowCount}},

{{RelMdSelectivity}}). However, the default implementation can't completely

meet our requirements, e.g. some of its logic is incomplete,and some

{{RelNode}}s are not considered.

There are two options to meet our requirements:

option 1. Extends from default implementation, overrides method to improve its

logic, add new methods for new {{RelNode}}. The advantage of this option is we

just need to focus on the additions and modifications. However, its

shortcomings are also obvious: we have no control over the code of non-override

methods in default implementation classes especially when upgrading the Calcite

version.

option 2. Extends from metadata handler interfaces, reimplement all the logic.

Its shortcomings are very obvious, however we can control all the code logic

that's what we want.

so we choose option 2!

In this jira, only basic metadata handles will be introduced, including:

{{FlinkRelMdPercentageOriginalRow}},

{{FlinkRelMdNonCumulativeCost}},

{{FlinkRelMdCumulativeCost}},

{{FlinkRelMdRowCount}},

{{FlinkRelMdSize}},

{{FlinkRelMdSelectivity}},

{{FlinkRelMdDistinctRowCoun}},

{{FlinkRelMdPopulationSize}},

{{FlinkRelMdColumnUniqueness}},

{{FlinkRelMdUniqueKeys}}

was:

Calcite has defined various metadata handlers(e.g. `RowCount`, `Selectivity`)

and provided default implementation(e.g. `RelMdRowCount`, `RelMdSelectivity`).

However, the default implementation can't completely meet our requirements,

e.g. some of its logic is incomplete,and some `RelNode`s are not considered.

There are two options to meet our requirements:

option 1. Extends from default implementation, overrides method to improve its

logic, add new methods for new `RelNode`. The advantage of this option is we

just need to focus on the additions and modifications. However, its

shortcomings are also obvious: we have no control over the code of non-override

methods in default implementation classes especially when upgrading the Calcite

version.

option 2. Extends from metadata handler interfaces, reimplement all the logic.

Its shortcomings are very obvious, however we can control all the code logic

that's what we want.

so we choose option 2!

In this jira, only basic metadata handles will be introduce, including:

`FlinkRelMdPercentageOriginalRow`,

`FlinkRelMdNonCumulativeCost`,

`FlinkRelMdCumulativeCost`,

`FlinkRelMdRowCount`,

`FlinkRelMdSize`,

`FlinkRelMdSelectivity`,

`FlinkRelMdDistinctRowCoun`,

`FlinkRelMdPopulationSize`,

`FlinkRelMdColumnUniqueness`,

`FlinkRelMdUniqueKeys`

> Introduce Flink metadata handlers

> -

>

> Key: FLINK-11822

> URL: https://issues.apache.org/jira/browse/FLINK-11822

> Project: Flink

> Issue Type: New Feature

> Components: API / Table SQL

>Reporter: godfrey he

>Assignee: godfrey he

>Priority: Major

>

> Calcite has defined various metadata handlers(e.g. {{RowCoun}},

> {{Selectivity}} and provided default implementation(e.g. {{RelMdRowCount}},

> {{RelMdSelectivity}}). However, the default implementation can't completely

> meet our requirements, e.g. some of its logic is incomplete,and some

> {{RelNode}}s are not considered.

> There are two options to meet our requirements:

> option 1. Extends from default implementation, overrides method to improve

> its logic, add new methods for new {{RelNode}}. The advantage of this option

> is we just need to focus on the additions and modifications. However, its

> shortcomings are also obvious: we have no control over the code of

> non-override methods in default implementation classes especially when

> upgrading the Calcite version.

> option 2. Extends from metadata handler interfaces, reimplement all the

> logic. Its shortcomings are very obvious, however we can control all the code

> logic that's what we want.

> so we choose option 2!

> In this jira, only basic metadata handles will be introduced, including:

> {{FlinkRelMdPercentageOriginalRow}},

> {{FlinkRelMdNonCumulativeCost}},

> {{FlinkRelMdCumulativeCost}},

> {{FlinkRelMdRowCount}},

> {{FlinkRelMdSize}},

> {{FlinkRelMdSelectivity}},

> {{FlinkRelMdDistinctRowCoun}},

> {{FlinkRelMdPopulationSize}},

> {{FlinkRelMdColumnUniqueness}},

> {{FlinkRelMdUniqueKeys}}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (FLINK-11822) Introduce Flink metadata handlers

[

https://issues.apache.org/jira/browse/FLINK-11822?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

godfrey he updated FLINK-11822:

---

Description:

Calcite has defined various metadata handlers(e.g. {{RowCoun}}, {{Selectivity}}

and provided default implementation(e.g. {{RelMdRowCount}},

{{RelMdSelectivity}}). However, the default implementation can't completely

meet our requirements, e.g. some of its logic is incomplete,and some RelNodes

are not considered.

There are two options to meet our requirements:

option 1. Extends from default implementation, overrides method to improve its

logic, add new methods for new {{RelNode}}. The advantage of this option is we

just need to focus on the additions and modifications. However, its

shortcomings are also obvious: we have no control over the code of non-override

methods in default implementation classes especially when upgrading the Calcite

version.

option 2. Extends from metadata handler interfaces, reimplement all the logic.

Its shortcomings are very obvious, however we can control all the code logic

that's what we want.

so we choose option 2!

In this jira, only basic metadata handles will be introduced, including:

{{FlinkRelMdPercentageOriginalRow}},

{{FlinkRelMdNonCumulativeCost}},

{{FlinkRelMdCumulativeCost}},

{{FlinkRelMdRowCount}},

{{FlinkRelMdSize}},

{{FlinkRelMdSelectivity}},

{{FlinkRelMdDistinctRowCoun}},

{{FlinkRelMdPopulationSize}},

{{FlinkRelMdColumnUniqueness}},

{{FlinkRelMdUniqueKeys}}

was:

Calcite has defined various metadata handlers(e.g. {{RowCoun}}, {{Selectivity}}

and provided default implementation(e.g. {{RelMdRowCount}},

{{RelMdSelectivity}}). However, the default implementation can't completely

meet our requirements, e.g. some of its logic is incomplete,and some

{{RelNode}}s are not considered.

There are two options to meet our requirements:

option 1. Extends from default implementation, overrides method to improve its

logic, add new methods for new {{RelNode}}. The advantage of this option is we

just need to focus on the additions and modifications. However, its

shortcomings are also obvious: we have no control over the code of non-override

methods in default implementation classes especially when upgrading the Calcite

version.

option 2. Extends from metadata handler interfaces, reimplement all the logic.

Its shortcomings are very obvious, however we can control all the code logic

that's what we want.

so we choose option 2!

In this jira, only basic metadata handles will be introduced, including:

{{FlinkRelMdPercentageOriginalRow}},

{{FlinkRelMdNonCumulativeCost}},

{{FlinkRelMdCumulativeCost}},

{{FlinkRelMdRowCount}},

{{FlinkRelMdSize}},

{{FlinkRelMdSelectivity}},

{{FlinkRelMdDistinctRowCoun}},

{{FlinkRelMdPopulationSize}},

{{FlinkRelMdColumnUniqueness}},

{{FlinkRelMdUniqueKeys}}

> Introduce Flink metadata handlers

> -

>

> Key: FLINK-11822

> URL: https://issues.apache.org/jira/browse/FLINK-11822

> Project: Flink

> Issue Type: New Feature

> Components: API / Table SQL

>Reporter: godfrey he

>Assignee: godfrey he

>Priority: Major

>

> Calcite has defined various metadata handlers(e.g. {{RowCoun}},

> {{Selectivity}} and provided default implementation(e.g. {{RelMdRowCount}},

> {{RelMdSelectivity}}). However, the default implementation can't completely

> meet our requirements, e.g. some of its logic is incomplete,and some RelNodes

> are not considered.

> There are two options to meet our requirements:

> option 1. Extends from default implementation, overrides method to improve

> its logic, add new methods for new {{RelNode}}. The advantage of this option

> is we just need to focus on the additions and modifications. However, its

> shortcomings are also obvious: we have no control over the code of

> non-override methods in default implementation classes especially when

> upgrading the Calcite version.

> option 2. Extends from metadata handler interfaces, reimplement all the

> logic. Its shortcomings are very obvious, however we can control all the code

> logic that's what we want.

> so we choose option 2!

> In this jira, only basic metadata handles will be introduced, including:

> {{FlinkRelMdPercentageOriginalRow}},

> {{FlinkRelMdNonCumulativeCost}},

> {{FlinkRelMdCumulativeCost}},

> {{FlinkRelMdRowCount}},

> {{FlinkRelMdSize}},

> {{FlinkRelMdSelectivity}},

> {{FlinkRelMdDistinctRowCoun}},

> {{FlinkRelMdPopulationSize}},

> {{FlinkRelMdColumnUniqueness}},

> {{FlinkRelMdUniqueKeys}}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] [flink] azagrebin commented on a change in pull request #7880: [FLINK-11336][zk] Delete ZNodes when ZooKeeperHaServices#closeAndCleanupAllData

azagrebin commented on a change in pull request #7880: [FLINK-11336][zk] Delete

ZNodes when ZooKeeperHaServices#closeAndCleanupAllData

URL: https://github.com/apache/flink/pull/7880#discussion_r262417205

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/highavailability/zookeeper/ZooKeeperHaServicesTest.java

##

@@ -0,0 +1,250 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.highavailability.zookeeper;

+

+import org.apache.flink.api.common.JobID;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.configuration.HighAvailabilityOptions;

+import org.apache.flink.runtime.blob.BlobKey;

+import org.apache.flink.runtime.blob.BlobStoreService;

+import org.apache.flink.runtime.concurrent.Executors;

+import org.apache.flink.runtime.highavailability.RunningJobsRegistry;

+import org.apache.flink.runtime.leaderelection.LeaderElectionService;

+import org.apache.flink.runtime.leaderelection.TestingContender;

+import org.apache.flink.runtime.leaderelection.TestingListener;

+import org.apache.flink.runtime.leaderretrieval.LeaderRetrievalService;

+import org.apache.flink.runtime.util.ZooKeeperUtils;

+import org.apache.flink.runtime.zookeeper.ZooKeeperResource;

+import org.apache.flink.util.TestLogger;

+import org.apache.flink.util.function.ThrowingConsumer;

+

+import org.apache.curator.framework.CuratorFramework;

+import org.apache.curator.framework.CuratorFrameworkFactory;

+import org.apache.curator.retry.RetryForever;

+import org.junit.AfterClass;

+import org.junit.Before;

+import org.junit.BeforeClass;

+import org.junit.ClassRule;

+import org.junit.Test;

+

+import javax.annotation.Nonnull;

+

+import java.io.File;

+import java.io.IOException;

+import java.util.List;

+

+import static org.hamcrest.Matchers.empty;

+import static org.hamcrest.Matchers.equalTo;

+import static org.hamcrest.Matchers.is;

+import static org.hamcrest.Matchers.not;

+import static org.hamcrest.Matchers.notNullValue;

+import static org.hamcrest.Matchers.nullValue;

+import static org.junit.Assert.assertThat;

+

+/**

+ * Tests for the {@link ZooKeeperHaServices}.

+ */

+public class ZooKeeperHaServicesTest extends TestLogger {

+

+ @ClassRule

+ public static final ZooKeeperResource ZOO_KEEPER_RESOURCE = new

ZooKeeperResource();

+

+ private static CuratorFramework client;

+

+ @BeforeClass

+ public static void setupClass() {

+ client = startCuratorFramework();

+ client.start();

+ }

+

+ @Before

+ public void setup() throws Exception {

+ final List children = client.getChildren().forPath("/");

+

+ for (String child : children) {

+ if (!child.equals("zookeeper")) {

+

client.delete().deletingChildrenIfNeeded().forPath('/' + child);

+ }

+ }

+ }

+

+ @AfterClass

+ public static void teardownClass() {

+ if (client != null) {

+ client.close();

+ }

+ }

+

+ /**

+* Tests that a simple {@link ZooKeeperHaServices#close()} does not

delete ZooKeeper paths.

+*/

+ @Test

+ public void testSimpleClose() throws Exception {

+ final String rootPath = "/foo/bar/flink";

+ final Configuration configuration =

createConfiguration(rootPath);

+

+ final TestingBlobStoreService blobStoreService = new

TestingBlobStoreService();

+

+ runCleanupTest(

+ configuration,

+ blobStoreService,

+ ZooKeeperHaServices::close);

+

+ assertThat(blobStoreService.isClosed(), is(true));

+ assertThat(blobStoreService.isClosedAndCleanedUpAllData(),

is(false));

+

+ final List children =

client.getChildren().forPath(rootPath);

+ assertThat(children, is(not(empty(;

+ }

+

+ /**

+* Tests that the {@link ZooKeeperHaServices} cleans up all paths if

+* it is closed via {@link

ZooKeeperHaServices#closeAndCleanupAllData()}.

+*/

+ @T

[GitHub] [flink] azagrebin commented on a change in pull request #7880: [FLINK-11336][zk] Delete ZNodes when ZooKeeperHaServices#closeAndCleanupAllData

azagrebin commented on a change in pull request #7880: [FLINK-11336][zk] Delete

ZNodes when ZooKeeperHaServices#closeAndCleanupAllData

URL: https://github.com/apache/flink/pull/7880#discussion_r262164377

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/highavailability/zookeeper/ZooKeeperHaServicesTest.java

##

@@ -0,0 +1,250 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.highavailability.zookeeper;

+

+import org.apache.flink.api.common.JobID;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.configuration.HighAvailabilityOptions;

+import org.apache.flink.runtime.blob.BlobKey;

+import org.apache.flink.runtime.blob.BlobStoreService;

+import org.apache.flink.runtime.concurrent.Executors;

+import org.apache.flink.runtime.highavailability.RunningJobsRegistry;

+import org.apache.flink.runtime.leaderelection.LeaderElectionService;

+import org.apache.flink.runtime.leaderelection.TestingContender;

+import org.apache.flink.runtime.leaderelection.TestingListener;

+import org.apache.flink.runtime.leaderretrieval.LeaderRetrievalService;

+import org.apache.flink.runtime.util.ZooKeeperUtils;

+import org.apache.flink.runtime.zookeeper.ZooKeeperResource;

+import org.apache.flink.util.TestLogger;

+import org.apache.flink.util.function.ThrowingConsumer;

+

+import org.apache.curator.framework.CuratorFramework;

+import org.apache.curator.framework.CuratorFrameworkFactory;

+import org.apache.curator.retry.RetryForever;

+import org.junit.AfterClass;

+import org.junit.Before;

+import org.junit.BeforeClass;

+import org.junit.ClassRule;

+import org.junit.Test;

+

+import javax.annotation.Nonnull;

+

+import java.io.File;

+import java.io.IOException;

+import java.util.List;

+

+import static org.hamcrest.Matchers.empty;

+import static org.hamcrest.Matchers.equalTo;

+import static org.hamcrest.Matchers.is;

+import static org.hamcrest.Matchers.not;

+import static org.hamcrest.Matchers.notNullValue;

+import static org.hamcrest.Matchers.nullValue;

+import static org.junit.Assert.assertThat;

+

+/**

+ * Tests for the {@link ZooKeeperHaServices}.

+ */

+public class ZooKeeperHaServicesTest extends TestLogger {

+

+ @ClassRule

+ public static final ZooKeeperResource ZOO_KEEPER_RESOURCE = new

ZooKeeperResource();

+

+ private static CuratorFramework client;

+

+ @BeforeClass

+ public static void setupClass() {

+ client = startCuratorFramework();

+ client.start();

+ }

+

+ @Before

+ public void setup() throws Exception {

+ final List children = client.getChildren().forPath("/");

+

+ for (String child : children) {

+ if (!child.equals("zookeeper")) {

+

client.delete().deletingChildrenIfNeeded().forPath('/' + child);

+ }

+ }

+ }

+

+ @AfterClass

+ public static void teardownClass() {

+ if (client != null) {

+ client.close();

+ }

+ }

+

+ /**

+* Tests that a simple {@link ZooKeeperHaServices#close()} does not

delete ZooKeeper paths.

+*/

+ @Test

+ public void testSimpleClose() throws Exception {

+ final String rootPath = "/foo/bar/flink";

+ final Configuration configuration =

createConfiguration(rootPath);

+

+ final TestingBlobStoreService blobStoreService = new

TestingBlobStoreService();

+

+ runCleanupTest(

+ configuration,

+ blobStoreService,

+ ZooKeeperHaServices::close);

+

+ assertThat(blobStoreService.isClosed(), is(true));

+ assertThat(blobStoreService.isClosedAndCleanedUpAllData(),

is(false));

+

+ final List children =

client.getChildren().forPath(rootPath);

+ assertThat(children, is(not(empty(;

+ }

+

+ /**

+* Tests that the {@link ZooKeeperHaServices} cleans up all paths if

+* it is closed via {@link

ZooKeeperHaServices#closeAndCleanupAllData()}.

+*/

+ @T

[GitHub] [flink] azagrebin commented on a change in pull request #7880: [FLINK-11336][zk] Delete ZNodes when ZooKeeperHaServices#closeAndCleanupAllData

azagrebin commented on a change in pull request #7880: [FLINK-11336][zk] Delete ZNodes when ZooKeeperHaServices#closeAndCleanupAllData URL: https://github.com/apache/flink/pull/7880#discussion_r262162846 ## File path: flink-runtime/src/test/resources/log4j-test.properties ## @@ -18,7 +18,7 @@ # Set root logger level to OFF to not flood build logs # set manually to INFO for debugging purposes -log4j.rootLogger=OFF, testlogger +log4j.rootLogger=INFO, testlogger Review comment: needs to be reverted? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] mxm commented on issue #7762: [FLINK-11146] Remove invalid codes in ClusterClient

mxm commented on issue #7762: [FLINK-11146] Remove invalid codes in ClusterClient URL: https://github.com/apache/flink/pull/7762#issuecomment-469615045 I'd merge. Anything blocking this from being merged? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] klion26 commented on a change in pull request #7895: [FLINK-11126][YARN][security] Filter out AMRMToken in TaskManager‘s credentials

klion26 commented on a change in pull request #7895: [FLINK-11126][YARN][security] Filter out AMRMToken in TaskManager‘s credentials URL: https://github.com/apache/flink/pull/7895#discussion_r262423416 ## File path: flink-yarn/src/test/java/org/apache/flink/yarn/UtilsTest.java ## @@ -18,25 +18,54 @@ package org.apache.flink.yarn; +import org.apache.flink.configuration.Configuration; +import org.apache.flink.core.testutils.CommonTestUtils; +import org.apache.flink.runtime.clusterframework.ContaineredTaskManagerParameters; import org.apache.flink.util.TestLogger; +import org.apache.hadoop.io.Text; +import org.apache.hadoop.security.Credentials; +import org.apache.hadoop.security.token.Token; +import org.apache.hadoop.security.token.TokenIdentifier; +import org.apache.hadoop.yarn.api.records.ContainerLaunchContext; +import org.apache.hadoop.yarn.conf.YarnConfiguration; import org.junit.Rule; import org.junit.Test; import org.junit.rules.TemporaryFolder; +import org.mockito.Mockito; +import org.mockito.invocation.InvocationOnMock; +import org.mockito.stubbing.Answer; +import org.slf4j.Logger; +import org.slf4j.LoggerFactory; +import java.io.ByteArrayInputStream; +import java.io.DataInputStream; +import java.io.File; import java.nio.file.Files; import java.nio.file.Path; +import java.util.Collection; import java.util.Collections; +import java.util.HashMap; +import java.util.Map; import java.util.stream.Stream; import static org.hamcrest.Matchers.equalTo; +import static org.junit.Assert.assertEquals; +import static org.junit.Assert.assertFalse; import static org.junit.Assert.assertThat; +import static org.junit.Assert.assertTrue; +import static org.mockito.ArgumentMatchers.anyInt; +import static org.mockito.ArgumentMatchers.anyString; +import static org.mockito.Mockito.doAnswer; +import static org.mockito.Mockito.mock; Review comment: Using mock may cause the test instability, I think. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] flinkbot commented on issue #7903: [FLINK-11822][table-planner-blink] Introduce Flink metadata handlers

flinkbot commented on issue #7903: [FLINK-11822][table-planner-blink] Introduce Flink metadata handlers URL: https://github.com/apache/flink/pull/7903#issuecomment-469617035 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Updated] (FLINK-11822) Introduce Flink metadata handlers

[

https://issues.apache.org/jira/browse/FLINK-11822?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated FLINK-11822:

---

Labels: pull-request-available (was: )

> Introduce Flink metadata handlers

> -

>

> Key: FLINK-11822

> URL: https://issues.apache.org/jira/browse/FLINK-11822

> Project: Flink

> Issue Type: New Feature

> Components: API / Table SQL

>Reporter: godfrey he

>Assignee: godfrey he

>Priority: Major

> Labels: pull-request-available

>

> Calcite has defined various metadata handlers(e.g. {{RowCoun}},

> {{Selectivity}} and provided default implementation(e.g. {{RelMdRowCount}},

> {{RelMdSelectivity}}). However, the default implementation can't completely

> meet our requirements, e.g. some of its logic is incomplete,and some RelNodes

> are not considered.

> There are two options to meet our requirements:

> option 1. Extends from default implementation, overrides method to improve

> its logic, add new methods for new {{RelNode}}. The advantage of this option

> is we just need to focus on the additions and modifications. However, its

> shortcomings are also obvious: we have no control over the code of

> non-override methods in default implementation classes especially when

> upgrading the Calcite version.

> option 2. Extends from metadata handler interfaces, reimplement all the

> logic. Its shortcomings are very obvious, however we can control all the code

> logic that's what we want.

> so we choose option 2!

> In this jira, only basic metadata handles will be introduced, including:

> {{FlinkRelMdPercentageOriginalRow}},

> {{FlinkRelMdNonCumulativeCost}},

> {{FlinkRelMdCumulativeCost}},

> {{FlinkRelMdRowCount}},

> {{FlinkRelMdSize}},

> {{FlinkRelMdSelectivity}},

> {{FlinkRelMdDistinctRowCoun}},

> {{FlinkRelMdPopulationSize}},

> {{FlinkRelMdColumnUniqueness}},

> {{FlinkRelMdUniqueKeys}}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] [flink] godfreyhe opened a new pull request #7903: [FLINK-11822][table-planner-blink] Introduce Flink metadata handlers

godfreyhe opened a new pull request #7903: [FLINK-11822][table-planner-blink] Introduce Flink metadata handlers URL: https://github.com/apache/flink/pull/7903 ## What is the purpose of the change *Introduce Flink metadata handlers* ## Brief change log - *adds Flink metadata handlers, including `FlinkRelMdPercentageOriginalRow`, `FlinkRelMdNonCumulativeCost`, `FlinkRelMdCumulativeCost`, `FlinkRelMdRowCount`, `FlinkRelMdSize`, `FlinkRelMdSelectivity`, `FlinkRelMdDistinctRowCoun`, `FlinkRelMdPopulationSize`, `FlinkRelMdColumnUniqueness`, `FlinkRelMdUniqueKeys`* - *adds dependent classes: `FlinkCalciteCatalogReader`, `FlinkRelBuilder`, `FlinkRelOptClusterFactory`, `FlinkTypeSystem` and `FlinkRelOptTable` for test* ## Verifying this change This change added tests and can be verified as follows: - *Added test for each metadata handler* ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (no) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no) - The serializers: (no) - The runtime per-record code paths (performance sensitive): (no) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: (no) - The S3 file system connector: (no) ## Documentation - Does this pull request introduce a new feature? (yes) - If yes, how is the feature documented? (not documented) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] zentol commented on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java

zentol commented on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java URL: https://github.com/apache/flink/pull/7902#issuecomment-469618123 Why do you consider the current package to be wrong? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Created] (FLINK-11823) TrySerializer#duplicate does not create a proper duplication

Dawid Wysakowicz created FLINK-11823: Summary: TrySerializer#duplicate does not create a proper duplication Key: FLINK-11823 URL: https://issues.apache.org/jira/browse/FLINK-11823 Project: Flink Issue Type: Bug Components: API / Type Serialization System Affects Versions: 1.7.2 Reporter: Dawid Wysakowicz Fix For: 1.7.3, 1.8.0 In flink 1.7.x TrySerializer#duplicate does not duplicate elemSerializer and throwableSerializer, which additionally is a KryoSerializer and therefore should always be duplicated. It was fixed in 1.8/master with 186b8df4155a4c171d71f1c806290bd94374416c -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (FLINK-11823) TrySerializer#duplicate does not create a proper duplicate

[ https://issues.apache.org/jira/browse/FLINK-11823?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Dawid Wysakowicz updated FLINK-11823: - Summary: TrySerializer#duplicate does not create a proper duplicate (was: TrySerializer#duplicate does not create a proper duplication) > TrySerializer#duplicate does not create a proper duplicate > -- > > Key: FLINK-11823 > URL: https://issues.apache.org/jira/browse/FLINK-11823 > Project: Flink > Issue Type: Bug > Components: API / Type Serialization System >Affects Versions: 1.7.2 >Reporter: Dawid Wysakowicz >Priority: Major > Fix For: 1.7.3, 1.8.0 > > > In flink 1.7.x TrySerializer#duplicate does not duplicate elemSerializer and > throwableSerializer, which additionally is a KryoSerializer and therefore > should always be duplicated. > It was fixed in 1.8/master with 186b8df4155a4c171d71f1c806290bd94374416c -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Commented] (FLINK-11823) TrySerializer#duplicate does not create a proper duplicate

[ https://issues.apache.org/jira/browse/FLINK-11823?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16784280#comment-16784280 ] Dawid Wysakowicz commented on FLINK-11823: -- Fixed in master: 186b8df4155a4c171d71f1c806290bd94374416c 1.8: 186b8df4155a4c171d71f1c806290bd94374416c > TrySerializer#duplicate does not create a proper duplicate > -- > > Key: FLINK-11823 > URL: https://issues.apache.org/jira/browse/FLINK-11823 > Project: Flink > Issue Type: Bug > Components: API / Type Serialization System >Affects Versions: 1.7.2 >Reporter: Dawid Wysakowicz >Priority: Major > Fix For: 1.7.3, 1.8.0 > > > In flink 1.7.x TrySerializer#duplicate does not duplicate elemSerializer and > throwableSerializer, which additionally is a KryoSerializer and therefore > should always be duplicated. > It was fixed in 1.8/master with 186b8df4155a4c171d71f1c806290bd94374416c -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Updated] (FLINK-11823) TrySerializer#duplicate does not create a proper duplicate

[ https://issues.apache.org/jira/browse/FLINK-11823?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Dawid Wysakowicz updated FLINK-11823: - Description: In flink 1.7.x TrySerializer#duplicate does not duplicate elemSerializer and throwableSerializer, which additionally is a KryoSerializer and therefore should always be duplicated. was: In flink 1.7.x TrySerializer#duplicate does not duplicate elemSerializer and throwableSerializer, which additionally is a KryoSerializer and therefore should always be duplicated. It was fixed in 1.8/master with 186b8df4155a4c171d71f1c806290bd94374416c > TrySerializer#duplicate does not create a proper duplicate > -- > > Key: FLINK-11823 > URL: https://issues.apache.org/jira/browse/FLINK-11823 > Project: Flink > Issue Type: Bug > Components: API / Type Serialization System >Affects Versions: 1.7.2 >Reporter: Dawid Wysakowicz >Priority: Major > Fix For: 1.7.3, 1.8.0 > > > In flink 1.7.x TrySerializer#duplicate does not duplicate elemSerializer and > throwableSerializer, which additionally is a KryoSerializer and therefore > should always be duplicated. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[GitHub] [flink] lamber-ken commented on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java

lamber-ken commented on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java URL: https://github.com/apache/flink/pull/7902#issuecomment-46962 > Why do you consider the current package to be wrong? hi, the mismatch between KafkaDeserializationSchemaWrapper class's package name and file's location This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] lamber-ken commented on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java

lamber-ken commented on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java URL: https://github.com/apache/flink/pull/7902#issuecomment-469622874  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] lamber-ken removed a comment on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java

lamber-ken removed a comment on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java URL: https://github.com/apache/flink/pull/7902#issuecomment-46962 > Why do you consider the current package to be wrong? hi, the mismatch between KafkaDeserializationSchemaWrapper class's package name and file's location This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] lamber-ken edited a comment on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java

lamber-ken edited a comment on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java URL: https://github.com/apache/flink/pull/7902#issuecomment-469622874 > Why do you consider the current package to be wrong? hi, the mismatch between KafkaDeserializationSchemaWrapper class's package name and file's location  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] lamber-ken edited a comment on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java

lamber-ken edited a comment on issue #7902: [FLINK-11821] fix the error package location of KafkaDeserializationSchemaWrapper.java URL: https://github.com/apache/flink/pull/7902#issuecomment-469622874 > Why do you consider the current package to be wrong? @zentol hi, the mismatch between KafkaDeserializationSchemaWrapper class's package name and file's location  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] carp84 commented on issue #7899: [FLINK-11731] [State Backends] Make DefaultOperatorStateBackend follow the builder pattern

carp84 commented on issue #7899: [FLINK-11731] [State Backends] Make DefaultOperatorStateBackend follow the builder pattern URL: https://github.com/apache/flink/pull/7899#issuecomment-469627041 Checked the travis build and confirmed the failure is irrelative with change here. And a [local travis check](https://travis-ci.org/carp84/flink/builds/501881135) with the same commit passed This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] zhijiangW commented on issue #7713: [FLINK-10995][network] Copy intermediate serialization results only once for broadcast mode

zhijiangW commented on issue #7713: [FLINK-10995][network] Copy intermediate serialization results only once for broadcast mode URL: https://github.com/apache/flink/pull/7713#issuecomment-469631030 @pnowojski , thanks for your review and suggestions! I have updated the codes for addressing the comments. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Created] (FLINK-11824) Event-time attribute cannot have same name as in original format

Fabian Hueske created FLINK-11824:

-

Summary: Event-time attribute cannot have same name as in original

format

Key: FLINK-11824

URL: https://issues.apache.org/jira/browse/FLINK-11824

Project: Flink

Issue Type: Bug

Components: API / Table SQL

Affects Versions: 1.7.2, 1.8.0

Reporter: Fabian Hueske

When a table is defined, event-time attributes are typically defined by linking

them to an existing field in the original format (e.g., CSV, Avro, JSON, ...).

However, right now, the event-time attribute in the defined table cannot have

the same name as the original field.

The following table definition fails with an exception:

{code}

// set up execution environment

val env = StreamExecutionEnvironment.getExecutionEnvironment

val tEnv = StreamTableEnvironment.create(env)

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

val names: Array[String] = Array("name", "t")

val types: Array[TypeInformation[_]] = Array(Types.STRING, Types.LONG)

tEnv.connect(new Kafka()

.version("universal")

.topic("namesTopic")

.property("zookeeper.connect", "localhost:2181")

.property("bootstrap.servers", "localhost:9092")

.property("group.id", "testGroup"))

.withFormat(new Csv()

.schema(Types.ROW(names, types)))

.withSchema(new Schema()

.field("name", Types.STRING)

.field("t", Types.SQL_TIMESTAMP) // changing "t" to "t2" works

.rowtime(new Rowtime()

.timestampsFromField("t")

.watermarksPeriodicAscending()))

.inAppendMode()

.registerTableSource("Names")

{code}

{code}

Exception in thread "main" org.apache.flink.table.api.ValidationException:

Field 't' could not be resolved by the field mapping.

at

org.apache.flink.table.sources.TableSourceUtil$.org$apache$flink$table$sources$TableSourceUtil$$resolveInputField(TableSourceUtil.scala:491)

at

org.apache.flink.table.sources.TableSourceUtil$$anonfun$org$apache$flink$table$sources$TableSourceUtil$$resolveInputFields$1.apply(TableSourceUtil.scala:521)

at

org.apache.flink.table.sources.TableSourceUtil$$anonfun$org$apache$flink$table$sources$TableSourceUtil$$resolveInputFields$1.apply(TableSourceUtil.scala:521)

at

scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at

scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at

scala.collection.IndexedSeqOptimized$class.foreach(IndexedSeqOptimized.scala:33)

at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:186)

at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)

at scala.collection.mutable.ArrayOps$ofRef.map(ArrayOps.scala:186)

at

org.apache.flink.table.sources.TableSourceUtil$.org$apache$flink$table$sources$TableSourceUtil$$resolveInputFields(TableSourceUtil.scala:521)

at

org.apache.flink.table.sources.TableSourceUtil$.validateTableSource(TableSourceUtil.scala:127)

at

org.apache.flink.table.plan.schema.StreamTableSourceTable.(StreamTableSourceTable.scala:33)

at

org.apache.flink.table.api.StreamTableEnvironment.registerTableSourceInternal(StreamTableEnvironment.scala:150)

at

org.apache.flink.table.api.TableEnvironment.registerTableSource(TableEnvironment.scala:541)

at

org.apache.flink.table.descriptors.ConnectTableDescriptor.registerTableSource(ConnectTableDescriptor.scala:47)

{code}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] [flink] azagrebin commented on issue #7186: [FLINK-10941] Keep slots which contain unconsumed result partitions

azagrebin commented on issue #7186: [FLINK-10941] Keep slots which contain unconsumed result partitions URL: https://github.com/apache/flink/pull/7186#issuecomment-469636966 @zhijiangW I agree we need to revisit this topic after shuffle refactoring @QiLuo-BD What do you think if we keep releasing of partitions as it is now but add a separate `isClosed` flag to subpartition/partition and use it instead of `isReleased`? The `PartitionRequestQueue.close` and `PartitionRequestQueue.channelInactive` could notify read view and subsequently subpartition/partition that it is closed. The task/slot could use `partition.isClosed` flag instead of `isReeleased` to report to resource manager that task executor can be released the same way as it is now in PR. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Created] (FLINK-11825) Resolve name clash of StateTTL TimeCharacteristic class

Fabian Hueske created FLINK-11825:

-

Summary: Resolve name clash of StateTTL TimeCharacteristic class

Key: FLINK-11825

URL: https://issues.apache.org/jira/browse/FLINK-11825

Project: Flink

Issue Type: Improvement

Components: Runtime / State Backends

Affects Versions: 1.7.2

Reporter: Fabian Hueske

The StateTTL feature introduced the class

\{{org.apache.flink.api.common.state.TimeCharacteristic}} which clashes with

\{{org.apache.flink.streaming.api.TimeCharacteristic}}.

This is a problem for two reasons:

1. Users get confused because the mistakenly import

\{{org.apache.flink.api.common.state.TimeCharacteristic}}.

2. When using the StateTTL feature, users need to spell out the package name

for \{{org.apache.flink.api.common.state.TimeCharacteristic}} because the other

class is most likely already imported.

Since \{{org.apache.flink.streaming.api.TimeCharacteristic}} is one of the most

used classes of the DataStream API, we should make sure that users can use it

without import problems.

These error are hard to spot and confusing for many users.

I see two ways to resolve the issue:

1. drop \{{org.apache.flink.api.common.state.TimeCharacteristic}} and use

\{{org.apache.flink.streaming.api.TimeCharacteristic}} throwing an exception if

an incorrect characteristic is used.

2. rename the class \{{org.apache.flink.api.common.state.TimeCharacteristic}}

to some other name.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] [flink] StefanRRichter commented on a change in pull request #7899: [FLINK-11731] [State Backends] Make DefaultOperatorStateBackend follow the builder pattern

StefanRRichter commented on a change in pull request #7899: [FLINK-11731]

[State Backends] Make DefaultOperatorStateBackend follow the builder pattern

URL: https://github.com/apache/flink/pull/7899#discussion_r262438032

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/state/DefaultOperatorStateBackendBuilder.java

##

@@ -0,0 +1,103 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.flink.runtime.state;

+

+import org.apache.flink.annotation.VisibleForTesting;

+import org.apache.flink.api.common.ExecutionConfig;

+import org.apache.flink.core.fs.CloseableRegistry;

+import org.apache.flink.util.IOUtils;

+

+import java.io.Serializable;

+import java.util.Collection;

+import java.util.HashMap;

+import java.util.Map;

+

+/**

+ * Builder class for {@link DefaultOperatorStateBackend} which handles all

necessary initializations and clean ups.

+ */

+public class DefaultOperatorStateBackendBuilder implements

+ StateBackendBuilder {

+ /** The user code classloader. */

+ @VisibleForTesting

+ protected final ClassLoader userClassloader;

+ /** The execution configuration. */

+ @VisibleForTesting

+ protected final ExecutionConfig executionConfig;

+ /** Flag to de/activate asynchronous snapshots. */

+ @VisibleForTesting

+ protected final boolean asynchronousSnapshots;

+ /** State handles for restore. */

+ @VisibleForTesting

+ protected final Collection restoreStateHandles;

+ @VisibleForTesting

+ protected final CloseableRegistry cancelStreamRegistry;

+

+

+ public DefaultOperatorStateBackendBuilder(

+ ClassLoader userClassloader,

+ ExecutionConfig executionConfig,

+ boolean asynchronousSnapshots,

+ Collection stateHandles,

+ CloseableRegistry cancelStreamRegistry) {

+ this.userClassloader = userClassloader;

+ this.executionConfig = executionConfig;

+ this.asynchronousSnapshots = asynchronousSnapshots;

+ this.restoreStateHandles = stateHandles;

+ this.cancelStreamRegistry = cancelStreamRegistry;

+ }

+

+ @Override

+ public DefaultOperatorStateBackend build() throws

BackendBuildingException {

+ JavaSerializer serializer = new

JavaSerializer<>();

Review comment:

One more comment about this `JavaSerializer`. This is one exception where I

would initialize the field inside the constructor of

`DefaultOperatorStateBackend` and not pass it in. The reasons are, that this

member has a similar intent as a `static final` field, only the class does not

support concurrency so we must create a new instance per object. This field is

always assigned the same type of object and it is also only used in the context

of a deprecated method. No new code or tests would ever be interested to pass

in something different here.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [flink] StefanRRichter commented on a change in pull request #7899: [FLINK-11731] [State Backends] Make DefaultOperatorStateBackend follow the builder pattern

StefanRRichter commented on a change in pull request #7899: [FLINK-11731] [State Backends] Make DefaultOperatorStateBackend follow the builder pattern URL: https://github.com/apache/flink/pull/7899#discussion_r262439351 ## File path: flink-runtime/src/main/java/org/apache/flink/runtime/state/DefaultOperatorStateBackend.java ## @@ -85,25 +73,15 @@ private final CloseableRegistry closeStreamOnCancelRegistry; /** -* Default serializer. Only used for the default operator state. -*/ - private final JavaSerializer javaSerializer; - - /** -* The user code classloader. +* Default typeSerializer. Only used for the default operator state. */ - private final ClassLoader userClassloader; + private final TypeSerializer typeSerializer; Review comment: I suggest to change the name of this field. `typeSerializer` is general and makes it look like this is a very commonly used field. However, this is more like a very static field, that always gets assigned the same type of serializer and is only used in deprecated context (see one of my other comments in the builder about this). I suggest something like `deprecatedDefaultJavaSerializer`. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] StefanRRichter commented on a change in pull request #7899: [FLINK-11731] [State Backends] Make DefaultOperatorStateBackend follow the builder pattern

StefanRRichter commented on a change in pull request #7899: [FLINK-11731]

[State Backends] Make DefaultOperatorStateBackend follow the builder pattern

URL: https://github.com/apache/flink/pull/7899#discussion_r262437095

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/state/DefaultOperatorStateBackendBuilder.java

##

@@ -0,0 +1,103 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.flink.runtime.state;

+

+import org.apache.flink.annotation.VisibleForTesting;

+import org.apache.flink.api.common.ExecutionConfig;

+import org.apache.flink.core.fs.CloseableRegistry;

+import org.apache.flink.util.IOUtils;

+

+import java.io.Serializable;

+import java.util.Collection;

+import java.util.HashMap;

+import java.util.Map;

+

+/**

+ * Builder class for {@link DefaultOperatorStateBackend} which handles all

necessary initializations and clean ups.

+ */

+public class DefaultOperatorStateBackendBuilder implements

+ StateBackendBuilder {

+ /** The user code classloader. */

+ @VisibleForTesting

+ protected final ClassLoader userClassloader;

+ /** The execution configuration. */

+ @VisibleForTesting

+ protected final ExecutionConfig executionConfig;

+ /** Flag to de/activate asynchronous snapshots. */

+ @VisibleForTesting

+ protected final boolean asynchronousSnapshots;

+ /** State handles for restore. */

+ @VisibleForTesting

+ protected final Collection restoreStateHandles;

+ @VisibleForTesting

+ protected final CloseableRegistry cancelStreamRegistry;

+

+

+ public DefaultOperatorStateBackendBuilder(

+ ClassLoader userClassloader,

+ ExecutionConfig executionConfig,

+ boolean asynchronousSnapshots,

+ Collection stateHandles,

+ CloseableRegistry cancelStreamRegistry) {

+ this.userClassloader = userClassloader;

+ this.executionConfig = executionConfig;

+ this.asynchronousSnapshots = asynchronousSnapshots;

+ this.restoreStateHandles = stateHandles;

+ this.cancelStreamRegistry = cancelStreamRegistry;

+ }

+

+ @Override

+ public DefaultOperatorStateBackend build() throws

BackendBuildingException {

+ JavaSerializer serializer = new

JavaSerializer<>();

Review comment:

I suggest to move some of the local variables (like this one) further down,

closer to their first usage. First, it is better to keep the scope of variables

as small as possible, second if we run in the exceptional case, those objects

have been constructed for nothing.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [flink] StefanRRichter commented on a change in pull request #7899: [FLINK-11731] [State Backends] Make DefaultOperatorStateBackend follow the builder pattern

StefanRRichter commented on a change in pull request #7899: [FLINK-11731]

[State Backends] Make DefaultOperatorStateBackend follow the builder pattern

URL: https://github.com/apache/flink/pull/7899#discussion_r262434116

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/state/DefaultOperatorStateBackendBuilder.java

##

@@ -0,0 +1,103 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.flink.runtime.state;

+

+import org.apache.flink.annotation.VisibleForTesting;

+import org.apache.flink.api.common.ExecutionConfig;

+import org.apache.flink.core.fs.CloseableRegistry;

+import org.apache.flink.util.IOUtils;

+

+import java.io.Serializable;

+import java.util.Collection;

+import java.util.HashMap;

+import java.util.Map;

+

+/**

+ * Builder class for {@link DefaultOperatorStateBackend} which handles all

necessary initializations and clean ups.

+ */

+public class DefaultOperatorStateBackendBuilder implements

+ StateBackendBuilder {

+ /** The user code classloader. */

+ @VisibleForTesting

+ protected final ClassLoader userClassloader;

+ /** The execution configuration. */

+ @VisibleForTesting

+ protected final ExecutionConfig executionConfig;

+ /** Flag to de/activate asynchronous snapshots. */

+ @VisibleForTesting

+ protected final boolean asynchronousSnapshots;

+ /** State handles for restore. */

+ @VisibleForTesting

+ protected final Collection restoreStateHandles;

+ @VisibleForTesting

+ protected final CloseableRegistry cancelStreamRegistry;

+

+

+ public DefaultOperatorStateBackendBuilder(

+ ClassLoader userClassloader,

+ ExecutionConfig executionConfig,

+ boolean asynchronousSnapshots,

+ Collection stateHandles,

+ CloseableRegistry cancelStreamRegistry) {

+ this.userClassloader = userClassloader;

+ this.executionConfig = executionConfig;

+ this.asynchronousSnapshots = asynchronousSnapshots;

+ this.restoreStateHandles = stateHandles;

+ this.cancelStreamRegistry = cancelStreamRegistry;

+ }

+

+ @Override

+ public DefaultOperatorStateBackend build() throws

BackendBuildingException {

+ JavaSerializer serializer = new

JavaSerializer<>();

+ Map> registeredOperatorStates

= new HashMap<>();

+ Map>

registeredBroadcastStates = new HashMap<>();

+ Map> accessedStatesByName =

new HashMap<>();

+ Map>

accessedBroadcastStatesByName = new HashMap<>();

+ CloseableRegistry cancelStreamRegistryForBackend = new

CloseableRegistry();

+ AbstractSnapshotStrategy snapshotStrategy =

+ new DefaultOperatorStateBackendSnapshotStrategy(

+ userClassloader,

+ asynchronousSnapshots,

+ registeredOperatorStates,

+ registeredBroadcastStates,

+ cancelStreamRegistryForBackend);

+ OperatorStateRestoreOperation restoreOperation = new

OperatorStateRestoreOperation(

+ cancelStreamRegistry,

+ userClassloader,

+ registeredOperatorStates,

+ registeredBroadcastStates,

+ restoreStateHandles

+ );

+ try {

+ restoreOperation.restore();

+ } catch (Throwable e) {

Review comment:

I would suggest to only catch `Exception` here, not `Throwable`.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [flink] tillrohrmann commented on a change in pull request #7880: [FLINK-11336][zk] Delete ZNodes when ZooKeeperHaServices#closeAndCleanupAllData

tillrohrmann commented on a change in pull request #7880: [FLINK-11336][zk] Delete ZNodes when ZooKeeperHaServices#closeAndCleanupAllData URL: https://github.com/apache/flink/pull/7880#discussion_r262455867 ## File path: flink-runtime/src/test/resources/log4j-test.properties ## @@ -18,7 +18,7 @@ # Set root logger level to OFF to not flood build logs # set manually to INFO for debugging purposes -log4j.rootLogger=OFF, testlogger +log4j.rootLogger=INFO, testlogger Review comment: Good catch. Will revert it. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [flink] tillrohrmann commented on a change in pull request #7880: [FLINK-11336][zk] Delete ZNodes when ZooKeeperHaServices#closeAndCleanupAllData

tillrohrmann commented on a change in pull request #7880: [FLINK-11336][zk]

Delete ZNodes when ZooKeeperHaServices#closeAndCleanupAllData

URL: https://github.com/apache/flink/pull/7880#discussion_r262457417

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/highavailability/zookeeper/ZooKeeperHaServicesTest.java

##

@@ -0,0 +1,250 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.highavailability.zookeeper;

+

+import org.apache.flink.api.common.JobID;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.configuration.HighAvailabilityOptions;

+import org.apache.flink.runtime.blob.BlobKey;

+import org.apache.flink.runtime.blob.BlobStoreService;

+import org.apache.flink.runtime.concurrent.Executors;

+import org.apache.flink.runtime.highavailability.RunningJobsRegistry;

+import org.apache.flink.runtime.leaderelection.LeaderElectionService;

+import org.apache.flink.runtime.leaderelection.TestingContender;

+import org.apache.flink.runtime.leaderelection.TestingListener;

+import org.apache.flink.runtime.leaderretrieval.LeaderRetrievalService;

+import org.apache.flink.runtime.util.ZooKeeperUtils;

+import org.apache.flink.runtime.zookeeper.ZooKeeperResource;

+import org.apache.flink.util.TestLogger;

+import org.apache.flink.util.function.ThrowingConsumer;

+