[GitHub] [flink] zhijiangW commented on issue #7549: [FLINK-11403][network] Remove ResultPartitionConsumableNotifier from ResultPartition

zhijiangW commented on issue #7549: [FLINK-11403][network] Remove ResultPartitionConsumableNotifier from ResultPartition

URL: https://github.com/apache/flink/pull/7549#issuecomment-474706964

@azagrebin, thanks for the reviews! I submitted a separate fixup commit to address the method access modifier. For the other issues, I left some comments that need further confirmation.

This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Updated] (FLINK-11972) The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR during the end-to-end test.

[

https://issues.apache.org/jira/browse/FLINK-11972?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

sunjincheng updated FLINK-11972:

Description:

The difference between 1.8.0 and 1.7.x is that 1.8.x no longer bundles the `hadoop-shaded` JAR into the dist. As a result, end-to-end tests that depend on `Hadoop` classes fail because those classes cannot be found, e.g. `java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem`. So we need to either improve the end-to-end test script or state explicitly in the README that the end-to-end tests need `flink-shaded-hadoop2-uber-.jar` on the classpath. Otherwise, we get an exception like:

{code:java}
[INFO] 3 instance(s) of taskexecutor are already running on jinchengsunjcs-iMac.local.
Starting taskexecutor daemon on host jinchengsunjcs-iMac.local.
java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem;
	at java.lang.Class.getDeclaredFields0(Native Method)
	at java.lang.Class.privateGetDeclaredFields(Class.java:2583)
	at java.lang.Class.getDeclaredFields(Class.java:1916)
	at org.apache.flink.api.java.ClosureCleaner.clean(ClosureCleaner.java:72)
	at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.clean(StreamExecutionEnvironment.java:1558)
	at org.apache.flink.streaming.api.datastream.DataStream.clean(DataStream.java:185)
	at org.apache.flink.streaming.api.datastream.DataStream.addSink(DataStream.java:1227)
	at org.apache.flink.streaming.tests.BucketingSinkTestProgram.main(BucketingSinkTestProgram.java:80)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:529)
	at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:421)
	at org.apache.flink.client.program.ClusterClient.run(ClusterClient.java:423)
	at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:813)
	at org.apache.flink.client.cli.CliFrontend.runProgram(CliFrontend.java:287)
	at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:213)
	at org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:1050)
	at org.apache.flink.client.cli.CliFrontend.lambda$main$11(CliFrontend.java:1126)
	at org.apache.flink.runtime.security.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:30)
	at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1126)
Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FileSystem
	at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
	... 22 more
Job () is running.{code}

So I think we can improve the test script or the README.

What do you think?
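A test script could fail fast with a clear message, instead of the `NoClassDefFoundError` above, by running a pre-flight check for the shaded Hadoop JAR. The following is a minimal sketch under two stated assumptions. First, it assumes the JAR is expected in the distribution's `lib/` directory and matches the name pattern `flink-shaded-hadoop2-uber-*.jar`. Second, the function name `check_hadoop_uber_jar` is hypothetical and is not part of the existing end-to-end scripts.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of the Flink test scripts).
# Assumption: the shaded Hadoop uber JAR is expected in "<flink dist>/lib".
check_hadoop_uber_jar() {
    local flink_dir="$1"
    local jar
    # If the glob matches nothing, the loop sees the literal pattern,
    # which fails the -f test, so we fall through to the error below.
    for jar in "${flink_dir}"/lib/flink-shaded-hadoop2-uber-*.jar; do
        if [ -f "${jar}" ]; then
            return 0
        fi
    done
    echo "No flink-shaded-hadoop2-uber-*.jar found in ${flink_dir}/lib;" \
         "add it to the classpath before running Hadoop-dependent tests." >&2
    return 1
}
```

A Hadoop-dependent test could then call `check_hadoop_uber_jar "${FLINK_DIR}" || exit 1` before it submits its job. This assumes `FLINK_DIR` points at the unpacked distribution. The alternative, documenting the requirement in the README, needs no script changes, but the error still only surfaces at job submission.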


[jira] [Updated] (FLINK-11971) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[

https://issues.apache.org/jira/browse/FLINK-11971?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

sunjincheng updated FLINK-11971:

Description:

When I ran the end-to-end tests on Mac OS, I found the following two problems:

1. The check on the `minikube status` output is not robust enough. Different minikube versions on different platforms return different strings, which causes the following misjudgment: the `Command: start_kubernetes_if_not_ruunning failed` error occurs even though `minikube` has actually started successfully. The root cause is a bug in the `test_kubernetes_embedded_job.sh` script. The error message is as follows:

!screenshot-1.png!

{code:java}
Current check logic: echo ${status} | grep -q "minikube: Running cluster: Running kubectl: Correctly Configured"
My local info:
jinchengsunjcs-iMac:flink-1.8.0 jincheng$ minikube status
host: Running
kubelet: Running
apiserver: Running
kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.101{code}

So I think we should improve the check logic for `minikube status`. What do you think?
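A more tolerant check could parse `minikube status` line by line instead of matching one fixed string. The current `echo ${status}` leaves `${status}` unquoted, so the shell collapses the newlines into spaces. That is why the expected pattern is written on a single line.

The following is a hypothetical sketch. The function name `minikube_status_ok` is made up. It only knows the two layouts mentioned in this issue: the older `minikube:`/`cluster:` one that the current pattern expects, and the newer `host:`/`kubelet:`/`apiserver:` one shown above. Other minikube versions may print different fields.

```shell
#!/usr/bin/env bash
# Hypothetical replacement for the single-string grep (not the actual fix).
# Accepts the older "minikube/cluster/kubectl" and the newer
# "host/kubelet/apiserver/kubectl" layouts of `minikube status`.
minikube_status_ok() {
    local status="$1"
    local components='^(minikube|cluster|host|kubelet|apiserver):'
    # Fail if any known component line reports something other than "Running".
    if printf '%s\n' "${status}" | grep -E "${components}" |
            grep -vq ': Running[[:space:]]*$'; then
        return 1
    fi
    # Require at least one running component, so empty output is rejected.
    printf '%s\n' "${status}" | grep -Eq "${components} Running[[:space:]]*$" || return 1
    # kubectl must be configured; newer versions append ": pointing to ...".
    printf '%s\n' "${status}" | grep -q '^kubectl: Correctly Configured'
}
```

In the test script it could be used as `minikube_status_ok "$(minikube status)"`. Quoting the command substitution keeps the line structure intact, so each component is checked on its own line rather than as part of one concatenated string.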


> Fix `Command: start_kubernetes_if_not_ruunning failed` error

>

>

> Key: FLINK-11971

> URL: https://issues.apache.org/jira/browse/FLINK-11971

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Assignee: Hequn Cheng

>Priority: Major

> Attachments: screenshot-1.png

>

>


--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[GitHub] [flink] zhijiangW commented on a change in pull request #7549: [FLINK-11403][network] Remove ResultPartitionConsumableNotifier from ResultPartition

zhijiangW commented on a change in pull request #7549: [FLINK-11403][network] Remove ResultPartitionConsumableNotifier from ResultPartition

URL: https://github.com/apache/flink/pull/7549#discussion_r267201040

##
File path: flink-runtime/src/main/java/org/apache/flink/runtime/io/network/partition/ResultPartition.java
##

@@ -19,432 +19,105 @@
package org.apache.flink.runtime.io.network.partition;
import org.apache.flink.api.common.JobID;
-import org.apache.flink.runtime.executiongraph.IntermediateResultPartition;
-import org.apache.flink.runtime.io.disk.iomanager.IOManager;
import org.apache.flink.runtime.io.network.api.writer.ResultPartitionWriter;
-import org.apache.flink.runtime.io.network.buffer.Buffer;
import org.apache.flink.runtime.io.network.buffer.BufferConsumer;
-import org.apache.flink.runtime.io.network.buffer.BufferPool;
-import org.apache.flink.runtime.io.network.buffer.BufferPoolOwner;
import org.apache.flink.runtime.io.network.buffer.BufferProvider;
-import org.apache.flink.runtime.io.network.partition.consumer.LocalInputChannel;
-import org.apache.flink.runtime.io.network.partition.consumer.RemoteInputChannel;
-import org.apache.flink.runtime.jobgraph.DistributionPattern;
import org.apache.flink.runtime.taskmanager.TaskActions;
-import org.apache.flink.runtime.taskmanager.TaskManager;
-
-import org.slf4j.Logger;
-import org.slf4j.LoggerFactory;
import java.io.IOException;
-import java.util.concurrent.atomic.AtomicBoolean;
-import java.util.concurrent.atomic.AtomicInteger;
-import static org.apache.flink.util.Preconditions.checkArgument;
-import static org.apache.flink.util.Preconditions.checkElementIndex;
import static org.apache.flink.util.Preconditions.checkNotNull;
-import static org.apache.flink.util.Preconditions.checkState;
/**
- * A result partition for data produced by a single task.
- *
- * This class is the runtime part of a logical {@link IntermediateResultPartition}. Essentially,
- * a result partition is a collection of {@link Buffer} instances. The buffers are organized in one
- * or more {@link ResultSubpartition} instances, which further partition the data depending on the
- * number of consuming tasks and the data {@link DistributionPattern}.
- *
- * Tasks, which consume a result partition have to request one of its subpartitions. The request
- * happens either remotely (see {@link RemoteInputChannel}) or locally (see {@link LocalInputChannel})
- *
- * Life-cycle
- *
- * The life-cycle of each result partition has three (possibly overlapping) phases:
- *
- * Produce:
- * Consume:
- * Release:
- *
- *
- * Lazy deployment and updates of consuming tasks
- *
- * Before a consuming task can request the result, it has to be deployed. The time of deployment
- * depends on the PIPELINED vs. BLOCKING characteristic of the result partition. With pipelined
- * results, receivers are deployed as soon as the first buffer is added to the result partition.
- * With blocking results on the other hand, receivers are deployed after the partition is finished.
- *
- * Buffer management
- *
- * State management
+ * A wrapper of result partition writer for handling notification of the consumable
+ * partition which is added a {@link BufferConsumer} or finished.
*/
-public class ResultPartition implements ResultPartitionWriter, BufferPoolOwner {
-
- private static final Logger LOG = LoggerFactory.getLogger(ResultPartition.class);
-
- private final String owningTaskName;
+public class ResultPartition implements ResultPartitionWriter {
private final TaskActions taskActions;
private final JobID jobId;
- private final ResultPartitionID partitionId;
-
- /** Type of this partition. Defines the concrete subpartition implementation to use. */
private final ResultPartitionType partitionType;
- /** The subpartitions of this partition. At least one. */
- private final ResultSubpartition[] subpartitions;
-
- private final ResultPartitionManager partitionManager;
+ private final ResultPartitionWriter partitionWriter;

Review comment:

In the final form, `ResultPartition` should not implement `ResultPartitionWriter`, and it could be moved to the `TaskManager` package instead of the current network package. The field in `ResultPartition` should eventually be a regular `ResultPartitionWriter`.

I understand your concern. However, after trying it, I found that keeping the special `NetworkResultPartition` in `ResultPartition` temporarily would cause other problems, mainly involving `Environment` and `RecordWriter`.

- `RecordWriter` -> `ResultPartition` -> `NetworkResultPartition`. There are many other implementations of `ResultPartitionWriter` used in tests. In `RecordWriterTest` we could not construct a proper `RecordWriter` based on a `ResultPartition` that contains a specific `NetworkResultPartition`.
- `Environment` should keep `ResultPartit

[jira] [Assigned] (FLINK-11970) TableEnvironment#registerFunction should overwrite if the same name function existed.

[ https://issues.apache.org/jira/browse/FLINK-11970?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

vinoyang reassigned FLINK-11970:

Assignee: vinoyang

> TableEnvironment#registerFunction should overwrite if the same name function existed.
>
> Key: FLINK-11970
> URL: https://issues.apache.org/jira/browse/FLINK-11970
> Project: Flink
> Issue Type: Improvement
> Reporter: Jeff Zhang
> Assignee: vinoyang
> Priority: Major
>
> Currently, an exception is thrown if I try to register a user function multiple times. Registering a UDF multiple times is very common in notebook scenarios, and I don't think overwriting would cause issues for users. E.g., if a user happens to register the same UDF name multiple times (while actually intending to register two different UDFs), they would get an exception at runtime when using the UDF whose registration is missing.

[jira] [Updated] (FLINK-11971) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[

https://issues.apache.org/jira/browse/FLINK-11971?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

sunjincheng updated FLINK-11971:

Description:

When I ran the end-to-end tests on Mac OS, I found the following two problems:

1. The check on the `minikube status` output is not robust enough. Different minikube versions on different platforms return different strings, which causes the following misjudgment: the `Command: start_kubernetes_if_not_ruunning failed` error occurs even though `minikube` has actually started successfully. The root cause is a bug in the `test_kubernetes_embedded_job.sh` script. The error message is as follows:

!screenshot-1.png!

{code:java}
Current check logic: echo ${status} | grep -q "minikube: Running cluster: Running kubectl: Correctly Configured"
My local message:
jinchengsunjcs-iMac:flink-1.8.0 jincheng$ minikube status
host: Running
kubelet: Running
apiserver: Running
kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.101{code}

So I think we should improve the check logic for `minikube status`. What do you think?

was:

When I did the end-to-end test under Mac OS, I found the following two problems:

1. The verification returned for different `minikube status` is not enough for

the robustness. The strings returned by different versions of different

platforms are different. the following misjudgment is caused:

When the `Command: start_kubernetes_if_not_ruunning failed` error occurs, the

`minikube` has actually started successfully. The core reason is that there is

a bug in the `test_kubernetes_embedded_job.sh` script. The error message as

follows:

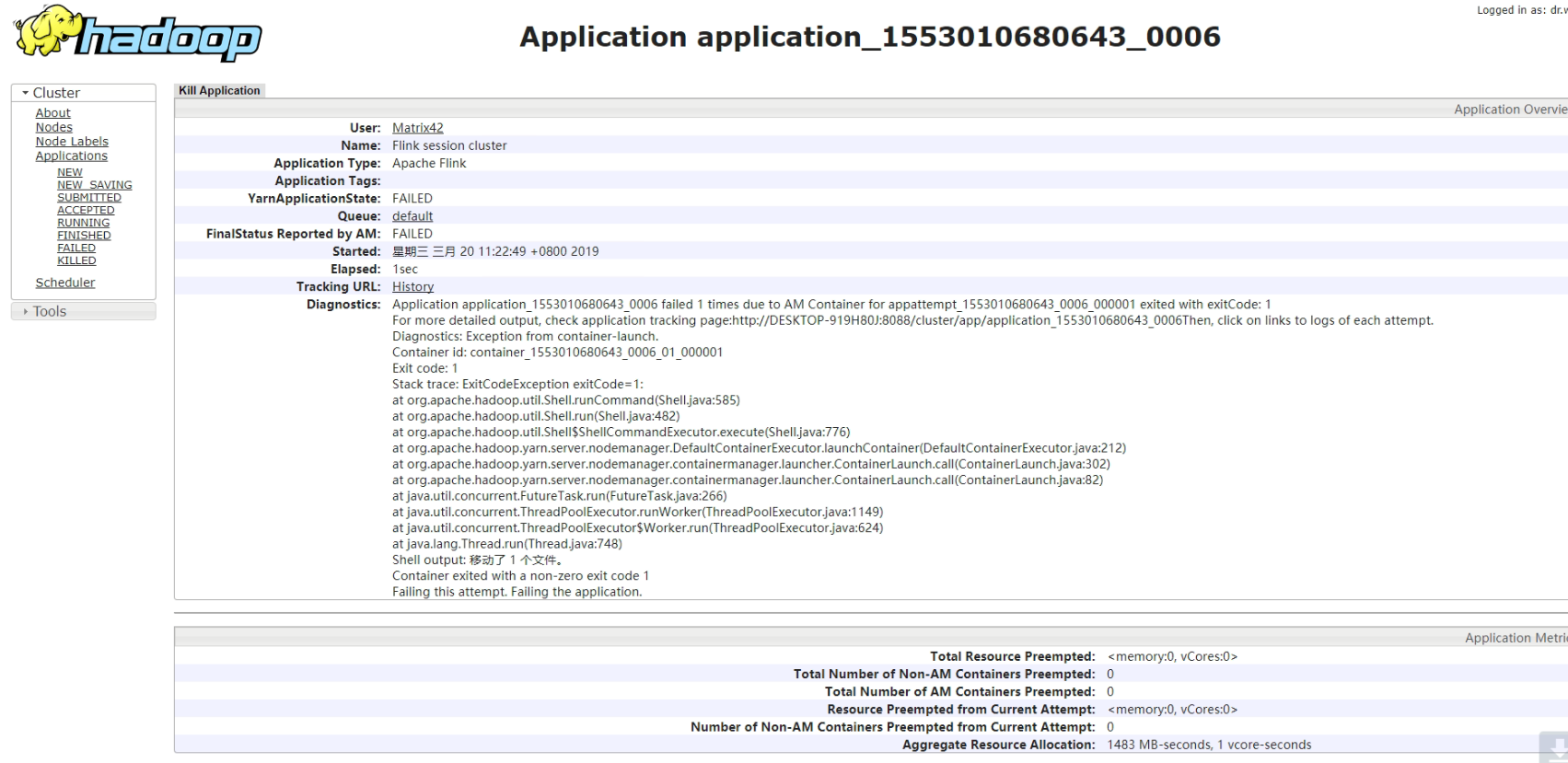

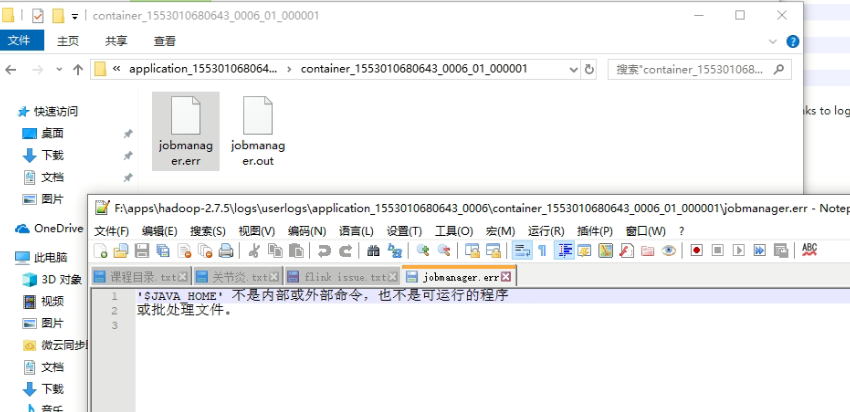

!image-2019-03-20-14-02-29-636.png!

!image-2019-03-20-14-04-17-933.png!

{code:java}

Current check logic: echo ${status} | grep -q "minikube: Running cluster:

Running kubectl: Correctly Configured"

My local messae

jinchengsunjcs-iMac:flink-1.8.0 jincheng$ minikube status

host: Running

kubelet: Running

apiserver: Running

kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.101{code}

So, I think we should improve the check logic of `minikube status`, What do you

think?

> Fix `Command: start_kubernetes_if_not_ruunning failed` error

>

>

> Key: FLINK-11971

> URL: https://issues.apache.org/jira/browse/FLINK-11971

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Assignee: Hequn Cheng

>Priority: Major

> Attachments: screenshot-1.png

>

>



[jira] [Closed] (FLINK-11949) Introduce DeclarativeAggregateFunction and AggsHandlerCodeGenerator to blink planner

[ https://issues.apache.org/jira/browse/FLINK-11949?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Kurt Young closed FLINK-11949.
--
Resolution: Implemented
Fix Version/s: 1.9.0

fixed in 4bd2db2abf73f233632a0f256ac00c30dba0c6ec

> Introduce DeclarativeAggregateFunction and AggsHandlerCodeGenerator to blink planner
>
> Key: FLINK-11949
> URL: https://issues.apache.org/jira/browse/FLINK-11949
> Project: Flink
> Issue Type: New Feature
> Components: SQL / Planner
> Reporter: Jingsong Lee
> Assignee: Jingsong Lee
> Priority: Major
> Labels: pull-request-available
> Fix For: 1.9.0
>
> Time Spent: 0.5h
> Remaining Estimate: 0h
>
> Introduce DeclarativeAggregateFunction: use Java Expressions to write an AggregateFunction, just like the Table API. Table then generates the corresponding code via the CodeGenerator, according to the user's Expression logic. This avoids the Java object overhead of the previous AggregateFunction.
> Introduce AggsHandlerCodeGenerator: generate a complete aggregation processing class from multiple AggregateFunctions.

[jira] [Assigned] (FLINK-11971) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[

https://issues.apache.org/jira/browse/FLINK-11971?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Hequn Cheng reassigned FLINK-11971:

---

Assignee: Hequn Cheng

> Fix `Command: start_kubernetes_if_not_ruunning failed` error

>

>

> Key: FLINK-11971

> URL: https://issues.apache.org/jira/browse/FLINK-11971

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Assignee: Hequn Cheng

>Priority: Major

> Attachments: screenshot-1.png

>

>



[jira] [Updated] (FLINK-11971) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[

https://issues.apache.org/jira/browse/FLINK-11971?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

sunjincheng updated FLINK-11971:

Summary: Fix `Command: start_kubernetes_if_not_ruunning failed` error (was: The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR during the end-to-end test.)

> Fix `Command: start_kubernetes_if_not_ruunning failed` error

>

>

> Key: FLINK-11971

> URL: https://issues.apache.org/jira/browse/FLINK-11971

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Priority: Major

>



[jira] [Updated] (FLINK-11971) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[

https://issues.apache.org/jira/browse/FLINK-11971?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

sunjincheng updated FLINK-11971:

Attachment: screenshot-1.png

> Fix `Command: start_kubernetes_if_not_ruunning failed` error

>

>

> Key: FLINK-11971

> URL: https://issues.apache.org/jira/browse/FLINK-11971

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Assignee: Hequn Cheng

>Priority: Major

> Attachments: screenshot-1.png

>

>



[jira] [Updated] (FLINK-11971) The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR during the end-to-end test.

[

https://issues.apache.org/jira/browse/FLINK-11971?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

sunjincheng updated FLINK-11971:

Summary: The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR during the end-to-end test. (was: Fix `Command: start_kubernetes_if_not_ruunning failed` error)

> The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR

> during the end-to-end test.

>

>

> Key: FLINK-11971

> URL: https://issues.apache.org/jira/browse/FLINK-11971

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Priority: Major

>



[jira] [Updated] (FLINK-11972) The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR during the end-to-end test.

[

https://issues.apache.org/jira/browse/FLINK-11972?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

sunjincheng updated FLINK-11972:

Summary: The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR during the end-to-end test. (was: Fix `Command: start_kubernetes_if_not_ruunning failed` error)

> The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR

> during the end-to-end test.

>

>

> Key: FLINK-11972

> URL: https://issues.apache.org/jira/browse/FLINK-11972

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Assignee: Hequn Cheng

>Priority: Major

>


--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (FLINK-11972) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[ https://issues.apache.org/jira/browse/FLINK-11972?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

sunjincheng updated FLINK-11972:

Description:

The difference between 1.8.0 and 1.7.x is that 1.8.x no longer bundles the
`hadoop-shaded` JAR in the dist. As a result, end-to-end tests fail when
`Hadoop`-related classes cannot be found, with errors such as:
`java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem`. So we need
to either improve the end-to-end test script or state explicitly in the README
that the end-to-end tests need `flink-shaded-hadoop2-uber-.jar` on the
classpath. Otherwise, we get an exception like the following:

{code:java}

[INFO] 3 instance(s) of taskexecutor are already running on jinchengsunjcs-iMac.local.
Starting taskexecutor daemon on host jinchengsunjcs-iMac.local.
java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem;
at java.lang.Class.getDeclaredFields0(Native Method)
at java.lang.Class.privateGetDeclaredFields(Class.java:2583)
at java.lang.Class.getDeclaredFields(Class.java:1916)
at org.apache.flink.api.java.ClosureCleaner.clean(ClosureCleaner.java:72)
at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.clean(StreamExecutionEnvironment.java:1558)
at org.apache.flink.streaming.api.datastream.DataStream.clean(DataStream.java:185)
at org.apache.flink.streaming.api.datastream.DataStream.addSink(DataStream.java:1227)
at org.apache.flink.streaming.tests.BucketingSinkTestProgram.main(BucketingSinkTestProgram.java:80)
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:529)
at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:421)
at org.apache.flink.client.program.ClusterClient.run(ClusterClient.java:423)
at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:813)
at org.apache.flink.client.cli.CliFrontend.runProgram(CliFrontend.java:287)
at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:213)
at org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:1050)
at org.apache.flink.client.cli.CliFrontend.lambda$main$11(CliFrontend.java:1126)
at org.apache.flink.runtime.security.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:30)
at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1126)
Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FileSystem
at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
... 22 more

Job () is running.{code}

So, I think we can improve the test script or the README.

What do you think?
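The test script could check for the shaded Hadoop JAR before it runs Hadoop-dependent tests. That way it fails fast with a clear message instead of surfacing a `NoClassDefFoundError` deep inside the job. The following is only a sketch under assumptions. The function name `has_shaded_hadoop_jar` is hypothetical and is not part of the Flink scripts. The sketch also assumes the JAR is expected in the distribution's `lib/` directory.

```shell
#!/usr/bin/env bash

# Hypothetical helper (not part of the Flink test scripts): returns 0 if the
# Flink distribution directory given as $1 contains a shaded Hadoop uber JAR
# in its lib/ folder, and 1 otherwise.
has_shaded_hadoop_jar() {
    local lib_dir="$1/lib"
    local jar
    for jar in "${lib_dir}"/flink-shaded-hadoop2-uber-*.jar; do
        # Without nullglob, an unmatched pattern is kept as a literal string,
        # so check that the file really exists before reporting success.
        if [ -e "${jar}" ]; then
            return 0
        fi
    done
    return 1
}
```

A test script could call `has_shaded_hadoop_jar "${FLINK_DIR}"`, assuming `FLINK_DIR` points at the unpacked distribution. When the function returns 1, the script could abort and ask the user to add `flink-shaded-hadoop2-uber-.jar` to `lib/`.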

was:

When I ran the end-to-end tests on macOS, I found the following two problems:
1. The check of the `minikube status` output is not robust enough: different
minikube versions on different platforms print different strings, which leads
to the following misjudgment:
when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,
`minikube` has actually started successfully. The root cause is a bug in the
`test_kubernetes_embedded_job.sh` script. The error message is as follows:

!image-2019-03-20-14-02-29-636.png!

!image-2019-03-20-14-04-17-933.png!

{code:java}

Current check logic: echo ${status} | grep -q "minikube: Running cluster: Running kubectl: Correctly Configured"

My local output:

jinchengsunjcs-iMac:flink-1.8.0 jincheng$ minikube status

host: Running

kubelet: Running

apiserver: Running

kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.101{code}

So, I think we should improve the check logic for `minikube status`. What do
you think?

> Fix `Command: start_kubernetes_if_not_ruunning failed` error

>

>

> Key: FLINK-11972

> URL: https://issues.apache.org/jira/browse/FLINK-11972

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Assignee: Hequn Cheng

>Priority: Major

> Attachments: image-2019-03-20-14-04-17-933.png

>

>

> The difference between 1.8.0 and 1.7.x is that 1.8.x no longer bundles the
> `hadoop-shaded` JAR in the dist. As a result, end-to-end tests fail when
> `Hadoop`-related classes cannot be found, with errors such as:
> `java.lang.NoClassDefFoundError: Lorg/apache/had

[jira] [Assigned] (FLINK-11972) The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR during the end-to-end test.

[ https://issues.apache.org/jira/browse/FLINK-11972?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Hequn Cheng reassigned FLINK-11972:

---

Assignee: (was: Hequn Cheng)

> The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR

> during the end-to-end test.

>

>

> Key: FLINK-11972

> URL: https://issues.apache.org/jira/browse/FLINK-11972

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Priority: Major

>

> The difference between 1.8.0 and 1.7.x is that 1.8.x no longer bundles the
> `hadoop-shaded` JAR in the dist. As a result, end-to-end tests fail when
> `Hadoop`-related classes cannot be found, with errors such as:
> `java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem`. So we
> need to either improve the end-to-end test script or state explicitly in the
> README that the end-to-end tests need `flink-shaded-hadoop2-uber-.jar` on
> the classpath. Otherwise, we get an exception like the following:

> {code:java}

> [INFO] 3 instance(s) of taskexecutor are already running on jinchengsunjcs-iMac.local.
> Starting taskexecutor daemon on host jinchengsunjcs-iMac.local.
> java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem;
> at java.lang.Class.getDeclaredFields0(Native Method)
> at java.lang.Class.privateGetDeclaredFields(Class.java:2583)
> at java.lang.Class.getDeclaredFields(Class.java:1916)
> at org.apache.flink.api.java.ClosureCleaner.clean(ClosureCleaner.java:72)
> at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.clean(StreamExecutionEnvironment.java:1558)
> at org.apache.flink.streaming.api.datastream.DataStream.clean(DataStream.java:185)
> at org.apache.flink.streaming.api.datastream.DataStream.addSink(DataStream.java:1227)
> at org.apache.flink.streaming.tests.BucketingSinkTestProgram.main(BucketingSinkTestProgram.java:80)
> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
> at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
> at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
> at java.lang.reflect.Method.invoke(Method.java:498)
> at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:529)
> at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:421)
> at org.apache.flink.client.program.ClusterClient.run(ClusterClient.java:423)
> at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:813)
> at org.apache.flink.client.cli.CliFrontend.runProgram(CliFrontend.java:287)
> at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:213)
> at org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:1050)
> at org.apache.flink.client.cli.CliFrontend.lambda$main$11(CliFrontend.java:1126)
> at org.apache.flink.runtime.security.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:30)
> at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1126)
> Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FileSystem
> at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
> at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
> at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
> ... 22 more

> Job () is running.{code}

> So, I think we can improve the test script or the README.

> What do you think?


[jira] [Updated] (FLINK-11972) The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR during the end-to-end test.

[ https://issues.apache.org/jira/browse/FLINK-11972?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

sunjincheng updated FLINK-11972:

Attachment: (was: image-2019-03-20-14-04-17-933.png)

> The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR

> during the end-to-end test.

>

>

> Key: FLINK-11972

> URL: https://issues.apache.org/jira/browse/FLINK-11972

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Assignee: Hequn Cheng

>Priority: Major

>

> The difference between 1.8.0 and 1.7.x is that 1.8.x no longer bundles the
> `hadoop-shaded` JAR in the dist. As a result, end-to-end tests fail when
> `Hadoop`-related classes cannot be found, with errors such as:
> `java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem`. So we
> need to either improve the end-to-end test script or state explicitly in the
> README that the end-to-end tests need `flink-shaded-hadoop2-uber-.jar` on
> the classpath. Otherwise, we get an exception like the following:

> {code:java}

> [INFO] 3 instance(s) of taskexecutor are already running on jinchengsunjcs-iMac.local.
> Starting taskexecutor daemon on host jinchengsunjcs-iMac.local.
> java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem;
> at java.lang.Class.getDeclaredFields0(Native Method)
> at java.lang.Class.privateGetDeclaredFields(Class.java:2583)
> at java.lang.Class.getDeclaredFields(Class.java:1916)
> at org.apache.flink.api.java.ClosureCleaner.clean(ClosureCleaner.java:72)
> at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.clean(StreamExecutionEnvironment.java:1558)
> at org.apache.flink.streaming.api.datastream.DataStream.clean(DataStream.java:185)
> at org.apache.flink.streaming.api.datastream.DataStream.addSink(DataStream.java:1227)
> at org.apache.flink.streaming.tests.BucketingSinkTestProgram.main(BucketingSinkTestProgram.java:80)
> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
> at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
> at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
> at java.lang.reflect.Method.invoke(Method.java:498)
> at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:529)
> at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:421)
> at org.apache.flink.client.program.ClusterClient.run(ClusterClient.java:423)
> at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:813)
> at org.apache.flink.client.cli.CliFrontend.runProgram(CliFrontend.java:287)
> at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:213)
> at org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:1050)
> at org.apache.flink.client.cli.CliFrontend.lambda$main$11(CliFrontend.java:1126)
> at org.apache.flink.runtime.security.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:30)
> at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1126)
> Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FileSystem
> at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
> at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
> at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
> ... 22 more

> Job () is running.{code}

> So, I think we can improve the test script or the README.

> What do you think?


[jira] [Updated] (FLINK-11971) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[ https://issues.apache.org/jira/browse/FLINK-11971?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

sunjincheng updated FLINK-11971:

Description:

When I ran the end-to-end tests on macOS, I found the following two problems:
1. The check of the `minikube status` output is not robust enough: different
minikube versions on different platforms print different strings, which leads
to the following misjudgment:
when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,
`minikube` has actually started successfully. The root cause is a bug in the
`test_kubernetes_embedded_job.sh` script. The error message is as follows:

!image-2019-03-20-14-02-29-636.png!

!image-2019-03-20-14-04-17-933.png!

{code:java}

Current check logic: echo ${status} | grep -q "minikube: Running cluster: Running kubectl: Correctly Configured"

My local output:

jinchengsunjcs-iMac:flink-1.8.0 jincheng$ minikube status

host: Running

kubelet: Running

apiserver: Running

kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.101{code}

So, I think we should improve the check logic for `minikube status`. What do
you think?
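The check could be made tolerant of both output formats, for example as in the sketch below. This is not the actual Flink script, and the function name `is_minikube_running` is hypothetical. The older line labels (`minikube:`, `cluster:`) are inferred from the current grep pattern, so treat them as an assumption. The current logic runs `echo ${status}` unquoted, which collapses the multi-line output into a single line before matching. Quoting `"${status}"` keeps the lines intact, so each component can be checked on its own.

```shell
#!/usr/bin/env bash

# Hypothetical helper (not the actual Flink test script): returns 0 if the
# given `minikube status` output shows a running cluster, in either the older
# "minikube:/cluster:" format or the newer "host:/apiserver:" format.
is_minikube_running() {
    local status="$1"

    # "${status}" is quoted so echo keeps one component per line and grep's
    # ^ anchor applies to each line separately.
    # The VM/host itself must be running.
    echo "${status}" | grep -Eq '^(minikube|host): Running' || return 1

    # The API server must be running.
    echo "${status}" | grep -Eq '^(cluster|apiserver): Running' || return 1

    # kubectl must be configured; newer versions append
    # ": pointing to minikube-vm at <ip>", so only the prefix is matched.
    echo "${status}" | grep -q '^kubectl: Correctly Configured'
}
```

A caller would use `is_minikube_running "$(minikube status)"`. It returns 0 only when the host, the API server, and the kubectl configuration all look healthy, regardless of which label set the installed minikube version prints.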

was:

The difference between 1.8.0 and 1.7.x is that 1.8.x no longer bundles the
`hadoop-shaded` JAR in the dist. As a result, end-to-end tests fail when
`Hadoop`-related classes cannot be found, with errors such as:
`java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem`. So we need
to either improve the end-to-end test script or state explicitly in the README
that the end-to-end tests need `flink-shaded-hadoop2-uber-.jar` on the
classpath. Otherwise, we get an exception like the following:

{code:java}

[INFO] 3 instance(s) of taskexecutor are already running on jinchengsunjcs-iMac.local.
Starting taskexecutor daemon on host jinchengsunjcs-iMac.local.
java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem;
at java.lang.Class.getDeclaredFields0(Native Method)
at java.lang.Class.privateGetDeclaredFields(Class.java:2583)
at java.lang.Class.getDeclaredFields(Class.java:1916)
at org.apache.flink.api.java.ClosureCleaner.clean(ClosureCleaner.java:72)
at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.clean(StreamExecutionEnvironment.java:1558)
at org.apache.flink.streaming.api.datastream.DataStream.clean(DataStream.java:185)
at org.apache.flink.streaming.api.datastream.DataStream.addSink(DataStream.java:1227)
at org.apache.flink.streaming.tests.BucketingSinkTestProgram.main(BucketingSinkTestProgram.java:80)
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:529)
at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:421)
at org.apache.flink.client.program.ClusterClient.run(ClusterClient.java:423)
at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:813)
at org.apache.flink.client.cli.CliFrontend.runProgram(CliFrontend.java:287)
at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:213)
at org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:1050)
at org.apache.flink.client.cli.CliFrontend.lambda$main$11(CliFrontend.java:1126)
at org.apache.flink.runtime.security.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:30)
at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1126)
Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FileSystem
at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
... 22 more

Job () is running.{code}

So, I think we can improve the test script or the README.

What do you think?

> Fix `Command: start_kubernetes_if_not_ruunning failed` error

>

>

> Key: FLINK-11971

> URL: https://issues.apache.org/jira/browse/FLINK-11971

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Priority: Major

>

> When I ran the end-to-end tests on macOS, I found the following two
> problems:
> 1. The check of the `minikube status` output is not robust enough: different
> minikube versions on different platforms print different strings, which
> leads to the following misjudgment:
> when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,

[jira] [Updated] (FLINK-11971) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[ https://issues.apache.org/jira/browse/FLINK-11971?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

sunjincheng updated FLINK-11971:

Summary: Fix `Command: start_kubernetes_if_not_ruunning failed` error (was: The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR during the end-to-end test.)

> Fix `Command: start_kubernetes_if_not_ruunning failed` error

>

>

> Key: FLINK-11971

> URL: https://issues.apache.org/jira/browse/FLINK-11971

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Priority: Major

>

> The difference between 1.8.0 and 1.7.x is that 1.8.x no longer bundles the
> `hadoop-shaded` JAR in the dist. As a result, end-to-end tests fail when
> `Hadoop`-related classes cannot be found, with errors such as:
> `java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem`. So we
> need to either improve the end-to-end test script or state explicitly in the
> README that the end-to-end tests need `flink-shaded-hadoop2-uber-.jar` on
> the classpath. Otherwise, we get an exception like the following:

> {code:java}

> [INFO] 3 instance(s) of taskexecutor are already running on jinchengsunjcs-iMac.local.
> Starting taskexecutor daemon on host jinchengsunjcs-iMac.local.
> java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem;
> at java.lang.Class.getDeclaredFields0(Native Method)
> at java.lang.Class.privateGetDeclaredFields(Class.java:2583)
> at java.lang.Class.getDeclaredFields(Class.java:1916)
> at org.apache.flink.api.java.ClosureCleaner.clean(ClosureCleaner.java:72)
> at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.clean(StreamExecutionEnvironment.java:1558)
> at org.apache.flink.streaming.api.datastream.DataStream.clean(DataStream.java:185)
> at org.apache.flink.streaming.api.datastream.DataStream.addSink(DataStream.java:1227)
> at org.apache.flink.streaming.tests.BucketingSinkTestProgram.main(BucketingSinkTestProgram.java:80)
> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
> at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
> at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
> at java.lang.reflect.Method.invoke(Method.java:498)
> at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:529)
> at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:421)
> at org.apache.flink.client.program.ClusterClient.run(ClusterClient.java:423)
> at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:813)
> at org.apache.flink.client.cli.CliFrontend.runProgram(CliFrontend.java:287)
> at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:213)
> at org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:1050)
> at org.apache.flink.client.cli.CliFrontend.lambda$main$11(CliFrontend.java:1126)
> at org.apache.flink.runtime.security.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:30)
> at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1126)
> Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FileSystem
> at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
> at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
> at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
> ... 22 more

> Job () is running.{code}

> So, I think we can improve the test script or the README.

> What do you think?


[GitHub] [flink] KurtYoung merged pull request #8001: [FLINK-11949][table-planner-blink] Introduce DeclarativeAggregateFunction and AggsHandlerCodeGenerator to blink planner

KurtYoung merged pull request #8001: [FLINK-11949][table-planner-blink] Introduce DeclarativeAggregateFunction and AggsHandlerCodeGenerator to blink planner URL: https://github.com/apache/flink/pull/8001

[jira] [Updated] (FLINK-11972) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[ https://issues.apache.org/jira/browse/FLINK-11972?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

sunjincheng updated FLINK-11972:

Attachment: (was: image-2019-03-20-14-01-05-647.png)

> Fix `Command: start_kubernetes_if_not_ruunning failed` error
>
>
> Key: FLINK-11972
> URL: https://issues.apache.org/jira/browse/FLINK-11972
> Project: Flink
> Issue Type: Bug
> Components: Tests
>Affects Versions: 1.8.0, 1.9.0
>Reporter: sunjincheng
>Assignee: Hequn Cheng
>Priority: Major
>
> When I ran the end-to-end tests on macOS, I found the following two
> problems:
> 1. The check of the `minikube status` output is not robust enough: different
> minikube versions on different platforms print different strings, which
> leads to the following misjudgment:
> when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,
> `minikube` has actually started successfully. The root cause is a bug in
> the `test_kubernetes_embedded_job.sh` script. The error message is as
> follows:
> !image-2019-03-20-14-02-29-636.png!
>
> So, I think we should improve the check logic for `minikube status`. What
> do you think?

[jira] [Assigned] (FLINK-11972) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[ https://issues.apache.org/jira/browse/FLINK-11972?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Hequn Cheng reassigned FLINK-11972:
---
Assignee: Hequn Cheng

> Fix `Command: start_kubernetes_if_not_ruunning failed` error
>
>
> Key: FLINK-11972
> URL: https://issues.apache.org/jira/browse/FLINK-11972
> Project: Flink
> Issue Type: Bug
> Components: Tests
>Affects Versions: 1.8.0, 1.9.0
>Reporter: sunjincheng
>Assignee: Hequn Cheng
>Priority: Major
>
> When I ran the end-to-end tests on macOS, I found the following two
> problems:
> 1. The check of the `minikube status` output is not robust enough: different
> minikube versions on different platforms print different strings, which
> leads to the following misjudgment:
> when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,
> `minikube` has actually started successfully. The root cause is a bug in
> the `test_kubernetes_embedded_job.sh` script. The error message is as
> follows:
> !image-2019-03-20-14-02-29-636.png!
>
> So, I think we should improve the check logic for `minikube status`. What
> do you think?

[jira] [Updated] (FLINK-11972) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[ https://issues.apache.org/jira/browse/FLINK-11972?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

sunjincheng updated FLINK-11972:

Description:

When I ran the end-to-end tests on macOS, I found the following two problems:
1. The check of the `minikube status` output is not robust enough: different
minikube versions on different platforms print different strings, which leads
to the following misjudgment:
when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,
`minikube` has actually started successfully. The root cause is a bug in the
`test_kubernetes_embedded_job.sh` script. The error message is as follows:

!image-2019-03-20-14-02-29-636.png!

!image-2019-03-20-14-04-17-933.png!

{code:java}

Current check logic: echo ${status} | grep -q "minikube: Running cluster: Running kubectl: Correctly Configured"

My local output:

jinchengsunjcs-iMac:flink-1.8.0 jincheng$ minikube status

host: Running

kubelet: Running

apiserver: Running

kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.101{code}

So, I think we should improve the check logic for `minikube status`. What do
you think?

was:

When I ran the end-to-end tests on macOS, I found the following two problems:
1. The check of the `minikube status` output is not robust enough: different
minikube versions on different platforms print different strings, which leads
to the following misjudgment:
when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,
`minikube` has actually started successfully. The root cause is a bug in the
`test_kubernetes_embedded_job.sh` script. The error message is as follows:

!image-2019-03-20-14-02-29-636.png!

!image-2019-03-20-14-04-17-933.png!

So, I think we should improve the check logic for `minikube status`. What do
you think?

> Fix `Command: start_kubernetes_if_not_ruunning failed` error

>

>

> Key: FLINK-11972

> URL: https://issues.apache.org/jira/browse/FLINK-11972

> Project: Flink

> Issue Type: Bug

> Components: Tests

>Affects Versions: 1.8.0, 1.9.0

>Reporter: sunjincheng

>Assignee: Hequn Cheng

>Priority: Major

> Attachments: image-2019-03-20-14-04-17-933.png

>

>

> When I ran the end-to-end tests on macOS, I found the following two
> problems:
> 1. The check of the `minikube status` output is not robust enough: different
> minikube versions on different platforms print different strings, which
> leads to the following misjudgment:
> when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,
> `minikube` has actually started successfully. The root cause is a bug in
> the `test_kubernetes_embedded_job.sh` script. The error message is as
> follows:

> !image-2019-03-20-14-02-29-636.png!

> !image-2019-03-20-14-04-17-933.png!

>

> {code:java}

> Current check logic: echo ${status} | grep -q "minikube: Running cluster: Running kubectl: Correctly Configured"
> My local output:

> jinchengsunjcs-iMac:flink-1.8.0 jincheng$ minikube status

> host: Running

> kubelet: Running

> apiserver: Running

> kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.101{code}

> So, I think we should improve the check logic for `minikube status`. What
> do you think?

>


[jira] [Updated] (FLINK-11972) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[ https://issues.apache.org/jira/browse/FLINK-11972?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

sunjincheng updated FLINK-11972:

Description:

When I ran the end-to-end tests on macOS, I found the following two problems:
1. The check of the `minikube status` output is not robust enough: different
minikube versions on different platforms print different strings, which leads
to the following misjudgment:
when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,
`minikube` has actually started successfully. The root cause is a bug in the
`test_kubernetes_embedded_job.sh` script. The error message is as follows:
!image-2019-03-20-14-02-29-636.png!
!image-2019-03-20-14-04-17-933.png!
So, I think we should improve the check logic for `minikube status`. What do
you think?

was:

When I ran the end-to-end tests on macOS, I found the following two problems:
1. The check of the `minikube status` output is not robust enough: different
minikube versions on different platforms print different strings, which leads
to the following misjudgment:
when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,
`minikube` has actually started successfully. The root cause is a bug in the
`test_kubernetes_embedded_job.sh` script. The error message is as follows:
!image-2019-03-20-14-02-29-636.png!
So, I think we should improve the check logic for `minikube status`. What do
you think?

> Fix `Command: start_kubernetes_if_not_ruunning failed` error
>
>
> Key: FLINK-11972
> URL: https://issues.apache.org/jira/browse/FLINK-11972
> Project: Flink
> Issue Type: Bug
> Components: Tests
>Affects Versions: 1.8.0, 1.9.0
>Reporter: sunjincheng
>Assignee: Hequn Cheng
>Priority: Major
> Attachments: image-2019-03-20-14-04-17-933.png
>
>
> When I ran the end-to-end tests on macOS, I found the following two
> problems:
> 1. The check of the `minikube status` output is not robust enough: different
> minikube versions on different platforms print different strings, which
> leads to the following misjudgment:
> when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,
> `minikube` has actually started successfully. The root cause is a bug in
> the `test_kubernetes_embedded_job.sh` script. The error message is as
> follows:
> !image-2019-03-20-14-02-29-636.png!
> !image-2019-03-20-14-04-17-933.png!
> So, I think we should improve the check logic for `minikube status`. What
> do you think?

[jira] [Updated] (FLINK-11972) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[ https://issues.apache.org/jira/browse/FLINK-11972?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

sunjincheng updated FLINK-11972:

Attachment: image-2019-03-20-14-04-17-933.png

> Fix `Command: start_kubernetes_if_not_ruunning failed` error
>
>
> Key: FLINK-11972
> URL: https://issues.apache.org/jira/browse/FLINK-11972
> Project: Flink
> Issue Type: Bug
> Components: Tests
>Affects Versions: 1.8.0, 1.9.0
>Reporter: sunjincheng
>Assignee: Hequn Cheng
>Priority: Major
> Attachments: image-2019-03-20-14-04-17-933.png
>
>
> When I ran the end-to-end tests on macOS, I found the following two
> problems:
> 1. The check of the `minikube status` output is not robust enough: different
> minikube versions on different platforms print different strings, which
> leads to the following misjudgment:
> when the `Command: start_kubernetes_if_not_ruunning failed` error occurs,
> `minikube` has actually started successfully. The root cause is a bug in
> the `test_kubernetes_embedded_job.sh` script. The error message is as
> follows:
> !image-2019-03-20-14-02-29-636.png!
> !image-2019-03-20-14-04-17-933.png!
> So, I think we should improve the check logic for `minikube status`. What
> do you think?

[jira] [Updated] (FLINK-11972) Fix `Command: start_kubernetes_if_not_ruunning failed` error

[ https://issues.apache.org/jira/browse/FLINK-11972?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

sunjincheng updated FLINK-11972:
Attachment: (was: image-2019-03-20-14-02-29-636.png)

> Fix `Command: start_kubernetes_if_not_ruunning failed` error
>
> Key: FLINK-11972
> URL: https://issues.apache.org/jira/browse/FLINK-11972
> Project: Flink
> Issue Type: Bug
> Components: Tests
> Affects Versions: 1.8.0, 1.9.0
> Reporter: sunjincheng
> Assignee: Hequn Cheng
> Priority: Major
>
> When I ran the end-to-end tests on Mac OS, I found the following two problems:
> 1. The check of the strings returned by `minikube status` is not robust enough: the strings differ across versions and platforms, which causes the following misjudgment:
> When the `Command: start_kubernetes_if_not_ruunning failed` error occurs, `minikube` has actually started successfully. The core reason is that there is a bug in the `test_kubernetes_embedded_job.sh` script. The error message is as follows:
> !image-2019-03-20-14-02-29-636.png!
>
> So, I think we should improve the check logic of `minikube status`. What do you think?

[jira] [Created] (FLINK-11971) The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR during the end-to-end test.

sunjincheng created FLINK-11971:

---

Summary: The classpath is missing the `flink-shaded-hadoop2-uber-2.8.3-1.8.0.jar` JAR during the end-to-end test.

Key: FLINK-11971

URL: https://issues.apache.org/jira/browse/FLINK-11971

Project: Flink

Issue Type: Bug

Components: Tests

Affects Versions: 1.8.0, 1.9.0

Reporter: sunjincheng

The difference between 1.8.0 and 1.7.x is that 1.8.x no longer bundles the `hadoop-shaded` JAR into the dist. As a result, the end-to-end tests fail when `Hadoop`-related classes cannot be found, e.g. `java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem`. So we need to either improve the end-to-end test script or state explicitly in the README that the end-to-end tests need `flink-shaded-hadoop2-uber-.jar` on the classpath. Without it, we get an exception like the following:

{code:java}
[INFO] 3 instance(s) of taskexecutor are already running on jinchengsunjcs-iMac.local.
Starting taskexecutor daemon on host jinchengsunjcs-iMac.local.
java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem;
	at java.lang.Class.getDeclaredFields0(Native Method)
	at java.lang.Class.privateGetDeclaredFields(Class.java:2583)
	at java.lang.Class.getDeclaredFields(Class.java:1916)
	at org.apache.flink.api.java.ClosureCleaner.clean(ClosureCleaner.java:72)
	at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.clean(StreamExecutionEnvironment.java:1558)
	at org.apache.flink.streaming.api.datastream.DataStream.clean(DataStream.java:185)
	at org.apache.flink.streaming.api.datastream.DataStream.addSink(DataStream.java:1227)
	at org.apache.flink.streaming.tests.BucketingSinkTestProgram.main(BucketingSinkTestProgram.java:80)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:529)
	at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:421)
	at org.apache.flink.client.program.ClusterClient.run(ClusterClient.java:423)
	at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:813)
	at org.apache.flink.client.cli.CliFrontend.runProgram(CliFrontend.java:287)
	at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:213)
	at org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:1050)
	at org.apache.flink.client.cli.CliFrontend.lambda$main$11(CliFrontend.java:1126)
	at org.apache.flink.runtime.security.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:30)
	at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1126)
Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FileSystem
	at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
	... 22 more

Job () is running.{code}

So, I think we can improve either the test script or the README.

What do you think?
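As one possible improvement on the test side, a test program could probe for the Hadoop class up front. It would then fail with an actionable message instead of a `NoClassDefFoundError` raised deep inside `ClosureCleaner`. The following is a minimal Java sketch only. The class `HadoopClasspathCheck`, its methods, and the error text are hypothetical and are not part of Flink.

```java
/** Hypothetical helper: fails fast when Hadoop classes are missing from the classpath. */
final class HadoopClasspathCheck {

    /** Returns true if the class can be located, without initializing it. */
    static boolean isClassAvailable(String className) {
        try {
            Class.forName(className, false, HadoopClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            // ClassNotFoundException: class absent; LinkageError: present but not loadable.
            return false;
        }
    }

    /** Throws an IllegalStateException naming the missing JAR if Hadoop's FileSystem is absent. */
    static void requireHadoopOnClasspath() {
        String hadoopFileSystem = "org.apache.hadoop.fs.FileSystem";
        if (!isClassAvailable(hadoopFileSystem)) {
            throw new IllegalStateException(
                    hadoopFileSystem + " not found. The 1.8 dist no longer bundles Hadoop; "
                            + "add the flink-shaded-hadoop2-uber JAR to the classpath "
                            + "before running the end-to-end tests.");
        }
    }

    public static void main(String[] args) {
        requireHadoopOnClasspath();
        System.out.println("Hadoop classes found.");
    }
}
```

Passing `false` as the `initialize` argument keeps the probe free of side effects: it only checks that the class can be resolved, which is what the failing job needs.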


[jira] [Created] (FLINK-11972) Fix `Command: start_kubernetes_if_not_ruunning failed` error

sunjincheng created FLINK-11972:
---
Summary: Fix `Command: start_kubernetes_if_not_ruunning failed` error
Key: FLINK-11972
URL: https://issues.apache.org/jira/browse/FLINK-11972
Project: Flink
Issue Type: Bug
Components: Tests
Affects Versions: 1.8.0, 1.9.0
Reporter: sunjincheng
Attachments: image-2019-03-20-14-01-05-647.png, image-2019-03-20-14-02-29-636.png

When I ran the end-to-end tests on Mac OS, I found the following two problems:
1. The check of the strings returned by `minikube status` is not robust enough: the strings differ across versions and platforms, which causes the following misjudgment: when the `Command: start_kubernetes_if_not_ruunning failed` error occurs, `minikube` has actually started successfully. The core reason is that there is a bug in the `test_kubernetes_embedded_job.sh` script. The error message is as follows:
!image-2019-03-20-14-02-29-636.png!
So, I think we should improve the check logic of `minikube status`. What do you think?
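A more tolerant check could parse the `key: value` lines printed by `minikube status` instead of matching one exact string. Below is a minimal Java sketch of that idea; the end-to-end scripts themselves are Bash. `MinikubeStatusCheck` is hypothetical. The accepted key names (`host`/`minikube` and `apiserver`/`cluster`) are assumptions about how the output differs between minikube versions and should be verified against the versions actually in use.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

/** Hypothetical, version-tolerant interpretation of `minikube status` output. */
final class MinikubeStatusCheck {

    /** Parses "key: value" lines into a map with trimmed, lower-cased keys and values. */
    static Map<String, String> parse(String statusOutput) {
        Map<String, String> fields = new HashMap<>();
        for (String line : statusOutput.split("\\R")) {
            int colon = line.indexOf(':');
            if (colon < 0) {
                continue; // ignore lines that are not key/value pairs
            }
            // Split at the first colon only, so values like
            // "Correctly Configured: pointing to ..." stay intact.
            String key = line.substring(0, colon).trim().toLowerCase(Locale.ROOT);
            String value = line.substring(colon + 1).trim().toLowerCase(Locale.ROOT);
            fields.put(key, value);
        }
        return fields;
    }

    /** True if any of the alternative keys is present with the value "running". */
    static boolean anyRunning(Map<String, String> fields, List<String> alternativeKeys) {
        for (String key : alternativeKeys) {
            if ("running".equals(fields.get(key))) {
                return true;
            }
        }
        return false;
    }

    /** Assumed key names: "host"/"apiserver" in some versions, "minikube"/"cluster" in others. */
    static boolean isRunning(String statusOutput) {
        Map<String, String> fields = parse(statusOutput);
        return anyRunning(fields, Arrays.asList("host", "minikube"))
                && anyRunning(fields, Arrays.asList("apiserver", "cluster"));
    }

    public static void main(String[] args) {
        System.out.println(isRunning("host: Running\nkubelet: Running\napiserver: Running")); // true
        System.out.println(isRunning("minikube: Running\ncluster: Running"));                  // true
        System.out.println(isRunning("host: Stopped\napiserver: Stopped"));                   // false
    }
}
```

Normalizing case and whitespace, and accepting alternative key names, means that small formatting differences between versions no longer turn a running cluster into a reported failure.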

[jira] [Created] (FLINK-11970) TableEnvironment#registerFunction should overwrite if the same name function existed.

Jeff Zhang created FLINK-11970:
--
Summary: TableEnvironment#registerFunction should overwrite if the same name function existed.
Key: FLINK-11970
URL: https://issues.apache.org/jira/browse/FLINK-11970
Project: Flink
Issue Type: Improvement
Reporter: Jeff Zhang

Currently, an exception is thrown if I try to register a user-defined function multiple times. Registering a UDF multiple times is very common in notebook scenarios, and I don't think overwriting would cause issues for users. For example, if a user happens to register the same UDF name multiple times (while actually intending to register two different UDFs), he would then get an exception at runtime when using the UDF whose registration is missing.
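The requested behavior amounts to "last registration wins". Below is a minimal Java sketch of a registry that either rejects or overwrites duplicates. `FunctionRegistry` and its `allowOverwrite` flag are hypothetical and do not reflect how `TableEnvironment` stores functions internally.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.function.Function;

/** Hypothetical name-to-function registry illustrating overwrite on re-registration. */
final class FunctionRegistry {

    private final Map<String, Function<String, String>> functions = new HashMap<>();
    private final boolean allowOverwrite;

    FunctionRegistry(boolean allowOverwrite) {
        this.allowOverwrite = allowOverwrite;
    }

    /** Registers fn under name; a later registration replaces an earlier one if allowed. */
    void register(String name, Function<String, String> fn) {
        if (!allowOverwrite && functions.containsKey(name)) {
            // Mirrors the current behavior described in the issue: duplicates are rejected.
            throw new IllegalArgumentException("Function '" + name + "' is already registered");
        }
        functions.put(name, fn); // HashMap.put replaces any existing mapping
    }

    Optional<Function<String, String>> lookup(String name) {
        return Optional.ofNullable(functions.get(name));
    }

    public static void main(String[] args) {
        FunctionRegistry notebook = new FunctionRegistry(true);
        notebook.register("fmt", s -> s.toUpperCase());
        // Re-running the same notebook cell with an edited UDF body:
        notebook.register("fmt", s -> "[" + s + "]");
        System.out.println(notebook.lookup("fmt").get().apply("x")); // [x]
    }
}
```

The sketch reflects the trade-off the issue describes. With overwriting, accidentally reusing one name for two different UDFs is no longer rejected at registration time. It surfaces later, when a query refers to the function that was never registered.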

[jira] [Commented] (FLINK-11912) Expose per partition Kafka lag metric in Flink Kafka connector

[ https://issues.apache.org/jira/browse/FLINK-11912?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16796788#comment-16796788 ]

Jiangjie Qin commented on FLINK-11912:

--

[~suez1224] Thanks for bringing this up. The current proposal (and the WIP patch) still seems to have a metric leak. Even if the metric objects are removed from the {{manualRegisteredMetricSet}}, the metric stays registered until {{MetricRegistry.unregister()}} is invoked. Unfortunately, the {{MetricGroup}} interface does not expose a method to explicitly remove a single metric. At this point, the only way to unregister the metrics is to close the metric group.

So it seems we need to add a {{remove(String MetricName)}} method to the {{MetricGroup}} interface to ensure the metrics are properly unregistered.
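Below is a minimal Java sketch of the proposed per-metric removal: a group unregisters a single metric from the shared registry without being closed. `RemovableMetricGroup` is hypothetical and is not Flink's `MetricGroup`, which, as noted above, has no such method. A plain map stands in for the `MetricRegistry` that reporters read from.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical metric group that supports unregistering a single metric. */
final class RemovableMetricGroup {

    /** Stand-in for the shared registry that reporters read from. */
    private final Map<String, Supplier<?>> registry;
    private final String scope;

    RemovableMetricGroup(Map<String, Supplier<?>> registry, String scope) {
        this.registry = registry;
        this.scope = scope;
    }

    private String qualify(String metricName) {
        return scope + "." + metricName;
    }

    /** Registers a gauge under this group's scope. */
    void gauge(String metricName, Supplier<?> gauge) {
        registry.put(qualify(metricName), gauge);
    }

    /** The proposed remove(String): unregisters one metric without closing the group. */
    boolean remove(String metricName) {
        return registry.remove(qualify(metricName)) != null;
    }

    public static void main(String[] args) {
        Map<String, Supplier<?>> registry = new HashMap<>();
        RemovableMetricGroup kafka = new RemovableMetricGroup(registry, "kafka");
        kafka.gauge("topic-0.lag", () -> 42L);
        kafka.gauge("topic-1.lag", () -> 7L);
        kafka.remove("topic-0.lag"); // e.g. the partition is no longer assigned
        System.out.println(registry.keySet()); // [kafka.topic-1.lag]
    }
}
```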

> Expose per partition Kafka lag metric in Flink Kafka connector

> --

>

> Key: FLINK-11912

> URL: https://issues.apache.org/jira/browse/FLINK-11912

> Project: Flink

> Issue Type: New Feature

> Components: Connectors / Kafka

>Affects Versions: 1.6.4, 1.7.2

>Reporter: Shuyi Chen

>Assignee: Shuyi Chen

>Priority: Major

>

> In production, it's important that we expose the Kafka lag per partition as a metric, so that users can diagnose which Kafka partition is lagging.

> Although the per-partition lag metrics are available in KafkaConsumer since 0.10.2, Flink was not able to register them properly, because the metrics only become available after the consumer starts polling data from the partitions. I would suggest the following fix:

> 1) In KafkaConsumerThread.run(), allocate a manualRegisteredMetricSet.

> 2) In the fetch loop, as KafkaConsumer discovers new partitions, manually add the MetricName of each partition that we want to register to manualRegisteredMetricSet.

> 3) In the fetch loop, check whether manualRegisteredMetricSet is empty. If not, search for the metrics available in KafkaConsumer, and for each one found, register it and remove its entry from manualRegisteredMetricSet.

> The overhead of this approach is bounded and is only incurred when discovering new partitions, and registration is done once the KafkaConsumer has exposed the metrics.
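Steps 1) to 3) can be sketched in Java as follows. This is a minimal illustration under stated assumptions, not the WIP patch. A `Map` stands in for the metrics exposed by `KafkaConsumer`, and another `Map` stands in for the Flink metric group. The per-partition metric name format (`<partition>.records-lag`) is hypothetical, and `PendingLagMetrics` and its methods are illustrative names only.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Iterator;
import java.util.Map;
import java.util.Set;
import java.util.function.Supplier;

/** Hypothetical sketch of lazily registering per-partition lag metrics. */
final class PendingLagMetrics {

    /** Stand-in for the consumer's metrics; entries appear only after polling starts. */
    private final Map<String, Supplier<Double>> consumerMetrics;
    /** Stand-in for the Flink metric group the gauges are registered in. */
    final Map<String, Supplier<Double>> registered = new HashMap<>();
    /** Step 1: metric names that are wanted but not yet available from the consumer. */
    final Set<String> manualRegisteredMetricSet = new HashSet<>();

    PendingLagMetrics(Map<String, Supplier<Double>> consumerMetrics) {
        this.consumerMetrics = consumerMetrics;
    }

    /** Step 2: when new partitions are discovered, remember their metric names. */
    void onNewPartitions(Iterable<String> partitions) {
        for (String partition : partitions) {
            manualRegisteredMetricSet.add(partition + ".records-lag"); // hypothetical name format
        }
    }

    /** Step 3: once per fetch-loop iteration, register whichever pending metrics now exist. */
    void registerAvailable() {
        if (manualRegisteredMetricSet.isEmpty()) {
            return; // common case: nothing pending, so the check is cheap
        }
        Iterator<String> it = manualRegisteredMetricSet.iterator();
        while (it.hasNext()) {
            String name = it.next();
            Supplier<Double> metric = consumerMetrics.get(name);
            if (metric != null) {
                registered.put(name, metric);
                it.remove(); // registered once, never looked up again
            }
        }
    }
}
```

The empty-set check is what keeps the overhead bounded. Once the metric for every discovered partition has been found, each fetch-loop iteration costs only one `isEmpty()` call.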
