[GitHub] [flink-statefun] tzulitai commented on pull request #162: [FLINK-19399][doc][py] Document AsyncRequestHandler

tzulitai commented on pull request #162: URL: https://github.com/apache/flink-statefun/pull/162#issuecomment-698736864 Merging ... This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] dianfu commented on a change in pull request #13475: [FLINK-19372][python] Support Pandas Batch Over Window Aggregation

dianfu commented on a change in pull request #13475: URL: https://github.com/apache/flink/pull/13475#discussion_r494761634 ## File path: flink-python/pyflink/fn_execution/beam/beam_operations_fast.pyx ## @@ -192,6 +194,108 @@ cdef class PandasAggregateFunctionOperation(BeamStatelessFunctionOperation): return generate_func, user_defined_funcs +cdef class PandasBatchOverWindowAggregateFunctionOperation(BeamStatelessFunctionOperation): Review comment: It seems to me that most code of beam_operations_fast and beam_operation_slow are the same. Is it possible to avoid the duplication? PS: This is not an issue introduced in this PR. We could also address it in a separate if it's possible. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13419: [FLINK-18842][e2e] Added 10min timeout to building the container.

flinkbot edited a comment on pull request #13419: URL: https://github.com/apache/flink/pull/13419#issuecomment-694745717 ## CI report: * bf0418c1332c5371f14820d80af9ceea7507bedc Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6913) * e955f78bf582ca3b1c50e357d6ed5d7bfe159268 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6939) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13475: [FLINK-19372][python] Support Pandas Batch Over Window Aggregation

flinkbot edited a comment on pull request #13475: URL: https://github.com/apache/flink/pull/13475#issuecomment-698240452 ## CI report: * 76736cc092aa256ff4680e54613e8c17cf45b596 Azure: [CANCELED](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6931) * 469104d651f8f47e1dc76f0d317ea0477c1eef87 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6932) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] dianfu commented on a change in pull request #13475: [FLINK-19372][python] Support Pandas Batch Over Window Aggregation

dianfu commented on a change in pull request #13475:

URL: https://github.com/apache/flink/pull/13475#discussion_r494733674

##

File path: flink-python/pyflink/fn_execution/beam/beam_coder_impl_slow.py

##

@@ -560,23 +560,52 @@ def encode_to_stream(self, iter_cols, out_stream, nested):

def decode_from_stream(self, in_stream, nested):

while in_stream.size() > 0:

-yield self._decode_one_batch_from_stream(in_stream)

+yield self._decode_one_batch_from_stream(in_stream,

in_stream.read_var_int64())

@staticmethod

def _load_from_stream(stream):

reader = pa.ipc.open_stream(stream)

for batch in reader:

yield batch

-def _decode_one_batch_from_stream(self, in_stream: create_InputStream) ->

List:

-self._resettable_io.set_input_bytes(in_stream.read_all(True))

+def _decode_one_batch_from_stream(self, in_stream: create_InputStream,

size: int) -> List:

+self._resettable_io.set_input_bytes(in_stream.read(size))

# there is only one arrow batch in the underlying input stream

return arrow_to_pandas(self._timezone, self._field_types,

[next(self._batch_reader)])

def __repr__(self):

return 'ArrowCoderImpl[%s]' % self._schema

+class OverWindowArrowCoderImpl(StreamCoderImpl):

+def __init__(self, arrow_coder):

+self._arrow_coder = arrow_coder

+self._int_coder = IntCoderImpl()

+

+def encode_to_stream(self, value, stream, nested):

+self._arrow_coder.encode_to_stream(value, stream, nested)

+

+def decode_from_stream(self, in_stream, nested):

+while in_stream.size():

+all_size = in_stream.read_var_int64()

Review comment:

```suggestion

remaining_size = in_stream.read_var_int64()

```

##

File path: flink-python/pyflink/fn_execution/beam/beam_operations_slow.py

##

@@ -172,6 +172,113 @@ def generate_func(self, udfs):

return lambda it: map(mapper, it), user_defined_funcs

+class

PandasBatchOverWindowAggregateFunctionOperation(StatelessFunctionOperation):

+def __init__(self, name, spec, counter_factory, sampler, consumers):

+super(PandasBatchOverWindowAggregateFunctionOperation, self).__init__(

+name, spec, counter_factory, sampler, consumers)

+self.windows = [window for window in self.spec.serialized_fn.windows]

+# Set a serial number for each over window to indicate which bounded

range over window

+# it is.

+self.window_to_bounded_range_window_index = [-1 for _ in

range(len(self.windows))]

+# Whether the specified position window is a bounded range window.

+self.window_is_bounded_range_type = []

Review comment:

```suggestion

self.is_bounded_range_window = []

```

##

File path: flink-python/pyflink/fn_execution/beam/beam_operations_slow.py

##

@@ -172,6 +172,113 @@ def generate_func(self, udfs):

return lambda it: map(mapper, it), user_defined_funcs

+class

PandasBatchOverWindowAggregateFunctionOperation(StatelessFunctionOperation):

+def __init__(self, name, spec, counter_factory, sampler, consumers):

+super(PandasBatchOverWindowAggregateFunctionOperation, self).__init__(

+name, spec, counter_factory, sampler, consumers)

+self.windows = [window for window in self.spec.serialized_fn.windows]

+# Set a serial number for each over window to indicate which bounded

range over window

+# it is.

+self.window_to_bounded_range_window_index = [-1 for _ in

range(len(self.windows))]

+# Whether the specified position window is a bounded range window.

+self.window_is_bounded_range_type = []

+window_types = flink_fn_execution_pb2.OverWindow

+

+bounded_range_window_nums = 0

+for i, window in enumerate(self.windows):

+window_type = window.window_type

+if (window_type is window_types.RANGE_UNBOUNDED_PRECEDING) or (

+window_type is window_types.RANGE_UNBOUNDED_FOLLOWING) or (

+window_type is window_types.RANGE_SLIDING):

+self.window_to_bounded_range_window_index[i] =

bounded_range_window_nums

+self.window_is_bounded_range_type.append(True)

+bounded_range_window_nums += 1

+else:

+self.window_is_bounded_range_type.append(False)

+

+def generate_func(self, udfs):

+user_defined_funcs = []

+self.window_indexes = []

+self.mapper = []

+for udf in udfs:

+pandas_agg_function, variable_dict, user_defined_func,

window_index = \

+operation_utils.extract_over_window_user_defined_function(udf)

+user_defined_funcs.extend(user_defined_func)

+self.window_indexes.append(window_index)

+self.mapper.append(eval('lambda value: %s' % pandas_agg_function,

[GitHub] [flink] flinkbot edited a comment on pull request #13419: [FLINK-18842][e2e] Added 10min timeout to building the container.

flinkbot edited a comment on pull request #13419: URL: https://github.com/apache/flink/pull/13419#issuecomment-694745717 ## CI report: * bf0418c1332c5371f14820d80af9ceea7507bedc Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6913) * e955f78bf582ca3b1c50e357d6ed5d7bfe159268 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13128: [FLINK-18795][hbase] Support for HBase 2

flinkbot edited a comment on pull request #13128: URL: https://github.com/apache/flink/pull/13128#issuecomment-672766836 ## CI report: * 313b80f4474455d7013b0852929b5a8458f391a1 UNKNOWN * ef7c9791b678f3cc6b524a3d5d0b7b9f0f0402f8 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6930) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] PoojaChandak removed a comment on pull request #13391: Update README.md

PoojaChandak removed a comment on pull request #13391: URL: https://github.com/apache/flink/pull/13391#issuecomment-693400261 Let me know if any other changes required or it is good to merge. Thanks This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] PoojaChandak commented on pull request #13391: Update README.md

PoojaChandak commented on pull request #13391: URL: https://github.com/apache/flink/pull/13391#issuecomment-698724331 Hi, Let me know if any changes are required or the pull request is good to be merged. Thanks. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] WeiZhong94 commented on pull request #13462: [FLINK-19229][python] Introduces rules and python side implementation to ensure the basic functionality of the general Python UDAF.

WeiZhong94 commented on pull request #13462: URL: https://github.com/apache/flink/pull/13462#issuecomment-698721421 @dianfu @HuangXingBo Thanks for your comments! I have updated this PR. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (FLINK-17613) Run K8s related e2e tests with multiple K8s versions

[ https://issues.apache.org/jira/browse/FLINK-17613?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201894#comment-17201894 ] Di Xu edited comment on FLINK-17613 at 9/25/20, 4:59 AM: - [~rmetzger] and [~fly_in_gis] I would like to take this ticket. Here is the proposed solution: 1. Determine kubernetes version range to test against minikube follows[1] the Kubernetes Version and Version Skew Support Policy[2] which indicates that the most recent three minor releases are maintained. At the time of writing, v1.19.2, v1.18.9 and v1.17.12 are versions we should test against according to the release page[3]. I am not aware any API to obtain the versions so my plan is to put them into common_kubernetes.sh. If you know better ways please suggest here. 2. Modify test_kubernetes_*.sh to loop over the versions and run test. Moreover, * in case of Linux, add --kubernetes-version=<> to minikube command to start specific kubernetes version * in case of Non-Linux, check the pre-started kubernetes version by 'kubectl version --short' (follows the minikube status check) and ensure it matches the version we want to test against, otherwise fails the test and give hint to user. [1] [https://minikube.sigs.k8s.io/docs/handbook/config/#selecting-a-kubernetes-version] [2] [https://kubernetes.io/docs/setup/release/version-skew-policy/] [3] [https://github.com/kubernetes/kubernetes/releases] was (Author: xudi): [~rmetzger] and [~fly_in_gis] I would like to take this ticket. Here is the proposed solution: 1. Determine kubernetes version range to test against minikube follows[1] the Kubernetes Version and Version Skew Support Policy[2] which indicates that the most recent three minor releases are maintained. At the time of writing, v1.19.2, v1.18.9 and v1.17.12 are versions we should test against according to the release page[3]. I am not aware any API to obtain the versions so my plan is to put them into common_kubernetes.sh. If you know better ways please suggest here. 2. Modify test_kubernetes_*.sh to loop over the versions and run test like before. Moreover, * in case of Linux, add --kubernetes-version=<> to minikube command to start specific kubernetes version * in case of Non-Linux, check the pre-started kubernetes version by 'kubectl version --short' (follows the minikube status check) and ensure it matches the version we want to test against, otherwise fails the test and give hint to user. [1] [https://minikube.sigs.k8s.io/docs/handbook/config/#selecting-a-kubernetes-version] [2] [https://kubernetes.io/docs/setup/release/version-skew-policy/] [3] [https://github.com/kubernetes/kubernetes/releases] > Run K8s related e2e tests with multiple K8s versions > > > Key: FLINK-17613 > URL: https://issues.apache.org/jira/browse/FLINK-17613 > Project: Flink > Issue Type: Improvement > Components: Deployment / Kubernetes, Tests >Reporter: Yang Wang >Priority: Major > Labels: starter > > Follow the discussion in this PR [1]. > If we could run the K8s related e2e tests in multiple versions(the latest > version, oldest maintained version, etc.), it will help us to find the > usability issues earlier before users post them in the user ML[2]. > [1].https://github.com/apache/flink/pull/12071#discussion_r422838483 > [2].https://lists.apache.org/list.html?u...@flink.apache.org:lte=1M:Cannot%20start%20native%20K8s -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] flinkbot edited a comment on pull request #13471: [FLINK-19124][datastream] Some JobClient methods are not ergonomic, require ClassLoader argument

flinkbot edited a comment on pull request #13471: URL: https://github.com/apache/flink/pull/13471#issuecomment-698161948 ## CI report: * 56ba62dd0ca89a5037144d5401d9aee23a7caed2 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6929) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13449: [FLINK-19282][table sql/planner]Supports watermark push down with Wat…

flinkbot edited a comment on pull request #13449: URL: https://github.com/apache/flink/pull/13449#issuecomment-696509354 ## CI report: * 28d25983818d7805f6708b36125a65a493d03aa9 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6934) Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6938) * d2504d1408f62a1ba5818d4f906b70866f4ef49f Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6937) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13462: [FLINK-19229][python] Introduces rules and python side implementation to ensure the basic functionality of the general Python UDAF.

flinkbot edited a comment on pull request #13462: URL: https://github.com/apache/flink/pull/13462#issuecomment-697272556 ## CI report: * d7401ae9d8da75274c3ba6a2fb04f4639409b59d Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6936) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] rkhachatryan commented on a change in pull request #13040: [FLINK-17073] [checkpointing] checkpointing backpressure if there are too many checkpoints to clean

rkhachatryan commented on a change in pull request #13040:

URL: https://github.com/apache/flink/pull/13040#discussion_r494742471

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/checkpoint/ZooKeeperCompletedCheckpointStoreITCase.java

##

@@ -283,6 +286,54 @@ public void testConcurrentCheckpointOperations() throws

Exception {

recoveredTestCheckpoint.awaitDiscard();

}

+ /**

+* FLINK-17073 tests that there is no request triggered when there are

too many checkpoints

+* waiting to clean and that it resumes when the number of waiting

checkpoints as gone below

+* the threshold.

+*

+*/

+ @Test

+ public void testChekpointingPausesAndResumeWhenTooManyCheckpoints()

throws Exception{

+ ManualClock clock = new ManualClock();

+ clock.advanceTime(1, TimeUnit.DAYS);

+ int maxCleaningCheckpoints = 1;

+ CheckpointsCleaner checkpointsCleaner = new

CheckpointsCleaner();

+ CheckpointRequestDecider checkpointRequestDecider = new

CheckpointRequestDecider(maxCleaningCheckpoints, unused ->{}, clock, 1, new

AtomicInteger(0)::get, checkpointsCleaner::getNumberOfCheckpointsToClean);

+

+ final int maxCheckpointsToRetain = 1;

+ Executors.PausableThreadPoolExecutor executor =

Executors.pausableExecutor();

+ ZooKeeperCompletedCheckpointStore checkpointStore =

createCompletedCheckpoints(maxCheckpointsToRetain, executor);

+

+ //pause the executor to pause checkpoints cleaning, to allow

assertions

+ executor.pause();

+

+ int nbCheckpointsToInject = 3;

+ for (int i = 1; i <= nbCheckpointsToInject; i++) {

+ // add checkpoints to clean

+ TestCompletedCheckpoint completedCheckpoint = new

TestCompletedCheckpoint(new JobID(), i,

+ i, Collections.emptyMap(),

CheckpointProperties.forCheckpoint(CheckpointRetentionPolicy.RETAIN_ON_FAILURE),

+ checkpointsCleaner::cleanCheckpoint);

+ checkpointStore.addCheckpoint(completedCheckpoint);

+ }

+

+ Thread.sleep(100L); // give time to submit checkpoints for

cleaning

+

+ int nbCheckpointsSubmittedForCleaningByCheckpointStore =

nbCheckpointsToInject - maxCheckpointsToRetain;

+

assertEquals(nbCheckpointsSubmittedForCleaningByCheckpointStore,

checkpointsCleaner.getNumberOfCheckpointsToClean());

Review comment:

I think the assumption that each cleanup action is a separate runnable

(and that there are no other runnable) can break at some point and is less

straightforward than `checkpointsCleaner.getNumberOfCheckpointsToClean() <

expected`.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13462: [FLINK-19229][python] Introduces rules and python side implementation to ensure the basic functionality of the general Python UDAF.

flinkbot edited a comment on pull request #13462: URL: https://github.com/apache/flink/pull/13462#issuecomment-697272556 ## CI report: * f043157df80e534f25f07f8346c902b0032fca26 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6841) * d7401ae9d8da75274c3ba6a2fb04f4639409b59d Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6936) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13449: [FLINK-19282][table sql/planner]Supports watermark push down with Wat…

flinkbot edited a comment on pull request #13449: URL: https://github.com/apache/flink/pull/13449#issuecomment-696509354 ## CI report: * 28d25983818d7805f6708b36125a65a493d03aa9 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6934) Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6938) * d2504d1408f62a1ba5818d4f906b70866f4ef49f UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (FLINK-17613) Run K8s related e2e tests with multiple K8s versions

[ https://issues.apache.org/jira/browse/FLINK-17613?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201894#comment-17201894 ] Di Xu edited comment on FLINK-17613 at 9/25/20, 4:22 AM: - [~rmetzger] and [~fly_in_gis] I would like to take this ticket. Here is the proposed solution: 1. Determine kubernetes version range to test against minikube follows[1] the Kubernetes Version and Version Skew Support Policy[2] which indicates that the most recent three minor releases are maintained. At the time of writing, v1.19.2, v1.18.9 and v1.17.12 are versions we should test against according to the release page[3]. I am not aware any API to obtain the versions so my plan is to put them into common_kubernetes.sh. If you know better ways please suggest here. 2. Modify test_kubernetes_*.sh to loop over the versions and run test like before. Moreover, * in case of Linux, add --kubernetes-version=<> to minikube command to start specific kubernetes version * in case of Non-Linux, check the pre-started kubernetes version by 'kubectl version --short' (follows the minikube status check) and ensure it matches the version we want to test against, otherwise fails the test and give hint to user. [1] [https://minikube.sigs.k8s.io/docs/handbook/config/#selecting-a-kubernetes-version] [2] [https://kubernetes.io/docs/setup/release/version-skew-policy/] [3] [https://github.com/kubernetes/kubernetes/releases] was (Author: xudi): [~rmetzger] and [~fly_in_gis] I would like to take this ticket. Here is the proposed solution: 1. Determine kubernetes version range to test against minikube follows[1] the Kubernetes Version and Version Skew Support Policy[2] which indicates that the most recent three minor releases are maintained. At the time of writing, v1.19.2, v1.18.9 and v1.17.12 are versions we should test against according to the release page[3]. I am not aware any API to obtain the versions so my plan is to put them into common_kubernetes.sh. If you know better ways please suggest here. 2. Modify test_kubernetes_*.sh to loop over the versions and tests like before. Moreover, * in case of Linux, add --kubernetes-version=<> to minikube command to start specific kubernetes version * in case of Non-Linux, check the pre-started kubernetes version by 'kubectl version --short' (follows the minikube status check) and ensure it matches the version we want to test against, otherwise fails the test and give hint to user. [1] [https://minikube.sigs.k8s.io/docs/handbook/config/#selecting-a-kubernetes-version] [2] [https://kubernetes.io/docs/setup/release/version-skew-policy/] [3] [https://github.com/kubernetes/kubernetes/releases] > Run K8s related e2e tests with multiple K8s versions > > > Key: FLINK-17613 > URL: https://issues.apache.org/jira/browse/FLINK-17613 > Project: Flink > Issue Type: Improvement > Components: Deployment / Kubernetes, Tests >Reporter: Yang Wang >Priority: Major > Labels: starter > > Follow the discussion in this PR [1]. > If we could run the K8s related e2e tests in multiple versions(the latest > version, oldest maintained version, etc.), it will help us to find the > usability issues earlier before users post them in the user ML[2]. > [1].https://github.com/apache/flink/pull/12071#discussion_r422838483 > [2].https://lists.apache.org/list.html?u...@flink.apache.org:lte=1M:Cannot%20start%20native%20K8s -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (FLINK-17613) Run K8s related e2e tests with multiple K8s versions

[ https://issues.apache.org/jira/browse/FLINK-17613?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201894#comment-17201894 ] Di Xu commented on FLINK-17613: --- [~rmetzger] and [~fly_in_gis] I would like to take this ticket. Here is the proposed solution: 1. Determine kubernetes version range to test against minikube follows[1] the Kubernetes Version and Version Skew Support Policy[2] which indicates that the most recent three minor releases are maintained. At the time of writing, v1.19.2, v1.18.9 and v1.17.12 are versions we should test against according to the release page[3]. I am not aware any API to obtain the versions so my plan is to put them into common_kubernetes.sh. If you know better ways please suggest here. 2. Modify test_kubernetes_*.sh to loop over the versions and tests like before. Moreover, * in case of Linux, add --kubernetes-version=<> to minikube command to start specific kubernetes version * in case of Non-Linux, check the pre-started kubernetes version by 'kubectl version --short' (follows the minikube status check) and ensure it matches the version we want to test against, otherwise fails the test and give hint to user. [1] [https://minikube.sigs.k8s.io/docs/handbook/config/#selecting-a-kubernetes-version] [2] [https://kubernetes.io/docs/setup/release/version-skew-policy/] [3] [https://github.com/kubernetes/kubernetes/releases] > Run K8s related e2e tests with multiple K8s versions > > > Key: FLINK-17613 > URL: https://issues.apache.org/jira/browse/FLINK-17613 > Project: Flink > Issue Type: Improvement > Components: Deployment / Kubernetes, Tests >Reporter: Yang Wang >Priority: Major > Labels: starter > > Follow the discussion in this PR [1]. > If we could run the K8s related e2e tests in multiple versions(the latest > version, oldest maintained version, etc.), it will help us to find the > usability issues earlier before users post them in the user ML[2]. > [1].https://github.com/apache/flink/pull/12071#discussion_r422838483 > [2].https://lists.apache.org/list.html?u...@flink.apache.org:lte=1M:Cannot%20start%20native%20K8s -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] fsk119 commented on pull request #13449: [FLINK-19282][table sql/planner]Supports watermark push down with Wat…

fsk119 commented on pull request #13449: URL: https://github.com/apache/flink/pull/13449#issuecomment-698710664 @flinkbot run azure This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13462: [FLINK-19229][python] Introduces rules and python side implementation to ensure the basic functionality of the general Python UDAF.

flinkbot edited a comment on pull request #13462: URL: https://github.com/apache/flink/pull/13462#issuecomment-697272556 ## CI report: * f043157df80e534f25f07f8346c902b0032fca26 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6841) * d7401ae9d8da75274c3ba6a2fb04f4639409b59d UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-19407) Translate "Elasticsearch Connector" page of "Connectors" into Chinese

[ https://issues.apache.org/jira/browse/FLINK-19407?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201890#comment-17201890 ] 魏旭斌 commented on FLINK-19407: - Hi [~jark] ,I would like to translate this page . Could you please assign it to me? > Translate "Elasticsearch Connector" page of "Connectors" into Chinese > - > > Key: FLINK-19407 > URL: https://issues.apache.org/jira/browse/FLINK-19407 > Project: Flink > Issue Type: Improvement > Components: chinese-translation, Documentation >Reporter: 魏旭斌 >Priority: Major > > The page url is > [https://ci.apache.org/projects/flink/flink-docs-master/dev/connectors/elasticsearch.html] > The markdown file is located in flink/docs/dev/connectors/elasticsearch.zh.md -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Created] (FLINK-19407) Translate "Elasticsearch Connector" page of "Connectors" into Chinese

魏旭斌 created FLINK-19407: --- Summary: Translate "Elasticsearch Connector" page of "Connectors" into Chinese Key: FLINK-19407 URL: https://issues.apache.org/jira/browse/FLINK-19407 Project: Flink Issue Type: Improvement Components: chinese-translation, Documentation Reporter: 魏旭斌 The page url is [https://ci.apache.org/projects/flink/flink-docs-master/dev/connectors/elasticsearch.html] The markdown file is located in flink/docs/dev/connectors/elasticsearch.zh.md -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] flinkbot edited a comment on pull request #13477: [FLINK-19406][table-planner-blink] Casting row time to timestamp lose…

flinkbot edited a comment on pull request #13477: URL: https://github.com/apache/flink/pull/13477#issuecomment-698702956 ## CI report: * 3c9bb3850b73a0499bd2eb99ee0a738cbee63789 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6935) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] V1ncentzzZ commented on pull request #13472: [FLINK-19291][Formats] Fix `SchemaParseException` when use `AvroSchemaConverter` converts flink logical type

V1ncentzzZ commented on pull request #13472: URL: https://github.com/apache/flink/pull/13472#issuecomment-698705423 Hi @danny0405 @wuchong , would you help review this PR? Thank you very much. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Assigned] (FLINK-19404) Support Pandas Stream Over Window Aggregation

[ https://issues.apache.org/jira/browse/FLINK-19404?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Dian Fu reassigned FLINK-19404: --- Assignee: Huang Xingbo > Support Pandas Stream Over Window Aggregation > - > > Key: FLINK-19404 > URL: https://issues.apache.org/jira/browse/FLINK-19404 > Project: Flink > Issue Type: Sub-task > Components: API / Python >Reporter: Huang Xingbo >Assignee: Huang Xingbo >Priority: Major > Fix For: 1.12.0 > > > We will add Stream Physical Pandas Over Window RelNode and > StreamArrowPythonOverWindowAggregateFunctionOperator to support Pandas Stream > Over Window Aggregation -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Assigned] (FLINK-19403) Support Pandas Stream Group Window Aggregation

[ https://issues.apache.org/jira/browse/FLINK-19403?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Dian Fu reassigned FLINK-19403: --- Assignee: Huang Xingbo > Support Pandas Stream Group Window Aggregation > -- > > Key: FLINK-19403 > URL: https://issues.apache.org/jira/browse/FLINK-19403 > Project: Flink > Issue Type: Sub-task > Components: API / Python >Reporter: Huang Xingbo >Assignee: Huang Xingbo >Priority: Major > Fix For: 1.12.0 > > > We will add Stream Physical Pandas Group Window RelNode and > StreamArrowPythonGroupWindowAggregateFunctionOperator to support Pandas > Stream Group Window Aggregation -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] flinkbot commented on pull request #13477: [FLINK-19406][table-planner-blink] Casting row time to timestamp lose…

flinkbot commented on pull request #13477: URL: https://github.com/apache/flink/pull/13477#issuecomment-698702956 ## CI report: * 3c9bb3850b73a0499bd2eb99ee0a738cbee63789 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13449: [FLINK-19282][table sql/planner]Supports watermark push down with Wat…

flinkbot edited a comment on pull request #13449: URL: https://github.com/apache/flink/pull/13449#issuecomment-696509354 ## CI report: * 28d25983818d7805f6708b36125a65a493d03aa9 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6934) * d2504d1408f62a1ba5818d4f906b70866f4ef49f UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13303: [FLINK-19098][format] Make row data converters public

flinkbot edited a comment on pull request #13303: URL: https://github.com/apache/flink/pull/13303#issuecomment-685551684 ## CI report: * d1b9c21ec9330791b02f6836fed0b36c4d62a253 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6901) * e65696be08fc766fb96afc45f5c6ea9cbd91e99b Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6933) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] dianfu commented on a change in pull request #13462: [FLINK-19229][python] Introduces rules and python side implementation to ensure the basic functionality of the general Python UDAF

dianfu commented on a change in pull request #13462: URL: https://github.com/apache/flink/pull/13462#discussion_r494728861 ## File path: flink-python/pyflink/fn_execution/beam/beam_coder_impl_slow.py ## @@ -46,8 +46,7 @@ def __init__(self, field_coders): self._remaining_bits_num = (self._field_count + ROW_KIND_BIT_SIZE) % 8 self.null_mask_search_table = self.generate_null_mask_search_table() self.null_byte_search_table = (0x80, 0x40, 0x20, 0x10, 0x08, 0x04, 0x02, 0x01) -self.row_kind_search_table = \ -[i << (8 - ROW_KIND_BIT_SIZE) for i in range(2 ** ROW_KIND_BIT_SIZE)] +self.row_kind_search_table = [0x00, 0x80, 0x40, 0xC0] Review comment: OK. Then could you add some description on how the search table is constructed? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] dianfu commented on a change in pull request #13462: [FLINK-19229][python] Introduces rules and python side implementation to ensure the basic functionality of the general Python UDAF

dianfu commented on a change in pull request #13462: URL: https://github.com/apache/flink/pull/13462#discussion_r494728861 ## File path: flink-python/pyflink/fn_execution/beam/beam_coder_impl_slow.py ## @@ -46,8 +46,7 @@ def __init__(self, field_coders): self._remaining_bits_num = (self._field_count + ROW_KIND_BIT_SIZE) % 8 self.null_mask_search_table = self.generate_null_mask_search_table() self.null_byte_search_table = (0x80, 0x40, 0x20, 0x10, 0x08, 0x04, 0x02, 0x01) -self.row_kind_search_table = \ -[i << (8 - ROW_KIND_BIT_SIZE) for i in range(2 ** ROW_KIND_BIT_SIZE)] +self.row_kind_search_table = [0x00, 0x80, 0x40, 0xC0] Review comment: If so, could you add some description on how the search table is constructed? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #13477: [FLINK-19406][table-planner-blink] Casting row time to timestamp lose…

flinkbot commented on pull request #13477: URL: https://github.com/apache/flink/pull/13477#issuecomment-698699571 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 3c9bb3850b73a0499bd2eb99ee0a738cbee63789 (Fri Sep 25 03:27:40 UTC 2020) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! * **This pull request references an unassigned [Jira ticket](https://issues.apache.org/jira/browse/FLINK-19406).** According to the [code contribution guide](https://flink.apache.org/contributing/contribute-code.html), tickets need to be assigned before starting with the implementation work. Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into to Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer of PMC member is required Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-19406) Casting row time to timestamp loses nullability info

[ https://issues.apache.org/jira/browse/FLINK-19406?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-19406: --- Labels: pull-request-available (was: ) > Casting row time to timestamp loses nullability info > > > Key: FLINK-19406 > URL: https://issues.apache.org/jira/browse/FLINK-19406 > Project: Flink > Issue Type: Bug > Components: Table SQL / Planner >Reporter: Rui Li >Priority: Major > Labels: pull-request-available > Fix For: 1.12.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] lirui-apache opened a new pull request #13477: [FLINK-19406][table-planner-blink] Casting row time to timestamp lose…

lirui-apache opened a new pull request #13477: URL: https://github.com/apache/flink/pull/13477 …s nullability info ## What is the purpose of the change We lose nullability info when casting row time to timestamp. If the timestamp is passed to a function that takes not null parameter, the planner complains type mismatch. ## Brief change log - Fix in `ScalarOperatorGens::generateCast` - Add test case ## Verifying this change Added test case ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): no - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no - The serializers: no - The runtime per-record code paths (performance sensitive): no - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn/Mesos, ZooKeeper: no - The S3 file system connector: no ## Documentation - Does this pull request introduce a new feature? no - If yes, how is the feature documented? NA This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13475: [FLINK-19372][python] Support Pandas Batch Over Window Aggregation

flinkbot edited a comment on pull request #13475: URL: https://github.com/apache/flink/pull/13475#issuecomment-698240452 ## CI report: * e1098322eb0d29b6bd1cf47bbafdfe179c948e7a Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6903) * 76736cc092aa256ff4680e54613e8c17cf45b596 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6931) * 469104d651f8f47e1dc76f0d317ea0477c1eef87 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6932) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13458: FLINK-18851 [runtime] Add checkpoint type to checkpoint history entries in Web UI

flinkbot edited a comment on pull request #13458: URL: https://github.com/apache/flink/pull/13458#issuecomment-697041219 ## CI report: * a0ecc93483203cb019f279955cc466d2a6944fc3 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6928) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #13449: [FLINK-19282][table sql/planner]Supports watermark push down with Wat…

flinkbot edited a comment on pull request #13449: URL: https://github.com/apache/flink/pull/13449#issuecomment-696509354 ## CI report: * 3925844a1496578ba81e55f1969729c2ddca31c8 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6911) * 28d25983818d7805f6708b36125a65a493d03aa9 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (FLINK-19406) Casting row time to timestamp loses nullability info

Rui Li created FLINK-19406: -- Summary: Casting row time to timestamp loses nullability info Key: FLINK-19406 URL: https://issues.apache.org/jira/browse/FLINK-19406 Project: Flink Issue Type: Bug Components: Table SQL / Planner Reporter: Rui Li Fix For: 1.12.0 -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] flinkbot edited a comment on pull request #13303: [FLINK-19098][format] Make row data converters public

flinkbot edited a comment on pull request #13303: URL: https://github.com/apache/flink/pull/13303#issuecomment-685551684 ## CI report: * d1b9c21ec9330791b02f6836fed0b36c4d62a253 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6901) * e65696be08fc766fb96afc45f5c6ea9cbd91e99b UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] WeiZhong94 commented on a change in pull request #13462: [FLINK-19229][python] Introduces rules and python side implementation to ensure the basic functionality of the general Python

WeiZhong94 commented on a change in pull request #13462:

URL: https://github.com/apache/flink/pull/13462#discussion_r494722379

##

File path:

flink-python/src/main/java/org/apache/flink/table/runtime/arrow/ArrowUtils.java

##

@@ -749,7 +749,7 @@ private static boolean isAppendOnlyTable(Table table)

throws Exception {

OutputConversionModifyOperation.UpdateMode.APPEND);

tableEnv.getPlanner().translate(Collections.singletonList(modifyOperation));

} catch (Throwable t) {

- if (t.getMessage().contains("doesn't support

consuming update changes") ||

Review comment:

This change is used to match the error message "xxx doesn't support

consuming update and delete changes xx"

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (FLINK-19405) Translate "DataSet Connectors" page of "Connectors" into Chinese

[ https://issues.apache.org/jira/browse/FLINK-19405?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201884#comment-17201884 ] weizheng commented on FLINK-19405: -- ok thanks > Translate "DataSet Connectors" page of "Connectors" into Chinese > > > Key: FLINK-19405 > URL: https://issues.apache.org/jira/browse/FLINK-19405 > Project: Flink > Issue Type: Improvement > Components: chinese-translation, Documentation >Reporter: weizheng >Priority: Major > > The page url is > [https://ci.apache.org/projects/flink/flink-docs-master/dev/batch/connectors.html > > |https://ci.apache.org/projects/flink/flink-docs-master/dev/batch/connectors.html] > The markdown file is located in flink/docs/dev/batch/connectors.zh.md -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (FLINK-19405) Translate "DataSet Connectors" page of "Connectors" into Chinese

[ https://issues.apache.org/jira/browse/FLINK-19405?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201879#comment-17201879 ] Jark Wu commented on FLINK-19405: - DataSet is going to be removed in the future. I would suggest to put more efforts on other untranslated pages. > Translate "DataSet Connectors" page of "Connectors" into Chinese > > > Key: FLINK-19405 > URL: https://issues.apache.org/jira/browse/FLINK-19405 > Project: Flink > Issue Type: Improvement > Components: chinese-translation, Documentation >Reporter: weizheng >Priority: Major > > The page url is > [https://ci.apache.org/projects/flink/flink-docs-master/dev/batch/connectors.html > > |https://ci.apache.org/projects/flink/flink-docs-master/dev/batch/connectors.html] > The markdown file is located in flink/docs/dev/batch/connectors.zh.md -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] flinkbot edited a comment on pull request #13475: [FLINK-19372][python] Support Pandas Batch Over Window Aggregation

flinkbot edited a comment on pull request #13475: URL: https://github.com/apache/flink/pull/13475#issuecomment-698240452 ## CI report: * e1098322eb0d29b6bd1cf47bbafdfe179c948e7a Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6903) * 76736cc092aa256ff4680e54613e8c17cf45b596 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=6931) * 469104d651f8f47e1dc76f0d317ea0477c1eef87 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

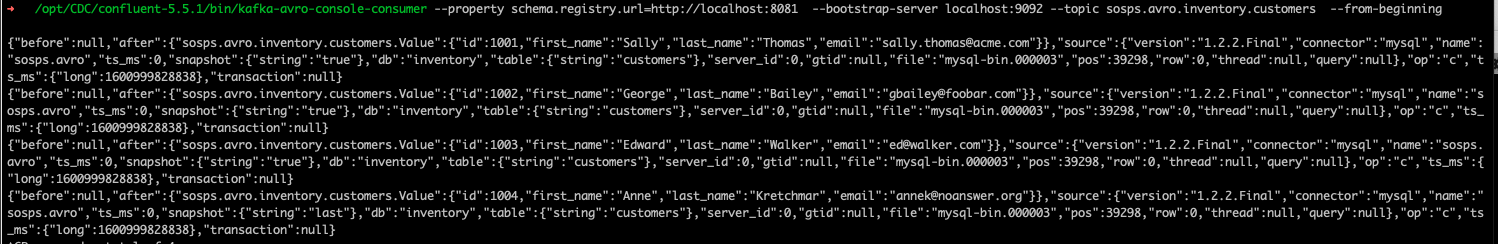

[GitHub] [flink] HsbcJone commented on pull request #13296: [FLINK-18774][format][debezium] Support debezium-avro format

HsbcJone commented on pull request #13296: URL: https://github.com/apache/flink/pull/13296#issuecomment-698688361 @caozhen1937  this is the record by confluent `kafka-avro-console-consumer ` This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] WeiZhong94 commented on a change in pull request #13462: [FLINK-19229][python] Introduces rules and python side implementation to ensure the basic functionality of the general Python

WeiZhong94 commented on a change in pull request #13462: URL: https://github.com/apache/flink/pull/13462#discussion_r494714656 ## File path: flink-python/pyflink/fn_execution/beam/beam_coder_impl_slow.py ## @@ -46,8 +46,7 @@ def __init__(self, field_coders): self._remaining_bits_num = (self._field_count + ROW_KIND_BIT_SIZE) % 8 self.null_mask_search_table = self.generate_null_mask_search_table() self.null_byte_search_table = (0x80, 0x40, 0x20, 0x10, 0x08, 0x04, 0x02, 0x01) -self.row_kind_search_table = \ -[i << (8 - ROW_KIND_BIT_SIZE) for i in range(2 ** ROW_KIND_BIT_SIZE)] +self.row_kind_search_table = [0x00, 0x80, 0x40, 0xC0] Review comment: The '[i << (8 - ROW_KIND_BIT_SIZE) for i in range(2 ** ROW_KIND_BIT_SIZE)]' would not generate the correct row_kind_search_table, so assign the correct table directly. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Assigned] (FLINK-19180) Make RocksDB respect the calculated managed memory fraction

[ https://issues.apache.org/jira/browse/FLINK-19180?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Xintong Song reassigned FLINK-19180: Assignee: Xintong Song > Make RocksDB respect the calculated managed memory fraction > --- > > Key: FLINK-19180 > URL: https://issues.apache.org/jira/browse/FLINK-19180 > Project: Flink > Issue Type: Sub-task > Components: Runtime / State Backends >Reporter: Xintong Song >Assignee: Xintong Song >Priority: Major > Fix For: 1.12.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Assigned] (FLINK-19181) Make python processes respect the calculated managed memory fraction

[ https://issues.apache.org/jira/browse/FLINK-19181?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Dian Fu reassigned FLINK-19181: --- Assignee: Dian Fu > Make python processes respect the calculated managed memory fraction > > > Key: FLINK-19181 > URL: https://issues.apache.org/jira/browse/FLINK-19181 > Project: Flink > Issue Type: Sub-task > Components: API / Python >Reporter: Xintong Song >Assignee: Dian Fu >Priority: Major > Fix For: 1.12.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (FLINK-19389) Error determining python major version to start fileserver during e-2-e tests

[ https://issues.apache.org/jira/browse/FLINK-19389?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201868#comment-17201868 ] Di Xu commented on FLINK-19389: --- [~fly_in_gis] would you mind help to confirm and assign it to me? > Error determining python major version to start fileserver during e-2-e tests > - > > Key: FLINK-19389 > URL: https://issues.apache.org/jira/browse/FLINK-19389 > Project: Flink > Issue Type: Bug > Components: Tests >Reporter: Di Xu >Priority: Major > > Currently the logic to determine python version in command-docker.sh[1] has > an error: > * when there is only python3 installed, `command -v python` also returns 0 > and then `python (actually 3) python2_fileserver.py` will be executed then > it causes an error > * in the case of python version management tool pyenv[2] is used, the soft > link to python3 would exist even if user switched to python2, so simply check > python3 before python(2) will NOT solve the problem. > The suggested way is: > python -c 'import sys; print(sys.version_info.major)' > > [1] > [https://github.com/apache/flink/blob/master/flink-end-to-end-tests/test-scripts/common_docker.sh#L57] > [2] [https://github.com/pyenv/pyenv] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Issue Comment Deleted] (FLINK-19389) Error determining python major version to start fileserver during e-2-e tests

[ https://issues.apache.org/jira/browse/FLINK-19389?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Di Xu updated FLINK-19389: -- Comment: was deleted (was: I can help to fix it, if this is a confirmed issue.) > Error determining python major version to start fileserver during e-2-e tests > - > > Key: FLINK-19389 > URL: https://issues.apache.org/jira/browse/FLINK-19389 > Project: Flink > Issue Type: Bug > Components: Tests >Reporter: Di Xu >Priority: Major > > Currently the logic to determine python version in command-docker.sh[1] has > an error: > * when there is only python3 installed, `command -v python` also returns 0 > and then `python (actually 3) python2_fileserver.py` will be executed then > it causes an error > * in the case of python version management tool pyenv[2] is used, the soft > link to python3 would exist even if user switched to python2, so simply check > python3 before python(2) will NOT solve the problem. > The suggested way is: > python -c 'import sys; print(sys.version_info.major)' > > [1] > [https://github.com/apache/flink/blob/master/flink-end-to-end-tests/test-scripts/common_docker.sh#L57] > [2] [https://github.com/pyenv/pyenv] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (FLINK-19388) Streaming bucketing end-to-end test failed with "Number of running task managers has not reached 4 within a timeout of 40 sec"

[

https://issues.apache.org/jira/browse/FLINK-19388?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Dian Fu updated FLINK-19388:

Component/s: (was: Tests)

> Streaming bucketing end-to-end test failed with "Number of running task

> managers has not reached 4 within a timeout of 40 sec"

> --

>

> Key: FLINK-19388

> URL: https://issues.apache.org/jira/browse/FLINK-19388

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem, Runtime / Coordination

>Affects Versions: 1.12.0

>Reporter: Dian Fu

>Priority: Blocker

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=6876=logs=c88eea3b-64a0-564d-0031-9fdcd7b8abee=ff888d9b-cd34-53cc-d90f-3e446d355529

> {code}

> 2020-09-24T03:13:12.1370987Z Starting taskexecutor daemon on host fv-az661.

> 2020-09-24T03:13:12.1773280Z Number of running task managers 2 is not yet 4.

> 2020-09-24T03:13:16.4342638Z Number of running task managers 3 is not yet 4.

> 2020-09-24T03:13:20.4570976Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:24.4762428Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:28.4955622Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:32.5110079Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:36.5272551Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:40.5672343Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:44.5857760Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:48.6039181Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:52.6056222Z Number of running task managers has not reached

> 4 within a timeout of 40 sec

> 2020-09-24T03:13:52.9275629Z Stopping taskexecutor daemon (pid: 13694) on

> host fv-az661.

> 2020-09-24T03:13:53.1753734Z No standalonesession daemon (pid: 10610) is

> running anymore on fv-az661.

> 2020-09-24T03:13:53.5812242Z Skipping taskexecutor daemon (pid: 10912),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5813449Z Stopping taskexecutor daemon (pid: 11330) on

> host fv-az661.

> 2020-09-24T03:13:53.5818053Z Stopping taskexecutor daemon (pid: 11632) on

> host fv-az661.

> 2020-09-24T03:13:53.5819341Z Skipping taskexecutor daemon (pid: 11965),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5820870Z Skipping taskexecutor daemon (pid: 12906),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5821698Z Stopping taskexecutor daemon (pid: 13392) on

> host fv-az661.

> 2020-09-24T03:13:53.5839544Z [FAIL] Test script contains errors.

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (FLINK-19388) Streaming bucketing end-to-end test failed with "Number of running task managers has not reached 4 within a timeout of 40 sec"

[

https://issues.apache.org/jira/browse/FLINK-19388?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Dian Fu updated FLINK-19388:

Component/s: Runtime / Coordination

> Streaming bucketing end-to-end test failed with "Number of running task

> managers has not reached 4 within a timeout of 40 sec"

> --

>

> Key: FLINK-19388

> URL: https://issues.apache.org/jira/browse/FLINK-19388

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem, Runtime / Coordination, Tests

>Affects Versions: 1.12.0

>Reporter: Dian Fu

>Priority: Blocker

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=6876=logs=c88eea3b-64a0-564d-0031-9fdcd7b8abee=ff888d9b-cd34-53cc-d90f-3e446d355529

> {code}

> 2020-09-24T03:13:12.1370987Z Starting taskexecutor daemon on host fv-az661.

> 2020-09-24T03:13:12.1773280Z Number of running task managers 2 is not yet 4.

> 2020-09-24T03:13:16.4342638Z Number of running task managers 3 is not yet 4.

> 2020-09-24T03:13:20.4570976Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:24.4762428Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:28.4955622Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:32.5110079Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:36.5272551Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:40.5672343Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:44.5857760Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:48.6039181Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:52.6056222Z Number of running task managers has not reached

> 4 within a timeout of 40 sec

> 2020-09-24T03:13:52.9275629Z Stopping taskexecutor daemon (pid: 13694) on

> host fv-az661.

> 2020-09-24T03:13:53.1753734Z No standalonesession daemon (pid: 10610) is

> running anymore on fv-az661.

> 2020-09-24T03:13:53.5812242Z Skipping taskexecutor daemon (pid: 10912),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5813449Z Stopping taskexecutor daemon (pid: 11330) on

> host fv-az661.

> 2020-09-24T03:13:53.5818053Z Stopping taskexecutor daemon (pid: 11632) on

> host fv-az661.

> 2020-09-24T03:13:53.5819341Z Skipping taskexecutor daemon (pid: 11965),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5820870Z Skipping taskexecutor daemon (pid: 12906),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5821698Z Stopping taskexecutor daemon (pid: 13392) on

> host fv-az661.

> 2020-09-24T03:13:53.5839544Z [FAIL] Test script contains errors.

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (FLINK-19405) Translate "DataSet Connectors" page of "Connectors" into Chinese

[ https://issues.apache.org/jira/browse/FLINK-19405?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201867#comment-17201867 ] weizheng commented on FLINK-19405: -- Hi [~jark], Could you please assign it to me > Translate "DataSet Connectors" page of "Connectors" into Chinese > > > Key: FLINK-19405 > URL: https://issues.apache.org/jira/browse/FLINK-19405 > Project: Flink > Issue Type: Improvement > Components: chinese-translation, Documentation >Reporter: weizheng >Priority: Major > > The page url is > [https://ci.apache.org/projects/flink/flink-docs-master/dev/batch/connectors.html > > |https://ci.apache.org/projects/flink/flink-docs-master/dev/batch/connectors.html] > The markdown file is located in flink/docs/dev/batch/connectors.zh.md -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] xintongsong edited a comment on pull request #13464: [FLINK-19307][coordination] Add ResourceTracker

xintongsong edited a comment on pull request #13464: URL: https://github.com/apache/flink/pull/13464#issuecomment-698685698 Sorry for the typo. Just corrected. Having heuristics for triggering re-assignment on both JM/RM sides sounds promising to me. Just to add another idea, we may also consider exactly matching between requirement/resource profiles that are not `UNKNOWN`. We can keep the discussion on the pros and cons of the two approaches and potential other ideas open. I think this issues should not block this PR. Anyway, we do not have the different profiles at the moment. I was just trying to better understand the status and limitations of the current implementation. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (FLINK-19388) Streaming bucketing end-to-end test failed with "Number of running task managers has not reached 4 within a timeout of 40 sec"

[

https://issues.apache.org/jira/browse/FLINK-19388?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201864#comment-17201864

]

Dian Fu edited comment on FLINK-19388 at 9/25/20, 2:34 AM:

---

cc [~azagrebin] Could you help to take a look at this issue to see if it's

related to FLINK-18957?

was (Author: dian.fu):

cc [~azagrebin] Could you help to take a look at this issue to see if it was

introduced in FLINK-18957?

> Streaming bucketing end-to-end test failed with "Number of running task

> managers has not reached 4 within a timeout of 40 sec"

> --

>

> Key: FLINK-19388

> URL: https://issues.apache.org/jira/browse/FLINK-19388

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem, Tests

>Affects Versions: 1.12.0

>Reporter: Dian Fu

>Priority: Blocker

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=6876=logs=c88eea3b-64a0-564d-0031-9fdcd7b8abee=ff888d9b-cd34-53cc-d90f-3e446d355529

> {code}

> 2020-09-24T03:13:12.1370987Z Starting taskexecutor daemon on host fv-az661.

> 2020-09-24T03:13:12.1773280Z Number of running task managers 2 is not yet 4.

> 2020-09-24T03:13:16.4342638Z Number of running task managers 3 is not yet 4.

> 2020-09-24T03:13:20.4570976Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:24.4762428Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:28.4955622Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:32.5110079Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:36.5272551Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:40.5672343Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:44.5857760Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:48.6039181Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:52.6056222Z Number of running task managers has not reached

> 4 within a timeout of 40 sec

> 2020-09-24T03:13:52.9275629Z Stopping taskexecutor daemon (pid: 13694) on

> host fv-az661.

> 2020-09-24T03:13:53.1753734Z No standalonesession daemon (pid: 10610) is

> running anymore on fv-az661.

> 2020-09-24T03:13:53.5812242Z Skipping taskexecutor daemon (pid: 10912),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5813449Z Stopping taskexecutor daemon (pid: 11330) on

> host fv-az661.

> 2020-09-24T03:13:53.5818053Z Stopping taskexecutor daemon (pid: 11632) on

> host fv-az661.

> 2020-09-24T03:13:53.5819341Z Skipping taskexecutor daemon (pid: 11965),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5820870Z Skipping taskexecutor daemon (pid: 12906),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5821698Z Stopping taskexecutor daemon (pid: 13392) on

> host fv-az661.

> 2020-09-24T03:13:53.5839544Z [FAIL] Test script contains errors.

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Created] (FLINK-19405) Translate "DataSet Connectors" page of "Connectors" into Chinese

weizheng created FLINK-19405: Summary: Translate "DataSet Connectors" page of "Connectors" into Chinese Key: FLINK-19405 URL: https://issues.apache.org/jira/browse/FLINK-19405 Project: Flink Issue Type: Improvement Components: chinese-translation, Documentation Reporter: weizheng The page url is [https://ci.apache.org/projects/flink/flink-docs-master/dev/batch/connectors.html |https://ci.apache.org/projects/flink/flink-docs-master/dev/batch/connectors.html] The markdown file is located in flink/docs/dev/batch/connectors.zh.md -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (FLINK-18851) Add checkpoint type to checkpoint history entries in Web UI

[ https://issues.apache.org/jira/browse/FLINK-18851?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201866#comment-17201866 ] goutham commented on FLINK-18851: - [~mapohl] Thanks, Also can you provide access to Jira to assign tasks? This is my Jira user name gkrish24 > Add checkpoint type to checkpoint history entries in Web UI > --- > > Key: FLINK-18851 > URL: https://issues.apache.org/jira/browse/FLINK-18851 > Project: Flink > Issue Type: Improvement > Components: Runtime / REST, Runtime / Web Frontend >Affects Versions: 1.12.0 >Reporter: Arvid Heise >Assignee: goutham >Priority: Minor > Labels: pull-request-available, starter > Attachments: Checkpoint details.png > > > It would be helpful to users to better understand checkpointing times, if the > type of the checkpoint is displayed in the checkpoint history. > Possible types are savepoint, aligned checkpoint, unaligned checkpoint. > A possible place can be seen in the screenshot > !Checkpoint details.png! -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] xintongsong commented on pull request #13464: [FLINK-19307][coordination] Add ResourceTracker

xintongsong commented on pull request #13464: URL: https://github.com/apache/flink/pull/13464#issuecomment-698685698 Sorry for the typo. Just corrected. Having heuristics for triggering re-assignment on both JM/RM sides sounds promising to me. Just to add another idea, we may also consider exactly matching between requirement/resource profiles that are not `UNKNOWN`. I think this issues should not block this PR. Anyway, we do not have the different profiles at the moment. I was just trying to better understand the status and limitations of the current implementation. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (FLINK-18851) Add checkpoint type to checkpoint history entries in Web UI

[ https://issues.apache.org/jira/browse/FLINK-18851?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201866#comment-17201866 ] goutham edited comment on FLINK-18851 at 9/25/20, 2:31 AM: --- [~mapohl] Thanks for your response, Also can you provide access to Jira to assign tasks? This is my Jira user name gkrish24 was (Author: gkrish24): [~mapohl] Thanks, Also can you provide access to Jira to assign tasks? This is my Jira user name gkrish24 > Add checkpoint type to checkpoint history entries in Web UI > --- > > Key: FLINK-18851 > URL: https://issues.apache.org/jira/browse/FLINK-18851 > Project: Flink > Issue Type: Improvement > Components: Runtime / REST, Runtime / Web Frontend >Affects Versions: 1.12.0 >Reporter: Arvid Heise >Assignee: goutham >Priority: Minor > Labels: pull-request-available, starter > Attachments: Checkpoint details.png > > > It would be helpful to users to better understand checkpointing times, if the > type of the checkpoint is displayed in the checkpoint history. > Possible types are savepoint, aligned checkpoint, unaligned checkpoint. > A possible place can be seen in the screenshot > !Checkpoint details.png! -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Comment Edited] (FLINK-19388) Streaming bucketing end-to-end test failed with "Number of running task managers has not reached 4 within a timeout of 40 sec"

[

https://issues.apache.org/jira/browse/FLINK-19388?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17201864#comment-17201864

]

Dian Fu edited comment on FLINK-19388 at 9/25/20, 2:28 AM:

---

cc [~azagrebin] Could you help to take a look at this issue to see if it was

introduced in FLINK-18957.

was (Author: dian.fu):

cc [~azagrebin] Could you help to take a look at this issue? The exception

happens in the class which was introduced in FLINK-18957.

> Streaming bucketing end-to-end test failed with "Number of running task

> managers has not reached 4 within a timeout of 40 sec"

> --

>

> Key: FLINK-19388

> URL: https://issues.apache.org/jira/browse/FLINK-19388

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem, Tests

>Affects Versions: 1.12.0

>Reporter: Dian Fu

>Priority: Blocker

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=6876=logs=c88eea3b-64a0-564d-0031-9fdcd7b8abee=ff888d9b-cd34-53cc-d90f-3e446d355529

> {code}

> 2020-09-24T03:13:12.1370987Z Starting taskexecutor daemon on host fv-az661.

> 2020-09-24T03:13:12.1773280Z Number of running task managers 2 is not yet 4.

> 2020-09-24T03:13:16.4342638Z Number of running task managers 3 is not yet 4.

> 2020-09-24T03:13:20.4570976Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:24.4762428Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:28.4955622Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:32.5110079Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:36.5272551Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:40.5672343Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:44.5857760Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:48.6039181Z Number of running task managers 0 is not yet 4.

> 2020-09-24T03:13:52.6056222Z Number of running task managers has not reached

> 4 within a timeout of 40 sec

> 2020-09-24T03:13:52.9275629Z Stopping taskexecutor daemon (pid: 13694) on

> host fv-az661.

> 2020-09-24T03:13:53.1753734Z No standalonesession daemon (pid: 10610) is

> running anymore on fv-az661.

> 2020-09-24T03:13:53.5812242Z Skipping taskexecutor daemon (pid: 10912),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5813449Z Stopping taskexecutor daemon (pid: 11330) on

> host fv-az661.

> 2020-09-24T03:13:53.5818053Z Stopping taskexecutor daemon (pid: 11632) on

> host fv-az661.

> 2020-09-24T03:13:53.5819341Z Skipping taskexecutor daemon (pid: 11965),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5820870Z Skipping taskexecutor daemon (pid: 12906),

> because it is not running anymore on fv-az661.

> 2020-09-24T03:13:53.5821698Z Stopping taskexecutor daemon (pid: 13392) on

> host fv-az661.

> 2020-09-24T03:13:53.5839544Z [FAIL] Test script contains errors.

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

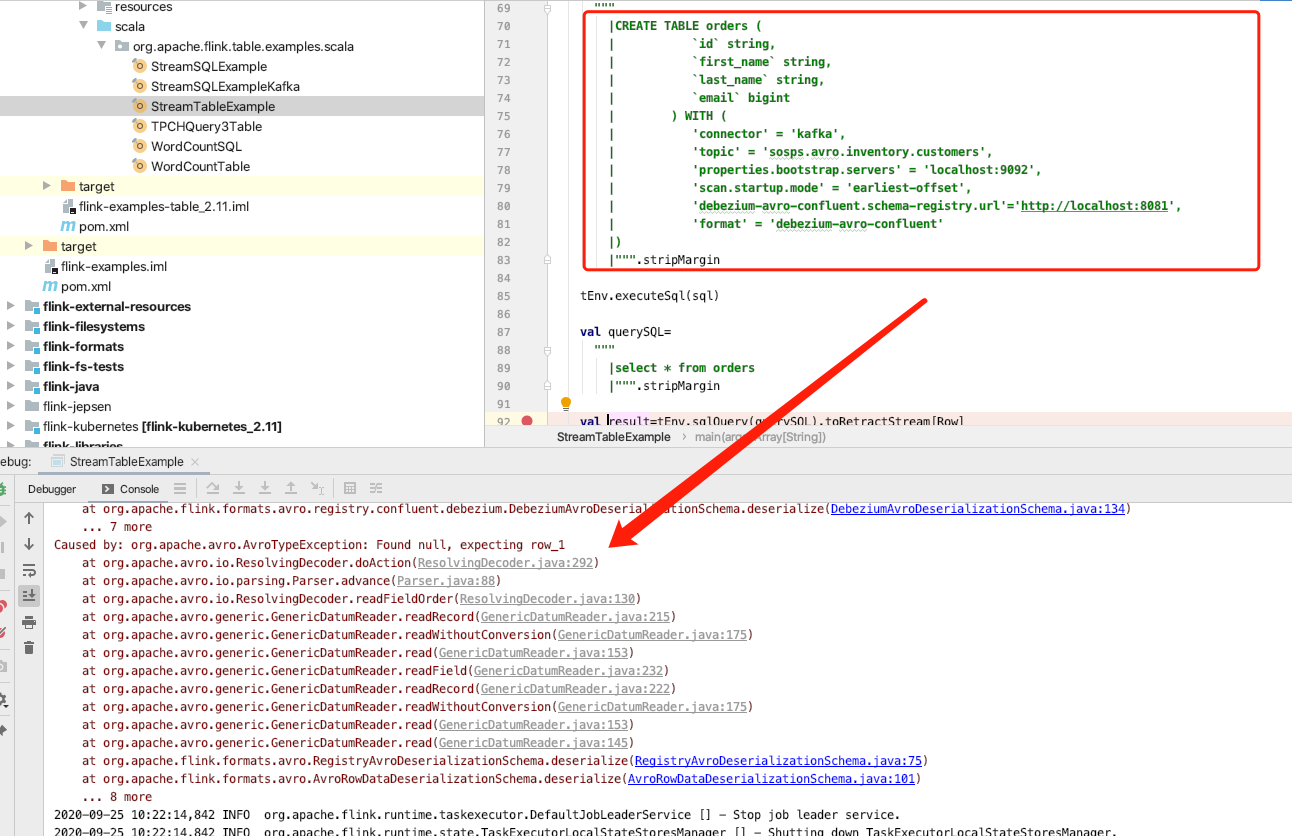

[GitHub] [flink] HsbcJone edited a comment on pull request #13296: [FLINK-18774][format][debezium] Support debezium-avro format

HsbcJone edited a comment on pull request #13296:

URL: https://github.com/apache/flink/pull/13296#issuecomment-698684598

@caozhen1937 hi i fork ur code for test debezium-avro-confluent . i

debug the source code .but its do not work .

@wuchong

here is the exception;

the belows is the 'used topic schema'

`{

"connect.name": "sosps.avro.inventory.customers.Envelope",

"fields": [

{