[GitHub] [flink] Airblader commented on pull request #17898: [FLINK-25047][table] Resolve architectural violations

Airblader commented on pull request #17898:
URL: https://github.com/apache/flink/pull/17898#issuecomment-981374898

Thanks @wenlong88. Although I think chances are small that anyone is using these exceptions, I would like this to be a non-breaking PR, so it makes sense to do that.

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

[GitHub] [flink] MartijnVisser commented on pull request #17845: [FLINK-24352] [flink-table-planner] Add null check for temporal table check on SqlSnapshot

MartijnVisser commented on pull request #17845:
URL: https://github.com/apache/flink/pull/17845#issuecomment-981374790

Should we create a follow-up ticket for the issue you've mentioned @godfreyhe ?

[GitHub] [flink] AHeise merged pull request #17538: [FLINK-24325][connectors/elasticsearch] Create Elasticsearch 6 Sink

AHeise merged pull request #17538:
URL: https://github.com/apache/flink/pull/17538

[GitHub] [flink] AHeise commented on a change in pull request #17870: [FLINK-24596][kafka] Fix buffered KafkaUpsert sink

AHeise commented on a change in pull request #17870:

URL: https://github.com/apache/flink/pull/17870#discussion_r758107290

##

File path:

flink-connectors/flink-connector-kafka/src/main/java/org/apache/flink/connector/kafka/sink/TopicSelector.java

##

@@ -18,14 +18,12 @@

package org.apache.flink.connector.kafka.sink;

import org.apache.flink.annotation.PublicEvolving;

-

-import java.io.Serializable;

-import java.util.function.Function;

+import org.apache.flink.util.function.SerializableFunction;

/**

* Selects a topic for the incoming record.

*

* @param <IN> type of the incoming record

*/

@PublicEvolving

-public interface TopicSelector<IN> extends Function<IN, String>, Serializable {}

+public interface TopicSelector<IN> extends SerializableFunction<IN, String> {}

Review comment:

This is probably a breaking change (please double-check whether user code is affected). Since this PR is intended as a bugfix for 1.14.0, I'd remove it for now. Please also double-check the other migrations.

In general, I think that `TopicSelector` should not have inherited from `Function` to begin with. :/
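To make the compatibility concern concrete, here is a minimal sketch of the two interface shapes from the diff. It assumes that `SerializableFunction` simply combines `Function` and `Serializable`; the stand-in interface, the `Old`/`New` names, and the `route` helper are hypothetical and are not Flink code. It shows that lambda-based user code looks the same either way, while the declared direct super-interfaces differ, which is the kind of change worth double-checking for a bugfix release.

```java
import java.io.Serializable;
import java.util.function.Function;

public class TopicSelectorSketch {
    /** Stand-in for Flink's SerializableFunction; its shape here is an assumption. */
    public interface SerializableFunction<T, R> extends Function<T, R>, Serializable {}

    /** Shape before the change: two direct super-interfaces. */
    public interface TopicSelectorOld<IN> extends Function<IN, String>, Serializable {}

    /** Shape after the change: one direct super-interface. */
    public interface TopicSelectorNew<IN> extends SerializableFunction<IN, String> {}

    /** Number of interfaces a type extends directly (not transitively). */
    public static int directSuperInterfaces(Class<?> type) {
        return type.getInterfaces().length;
    }

    /** Hypothetical caller code that only relies on Function#apply. */
    public static String route(Function<String, String> selector, String record) {
        return selector.apply(record);
    }

    public static void main(String[] args) {
        TopicSelectorOld<String> oldSelector = record -> "topic-" + record.length();
        TopicSelectorNew<String> newSelector = record -> "topic-" + record.length();

        // Source-level usage is identical for both shapes.
        System.out.println(route(oldSelector, "abc"));
        System.out.println(route(newSelector, "abc"));

        // The declared hierarchy differs: 2 direct super-interfaces versus 1.
        System.out.println(directSuperInterfaces(TopicSelectorOld.class));
        System.out.println(directSuperInterfaces(TopicSelectorNew.class));
    }
}
```

Whether the real change breaks any user code is exactly what the review asks to verify; the sketch only illustrates what differs at the type level.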

##

File path:

flink-connectors/flink-connector-kafka/src/test/java/org/apache/flink/streaming/connectors/kafka/table/ReducingUpsertWriterTest.java

##

@@ -320,8 +319,8 @@ public void registerProcessingTimer(

long time, ProcessingTimeCallback processingTimerCallback) {}

},

enableObjectReuse

-? typeInformation.createSerializer(new ExecutionConfig())::copy

-: Function.identity());

+? r -> typeInformation.createSerializer(new ExecutionConfig()).copy(r)

Review comment:

You can revert that line as well.
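As background on the two forms in this hunk, the following sketch shows a general Java difference between a bound method reference and the equivalent-looking lambda. This is not necessarily the reviewer's reason for asking to revert the line. `Copier` and `createCopier` are hypothetical stand-ins for the serializer and for `typeInformation.createSerializer(new ExecutionConfig())`.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

public class MethodRefVsLambda {
    /** Hypothetical stand-in for a serializer; only copy() matters here. */
    public static final class Copier {
        public String copy(String value) {
            return new String(value);
        }
    }

    /** Counts how often the factory below is invoked. */
    public static final AtomicInteger CREATED = new AtomicInteger();

    /** Hypothetical stand-in for the createSerializer(...) call in the diff. */
    public static Copier createCopier() {
        CREATED.incrementAndGet();
        return new Copier();
    }

    /** Bound method reference: the receiver expression is evaluated once, at creation. */
    public static int creationsWithMethodRef(int calls) {
        CREATED.set(0);
        Function<String, String> copy = createCopier()::copy;
        for (int i = 0; i < calls; i++) {
            copy.apply("record");
        }
        return CREATED.get();
    }

    /** Lambda: the body, including the factory call, runs on every apply(). */
    public static int creationsWithLambda(int calls) {
        CREATED.set(0);
        Function<String, String> copy = r -> createCopier().copy(r);
        for (int i = 0; i < calls; i++) {
            copy.apply("record");
        }
        return CREATED.get();
    }

    public static void main(String[] args) {
        System.out.println(creationsWithMethodRef(3)); // prints 1
        System.out.println(creationsWithLambda(3));    // prints 3
    }
}
```

In the test from the diff, the lambda form would create a new serializer for every record, while the method-reference form creates it once.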


[GitHub] [flink] flinkbot edited a comment on pull request #17924: [FLINK-25072][streaming] Introduce description on Transformation and …

flinkbot edited a comment on pull request #17924:
URL: https://github.com/apache/flink/pull/17924#issuecomment-979870210

## CI report:

* 07174990a0a73184286f32792dda356ea71d96a6 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27174)
* 3dab421140db64ac746629e032738a457ae78d99 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27192)

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot run azure` re-run the last Azure build

[GitHub] [flink] flinkbot edited a comment on pull request #17924: [FLINK-25072][streaming] Introduce description on Transformation and …

flinkbot edited a comment on pull request #17924:
URL: https://github.com/apache/flink/pull/17924#issuecomment-979870210

## CI report:

* 07174990a0a73184286f32792dda356ea71d96a6 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27174)
* 3dab421140db64ac746629e032738a457ae78d99 UNKNOWN

[GitHub] [flink] flinkbot edited a comment on pull request #17938: [Flink-25073][streaming] Introduce TreeMode description for vertices

flinkbot edited a comment on pull request #17938:
URL: https://github.com/apache/flink/pull/17938#issuecomment-981363546

## CI report:

* d29e331ebbccb61bbb784d4b0e41cf1e591cb9c0 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27191)

[GitHub] [flink] flinkbot commented on pull request #17938: [Flink-25073][streaming] Introduce TreeMode description for vertices

flinkbot commented on pull request #17938:
URL: https://github.com/apache/flink/pull/17938#issuecomment-981364305

Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review.

## Automated Checks

Last check on commit d29e331ebbccb61bbb784d4b0e41cf1e591cb9c0 (Mon Nov 29 07:42:28 UTC 2021)

**Warnings:**

* No documentation files were touched! Remember to keep the Flink docs up to date!
* **This pull request references an unassigned [Jira ticket](https://issues.apache.org/jira/browse/FLINK-25073).** According to the [code contribution guide](https://flink.apache.org/contributing/contribute-code.html), tickets need to be assigned before starting with the implementation work.

Mention the bot in a comment to re-run the automated checks.

## Review Progress

* ❓ 1. The [description] looks good.
* ❓ 2. There is [consensus] that the contribution should go into Flink.
* ❓ 3. Needs [attention] from.
* ❓ 4. The change fits into the overall [architecture].
* ❓ 5. Overall code [quality] is good.

Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process.

The bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer or PMC member is required.

Bot commands
The @flinkbot bot supports the following commands:
- `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`)
- `@flinkbot approve all` to approve all aspects
- `@flinkbot approve-until architecture` to approve everything until `architecture`
- `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention
- `@flinkbot disapprove architecture` to remove an approval you gave earlier

[GitHub] [flink] flinkbot commented on pull request #17938: [Flink-25073][streaming] Introduce TreeMode description for vertices

flinkbot commented on pull request #17938:
URL: https://github.com/apache/flink/pull/17938#issuecomment-981363546

## CI report:

* d29e331ebbccb61bbb784d4b0e41cf1e591cb9c0 UNKNOWN

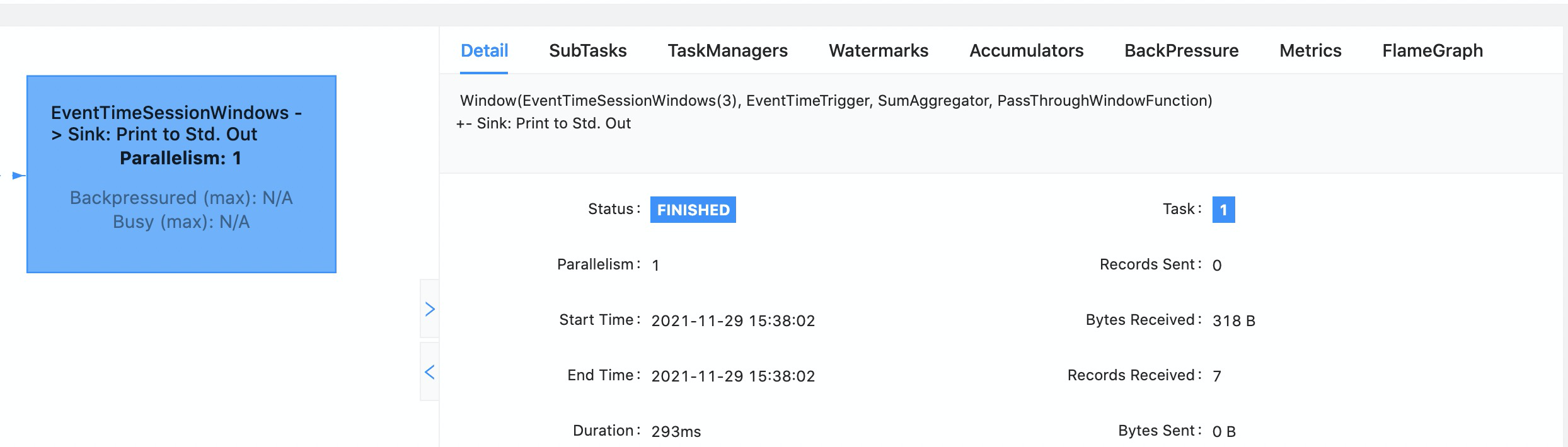

[GitHub] [flink] wenlong88 opened a new pull request #17938: [Flink-25073][streaming] Introduce TreeMode description for vertices

wenlong88 opened a new pull request #17938:
URL: https://github.com/apache/flink/pull/17938

## What is the purpose of the change

This PR is a follow-up to FLINK-25072. It initializes the operatorPrettyName of JobVertex according to the operator descriptions introduced in FLINK-25072 and exposes the description in the web UI.

## Brief change log

- Initialize operatorPrettyName of JobVertex in streaming jobs according to the description of operators.
- Introduce a new tree mode description to make the operatorPrettyName easier to understand.
- Update the web UI: display the name in the DAG and the description in the job vertex details.

## Verifying this change

This change added tests and can be verified as follows:

- *Added unit tests to StreamingJobGraphGeneratorTest to verify the generation of the description*
- *Verified the changes in the web UI manually; the following is the result in the examples.*

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): (no)
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no)
- The serializers: (no)
- The runtime per-record code paths (performance sensitive): (no)
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: (no)
- The S3 file system connector: (no)

## Documentation

- Does this pull request introduce a new feature? (yes)
- If yes, how is the feature documented? (JavaDocs)

[GitHub] [flink] flinkbot edited a comment on pull request #17788: [FLINK-15826][Tabel SQL/API] Add renameFunction() to Catalog

flinkbot edited a comment on pull request #17788:
URL: https://github.com/apache/flink/pull/17788#issuecomment-968179643

## CI report:

* 4e6d4e3bab6c9fe8caf2363177e74011a175ee72 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=26633)
* b6a9b9e36cb882db39de2e5afab9068e86655c2e Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27190)

[GitHub] [flink] flinkbot edited a comment on pull request #17867: [FLINK-24592][Table SQL/Client] FlinkSQL Client multiline parser improvements

flinkbot edited a comment on pull request #17867:
URL: https://github.com/apache/flink/pull/17867#issuecomment-975551530

## CI report:

* ed90e252cfa8e3fd725ca71473339aafe213a4b3 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=26852)
* 4df1c7765d87265518ccb5dd008118a8dbf80f5b Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27189)

[GitHub] [flink] flinkbot edited a comment on pull request #17788: [FLINK-15826][Tabel SQL/API] Add renameFunction() to Catalog

flinkbot edited a comment on pull request #17788:
URL: https://github.com/apache/flink/pull/17788#issuecomment-968179643

## CI report:

* 4e6d4e3bab6c9fe8caf2363177e74011a175ee72 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=26633)
* b6a9b9e36cb882db39de2e5afab9068e86655c2e UNKNOWN

[GitHub] [flink] shenzhu removed a comment on pull request #17788: [FLINK-15826][Tabel SQL/API] Add renameFunction() to Catalog

shenzhu removed a comment on pull request #17788:
URL: https://github.com/apache/flink/pull/17788#issuecomment-969976164

[GitHub] [flink] flinkbot edited a comment on pull request #17867: [FLINK-24592][Table SQL/Client] FlinkSQL Client multiline parser improvements

flinkbot edited a comment on pull request #17867:
URL: https://github.com/apache/flink/pull/17867#issuecomment-975551530

## CI report:

* ed90e252cfa8e3fd725ca71473339aafe213a4b3 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=26852)
* 4df1c7765d87265518ccb5dd008118a8dbf80f5b UNKNOWN

[jira] [Assigned] (FLINK-25044) Add More Unit Test For Pulsar Source

[ https://issues.apache.org/jira/browse/FLINK-25044?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Arvid Heise reassigned FLINK-25044:

Assignee: Yufei Zhang

> Add More Unit Test For Pulsar Source
>
> Key: FLINK-25044
> URL: https://issues.apache.org/jira/browse/FLINK-25044
> Project: Flink
> Issue Type: Improvement
> Components: Connectors / Pulsar
> Reporter: Yufei Zhang
> Assignee: Yufei Zhang
> Priority: Minor
> Labels: pull-request-available, testing
>
> We should enhance the pulsar source connector tests by adding more unit tests.
>
> * SourceReader
> * SplitReader
> * Enumerator
> * SourceBuilder

--
This message was sent by Atlassian Jira
(v8.20.1#820001)

[GitHub] [flink] klion26 commented on a change in pull request #10925: [FLINK-15690][core][configuration] Enable configuring ExecutionConfig & CheckpointConfig from flink-conf.yaml

klion26 commented on a change in pull request #10925:
URL: https://github.com/apache/flink/pull/10925#discussion_r75800

## File path: flink-core/src/main/java/org/apache/flink/api/common/ExecutionConfig.java

@@ -99,7 +99,7 @@
 private ClosureCleanerLevel closureCleanerLevel = ClosureCleanerLevel.RECURSIVE;

- private int parallelism = PARALLELISM_DEFAULT;
+ private int parallelism = CoreOptions.DEFAULT_PARALLELISM.defaultValue();

Review comment:
@dawidwys this changes the default parallelism from -1 (undefined) to 1, is this what we expect?
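To illustrate why the question matters, here is a small sketch of a sentinel-based fallback. The values -1 and 1 come from the review comment; the `resolve` method and the cluster default of 4 are hypothetical and only show how initializing the field to 1 instead of the sentinel could hide a later fallback.

```java
public class ParallelismDefaultSketch {
    /** Sentinel meaning "not set"; the review comment gives -1 for PARALLELISM_DEFAULT. */
    public static final int PARALLELISM_DEFAULT = -1;

    /** The review comment gives 1 for CoreOptions.DEFAULT_PARALLELISM.defaultValue(). */
    public static final int OPTION_DEFAULT_VALUE = 1;

    /** Hypothetical resolution step: fall back to the cluster default only when unset. */
    public static int resolve(int fieldValue, int clusterDefault) {
        return fieldValue == PARALLELISM_DEFAULT ? clusterDefault : fieldValue;
    }

    public static void main(String[] args) {
        int clusterDefault = 4; // illustrative value, not taken from the PR

        // Field initialized with the sentinel: the cluster default applies.
        System.out.println(resolve(PARALLELISM_DEFAULT, clusterDefault)); // prints 4

        // Field initialized with the option's default value: it looks explicitly set.
        System.out.println(resolve(OPTION_DEFAULT_VALUE, clusterDefault)); // prints 1
    }
}
```

With the second initialization, code that checks for "undefined" can no longer tell an unset parallelism from one that was deliberately set to 1.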

[GitHub] [flink] flinkbot edited a comment on pull request #17666: [FLINK-21327][table-planner-blink] Support window TVF in batch mode

flinkbot edited a comment on pull request #17666:
URL: https://github.com/apache/flink/pull/17666#issuecomment-960514675

## CI report:

* cc426ff491234fd94ff50163786c02f9a0e1e5b5 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27188)

[jira] [Comment Edited] (FLINK-25077) Postgresql connector fails in case column with nested arrays

[

https://issues.apache.org/jira/browse/FLINK-25077?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450228#comment-17450228

]

Sergey Nuyanzin edited comment on FLINK-25077 at 11/29/21, 7:14 AM:

Hi [~jark] thanks for your response.

Yes, I think so

I have a fix for that in my branch [1].

Could you please clarify this question?

The only thing that I noticed: there are no tests for _org.apache.flink.connector.jdbc.internal.converter.PostgresRowConverter_ at all...

Is there any specific reason for that or just another thing to be improved?

[1]

https://github.com/snuyanzin/flink/commit/bd5e9003c86fcc3ce03b2c109f1feb916029d3c8

was (Author: sergey nuyanzin):

Hi [~jark] thanks for your response.

Yes, I think so

I have a fix for that in my branch [1].

Could you please clarify this question?

The only thing that I noticed: there are no tests for _org.apache.flink.connector.jdbc.internal.converter.PostgresRowConverter_ at all...

Is there any specific reason for that or just another thing to be improved?

> Postgresql connector fails in case column with nested arrays

>

>

> Key: FLINK-25077

> URL: https://issues.apache.org/jira/browse/FLINK-25077

> Project: Flink

> Issue Type: Bug

> Components: Connectors / JDBC

>Affects Versions: 1.14.0

>Reporter: Sergey Nuyanzin

>Priority: Major

>

> On Postgres

> {code:sql}

> CREATE TABLE sal_emp (

> name VARCHAR,

> pay_by_quarter INT[],

> schedule VARCHAR[][]

> );

> INSERT INTO sal_emp VALUES ('test', ARRAY[1], ARRAY[ARRAY['nested']]);

> {code}

> on Flink

> {code:sql}

> CREATE TABLE flink_sal_emp (

> name STRING,

> pay_by_quarter ARRAY<INT>,

> schedule ARRAY<ARRAY<STRING>>

> ) WITH (

> 'connector' = 'jdbc',

> 'url' = 'jdbc:postgresql://localhost:5432/postgres',

> 'table-name' = 'sal_emp',

> 'username' = 'postgres',

> 'password' = 'postgres'

> );

> SELECT * FROM default_catalog.default_database.flink_sal_emp ;

> {code}

> result

> {noformat}

> [ERROR] Could not execute SQL statement. Reason:

> java.lang.ClassCastException: class [Ljava.lang.String; cannot be cast to

> class org.postgresql.jdbc.PgArray ([Ljava.lang.String; is in module java.base

> of loader 'bootstrap'; org.postgresql.jdbc.PgArray is in unnamed module of

> loader 'app')

> at

> org.apache.flink.connector.jdbc.internal.converter.PostgresRowConverter.lambda$createPostgresArrayConverter$4f4cdb95$2(PostgresRowConverter.java:104)

> at

> org.apache.flink.connector.jdbc.converter.AbstractJdbcRowConverter.lambda$wrapIntoNullableInternalConverter$ea5b8348$1(AbstractJdbcRowConverter.java:127)

> at

> org.apache.flink.connector.jdbc.internal.converter.PostgresRowConverter.lambda$createPostgresArrayConverter$4f4cdb95$2(PostgresRowConverter.java:108)

> at

> org.apache.flink.connector.jdbc.converter.AbstractJdbcRowConverter.lambda$wrapIntoNullableInternalConverter$ea5b8348$1(AbstractJdbcRowConverter.java:127)

> at

> org.apache.flink.connector.jdbc.converter.AbstractJdbcRowConverter.toInternal(AbstractJdbcRowConverter.java:78)

> at

> org.apache.flink.connector.jdbc.table.JdbcRowDataInputFormat.nextRecord(JdbcRowDataInputFormat.java:257)

> at

> org.apache.flink.connector.jdbc.table.JdbcRowDataInputFormat.nextRecord(JdbcRowDataInputFormat.java:56)

> at

> org.apache.flink.streaming.api.functions.source.InputFormatSourceFunction.run(InputFormatSourceFunction.java:90)

> at

> org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:110)

> at

> org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:67)

> at

> org.apache.flink.streaming.runtime.tasks.SourceStreamTask$LegacySourceFunctionThread.run(SourceStreamTask.java:330)

> {noformat}
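The stack trace suggests that the converter casts each element of the outer array to the driver's array wrapper, while the inner elements arrive as plain Java arrays (`[Ljava.lang.String;`). The sketch below reproduces that failure mode and one tolerant alternative using only the JDK. `WrappedArray` is a hypothetical stand-in for a wrapper such as `PgArray`, and the tolerant variant is an illustration, not the fix from the reporter's branch.

```java
import java.util.ArrayList;
import java.util.List;

public class NestedArraySketch {
    /** Hypothetical stand-in for a driver array wrapper such as PgArray. */
    public static final class WrappedArray {
        private final Object[] values;

        public WrappedArray(Object[] values) {
            this.values = values;
        }

        public Object[] getArray() {
            return values;
        }
    }

    /** Casts every level to the wrapper, which fails on plain inner arrays. */
    public static Object convertNaive(Object value, int depth) {
        if (depth == 0) {
            return value;
        }
        Object[] elements = ((WrappedArray) value).getArray();
        List<Object> out = new ArrayList<>();
        for (Object element : elements) {
            out.add(convertNaive(element, depth - 1));
        }
        return out;
    }

    /** Accepts either the wrapper or a plain Java array at each level. */
    public static Object convertTolerant(Object value, int depth) {
        if (depth == 0) {
            return value;
        }
        Object[] elements = value instanceof WrappedArray
                ? ((WrappedArray) value).getArray()
                : (Object[]) value;
        List<Object> out = new ArrayList<>();
        for (Object element : elements) {
            out.add(convertTolerant(element, depth - 1));
        }
        return out;
    }

    /** Mirrors the reported row: the outer value is wrapped, the inner one is a String[]. */
    public static Object sample() {
        return new WrappedArray(new String[][] {{"nested"}});
    }

    public static void main(String[] args) {
        try {
            convertNaive(sample(), 2);
        } catch (ClassCastException e) {
            System.out.println("naive conversion failed with ClassCastException");
        }
        System.out.println(convertTolerant(sample(), 2)); // prints [[nested]]
    }
}
```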


[jira] [Commented] (FLINK-25077) Postgresql connector fails in case column with nested arrays

[

https://issues.apache.org/jira/browse/FLINK-25077?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450228#comment-17450228

]

Sergey Nuyanzin commented on FLINK-25077:

-

Hi [~jark] thanks for your response.

Yes, I think so

I have a fix for that in my branch [1].

Could you please clarify this question?

The only thing that I noticed: there are no tests for _org.apache.flink.connector.jdbc.internal.converter.PostgresRowConverter_ at all...

Is there any specific reason for that or just another thing to be improved?

> Postgresql connector fails in case column with nested arrays

>

>

> Key: FLINK-25077

> URL: https://issues.apache.org/jira/browse/FLINK-25077

> Project: Flink

> Issue Type: Bug

> Components: Connectors / JDBC

>Affects Versions: 1.14.0

>Reporter: Sergey Nuyanzin

>Priority: Major

>

> On Postgres

> {code:sql}

> CREATE TABLE sal_emp (

> name VARCHAR,

> pay_by_quarter INT[],

> schedule VARCHAR[][]

> );

> INSERT INTO sal_emp VALUES ('test', ARRAY[1], ARRAY[ARRAY['nested']]);

> {code}

> on Flink

> {code:sql}

> CREATE TABLE flink_sal_emp (

> name STRING,

> pay_by_quarter ARRAY<INT>,

> schedule ARRAY<ARRAY<STRING>>

> ) WITH (

> 'connector' = 'jdbc',

> 'url' = 'jdbc:postgresql://localhost:5432/postgres',

> 'table-name' = 'sal_emp',

> 'username' = 'postgres',

> 'password' = 'postgres'

> );

> SELECT * FROM default_catalog.default_database.flink_sal_emp ;

> {code}

> result

> {noformat}

> [ERROR] Could not execute SQL statement. Reason:

> java.lang.ClassCastException: class [Ljava.lang.String; cannot be cast to

> class org.postgresql.jdbc.PgArray ([Ljava.lang.String; is in module java.base

> of loader 'bootstrap'; org.postgresql.jdbc.PgArray is in unnamed module of

> loader 'app')

> at

> org.apache.flink.connector.jdbc.internal.converter.PostgresRowConverter.lambda$createPostgresArrayConverter$4f4cdb95$2(PostgresRowConverter.java:104)

> at

> org.apache.flink.connector.jdbc.converter.AbstractJdbcRowConverter.lambda$wrapIntoNullableInternalConverter$ea5b8348$1(AbstractJdbcRowConverter.java:127)

> at

> org.apache.flink.connector.jdbc.internal.converter.PostgresRowConverter.lambda$createPostgresArrayConverter$4f4cdb95$2(PostgresRowConverter.java:108)

> at

> org.apache.flink.connector.jdbc.converter.AbstractJdbcRowConverter.lambda$wrapIntoNullableInternalConverter$ea5b8348$1(AbstractJdbcRowConverter.java:127)

> at

> org.apache.flink.connector.jdbc.converter.AbstractJdbcRowConverter.toInternal(AbstractJdbcRowConverter.java:78)

> at

> org.apache.flink.connector.jdbc.table.JdbcRowDataInputFormat.nextRecord(JdbcRowDataInputFormat.java:257)

> at

> org.apache.flink.connector.jdbc.table.JdbcRowDataInputFormat.nextRecord(JdbcRowDataInputFormat.java:56)

> at

> org.apache.flink.streaming.api.functions.source.InputFormatSourceFunction.run(InputFormatSourceFunction.java:90)

> at

> org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:110)

> at

> org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:67)

> at

> org.apache.flink.streaming.runtime.tasks.SourceStreamTask$LegacySourceFunctionThread.run(SourceStreamTask.java:330)

> {noformat}


[jira] [Updated] (FLINK-25050) Translate "Metrics" page of "Operations" into Chinese

[ https://issues.apache.org/jira/browse/FLINK-25050?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ZhiJie Yang updated FLINK-25050:

Component/s: Documentation

> Translate "Metrics" page of "Operations" into Chinese
>
> Key: FLINK-25050
> URL: https://issues.apache.org/jira/browse/FLINK-25050
> Project: Flink
> Issue Type: Improvement
> Components: chinese-translation, Documentation
> Reporter: ZhiJie Yang
> Assignee: ZhiJie Yang
> Priority: Minor
> Labels: pull-request-available
>
> The page url is https://nightlies.apache.org/flink/flink-docs-master/docs/zh/docs/ops/metrics/
> The markdown file is located in flink/docs/content.zh/docs/ops/metrics.md

[jira] [Commented] (FLINK-25077) Postgresql connector fails in case column with nested arrays

[

https://issues.apache.org/jira/browse/FLINK-25077?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450222#comment-17450222

]

Jark Wu commented on FLINK-25077:

-

I think this might be because the JDBC connector with the PG dialect just doesn't support nested arrays.

> Postgresql connector fails in case column with nested arrays

>

>

> Key: FLINK-25077

> URL: https://issues.apache.org/jira/browse/FLINK-25077

> Project: Flink

> Issue Type: Bug

> Components: Connectors / JDBC

>Affects Versions: 1.14.0

>Reporter: Sergey Nuyanzin

>Priority: Major

>

> On Postgres

> {code:sql}

> CREATE TABLE sal_emp (

> name VARCHAR,

> pay_by_quarter INT[],

> schedule VARCHAR[][]

> );

> INSERT INTO sal_emp VALUES ('test', ARRAY[1], ARRAY[ARRAY['nested']]);

> {code}

> on Flink

> {code:sql}

> CREATE TABLE flink_sal_emp (

> name STRING,

> pay_by_quarter ARRAY<INT>,

> schedule ARRAY<ARRAY<STRING>>

> ) WITH (

> 'connector' = 'jdbc',

> 'url' = 'jdbc:postgresql://localhost:5432/postgres',

> 'table-name' = 'sal_emp',

> 'username' = 'postgres',

> 'password' = 'postgres'

> );

> SELECT * FROM default_catalog.default_database.flink_sal_emp ;

> {code}

> result

> {noformat}

> [ERROR] Could not execute SQL statement. Reason:

> java.lang.ClassCastException: class [Ljava.lang.String; cannot be cast to

> class org.postgresql.jdbc.PgArray ([Ljava.lang.String; is in module java.base

> of loader 'bootstrap'; org.postgresql.jdbc.PgArray is in unnamed module of

> loader 'app')

> at

> org.apache.flink.connector.jdbc.internal.converter.PostgresRowConverter.lambda$createPostgresArrayConverter$4f4cdb95$2(PostgresRowConverter.java:104)

> at

> org.apache.flink.connector.jdbc.converter.AbstractJdbcRowConverter.lambda$wrapIntoNullableInternalConverter$ea5b8348$1(AbstractJdbcRowConverter.java:127)

> at

> org.apache.flink.connector.jdbc.internal.converter.PostgresRowConverter.lambda$createPostgresArrayConverter$4f4cdb95$2(PostgresRowConverter.java:108)

> at

> org.apache.flink.connector.jdbc.converter.AbstractJdbcRowConverter.lambda$wrapIntoNullableInternalConverter$ea5b8348$1(AbstractJdbcRowConverter.java:127)

> at

> org.apache.flink.connector.jdbc.converter.AbstractJdbcRowConverter.toInternal(AbstractJdbcRowConverter.java:78)

> at

> org.apache.flink.connector.jdbc.table.JdbcRowDataInputFormat.nextRecord(JdbcRowDataInputFormat.java:257)

> at

> org.apache.flink.connector.jdbc.table.JdbcRowDataInputFormat.nextRecord(JdbcRowDataInputFormat.java:56)

> at

> org.apache.flink.streaming.api.functions.source.InputFormatSourceFunction.run(InputFormatSourceFunction.java:90)

> at

> org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:110)

> at

> org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:67)

> at

> org.apache.flink.streaming.runtime.tasks.SourceStreamTask$LegacySourceFunctionThread.run(SourceStreamTask.java:330)

> {noformat}


[GitHub] [flink] flinkbot edited a comment on pull request #17937: [FLINK-25044][testing][Pulsar Connector] Add More Unit Test For Pulsar Source

flinkbot edited a comment on pull request #17937:
URL: https://github.com/apache/flink/pull/17937#issuecomment-981287170

## CI report:

* 1fb86a6233a0fab5a0ef858b40566c3cc76f1a82 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=27187)

[jira] [Assigned] (FLINK-25065) Update lookup document for jdbc connector

[ https://issues.apache.org/jira/browse/FLINK-25065?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jark Wu reassigned FLINK-25065:

Assignee: Gaurav Miglani

> Update lookup document for jdbc connector
>
> Key: FLINK-25065
> URL: https://issues.apache.org/jira/browse/FLINK-25065
> Project: Flink
> Issue Type: Improvement
> Components: Documentation
> Affects Versions: 1.15.0
> Reporter: Gaurav Miglani
> Assignee: Gaurav Miglani
> Priority: Minor
> Labels: pull-request-available
>
> Update `lookup.cache.caching-missing-key` document for jdbc connector

[jira] [Updated] (FLINK-25065) Update lookup document for jdbc connector

[ https://issues.apache.org/jira/browse/FLINK-25065?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jark Wu updated FLINK-25065:

Component/s: Connectors / JDBC

> Update lookup document for jdbc connector
>
> Key: FLINK-25065
> URL: https://issues.apache.org/jira/browse/FLINK-25065
> Project: Flink
> Issue Type: Improvement
> Components: Connectors / JDBC, Documentation
> Affects Versions: 1.15.0
> Reporter: Gaurav Miglani
> Assignee: Gaurav Miglani
> Priority: Minor
> Labels: pull-request-available
>
> Update `lookup.cache.caching-missing-key` document for jdbc connector

[GitHub] [flink] wuchong commented on pull request #17918: [FLINK-25065][docs] Update document for jdbc connector

wuchong commented on pull request #17918: URL: https://github.com/apache/flink/pull/17918#issuecomment-98154 @gaurav726 , could you update the content for Chinese documentation as well? The file is located in `docs/content.zh/docs/connectors/table/jdbc.md`. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-19358) when submit job on application mode with HA,the jobid will be 0000000000

[ https://issues.apache.org/jira/browse/FLINK-19358?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450220#comment-17450220 ] jocean.shi commented on FLINK-19358: [~trohrmann] I get your concern. In other words, the question is whether it is reasonable for the code that generates the JobGraph to run twice on failover in "application mode": user code may contain random or time-dependent logic, so running it twice may produce different logic. > when submit job on application mode with HA,the jobid will be 00 > > > Key: FLINK-19358 > URL: https://issues.apache.org/jira/browse/FLINK-19358 > Project: Flink > Issue Type: Bug > Components: Runtime / Coordination >Affects Versions: 1.11.0 >Reporter: Jun Zhang >Priority: Minor > Labels: auto-deprioritized-major, usability > > When submitting a Flink job in application mode with HA, the Flink job id will be > , when I have many jobs, they all have the same > job id, which leads to a checkpoint error -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Assigned] (FLINK-25050) Translate "Metrics" page of "Operations" into Chinese

[ https://issues.apache.org/jira/browse/FLINK-25050?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jark Wu reassigned FLINK-25050: --- Assignee: ZhiJie Yang > Translate "Metrics" page of "Operations" into Chinese > - > > Key: FLINK-25050 > URL: https://issues.apache.org/jira/browse/FLINK-25050 > Project: Flink > Issue Type: Improvement > Components: chinese-translation >Reporter: ZhiJie Yang >Assignee: ZhiJie Yang >Priority: Minor > Labels: pull-request-available > > The page url is > https://nightlies.apache.org/flink/flink-docs-master/docs/zh/docs/ops/metrics/ > The markdown file is located in flink/docs/content.zh/docs/ops/metrics.md -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Commented] (FLINK-25055) Support listen and notify mechanism for PartitionRequest

[ https://issues.apache.org/jira/browse/FLINK-25055?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450218#comment-17450218 ] Shammon commented on FLINK-25055: - [~trohrmann] Thank you for your reply. Yes, your understanding is correct: this mechanism helps to optimize the interaction between tasks. Thank you for the suggestions as well; I agree that it is necessary and important to delete the specified callback in case of a timeout or a task deployment failure. I want to add this mechanism to Flink for the case where a job's tasks are deployed in the same pipelined region, and users can enable it in their clusters with a specific config. Could you assign this issue to me? Thanks > Support listen and notify mechanism for PartitionRequest > > > Key: FLINK-25055 > URL: https://issues.apache.org/jira/browse/FLINK-25055 > Project: Flink > Issue Type: Improvement > Components: Runtime / Network >Affects Versions: 1.14.0, 1.12.5, 1.13.3 >Reporter: Shammon >Priority: Major > > We submit batch jobs to a Flink session cluster with the eager scheduler for OLAP. > JM deploys subtasks to TaskManagers independently, and the downstream subtasks > may start before the upstream ones are ready. The downstream subtask sends > PartitionRequest to upstream ones, and may receive PartitionNotFoundException > from them. Then it will retry to send PartitionRequest after a few ms until > timeout. > The current approach raises two problems. First, there will be too many retry > PartitionRequest messages. Each downstream subtask will send PartitionRequest > to all its upstream subtasks and the total number of messages will be O(N*N), > where N is the parallelism of subtasks. Secondly, the interval between > polling retries will increase the delay for upstream and downstream tasks to > confirm PartitionRequest. > We want to support a listen and notify mechanism for PartitionRequest when the > job needs no failover. Upstream TaskManager will add the PartitionRequest to > a listen list with a timeout checker, and notify the request when the task > registers its partition in the TaskManager. > [~nkubicek] I noticed that your scenario of using Flink is similar to ours. > What do you think? And hope to hear from you [~trohrmann] THX -- This message was sent by Atlassian Jira (v8.20.1#820001)
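
The listen-and-notify idea described in the issue can be illustrated with a minimal sketch. This is not Flink code: the class `PartitionRequestListenerRegistry`, its `Listener` interface, and the use of plain string partition ids are all hypothetical names chosen for illustration. It only shows the shape of the proposal: a request for a partition that is not yet registered is parked with a timeout instead of being answered with an error, and it is notified once the partition registers.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

/** Hypothetical sketch of a listen-and-notify registry; none of these names are Flink APIs. */
final class PartitionRequestListenerRegistry implements AutoCloseable {

    /** Callback of a parked request (hypothetical interface). */
    interface Listener {
        void onPartitionReady(String partitionId);

        void onTimeout(String partitionId);
    }

    /** A parked request together with its timeout task. */
    private static final class Pending {
        final Listener listener;
        ScheduledFuture<?> timeoutTask;

        Pending(Listener listener) {
            this.listener = listener;
        }
    }

    private final Set<String> readyPartitions = new HashSet<>();
    private final Map<String, List<Pending>> pending = new HashMap<>();
    private final ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();

    /** Downstream side: answer immediately if the partition exists, otherwise park the request. */
    synchronized void requestPartition(String partitionId, Listener listener, long timeoutMillis) {
        if (readyPartitions.contains(partitionId)) {
            listener.onPartitionReady(partitionId);
            return;
        }
        Pending p = new Pending(listener);
        pending.computeIfAbsent(partitionId, k -> new ArrayList<>()).add(p);
        // The timeout checker removes the parked request if the partition never shows up.
        p.timeoutTask =
                timer.schedule(() -> expire(partitionId, p), timeoutMillis, TimeUnit.MILLISECONDS);
    }

    /** Upstream side: called when a task registers its partition; notifies all parked requests. */
    synchronized void registerPartition(String partitionId) {
        readyPartitions.add(partitionId);
        List<Pending> waiting = pending.remove(partitionId);
        if (waiting == null) {
            return;
        }
        for (Pending p : waiting) {
            p.timeoutTask.cancel(false);
            p.listener.onPartitionReady(partitionId);
        }
    }

    /** Runs on the timer thread; fires the timeout only if the request is still parked. */
    private synchronized void expire(String partitionId, Pending p) {
        List<Pending> waiting = pending.get(partitionId);
        if (waiting != null && waiting.remove(p)) {
            if (waiting.isEmpty()) {
                pending.remove(partitionId);
            }
            p.listener.onTimeout(partitionId);
        }
    }

    /** Number of partitions that currently have parked requests. */
    synchronized int pendingPartitionCount() {
        return pending.size();
    }

    @Override
    public void close() {
        timer.shutdownNow();
    }
}
```

In this sketch each downstream request produces one message and one notification rather than a series of polling retries. Callbacks are invoked while holding the registry lock, which keeps the example short; a real implementation would probably hand them off to another thread.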

[jira] [Commented] (FLINK-25049) sql-client.sh execute batch job in async mode failed with java.io.FileNotFoundException

[

https://issues.apache.org/jira/browse/FLINK-25049?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450217#comment-17450217

]

Jark Wu commented on FLINK-25049:

-

[~macdoor615], could you share the DDL of the tables used in your DMLs?

cc [~lzljs3620320]

> sql-client.sh execute batch job in async mode failed with

> java.io.FileNotFoundException

> ---

>

> Key: FLINK-25049

> URL: https://issues.apache.org/jira/browse/FLINK-25049

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem, Table SQL / Ecosystem

>Affects Versions: 1.14.0

>Reporter: macdoor615

>Priority: Major

>

> Execute multiple simple SQL statements from a single SQL file, for example:
>
> {code:java}
> insert overwrite bnpmp.p_biz_hcd_5m select * from bnpmp.p_biz_hcd_5m where dt='2021-11-22';
> insert overwrite bnpmp.p_biz_hjr_5m select * from bnpmp.p_biz_hjr_5m where dt='2021-11-22';
> insert overwrite bnpmp.p_biz_hswtv_5m select * from bnpmp.p_biz_hswtv_5m where dt='2021-11-22';
> ...
> {code}
>
> With
> {code:java}
> SET table.dml-sync = true;{code}
> the statements execute properly.
> With
> {code:java}
> SET table.dml-sync = false;{code}
> some SQL jobs fail with the following error:
>
> {code:java}
> Caused by: java.lang.Exception: Failed to finalize execution on master
>     ... 37 more
> Caused by: org.apache.flink.table.api.TableException: Exception in finalizeGlobal
>     at org.apache.flink.table.filesystem.FileSystemOutputFormat.finalizeGlobal(FileSystemOutputFormat.java:91)
>     at org.apache.flink.runtime.jobgraph.InputOutputFormatVertex.finalizeOnMaster(InputOutputFormatVertex.java:148)
>     at org.apache.flink.runtime.executiongraph.DefaultExecutionGraph.vertexFinished(DefaultExecutionGraph.java:1086)
>     ... 36 more
> Caused by: java.io.FileNotFoundException: File hdfs://service1/user/hive/warehouse/bnpmp.db/p_snmp_f5_traffic_5m/.staging_1637821441497 does not exist.
>     at org.apache.hadoop.hdfs.DistributedFileSystem.listStatusInternal(DistributedFileSystem.java:901)
>     at org.apache.hadoop.hdfs.DistributedFileSystem.access$600(DistributedFileSystem.java:112)
>     at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:961)
>     at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:958)
>     at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
>     at org.apache.hadoop.hdfs.DistributedFileSystem.listStatus(DistributedFileSystem.java:958)
>     at org.apache.flink.hive.shaded.fs.hdfs.HadoopFileSystem.listStatus(HadoopFileSystem.java:170)
>     at org.apache.flink.table.filesystem.PartitionTempFileManager.listTaskTemporaryPaths(PartitionTempFileManager.java:104)
>     at org.apache.flink.table.filesystem.FileSystemCommitter.commitPartitions(FileSystemCommitter.java:77)
>     at org.apache.flink.table.filesystem.FileSystemOutputFormat.finalizeGlobal(FileSystemOutputFormat.java:89)
>     ... 38 more
> {code}

>

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (FLINK-25049) sql-client.sh execute batch job in async mode failed with java.io.FileNotFoundException

[

https://issues.apache.org/jira/browse/FLINK-25049?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jark Wu updated FLINK-25049:

Component/s: Table SQL / Ecosystem

> sql-client.sh execute batch job in async mode failed with

> java.io.FileNotFoundException

> ---

>

> Key: FLINK-25049

> URL: https://issues.apache.org/jira/browse/FLINK-25049

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem, Table SQL / Ecosystem

>Affects Versions: 1.14.0

>Reporter: macdoor615

>Priority: Major

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (FLINK-25049) sql-client.sh execute batch job in async mode failed with java.io.FileNotFoundException

[

https://issues.apache.org/jira/browse/FLINK-25049?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jark Wu updated FLINK-25049:

Environment: (was: {code:java}

//代码占位符

{code})

> sql-client.sh execute batch job in async mode failed with

> java.io.FileNotFoundException

> ---

>

> Key: FLINK-25049

> URL: https://issues.apache.org/jira/browse/FLINK-25049

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem

>Affects Versions: 1.14.0

>Reporter: macdoor615

>Priority: Major

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Updated] (FLINK-25049) sql-client.sh execute batch job in async mode failed with java.io.FileNotFoundException

[

https://issues.apache.org/jira/browse/FLINK-25049?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jark Wu updated FLINK-25049:

Component/s: Connectors / FileSystem

(was: Table SQL / Client)

> sql-client.sh execute batch job in async mode failed with

> java.io.FileNotFoundException

> ---

>

> Key: FLINK-25049

> URL: https://issues.apache.org/jira/browse/FLINK-25049

> Project: Flink

> Issue Type: Bug

> Components: Connectors / FileSystem

>Affects Versions: 1.14.0

> Environment: {code:java}

> //代码占位符

> {code}

>Reporter: macdoor615

>Priority: Major

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Commented] (FLINK-25054) Improve exception message for unsupported hashLength for SHA2 function

[ https://issues.apache.org/jira/browse/FLINK-25054?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450215#comment-17450215 ] Jark Wu commented on FLINK-25054: - Yes, I think the exception message should be improved. > Improve exception message for unsupported hashLength for SHA2 function > -- > > Key: FLINK-25054 > URL: https://issues.apache.org/jira/browse/FLINK-25054 > Project: Flink > Issue Type: Improvement > Components: Table SQL / API >Affects Versions: 1.12.3 >Reporter: chenbowen >Priority: Major > Attachments: image-2021-11-25-16-59-56-699.png > > Original Estimate: 1h > Remaining Estimate: 1h > > 【exception sql】 > SELECT > SHA2(, 128) > FROM > > 【effect】 > When the SQL is long, it is hard to tell where the problem is. > 【reason】 > The built-in function SHA2 does not support a hashLength of "128", but I could not > tell that from the exception. > 【Exception log】 > !image-2021-11-25-16-59-56-699.png! -- This message was sent by Atlassian Jira (v8.20.1#820001)
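
As an illustration of the kind of message the issue asks for, here is a minimal sketch of a hashLength check. It is not the Flink implementation: the class `Sha2HashLengthValidator` and its method are hypothetical, and the list of supported bit lengths (224, 256, 384, 512) is an assumption based on the SHA-2 variants named in the Flink documentation for `SHA2`.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical validator; not part of Flink. */
final class Sha2HashLengthValidator {

    // Assumed set of supported bit lengths (SHA-224, SHA-256, SHA-384, SHA-512).
    static final List<Integer> SUPPORTED_HASH_LENGTHS = Arrays.asList(224, 256, 384, 512);

    /** Returns the hash length unchanged, or fails with a message that names the bad value. */
    static int validate(int hashLength) {
        if (!SUPPORTED_HASH_LENGTHS.contains(hashLength)) {
            throw new IllegalArgumentException(
                    "Unsupported hashLength "
                            + hashLength
                            + " for SHA2; supported values are "
                            + SUPPORTED_HASH_LENGTHS
                            + ".");
        }
        return hashLength;
    }
}
```

With a check like this, a call such as `Sha2HashLengthValidator.validate(128)` fails with a message that states both the offending value and the accepted ones, which is easier to locate in a long SQL statement than a generic error.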

[jira] [Updated] (FLINK-25054) Improve exception message for unsupported hashLength for SHA2 function

[ https://issues.apache.org/jira/browse/FLINK-25054?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jark Wu updated FLINK-25054: Language: (was: JAVA) > Improve exception message for unsupported hashLength for SHA2 function > -- > > Key: FLINK-25054 > URL: https://issues.apache.org/jira/browse/FLINK-25054 > Project: Flink > Issue Type: Improvement > Components: Table SQL / API >Affects Versions: 1.12.3 >Reporter: chenbowen >Priority: Major > Attachments: image-2021-11-25-16-59-56-699.png > > Original Estimate: 1h > Remaining Estimate: 1h > > 【exception sql】 > SELECT > SHA2(, 128) > FROM > > 【effect】 > When the SQL is long, it is hard to tell where the problem is. > 【reason】 > The built-in function SHA2 does not support a hashLength of "128", but I could not > tell that from the exception. > 【Exception log】 > !image-2021-11-25-16-59-56-699.png! -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (FLINK-25054) Improve exception message for unsupported hashLength for SHA2 function

[ https://issues.apache.org/jira/browse/FLINK-25054?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jark Wu updated FLINK-25054: Summary: Improve exception message for unsupported hashLength for SHA2 function (was: flink sql System (Built-in) Functions 【SHA2】,hashLength validation Unsurpport) > Improve exception message for unsupported hashLength for SHA2 function > -- > > Key: FLINK-25054 > URL: https://issues.apache.org/jira/browse/FLINK-25054 > Project: Flink > Issue Type: Improvement > Components: Build System >Affects Versions: 1.12.3 >Reporter: chenbowen >Priority: Major > Attachments: image-2021-11-25-16-59-56-699.png > > Original Estimate: 1h > Remaining Estimate: 1h > > 【exception sql】 > SELECT > SHA2(, 128) > FROM > > 【effect】 > When the SQL is long, it is hard to tell where the problem is. > 【reason】 > The built-in function SHA2 does not support a hashLength of "128", but I could not > tell that from the exception. > 【Exception log】 > !image-2021-11-25-16-59-56-699.png! -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Updated] (FLINK-25054) Improve exception message for unsupported hashLength for SHA2 function

[ https://issues.apache.org/jira/browse/FLINK-25054?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jark Wu updated FLINK-25054: Component/s: Table SQL / API (was: Build System) > Improve exception message for unsupported hashLength for SHA2 function > -- > > Key: FLINK-25054 > URL: https://issues.apache.org/jira/browse/FLINK-25054 > Project: Flink > Issue Type: Improvement > Components: Table SQL / API >Affects Versions: 1.12.3 >Reporter: chenbowen >Priority: Major > Attachments: image-2021-11-25-16-59-56-699.png > > Original Estimate: 1h > Remaining Estimate: 1h > > 【exception sql】 > SELECT > SHA2(, 128) > FROM > > 【effect】 > When the SQL is long, it is hard to tell where the problem is. > 【reason】 > The built-in function SHA2 does not support a hashLength of "128", but I could not > tell that from the exception. > 【Exception log】 > !image-2021-11-25-16-59-56-699.png! -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Commented] (FLINK-25029) Hadoop Caller Context Setting In Flink

[ https://issues.apache.org/jira/browse/FLINK-25029?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450209#comment-17450209 ] 刘方奇 commented on FLINK-25029: - Sorry to bother you. Could someone help take a look at this issue? In my opinion, the discussion has not reached a conclusion yet. [~lzljs3620320] [~arvid] [~dmvk] [~jark] > Hadoop Caller Context Setting In Flink > -- > > Key: FLINK-25029 > URL: https://issues.apache.org/jira/browse/FLINK-25029 > Project: Flink > Issue Type: Improvement > Components: Runtime / Task >Reporter: 刘方奇 >Priority: Major > > For a given HDFS operation (e.g. delete file), it's very helpful to track > which upper level job issues it. The upper level callers may be specific > Oozie tasks, MR jobs, and hive queries. One scenario is that the namenode > (NN) is abused/spammed, the operator may want to know immediately which MR > job should be blamed so that she can kill it. To this end, the caller context > contains at least the application-dependent "tracking id". > The above is the main purpose of the Caller Context. The HDFS client sets the Caller > Context, then the name node records it in the audit log for later analysis. > Spark and Hive already set the Caller Context to meet the HDFS job audit > requirement. > In my company, Flink jobs often cause problems for HDFS, so we implemented this > to prevent some of those cases. > If the feature is general enough, should we support it? If so, I can submit a > PR for this. -- This message was sent by Atlassian Jira (v8.20.1#820001)
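
To make the "tracking id" idea above concrete, here is a minimal sketch of how a caller-context string for a Flink task might be built. The class `FlinkCallerContext`, the `flink_job_..._task_...` layout, and the length limit parameter are all hypothetical; the issue does not specify a format. The sketch deliberately avoids any Hadoop dependency and only produces the string.

```java
/** Hypothetical helper; the context layout is an assumption, not a Flink or Hadoop convention. */
final class FlinkCallerContext {

    /**
     * Builds a tracking id from the job id and task name, truncated to maxLength characters
     * because the name node limits how much caller context it records.
     */
    static String build(String jobId, String taskName, int maxLength) {
        String context = "flink_job_" + jobId + "_task_" + taskName;
        return context.length() <= maxLength ? context : context.substring(0, maxLength);
    }
}
```

In a real integration the resulting string would be handed to Hadoop's `org.apache.hadoop.ipc.CallerContext` (for example via `CallerContext.setCurrent(new CallerContext.Builder(context).build())`) on the thread that issues the HDFS calls, so that it appears in the name node audit log; that step is left out here because it needs the Hadoop client on the classpath.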

[GitHub] [flink-ml] yunfengzhou-hub commented on a change in pull request #32: [FLINK-24817] Add Naive Bayes implementation

yunfengzhou-hub commented on a change in pull request #32:

URL: https://github.com/apache/flink-ml/pull/32#discussion_r758054855

##

File path:

flink-ml-lib/src/main/java/org/apache/flink/ml/classification/naivebayes/NaiveBayesModelData.java

##

@@ -0,0 +1,148 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.ml.classification.naivebayes;

+

+import org.apache.flink.api.common.functions.MapFunction;

+import org.apache.flink.api.common.serialization.Encoder;

+import org.apache.flink.api.common.typeinfo.TypeInformation;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.connector.file.src.reader.SimpleStreamFormat;

+import org.apache.flink.core.fs.FSDataInputStream;

+import org.apache.flink.streaming.api.datastream.DataStream;

+import org.apache.flink.table.api.Table;

+import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

+import org.apache.flink.types.Row;

+

+import com.esotericsoftware.kryo.io.Input;

+import com.esotericsoftware.kryo.io.Output;

+

+import java.io.IOException;

+import java.io.OutputStream;

+import java.io.Serializable;

+import java.util.HashMap;

+import java.util.Map;

+

+/**
+ * The model data of {@link NaiveBayesModel}.
+ *
+ * This class also provides methods to convert model data between Table and Datastream, and
+ * classes to save/load model data.
+ */
+public class NaiveBayesModelData implements Serializable {
+    public final Map[][] theta;
+    public final double[] piArray;
+    public final int[] labels;
+
+    public NaiveBayesModelData(Map[][] theta, double[] piArray, int[] labels) {
+        this.theta = theta;
+        this.piArray = piArray;
+        this.labels = labels;
+    }
+
+    /** Converts the provided modelData Datastream into corresponding Table. */
+    public static Table getModelDataTable(
+            StreamTableEnvironment tEnv, DataStream stream) {

Review comment:

Yes, that makes sense. I'll make the change.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Created] (FLINK-25089) KafkaSourceITCase.testBasicRead hangs on azure

Yun Gao created FLINK-25089: --- Summary: KafkaSourceITCase.testBasicRead hangs on azure Key: FLINK-25089 URL: https://issues.apache.org/jira/browse/FLINK-25089 Project: Flink Issue Type: Bug Components: Connectors / Kafka Affects Versions: 1.13.4 Reporter: Yun Gao https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=27180=logs=1fc6e7bf-633c-5081-c32a-9dea24b05730=80a658d1-f7f6-5d93-2758-53ac19fd5b19=6933 -- This message was sent by Atlassian Jira (v8.20.1#820001)

[jira] [Commented] (FLINK-24764) pyflink/table/tests/test_udf.py hang on Azure

[

https://issues.apache.org/jira/browse/FLINK-24764?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450202#comment-17450202

]

Yun Gao commented on FLINK-24764:

-

[https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=27181=logs=821b528f-1eed-5598-a3b4-7f748b13f261=6bb545dd-772d-5d8c-f258-f5085fba3295=22361]

> pyflink/table/tests/test_udf.py hang on Azure

> -

>

> Key: FLINK-24764

> URL: https://issues.apache.org/jira/browse/FLINK-24764

> Project: Flink

> Issue Type: Bug

> Components: API / Python

>Affects Versions: 1.14.0, 1.12.5, 1.15.0

>Reporter: Till Rohrmann

>Priority: Critical

> Labels: test-stability

> Fix For: 1.15.0, 1.14.1, 1.13.4

>

>

> {{pyflink/table/tests/test_udf.py}} seems to hang on Azure.

> {code}

> 2021-11-04T03:12:01.4537829Z py36-cython run-test: commands[3] | pytest --durations=20
> 2021-11-04T03:12:03.6955063Z = test session starts ==
> 2021-11-04T03:12:03.6957461Z platform linux -- Python 3.6.13, pytest-6.2.5, py-1.10.0, pluggy-1.0.0
> 2021-11-04T03:12:03.6959285Z cachedir: .tox/py36-cython/.pytest_cache
> 2021-11-04T03:12:03.6960653Z rootdir: /__w/1/s/flink-python
> 2021-11-04T03:12:03.6961356Z collected 690 items
> 2021-11-04T03:12:03.6961755Z
> 2021-11-04T03:12:04.6615796Z pyflink/common/tests/test_configuration.py ..[ 1%]
> 2021-11-04T03:12:04.9315499Z pyflink/common/tests/test_execution_config.py ...[ 4%]
> 2021-11-04T03:12:05.4226061Z pyflink/common/tests/test_serialization_schemas.py ... [ 5%]
> 2021-11-04T03:12:05.8920762Z pyflink/common/tests/test_typeinfo.py ... [ 5%]
> 2021-11-04T03:12:10.3843622Z pyflink/dataset/tests/test_execution_environment.py ...s.[ 6%]
> 2021-11-04T03:12:10.4385641Z pyflink/dataset/tests/test_execution_environment_completeness.py . [ 7%]
> 2021-11-04T03:12:10.5390180Z pyflink/datastream/tests/test_check_point_config.py ... [ 8%]
> 2021-11-04T03:12:20.1148835Z pyflink/datastream/tests/test_connectors.py ... [ 9%]
> 2021-11-04T03:13:12.4436977Z pyflink/datastream/tests/test_data_stream.py ... [ 13%]
> 2021-11-04T03:13:22.6815256Z [ 14%]
> 2021-11-04T03:13:22.9777981Z pyflink/datastream/tests/test_state_backend.py ..[ 16%]
> 2021-11-04T03:13:33.4281095Z pyflink/datastream/tests/test_stream_execution_environment.py .. [ 18%]
> 2021-11-04T03:13:45.3707210Z .s. [ 21%]
> 2021-11-04T03:13:45.5100419Z pyflink/datastream/tests/test_stream_execution_environment_completeness.py . [ 21%]
> 2021-11-04T03:13:45.5107357Z [ 21%]
> 2021-11-04T03:13:45.5824541Z pyflink/fn_execution/tests/test_coders.py s [ 24%]
> 2021-11-04T03:13:45.6311670Z pyflink/fn_execution/tests/test_fast_coders.py ... [ 27%]
> 2021-11-04T03:13:45.6480686Z pyflink/fn_execution/tests/test_flink_fn_execution_pb2_synced.py . [ 27%]
> 2021-11-04T03:13:48.3033527Z pyflink/fn_execution/tests/test_process_mode_boot.py ... [ 28%]
> 2021-11-04T03:13:48.3169538Z pyflink/metrics/tests/test_metric.py . [ 28%]
> 2021-11-04T03:13:48.3928810Z pyflink/ml/tests/test_ml_environment.py ... [ 29%]
> 2021-11-04T03:13:48.4381082Z pyflink/ml/tests/test_ml_environment_factory.py ... [ 29%]
> 2021-11-04T03:13:48.4696143Z pyflink/ml/tests/test_params.py . [ 31%]
> 2021-11-04T03:13:48.5140301Z pyflink/ml/tests/test_pipeline.py [ 32%]
> 2021-11-04T03:13:50.2573824Z pyflink/ml/tests/test_pipeline_it_case.py ... [ 32%]
> 2021-11-04T03:13:50.3598135Z pyflink/ml/tests/test_pipeline_stage.py .. [ 32%]
> 2021-11-04T03:14:18.5397420Z pyflink/table/tests/test_aggregate.py . [ 34%]
> 2021-11-04T03:14:20.1852937Z pyflink/table/tests/test_calc.py ... [ 35%]
> 2021-11-04T03:14:21.3674525Z pyflink/table/tests/test_catalog.py [ 40%]
> 2021-11-04T03:14:22.4375814Z ... [ 46%]
> 2021-11-04T03:14:22.4966492Z

[jira] [Created] (FLINK-25088) KafkaSinkITCase failed on azure due to Container did not start correctly

Yun Gao created FLINK-25088:

---

Summary: KafkaSinkITCase failed on azure due to Container did not start correctly

Key: FLINK-25088

URL: https://issues.apache.org/jira/browse/FLINK-25088

Project: Flink

Issue Type: Bug

Components: Build System / Azure Pipelines, Connectors / Kafka

Affects Versions: 1.14.1

Reporter: Yun Gao

{code:java}

Nov 29 03:38:00 at org.junit.vintage.engine.VintageTestEngine.executeAllChildren(VintageTestEngine.java:82)

Nov 29 03:38:00 at org.junit.vintage.engine.VintageTestEngine.execute(VintageTestEngine.java:73)

Nov 29 03:38:00 at org.junit.platform.launcher.core.DefaultLauncher.execute(DefaultLauncher.java:220)

Nov 29 03:38:00 at org.junit.platform.launcher.core.DefaultLauncher.lambda$execute$6(DefaultLauncher.java:188)

Nov 29 03:38:00 at org.junit.platform.launcher.core.DefaultLauncher.withInterceptedStreams(DefaultLauncher.java:202)

Nov 29 03:38:00 at org.junit.platform.launcher.core.DefaultLauncher.execute(DefaultLauncher.java:181)

Nov 29 03:38:00 at org.junit.platform.launcher.core.DefaultLauncher.execute(DefaultLauncher.java:128)

Nov 29 03:38:00 at org.apache.maven.surefire.junitplatform.JUnitPlatformProvider.invokeAllTests(JUnitPlatformProvider.java:150)

Nov 29 03:38:00 at org.apache.maven.surefire.junitplatform.JUnitPlatformProvider.invoke(JUnitPlatformProvider.java:120)

Nov 29 03:38:00 at org.apache.maven.surefire.booter.ForkedBooter.invokeProviderInSameClassLoader(ForkedBooter.java:384)

Nov 29 03:38:00 at org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:345)

Nov 29 03:38:00 at org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:126)

Nov 29 03:38:00 at org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:418)

Nov 29 03:38:00 Caused by: org.rnorth.ducttape.RetryCountExceededException: Retry limit hit with exception

Nov 29 03:38:00 at org.rnorth.ducttape.unreliables.Unreliables.retryUntilSuccess(Unreliables.java:88)

Nov 29 03:38:00 at org.testcontainers.containers.GenericContainer.doStart(GenericContainer.java:327)

Nov 29 03:38:00 ... 33 more

Nov 29 03:38:00 Caused by: org.testcontainers.containers.ContainerLaunchException: Could not create/start container

Nov 29 03:38:00 at org.testcontainers.containers.GenericContainer.tryStart(GenericContainer.java:523)

Nov 29 03:38:00 at org.testcontainers.containers.GenericContainer.lambda$doStart$0(GenericContainer.java:329)

Nov 29 03:38:00 at org.rnorth.ducttape.unreliables.Unreliables.retryUntilSuccess(Unreliables.java:81)

Nov 29 03:38:00 ... 34 more

Nov 29 03:38:00 Caused by: java.lang.IllegalStateException: Container did not start correctly.

Nov 29 03:38:00 at org.testcontainers.containers.GenericContainer.tryStart(GenericContainer.java:461)

Nov 29 03:38:00 ... 36 more

Nov 29 03:38:00

Nov 29 03:38:01 [INFO] Running org.apache.flink.connector.kafka.sink.FlinkKafkaInternalProducerITCase

Nov 29 03:38:16 [INFO] Tests run: 1, Failures: 0, Errors: 0, Skipped: 0, Time elapsed: 14.442 s - in org.apache.flink.connector.kafka.sink.FlinkKafkaInternalProducerITCase

Nov 29 03:38:16 [INFO]

Nov 29 03:38:16 [INFO] Results:

Nov 29 03:38:16 [INFO]

Nov 29 03:38:16 [ERROR] Errors:

Nov 29 03:38:16 [ERROR] KafkaSinkITCase » ContainerLaunch Container startup failed

Nov 29 03:38:16 [INFO]

Nov 29 03:38:16 [ERROR] Tests run: 186, Failures: 0, Errors: 1, Skipped: 0

{code}

https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=27181=logs=b0097207-033c-5d9a-b48c-6d4796fbe60d=8338a7d2-16f7-52e5-f576-4b7b3071eb3d=7151

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Commented] (FLINK-24935) Python module failed to compile due to "Could not create local repository"

[ https://issues.apache.org/jira/browse/FLINK-24935?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450200#comment-17450200 ]

Yun Gao commented on FLINK-24935:

-

[https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=27179=logs=a29bcfe1-064d-50b9-354f-07802213a3c0=47ff6576-c9dc-5eab-9db8-183dcca3bede]

Hi [~chesnay], sorry, this issue still seems to pop up. Has disabling the repo

sync already taken effect?

> Python module failed to compile due to "Could not create local repository"

> --

>

> Key: FLINK-24935

> URL: https://issues.apache.org/jira/browse/FLINK-24935

> Project: Flink

> Issue Type: Bug

> Components: Build System / Azure Pipelines

> Affects Versions: 1.12.5

> Reporter: Yun Gao

> Priority: Critical

> Labels: test-stability

>

> {code:java}

> Invoking mvn with 'mvn -Dmaven.wagon.http.pool=false --settings

> /__w/1/s/tools/ci/google-mirror-settings.xml

> -Dorg.slf4j.simpleLogger.showDateTime=true

> -Dorg.slf4j.simpleLogger.dateTimeFormat=HH:mm:ss.SSS

> -Dorg.slf4j.simpleLogger.log.org.apache.maven.cli.transfer.Slf4jMavenTransferListener=warn

> --no-snapshot-updates -B -Dhadoop.version=2.8.3 -Dinclude_hadoop_aws

> -Dscala-2.11 clean deploy

> -DaltDeploymentRepository=validation_repository::default::file:/tmp/flink-validation-deployment

> -Dmaven.repo.local=/home/vsts/work/1/.m2/repository

> -Dflink.convergence.phase=install -Pcheck-convergence -Dflink.forkCount=2

> -Dflink.forkCountTestPackage=2 -Dmaven.javadoc.skip=true -U -DskipTests'

> [ERROR] Could not create local repository at /home/vsts/work/1/.m2/repository -> [Help 1]

> [ERROR]

> [ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

> [ERROR] Re-run Maven using the -X switch to enable full debug logging.

> [ERROR]

> [ERROR] For more information about the errors and possible solutions, please read the following articles:

> [ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/LocalRepositoryNotAccessibleException

> {code}

> [https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=26625=logs=a29bcfe1-064d-50b9-354f-07802213a3c0=47ff6576-c9dc-5eab-9db8-183dcca3bede]


[jira] [Commented] (FLINK-23391) KafkaSourceReaderTest.testKafkaSourceMetrics fails on azure

[ https://issues.apache.org/jira/browse/FLINK-23391?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17450198#comment-17450198 ]

Yun Gao commented on FLINK-23391:

-

[https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=27178=logs=c5f0071e-1851-543e-9a45-9ac140befc32=15a22db7-8faa-5b34-3920-d33c9f0ca23c=6861]

The CI is running against c40bbf1e87cc62880905cd567dca05a4e15aff35, which seems

to contain c461338d0009a164d9c236aeab691677384d1d9f on master. [~renqs], could

you take another look?

> KafkaSourceReaderTest.testKafkaSourceMetrics fails on azure

> ---

>

> Key: FLINK-23391

> URL: https://issues.apache.org/jira/browse/FLINK-23391

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

> Affects Versions: 1.14.0, 1.13.1

> Reporter: Xintong Song

> Assignee: Qingsheng Ren

> Priority: Major

> Labels: pull-request-available, test-stability

> Fix For: 1.15.0, 1.14.1, 1.13.4

>

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=20456=logs=c5612577-f1f7-5977-6ff6-7432788526f7=53f6305f-55e6-561c-8f1e-3a1dde2c77df=6783

> {code}

> Jul 14 23:00:26 [ERROR] Tests run: 10, Failures: 0, Errors: 1, Skipped: 0, Time elapsed: 99.93 s <<< FAILURE! - in org.apache.flink.connector.kafka.source.reader.KafkaSourceReaderTest

> Jul 14 23:00:26 [ERROR] testKafkaSourceMetrics(org.apache.flink.connector.kafka.source.reader.KafkaSourceReaderTest) Time elapsed: 60.225 s <<< ERROR!

> Jul 14 23:00:26 java.util.concurrent.TimeoutException: Offsets are not committed successfully. Dangling offsets: {15213={KafkaSourceReaderTest-0=OffsetAndMetadata{offset=10, leaderEpoch=null, metadata=''}}}

> Jul 14 23:00:26 at org.apache.flink.core.testutils.CommonTestUtils.waitUtil(CommonTestUtils.java:210)

> Jul 14 23:00:26 at org.apache.flink.connector.kafka.source.reader.KafkaSourceReaderTest.testKafkaSourceMetrics(KafkaSourceReaderTest.java:275)

> Jul 14 23:00:26 at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> Jul 14 23:00:26 at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> Jul 14 23:00:26 at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> Jul 14 23:00:26 at java.lang.reflect.Method.invoke(Method.java:498)

> Jul 14 23:00:26 at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

> Jul 14 23:00:26 at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> Jul 14 23:00:26 at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

> Jul 14 23:00:26 at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> Jul 14 23:00:26 at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> Jul 14 23:00:26 at org.junit.rules.ExpectedException$ExpectedExceptionStatement.evaluate(ExpectedException.java:239)

> Jul 14 23:00:26 at org.apache.flink.util.TestNameProvider$1.evaluate(TestNameProvider.java:45)

> Jul 14 23:00:26 at org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:55)

> Jul 14 23:00:26 at org.junit.rules.RunRules.evaluate(RunRules.java:20)

> Jul 14 23:00:26 at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)

> Jul 14 23:00:26 at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)

> Jul 14 23:00:26 at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)

> Jul 14 23:00:26 at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)

> Jul 14 23:00:26 at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)

> Jul 14 23:00:26 at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)

> Jul 14 23:00:26 at