[GitHub] [flink] MonsterChenzhuo commented on a change in pull request #17994: [FLINK-24456][Connectors / Kafka,Table SQL / Ecosystem] Support bound…

MonsterChenzhuo commented on a change in pull request #17994:

URL: https://github.com/apache/flink/pull/17994#discussion_r782790555

##

File path:

flink-connectors/flink-connector-kafka/src/main/java/org/apache/flink/streaming/connectors/kafka/table/KafkaConnectorOptions.java

##

@@ -158,6 +158,13 @@
                     .withDescription(
                             "Optional offsets used in case of \"specific-offsets\" startup mode");
 
+    public static final ConfigOption<String> SCAN_BOUNDED_SPECIFIC_OFFSETS =
+            ConfigOptions.key("scan.bounded.specific-offsets")
+                    .stringType()
+                    .noDefaultValue()
+                    .withDescription(
+                            "When all partitions have reached their stop offsets, the source will exit");
+
     public static final ConfigOption SCAN_STARTUP_TIMESTAMP_MILLIS =

Review comment:

I will make changes as soon as possible

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org
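The new `scan.bounded.specific-offsets` option in the diff above is a plain string, and the diff does not show the format it expects. The sketch below assumes the option reuses the `partition:P,offset:O;...` format already documented for `scan.startup.specific-offsets`. It parses that value and checks the "all partitions have reached their stop offsets" exit condition. `SpecificOffsetsParser`, `parse` and `allPartitionsFinished` are hypothetical names, not Flink APIs. Treating the stop offset as exclusive is also an assumption.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical helper, not a Flink API. Assumes the option value uses the
 * "partition:P,offset:O;..." format of scan.startup.specific-offsets.
 */
class SpecificOffsetsParser {

    /** Parses e.g. "partition:0,offset:42;partition:1,offset:300" into {0=42, 1=300}. */
    static Map<Integer, Long> parse(String value) {
        Map<Integer, Long> offsets = new LinkedHashMap<>();
        for (String entry : value.split(";")) {
            String[] fields = entry.trim().split(",");
            if (fields.length != 2
                    || !fields[0].trim().startsWith("partition:")
                    || !fields[1].trim().startsWith("offset:")) {
                throw new IllegalArgumentException("Invalid offset entry: '" + entry + "'");
            }
            int partition =
                    Integer.parseInt(fields[0].trim().substring("partition:".length()).trim());
            long offset = Long.parseLong(fields[1].trim().substring("offset:".length()).trim());
            offsets.put(partition, offset);
        }
        return offsets;
    }

    /**
     * Returns true once every partition's next-to-read offset has reached its stop offset.
     * Treating the stop offset as exclusive is an assumption of this sketch.
     */
    static boolean allPartitionsFinished(
            Map<Integer, Long> stopOffsets, Map<Integer, Long> nextOffsets) {
        for (Map.Entry<Integer, Long> stop : stopOffsets.entrySet()) {
            Long next = nextOffsets.get(stop.getKey());
            if (next == null || next < stop.getValue()) {
                return false; // this partition still has records before its stop offset
            }
        }
        return true; // a bounded source could exit now
    }

    public static void main(String[] args) {
        Map<Integer, Long> stop = parse("partition:0,offset:42;partition:1,offset:300");
        System.out.println(stop); // {0=42, 1=300}
    }
}
```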

[jira] [Commented] (FLINK-25582) flink sql kafka source cannot special custom parallelism

[ https://issues.apache.org/jira/browse/FLINK-25582?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17474331#comment-17474331 ]

venn wu commented on FLINK-25582:

[~MartijnVisser] Thanks for the reminder. I have added a description to the issue; please check again.

> flink sql kafka source cannot special custom parallelism

> Key: FLINK-25582

> URL: https://issues.apache.org/jira/browse/FLINK-25582

> Project: Flink

> Issue Type: Improvement

> Components: Connectors / Kafka, Table SQL / API

> Affects Versions: 1.13.0, 1.14.0

> Reporter: venn wu

> Priority: Minor

> Labels: pull-request-available

> when use flink sql api, all operator have same parallelism, but in some times we want specify the source / sink parallelism for kafka source, i noticed the kafka sink already have parameter "sink.parallelism" to specify the sink parallelism, but kafka source no, so we want flink sql api, have a parameter to specify the kafka source parallelism like sink.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Commented] (FLINK-25615) FlinkKafkaProducer fail to correctly migrate pre Flink 1.9 state

[

https://issues.apache.org/jira/browse/FLINK-25615?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17474328#comment-17474328

]

Martijn Visser commented on FLINK-25615:

Good morning [~Matthias Schwalbe] :)

Thanks for the extra info!

> FlinkKafkaProducer fail to correctly migrate pre Flink 1.9 state

>

>

> Key: FLINK-25615

> URL: https://issues.apache.org/jira/browse/FLINK-25615

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.9.0

>Reporter: Matthias Schwalbe

>Priority: Major

>

> I've found an unnoticed error in FlinkKafkaProducer when migrating from pre-Flink 1.9 state to versions starting with Flink 1.9:

> * the operator state for next-transactional-id-hint should be deleted and replaced by the operator state next-transactional-id-hint-v2; however,

> * the operator state next-transactional-id-hint is never deleted

> * see here [1]:

> {quote} if (context.getOperatorStateStore()
>         .getRegisteredStateNames()
>         .contains(NEXT_TRANSACTIONAL_ID_HINT_DESCRIPTOR)) {
>     migrateNextTransactionalIdHindState(context);
> }{quote}

> * migrateNextTransactionalIdHindState is never called, because the condition can never become true:

> ** getRegisteredStateNames returns a set of String, whereas NEXT_TRANSACTIONAL_ID_HINT_DESCRIPTOR is a ListStateDescriptor (type mismatch)

> The effect is:

> * because NEXT_TRANSACTIONAL_ID_HINT_DESCRIPTOR is for a UnionListState, and

> * the state is not cleared,

> * each time the job restarts from a savepoint or checkpoint, the size of this state is multiplied by the parallelism

> * then, because each entry leaves an offset in the metadata, akka.framesize eventually becomes too small, even before we run out of memory

>

> The breaking change was introduced in commit 70fa80e3862b367be22b593db685f9898a2838ef

>

> A simple fix would be to change the code to:

> {quote} if (context.getOperatorStateStore()
>         .getRegisteredStateNames()
>         .contains(NEXT_TRANSACTIONAL_ID_HINT_DESCRIPTOR.getName())) {
>     migrateNextTransactionalIdHindState(context);
> }
> {quote}

>

> Although FlinkKafkaProducer is marked as deprecated, it is probably here to stay for a while.

>

> Greetings

> Matthias (Thias) Schwalbe

>

> [1]

> https://github.com/apache/flink/blob/d7cf2c10f8d4fba81173854cbd8be27c657c7c7f/flink-connectors/flink-connector-kafka/src/main/java/org/apache/flink/streaming/connectors/kafka/FlinkKafkaProducer.java#L1167-L1171

>
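The type mismatch reported in FLINK-25615 compiles without error because `Set.contains` accepts any `Object`, so the check silently returns `false`. The self-contained sketch below reproduces the behaviour with plain Java collections. `FakeStateDescriptor` and `StateNameLookup` are hypothetical stand-ins for Flink's `ListStateDescriptor` and the producer code. Only the state names come from the report.

```java
import java.util.HashSet;
import java.util.Set;

/** Hypothetical stand-in for Flink's ListStateDescriptor: only the name matters here. */
class FakeStateDescriptor {
    private final String name;

    FakeStateDescriptor(String name) {
        this.name = name;
    }

    String getName() {
        return name;
    }
}

class StateNameLookup {
    static final FakeStateDescriptor NEXT_TRANSACTIONAL_ID_HINT_DESCRIPTOR =
            new FakeStateDescriptor("next-transactional-id-hint");

    /** Mirrors the buggy check: compiles, but a String set never contains a descriptor. */
    static boolean buggyCheck(Set<String> registeredStateNames) {
        return registeredStateNames.contains(NEXT_TRANSACTIONAL_ID_HINT_DESCRIPTOR);
    }

    /** Mirrors the proposed fix: look up the descriptor's name instead. */
    static boolean fixedCheck(Set<String> registeredStateNames) {
        return registeredStateNames.contains(NEXT_TRANSACTIONAL_ID_HINT_DESCRIPTOR.getName());
    }

    public static void main(String[] args) {
        Set<String> names = new HashSet<>();
        names.add("next-transactional-id-hint");
        names.add("next-transactional-id-hint-v2");
        System.out.println(buggyCheck(names)); // false: migration would never run
        System.out.println(fixedCheck(names)); // true
    }
}
```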


[jira] [Updated] (FLINK-25582) flink sql kafka source cannot special custom parallelism

[ https://issues.apache.org/jira/browse/FLINK-25582?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

venn wu updated FLINK-25582:

Description: when use flink sql api, all operator have same parallelism, but in some times we want specify the source / sink parallelism for kafka source, i noticed the kafka sink already have parameter "sink.parallelism" to specify the sink parallelism, but kafka source no, so we want flink sql api, have a parameter to specify the kafka source parallelism like sink.

(was: when use flink sql api, all operator have same parallelism, but in some times we want specify the source / sink parallelism for kafka source, i notices the kafka sink already have parameter "sink.parallelism" to specify the sink parallelism, but kafka source no, so we want flink sql api, have a parameter to specify the kafka source parallelism like sink.)

> flink sql kafka source cannot special custom parallelism

> Key: FLINK-25582

> URL: https://issues.apache.org/jira/browse/FLINK-25582

> Project: Flink

> Issue Type: Improvement

> Components: Connectors / Kafka, Table SQL / API

> Affects Versions: 1.13.0, 1.14.0

> Reporter: venn wu

> Priority: Minor

> Labels: pull-request-available

> when use flink sql api, all operator have same parallelism, but in some times we want specify the source / sink parallelism for kafka source, i noticed the kafka sink already have parameter "sink.parallelism" to specify the sink parallelism, but kafka source no, so we want flink sql api, have a parameter to specify the kafka source parallelism like sink.

[jira] [Updated] (FLINK-25582) flink sql kafka source cannot special custom parallelism

[ https://issues.apache.org/jira/browse/FLINK-25582?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

venn wu updated FLINK-25582:

Description: when use flink sql api, all operator have same parallelism, but in some times we want specify the source / sink parallelism for kafka source, i notices the kafka sink already have parameter "sink.parallelism" to specify the sink parallelism, but kafka source no, so we want flink sql api, have a parameter to specify the kafka source parallelism like sink.

(was: costom flink sql kafka source parallelism)

> flink sql kafka source cannot special custom parallelism

> Key: FLINK-25582

> URL: https://issues.apache.org/jira/browse/FLINK-25582

> Project: Flink

> Issue Type: Improvement

> Components: Connectors / Kafka, Table SQL / API

> Affects Versions: 1.13.0, 1.14.0

> Reporter: venn wu

> Priority: Minor

> Labels: pull-request-available

> when use flink sql api, all operator have same parallelism, but in some times we want specify the source / sink parallelism for kafka source, i notices the kafka sink already have parameter "sink.parallelism" to specify the sink parallelism, but kafka source no, so we want flink sql api, have a parameter to specify the kafka source parallelism like sink.

[jira] [Commented] (FLINK-25070) FLIP-195: Improve the name and structure of vertex and operator name for job

[ https://issues.apache.org/jira/browse/FLINK-25070?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17474326#comment-17474326 ]

Martijn Visser commented on FLINK-25070:

[~wenlong.lwl] Does your FLIP also supersede https://issues.apache.org/jira/browse/FLINK-20375?

> FLIP-195: Improve the name and structure of vertex and operator name for job

> Key: FLINK-25070

> URL: https://issues.apache.org/jira/browse/FLINK-25070

> Project: Flink

> Issue Type: Improvement

> Components: API / DataStream, Runtime / Web Frontend, Table SQL / Runtime

> Reporter: Wenlong Lyu

> Assignee: Wenlong Lyu

> Priority: Major

> Fix For: 1.15.0

> this is an umbrella issue tracking the improvement of operator/vertex names in flink:

> https://cwiki.apache.org/confluence/display/FLINK/FLIP-195%3A+Improve+the+name+and+structure+of+vertex+and+operator+name+for+job

[jira] [Commented] (FLINK-25095) Case when would be translated into different expression in Hive dialect and default dialect

[

https://issues.apache.org/jira/browse/FLINK-25095?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17474325#comment-17474325

]

Shuo Cheng commented on FLINK-25095:

Similar issue: FLINK-20765

> Case when would be translated into different expression in Hive dialect and

> default dialect

> ---

>

> Key: FLINK-25095

> URL: https://issues.apache.org/jira/browse/FLINK-25095

> Project: Flink

> Issue Type: Sub-task

> Components: Connectors / Hive, Table SQL / Planner

>Affects Versions: 1.13.0, 1.14.0

>Reporter: xiangqiao

>Priority: Major

> Labels: pull-request-available

>

> When we {*}use the blink planner{*}'s *batch mode* and set the {*}hive dialect{*}, the following exception is reported when a *subquery field* is used in a {*}case when{*} expression.

> {code:java}
> org.apache.flink.table.planner.codegen.CodeGenException: Mismatch of function's argument data type 'BOOLEAN NOT NULL' and actual argument type 'BOOLEAN'.
> at org.apache.flink.table.planner.codegen.calls.BridgingFunctionGenUtil$$anonfun$verifyArgumentTypes$1.apply(BridgingFunctionGenUtil.scala:326)
> at org.apache.flink.table.planner.codegen.calls.BridgingFunctionGenUtil$$anonfun$verifyArgumentTypes$1.apply(BridgingFunctionGenUtil.scala:323)
> at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)
> at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)
> at org.apache.flink.table.planner.codegen.calls.BridgingFunctionGenUtil$.verifyArgumentTypes(BridgingFunctionGenUtil.scala:323)
> at org.apache.flink.table.planner.codegen.calls.BridgingFunctionGenUtil$.generateFunctionAwareCallWithDataType(BridgingFunctionGenUtil.scala:98)
> at org.apache.flink.table.planner.codegen.calls.BridgingFunctionGenUtil$.generateFunctionAwareCall(BridgingFunctionGenUtil.scala:65)
> at org.apache.flink.table.planner.codegen.calls.BridgingSqlFunctionCallGen.generate(BridgingSqlFunctionCallGen.scala:73)
> at org.apache.flink.table.planner.codegen.ExprCodeGenerator.generateCallExpression(ExprCodeGenerator.scala:811)
> at org.apache.flink.table.planner.codegen.ExprCodeGenerator.visitCall(ExprCodeGenerator.scala:501)
> at org.apache.flink.table.planner.codegen.ExprCodeGenerator.visitCall(ExprCodeGenerator.scala:56)
> at org.apache.calcite.rex.RexCall.accept(RexCall.java:174)
> at org.apache.flink.table.planner.codegen.ExprCodeGenerator.generateExpression(ExprCodeGenerator.scala:155)
> at org.apache.flink.table.planner.codegen.CalcCodeGenerator$$anonfun$2.apply(CalcCodeGenerator.scala:152)
> at org.apache.flink.table.planner.codegen.CalcCodeGenerator$$anonfun$2.apply(CalcCodeGenerator.scala:152)
> at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
> at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
> at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)
> at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)
> at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)
> at scala.collection.AbstractTraversable.map(Traversable.scala:104)
> at org.apache.flink.table.planner.codegen.CalcCodeGenerator$.produceProjectionCode$1(CalcCodeGenerator.scala:152)
> at org.apache.flink.table.planner.codegen.CalcCodeGenerator$.generateProcessCode(CalcCodeGenerator.scala:177)
> at org.apache.flink.table.planner.codegen.CalcCodeGenerator$.generateCalcOperator(CalcCodeGenerator.scala:50)
> at org.apache.flink.table.planner.codegen.CalcCodeGenerator.generateCalcOperator(CalcCodeGenerator.scala)
> at org.apache.flink.table.planner.plan.nodes.exec.common.CommonExecCalc.translateToPlanInternal(CommonExecCalc.java:95)
> at org.apache.flink.table.planner.plan.nodes.exec.ExecNodeBase.translateToPlan(ExecNodeBase.java:134)
> at org.apache.flink.table.planner.plan.nodes.exec.ExecEdge.translateToPlan(ExecEdge.java:250)
> at org.apache.flink.table.planner.plan.nodes.exec.batch.BatchExecHashAggregate.translateToPlanInternal(BatchExecHashAggregate.java:84)
> at org.apache.flink.table.planner.plan.nodes.exec.ExecNodeBase.translateToPlan(ExecNodeBase.java:134)
> at org.apache.flink.table.planner.plan.nodes.exec.ExecEdge.translateToPlan(ExecEdge.java:250)
> at org.apache.flink.table.planner.plan.nodes.exec.batch.BatchExecExchange.translateToPlanInternal(BatchExecExchange.java:103)
> at org.apache.flink.table.planner.plan.nodes.exec.ExecNodeBase.translateToPlan(ExecNodeBase.java:134)
> at org.apache.flink.table.planner.plan.nodes.exec.ExecEdge.translateToPlan(ExecEdge.java:250)

>

[GitHub] [flink-table-store] JingsongLi commented on a change in pull request #2: [FLINK-25625] Introduce FileFormat

JingsongLi commented on a change in pull request #2:

URL: https://github.com/apache/flink-table-store/pull/2#discussion_r782782673

##

File path:

pom.xml

##

@@ -444,7 +439,7 @@ under the License.

- com.fasterxml.jackson*:*:(,2.12.0]

Review comment:

flink-avro uses Jackson 2.11 (from Avro).

[GitHub] [flink-table-store] JingsongLi commented on a change in pull request #2: [FLINK-25625] Introduce FileFormat

JingsongLi commented on a change in pull request #2:

URL: https://github.com/apache/flink-table-store/pull/2#discussion_r782782354

##

File path:

pom.xml

##

@@ -153,12 +154,6 @@ under the License.

1.3.9

-

-org.apache.flink

-flink-shaded-jackson

Review comment:

Useless dependency.

[GitHub] [flink] ruanhang1993 commented on a change in pull request #17994: [FLINK-24456][Connectors / Kafka,Table SQL / Ecosystem] Support bound…

ruanhang1993 commented on a change in pull request #17994:

URL: https://github.com/apache/flink/pull/17994#discussion_r782782134

##

File path:

flink-connectors/flink-connector-kafka/src/main/java/org/apache/flink/streaming/connectors/kafka/table/KafkaConnectorOptions.java

##

@@ -158,6 +158,13 @@
                     .withDescription(
                             "Optional offsets used in case of \"specific-offsets\" startup mode");
 
+    public static final ConfigOption<String> SCAN_BOUNDED_SPECIFIC_OFFSETS =
+            ConfigOptions.key("scan.bounded.specific-offsets")
+                    .stringType()
+                    .noDefaultValue()
+                    .withDescription(
+                            "When all partitions have reached their stop offsets, the source will exit");
+
     public static final ConfigOption SCAN_STARTUP_TIMESTAMP_MILLIS =

Review comment:

`scan.startup.mode` has nothing to do with the stopping logic.

I suggest providing a `scan.bounded.stop-mode` table option:

If it is `timestamp`, use `TimestampOffsetsInitializer` as the `stoppingOffsetsInitializer` in the `KafkaSourceBuilder`.

If it is `specific-offsets`, use `SpecifiedOffsetsInitializer` as the `stoppingOffsetsInitializer` in the `KafkaSourceBuilder`.

Otherwise, `NoStoppingOffsetsInitializer` is used by default.

In addition, we need to validate the settings that each mode requires.
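The sketch below shows the option validation suggested in the review above. It is plain Java, so it runs without Flink on the classpath. The `scan.bounded.stop-mode` key and its `timestamp` / `specific-offsets` values come from the comment. The `scan.bounded.timestamp-millis` key and the class and enum names are hypothetical. Comments note which stopping initializer each mode would map to, but wiring the modes into `KafkaSourceBuilder` is left out.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

/** Hypothetical validation sketch; not the connector's actual implementation. */
class BoundedModeValidation {

    enum BoundedMode {
        UNBOUNDED,        // would map to NoStoppingOffsetsInitializer
        TIMESTAMP,        // would map to TimestampOffsetsInitializer
        SPECIFIC_OFFSETS  // would map to SpecifiedOffsetsInitializer
    }

    static final String STOP_MODE = "scan.bounded.stop-mode";
    static final String STOP_TIMESTAMP = "scan.bounded.timestamp-millis"; // hypothetical key
    static final String STOP_OFFSETS = "scan.bounded.specific-offsets";

    /** Resolves the mode and checks that the option it depends on is present and valid. */
    static BoundedMode resolve(Map<String, String> options) {
        String mode = options.get(STOP_MODE);
        if (mode == null) {
            return BoundedMode.UNBOUNDED; // default: the source never stops
        }
        switch (mode.toLowerCase(Locale.ROOT)) {
            case "timestamp":
                require(options, STOP_TIMESTAMP, mode);
                Long.parseLong(options.get(STOP_TIMESTAMP)); // rejects non-numeric values
                return BoundedMode.TIMESTAMP;
            case "specific-offsets":
                require(options, STOP_OFFSETS, mode);
                return BoundedMode.SPECIFIC_OFFSETS;
            default:
                throw new IllegalArgumentException("Unsupported " + STOP_MODE + ": '" + mode + "'");
        }
    }

    private static void require(Map<String, String> options, String key, String mode) {
        if (!options.containsKey(key)) {
            throw new IllegalArgumentException(
                    "'" + key + "' is required when " + STOP_MODE + " is '" + mode + "'");
        }
    }

    public static void main(String[] args) {
        Map<String, String> options = new HashMap<>();
        options.put(STOP_MODE, "specific-offsets");
        options.put(STOP_OFFSETS, "partition:0,offset:42");
        System.out.println(resolve(options)); // SPECIFIC_OFFSETS
    }
}
```

Failing fast on a missing dependent option matches the review's request to "check the corresponding setting for different modes". Where the validation lives in the table factory is a separate design choice.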


[jira] [Updated] (FLINK-25624) KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee blocked on azure pipeline

[

https://issues.apache.org/jira/browse/FLINK-25624?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Yun Gao updated FLINK-25624:

Affects Version/s: (was: 1.14.2)

> KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee blocked on azure pipeline

> --

>

> Key: FLINK-25624

> URL: https://issues.apache.org/jira/browse/FLINK-25624

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Reporter: Yun Gao

>Priority: Major

> Labels: test-stability

>

> {code:java}
> "main" #1 prio=5 os_prio=0 tid=0x7fda8c00b000 nid=0x21b2 waiting on condition [0x7fda92dd7000]
>    java.lang.Thread.State: WAITING (parking)
> at sun.misc.Unsafe.park(Native Method)
> - parking to wait for <0x826165c0> (a java.util.concurrent.CompletableFuture$Signaller)
> at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)
> at java.util.concurrent.CompletableFuture$Signaller.block(CompletableFuture.java:1707)
> at java.util.concurrent.ForkJoinPool.managedBlock(ForkJoinPool.java:3323)
> at java.util.concurrent.CompletableFuture.waitingGet(CompletableFuture.java:1742)
> at java.util.concurrent.CompletableFuture.get(CompletableFuture.java:1908)
> at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1989)
> at org.apache.flink.streaming.api.environment.LocalStreamEnvironment.execute(LocalStreamEnvironment.java:69)
> at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1951)
> at org.apache.flink.connector.kafka.sink.KafkaSinkITCase.testRecoveryWithAssertion(KafkaSinkITCase.java:335)
> at org.apache.flink.connector.kafka.sink.KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee(KafkaSinkITCase.java:190)
> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
> at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
> at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
> at java.lang.reflect.Method.invoke(Method.java:498)
> at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)
> at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
> at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)
> at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)
> at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)
> at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)
> at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)
> at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)
> {code}

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=29285=logs=c5612577-f1f7-5977-6ff6-7432788526f7=ffa8837a-b445-534e-cdf4-db364cf8235d=42106
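The thread dump above shows the test's main thread parked in `CompletableFuture.get()`, with no timeout, inside `StreamExecutionEnvironment.execute`. A job that never finishes therefore blocks until the CI timeout kills the build. One general technique is to bound the wait, so that a hang surfaces as an ordinary test failure. This is not the fix adopted for this ticket. The sketch below demonstrates it on a plain `CompletableFuture`. `BoundedWait` and `completesWithin` are hypothetical names. In recent Flink versions, `executeAsync()` returns a `JobClient` whose `getJobExecutionResult()` is a `CompletableFuture` that could be awaited the same way. Verify this against the Flink version in use.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical helper illustrating a bounded wait instead of an unbounded get(). */
class BoundedWait {

    /**
     * Waits at most timeoutMillis for the future. Returns true if it completed normally,
     * false if the wait timed out or was interrupted (the future itself keeps running).
     */
    static boolean completesWithin(CompletableFuture<?> future, long timeoutMillis) {
        try {
            future.get(timeoutMillis, TimeUnit.MILLISECONDS);
            return true;
        } catch (TimeoutException e) {
            return false; // still running: report it instead of blocking forever
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            return false;
        } catch (ExecutionException e) {
            throw new IllegalStateException("future completed exceptionally", e.getCause());
        }
    }

    public static void main(String[] args) {
        CompletableFuture<String> neverCompletes = new CompletableFuture<>();
        System.out.println(completesWithin(neverCompletes, 100)); // false
        System.out.println(completesWithin(CompletableFuture.completedFuture("done"), 100)); // true
    }
}
```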


[jira] [Updated] (FLINK-25625) Introduce FileFormat for table-store

[ https://issues.apache.org/jira/browse/FLINK-25625?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated FLINK-25625:

Labels: pull-request-available (was: )

> Introduce FileFormat for table-store

> Key: FLINK-25625

> URL: https://issues.apache.org/jira/browse/FLINK-25625

> Project: Flink

> Issue Type: Sub-task

> Components: Table Store

> Reporter: Jingsong Lee

> Assignee: Jingsong Lee

> Priority: Major

> Labels: pull-request-available

> Fix For: table-store-0.1.0

> Introduce file format class which creates reader and writer factories for specific file format.

[GitHub] [flink-table-store] JingsongLi opened a new pull request #2: [FLINK-25625] Introduce FileFormat

JingsongLi opened a new pull request #2:

URL: https://github.com/apache/flink-table-store/pull/2

Introduce file format class which creates reader and writer factories for specific file format.

[jira] [Created] (FLINK-25625) Introduce FileFormat for table-store

Jingsong Lee created FLINK-25625:

Summary: Introduce FileFormat for table-store

Key: FLINK-25625

URL: https://issues.apache.org/jira/browse/FLINK-25625

Project: Flink

Issue Type: Sub-task

Components: Table Store

Reporter: Jingsong Lee

Fix For: table-store-0.1.0

Introduce file format class which creates reader and writer factories for specific file format.
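FLINK-25625 describes a file format class that hands out reader and writer factories for one concrete format. The sketch below shows that shape in plain Java. The interface names, the method signatures, and the toy comma-separated format over in-memory lines are all assumptions for illustration. They are not the flink-table-store API introduced in PR #2.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical: turns the raw lines of one file into records. */
interface ReaderFactory<T> {
    List<T> read(List<String> lines);
}

/** Hypothetical: turns records into the raw lines of one file. */
interface WriterFactory<T> {
    List<String> write(List<T> records);
}

/** Hypothetical: one object per file format hands out a matching reader and writer factory. */
interface FileFormat<T> {
    ReaderFactory<T> createReaderFactory();

    WriterFactory<T> createWriterFactory();
}

/** Toy format: one record per line, fields separated by commas (no quoting or escaping). */
class CommaSeparatedFormat implements FileFormat<String[]> {
    @Override
    public ReaderFactory<String[]> createReaderFactory() {
        return lines -> {
            List<String[]> rows = new ArrayList<>();
            for (String line : lines) {
                rows.add(line.split(",", -1)); // -1 keeps trailing empty fields
            }
            return rows;
        };
    }

    @Override
    public WriterFactory<String[]> createWriterFactory() {
        return rows -> {
            List<String> lines = new ArrayList<>();
            for (String[] row : rows) {
                lines.add(String.join(",", row));
            }
            return lines;
        };
    }
}

class FileFormatDemo {
    public static void main(String[] args) {
        FileFormat<String[]> format = new CommaSeparatedFormat();
        List<String> file = format.createWriterFactory()
                .write(Arrays.asList(new String[] {"1", "a"}, new String[] {"2", "b"}));
        System.out.println(file); // [1,a, 2,b]
        for (String[] row : format.createReaderFactory().read(file)) {
            System.out.println(Arrays.toString(row));
        }
    }
}
```

Keeping the reader and writer factories on the same object ensures that whatever writes a file can also provide a compatible reader for it.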

[jira] [Assigned] (FLINK-25625) Introduce FileFormat for table-store

[ https://issues.apache.org/jira/browse/FLINK-25625?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jingsong Lee reassigned FLINK-25625:

Assignee: Jingsong Lee

> Introduce FileFormat for table-store

> Key: FLINK-25625

> URL: https://issues.apache.org/jira/browse/FLINK-25625

> Project: Flink

> Issue Type: Sub-task

> Components: Table Store

> Reporter: Jingsong Lee

> Assignee: Jingsong Lee

> Priority: Major

> Fix For: table-store-0.1.0

> Introduce file format class which creates reader and writer factories for specific file format.

[jira] [Closed] (FLINK-25619) Init flink-table-store repository

[ https://issues.apache.org/jira/browse/FLINK-25619?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jingsong Lee closed FLINK-25619.

Resolution: Fixed

master:

d0e4138a24ca35b944c0243f97d19c48b2501c59

96de3c96feecc1337af8f7d99aed73e8f948eb73

8098f0a604503795de347bfc27478b53d6bef285

7d01fc003c39046f52a7c1652d93e251d614869b

0a6c41aa844c30627ebcd3b0bc8c662956d9ac28

2bd3dd55c39dfded93d55b2dac42fa19cb8582e8

9d5c08c86cbda10119a0f4da679529974617d637

> Init flink-table-store repository

> Key: FLINK-25619

> URL: https://issues.apache.org/jira/browse/FLINK-25619

> Project: Flink

> Issue Type: Sub-task

> Components: Table Store

> Reporter: Jingsong Lee

> Assignee: Jingsong Lee

> Priority: Major

> Labels: pull-request-available

> Fix For: table-store-0.1.0

> Create:

> * README.md

> * NOTICE LICENSE CODE_OF_CONDUCT

> * .gitignore

> * maven tools

> * releasing tools

> * github build workflow

> * pom.xml

[jira] [Commented] (FLINK-25523) KafkaSourceITCase$KafkaSpecificTests.testTimestamp fails on AZP

[

https://issues.apache.org/jira/browse/FLINK-25523?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17474323#comment-17474323

]

Yun Gao commented on FLINK-25523:

-

https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=29270=logs=c5f0071e-1851-543e-9a45-9ac140befc32=15a22db7-8faa-5b34-3920-d33c9f0ca23c=35635

> KafkaSourceITCase$KafkaSpecificTests.testTimestamp fails on AZP

> ---

>

> Key: FLINK-25523

> URL: https://issues.apache.org/jira/browse/FLINK-25523

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.15.0

>Reporter: Till Rohrmann

>Priority: Critical

> Labels: test-stability

> Fix For: 1.15.0

>

>

> The test {{KafkaSourceITCase$KafkaSpecificTests.testTimestamp}} fails on AZP with

> {code}
> 2022-01-05T03:08:57.1647316Z java.util.concurrent.TimeoutException: The topic metadata failed to propagate to Kafka broker.
> 2022-01-05T03:08:57.1660635Z at org.apache.flink.core.testutils.CommonTestUtils.waitUtil(CommonTestUtils.java:214)
> 2022-01-05T03:08:57.1667856Z at org.apache.flink.core.testutils.CommonTestUtils.waitUtil(CommonTestUtils.java:230)
> 2022-01-05T03:08:57.1668778Z at org.apache.flink.streaming.connectors.kafka.KafkaTestEnvironmentImpl.createTestTopic(KafkaTestEnvironmentImpl.java:216)
> 2022-01-05T03:08:57.1670072Z at org.apache.flink.streaming.connectors.kafka.KafkaTestEnvironment.createTestTopic(KafkaTestEnvironment.java:98)
> 2022-01-05T03:08:57.1671078Z at org.apache.flink.streaming.connectors.kafka.KafkaTestBase.createTestTopic(KafkaTestBase.java:216)
> 2022-01-05T03:08:57.1671942Z at org.apache.flink.connector.kafka.source.KafkaSourceITCase$KafkaSpecificTests.testTimestamp(KafkaSourceITCase.java:104)
> 2022-01-05T03:08:57.1672619Z at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
> 2022-01-05T03:08:57.1673715Z at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
> 2022-01-05T03:08:57.1675000Z at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
> 2022-01-05T03:08:57.1675907Z at java.base/java.lang.reflect.Method.invoke(Method.java:566)
> 2022-01-05T03:08:57.1676587Z at org.junit.platform.commons.util.ReflectionUtils.invokeMethod(ReflectionUtils.java:688)
> 2022-01-05T03:08:57.1677316Z at org.junit.jupiter.engine.execution.MethodInvocation.proceed(MethodInvocation.java:60)
> 2022-01-05T03:08:57.1678380Z at org.junit.jupiter.engine.execution.InvocationInterceptorChain$ValidatingInvocation.proceed(InvocationInterceptorChain.java:131)
> 2022-01-05T03:08:57.1679264Z at org.junit.jupiter.engine.extension.TimeoutExtension.intercept(TimeoutExtension.java:149)
> 2022-01-05T03:08:57.1680002Z at org.junit.jupiter.engine.extension.TimeoutExtension.interceptTestableMethod(TimeoutExtension.java:140)
> 2022-01-05T03:08:57.1680776Z at org.junit.jupiter.engine.extension.TimeoutExtension.interceptTestTemplateMethod(TimeoutExtension.java:92)
> 2022-01-05T03:08:57.1681682Z at org.junit.jupiter.engine.execution.ExecutableInvoker$ReflectiveInterceptorCall.lambda$ofVoidMethod$0(ExecutableInvoker.java:115)
> 2022-01-05T03:08:57.1682442Z at org.junit.jupiter.engine.execution.ExecutableInvoker.lambda$invoke$0(ExecutableInvoker.java:105)
> 2022-01-05T03:08:57.1683450Z at org.junit.jupiter.engine.execution.InvocationInterceptorChain$InterceptedInvocation.proceed(InvocationInterceptorChain.java:106)
> 2022-01-05T03:08:57.1685362Z at org.junit.jupiter.engine.execution.InvocationInterceptorChain.proceed(InvocationInterceptorChain.java:64)
> 2022-01-05T03:08:57.1686284Z at org.junit.jupiter.engine.execution.InvocationInterceptorChain.chainAndInvoke(InvocationInterceptorChain.java:45)
> 2022-01-05T03:08:57.1687152Z at org.junit.jupiter.engine.execution.InvocationInterceptorChain.invoke(InvocationInterceptorChain.java:37)
> 2022-01-05T03:08:57.1687818Z at org.junit.jupiter.engine.execution.ExecutableInvoker.invoke(ExecutableInvoker.java:104)
> 2022-01-05T03:08:57.1688479Z at org.junit.jupiter.engine.execution.ExecutableInvoker.invoke(ExecutableInvoker.java:98)
> 2022-01-05T03:08:57.1689376Z at org.junit.jupiter.engine.descriptor.TestMethodTestDescriptor.lambda$invokeTestMethod$6(TestMethodTestDescriptor.java:210)
> 2022-01-05T03:08:57.1690108Z at org.junit.platform.engine.support.hierarchical.ThrowableCollector.execute(ThrowableCollector.java:73)
> 2022-01-05T03:08:57.1690825Z at org.junit.jupiter.engine.descriptor.TestMethodTestDescriptor.invokeTestMethod(TestMethodTestDescriptor.java:206)
> 2022-01-05T03:08:57.1691470Z at

>
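The timeout in FLINK-25523 is raised by `CommonTestUtils.waitUtil` while waiting for topic metadata to reach the broker. Helpers of this kind generally poll a condition until a deadline passes. The sketch below shows that pattern in plain Java. `PollingWait.waitUntil` and its parameters are hypothetical and do not reproduce the signature of Flink's helper. Lengthening the timeout only helps if propagation is slow rather than stuck.

```java
import java.time.Duration;
import java.util.concurrent.TimeoutException;
import java.util.function.BooleanSupplier;

/** Hypothetical polling helper; it does not mirror the signature of Flink's CommonTestUtils. */
class PollingWait {

    /** Re-checks condition every pollInterval until it holds or timeout elapses. */
    static void waitUntil(
            BooleanSupplier condition, Duration timeout, Duration pollInterval, String errorMsg)
            throws TimeoutException, InterruptedException {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (!condition.getAsBoolean()) {
            // Compare nanoTime values by subtraction, which stays correct across overflow.
            if (System.nanoTime() - deadline >= 0) {
                throw new TimeoutException(errorMsg);
            }
            Thread.sleep(pollInterval.toMillis());
        }
    }

    public static void main(String[] args) throws Exception {
        long start = System.nanoTime();
        // Stand-in condition that becomes true after roughly 50 ms.
        waitUntil(() -> System.nanoTime() - start > 50_000_000L,
                Duration.ofSeconds(1), Duration.ofMillis(5), "condition not met");
        System.out.println("condition met");
    }
}
```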

[jira] [Commented] (FLINK-24801) "Post-job: Cache Maven local repo" failed on Azure

[

https://issues.apache.org/jira/browse/FLINK-24801?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17474321#comment-17474321

]

Yun Gao commented on FLINK-24801:

-

https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=29276=logs=af184cdd-c6d8-5084-0b69-7e9c67b35f7a=bccfcce4-958a-475a-a593-8a3baa959662=225

> "Post-job: Cache Maven local repo" failed on Azure

> --

>

> Key: FLINK-24801

> URL: https://issues.apache.org/jira/browse/FLINK-24801

> Project: Flink

> Issue Type: Bug

> Components: Build System / Azure Pipelines

>Affects Versions: 1.15.0

>Reporter: Yun Gao

>Assignee: Chesnay Schepler

>Priority: Critical

> Labels: test-stability

>

> {code:java}
> 2021-11-05T13:49:20.5298458Z Resolved to: maven|Linux|kL7EJ8TeMrJ0VZs51DUWRqheXcKK2cN2spGtx9IbVxQ=
> 2021-11-05T13:49:21.0445785Z ApplicationInsightsTelemetrySender will correlate events with X-TFS-Session b5cbe6a0-61e7-4345-81b6-efaed3924cbe
> 2021-11-05T13:49:21.0700758Z Getting a pipeline cache artifact with one of the following fingerprints:
> 2021-11-05T13:49:21.0702157Z Fingerprint: `maven|Linux|kL7EJ8TeMrJ0VZs51DUWRqheXcKK2cN2spGtx9IbVxQ=`
> 2021-11-05T13:49:21.3648278Z There is a cache miss.
> 2021-11-05T13:50:26.4782603Z tar: c9692460fbd54c808fca7be315d83578_archive.tar: Wrote only 2048 of 10240 bytes
> 2021-11-05T13:50:26.4784975Z tar: Error is not recoverable: exiting now
> 2021-11-05T13:50:27.0397318Z ApplicationInsightsTelemetrySender correlated 1 events with X-TFS-Session b5cbe6a0-61e7-4345-81b6-efaed3924cbe
> 2021-11-05T13:50:27.0531774Z ##[error]Process returned non-zero exit code: 2
> 2021-11-05T13:50:27.0666804Z ##[section]Finishing: Cache Maven local repo
> {code}

> [https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=26018=logs=c88eea3b-64a0-564d-0031-9fdcd7b8abee=96bd9872-da2e-43b4-b013-1295f1c23a41=220]


[GitHub] [flink] flinkbot edited a comment on pull request #18119: [FLINK-24947] Support hostNetwork for native K8s integration on session mode

flinkbot edited a comment on pull request #18119:

URL: https://github.com/apache/flink/pull/18119#issuecomment-994734000

## CI report:

* 3dbd53a6bc03cad9a218fdc080d367d85f15ec96 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29291)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

[jira] [Updated] (FLINK-25624) KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee blocked on azure pipeline

[

https://issues.apache.org/jira/browse/FLINK-25624?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Yun Gao updated FLINK-25624:

Affects Version/s: 1.15.0

> KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee blocked on azure pipeline

> --

>

> Key: FLINK-25624

> URL: https://issues.apache.org/jira/browse/FLINK-25624

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.15.0

>Reporter: Yun Gao

>Priority: Major

> Labels: test-stability

>

> {code:java}
> "main" #1 prio=5 os_prio=0 tid=0x7fda8c00b000 nid=0x21b2 waiting on condition [0x7fda92dd7000]
>    java.lang.Thread.State: WAITING (parking)
>     at sun.misc.Unsafe.park(Native Method)
>     - parking to wait for <0x826165c0> (a java.util.concurrent.CompletableFuture$Signaller)
>     at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)
>     at java.util.concurrent.CompletableFuture$Signaller.block(CompletableFuture.java:1707)
>     at java.util.concurrent.ForkJoinPool.managedBlock(ForkJoinPool.java:3323)
>     at java.util.concurrent.CompletableFuture.waitingGet(CompletableFuture.java:1742)
>     at java.util.concurrent.CompletableFuture.get(CompletableFuture.java:1908)
>     at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1989)
>     at org.apache.flink.streaming.api.environment.LocalStreamEnvironment.execute(LocalStreamEnvironment.java:69)
>     at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1951)
>     at org.apache.flink.connector.kafka.sink.KafkaSinkITCase.testRecoveryWithAssertion(KafkaSinkITCase.java:335)
>     at org.apache.flink.connector.kafka.sink.KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee(KafkaSinkITCase.java:190)
>     at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
>     at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
>     at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
>     at java.lang.reflect.Method.invoke(Method.java:498)
>     at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)
>     at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
>     at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)
>     at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)
>     at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)
>     at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)
>     at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)
>     at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)
> {code}

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=29285=logs=c5612577-f1f7-5977-6ff6-7432788526f7=ffa8837a-b445-534e-cdf4-db364cf8235d=42106


[jira] [Commented] (FLINK-25624) KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee blocked on azure pipeline

[

https://issues.apache.org/jira/browse/FLINK-25624?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17474320#comment-17474320

]

Yun Gao commented on FLINK-25624:

-

Perhaps cc [~fpaul] ~

> KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee blocked on azure pipeline

> --

>

> Key: FLINK-25624

> URL: https://issues.apache.org/jira/browse/FLINK-25624

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.15.0

>Reporter: Yun Gao

>Priority: Major

> Labels: test-stability

>


> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=29285=logs=c5612577-f1f7-5977-6ff6-7432788526f7=ffa8837a-b445-534e-cdf4-db364cf8235d=42106


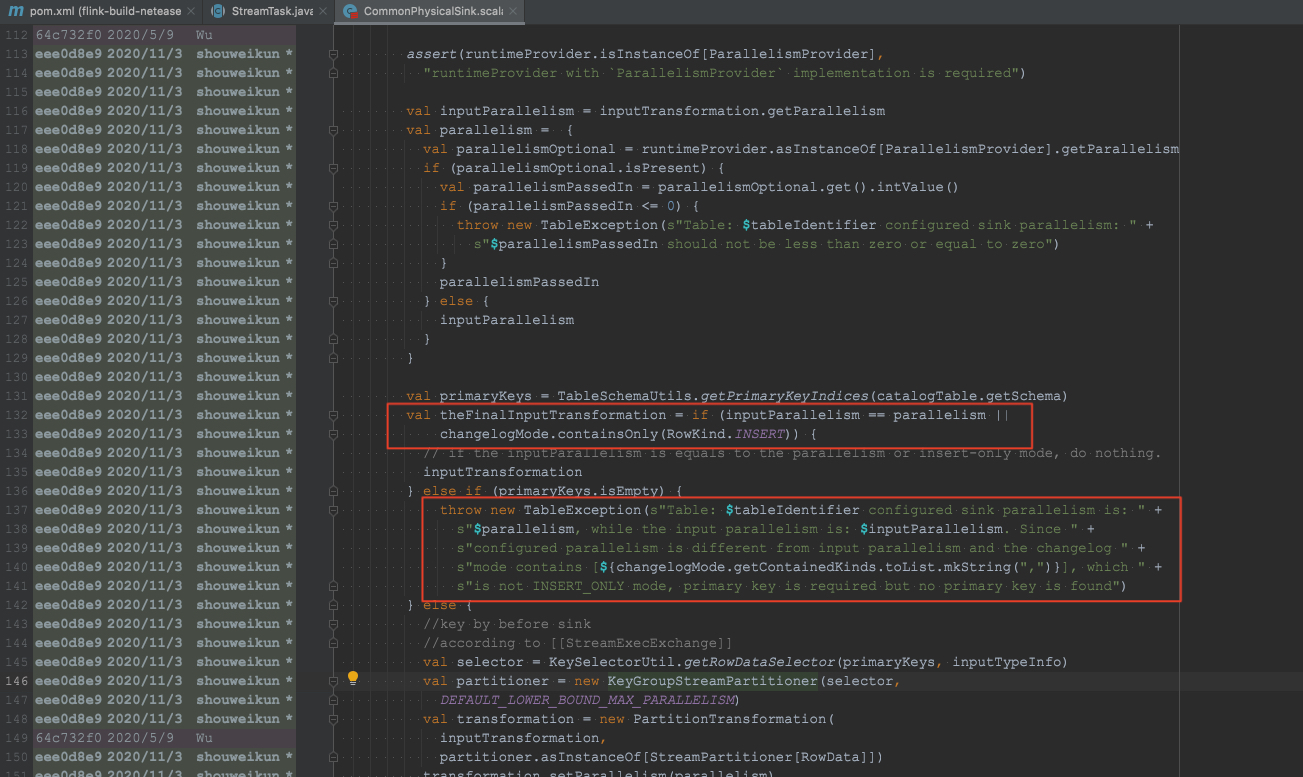

[GitHub] [flink] Aireed removed a comment on pull request #13789: [FLINK-19727][table-runtime] Implement ParallelismProvider for sink i…

Aireed removed a comment on pull request #13789:

URL: https://github.com/apache/flink/pull/13789#issuecomment-1009713407

@shouweikun Hello, what is the purpose of this check? A connector that supports CDC must either have the same parallelism as the source or have primary keys, even if it is not in changelog mode.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-25624) KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee blocked on azure pipeline

[

https://issues.apache.org/jira/browse/FLINK-25624?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Yun Gao updated FLINK-25624:

Labels: test-stability (was: )

> KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee blocked on azure pipeline

> --

>

> Key: FLINK-25624

> URL: https://issues.apache.org/jira/browse/FLINK-25624

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka

>Affects Versions: 1.14.2

>Reporter: Yun Gao

>Priority: Major

> Labels: test-stability

>


> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=29285=logs=c5612577-f1f7-5977-6ff6-7432788526f7=ffa8837a-b445-534e-cdf4-db364cf8235d=42106


[jira] [Created] (FLINK-25624) KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee blocked on azure pipeline

Yun Gao created FLINK-25624:

---

Summary: KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee blocked on azure pipeline

Key: FLINK-25624

URL: https://issues.apache.org/jira/browse/FLINK-25624

Project: Flink

Issue Type: Bug

Components: Connectors / Kafka

Affects Versions: 1.14.2

Reporter: Yun Gao

{code:java}
"main" #1 prio=5 os_prio=0 tid=0x7fda8c00b000 nid=0x21b2 waiting on condition [0x7fda92dd7000]
   java.lang.Thread.State: WAITING (parking)
    at sun.misc.Unsafe.park(Native Method)
    - parking to wait for <0x826165c0> (a java.util.concurrent.CompletableFuture$Signaller)
    at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)
    at java.util.concurrent.CompletableFuture$Signaller.block(CompletableFuture.java:1707)
    at java.util.concurrent.ForkJoinPool.managedBlock(ForkJoinPool.java:3323)
    at java.util.concurrent.CompletableFuture.waitingGet(CompletableFuture.java:1742)
    at java.util.concurrent.CompletableFuture.get(CompletableFuture.java:1908)
    at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1989)
    at org.apache.flink.streaming.api.environment.LocalStreamEnvironment.execute(LocalStreamEnvironment.java:69)
    at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1951)
    at org.apache.flink.connector.kafka.sink.KafkaSinkITCase.testRecoveryWithAssertion(KafkaSinkITCase.java:335)
    at org.apache.flink.connector.kafka.sink.KafkaSinkITCase.testRecoveryWithExactlyOnceGuarantee(KafkaSinkITCase.java:190)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)
    at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
    at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)
    at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)
    at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)
    at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)
    at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)
    at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:54)
{code}

https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=29285=logs=c5612577-f1f7-5977-6ff6-7432788526f7=ffa8837a-b445-534e-cdf4-db364cf8235d=42106


[GitHub] [flink] flinkbot edited a comment on pull request #18306: [FLINK-25445] No need to create local recovery dirs when disabled loc…

flinkbot edited a comment on pull request #18306:

URL: https://github.com/apache/flink/pull/18306#issuecomment-1008234006

## CI report:

* 32883ca0240ef8ef0f3fb7162035a3aff23cdb7d Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29293)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-6757) Investigate Apache Atlas integration

[ https://issues.apache.org/jira/browse/FLINK-6757?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17474316#comment-17474316 ]

HideOnBush commented on FLINK-6757:

---

I think this is very meaningful, especially for building real-time data warehouses: through hooks, many manual recording tasks can be handled automatically. We are already using this feature.

> Investigate Apache Atlas integration

>

> Key: FLINK-6757

> URL: https://issues.apache.org/jira/browse/FLINK-6757

> Project: Flink

> Issue Type: New Feature

> Components: Connectors / Common

>Reporter: Till Rohrmann

>Priority: Minor

> Labels: auto-deprioritized-major, auto-unassigned

>

> Users asked for an integration of Apache Flink with Apache Atlas. It might be worthwhile to investigate what is necessary to achieve this task.

> References: http://atlas.incubator.apache.org/StormAtlasHook.html

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Commented] (FLINK-25374) Azure pipeline get stalled on scanning project

[

https://issues.apache.org/jira/browse/FLINK-25374?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17474314#comment-17474314

]

Yun Gao commented on FLINK-25374:

-

https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=29285=logs=e92ecf6d-e207-5a42-7ff7-528ff0c5b259=40fc352e-9b4c-5fd8-363f-628f24b01ec2=8314

> Azure pipeline get stalled on scanning project

> --

>

> Key: FLINK-25374

> URL: https://issues.apache.org/jira/browse/FLINK-25374

> Project: Flink

> Issue Type: Bug

> Components: Build System / Azure Pipelines

>Affects Versions: 1.15.0, 1.13.5, 1.14.2

>Reporter: Yun Gao

>Assignee: Martijn Visser

>Priority: Blocker

> Labels: test-stability

> Fix For: 1.15.0

>

> Attachments: network2.jpg

>

>

> {code:java}

> 2021-12-18T02:01:01.8980373Z Dec 18 02:01:01 RUNNING 'run_mvn -Dflink.forkCount=2 -Dflink.forkCountTestPackage=2 -Dfast -Pskip-webui-build -Dlog.dir=/__w/_temp/debug_files -Dlog4j.configurationFile=file:///__w/2/s/tools/ci/log4j.properties -DskipTests -Dhadoop.version=2.8.3 -Dinclude_hadoop_aws -Dscala-2.11 install'.
> 2021-12-18T02:01:01.885Z Dec 18 02:01:01 Invoking mvn with 'mvn -Dmaven.wagon.http.pool=false -Dorg.slf4j.simpleLogger.showDateTime=true -Dorg.slf4j.simpleLogger.dateTimeFormat=HH:mm:ss.SSS -Dorg.slf4j.simpleLogger.log.org.apache.maven.cli.transfer.Slf4jMavenTransferListener=warn --no-snapshot-updates -B -Dhadoop.version=2.8.3 -Dinclude_hadoop_aws -Dscala-2.11 --settings /__w/2/s/tools/ci/alibaba-mirror-settings.xml -Dflink.forkCount=2 -Dflink.forkCountTestPackage=2 -Dfast -Pskip-webui-build -Dlog.dir=/__w/_temp/debug_files -Dlog4j.configurationFile=file:///__w/2/s/tools/ci/log4j.properties -DskipTests -Dhadoop.version=2.8.3 -Dinclude_hadoop_aws -Dscala-2.11 install'
> 2021-12-18T02:01:01.9407169Z Picked up JAVA_TOOL_OPTIONS: -XX:+HeapDumpOnOutOfMemoryError
> 2021-12-18T02:01:02.8291019Z Dec 18 02:01:02 [INFO] Scanning for projects...
> 2021-12-18T02:16:02.4676481Z Dec 18 02:16:02 ==
> 2021-12-18T02:16:02.4679732Z Dec 18 02:16:02 Process produced no output for 900 seconds.
> 2021-12-18T02:16:02.4680416Z Dec 18 02:16:02 ==
> 2021-12-18T02:16:02.4681062Z Dec 18 02:16:02 ==
> 2021-12-18T02:16:02.4681601Z Dec 18 02:16:02 The following Java processes are running (JPS)
> 2021-12-18T02:16:02.4682191Z Dec 18 02:16:02 ==
> 2021-12-18T02:16:02.4743659Z Picked up JAVA_TOOL_OPTIONS: -XX:+HeapDumpOnOutOfMemoryError
> 2021-12-18T02:16:03.1019936Z Dec 18 02:16:03 354 Launcher
> 2021-12-18T02:16:03.1020514Z Dec 18 02:16:03 857 Jps
> 2021-12-18T02:16:03.1052014Z Dec 18 02:16:03 ==
> 2021-12-18T02:16:03.1052803Z Dec 18 02:16:03 Printing stack trace of Java process 354
> 2021-12-18T02:16:03.1053382Z Dec 18 02:16:03 ==
> 2021-12-18T02:16:03.1123385Z Picked up JAVA_TOOL_OPTIONS: -XX:+HeapDumpOnOutOfMemoryError
> 2021-12-18T02:16:03.4416679Z Dec 18 02:16:03 2021-12-18 02:16:03
> 2021-12-18T02:16:03.4417639Z Dec 18 02:16:03 Full thread dump OpenJDK 64-Bit Server VM (25.292-b10 mixed mode):
> 2021-12-18T02:16:03.4418277Z Dec 18 02:16:03
> 2021-12-18T02:16:03.4419452Z Dec 18 02:16:03 "Attach Listener" #22 daemon prio=9 os_prio=0 tid=0x7fbc9c001000 nid=0x3b8 waiting on condition [0x]
> 2021-12-18T02:16:03.4420652Z Dec 18 02:16:03    java.lang.Thread.State: RUNNABLE
> 2021-12-18T02:16:03.4421479Z Dec 18 02:16:03
> 2021-12-18T02:16:03.4422239Z Dec 18 02:16:03 "Service Thread" #20 daemon prio=9 os_prio=0 tid=0x7fbd4810c800 nid=0x196 runnable [0x]
> 2021-12-18T02:16:03.4422936Z Dec 18 02:16:03    java.lang.Thread.State: RUNNABLE
> 2021-12-18T02:16:03.4423280Z Dec 18 02:16:03
> 2021-12-18T02:16:03.4423900Z Dec 18 02:16:03 "C1 CompilerThread14" #19 daemon prio=9 os_prio=0 tid=0x7fbd48109800 nid=0x195 waiting on condition [0x]
> 2021-12-18T02:16:03.4424648Z Dec 18 02:16:03    java.lang.Thread.State: RUNNABLE
> 2021-12-18T02:16:03.4425089Z Dec 18 02:16:03
> 2021-12-18T02:16:03.4425734Z Dec 18 02:16:03 "C1 CompilerThread13" #18 daemon prio=9 os_prio=0 tid=0x7fbd48107800 nid=0x194 waiting on condition [0x]
> 2021-12-18T02:16:03.4426339Z Dec 18 02:16:03    java.lang.Thread.State: RUNNABLE

>

[GitHub] [flink] wsry commented on a change in pull request #17936: [FLINK-24954][network] Reset read buffer request timeout on buffer recycling for sort-shuffle

wsry commented on a change in pull request #17936:

URL: https://github.com/apache/flink/pull/17936#discussion_r782747038

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/io/disk/BatchShuffleReadBufferPoolTest.java

##

@@ -98,6 +98,34 @@ public void testRecycle() throws Exception {

assertEquals(bufferPool.getNumTotalBuffers(),

bufferPool.getAvailableBuffers());

}

+@Test

+public void testBufferOperationTimestampUpdated() throws Exception {

+BatchShuffleReadBufferPool bufferPool = new BatchShuffleReadBufferPool(1024, 1024);

+long oldTimestamp = bufferPool.getLastBufferOperationTimestamp();

+List buffers = bufferPool.requestBuffers();

+assertEquals(1, buffers.size());

+long nowTimestamp = bufferPool.getLastBufferOperationTimestamp();

+// The timestamp is updated when requesting buffers successfully

+assertTrue(nowTimestamp > oldTimestamp);

+assertEquals(nowTimestamp, bufferPool.getLastBufferOperationTimestamp());

+

+oldTimestamp = nowTimestamp;

+bufferPool.recycle(buffers);

Review comment:

It would be better to sleep for, e.g., 100 ms before recycling the buffers.

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/io/disk/BatchShuffleReadBufferPoolTest.java

##

@@ -98,6 +98,34 @@ public void testRecycle() throws Exception {

assertEquals(bufferPool.getNumTotalBuffers(),

bufferPool.getAvailableBuffers());

}

+@Test

+public void testBufferOperationTimestampUpdated() throws Exception {

+BatchShuffleReadBufferPool bufferPool = new BatchShuffleReadBufferPool(1024, 1024);

+long oldTimestamp = bufferPool.getLastBufferOperationTimestamp();

+List buffers = bufferPool.requestBuffers();

Review comment:

It would be better to sleep for, e.g., 100 ms before requesting the buffers.

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/io/disk/BatchShuffleReadBufferPoolTest.java

##

@@ -98,6 +98,34 @@ public void testRecycle() throws Exception {

assertEquals(bufferPool.getNumTotalBuffers(),

bufferPool.getAvailableBuffers());

}

+@Test

+public void testBufferOperationTimestampUpdated() throws Exception {

+BatchShuffleReadBufferPool bufferPool = new BatchShuffleReadBufferPool(1024, 1024);

+long oldTimestamp = bufferPool.getLastBufferOperationTimestamp();

+List buffers = bufferPool.requestBuffers();

+assertEquals(1, buffers.size());

+long nowTimestamp = bufferPool.getLastBufferOperationTimestamp();

+// The timestamp is updated when requesting buffers successfully

+assertTrue(nowTimestamp > oldTimestamp);

+assertEquals(nowTimestamp, bufferPool.getLastBufferOperationTimestamp());

+

+oldTimestamp = nowTimestamp;

Review comment:

I guess we can replace the above two lines of code with this?

```oldTimestamp = bufferPool.getLastBufferOperationTimestamp();```

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/io/disk/BatchShuffleReadBufferPoolTest.java

##

@@ -98,6 +98,34 @@ public void testRecycle() throws Exception {

assertEquals(bufferPool.getNumTotalBuffers(),

bufferPool.getAvailableBuffers());

}

+@Test

+public void testBufferOperationTimestampUpdated() throws Exception {

+BatchShuffleReadBufferPool bufferPool = new BatchShuffleReadBufferPool(1024, 1024);

+long oldTimestamp = bufferPool.getLastBufferOperationTimestamp();

+List buffers = bufferPool.requestBuffers();

+assertEquals(1, buffers.size());

+long nowTimestamp = bufferPool.getLastBufferOperationTimestamp();

+// The timestamp is updated when requesting buffers successfully

+assertTrue(nowTimestamp > oldTimestamp);

+assertEquals(nowTimestamp, bufferPool.getLastBufferOperationTimestamp());

+

+oldTimestamp = nowTimestamp;

+bufferPool.recycle(buffers);

+// The timestamp is updated when recycling buffers

+assertTrue(bufferPool.getLastBufferOperationTimestamp() > oldTimestamp);

+

+bufferPool.requestBuffers();

+

+oldTimestamp = bufferPool.getLastBufferOperationTimestamp();

+buffers = bufferPool.requestBuffers();

+assertEquals(0, buffers.size());

Review comment:

The variable `buffers` should not be reassigned here. If it is overwritten with an empty list, the ```bufferPool.recycle(buffers);``` below will recycle nothing. I think we can replace the above two lines of code with "assertEquals(0, bufferPool.requestBuffers().size());".
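The review suggestions on this test (sleep before requesting and before recycling, read `oldTimestamp` directly from the pool, and assert on the exhausted pool without overwriting `buffers`) can be illustrated with a self-contained sketch. It does not use Flink's `BatchShuffleReadBufferPool`: `TimestampedPoolSketch` is a hypothetical stand-in that hands out every free buffer per request and, as an assumption, only updates its timestamp on successful requests and on recycling. The sleeps matter because `System.currentTimeMillis()` can return the same value for two operations that run back to back, which would make a strict `>` assertion flaky.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical stand-in for a read buffer pool that records its last buffer operation time. */
public class TimestampedPoolSketch {
    private final ArrayDeque<byte[]> available = new ArrayDeque<>();
    private volatile long lastBufferOperationTimestamp = System.currentTimeMillis();

    TimestampedPoolSketch(int totalBytes, int bufferSize) {
        for (int i = 0; i < totalBytes / bufferSize; i++) {
            available.add(new byte[bufferSize]);
        }
    }

    /** Hands out all free buffers; assumption: an empty result leaves the timestamp untouched. */
    synchronized List<byte[]> requestBuffers() {
        if (available.isEmpty()) {
            return Collections.emptyList();
        }
        List<byte[]> buffers = new ArrayList<>(available);
        available.clear();
        lastBufferOperationTimestamp = System.currentTimeMillis();
        return buffers;
    }

    /** Returns buffers to the pool and records the operation time. */
    synchronized void recycle(List<byte[]> buffers) {
        available.addAll(buffers);
        lastBufferOperationTimestamp = System.currentTimeMillis();
    }

    long getLastBufferOperationTimestamp() {
        return lastBufferOperationTimestamp;
    }

    private static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    public static void main(String[] args) throws InterruptedException {
        TimestampedPoolSketch bufferPool = new TimestampedPoolSketch(1024, 1024); // one buffer

        long oldTimestamp = bufferPool.getLastBufferOperationTimestamp();
        Thread.sleep(100); // let the millisecond clock advance before requesting
        List<byte[]> buffers = bufferPool.requestBuffers();
        check(buffers.size() == 1, "one buffer should be handed out");
        check(bufferPool.getLastBufferOperationTimestamp() > oldTimestamp, "request updates timestamp");

        // Read the timestamp from the pool directly, and assert on the exhausted pool
        // without overwriting `buffers`, so the held buffer can still be recycled below.
        oldTimestamp = bufferPool.getLastBufferOperationTimestamp();
        check(bufferPool.requestBuffers().isEmpty(), "pool should be exhausted");
        check(bufferPool.getLastBufferOperationTimestamp() == oldTimestamp, "failed request keeps timestamp");

        Thread.sleep(100); // let the clock advance before recycling
        bufferPool.recycle(buffers);
        check(bufferPool.getLastBufferOperationTimestamp() > oldTimestamp, "recycle updates timestamp");
        System.out.println("all checks passed");
    }
}
```

Keeping `buffers` intact is what makes the final `recycle` meaningful: in the sketch, the one buffer taken by the first request is the one returned at the end.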

##

File path:

flink-runtime/src/test/java/org/apache/flink/runtime/io/network/partition/SortMergeResultPartitionReadSchedulerTest.java

##

@@ -192,6 +199,131 @@ public void testOnReadBufferRequestError() throws Exception {

assertAllResourcesReleased();

}

+@Test

+public void

[GitHub] [flink] flinkbot edited a comment on pull request #18268: [FLINK-14902][connector] Supports jdbc async lookup join

flinkbot edited a comment on pull request #18268:

URL: https://github.com/apache/flink/pull/18268#issuecomment-1005479356

## CI report:

* fe7d298f1ac142a2fa53241df09eaf93b44ec4be Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29290)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] MonsterChenzhuo commented on a change in pull request #17994: [FLINK-24456][Connectors / Kafka,Table SQL / Ecosystem] Support bound…

MonsterChenzhuo commented on a change in pull request #17994:

URL: https://github.com/apache/flink/pull/17994#discussion_r782766430

##

File path:

flink-connectors/flink-connector-kafka/src/main/java/org/apache/flink/streaming/connectors/kafka/table/KafkaConnectorOptions.java

##

@@ -158,6 +158,13 @@

.withDescription(

"Optional offsets used in case of \"specific-offsets\" startup mode");

+public static final ConfigOption<String> SCAN_BOUNDED_SPECIFIC_OFFSETS =

+ConfigOptions.key("scan.bounded.specific-offsets")

+.stringType()

+.noDefaultValue()

+.withDescription(

+"When all partitions have reached their stop offsets, the source will exit");

+

public static final ConfigOption SCAN_STARTUP_TIMESTAMP_MILLIS =

Review comment:

@ruanhang1993 Do you think the above logic is OK? If so, I will make the changes.
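The new option's value is a list of per-partition stop offsets. Assuming it reuses the `partition:0,offset:42;partition:1,offset:300` format of the existing `scan.startup.specific-offsets` option (an assumption; the diff only declares a string option), parsing it and checking the "all partitions have reached their stop offsets" condition from the description could be sketched as follows. The class and method names are hypothetical, and stop offsets are assumed to be exclusive, so a partition is done once the next offset it would read equals its stop offset.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical helpers; the real option may use a different format or classes. */
public class StopOffsetsSketch {

    /** Parses "partition:0,offset:42;partition:1,offset:300" into partition -> stop offset. */
    static Map<Integer, Long> parseStopOffsets(String value) {
        Map<Integer, Long> stopOffsets = new HashMap<>();
        for (String entry : value.split(";")) {
            if (entry.trim().isEmpty()) {
                continue; // tolerate a trailing ';'
            }
            String[] parts = entry.split(",");
            if (parts.length != 2) {
                throw new IllegalArgumentException("Invalid offset entry: '" + entry + "'");
            }
            String partitionPart = parts[0].trim();
            String offsetPart = parts[1].trim();
            if (!partitionPart.startsWith("partition:") || !offsetPart.startsWith("offset:")) {
                throw new IllegalArgumentException("Invalid offset entry: '" + entry + "'");
            }
            int partition = Integer.parseInt(partitionPart.substring("partition:".length()).trim());
            long offset = Long.parseLong(offsetPart.substring("offset:".length()).trim());
            stopOffsets.put(partition, offset);
        }
        return stopOffsets;
    }

    /** True once every partition's next offset to read has reached its (exclusive) stop offset. */
    static boolean allPartitionsReachedStop(
            Map<Integer, Long> stopOffsets, Map<Integer, Long> nextOffsets) {
        for (Map.Entry<Integer, Long> stop : stopOffsets.entrySet()) {
            Long next = nextOffsets.get(stop.getKey());
            if (next == null || next < stop.getValue()) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        Map<Integer, Long> stop = parseStopOffsets("partition:0,offset:42;partition:1,offset:300");
        Map<Integer, Long> next = new HashMap<>();
        next.put(0, 42L);
        next.put(1, 299L);
        System.out.println(allPartitionsReachedStop(stop, next)); // false: partition 1 is not done
        next.put(1, 300L);
        System.out.println(allPartitionsReachedStop(stop, next)); // true: the source could exit
    }
}
```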


[GitHub] [flink] MonsterChenzhuo commented on a change in pull request #17994: [FLINK-24456][Connectors / Kafka,Table SQL / Ecosystem] Support bound…

MonsterChenzhuo commented on a change in pull request #17994:

URL: https://github.com/apache/flink/pull/17994#discussion_r782766011

##

File path:

flink-connectors/flink-connector-kafka/src/main/java/org/apache/flink/streaming/connectors/kafka/table/KafkaConnectorOptions.java

##

@@ -158,6 +158,13 @@

.withDescription(

"Optional offsets used in case of \"specific-offsets\" startup mode");

+public static final ConfigOption<String> SCAN_BOUNDED_SPECIFIC_OFFSETS =

+ConfigOptions.key("scan.bounded.specific-offsets")

+.stringType()

+.noDefaultValue()

+.withDescription(

+"When all partitions have reached their stop offsets, the source will exit");

+

public static final ConfigOption SCAN_STARTUP_TIMESTAMP_MILLIS =

Review comment:

Hi @ruanhang1993, could you check whether my idea is correct?

First, there are two options for the start read position: an offset and a timestamp.

When the start read position is an offset, it can be either a specified offset or the currently consumed offset, and the corresponding end position is `scan.bounded.stop-offsets`.

When the start position is a timestamp, the end position should also be the corresponding timestamp.

So the logic can be changed to: when a bounded end position is set, first check whether `scan.startup.mode` is set. If it is not set, use the `scan.bounded.stop-offsets` logic. If it is set, check whether it is a timestamp: if not, use the `scan.bounded.stop-offsets` logic; if it is, use the `scan.bounded.stop-timestamp` logic.
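The proposed decision can be written down as a small function. This is a sketch, not the connector's code: `BoundedMode` and `chooseBoundedMode` are hypothetical names, the option keys are the ones named in the comment, and `timestamp` is assumed to be the `scan.startup.mode` value that selects a timestamp start position (as it is in Flink's Kafka SQL connector).

```java
/** Hypothetical sketch of the proposed decision; names are illustrative only. */
public class BoundedModeSketch {

    enum BoundedMode { STOP_OFFSETS, STOP_TIMESTAMP }

    /**
     * Called only when a bounded end position has been configured.
     *
     * @param startupMode value of 'scan.startup.mode', or null if it is not set
     */
    static BoundedMode chooseBoundedMode(String startupMode) {
        if (startupMode == null) {
            // No startup mode: use the 'scan.bounded.stop-offsets' logic.
            return BoundedMode.STOP_OFFSETS;
        }
        // A timestamp start position pairs with 'scan.bounded.stop-timestamp';
        // every offset-based start position pairs with 'scan.bounded.stop-offsets'.
        return "timestamp".equals(startupMode)
                ? BoundedMode.STOP_TIMESTAMP
                : BoundedMode.STOP_OFFSETS;
    }

    public static void main(String[] args) {
        System.out.println(chooseBoundedMode(null));               // STOP_OFFSETS
        System.out.println(chooseBoundedMode("specific-offsets")); // STOP_OFFSETS
        System.out.println(chooseBoundedMode("timestamp"));        // STOP_TIMESTAMP
    }
}
```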


[GitHub] [flink] xintongsong commented on pull request #18303: [FLINK-25085][runtime] Add a scheduled thread pool for periodic tasks in RpcEndpoint

xintongsong commented on pull request #18303:

URL: https://github.com/apache/flink/pull/18303#issuecomment-1010713720

Thanks for working on this, @zjureel & @KarmaGYZ.

I'm still not entirely sure about exposing two executors (or two sets of scheduling interfaces) from the RPC endpoint. That is hard to maintain, because callers need to understand the difference between the two executors: with one, tasks are canceled as soon as the RPC endpoint shuts down; with the other, they are not canceled immediately.

I understand this is a response to the previous CI problems, where we suspect that some clean-up operations do not complete because their tasks are canceled, and it is not easy to identify which clean-up operations cause the problems. However, I'm not comfortable sacrificing the new design just to cover up the real problems. I'd suggest trying a few more things before we go for the two-executors approach.

- I noticed that there are some calls to `schedule` with a zero delay (e.g., `ApplicationDispatcherBootstrap#runApplicationAsync`). I think the purpose of these calls is to process asynchronously rather than to schedule a future task. It might help to check the delay value in `MainThreadExecutor#schedule` and only use the scheduling thread pool when the delay is positive. My gut feeling is that most clean-up tasks are async tasks that need to be executed immediately rather than in the future.

- Another thing we may want to try is to replace `ExecutorService#shutdownNow` with `ExecutorService#awaitTermination`, to leave a short time for the clean-up tasks to complete.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at: us...@infra.apache.org
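Both suggestions can be sketched with plain `java.util.concurrent` executors. `SchedulingSketch` is a hypothetical stand-in, not Flink's `MainThreadExecutor` or `RpcEndpoint`: zero-delay tasks go straight to the main executor, only positive delays go through the scheduler, and shutdown gives already-submitted tasks a grace period via `ExecutorService#awaitTermination` before falling back to `shutdownNow`.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical stand-in for an RPC endpoint's executors; not Flink's MainThreadExecutor. */
public class SchedulingSketch {
    private final ExecutorService mainThread = Executors.newSingleThreadExecutor();
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();

    /** Zero or negative delays run asynchronously right away; only positive delays are scheduled. */
    public void schedule(Runnable task, long delay, TimeUnit unit) {
        if (delay <= 0) {
            mainThread.execute(task);
        } else {
            // When the delay expires, hand the task back to the main executor.
            scheduler.schedule(() -> mainThread.execute(task), delay, unit);
        }
    }

    /**
     * Drops pending delayed tasks, then lets already-submitted tasks finish within the grace
     * period instead of interrupting them immediately. Returns true if everything finished.
     */
    public boolean shutdown(long grace, TimeUnit unit) throws InterruptedException {
        scheduler.shutdownNow();
        mainThread.shutdown(); // no new tasks, but queued tasks still run
        if (mainThread.awaitTermination(grace, unit)) {
            return true;
        }
        mainThread.shutdownNow(); // grace period exceeded: interrupt what is left
        return false;
    }

    public static void main(String[] args) throws InterruptedException {
        SchedulingSketch sketch = new SchedulingSketch();
        AtomicBoolean immediateCleanup = new AtomicBoolean();
        AtomicBoolean delayedTask = new AtomicBoolean();
        sketch.schedule(() -> immediateCleanup.set(true), 0, TimeUnit.MILLISECONDS);
        sketch.schedule(() -> delayedTask.set(true), 10, TimeUnit.SECONDS);
        boolean terminated = sketch.shutdown(1, TimeUnit.SECONDS);
        // Prints "true true false": the zero-delay task ran, the delayed one was dropped.
        System.out.println(terminated + " " + immediateCleanup.get() + " " + delayedTask.get());
    }
}
```

In this sketch the scheduler is still shut down with `shutdownNow`, so genuinely delayed tasks are dropped, while zero-delay clean-up work that was already handed to the main executor gets the grace period to finish.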

[jira] [Commented] (FLINK-25441) ProducerFailedException will cause task status switch from RUNNING to CANCELED, which will cause the job to hang.

[

https://issues.apache.org/jira/browse/FLINK-25441?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17474304#comment-17474304

]

Lijie Wang commented on FLINK-25441:

[~kevin.cyj] I would. I will prepare a fix according to your suggestion.

> ProducerFailedException will cause task status switch from RUNNING to

> CANCELED, which will cause the job to hang.

> -

>

> Key: FLINK-25441

> URL: https://issues.apache.org/jira/browse/FLINK-25441

> Project: Flink

> Issue Type: Bug

> Components: Runtime / Network

>Affects Versions: 1.15.0

>Reporter: Lijie Wang

>Priority: Major

> Fix For: 1.15.0

>

>

> The {{ProducerFailedException}} extends {{CancelTaskException}}, which causes the task status to switch from RUNNING to CANCELED. As described in FLINK-17726, if a task is directly CANCELED by the TaskManager due to its own runtime issue, the task will not be recovered by the JM, and thus the job would hang.

> Note that this did not cause problems before FLINK-24182 (which unifies the failureCause handling and changes the check of CancelTaskException from "{{instanceof CancelTaskException}}" to "{{ExceptionUtils.findThrowable}}"), because the {{ProducerFailedException}} is always wrapped by {{RemoteTransportException}}.
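The difference between the two checks can be reproduced without Flink. The classes below are hypothetical stand-ins that only mirror the relationships stated in the issue (`ProducerFailedException` extends `CancelTaskException` and arrives wrapped in a `RemoteTransportException`), and `findThrowable` is a simplified cause-chain search written for this sketch in the spirit of `ExceptionUtils.findThrowable`, not Flink's implementation.

```java
import java.util.Optional;

public class CauseChainSketch {
    // Hypothetical stand-ins mirroring the class relationships described in the issue.
    static class CancelTaskException extends RuntimeException {
        CancelTaskException(String message) { super(message); }
    }

    static class ProducerFailedException extends CancelTaskException {
        ProducerFailedException(String message) { super(message); }
    }

    static class RemoteTransportException extends Exception {
        RemoteTransportException(String message, Throwable cause) { super(message, cause); }
    }

    /** Returns the first throwable in the cause chain that is an instance of {@code type}. */
    static <T extends Throwable> Optional<T> findThrowable(Throwable throwable, Class<T> type) {
        Throwable current = throwable;
        while (current != null) {
            if (type.isInstance(current)) {
                return Optional.of(type.cast(current));
            }
            current = current.getCause();
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        Throwable failure = new RemoteTransportException(
                "Error at remote task manager", new ProducerFailedException("producer failed"));

        // Old check: only the outermost exception is inspected, so the wrapper hides the cause.
        boolean oldCheck = failure instanceof CancelTaskException;
        // New check: the cause chain is searched, so the wrapped exception is found.
        boolean newCheck = findThrowable(failure, CancelTaskException.class).isPresent();

        System.out.println(oldCheck + " " + newCheck); // false true
    }
}
```

With the cause-chain search, the wrapped `ProducerFailedException` is treated as a cancellation, which matches the RUNNING to CANCELED transition in the example log.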

> The example log is as follows:

> {code:java}

> 2021-12-23 21:20:14,965 DEBUG org.apache.flink.runtime.taskmanager.Task [] - MultipleInput[945] [Source: HiveSource-tpcds_bin_orc_1.catalog_sales, Source: HiveSource-tpcds_bin_orc_1.store_sales, Source: HiveSource-tpcds_bin_orc_1.catalog_sales, Source: HiveSource-tpcds_bin_orc_1.store_sales, Source: HiveSource-tpcds_bin_orc_1.store_sales, Source: HiveSource-tpcds_bin_orc_1.item, Source: HiveSource-tpcds_bin_orc_1.web_sales, Source: HiveSource-tpcds_bin_orc_1.web_sales] - Calc[885] (143/1024)#0 (8a883116ab601dd5b9ad5d2717d18918) switched from RUNNING to CANCELED due to CancelTaskException:
> org.apache.flink.runtime.io.network.netty.exception.RemoteTransportException: Error at remote task manager 'k28b09250.eu95sqa.tbsite.net/100.69.96.154:47459'.
> at org.apache.flink.runtime.io.network.netty.CreditBasedPartitionRequestClientHandler.decodeMsg(CreditBasedPartitionRequestClientHandler.java:301)
> at org.apache.flink.runtime.io.network.netty.CreditBasedPartitionRequestClientHandler.channelRead(CreditBasedPartitionRequestClientHandler.java:190)
> at org.apache.flink.shaded.netty4.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
> at org.apache.flink.shaded.netty4.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
> at org.apache.flink.shaded.netty4.io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
> at org.apache.flink.runtime.io.network.netty.NettyMessageClientDecoderDelegate.channelRead(NettyMessageClientDecoderDelegate.java:112)
> at org.apache.flink.shaded.netty4.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
> at org.apache.flink.shaded.netty4.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
> at org.apache.flink.shaded.netty4.io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
> at org.apache.flink.shaded.netty4.io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)
> at org.apache.flink.shaded.netty4.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
> at org.apache.flink.shaded.netty4.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
> at org.apache.flink.shaded.netty4.io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)
> at org.apache.flink.shaded.netty4.io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795)
> at org.apache.flink.shaded.netty4.io.netty.channel.epoll.EpollEventLoop.processReady(EpollEventLoop.java:480)
> at org.apache.flink.shaded.netty4.io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:378)
> at org.apache.flink.shaded.netty4.io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989)
> at org.apache.flink.shaded.netty4.io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)
> at java.lang.Thread.run(Thread.java:834)

> Caused by:

[GitHub] [flink-table-store] JingsongLi merged pull request #1: [FLINK-25619] Init flink-table-store repository

JingsongLi merged pull request #1: URL: https://github.com/apache/flink-table-store/pull/1

[GitHub] [flink] flinkbot edited a comment on pull request #17777: [FLINK-24886][core] TimeUtils supports the form of m.

flinkbot edited a comment on pull request #17777: URL: https://github.com/apache/flink/pull/17777#issuecomment-966971402

## CI report:

* b00b49e806baf132df4f13124b98469cef9ebb91 Azure: [FAILURE](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=29288)

Bot commands

The @flinkbot bot supports the following commands:

- `@flinkbot run azure` re-run the last Azure build

[jira] [Closed] (FLINK-25209) SQLClientSchemaRegistryITCase#testReading is broken

[ https://issues.apache.org/jira/browse/FLINK-25209?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jingsong Lee closed FLINK-25209.

Resolution: Fixed

Fixed in master: 2d98608122574a9e56c00012be24824577ff47e6

The test has been re-enabled.

> SQLClientSchemaRegistryITCase#testReading is broken

> ---

>

> Key: FLINK-25209

> URL: https://issues.apache.org/jira/browse/FLINK-25209

> Project: Flink

> Issue Type: Technical Debt

> Components: Connectors / Kafka, Table SQL / Client, Tests

>Affects Versions: 1.13.3

>Reporter: Chesnay Schepler

>Assignee: Qingsheng Ren

>Priority: Blocker

> Labels: pull-request-available

> Fix For: 1.15.0

>

>

> https://dev.azure.com/chesnay/flink/_build/results?buildId=1880=logs=0e31ee24-31a6-528c-a4bf-45cde9b2a14e=ff03a8fa-e84e-5199-efb2-5433077ce8e2

> {code:java}

> Dec 06 11:33:16 [ERROR] Tests run: 2, Failures: 0, Errors: 1, Skipped: 0, Time elapsed: 236.417 s <<< FAILURE! - in org.apache.flink.tests.util.kafka.SQLClientSchemaRegistryITCase
> Dec 06 11:33:16 [ERROR] org.apache.flink.tests.util.kafka.SQLClientSchemaRegistryITCase.testReading Time elapsed: 152.789 s <<< ERROR!
> Dec 06 11:33:16 java.io.IOException: Could not read expected number of messages.
> Dec 06 11:33:16 at org.apache.flink.tests.util.kafka.KafkaContainerClient.readMessages(KafkaContainerClient.java:115)
> Dec 06 11:33:16 at org.apache.flink.tests.util.kafka.SQLClientSchemaRegistryITCase.testReading(SQLClientSchemaRegistryITCase.java:165)

> {code}


[GitHub] [flink-table-store] LadyForest commented on pull request #1: [FLINK-25619] Init flink-table-store repository

LadyForest commented on pull request #1: URL: https://github.com/apache/flink-table-store/pull/1#issuecomment-1010710055 LGTM :)

[GitHub] [flink] JingsongLi merged pull request #18326: [FLINK-25209][tests] Wait until the consuming topic is ready before polling records in KafkaContainerClient#readMessages

JingsongLi merged pull request #18326: URL: https://github.com/apache/flink/pull/18326
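The fix described in the PR title, waiting for a precondition (the consumed topic being ready) before polling, follows a common wait-with-deadline pattern. The Java helper below is a hypothetical sketch of that pattern, not the actual `KafkaContainerClient` code; the name `WaitUtil.waitUntil` is made up for illustration.

```java
import java.time.Duration;
import java.util.concurrent.TimeoutException;
import java.util.function.BooleanSupplier;

// Hypothetical helper, not the actual KafkaContainerClient implementation.
final class WaitUtil {
    /** Polls the condition until it holds, or throws TimeoutException once the timeout has elapsed. */
    static void waitUntil(BooleanSupplier condition, Duration timeout, Duration pollInterval)
            throws InterruptedException, TimeoutException {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (!condition.getAsBoolean()) {
            // Compare via subtraction so the check stays correct if nanoTime overflows.
            if (System.nanoTime() - deadline >= 0) {
                throw new TimeoutException("Condition not met within " + timeout);
            }
            Thread.sleep(pollInterval.toMillis());
        }
    }
}
```

In a test like `SQLClientSchemaRegistryITCase`, the condition would be something along the lines of "the topic is visible to the Kafka admin client", checked before the consumer starts polling, so that a slow topic creation surfaces as an explicit timeout rather than as too few messages read.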

[jira] [Created] (FLINK-25623) TPC-DS end-to-end test (Blink planner) failed on azure due to download file tpcds.idx failed.

Yun Gao created FLINK-25623:

---

Summary: TPC-DS end-to-end test (Blink planner) failed on azure due to download file tpcds.idx failed.

Key: FLINK-25623

URL: https://issues.apache.org/jira/browse/FLINK-25623

Project: Flink

Issue Type: Bug

Components: Build System / Azure Pipelines

Affects Versions: 1.13.5

Reporter: Yun Gao

{code:java}

Jan 12 02:22:14 [WARN] Download file tpcds.idx failed.
Jan 12 02:22:14 Command: download_and_validate tpcds.idx ../target/generator https://raw.githubusercontent.com/ververica/tpc-ds-generators/f5d6c11681637908ce15d697ae683676a5383641/generators/tpcds.idx 376152c9aa150c59a386b148f954c47d linux failed. Retrying...
Jan 12 02:22:19 Command: download_and_validate tpcds.idx ../target/generator https://raw.githubusercontent.com/ververica/tpc-ds-generators/f5d6c11681637908ce15d697ae683676a5383641/generators/tpcds.idx 376152c9aa150c59a386b148f954c47d linux failed 3 times.
Jan 12 02:22:19 [WARN] Download file tpcds.idx failed.
Jan 12 02:22:19 [ERROR] Download and validate data generator files fail, please check the network.
Jan 12 02:22:19 [FAIL] Test script contains errors.
Jan 12 02:22:19 Checking for errors...
Jan 12 02:22:19 No errors in log files.
Jan 12 02:22:19 Checking for exceptions...
Jan 12 02:22:19 No exceptions in log files.
Jan 12 02:22:19 Checking for non-empty .out files...
grep: /home/vsts/work/_temp/debug_files/flink-logs/*.out: No such file or directory
Jan 12 02:22:19 No non-empty .out files.
Jan 12 02:22:19
Jan 12 02:22:19 [FAIL] 'TPC-DS end-to-end test (Blink planner)' failed after 1 minutes and 12 seconds! Test exited with exit code 1
Jan 12 02:22:19

{code}

https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=29284=logs=08866332-78f7-59e4-4f7e-49a56faa3179=7f606211-1454-543c-70ab-c7a028a1ce8c=19463
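Judging only from the log, `download_and_validate` appears to take a file name, a target directory, a URL, an expected MD5 digest and an OS name, and to give up after 3 failed attempts. The Java sketch below illustrates that retry-and-checksum pattern; it is a hypothetical stand-in (the class `DownloadRetry` and its methods are made up), not the shell function used by Flink's end-to-end scripts, and the fetch step is abstracted as a `Supplier<byte[]>` so it runs without network access.

```java
import java.math.BigInteger;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.function.Supplier;

// Hypothetical sketch of a "download and validate" helper, not Flink's e2e script.
final class DownloadRetry {
    /** Lower-case hex MD5 digest of the given bytes. */
    static String md5Hex(byte[] data) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5").digest(data);
            return String.format("%032x", new BigInteger(1, digest));
        } catch (NoSuchAlgorithmException e) {
            // Every Java platform is required to support MD5, so this should not happen.
            throw new IllegalStateException(e);
        }
    }

    /**
     * Calls fetch up to maxAttempts times and returns the first payload whose MD5 matches.
     * A thrown exception and a checksum mismatch both count as a failed attempt.
     */
    static byte[] downloadAndValidate(Supplier<byte[]> fetch, String expectedMd5, int maxAttempts) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                byte[] data = fetch.get();
                if (data != null && md5Hex(data).equalsIgnoreCase(expectedMd5)) {
                    return data;
                }
            } catch (RuntimeException e) {
                // fall through and retry
            }
            if (attempt < maxAttempts) {
                System.err.println("Attempt " + attempt + " failed. Retrying...");
            }
        }
        throw new IllegalStateException("Download failed " + maxAttempts + " times");
    }
}
```

Because every attempt re-validates the checksum, a truncated or otherwise corrupted download is treated the same as a network error, which matches the log's single "failed 3 times" outcome without distinguishing the cause.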


[jira] [Commented] (FLINK-22411) Checkpoint failed caused by Mkdirs failed to create file, the path for Flink state.checkpoints.dir in docker-compose can not work from Flink Operations Playground

[ https://issues.apache.org/jira/browse/FLINK-22411?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17474293#comment-17474293 ]

Serge commented on FLINK-22411:

---

That is really confusing. Maybe you can try `chmod 777 /tmp/flink-checkpoints-directory` [~lvshuang].

> Checkpoint failed caused by Mkdirs failed to create file, the path for Flink state.checkpoints.dir in docker-compose can not work from Flink Operations Playground

> --

>

> Key: FLINK-22411

> URL: https://issues.apache.org/jira/browse/FLINK-22411

> Project: Flink