Re: [PR] [FLINK-35265] Implement FlinkStateSnapshot custom resource [flink-kubernetes-operator]

mateczagany commented on code in PR #821:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/821#discussion_r1691974263

##

flink-kubernetes-operator/src/main/java/org/apache/flink/kubernetes/operator/service/AbstractFlinkService.java:

##

@@ -716,6 +695,48 @@ public CheckpointFetchResult fetchCheckpointInfo(
         }
     }
+    @Override
+    public CheckpointStatsResult fetchCheckpointStats(
+            String jobId, Long checkpointId, Configuration conf) {
+        try (RestClusterClient clusterClient = getClusterClient(conf)) {
+            var checkpointStatusHeaders = CheckpointStatisticDetailsHeaders.getInstance();
+            var parameters = checkpointStatusHeaders.getUnresolvedMessageParameters();
+            parameters.jobPathParameter.resolve(JobID.fromHexString(jobId));
+
+            // This was needed because the parameter is protected
+            var checkpointIdPathParameter =
+                    (CheckpointIdPathParameter) Iterables.getLast(parameters.getPathParameters());
+            checkpointIdPathParameter.resolve(checkpointId);
+
+            var response =
+                    clusterClient.sendRequest(
+                            checkpointStatusHeaders, parameters, EmptyRequestBody.getInstance());
+
+            var stats = response.get();
+            if (stats == null) {
+                throw new IllegalStateException("Checkpoint ID %d for job %s does not exist!");
+            } else if (stats instanceof CheckpointStatistics.CompletedCheckpointStatistics) {
+                return CheckpointStatsResult.completed(
+                        ((CheckpointStatistics.CompletedCheckpointStatistics) stats)
+                                .getExternalPath());
+            } else if (stats instanceof CheckpointStatistics.FailedCheckpointStatistics) {
+                return CheckpointStatsResult.error(
+                        ((CheckpointStatistics.FailedCheckpointStatistics) stats)
+                                .getFailureMessage());
+            } else if (stats instanceof CheckpointStatistics.PendingCheckpointStatistics) {
+                return CheckpointStatsResult.pending();
+            } else {
+                throw new IllegalArgumentException(
+                        String.format(
+                                "Unknown checkpoint statistics result class: %s",
+                                stats.getClass().getSimpleName()));
+            }
+        } catch (Exception e) {
+            LOG.error("Exception while fetching checkpoint statistics", e);

Review Comment:

This commit changed the behaviour so that errors raised while fetching checkpoint stats are ignored, since by that point we have already determined that the checkpoint was successful. In that case we just set an empty path:

https://github.com/apache/flink-kubernetes-operator/pull/821/commits/637b3053b9644b3d6cadd1fb6a05e1f5aab75fa6
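The fallback described in this comment can be sketched roughly as follows. This is a minimal, self-contained illustration and not the operator's actual code: the class `CheckpointPathFallback`, the method `resolvePathOrEmpty`, and the example path are hypothetical names chosen for the sketch, and the real change in the linked commit may be structured differently.

```java
import java.util.concurrent.Callable;

/** Hypothetical sketch of "ignore stats errors and fall back to an empty path". */
final class CheckpointPathFallback {

    /**
     * Runs the supplied lookup and returns the checkpoint path it yields.
     * Any failure is logged and swallowed, and an empty path is returned instead.
     */
    static String resolvePathOrEmpty(Callable<String> statsLookup) {
        try {
            String path = statsLookup.call();
            return path == null ? "" : path;
        } catch (Exception e) {
            // The checkpoint is already known to have succeeded; only its path is unknown.
            System.err.println("Could not fetch checkpoint statistics, using empty path: " + e);
            return "";
        }
    }

    public static void main(String[] args) {
        // Lookup succeeds: the path is passed through unchanged.
        System.out.println("[" + resolvePathOrEmpty(() -> "s3://bucket/chk-42") + "]");
        // Lookup fails: the error is swallowed and an empty path is used.
        System.out.println(
                "[" + resolvePathOrEmpty(() -> {
                    throw new IllegalStateException("REST call failed");
                }) + "]");
    }
}
```

The trade-off is that a caller can no longer tell "path unknown" apart from "lookup failed" without reading the logs, which seems acceptable here because the snapshot's success was established before the lookup.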

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Re: [PR] [FLINK-35736][tests] Add migration test scripts & CI workflows [flink-cdc]

morazow commented on code in PR #3447:

URL: https://github.com/apache/flink-cdc/pull/3447#discussion_r1691956059

##
tools/mig-test/README.md:
##

@@ -0,0 +1,36 @@
+# Flink CDC Migration Test Utilities
+
+## Pipeline Jobs
+### Preparation
+
+1. Install Ruby (macOS has embedded it by default)
+2. (Optional) Run `gem install terminal-table` for better display
+
+### Compile snapshot CDC versions
+3. Set `CDC_SOURCE_HOME` to the root directory of the Flink CDC git repository
+4. Go to `tools/mig-test` and run `ruby prepare_libs.rb` to download released / compile snapshot CDC versions
+
+### Run migration tests
+5. Enter `conf/` and run `docker compose up -d` to start up test containers
+6. Set `FLINK_HOME` to the home directory of Flink
+7. Go back to `tools/mig-test` and run `ruby run_migration_test.rb` to start testing
+
+### Result
+The migration result will be displayed in the console like this:
+
+```
++--------------------------------------------------------------------+
+|                       Migration Test Result                        |
++--------------+-------+-------+-------+--------------+--------------+
+|              | 3.0.0 | 3.0.1 | 3.1.0 | 3.1-SNAPSHOT | 3.2-SNAPSHOT |
+| 3.0.0        | ❓    | ❓    | ❌    | ✅           | ✅           |
+| 3.0.1        |       | ❓    | ❌    | ✅           | ✅           |
+| 3.1.0        |       |       | ✅    | ❌           | ❌           |
+| 3.1-SNAPSHOT |       |       |       | ✅           | ✅           |
+| 3.2-SNAPSHOT |       |       |       |              | ✅           |
++--------------+-------+-------+-------+--------------+--------------+
+```

Review Comment:

Could we add the meaning of `?` here?

Re: [PR] [FLINK-35265] Implement FlinkStateSnapshot custom resource [flink-kubernetes-operator]

mateczagany commented on code in PR #821:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/821#discussion_r1691962117

##

flink-kubernetes-operator/src/main/java/org/apache/flink/kubernetes/operator/utils/FlinkStateSnapshotUtils.java:

##

@@ -0,0 +1,382 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.kubernetes.operator.utils;

+

+import org.apache.flink.autoscaler.utils.DateTimeUtils;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.kubernetes.operator.api.AbstractFlinkResource;

+import org.apache.flink.kubernetes.operator.api.CrdConstants;

+import org.apache.flink.kubernetes.operator.api.FlinkStateSnapshot;

+import org.apache.flink.kubernetes.operator.api.spec.CheckpointSpec;

+import

org.apache.flink.kubernetes.operator.api.spec.FlinkStateSnapshotReference;

+import org.apache.flink.kubernetes.operator.api.spec.FlinkStateSnapshotSpec;

+import org.apache.flink.kubernetes.operator.api.spec.JobReference;

+import org.apache.flink.kubernetes.operator.api.spec.SavepointSpec;

+import org.apache.flink.kubernetes.operator.api.status.CheckpointType;

+import org.apache.flink.kubernetes.operator.api.status.SavepointFormatType;

+import org.apache.flink.kubernetes.operator.api.status.SnapshotTriggerType;

+import org.apache.flink.kubernetes.operator.config.FlinkOperatorConfiguration;

+import

org.apache.flink.kubernetes.operator.config.KubernetesOperatorConfigOptions;

+import org.apache.flink.kubernetes.operator.reconciler.ReconciliationUtils;

+import org.apache.flink.kubernetes.operator.reconciler.SnapshotType;

+

+import io.fabric8.kubernetes.api.model.ObjectMeta;

+import io.fabric8.kubernetes.client.KubernetesClient;

+import org.apache.commons.lang3.StringUtils;

+

+import javax.annotation.Nullable;

+

+import java.time.Instant;

+import java.util.UUID;

+

+import static

org.apache.flink.kubernetes.operator.api.status.FlinkStateSnapshotStatus.State.ABANDONED;

+import static

org.apache.flink.kubernetes.operator.api.status.FlinkStateSnapshotStatus.State.COMPLETED;

+import static

org.apache.flink.kubernetes.operator.api.status.FlinkStateSnapshotStatus.State.IN_PROGRESS;

+import static

org.apache.flink.kubernetes.operator.api.status.FlinkStateSnapshotStatus.State.TRIGGER_PENDING;

+import static

org.apache.flink.kubernetes.operator.config.KubernetesOperatorConfigOptions.SNAPSHOT_RESOURCE_ENABLED;

+import static

org.apache.flink.kubernetes.operator.reconciler.SnapshotType.CHECKPOINT;

+import static

org.apache.flink.kubernetes.operator.reconciler.SnapshotType.SAVEPOINT;

+

+/** Utilities class for FlinkStateSnapshot resources. */

+public class FlinkStateSnapshotUtils {

+

+/**

+ * From a snapshot reference, return its snapshot path. If a {@link

FlinkStateSnapshot} is

+ * referenced, it will be retrieved from Kubernetes.

+ *

+ * @param kubernetesClient kubernetes client

+ * @param snapshotRef snapshot reference

+ * @return found savepoint path

+ */

+public static String getValidatedFlinkStateSnapshotPath(

+KubernetesClient kubernetesClient, FlinkStateSnapshotReference

snapshotRef) {

+if (StringUtils.isNotBlank(snapshotRef.getPath())) {

+return snapshotRef.getPath();

+}

+

+if (StringUtils.isBlank(snapshotRef.getName())) {

+throw new IllegalArgumentException(

+String.format("Invalid snapshot name: %s",

snapshotRef.getName()));

+}

+

+var result =

+snapshotRef.getNamespace() == null

+? kubernetesClient

+.resources(FlinkStateSnapshot.class)

+.withName(snapshotRef.getName())

+.get()

+: kubernetesClient

+.resources(FlinkStateSnapshot.class)

+.inNamespace(snapshotRef.getNamespace())

+.withName(snapshotRef.getName())

+.get();

+

+if (result == null) {

+throw new IllegalStateException(

+String.format(

+

Re: [PR] [FLINK-30481][FLIP-277] GlueCatalog Implementation [flink-connector-aws]

Samrat002 commented on code in PR #47:

URL:

https://github.com/apache/flink-connector-aws/pull/47#discussion_r1691963997

##

flink-catalog-aws/flink-catalog-aws-glue/src/main/java/org/apache/flink/table/catalog/glue/util/GlueUtils.java:

##

@@ -0,0 +1,384 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.table.catalog.glue.util;

+

+import org.apache.flink.annotation.Internal;

+import org.apache.flink.table.api.Schema;

+import org.apache.flink.table.api.ValidationException;

+import org.apache.flink.table.catalog.CatalogBaseTable;

+import org.apache.flink.table.catalog.CatalogDatabase;

+import org.apache.flink.table.catalog.CatalogDatabaseImpl;

+import org.apache.flink.table.catalog.CatalogFunction;

+import org.apache.flink.table.catalog.CatalogTable;

+import org.apache.flink.table.catalog.FunctionLanguage;

+import org.apache.flink.table.catalog.ObjectPath;

+import org.apache.flink.table.catalog.exceptions.CatalogException;

+import org.apache.flink.table.catalog.glue.GlueCatalogOptions;

+import org.apache.flink.table.catalog.glue.constants.GlueCatalogConstants;

+

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+import software.amazon.awssdk.services.glue.model.Column;

+import software.amazon.awssdk.services.glue.model.Database;

+import software.amazon.awssdk.services.glue.model.GlueResponse;

+import software.amazon.awssdk.services.glue.model.Table;

+import software.amazon.awssdk.services.glue.model.UserDefinedFunction;

+

+import java.util.Arrays;

+import java.util.Collection;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Locale;

+import java.util.Map;

+import java.util.Set;

+import java.util.stream.Collectors;

+

+import static org.apache.flink.util.Preconditions.checkArgument;

+import static org.apache.flink.util.Preconditions.checkNotNull;

+import static org.apache.flink.util.StringUtils.isNullOrWhitespaceOnly;

+

+/** Utilities related glue Operation. */

+@Internal

+public class GlueUtils {

+

+private static final Logger LOG = LoggerFactory.getLogger(GlueUtils.class);

+

+/**

+ * Glue supports lowercase naming convention.

+ *

+ * @param name fully qualified name.

+ * @return modified name according to glue convention.

+ */

+public static String getGlueConventionalName(String name) {

+

+return name.toLowerCase(Locale.ROOT);

+}

+

+/**

+ * Extract location from database properties if present and remove

location from properties.

+ * fallback to create default location if not present

+ *

+ * @param databaseProperties database properties.

+ * @param databaseName fully qualified name for database.

+ * @return location for database.

+ */

+public static String extractDatabaseLocation(

+final Map databaseProperties,

+final String databaseName,

+final String catalogPath) {

+if (databaseProperties.containsKey(GlueCatalogConstants.LOCATION_URI))

{

+return

databaseProperties.remove(GlueCatalogConstants.LOCATION_URI);

+} else {

+LOG.info("No location URI Set. Using Catalog Path as default");

+return catalogPath + GlueCatalogConstants.LOCATION_SEPARATOR +

databaseName;

+}

+}

+

+/**

+ * Extract location from database properties if present and remove

location from properties.

+ * fallback to create default location if not present

+ *

+ * @param tableProperties table properties.

+ * @param tablePath fully qualified object for table.

+ * @return location for table.

+ */

+public static String extractTableLocation(

+final Map tableProperties,

+final ObjectPath tablePath,

+final String catalogPath) {

+if (tableProperties.containsKey(GlueCatalogConstants.LOCATION_URI)) {

+return tableProperties.remove(GlueCatalogConstants.LOCATION_URI);

+} else {

+return catalogPath

++ GlueCatalogConstants.LOCATION_SEPARATOR

++ tablePath.getDatabaseName()

++ GlueCatalogConstants.LOCATION_SEPARATOR

++

Re: [PR] [FLINK-35305]Amazon SQS Sink Connector [flink-connector-aws]

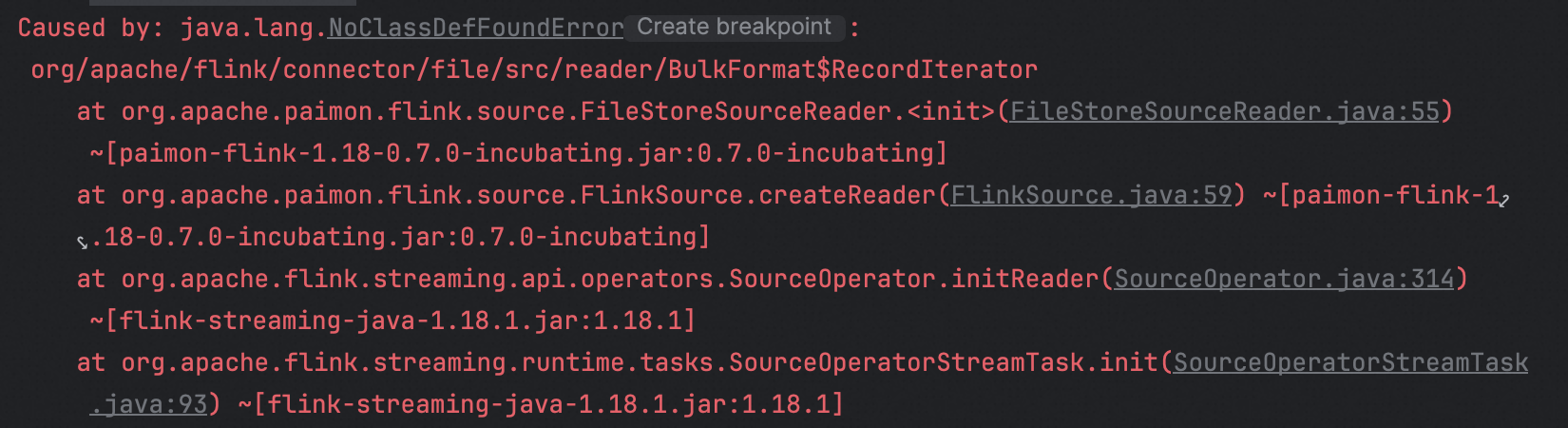

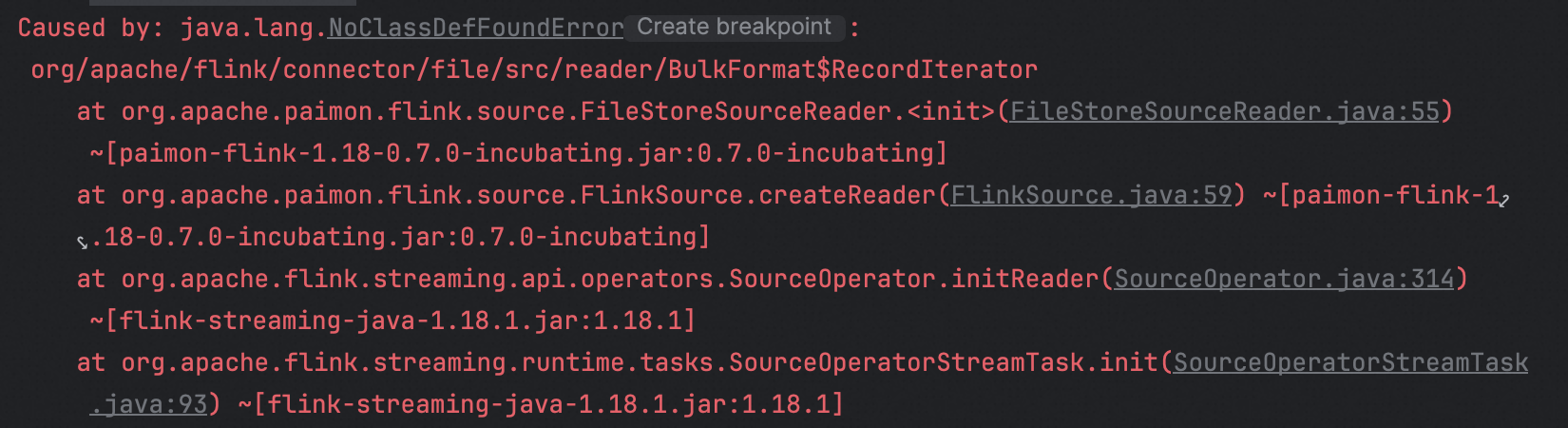

19priyadhingra commented on PR #141:

URL: https://github.com/apache/flink-connector-aws/pull/141#issuecomment-2251149507

> @19priyadhingra The tests seems to be failing. Can we please take a look?
>
> Also - it would be good if we squash the commits!

1. Hi Hong, I dug deeper into the only failing test, elementConverterWillOpenSerializationSchema. Failure logs: https://paste.amazon.com/show/dhipriya/1721770192

It complains that TestSinkInitContext cannot be cast to sink2.Sink$InitContext. I understand why it fails, but I am not sure how to fix it. The issue started appearing after 1.19.1, where TestSinkInitContext was changed. One easy option would be to remove this test if it does not add much value; the other fix might require a change in flink-connector-base, where we would have to keep the old TestSinkInitContext. For now I have removed the test; please let me know your thoughts.

The new TestSinkInitContext ([https://github.com/apache/flink/blob/release-1.19.1/flink-connectors/flink-connect[…]pache/flink/connector/base/sink/writer/TestSinkInitContext.java](https://github.com/apache/flink/blob/release-1.19.1/flink-connectors/flink-connector-base/src/test/java/org/apache/flink/connector/base/sink/writer/TestSinkInitContext.java#L51)) implements WriterInitContext, whereas the old TestSinkInitContext ([https://github.com/apache/flink/blob/release-1.18.1/flink-connectors/flink-connect[…]pache/flink/connector/base/sink/writer/TestSinkInitContext.java](https://github.com/apache/flink/blob/release-1.18.1/flink-connectors/flink-connector-base/src/test/java/org/apache/flink/connector/base/sink/writer/TestSinkInitContext.java#L47)) implements Sink.InitContext.

The new one implements [WriterInitContext](https://github.com/apache/flink/blob/release-1.19.1/flink-core/src/main/java/org/apache/flink/api/connector/sink2/WriterInitContext.java#L35), which extends org.apache.flink.api.connector.sink2.InitContext, not the required org.apache.flink.api.connector.sink2.Sink.InitContext.

2. Squashed the commits.
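To make the cast failure concrete, here is a minimal sketch that reproduces the same kind of `ClassCastException` with stand-in types. Everything in it is hypothetical: the nested interfaces only mimic the relationship described in the comment (the new test context implements `WriterInitContext`, which extends `InitContext` but not `Sink.InitContext`), and `Sink.InitContext` is modelled as an unrelated interface purely for simplicity. None of this is Flink's real code.

```java
/** Hypothetical stand-ins for the Flink types named above; not the real interfaces. */
final class InitContextCastSketch {

    /** Stand-in for org.apache.flink.api.connector.sink2.InitContext. */
    interface InitContext {}

    /** Stand-in for Sink.InitContext, modelled as a separate interface for simplicity. */
    interface SinkInitContext {}

    /** Stand-in for WriterInitContext, which extends InitContext only. */
    interface WriterInitContext extends InitContext {}

    /** Mimics the 1.18.1 test context, which implements Sink.InitContext. */
    static final class OldTestSinkInitContext implements SinkInitContext {}

    /** Mimics the 1.19.1 test context, which implements WriterInitContext. */
    static final class NewTestSinkInitContext implements WriterInitContext {}

    /** Mimics code under test that still expects the old context type. */
    static SinkInitContext requireSinkInitContext(Object context) {
        // Compiles for any Object, but fails at runtime for the new context.
        return (SinkInitContext) context;
    }

    public static void main(String[] args) {
        // The old context satisfies the cast.
        requireSinkInitContext(new OldTestSinkInitContext());
        System.out.println("old context accepted");

        // The new context does not implement SinkInitContext, so the cast throws.
        try {
            requireSinkInitContext(new NewTestSinkInitContext());
        } catch (ClassCastException e) {
            System.out.println("new context rejected: " + e.getMessage());
        }
    }
}
```

Under these assumptions, the options are the ones the comment lists: change the code that performs the cast, keep a test context that still implements the old interface, or drop the test.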

Re: [PR] [FLINK-35265] Implement FlinkStateSnapshot custom resource [flink-kubernetes-operator]

mateczagany commented on code in PR #821:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/821#discussion_r1691933742

##

flink-kubernetes-operator/src/main/java/org/apache/flink/kubernetes/operator/reconciler/snapshot/StateSnapshotReconciler.java:

##

@@ -0,0 +1,230 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.kubernetes.operator.reconciler.snapshot;

+

+import org.apache.flink.configuration.CheckpointingOptions;

+import org.apache.flink.kubernetes.operator.api.FlinkDeployment;

+import org.apache.flink.kubernetes.operator.api.FlinkStateSnapshot;

+import org.apache.flink.kubernetes.operator.api.spec.FlinkStateSnapshotSpec;

+import

org.apache.flink.kubernetes.operator.controller.FlinkStateSnapshotContext;

+import org.apache.flink.kubernetes.operator.exception.ReconciliationException;

+import org.apache.flink.kubernetes.operator.reconciler.ReconciliationUtils;

+import

org.apache.flink.kubernetes.operator.service.FlinkResourceContextFactory;

+import org.apache.flink.kubernetes.operator.utils.EventRecorder;

+import org.apache.flink.kubernetes.operator.utils.FlinkStateSnapshotUtils;

+import org.apache.flink.kubernetes.operator.utils.SnapshotUtils;

+import org.apache.flink.util.Preconditions;

+

+import io.javaoperatorsdk.operator.api.reconciler.DeleteControl;

+import lombok.RequiredArgsConstructor;

+import org.apache.commons.lang3.ObjectUtils;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.util.Optional;

+

+import static

org.apache.flink.kubernetes.operator.api.status.FlinkStateSnapshotStatus.State.TRIGGER_PENDING;

+

+/** The reconciler for the {@link

org.apache.flink.kubernetes.operator.api.FlinkStateSnapshot}. */

+@RequiredArgsConstructor

+public class StateSnapshotReconciler {

+

+private static final Logger LOG =

LoggerFactory.getLogger(StateSnapshotReconciler.class);

+

+private final FlinkResourceContextFactory ctxFactory;

+private final EventRecorder eventRecorder;

+

+public void reconcile(FlinkStateSnapshotContext ctx) {

+var resource = ctx.getResource();

+

+var savepointState = resource.getStatus().getState();

+if (!TRIGGER_PENDING.equals(savepointState)) {

+return;

+}

+

+if (resource.getSpec().isSavepoint()

+&& resource.getSpec().getSavepoint().getAlreadyExists()) {

+LOG.info(

+"Snapshot {} is marked as completed in spec, skipping

triggering savepoint.",

+resource.getMetadata().getName());

+

+FlinkStateSnapshotUtils.snapshotSuccessful(

+resource, resource.getSpec().getSavepoint().getPath(),

true);

+return;

+}

+

+if (FlinkStateSnapshotUtils.abandonSnapshotIfJobNotRunning(

+ctx.getKubernetesClient(),

+ctx.getResource(),

+ctx.getSecondaryResource().orElse(null),

+eventRecorder)) {

+return;

+}

+

+var jobId =

ctx.getSecondaryResource().orElseThrow().getStatus().getJobStatus().getJobId();

+

+Optional triggerIdOpt;

+try {

+triggerIdOpt = triggerCheckpointOrSavepoint(resource.getSpec(),

ctx, jobId);

+} catch (Exception e) {

+LOG.error("Failed to trigger snapshot for resource {}",

ctx.getResource(), e);

+throw new ReconciliationException(e);

+}

+

+if (triggerIdOpt.isEmpty()) {

+LOG.warn("Failed to trigger snapshot {}",

resource.getMetadata().getName());

+return;

+}

+

+FlinkStateSnapshotUtils.snapshotInProgress(resource,

triggerIdOpt.get());

+}

+

+public DeleteControl cleanup(FlinkStateSnapshotContext ctx) throws

Exception {

+var resource = ctx.getResource();

+var state = resource.getStatus().getState();

+var resourceName = resource.getMetadata().getName();

+LOG.info("Cleaning up resource {}...", resourceName);

+

+if (resource.getSpec().isCheckpoint()) {

+return DeleteControl.defaultDelete();

+}

+if (!resource.getSpec().getSavepoint().getDisposeOnDelete()) {

+return

Re: [PR] [FLINK-35265] Implement FlinkStateSnapshot custom resource [flink-kubernetes-operator]

anupamaggarwal commented on code in PR #821:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/821#discussion_r1691868012

##

flink-kubernetes-operator/src/main/java/org/apache/flink/kubernetes/operator/utils/FlinkStateSnapshotUtils.java:

##

@@ -0,0 +1,382 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.kubernetes.operator.utils;

+

+import org.apache.flink.autoscaler.utils.DateTimeUtils;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.kubernetes.operator.api.AbstractFlinkResource;

+import org.apache.flink.kubernetes.operator.api.CrdConstants;

+import org.apache.flink.kubernetes.operator.api.FlinkStateSnapshot;

+import org.apache.flink.kubernetes.operator.api.spec.CheckpointSpec;

+import

org.apache.flink.kubernetes.operator.api.spec.FlinkStateSnapshotReference;

+import org.apache.flink.kubernetes.operator.api.spec.FlinkStateSnapshotSpec;

+import org.apache.flink.kubernetes.operator.api.spec.JobReference;

+import org.apache.flink.kubernetes.operator.api.spec.SavepointSpec;

+import org.apache.flink.kubernetes.operator.api.status.CheckpointType;

+import org.apache.flink.kubernetes.operator.api.status.SavepointFormatType;

+import org.apache.flink.kubernetes.operator.api.status.SnapshotTriggerType;

+import org.apache.flink.kubernetes.operator.config.FlinkOperatorConfiguration;

+import

org.apache.flink.kubernetes.operator.config.KubernetesOperatorConfigOptions;

+import org.apache.flink.kubernetes.operator.reconciler.ReconciliationUtils;

+import org.apache.flink.kubernetes.operator.reconciler.SnapshotType;

+

+import io.fabric8.kubernetes.api.model.ObjectMeta;

+import io.fabric8.kubernetes.client.KubernetesClient;

+import org.apache.commons.lang3.StringUtils;

+

+import javax.annotation.Nullable;

+

+import java.time.Instant;

+import java.util.UUID;

+

+import static

org.apache.flink.kubernetes.operator.api.status.FlinkStateSnapshotStatus.State.ABANDONED;

+import static

org.apache.flink.kubernetes.operator.api.status.FlinkStateSnapshotStatus.State.COMPLETED;

+import static

org.apache.flink.kubernetes.operator.api.status.FlinkStateSnapshotStatus.State.IN_PROGRESS;

+import static

org.apache.flink.kubernetes.operator.api.status.FlinkStateSnapshotStatus.State.TRIGGER_PENDING;

+import static

org.apache.flink.kubernetes.operator.config.KubernetesOperatorConfigOptions.SNAPSHOT_RESOURCE_ENABLED;

+import static

org.apache.flink.kubernetes.operator.reconciler.SnapshotType.CHECKPOINT;

+import static

org.apache.flink.kubernetes.operator.reconciler.SnapshotType.SAVEPOINT;

+

+/** Utilities class for FlinkStateSnapshot resources. */

+public class FlinkStateSnapshotUtils {

+

+/**

+ * From a snapshot reference, return its snapshot path. If a {@link

FlinkStateSnapshot} is

+ * referenced, it will be retrieved from Kubernetes.

+ *

+ * @param kubernetesClient kubernetes client

+ * @param snapshotRef snapshot reference

+ * @return found savepoint path

+ */

+public static String getValidatedFlinkStateSnapshotPath(

+KubernetesClient kubernetesClient, FlinkStateSnapshotReference

snapshotRef) {

+if (StringUtils.isNotBlank(snapshotRef.getPath())) {

+return snapshotRef.getPath();

+}

+

+if (StringUtils.isBlank(snapshotRef.getName())) {

+throw new IllegalArgumentException(

+String.format("Invalid snapshot name: %s",

snapshotRef.getName()));

+}

+

+var result =

+snapshotRef.getNamespace() == null

+? kubernetesClient

+.resources(FlinkStateSnapshot.class)

+.withName(snapshotRef.getName())

+.get()

+: kubernetesClient

+.resources(FlinkStateSnapshot.class)

+.inNamespace(snapshotRef.getNamespace())

+.withName(snapshotRef.getName())

+.get();

+

+if (result == null) {

+throw new IllegalStateException(

+String.format(

+

Re: [PR] [FLINK-35265] Implement FlinkStateSnapshot custom resource [flink-kubernetes-operator]

anupamaggarwal commented on code in PR #821:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/821#discussion_r1691872393

##

flink-kubernetes-operator/src/main/java/org/apache/flink/kubernetes/operator/reconciler/snapshot/StateSnapshotReconciler.java:

##

@@ -0,0 +1,230 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.kubernetes.operator.reconciler.snapshot;

+

+import org.apache.flink.configuration.CheckpointingOptions;

+import org.apache.flink.kubernetes.operator.api.FlinkDeployment;

+import org.apache.flink.kubernetes.operator.api.FlinkStateSnapshot;

+import org.apache.flink.kubernetes.operator.api.spec.FlinkStateSnapshotSpec;

+import

org.apache.flink.kubernetes.operator.controller.FlinkStateSnapshotContext;

+import org.apache.flink.kubernetes.operator.exception.ReconciliationException;

+import org.apache.flink.kubernetes.operator.reconciler.ReconciliationUtils;

+import

org.apache.flink.kubernetes.operator.service.FlinkResourceContextFactory;

+import org.apache.flink.kubernetes.operator.utils.EventRecorder;

+import org.apache.flink.kubernetes.operator.utils.FlinkStateSnapshotUtils;

+import org.apache.flink.kubernetes.operator.utils.SnapshotUtils;

+import org.apache.flink.util.Preconditions;

+

+import io.javaoperatorsdk.operator.api.reconciler.DeleteControl;

+import lombok.RequiredArgsConstructor;

+import org.apache.commons.lang3.ObjectUtils;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.util.Optional;

+

+import static

org.apache.flink.kubernetes.operator.api.status.FlinkStateSnapshotStatus.State.TRIGGER_PENDING;

+

+/** The reconciler for the {@link

org.apache.flink.kubernetes.operator.api.FlinkStateSnapshot}. */

+@RequiredArgsConstructor

+public class StateSnapshotReconciler {

+

+private static final Logger LOG =

LoggerFactory.getLogger(StateSnapshotReconciler.class);

+

+private final FlinkResourceContextFactory ctxFactory;

+private final EventRecorder eventRecorder;

+

+public void reconcile(FlinkStateSnapshotContext ctx) {

+var resource = ctx.getResource();

+

+var savepointState = resource.getStatus().getState();

+if (!TRIGGER_PENDING.equals(savepointState)) {

+return;

+}

+

+if (resource.getSpec().isSavepoint()

+&& resource.getSpec().getSavepoint().getAlreadyExists()) {

+LOG.info(

+"Snapshot {} is marked as completed in spec, skipping

triggering savepoint.",

+resource.getMetadata().getName());

+

+FlinkStateSnapshotUtils.snapshotSuccessful(

+resource, resource.getSpec().getSavepoint().getPath(),

true);

+return;

+}

+

+if (FlinkStateSnapshotUtils.abandonSnapshotIfJobNotRunning(

+ctx.getKubernetesClient(),

+ctx.getResource(),

+ctx.getSecondaryResource().orElse(null),

+eventRecorder)) {

+return;

+}

+

+var jobId =

ctx.getSecondaryResource().orElseThrow().getStatus().getJobStatus().getJobId();

+

+Optional triggerIdOpt;

+try {

+triggerIdOpt = triggerCheckpointOrSavepoint(resource.getSpec(),

ctx, jobId);

+} catch (Exception e) {

+LOG.error("Failed to trigger snapshot for resource {}",

ctx.getResource(), e);

+throw new ReconciliationException(e);

+}

+

+if (triggerIdOpt.isEmpty()) {

+LOG.warn("Failed to trigger snapshot {}",

resource.getMetadata().getName());

+return;

+}

+

+FlinkStateSnapshotUtils.snapshotInProgress(resource,

triggerIdOpt.get());

+}

+

+public DeleteControl cleanup(FlinkStateSnapshotContext ctx) throws

Exception {

+var resource = ctx.getResource();

+var state = resource.getStatus().getState();

+var resourceName = resource.getMetadata().getName();

+LOG.info("Cleaning up resource {}...", resourceName);

+

+if (resource.getSpec().isCheckpoint()) {

+return DeleteControl.defaultDelete();

+}

+if (!resource.getSpec().getSavepoint().getDisposeOnDelete()) {

+return


+}

+

+var jobId =

ctx.getSecondaryResource().orElseThrow().getStatus().getJobStatus().getJobId();

+

+Optional triggerIdOpt;

+try {

+triggerIdOpt = triggerCheckpointOrSavepoint(resource.getSpec(),

ctx, jobId);

+} catch (Exception e) {

+LOG.error("Failed to trigger snapshot for resource {}",

ctx.getResource(), e);

+throw new ReconciliationException(e);

+}

+

+if (triggerIdOpt.isEmpty()) {

+LOG.warn("Failed to trigger snapshot {}",

resource.getMetadata().getName());

+return;

+}

+

+FlinkStateSnapshotUtils.snapshotInProgress(resource,

triggerIdOpt.get());

+}

+

+public DeleteControl cleanup(FlinkStateSnapshotContext ctx) throws

Exception {

+var resource = ctx.getResource();

+var state = resource.getStatus().getState();

+var resourceName = resource.getMetadata().getName();

+LOG.info("Cleaning up resource {}...", resourceName);

+

+if (resource.getSpec().isCheckpoint()) {

+return DeleteControl.defaultDelete();

+}

+if (!resource.getSpec().getSavepoint().getDisposeOnDelete()) {

+return

Re: [PR] [FLINK-35897][Checkpoint] Cleanup completed resource when checkpoint is canceled [flink]

flinkbot commented on PR #25120: URL: https://github.com/apache/flink/pull/25120#issuecomment-2250449661 ## CI report: * 5b0ca74d3951873a800d5b3ef0d23736f6fab755 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[PR] [FLINK-35897][Checkpoint] Cleanup completed resource when checkpoint is canceled [flink]

ljz2051 opened a new pull request, #25120:
URL: https://github.com/apache/flink/pull/25120

## What is the purpose of the change

This pull request cleans up the completed checkpoint resources when the job checkpoint is canceled.

## Brief change log

- When the asynchronous snapshot thread completes a checkpoint, clean up the completed checkpoint if it finds that the checkpoint has been canceled.

## Verifying this change

This change is already covered by existing tests, such as AsyncSnapshotCallableTest.

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): (no)
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no)
- The serializers: (no)
- The runtime per-record code paths (performance sensitive): (no)
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: (yes)
- The S3 file system connector: (no)

## Documentation

- Does this pull request introduce a new feature? no
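
For readers who want a concrete picture of the change log entry above, here is a minimal sketch of the "clean up on completion if canceled" pattern. It is not the code from this PR: `SketchSnapshotTask` and `SketchSnapshotResult` are hypothetical stand-ins, and the real `AsyncSnapshotCallable` may be structured quite differently.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical stand-in for a snapshot result that owns resources (files, handles). */
final class SketchSnapshotResult {
    private final AtomicBoolean discarded = new AtomicBoolean(false);

    /** Releases whatever the snapshot holds; here it only records the fact. */
    void discard() {
        discarded.set(true);
    }

    boolean isDiscarded() {
        return discarded.get();
    }
}

/** Hypothetical async snapshot task; an assumption for illustration only. */
final class SketchSnapshotTask implements Callable<SketchSnapshotResult> {
    private final AtomicBoolean canceled = new AtomicBoolean(false);

    /** Marks the checkpoint as canceled; may happen while call() is running. */
    void cancel() {
        canceled.set(true);
    }

    @Override
    public SketchSnapshotResult call() {
        // Pretend the snapshot has just been completed.
        SketchSnapshotResult result = new SketchSnapshotResult();
        if (canceled.get()) {
            // The checkpoint was canceled in the meantime, so nobody will
            // consume this result: release its resources right here.
            result.discard();
        }
        return result;
    }
}
```

The point of the check at the end of `call()` is that a cancellation arriving after the work has started would otherwise leave a completed but unreferenced snapshot behind.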

Re: [PR] [FLINK-35885][table] Ignore advancing window processor by watermark in window agg based on proctime [flink]

flinkbot commented on PR #25119: URL: https://github.com/apache/flink/pull/25119#issuecomment-2250260386 ## CI report: * f529368b2ff98c7a50d5e790c9b9ca879ca200a8 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build

[PR] [FLINK-35885][table] Ignore advancing window processor by watermark in window agg based on proctime [flink]

xuyangzhong opened a new pull request, #25119:
URL: https://github.com/apache/flink/pull/25119

## What is the purpose of the change

This PR tries to ignore the advancement of the window processor caused by watermarks in proctime window aggregation.

## Brief change log

- *Ignore the advancement of the window processor caused by watermark in proctime window agg*
- *Add harness test for this bugfix*

## Verifying this change

A harness test has been added to verify this PR.

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): no
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no
- The serializers: no
- The runtime per-record code paths (performance sensitive): no
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: no
- The S3 file system connector: no

## Documentation

- Does this pull request introduce a new feature? no
- If yes, how is the feature documented?

Re: [PR] [FLINK-35282][FLINK-35520] PyFlink Support for Apache Beam > 2.49 [flink]

xaniasd commented on PR #24908: URL: https://github.com/apache/flink/pull/24908#issuecomment-2250200976 Hi there @snuyanzin @hlteoh37, are you actively working on this PR? Is there any way I could help with this?

Re: [PR] [FLINK-35894] Add Elasticsearch Sink Connector for Flink CDC Pipeline [flink-cdc]

lvyanquan commented on PR #3495: URL: https://github.com/apache/flink-cdc/pull/3495#issuecomment-2250180097 Thanks @proletarians for this contribution, left some comments.

Re: [PR] [FLINK-35894] Add Elasticsearch Sink Connector for Flink CDC Pipeline [flink-cdc]

lvyanquan commented on code in PR #3495:

URL: https://github.com/apache/flink-cdc/pull/3495#discussion_r1691213209

##

flink-cdc-connect/flink-cdc-pipeline-connectors/flink-cdc-pipeline-connector-elasticsearch/pom.xml:

##

@@ -0,0 +1,211 @@

+<project xmlns="http://maven.apache.org/POM/4.0.0"

+ xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

+ xsi:schemaLocation="http://maven.apache.org/POM/4.0.0

http://maven.apache.org/xsd/maven-4.0.0.xsd">

+

+<modelVersion>4.0.0</modelVersion>

+

+<parent>

+<groupId>org.apache.flink</groupId>

+<artifactId>flink-cdc-pipeline-connectors</artifactId>

+<version>3.2-SNAPSHOT</version>

Review Comment:

please use `${revision}` like other connectors.

##

flink-cdc-connect/flink-cdc-pipeline-connectors/flink-cdc-pipeline-connector-elasticsearch/src/main/java/org/apache/flink/cdc/connectors/elasticsearch/serializer/ElasticsearchEventSerializer.java:

##

@@ -0,0 +1,230 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.cdc.connectors.elasticsearch.serializer;

+

+import org.apache.flink.api.connector.sink2.Sink;

+import org.apache.flink.api.connector.sink2.SinkWriter;

+import org.apache.flink.cdc.common.data.RecordData;

+import org.apache.flink.cdc.common.event.*;

+import org.apache.flink.cdc.common.schema.Column;

+import org.apache.flink.cdc.common.schema.Schema;

+import org.apache.flink.cdc.common.types.*;

+import org.apache.flink.cdc.common.utils.Preconditions;

+import org.apache.flink.cdc.common.utils.SchemaUtils;

+import org.apache.flink.connector.base.sink.writer.ElementConverter;

+

+import co.elastic.clients.elasticsearch.core.bulk.BulkOperationVariant;

+import co.elastic.clients.elasticsearch.core.bulk.DeleteOperation;

+import co.elastic.clients.elasticsearch.core.bulk.IndexOperation;

+import com.fasterxml.jackson.core.JsonProcessingException;

+import com.fasterxml.jackson.databind.ObjectMapper;

+

+import java.io.IOException;

+import java.time.ZoneId;

+import java.time.format.DateTimeFormatter;

+import java.util.Arrays;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+import java.util.stream.Collectors;

+

+/** A serializer for Event to BulkOperationVariant. */

+public class ElasticsearchEventSerializer implements ElementConverter<Event, BulkOperationVariant> {

+private final ObjectMapper objectMapper = new ObjectMapper();

+private final Map<TableId, Schema> schemaMaps = new HashMap<>();

+

+/** Format DATE type data. */

+public static final DateTimeFormatter DATE_FORMATTER =

+DateTimeFormatter.ofPattern("yyyy-MM-dd");

+

+/** Format timestamp-related type data. */

+public static final DateTimeFormatter DATE_TIME_FORMATTER =

+DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss.SSSSSS");

+

+/** ZoneId from pipeline config to support timestamp with local time zone.

*/

+private final ZoneId pipelineZoneId;

+

+public ElasticsearchEventSerializer(ZoneId zoneId) {

+this.pipelineZoneId = zoneId;

+}

+

+@Override

+public BulkOperationVariant apply(Event event, SinkWriter.Context context)

{

+try {

+if (event instanceof DataChangeEvent) {

+return applyDataChangeEvent((DataChangeEvent) event);

+} else if (event instanceof SchemaChangeEvent) {

+IndexOperation> indexOperation =

+applySchemaChangeEvent((SchemaChangeEvent) event);

+if (indexOperation != null) {

+return indexOperation;

+}

+}

+} catch (IOException e) {

+throw new RuntimeException("Failed to serialize event", e);

+}

+return null;

+}

+

+private IndexOperation> applySchemaChangeEvent(

+SchemaChangeEvent schemaChangeEvent) throws IOException {

+TableId tableId = schemaChangeEvent.tableId();

+if (schemaChangeEvent instanceof CreateTableEvent) {

+Schema schema = ((CreateTableEvent) schemaChangeEvent).getSchema();

+schemaMaps.put(tableId, schema);

+return createSchemaIndexOperation(tableId, schema);

+} else if (schemaChangeEvent instanceof AddColumnEvent

+|| schemaChangeEvent instanceof DropColumnEvent) {

+if

Re: [PR] [FLINK-35868][cdc-connector][mongodb] Bump dependency version to support MongoDB 7.0 [flink-cdc]

leonardBang merged PR #3489: URL: https://github.com/apache/flink-cdc/pull/3489

Re: [PR] [FLINK-35391][cdc-connector][paimon] Bump dependency of Paimon Pipeline connector to 0.8.0 [flink-cdc]

beryllw commented on PR #3335: URL: https://github.com/apache/flink-cdc/pull/3335#issuecomment-2249967829 @leonardBang It seems that the failure of the unit CI has nothing to do with Paimon's changes.

Re: [PR] [FLINK-35016] catalog changes for model resource [flink]

twalthr commented on code in PR #25036:

URL: https://github.com/apache/flink/pull/25036#discussion_r1690005374

##

flink-table/flink-table-common/src/main/java/org/apache/flink/table/catalog/Catalog.java:

##

@@ -790,4 +792,126 @@ void alterPartitionColumnStatistics(

CatalogColumnStatistics columnStatistics,

boolean ignoreIfNotExists)

throws PartitionNotExistException, CatalogException;

+

+// -- models --

+

+/**

+ * Get names of all tables models under this database. An empty list is

returned if none exists.

+ *

+ * @return a list of the names of all models in this database

+ * @throws DatabaseNotExistException if the database does not exist

+ * @throws CatalogException in case of any runtime exception

+ */

+default List listModels(String databaseName)

+throws DatabaseNotExistException, CatalogException {

+throw new UnsupportedOperationException(

+String.format("listModel(String) is not implemented for %s.",

this.getClass()));

Review Comment:

Let's return an empty list instead. If possible we should avoid errors to

make the system more robust. At least on the read path, not necessarily on the

write path.
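
To make the suggestion concrete, here is a minimal sketch of what a lenient read path next to a strict write path could look like. `SketchCatalog`, `SketchLegacyCatalog` and `createModel` are hypothetical names used only for illustration; the checked exceptions of the real `Catalog` interface are left out to keep the example self-contained.

```java
import java.util.Collections;
import java.util.List;

/** Hypothetical, trimmed-down catalog interface; an assumption, not the real Catalog. */
interface SketchCatalog {
    /** Read path: a catalog without model support reports "no models" instead of failing. */
    default List<String> listModels(String databaseName) {
        return Collections.emptyList();
    }

    /** Write path: failing loudly is still reasonable here. */
    default void createModel(String databaseName, String modelName) {
        throw new UnsupportedOperationException(
                String.format("createModel is not implemented for %s.", getClass()));
    }
}

/** A catalog that overrides nothing and simply inherits both defaults. */
final class SketchLegacyCatalog implements SketchCatalog {}
```

With this shape, existing catalog implementations keep working for listing calls, while an attempt to create a model on a catalog that does not support it still surfaces as an error.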

##

flink-table/flink-table-common/src/main/java/org/apache/flink/table/catalog/Catalog.java:

##

@@ -790,4 +792,126 @@ void alterPartitionColumnStatistics(

CatalogColumnStatistics columnStatistics,

boolean ignoreIfNotExists)

throws PartitionNotExistException, CatalogException;

+

+// -- models --

+

+/**

+ * Get names of all tables models under this database. An empty list is

returned if none exists.

Review Comment:

```suggestion

* Get names of all models under this database. An empty list is

returned if none exists.

```

##

flink-table/flink-table-common/src/main/java/org/apache/flink/table/catalog/CatalogModel.java:

##

@@ -0,0 +1,108 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.table.catalog;

+

+import org.apache.flink.annotation.PublicEvolving;

+import org.apache.flink.table.api.Schema;

+

+import javax.annotation.Nullable;

+

+import java.util.ArrayList;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+

+/** Interface for a model in a catalog. */

+@PublicEvolving

+public interface CatalogModel {

+/** Returns a map of string-based model options. */

+Map getOptions();

+

+/** Returns a list of model changes. */

+List getModelChanges();

+

+/**

+ * Get the unresolved input schema of the model.

+ *

+ * @return unresolved input schema of the model.

+ */

+Schema getInputSchema();

+

+/**

+ * Get the unresolved output schema of the model.

+ *

+ * @return unresolved output schema of the model.

+ */

+Schema getOutputSchema();

+

+/**

+ * Get comment of the model.

+ *

+ * @return comment of the model.

+ */

+String getComment();

+

+/**

+ * Get a deep copy of the CatalogModel instance.

+ *

+ * @return a copy of the CatalogModel instance

+ */

+CatalogModel copy();

+

+/**

+ * Copy the input model options into the CatalogModel instance.

+ *

+ * @return a copy of the CatalogModel instance with new model options.

+ */

+CatalogModel copy(Map options);

+

+/**

+ * Creates a basic implementation of this interface.

+ *

+ * @param inputSchema unresolved input schema

+ * @param outputSchema unresolved output schema

+ * @param modelOptions model options

+ * @param comment optional comment

+ */

+static CatalogModel of(

+Schema inputSchema,

+Schema outputSchema,

+Map modelOptions,

+@Nullable String comment) {

+return new DefaultCatalogModel(

+inputSchema, outputSchema, modelOptions, new ArrayList<>(),

comment);

+}

+

+/**

+ * Creates a basic implementation of this interface.

+ *

+ * @param inputSchema unresolved input schema

+ * @param outputSchema unresolved output schema

+ * @param modelChanges

Re: [PR] [FLINK-33874][runtime] Support resource request wait mechanism at DefaultDeclarativeSlotPool side for Default Scheduler [flink]

RocMarshal commented on code in PR #25113:

URL: https://github.com/apache/flink/pull/25113#discussion_r1691120384

##

flink-runtime/src/main/java/org/apache/flink/runtime/resourcemanager/ResourceManagerGateway.java:

##

@@ -88,7 +88,22 @@ CompletableFuture registerJobMaster(

CompletableFuture declareRequiredResources(

JobMasterId jobMasterId,

ResourceRequirements resourceRequirements,

-@RpcTimeout Time timeout);

+@RpcTimeout Duration timeout);

+

+/**

+ * @deprecated Use {@link #declareRequiredResources(JobMasterId,

ResourceRequirements,

+ * Duration)}. Declares the absolute resource requirements for a job.

+ * @param jobMasterId id of the JobMaster

+ * @param resourceRequirements resource requirements

+ * @return The confirmation that the requirements have been processed

+ */

+@Deprecated

+default CompletableFuture declareRequiredResources(

Review Comment:

sounds good~
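
For context, a minimal sketch of the pattern in the hunk above: a deprecated default overload that delegates to the new `Duration`-based method. The quoted diff is cut off before the parameter list, so everything here is an assumption: `SketchGateway`, `SketchGatewayImpl`, the `String` job master id and the `long timeoutMillis` parameter are hypothetical and do not reflect the actual `ResourceManagerGateway` signature.

```java
import java.time.Duration;
import java.util.concurrent.CompletableFuture;

/** Hypothetical gateway; names and parameter lists are illustrative only. */
interface SketchGateway {
    /** Preferred signature, using java.time.Duration for the timeout. */
    CompletableFuture<String> declareRequiredResources(String jobMasterId, Duration timeout);

    /** @deprecated Use {@link #declareRequiredResources(String, Duration)}. */
    @Deprecated
    default CompletableFuture<String> declareRequiredResources(
            String jobMasterId, long timeoutMillis) {
        // Old callers keep compiling; the call is forwarded to the new overload.
        return declareRequiredResources(jobMasterId, Duration.ofMillis(timeoutMillis));
    }
}

/** Implementations only need to provide the Duration-based method. */
final class SketchGatewayImpl implements SketchGateway {
    @Override
    public CompletableFuture<String> declareRequiredResources(
            String jobMasterId, Duration timeout) {
        return CompletableFuture.completedFuture(jobMasterId + ":" + timeout.toMillis());
    }
}
```

Keeping the old overload as a `default` method means implementers do not have to touch it, and it can be removed later without another interface change on their side.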

Re: [PR] [FLINK-35847][release] Add release note for version 1.20 [flink]

superdiaodiao commented on code in PR #25091:

URL: https://github.com/apache/flink/pull/25091#discussion_r1691112012

##

docs/content.zh/release-notes/flink-1.20.md:

##

@@ -0,0 +1,434 @@

+---

+title: "Release Notes - Flink 1.20"

+---

+

+

+# Release notes - Flink 1.20

+

+These release notes discuss important aspects, such as configuration, behavior

or dependencies,

+that changed between Flink 1.19 and Flink 1.20. Please read these notes

carefully if you are

+planning to upgrade your Flink version to 1.20.

+

+### Checkpoints

+

+#### Unified File Merging Mechanism for Checkpoints

+

+##### [FLINK-32070](https://issues.apache.org/jira/browse/FLINK-32070)

+

+The unified file merging mechanism for checkpointing is introduced to Flink

1.20 as an MVP ("minimum viable product")

+feature, which allows scattered small checkpoint files to be written into

larger files, reducing the

+number of file creations and file deletions and alleviating the pressure of

file system metadata management

+raised by the file flooding problem during checkpoints. The mechanism can be

enabled by setting

+`state.checkpoints.file-merging.enabled` to `true`. For more advanced options

and principle behind

+this feature, please refer to the document of `Checkpointing`.

+

+#### Reorganize State & Checkpointing & Recovery Configuration

+

+##### [FLINK-34255](https://issues.apache.org/jira/browse/FLINK-34255)

+

+Currently, all the options about state and checkpointing are reorganized and

categorized by

+prefixes as listed below:

+

+1. execution.checkpointing: all configurations associated with checkpointing

and savepoint.

+2. execution.state-recovery: all configurations pertinent to state recovery.

+3. state.*: all configurations related to the state accessing.

+1. state.backend.*: specific options for individual state backends, such

as RocksDB.

+2. state.changelog: configurations for the changelog, as outlined in

FLIP-158, including the options for the "Durable Short-term Log" (DSTL).

+3. state.latency-track: configurations related to the latency tracking of

state access.

+

+At the meantime, all the original options scattered everywhere are annotated

as `@Deprecated`.

+

+#### Use common thread pools when transferring RocksDB state files

+

+##### [FLINK-35501](https://issues.apache.org/jira/browse/FLINK-35501)

+

+The semantics of `state.backend.rocksdb.checkpoint.transfer.thread.num`

changed slightly:

+If negative, the common (TM) IO thread pool is used (see

`cluster.io-pool.size`) for up/downloading RocksDB files.

+

+#### Expose RocksDB bloom filter metrics

+

+##### [FLINK-34386](https://issues.apache.org/jira/browse/FLINK-34386)

+

+We expose some RocksDB bloom filter metrics to monitor the effectiveness of

bloom filter optimization:

+

+`BLOOM_FILTER_USEFUL`: times bloom filter has avoided file reads.

+`BLOOM_FILTER_FULL_POSITIVE`: times bloom FullFilter has not avoided the reads.

+`BLOOM_FILTER_FULL_TRUE_POSITIVE`: times bloom FullFilter has not avoided the

reads and data actually exist.

+

+#### Manually Compact Small SST Files

+

+##### [FLINK-26050](https://issues.apache.org/jira/browse/FLINK-26050)

+

+In some cases, the number of files produced by RocksDB state backend grows

indefinitely.This might

+cause task state info (TDD and checkpoint ACK) to exceed RPC message size and

fail recovery/checkpoint

+in addition to having lots of small files.

+

+In Flink 1.20, you can manually merge such files in the background using

RocksDB API.

+

+### Runtime & Coordination

+

+#### Support Job Recovery from JobMaster Failures for Batch Jobs

+

+##### [FLINK-33892](https://issues.apache.org/jira/browse/FLINK-33892)

+

+In 1.20, we introduced a batch job recovery mechanism to enable batch jobs to

recover as much progress as possible

+after a JobMaster failover, avoiding the need to rerun tasks that have already

been finished.

+

+More information about this feature and how to enable it could be found in:

https://nightlies.apache.org/flink/flink-docs-master/docs/ops/batch/recovery_from_job_master_failure/

+

+#### Extend Curator config option for Zookeeper configuration

+

+##### [FLINK-33376](https://issues.apache.org/jira/browse/FLINK-33376)

+

+Adds support for the following curator parameters:

+`high-availability.zookeeper.client.authorization` (corresponding curator

parameter: `authorization`),

+`high-availability.zookeeper.client.max-close-wait` (corresponding curator

parameter: `maxCloseWaitMs`),

+`high-availability.zookeeper.client.simulated-session-expiration-percent`

(corresponding curator parameter: `simulatedSessionExpirationPercent`).

+

+#### More fine-grained timer processing

+

+##### [FLINK-20217](https://issues.apache.org/jira/browse/FLINK-20217)

+

+Firing timers can now be interrupted to speed up checkpointing. Timers that

were interrupted by a checkpoint,

+will be fired shortly after checkpoint completes.

+

+By default, this features is disabled. To enabled it please set

Re: [PR] [FLINK-33386][runtime] Support tasks balancing at slot level for Default Scheduler [flink]

RocMarshal commented on code in PR #23635:

URL: https://github.com/apache/flink/pull/23635#discussion_r1691113488

##

flink-runtime/src/test/java/org/apache/flink/runtime/scheduler/LocalInputPreferredSlotSharingStrategyTest.java:

##

@@ -69,46 +69,29 @@ class LocalInputPreferredSlotSharingStrategyTest {

private TestingSchedulingExecutionVertex ev21;

private TestingSchedulingExecutionVertex ev22;

-private Set slotSharingGroups;

-

-@BeforeEach

-void setUp() {

-topology = new TestingSchedulingTopology();

-

+private void setupCase() {

ev11 = topology.newExecutionVertex(JOB_VERTEX_ID_1, 0);

ev12 = topology.newExecutionVertex(JOB_VERTEX_ID_1, 1);

ev21 = topology.newExecutionVertex(JOB_VERTEX_ID_2, 0);

ev22 = topology.newExecutionVertex(JOB_VERTEX_ID_2, 1);

-final SlotSharingGroup slotSharingGroup = new SlotSharingGroup();

-slotSharingGroup.addVertexToGroup(JOB_VERTEX_ID_1);

-slotSharingGroup.addVertexToGroup(JOB_VERTEX_ID_2);

-slotSharingGroups = Collections.singleton(slotSharingGroup);

+slotsharingGroup.addVertexToGroup(JOB_VERTEX_ID_1);

+slotsharingGroup.addVertexToGroup(JOB_VERTEX_ID_2);

}

-@Test

-void testCoLocationConstraintIsRespected() {

-topology.connect(ev11, ev22);

-topology.connect(ev12, ev21);

-

-final CoLocationGroup coLocationGroup =

-new TestingCoLocationGroup(JOB_VERTEX_ID_1, JOB_VERTEX_ID_2);

-final Set coLocationGroups =

Collections.singleton(coLocationGroup);

-

-final SlotSharingStrategy strategy =

-new LocalInputPreferredSlotSharingStrategy(

-topology, slotSharingGroups, coLocationGroups);

-

-assertThat(strategy.getExecutionSlotSharingGroups()).hasSize(2);

-

assertThat(strategy.getExecutionSlotSharingGroup(ev11.getId()).getExecutionVertexIds())

-.contains(ev11.getId(), ev21.getId());

-

assertThat(strategy.getExecutionSlotSharingGroup(ev12.getId()).getExecutionVertexIds())

-.contains(ev12.getId(), ev22.getId());

+@Override

+protected SlotSharingStrategy getSlotSharingStrategy(

+SchedulingTopology topology,

+Set slotSharingGroups,

+Set coLocationGroups) {

+return new LocalInputPreferredSlotSharingStrategy(

+topology, slotSharingGroups, coLocationGroups);

}

@Test

void testInputLocalityIsRespectedWithRescaleEdge() {

+setupCase();

Review Comment:

Thank you very much for the review, @1996fanrui.

I updated the related parts based on your comments. PTAL when you have

time. :)

Re: [PR] [FLINK-35847][release] Add release note for version 1.20 [flink]

superdiaodiao commented on code in PR #25091:

URL: https://github.com/apache/flink/pull/25091#discussion_r1691112012

##

docs/content.zh/release-notes/flink-1.20.md:

##

@@ -0,0 +1,434 @@

+---

+title: "Release Notes - Flink 1.20"

+---

+

+

+# Release notes - Flink 1.20

+

+These release notes discuss important aspects, such as configuration, behavior

or dependencies,

+that changed between Flink 1.19 and Flink 1.20. Please read these notes

carefully if you are

+planning to upgrade your Flink version to 1.20.

+

+### Checkpoints

+

+ Unified File Merging Mechanism for Checkpoints

+

+# [FLINK-32070](https://issues.apache.org/jira/browse/FLINK-32070)

+

+The unified file merging mechanism for checkpointing is introduced to Flink

1.20 as an MVP ("minimum viable product")

+feature, which allows scattered small checkpoint files to be written into

larger files, reducing the

+number of file creations and file deletions and alleviating the pressure of

file system metadata management

+raised by the file flooding problem during checkpoints. The mechanism can be

enabled by setting

+`state.checkpoints.file-merging.enabled` to `true`. For more advanced options

and principle behind

+this feature, please refer to the document of `Checkpointing`.

+

+ Reorganize State & Checkpointing & Recovery Configuration

+

+# [FLINK-34255](https://issues.apache.org/jira/browse/FLINK-34255)

+

+Currently, all the options about state and checkpointing are reorganized and

categorized by

+prefixes as listed below:

+

+1. execution.checkpointing: all configurations associated with checkpointing

and savepoint.

+2. execution.state-recovery: all configurations pertinent to state recovery.

+3. state.*: all configurations related to the state accessing.

+1. state.backend.*: specific options for individual state backends, such

as RocksDB.

+2. state.changelog: configurations for the changelog, as outlined in

FLIP-158, including the options for the "Durable Short-term Log" (DSTL).

+3. state.latency-track: configurations related to the latency tracking of

state access.

+

+At the meantime, all the original options scattered everywhere are annotated

as `@Deprecated`.

+

+ Use common thread pools when transferring RocksDB state files

+

+# [FLINK-35501](https://issues.apache.org/jira/browse/FLINK-35501)

+

+The semantics of `state.backend.rocksdb.checkpoint.transfer.thread.num`

changed slightly:

+If negative, the common (TM) IO thread pool is used (see

`cluster.io-pool.size`) for up/downloading RocksDB files.

+

+ Expose RocksDB bloom filter metrics

+

+# [FLINK-34386](https://issues.apache.org/jira/browse/FLINK-34386)

+

+We expose some RocksDB bloom filter metrics to monitor the effectiveness of

bloom filter optimization:

+

+`BLOOM_FILTER_USEFUL`: times bloom filter has avoided file reads.

+`BLOOM_FILTER_FULL_POSITIVE`: times bloom FullFilter has not avoided the reads.

+`BLOOM_FILTER_FULL_TRUE_POSITIVE`: times bloom FullFilter has not avoided the

reads and data actually exist.

+

+ Manually Compact Small SST Files

+

+# [FLINK-26050](https://issues.apache.org/jira/browse/FLINK-26050)

+

+In some cases, the number of files produced by RocksDB state backend grows

indefinitely.This might

+cause task state info (TDD and checkpoint ACK) to exceed RPC message size and

fail recovery/checkpoint

+in addition to having lots of small files.

+

+In Flink 1.20, you can manually merge such files in the background using

RocksDB API.

+

+### Runtime & Coordination

+

+ Support Job Recovery from JobMaster Failures for Batch Jobs

+

+# [FLINK-33892](https://issues.apache.org/jira/browse/FLINK-33892)

+

+In 1.20, we introduced a batch job recovery mechanism to enable batch jobs to recover as much progress as possible

+after a JobMaster failover, avoiding the need to rerun tasks that have already finished.

+

+More information about this feature and how to enable it can be found at:

https://nightlies.apache.org/flink/flink-docs-master/docs/ops/batch/recovery_from_job_master_failure/

+

+ Extend Curator config option for Zookeeper configuration

+

+# [FLINK-33376](https://issues.apache.org/jira/browse/FLINK-33376)

+

+Adds support for the following curator parameters:

+`high-availability.zookeeper.client.authorization` (corresponding curator

parameter: `authorization`),

+`high-availability.zookeeper.client.max-close-wait` (corresponding curator

parameter: `maxCloseWaitMs`),

+`high-availability.zookeeper.client.simulated-session-expiration-percent`

(corresponding curator parameter: `simulatedSessionExpirationPercent`).

+

+ More fine-grained timer processing

+

+# [FLINK-20217](https://issues.apache.org/jira/browse/FLINK-20217)

+

+Firing timers can now be interrupted to speed up checkpointing. Timers that were interrupted by a checkpoint

+will be fired shortly after the checkpoint completes.

+

+By default, this feature is disabled. To enable it, please set

Re: [PR] [FLINK-35864][Table SQL / API] Add CONV function [flink]

superdiaodiao commented on code in PR #25109:

URL: https://github.com/apache/flink/pull/25109#discussion_r1691055255

##

flink-table/flink-table-planner/src/test/java/org/apache/flink/table/planner/functions/MathFunctionsITCase.java:

##

@@ -141,4 +178,181 @@ Stream getTestSetSpecs() {

new BigDecimal("123.45"),

DataTypes.DECIMAL(6, 2).notNull()));

}

+

+private Stream convTestCases() {

+return Stream.of(

+TestSetSpec.forFunction(BuiltInFunctionDefinitions.CONV)

+.onFieldsWithData(

+null, null, "100", "", "11abc", "\u000B\f 4521 \n\r\t")

+.andDataTypes(

+DataTypes.INT(),

+DataTypes.STRING(),

+DataTypes.STRING(),

+DataTypes.STRING(),

+DataTypes.STRING(),

+DataTypes.STRING())

+// null input

+.testResult($("f0").conv(2, 2), "CONV(f0, 2, 2)",

null, DataTypes.STRING())

+.testResult($("f1").conv(2, 2), "CONV(f1, 2, 2)",

null, DataTypes.STRING())

+.testResult(

+$("f2").conv($("f0"), 2),

+"CONV(f2, f0, 2)",

+null,

+DataTypes.STRING())

+.testResult(

+$("f2").conv(2, $("f0")),

+"CONV(f2, 2, f0)",

+null,

+DataTypes.STRING())

+// empty string

+.testResult($("f3").conv(2, 4), "CONV(f3, 2, 4)",

null, DataTypes.STRING())

+// invalid fromBase

+.testResult($("f2").conv(1, 2), "CONV(f2, 1, 2)",

null, DataTypes.STRING())

+.testResult(

+$("f2").conv(40, 2), "CONV(f2, 40, 2)", null,

DataTypes.STRING())

+// invalid toBase

+.testResult(

+$("f2").conv(2, -1), "CONV(f2, 2, -1)", null,

DataTypes.STRING())

+.testResult(

+$("f2").conv(2, -40), "CONV(f2, 2, -40)",

null, DataTypes.STRING())

+// invalid num format, ignore suffix

+.testResult(

+$("f4").conv(10, 16), "CONV(f4, 10, 16)", "B",

DataTypes.STRING())

+// num overflow

+.testTableApiRuntimeError(

+lit("").conv(16, 16),

+NumberFormatException.class,

+"The number overflows.")

+.testSqlRuntimeError(

+"CONV('', 16, 16)",

+NumberFormatException.class,

+"The number overflows.")

+.testTableApiRuntimeError(

+lit("FFFEE").conv(16, 16),

+NumberFormatException.class,

+"The number FFFEE overflows.")

Review Comment:

Makes sense!

Thanks~
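
For readers skimming the diff, the cases exercised in the test above can be summarized in a small standalone sketch. This is not Flink's implementation: the `ConvSketch` class and its `conv` helper are hypothetical, sign handling and the overflow check are left out, and returning null when no valid leading digit exists is an assumption that the test only confirms for the empty string.

```java
import java.math.BigInteger;

public class ConvSketch {

    /**
     * Hypothetical helper mirroring the null and prefix cases in the test above.
     * Not Flink's CONV implementation; overflow and negative numbers are not modeled.
     */
    static String conv(String num, Integer fromBase, Integer toBase) {
        if (num == null || fromBase == null || toBase == null) {
            return null; // any null argument yields null
        }
        if (fromBase < Character.MIN_RADIX || fromBase > Character.MAX_RADIX) {
            return null; // invalid fromBase, e.g. 1 or 40
        }
        int absToBase = Math.abs(toBase);
        if (absToBase < Character.MIN_RADIX || absToBase > Character.MAX_RADIX) {
            return null; // invalid toBase, e.g. -1 or -40
        }
        String trimmed = num.trim();
        // Take the longest prefix made of digits valid in fromBase; ignore the rest.
        int end = 0;
        while (end < trimmed.length() && Character.digit(trimmed.charAt(end), fromBase) >= 0) {
            end++;
        }
        if (end == 0) {
            return null; // empty string (and, by assumption, no valid leading digit)
        }
        BigInteger value = new BigInteger(trimmed.substring(0, end), fromBase);
        return value.toString(absToBase).toUpperCase();
    }

    public static void main(String[] args) {
        System.out.println(conv("11abc", 10, 16)); // suffix "abc" is ignored
        System.out.println(conv("100", 40, 2));    // invalid fromBase
    }
}
```

The prefix scan is what lets `"11abc"` convert as `11` instead of failing outright, which matches the "invalid num format, ignore suffix" case in the test.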

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Re: [PR] [hotfix][test] Add CDC 3.1.1 release to migration test versions [flink-cdc]

leonardBang merged PR #3426:

URL: https://github.com/apache/flink-cdc/pull/3426

Re: [PR] [FLINK-33874][runtime] Support resource request wait mechanism at DefaultDeclarativeSlotPool side for Default Scheduler [flink]

1996fanrui commented on code in PR #25113:

URL: https://github.com/apache/flink/pull/25113#discussion_r1690930831

##

flink-runtime/src/main/java/org/apache/flink/runtime/resourcemanager/ResourceManagerGateway.java:

##

@@ -88,7 +88,22 @@ CompletableFuture registerJobMaster(

CompletableFuture declareRequiredResources(

JobMasterId jobMasterId,

ResourceRequirements resourceRequirements,

-@RpcTimeout Time timeout);

+@RpcTimeout Duration timeout);

+

+/**

+ * @deprecated Use {@link #declareRequiredResources(JobMasterId,

ResourceRequirements,

+ * Duration)}. Declares the absolute resource requirements for a job.

+ * @param jobMasterId id of the JobMaster

+ * @param resourceRequirements resource requirements

+ * @return The confirmation that the requirements have been processed

+ */

+@Deprecated

+default CompletableFuture declareRequiredResources(

Review Comment:

We could refactor all callers from `Time` to `Duration`; then the old method isn't needed anymore. Because it's not public API, we don't need to mark it as `@Deprecated`.

Be careful which commit this change belongs to.
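
A minimal sketch of that refactoring, assuming a simplified stand-in for the gateway. The `Gateway` interface, its `String` parameter types, and the `declareWithTimeout` caller are hypothetical and are not Flink's actual classes. The interface keeps only the `Duration` overload, and the caller builds a `Duration` directly:

```java
import java.time.Duration;
import java.util.concurrent.CompletableFuture;

public class DurationRefactorSketch {

    /** Hypothetical stand-in for an internal RPC gateway; not Flink's real interface. */
    interface Gateway {
        // Only the Duration-based method remains; no deprecated Time overload is kept.
        CompletableFuture<String> declareRequiredResources(
                String jobMasterId, String resourceRequirements, Duration timeout);
    }

    /** Hypothetical caller that previously would have passed a Time value. */
    static CompletableFuture<String> declareWithTimeout(Gateway gateway, long timeoutMillis) {
        return gateway.declareRequiredResources(
                "job-master-1", "2 slots", Duration.ofMillis(timeoutMillis));
    }

    public static void main(String[] args) {
        // A trivial in-memory gateway that completes immediately.
        Gateway gateway =
                (id, req, timeout) ->
                        CompletableFuture.completedFuture(
                                id + " declared " + req + " within " + timeout.toMillis() + " ms");
        System.out.println(declareWithTimeout(gateway, 5000).join());
    }
}
```

With every caller migrated in the same commit, there is no window in which both overloads must coexist, so no deprecated bridge method is needed.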

##

flink-runtime/src/main/java/org/apache/flink/runtime/jobmaster/slotpool/DeclarativeSlotPoolService.java:

##

@@ -75,21 +76,30 @@ public class DeclarativeSlotPoolService implements

SlotPoolService {

@Nullable private String jobManagerAddress;

private State state = State.CREATED;

+protected ComponentMainThreadExecutor componentMainThreadExecutor;

Review Comment:

```suggestion

protected final ComponentMainThreadExecutor componentMainThreadExecutor;

```


Re: [PR] [FLINK-33874][runtime] Support resource request wait mechanism at DefaultDeclarativeSlotPool side for Default Scheduler [flink]

1996fanrui commented on code in PR #25113:

URL: https://github.com/apache/flink/pull/25113#discussion_r1690930831

##

flink-runtime/src/main/java/org/apache/flink/runtime/resourcemanager/ResourceManagerGateway.java:

##

@@ -88,7 +88,22 @@ CompletableFuture registerJobMaster(

CompletableFuture declareRequiredResources(

JobMasterId jobMasterId,

ResourceRequirements resourceRequirements,

-@RpcTimeout Time timeout);

+@RpcTimeout Duration timeout);

+

+/**

+ * @deprecated Use {@link #declareRequiredResources(JobMasterId,

ResourceRequirements,

+ * Duration)}. Declares the absolute resource requirements for a job.

+ * @param jobMasterId id of the JobMaster

+ * @param resourceRequirements resource requirements

+ * @return The confirmation that the requirements have been processed

+ */

+@Deprecated

+default CompletableFuture declareRequiredResources(

Review Comment:

We could refactor all callers from Time to Duration (Only 3 callers). And