[jira] [Commented] (HIVE-22962) Reuse HiveRelFieldTrimmer instance across queries

[

https://issues.apache.org/jira/browse/HIVE-22962?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053923#comment-17053923

]

Hive QA commented on HIVE-22962:

Here are the results of testing the latest attachment:

https://issues.apache.org/jira/secure/attachment/12995953/HIVE-22962.06.patch

{color:green}SUCCESS:{color} +1 due to 1 test(s) being added or modified.

{color:green}SUCCESS:{color} +1 due to 18102 tests passed

Test results:

https://builds.apache.org/job/PreCommit-HIVE-Build/20993/testReport

Console output: https://builds.apache.org/job/PreCommit-HIVE-Build/20993/console

Test logs: http://104.198.109.242/logs/PreCommit-HIVE-Build-20993/

Messages:

{noformat}

Executing org.apache.hive.ptest.execution.TestCheckPhase

Executing org.apache.hive.ptest.execution.PrepPhase

Executing org.apache.hive.ptest.execution.YetusPhase

Executing org.apache.hive.ptest.execution.ExecutionPhase

Executing org.apache.hive.ptest.execution.ReportingPhase

{noformat}

This message is automatically generated.

ATTACHMENT ID: 12995953 - PreCommit-HIVE-Build

> Reuse HiveRelFieldTrimmer instance across queries

> -

>

> Key: HIVE-22962

> URL: https://issues.apache.org/jira/browse/HIVE-22962

> Project: Hive

> Issue Type: Improvement

> Components: CBO

>Reporter: Jesus Camacho Rodriguez

>Assignee: Jesus Camacho Rodriguez

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-22962.01.patch, HIVE-22962.02.patch,

> HIVE-22962.03.patch, HIVE-22962.04.patch, HIVE-22962.05.patch,

> HIVE-22962.06.patch, HIVE-22962.patch

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Currently we create multiple {{HiveRelFieldTrimmer}} instances per query.

> {{HiveRelFieldTrimmer}} uses a method dispatcher that has a built-in caching

> mechanism: given a certain object, it stores the method that was called for

> the object class. However, by instantiating the trimmer multiple times per

> query and across queries, we create a new dispatcher with each instantiation,

> thus effectively removing the caching mechanism that is built within the

> dispatcher.

> This issue is to reutilize the same {{HiveRelFieldTrimmer}} instance within a

> single query and across queries.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HIVE-22962) Reuse HiveRelFieldTrimmer instance across queries

[

https://issues.apache.org/jira/browse/HIVE-22962?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053913#comment-17053913

]

Hive QA commented on HIVE-22962:

| (x) *{color:red}-1 overall{color}* |

\\

\\

|| Vote || Subsystem || Runtime || Comment ||

|| || || || {color:brown} Prechecks {color} ||

| {color:green}+1{color} | {color:green} @author {color} | {color:green} 0m

0s{color} | {color:green} The patch does not contain any @author tags. {color} |

|| || || || {color:brown} master Compile Tests {color} ||

| {color:blue}0{color} | {color:blue} mvndep {color} | {color:blue} 1m

39s{color} | {color:blue} Maven dependency ordering for branch {color} |

| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 7m

35s{color} | {color:green} master passed {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 1m

56s{color} | {color:green} master passed {color} |

| {color:green}+1{color} | {color:green} checkstyle {color} | {color:green} 1m

11s{color} | {color:green} master passed {color} |

| {color:blue}0{color} | {color:blue} findbugs {color} | {color:blue} 3m

39s{color} | {color:blue} ql in master has 1531 extant Findbugs warnings.

{color} |

| {color:blue}0{color} | {color:blue} findbugs {color} | {color:blue} 0m

40s{color} | {color:blue} service in master has 51 extant Findbugs warnings.

{color} |

| {color:blue}0{color} | {color:blue} findbugs {color} | {color:blue} 0m

28s{color} | {color:blue} cli in master has 9 extant Findbugs warnings. {color}

|

| {color:red}-1{color} | {color:red} findbugs {color} | {color:red} 3m

11s{color} | {color:red} branch/itests/hive-jmh cannot run convertXmlToText

from findbugs {color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 1m

35s{color} | {color:green} master passed {color} |

|| || || || {color:brown} Patch Compile Tests {color} ||

| {color:blue}0{color} | {color:blue} mvndep {color} | {color:blue} 0m

27s{color} | {color:blue} Maven dependency ordering for patch {color} |

| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 2m

43s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 2m

1s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} javac {color} | {color:green} 2m

1s{color} | {color:green} the patch passed {color} |

| {color:red}-1{color} | {color:red} checkstyle {color} | {color:red} 0m

40s{color} | {color:red} ql: The patch generated 90 new + 141 unchanged - 0

fixed = 231 total (was 141) {color} |

| {color:green}+1{color} | {color:green} whitespace {color} | {color:green} 0m

0s{color} | {color:green} The patch has no whitespace issues. {color} |

| {color:red}-1{color} | {color:red} findbugs {color} | {color:red} 3m

7s{color} | {color:red} patch/itests/hive-jmh cannot run convertXmlToText from

findbugs {color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 1m

32s{color} | {color:green} the patch passed {color} |

|| || || || {color:brown} Other Tests {color} ||

| {color:red}-1{color} | {color:red} asflicense {color} | {color:red} 0m

14s{color} | {color:red} The patch generated 2 ASF License warnings. {color} |

| {color:black}{color} | {color:black} {color} | {color:black} 39m 19s{color} |

{color:black} {color} |

\\

\\

|| Subsystem || Report/Notes ||

| Optional Tests | asflicense javac javadoc findbugs checkstyle compile |

| uname | Linux hiveptest-server-upstream 3.16.0-4-amd64 #1 SMP Debian

3.16.43-2+deb8u5 (2017-09-19) x86_64 GNU/Linux |

| Build tool | maven |

| Personality |

/data/hiveptest/working/yetus_PreCommit-HIVE-Build-20993/dev-support/hive-personality.sh

|

| git revision | master / 1b50b70 |

| Default Java | 1.8.0_111 |

| findbugs | v3.0.1 |

| findbugs |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20993/yetus/branch-findbugs-itests_hive-jmh.txt

|

| checkstyle |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20993/yetus/diff-checkstyle-ql.txt

|

| findbugs |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20993/yetus/patch-findbugs-itests_hive-jmh.txt

|

| asflicense |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20993/yetus/patch-asflicense-problems.txt

|

| modules | C: ql service cli itests/hive-jmh U: . |

| Console output |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20993/yetus.txt |

| Powered by | Apache Yetushttp://yetus.apache.org |

This message was automatically generated.

> Reuse HiveRelFieldTrimmer instance across queries

> -

>

> Key: HIVE-22962

> URL: https://issues.apache.org/jira/browse/HIVE-22962

> Project: Hive

> Issue Type: Improvement

> Components: CBO

>Reporter: Jesus Camacho Rodriguez

>Assignee: J

[jira] [Commented] (HIVE-22978) Fix decimal precision and scale inference for aggregate rewriting in Calcite

[

https://issues.apache.org/jira/browse/HIVE-22978?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053899#comment-17053899

]

Hive QA commented on HIVE-22978:

Here are the results of testing the latest attachment:

https://issues.apache.org/jira/secure/attachment/12995800/HIVE-22978.01.patch

{color:green}SUCCESS:{color} +1 due to 1 test(s) being added or modified.

{color:green}SUCCESS:{color} +1 due to 18102 tests passed

Test results:

https://builds.apache.org/job/PreCommit-HIVE-Build/20992/testReport

Console output: https://builds.apache.org/job/PreCommit-HIVE-Build/20992/console

Test logs: http://104.198.109.242/logs/PreCommit-HIVE-Build-20992/

Messages:

{noformat}

Executing org.apache.hive.ptest.execution.TestCheckPhase

Executing org.apache.hive.ptest.execution.PrepPhase

Executing org.apache.hive.ptest.execution.YetusPhase

Executing org.apache.hive.ptest.execution.ExecutionPhase

Executing org.apache.hive.ptest.execution.ReportingPhase

{noformat}

This message is automatically generated.

ATTACHMENT ID: 12995800 - PreCommit-HIVE-Build

> Fix decimal precision and scale inference for aggregate rewriting in Calcite

>

>

> Key: HIVE-22978

> URL: https://issues.apache.org/jira/browse/HIVE-22978

> Project: Hive

> Issue Type: Bug

> Components: CBO

>Reporter: Jesus Camacho Rodriguez

>Assignee: Jesus Camacho Rodriguez

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-22978.01.patch, HIVE-22978.patch

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Calcite rules can do rewritings of aggregate functions, e.g., {{avg}} into

> {{sum/count}}. When type of {{avg}} is decimal, inference of intermediate

> precision and scale for the division is not done correctly. The reason is

> that we miss support for some types in method {{getDefaultPrecision}} in

> {{HiveTypeSystemImpl}}. Additionally, {{deriveSumType}} should be overridden

> in {{HiveTypeSystemImpl}} to abide by the Hive semantics for sum aggregate

> type inference.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HIVE-22987) ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null

[ https://issues.apache.org/jira/browse/HIVE-22987?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Ashutosh Chauhan updated HIVE-22987: Fix Version/s: 4.0.0 Resolution: Fixed Status: Resolved (was: Patch Available) Pushed to master. Thanks, Ramesh! > ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null > --- > > Key: HIVE-22987 > URL: https://issues.apache.org/jira/browse/HIVE-22987 > Project: Hive > Issue Type: Bug >Reporter: Ramesh Kumar Thangarajan >Assignee: Ramesh Kumar Thangarajan >Priority: Major > Fix For: 4.0.0 > > Attachments: HIVE-22987.1.patch > > > ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HIVE-22987) ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null

[ https://issues.apache.org/jira/browse/HIVE-22987?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053891#comment-17053891 ] Ashutosh Chauhan commented on HIVE-22987: - +1 > ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null > --- > > Key: HIVE-22987 > URL: https://issues.apache.org/jira/browse/HIVE-22987 > Project: Hive > Issue Type: Bug >Reporter: Ramesh Kumar Thangarajan >Assignee: Ramesh Kumar Thangarajan >Priority: Major > Attachments: HIVE-22987.1.patch > > > ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HIVE-22996) BasicStats parsing should check proactively for null or empty string

[ https://issues.apache.org/jira/browse/HIVE-22996?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053890#comment-17053890 ] Gopal Vijayaraghavan commented on HIVE-22996: - +1 tests pending > BasicStats parsing should check proactively for null or empty string > > > Key: HIVE-22996 > URL: https://issues.apache.org/jira/browse/HIVE-22996 > Project: Hive > Issue Type: Bug > Components: Statistics >Reporter: Jesus Camacho Rodriguez >Assignee: Jesus Camacho Rodriguez >Priority: Major > Labels: pull-request-available > Attachments: HIVE-22996.patch > > Time Spent: 10m > Remaining Estimate: 0h > > Rather than throwing an Exception for control flow, which will create > unnecessary overhead. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HIVE-22978) Fix decimal precision and scale inference for aggregate rewriting in Calcite

[

https://issues.apache.org/jira/browse/HIVE-22978?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053889#comment-17053889

]

Hive QA commented on HIVE-22978:

| (x) *{color:red}-1 overall{color}* |

\\

\\

|| Vote || Subsystem || Runtime || Comment ||

|| || || || {color:brown} Prechecks {color} ||

| {color:green}+1{color} | {color:green} @author {color} | {color:green} 0m

0s{color} | {color:green} The patch does not contain any @author tags. {color} |

|| || || || {color:brown} master Compile Tests {color} ||

| {color:blue}0{color} | {color:blue} mvndep {color} | {color:blue} 1m

36s{color} | {color:blue} Maven dependency ordering for branch {color} |

| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 7m

42s{color} | {color:green} master passed {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 1m

41s{color} | {color:green} master passed {color} |

| {color:green}+1{color} | {color:green} checkstyle {color} | {color:green} 0m

56s{color} | {color:green} master passed {color} |

| {color:blue}0{color} | {color:blue} findbugs {color} | {color:blue} 3m

35s{color} | {color:blue} ql in master has 1531 extant Findbugs warnings.

{color} |

| {color:blue}0{color} | {color:blue} findbugs {color} | {color:blue} 0m

41s{color} | {color:blue} itests/hive-unit in master has 2 extant Findbugs

warnings. {color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 1m

17s{color} | {color:green} master passed {color} |

|| || || || {color:brown} Patch Compile Tests {color} ||

| {color:blue}0{color} | {color:blue} mvndep {color} | {color:blue} 0m

27s{color} | {color:blue} Maven dependency ordering for patch {color} |

| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 1m

59s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 1m

42s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} javac {color} | {color:green} 1m

42s{color} | {color:green} the patch passed {color} |

| {color:red}-1{color} | {color:red} checkstyle {color} | {color:red} 0m

40s{color} | {color:red} ql: The patch generated 4 new + 19 unchanged - 1 fixed

= 23 total (was 20) {color} |

| {color:green}+1{color} | {color:green} whitespace {color} | {color:green} 0m

0s{color} | {color:green} The patch has no whitespace issues. {color} |

| {color:green}+1{color} | {color:green} findbugs {color} | {color:green} 4m

31s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 1m

21s{color} | {color:green} the patch passed {color} |

|| || || || {color:brown} Other Tests {color} ||

| {color:red}-1{color} | {color:red} asflicense {color} | {color:red} 0m

15s{color} | {color:red} The patch generated 2 ASF License warnings. {color} |

| {color:black}{color} | {color:black} {color} | {color:black} 29m 35s{color} |

{color:black} {color} |

\\

\\

|| Subsystem || Report/Notes ||

| Optional Tests | asflicense javac javadoc findbugs checkstyle compile |

| uname | Linux hiveptest-server-upstream 3.16.0-4-amd64 #1 SMP Debian

3.16.43-2+deb8u5 (2017-09-19) x86_64 GNU/Linux |

| Build tool | maven |

| Personality |

/data/hiveptest/working/yetus_PreCommit-HIVE-Build-20992/dev-support/hive-personality.sh

|

| git revision | master / 1b50b70 |

| Default Java | 1.8.0_111 |

| findbugs | v3.0.1 |

| checkstyle |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20992/yetus/diff-checkstyle-ql.txt

|

| asflicense |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20992/yetus/patch-asflicense-problems.txt

|

| modules | C: ql itests/hive-unit U: . |

| Console output |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20992/yetus.txt |

| Powered by | Apache Yetushttp://yetus.apache.org |

This message was automatically generated.

> Fix decimal precision and scale inference for aggregate rewriting in Calcite

>

>

> Key: HIVE-22978

> URL: https://issues.apache.org/jira/browse/HIVE-22978

> Project: Hive

> Issue Type: Bug

> Components: CBO

>Reporter: Jesus Camacho Rodriguez

>Assignee: Jesus Camacho Rodriguez

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-22978.01.patch, HIVE-22978.patch

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Calcite rules can do rewritings of aggregate functions, e.g., {{avg}} into

> {{sum/count}}. When type of {{avg}} is decimal, inference of intermediate

> precision and scale for the division is not done correctly. The reason is

> that we miss support for some types in method {{getDefaultPrecision}} in

> {{HiveTypeSystemImpl}}. Additionally

[jira] [Commented] (HIVE-22986) Prevent Decimal64 to Decimal conversion when other operations support Decimal64

[

https://issues.apache.org/jira/browse/HIVE-22986?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053878#comment-17053878

]

Hive QA commented on HIVE-22986:

Here are the results of testing the latest attachment:

https://issues.apache.org/jira/secure/attachment/12995798/HIVE-22986.1.patch

{color:red}ERROR:{color} -1 due to build exiting with an error

Test results:

https://builds.apache.org/job/PreCommit-HIVE-Build/20991/testReport

Console output: https://builds.apache.org/job/PreCommit-HIVE-Build/20991/console

Test logs: http://104.198.109.242/logs/PreCommit-HIVE-Build-20991/

Messages:

{noformat}

Executing org.apache.hive.ptest.execution.TestCheckPhase

Executing org.apache.hive.ptest.execution.PrepPhase

Tests exited with: NonZeroExitCodeException

Command 'bash /data/hiveptest/working/scratch/source-prep.sh' failed with exit

status 1 and output '+ date '+%Y-%m-%d %T.%3N'

2020-03-07 04:25:01.282

+ [[ -n /usr/lib/jvm/java-8-openjdk-amd64 ]]

+ export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

+ JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

+ export

PATH=/usr/lib/jvm/java-8-openjdk-amd64/bin/:/usr/local/bin:/usr/bin:/bin:/usr/local/games:/usr/games

+

PATH=/usr/lib/jvm/java-8-openjdk-amd64/bin/:/usr/local/bin:/usr/bin:/bin:/usr/local/games:/usr/games

+ export 'ANT_OPTS=-Xmx1g -XX:MaxPermSize=256m '

+ ANT_OPTS='-Xmx1g -XX:MaxPermSize=256m '

+ export 'MAVEN_OPTS=-Xmx1g '

+ MAVEN_OPTS='-Xmx1g '

+ cd /data/hiveptest/working/

+ tee /data/hiveptest/logs/PreCommit-HIVE-Build-20991/source-prep.txt

+ [[ false == \t\r\u\e ]]

+ mkdir -p maven ivy

+ [[ git = \s\v\n ]]

+ [[ git = \g\i\t ]]

+ [[ -z master ]]

+ [[ -d apache-github-source-source ]]

+ [[ ! -d apache-github-source-source/.git ]]

+ [[ ! -d apache-github-source-source ]]

+ date '+%Y-%m-%d %T.%3N'

2020-03-07 04:25:01.284

+ cd apache-github-source-source

+ git fetch origin

+ git reset --hard HEAD

HEAD is now at 1b50b70 HIVE-22673: Replace Base64 in contrib Package (David

Mollitor, reviewed by Zoltan Haindrich)

+ git clean -f -d

Removing standalone-metastore/metastore-server/src/gen/

+ git checkout master

Already on 'master'

Your branch is up-to-date with 'origin/master'.

+ git reset --hard origin/master

HEAD is now at 1b50b70 HIVE-22673: Replace Base64 in contrib Package (David

Mollitor, reviewed by Zoltan Haindrich)

+ git merge --ff-only origin/master

Already up-to-date.

+ date '+%Y-%m-%d %T.%3N'

2020-03-07 04:25:03.050

+ rm -rf ../yetus_PreCommit-HIVE-Build-20991

+ mkdir ../yetus_PreCommit-HIVE-Build-20991

+ git gc

+ cp -R . ../yetus_PreCommit-HIVE-Build-20991

+ mkdir /data/hiveptest/logs/PreCommit-HIVE-Build-20991/yetus

+ patchCommandPath=/data/hiveptest/working/scratch/smart-apply-patch.sh

+ patchFilePath=/data/hiveptest/working/scratch/build.patch

+ [[ -f /data/hiveptest/working/scratch/build.patch ]]

+ chmod +x /data/hiveptest/working/scratch/smart-apply-patch.sh

+ /data/hiveptest/working/scratch/smart-apply-patch.sh

/data/hiveptest/working/scratch/build.patch

Trying to apply the patch with -p0

error: a/itests/src/test/resources/testconfiguration.properties: does not exist

in index

error:

a/ql/src/java/org/apache/hadoop/hive/ql/exec/vector/VectorizationContext.java:

does not exist in index

Trying to apply the patch with -p1

error: patch failed: itests/src/test/resources/testconfiguration.properties:876

Falling back to three-way merge...

Applied patch to 'itests/src/test/resources/testconfiguration.properties' with

conflicts.

Going to apply patch with: git apply -p1

/data/hiveptest/working/scratch/build.patch:234: trailing whitespace.

Map 1

/data/hiveptest/working/scratch/build.patch:265: trailing whitespace.

null sort order:

/data/hiveptest/working/scratch/build.patch:266: trailing whitespace.

sort order:

/data/hiveptest/working/scratch/build.patch:291: trailing whitespace.

Reducer 2

/data/hiveptest/working/scratch/build.patch:296: trailing whitespace.

reduceColumnNullOrder:

error: patch failed: itests/src/test/resources/testconfiguration.properties:876

Falling back to three-way merge...

Applied patch to 'itests/src/test/resources/testconfiguration.properties' with

conflicts.

U itests/src/test/resources/testconfiguration.properties

warning: squelched 7 whitespace errors

warning: 12 lines add whitespace errors.

+ result=1

+ '[' 1 -ne 0 ']'

+ rm -rf yetus_PreCommit-HIVE-Build-20991

+ exit 1

'

{noformat}

This message is automatically generated.

ATTACHMENT ID: 12995798 - PreCommit-HIVE-Build

> Prevent Decimal64 to Decimal conversion when other operations support

> Decimal64

> ---

>

> Key: HIVE-22986

> URL: https://issues.apache.org/jira/browse/HIVE-22986

> Project: Hive

> Issue Type: Bug

> Components: Vectorization

>Reporter: Rames

[jira] [Commented] (HIVE-22987) ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null

[

https://issues.apache.org/jira/browse/HIVE-22987?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053876#comment-17053876

]

Hive QA commented on HIVE-22987:

Here are the results of testing the latest attachment:

https://issues.apache.org/jira/secure/attachment/12995797/HIVE-22987.1.patch

{color:red}ERROR:{color} -1 due to no test(s) being added or modified.

{color:green}SUCCESS:{color} +1 due to 18102 tests passed

Test results:

https://builds.apache.org/job/PreCommit-HIVE-Build/20990/testReport

Console output: https://builds.apache.org/job/PreCommit-HIVE-Build/20990/console

Test logs: http://104.198.109.242/logs/PreCommit-HIVE-Build-20990/

Messages:

{noformat}

Executing org.apache.hive.ptest.execution.TestCheckPhase

Executing org.apache.hive.ptest.execution.PrepPhase

Executing org.apache.hive.ptest.execution.YetusPhase

Executing org.apache.hive.ptest.execution.ExecutionPhase

Executing org.apache.hive.ptest.execution.ReportingPhase

{noformat}

This message is automatically generated.

ATTACHMENT ID: 12995797 - PreCommit-HIVE-Build

> ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null

> ---

>

> Key: HIVE-22987

> URL: https://issues.apache.org/jira/browse/HIVE-22987

> Project: Hive

> Issue Type: Bug

>Reporter: Ramesh Kumar Thangarajan

>Assignee: Ramesh Kumar Thangarajan

>Priority: Major

> Attachments: HIVE-22987.1.patch

>

>

> ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HIVE-21218) KafkaSerDe doesn't support topics created via Confluent Avro serializer

[ https://issues.apache.org/jira/browse/HIVE-21218?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] David McGinnis updated HIVE-21218: -- Attachment: HIVE-21218.8.patch > KafkaSerDe doesn't support topics created via Confluent Avro serializer > --- > > Key: HIVE-21218 > URL: https://issues.apache.org/jira/browse/HIVE-21218 > Project: Hive > Issue Type: Bug > Components: kafka integration, Serializers/Deserializers >Affects Versions: 3.1.1 >Reporter: Milan Baran >Assignee: David McGinnis >Priority: Major > Labels: pull-request-available > Attachments: HIVE-21218.2.patch, HIVE-21218.3.patch, > HIVE-21218.4.patch, HIVE-21218.5.patch, HIVE-21218.6.patch, > HIVE-21218.7.patch, HIVE-21218.8.patch, HIVE-21218.patch > > Time Spent: 14h 20m > Remaining Estimate: 0h > > According to [Google > groups|https://groups.google.com/forum/#!topic/confluent-platform/JYhlXN0u9_A] > the Confluent avro serialzier uses propertiary format for kafka value - > <4 bytes of schema ID> conforms to schema>. > This format does not cause any problem for Confluent kafka deserializer which > respect the format however for hive kafka handler its bit a problem to > correctly deserialize kafka value, because Hive uses custom deserializer from > bytes to objects and ignores kafka consumer ser/deser classes provided via > table property. > It would be nice to support Confluent format with magic byte. > Also it would be great to support Schema registry as well. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HIVE-22955) PreUpgradeTool can fail because access to CharsetDecoder is not synchronized

[

https://issues.apache.org/jira/browse/HIVE-22955?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053822#comment-17053822

]

Hive QA commented on HIVE-22955:

Here are the results of testing the latest attachment:

https://issues.apache.org/jira/secure/attachment/12995775/HIVE-22955.1.patch

{color:green}SUCCESS:{color} +1 due to 1 test(s) being added or modified.

{color:red}ERROR:{color} -1 due to 1 failed/errored test(s), 18103 tests

executed

*Failed tests:*

{noformat}

org.apache.hive.minikdc.TestJdbcWithMiniKdcSQLAuthBinary.testAuthorization1

(batchId=307)

{noformat}

Test results:

https://builds.apache.org/job/PreCommit-HIVE-Build/20985/testReport

Console output: https://builds.apache.org/job/PreCommit-HIVE-Build/20985/console

Test logs: http://104.198.109.242/logs/PreCommit-HIVE-Build-20985/

Messages:

{noformat}

Executing org.apache.hive.ptest.execution.TestCheckPhase

Executing org.apache.hive.ptest.execution.PrepPhase

Executing org.apache.hive.ptest.execution.YetusPhase

Executing org.apache.hive.ptest.execution.ExecutionPhase

Executing org.apache.hive.ptest.execution.ReportingPhase

Tests exited with: TestsFailedException: 1 tests failed

{noformat}

This message is automatically generated.

ATTACHMENT ID: 12995775 - PreCommit-HIVE-Build

> PreUpgradeTool can fail because access to CharsetDecoder is not synchronized

>

>

> Key: HIVE-22955

> URL: https://issues.apache.org/jira/browse/HIVE-22955

> Project: Hive

> Issue Type: Bug

> Components: Transactions

>Affects Versions: 4.0.0

>Reporter: Hankó Gergely

>Assignee: Hankó Gergely

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-22955.1.patch

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> {code:java}

> 2020-02-26 20:22:49,683 ERROR [main] acid.PreUpgradeTool

> (PreUpgradeTool.java:main(150)) - PreUpgradeTool failed

> org.apache.hadoop.hive.ql.metadata.HiveException at

> org.apache.hadoop.hive.upgrade.acid.PreUpgradeTool.prepareAcidUpgradeInternal(PreUpgradeTool.java:283)

> at

> org.apache.hadoop.hive.upgrade.acid.PreUpgradeTool.main(PreUpgradeTool.java:146)

> Caused by: java.lang.RuntimeException:

> java.util.concurrent.ExecutionException: java.lang.RuntimeException:

> java.lang.RuntimeException: java.lang.RuntimeException:

> java.lang.IllegalStateException: Current state = RESET, new state = FLUSHED

> ...

> Caused by: java.lang.IllegalStateException: Current state = RESET, new state

> = FLUSHED at

> java.nio.charset.CharsetDecoder.throwIllegalStateException(CharsetDecoder.java:992)

> at java.nio.charset.CharsetDecoder.flush(CharsetDecoder.java:675) at

> java.nio.charset.CharsetDecoder.decode(CharsetDecoder.java:804) at

> org.apache.hadoop.hive.upgrade.acid.PreUpgradeTool.needsCompaction(PreUpgradeTool.java:606)

> at

> org.apache.hadoop.hive.upgrade.acid.PreUpgradeTool.needsCompaction(PreUpgradeTool.java:567)

> at

> org.apache.hadoop.hive.upgrade.acid.PreUpgradeTool.getCompactionCommands(PreUpgradeTool.java:464)

> at

> org.apache.hadoop.hive.upgrade.acid.PreUpgradeTool.processTable(PreUpgradeTool.java:374)

> {code}

> This is probably caused by HIVE-21948.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HIVE-21778) CBO: "Struct is not null" gets evaluated as `nullable` always causing filter miss in the query

[

https://issues.apache.org/jira/browse/HIVE-21778?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Vineet Garg updated HIVE-21778:

---

Status: Patch Available (was: Open)

> CBO: "Struct is not null" gets evaluated as `nullable` always causing filter

> miss in the query

> --

>

> Key: HIVE-21778

> URL: https://issues.apache.org/jira/browse/HIVE-21778

> Project: Hive

> Issue Type: Bug

> Components: CBO

>Affects Versions: 2.3.5, 4.0.0

>Reporter: Rajesh Balamohan

>Assignee: Vineet Garg

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-21778.1.patch, HIVE-21778.2.patch,

> HIVE-21778.3.patch, HIVE-21778.4.patch, HIVE-21778.5.patch,

> HIVE-21778.6.patch, HIVE-21778.7.patch, test_null.q, test_null.q.out

>

> Time Spent: 40m

> Remaining Estimate: 0h

>

> {noformat}

> drop table if exists test_struct;

> CREATE external TABLE test_struct

> (

> f1 string,

> demo_struct struct,

> datestr string

> );

> set hive.cbo.enable=true;

> explain select * from etltmp.test_struct where datestr='2019-01-01' and

> demo_struct is not null;

> STAGE PLANS:

> Stage: Stage-0

> Fetch Operator

> limit: -1

> Processor Tree:

> TableScan

> alias: test_struct

> filterExpr: (datestr = '2019-01-01') (type: boolean) <- Note

> that demo_struct filter is not added here

> Filter Operator

> predicate: (datestr = '2019-01-01') (type: boolean)

> Select Operator

> expressions: f1 (type: string), demo_struct (type:

> struct), '2019-01-01' (type: string)

> outputColumnNames: _col0, _col1, _col2

> ListSink

> set hive.cbo.enable=false;

> explain select * from etltmp.test_struct where datestr='2019-01-01' and

> demo_struct is not null;

> STAGE PLANS:

> Stage: Stage-0

> Fetch Operator

> limit: -1

> Processor Tree:

> TableScan

> alias: test_struct

> filterExpr: ((datestr = '2019-01-01') and demo_struct is not null)

> (type: boolean) <- Note that demo_struct filter is added when CBO is

> turned off

> Filter Operator

> predicate: ((datestr = '2019-01-01') and demo_struct is not null)

> (type: boolean)

> Select Operator

> expressions: f1 (type: string), demo_struct (type:

> struct), '2019-01-01' (type: string)

> outputColumnNames: _col0, _col1, _col2

> ListSink

> {noformat}

> In CalcitePlanner::genFilterRelNode, the following code misses to evaluate

> this filter.

> {noformat}

> RexNode factoredFilterExpr = RexUtil

> .pullFactors(cluster.getRexBuilder(), convertedFilterExpr);

> {noformat}

> Note that even if we add `demo_struct.f1` it would end up pushing the filter

> correctly.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HIVE-22995) Add support for location for managed tables on database

[

https://issues.apache.org/jira/browse/HIVE-22995?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Thejas Nair updated HIVE-22995:

---

Description:

I have attached the initial spec to this jira.

Default location for database would be the external table base directory.

Managed location can be optionally specified.

{code}

CREATE (DATABASE|SCHEMA) [IF NOT EXISTS] database_name

[COMMENT database_comment]

[LOCATION hdfs_path]

[MANAGEDLOCATION hdfs_path]

[WITH DBPROPERTIES (property_name=property_value, ...)];

ALTER (DATABASE|SCHEMA) database_name SET

MANAGEDLOCATION

hdfs_path;

{code}

was:

I have attached the initial spec to this jira.

Proposed syntax -

{code}

CREATE (DATABASE|SCHEMA) [IF NOT EXISTS] database_name

[COMMENT database_comment]

[LOCATION hdfs_path]

[MANAGEDLOCATION hdfs_path]

[WITH DBPROPERTIES (property_name=property_value, ...)];

ALTER (DATABASE|SCHEMA) database_name SET

MANAGEDLOCATION

hdfs_path;

{code}

> Add support for location for managed tables on database

> ---

>

> Key: HIVE-22995

> URL: https://issues.apache.org/jira/browse/HIVE-22995

> Project: Hive

> Issue Type: Improvement

> Components: Hive

>Affects Versions: 3.1.0

>Reporter: Naveen Gangam

>Assignee: Naveen Gangam

>Priority: Major

> Attachments: Hive Metastore Support for Tenant-based storage

> heirarchy.pdf

>

>

> I have attached the initial spec to this jira.

> Default location for database would be the external table base directory.

> Managed location can be optionally specified.

> {code}

> CREATE (DATABASE|SCHEMA) [IF NOT EXISTS] database_name

> [COMMENT database_comment]

> [LOCATION hdfs_path]

> [MANAGEDLOCATION hdfs_path]

> [WITH DBPROPERTIES (property_name=property_value, ...)];

> ALTER (DATABASE|SCHEMA) database_name SET

> MANAGEDLOCATION

> hdfs_path;

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HIVE-22962) Reuse HiveRelFieldTrimmer instance across queries

[

https://issues.apache.org/jira/browse/HIVE-22962?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jesus Camacho Rodriguez updated HIVE-22962:

---

Attachment: HIVE-22962.06.patch

> Reuse HiveRelFieldTrimmer instance across queries

> -

>

> Key: HIVE-22962

> URL: https://issues.apache.org/jira/browse/HIVE-22962

> Project: Hive

> Issue Type: Improvement

> Components: CBO

>Reporter: Jesus Camacho Rodriguez

>Assignee: Jesus Camacho Rodriguez

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-22962.01.patch, HIVE-22962.02.patch,

> HIVE-22962.03.patch, HIVE-22962.04.patch, HIVE-22962.05.patch,

> HIVE-22962.06.patch, HIVE-22962.patch

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Currently we create multiple {{HiveRelFieldTrimmer}} instances per query.

> {{HiveRelFieldTrimmer}} uses a method dispatcher that has a built-in caching

> mechanism: given a certain object, it stores the method that was called for

> the object class. However, by instantiating the trimmer multiple times per

> query and across queries, we create a new dispatcher with each instantiation,

> thus effectively removing the caching mechanism that is built within the

> dispatcher.

> This issue is to reutilize the same {{HiveRelFieldTrimmer}} instance within a

> single query and across queries.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HIVE-21778) CBO: "Struct is not null" gets evaluated as `nullable` always causing filter miss in the query

[

https://issues.apache.org/jira/browse/HIVE-21778?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Vineet Garg updated HIVE-21778:

---

Status: Open (was: Patch Available)

> CBO: "Struct is not null" gets evaluated as `nullable` always causing filter

> miss in the query

> --

>

> Key: HIVE-21778

> URL: https://issues.apache.org/jira/browse/HIVE-21778

> Project: Hive

> Issue Type: Bug

> Components: CBO

>Affects Versions: 2.3.5, 4.0.0

>Reporter: Rajesh Balamohan

>Assignee: Vineet Garg

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-21778.1.patch, HIVE-21778.2.patch,

> HIVE-21778.3.patch, HIVE-21778.4.patch, HIVE-21778.5.patch,

> HIVE-21778.6.patch, HIVE-21778.7.patch, test_null.q, test_null.q.out

>

> Time Spent: 40m

> Remaining Estimate: 0h

>

> {noformat}

> drop table if exists test_struct;

> CREATE external TABLE test_struct

> (

> f1 string,

> demo_struct struct,

> datestr string

> );

> set hive.cbo.enable=true;

> explain select * from etltmp.test_struct where datestr='2019-01-01' and

> demo_struct is not null;

> STAGE PLANS:

> Stage: Stage-0

> Fetch Operator

> limit: -1

> Processor Tree:

> TableScan

> alias: test_struct

> filterExpr: (datestr = '2019-01-01') (type: boolean) <- Note

> that demo_struct filter is not added here

> Filter Operator

> predicate: (datestr = '2019-01-01') (type: boolean)

> Select Operator

> expressions: f1 (type: string), demo_struct (type:

> struct), '2019-01-01' (type: string)

> outputColumnNames: _col0, _col1, _col2

> ListSink

> set hive.cbo.enable=false;

> explain select * from etltmp.test_struct where datestr='2019-01-01' and

> demo_struct is not null;

> STAGE PLANS:

> Stage: Stage-0

> Fetch Operator

> limit: -1

> Processor Tree:

> TableScan

> alias: test_struct

> filterExpr: ((datestr = '2019-01-01') and demo_struct is not null)

> (type: boolean) <- Note that demo_struct filter is added when CBO is

> turned off

> Filter Operator

> predicate: ((datestr = '2019-01-01') and demo_struct is not null)

> (type: boolean)

> Select Operator

> expressions: f1 (type: string), demo_struct (type:

> struct), '2019-01-01' (type: string)

> outputColumnNames: _col0, _col1, _col2

> ListSink

> {noformat}

> In CalcitePlanner::genFilterRelNode, the following code misses to evaluate

> this filter.

> {noformat}

> RexNode factoredFilterExpr = RexUtil

> .pullFactors(cluster.getRexBuilder(), convertedFilterExpr);

> {noformat}

> Note that even if we add `demo_struct.f1` it would end up pushing the filter

> correctly.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HIVE-22962) Reuse HiveRelFieldTrimmer instance across queries

[

https://issues.apache.org/jira/browse/HIVE-22962?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jesus Camacho Rodriguez updated HIVE-22962:

---

Attachment: (was: HIVE-22962.06.patch)

> Reuse HiveRelFieldTrimmer instance across queries

> -

>

> Key: HIVE-22962

> URL: https://issues.apache.org/jira/browse/HIVE-22962

> Project: Hive

> Issue Type: Improvement

> Components: CBO

>Reporter: Jesus Camacho Rodriguez

>Assignee: Jesus Camacho Rodriguez

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-22962.01.patch, HIVE-22962.02.patch,

> HIVE-22962.03.patch, HIVE-22962.04.patch, HIVE-22962.05.patch,

> HIVE-22962.06.patch, HIVE-22962.patch

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Currently we create multiple {{HiveRelFieldTrimmer}} instances per query.

> {{HiveRelFieldTrimmer}} uses a method dispatcher that has a built-in caching

> mechanism: given a certain object, it stores the method that was called for

> the object class. However, by instantiating the trimmer multiple times per

> query and across queries, we create a new dispatcher with each instantiation,

> thus effectively removing the caching mechanism that is built within the

> dispatcher.

> This issue is to reutilize the same {{HiveRelFieldTrimmer}} instance within a

> single query and across queries.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HIVE-22987) ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null

[

https://issues.apache.org/jira/browse/HIVE-22987?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053861#comment-17053861

]

Hive QA commented on HIVE-22987:

| (x) *{color:red}-1 overall{color}* |

\\

\\

|| Vote || Subsystem || Runtime || Comment ||

|| || || || {color:brown} Prechecks {color} ||

| {color:green}+1{color} | {color:green} @author {color} | {color:green} 0m

0s{color} | {color:green} The patch does not contain any @author tags. {color} |

|| || || || {color:brown} master Compile Tests {color} ||

| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 9m

1s{color} | {color:green} master passed {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 1m

0s{color} | {color:green} master passed {color} |

| {color:green}+1{color} | {color:green} checkstyle {color} | {color:green} 0m

42s{color} | {color:green} master passed {color} |

| {color:blue}0{color} | {color:blue} findbugs {color} | {color:blue} 3m

48s{color} | {color:blue} ql in master has 1531 extant Findbugs warnings.

{color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 0m

54s{color} | {color:green} master passed {color} |

|| || || || {color:brown} Patch Compile Tests {color} ||

| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 1m

17s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 0m

59s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} javac {color} | {color:green} 0m

59s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} checkstyle {color} | {color:green} 0m

43s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} whitespace {color} | {color:green} 0m

0s{color} | {color:green} The patch has no whitespace issues. {color} |

| {color:green}+1{color} | {color:green} findbugs {color} | {color:green} 3m

56s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 0m

53s{color} | {color:green} the patch passed {color} |

|| || || || {color:brown} Other Tests {color} ||

| {color:red}-1{color} | {color:red} asflicense {color} | {color:red} 0m

15s{color} | {color:red} The patch generated 2 ASF License warnings. {color} |

| {color:black}{color} | {color:black} {color} | {color:black} 23m 58s{color} |

{color:black} {color} |

\\

\\

|| Subsystem || Report/Notes ||

| Optional Tests | asflicense javac javadoc findbugs checkstyle compile |

| uname | Linux hiveptest-server-upstream 3.16.0-4-amd64 #1 SMP Debian

3.16.43-2+deb8u5 (2017-09-19) x86_64 GNU/Linux |

| Build tool | maven |

| Personality |

/data/hiveptest/working/yetus_PreCommit-HIVE-Build-20990/dev-support/hive-personality.sh

|

| git revision | master / 1b50b70 |

| Default Java | 1.8.0_111 |

| findbugs | v3.0.1 |

| asflicense |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20990/yetus/patch-asflicense-problems.txt

|

| modules | C: ql U: ql |

| Console output |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20990/yetus.txt |

| Powered by | Apache Yetushttp://yetus.apache.org |

This message was automatically generated.

> ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null

> ---

>

> Key: HIVE-22987

> URL: https://issues.apache.org/jira/browse/HIVE-22987

> Project: Hive

> Issue Type: Bug

>Reporter: Ramesh Kumar Thangarajan

>Assignee: Ramesh Kumar Thangarajan

>Priority: Major

> Attachments: HIVE-22987.1.patch

>

>

> ClassCastException in VectorCoalesce when DataTypePhysicalVariation is null

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HIVE-22760) Add Clock caching eviction based strategy

[ https://issues.apache.org/jira/browse/HIVE-22760?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Slim Bouguerra updated HIVE-22760: -- Attachment: HIVE-22760.patch > Add Clock caching eviction based strategy > - > > Key: HIVE-22760 > URL: https://issues.apache.org/jira/browse/HIVE-22760 > Project: Hive > Issue Type: New Feature > Components: llap >Reporter: Slim Bouguerra >Assignee: Slim Bouguerra >Priority: Major > Attachments: HIVE-22760.patch > > > LRFU is the current default right now. > The main issue with such Strategy is that it has a very high memory overhead, > in addition to that, most of the accounting has to happen under locks thus > can be source of contentions. > Add Simpler policy like clock, can help with both issues. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HIVE-22760) Add Clock caching eviction based strategy

[ https://issues.apache.org/jira/browse/HIVE-22760?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Slim Bouguerra updated HIVE-22760: -- Status: Patch Available (was: Open) > Add Clock caching eviction based strategy > - > > Key: HIVE-22760 > URL: https://issues.apache.org/jira/browse/HIVE-22760 > Project: Hive > Issue Type: New Feature > Components: llap >Reporter: Slim Bouguerra >Assignee: Slim Bouguerra >Priority: Major > Attachments: HIVE-22760.patch > > > LRFU is the current default right now. > The main issue with such Strategy is that it has a very high memory overhead, > in addition to that, most of the accounting has to happen under locks thus > can be source of contentions. > Add Simpler policy like clock, can help with both issues. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HIVE-21851) FireEventResponse should include event id when available

[

https://issues.apache.org/jira/browse/HIVE-21851?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053835#comment-17053835

]

Hive QA commented on HIVE-21851:

Here are the results of testing the latest attachment:

https://issues.apache.org/jira/secure/attachment/12995788/HIVE-21851.06.patch

{color:green}SUCCESS:{color} +1 due to 1 test(s) being added or modified.

{color:green}SUCCESS:{color} +1 due to 18102 tests passed

Test results:

https://builds.apache.org/job/PreCommit-HIVE-Build/20986/testReport

Console output: https://builds.apache.org/job/PreCommit-HIVE-Build/20986/console

Test logs: http://104.198.109.242/logs/PreCommit-HIVE-Build-20986/

Messages:

{noformat}

Executing org.apache.hive.ptest.execution.TestCheckPhase

Executing org.apache.hive.ptest.execution.PrepPhase

Executing org.apache.hive.ptest.execution.YetusPhase

Executing org.apache.hive.ptest.execution.ExecutionPhase

Executing org.apache.hive.ptest.execution.ReportingPhase

{noformat}

This message is automatically generated.

ATTACHMENT ID: 12995788 - PreCommit-HIVE-Build

> FireEventResponse should include event id when available

>

>

> Key: HIVE-21851

> URL: https://issues.apache.org/jira/browse/HIVE-21851

> Project: Hive

> Issue Type: Improvement

>Reporter: Vihang Karajgaonkar

>Assignee: Vihang Karajgaonkar

>Priority: Minor

> Attachments: HIVE-21851.01.patch, HIVE-21851.02.patch,

> HIVE-21851.03.patch, HIVE-21851.04.patch, HIVE-21851.05.patch,

> HIVE-21851.06.patch

>

>

> The metastore API {{fire_listener_event}} gives clients the ability to fire a

> INSERT event on DML operations. However, the returned response is empty

> struct. It would be useful to sent back the event id information in the

> response so that clients can take actions based of the event id.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HIVE-22996) BasicStats parsing should check proactively for null or empty string

[ https://issues.apache.org/jira/browse/HIVE-22996?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053729#comment-17053729 ] Jesus Camacho Rodriguez commented on HIVE-22996: [~kgyrtkirk], could you take a look? Thanks > BasicStats parsing should check proactively for null or empty string > > > Key: HIVE-22996 > URL: https://issues.apache.org/jira/browse/HIVE-22996 > Project: Hive > Issue Type: Bug > Components: Statistics >Reporter: Jesus Camacho Rodriguez >Assignee: Jesus Camacho Rodriguez >Priority: Major > Labels: pull-request-available > Attachments: HIVE-22996.patch > > Time Spent: 10m > Remaining Estimate: 0h > > Rather than throwing an Exception for control flow, which will create > unnecessary overhead. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-21218) KafkaSerDe doesn't support topics created via Confluent Avro serializer

[ https://issues.apache.org/jira/browse/HIVE-21218?focusedWorklogId=399460&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-399460 ] ASF GitHub Bot logged work on HIVE-21218: - Author: ASF GitHub Bot Created on: 07/Mar/20 00:28 Start Date: 07/Mar/20 00:28 Worklog Time Spent: 10m Work Description: cricket007 commented on issue #933: HIVE-21218: Adding support for Confluent Kafka Avro message format URL: https://github.com/apache/hive/pull/933#issuecomment-596019430 I think that is a side effect of the Avro Maven Plugin on that configuration block... You can put `Charsequence` or `String`, I think, but the default is `Utf8` This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 399460) Time Spent: 13.5h (was: 13h 20m) > KafkaSerDe doesn't support topics created via Confluent Avro serializer > --- > > Key: HIVE-21218 > URL: https://issues.apache.org/jira/browse/HIVE-21218 > Project: Hive > Issue Type: Bug > Components: kafka integration, Serializers/Deserializers >Affects Versions: 3.1.1 >Reporter: Milan Baran >Assignee: David McGinnis >Priority: Major > Labels: pull-request-available > Attachments: HIVE-21218.2.patch, HIVE-21218.3.patch, > HIVE-21218.4.patch, HIVE-21218.5.patch, HIVE-21218.6.patch, > HIVE-21218.7.patch, HIVE-21218.patch > > Time Spent: 13.5h > Remaining Estimate: 0h > > According to [Google > groups|https://groups.google.com/forum/#!topic/confluent-platform/JYhlXN0u9_A] > the Confluent avro serialzier uses propertiary format for kafka value - > <4 bytes of schema ID> conforms to schema>. > This format does not cause any problem for Confluent kafka deserializer which > respect the format however for hive kafka handler its bit a problem to > correctly deserialize kafka value, because Hive uses custom deserializer from > bytes to objects and ignores kafka consumer ser/deser classes provided via > table property. > It would be nice to support Confluent format with magic byte. > Also it would be great to support Schema registry as well. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HIVE-22954) Schedule Repl Load using Hive Scheduler

[

https://issues.apache.org/jira/browse/HIVE-22954?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053787#comment-17053787

]

Hive QA commented on HIVE-22954:

Here are the results of testing the latest attachment:

https://issues.apache.org/jira/secure/attachment/12995811/HIVE-22954.20.patch

{color:green}SUCCESS:{color} +1 due to 23 test(s) being added or modified.

{color:green}SUCCESS:{color} +1 due to 18093 tests passed

Test results:

https://builds.apache.org/job/PreCommit-HIVE-Build/20984/testReport

Console output: https://builds.apache.org/job/PreCommit-HIVE-Build/20984/console

Test logs: http://104.198.109.242/logs/PreCommit-HIVE-Build-20984/

Messages:

{noformat}

Executing org.apache.hive.ptest.execution.TestCheckPhase

Executing org.apache.hive.ptest.execution.PrepPhase

Executing org.apache.hive.ptest.execution.YetusPhase

Executing org.apache.hive.ptest.execution.ExecutionPhase

Executing org.apache.hive.ptest.execution.ReportingPhase

{noformat}

This message is automatically generated.

ATTACHMENT ID: 12995811 - PreCommit-HIVE-Build

> Schedule Repl Load using Hive Scheduler

> ---

>

> Key: HIVE-22954

> URL: https://issues.apache.org/jira/browse/HIVE-22954

> Project: Hive

> Issue Type: Task

>Reporter: Aasha Medhi

>Assignee: Aasha Medhi

>Priority: Major

> Labels: pull-request-available

> Attachments: HIVE-22954.01.patch, HIVE-22954.02.patch,

> HIVE-22954.03.patch, HIVE-22954.04.patch, HIVE-22954.05.patch,

> HIVE-22954.06.patch, HIVE-22954.07.patch, HIVE-22954.08.patch,

> HIVE-22954.09.patch, HIVE-22954.10.patch, HIVE-22954.11.patch,

> HIVE-22954.12.patch, HIVE-22954.13.patch, HIVE-22954.15.patch,

> HIVE-22954.16.patch, HIVE-22954.17.patch, HIVE-22954.18.patch,

> HIVE-22954.19.patch, HIVE-22954.20.patch, HIVE-22954.patch

>

> Time Spent: 1h 10m

> Remaining Estimate: 0h

>

> [https://github.com/apache/hive/pull/932]

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HIVE-21851) FireEventResponse should include event id when available

[

https://issues.apache.org/jira/browse/HIVE-21851?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053827#comment-17053827

]

Hive QA commented on HIVE-21851:

| (x) *{color:red}-1 overall{color}* |

\\

\\

|| Vote || Subsystem || Runtime || Comment ||

|| || || || {color:brown} Prechecks {color} ||

| {color:green}+1{color} | {color:green} @author {color} | {color:green} 0m

0s{color} | {color:green} The patch does not contain any @author tags. {color} |

|| || || || {color:brown} master Compile Tests {color} ||

| {color:blue}0{color} | {color:blue} mvndep {color} | {color:blue} 2m

2s{color} | {color:blue} Maven dependency ordering for branch {color} |

| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 7m

34s{color} | {color:green} master passed {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 1m

20s{color} | {color:green} master passed {color} |

| {color:green}+1{color} | {color:green} checkstyle {color} | {color:green} 0m

41s{color} | {color:green} master passed {color} |

| {color:blue}0{color} | {color:blue} findbugs {color} | {color:blue} 2m

32s{color} | {color:blue} standalone-metastore/metastore-common in master has

35 extant Findbugs warnings. {color} |

| {color:blue}0{color} | {color:blue} findbugs {color} | {color:blue} 1m

15s{color} | {color:blue} standalone-metastore/metastore-server in master has

185 extant Findbugs warnings. {color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 1m

29s{color} | {color:green} master passed {color} |

|| || || || {color:brown} Patch Compile Tests {color} ||

| {color:blue}0{color} | {color:blue} mvndep {color} | {color:blue} 0m

28s{color} | {color:blue} Maven dependency ordering for patch {color} |

| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 1m

28s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 1m

17s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} javac {color} | {color:green} 1m

17s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} checkstyle {color} | {color:green} 0m

44s{color} | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} whitespace {color} | {color:green} 0m

0s{color} | {color:green} The patch has no whitespace issues. {color} |

| {color:red}-1{color} | {color:red} findbugs {color} | {color:red} 1m

24s{color} | {color:red} standalone-metastore/metastore-server generated 1 new

+ 185 unchanged - 0 fixed = 186 total (was 185) {color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 1m

28s{color} | {color:green} the patch passed {color} |

|| || || || {color:brown} Other Tests {color} ||

| {color:red}-1{color} | {color:red} asflicense {color} | {color:red} 0m

14s{color} | {color:red} The patch generated 2 ASF License warnings. {color} |

| {color:black}{color} | {color:black} {color} | {color:black} 27m 21s{color} |

{color:black} {color} |

\\

\\

|| Reason || Tests ||

| FindBugs | module:standalone-metastore/metastore-server |

| | Boxing/unboxing to parse a primitive

org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.fire_listener_event(FireEventRequest)

At

HiveMetaStore.java:org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.fire_listener_event(FireEventRequest)

At HiveMetaStore.java:[line 8623] |

\\

\\

|| Subsystem || Report/Notes ||

| Optional Tests | asflicense javac javadoc findbugs checkstyle compile |

| uname | Linux hiveptest-server-upstream 3.16.0-4-amd64 #1 SMP Debian

3.16.43-2+deb8u5 (2017-09-19) x86_64 GNU/Linux |

| Build tool | maven |

| Personality |

/data/hiveptest/working/yetus_PreCommit-HIVE-Build-20986/dev-support/hive-personality.sh

|

| git revision | master / 1b50b70 |

| Default Java | 1.8.0_111 |

| findbugs | v3.0.0 |

| findbugs |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20986/yetus/new-findbugs-standalone-metastore_metastore-server.html

|

| asflicense |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20986/yetus/patch-asflicense-problems.txt

|

| modules | C: standalone-metastore/metastore-common

standalone-metastore/metastore-server itests/hcatalog-unit U: . |

| Console output |

http://104.198.109.242/logs//PreCommit-HIVE-Build-20986/yetus.txt |

| Powered by | Apache Yetushttp://yetus.apache.org |

This message was automatically generated.

> FireEventResponse should include event id when available

>

>

> Key: HIVE-21851

> URL: https://issues.apache.org/jira/browse/HIVE-21851

> Project: Hive

> Issue Type: Improvement

>Reporter: Vihang Karajgaonkar

>Assignee: Vihang Karajgaonkar

>Priority: Mino

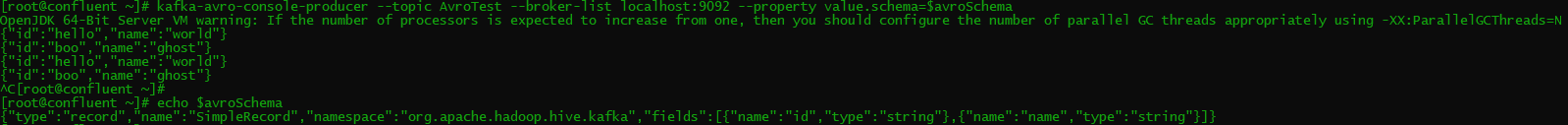

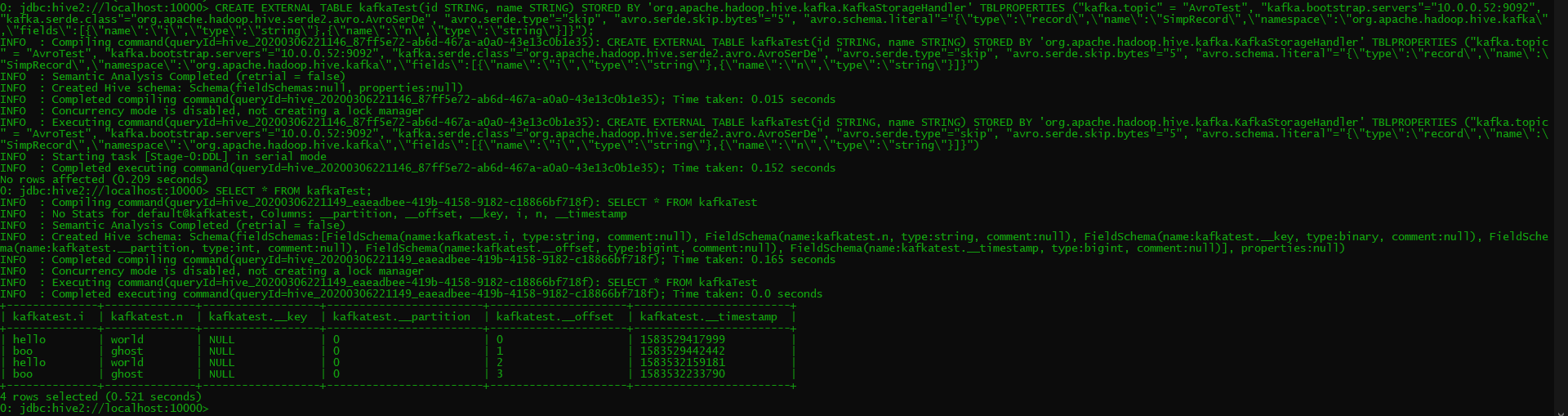

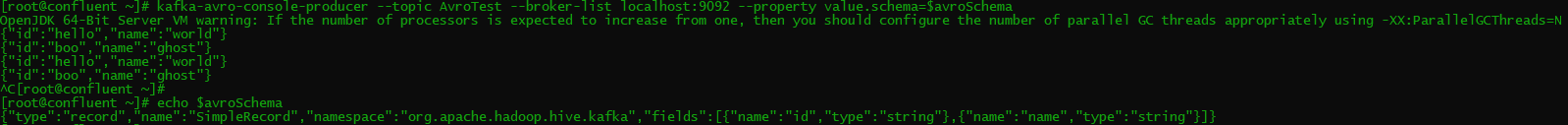

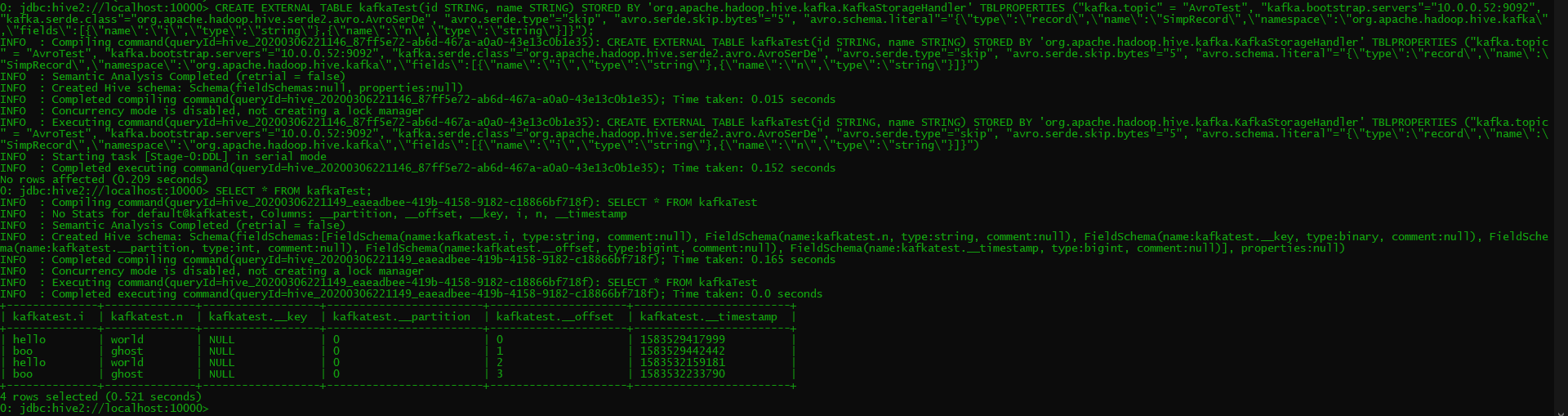

[jira] [Work logged] (HIVE-21218) KafkaSerDe doesn't support topics created via Confluent Avro serializer

[ https://issues.apache.org/jira/browse/HIVE-21218?focusedWorklogId=399396&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-399396 ] ASF GitHub Bot logged work on HIVE-21218: - Author: ASF GitHub Bot Created on: 06/Mar/20 22:19 Start Date: 06/Mar/20 22:19 Worklog Time Spent: 10m Work Description: davidov541 commented on issue #933: HIVE-21218: Adding support for Confluent Kafka Avro message format URL: https://github.com/apache/hive/pull/933#issuecomment-595988456 OK, I was able to successfully test this build using a Confluent single-node cluster and a Hive pseudo-standalone cluster. I was able to create a topic with a simple Avro schema and a few records, and then read that from Hive successfully. Confluent Cluster Production:  Hive Table Creation and Querying:  One thing I noticed was that on the Hive side, if I used the exact same schema as the SimpleRecord schema which we use for testing, I got the following error. As you can see in the screenshots, I was able to edit the field and schema names, and avoid this error, so it was specifically due to Hive pulling in the SimpleRecord class which we use for testing. ``` 2020-03-06T22:05:23,739 WARN [HiveServer2-Handler-Pool: Thread-165] thrift.ThriftCLIService: Error fetching results: org.apache.hive.service.cli.HiveSQLException: java.io.IOException: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String at org.apache.hive.service.cli.operation.SQLOperation.getNextRowSet(SQLOperation.java:481) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.cli.operation.OperationManager.getOperationNextRowSet(OperationManager.java:331) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.cli.session.HiveSessionImpl.fetchResults(HiveSessionImpl.java:946) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.cli.CLIService.fetchResults(CLIService.java:567) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.cli.thrift.ThriftCLIService.FetchResults(ThriftCLIService.java:801) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.rpc.thrift.TCLIService$Processor$FetchResults.getResult(TCLIService.java:1837) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.rpc.thrift.TCLIService$Processor$FetchResults.getResult(TCLIService.java:1822) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:56) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_242] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_242] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_242] Caused by: java.io.IOException: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String at org.apache.hadoop.hive.ql.exec.FetchOperator.getNextRow(FetchOperator.java:638) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hadoop.hive.ql.exec.FetchOperator.pushRow(FetchOperator.java:545) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hadoop.hive.ql.exec.FetchTask.fetch(FetchTask.java:150) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hadoop.hive.ql.Driver.getResults(Driver.java:880) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hadoop.hive.ql.reexec.ReExecDriver.getResults(ReExecDriver.java:241) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.cli.operation.SQLOperation.getNextRowSet(SQLOperation.java:476) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] ... 13 more Caused by: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String at org.apache.hadoop.hive.kafka.SimpleRecord.put(SimpleRecord.java:88) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0

[jira] [Updated] (HIVE-22996) BasicStats parsing should check proactively for null or empty string

[ https://issues.apache.org/jira/browse/HIVE-22996?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HIVE-22996: -- Labels: pull-request-available (was: ) > BasicStats parsing should check proactively for null or empty string > > > Key: HIVE-22996 > URL: https://issues.apache.org/jira/browse/HIVE-22996 > Project: Hive > Issue Type: Bug > Components: Statistics >Reporter: Jesus Camacho Rodriguez >Assignee: Jesus Camacho Rodriguez >Priority: Major > Labels: pull-request-available > Attachments: HIVE-22996.patch > > > Rather than throwing an Exception for control flow, which will create > unnecessary overhead. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Work logged] (HIVE-22996) BasicStats parsing should check proactively for null or empty string

[ https://issues.apache.org/jira/browse/HIVE-22996?focusedWorklogId=399331&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-399331 ] ASF GitHub Bot logged work on HIVE-22996: - Author: ASF GitHub Bot Created on: 06/Mar/20 19:48 Start Date: 06/Mar/20 19:48 Worklog Time Spent: 10m Work Description: jcamachor commented on pull request #942: HIVE-22996 URL: https://github.com/apache/hive/pull/942 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 399331) Remaining Estimate: 0h Time Spent: 10m > BasicStats parsing should check proactively for null or empty string > > > Key: HIVE-22996 > URL: https://issues.apache.org/jira/browse/HIVE-22996 > Project: Hive > Issue Type: Bug > Components: Statistics >Reporter: Jesus Camacho Rodriguez >Assignee: Jesus Camacho Rodriguez >Priority: Major > Labels: pull-request-available > Attachments: HIVE-22996.patch > > Time Spent: 10m > Remaining Estimate: 0h > > Rather than throwing an Exception for control flow, which will create > unnecessary overhead. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HIVE-22126) hive-exec packaging should shade guava

[ https://issues.apache.org/jira/browse/HIVE-22126?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17053747#comment-17053747 ] Eugene Chung commented on HIVE-22126: - If common-complier and janino modules are included in hive-exec, jar signing error is occurred. Execution default-test of goal org.apache.maven.plugins:maven-surefire-plugin:2.21.0:test failed: java.lang.SecurityException: Invalid signature file digest for Manifest main attributes at org.apache.maven.lifecycle.internal.MojoExecutor.execute (MojoExecutor.java:215) at org.apache.maven.lifecycle.internal.MojoExecutor.execute (MojoExecutor.java:156) at org.apache.maven.lifecycle.internal.MojoExecutor.execute (MojoExecutor.java:148) at org.apache.maven.lifecycle.internal.LifecycleModuleBuilder.buildProject (LifecycleModuleBuilder.java:117) at org.apache.maven.lifecycle.internal.LifecycleModuleBuilder.buildProject (LifecycleModuleBuilder.java:81) at org.apache.maven.lifecycle.internal.builder.singlethreaded.SingleThreadedBuilder.build (SingleThreadedBuilder.java:56) at org.apache.maven.lifecycle.internal.LifecycleStarter.execute (LifecycleStarter.java:128) at org.apache.maven.DefaultMaven.doExecute (DefaultMaven.java:305) at org.apache.maven.DefaultMaven.doExecute (DefaultMaven.java:192) at org.apache.maven.DefaultMaven.execute (DefaultMaven.java:105) at org.apache.maven.cli.MavenCli.execute (MavenCli.java:957) at org.apache.maven.cli.MavenCli.doMain (MavenCli.java:289) at org.apache.maven.cli.MavenCli.main (MavenCli.java:193) at sun.reflect.NativeMethodAccessorImpl.invoke0 (Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke (NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke (DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke (Method.java:498) at org.codehaus.plexus.classworlds.launcher.Launcher.launchEnhanced (Launcher.java:282) at org.codehaus.plexus.classworlds.launcher.Launcher.launch (Launcher.java:225) at org.codehaus.plexus.classworlds.launcher.Launcher.mainWithExitCode (Launcher.java:406) at org.codehaus.plexus.classworlds.launcher.Launcher.main (Launcher.java:347) Caused by: org.apache.maven.plugin.PluginExecutionException: Execution default-test of goal org.apache.maven.plugins:maven-surefire-plugin:2.21.0:test failed: java.lang.SecurityException: Invalid signature file digest for Manifest main attributes at org.apache.maven.plugin.DefaultBuildPluginManager.executeMojo (DefaultBuildPluginManager.java:148) at org.apache.maven.lifecycle.internal.MojoExecutor.execute (MojoExecutor.java:210) at org.apache.maven.lifecycle.internal.MojoExecutor.execute (MojoExecutor.java:156) at org.apache.maven.lifecycle.internal.MojoExecutor.execute (MojoExecutor.java:148) at org.apache.maven.lifecycle.internal.LifecycleModuleBuilder.buildProject (LifecycleModuleBuilder.java:117) at org.apache.maven.lifecycle.internal.LifecycleModuleBuilder.buildProject (LifecycleModuleBuilder.java:81) at org.apache.maven.lifecycle.internal.builder.singlethreaded.SingleThreadedBuilder.build (SingleThreadedBuilder.java:56) at org.apache.maven.lifecycle.internal.LifecycleStarter.execute (LifecycleStarter.java:128) at org.apache.maven.DefaultMaven.doExecute (DefaultMaven.java:305) at org.apache.maven.DefaultMaven.doExecute (DefaultMaven.java:192) at org.apache.maven.DefaultMaven.execute (DefaultMaven.java:105) at org.apache.maven.cli.MavenCli.execute (MavenCli.java:957) at org.apache.maven.cli.MavenCli.doMain (MavenCli.java:289) at org.apache.maven.cli.MavenCli.main (MavenCli.java:193) at sun.reflect.NativeMethodAccessorImpl.invoke0 (Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke (NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke (DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke (Method.java:498) at org.codehaus.plexus.classworlds.launcher.Launcher.launchEnhanced (Launcher.java:282) at org.codehaus.plexus.classworlds.launcher.Launcher.launch (Launcher.java:225) at org.codehaus.plexus.classworlds.launcher.Launcher.mainWithExitCode (Launcher.java:406) at org.codehaus.plexus.classworlds.launcher.Launcher.main (Launcher.java:347) Caused by: org.apache.maven.surefire.util.SurefireReflectionException: java.lang.SecurityException: Invalid signature file digest for Manifest main attributes at org.apache.maven.surefire.util.ReflectionUtils.invokeMethodWithArray (ReflectionUtils.java:197) at org.apache.maven.surefire.util.ReflectionUtils.invokeGetter (ReflectionUtils.java:76) at org.apache.maven.surefire.util.ReflectionUtils.invokeGetter (ReflectionUtils.java:70) at org.apache.maven.surefire.booter.ProviderFactory$ProviderProxy.getSuites (ProviderFactory.java:144) at org.apache.maven.plugin.surefire.booterclient.ForkStarter.getSuitesIterator (ForkStarter.java:699) at org.apach

[jira] [Work logged] (HIVE-21218) KafkaSerDe doesn't support topics created via Confluent Avro serializer

[ https://issues.apache.org/jira/browse/HIVE-21218?focusedWorklogId=399394&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-399394 ] ASF GitHub Bot logged work on HIVE-21218: - Author: ASF GitHub Bot Created on: 06/Mar/20 22:17 Start Date: 06/Mar/20 22:17 Worklog Time Spent: 10m Work Description: davidov541 commented on issue #933: HIVE-21218: Adding support for Confluent Kafka Avro message format URL: https://github.com/apache/hive/pull/933#issuecomment-595988456 OK, I was able to successfully test this build using a Confluent single-node cluster and a Hive pseudo-standalone cluster. I was able to create a topic with a simple Avro schema and a few records, and then read that from Hive successfully. Confluent Cluster Production:  Hive Table Creation and Querying:  One thing I noticed was that on the Hive side, if I used the exact same schema as the SimpleRecord schema which we use for testing, I got the following error. ``` 2020-03-06T22:05:23,739 WARN [HiveServer2-Handler-Pool: Thread-165] thrift.ThriftCLIService: Error fetching results: org.apache.hive.service.cli.HiveSQLException: java.io.IOException: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String at org.apache.hive.service.cli.operation.SQLOperation.getNextRowSet(SQLOperation.java:481) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.cli.operation.OperationManager.getOperationNextRowSet(OperationManager.java:331) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.cli.session.HiveSessionImpl.fetchResults(HiveSessionImpl.java:946) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.cli.CLIService.fetchResults(CLIService.java:567) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.cli.thrift.ThriftCLIService.FetchResults(ThriftCLIService.java:801) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.rpc.thrift.TCLIService$Processor$FetchResults.getResult(TCLIService.java:1837) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.rpc.thrift.TCLIService$Processor$FetchResults.getResult(TCLIService.java:1822) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:56) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_242] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_242] at java.lang.Thread.run(Thread.java:748) [?:1.8.0_242] Caused by: java.io.IOException: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String at org.apache.hadoop.hive.ql.exec.FetchOperator.getNextRow(FetchOperator.java:638) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hadoop.hive.ql.exec.FetchOperator.pushRow(FetchOperator.java:545) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hadoop.hive.ql.exec.FetchTask.fetch(FetchTask.java:150) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hadoop.hive.ql.Driver.getResults(Driver.java:880) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hadoop.hive.ql.reexec.ReExecDriver.getResults(ReExecDriver.java:241) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.hive.service.cli.operation.SQLOperation.getNextRowSet(SQLOperation.java:476) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] ... 13 more Caused by: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String at org.apache.hadoop.hive.kafka.SimpleRecord.put(SimpleRecord.java:88) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT] at org.apache.avro.generic.GenericData.setField(GenericData.java:690) ~[avro-1.8.2.jar:1.8.2] at org.apache.avro.specific.SpecificDatumReader.readField(SpecificDatum

[jira] [Updated] (HIVE-22953) Update Apache Arrow and flatbuffer versions

[ https://issues.apache.org/jira/browse/HIVE-22953?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jesus Camacho Rodriguez updated HIVE-22953: --- Attachment: HIVE-22953.patch > Update Apache Arrow and flatbuffer versions > --- > > Key: HIVE-22953 > URL: https://issues.apache.org/jira/browse/HIVE-22953 > Project: Hive > Issue Type: Improvement > Components: Serializers/Deserializers >Reporter: Jesus Camacho Rodriguez >Assignee: Jesus Camacho Rodriguez >Priority: Major > Attachments: HIVE-22953.patch, HIVE-22953.patch > > > HIVE-22827 updated flatbuffer version to 1.6.0.1. Current Arrow version > consumed by Hive uses 1.2.0 (com.vlkan:flatbuffers version). > This issue is to update Arrow and flatbuffers (from official flatbuffers > release, same version used by Arrow). -- This message was sent by Atlassian Jira (v8.3.4#803005)