[GitHub] nifi issue #3200: NIFI-5826 WIP Fix back-slash escaping at Lexers

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/3200 Reviewing... ---

[GitHub] nifi pull request #3183: NIFI-5826 Fix to escaped backslash

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/3183#discussion_r238642945

--- Diff:

nifi-commons/nifi-record-path/src/main/java/org/apache/nifi/record/path/util/RecordPathUtils.java

---

@@ -39,4 +39,52 @@ public static String getFirstStringValue(final RecordPathSegment segment, final

return stringValue;

}

+

+/**

+ * This method handles backslash sequences after ANTLR parser converts all backslash into double ones

+ * with exception for \t, \r and \n. See

+ * org/apache/nifi/record/path/RecordPathParser.g

--- End diff --

@ijokarumawak ,

I understand what you are talking about, that was my first idea as well.

The problem is the regression this would cause in WORKING functionality, such as EL.

If we change the Lexer in all three cases, we will have a backward compatibility problem.

As an example, define a GenerateFlowFile (GFF) with attribute "a1" having the following value (with an actual new line):

```

"this is new line

and this is just a backslash \n"

```

(It doesn't matter whether the value is quoted or not.)

Next, configure UpdateAttribute with:

`a1: "${a:replaceAll('\\n','_')}"`

and

`a2: "${a:replaceAll('\n','_')}"`

(note the double vs. single backslash)

In both cases, the only character replaced is the actual new line, resulting in:

`a1 = a2 = "this is new line_and this is just a backslash \n"`

If we change the Lexer for EL, this behavior will change, resulting in:

`a1 = "this is new line`

`and this is just a backslash _"`

`a2 = "this is new line_and this is just a backslash \n"`
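The difference between these outcomes comes down to which regex the engine finally receives. A minimal `java.util.regex` sketch, assuming a changed EL lexer would pass `'\\n'` through as the regex `\\n` (an escaped backslash followed by `n`) and `'\n'` as the regex `\n` (a line feed); the class `RegexNewlineDemo` is hypothetical and not part of NiFi:

```java
import java.util.regex.Pattern;

final class RegexNewlineDemo {
    // Attribute value: an actual newline, then a literal backslash followed by 'n'
    static final String VALUE = "this is new line\nand this is just a backslash \\n";

    // Regex \\n: matches the literal two-character sequence backslash + 'n'
    static String replaceLiteralBackslashN(String input) {
        return Pattern.compile("\\\\n").matcher(input).replaceAll("_");
    }

    // Regex \n: matches an actual line feed character
    static String replaceNewline(String input) {
        return Pattern.compile("\\n").matcher(input).replaceAll("_");
    }

    public static void main(String[] args) {
        System.out.println(replaceLiteralBackslashN(VALUE)); // corresponds to a1 with a changed lexer
        System.out.println(replaceNewline(VALUE));           // corresponds to a2
    }
}
```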

Of course, we could do it the "right" way for the RecordPath regex-based functions, but then users would get a different experience with regex in RecordPath and in EL, which I think should be avoided.

Regarding the Java method "unescapeBackslash": as the name suggests, this function is meant to handle string values that contain backslashes. I do agree that, for the test cases we have, some parts of the code are dead, but since this is a public method in a utility class, it can have generic functionality to support a wider variety of use cases.
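To make the idea concrete, here is a minimal sketch of this kind of transformation, assuming the lexer has already turned each escaped backslash into a doubled one. It is not the PR's `unescapeBackslash`: the class `BackslashUnescapeSketch` and method `collapseBackslashPairs` are hypothetical, and the method only collapses backslash pairs, leaving every other character untouched.

```java
import java.util.regex.Pattern;

final class BackslashUnescapeSketch {
    // Hypothetical helper (not NiFi's RecordPathUtils.unescapeBackslash):
    // collapses each pair of consecutive backslashes into a single backslash
    // and copies every other character unchanged.
    static String collapseBackslashPairs(String value) {
        if (value == null || value.isEmpty()) {
            return value;
        }
        final StringBuilder sb = new StringBuilder(value.length());
        boolean lastCharIsBackslash = false;
        for (int i = 0; i < value.length(); i++) {
            final char c = value.charAt(i);
            if (lastCharIsBackslash) {
                if (c != '\\') {
                    sb.append('\\'); // a lone backslash is kept as-is
                }
                sb.append(c); // for a pair, only one backslash is emitted
                lastCharIsBackslash = false;
            } else if (c == '\\') {
                lastCharIsBackslash = true;
            } else {
                sb.append(c);
            }
        }
        if (lastCharIsBackslash) {
            sb.append('\\'); // trailing lone backslash
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // The lexer is assumed to hand over the three characters \\s
        String lexed = "\\\\s";
        String regex = collapseBackslashPairs(lexed); // now the two characters \s
        System.out.println(Pattern.compile(regex).matcher("a b\tc").replaceAll("_"));
    }
}
```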

Would appreciate your feedback!

---

[GitHub] nifi pull request #3183: NIFI-5826 Fix to escaped backslash

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/3183#discussion_r237529258

--- Diff:

nifi-commons/nifi-record-path/src/main/java/org/apache/nifi/record/path/util/RecordPathUtils.java

---

@@ -39,4 +39,52 @@ public static String getFirstStringValue(final RecordPathSegment segment, final

return stringValue;

}

+

+/**

+ * This method handles backslash sequences after ANTLR parser converts all backslash into double ones

+ * with exception for \t, \r and \n. See

+ * org/apache/nifi/record/path/RecordPathParser.g

--- End diff --

Yeah, that was supposed to be the Lexer (I'll update the PR later, in case I need to make more changes).

Regarding your question about the Lexer: when I started working on this issue, I also looked at the Lexer first, trying to understand all these escapes for backslash. After that, I looked at the Expression Language Lexer and found the same code there:

https://github.com/apache/nifi/blob/master/nifi-commons/nifi-expression-language/src/main/antlr3/org/apache/nifi/attribute/expression/language/antlr/AttributeExpressionLexer.g#L235

Then I started digging into the actual Java code and understood the reasons. ANTLR parses the text coming from a text box. All special backslash sequences (\r, \n, \t) must be supported and escaped so that actual new lines and tab characters can be captured. But if you support backslash sequences, you also need to escape "\" itself, so there is another escape for the backslash on line 152. And once a single backslash is escaped as a special character, the rest of the characters are escaped as a double backslash plus the character (line 155), to avoid confusion with \r, \n, \t and \\ and to preserve an actual two-character sequence such as "\t" or "\r".

Changing the Lexer would change the default behavior for backslash sequences not only for regex functions, but for all record-based input. That would create a backward compatibility issue, and that is the main reason I decided not to change the Lexer. Also, as I mentioned above, the current escape policy is consistent with EL, so I preferred to keep that consistency and just fix the bug of not unescaping backslashes for regular expressions.

Let me know if that makes sense, or if you have better ideas.

---
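The escape policy described in the comment above can be simulated in plain Java. This is a hypothetical re-creation for illustration only (the class `LexerEscapeSketch` is not NiFi code): the real rule lives in the ANTLR grammar and may cover more cases, such as quotes. The sketch follows only the mappings the comment names: `\n`, `\r` and `\t` become control characters, `\\` becomes two backslashes, and any other `\x` becomes two backslashes plus `x`.

```java
final class LexerEscapeSketch {
    // Hypothetical re-creation of the escape policy described above;
    // the real rule is an ANTLR lexer fragment, not this method.
    static String applyEscapes(String source) {
        final StringBuilder out = new StringBuilder(source.length());
        for (int i = 0; i < source.length(); i++) {
            final char c = source.charAt(i);
            if (c != '\\' || i + 1 == source.length()) {
                out.append(c); // ordinary character, or a trailing lone backslash
                continue;
            }
            final char next = source.charAt(++i);
            switch (next) {
                case 'n':
                    out.append('\n'); // \n becomes an actual line feed
                    break;
                case 'r':
                    out.append('\r'); // \r becomes an actual carriage return
                    break;
                case 't':
                    out.append('\t'); // \t becomes an actual tab
                    break;
                case '\\':
                    out.append("\\\\"); // \\ becomes two backslash characters
                    break;
                default:
                    out.append("\\\\").append(next); // \x becomes two backslashes plus x
                    break;
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        // Both \s and \\s in the source yield the same three characters \\s
        System.out.println(applyEscapes("\\s"));
        System.out.println(applyEscapes("\\\\s"));
    }
}
```

Under this policy, `\s` and `\\s` in the source both come out as the three characters `\\s`, so a regex built from the lexer output needs one more unescaping step before it is compiled.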

[GitHub] nifi pull request #3183: NIFI-5826 Fix to escaped backslash

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/3183#discussion_r236843474

--- Diff:

nifi-commons/nifi-record-path/src/main/java/org/apache/nifi/record/path/util/RecordPathUtils.java

---

@@ -39,4 +39,44 @@ public static String getFirstStringValue(final RecordPathSegment segment, final

return stringValue;

}

+

+public static String unescapeBackslash(String value) {

+if (value == null || value.isEmpty()) {

+return value;

+}

+// need to escape characters after backslashes

+final StringBuilder sb = new StringBuilder();

+boolean lastCharIsBackslash = false;

+for (int i = 0; i < value.length(); i++) {

+final char c = value.charAt(i);

+

--- End diff --

@ottobackwards I've added Javadocs that reference the ANTLR parser and explain the reasons for this transformation.

---

[GitHub] nifi pull request #3183: NIFI-5826 Fix to escaped backslash

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/3183#discussion_r236829982

--- Diff:

nifi-commons/nifi-record-path/src/test/java/org/apache/nifi/record/path/TestRecordPath.java

---

@@ -1008,12 +1008,16 @@ public void testReplaceRegex() {

final List fields = new ArrayList<>();

fields.add(new RecordField("id", RecordFieldType.INT.getDataType()));

fields.add(new RecordField("name", RecordFieldType.STRING.getDataType()));

--- End diff --

Added tests to cover all of the special characters. Also added backslash escape sequences to test the exceptions.

---

[GitHub] nifi pull request #3183: NIFI-5826 Fix to escaped backslash

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/3183#discussion_r236789857

--- Diff:

nifi-commons/nifi-record-path/src/main/java/org/apache/nifi/record/path/util/RecordPathUtils.java

---

@@ -39,4 +39,44 @@ public static String getFirstStringValue(final RecordPathSegment segment, final

return stringValue;

}

+

+public static String unescapeBackslash(String value) {

+if (value == null || value.isEmpty()) {

+return value;

+}

+// need to escape characters after backslashes

+final StringBuilder sb = new StringBuilder();

+boolean lastCharIsBackslash = false;

+for (int i = 0; i < value.length(); i++) {

+final char c = value.charAt(i);

+

--- End diff --

Added to the PR description:

EL reference: StringLiteralEvaluator. Special characters (backslash sequences) are handled for any string literal value, which affects the way regexes and escaped characters must be defined. The same logic has been added to RecordPathUtils.unescapeBackslash.

---

[GitHub] nifi pull request #3183: NIFI-5826 Fix to escaped backslash

GitHub user bdesert opened a pull request:

https://github.com/apache/nifi/pull/3183

NIFI-5826 Fix to escaped backslash

Fixing escaped backslash by unescaping it in Java just before compiling the regex.

-

For consistency with Expression Language regex-based evaluators, all RecordPath operators will follow the same escape sequences for regex: a single backslash should be defined as a double backslash.

Examples:

- replaceRegex(/col1, '\\s', "_") - will replace all whitespace (spaces, tabs) with an underscore.

- replaceRegex(/col1, '\\.', ",") - will replace all periods with a comma.

- replaceRegex(/col1, '', "/") - will replace a backslash with a forward slash.
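The effect of the fix on the first two examples can be checked with plain `java.util.regex`. This is a sketch under an assumption: before the fix, the regex reaching `Pattern.compile` for `'\\s'` is the three characters `\\s`, and after unescaping it is `\s`. The class `ReplaceRegexSketch` is hypothetical and only mimics what `replaceRegex` does with the final pattern.

```java
import java.util.regex.Pattern;

final class ReplaceRegexSketch {
    static String replaceAll(String regex, String input, String replacement) {
        return Pattern.compile(regex).matcher(input).replaceAll(replacement);
    }

    public static void main(String[] args) {
        final String input = "a b\tc.d";
        // Without unescaping: regex \\s only matches a backslash followed by 's'
        System.out.println(replaceAll("\\\\s", input, "_"));
        // After unescaping: regex \s matches whitespace such as spaces and tabs
        System.out.println(replaceAll("\\s", input, "_"));
        // Regex \. matches a literal period
        System.out.println(replaceAll("\\.", input, ","));
    }
}
```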

-

Thank you for submitting a contribution to Apache NiFi.

In order to streamline the review of the contribution we ask you to ensure the following steps have been taken:

### For all changes:

- [x] Is there a JIRA ticket associated with this PR? Is it referenced in the commit message?

- [x] Does your PR title start with NIFI- where is the JIRA number you are trying to resolve? Pay particular attention to the hyphen "-" character.

- [x] Has your PR been rebased against the latest commit within the target branch (typically master)?

- [x] Is your initial contribution a single, squashed commit?

### For code changes:

- [x] Have you ensured that the full suite of tests is executed via mvn -Pcontrib-check clean install at the root nifi folder?

- [x] Have you written or updated unit tests to verify your changes?

- [ ] If adding new dependencies to the code, are these dependencies licensed in a way that is compatible for inclusion under [ASF 2.0](http://www.apache.org/legal/resolved.html#category-a)?

- [ ] If applicable, have you updated the LICENSE file, including the main LICENSE file under nifi-assembly?

- [ ] If applicable, have you updated the NOTICE file, including the main NOTICE file found under nifi-assembly?

- [ ] If adding new Properties, have you added .displayName in addition to .name (programmatic access) for each of the new properties?

### For documentation related changes:

- [ ] Have you ensured that format looks appropriate for the output in which it is rendered?

### Note:

Please ensure that once the PR is submitted, you check travis-ci for build issues and submit an update to your PR as soon as possible.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/bdesert/nifi NIFI-5826_RegexPath

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/nifi/pull/3183.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #3183

commit 4e0eed56f76556b323f96640d4d3126d35750096

Author: Ed

Date: 2018-11-27T17:19:20Z

NIFI-5826 Fix to escaped backslash

Fixing escaped backslash by unescaping it in Java just before compiling the regex.

For consistency with Expression Language regex-based evaluators, all RecordPath operators will follow the same escape sequences for regex: a single backslash should be defined as a double backslash.

Examples:

- replaceRegex(/col1, '\\s', "_") - will replace all whitespace (spaces, tabs) with an underscore.

- replaceRegex(/col1, '\\.', ",") - will replace all periods with a comma.

- replaceRegex(/col1, '', "/") - will replace a backslash with a forward slash.


---

[GitHub] nifi issue #1953: NIFI-4130 Add lookup controller service in TransformXML to...

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/1953 +1 LGTM. Tested on local env with the XSLT as a file (regression) and with the lookup service, with cache size 0 and >0; all works as expected. Ready for merge. @mattyb149, could you please give it a final look? ---

[GitHub] nifi pull request #1953: NIFI-4130 Add lookup controller service in Transfor...

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/1953#discussion_r233016908

--- Diff:

nifi-nar-bundles/nifi-standard-bundle/nifi-standard-processors/src/main/java/org/apache/nifi/processors/standard/TransformXml.java

---

@@ -82,12 +94,32 @@

public static final PropertyDescriptor XSLT_FILE_NAME = new PropertyDescriptor.Builder()

.name("XSLT file name")

-.description("Provides the name (including full path) of the XSLT file to apply to the flowfile XML content.")

-.required(true)

+.description("Provides the name (including full path) of the XSLT file to apply to the flowfile XML content."

++ "One of the XSLT file name and XSLT controller properties must be defined.")

+.required(false)

.expressionLanguageSupported(ExpressionLanguageScope.FLOWFILE_ATTRIBUTES)

.addValidator(StandardValidators.FILE_EXISTS_VALIDATOR)

.build();

+public static final PropertyDescriptor XSLT_CONTROLLER = new PropertyDescriptor.Builder()

+.name("xslt-controller")

+.displayName("XSLT controller")

--- End diff --

"XSLT Lookup" would be more readable.

Description: "Lookup controller used to store..."

And for XSLT_CONTROLLER_KEY: "XSLT Lookup Key" (the description looks fine).

---

[GitHub] nifi pull request #1953: NIFI-4130 Add lookup controller service in Transfor...

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/1953#discussion_r233019686

--- Diff:

nifi-nar-bundles/nifi-standard-bundle/nifi-standard-processors/src/main/java/org/apache/nifi/processors/standard/TransformXml.java

---

@@ -82,12 +94,32 @@

public static final PropertyDescriptor XSLT_FILE_NAME = new PropertyDescriptor.Builder()

.name("XSLT file name")

-.description("Provides the name (including full path) of the XSLT file to apply to the flowfile XML content.")

-.required(true)

+.description("Provides the name (including full path) of the XSLT file to apply to the flowfile XML content."

++ "One of the XSLT file name and XSLT controller properties must be defined.")

+.required(false)

.expressionLanguageSupported(ExpressionLanguageScope.FLOWFILE_ATTRIBUTES)

.addValidator(StandardValidators.FILE_EXISTS_VALIDATOR)

.build();

+public static final PropertyDescriptor XSLT_CONTROLLER = new PropertyDescriptor.Builder()

+.name("xslt-controller")

+.displayName("XSLT controller")

+.description("Controller used to store XSLT definitions. One of the XSLT file name and "

++ "XSLT controller properties must be defined.")

+.required(false)

+.identifiesControllerService(StringLookupService.class)

+.build();

+

+public static final PropertyDescriptor XSLT_CONTROLLER_KEY = new PropertyDescriptor.Builder()

+.name("xslt-controller-key")

+.displayName("XSLT controller key")

+.description("Key used to retrieve the XSLT definition from the XSLT controller. This property must be set when using "

++ "the XSLT controller property.")

+.required(false)

+.expressionLanguageSupported(ExpressionLanguageScope.FLOWFILE_ATTRIBUTES)

+.addValidator(StandardValidators.NON_EMPTY_VALIDATOR)

--- End diff --

Since this property supports EL, shouldn't it use NON_EMPTY_**EL**_VALIDATOR?

---

[GitHub] nifi issue #1953: NIFI-4130 Add lookup controller service in TransformXML to...

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/1953 Reviewing... ---

[GitHub] nifi pull request #3164: NIFI-5810 Add UserName EL support to JMS processors

GitHub user bdesert opened a pull request:

https://github.com/apache/nifi/pull/3164

NIFI-5810 Add UserName EL support to JMS processors

Adding EL support to the "User Name" property of ConsumeJMS and PublishJMS.

Thank you for submitting a contribution to Apache NiFi.

In order to streamline the review of the contribution we ask you to ensure the following steps have been taken:

### For all changes:

- [x] Is there a JIRA ticket associated with this PR? Is it referenced in the commit message?

- [x] Does your PR title start with NIFI- where is the JIRA number you are trying to resolve? Pay particular attention to the hyphen "-" character.

- [x] Has your PR been rebased against the latest commit within the target branch (typically master)?

- [x] Is your initial contribution a single, squashed commit?

### For code changes:

- [x] Have you ensured that the full suite of tests is executed via mvn -Pcontrib-check clean install at the root nifi folder?

- [ ] Have you written or updated unit tests to verify your changes?

- [ ] If adding new dependencies to the code, are these dependencies licensed in a way that is compatible for inclusion under [ASF 2.0](http://www.apache.org/legal/resolved.html#category-a)?

- [ ] If applicable, have you updated the LICENSE file, including the main LICENSE file under nifi-assembly?

- [ ] If applicable, have you updated the NOTICE file, including the main NOTICE file found under nifi-assembly?

- [ ] If adding new Properties, have you added .displayName in addition to .name (programmatic access) for each of the new properties?

### For documentation related changes:

- [ ] Have you ensured that format looks appropriate for the output in which it is rendered?

### Note:

Please ensure that once the PR is submitted, you check travis-ci for build issues and submit an update to your PR as soon as possible.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/bdesert/nifi NIFI-5810_JMS_EL

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/nifi/pull/3164.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #3164

commit 5013dfff04bff154f8e1fc150e096a3ab6098a49

Author: Ed B

Date: 2018-11-10T00:27:10Z

NIFI-5810 Add UserName EL support to JMS processors

Adding EL support to the "User Name" property of ConsumeJMS and PublishJMS.

---

[GitHub] nifi pull request #3117: NIFI-5770 Fix Memory Leak in ExecuteScript on Jytho...

GitHub user bdesert opened a pull request:

https://github.com/apache/nifi/pull/3117

NIFI-5770 Fix Memory Leak in ExecuteScript on Jython

Moved module appending (a.k.a. the Python classpath) into the init stage instead of running it on every onTrigger.

Thank you for submitting a contribution to Apache NiFi.

In order to streamline the review of the contribution we ask you to ensure the following steps have been taken:

### For all changes:

- [x] Is there a JIRA ticket associated with this PR? Is it referenced in the commit message?

- [x] Does your PR title start with NIFI- where is the JIRA number you are trying to resolve? Pay particular attention to the hyphen "-" character.

- [x] Has your PR been rebased against the latest commit within the target branch (typically master)?

- [x] Is your initial contribution a single, squashed commit?

### For code changes:

- [x] Have you ensured that the full suite of tests is executed via mvn -Pcontrib-check clean install at the root nifi folder?

- [ ] Have you written or updated unit tests to verify your changes?

- [ ] If adding new dependencies to the code, are these dependencies licensed in a way that is compatible for inclusion under [ASF 2.0](http://www.apache.org/legal/resolved.html#category-a)?

- [ ] If applicable, have you updated the LICENSE file, including the main LICENSE file under nifi-assembly?

- [ ] If applicable, have you updated the NOTICE file, including the main NOTICE file found under nifi-assembly?

- [ ] If adding new Properties, have you added .displayName in addition to .name (programmatic access) for each of the new properties?

### For documentation related changes:

- [ ] Have you ensured that format looks appropriate for the output in which it is rendered?

### Note:

Please ensure that once the PR is submitted, you check travis-ci for build issues and submit an update to your PR as soon as possible.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/bdesert/nifi NIFI-5770_ExecuteScript

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/nifi/pull/3117.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #3117

commit e6837c81e84b2d7fa29b020c8192b4a2a9783e18

Author: Ed B

Date: 2018-10-31T13:10:27Z

NIFI-5770 Fix Memory Leak in ExecuteScript on Jython

Moved module appending (a.k.a. the Python classpath) into the init stage instead of running it on every onTrigger.

---
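One plausible reading of the leak fixed in NIFI-5770 can be illustrated without Jython. If a setup step that appends to a long-lived, shared search path runs on every trigger, that path grows without bound; doing it once at initialization keeps it constant. This is a minimal sketch with hypothetical names (`ModulePathSketch`, `/opt/scripts/lib`), not the ExecuteScript code:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class ModulePathSketch {
    // Stands in for a long-lived interpreter search path (e.g. Python's sys.path)
    final List<String> searchPath = new ArrayList<>();
    private final List<String> modules;

    ModulePathSketch(List<String> modules) {
        this.modules = modules;
    }

    // Leaky variant: the same module paths are appended on every trigger
    void onTriggerLeaky() {
        searchPath.addAll(modules);
    }

    // Fixed variant: module paths are appended once, at initialization...
    void init() {
        searchPath.addAll(modules);
    }

    // ...so each trigger only runs the script and leaves the path alone
    void onTriggerFixed() {
        // script evaluation would happen here
    }

    public static void main(String[] args) {
        ModulePathSketch leaky = new ModulePathSketch(Collections.singletonList("/opt/scripts/lib"));
        ModulePathSketch fixed = new ModulePathSketch(Collections.singletonList("/opt/scripts/lib"));
        fixed.init();
        for (int i = 0; i < 1_000; i++) {
            leaky.onTriggerLeaky();
            fixed.onTriggerFixed();
        }
        System.out.println(leaky.searchPath.size() + " vs " + fixed.searchPath.size());
    }
}
```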

[GitHub] nifi pull request #3098: NIFI-5728 XML Writer to populate record tag name pr...

GitHub user bdesert opened a pull request:

https://github.com/apache/nifi/pull/3098

NIFI-5728 XML Writer to populate record tag name properly

All the changes are tested against:

- "Use Schema Text" (regression)

- "AvroSchemaRegistry" Controller Service

- "Hortonworks Schema Registry" Controller Service (docker).

Also added a test case to handle the scenario where the schema name in the xxxRegistry Controller Service doesn't match the "name" field of the root record in the schema text.

-

Thank you for submitting a contribution to Apache NiFi.

In order to streamline the review of the contribution we ask you to ensure the following steps have been taken:

### For all changes:

- [x] Is there a JIRA ticket associated with this PR? Is it referenced in the commit message?

- [x] Does your PR title start with NIFI- where is the JIRA number you are trying to resolve? Pay particular attention to the hyphen "-" character.

- [x] Has your PR been rebased against the latest commit within the target branch (typically master)?

- [x] Is your initial contribution a single, squashed commit?

### For code changes:

- [x] Have you ensured that the full suite of tests is executed via mvn -Pcontrib-check clean install at the root nifi folder?

- [x] Have you written or updated unit tests to verify your changes?

- [ ] If adding new dependencies to the code, are these dependencies licensed in a way that is compatible for inclusion under [ASF 2.0](http://www.apache.org/legal/resolved.html#category-a)?

- [ ] If applicable, have you updated the LICENSE file, including the main LICENSE file under nifi-assembly?

- [ ] If applicable, have you updated the NOTICE file, including the main NOTICE file found under nifi-assembly?

- [ ] If adding new Properties, have you added .displayName in addition to .name (programmatic access) for each of the new properties?

### For documentation related changes:

- [ ] Have you ensured that format looks appropriate for the output in which it is rendered?

### Note:

Please ensure that once the PR is submitted, you check travis-ci for build issues and submit an update to your PR as soon as possible.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/bdesert/nifi NIFI-5728_XMLWriter

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/nifi/pull/3098.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #3098

commit 268ae74deeaf0228fb07cf50c3dd343ff9fb7e9f

Author: Ed B

Date: 2018-10-21T01:42:21Z

NIFI-5728 XML Writer to populate record tag name properly

---

[GitHub] nifi issue #3078: NIFI-4805 Allow Delayed Transfer

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/3078 @joewitt, @patricker While working on the implementations, I ran into a problem with penalization on failure. Some processors penalize the FlowFile on failure, some only on rollback, and some give you a choice whether to penalize on failure or not. Use case: FetchFile -> transform -> PutHiveStreaming. On PutHiveStreaming failure: 1) wait 1 min and retry, 2) create a table (PutHiveQL would make you overwrite the content, so you cannot do that sequentially). There are more use cases; for now I do it like this: ---

[GitHub] nifi issue #3078: NIFI-4805 Allow Delayed Transfer

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/3078 +1 LGTM. Pulled the changes and tested on local env; all looks good to go. @markap14, @alopresto, any additional comments? ---

[GitHub] nifi pull request #3078: NIFI-4805 Allow Delayed Transfer

Github user bdesert commented on a diff in the pull request: https://github.com/apache/nifi/pull/3078#discussion_r226029162 --- Diff: nifi-nar-bundles/nifi-standard-bundle/nifi-standard-processors/src/main/java/org/apache/nifi/processors/standard/PenalizeFlowFile.java --- @@ -0,0 +1,74 @@ +/* + * Licensed to the Apache Software Foundation (ASF) under one or more + * contributor license agreements. See the NOTICE file distributed with + * this work for additional information regarding copyright ownership. + * The ASF licenses this file to You under the Apache License, Version 2.0 + * (the "License"); you may not use this file except in compliance with + * the License. You may obtain a copy of the License at + * + * http://www.apache.org/licenses/LICENSE-2.0 + * + * Unless required by applicable law or agreed to in writing, software + * distributed under the License is distributed on an "AS IS" BASIS, + * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. + * See the License for the specific language governing permissions and + * limitations under the License. 
+ */ + +package org.apache.nifi.processors.standard; +import org.apache.nifi.annotation.behavior.EventDriven; +import org.apache.nifi.annotation.behavior.InputRequirement; +import org.apache.nifi.annotation.behavior.SideEffectFree; +import org.apache.nifi.annotation.behavior.SupportsBatching; +import org.apache.nifi.annotation.behavior.WritesAttribute; +import org.apache.nifi.annotation.behavior.WritesAttributes; +import org.apache.nifi.annotation.documentation.CapabilityDescription; +import org.apache.nifi.annotation.documentation.Tags; +import org.apache.nifi.components.PropertyDescriptor; +import org.apache.nifi.flowfile.FlowFile; +import org.apache.nifi.processor.AbstractProcessor; +import org.apache.nifi.processor.ProcessContext; +import org.apache.nifi.processor.ProcessSession; +import org.apache.nifi.processor.ProcessorInitializationContext; +import org.apache.nifi.processor.Relationship; +import org.apache.nifi.processor.exception.ProcessException; + +import java.util.Collections; +import java.util.HashSet; +import java.util.List; --- End diff -- ```suggestion ``` ---

[GitHub] nifi pull request #3078: NIFI-4805 Allow Delayed Transfer

Github user bdesert commented on a diff in the pull request: https://github.com/apache/nifi/pull/3078#discussion_r226028831 --- Diff: nifi-nar-bundles/nifi-standard-bundle/nifi-standard-processors/src/main/java/org/apache/nifi/processors/standard/PenalizeFlowFile.java --- @@ -0,0 +1,74 @@ +/* + * Licensed to the Apache Software Foundation (ASF) under one or more + * contributor license agreements. See the NOTICE file distributed with + * this work for additional information regarding copyright ownership. + * The ASF licenses this file to You under the Apache License, Version 2.0 + * (the "License"); you may not use this file except in compliance with + * the License. You may obtain a copy of the License at + * + * http://www.apache.org/licenses/LICENSE-2.0 + * + * Unless required by applicable law or agreed to in writing, software + * distributed under the License is distributed on an "AS IS" BASIS, + * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. + * See the License for the specific language governing permissions and + * limitations under the License. + */ + +package org.apache.nifi.processors.standard; +import org.apache.nifi.annotation.behavior.EventDriven; +import org.apache.nifi.annotation.behavior.InputRequirement; +import org.apache.nifi.annotation.behavior.SideEffectFree; +import org.apache.nifi.annotation.behavior.SupportsBatching; +import org.apache.nifi.annotation.behavior.WritesAttribute; --- End diff -- ```suggestion ``` ---

[GitHub] nifi pull request #3078: NIFI-4805 Allow Delayed Transfer

Github user bdesert commented on a diff in the pull request: https://github.com/apache/nifi/pull/3078#discussion_r226029040 --- Diff: nifi-nar-bundles/nifi-standard-bundle/nifi-standard-processors/src/main/java/org/apache/nifi/processors/standard/PenalizeFlowFile.java --- @@ -0,0 +1,74 @@ +/* + * Licensed to the Apache Software Foundation (ASF) under one or more + * contributor license agreements. See the NOTICE file distributed with + * this work for additional information regarding copyright ownership. + * The ASF licenses this file to You under the Apache License, Version 2.0 + * (the "License"); you may not use this file except in compliance with + * the License. You may obtain a copy of the License at + * + * http://www.apache.org/licenses/LICENSE-2.0 + * + * Unless required by applicable law or agreed to in writing, software + * distributed under the License is distributed on an "AS IS" BASIS, + * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. + * See the License for the specific language governing permissions and + * limitations under the License. + */ + +package org.apache.nifi.processors.standard; +import org.apache.nifi.annotation.behavior.EventDriven; +import org.apache.nifi.annotation.behavior.InputRequirement; +import org.apache.nifi.annotation.behavior.SideEffectFree; +import org.apache.nifi.annotation.behavior.SupportsBatching; +import org.apache.nifi.annotation.behavior.WritesAttribute; +import org.apache.nifi.annotation.behavior.WritesAttributes; +import org.apache.nifi.annotation.documentation.CapabilityDescription; +import org.apache.nifi.annotation.documentation.Tags; +import org.apache.nifi.components.PropertyDescriptor; --- End diff -- ```suggestion ``` ---

[GitHub] nifi pull request #3078: NIFI-4805 Allow Delayed Transfer

Github user bdesert commented on a diff in the pull request: https://github.com/apache/nifi/pull/3078#discussion_r226028907 --- Diff: nifi-nar-bundles/nifi-standard-bundle/nifi-standard-processors/src/main/java/org/apache/nifi/processors/standard/PenalizeFlowFile.java --- @@ -0,0 +1,74 @@ +/* + * Licensed to the Apache Software Foundation (ASF) under one or more + * contributor license agreements. See the NOTICE file distributed with + * this work for additional information regarding copyright ownership. + * The ASF licenses this file to You under the Apache License, Version 2.0 + * (the "License"); you may not use this file except in compliance with + * the License. You may obtain a copy of the License at + * + * http://www.apache.org/licenses/LICENSE-2.0 + * + * Unless required by applicable law or agreed to in writing, software + * distributed under the License is distributed on an "AS IS" BASIS, + * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. + * See the License for the specific language governing permissions and + * limitations under the License. + */ + +package org.apache.nifi.processors.standard; +import org.apache.nifi.annotation.behavior.EventDriven; +import org.apache.nifi.annotation.behavior.InputRequirement; +import org.apache.nifi.annotation.behavior.SideEffectFree; +import org.apache.nifi.annotation.behavior.SupportsBatching; +import org.apache.nifi.annotation.behavior.WritesAttribute; +import org.apache.nifi.annotation.behavior.WritesAttributes; --- End diff -- ```suggestion ``` ---

[GitHub] nifi pull request #3078: NIFI-4805 Allow Delayed Transfer

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/3078#discussion_r226035394

--- Diff:

nifi-nar-bundles/nifi-standard-bundle/nifi-standard-processors/src/main/java/org/apache/nifi/processors/standard/PenalizeFlowFile.java

---

@@ -0,0 +1,74 @@

+

+package org.apache.nifi.processors.standard;

+import org.apache.nifi.annotation.behavior.EventDriven;

+import org.apache.nifi.annotation.behavior.InputRequirement;

+import org.apache.nifi.annotation.behavior.SideEffectFree;

+import org.apache.nifi.annotation.behavior.SupportsBatching;

+import org.apache.nifi.annotation.behavior.WritesAttribute;

+import org.apache.nifi.annotation.behavior.WritesAttributes;

+import org.apache.nifi.annotation.documentation.CapabilityDescription;

+import org.apache.nifi.annotation.documentation.Tags;

+import org.apache.nifi.components.PropertyDescriptor;

+import org.apache.nifi.flowfile.FlowFile;

+import org.apache.nifi.processor.AbstractProcessor;

+import org.apache.nifi.processor.ProcessContext;

+import org.apache.nifi.processor.ProcessSession;

+import org.apache.nifi.processor.ProcessorInitializationContext;

+import org.apache.nifi.processor.Relationship;

+import org.apache.nifi.processor.exception.ProcessException;

+

+import java.util.Collections;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Set;

+

+@EventDriven

+@SideEffectFree

+@SupportsBatching

+@Tags({"penalty", "penalize", "flowfile"})

+@InputRequirement(InputRequirement.Requirement.INPUT_REQUIRED)

+@CapabilityDescription("Penalizes a FlowFile.")

--- End diff --

```suggestion
@CapabilityDescription("This processor provides capability to penalize flow files. "
        + "Every flow file will be penalized as per 'Penalty Duration' property of the processor.")
```

---

[GitHub] nifi issue #3078: NIFI-4805 Allow Delayed Transfer

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/3078 @patricker, the code looks good. I'll test the changes locally later today and provide my feedback. ---

[GitHub] nifi pull request #3078: NIFI-4805 Allow Delayed Transfer

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/3078#discussion_r226004572

--- Diff:

nifi-nar-bundles/nifi-standard-bundle/nifi-standard-processors/src/main/java/org/apache/nifi/processors/standard/PenalizeFlowFile.java

---

@@ -0,0 +1,91 @@

+

+package org.apache.nifi.processors.standard;

+import org.apache.nifi.annotation.behavior.EventDriven;

+import org.apache.nifi.annotation.behavior.InputRequirement;

+import org.apache.nifi.annotation.behavior.SideEffectFree;

+import org.apache.nifi.annotation.behavior.SupportsBatching;

+import org.apache.nifi.annotation.behavior.WritesAttribute;

+import org.apache.nifi.annotation.behavior.WritesAttributes;

+import org.apache.nifi.annotation.documentation.CapabilityDescription;

+import org.apache.nifi.annotation.documentation.Tags;

+import org.apache.nifi.components.PropertyDescriptor;

+import org.apache.nifi.flowfile.FlowFile;

+import org.apache.nifi.processor.AbstractProcessor;

+import org.apache.nifi.processor.ProcessContext;

+import org.apache.nifi.processor.ProcessSession;

+import org.apache.nifi.processor.ProcessorInitializationContext;

+import org.apache.nifi.processor.Relationship;

+import org.apache.nifi.processor.exception.ProcessException;

+

+import java.util.Collections;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Set;

+

+@EventDriven

+@SideEffectFree

+@SupportsBatching

+@Tags({"penalty", "penalize", "flowfile"})

+@InputRequirement(InputRequirement.Requirement.INPUT_REQUIRED)

+@CapabilityDescription("Penalizes a FlowFile.")

+@WritesAttributes({

+@WritesAttribute(attribute = "penalization.count.{processor uuid}", description = "How many times this processor has penalized this FlowFile.")

+})

+

+public class PenalizeFlowFile extends AbstractProcessor {

+public static final Relationship REL_SUCCESS = new Relationship.Builder().name("success")

+.description("Successfully penalized FlowFile").build();

+

+private List<PropertyDescriptor> properties;

+private Set<Relationship> relationships;

+

+@Override

+protected void init(final ProcessorInitializationContext context) {

+final Set<Relationship> relationships = new HashSet<>();

+relationships.add(REL_SUCCESS);

+this.relationships = Collections.unmodifiableSet(relationships);

+}

+@Override

+public Set<Relationship> getRelationships() {

+return relationships;

+}

+

+@Override

+public void onTrigger(ProcessContext context, ProcessSession session) throws ProcessException {

+FlowFile flowFile = session.get();

+if (flowFile == null) {

+return;

+}

+

+// Track how many times a FlowFile passes through this processor to better support the Retry use case

+final String retryAttrName = "penalization.count." + this.getIdentifier();

+final String initialCount = flowFile.getAttribute(retryAttrName);

+long cnt = 0;

+if(initialCount != null) {

+cnt = Long.parseLong(initialCount);

+}

+

+cnt++;

+

+flowFile = session.putAttribute(flowFile, retryAttrName, Long.toString(cnt));

--- End diff --

As discussed in the JIRA, if this processor is going to do penalizing only (without retry functionality), then I'd remove lines 77-86 entirely. Retry capabilities should then be implemented in a separate processor.

---
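For reference, the retry-counting lines quoted above (read `penalization.count.` plus the processor id, default to 0, increment, write back) can be pulled out into a plain helper, which is roughly the piece a separate retry processor would own. A minimal sketch, assuming attributes are modeled as a plain `Map<String, String>`; `RetryCounter` and its methods are hypothetical names, not part of PR #3078.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper, not part of PR #3078: isolates the counter logic
// that the review suggests moving out of PenalizeFlowFile.
final class RetryCounter {

    private RetryCounter() {
    }

    // Mirrors the attribute naming used in the diff: "penalization.count." + processor id.
    static String attributeName(final String processorId) {
        return "penalization.count." + processorId;
    }

    // Returns a copy of the attributes with the counter incremented (a missing counter counts as 0).
    static Map<String, String> increment(final Map<String, String> attributes, final String processorId) {
        final String name = attributeName(processorId);
        final String current = attributes.get(name);
        long count = 0;
        if (current != null) {
            count = Long.parseLong(current);
        }
        count++;
        final Map<String, String> updated = new HashMap<>(attributes);
        updated.put(name, Long.toString(count));
        return updated;
    }

    public static void main(String[] args) {
        Map<String, String> attrs = new HashMap<>();
        attrs = increment(attrs, "abc-123");
        attrs = increment(attrs, "abc-123");
        System.out.println(attrs.get("penalization.count.abc-123")); // prints 2
    }
}
```

Returning a new map rather than mutating the input mirrors how `session.putAttribute` hands back an updated FlowFile instead of changing the original in place.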

[GitHub] nifi issue #3078: NIFI-4805 Allow Delayed Transfer

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/3078 @patricker, this PR doesn't implement all the features originally requested in NIFI-4805 and discussed throughout the thread. Please take a look at the comments from Andy, Mark and Martin. Missing features: gradual penalization on retry, and support of EL for defining the penalization period. ---
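One way to read "gradual penalization on retry" is an exponential backoff: the penalty doubles with each retry up to a cap. A minimal sketch under that assumption; `GradualPenalty`, the base of 30 seconds and the 10-minute cap are hypothetical choices, and this does not describe how NIFI-4805 was eventually implemented.

```java
import java.time.Duration;

// Hypothetical backoff calculation, not NiFi code.
final class GradualPenalty {

    private GradualPenalty() {
    }

    // penalty = base * 2^(retryCount - 1), capped at max; retryCount starts at 1.
    static Duration penaltyFor(final long retryCount, final Duration base, final Duration max) {
        if (retryCount < 1) {
            throw new IllegalArgumentException("retryCount must be >= 1");
        }
        Duration penalty = base;
        for (long i = 1; i < retryCount; i++) {
            penalty = penalty.multipliedBy(2);
            // Stop doubling once the cap is reached, which also avoids overflow.
            if (penalty.compareTo(max) >= 0) {
                return max;
            }
        }
        return penalty.compareTo(max) > 0 ? max : penalty;
    }

    public static void main(String[] args) {
        final Duration base = Duration.ofSeconds(30);
        final Duration max = Duration.ofMinutes(10);
        for (long retry = 1; retry <= 6; retry++) {
            System.out.println(retry + " -> " + penaltyFor(retry, base, max));
        }
    }
}
```

The retry count here could come from a counter attribute like the one in the diff, and the base and cap would be the properties that EL support would make configurable per FlowFile.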

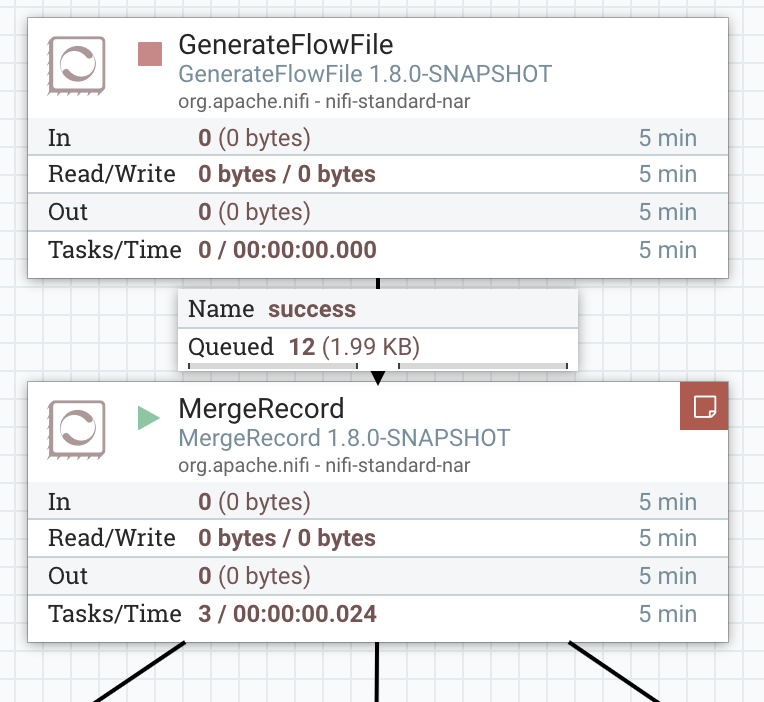

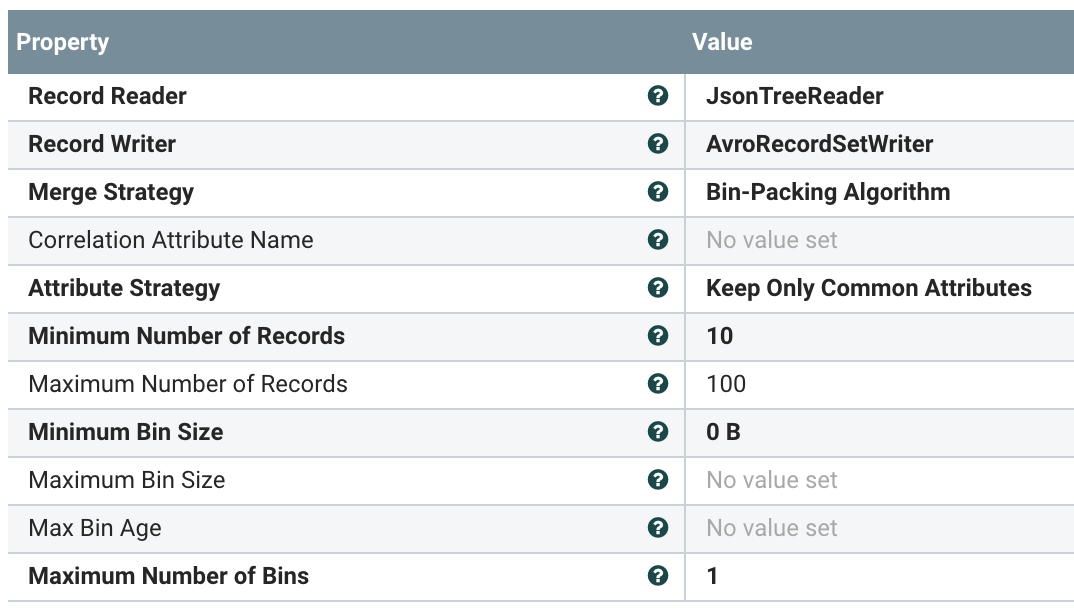

[GitHub] nifi issue #2954: NIFI-5514: Fixed bugs in MergeRecord around minimum thresh...

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/2954 +1 LGTM. Test case updated. Live test on local env (up to date) succeeded. Works as expected. Travis is failing for JP only (US and FR are OK). Can be merged. ---

[GitHub] nifi pull request #2954: NIFI-5514: Fixed bugs in MergeRecord around minimum...

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/2954#discussion_r219683471

--- Diff:

nifi-nar-bundles/nifi-standard-bundle/nifi-standard-processors/src/main/java/org/apache/nifi/processors/standard/MergeRecord.java

---

@@ -304,13 +336,25 @@ public void onTrigger(final ProcessContext context, final ProcessSessionFactory

session.commit();

}

+// If there is no more data queued up, complete any bin that meets our minimum threshold

+int completedBins = 0;

+final QueueSize queueSize = session.getQueueSize();

--- End diff --

@markap14

The MinRecords test passes, but the actual flow still doesn't work as expected and doesn't respect the Min Records setting.

Here is my setup:

The problem is the difference in behavior between MockProcessSession and StandardProcessSession.

MockProcessSession.getQueueSize() returns 0 after session.get(...).

StandardProcessSession.getQueueSize() returns the same number as before session.get(...), regardless of whether flow files have been polled.

As a result, this condition prevents FlowFiles from actually being emitted when the minimum requirements are reached.

I would recommend changing this condition to:

`if (flowFiles.size() != 0) {...}`

In parallel, we should probably open a JIRA for the inconsistency between MockProcessSession and StandardProcessSession.

---
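To make the divergence described above concrete without NiFi on the classpath, here is a toy model. One session reports a live queue size that drops after polling (as the comment describes MockProcessSession), and the other keeps reporting the size seen before polling (as the comment describes StandardProcessSession). `ToySession` and the other classes are hypothetical stand-ins, not NiFi's `ProcessSession` API.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical toy model of a session; NOT NiFi's ProcessSession API.
abstract class ToySession {
    protected final Deque<String> queue;

    ToySession(final List<String> items) {
        this.queue = new ArrayDeque<>(items);
    }

    // Polls up to max items, like a batched session.get(...).
    List<String> get(final int max) {
        final List<String> polled = new ArrayList<>();
        while (polled.size() < max && !queue.isEmpty()) {
            polled.add(queue.poll());
        }
        return polled;
    }

    // What the session reports as still queued.
    abstract int getQueueSize();
}

// Reports the live size, so it drops after get(...), as described for MockProcessSession.
final class LiveSizeSession extends ToySession {
    LiveSizeSession(final List<String> items) {
        super(items);
    }

    @Override
    int getQueueSize() {
        return queue.size();
    }
}

// Keeps reporting the size seen before polling, as described for StandardProcessSession.
final class SnapshotSizeSession extends ToySession {
    private final int snapshot;

    SnapshotSizeSession(final List<String> items) {
        super(items);
        this.snapshot = items.size();
    }

    @Override
    int getQueueSize() {
        return snapshot;
    }
}

final class QueueSizeDemo {
    private QueueSizeDemo() {
    }

    // The pattern under review: poll a batch, then treat "reported size == 0" as "no more data".
    static boolean drainedAfterPoll(final ToySession session, final int batchSize) {
        session.get(batchSize); // a real processor would bin the polled items here
        return session.getQueueSize() == 0;
    }
}
```

With three queued items and a batch size of 10, `drainedAfterPoll` returns `true` for `LiveSizeSession` and `false` for `SnapshotSizeSession`, so a threshold check guarded by the reported queue size can pass in a unit test yet never fire in the live flow.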

[GitHub] nifi issue #2954: NIFI-5514: Fixed bugs in MergeRecord around minimum thresh...

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/2954 Reviewing... ---

[GitHub] nifi issue #3005: NIFI-5598: Allow JMS Processors to lookup Connection Facto...

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/3005 +1. Ready for merge. Tested JNDI lookup with Tibco Context Factory (with authentication). Successfully pulled records from my queue. ---

[GitHub] nifi issue #3005: NIFI-5598: Allow JMS Processors to lookup Connection Facto...

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/3005 I'll test it and let you know the results (I have a JNDI server with authentication for JMS queues). ---
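The service under review builds a JNDI environment and looks up a ConnectionFactory by name. Below is a minimal sketch that uses only the JDK's `javax.naming` API. The diff's `java.naming.factory.initial` and `java.naming.provider.url` property names are the values of `Context.INITIAL_CONTEXT_FACTORY` and `Context.PROVIDER_URL`. Several parts are assumptions: `JndiLookupSketch` and its methods are hypothetical names, and passing credentials via `Context.SECURITY_PRINCIPAL`/`Context.SECURITY_CREDENTIALS` is one assumed way to reach an authenticated server. A real service would also cast the lookup result to `javax.jms.ConnectionFactory`. The lookup only works when a provider's context factory is on the classpath.

```java
import java.util.Hashtable;
import java.util.Map;
import javax.naming.Context;
import javax.naming.InitialContext;
import javax.naming.NamingException;

// Hypothetical sketch, not the JndiJmsConnectionFactoryProvider code.
final class JndiLookupSketch {

    private JndiLookupSketch() {
    }

    // Builds the InitialContext environment; "extra" plays the role of dynamic properties.
    static Hashtable<String, Object> environment(final String factoryClass, final String providerUrl,
                                                 final String principal, final String credentials,
                                                 final Map<String, String> extra) {
        final Hashtable<String, Object> env = new Hashtable<>();
        env.put(Context.INITIAL_CONTEXT_FACTORY, factoryClass);
        env.put(Context.PROVIDER_URL, providerUrl);
        // Hashtable rejects null values, so optional credentials are only added when present.
        if (principal != null) {
            env.put(Context.SECURITY_PRINCIPAL, principal);
        }
        if (credentials != null) {
            env.put(Context.SECURITY_CREDENTIALS, credentials);
        }
        env.putAll(extra);
        return env;
    }

    // Looks up an object by JNDI name and closes the context afterwards.
    static Object lookup(final Hashtable<String, Object> env, final String name) throws NamingException {
        final Context context = new InitialContext(env);
        try {
            return context.lookup(name);
        } finally {
            context.close();
        }
    }
}
```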

[GitHub] nifi pull request #3005: NIFI-5598: Allow JMS Processors to lookup Connectio...

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/3005#discussion_r218387623

--- Diff:

nifi-nar-bundles/nifi-jms-bundle/nifi-jms-processors/src/main/java/org/apache/nifi/jms/cf/JndiJmsConnectionFactoryProvider.java

---

@@ -0,0 +1,165 @@

+package org.apache.nifi.jms.cf;

+

+import org.apache.nifi.annotation.behavior.DynamicProperty;

+import org.apache.nifi.annotation.documentation.CapabilityDescription;

+import org.apache.nifi.annotation.documentation.SeeAlso;

+import org.apache.nifi.annotation.documentation.Tags;

+import org.apache.nifi.annotation.lifecycle.OnDisabled;

+import org.apache.nifi.components.PropertyDescriptor;

+import org.apache.nifi.components.PropertyDescriptor.Builder;

+import org.apache.nifi.components.Validator;

+import org.apache.nifi.controller.AbstractControllerService;

+import org.apache.nifi.controller.ConfigurationContext;

+import org.apache.nifi.expression.ExpressionLanguageScope;

+import org.apache.nifi.processor.exception.ProcessException;

+import org.apache.nifi.processor.util.StandardValidators;

+

+import javax.jms.ConnectionFactory;

+import javax.naming.Context;

+import javax.naming.InitialContext;

+import javax.naming.NamingException;

+import java.util.Arrays;

+import java.util.Hashtable;

+import java.util.List;

+

+import static org.apache.nifi.processor.util.StandardValidators.NON_EMPTY_VALIDATOR;

+

+@Tags({"jms", "jndi", "messaging", "integration", "queue", "topic", "publish", "subscribe"})

+@CapabilityDescription("Provides a service to lookup an existing JMS ConnectionFactory using the Java Naming and Directory Interface (JNDI).")

+@DynamicProperty(

+description = "In order to perform a JNDI Lookup, an Initial Context must be established. When this is done, an Environment can be established for the context. Any dynamic/user-defined property" +

+" that is added to this Controller Service will be added as an Environment configuration/variable to this Context.",

+name = "The name of a JNDI Initial Context environment variable.",

+value = "The value of the JNDI Initial Context Environment variable.",

+expressionLanguageScope = ExpressionLanguageScope.VARIABLE_REGISTRY)

+@SeeAlso(classNames = {"org.apache.nifi.jms.processors.ConsumeJMS", "org.apache.nifi.jms.processors.PublishJMS", "org.apache.nifi.jms.cf.JMSConnectionFactoryProvider"})

+public class JndiJmsConnectionFactoryProvider extends AbstractControllerService implements JMSConnectionFactoryProviderDefinition{

+

+static final PropertyDescriptor INITIAL_NAMING_FACTORY_CLASS = new Builder()

+.name("java.naming.factory.initial")

+.displayName("Initial Naming Factory Class")

+.description("The fully qualified class name of the Java Initial Naming Factory (java.naming.factory.initial).")

+.addValidator(NON_EMPTY_VALIDATOR)

+.expressionLanguageSupported(ExpressionLanguageScope.VARIABLE_REGISTRY)

+.required(true)

+.build();

+static final PropertyDescriptor NAMING_PROVIDER_URL = new Builder()

+.name("java.naming.provider.url")

+.displayName("Naming Provider URL")

+.description("The URL of the JNDI Naming Provider to use")

+.required(true)

+.addValidator(NON_EMPTY_VALIDATOR)

+.expressionLanguageSupported(ExpressionLanguageScope.VARIABLE_REGISTRY)

+.build();

+static final PropertyDescriptor CONNECTION_FACTORY_NAME = new Builder()

+.name("connection.factory.name")

+.displayName("Connection Factory Name")

+.description("The name o

[GitHub] nifi pull request #3005: NIFI-5598: Allow JMS Processors to lookup Connectio...

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/3005#discussion_r218386884

--- Diff:

nifi-nar-bundles/nifi-jms-bundle/nifi-jms-processors/src/main/java/org/apache/nifi/jms/cf/JndiJmsConnectionFactoryProvider.java

---

@@ -0,0 +1,165 @@

+package org.apache.nifi.jms.cf;

+

+import org.apache.nifi.annotation.behavior.DynamicProperty;

+import org.apache.nifi.annotation.documentation.CapabilityDescription;

+import org.apache.nifi.annotation.documentation.SeeAlso;

+import org.apache.nifi.annotation.documentation.Tags;

+import org.apache.nifi.annotation.lifecycle.OnDisabled;

+import org.apache.nifi.components.PropertyDescriptor;

+import org.apache.nifi.components.PropertyDescriptor.Builder;

+import org.apache.nifi.components.Validator;

+import org.apache.nifi.controller.AbstractControllerService;

+import org.apache.nifi.controller.ConfigurationContext;

+import org.apache.nifi.expression.ExpressionLanguageScope;

+import org.apache.nifi.processor.exception.ProcessException;

+import org.apache.nifi.processor.util.StandardValidators;

+

+import javax.jms.ConnectionFactory;

+import javax.naming.Context;

+import javax.naming.InitialContext;

+import javax.naming.NamingException;

+import java.util.Arrays;

+import java.util.Hashtable;

+import java.util.List;

+

+import static org.apache.nifi.processor.util.StandardValidators.NON_EMPTY_VALIDATOR;

+

+@Tags({"jms", "jndi", "messaging", "integration", "queue", "topic", "publish", "subscribe"})

+@CapabilityDescription("Provides a service to lookup an existing JMS ConnectionFactory using the Java Naming and Directory Interface (JNDI).")

+@DynamicProperty(

+description = "In order to perform a JNDI Lookup, an Initial Context must be established. When this is done, an Environment can be established for the context. Any dynamic/user-defined property" +

+" that is added to this Controller Service will be added as an Environment configuration/variable to this Context.",

+name = "The name of a JNDI Initial Context environment variable.",

+value = "The value of the JNDI Initial Context Environment variable.",

+expressionLanguageScope = ExpressionLanguageScope.VARIABLE_REGISTRY)

+@SeeAlso(classNames = {"org.apache.nifi.jms.processors.ConsumeJMS", "org.apache.nifi.jms.processors.PublishJMS", "org.apache.nifi.jms.cf.JMSConnectionFactoryProvider"})

+public class JndiJmsConnectionFactoryProvider extends AbstractControllerService implements JMSConnectionFactoryProviderDefinition{

+

+static final PropertyDescriptor INITIAL_NAMING_FACTORY_CLASS = new Builder()

+.name("java.naming.factory.initial")

+.displayName("Initial Naming Factory Class")

+.description("The fully qualified class name of the Java Initial Naming Factory (java.naming.factory.initial).")

+.addValidator(NON_EMPTY_VALIDATOR)

+.expressionLanguageSupported(ExpressionLanguageScope.VARIABLE_REGISTRY)

+.required(true)

+.build();

+static final PropertyDescriptor NAMING_PROVIDER_URL = new Builder()

+.name("java.naming.provider.url")

+.displayName("Naming Provider URL")

+.description("The URL of the JNDI Naming Provider to use")

+.required(true)

+.addValidator(NON_EMPTY_VALIDATOR)

+.expressionLanguageSupported(ExpressionLanguageScope.VARIABLE_REGISTRY)

+.build();

+static final PropertyDescriptor CONNECTION_FACTORY_NAME = new Builder()

+.name("connection.factory.name")

+.displayName("Connection Factory Name")

+.description("The name o

[GitHub] nifi issue #3008: NIFI-5492_EXEC Adding UDF to EL

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/3008 @joewitt, @alopresto, @mattyb149 Since we had discussions on this topic before, I would like to get your opinion. I've addressed all the issues related to security (separate class loader), API changes (there are none), and scope (implementation of a separate interface is enforced). If that's not enough to feel safe about this, do not hesitate to trash this PR, or give me some feedback on how it can be improved. Thank you! ---
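The two safeguards mentioned above, a separate class loader and an enforced interface, can be illustrated with plain JDK reflection. This is a minimal sketch, not the code in PR #3008: `UserDefinedFunction`, `UpperCaseUdf` and `UdfLoader` are hypothetical names, and in practice the jar URLs would come from configuration rather than being passed in directly.

```java
import java.net.URL;
import java.net.URLClassLoader;
import java.util.Locale;

// Hypothetical contract every UDF must implement.
interface UserDefinedFunction {
    String apply(String input);
}

// Hypothetical example UDF with the required no-arg constructor.
final class UpperCaseUdf implements UserDefinedFunction {
    @Override
    public String apply(final String input) {
        return input.toUpperCase(Locale.ROOT);
    }
}

final class UdfLoader {

    private UdfLoader() {
    }

    // Creates a dedicated loader for UDF jars. URLClassLoader delegates to the parent first,
    // so the parent must be the loader that defines UserDefinedFunction.
    static URLClassLoader isolatedLoader(final URL[] jars, final ClassLoader parent) {
        return new URLClassLoader(jars, parent);
    }

    // Loads className through the given loader and rejects anything that does not implement the contract.
    static UserDefinedFunction load(final String className, final ClassLoader loader)
            throws ReflectiveOperationException {
        final Class<?> clazz = Class.forName(className, true, loader);
        if (!UserDefinedFunction.class.isAssignableFrom(clazz)) {
            throw new IllegalArgumentException(className + " does not implement UserDefinedFunction");
        }
        return clazz.asSubclass(UserDefinedFunction.class).getDeclaredConstructor().newInstance();
    }
}
```

Checking `isAssignableFrom` before instantiating means an arbitrary class named in configuration is rejected without its constructor ever running.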

[GitHub] nifi pull request #3008: NIFI-5492_EXEC Adding UDF to EL

GitHub user bdesert opened a pull request: https://github.com/apache/nifi/pull/3008

NIFI-5492_EXEC Adding UDF to EL

**This PR adds new functionality to the expression language: user-defined functions.**

Thank you for submitting a contribution to Apache NiFi. In order to streamline the review of the contribution we ask you to ensure the following steps have been taken:

### For all changes:
- [x] Is there a JIRA ticket associated with this PR? Is it referenced in the commit message?
- [x] Does your PR title start with NIFI- where is the JIRA number you are trying to resolve? Pay particular attention to the hyphen "-" character.
- [x] Has your PR been rebased against the latest commit within the target branch (typically master)?
- [x] Is your initial contribution a single, squashed commit?

### For code changes:
- [x] Have you ensured that the full suite of tests is executed via mvn -Pcontrib-check clean install at the root nifi folder?
- [x] Have you written or updated unit tests to verify your changes?
- [ ] If adding new dependencies to the code, are these dependencies licensed in a way that is compatible for inclusion under [ASF 2.0](http://www.apache.org/legal/resolved.html#category-a)?
- [ ] If applicable, have you updated the LICENSE file, including the main LICENSE file under nifi-assembly?
- [ ] If applicable, have you updated the NOTICE file, including the main NOTICE file found under nifi-assembly?
- [ ] If adding new Properties, have you added .displayName in addition to .name (programmatic access) for each of the new properties?

### For documentation related changes:
- [ ] Have you ensured that format looks appropriate for the output in which it is rendered?

### Note:
Please ensure that once the PR is submitted, you check travis-ci for build issues and submit an update to your PR as soon as possible.
You can merge this pull request into a Git repository by running:

$ git pull https://github.com/bdesert/nifi NIFI-5492_EXEC

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/nifi/pull/3008.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #3008

commit 9cdb0f7b9fa963cfa29100d7b9ae76645388404b
Author: Ed B
Date: 2018-09-16T14:31:27Z

NIFI-5492_EXEC Adding UDF to EL this PR adds new functinoality to expression language - user defined funtions.

---

[GitHub] nifi issue #2639: NIFI-4906 Add GetHDFSFileInfo

Github user bdesert commented on the issue: https://github.com/apache/nifi/pull/2639 @bbende, thank you for your comments! I've made changes to address all your concerns. Please review when you have time. ---

[GitHub] nifi pull request #2639: NIFI-4906 Add GetHDFSFileInfo

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/2639#discussion_r195907971

--- Diff:

nifi-nar-bundles/nifi-hadoop-bundle/nifi-hdfs-processors/src/main/java/org/apache/nifi/processors/hadoop/GetHDFSFileInfo.java

---

@@ -0,0 +1,803 @@

+package org.apache.nifi.processors.hadoop;

+

+import java.io.IOException;

+import java.security.PrivilegedExceptionAction;

+import java.util.ArrayList;

+import java.util.Collection;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.LinkedList;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.concurrent.TimeUnit;

+import java.util.regex.Pattern;

+

+import org.apache.commons.lang3.StringUtils;

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.Path;

+import org.apache.hadoop.fs.permission.FsAction;

+import org.apache.hadoop.fs.permission.FsPermission;

+import org.apache.hadoop.security.UserGroupInformation;

+import org.apache.nifi.annotation.behavior.InputRequirement;

+import org.apache.nifi.annotation.behavior.InputRequirement.Requirement;

+import org.apache.nifi.annotation.behavior.TriggerSerially;

+import org.apache.nifi.annotation.behavior.TriggerWhenEmpty;

+import org.apache.nifi.annotation.behavior.WritesAttribute;

+import org.apache.nifi.annotation.behavior.WritesAttributes;

+import org.apache.nifi.annotation.documentation.CapabilityDescription;

+import org.apache.nifi.annotation.documentation.SeeAlso;

+import org.apache.nifi.annotation.documentation.Tags;

+import org.apache.nifi.components.AllowableValue;

+import org.apache.nifi.components.PropertyDescriptor;

+import org.apache.nifi.components.PropertyValue;

+import org.apache.nifi.components.ValidationContext;

+import org.apache.nifi.components.ValidationResult;

+import org.apache.nifi.expression.ExpressionLanguageScope;

+import org.apache.nifi.flowfile.FlowFile;

+import org.apache.nifi.processor.ProcessContext;

+import org.apache.nifi.processor.ProcessSession;

+import org.apache.nifi.processor.ProcessorInitializationContext;

+import org.apache.nifi.processor.Relationship;

+import org.apache.nifi.processor.exception.ProcessException;

+import org.apache.nifi.processor.util.StandardValidators;

+import org.apache.nifi.processors.hadoop.GetHDFSFileInfo.HDFSFileInfoRequest.Groupping;

+

+@TriggerSerially

+@TriggerWhenEmpty

+@InputRequirement(Requirement.INPUT_ALLOWED)

+@Tags({"hadoop", "HDFS", "get", "list", "ingest", "source", "filesystem"})

+@CapabilityDescription("Retrieves a listing of files and directories from HDFS. "

++ "This processor creates a FlowFile(s) that represents the HDFS file/dir with relevant information. "

++ "Main purpose of this processor to provide functionality similar to HDFS Client, i.e. count, du, ls, test, etc. "

++ "Unlike ListHDFS, this processor is stateless, supports incoming connections and provides information on a dir level. "

+)

+@WritesAttributes({

+@WritesAttribute(attribute="hdfs.objectName", description="The name of the file/dir found on HDFS."),

+@WritesAttribute(attribute="hdfs.path", description="The path is set to the absolute path of the object's parent directory on HDFS. "

++ "For example, if an object is a directory 'foo', under directory '/bar' then 'hdfs.objectName' will have value 'foo', and 'hdfs.path' will be '/bar'"),

+@WritesAttribute(attribute="hdfs.type", description="The type of an object. Possible values: directory, file, link"),

+@WritesAttribute(attribute="hdfs.owner", description="T

[GitHub] nifi pull request #2639: NIFI-4906 Add GetHDFSFileInfo

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/2639#discussion_r195906907

--- Diff:

nifi-nar-bundles/nifi-hadoop-bundle/nifi-hdfs-processors/src/main/java/org/apache/nifi/processors/hadoop/GetHDFSFileInfo.java

---

@@ -0,0 +1,803 @@

+package org.apache.nifi.processors.hadoop;

+

+import java.io.IOException;

+import java.security.PrivilegedExceptionAction;

+import java.util.ArrayList;

+import java.util.Collection;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.LinkedList;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.concurrent.TimeUnit;

+import java.util.regex.Pattern;

+

+import org.apache.commons.lang3.StringUtils;

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.Path;

+import org.apache.hadoop.fs.permission.FsAction;

+import org.apache.hadoop.fs.permission.FsPermission;

+import org.apache.hadoop.security.UserGroupInformation;

+import org.apache.nifi.annotation.behavior.InputRequirement;

+import org.apache.nifi.annotation.behavior.InputRequirement.Requirement;

+import org.apache.nifi.annotation.behavior.TriggerSerially;

+import org.apache.nifi.annotation.behavior.TriggerWhenEmpty;

+import org.apache.nifi.annotation.behavior.WritesAttribute;

+import org.apache.nifi.annotation.behavior.WritesAttributes;

+import org.apache.nifi.annotation.documentation.CapabilityDescription;

+import org.apache.nifi.annotation.documentation.SeeAlso;

+import org.apache.nifi.annotation.documentation.Tags;

+import org.apache.nifi.components.AllowableValue;

+import org.apache.nifi.components.PropertyDescriptor;

+import org.apache.nifi.components.PropertyValue;

+import org.apache.nifi.components.ValidationContext;

+import org.apache.nifi.components.ValidationResult;

+import org.apache.nifi.expression.ExpressionLanguageScope;

+import org.apache.nifi.flowfile.FlowFile;

+import org.apache.nifi.processor.ProcessContext;

+import org.apache.nifi.processor.ProcessSession;

+import org.apache.nifi.processor.ProcessorInitializationContext;

+import org.apache.nifi.processor.Relationship;

+import org.apache.nifi.processor.exception.ProcessException;

+import org.apache.nifi.processor.util.StandardValidators;

+import org.apache.nifi.processors.hadoop.GetHDFSFileInfo.HDFSFileInfoRequest.Groupping;

+

+@TriggerSerially

+@TriggerWhenEmpty

+@InputRequirement(Requirement.INPUT_ALLOWED)

+@Tags({"hadoop", "HDFS", "get", "list", "ingest", "source", "filesystem"})

+@CapabilityDescription("Retrieves a listing of files and directories from HDFS. "

++ "This processor creates a FlowFile(s) that represents the HDFS file/dir with relevant information. "

++ "Main purpose of this processor to provide functionality similar to HDFS Client, i.e. count, du, ls, test, etc. "

++ "Unlike ListHDFS, this processor is stateless, supports incoming connections and provides information on a dir level. "

+)

+@WritesAttributes({

+@WritesAttribute(attribute="hdfs.objectName", description="The name of the file/dir found on HDFS."),

+@WritesAttribute(attribute="hdfs.path", description="The path is set to the absolute path of the object's parent directory on HDFS. "

++ "For example, if an object is a directory 'foo', under directory '/bar' then 'hdfs.objectName' will have value 'foo', and 'hdfs.path' will be '/bar'"),

+@WritesAttribute(attribute="hdfs.type", description="The type of an object. Possible values: directory, file, link"),

+@WritesAttribute(attribute="hdfs.owner", description="T

[GitHub] nifi pull request #2639: NIFI-4906 Add GetHDFSFileInfo

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/2639#discussion_r195906819

--- Diff:

nifi-nar-bundles/nifi-hadoop-bundle/nifi-hdfs-processors/src/main/java/org/apache/nifi/processors/hadoop/GetHDFSFileInfo.java

---

@@ -0,0 +1,803 @@

+package org.apache.nifi.processors.hadoop;

+

+import java.io.IOException;

+import java.security.PrivilegedExceptionAction;

+import java.util.ArrayList;

+import java.util.Collection;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.LinkedList;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.concurrent.TimeUnit;

+import java.util.regex.Pattern;

+

+import org.apache.commons.lang3.StringUtils;

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.Path;

+import org.apache.hadoop.fs.permission.FsAction;

+import org.apache.hadoop.fs.permission.FsPermission;

+import org.apache.hadoop.security.UserGroupInformation;

+import org.apache.nifi.annotation.behavior.InputRequirement;

+import org.apache.nifi.annotation.behavior.InputRequirement.Requirement;

+import org.apache.nifi.annotation.behavior.TriggerSerially;

+import org.apache.nifi.annotation.behavior.TriggerWhenEmpty;

+import org.apache.nifi.annotation.behavior.WritesAttribute;

+import org.apache.nifi.annotation.behavior.WritesAttributes;

+import org.apache.nifi.annotation.documentation.CapabilityDescription;

+import org.apache.nifi.annotation.documentation.SeeAlso;

+import org.apache.nifi.annotation.documentation.Tags;

+import org.apache.nifi.components.AllowableValue;

+import org.apache.nifi.components.PropertyDescriptor;

+import org.apache.nifi.components.PropertyValue;

+import org.apache.nifi.components.ValidationContext;

+import org.apache.nifi.components.ValidationResult;

+import org.apache.nifi.expression.ExpressionLanguageScope;

+import org.apache.nifi.flowfile.FlowFile;

+import org.apache.nifi.processor.ProcessContext;

+import org.apache.nifi.processor.ProcessSession;

+import org.apache.nifi.processor.ProcessorInitializationContext;

+import org.apache.nifi.processor.Relationship;

+import org.apache.nifi.processor.exception.ProcessException;

+import org.apache.nifi.processor.util.StandardValidators;

+import org.apache.nifi.processors.hadoop.GetHDFSFileInfo.HDFSFileInfoRequest.Groupping;

+

+@TriggerSerially

+@TriggerWhenEmpty

+@InputRequirement(Requirement.INPUT_ALLOWED)

+@Tags({"hadoop", "HDFS", "get", "list", "ingest", "source", "filesystem"})

+@CapabilityDescription("Retrieves a listing of files and directories from HDFS. "

++ "This processor creates a FlowFile(s) that represents the HDFS file/dir with relevant information. "

++ "Main purpose of this processor to provide functionality similar to HDFS Client, i.e. count, du, ls, test, etc. "

++ "Unlike ListHDFS, this processor is stateless, supports incoming connections and provides information on a dir level. "

+)

+@WritesAttributes({

+@WritesAttribute(attribute="hdfs.objectName", description="The name of the file/dir found on HDFS."),

+@WritesAttribute(attribute="hdfs.path", description="The path is set to the absolute path of the object's parent directory on HDFS. "

++ "For example, if an object is a directory 'foo', under directory '/bar' then 'hdfs.objectName' will have value 'foo', and 'hdfs.path' will be '/bar'"),

+@WritesAttribute(attribute="hdfs.type", description="The type of an object. Possible values: directory, file, link"),

+@WritesAttribute(attribute="hdfs.owner", description="T

[GitHub] nifi pull request #2639: NIFI-4906 Add GetHDFSFileInfo

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/2639#discussion_r195906462

--- Diff:

nifi-nar-bundles/nifi-hadoop-bundle/nifi-hdfs-processors/src/main/java/org/apache/nifi/processors/hadoop/GetHDFSFileInfo.java

---

@@ -0,0 +1,803 @@
+/*
+ * Licensed to the Apache Software Foundation (ASF) under one or more
+ * contributor license agreements. See the NOTICE file distributed with
+ * this work for additional information regarding copyright ownership.
+ * The ASF licenses this file to You under the Apache License, Version 2.0
+ * (the "License"); you may not use this file except in compliance with
+ * the License. You may obtain a copy of the License at
+ *
+ * http://www.apache.org/licenses/LICENSE-2.0
+ *
+ * Unless required by applicable law or agreed to in writing, software
+ * distributed under the License is distributed on an "AS IS" BASIS,
+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ * See the License for the specific language governing permissions and
+ * limitations under the License.
+ */
+package org.apache.nifi.processors.hadoop;
+
+import java.io.IOException;
+import java.security.PrivilegedExceptionAction;
+import java.util.ArrayList;
+import java.util.Collection;
+import java.util.HashMap;
+import java.util.HashSet;
+import java.util.LinkedList;
+import java.util.List;
+import java.util.Map;
+import java.util.Set;
+import java.util.concurrent.TimeUnit;
+import java.util.regex.Pattern;
+
+import org.apache.commons.lang3.StringUtils;
+import org.apache.hadoop.fs.FileStatus;
+import org.apache.hadoop.fs.FileSystem;
+import org.apache.hadoop.fs.Path;
+import org.apache.hadoop.fs.permission.FsAction;
+import org.apache.hadoop.fs.permission.FsPermission;
+import org.apache.hadoop.security.UserGroupInformation;
+import org.apache.nifi.annotation.behavior.InputRequirement;
+import org.apache.nifi.annotation.behavior.InputRequirement.Requirement;
+import org.apache.nifi.annotation.behavior.TriggerSerially;
+import org.apache.nifi.annotation.behavior.TriggerWhenEmpty;
+import org.apache.nifi.annotation.behavior.WritesAttribute;
+import org.apache.nifi.annotation.behavior.WritesAttributes;
+import org.apache.nifi.annotation.documentation.CapabilityDescription;
+import org.apache.nifi.annotation.documentation.SeeAlso;
+import org.apache.nifi.annotation.documentation.Tags;
+import org.apache.nifi.components.AllowableValue;
+import org.apache.nifi.components.PropertyDescriptor;
+import org.apache.nifi.components.PropertyValue;
+import org.apache.nifi.components.ValidationContext;
+import org.apache.nifi.components.ValidationResult;
+import org.apache.nifi.expression.ExpressionLanguageScope;
+import org.apache.nifi.flowfile.FlowFile;
+import org.apache.nifi.processor.ProcessContext;
+import org.apache.nifi.processor.ProcessSession;
+import org.apache.nifi.processor.ProcessorInitializationContext;
+import org.apache.nifi.processor.Relationship;
+import org.apache.nifi.processor.exception.ProcessException;
+import org.apache.nifi.processor.util.StandardValidators;
+import org.apache.nifi.processors.hadoop.GetHDFSFileInfo.HDFSFileInfoRequest.Groupping;
+
+@TriggerSerially
+@TriggerWhenEmpty
--- End diff --

@bbende, removed TriggerWhenEmpty and TriggerSerially. They were copied from a stateful processor and are not needed in this case.

---
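The hdfs.objectName, hdfs.path and hdfs.type attributes documented in the quoted @WritesAttribute annotations can be illustrated with a minimal, stdlib-only sketch. It is not code from the PR: the class and method names are hypothetical, and the two boolean flags stand in for whatever file-status information the processor actually consults (presumably Hadoop's FileStatus/Path, which the diff imports but this sketch deliberately avoids). It only shows the documented split, e.g. directory 'foo' under '/bar' gives objectName 'foo' and path '/bar'.

```java
// Hypothetical illustration of the attribute values described in the
// GetHDFSFileInfo @WritesAttribute docs; not the processor's implementation.
class HdfsAttributeSketch {

    /** Returns {objectName, parentPath} for an absolute, '/'-separated path. */
    static String[] splitPath(String absolutePath) {
        // Drop a single trailing slash so "/bar/foo/" behaves like "/bar/foo".
        String p = absolutePath.length() > 1 && absolutePath.endsWith("/")
                ? absolutePath.substring(0, absolutePath.length() - 1)
                : absolutePath;
        int idx = p.lastIndexOf('/');
        String name = p.substring(idx + 1);
        // A top-level object such as "/foo" has the root "/" as its parent.
        String parent = idx <= 0 ? "/" : p.substring(0, idx);
        return new String[] {name, parent};
    }

    /** Maps assumed status flags onto the documented hdfs.type values. */
    static String hdfsType(boolean isDirectory, boolean isSymlink) {
        if (isSymlink) {
            return "link";
        }
        return isDirectory ? "directory" : "file";
    }

    public static void main(String[] args) {
        String[] parts = splitPath("/bar/foo");
        System.out.println("hdfs.objectName=" + parts[0]);          // hdfs.objectName=foo
        System.out.println("hdfs.path=" + parts[1]);                // hdfs.path=/bar
        System.out.println("hdfs.type=" + hdfsType(true, false));   // hdfs.type=directory
    }
}
```

Checking for a symlink first is a design choice of the sketch: a link is reported as "link" regardless of what it points to.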

[GitHub] nifi pull request #2639: NIFI-4906 Add GetHDFSFileInfo

Github user bdesert commented on a diff in the pull request:

https://github.com/apache/nifi/pull/2639#discussion_r195905944

--- Diff:

nifi-nar-bundles/nifi-hadoop-bundle/nifi-hdfs-processors/src/main/java/org/apache/nifi/processors/hadoop/GetHDFSFileInfo.java

---

@@ -0,0 +1,803 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.nifi.processors.hadoop;

+

+import java.io.IOException;

+import java.security.PrivilegedExceptionAction;

+import java.util.ArrayList;

+import java.util.Collection;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.LinkedList;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.concurrent.TimeUnit;

+import java.util.regex.Pattern;

+

+import org.apache.commons.lang3.StringUtils;

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.Path;

+import org.apache.hadoop.fs.permission.FsAction;

+import org.apache.hadoop.fs.permission.FsPermission;

+import org.apache.hadoop.security.UserGroupInformation;

+import org.apache.nifi.annotation.behavior.InputRequirement;

+import org.apache.nifi.annotation.behavior.InputRequirement.Requirement;

+import org.apache.nifi.annotation.behavior.TriggerSerially;

+import org.apache.nifi.annotation.behavior.TriggerWhenEmpty;

+import org.apache.nifi.annotation.behavior.WritesAttribute;

+import org.apache.nifi.annotation.behavior.WritesAttributes;

+import org.apache.nifi.annotation.documentation.CapabilityDescription;

+import org.apache.nifi.annotation.documentation.SeeAlso;

+import org.apache.nifi.annotation.documentation.Tags;

+import org.apache.nifi.components.AllowableValue;

+import org.apache.nifi.components.PropertyDescriptor;

+import org.apache.nifi.components.PropertyValue;

+import org.apache.nifi.components.ValidationContext;

+import org.apache.nifi.components.ValidationResult;

+import org.apache.nifi.expression.ExpressionLanguageScope;

+import org.apache.nifi.flowfile.FlowFile;

+import org.apache.nifi.processor.ProcessContext;

+import org.apache.nifi.processor.ProcessSession;

+import org.apache.nifi.processor.ProcessorInitializationContext;

+import org.apache.nifi.processor.Relationship;

+import org.apache.nifi.processor.exception.ProcessException;

+import org.apache.nifi.processor.util.StandardValidators;

+import org.apache.nifi.processors.hadoop.GetHDFSFileInfo.HDFSFileInfoRequest.Groupping;

+

+@TriggerSerially

+@TriggerWhenEmpty

+@InputRequirement(Requirement.INPUT_ALLOWED)

+@Tags({"hadoop", "HDFS", "get", "list", "ingest", "source", "filesystem"})

+@CapabilityDescription("Retrieves a listing of files and directories from HDFS. "

++ "This processor creates a FlowFile(s) that represents the HDFS file/dir with relevant information. "

++ "Main purpose of this processor to provide functionality similar to HDFS Client, i.e. count, du, ls, test, etc. "

++ "Unlike ListHDFS, this processor is stateless, supports incoming connections and provides information on a dir level. "

+)

+@WritesAttributes({

+@WritesAttribute(attribute="hdfs.objectName", description="The name of the file/dir found on HDFS."),