[GitHub] [kafka] lkokhreidze commented on pull request #8558: KAFKA-8611 / KIP-221 documentation

lkokhreidze commented on pull request #8558: URL: https://github.com/apache/kafka/pull/8558#issuecomment-628419029 Hi @mjsax I've rebased the branch. Do you mean comment by Guozhang in the voting thread? If not, I missed it and can't find anything new in the DISCUSS thread. Can you point me where was it asked? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Resolved] (KAFKA-9676) Add test coverage for new ActiveTaskCreator and StandbyTaskCreator

[ https://issues.apache.org/jira/browse/KAFKA-9676?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Boyang Chen resolved KAFKA-9676.

Resolution: Fixed

The current unit test coverage is pretty good now, closing the ticket.

> Add test coverage for new ActiveTaskCreator and StandbyTaskCreator
>
> Key: KAFKA-9676
> URL: https://issues.apache.org/jira/browse/KAFKA-9676
> Project: Kafka
> Issue Type: Test
> Components: streams
> Reporter: Boyang Chen
> Priority: Major
> Labels: help-wanted, newbie
>
> The newly separated ActiveTaskCreator and StandbyTaskCreator have no unit
> test coverage. We should add corresponding tests.

--
This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-9989) StreamsUpgradeTest.test_metadata_upgrade could not guarantee all processor gets assigned task

[ https://issues.apache.org/jira/browse/KAFKA-9989?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17106950#comment-17106950 ]

Boyang Chen commented on KAFKA-9989:

I didn't find that in the log [~ableegoldman]

> StreamsUpgradeTest.test_metadata_upgrade could not guarantee all processor gets assigned task
>
> Key: KAFKA-9989
> URL: https://issues.apache.org/jira/browse/KAFKA-9989
> Project: Kafka
> Issue Type: Bug
> Components: streams, system tests
> Reporter: Boyang Chen
> Assignee: Boyang Chen
> Priority: Major
>
> System test StreamsUpgradeTest.test_metadata_upgrade could fail due to:
> "Never saw output 'processed [0-9]* records' on ubuntu@worker6"
> On closer inspection, the rebalance happens but produces no task
> assignment. We should fix this problem by making the rebalance result part
> of the check, and by skipping the record processing validation when the
> assignment is empty.

[GitHub] [kafka] abbccdda commented on a change in pull request #8395: Added doc for KIP-535 and updated it for KIP-562

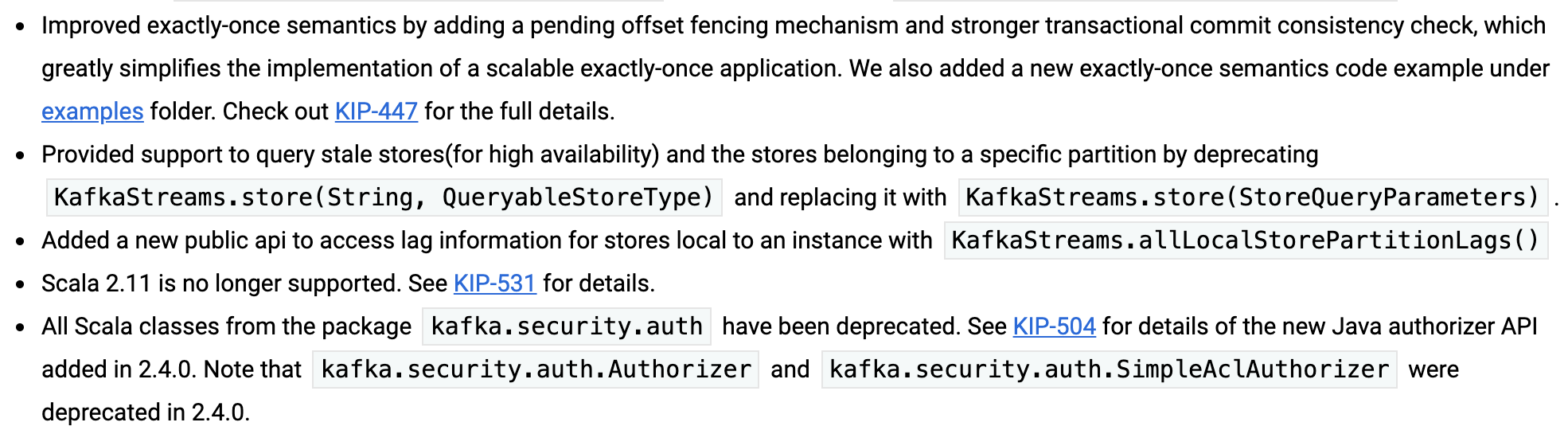

abbccdda commented on a change in pull request #8395:

URL: https://github.com/apache/kafka/pull/8395#discussion_r404455662

##

File path:

docs/upgrade.html

##

@@ -39,7 +39,8 @@ Notable changes in 2

https://github.com/apache/kafka/tree/2.5/examples examples folder. Check out

https://cwiki.apache.org/confluence/display/KAFKA/KIP-447%3A+Producer+scalability+for+exactly+once+semantics KIP-447 for the full details.

-Deprecated KafkaStreams.store(String, QueryableStoreType) and replaced it with KafkaStreams.store(StoreQueryParameters).

+Provided support to query stale stores(for high availability) and the stores belonging to a specific partition by deprecating KafkaStreams.store(String, QueryableStoreType) and replacing it with KafkaStreams.store(StoreQueryParameters).

Review comment:

Let's add a space between `stores(` as `stores (`, and add a period after `KafkaStreams.allLocalStorePartitionLags()`

[GitHub] [kafka] zhaohaidao opened a new pull request #8665: KAFKA-9984 Should fail the subscription when pattern is empty

zhaohaidao opened a new pull request #8665:

URL: https://github.com/apache/kafka/pull/8665

Ticket: KAFKA-9984

It indicates a configuration error when a consumer subscribes to an empty pattern:

```
[Consumer ... ] Subscribed to pattern: ''
```

The `consumer.subscribe(pattern)` call should fail with an illegal argument error in this case.
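For illustration only, the kind of guard this ticket asks for could look like the sketch below. `SubscriptionPatternCheck` and `requireNonEmpty` are hypothetical names, not the actual change in this PR or part of the `KafkaConsumer` API; the sketch only assumes that an empty regular expression should be rejected before the subscription is recorded.

```java
import java.util.regex.Pattern;

final class SubscriptionPatternCheck {

    // Hypothetical helper: rejects a null or empty pattern, returns any other pattern unchanged.
    static Pattern requireNonEmpty(Pattern pattern) {
        if (pattern == null || pattern.pattern().isEmpty()) {
            throw new IllegalArgumentException(
                "Topic pattern to subscribe to cannot be " + (pattern == null ? "null" : "empty"));
        }
        return pattern;
    }

    public static void main(String[] args) {
        // A non-empty pattern passes through.
        System.out.println(requireNonEmpty(Pattern.compile("data-.*")).pattern());
        try {
            // An empty pattern is the misconfiguration described in the ticket.
            requireNonEmpty(Pattern.compile(""));
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

Failing fast here surfaces the misconfiguration at the `subscribe` call instead of leaving a consumer that silently matches nothing.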

[GitHub] [kafka] showuon commented on pull request #8623: MINOR: Update the documentations

showuon commented on pull request #8623: URL: https://github.com/apache/kafka/pull/8623#issuecomment-628403219 Hi @kkonstantine , could you please review this small PR? Thanks.

[GitHub] [kafka] vvcephei commented on a change in pull request #8662: HOTFIX: skip listOffsets request for newly created changelog topics

vvcephei commented on a change in pull request #8662:

URL: https://github.com/apache/kafka/pull/8662#discussion_r424712060

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/ClientUtils.java

##

@@ -105,6 +109,7 @@ public static String getTaskProducerClientId(final String

threadClientId, final

endOffsets = future.get(timeout.toMillis(),

TimeUnit.MILLISECONDS);

}

} catch (final TimeoutException | RuntimeException |

InterruptedException | ExecutionException e) {

+LOG.warn("listOffsets request failed due to ", e);

Review comment:

```suggestion

LOG.warn("listOffsets request failed.", e);

```

Thanks! (minor suggestion to make the log message more typical)

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/InternalTopicManager.java

##

@@ -169,6 +173,9 @@ public void makeReady(final Map topics) {

log.error(timeoutAndRetryError);

throw new StreamsException(timeoutAndRetryError);

}

+log.debug("Completed validating internal topics and created {}",

newlyCreatedTopics);

Review comment:

It's not what you signed up for, but I'm wondering if we should at least

submit a Jira to give some of these AdminClient methods a "full consistency"

mode. In other words, since the command returns a future anyway, it would be

nice to be able to tell it not to return until it can guarantee the topic will

appear to be fully created on all brokers.

I'm mildly concerned that we're just kicking the can down the road a little

ways with this PR. I.e., we let the assignment happen, but then some other

metadata (or data) operation for that topic will just fail shortly thereafter.

More generally, we jump through a lot of hoops in our own tests to try and

make sure that the topics are really, actually created (or deleted) before

proceeding with the test, and I'm sure that our users also suffer from the same

problem in their testing and production code.

[GitHub] [kafka] chia7712 commented on a change in pull request #8663: KAFKA-9985: Sink connector may exhaust broker when writing in DLQ

chia7712 commented on a change in pull request #8663:

URL: https://github.com/apache/kafka/pull/8663#discussion_r424860254

##

File path:

connect/runtime/src/main/java/org/apache/kafka/connect/runtime/SinkConnectorConfig.java

##

@@ -96,6 +101,25 @@ public static void validate(Map props) {

throw new ConfigException("Must configure one of " +

SinkTask.TOPICS_CONFIG + " or " +

SinkTask.TOPICS_REGEX_CONFIG);

}

+

+if (hasDlqTopicConfig) {

+String dlqTopic = props.get(DLQ_TOPIC_NAME_CONFIG).trim();

+if (hasTopicsConfig) {

+List topics = parseTopicsList(props);

+if (topics.contains(dlqTopic)) {

+throw new ConfigException(DLQ_TOPIC_NAME_CONFIG + " has a

topic name which is already in " +

Review comment:

Should we log the topic name for this exception? For example, ```has a

topic name (xxx) which```
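A minimal sketch of the suggested message format, for illustration. `DlqTopicCheckSketch` is a hypothetical stand-in for the validation in `SinkConnectorConfig`; it throws `IllegalArgumentException` instead of Kafka's `ConfigException` so that it compiles on its own, and the two config key strings are assumptions spelled out only to make the example self-contained.

```java
import java.util.Arrays;
import java.util.List;

final class DlqTopicCheckSketch {

    // Assumed config keys, inlined here so the sketch does not depend on Kafka classes.
    static final String TOPICS_CONFIG = "topics";
    static final String DLQ_TOPIC_NAME_CONFIG = "errors.deadletterqueue.topic.name";

    // Fails when the dead letter queue topic is also one of the consumed topics,
    // and names the offending topic in the message as suggested in the review.
    static void validate(String dlqTopic, List<String> topics) {
        if (topics.contains(dlqTopic)) {
            throw new IllegalArgumentException(DLQ_TOPIC_NAME_CONFIG + " has a topic name ("
                    + dlqTopic + ") which is already in " + TOPICS_CONFIG);
        }
    }

    public static void main(String[] args) {
        try {
            validate("orders", Arrays.asList("orders", "payments"));
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage());
        }
    }
}
```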

[jira] [Commented] (KAFKA-9981) Running a dedicated mm2 cluster with more than one nodes,When the configuration is updated the task is not aware and will lose the update operation.

[

https://issues.apache.org/jira/browse/KAFKA-9981?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17106865#comment-17106865

]

Andre Price commented on KAFKA-9981:

Not sure if related but I think I'm seeing issues where task configs don't seem

to be getting updated consistently when new topics/partitions are found.

> Running a dedicated mm2 cluster with more than one nodes,When the

> configuration is updated the task is not aware and will lose the update

> operation.

>

>

> Key: KAFKA-9981

> URL: https://issues.apache.org/jira/browse/KAFKA-9981

> Project: Kafka

> Issue Type: Bug

> Components: mirrormaker

>Affects Versions: 2.4.0, 2.5.0, 2.4.1

>Reporter: victor

>Priority: Major

>

> DistributedHerder.reconfigureConnector handles the config update as follows:

> {code:java}
> if (changed) {
>     List<Map<String, String>> rawTaskProps = reverseTransform(connName, configState, taskProps);
>     if (isLeader()) {
>         configBackingStore.putTaskConfigs(connName, rawTaskProps);
>         cb.onCompletion(null, null);
>     } else {
>         // We cannot forward the request on the same thread because this reconfiguration can happen as a result of connector
>         // addition or removal. If we blocked waiting for the response from leader, we may be kicked out of the worker group.
>         forwardRequestExecutor.submit(new Runnable() {
>             @Override
>             public void run() {
>                 try {
>                     String leaderUrl = leaderUrl();
>                     if (leaderUrl == null || leaderUrl.trim().isEmpty()) {
>                         cb.onCompletion(new ConnectException("Request to leader to " +
>                                 "reconfigure connector tasks failed " +
>                                 "because the URL of the leader's REST interface is empty!"), null);
>                         return;
>                     }
>                     String reconfigUrl = RestServer.urlJoin(leaderUrl, "/connectors/" + connName + "/tasks");
>                     log.trace("Forwarding task configurations for connector {} to leader", connName);
>                     RestClient.httpRequest(reconfigUrl, "POST", null, rawTaskProps, null, config, sessionKey, requestSignatureAlgorithm);
>                     cb.onCompletion(null, null);
>                 } catch (ConnectException e) {
>                     log.error("Request to leader to reconfigure connector tasks failed", e);
>                     cb.onCompletion(e, null);
>                 }
>             }
>         });
>     }
> }
> {code}

> The KafkaConfigBackingStore task checks for configuration updates, such as a

> topic whitelist update. If the KafkaConfigBackingStore task is not running on

> the leader node, an HTTP request is sent to notify the leader of the

> configuration update. However, a dedicated mm2 cluster does not have the HTTP

> server turned on, so the request fails to be sent, causing the update

> operation to be lost.

[GitHub] [kafka] chia7712 commented on a change in pull request #8663: KAFKA-9985: Sink connector may exhaust broker when writing in DLQ

chia7712 commented on a change in pull request #8663:

URL: https://github.com/apache/kafka/pull/8663#discussion_r424859533

##

File path:

connect/runtime/src/main/java/org/apache/kafka/connect/runtime/SinkConnectorConfig.java

##

@@ -108,6 +132,20 @@ public static boolean hasTopicsRegexConfig(Map props) {

return topicsRegexStr != null && !topicsRegexStr.trim().isEmpty();

}

+public static boolean hasDlqTopicConfig(Map props) {

+String dqlTopicStr = props.get(DLQ_TOPIC_NAME_CONFIG);

+return dqlTopicStr != null && !dqlTopicStr.trim().isEmpty();

+}

+

+public static List parseTopicsList(Map props) {

+List topics = (List)

ConfigDef.parseType(TOPICS_CONFIG, props.get(TOPICS_CONFIG), Type.LIST);

+return topics

+.stream()

+.filter(topic -> !topic.isEmpty())

+.distinct()

Review comment:

How about returning a Set instead of List?

```

return topics

.stream()

.filter(topic -> !topic.isEmpty())

.collect(Collectors.toSet());

```
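One point to weigh with this suggestion: `Collectors.toSet()` makes no guarantee about iteration order, whereas the `distinct()` version keeps the configured order. A sketch that deduplicates and keeps the order is shown below; `TopicDedupSketch` and `distinctTopics` are hypothetical names used for illustration, not code from the PR.

```java
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

final class TopicDedupSketch {

    // Drops empty entries and duplicates while preserving first-seen order.
    static Set<String> distinctTopics(List<String> topics) {
        return topics.stream()
                .filter(topic -> !topic.isEmpty())
                .collect(Collectors.toCollection(LinkedHashSet::new));
    }

    public static void main(String[] args) {
        Set<String> topics = distinctTopics(Arrays.asList("orders", "", "payments", "orders"));
        System.out.println(topics);                     // order of first appearance is kept
        System.out.println(topics.contains("orders"));  // hash-based membership check
    }
}
```

Returning a `Set` also makes the later `contains(dlqTopic)` check a hash lookup rather than a linear scan, although for typical topic lists the difference is likely negligible.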

[jira] [Commented] (KAFKA-9989) StreamsUpgradeTest.test_metadata_upgrade could not guarantee all processor gets assigned task

[

https://issues.apache.org/jira/browse/KAFKA-9989?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17106864#comment-17106864

]

Sophie Blee-Goldman commented on KAFKA-9989:

Yeah that looks like the FallbackPriorTaskAssignor to me. Do you see something

like

{code:java}

Failed to fetch end offsets for changelogs, will return previous assignment to

clients and " + "trigger another rebalance to retry.

{code}

in the logs? Depending on when this test was run this specific log message may

or may not have existed, we've made a lot of changes here lately..

[jira] [Assigned] (KAFKA-9984) Should fail the subscription when pattern is empty

[ https://issues.apache.org/jira/browse/KAFKA-9984?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

HaiyuanZhao reassigned KAFKA-9984:

Assignee: HaiyuanZhao

> Should fail the subscription when pattern is empty
>
> Key: KAFKA-9984
> URL: https://issues.apache.org/jira/browse/KAFKA-9984
> Project: Kafka
> Issue Type: Bug
> Components: consumer
> Reporter: Boyang Chen
> Assignee: HaiyuanZhao
> Priority: Major
>
> We have seen a case where the consumer subscribes to an empty string pattern:
> ```
> [Consumer ... ] Subscribed to pattern: ''
> ```
> which doesn't make any sense and usually indicates a configuration error. The
> `consumer.subscribe(pattern)` call should fail with an illegal argument error
> in this case.

[jira] [Commented] (KAFKA-9984) Should fail the subscription when pattern is empty

[ https://issues.apache.org/jira/browse/KAFKA-9984?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17106857#comment-17106857 ]

Boyang Chen commented on KAFKA-9984:

[~guozhang] Thanks!

[jira] [Commented] (KAFKA-9989) StreamsUpgradeTest.test_metadata_upgrade could not guarantee all processor gets assigned task

[

https://issues.apache.org/jira/browse/KAFKA-9989?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17106853#comment-17106853

]

Boyang Chen commented on KAFKA-9989:

Looks like it is determined by the assignor: (the latest added consumer gets 0

partition assigned)

[2020-05-07 07:53:15,487] DEBUG stream-thread

[StreamsUpgradeTest-0626d80d-03ed-4cf9-95a2-d778a791394f-StreamThread-1-consumer]

Assigning tasks [0_0, 0_1, 0_2, 0_3, 0_4] to clients

\{0626d80d-03ed-4cf9-95a2-d778a791394f=[activeTasks: ([]) standbyTasks: ([])

assignedTasks: ([]) prevActiveTasks: ([0_1, 0_3]) prevStandbyTasks: ([])

prevAssignedTasks: ([0_1, 0_3]) prevOwnedPartitionsByConsumerId: ([data-1,

data-3]) changelogOffsetTotalsByTask: ([]) capacity: 1],

3405b9f3-7f28-454a-9a00-906f8c1b520f=[activeTasks: ([]) standbyTasks: ([])

assignedTasks: ([]) prevActiveTasks: ([]) prevStandbyTasks: ([])

prevAssignedTasks: ([]) prevOwnedPartitionsByConsumerId: ([])

changelogOffsetTotalsByTask: ([]) capacity: 1],

8611ff87-6782-4c27-b93e-c145b0bacccd=[activeTasks: ([]) standbyTasks: ([])

assignedTasks: ([]) prevActiveTasks: ([0_0, 0_2, 0_4]) prevStandbyTasks: ([])

prevAssignedTasks: ([0_0, 0_2, 0_4]) prevOwnedPartitionsByConsumerId: ([data-0,

data-2, data-4]) changelogOffsetTotalsByTask: ([]) capacity: 1]} with number of

replicas 0

(org.apache.kafka.streams.processor.internals.StreamsPartitionAssignor)

[2020-05-07 07:53:15,487] DEBUG [AdminClient

clientId=StreamsUpgradeTest-0626d80d-03ed-4cf9-95a2-d778a791394f-admin] Sending

MetadataRequestData(topics=[], allowAutoTopicCreation=false,

includeClusterAuthorizedOperations=false,

includeTopicAuthorizedOperations=false) to worker2:9092 (id: 1 rack: null).

correlationId=7 (org.apache.kafka.clients.admin.KafkaAdminClient)

[2020-05-07 07:53:15,487] INFO stream-thread

[StreamsUpgradeTest-0626d80d-03ed-4cf9-95a2-d778a791394f-StreamThread-1-consumer]

Assigned tasks to clients as

0626d80d-03ed-4cf9-95a2-d778a791394f=[activeTasks: ([0_1, 0_3]) standbyTasks:

([]) assignedTasks: ([0_1, 0_3]) prevActiveTasks: ([0_1, 0_3])

prevStandbyTasks: ([]) prevAssignedTasks: ([0_1, 0_3])

prevOwnedPartitionsByConsumerId: ([data-1, data-3])

changelogOffsetTotalsByTask: ([]) capacity: 1]

3405b9f3-7f28-454a-9a00-906f8c1b520f=[activeTasks: ([]) standbyTasks: ([])

assignedTasks: ([]) prevActiveTasks: ([]) prevStandbyTasks: ([])

prevAssignedTasks: ([]) prevOwnedPartitionsByConsumerId: ([])

changelogOffsetTotalsByTask: ([]) capacity: 1]

8611ff87-6782-4c27-b93e-c145b0bacccd=[activeTasks: ([0_0, 0_2, 0_4])

standbyTasks: ([]) assignedTasks: ([0_0, 0_2, 0_4]) prevActiveTasks: ([0_0,

0_2, 0_4]) prevStandbyTasks: ([]) prevAssignedTasks: ([0_0, 0_2, 0_4])

prevOwnedPartitionsByConsumerId: ([data-0, data-2, data-4])

changelogOffsetTotalsByTask: ([]) capacity: 1].

(org.apache.kafka.streams.processor.internals.StreamsPartitionAssignor)

[jira] [Commented] (KAFKA-9617) Replica Fetcher can mark partition as failed when max.message.bytes is changed

[

https://issues.apache.org/jira/browse/KAFKA-9617?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17106843#comment-17106843

]

Chia-Ping Tsai commented on KAFKA-9617:

---

[https://github.com/apache/kafka/pull/8659]

> Replica Fetcher can mark partition as failed when max.message.bytes is changed

> --

>

> Key: KAFKA-9617

> URL: https://issues.apache.org/jira/browse/KAFKA-9617

> Project: Kafka

> Issue Type: Bug

>Reporter: Stanislav Kozlovski

>Assignee: Chia-Ping Tsai

>Priority: Major

> Labels: newbie

>

> There exists a race condition when changing the dynamic max.message.bytes

> config for a topic. A follower replica can replicate a message that is over

> that size after it processes the config change. When this happens, the

> replica fetcher catches the unexpected exception, marks the partition as

> failed and stops replicating it.

> {code:java}

> 06:38:46.596 Processing override for entityPath: topics/partition-1 with

> config: Map(max.message.bytes -> 512)

> 06:38:46.597 [ReplicaFetcher replicaId=1, leaderId=3, fetcherId=0]

> Unexpected error occurred while processing data for partition partition-1 at

> offset 20964

> org.apache.kafka.common.errors.RecordTooLargeException: The record batch size

> in the append to partition-1 is 3349 bytes which exceeds the maximum

> configured value of 512.

> {code}

[jira] [Commented] (KAFKA-9987) Improve sticky partition assignor algorithm

[

https://issues.apache.org/jira/browse/KAFKA-9987?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17106832#comment-17106832

]

Sophie Blee-Goldman commented on KAFKA-9987:

Pseudo-code sketch of the algorithm:

C_f := (P/N)_floor, the floor capacity
C_c := (P/N)_ceil, the ceiling capacity
members := the sorted set of all consumers
partitions := the set of all partitions
unassigned_partitions := the set of partitions not yet assigned, initialized to be all partitions
unfilled_members := the set of consumers not yet at capacity, initialized to empty
max_capacity_members := the set of members with exactly C_c partitions assigned, initialized to empty
member.owned_partitions := the set of previously owned partitions encoded in the Subscription

// Reassign as many previously owned partitions as possible
for member : members
    remove any partitions that are no longer in the subscription from its owned partitions
    remove all owned_partitions if the generation is old
    if member.owned_partitions.size < C_f
        assign all owned partitions to member and remove from unassigned_partitions
        add member to unfilled_members
    else if member.owned_partitions.size == C_f
        assign first C_f owned_partitions to member and remove from unassigned_partitions
    else
        assign first C_c owned_partitions to member and remove from unassigned_partitions
        add member to max_capacity_members

sort unassigned_partitions in partition order, i.e. t0_p0, t1_p0, t2_p0, t0_p1, t1_p1 (for data parallelism)
sort unfilled_members by memberId (for determinism)

// Fill remaining members up to C_f
for member : unfilled_members
    compute the remaining capacity as C = C_f - num_assigned_partitions
    pop the first C partitions from unassigned_partitions and assign to member

// Steal partitions from members with max_capacity if necessary
if we run out of partitions before getting to the end of unfilled members:
    for member : unfilled_members
        poll for first member in max_capacity_members and remove one partition
        assign this partition to the unfilled member

// Distribute remaining partitions, one per consumer, to fill some up to C_c if necessary
if we run out of unfilled_members before assigning all partitions:
    for partition : unassigned_partitions
        assign to next member in members that is not in max_capacity_members (then add member to max_capacity_members)
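To make the pseudo-code above concrete, here is a rough Java sketch under simplifying assumptions: every member has the same subscription and a current generation (so the two "remove" steps reduce to dropping partitions that no longer exist), partitions and member ids are plain strings, and the fill and steal steps are merged into one loop. `ConstrainedStickySketch` and `assign` are hypothetical names; this is not the implementation in `AbstractStickyAssignor`.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.HashSet;
import java.util.Iterator;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

final class ConstrainedStickySketch {

    // owned: previously owned partitions per member id; partitions: all partitions, already in partition order.
    static Map<String, List<String>> assign(Map<String, List<String>> owned, List<String> partitions) {
        Map<String, List<String>> result = new TreeMap<>();           // members sorted by id
        if (owned.isEmpty()) {
            return result;
        }
        int floor = partitions.size() / owned.size();                  // C_f
        int ceil = partitions.size() % owned.size() == 0 ? floor : floor + 1;  // C_c
        Set<String> unassigned = new LinkedHashSet<>(partitions);      // keeps partition order
        List<String> unfilled = new ArrayList<>();
        Deque<String> maxCapacity = new ArrayDeque<>();

        // Reassign as many previously owned partitions as possible, capped at C_c per member.
        Map<String, List<String>> sortedOwned = new TreeMap<>(owned);
        for (Map.Entry<String, List<String>> entry : sortedOwned.entrySet()) {
            List<String> kept = new ArrayList<>();
            for (String partition : entry.getValue()) {
                // remove() succeeds only for partitions that still exist and are not yet claimed
                if (kept.size() < ceil && unassigned.remove(partition)) {
                    kept.add(partition);
                }
            }
            result.put(entry.getKey(), kept);
            if (kept.size() < floor) {
                unfilled.add(entry.getKey());
            } else if (kept.size() > floor) {
                maxCapacity.add(entry.getKey());
            }
        }

        // Fill members up to C_f, stealing from a member at C_c once nothing is left unassigned.
        for (String member : unfilled) {
            List<String> mine = result.get(member);
            while (mine.size() < floor) {
                if (!unassigned.isEmpty()) {
                    mine.add(popFirst(unassigned));
                } else {
                    List<String> donor = result.get(maxCapacity.poll());
                    mine.add(donor.remove(donor.size() - 1));
                }
            }
        }

        // Hand out what is left, one partition per member that is not already at C_c.
        Set<String> atMax = new HashSet<>(maxCapacity);
        for (Map.Entry<String, List<String>> entry : result.entrySet()) {
            if (unassigned.isEmpty()) {
                break;
            }
            if (!atMax.contains(entry.getKey())) {
                entry.getValue().add(popFirst(unassigned));
            }
        }
        return result;
    }

    private static String popFirst(Set<String> set) {
        Iterator<String> it = set.iterator();
        String first = it.next();
        it.remove();
        return first;
    }

    public static void main(String[] args) {
        Map<String, List<String>> owned = new TreeMap<>();
        owned.put("a", Arrays.asList("p0", "p1", "p2"));
        owned.put("b", Arrays.asList("p3"));
        owned.put("c", new ArrayList<>());
        System.out.println(assign(owned, Arrays.asList("p0", "p1", "p2", "p3", "p4")));
    }
}
```

With five partitions and three members, C_f is 1 and C_c is 2: member `a` keeps `p0` and `p1`, `b` keeps `p3` and picks up `p4`, and `c` receives `p2`. Every step is a single pass over members or partitions, which is the point of constraining the problem.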

> Improve sticky partition assignor algorithm

> ---

>

> Key: KAFKA-9987

> URL: https://issues.apache.org/jira/browse/KAFKA-9987

> Project: Kafka

> Issue Type: Improvement

> Components: clients

>Reporter: Sophie Blee-Goldman

>Assignee: Sophie Blee-Goldman

>Priority: Major

>

> In

> [KIP-429|https://cwiki.apache.org/confluence/display/KAFKA/KIP-429%3A+Kafka+Consumer+Incremental+Rebalance+Protocol]

> we added the new CooperativeStickyAssignor which leverages on the underlying

> sticky assignment algorithm of the existing StickyAssignor (moved to

> AbstractStickyAssignor). The algorithm is fairly complex as it tries to

> optimize stickiness while satisfying perfect balance _in the case individual

> consumers may be subscribed to different subsets of the topics._ While it

> does a pretty good job at what it promises to do, it doesn't scale well with

> large numbers of consumers and partitions.

> To give a concrete example, users have reported that it takes 2.5 minutes for

> the assignment to complete with just 2100 consumers reading from 2100

> partitions. Since partitions revoked during the first of two cooperative

> rebalances will remain unassigned until the end of the second rebalance, it's

> important for the rebalance to be as fast as possible. And since one of the

> primary improvements of the cooperative rebalancing protocol is better

> scaling experience, the only OOTB cooperative assignor should not itself

> scale poorly.

> If we can constrain the problem a bit, we can simplify the algorithm greatly.

> In many cases the individual consumers won't be subscribed to some random

> subset of the total subscription, they will all be subscribed to the same set

> of topics and rely on the assignor to balance the partition workload.

> We can detect this case by checking the group's individual subscriptions and

> call on a more efficient assignment algorithm.

[GitHub] [kafka] abbccdda closed pull request #7172: (DO NOT MERGE) Kip 447 condensed POC

abbccdda closed pull request #7172: URL: https://github.com/apache/kafka/pull/7172

[GitHub] [kafka] abbccdda closed pull request #7676: DEBUG: add partition empty check

abbccdda closed pull request #7676: URL: https://github.com/apache/kafka/pull/7676

[GitHub] [kafka] abbccdda closed pull request #7179: MINOR: improve StreamsBrokerDownResilienceTest debuggability

abbccdda closed pull request #7179: URL: https://github.com/apache/kafka/pull/7179

[GitHub] [kafka] ableegoldman commented on a change in pull request #8588: KAFKA-6145: KIP-441: Improve assignment balance

ableegoldman commented on a change in pull request #8588:

URL: https://github.com/apache/kafka/pull/8588#discussion_r424830762

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/assignment/ConstrainedPrioritySet.java

##

@@ -16,77 +16,58 @@

*/

package org.apache.kafka.streams.processor.internals.assignment;

+import org.apache.kafka.streams.processor.TaskId;

+

import java.util.Collection;

+import java.util.Comparator;

import java.util.HashSet;

-import java.util.LinkedList;

-import java.util.List;

-import java.util.Map;

import java.util.PriorityQueue;

import java.util.Set;

import java.util.UUID;

import java.util.function.BiFunction;

-import org.apache.kafka.streams.processor.TaskId;

+import java.util.function.Function;

/**

* Wraps a priority queue of clients and returns the next valid candidate(s)

based on the current task assignment

*/

-class ValidClientsByTaskLoadQueue {

+class ConstrainedPrioritySet {

private final PriorityQueue clientsByTaskLoad;

-private final BiFunction validClientCriteria;

+private final BiFunction constraint;

private final Set uniqueClients = new HashSet<>();

-ValidClientsByTaskLoadQueue(final Map clientStates,

-final BiFunction

validClientCriteria) {

-this.validClientCriteria = validClientCriteria;

-

-clientsByTaskLoad = new PriorityQueue<>(

-(client, other) -> {

-final double clientTaskLoad =

clientStates.get(client).taskLoad();

-final double otherTaskLoad =

clientStates.get(other).taskLoad();

-if (clientTaskLoad < otherTaskLoad) {

-return -1;

-} else if (clientTaskLoad > otherTaskLoad) {

-return 1;

-} else {

-return client.compareTo(other);

-}

-});

+ConstrainedPrioritySet(final BiFunction constraint,

+ final Function weight) {

+this.constraint = constraint;

+clientsByTaskLoad = new

PriorityQueue<>(Comparator.comparing(weight).thenComparing(clientId ->

clientId));

}

/**

* @return the next least loaded client that satisfies the given criteria,

or null if none do

*/

-UUID poll(final TaskId task) {

-final List validClient = poll(task, 1);

-return validClient.isEmpty() ? null : validClient.get(0);

-}

-

-/**

- * @return the next N <= {@code numClientsPerTask} clients in the

underlying priority queue that are valid candidates for the given task

- */

-List poll(final TaskId task, final int numClients) {

-final List nextLeastLoadedValidClients = new LinkedList<>();

+UUID poll(final TaskId task, final Function

extraConstraint) {

Review comment:

> we know that we cannot consider C1 again for the second poll

Yep, that's what I was getting at above. I'm totally on board with reducing

the number of assumptions, especially as this class becomes more generally

used. I was just intrigued by what you said initially and thought "This

actually results in better balancing characteristics when assigning standbys"

meant that you had actually seen a difference in the tests.

Thanks for continuing to improve this class!
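For readers following along without the full diff, the idea under discussion can be sketched as below. This is a simplified illustration and not the class from the PR: `ConstrainedPrioritySetSketch` is a hypothetical name, generic type parameters stand in for the `UUID` client ids and `TaskId` so that the example compiles on its own, and the re-offer handling is an assumption about how a caller would refresh a client's position after its weight changes.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;
import java.util.Set;
import java.util.function.BiFunction;
import java.util.function.Function;

final class ConstrainedPrioritySetSketch<C extends Comparable<C>, T> {
    private final PriorityQueue<C> clientsByWeight;
    private final BiFunction<C, T, Boolean> constraint;
    private final Set<C> uniqueClients = new HashSet<>();

    ConstrainedPrioritySetSketch(BiFunction<C, T, Boolean> constraint, Function<C, Double> weight) {
        this.constraint = constraint;
        // Order by weight first, then by the client itself so that ties are broken deterministically.
        Comparator<C> byWeight = Comparator.comparing(weight);
        this.clientsByWeight = new PriorityQueue<>(byWeight.thenComparing(Comparator.<C>naturalOrder()));
    }

    // Adds a client, or re-inserts it so that a changed weight is reflected in its position.
    void offer(C client) {
        if (!uniqueClients.add(client)) {
            clientsByWeight.remove(client);
        }
        clientsByWeight.offer(client);
    }

    // Returns and removes the lowest-weight client that satisfies the constraint for the task, or null if none do.
    C poll(T task) {
        List<C> skipped = new ArrayList<>();
        C found = null;
        while (!clientsByWeight.isEmpty()) {
            C candidate = clientsByWeight.poll();
            if (constraint.apply(candidate, task)) {
                found = candidate;
                uniqueClients.remove(candidate);
                break;
            }
            skipped.add(candidate);
        }
        clientsByWeight.addAll(skipped);   // clients that failed the constraint stay in the set
        return found;
    }

    public static void main(String[] args) {
        Map<String, Double> load = new HashMap<>();
        load.put("client-a", 2.0);
        load.put("client-b", 1.0);
        ConstrainedPrioritySetSketch<String, String> set = new ConstrainedPrioritySetSketch<String, String>(
                (client, task) -> !client.equals("client-b"), load::get);
        set.offer("client-a");
        set.offer("client-b");
        // client-b has the lower load but fails the constraint, so client-a is returned.
        System.out.println(set.poll("task-0"));
    }
}
```

Because a polled client leaves the set until it is offered again, a caller that polls twice for the same task cannot receive the same client twice, which is the property discussed in the quoted comment.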

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] d8tltanc commented on pull request #8528: System tests should use --bootstrap-server rather than --zookeeper when testing new Kafka versions

d8tltanc commented on pull request #8528: URL: https://github.com/apache/kafka/pull/8528#issuecomment-628340161 Latest dev branch builds here (REMOVE_SYSTEM_4): https://jenkins.confluent.io/job/system-test-kafka-branch-builder/3943/

[GitHub] [kafka] vvcephei commented on a change in pull request #8588: KAFKA-6145: KIP-441: Improve assignment balance

vvcephei commented on a change in pull request #8588:

URL: https://github.com/apache/kafka/pull/8588#discussion_r424825641

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/assignment/ConstrainedPrioritySet.java

##

@@ -16,77 +16,58 @@

*/

package org.apache.kafka.streams.processor.internals.assignment;

+import org.apache.kafka.streams.processor.TaskId;

+

import java.util.Collection;

+import java.util.Comparator;

import java.util.HashSet;

-import java.util.LinkedList;

-import java.util.List;

-import java.util.Map;

import java.util.PriorityQueue;

import java.util.Set;

import java.util.UUID;

import java.util.function.BiFunction;

-import org.apache.kafka.streams.processor.TaskId;

+import java.util.function.Function;

/**

* Wraps a priority queue of clients and returns the next valid candidate(s)

based on the current task assignment

*/

-class ValidClientsByTaskLoadQueue {

+class ConstrainedPrioritySet {

private final PriorityQueue clientsByTaskLoad;

-private final BiFunction validClientCriteria;

+private final BiFunction constraint;

private final Set uniqueClients = new HashSet<>();

-ValidClientsByTaskLoadQueue(final Map clientStates,

-final BiFunction

validClientCriteria) {

-this.validClientCriteria = validClientCriteria;

-

-clientsByTaskLoad = new PriorityQueue<>(

-(client, other) -> {

-final double clientTaskLoad =

clientStates.get(client).taskLoad();

-final double otherTaskLoad =

clientStates.get(other).taskLoad();

-if (clientTaskLoad < otherTaskLoad) {

-return -1;

-} else if (clientTaskLoad > otherTaskLoad) {

-return 1;

-} else {

-return client.compareTo(other);

-}

-});

+ConstrainedPrioritySet(final BiFunction constraint,

+ final Function weight) {

+this.constraint = constraint;

+clientsByTaskLoad = new

PriorityQueue<>(Comparator.comparing(weight).thenComparing(clientId ->

clientId));

}

/**

* @return the next least loaded client that satisfies the given criteria,

or null if none do

*/

-UUID poll(final TaskId task) {

-final List validClient = poll(task, 1);

-return validClient.isEmpty() ? null : validClient.get(0);

-}

-

-/**

- * @return the next N <= {@code numClientsPerTask} clients in the

underlying priority queue that are valid candidates for the given task

- */

-List poll(final TaskId task, final int numClients) {

-final List nextLeastLoadedValidClients = new LinkedList<>();

+UUID poll(final TaskId task, final Function

extraConstraint) {

Review comment:

I was operating more on intuition here. To be honest, I had a suspicion

you would call this out, so I probably should have just saved time and taken

the time to prove it.

Forgetting about the constraint for a minute, I think that what I had in

mind for balance is something like, suppose you have two clients "C1" and

"C2"... C1 has one task and C2 has two. You poll and get C1 and add a task.

Now, they both have two.

If you add it back and poll again, you might prefer to get C1 back again.

Maybe because the "weight" function takes into account more than just the task

load, or maybe just because of the total order we impose based on clientId, in

which `C1 < C2`. But if you just poll two clients to begin with, then C1

doesn't get a chance to be included for the second poll; you just automatically

get C1 and C2.

In retrospect, this might be moot in practice, because the only time we

actually polled for multiple clients was when assigning standbys, and

specifically when we were assigning multiple replicas of the same task, in

which case, we know that we _cannot_ consider C1 again for the second poll.

From a computer-sciencey perspective, it doesn't seem like the data

structure should be able to make this assumption, though, since it can't know

that polling a client also invalidates it for a subsequent poll with the same

last-mile predicate.

So, even in retrospect, I'm tempted to leave it this way (big surprise

there), although I'd acknowledge that the outcome is actually not different in

the way that we would use the method.
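
To make the C1/C2 scenario above concrete, here is a minimal sketch of the two polling strategies. It is an illustration only, not the `ConstrainedPrioritySet` code from this PR: the class `PollOrderSketch`, its method names, and the use of plain `String` client ids with an integer load are all assumptions made for the example, and the constraint is left out entirely.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;

// Hypothetical illustration; names and types are not taken from the PR.
final class PollOrderSketch {

    // Builds a queue ordered by current load, with ties broken by client id.
    private static PriorityQueue<String> queueOver(final Map<String, Integer> load) {
        final PriorityQueue<String> queue = new PriorityQueue<>(
            Comparator.<String, Integer>comparing(load::get)
                .thenComparing(Comparator.<String>naturalOrder()));
        queue.addAll(load.keySet());
        return queue;
    }

    // Poll one client, assign a task, offer the client back, then poll again.
    // The load only changes while the client is outside the queue.
    static List<String> pollOneAtATime(final Map<String, Integer> initialLoad, final int numTasks) {
        final Map<String, Integer> load = new HashMap<>(initialLoad);
        final PriorityQueue<String> queue = queueOver(load);
        final List<String> chosen = new ArrayList<>();
        for (int i = 0; i < numTasks && !queue.isEmpty(); i++) {
            final String client = queue.poll();
            load.merge(client, 1, Integer::sum);
            chosen.add(client);
            queue.offer(client);
        }
        return chosen;
    }

    // Poll all the clients up front, so no client can be returned twice.
    static List<String> pollAllUpFront(final Map<String, Integer> initialLoad, final int numTasks) {
        final PriorityQueue<String> queue = queueOver(new HashMap<>(initialLoad));
        final List<String> chosen = new ArrayList<>();
        for (int i = 0; i < numTasks && !queue.isEmpty(); i++) {
            chosen.add(queue.poll());
        }
        return chosen;
    }
}
```

With C1 at load 1 and C2 at load 2, polling one at a time yields C1 twice (the second poll is a tie on load, broken by client id), while polling two up front yields C1 and then C2. Whether that difference matters depends on the caller; when the two polls are for replicas of the same task, C1 would be excluded from the second poll anyway.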


[jira] [Created] (KAFKA-9991) Flaky Test KTableSourceTopicRestartIntegrationTest.shouldRestoreAndProgressWhenTopicWrittenToDuringRestorationWithEosAlphaEnabled

Sophie Blee-Goldman created KAFKA-9991:
--

             Summary: Flaky Test KTableSourceTopicRestartIntegrationTest.shouldRestoreAndProgressWhenTopicWrittenToDuringRestorationWithEosAlphaEnabled
                 Key: KAFKA-9991
                 URL: https://issues.apache.org/jira/browse/KAFKA-9991
             Project: Kafka
          Issue Type: Bug
          Components: streams
            Reporter: Sophie Blee-Goldman

[https://builds.apache.org/job/kafka-pr-jdk11-scala2.13/6280/testReport/junit/org.apache.kafka.streams.integration/KTableSourceTopicRestartIntegrationTest/shouldRestoreAndProgressWhenTopicWrittenToDuringRestorationWithEosAlphaEnabled/]

h3. Stacktrace

java.lang.AssertionError: Condition not met within timeout 3. Table did not read all values
	at org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:26)
	at org.apache.kafka.test.TestUtils.lambda$waitForCondition$5(TestUtils.java:381)
	at org.apache.kafka.test.TestUtils.retryOnExceptionWithTimeout(TestUtils.java:429)
	at org.apache.kafka.test.TestUtils.retryOnExceptionWithTimeout(TestUtils.java:397)
	at org.apache.kafka.test.TestUtils.waitForCondition(TestUtils.java:378)
	at org.apache.kafka.test.TestUtils.waitForCondition(TestUtils.java:368)
	at org.apache.kafka.streams.integration.KTableSourceTopicRestartIntegrationTest.assertNumberValuesRead(KTableSourceTopicRestartIntegrationTest.java:205)
	at org.apache.kafka.streams.integration.KTableSourceTopicRestartIntegrationTest.shouldRestoreAndProgressWhenTopicWrittenToDuringRestorationWithEosEnabled(KTableSourceTopicRestartIntegrationTest.java:159)
	at org.apache.kafka.streams.integration.KTableSourceTopicRestartIntegrationTest.shouldRestoreAndProgressWhenTopicWrittenToDuringRestorationWithEosAlphaEnabled(KTableSourceTopicRestartIntegrationTest.java:143)

--
This message was sent by Atlassian Jira
(v8.3.4#803005)

[jira] [Resolved] (KAFKA-9688) kafka-topic.sh should show KIP-455 adding and removing replicas

[ https://issues.apache.org/jira/browse/KAFKA-9688?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Colin McCabe resolved KAFKA-9688.
-
    Fix Version/s: 2.5.0
         Reviewer: Colin McCabe
       Resolution: Fixed

> kafka-topic.sh should show KIP-455 adding and removing replicas
> ---
>
>                 Key: KAFKA-9688
>                 URL: https://issues.apache.org/jira/browse/KAFKA-9688
>             Project: Kafka
>          Issue Type: Improvement
>          Components: tools
>            Reporter: Colin McCabe
>            Assignee: Cheng Tan
>            Priority: Major
>             Fix For: 2.5.0
>
>
> kafka-topic.sh should show KIP-455 adding and removing replicas, as described in KIP-455.

[GitHub] [kafka] vvcephei commented on a change in pull request #8588: KAFKA-6145: KIP-441: Improve assignment balance

vvcephei commented on a change in pull request #8588:

URL: https://github.com/apache/kafka/pull/8588#discussion_r424822166

##

File path:

streams/src/test/java/org/apache/kafka/streams/processor/internals/assignment/TaskAssignorConvergenceTest.java

##

@@ -236,16 +233,17 @@ public void

staticAssignmentShouldConvergeWithTheFirstAssignment() {

0,

1000L);

-final Harness harness = Harness.initializeCluster(1, 1, 1);

+final Harness harness = Harness.initializeCluster(1, 1, 1, () -> 1);

testForConvergence(harness, configs, 1);

verifyValidAssignment(0, harness);

+verifyBalancedAssignment(harness);

}

@Test

public void assignmentShouldConvergeAfterAddingNode() {

-final int numStatelessTasks = 15;

-final int numStatefulTasks = 13;

+final int numStatelessTasks = 7;

Review comment:

Right, but I think there's a "3" or a "5" in there somewhere, maybe in

the other test. Anyway, my _intent_ was to make them all prime so I wouldn't

have to think too hard about whether they were all coprime. But, in reality, I

managed to screw up both.
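
For what it's worth, the "all prime" shortcut works because distinct primes are always pairwise coprime, whereas coprime numbers need not be prime (the old values 15 and 13 share no factor, but 15 = 3 * 5 would clash with a 3 or a 5 elsewhere). A minimal sketch for checking a set of test parameters; `CoprimeSketch` and its method names are hypothetical helpers, not part of `TaskAssignorConvergenceTest`:

```java
// Hypothetical helper for sanity-checking test parameters; not part of the PR.
final class CoprimeSketch {

    // Euclid's algorithm for the greatest common divisor of two non-negative ints.
    static int gcd(int a, int b) {
        while (b != 0) {
            final int remainder = a % b;
            a = b;
            b = remainder;
        }
        return a;
    }

    // True when no two of the given values share a common factor greater than 1.
    static boolean pairwiseCoprime(final int... values) {
        for (int i = 0; i < values.length; i++) {
            for (int j = i + 1; j < values.length; j++) {
                if (gcd(values[i], values[j]) != 1) {
                    return false;
                }
            }
        }
        return true;
    }
}
```

For example, `pairwiseCoprime(15, 13)` holds, but adding a 5 to the list breaks it.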


[GitHub] [kafka] vvcephei commented on a change in pull request #8588: KAFKA-6145: KIP-441: Improve assignment balance

vvcephei commented on a change in pull request #8588:

URL: https://github.com/apache/kafka/pull/8588#discussion_r424821641

##

File path:

streams/src/test/java/org/apache/kafka/streams/processor/internals/assignment/TaskMovementTest.java

##

@@ -35,262 +44,161 @@

import static

org.apache.kafka.streams.processor.internals.assignment.AssignmentTestUtils.UUID_2;

import static

org.apache.kafka.streams.processor.internals.assignment.AssignmentTestUtils.UUID_3;

import static

org.apache.kafka.streams.processor.internals.assignment.AssignmentTestUtils.getClientStatesMap;

+import static

org.apache.kafka.streams.processor.internals.assignment.AssignmentTestUtils.hasProperty;

import static

org.apache.kafka.streams.processor.internals.assignment.TaskMovement.assignTaskMovements;

import static org.hamcrest.MatcherAssert.assertThat;

-import static org.hamcrest.Matchers.equalTo;

-import static org.junit.Assert.assertTrue;

-import static org.junit.Assert.assertFalse;

-

-import java.util.HashMap;

-import java.util.HashSet;

-import java.util.List;

-import java.util.Map;

-import java.util.Set;

-import java.util.SortedSet;

-import java.util.UUID;

-import java.util.stream.Collectors;

-import org.apache.kafka.streams.processor.TaskId;

-import org.junit.Test;

+import static org.hamcrest.Matchers.is;

public class TaskMovementTest {

-private final ClientState client1 = new ClientState(1);

-private final ClientState client2 = new ClientState(1);

-private final ClientState client3 = new ClientState(1);

-

-private final Map clientStates =

getClientStatesMap(client1, client2, client3);

-

-private final Map> emptyWarmupAssignment = mkMap(

-mkEntry(UUID_1, EMPTY_TASK_LIST),

-mkEntry(UUID_2, EMPTY_TASK_LIST),

-mkEntry(UUID_3, EMPTY_TASK_LIST)

-);

-

@Test

public void

shouldAssignTasksToClientsAndReturnFalseWhenAllClientsCaughtUp() {

final int maxWarmupReplicas = Integer.MAX_VALUE;

final Set allTasks = mkSet(TASK_0_0, TASK_0_1, TASK_0_2,

TASK_1_0, TASK_1_1, TASK_1_2);

-final Map> balancedAssignment = mkMap(

-mkEntry(UUID_1, asList(TASK_0_0, TASK_1_0)),

-mkEntry(UUID_2, asList(TASK_0_1, TASK_1_1)),

-mkEntry(UUID_3, asList(TASK_0_2, TASK_1_2))

-);

-

final Map> tasksToCaughtUpClients = new

HashMap<>();

for (final TaskId task : allTasks) {

tasksToCaughtUpClients.put(task, mkSortedSet(UUID_1, UUID_2,

UUID_3));

}

-

-assertFalse(

+

+final ClientState client1 =

getClientStateWithActiveAssignment(asList(TASK_0_0, TASK_1_0));

+final ClientState client2 =

getClientStateWithActiveAssignment(asList(TASK_0_1, TASK_1_1));

+final ClientState client3 =

getClientStateWithActiveAssignment(asList(TASK_0_2, TASK_1_2));

+

+assertThat(

assignTaskMovements(

-balancedAssignment,

tasksToCaughtUpClients,

-clientStates,

-getMapWithNumStandbys(allTasks, 1),

-maxWarmupReplicas)

+getClientStatesMap(client1, client2, client3),

+maxWarmupReplicas),

+is(false)

);

-

-verifyClientStateAssignments(balancedAssignment,

emptyWarmupAssignment);

}

@Test

public void

shouldAssignAllTasksToClientsAndReturnFalseIfNoClientsAreCaughtUp() {

-final int maxWarmupReplicas = 2;

-final Set allTasks = mkSet(TASK_0_0, TASK_0_1, TASK_0_2,

TASK_1_0, TASK_1_1, TASK_1_2);

+final int maxWarmupReplicas = Integer.MAX_VALUE;

-final Map> balancedAssignment = mkMap(

-mkEntry(UUID_1, asList(TASK_0_0, TASK_1_0)),

-mkEntry(UUID_2, asList(TASK_0_1, TASK_1_1)),

-mkEntry(UUID_3, asList(TASK_0_2, TASK_1_2))

-);

+final ClientState client1 =

getClientStateWithActiveAssignment(asList(TASK_0_0, TASK_1_0));

+final ClientState client2 =

getClientStateWithActiveAssignment(asList(TASK_0_1, TASK_1_1));

+final ClientState client3 =

getClientStateWithActiveAssignment(asList(TASK_0_2, TASK_1_2));

-assertFalse(

+assertThat(

assignTaskMovements(

-balancedAssignment,

emptyMap(),

-clientStates,

-getMapWithNumStandbys(allTasks, 1),

-maxWarmupReplicas)

+getClientStatesMap(client1, client2, client3),

+maxWarmupReplicas),

+is(false)

);

-verifyClientStateAssignments(balancedAssignment,

emptyWarmupAssignment);

}

@Test

public void

shouldMoveTasksToCaughtUpClientsAndAssignWarmupReplicasInTheirPlace() {

final int maxWarmupReplicas = Integer.MAX_VALUE;

-final Set allTasks = mkSet(TASK_0_0, TASK_0_1, TASK_0_2);

+final ClientState client1 =

getClientStateWithActiveAssignment(singletonList(TASK_0_

[GitHub] [kafka] vvcephei commented on a change in pull request #8588: KAFKA-6145: KIP-441: Improve assignment balance

vvcephei commented on a change in pull request #8588:

URL: https://github.com/apache/kafka/pull/8588#discussion_r424820836

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/assignment/TaskMovement.java

##

@@ -53,75 +67,94 @@ private static boolean

taskIsCaughtUpOnClientOrNoCaughtUpClientsExist(final Task

/**

* @return whether any warmup replicas were assigned

*/

-static boolean assignTaskMovements(final Map>

statefulActiveTaskAssignment,

- final Map>

tasksToCaughtUpClients,

+static boolean assignTaskMovements(final Map>

tasksToCaughtUpClients,

final Map

clientStates,

- final Map

tasksToRemainingStandbys,

final int maxWarmupReplicas) {

-boolean warmupReplicasAssigned = false;

+final BiFunction caughtUpPredicate =

+(client, task) ->

taskIsCaughtUpOnClientOrNoCaughtUpClientsExist(task, client,

tasksToCaughtUpClients);

-final ValidClientsByTaskLoadQueue clientsByTaskLoad = new

ValidClientsByTaskLoadQueue(

-clientStates,

-(client, task) ->

taskIsCaughtUpOnClientOrNoCaughtUpClientsExist(task, client,

tasksToCaughtUpClients)

+final ConstrainedPrioritySet clientsByTaskLoad = new

ConstrainedPrioritySet(

+caughtUpPredicate,

+client -> clientStates.get(client).taskLoad()

);

-final SortedSet taskMovements = new TreeSet<>(

-(movement, other) -> {

-final int numCaughtUpClients = movement.caughtUpClients.size();

-final int otherNumCaughtUpClients =

other.caughtUpClients.size();

-if (numCaughtUpClients != otherNumCaughtUpClients) {

-return Integer.compare(numCaughtUpClients,

otherNumCaughtUpClients);

-} else {

-return movement.task.compareTo(other.task);

-}

-}

+final Queue taskMovements = new PriorityQueue<>(

+

Comparator.comparing(TaskMovement::numCaughtUpClients).thenComparing(TaskMovement::task)

);

-for (final Map.Entry> assignmentEntry :

statefulActiveTaskAssignment.entrySet()) {

-final UUID client = assignmentEntry.getKey();

-final ClientState state = clientStates.get(client);

-for (final TaskId task : assignmentEntry.getValue()) {

-if (taskIsCaughtUpOnClientOrNoCaughtUpClientsExist(task,

client, tasksToCaughtUpClients)) {

-state.assignActive(task);

-} else {

-final TaskMovement taskMovement = new TaskMovement(task,

client, tasksToCaughtUpClients.get(task));

-taskMovements.add(taskMovement);

+for (final Map.Entry clientStateEntry :

clientStates.entrySet()) {

+final UUID client = clientStateEntry.getKey();

+final ClientState state = clientStateEntry.getValue();

+for (final TaskId task : state.activeTasks()) {

+// if the desired client is not caught up, and there is

another client that _is_ caught up, then

+// we schedule a movement, so we can move the active task to

the caught-up client. We'll try to

+// assign a warm-up to the desired client so that we can move

it later on.

+if (!taskIsCaughtUpOnClientOrNoCaughtUpClientsExist(task,

client, tasksToCaughtUpClients)) {

+taskMovements.add(new TaskMovement(task, client,

tasksToCaughtUpClients.get(task)));

}

}

clientsByTaskLoad.offer(client);

}

+final boolean movementsNeeded = !taskMovements.isEmpty();

+

final AtomicInteger remainingWarmupReplicas = new

AtomicInteger(maxWarmupReplicas);

for (final TaskMovement movement : taskMovements) {

-final UUID sourceClient = clientsByTaskLoad.poll(movement.task);

-if (sourceClient == null) {

-throw new IllegalStateException("Tried to move task to

caught-up client but none exist");

-}

-

-final ClientState sourceClientState =

clientStates.get(sourceClient);

-sourceClientState.assignActive(movement.task);

-clientsByTaskLoad.offer(sourceClient);

+final UUID standbySourceClient = clientsByTaskLoad.poll(

Review comment:

Agreed, it would just be good luck right now, but I figured we might as

well capitalize on the luck. I'm planning to follow up pretty soon with the

standby stickiness.


[GitHub] [kafka] vvcephei commented on a change in pull request #8588: KAFKA-6145: KIP-441: Improve assignment balance

vvcephei commented on a change in pull request #8588:

URL: https://github.com/apache/kafka/pull/8588#discussion_r424819838

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/assignment/TaskMovement.java

##

@@ -53,75 +67,94 @@ private static boolean

taskIsCaughtUpOnClientOrNoCaughtUpClientsExist(final Task

/**

* @return whether any warmup replicas were assigned

*/

-static boolean assignTaskMovements(final Map>

statefulActiveTaskAssignment,

- final Map>

tasksToCaughtUpClients,

+static boolean assignTaskMovements(final Map>

tasksToCaughtUpClients,

final Map

clientStates,

- final Map

tasksToRemainingStandbys,

final int maxWarmupReplicas) {

-boolean warmupReplicasAssigned = false;

+final BiFunction caughtUpPredicate =

+(client, task) ->

taskIsCaughtUpOnClientOrNoCaughtUpClientsExist(task, client,

tasksToCaughtUpClients);

-final ValidClientsByTaskLoadQueue clientsByTaskLoad = new

ValidClientsByTaskLoadQueue(

-clientStates,

-(client, task) ->

taskIsCaughtUpOnClientOrNoCaughtUpClientsExist(task, client,

tasksToCaughtUpClients)

+final ConstrainedPrioritySet clientsByTaskLoad = new

ConstrainedPrioritySet(

Review comment:

sure, that's a good idea.


[GitHub] [kafka] ableegoldman commented on pull request #8662: HOTFIX: skip listOffsets request for newly created changelog topics

ableegoldman commented on pull request #8662:
URL: https://github.com/apache/kafka/pull/8662#issuecomment-628329507

Nooo `EosBetaUpgradeIntegrationTest.shouldUpgradeFromEosAlphaToEosBeta[true]` still had one failure on the Java 8 build 😭 But it failed on a different line which seems more in line with real flakiness. FWIW I ran this locally 40 times without failures (technically 80 in total for both true/false variations) ... I think it's worth still merging this PR and we can continue investigating it from there.

```
java.lang.AssertionError: Did not receive all 20 records from topic multiPartitionOutputTopic within 6 ms
Expected: is a value equal to or greater than <20>
     but: <5> was less than <20>
	at org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:20)
	at org.apache.kafka.streams.integration.utils.IntegrationTestUtils.lambda$waitUntilMinKeyValueRecordsReceived$1(IntegrationTestUtils.java:563)
	at org.apache.kafka.test.TestUtils.retryOnExceptionWithTimeout(TestUtils.java:429)
	at org.apache.kafka.test.TestUtils.retryOnExceptionWithTimeout(TestUtils.java:397)
	at org.apache.kafka.streams.integration.utils.IntegrationTestUtils.waitUntilMinKeyValueRecordsReceived(IntegrationTestUtils.java:559)
	at org.apache.kafka.streams.integration.utils.IntegrationTestUtils.waitUntilMinKeyValueRecordsReceived(IntegrationTestUtils.java:530)
	at org.apache.kafka.streams.integration.EosBetaUpgradeIntegrationTest.readResult(EosBetaUpgradeIntegrationTest.java:973)
	at org.apache.kafka.streams.integration.EosBetaUpgradeIntegrationTest.verifyCommitted(EosBetaUpgradeIntegrationTest.java:961)
	at org.apache.kafka.streams.integration.EosBetaUpgradeIntegrationTest.shouldUpgradeFromEosAlphaToEosBeta(EosBetaUpgradeIntegrationTest.java:427)
```

[GitHub] [kafka] mjsax commented on pull request #6792: KAFKA 8311 : Augment the error message to let user change `default.api.timeout.ms`

mjsax commented on pull request #6792: URL: https://github.com/apache/kafka/pull/6792#issuecomment-628323776 @clearpal7 @abbccdda Just cleaning up my old backlog. Do you still want to get this in? PR would need a rebase.

[GitHub] [kafka] mjsax commented on pull request #6617: MINOR: Allow scripts to be symlinked

mjsax commented on pull request #6617: URL: https://github.com/apache/kafka/pull/6617#issuecomment-628323063 Closing this PR due to inactivity.

[GitHub] [kafka] mjsax closed pull request #6617: MINOR: Allow scripts to be symlinked

mjsax closed pull request #6617: URL: https://github.com/apache/kafka/pull/6617

[GitHub] [kafka] mjsax merged pull request #6656: MINOR: Add option to rebuild source for system tests

mjsax merged pull request #6656: URL: https://github.com/apache/kafka/pull/6656

[jira] [Updated] (KAFKA-9987) Improve sticky partition assignor algorithm

[ https://issues.apache.org/jira/browse/KAFKA-9987?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Sophie Blee-Goldman updated KAFKA-9987:
---
    Description:
In [KIP-429|https://cwiki.apache.org/confluence/display/KAFKA/KIP-429%3A+Kafka+Consumer+Incremental+Rebalance+Protocol] we added the new CooperativeStickyAssignor which leverages on the underlying sticky assignment algorithm of the existing StickyAssignor (moved to AbstractStickyAssignor). The algorithm is fairly complex as it tries to optimize stickiness while satisfying perfect balance _in the case individual consumers may be subscribed to different subsets of the topics._ While it does a pretty good job at what it promises to do, it doesn't scale well with large numbers of consumers and partitions.

To give a concrete example, users have reported that it takes 2.5 minutes for the assignment to complete with just 2100 consumers reading from 2100 partitions. Since partitions revoked during the first of two cooperative rebalances will remain unassigned until the end of the second rebalance, it's important for the rebalance to be as fast as possible. And since one of the primary improvements of the cooperative rebalancing protocol is better scaling experience, the only OOTB cooperative assignor should not itself scale poorly

If we can constrain the problem a bit, we can simplify the algorithm greatly. In many cases the individual consumers won't be subscribed to some random subset of the total subscription, they will all be subscribed to the same set of topics and rely on the assignor to balance the partition workload.

We can detect this case by checking the group's individual subscriptions and call on a more efficient assignment algorithm.

  was:
In KIP-429 we added the new CooperativeStickyAssignor which leverages on the underlying sticky assignment algorithm of the existing StickyAssignor (moved to AbstractStickyAssignor).

The algorithm is fairly complex as it tries to optimize stickiness while satisfying perfect balance _in the case individual consumers may be subscribed to a random subset of the topics._ While it does a pretty good job at what it promises to do, it doesn't scale well with large numbers of consumers and partitions.

If we can make the assumption that all consumers are subscribed to the same set of topics, we can simplify the algorithm greatly and do a sticky-but-balanced assignment in a single pass. It would be nice to have an additional cooperative assignor OOTB that performs efficiently for users who know their group will satisfy this constraint.

> Improve sticky partition assignor algorithm
> ---
>
>                 Key: KAFKA-9987
>                 URL: https://issues.apache.org/jira/browse/KAFKA-9987
>             Project: Kafka
>          Issue Type: Improvement
>          Components: clients
>            Reporter: Sophie Blee-Goldman
>            Assignee: Sophie Blee-Goldman
>            Priority: Major
>
> In [KIP-429|https://cwiki.apache.org/confluence/display/KAFKA/KIP-429%3A+Kafka+Consumer+Incremental+Rebalance+Protocol] we added the new CooperativeStickyAssignor which leverages on the underlying sticky assignment algorithm of the existing StickyAssignor (moved to AbstractStickyAssignor). The algorithm is fairly complex as it tries to optimize stickiness while satisfying perfect balance _in the case individual consumers may be subscribed to different subsets of the topics._ While it does a pretty good job at what it promises to do, it doesn't scale well with large numbers of consumers and partitions.
> To give a concrete example, users have reported that it takes 2.5 minutes for the assignment to complete with just 2100 consumers reading from 2100 partitions. Since partitions revoked during the first of two cooperative rebalances will remain unassigned until the end of the second rebalance, it's important for the rebalance to be as fast as possible. And since one of the primary improvements of the cooperative rebalancing protocol is better scaling experience, the only OOTB cooperative assignor should not itself scale poorly
> If we can constrain the problem a bit, we can simplify the algorithm greatly. In many cases the individual consumers won't be subscribed to some random subset of the total subscription, they will all be subscribed to the same set of topics and rely on the assignor to balance the partition workload.
> We can detect this case by checking the group's individual subscriptions and call on a more efficient assignment algorithm.
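
The detection step described in the ticket (checking whether every consumer in the group has the same subscription) could look roughly like the sketch below. This is only an assumption about the shape of such a check, not the code in `AbstractStickyAssignor`; the class `SubscriptionCheckSketch`, the method name, and the plain map from consumer id to topic names are all hypothetical.

```java
import java.util.Collection;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch; not taken from the Kafka code base.
final class SubscriptionCheckSketch {

    // Returns true when every consumer subscribes to exactly the same set of topics.
    // Topic order and duplicates within one subscription are ignored.
    static boolean allSubscriptionsEqual(final Map<String, ? extends Collection<String>> subscriptions) {
        Set<String> first = null;
        for (final Collection<String> topics : subscriptions.values()) {
            final Set<String> asSet = new HashSet<>(topics);
            if (first == null) {
                first = asSet;
            } else if (!first.equals(asSet)) {
                return false;
            }
        }
        return true;
    }
}
```

A caller could then branch on the result: take the simpler, faster assignment path when it returns true, and fall back to the general algorithm otherwise. The check itself is a single pass over the group's subscriptions.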

[jira] [Updated] (KAFKA-9987) Improve sticky partition assignor algorithm

[ https://issues.apache.org/jira/browse/KAFKA-9987?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Sophie Blee-Goldman updated KAFKA-9987:
---
    Summary: Improve sticky partition assignor algorithm  (was: Add new cooperative assignor optimized for constant-subscription group)

> Improve sticky partition assignor algorithm
> ---
>
>                 Key: KAFKA-9987
>                 URL: https://issues.apache.org/jira/browse/KAFKA-9987
>             Project: Kafka
>          Issue Type: Improvement
>          Components: clients
>            Reporter: Sophie Blee-Goldman
>            Assignee: Sophie Blee-Goldman
>            Priority: Major
>
> In KIP-429 we added the new CooperativeStickyAssignor which leverages on the underlying sticky assignment algorithm of the existing StickyAssignor (moved to AbstractStickyAssignor).
>
> The algorithm is fairly complex as it tries to optimize stickiness while satisfying perfect balance _in the case individual consumers may be subscribed to a random subset of the topics._ While it does a pretty good job at what it promises to do, it doesn't scale well with large numbers of consumers and partitions.
>
> If we can make the assumption that all consumers are subscribed to the same set of topics, we can simplify the algorithm greatly and do a sticky-but-balanced assignment in a single pass. It would be nice to have an additional cooperative assignor OOTB that performs efficiently for users who know their group will satisfy this constraint.

[jira] [Resolved] (KAFKA-9850) Move KStream#repartition operator validation during Topology build process

[ https://issues.apache.org/jira/browse/KAFKA-9850?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Matthias J. Sax resolved KAFKA-9850.
    Fix Version/s: 2.6.0
       Resolution: Fixed

> Move KStream#repartition operator validation during Topology build process
> ---
>
>                 Key: KAFKA-9850
>                 URL: https://issues.apache.org/jira/browse/KAFKA-9850
>             Project: Kafka
>          Issue Type: Improvement
>          Components: streams
>            Reporter: Levani Kokhreidze
>            Assignee: HaiyuanZhao
>            Priority: Major
>              Labels: help-wanted, newbie, newbie++
>             Fix For: 2.6.0
>
>
> `KStream#repartition` operation performs most of its validation regarding joining, co-partitioning, etc after starting Kafka Streams instance. Some parts of this validation can be detected much earlier, specifically during topology `build()`.

[GitHub] [kafka] mjsax merged pull request #8550: KAFKA-9850 Move KStream#repartition operator validation during Topolo…

mjsax merged pull request #8550: URL: https://github.com/apache/kafka/pull/8550

[GitHub] [kafka] mjsax commented on pull request #8550: KAFKA-9850 Move KStream#repartition operator validation during Topolo…

mjsax commented on pull request #8550: URL: https://github.com/apache/kafka/pull/8550#issuecomment-628317830 Thanks for the PR @zhaohaidao! Merged to `trunk`.

[jira] [Resolved] (KAFKA-9465) Enclose consumer call with catching InvalidOffsetException

[ https://issues.apache.org/jira/browse/KAFKA-9465?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Matthias J. Sax resolved KAFKA-9465.
Resolution: Not A Problem

This was fixed implicitly via some code refactoring.

> Enclose consumer call with catching InvalidOffsetException
> --
>
> Key: KAFKA-9465
> URL: https://issues.apache.org/jira/browse/KAFKA-9465
> Project: Kafka
> Issue Type: Improvement
> Reporter: Ted Yu
> Assignee: Ted Yu
> Priority: Minor
>
> In maybeUpdateStandbyTasks, the try block encloses restoreConsumer.poll and
> record handling.
> Since InvalidOffsetException is thrown by restoreConsumer.poll, we should
> enclose this call in the try block.
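
One reading of the KAFKA-9465 description is that only the poll call should sit inside the try block, with record handling kept outside it. The following is a minimal sketch of that shape, not the Kafka Streams code: `NarrowTrySketch`, `InvalidOffsetSketchException`, and `pollAndHandle` are hypothetical stand-ins, and the poll is modelled as a plain `Supplier` so the example has no Kafka dependency.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical stand-ins only: none of these types are Kafka classes.
final class NarrowTrySketch {

    /** Stand-in for the exception that a restore consumer's poll may throw. */
    static final class InvalidOffsetSketchException extends RuntimeException {
        InvalidOffsetSketchException(String message) {
            super(message);
        }
    }

    /**
     * Polls once and hands the records to the sink. Only the poll is inside
     * the try block, so an exception raised while handling records is not
     * caught here and cannot be mistaken for an invalid-offset condition.
     *
     * @return the number of records handled, or -1 if the poll reported invalid offsets
     */
    static int pollAndHandle(Supplier<List<String>> poll, List<String> sink) {
        List<String> records;
        try {
            records = poll.get();
        } catch (InvalidOffsetSketchException e) {
            // Recovery (for example, re-initializing the affected task) would go here.
            return -1;
        }
        // Record handling happens outside the try block.
        sink.addAll(records);
        return records.size();
    }

    public static void main(String[] args) {
        List<String> sink = new ArrayList<>();
        System.out.println(pollAndHandle(() -> Arrays.asList("a", "b"), sink));
        System.out.println(pollAndHandle(() -> {
            throw new InvalidOffsetSketchException("offset out of range");
        }, sink));
    }
}
```

The -1 return value is purely an illustration device; how the real code recovers is not described in the ticket.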

[GitHub] [kafka] mjsax closed pull request #8001: KAFKA-9465: Enclose consumer call with catching InvalidOffsetException

mjsax closed pull request #8001: URL: https://github.com/apache/kafka/pull/8001

[GitHub] [kafka] mjsax commented on pull request #8001: KAFKA-9465: Enclose consumer call with catching InvalidOffsetException

mjsax commented on pull request #8001: URL: https://github.com/apache/kafka/pull/8001#issuecomment-628316756 Thanks @guozhangwang. I resolved the Jira ticket. Closing this PR.

[GitHub] [kafka] mjsax commented on a change in pull request #8049: MINOR: Added missing default serdes to the streams.scala.Serdes

mjsax commented on a change in pull request #8049:

URL: https://github.com/apache/kafka/pull/8049#discussion_r424805332

##

File path:

streams/streams-scala/src/main/scala/org/apache/kafka/streams/scala/Serdes.scala

##

@@ -30,12 +32,15 @@ object Serdes {
   implicit def JavaLong: Serde[java.lang.Long] = JSerdes.Long()
   implicit def ByteArray: Serde[Array[Byte]] = JSerdes.ByteArray()
   implicit def Bytes: Serde[org.apache.kafka.common.utils.Bytes] = JSerdes.Bytes()
+  implicit def byteBufferSerde: Serde[ByteBuffer] = JSerdes.ByteBuffer()
+  implicit def shortSerde: Serde[Short] = JSerdes.Short().asInstanceOf[Serde[Short]]
   implicit def Float: Serde[Float] = JSerdes.Float().asInstanceOf[Serde[Float]]
   implicit def JavaFloat: Serde[java.lang.Float] = JSerdes.Float()
   implicit def Double: Serde[Double] = JSerdes.Double().asInstanceOf[Serde[Double]]
   implicit def JavaDouble: Serde[java.lang.Double] = JSerdes.Double()
   implicit def Integer: Serde[Int] = JSerdes.Integer().asInstanceOf[Serde[Int]]
   implicit def JavaInteger: Serde[java.lang.Integer] = JSerdes.Integer()
+  implicit def uuidSerde: Serde[UUID] = JSerdes.UUID()

Review comment:

I am fine with postponing this PR. \cc @vvcephei

[GitHub] [kafka] mjsax commented on pull request #8637: KAFKA-9976: Reuse repartition node in all cases for KGroupedStream and KGroupedTable aggregates

mjsax commented on pull request #8637: URL: https://github.com/apache/kafka/pull/8637#issuecomment-628315503 @bbejeck I'm wondering whether there are any backward-compatibility concerns. Can you explain why this change is safe? For #8504, the old code threw an exception, so it was already broken and no compatibility concern arises. I'm not 100% sure about this PR, though.

[GitHub] [kafka] mjsax commented on pull request #8558: KAFKA-8611 / KIP-221 documentation

mjsax commented on pull request #8558: URL: https://github.com/apache/kafka/pull/8558#issuecomment-628314584 @lkokhreidze This PR shows a conflict. Can you rebase it? Btw: did you see the follow-up question on the mailing list?

[GitHub] [kafka] soondenana edited a comment on pull request #8068: MINOR: Update to Gradle 4.10.3

soondenana edited a comment on pull request #8068: URL: https://github.com/apache/kafka/pull/8068#issuecomment-628308978 Closing the PR as this doesn't fix the issue.

[GitHub] [kafka] soondenana commented on pull request #8068: MINOR: Update to Gradle 4.10.3

soondenana commented on pull request #8068: URL: https://github.com/apache/kafka/pull/8068#issuecomment-628308978 The issue has now been fixed by the release team by adding JVM flags. This PR is no longer needed. Closing.

[GitHub] [kafka] soondenana closed pull request #8068: MINOR: Update to Gradle 4.10.3

soondenana closed pull request #8068: URL: https://github.com/apache/kafka/pull/8068

[jira] [Commented] (KAFKA-9989) StreamsUpgradeTest.test_metadata_upgrade could not guarantee all processor gets assigned task

[ https://issues.apache.org/jira/browse/KAFKA-9989?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17106744#comment-17106744 ]

Sophie Blee-Goldman commented on KAFKA-9989:

Yeah it would be good to dive into why this is happening – are the partitions missing because of a cooperative rebalance and we need to wait for one more rebalance to get the assignment? Or did the admin client's listOffsets request fail causing us to fall back on the PriorTaskAssignor?

> StreamsUpgradeTest.test_metadata_upgrade could not guarantee all processor
> gets assigned task
> -
>
> Key: KAFKA-9989
> URL: https://issues.apache.org/jira/browse/KAFKA-9989
> Project: Kafka
> Issue Type: Bug
> Components: streams, system tests
> Reporter: Boyang Chen
> Assignee: Boyang Chen
> Priority: Major
>
> System test StreamsUpgradeTest.test_metadata_upgrade could fail due to:
> "Never saw output 'processed [0-9]* records' on ubuntu@worker6"
> which if we take a closer look at, the rebalance happens but has no task
> assignment. We should fix this problem by making the rebalance result as part
> of the check, and skip the record processing validation when the assignment
> is empty.
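
The fix proposed in the KAFKA-9989 description (make the rebalance result part of the check, and skip the record-processing validation when the assignment is empty) can be sketched roughly as below. This is only an illustration under stated assumptions: the real system test is not written in Java, and `UpgradeCheckSketch`, `shouldPass`, and the task-id strings are hypothetical names. Only the pattern `processed [0-9]* records` comes from the ticket.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical sketch; not the actual StreamsUpgradeTest logic.
final class UpgradeCheckSketch {

    // The output pattern quoted in the ticket.
    private static final Pattern PROCESSED = Pattern.compile("processed [0-9]* records");

    /**
     * Decides whether a processor passes the check.
     *
     * @param assignedTasks task ids the processor received in the rebalance (assumed input)
     * @param outputLines   lines captured from the processor's output (assumed input)
     */
    static boolean shouldPass(List<String> assignedTasks, List<String> outputLines) {
        if (assignedTasks.isEmpty()) {
            // Empty assignment: no records can be processed, so skip the validation.
            return true;
        }
        for (String line : outputLines) {
            if (PROCESSED.matcher(line).find()) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(shouldPass(Collections.<String>emptyList(), Collections.<String>emptyList()));
        System.out.println(shouldPass(Arrays.asList("0_0"), Arrays.asList("processed 100 records")));
        System.out.println(shouldPass(Arrays.asList("0_0"), Arrays.asList("rebalance finished")));
    }
}
```

Whether an empty assignment should pass outright or trigger a wait for another rebalance is exactly the open question raised in the comment above; the sketch takes the simpler option.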

[GitHub] [kafka] guozhangwang commented on a change in pull request #8661: KAFKA-9603: Do not turn on bulk loading for segmented stores on stand-by tasks

guozhangwang commented on a change in pull request #8661:

URL: https://github.com/apache/kafka/pull/8661#discussion_r424796625

##

File path:

streams/src/main/java/org/apache/kafka/streams/state/internals/AbstractRocksDBSegmentedBytesStore.java

##

@@ -248,17 +243,6 @@ void restoreAllInternal(final Collection<KeyValue<byte[], byte[]>> records) {
                 final long segmentId = segments.segmentId(timestamp);
                 final S segment = segments.getOrCreateSegmentIfLive(segmentId, context, observedStreamTime);
                 if (segment != null) {
-                    // This handles the case that state store is moved to a new client and does not
-                    // have the local RocksDB instance for the segment. In this case, toggleDBForBulkLoading
-                    // will only close the database and open it again with bulk loading enabled.
-                    if (!bulkLoadSegments.contains(segment)) {
-                        segment.toggleDbForBulkLoading(true);
-                        // If the store does not exist yet, the getOrCreateSegmentIfLive will call openDB that
-                        // makes the open flag for the newly created store.
-                        // if the store does exist already, then toggleDbForBulkLoading will make sure that
-                        // the store is already open here.
-                        bulkLoadSegments = new HashSet<>(segments.allSegments());
-                    }

Review comment:

Yup, that makes sense to me. I'm thinking about a world where standbys (and also restoring tasks) are executed on different threads. The concerns about IQ are indeed valid, given a large set of un-compacted L0 files.

In the even larger scope, where we would have checkpoints, I believe bulk loading would not be very necessary, since we would no longer have a huge number of records to catch up on :)

[GitHub] [kafka] rgroothuijsen opened a new pull request #8664: KAFKA-9716: Clarify meaning of compression rate metrics