[jira] [Resolved] (KAFKA-10453) Backport of PR-7781

[ https://issues.apache.org/jira/browse/KAFKA-10453?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Niketh Sabbineni resolved KAFKA-10453. -- Resolution: Workaround > Backport of PR-7781 > --- > > Key: KAFKA-10453 > URL: https://issues.apache.org/jira/browse/KAFKA-10453 > Project: Kafka > Issue Type: Wish > Components: clients >Affects Versions: 1.1.1 >Reporter: Niketh Sabbineni >Priority: Major > > We have been hitting this bug (with kafka 1.1.1) where the Producer takes > forever to load metadata. The issue seems to have been patched in master > [here|[https://github.com/apache/kafka/pull/7781]]. > Would you *recommend* a backport of that above change to 1.1? There are 7-8 > changes that need to be cherry picked. The other option is to upgrade to 2.5 > (which would be much more involved) -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Assigned] (KAFKA-10072) Kafkaconsumer is configured with different clientid parameters to obtain different results

[

https://issues.apache.org/jira/browse/KAFKA-10072?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Ankit Kumar reassigned KAFKA-10072:

---

Assignee: Ankit Kumar

> Kafkaconsumer is configured with different clientid parameters to obtain

> different results

> --

>

> Key: KAFKA-10072

> URL: https://issues.apache.org/jira/browse/KAFKA-10072

> Project: Kafka

> Issue Type: Bug

> Components: core

>Affects Versions: 2.4.0

> Environment: centos7.6 8C 32G

>Reporter: victor

>Assignee: Ankit Kumar

>Priority: Blocker

>

> kafka-console-consumer.sh --bootstrap-server host1:port --consumer-property

> {color:#DE350B}client.id=aa{color} --from-beginning --topic topicA

> {color:#DE350B}There's no data

> {color}

> kafka-console-consumer.sh --bootstrap-server host1:port --consumer-property

> {color:#DE350B}clientid=bb{color} --from-beginning --topic topicA

> {color:#DE350B}Successfully consume data{color}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (KAFKA-10134) High CPU issue during rebalance in Kafka consumer after upgrading to 2.5

[ https://issues.apache.org/jira/browse/KAFKA-10134?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188116#comment-17188116 ] Guozhang Wang commented on KAFKA-10134: --- Hello [~zhowei] that broker-side change is not mandatory, I just included that part to make the whole PR complete, but it is not a necessary change for your situation. > High CPU issue during rebalance in Kafka consumer after upgrading to 2.5 > > > Key: KAFKA-10134 > URL: https://issues.apache.org/jira/browse/KAFKA-10134 > Project: Kafka > Issue Type: Bug > Components: clients >Affects Versions: 2.5.0 >Reporter: Sean Guo >Assignee: Guozhang Wang >Priority: Blocker > Fix For: 2.5.2, 2.6.1 > > Attachments: consumer3.log.2020-08-20.log, > consumer5.log.2020-07-22.log > > > We want to utilize the new rebalance protocol to mitigate the stop-the-world > effect during the rebalance as our tasks are long running task. > But after the upgrade when we try to kill an instance to let rebalance happen > when there is some load(some are long running tasks >30S) there, the CPU will > go sky-high. It reads ~700% in our metrics so there should be several threads > are in a tight loop. We have several consumer threads consuming from > different partitions during the rebalance. This is reproducible in both the > new CooperativeStickyAssignor and old eager rebalance rebalance protocol. The > difference is that with old eager rebalance rebalance protocol used the high > CPU usage will dropped after the rebalance done. But when using cooperative > one, it seems the consumers threads are stuck on something and couldn't > finish the rebalance so the high CPU usage won't drop until we stopped our > load. Also a small load without long running task also won't cause continuous > high CPU usage as the rebalance can finish in that case. > > "executor.kafka-consumer-executor-4" #124 daemon prio=5 os_prio=0 > cpu=76853.07ms elapsed=841.16s tid=0x7fe11f044000 nid=0x1f4 runnable > [0x7fe119aab000]"executor.kafka-consumer-executor-4" #124 daemon prio=5 > os_prio=0 cpu=76853.07ms elapsed=841.16s tid=0x7fe11f044000 nid=0x1f4 > runnable [0x7fe119aab000] java.lang.Thread.State: RUNNABLE at > org.apache.kafka.clients.consumer.internals.ConsumerCoordinator.poll(ConsumerCoordinator.java:467) > at > org.apache.kafka.clients.consumer.KafkaConsumer.updateAssignmentMetadataIfNeeded(KafkaConsumer.java:1275) > at > org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:1241) > at > org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:1216) > at > > By debugging into the code we found it looks like the clients are in a loop > on finding the coordinator. > I also tried the old rebalance protocol for the new version the issue still > exists but the CPU will be back to normal when the rebalance is done. > Also tried the same on the 2.4.1 which seems don't have this issue. So it > seems related something changed between 2.4.1 and 2.5.0. > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10366) TimeWindowedDeserializer doesn't allow users to set a custom window size

[ https://issues.apache.org/jira/browse/KAFKA-10366?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188115#comment-17188115 ] Sophie Blee-Goldman commented on KAFKA-10366: - Yeah thanks for tracking that down [~mjsax]. I think we can repurpose/reinterpret this ticket to track fixing the Streams tests/test utils. If we have to add a lot of overloads throughout the call hierarchy between the test and the actual Consumer creation, maybe we can save some work by just adding a ConsumerParameters class (better names welcome) and replacing the ConsumerConfig/Properties parameter in all the methods instead. Then we can just call the appropriate Consumer constructor based on whether the deserializers have been set in the passed in. ConsumerParameters > TimeWindowedDeserializer doesn't allow users to set a custom window size > > > Key: KAFKA-10366 > URL: https://issues.apache.org/jira/browse/KAFKA-10366 > Project: Kafka > Issue Type: Bug >Reporter: Leah Thomas >Assignee: Leah Thomas >Priority: Major > Labels: streams > > Related to [KAFKA-4468|https://issues.apache.org/jira/browse/KAFKA-4468], in > timeWindowedDeserializer Long.MAX_VALUE is used as _windowSize_ for any > deserializer that uses the default constructor. While streams apps can pass > in a window size in serdes or while creating a timeWindowedDeserializer, the > deserializer that is actually used in processing the messages is created by > the Kafka consumer, without passing in the set windowSize. The deserializer > the consumer creates uses the configs, but as there is no config for > windowSize, the window size is always default. > See _KStreamAggregationIntegrationTest #ShouldReduceWindowed()_ as an example > of this issue. Despite passing in the windowSize to both the serdes and the > timeWindowedDeserializer, the window size is set to Long.MAX_VALUE. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] mumrah commented on pull request #9226: KAFKA-10444: Jenkinsfile testing

mumrah commented on pull request #9226: URL: https://github.com/apache/kafka/pull/9226#issuecomment-684175203 Example failed build with a compile error: https://ci-builds.apache.org/job/Kafka/job/kafka-pr/view/change-requests/job/PR-9226/67 One with a rat failure: https://ci-builds.apache.org/job/Kafka/job/kafka-pr/view/change-requests/job/PR-9226/66/ This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (KAFKA-10450) console-producer throws Uncaught error in kafka producer I/O thread: (org.apache.kafka.clients.producer.internals.Sender) java.lang.IllegalStateException: There are no

[

https://issues.apache.org/jira/browse/KAFKA-10450?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jigar Naik updated KAFKA-10450:

---

Priority: Minor (was: Critical)

> console-producer throws Uncaught error in kafka producer I/O thread:

> (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> ---

>

> Key: KAFKA-10450

> URL: https://issues.apache.org/jira/browse/KAFKA-10450

> Project: Kafka

> Issue Type: Bug

> Components: producer

>Affects Versions: 2.6.0

> Environment: Kafka Version 2.6.0

> MacOS Version - macOS Catalina 10.15.6 (19G2021)

> java version "11.0.8" 2020-07-14 LTS

> Java(TM) SE Runtime Environment 18.9 (build 11.0.8+10-LTS)

> Java HotSpot(TM) 64-Bit Server VM 18.9 (build 11.0.8+10-LTS, mixed mode)

>Reporter: Jigar Naik

>Priority: Minor

>

> Kafka-console-producer.sh gives below error on Mac

> ERROR [Producer clientId=console-producer] Uncaught error in kafka producer

> I/O thread: (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> *Steps to re-produce the issue.*

> Download Kafka from

> [kafka_2.13-2.6.0.tgz|https://www.apache.org/dyn/closer.cgi?path=/kafka/2.6.0/kafka_2.13-2.6.0.tgz]

>

> Change data and log directory (Optional)

> Create Topic Using below command

>

> {code:java}

> ./kafka-topics.sh \

> --create \

> --zookeeper localhost:2181 \

> --replication-factor 1 \

> --partitions 1 \

> --topic my-topic{code}

>

> Start Kafka console producer using below command

>

> {code:java}

> ./kafka-console-consumer.sh \

> --topic my-topic \

> --from-beginning \

> --bootstrap-server localhost:9092{code}

>

> Gives below output

>

> {code:java}

> ./kafka-console-producer.sh \

> --topic my-topic \

> --bootstrap-server 127.0.0.1:9092

> >[2020-09-01 00:24:18,177] ERROR [Producer clientId=console-producer]

> >Uncaught error in kafka producer I/O thread:

> >(org.apache.kafka.clients.producer.internals.Sender)

> java.nio.BufferUnderflowException

> at java.base/java.nio.Buffer.nextGetIndex(Buffer.java:650)

> at java.base/java.nio.HeapByteBuffer.getInt(HeapByteBuffer.java:391)

> at

> org.apache.kafka.common.protocol.ByteBufferAccessor.readInt(ByteBufferAccessor.java:43)

> at

> org.apache.kafka.common.message.ResponseHeaderData.read(ResponseHeaderData.java:102)

> at

> org.apache.kafka.common.message.ResponseHeaderData.(ResponseHeaderData.java:70)

> at

> org.apache.kafka.common.requests.ResponseHeader.parse(ResponseHeader.java:66)

> at

> org.apache.kafka.clients.NetworkClient.parseStructMaybeUpdateThrottleTimeMetrics(NetworkClient.java:717)

> at

> org.apache.kafka.clients.NetworkClient.handleCompletedReceives(NetworkClient.java:834)

> at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:553)

> at org.apache.kafka.clients.producer.internals.Sender.runOnce(Sender.java:325)

> at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:240)

> at java.base/java.lang.Thread.run(Thread.java:834)

> [2020-09-01 00:24:18,179] ERROR [Producer clientId=console-producer] Uncaught

> error in kafka producer I/O thread:

> (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> at

> org.apache.kafka.clients.InFlightRequests.requestQueue(InFlightRequests.java:62)

> at

> org.apache.kafka.clients.InFlightRequests.completeNext(InFlightRequests.java:70)

> at

> org.apache.kafka.clients.NetworkClient.handleCompletedReceives(NetworkClient.java:833)

> at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:553)

> at org.apache.kafka.clients.producer.internals.Sender.runOnce(Sender.java:325)

> at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:240)

> at java.base/java.lang.Thread.run(Thread.java:834)

> [2020-09-01 00:24:18,682] WARN [Producer clientId=console-producer] Bootstrap

> broker 127.0.0.1:9092 (id: -1 rack: null) disconnected

> (org.apache.kafka.clients.NetworkClient)

> {code}

>

>

> The same steps works fine with Kafka version 2.0.0 on Mac.

> The same steps works fine with Kafka version 2.6.0 on Windows.

>

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (KAFKA-10450) console-producer throws Uncaught error in kafka producer I/O thread: (org.apache.kafka.clients.producer.internals.Sender) java.lang.IllegalStateException: There are n

[

https://issues.apache.org/jira/browse/KAFKA-10450?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188085#comment-17188085

]

Jigar Naik commented on KAFKA-10450:

Yes, same version for both. And also from kafka console consumer which ship

with kafka gave the same error.

i have found the issue. The port 9092 was being used by sonarqube H2 DB.

after stopping sonarqube everything worked fine.

surprisingly i was hoping to getting address already in use instead of this

stack trace.

and also the kafka_topic.sh worked fine without any issue.

Changing the priority to minor as its no-more blocker. It would be better if

proper error message is displayed instead of this stacktrace. It would have

saved few hours for me:) thanks!

> console-producer throws Uncaught error in kafka producer I/O thread:

> (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> ---

>

> Key: KAFKA-10450

> URL: https://issues.apache.org/jira/browse/KAFKA-10450

> Project: Kafka

> Issue Type: Bug

> Components: producer

>Affects Versions: 2.6.0

> Environment: Kafka Version 2.6.0

> MacOS Version - macOS Catalina 10.15.6 (19G2021)

> java version "11.0.8" 2020-07-14 LTS

> Java(TM) SE Runtime Environment 18.9 (build 11.0.8+10-LTS)

> Java HotSpot(TM) 64-Bit Server VM 18.9 (build 11.0.8+10-LTS, mixed mode)

>Reporter: Jigar Naik

>Priority: Critical

>

> Kafka-console-producer.sh gives below error on Mac

> ERROR [Producer clientId=console-producer] Uncaught error in kafka producer

> I/O thread: (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> *Steps to re-produce the issue.*

> Download Kafka from

> [kafka_2.13-2.6.0.tgz|https://www.apache.org/dyn/closer.cgi?path=/kafka/2.6.0/kafka_2.13-2.6.0.tgz]

>

> Change data and log directory (Optional)

> Create Topic Using below command

>

> {code:java}

> ./kafka-topics.sh \

> --create \

> --zookeeper localhost:2181 \

> --replication-factor 1 \

> --partitions 1 \

> --topic my-topic{code}

>

> Start Kafka console producer using below command

>

> {code:java}

> ./kafka-console-consumer.sh \

> --topic my-topic \

> --from-beginning \

> --bootstrap-server localhost:9092{code}

>

> Gives below output

>

> {code:java}

> ./kafka-console-producer.sh \

> --topic my-topic \

> --bootstrap-server 127.0.0.1:9092

> >[2020-09-01 00:24:18,177] ERROR [Producer clientId=console-producer]

> >Uncaught error in kafka producer I/O thread:

> >(org.apache.kafka.clients.producer.internals.Sender)

> java.nio.BufferUnderflowException

> at java.base/java.nio.Buffer.nextGetIndex(Buffer.java:650)

> at java.base/java.nio.HeapByteBuffer.getInt(HeapByteBuffer.java:391)

> at

> org.apache.kafka.common.protocol.ByteBufferAccessor.readInt(ByteBufferAccessor.java:43)

> at

> org.apache.kafka.common.message.ResponseHeaderData.read(ResponseHeaderData.java:102)

> at

> org.apache.kafka.common.message.ResponseHeaderData.(ResponseHeaderData.java:70)

> at

> org.apache.kafka.common.requests.ResponseHeader.parse(ResponseHeader.java:66)

> at

> org.apache.kafka.clients.NetworkClient.parseStructMaybeUpdateThrottleTimeMetrics(NetworkClient.java:717)

> at

> org.apache.kafka.clients.NetworkClient.handleCompletedReceives(NetworkClient.java:834)

> at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:553)

> at org.apache.kafka.clients.producer.internals.Sender.runOnce(Sender.java:325)

> at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:240)

> at java.base/java.lang.Thread.run(Thread.java:834)

> [2020-09-01 00:24:18,179] ERROR [Producer clientId=console-producer] Uncaught

> error in kafka producer I/O thread:

> (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> at

> org.apache.kafka.clients.InFlightRequests.requestQueue(InFlightRequests.java:62)

> at

> org.apache.kafka.clients.InFlightRequests.completeNext(InFlightRequests.java:70)

> at

> org.apache.kafka.clients.NetworkClient.handleCompletedReceives(NetworkClient.java:833)

> at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:553)

> at org.apache.kafka.clients.producer.internals.Sender.runOnce(Sender.java:325)

> at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:240)

> at java.base/java.lang.Thread.run(Thread.java:834)

> [2020-09-01 00:24:18,682] WARN [Producer clientId=console-producer] Bootstrap

> broker 127.0.0.1:9092 (id: -1 rack: null) disconnected

>

[jira] [Updated] (KAFKA-10450) console-producer throws Uncaught error in kafka producer I/O thread: (org.apache.kafka.clients.producer.internals.Sender) java.lang.IllegalStateException: There are no

[

https://issues.apache.org/jira/browse/KAFKA-10450?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Jigar Naik updated KAFKA-10450:

---

Priority: Critical (was: Blocker)

> console-producer throws Uncaught error in kafka producer I/O thread:

> (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> ---

>

> Key: KAFKA-10450

> URL: https://issues.apache.org/jira/browse/KAFKA-10450

> Project: Kafka

> Issue Type: Bug

> Components: producer

>Affects Versions: 2.6.0

> Environment: Kafka Version 2.6.0

> MacOS Version - macOS Catalina 10.15.6 (19G2021)

> java version "11.0.8" 2020-07-14 LTS

> Java(TM) SE Runtime Environment 18.9 (build 11.0.8+10-LTS)

> Java HotSpot(TM) 64-Bit Server VM 18.9 (build 11.0.8+10-LTS, mixed mode)

>Reporter: Jigar Naik

>Priority: Critical

>

> Kafka-console-producer.sh gives below error on Mac

> ERROR [Producer clientId=console-producer] Uncaught error in kafka producer

> I/O thread: (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> *Steps to re-produce the issue.*

> Download Kafka from

> [kafka_2.13-2.6.0.tgz|https://www.apache.org/dyn/closer.cgi?path=/kafka/2.6.0/kafka_2.13-2.6.0.tgz]

>

> Change data and log directory (Optional)

> Create Topic Using below command

>

> {code:java}

> ./kafka-topics.sh \

> --create \

> --zookeeper localhost:2181 \

> --replication-factor 1 \

> --partitions 1 \

> --topic my-topic{code}

>

> Start Kafka console producer using below command

>

> {code:java}

> ./kafka-console-consumer.sh \

> --topic my-topic \

> --from-beginning \

> --bootstrap-server localhost:9092{code}

>

> Gives below output

>

> {code:java}

> ./kafka-console-producer.sh \

> --topic my-topic \

> --bootstrap-server 127.0.0.1:9092

> >[2020-09-01 00:24:18,177] ERROR [Producer clientId=console-producer]

> >Uncaught error in kafka producer I/O thread:

> >(org.apache.kafka.clients.producer.internals.Sender)

> java.nio.BufferUnderflowException

> at java.base/java.nio.Buffer.nextGetIndex(Buffer.java:650)

> at java.base/java.nio.HeapByteBuffer.getInt(HeapByteBuffer.java:391)

> at

> org.apache.kafka.common.protocol.ByteBufferAccessor.readInt(ByteBufferAccessor.java:43)

> at

> org.apache.kafka.common.message.ResponseHeaderData.read(ResponseHeaderData.java:102)

> at

> org.apache.kafka.common.message.ResponseHeaderData.(ResponseHeaderData.java:70)

> at

> org.apache.kafka.common.requests.ResponseHeader.parse(ResponseHeader.java:66)

> at

> org.apache.kafka.clients.NetworkClient.parseStructMaybeUpdateThrottleTimeMetrics(NetworkClient.java:717)

> at

> org.apache.kafka.clients.NetworkClient.handleCompletedReceives(NetworkClient.java:834)

> at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:553)

> at org.apache.kafka.clients.producer.internals.Sender.runOnce(Sender.java:325)

> at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:240)

> at java.base/java.lang.Thread.run(Thread.java:834)

> [2020-09-01 00:24:18,179] ERROR [Producer clientId=console-producer] Uncaught

> error in kafka producer I/O thread:

> (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> at

> org.apache.kafka.clients.InFlightRequests.requestQueue(InFlightRequests.java:62)

> at

> org.apache.kafka.clients.InFlightRequests.completeNext(InFlightRequests.java:70)

> at

> org.apache.kafka.clients.NetworkClient.handleCompletedReceives(NetworkClient.java:833)

> at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:553)

> at org.apache.kafka.clients.producer.internals.Sender.runOnce(Sender.java:325)

> at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:240)

> at java.base/java.lang.Thread.run(Thread.java:834)

> [2020-09-01 00:24:18,682] WARN [Producer clientId=console-producer] Bootstrap

> broker 127.0.0.1:9092 (id: -1 rack: null) disconnected

> (org.apache.kafka.clients.NetworkClient)

> {code}

>

>

> The same steps works fine with Kafka version 2.0.0 on Mac.

> The same steps works fine with Kafka version 2.6.0 on Windows.

>

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (KAFKA-10450) console-producer throws Uncaught error in kafka producer I/O thread: (org.apache.kafka.clients.producer.internals.Sender) java.lang.IllegalStateException: There are n

[

https://issues.apache.org/jira/browse/KAFKA-10450?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188082#comment-17188082

]

huxihx commented on KAFKA-10450:

Same version for both clients and brokers?

> console-producer throws Uncaught error in kafka producer I/O thread:

> (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> ---

>

> Key: KAFKA-10450

> URL: https://issues.apache.org/jira/browse/KAFKA-10450

> Project: Kafka

> Issue Type: Bug

> Components: producer

>Affects Versions: 2.6.0

> Environment: Kafka Version 2.6.0

> MacOS Version - macOS Catalina 10.15.6 (19G2021)

> java version "11.0.8" 2020-07-14 LTS

> Java(TM) SE Runtime Environment 18.9 (build 11.0.8+10-LTS)

> Java HotSpot(TM) 64-Bit Server VM 18.9 (build 11.0.8+10-LTS, mixed mode)

>Reporter: Jigar Naik

>Priority: Blocker

>

> Kafka-console-producer.sh gives below error on Mac

> ERROR [Producer clientId=console-producer] Uncaught error in kafka producer

> I/O thread: (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> *Steps to re-produce the issue.*

> Download Kafka from

> [kafka_2.13-2.6.0.tgz|https://www.apache.org/dyn/closer.cgi?path=/kafka/2.6.0/kafka_2.13-2.6.0.tgz]

>

> Change data and log directory (Optional)

> Create Topic Using below command

>

> {code:java}

> ./kafka-topics.sh \

> --create \

> --zookeeper localhost:2181 \

> --replication-factor 1 \

> --partitions 1 \

> --topic my-topic{code}

>

> Start Kafka console producer using below command

>

> {code:java}

> ./kafka-console-consumer.sh \

> --topic my-topic \

> --from-beginning \

> --bootstrap-server localhost:9092{code}

>

> Gives below output

>

> {code:java}

> ./kafka-console-producer.sh \

> --topic my-topic \

> --bootstrap-server 127.0.0.1:9092

> >[2020-09-01 00:24:18,177] ERROR [Producer clientId=console-producer]

> >Uncaught error in kafka producer I/O thread:

> >(org.apache.kafka.clients.producer.internals.Sender)

> java.nio.BufferUnderflowException

> at java.base/java.nio.Buffer.nextGetIndex(Buffer.java:650)

> at java.base/java.nio.HeapByteBuffer.getInt(HeapByteBuffer.java:391)

> at

> org.apache.kafka.common.protocol.ByteBufferAccessor.readInt(ByteBufferAccessor.java:43)

> at

> org.apache.kafka.common.message.ResponseHeaderData.read(ResponseHeaderData.java:102)

> at

> org.apache.kafka.common.message.ResponseHeaderData.(ResponseHeaderData.java:70)

> at

> org.apache.kafka.common.requests.ResponseHeader.parse(ResponseHeader.java:66)

> at

> org.apache.kafka.clients.NetworkClient.parseStructMaybeUpdateThrottleTimeMetrics(NetworkClient.java:717)

> at

> org.apache.kafka.clients.NetworkClient.handleCompletedReceives(NetworkClient.java:834)

> at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:553)

> at org.apache.kafka.clients.producer.internals.Sender.runOnce(Sender.java:325)

> at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:240)

> at java.base/java.lang.Thread.run(Thread.java:834)

> [2020-09-01 00:24:18,179] ERROR [Producer clientId=console-producer] Uncaught

> error in kafka producer I/O thread:

> (org.apache.kafka.clients.producer.internals.Sender)

> java.lang.IllegalStateException: There are no in-flight requests for node -1

> at

> org.apache.kafka.clients.InFlightRequests.requestQueue(InFlightRequests.java:62)

> at

> org.apache.kafka.clients.InFlightRequests.completeNext(InFlightRequests.java:70)

> at

> org.apache.kafka.clients.NetworkClient.handleCompletedReceives(NetworkClient.java:833)

> at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:553)

> at org.apache.kafka.clients.producer.internals.Sender.runOnce(Sender.java:325)

> at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:240)

> at java.base/java.lang.Thread.run(Thread.java:834)

> [2020-09-01 00:24:18,682] WARN [Producer clientId=console-producer] Bootstrap

> broker 127.0.0.1:9092 (id: -1 rack: null) disconnected

> (org.apache.kafka.clients.NetworkClient)

> {code}

>

>

> The same steps works fine with Kafka version 2.0.0 on Mac.

> The same steps works fine with Kafka version 2.6.0 on Windows.

>

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (KAFKA-10134) High CPU issue during rebalance in Kafka consumer after upgrading to 2.5

[ https://issues.apache.org/jira/browse/KAFKA-10134?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188058#comment-17188058 ] Jerry Wei commented on KAFKA-10134: --- [~guozhang] one more question about PR #8834, whether or not *GroupCoordinator* changes is mandatory. I mean Kafka server changes should be more expensive that clients. > High CPU issue during rebalance in Kafka consumer after upgrading to 2.5 > > > Key: KAFKA-10134 > URL: https://issues.apache.org/jira/browse/KAFKA-10134 > Project: Kafka > Issue Type: Bug > Components: clients >Affects Versions: 2.5.0 >Reporter: Sean Guo >Assignee: Guozhang Wang >Priority: Blocker > Fix For: 2.5.2, 2.6.1 > > Attachments: consumer3.log.2020-08-20.log, > consumer5.log.2020-07-22.log > > > We want to utilize the new rebalance protocol to mitigate the stop-the-world > effect during the rebalance as our tasks are long running task. > But after the upgrade when we try to kill an instance to let rebalance happen > when there is some load(some are long running tasks >30S) there, the CPU will > go sky-high. It reads ~700% in our metrics so there should be several threads > are in a tight loop. We have several consumer threads consuming from > different partitions during the rebalance. This is reproducible in both the > new CooperativeStickyAssignor and old eager rebalance rebalance protocol. The > difference is that with old eager rebalance rebalance protocol used the high > CPU usage will dropped after the rebalance done. But when using cooperative > one, it seems the consumers threads are stuck on something and couldn't > finish the rebalance so the high CPU usage won't drop until we stopped our > load. Also a small load without long running task also won't cause continuous > high CPU usage as the rebalance can finish in that case. > > "executor.kafka-consumer-executor-4" #124 daemon prio=5 os_prio=0 > cpu=76853.07ms elapsed=841.16s tid=0x7fe11f044000 nid=0x1f4 runnable > [0x7fe119aab000]"executor.kafka-consumer-executor-4" #124 daemon prio=5 > os_prio=0 cpu=76853.07ms elapsed=841.16s tid=0x7fe11f044000 nid=0x1f4 > runnable [0x7fe119aab000] java.lang.Thread.State: RUNNABLE at > org.apache.kafka.clients.consumer.internals.ConsumerCoordinator.poll(ConsumerCoordinator.java:467) > at > org.apache.kafka.clients.consumer.KafkaConsumer.updateAssignmentMetadataIfNeeded(KafkaConsumer.java:1275) > at > org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:1241) > at > org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:1216) > at > > By debugging into the code we found it looks like the clients are in a loop > on finding the coordinator. > I also tried the old rebalance protocol for the new version the issue still > exists but the CPU will be back to normal when the rebalance is done. > Also tried the same on the 2.4.1 which seems don't have this issue. So it > seems related something changed between 2.4.1 and 2.5.0. > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] ableegoldman commented on a change in pull request #9138: KAFKA-9929: Support backward iterator on WindowStore

ableegoldman commented on a change in pull request #9138:

URL: https://github.com/apache/kafka/pull/9138#discussion_r480482350

##

File path:

streams/src/main/java/org/apache/kafka/streams/state/internals/SegmentIterator.java

##

@@ -67,14 +70,22 @@ public Bytes peekNextKey() {

public boolean hasNext() {

boolean hasNext = false;

while ((currentIterator == null || !(hasNext =

hasNextConditionHasNext()) || !currentSegment.isOpen())

-&& segments.hasNext()) {

+&& segments.hasNext()) {

close();

currentSegment = segments.next();

try {

if (from == null || to == null) {

-currentIterator = currentSegment.all();

+if (forward) {

+currentIterator = currentSegment.all();

+} else {

+currentIterator = currentSegment.reverseAll();

Review comment:

Yeah that's a good question, it does seem like we can just remove the

`range` and `all` on the Segment interface

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #9138: KAFKA-9929: Support backward iterator on WindowStore

ableegoldman commented on a change in pull request #9138:

URL: https://github.com/apache/kafka/pull/9138#discussion_r480481060

##

File path:

streams/src/main/java/org/apache/kafka/streams/state/internals/CachingWindowStore.java

##

@@ -338,25 +452,36 @@ public synchronized void close() {

private CacheIteratorWrapper(final Bytes key,

final long timeFrom,

- final long timeTo) {

-this(key, key, timeFrom, timeTo);

+ final long timeTo,

+ final boolean forward) {

+this(key, key, timeFrom, timeTo, forward);

}

private CacheIteratorWrapper(final Bytes keyFrom,

final Bytes keyTo,

final long timeFrom,

- final long timeTo) {

+ final long timeTo,

+ final boolean forward) {

this.keyFrom = keyFrom;

this.keyTo = keyTo;

this.timeTo = timeTo;

-this.lastSegmentId = cacheFunction.segmentId(Math.min(timeTo,

maxObservedTimestamp.get()));

+this.forward = forward;

this.segmentInterval = cacheFunction.getSegmentInterval();

-this.currentSegmentId = cacheFunction.segmentId(timeFrom);

-setCacheKeyRange(timeFrom, currentSegmentLastTime());

+if (forward) {

+this.lastSegmentId = cacheFunction.segmentId(Math.min(timeTo,

maxObservedTimestamp.get()));

+this.currentSegmentId = cacheFunction.segmentId(timeFrom);

-this.current = context.cache().range(cacheName, cacheKeyFrom,

cacheKeyTo);

+setCacheKeyRange(timeFrom, currentSegmentLastTime());

+this.current = context.cache().range(cacheName, cacheKeyFrom,

cacheKeyTo);

+} else {

+this.currentSegmentId =

cacheFunction.segmentId(Math.min(timeTo, maxObservedTimestamp.get()));

+this.lastSegmentId = cacheFunction.segmentId(timeFrom);

+

+setCacheKeyRange(currentSegmentBeginTime(), Math.min(timeTo,

maxObservedTimestamp.get()));

Review comment:

This looks right to me -- in the iterator constructor, we would normally

start from `timeFrom` (the minimum time) and advance to the end of the current

segment (that's what the "cache key range" defines, the range of the current

segment) When iterating backwards, the current segment is actually the largest

segment, so the cache key lower range is the current (largest) segment's

beginning timestamp, and the upper range is the maximum timestamp of the

backwards fetch. Does that make sense?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #9138: KAFKA-9929: Support backward iterator on WindowStore

ableegoldman commented on a change in pull request #9138: URL: https://github.com/apache/kafka/pull/9138#discussion_r480479677 ## File path: streams/src/main/java/org/apache/kafka/streams/state/internals/CachingWindowStore.java ## @@ -271,27 +345,68 @@ public synchronized void put(final Bytes key, final PeekingKeyValueIterator filteredCacheIterator = new FilteredCacheIterator(cacheIterator, hasNextCondition, cacheFunction); return new MergedSortedCacheWindowStoreKeyValueIterator( -filteredCacheIterator, -underlyingIterator, -bytesSerdes, -windowSize, -cacheFunction +filteredCacheIterator, +underlyingIterator, +bytesSerdes, +windowSize, +cacheFunction, +true +); +} + +@Override +public KeyValueIterator, byte[]> backwardFetchAll(final long timeFrom, Review comment: We would still need to keep this method: we're not removing all long-based APIs, just the public/IQ methods in ReadOnlyWindowStore. But we still want to keep the long-based methods on WindowStore and all the internal store interfaces for performance reasons. Maybe once we move everything to use `Instant` all the way down to the serialization then we can remove these long-based methods. I guess we should consider that when discussing KIP-667, but for the time being at least, we should keep them for internal use This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] guozhangwang commented on a change in pull request #9232: KAFKA-9924: Add remaining property-based RocksDB metrics as described in KIP-607

guozhangwang commented on a change in pull request #9232:

URL: https://github.com/apache/kafka/pull/9232#discussion_r480477639

##

File path:

streams/src/main/java/org/apache/kafka/streams/state/internals/metrics/RocksDBMetricsRecorder.java

##

@@ -150,14 +176,55 @@ private void verifyStatistics(final String segmentName,

final Statistics statist

statistics != null &&

storeToValueProviders.values().stream().anyMatch(valueProviders ->

valueProviders.statistics == null))) {

-throw new IllegalStateException("Statistics for store \"" +

segmentName + "\" of task " + taskId +

-" is" + (statistics == null ? " " : " not ") + "null although

the statistics of another store in this " +

+throw new IllegalStateException("Statistics for segment " +

segmentName + " of task " + taskId +

+" is" + (statistics == null ? " " : " not ") + "null although

the statistics of another segment in this " +

"metrics recorder is" + (statistics != null ? " " : " not ") +

"null. " +

"This is a bug in Kafka Streams. " +

"Please open a bug report under

https://issues.apache.org/jira/projects/KAFKA/issues;);

}

}

+private void verifyDbAndCacheAndStatistics(final String segmentName,

+ final RocksDB db,

+ final Cache cache,

+ final Statistics statistics) {

+for (final DbAndCacheAndStatistics valueProviders :

storeToValueProviders.values()) {

+verifyIfSomeAreNull(segmentName, statistics,

valueProviders.statistics, "statistics");

+verifyIfSomeAreNull(segmentName, cache, valueProviders.cache,

"cache");

+if (db == valueProviders.db) {

+throw new IllegalStateException("DB instance for store " +

segmentName + " of task " + taskId +

+" was already added for another segment as a value

provider. This is a bug in Kafka Streams. " +

+"Please open a bug report under

https://issues.apache.org/jira/projects/KAFKA/issues;);

+}

+if (storeToValueProviders.size() == 1 && cache !=

valueProviders.cache) {

Review comment:

Hmm, why we need the second condition to determine `singleCache = false`

here?

##

File path:

streams/src/main/java/org/apache/kafka/streams/state/internals/metrics/RocksDBMetricsRecorder.java

##

@@ -150,14 +176,55 @@ private void verifyStatistics(final String segmentName,

final Statistics statist

statistics != null &&

storeToValueProviders.values().stream().anyMatch(valueProviders ->

valueProviders.statistics == null))) {

-throw new IllegalStateException("Statistics for store \"" +

segmentName + "\" of task " + taskId +

-" is" + (statistics == null ? " " : " not ") + "null although

the statistics of another store in this " +

+throw new IllegalStateException("Statistics for segment " +

segmentName + " of task " + taskId +

+" is" + (statistics == null ? " " : " not ") + "null although

the statistics of another segment in this " +

"metrics recorder is" + (statistics != null ? " " : " not ") +

"null. " +

"This is a bug in Kafka Streams. " +

"Please open a bug report under

https://issues.apache.org/jira/projects/KAFKA/issues;);

}

}

+private void verifyDbAndCacheAndStatistics(final String segmentName,

+ final RocksDB db,

+ final Cache cache,

+ final Statistics statistics) {

+for (final DbAndCacheAndStatistics valueProviders :

storeToValueProviders.values()) {

+verifyIfSomeAreNull(segmentName, statistics,

valueProviders.statistics, "statistics");

+verifyIfSomeAreNull(segmentName, cache, valueProviders.cache,

"cache");

+if (db == valueProviders.db) {

+throw new IllegalStateException("DB instance for store " +

segmentName + " of task " + taskId +

+" was already added for another segment as a value

provider. This is a bug in Kafka Streams. " +

+"Please open a bug report under

https://issues.apache.org/jira/projects/KAFKA/issues;);

+}

+if (storeToValueProviders.size() == 1 && cache !=

valueProviders.cache) {

+singleCache = false;

+} else if (singleCache && cache != valueProviders.cache ||

!singleCache && cache == valueProviders.cache) {

+throw new IllegalStateException("Caches for store " +

storeName + " of task " + taskId +

+" are either not all distinct or do not all refer to the

[GitHub] [kafka] guozhangwang commented on a change in pull request #9138: KAFKA-9929: Support backward iterator on WindowStore

guozhangwang commented on a change in pull request #9138:

URL: https://github.com/apache/kafka/pull/9138#discussion_r480469688

##

File path:

streams/src/main/java/org/apache/kafka/streams/state/ReadOnlyWindowStore.java

##

@@ -136,34 +174,64 @@

*

* This iterator must be closed after use.

*

- * @param from the first key in the range

- * @param tothe last key in the range

- * @param fromTime time range start (inclusive)

- * @param toTimetime range end (inclusive)

- * @return an iterator over windowed key-value pairs {@code ,

value>}

+ * @param from the first key in the range

+ * @param to the last key in the range

+ * @param timeFrom time range start (inclusive), where iteration starts.

+ * @param timeTo time range end (inclusive), where iteration ends.

+ * @return an iterator over windowed key-value pairs {@code ,

value>}, from beginning to end of time.

* @throws InvalidStateStoreException if the store is not initialized

- * @throws NullPointerException If {@code null} is used for any key.

- * @throws IllegalArgumentException if duration is negative or can't be

represented as {@code long milliseconds}

+ * @throws NullPointerException If {@code null} is used for any key.

+ * @throws IllegalArgumentException if duration is negative or can't be

represented as {@code long milliseconds}

*/

-KeyValueIterator, V> fetch(K from, K to, Instant fromTime,

Instant toTime)

+KeyValueIterator, V> fetch(K from, K to, Instant timeFrom,

Instant timeTo)

throws IllegalArgumentException;

/**

-* Gets all the key-value pairs in the existing windows.

-*

-* @return an iterator over windowed key-value pairs {@code ,

value>}

-* @throws InvalidStateStoreException if the store is not initialized

-*/

+ * Get all the key-value pairs in the given key range and time range from

all the existing windows

+ * in backward order with respect to time (from end to beginning of time).

+ *

+ * This iterator must be closed after use.

+ *

+ * @param from the first key in the range

+ * @param to the last key in the range

+ * @param timeFrom time range start (inclusive), where iteration ends.

+ * @param timeTo time range end (inclusive), where iteration starts.

+ * @return an iterator over windowed key-value pairs {@code ,

value>}, from end to beginning of time.

+ * @throws InvalidStateStoreException if the store is not initialized

+ * @throws NullPointerException If {@code null} is used for any key.

+ * @throws IllegalArgumentException if duration is negative or can't be

represented as {@code long milliseconds}

+ */

+KeyValueIterator, V> backwardFetch(K from, K to, Instant

timeFrom, Instant timeTo)

Review comment:

This is out of the scope of this PR, but I'd like to point out that the

current IQ does not actually obey the ordering when there are multiple local

stores hosted on that instance. For example, if there are two stores from two

tasks hosting keys {1, 3} and {2,4}, then a range query of key [1,4] would

return in the order of `1,3,2,4` but not `1,2,3,4` since it is looping over the

stores only. This would be the case for either forward or backward fetches on

range-key-range-time.

For single key time range fetch, or course, there's no such issue.

I think it worth documenting this for now until we have a fix (and actually

we are going to propose something soon).

##

File path:

streams/src/main/java/org/apache/kafka/streams/state/ReadOnlyWindowStore.java

##

@@ -33,11 +33,11 @@

/**

* Get the value of key from a window.

*

- * @param key the key to fetch

- * @param time start timestamp (inclusive) of the window

+ * @param key the key to fetch

+ * @param time start timestamp (inclusive) of the window

* @return The value or {@code null} if no value is found in the window

* @throws InvalidStateStoreException if the store is not initialized

- * @throws NullPointerException If {@code null} is used for any key.

+ * @throws NullPointerException If {@code null} is used for any key.

Review comment:

nit: is this intentional? Also I'd suggest we do not use capitalized

`If` to be consistent with the above line, ditto elsewhere below.

##

File path: streams/src/main/java/org/apache/kafka/streams/state/WindowStore.java

##

@@ -150,13 +185,25 @@

* @return an iterator over windowed key-value pairs {@code ,

value>}

* @throws InvalidStateStoreException if the store is not initialized

*/

-@SuppressWarnings("deprecation") // note, this method must be kept if

super#fetchAll(...) is removed

+// note, this method must be kept if super#fetchAll(...) is removed

+@SuppressWarnings("deprecation")

KeyValueIterator, V>

[jira] [Assigned] (KAFKA-2200) kafkaProducer.send() should not call callback.onCompletion()

[ https://issues.apache.org/jira/browse/KAFKA-2200?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Aakash Gupta reassigned KAFKA-2200: --- Assignee: Aakash Gupta > kafkaProducer.send() should not call callback.onCompletion() > > > Key: KAFKA-2200 > URL: https://issues.apache.org/jira/browse/KAFKA-2200 > Project: Kafka > Issue Type: Bug > Components: clients >Affects Versions: 0.10.1.0 >Reporter: Jiangjie Qin >Assignee: Aakash Gupta >Priority: Major > Labels: newbie > > KafkaProducer.send() should not call callback.onCompletion() because this > might break the callback firing order. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Comment Edited] (KAFKA-2200) kafkaProducer.send() should not call callback.onCompletion()

[

https://issues.apache.org/jira/browse/KAFKA-2200?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188042#comment-17188042

]

Aakash Gupta edited comment on KAFKA-2200 at 8/31/20, 11:20 PM:

Hi [~becket_qin]

I am willing to take this ticket.

As of now till date, this is how exceptions are being handled in

kafkaProducer.send() method:

{code:java}

catch (ApiException e) {

log.debug("Exception occurred during message send:", e);

if (callback != null)

callback.onCompletion(null, e);

this.errors.record();

this.interceptors.onSendError(record, tp, e);

return new FutureFailure(e);

} catch (InterruptedException e) {

this.errors.record();

this.interceptors.onSendError(record, tp, e);

throw new InterruptException(e);

} catch (KafkaException e) {

this.errors.record();

this.interceptors.onSendError(record, tp, e);

throw e;

} catch (Exception e) {

// we notify interceptor about all exceptions, since onSend is

called before anything else in this method

this.interceptors.onSendError(record, tp, e);

throw e;

}

{code}

# TimeoutException in waiting for metadata update, what is your suggestion?

How should it be handled if not via ApiException callback? As you mentioned, we

are misusing this TimeoutException as idea was to use it only where replication

couldn't complete within the allowed time, so should we create a new exception

'ClientTimeoutException' to handle such scenarios, and also use the same in

waitOnMetadata() method ?

# Validation of message size is throwing RecordTooLargeException which extends

ApiException. In this case, you are correct to say that producer client is

throwing RecordTooLargeException without even interacting with server.

You've suggested 2 scenarios which can cause exceptions :

## *If the size of serialised uncompressed message is more than

maxRequestSize*: I'm not sure if we can estimate the size of message keeping

compression type in consideration. So, current implementation throws

RecordTooLargeException based on the ESTIMATE w/o keeping into account the

compression type. What is the expected behaviour in this case?

## *If the message size is bigger than the totalMemorySize or

memoryBufferSize* : Buffer pool would throw IllegalArgumentException when asked

for allocation. Should we just catch this exception, record it and throw it

back?

[~becket_qin] Can you please answer above queries and validate my

understanding? Apologies if I've misunderstood something as I am new to Kafka

community.

was (Author: aakashgupta96):

Hi [~becket_qin]

I am willing to take this ticket.

As of now till date, this is how exceptions are being handled in

kafkaProducer.send() method:

{code:java}

catch (ApiException e) {

log.debug("Exception occurred during message send:", e);

if (callback != null)

callback.onCompletion(null, e);

this.errors.record();

this.interceptors.onSendError(record, tp, e);

return new FutureFailure(e);

} catch (InterruptedException e) {

this.errors.record();

this.interceptors.onSendError(record, tp, e);

throw new InterruptException(e);

} catch (KafkaException e) {

this.errors.record();

this.interceptors.onSendError(record, tp, e);

throw e;

} catch (Exception e) {

// we notify interceptor about all exceptions, since onSend is

called before anything else in this method

this.interceptors.onSendError(record, tp, e);

throw e;

}

{code}

# TimeoutException in waiting for metadata update, what is your suggestion?

How should it be handled if not via ApiException callback? As you mentioned, we

are misusing this TimeoutException as idea was to use it only where replication

couldn't complete within the allowed time, so should we create a new exception

'ClientTimeoutException' to handle such scenarios, and also use the same in

waitOnMetadata() method ?

# Validation of message size is throwing RecordTooLargeException which extends

ApiException. In this case, you are correct to say that producer client is

throwing RecordTooLargeException without even interacting with server.

You've suggested 2 scenarios which can cause exceptions :

## *If the size of serialised uncompressed message is more than

maxRequestSize*: I'm not sure if we can estimate the size of message keeping

compression type in consideration. So, current implementation throws

RecordTooLargeException based on the ESTIMATE w/o keeping into account the

compression type. What is the expected behaviour in this case?

## *If the message size is bigger than the totalMemorySize or

[jira] [Commented] (KAFKA-2200) kafkaProducer.send() should not call callback.onCompletion()

[

https://issues.apache.org/jira/browse/KAFKA-2200?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188042#comment-17188042

]

Aakash Gupta commented on KAFKA-2200:

-

Hi [~becket_qin]

I am willing to take this ticket.

As of now till date, this is how exceptions are being handled in

kafkaProducer.send() method:

{code:java}

catch (ApiException e) {

log.debug("Exception occurred during message send:", e);

if (callback != null)

callback.onCompletion(null, e);

this.errors.record();

this.interceptors.onSendError(record, tp, e);

return new FutureFailure(e);

} catch (InterruptedException e) {

this.errors.record();

this.interceptors.onSendError(record, tp, e);

throw new InterruptException(e);

} catch (KafkaException e) {

this.errors.record();

this.interceptors.onSendError(record, tp, e);

throw e;

} catch (Exception e) {

// we notify interceptor about all exceptions, since onSend is

called before anything else in this method

this.interceptors.onSendError(record, tp, e);

throw e;

}

{code}

# TimeoutException in waiting for metadata update, what is your suggestion?

How should it be handled if not via ApiException callback? As you mentioned, we

are misusing this TimeoutException as idea was to use it only where replication

couldn't complete within the allowed time, so should we create a new exception

'ClientTimeoutException' to handle such scenarios, and also use the same in

waitOnMetadata() method ?

# Validation of message size is throwing RecordTooLargeException which extends

ApiException. In this case, you are correct to say that producer client is

throwing RecordTooLargeException without even interacting with server.

You've suggested 2 scenarios which can cause exceptions :

## *If the size of serialised uncompressed message is more than

maxRequestSize*: I'm not sure if we can estimate the size of message keeping

compression type in consideration. So, current implementation throws

RecordTooLargeException based on the ESTIMATE w/o keeping into account the

compression type. What is the expected behaviour in this case?

## *If the message size is bigger than the totalMemorySize or

memoryBufferSize* : **Buffer pool would throw IllegalArgumentException when

asked for allocation. Should we just catch this exception, record it and throw

it back?

[~becket_qin] Can you please answer above queries and validate my

understanding? Apologies if I've misunderstood something as I am new to Kafka

community.

> kafkaProducer.send() should not call callback.onCompletion()

>

>

> Key: KAFKA-2200

> URL: https://issues.apache.org/jira/browse/KAFKA-2200

> Project: Kafka

> Issue Type: Bug

> Components: clients

>Affects Versions: 0.10.1.0

>Reporter: Jiangjie Qin

>Priority: Major

> Labels: newbie

>

> KafkaProducer.send() should not call callback.onCompletion() because this

> might break the callback firing order.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

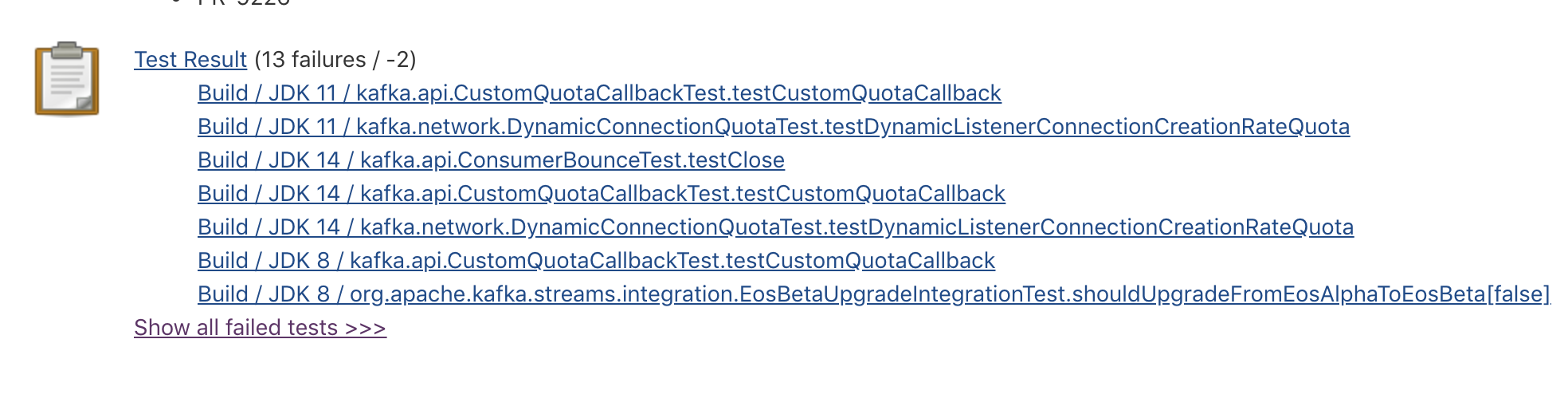

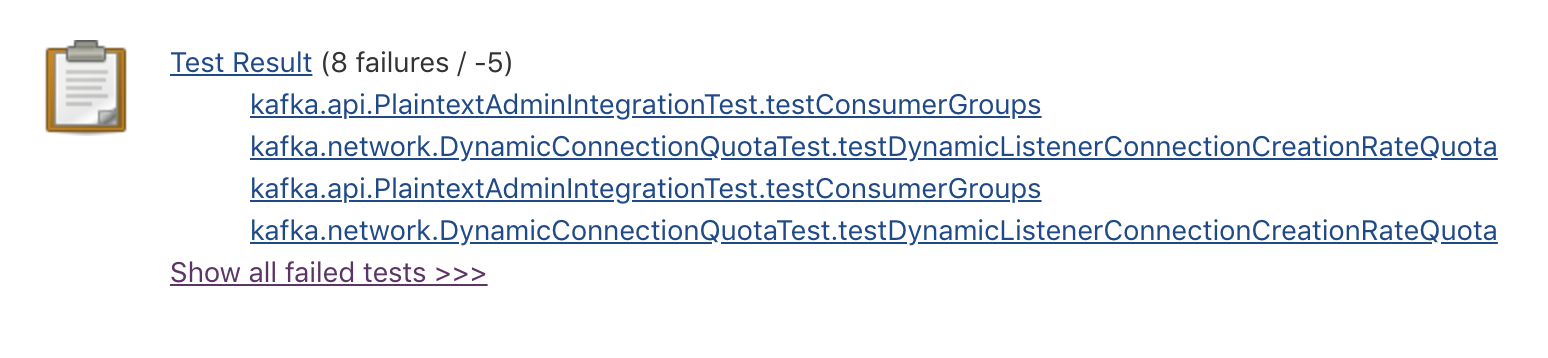

[GitHub] [kafka] mumrah commented on a change in pull request #9226: KAFKA-10444: Jenkinsfile testing

mumrah commented on a change in pull request #9226:

URL: https://github.com/apache/kafka/pull/9226#discussion_r480452574

##

File path: Jenkinsfile

##

@@ -0,0 +1,200 @@

+/*

+ *

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ *

+ */

+

+def setupGradle() {

+ // Delete gradle cache to workaround cache corruption bugs, see KAFKA-3167

+ dir('.gradle') {

+deleteDir()

+ }

+ sh './gradlew -version'

+}

+

+def doValidation() {

+ try {

+sh '''

+ ./gradlew -PscalaVersion=$SCALA_VERSION clean compileJava compileScala

compileTestJava compileTestScala \

+ spotlessScalaCheck checkstyleMain checkstyleTest spotbugsMain rat \

+ --profile --no-daemon --continue -PxmlSpotBugsReport=true \"$@\"

+'''

+ } catch(err) {

+error('Validation checks failed, aborting this build')

+ }

+}

+

+def doTest() {

+ try {

+sh '''

+ ./gradlew -PscalaVersion=$SCALA_VERSION unitTest integrationTest \

+ --profile --no-daemon --continue

-PtestLoggingEvents=started,passed,skipped,failed "$@"

+'''

+ } catch(err) {

+echo 'Some tests failed, marking this build UNSTABLE'

+currentBuild.result = 'UNSTABLE'

Review comment:

So, this seems to have caused us to lose which build had which results

in the summary.

Before we had:

Now we have:

I'll try moving the `junit` call inside the actual stage and see if that

helps

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] vvcephei commented on pull request #9039: KAFKA-5636: SlidingWindows (KIP-450)

vvcephei commented on pull request #9039: URL: https://github.com/apache/kafka/pull/9039#issuecomment-684074223 Since Jenkins PR builds are still not functioning, I've merged in trunk and verified this pull request locally before merging it. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] vvcephei merged pull request #9039: KAFKA-5636: SlidingWindows (KIP-450)

vvcephei merged pull request #9039: URL: https://github.com/apache/kafka/pull/9039 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] guozhangwang commented on a change in pull request #8834: KAFKA-10134: Enable heartbeat during PrepareRebalance and Depend On State For Poll Timeout

guozhangwang commented on a change in pull request #8834:

URL: https://github.com/apache/kafka/pull/8834#discussion_r480429496

##

File path:

clients/src/main/java/org/apache/kafka/clients/consumer/internals/AbstractCoordinator.java

##

@@ -528,7 +528,6 @@ public void onFailure(RuntimeException e) {

}

private void recordRebalanceFailure() {

-state = MemberState.UNJOINED;

Review comment:

Previously this function has two lines: update the state and record

sensors. Now that the first is called in the caller, this function becomes a

one-liner and hence not worthy anymore so I in-lined it.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] vvcephei commented on a change in pull request #9039: KAFKA-5636: SlidingWindows (KIP-450)

vvcephei commented on a change in pull request #9039:

URL: https://github.com/apache/kafka/pull/9039#discussion_r480429410

##

File path:

streams/src/main/java/org/apache/kafka/streams/kstream/internals/KStreamSlidingWindowAggregate.java

##

@@ -0,0 +1,391 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.streams.kstream.internals;

+

+import org.apache.kafka.clients.consumer.ConsumerRecord;

+import org.apache.kafka.common.metrics.Sensor;

+import org.apache.kafka.streams.KeyValue;

+import org.apache.kafka.streams.kstream.Aggregator;

+import org.apache.kafka.streams.kstream.Initializer;

+import org.apache.kafka.streams.kstream.Window;

+import org.apache.kafka.streams.kstream.Windowed;

+import org.apache.kafka.streams.kstream.SlidingWindows;

+import org.apache.kafka.streams.processor.AbstractProcessor;

+import org.apache.kafka.streams.processor.Processor;

+import org.apache.kafka.streams.processor.ProcessorContext;

+import org.apache.kafka.streams.processor.internals.InternalProcessorContext;

+import org.apache.kafka.streams.processor.internals.metrics.StreamsMetricsImpl;

+import org.apache.kafka.streams.state.KeyValueIterator;

+import org.apache.kafka.streams.state.TimestampedWindowStore;

+import org.apache.kafka.streams.state.ValueAndTimestamp;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+import java.util.HashSet;

+import static

org.apache.kafka.streams.processor.internals.metrics.TaskMetrics.droppedRecordsSensorOrLateRecordDropSensor;

+import static

org.apache.kafka.streams.processor.internals.metrics.TaskMetrics.droppedRecordsSensorOrSkippedRecordsSensor;

+import static org.apache.kafka.streams.state.ValueAndTimestamp.getValueOrNull;

+

+public class KStreamSlidingWindowAggregate implements

KStreamAggProcessorSupplier, V, Agg> {

+private final Logger log = LoggerFactory.getLogger(getClass());

+

+private final String storeName;

+private final SlidingWindows windows;

+private final Initializer initializer;

+private final Aggregator aggregator;

+

+private boolean sendOldValues = false;

+

+public KStreamSlidingWindowAggregate(final SlidingWindows windows,

+ final String storeName,

+ final Initializer initializer,

+ final Aggregator aggregator) {

+this.windows = windows;

+this.storeName = storeName;

+this.initializer = initializer;

+this.aggregator = aggregator;

+}

+

+@Override

+public Processor get() {

+return new KStreamSlidingWindowAggregateProcessor();

+}

+

+public SlidingWindows windows() {

+return windows;

+}

+

+@Override

+public void enableSendingOldValues() {

+sendOldValues = true;

+}

+

+private class KStreamSlidingWindowAggregateProcessor extends

AbstractProcessor {

+private TimestampedWindowStore windowStore;

+private TimestampedTupleForwarder, Agg> tupleForwarder;

+private StreamsMetricsImpl metrics;

+private InternalProcessorContext internalProcessorContext;

+private Sensor lateRecordDropSensor;

+private Sensor droppedRecordsSensor;

+private long observedStreamTime = ConsumerRecord.NO_TIMESTAMP;

+private boolean reverseIteratorImplemented = false;

+

+@SuppressWarnings("unchecked")

+@Override

+public void init(final ProcessorContext context) {

+super.init(context);

+internalProcessorContext = (InternalProcessorContext) context;

+metrics = internalProcessorContext.metrics();

+final String threadId = Thread.currentThread().getName();

+lateRecordDropSensor = droppedRecordsSensorOrLateRecordDropSensor(

+threadId,

+context.taskId().toString(),

+internalProcessorContext.currentNode().name(),

+metrics

+);

+//catch unsupported operation error

+droppedRecordsSensor =

droppedRecordsSensorOrSkippedRecordsSensor(threadId,

context.taskId().toString(), metrics);

+windowStore =

[GitHub] [kafka] guozhangwang commented on a change in pull request #8834: KAFKA-10134: Enable heartbeat during PrepareRebalance and Depend On State For Poll Timeout

guozhangwang commented on a change in pull request #8834:

URL: https://github.com/apache/kafka/pull/8834#discussion_r480425820

##

File path:

clients/src/main/java/org/apache/kafka/clients/consumer/internals/AbstractCoordinator.java

##

@@ -497,40 +501,18 @@ private synchronized void resetStateAndRejoin() {

joinFuture.addListener(new RequestFutureListener() {

@Override

public void onSuccess(ByteBuffer value) {

-// handle join completion in the callback so that the

callback will be invoked

Review comment:

Well I should say part of that (the enabling of the heartbeat thread) is

in JoinGroup response handler, while the rest (update metrics, etc) is in

SyncGroup response handler.

##

File path: core/src/main/scala/kafka/coordinator/group/GroupCoordinator.scala

##

@@ -639,7 +641,11 @@ class GroupCoordinator(val brokerId: Int,

responseCallback(Errors.UNKNOWN_MEMBER_ID)

case CompletingRebalance =>

-responseCallback(Errors.REBALANCE_IN_PROGRESS)

+ // consumers may start sending heartbeat after join-group

response, in which case

+ // we should treat them as normal hb request and reset the timer

+ val member = group.get(memberId)

Review comment:

It would return the error code before: that is because it does not

expect clients to send heartbeat before sending sync-group requests. Now it is

not the case any more.

##

File path: core/src/main/scala/kafka/coordinator/group/GroupCoordinator.scala

##

@@ -639,7 +641,11 @@ class GroupCoordinator(val brokerId: Int,

responseCallback(Errors.UNKNOWN_MEMBER_ID)

case CompletingRebalance =>

-responseCallback(Errors.REBALANCE_IN_PROGRESS)

Review comment:

I had a discussion with @hachikuji about this. I think logically it

should not return `REBALANCE_IN_PROGRESS` and clients in the future should

update its handling logic too, maybe after some releases where we can break

client-broker compatibility.

##

File path:

clients/src/main/java/org/apache/kafka/clients/consumer/internals/AbstractCoordinator.java

##

@@ -446,14 +453,15 @@ boolean joinGroupIfNeeded(final Timer timer) {

resetJoinGroupFuture();

needsJoinPrepare = true;

} else {

-log.info("Generation data was cleared by heartbeat thread.

Initiating rejoin.");

+log.info("Generation data was cleared by heartbeat thread

to {} and state is now {} before " +

+ "the rebalance callback is triggered, marking this

rebalance as failed and retry",

+ generation, state);

resetStateAndRejoin();

resetJoinGroupFuture();

-return false;

}

} else {

final RuntimeException exception = future.exception();

-log.info("Join group failed with {}", exception.toString());

+log.info("Rebalance failed with {}", exception.toString());

Review comment:

The reason I changed it is exactly that it may not always due to

join-group :) If sync-group failed, this could also be triggered.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (KAFKA-10453) Backport of PR-7781

[ https://issues.apache.org/jira/browse/KAFKA-10453?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188031#comment-17188031 ] Niketh Sabbineni commented on KAFKA-10453: -- Thank you! Let me test that out then. Thanks for your help. > Backport of PR-7781 > --- > > Key: KAFKA-10453 > URL: https://issues.apache.org/jira/browse/KAFKA-10453 > Project: Kafka > Issue Type: Wish > Components: clients >Affects Versions: 1.1.1 >Reporter: Niketh Sabbineni >Priority: Major > > We have been hitting this bug (with kafka 1.1.1) where the Producer takes > forever to load metadata. The issue seems to have been patched in master > [here|[https://github.com/apache/kafka/pull/7781]]. > Would you *recommend* a backport of that above change to 1.1? There are 7-8 > changes that need to be cherry picked. The other option is to upgrade to 2.5 > (which would be much more involved) -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] guozhangwang commented on a change in pull request #8834: KAFKA-10134: Enable heartbeat during PrepareRebalance and Depend On State For Poll Timeout

guozhangwang commented on a change in pull request #8834:

URL: https://github.com/apache/kafka/pull/8834#discussion_r476954744

##

File path:

clients/src/main/java/org/apache/kafka/clients/consumer/internals/AbstractCoordinator.java

##

@@ -917,17 +938,14 @@ private synchronized void resetGeneration() {

synchronized void resetGenerationOnResponseError(ApiKeys api, Errors

error) {

log.debug("Resetting generation after encountering {} from {} response

and requesting re-join", error, api);

-// only reset the state to un-joined when it is not already in

rebalancing

Review comment:

We do not need this check any more since when we are only resetting

generation if we see illegal generation or unknown member id, and in either

case we should no longer heartbeat

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (KAFKA-10453) Backport of PR-7781

[ https://issues.apache.org/jira/browse/KAFKA-10453?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188029#comment-17188029 ] Ismael Juma commented on KAFKA-10453: - Yes, it is. > Backport of PR-7781 > --- > > Key: KAFKA-10453 > URL: https://issues.apache.org/jira/browse/KAFKA-10453 > Project: Kafka > Issue Type: Wish > Components: clients >Affects Versions: 1.1.1 >Reporter: Niketh Sabbineni >Priority: Major > > We have been hitting this bug (with kafka 1.1.1) where the Producer takes > forever to load metadata. The issue seems to have been patched in master > [here|[https://github.com/apache/kafka/pull/7781]]. > Would you *recommend* a backport of that above change to 1.1? There are 7-8 > changes that need to be cherry picked. The other option is to upgrade to 2.5 > (which would be much more involved) -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Comment Edited] (KAFKA-10453) Backport of PR-7781

[ https://issues.apache.org/jira/browse/KAFKA-10453?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188028#comment-17188028 ] Niketh Sabbineni edited comment on KAFKA-10453 at 8/31/20, 10:07 PM: - [~ijuma] Thanks for your reply. Do you happen to know if kafka 2.5 client is compatible with kafka 1.1.1 core/server? I should have checked that before opening the issue. I am digging to check that. was (Author: niketh): [~ijuma] Thanks for your reply. Do you happen to know if kafka 2.5 client is compatible with kafka 1.1.1 core/server? I should have checked that before opening the issue. > Backport of PR-7781 > --- > > Key: KAFKA-10453 > URL: https://issues.apache.org/jira/browse/KAFKA-10453 > Project: Kafka > Issue Type: Wish > Components: clients >Affects Versions: 1.1.1 >Reporter: Niketh Sabbineni >Priority: Major > > We have been hitting this bug (with kafka 1.1.1) where the Producer takes > forever to load metadata. The issue seems to have been patched in master > [here|[https://github.com/apache/kafka/pull/7781]]. > Would you *recommend* a backport of that above change to 1.1? There are 7-8 > changes that need to be cherry picked. The other option is to upgrade to 2.5 > (which would be much more involved) -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10453) Backport of PR-7781

[ https://issues.apache.org/jira/browse/KAFKA-10453?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188028#comment-17188028 ] Niketh Sabbineni commented on KAFKA-10453: -- [~ijuma] Thanks for your reply. Do you happen to know if kafka 2.5 client is compatible with kafka 1.1.1 core/server? I should have checked that before opening the issue. > Backport of PR-7781 > --- > > Key: KAFKA-10453 > URL: https://issues.apache.org/jira/browse/KAFKA-10453 > Project: Kafka > Issue Type: Wish > Components: clients >Affects Versions: 1.1.1 >Reporter: Niketh Sabbineni >Priority: Major > > We have been hitting this bug (with kafka 1.1.1) where the Producer takes > forever to load metadata. The issue seems to have been patched in master > [here|[https://github.com/apache/kafka/pull/7781]]. > Would you *recommend* a backport of that above change to 1.1? There are 7-8 > changes that need to be cherry picked. The other option is to upgrade to 2.5 > (which would be much more involved) -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (KAFKA-10453) Backport of PR-7781

[ https://issues.apache.org/jira/browse/KAFKA-10453?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17188024#comment-17188024 ] Ismael Juma commented on KAFKA-10453: - Kafka clients have no ZK dependency, so you can upgrade them without changing anything else. > Backport of PR-7781 > --- > > Key: KAFKA-10453 > URL: https://issues.apache.org/jira/browse/KAFKA-10453 > Project: Kafka > Issue Type: Wish > Components: clients >Affects Versions: 1.1.1 >Reporter: Niketh Sabbineni >Priority: Major > > We have been hitting this bug (with kafka 1.1.1) where the Producer takes > forever to load metadata. The issue seems to have been patched in master > [here|[https://github.com/apache/kafka/pull/7781]]. > Would you *recommend* a backport of that above change to 1.1? There are 7-8 > changes that need to be cherry picked. The other option is to upgrade to 2.5 > (which would be much more involved) -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] tinawenqiao commented on pull request #9235: KAFKA-10449: Add some important parameter desc in connect-distributed.properties

tinawenqiao commented on pull request #9235: URL: https://github.com/apache/kafka/pull/9235#issuecomment-684064832 In WokerConfig.java we found that REST_HOST_NAME_CONFIG(rest.host.name) and REST_PORT_CONFIG(rest.port) were deprecated. And some new configuration parameters are introduced such as LISTENERS_CONFIG(listeners), REST_ADVERTISED_LISTENER_CONFIG(rest.advertised.listener),ADMIN_LISTENERS_CONFIG(admin.listeners) but not list in the sample conf file. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] tinawenqiao opened a new pull request #9235: KAFKA-10449: Add some important parameter description in connect-distributed.prope…