[GitHub] [kafka] cmccabe commented on a change in pull request #10564: MINOR: clean up some replication code

cmccabe commented on a change in pull request #10564:

URL: https://github.com/apache/kafka/pull/10564#discussion_r618131621

##

File path:

metadata/src/main/java/org/apache/kafka/controller/ReplicationControlManager.java

##

@@ -957,22 +890,27 @@ ApiError electLeader(String topic, int partitionId,

boolean unclean,

return ControllerResult.of(records, reply);

}

-int bestLeader(int[] replicas, int[] isr, boolean unclean) {

+static boolean isGoodLeader(int[] isr, int leader) {

+return Replicas.contains(isr, leader);

+}

+

+static int bestLeader(int[] replicas, int[] isr, boolean uncleanOk,

Review comment:

We use NO_LEADER in a lot of different places in the code, so I think

it's reasonable to use it here. it is actually a valid value for the leader of

the partition, so translating to and from OptionalInt would be awkward, I think.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] wenbingshen commented on pull request #10558: KAFKA-12684: Fix noop set is incorrectly replaced with succeeded set from LeaderElectionCommand

wenbingshen commented on pull request #10558: URL: https://github.com/apache/kafka/pull/10558#issuecomment-824593661 > @wenbingshen感谢您的更新代码。经过质量检查合格后,我将其合并。 Thank you very much for your guidance and help. :) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] cmccabe commented on a change in pull request #10564: MINOR: clean up some replication code

cmccabe commented on a change in pull request #10564:

URL: https://github.com/apache/kafka/pull/10564#discussion_r618132526

##

File path:

metadata/src/main/java/org/apache/kafka/controller/ReplicationControlManager.java

##

@@ -356,7 +369,8 @@ public void replay(PartitionChangeRecord record) {

brokersToIsrs.update(record.topicId(), record.partitionId(),

prevPartitionInfo.isr, newPartitionInfo.isr,

prevPartitionInfo.leader,

newPartitionInfo.leader);

-log.debug("Applied ISR change record: {}", record.toString());

+String topicPart = topicInfo.name + "-" + record.partitionId();

Review comment:

sorry. added.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] cmccabe commented on a change in pull request #10564: MINOR: clean up some replication code

cmccabe commented on a change in pull request #10564:

URL: https://github.com/apache/kafka/pull/10564#discussion_r618133367

##

File path:

metadata/src/main/java/org/apache/kafka/controller/ReplicationControlManager.java

##

@@ -172,47 +174,54 @@ String diff(PartitionControlInfo prev) {

StringBuilder builder = new StringBuilder();

String prefix = "";

if (!Arrays.equals(replicas, prev.replicas)) {

-

builder.append(prefix).append("oldReplicas=").append(Arrays.toString(prev.replicas));

+builder.append(prefix).append("replicas: ").

+append(Arrays.toString(prev.replicas)).

+append(" -> ").append(Arrays.toString(replicas));

prefix = ", ";

-

builder.append(prefix).append("newReplicas=").append(Arrays.toString(replicas));

}

if (!Arrays.equals(isr, prev.isr)) {

-

builder.append(prefix).append("oldIsr=").append(Arrays.toString(prev.isr));

+builder.append(prefix).append("isr: ").

+append(Arrays.toString(prev.isr)).

+append(" -> ").append(Arrays.toString(isr));

prefix = ", ";

-

builder.append(prefix).append("newIsr=").append(Arrays.toString(isr));

}

if (!Arrays.equals(removingReplicas, prev.removingReplicas)) {

-builder.append(prefix).append("oldRemovingReplicas=").

-append(Arrays.toString(prev.removingReplicas));

+builder.append(prefix).append("removingReplicas: ").

+append(Arrays.toString(prev.removingReplicas)).

+append(" -> ").append(Arrays.toString(removingReplicas));

prefix = ", ";

-builder.append(prefix).append("newRemovingReplicas=").

-append(Arrays.toString(removingReplicas));

}

if (!Arrays.equals(addingReplicas, prev.addingReplicas)) {

-builder.append(prefix).append("oldAddingReplicas=").

-append(Arrays.toString(prev.addingReplicas));

+builder.append(prefix).append("addingReplicas: ").

+append(Arrays.toString(prev.addingReplicas)).

+append(" -> ").append(Arrays.toString(addingReplicas));

prefix = ", ";

-builder.append(prefix).append("newAddingReplicas=").

-append(Arrays.toString(addingReplicas));

}

if (leader != prev.leader) {

-

builder.append(prefix).append("oldLeader=").append(prev.leader);

+builder.append(prefix).append("leader: ").

+append(prev.leader).append(" -> ").append(leader);

prefix = ", ";

-builder.append(prefix).append("newLeader=").append(leader);

}

if (leaderEpoch != prev.leaderEpoch) {

-

builder.append(prefix).append("oldLeaderEpoch=").append(prev.leaderEpoch);

+builder.append(prefix).append("leaderEpoch: ").

+append(prev.leaderEpoch).append(" ->

").append(leaderEpoch);

prefix = ", ";

-

builder.append(prefix).append("newLeaderEpoch=").append(leaderEpoch);

}

if (partitionEpoch != prev.partitionEpoch) {

-

builder.append(prefix).append("oldPartitionEpoch=").append(prev.partitionEpoch);

-prefix = ", ";

-

builder.append(prefix).append("newPartitionEpoch=").append(partitionEpoch);

+builder.append(prefix).append("partitionEpoch: ").

+append(prev.partitionEpoch).append(" ->

").append(partitionEpoch);

}

return builder.toString();

}

+void maybeLogPartitionChange(Logger log, String description,

PartitionControlInfo prev) {

+if (!electionWasClean(leader, prev.isr)) {

+log.info("UNCLEAN partition change for {}: {}", description,

diff(prev));

+} else if (log.isDebugEnabled()) {

+log.debug("partition change for {}: {}", description,

diff(prev));

Review comment:

I don't think this will be practical as the number of partitions grows.

A 10-node cluster with a million partitions could have tens of thousands of

partition changes when a node goes away or comes back.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] cmccabe commented on a change in pull request #10564: MINOR: clean up some replication code

cmccabe commented on a change in pull request #10564:

URL: https://github.com/apache/kafka/pull/10564#discussion_r618133367

##

File path:

metadata/src/main/java/org/apache/kafka/controller/ReplicationControlManager.java

##

@@ -172,47 +174,54 @@ String diff(PartitionControlInfo prev) {

StringBuilder builder = new StringBuilder();

String prefix = "";

if (!Arrays.equals(replicas, prev.replicas)) {

-

builder.append(prefix).append("oldReplicas=").append(Arrays.toString(prev.replicas));

+builder.append(prefix).append("replicas: ").

+append(Arrays.toString(prev.replicas)).

+append(" -> ").append(Arrays.toString(replicas));

prefix = ", ";

-

builder.append(prefix).append("newReplicas=").append(Arrays.toString(replicas));

}

if (!Arrays.equals(isr, prev.isr)) {

-

builder.append(prefix).append("oldIsr=").append(Arrays.toString(prev.isr));

+builder.append(prefix).append("isr: ").

+append(Arrays.toString(prev.isr)).

+append(" -> ").append(Arrays.toString(isr));

prefix = ", ";

-

builder.append(prefix).append("newIsr=").append(Arrays.toString(isr));

}

if (!Arrays.equals(removingReplicas, prev.removingReplicas)) {

-builder.append(prefix).append("oldRemovingReplicas=").

-append(Arrays.toString(prev.removingReplicas));

+builder.append(prefix).append("removingReplicas: ").

+append(Arrays.toString(prev.removingReplicas)).

+append(" -> ").append(Arrays.toString(removingReplicas));

prefix = ", ";

-builder.append(prefix).append("newRemovingReplicas=").

-append(Arrays.toString(removingReplicas));

}

if (!Arrays.equals(addingReplicas, prev.addingReplicas)) {

-builder.append(prefix).append("oldAddingReplicas=").

-append(Arrays.toString(prev.addingReplicas));

+builder.append(prefix).append("addingReplicas: ").

+append(Arrays.toString(prev.addingReplicas)).

+append(" -> ").append(Arrays.toString(addingReplicas));

prefix = ", ";

-builder.append(prefix).append("newAddingReplicas=").

-append(Arrays.toString(addingReplicas));

}

if (leader != prev.leader) {

-

builder.append(prefix).append("oldLeader=").append(prev.leader);

+builder.append(prefix).append("leader: ").

+append(prev.leader).append(" -> ").append(leader);

prefix = ", ";

-builder.append(prefix).append("newLeader=").append(leader);

}

if (leaderEpoch != prev.leaderEpoch) {

-

builder.append(prefix).append("oldLeaderEpoch=").append(prev.leaderEpoch);

+builder.append(prefix).append("leaderEpoch: ").

+append(prev.leaderEpoch).append(" ->

").append(leaderEpoch);

prefix = ", ";

-

builder.append(prefix).append("newLeaderEpoch=").append(leaderEpoch);

}

if (partitionEpoch != prev.partitionEpoch) {

-

builder.append(prefix).append("oldPartitionEpoch=").append(prev.partitionEpoch);

-prefix = ", ";

-

builder.append(prefix).append("newPartitionEpoch=").append(partitionEpoch);

+builder.append(prefix).append("partitionEpoch: ").

+append(prev.partitionEpoch).append(" ->

").append(partitionEpoch);

}

return builder.toString();

}

+void maybeLogPartitionChange(Logger log, String description,

PartitionControlInfo prev) {

+if (!electionWasClean(leader, prev.isr)) {

+log.info("UNCLEAN partition change for {}: {}", description,

diff(prev));

+} else if (log.isDebugEnabled()) {

+log.debug("partition change for {}: {}", description,

diff(prev));

Review comment:

I don't think this will be practical as the number of partitions grows.

A 10-node cluster with a million partitions could have hundreds of thousands of

partition changes when a node goes away or comes back.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] cmccabe commented on a change in pull request #10564: MINOR: clean up some replication code

cmccabe commented on a change in pull request #10564:

URL: https://github.com/apache/kafka/pull/10564#discussion_r618135556

##

File path:

metadata/src/main/java/org/apache/kafka/controller/ReplicationControlManager.java

##

@@ -1119,6 +1057,83 @@ void validateManualPartitionAssignment(List

assignment,

}

}

+/**

+ * Iterate over a sequence of partitions and generate ISR changes and/or

leader

+ * changes if necessary.

+ *

+ * @param context A human-readable context string used in log4j

logging.

+ * @param brokerToRemoveNO_LEADER if no broker is being removed; the

ID of the

+ * broker to remove from the ISR and leadership,

otherwise.

+ * @param brokerToAdd NO_LEADER if no broker is being added; the ID

of the

+ * broker which is now eligible to be a leader,

otherwise.

+ * @param records A list of records which we will append to.

+ * @param iterator The iterator containing the partitions to

examine.

+ */

+void generateLeaderAndIsrUpdates(String context,

+ int brokerToRemove,

+ int brokerToAdd,

+ List records,

+ Iterator iterator) {

+int oldSize = records.size();

+Function isAcceptableLeader =

+r -> r == brokerToAdd || clusterControl.unfenced(r);

Review comment:

In the case of an unclean leader election, I suppose we do need to

consider brokerToRemove, since we'll want to explicitly exclude it. Good catch.

In general the code will work if both brokerToRemove and brokerToAdd are

both set, although we don't plan to do this.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] cmccabe commented on a change in pull request #10564: MINOR: clean up some replication code

cmccabe commented on a change in pull request #10564:

URL: https://github.com/apache/kafka/pull/10564#discussion_r618138346

##

File path:

metadata/src/main/java/org/apache/kafka/controller/ReplicationControlManager.java

##

@@ -1119,6 +1057,83 @@ void validateManualPartitionAssignment(List

assignment,

}

}

+/**

+ * Iterate over a sequence of partitions and generate ISR changes and/or

leader

+ * changes if necessary.

+ *

+ * @param context A human-readable context string used in log4j

logging.

+ * @param brokerToRemoveNO_LEADER if no broker is being removed; the

ID of the

+ * broker to remove from the ISR and leadership,

otherwise.

+ * @param brokerToAdd NO_LEADER if no broker is being added; the ID

of the

+ * broker which is now eligible to be a leader,

otherwise.

+ * @param records A list of records which we will append to.

+ * @param iterator The iterator containing the partitions to

examine.

+ */

+void generateLeaderAndIsrUpdates(String context,

+ int brokerToRemove,

+ int brokerToAdd,

+ List records,

+ Iterator iterator) {

+int oldSize = records.size();

+Function isAcceptableLeader =

+r -> r == brokerToAdd || clusterControl.unfenced(r);

+while (iterator.hasNext()) {

+TopicIdPartition topicIdPart = iterator.next();

+TopicControlInfo topic = topics.get(topicIdPart.topicId());

+if (topic == null) {

+throw new RuntimeException("Topic ID " + topicIdPart.topicId()

+

+" existed in isrMembers, but not in the topics map.");

+}

+PartitionControlInfo partition =

topic.parts.get(topicIdPart.partitionId());

+if (partition == null) {

+throw new RuntimeException("Partition " + topicIdPart +

+" existed in isrMembers, but not in the partitions map.");

+}

+int[] newIsr = Replicas.copyWithout(partition.isr, brokerToRemove);

+int newLeader;

+if (isGoodLeader(newIsr, partition.leader)) {

+// If the current leader is good, don't change.

+newLeader = partition.leader;

+} else {

+// Choose a new leader.

+boolean uncleanOk =

configurationControl.uncleanLeaderElectionEnabledForTopic(topic.name);

+newLeader = bestLeader(partition.replicas, newIsr, uncleanOk,

isAcceptableLeader);

Review comment:

That will block the controlled shutdown from finishing in many cases.

Is the intention to allow for a clean leader election later on if the other

replicas catch up?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] cmccabe commented on a change in pull request #10564: MINOR: clean up some replication code

cmccabe commented on a change in pull request #10564:

URL: https://github.com/apache/kafka/pull/10564#discussion_r618138592

##

File path:

metadata/src/main/java/org/apache/kafka/controller/ReplicationControlManager.java

##

@@ -957,22 +890,27 @@ ApiError electLeader(String topic, int partitionId,

boolean unclean,

return ControllerResult.of(records, reply);

}

-int bestLeader(int[] replicas, int[] isr, boolean unclean) {

+static boolean isGoodLeader(int[] isr, int leader) {

+return Replicas.contains(isr, leader);

+}

+

+static int bestLeader(int[] replicas, int[] isr, boolean uncleanOk,

+ Function isAcceptableLeader) {

+int bestUnclean = NO_LEADER;

for (int i = 0; i < replicas.length; i++) {

int replica = replicas[i];

-if (Replicas.contains(isr, replica)) {

-return replica;

-}

-}

-if (unclean) {

-for (int i = 0; i < replicas.length; i++) {

-int replica = replicas[i];

-if (clusterControl.unfenced(replica)) {

+if (isAcceptableLeader.apply(replica)) {

+if (bestUnclean == NO_LEADER) bestUnclean = replica;

+if (Replicas.contains(isr, replica)) {

return replica;

}

}

}

-return NO_LEADER;

+return uncleanOk ? bestUnclean : NO_LEADER;

+}

+

+static boolean electionWasClean(int newLeader, int[] prevIsr) {

Review comment:

I will just call it isr.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] showuon commented on a change in pull request #9627: KAFKA-10746: Change to Warn logs when necessary to notify users

showuon commented on a change in pull request #9627:

URL: https://github.com/apache/kafka/pull/9627#discussion_r618158921

##

File path:

connect/runtime/src/main/java/org/apache/kafka/connect/runtime/distributed/WorkerGroupMember.java

##

@@ -203,8 +203,8 @@ public void requestRejoin() {

coordinator.requestRejoin();

}

-public void maybeLeaveGroup(String leaveReason) {

-coordinator.maybeLeaveGroup(leaveReason);

+public void maybeLeaveGroup(String leaveReason, boolean shouldWarn) {

Review comment:

Nice suggestion!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] showuon commented on a change in pull request #9627: KAFKA-10746: Change to Warn logs when necessary to notify users

showuon commented on a change in pull request #9627:

URL: https://github.com/apache/kafka/pull/9627#discussion_r618159827

##

File path:

clients/src/main/java/org/apache/kafka/clients/consumer/internals/AbstractCoordinator.java

##

@@ -1001,9 +1001,14 @@ protected void close(Timer timer) {

}

/**

+ * Leave the group. This method also sends LeaveGroupRequest and log

{@code leaveReason} if this is dynamic members

+ * or unknown coordinator or state is not UNJOINED or this generation has

a valid member id.

+ *

+ * @param leaveReason the reason to leave the group for logging

+ * @param shouldWarn should log as WARN level or INFO

* @throws KafkaException if the rebalance callback throws exception

*/

-public synchronized RequestFuture maybeLeaveGroup(String

leaveReason) {

+public synchronized RequestFuture maybeLeaveGroup(String

leaveReason, boolean shouldWarn) throws KafkaException {

Review comment:

Cool! And, it cannot change to `protected` method since we used this

method in `KafkaConsumer`, which is in different package.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] showuon commented on pull request #9627: KAFKA-10746: Change to Warn logs when necessary to notify users

showuon commented on pull request #9627: URL: https://github.com/apache/kafka/pull/9627#issuecomment-824620099 @chia7712 , thanks for the good suggestion! You make the change simpler!! :) Please help check again. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (KAFKA-12708) Rewrite org.apache.kafka.test.Microbenchmarks by JMH

Chia-Ping Tsai created KAFKA-12708: -- Summary: Rewrite org.apache.kafka.test.Microbenchmarks by JMH Key: KAFKA-12708 URL: https://issues.apache.org/jira/browse/KAFKA-12708 Project: Kafka Issue Type: Task Reporter: Chia-Ping Tsai The benchmark code is a bit obsolete and it would be better to rewrite it by JMH -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (KAFKA-12528) kafka-configs.sh does not work while changing the sasl jaas configurations.

[

https://issues.apache.org/jira/browse/KAFKA-12528?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Chia-Ping Tsai resolved KAFKA-12528.

Resolution: Duplicate

see KAFKA-12530

> kafka-configs.sh does not work while changing the sasl jaas configurations.

> ---

>

> Key: KAFKA-12528

> URL: https://issues.apache.org/jira/browse/KAFKA-12528

> Project: Kafka

> Issue Type: Bug

> Components: admin, core

>Reporter: kaushik srinivas

>Priority: Major

>

> We are trying to modify the sasl jaas configurations for the kafka broker

> runtime using the dynamic config update functionality using the

> kafka-configs.sh script. But we are unable to get it working.

> Below is our command:

> ./kafka-configs.sh --bootstrap-server localhost:9092 --entity-type brokers

> --entity-name 59 --alter --add-config 'sasl.jaas.config=KafkaServer \{\n

> org.apache.kafka.common.security.plain.PlainLoginModule required \n

> username=\"test\" \n password=\"test\"; \n };'

>

> command is exiting with error:

> requirement failed: Invalid entity config: all configs to be added must be in

> the format "key=val".

>

> we also tried below format as well:

> kafka-configs.sh --bootstrap-server localhost:9092 --entity-type brokers

> --entity-name 59 --alter --add-config

> 'sasl.jaas.config=[username=test,password=test]'

> command does not return but the kafka broker logs prints the below error

> messages.

> org.apache.kafka.common.security.authenticator.SaslServerAuthenticator - Set

> SASL server state to FAILED during authentication"}}

> {"type":"log", "host":"kf-kaudynamic-0", "level":"INFO",

> "neid":"kafka-cfd5ccf2af7f47868e83471a5b603408", "system":"kafka",

> "time":"2021-03-23T08:29:00.946", "timezone":"UTC",

> "log":\{"message":"data-plane-kafka-network-thread-1001-ListenerName(SASL_PLAINTEXT)-SASL_PLAINTEXT-2

> - org.apache.kafka.common.network.Selector - [SocketServer brokerId=1001]

> Failed authentication with /127.0.0.1 (Unexpected Kafka request of type

> METADATA during SASL handshake.)"}}

>

> 1. If one has SASL enabled and with a single listener, how are we supposed to

> change the sasl credentials using this command ?

> 2. can anyone point us out to some example commands for modifying the sasl

> jaas configurations ?

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Resolved] (KAFKA-12529) kafka-configs.sh does not work while changing the sasl jaas configurations.

[

https://issues.apache.org/jira/browse/KAFKA-12529?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Chia-Ping Tsai resolved KAFKA-12529.

Resolution: Duplicate

see KAFKA-12530

> kafka-configs.sh does not work while changing the sasl jaas configurations.

> ---

>

> Key: KAFKA-12529

> URL: https://issues.apache.org/jira/browse/KAFKA-12529

> Project: Kafka

> Issue Type: Bug

>Reporter: kaushik srinivas

>Priority: Major

>

> We are trying to use kafka-configs script to modify the sasl jaas

> configurations, but unable to do so.

> Command used:

> ./kafka-configs.sh --bootstrap-server localhost:9092 --entity-type brokers

> --entity-name 59 --alter --add-config 'sasl.jaas.config=KafkaServer \{\n

> org.apache.kafka.common.security.plain.PlainLoginModule required \n

> username=\"test\" \n password=\"test\"; \n };'

> error:

> requirement failed: Invalid entity config: all configs to be added must be in

> the format "key=val".

> command 2:

> kafka-configs.sh --bootstrap-server localhost:9092 --entity-type brokers

> --entity-name 59 --alter --add-config

> 'sasl.jaas.config=[username=test,password=test]'

> output:

> command does not return , but kafka broker logs below error:

> DEBUG", "neid":"kafka-cfd5ccf2af7f47868e83471a5b603408", "system":"kafka",

> "time":"2021-03-23T08:29:00.946", "timezone":"UTC",

> "log":\{"message":"data-plane-kafka-network-thread-1001-ListenerName(SASL_PLAINTEXT)-SASL_PLAINTEXT-2

> - org.apache.kafka.common.security.authenticator.SaslServerAuthenticator -

> Set SASL server state to FAILED during authentication"}}

> {"type":"log", "host":"kf-kaudynamic-0", "level":"INFO",

> "neid":"kafka-cfd5ccf2af7f47868e83471a5b603408", "system":"kafka",

> "time":"2021-03-23T08:29:00.946", "timezone":"UTC",

> "log":\{"message":"data-plane-kafka-network-thread-1001-ListenerName(SASL_PLAINTEXT)-SASL_PLAINTEXT-2

> - org.apache.kafka.common.network.Selector - [SocketServer brokerId=1001]

> Failed authentication with /127.0.0.1 (Unexpected Kafka request of type

> METADATA during SASL handshake.)"}}

> We have below issues:

> 1. If one installs kafka broker with SASL mechanism and wants to change the

> SASL jaas config via kafka-configs scripts, how is it supposed to be done ?

> does kafka-configs needs client credentials to do the same ?

> 2. Can anyone point us to example commands of kafka-configs to alter the

> sasl.jaas.config property of kafka broker. We do not see any documentation or

> examples for the same.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Resolved] (KAFKA-12371) MirrorMaker 2.0 documentation is incorrect

[ https://issues.apache.org/jira/browse/KAFKA-12371?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Chia-Ping Tsai resolved KAFKA-12371. Resolution: Duplicate > MirrorMaker 2.0 documentation is incorrect > -- > > Key: KAFKA-12371 > URL: https://issues.apache.org/jira/browse/KAFKA-12371 > Project: Kafka > Issue Type: Improvement > Components: docs, documentation >Affects Versions: 2.7.0 >Reporter: Scott Kirkpatrick >Priority: Minor > > There are a few places in the official MirrorMaker 2.0 docs that are either > confusing or incorrect. Here are a few examples I've found: > The documentation for the 'sync.group.offsets.enabled' config states that > it's enabled by default > [here|https://github.com/apache/kafka-site/blob/61f4707381c369a98a7a77e1a7c3a11d5983909c/27/ops.html#L802], > but the actual source code indicates that it's disabled by default > [here|https://github.com/apache/kafka/blob/f75efb96fae99a22eb54b5d0ef4e23b28fe8cd2d/connect/mirror/src/main/java/org/apache/kafka/connect/mirror/MirrorConnectorConfig.java#L185]. > I'm unsure if the intent is to have it enabled or disabled by default. > There are also some numerical typos, > [here|https://github.com/apache/kafka-site/blob/61f4707381c369a98a7a77e1a7c3a11d5983909c/27/ops.html#L791] > and > [here|https://github.com/apache/kafka-site/blob/61f4707381c369a98a7a77e1a7c3a11d5983909c/27/ops.html#L793]. > These lines state that the default is 6000 seconds (and incorrectly that > it's equal to 10 minutes), but the actual default is 600 seconds, shown > [here|https://github.com/apache/kafka/blob/f75efb96fae99a22eb54b5d0ef4e23b28fe8cd2d/connect/mirror/src/main/java/org/apache/kafka/connect/mirror/MirrorConnectorConfig.java#L145] > and > [here|https://github.com/apache/kafka/blob/f75efb96fae99a22eb54b5d0ef4e23b28fe8cd2d/connect/mirror/src/main/java/org/apache/kafka/connect/mirror/MirrorConnectorConfig.java#L152] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] chia7712 commented on pull request #10547: KAFKA-12284: increase request timeout to make tests reliable

chia7712 commented on pull request #10547: URL: https://github.com/apache/kafka/pull/10547#issuecomment-824640807 @showuon Could you merge trunk to trigger QA again? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] showuon commented on pull request #10547: KAFKA-12284: increase request timeout to make tests reliable

showuon commented on pull request #10547: URL: https://github.com/apache/kafka/pull/10547#issuecomment-824644809 Done. Let's wait and see :) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

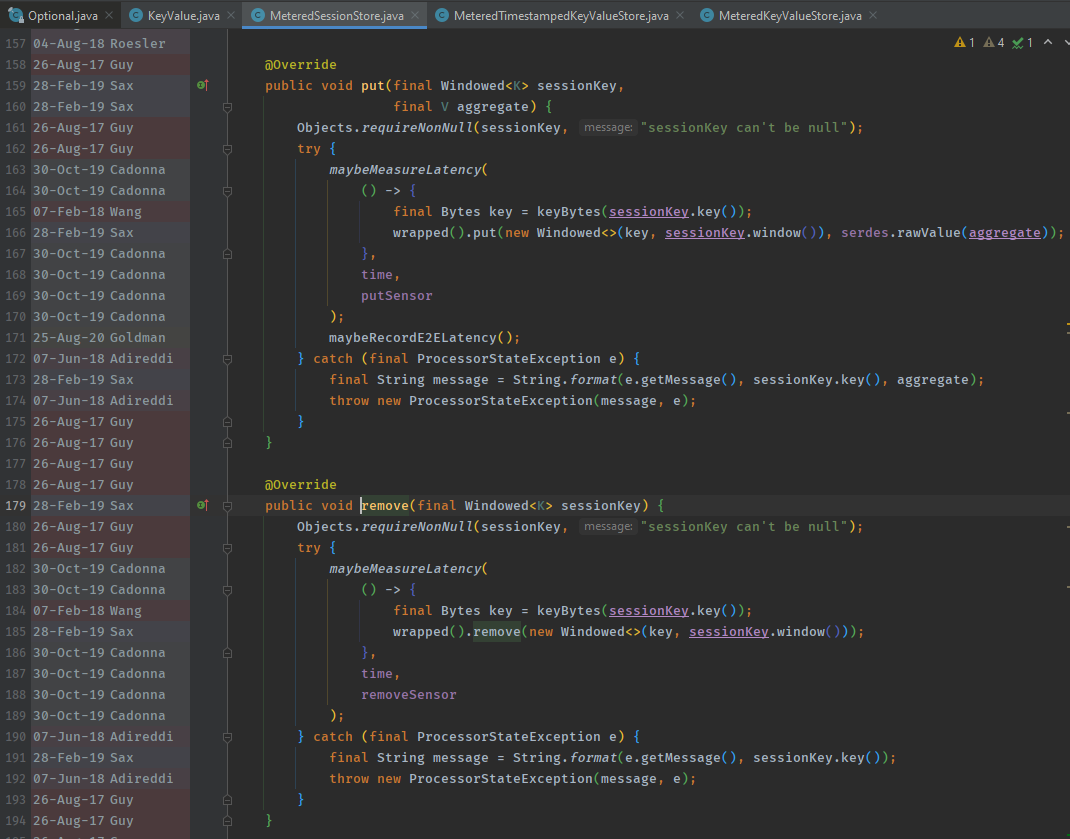

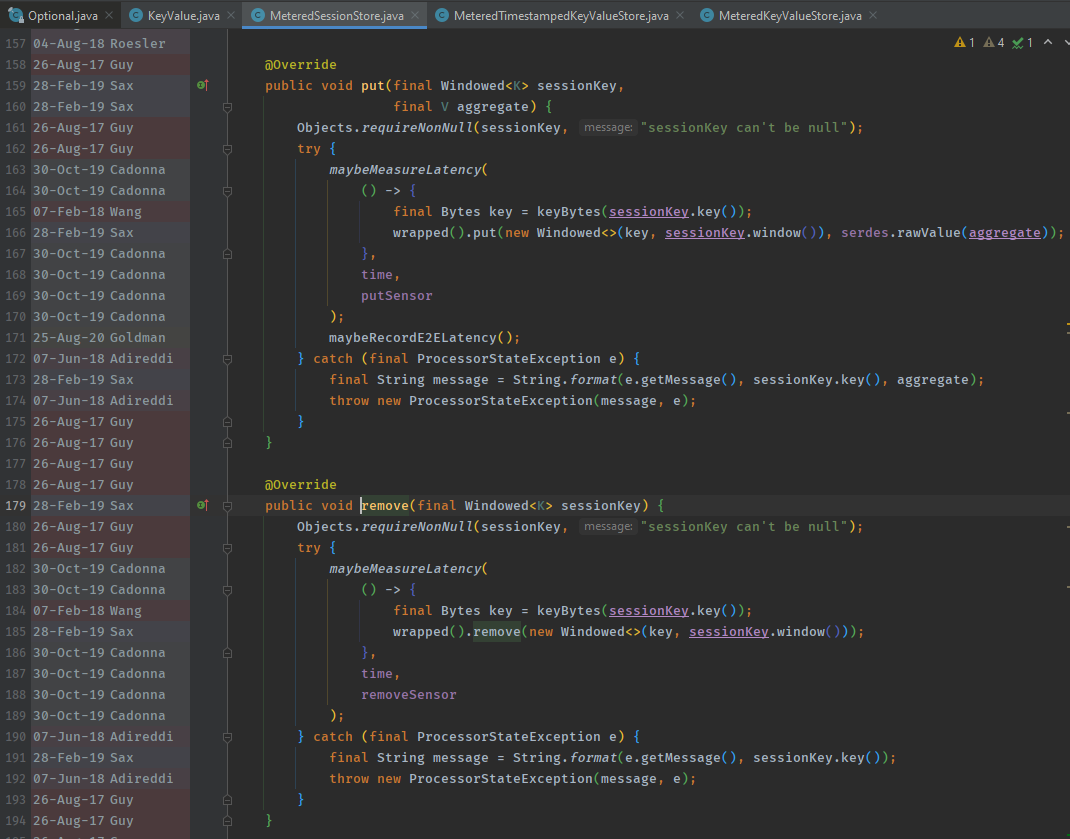

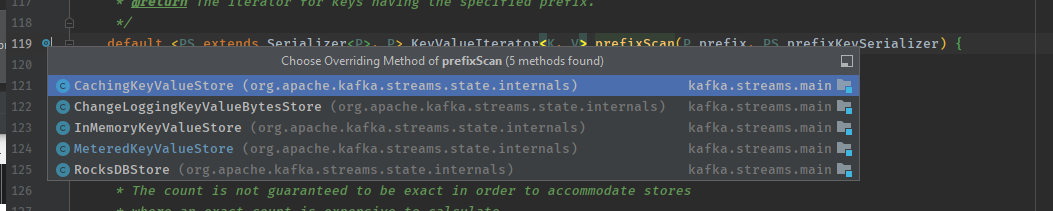

[GitHub] [kafka] cadonna commented on pull request #10548: KAFKA-12396 added a nullcheck before trying to retrieve a key

cadonna commented on pull request #10548:

URL: https://github.com/apache/kafka/pull/10548#issuecomment-824665014

>

> * prefixScan() (prefix should not be `null` either I guess? \cc

@guozhangwang @cadonna

>

Yes! Actually, the implementations in `RocksDBStore` and

`InMemoryKeyValueStore` already have a `null` check.

```

public , P> KeyValueIterator

prefixScan(final P prefix,

final PS prefixKeySerializer) {

Objects.requireNonNull(prefix, "prefix cannot be null");

Objects.requireNonNull(prefixKeySerializer, "prefixKeySerializer

cannot be null");

```

I think it would make sense to move them to the `MeteredKeyValueStore`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] satishd commented on a change in pull request #10271: KAFKA-12429: Added serdes for the default implementation of RLMM based on an internal topic as storage.

satishd commented on a change in pull request #10271:

URL: https://github.com/apache/kafka/pull/10271#discussion_r618221025

##

File path:

storage/src/main/java/org/apache/kafka/server/log/remote/metadata/storage/serialization/AbstractApiMessageAndVersionSerde.java

##

@@ -0,0 +1,77 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.server.log.remote.metadata.storage.serialization;

+

+import org.apache.kafka.common.protocol.ApiMessage;

+import org.apache.kafka.common.protocol.ByteBufferAccessor;

+import org.apache.kafka.common.protocol.ObjectSerializationCache;

+import org.apache.kafka.metadata.ApiMessageAndVersion;

+

+import java.nio.ByteBuffer;

+

+/**

+ * This class provides serialization/deserialization of {@code

ApiMessageAndVersion}.

+ *

+ * Implementors need to extend this class and implement {@link

#apiMessageFor(short)} method to return a respective

+ * {@code ApiMessage} for the given {@code apiKey}. This is required to

deserialize the bytes to build the respective

+ * {@code ApiMessage} instance.

+ */

+public abstract class AbstractApiMessageAndVersionSerde {

+

+public byte[] serialize(ApiMessageAndVersion messageAndVersion) {

+ObjectSerializationCache cache = new ObjectSerializationCache();

+short version = messageAndVersion.version();

+ApiMessage message = messageAndVersion.message();

+

+// Add header containing apiKey and apiVersion,

+// headerSize is 1 byte for apiKey and 1 byte for apiVersion

+int headerSize = 1 + 1;

+int messageSize = message.size(cache, version);

+ByteBufferAccessor writable = new

ByteBufferAccessor(ByteBuffer.allocate(headerSize + messageSize));

+

+// Write apiKey and version

+writable.writeUnsignedVarint(message.apiKey());

Review comment:

It adds one more indirection but it is good to have a standardized

serialization mechanism for different metadata logs.

We can introduce `AbsatrctMetadataRecordSerde` which is mostly the current

`MetadataRecordSerde` excluding RAFT related `ApiMessage` generation or a given

`apiKey`. I prefer not to share the `apiKey `space across different modules

but they can implement their own serde derived from

`AbstractMetadataRecordSerde` by providing `ApiMessage` instances for the

respective `apiKey`s.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] satishd commented on a change in pull request #10271: KAFKA-12429: Added serdes for the default implementation of RLMM based on an internal topic as storage.

satishd commented on a change in pull request #10271:

URL: https://github.com/apache/kafka/pull/10271#discussion_r618227233

##

File path:

storage/src/main/java/org/apache/kafka/server/log/remote/metadata/storage/serialization/AbstractApiMessageAndVersionSerde.java

##

@@ -0,0 +1,77 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.server.log.remote.metadata.storage.serialization;

+

+import org.apache.kafka.common.protocol.ApiMessage;

+import org.apache.kafka.common.protocol.ByteBufferAccessor;

+import org.apache.kafka.common.protocol.ObjectSerializationCache;

+import org.apache.kafka.metadata.ApiMessageAndVersion;

+

+import java.nio.ByteBuffer;

+

+/**

+ * This class provides serialization/deserialization of {@code

ApiMessageAndVersion}.

+ *

+ * Implementors need to extend this class and implement {@link

#apiMessageFor(short)} method to return a respective

+ * {@code ApiMessage} for the given {@code apiKey}. This is required to

deserialize the bytes to build the respective

+ * {@code ApiMessage} instance.

+ */

+public abstract class AbstractApiMessageAndVersionSerde {

+

+public byte[] serialize(ApiMessageAndVersion messageAndVersion) {

+ObjectSerializationCache cache = new ObjectSerializationCache();

+short version = messageAndVersion.version();

+ApiMessage message = messageAndVersion.message();

+

+// Add header containing apiKey and apiVersion,

+// headerSize is 1 byte for apiKey and 1 byte for apiVersion

+int headerSize = 1 + 1;

+int messageSize = message.size(cache, version);

+ByteBufferAccessor writable = new

ByteBufferAccessor(ByteBuffer.allocate(headerSize + messageSize));

+

+// Write apiKey and version

+writable.writeUnsignedVarint(message.apiKey());

Review comment:

@junrao I gave a shot at introducing the mentioned changes in the

earlier comment.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] satishd commented on a change in pull request #10271: KAFKA-12429: Added serdes for the default implementation of RLMM based on an internal topic as storage.

satishd commented on a change in pull request #10271:

URL: https://github.com/apache/kafka/pull/10271#discussion_r618228663

##

File path: build.gradle

##

@@ -1410,6 +1410,7 @@ project(':storage') {

implementation project(':storage:api')

implementation project(':clients')

implementation project(':metadata')

+implementation project(':raft')

Review comment:

I will remove this dependency once `AbstractMetadataRecordSerde` is

moved to `clients` module. I plan to do that in a followup PR if we go with

this approach.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] satishd commented on a change in pull request #10271: KAFKA-12429: Added serdes for the default implementation of RLMM based on an internal topic as storage.

satishd commented on a change in pull request #10271:

URL: https://github.com/apache/kafka/pull/10271#discussion_r618229816

##

File path:

storage/src/main/java/org/apache/kafka/server/log/remote/metadata/storage/serialization/AbstractMetadataMessageSerde.java

##

@@ -19,52 +19,41 @@

import org.apache.kafka.common.protocol.ApiMessage;

import org.apache.kafka.common.protocol.ByteBufferAccessor;

import org.apache.kafka.common.protocol.ObjectSerializationCache;

-import org.apache.kafka.common.utils.ByteUtils;

+import org.apache.kafka.common.protocol.Readable;

import org.apache.kafka.metadata.ApiMessageAndVersion;

-

+import org.apache.kafka.raft.metadata.AbstractMetadataRecordSerde;

import java.nio.ByteBuffer;

/**

- * This class provides serialization/deserialization of {@code

ApiMessageAndVersion}.

+ * This class provides serialization/deserialization of {@code

ApiMessageAndVersion}. This can be used as

+ * serialization/deserialization protocol for any metadata records derived of

{@code ApiMessage}s.

*

* Implementors need to extend this class and implement {@link

#apiMessageFor(short)} method to return a respective

* {@code ApiMessage} for the given {@code apiKey}. This is required to

deserialize the bytes to build the respective

* {@code ApiMessage} instance.

*/

-public abstract class AbstractApiMessageAndVersionSerde {

+public abstract class AbstractMetadataMessageSerde {

Review comment:

I could not find a better name here. Pl let me know if you have any.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] satishd commented on a change in pull request #10271: KAFKA-12429: Added serdes for the default implementation of RLMM based on an internal topic as storage.

satishd commented on a change in pull request #10271:

URL: https://github.com/apache/kafka/pull/10271#discussion_r618227233

##

File path:

storage/src/main/java/org/apache/kafka/server/log/remote/metadata/storage/serialization/AbstractApiMessageAndVersionSerde.java

##

@@ -0,0 +1,77 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.server.log.remote.metadata.storage.serialization;

+

+import org.apache.kafka.common.protocol.ApiMessage;

+import org.apache.kafka.common.protocol.ByteBufferAccessor;

+import org.apache.kafka.common.protocol.ObjectSerializationCache;

+import org.apache.kafka.metadata.ApiMessageAndVersion;

+

+import java.nio.ByteBuffer;

+

+/**

+ * This class provides serialization/deserialization of {@code

ApiMessageAndVersion}.

+ *

+ * Implementors need to extend this class and implement {@link

#apiMessageFor(short)} method to return a respective

+ * {@code ApiMessage} for the given {@code apiKey}. This is required to

deserialize the bytes to build the respective

+ * {@code ApiMessage} instance.

+ */

+public abstract class AbstractApiMessageAndVersionSerde {

+

+public byte[] serialize(ApiMessageAndVersion messageAndVersion) {

+ObjectSerializationCache cache = new ObjectSerializationCache();

+short version = messageAndVersion.version();

+ApiMessage message = messageAndVersion.message();

+

+// Add header containing apiKey and apiVersion,

+// headerSize is 1 byte for apiKey and 1 byte for apiVersion

+int headerSize = 1 + 1;

+int messageSize = message.size(cache, version);

+ByteBufferAccessor writable = new

ByteBufferAccessor(ByteBuffer.allocate(headerSize + messageSize));

+

+// Write apiKey and version

+writable.writeUnsignedVarint(message.apiKey());

Review comment:

@junrao I gave a shot at introducing the mentioned changes in the

earlier comment with

https://github.com/apache/kafka/pull/10271/commits/e962ddf01894118655776e93907eb41a9e04a8e7.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (KAFKA-10493) KTable out-of-order updates are not being ignored

[ https://issues.apache.org/jira/browse/KAFKA-10493?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17327212#comment-17327212 ] Bruno Cadonna commented on KAFKA-10493: --- The options that I see are: # verify the order during restoration # disable the source topic optimization entirely # live with the inconsistency and warn users when they enable the source topic optimization # let users enable/disable dropping out-of-order updates per table # let users enable/disable source topic optimizaton per table (that has been already under discussion if I remember correctly) I think a mix of 3, 4, and 5 would be the most flexible solution. By default, I would enable dropping out-of-order updates and disabling the source topic optimization. > KTable out-of-order updates are not being ignored > - > > Key: KAFKA-10493 > URL: https://issues.apache.org/jira/browse/KAFKA-10493 > Project: Kafka > Issue Type: Bug > Components: streams >Affects Versions: 2.6.0 >Reporter: Pedro Gontijo >Assignee: Matthias J. Sax >Priority: Blocker > Fix For: 3.0.0 > > Attachments: KTableOutOfOrderBug.java > > > On a materialized KTable, out-of-order records for a given key (records which > timestamp are older than the current value in store) are not being ignored > but used to update the local store value and also being forwarded. > I believe the bug is here: > [https://github.com/apache/kafka/blob/2.6.0/streams/src/main/java/org/apache/kafka/streams/state/internals/ValueAndTimestampSerializer.java#L77] > It should return true, not false (see javadoc) > The bug impacts here: > [https://github.com/apache/kafka/blob/2.6.0/streams/src/main/java/org/apache/kafka/streams/kstream/internals/KTableSource.java#L142-L148] > I have attached a simple stream app that shows the issue happening. > Thank you! -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] showuon commented on pull request #10547: KAFKA-12284: increase request timeout to make tests reliable

showuon commented on pull request #10547: URL: https://github.com/apache/kafka/pull/10547#issuecomment-824789512 Failed tests are ``` Build / JDK 8 and Scala 2.12 / org.apache.kafka.connect.mirror.integration.MirrorConnectorsIntegrationSSLTest.testReplication() Build / JDK 8 and Scala 2.12 / kafka.server.RaftClusterTest.testCreateClusterAndCreateAndManyTopicsWithManyPartitions() Build / JDK 15 and Scala 2.13 / org.apache.kafka.connect.integration.RebalanceSourceConnectorsIntegrationTest.testDeleteConnector Build / JDK 15 and Scala 2.13 / org.apache.kafka.streams.integration.KTableKTableForeignKeyInnerJoinMultiIntegrationTest.shouldInnerJoinMultiPartitionQueryable ``` The failed `MirrorConnectorsIntegrationSSLTest.testReplication()` is not request timeout anymore. ``` org.opentest4j.AssertionFailedError: Condition not met within timeout 2. Offsets not translated downstream to primary cluster. ==> expected: but was: ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] tombentley commented on a change in pull request #9441: KAFKA-10614: Ensure group state (un)load is executed in the submitted order

tombentley commented on a change in pull request #9441:

URL: https://github.com/apache/kafka/pull/9441#discussion_r618358643

##

File path: core/src/main/scala/kafka/coordinator/group/GroupCoordinator.scala

##

@@ -905,19 +908,32 @@ class GroupCoordinator(val brokerId: Int,

*

* @param offsetTopicPartitionId The partition we are now leading

*/

- def onElection(offsetTopicPartitionId: Int): Unit = {

-info(s"Elected as the group coordinator for partition

$offsetTopicPartitionId")

-groupManager.scheduleLoadGroupAndOffsets(offsetTopicPartitionId,

onGroupLoaded)

+ def onElection(offsetTopicPartitionId: Int, coordinatorEpoch: Int): Unit = {

+val currentEpoch = Option(epochForPartitionId.get(offsetTopicPartitionId))

+if (currentEpoch.forall(currentEpoch => coordinatorEpoch > currentEpoch)) {

+ info(s"Elected as the group coordinator for partition

$offsetTopicPartitionId in epoch $coordinatorEpoch")

+ groupManager.scheduleLoadGroupAndOffsets(offsetTopicPartitionId,

onGroupLoaded)

+ epochForPartitionId.put(offsetTopicPartitionId, coordinatorEpoch)

+} else {

+ warn(s"Ignored election as group coordinator for partition

$offsetTopicPartitionId " +

+s"in epoch $coordinatorEpoch since current epoch is $currentEpoch")

+}

}

/**

* Unload cached state for the given partition and stop handling requests

for groups which map to it.

*

* @param offsetTopicPartitionId The partition we are no longer leading

*/

- def onResignation(offsetTopicPartitionId: Int): Unit = {

-info(s"Resigned as the group coordinator for partition

$offsetTopicPartitionId")

-groupManager.removeGroupsForPartition(offsetTopicPartitionId,

onGroupUnloaded)

+ def onResignation(offsetTopicPartitionId: Int, coordinatorEpoch:

Option[Int]): Unit = {

+val currentEpoch = Option(epochForPartitionId.get(offsetTopicPartitionId))

+if (currentEpoch.forall(currentEpoch => currentEpoch <=

coordinatorEpoch.getOrElse(Int.MaxValue))) {

Review comment:

This one doesn't do an update any more (following your other comment).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] showuon commented on pull request #9627: KAFKA-10746: Change to Warn logs when necessary to notify users

showuon commented on pull request #9627: URL: https://github.com/apache/kafka/pull/9627#issuecomment-824786063 All failed tests are flaky and unrelated. ``` Build / JDK 11 and Scala 2.13 / kafka.server.RaftClusterTest.testCreateClusterAndCreateListDeleteTopic() Build / JDK 15 and Scala 2.13 / org.apache.kafka.connect.mirror.integration.MirrorConnectorsIntegrationSSLTest.testReplicationWithEmptyPartition() Build / JDK 15 and Scala 2.13 / org.apache.kafka.connect.mirror.integration.MirrorConnectorsIntegrationSSLTest.testOneWayReplicationWithAutoOffsetSync() Build / JDK 15 and Scala 2.13 / org.apache.kafka.connect.mirror.integration.MirrorConnectorsIntegrationSSLTest.testReplicationWithEmptyPartition() Build / JDK 8 and Scala 2.12 / org.apache.kafka.connect.mirror.integration.MirrorConnectorsIntegrationTest.testReplication() ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] tombentley commented on a change in pull request #10530: KAFKA-10231 fail bootstrap of Rest server if the host name in the adv…

tombentley commented on a change in pull request #10530:

URL: https://github.com/apache/kafka/pull/10530#discussion_r618372208

##

File path:

connect/runtime/src/main/java/org/apache/kafka/connect/runtime/rest/RestServer.java

##

@@ -357,21 +358,53 @@ public URI advertisedUrl() {

ServerConnector serverConnector =

findConnector(advertisedSecurityProtocol);

builder.scheme(advertisedSecurityProtocol);

-String advertisedHostname =

config.getString(WorkerConfig.REST_ADVERTISED_HOST_NAME_CONFIG);

-if (advertisedHostname != null && !advertisedHostname.isEmpty())

-builder.host(advertisedHostname);

-else if (serverConnector != null && serverConnector.getHost() != null

&& serverConnector.getHost().length() > 0)

-builder.host(serverConnector.getHost());

+String hostNameOverride = hostNameOverride(serverConnector);

+if (hostNameOverride != null) {

+builder.host(hostNameOverride);

+}

Integer advertisedPort =

config.getInt(WorkerConfig.REST_ADVERTISED_PORT_CONFIG);

if (advertisedPort != null)

builder.port(advertisedPort);

else if (serverConnector != null && serverConnector.getPort() > 0)

builder.port(serverConnector.getPort());

-log.info("Advertised URI: {}", builder.build());

+URI uri = builder.build();

+maybeThrowInvalidHostNameException(uri, hostNameOverride);

+log.info("Advertised URI: {}", uri);

-return builder.build();

+return uri;

+}

+

+private String hostNameOverride(ServerConnector serverConnector) {

+String advertisedHostname =

config.getString(WorkerConfig.REST_ADVERTISED_HOST_NAME_CONFIG);

+if (advertisedHostname != null && !advertisedHostname.isEmpty())

+return advertisedHostname;

+else if (serverConnector != null && serverConnector.getHost() != null

&& serverConnector.getHost().length() > 0)

+return serverConnector.getHost();

+return null;

+}

+

+/**

+ * Parses the uri and throws a more definitive error

+ * when the internal node to node communication can't happen due to an

invalid host name.

+ */

+static void maybeThrowInvalidHostNameException(URI uri, String

hostNameOverride) {

+//java URI parsing will fail silently returning null in the host if

the host name contains invalid characters like _

+//this bubbles up later when the Herder tries to communicate on the

advertised url and the current HttpClient fails with an ambiguous message

+if (uri.getHost() == null) {

+String errorMsg = "Could not parse host from advertised URL: '" +

uri.toString() + "'";

+if (hostNameOverride != null) {

+//validate hostname using IDN class to see if it can bubble up

the real cause and we can show the user a more detailed exception

+try {

+IDN.toASCII(hostNameOverride, IDN.USE_STD3_ASCII_RULES);

+} catch (IllegalArgumentException e) {

+errorMsg += ", as it doesn't conform to RFC 1123

specification: " + e.getMessage();

Review comment:

If we're going to mention the RFC let's mention section 2.1

specifically. We're referring the user to a nearly 100 page document and it's

only a tiny part of it which is relevant here.

##

File path:

connect/runtime/src/main/java/org/apache/kafka/connect/runtime/rest/RestServer.java

##

@@ -357,21 +358,53 @@ public URI advertisedUrl() {

ServerConnector serverConnector =

findConnector(advertisedSecurityProtocol);

builder.scheme(advertisedSecurityProtocol);

-String advertisedHostname =

config.getString(WorkerConfig.REST_ADVERTISED_HOST_NAME_CONFIG);

-if (advertisedHostname != null && !advertisedHostname.isEmpty())

-builder.host(advertisedHostname);

-else if (serverConnector != null && serverConnector.getHost() != null

&& serverConnector.getHost().length() > 0)

-builder.host(serverConnector.getHost());

+String hostNameOverride = hostNameOverride(serverConnector);

+if (hostNameOverride != null) {

+builder.host(hostNameOverride);

+}

Integer advertisedPort =

config.getInt(WorkerConfig.REST_ADVERTISED_PORT_CONFIG);

if (advertisedPort != null)

builder.port(advertisedPort);

else if (serverConnector != null && serverConnector.getPort() > 0)

builder.port(serverConnector.getPort());

-log.info("Advertised URI: {}", builder.build());

+URI uri = builder.build();

+maybeThrowInvalidHostNameException(uri, hostNameOverride);

+log.info("Advertised URI: {}", uri);

-return builder.build();

+return uri;

+}

+

+private String hostNameOverride(ServerConnector serverConnector) {

+String advertisedHostname =

con

[GitHub] [kafka] tombentley commented on pull request #9441: KAFKA-10614: Ensure group state (un)load is executed in the submitted order

tombentley commented on pull request #9441: URL: https://github.com/apache/kafka/pull/9441#issuecomment-824800741 Thanks @hachikuji, it's much simpler now, if you could take another look? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] cadonna opened a new pull request #10587: KAFKA-8897: Upgrade RocksDB to 6.8.1

cadonna opened a new pull request #10587: URL: https://github.com/apache/kafka/pull/10587 RocksDB 6.8.1 is the newest version I could upgrade without running into a SIGABRT issue with error message "Pure virtual function called!" during Gradle builds. ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] C0urante commented on pull request #10530: KAFKA-10231 fail bootstrap of Rest server if the host name in the adv…

C0urante commented on pull request #10530: URL: https://github.com/apache/kafka/pull/10530#issuecomment-824847306 I've just realized--this may not be a safe change to make. Although we should fail fast on startup if a worker tries to join an existing cluster with a bad hostname, it's still possible right now to run a single worker in that scenario. Although running a single worker in distributed mode may sound like an anti-pattern, there are still benefits to such a setup, such as persistent storage of configurations in a Kafka topic. It's also possible that there are Dockerized quickstarts and demos that use a single Connect worker and may have a bad hostname; these would suddenly break on upgrade if we merge this change. At the very least, I think we should be more explicit about _why_ we're failing workers on startup with our error message, try to give users a better picture of how workers with a bad hostname can be dangerous (since right now they don't fail on startup but instead begin to fail silently when forwarding user requests or task configs to the leader), and even call out Dockerized setups with instructions on how to fix the worker config in that case by changing the advertised URL to use a valid hostname and, if running a multi-node cluster, making sure that the worker is reachable from other workers with that advertised URL. But I think at this point we're doing a fair bit of work to try to circumvent this issue instead of addressing it head-on. Using a different HTTP client has already been discussed as an option but punted on in favor of a smaller, simpler change; given that this change seems less simple now, I wonder if it's worth reconsidering. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] Nathan22177 edited a comment on pull request #10548: KAFKA-12396 added a nullcheck before trying to retrieve a key

Nathan22177 edited a comment on pull request #10548: URL: https://github.com/apache/kafka/pull/10548#issuecomment-824837667 > Seems, `put(final Windowed sessionKey,...)` and `remove(final Windowed sessionKey)` don't check for `sessionKey.key() != null` though? Can we add this check? They do? Since 2017 according to annotations? Might be that we're talking different classes?  -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] Nathan22177 commented on pull request #10548: KAFKA-12396 added a nullcheck before trying to retrieve a key

Nathan22177 commented on pull request #10548: URL: https://github.com/apache/kafka/pull/10548#issuecomment-824837667 > > All of them already had the checks. > > Sweet. I did not double check the code before. > > Seems, `put(final Windowed sessionKey,...)` and `remove(final Windowed sessionKey)` don't check for `sessionKey.key() != null` though? Can we add this check? They do? Might be that we're talking different classes?  -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] Nathan22177 commented on pull request #10548: KAFKA-12396 added a nullcheck before trying to retrieve a key

Nathan22177 commented on pull request #10548: URL: https://github.com/apache/kafka/pull/10548#issuecomment-824839639 And both are covered with tests.  -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] C0urante commented on pull request #10074: KAFKA-12305: Fix Flatten SMT for array types

C0urante commented on pull request #10074: URL: https://github.com/apache/kafka/pull/10074#issuecomment-824801861 Thanks @tombentley--good call on updating the docs; I've done that. RE a test for the recursive case--I don't think it'll hurt, so I updated the existing tests to include it. Probably not too valuable right now since arrays are just passed through unmodified but it might save someone else that bit of legwork in the future if we decide we want bona fide flattening for array types as well. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] Nathan22177 commented on pull request #10548: KAFKA-12396 added a nullcheck before trying to retrieve a key

Nathan22177 commented on pull request #10548: URL: https://github.com/apache/kafka/pull/10548#issuecomment-824842899 Tests for aforementioned checks were added here: https://github.com/apache/kafka/pull/9520/files#diff-dc416cbdf3efca7ee284fe1e27110e737c4178b7a1cc0696d9d7a72bb8e6e764 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] tombentley commented on pull request #10530: KAFKA-10231 fail bootstrap of Rest server if the host name in the adv…

tombentley commented on pull request #10530: URL: https://github.com/apache/kafka/pull/10530#issuecomment-824864967 Great spot @C0urante! I completely agree we shouldn't break working single-node distributed installs and that trying to fix this validation approach is going to end up being a lot of work. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] kpatelatwork commented on pull request #10530: KAFKA-10231 fail bootstrap of Rest server if the host name in the adv…

kpatelatwork commented on pull request #10530: URL: https://github.com/apache/kafka/pull/10530#issuecomment-824873571 Great catch @C0urante . Let me investigate what it takes to upgrade to an Apache HttpClient and how much of an effort it is. I Agree a seemingly simple change doesn't seem simple anymore so why not put the effort in the right future direction to use a client that supports IDNs. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] kkonstantine commented on a change in pull request #9627: KAFKA-10746: Change to Warn logs when necessary to notify users

kkonstantine commented on a change in pull request #9627:

URL: https://github.com/apache/kafka/pull/9627#discussion_r618521825

##

File path:

clients/src/main/java/org/apache/kafka/clients/consumer/internals/AbstractCoordinator.java

##

@@ -1023,9 +1023,14 @@ protected void close(Timer timer) {

}

/**

+ * Leaving the group. This method also sends LeaveGroupRequest and log

{@code leaveReason} if this is dynamic members

+ * or unknown coordinator or state is not UNJOINED or this generation has

a valid member id.

+ *

+ * @param leaveReason the reason to leave the group for logging

+ * @param shouldWarn should log as WARN level or INFO

* @throws KafkaException if the rebalance callback throws exception

*/

-public synchronized RequestFuture maybeLeaveGroup(String

leaveReason) {

+public synchronized RequestFuture maybeLeaveGroup(String

leaveReason, boolean shouldWarn) throws KafkaException {

Review comment:

`KafkaException` is a runtime exception and therefore should only be

included in the javadoc. In the method signature we include checked exceptions.

##

File path:

clients/src/main/java/org/apache/kafka/clients/consumer/internals/AbstractCoordinator.java

##

@@ -1051,6 +1061,10 @@ protected void close(Timer timer) {

return future;

}

+public synchronized RequestFuture maybeLeaveGroup(String

leaveReason) throws KafkaException {

Review comment:

same here

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] tang7526 opened a new pull request #10588: KAFKA-12662: add unit test for ProducerPerformance

tang7526 opened a new pull request #10588: URL: https://github.com/apache/kafka/pull/10588 https://issues.apache.org/jira/browse/KAFKA-12662 ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on pull request #10483: KAFKA-12586; Add `DescribeTransactions` Admin API

hachikuji commented on pull request #10483: URL: https://github.com/apache/kafka/pull/10483#issuecomment-824996875 @dajac Thanks for reviewing. I will go ahead and merge since the only failures look like the usual MM ones. @chia7712 Feel free to leave additional comments and I can address them in a separate PR. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] hachikuji merged pull request #10483: KAFKA-12586; Add `DescribeTransactions` Admin API

hachikuji merged pull request #10483: URL: https://github.com/apache/kafka/pull/10483 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Resolved] (KAFKA-12586) Admin API for DescribeTransactions

[ https://issues.apache.org/jira/browse/KAFKA-12586?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Jason Gustafson resolved KAFKA-12586. - Resolution: Fixed > Admin API for DescribeTransactions > -- > > Key: KAFKA-12586 > URL: https://issues.apache.org/jira/browse/KAFKA-12586 > Project: Kafka > Issue Type: Sub-task >Reporter: Jason Gustafson >Assignee: Jason Gustafson >Priority: Major > > Add the Admin API for DescribeTransactions documented on KIP-664: > https://cwiki.apache.org/confluence/display/KAFKA/KIP-664%3A+Provide+tooling+to+detect+and+abort+hanging+transactions. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Created] (KAFKA-12709) Admin API for ListTransactions

Jason Gustafson created KAFKA-12709: --- Summary: Admin API for ListTransactions Key: KAFKA-12709 URL: https://issues.apache.org/jira/browse/KAFKA-12709 Project: Kafka Issue Type: Sub-task Reporter: Jason Gustafson Assignee: Jason Gustafson Add the `listTransactions` API described in KIP-664: https://cwiki.apache.org/confluence/display/KAFKA/KIP-664%3A+Provide+tooling+to+detect+and+abort+hanging+transactions. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] jolshan commented on pull request #10584: KAFKA-12701: NPE in MetadataRequest when using topic IDs

jolshan commented on pull request #10584: URL: https://github.com/apache/kafka/pull/10584#issuecomment-825041572 Test failures look unrelated. Some of the usual suspects like `RaftClusterTest.testCreateClusterAndCreateAndManyTopicsWithManyPartitions()` and `MirrorConnectorsIntegrationSSLTest`/`MirrorConnectorsIntegrationTest`tests -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (KAFKA-9895) Truncation request on broker start up may cause OffsetOutOfRangeException

[

https://issues.apache.org/jira/browse/KAFKA-9895?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17329317#comment-17329317

]

Konstantin commented on KAFKA-9895:

---

We bumped into this as well on kafka 2.4.1.

Here's the sequence of events, which I believe leads to this error:

1. Leader of partition test-3 (broker 2) stops due to graceful shutdown with hw

502921625, but is has ongoing unreplicated transaction, starting from

502921626, so it writes this transaction to producer snapshot.

{code:java}

[2021-04-11 23:27:44,313] INFO [ProducerStateManager partition=topic-3] Writing

producer snapshot at offset 502921627 (kafka.log.ProducerStateManager){code}

2. Another broker (1) becomes leader and starts partition from hw 502921625

{code:java}

[2021-04-11 23:27:43,081] INFO [Partition topic-3 broker=1] topic-3 starts at

leader epoch 30 from offset 502921625 with high watermark 502921625. Previous

leader epoch was 29. (kafka.cluster.Partition){code}

3. Broker 2 starts again, loads that transaction's start offset as first

unstable offset and tries to become follower. Then it tries to truncate to last

replicated offset - 502921625.

{code:java}

[2021-04-11 23:28:31,447] INFO [ProducerStateManager partition=topic-3] Loading

producer state from snapshot file

'/KAFKADATA/topic-3/000502921627.snapshot'

(kafka.log.ProducerStateManager)

[2021-04-11 23:28:31,454] INFO [Log partition=topic-3, dir=/KAFKADATA]

Completed load of log with 25 segments, log start offset 496341849 and log end

offset 502921627 in 29 ms (kafka.log.Log)

[2021-04-11 23:28:33,776] INFO [Partition topic-3 broker=2] Log loaded for

partition topic-3 with initial high watermark 502921625

(kafka.cluster.Partition)

[2021-04-11 23:28:33,885] INFO [ReplicaFetcherManager on broker 2] Added

fetcher to broker BrokerEndPoint(id=1, host=***:9093) for partitions

Map(topic-3 -> (offset=502921625, leaderEpoch=30), ***)

(kafka.server.ReplicaFetcherManager)

[2021-04-11 23:28:34,002] INFO [Log partition=topic-3, dir=/KAFKADATA]

Truncating to offset 502921625 (kafka.log.Log)

{code}

4. During truncation it updates logEndOffset, which leads to updating hw and

incrementing first unstable offset here:

{code:java}

private def maybeIncrementFirstUnstableOffset(): Unit = lock synchronized {

checkIfMemoryMappedBufferClosed()

val updatedFirstStableOffset = producerStateManager.firstUnstableOffset match

{

case Some(logOffsetMetadata) if logOffsetMetadata.messageOffsetOnly ||

logOffsetMetadata.messageOffset < logStartOffset =>

val offset = math.max(logOffsetMetadata.messageOffset, logStartOffset)

Some(convertToOffsetMetadataOrThrow(offset))

case other => other

}

if (updatedFirstStableOffset != this.firstUnstableOffsetMetadata) {

debug(s"First unstable offset updated to $updatedFirstStableOffset")

this.firstUnstableOffsetMetadata = updatedFirstStableOffset

}

}

{code}

It finds producerStateManager.firstUnstableOffset (502921626, loaded from

producer snapshot) and calls convertToOffsetMetadataOrThrow(), which tries to

read the log at 502921626 and fails, because log end offset is already

truncated to 502921625.

{code:java}

[2021-04-11 23:28:34,003] ERROR [ReplicaFetcher replicaId=2, leaderId=1,

fetcherId=2] Unexpected error occurred during truncation for topic-3 at offset

502921625

(kafka.server.ReplicaFetcherThread)org.apache.kafka.common.errors.OffsetOutOfRangeException:

Received request for offset 502921626 for partition topic-3, but we only have

log segments in the range 496341849 to 502921625.

[2021-04-11 23:28:34,004] WARN [ReplicaFetcher replicaId=2, leaderId=1,

fetcherId=2] Partition topic-3 marked as failed

{code}

We didn't manage to reproduce this as is, but it can be emulated via writing

transaction to only one replica, shutting it down and starting another one with

unclean leader election.

So, the steps to reproduce are:

# Start 2 brokers, create a topic with 1 partition and replication factor of

2. Let's say end offset is 5.

# Stop broker 1

# Write any record (to offset 5), then start transaction and write another one

(to offset 6) - transaction start offset should be greater than end offset of

1st broker's replica, or it won't be out of range.

# Stop broker 2

# Start broker 1 with unclean leader election. It will start from offset 5.

# Start broker 2 and it will throw OffsetOutOfRangeException during truncation

to 5 for transaction start offset 6.

Works (or rather fails) like a charm on any kafka from 2.4.1 to 2.7.0.

> Truncation request on broker start up may cause OffsetOutOfRangeException

> -

>

> Key: KAFKA-9895

> URL: https://issues.apache.org/jira/browse/KAFKA-9895

> Project: Kafka

> Issue Type: Bug

>Affects Versions: 2.4.0

>Reporte

[GitHub] [kafka] tang7526 commented on pull request #10588: KAFKA-12662: add unit test for ProducerPerformance

tang7526 commented on pull request #10588: URL: https://github.com/apache/kafka/pull/10588#issuecomment-825051567 @chia7712 Could you help to review this PR? Thanks. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] tang7526 commented on pull request #10534: KAFKA-806: Index may not always observe log.index.interval.bytes