[jira] [Updated] (KAFKA-13512) topicIdsToNames and topicNamesToIds allocate unnecessary maps

[

https://issues.apache.org/jira/browse/KAFKA-13512?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

David Jacot updated KAFKA-13512:

Fix Version/s: 3.1.0

> topicIdsToNames and topicNamesToIds allocate unnecessary maps

> -

>

> Key: KAFKA-13512

> URL: https://issues.apache.org/jira/browse/KAFKA-13512

> Project: Kafka

> Issue Type: Bug

>Affects Versions: 3.1.0

>Reporter: Justine Olshan

>Assignee: Justine Olshan

>Priority: Blocker

> Fix For: 3.1.0

>

>

> Currently we write the methods as follows:

> {{def topicNamesToIds(): util.Map[String, Uuid] = {}}

> {{ new util.HashMap(metadataSnapshot.topicIds.asJava)}}

> {{}}}

> We do not need to allocate a new map however, we can simply use

> {{Collections.unmodifiableMap(metadataSnapshot.topicIds.asJava)}}

> We can do something similar for the topicIdsToNames implementation.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [kafka] itweixiang removed a comment on pull request #9902: KAFKA-12193: Re-resolve IPs after a client disconnects

itweixiang removed a comment on pull request #9902:

URL: https://github.com/apache/kafka/pull/9902#issuecomment-987738907

hi bob-barrett , I hava a quetion about your issue ,can you help me ?

our kafka are deloying in k8s , where kafka cluster restart , result in

producer and consumer will disconnect.

kafka cluster restart will generate new ips, but kafka client store old ips

. so we must restart producer and consumer , so tired.

in kafka client 2.8.1 version ,I see

org.apache.kafka.clients.NetworkClient#initiateConnect , `node.host()` maybe

return a ip instead of domain. if return a ip , will result in lose efficacy

about your updated version .

my english level is match awful , can you know my words?

```

private void initiateConnect(Node node, long now) {

String nodeConnectionId = node.idString();

try {

connectionStates.connecting(nodeConnectionId, now, node.host(),

clientDnsLookup);

InetAddress address =

connectionStates.currentAddress(nodeConnectionId);

log.debug("Initiating connection to node {} using address {}",

node, address);

selector.connect(nodeConnectionId,

new InetSocketAddress(address, node.port()),

this.socketSendBuffer,

this.socketReceiveBuffer);

} catch (IOException e) {

log.warn("Error connecting to node {}", node, e);

// Attempt failed, we'll try again after the backoff

connectionStates.disconnected(nodeConnectionId, now);

// Notify metadata updater of the connection failure

metadataUpdater.handleServerDisconnect(now, nodeConnectionId,

Optional.empty());

}

}

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] showuon opened a new pull request #11578: KAFKA-13514: reduce consumer count and timeout to speed up the test

showuon opened a new pull request #11578: URL: https://github.com/apache/kafka/pull/11578 Reduce the timeout from 60s to 40s, and reduce the consumer count from 2000 -> 1000. In local env, before change, the time took 44s, after change, time took 16s. If before change, in jenkins we took around 60s, we should now, took around 25s. ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (KAFKA-13517) Add ConfigurationKeys to ConfigResource class

Vikas Singh created KAFKA-13517:

---

Summary: Add ConfigurationKeys to ConfigResource class

Key: KAFKA-13517

URL: https://issues.apache.org/jira/browse/KAFKA-13517

Project: Kafka

Issue Type: Improvement

Components: clients

Affects Versions: 3.0.0, 2.8.1

Reporter: Vikas Singh

Assignee: Vikas Singh

Fix For: 2.8.1

A list of {{ConfigResource}} class is passed as argument to

{{AdminClient::describeConfigs}} api to indicate configuration of the entities

to fetch. The {{ConfigResource}} class is made up of two fields, name and type

of entity. Kafka returns *all* configurations for the entities provided to the

admin client api.

This admin api in turn uses {{DescribeConfigsRequest}} kafka api to get the

configuration for the entities in question. In addition to name and type of

entity whose configuration to get, Kafka {{DescribeConfigsResource}} structure

also lets users provide {{ConfigurationKeys}} list, which allows users to fetch

only the configurations that are needed.

However, this field isn't exposed in the {{ConfigResource}} class that is used

by AdminClient, so users of AdminClient have no way to ask for specific

configuration. The API always returns *all* configurations. Then the user of

the {{AdminClient::describeConfigs}} go over the returned list and filter out

the config keys that they are interested in.

This results in boilerplate code for all users of

{{AdminClient::describeConfigs}} api, in addition to being wasteful use of

resource. It becomes painful in large cluster case where to fetch one

configuration of all topics, we need to fetch all configuration of all topics,

which can be huge in size.

Creating this Jira to add same field (i.e. {{{}ConfigurationKeys{}}}) to the

{{ConfigResource}} structure to bring it to parity to

{{DescribeConfigsResource}} Kafka API structure. There should be no backward

compatibility issue as the field will be optional and will behave same way if

it is not specified (i.e. by passing null to backend kafka api)

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [kafka] tamara-skokova commented on pull request #11573: KAFKA-13507: GlobalProcessor ignores user specified names

tamara-skokova commented on pull request #11573: URL: https://github.com/apache/kafka/pull/11573#issuecomment-988484139 @showuon, thanks, added a test that verifies the default names -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] jeffkbkim edited a comment on pull request #11571: KAFKA-13496: add reason to LeaveGroupRequest

jeffkbkim edited a comment on pull request #11571: URL: https://github.com/apache/kafka/pull/11571#issuecomment-988460630 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] jeffkbkim edited a comment on pull request #11571: KAFKA-13496: add reason to LeaveGroupRequest

jeffkbkim edited a comment on pull request #11571: URL: https://github.com/apache/kafka/pull/11571#issuecomment-988460630 tests calling `adminClient.removeMembersFromConsumerGroup` are failing: ``` ERROR [KafkaApi-2] Unexpected error handling request RequestHeader(apiKey=LEAVE_GROUP, apiVersion=5, clientId=adminclient-1, correlationId=30) -- LeaveGroupRequestData(groupId='test_group_id', memberId='', members=[MemberIdentity(memberId='', groupInstanceId='invalid-instance-id', reason='null')]) with context RequestContext(header=RequestHeader(apiKey=LEAVE_GROUP, apiVersion=5, clientId=adminclient-1, correlationId=30), connectionId='127.0.0.1:58238-127.0.0.1:58241-0', clientAddress=/127.0.0.1, principal=User:ANONYMOUS, listenerName=ListenerName(PLAINTEXT), securityProtocol=PLAINTEXT, clientInformation=ClientInformation(softwareName=apache-kafka-java, softwareVersion=unknown), fromPrivilegedListener=true, principalSerde=Optional[org.apache.kafka.common.security.authenticator.DefaultKafkaPrincipalBuilder@5d777a17]) (kafka.server.KafkaApis:76) org.apache.kafka.common.errors.UnsupportedVersionException: Can't size version 5 of MemberResponse ``` i'm not sure i understand the exception. (key=LEAVE_GROUP,api version=5) requests are valid. the error is from `LeaveGroupResponseData.addSize` - why is the response validating the request api version? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] jeffkbkim commented on pull request #11571: KAFKA-13496: add reason to LeaveGroupRequest

jeffkbkim commented on pull request #11571: URL: https://github.com/apache/kafka/pull/11571#issuecomment-988460630 tests calling `adminClient.removeMembersFromConsumerGroup` are failing: ``` ERROR [KafkaApi-2] Unexpected error handling request RequestHeader(apiKey=LEAVE_GROUP, apiVersion=5, clientId=adminclient-1, correlationId=30) -- LeaveGroupRequestData(groupId='test_group_id', memberId='', members=[MemberIdentity(memberId='', groupInstanceId='invalid-instance-id', reason='null')]) with context RequestContext(header=RequestHeader(apiKey=LEAVE_GROUP, apiVersion=5, clientId=adminclient-1, correlationId=30), connectionId='127.0.0.1:58238-127.0.0.1:58241-0', clientAddress=/127.0.0.1, principal=User:ANONYMOUS, listenerName=ListenerName(PLAINTEXT), securityProtocol=PLAINTEXT, clientInformation=ClientInformation(softwareName=apache-kafka-java, softwareVersion=unknown), fromPrivilegedListener=true, principalSerde=Optional[org.apache.kafka.common.security.authenticator.DefaultKafkaPrincipalBuilder@5d777a17]) (kafka.server.KafkaApis:76) org.apache.kafka.common.errors.UnsupportedVersionException: Can't size version 5 of MemberResponse ``` i'm not sure i understand the exception. LEAVE_GROUP/API_VERSION=5 requests are valid. the error is from `LeaveGroupResponseData.addSize` - why is the response validating the request api version? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] showuon commented on a change in pull request #11573: KAFKA-13507: GlobalProcessor ignores user specified names

showuon commented on a change in pull request #11573:

URL: https://github.com/apache/kafka/pull/11573#discussion_r764516991

##

File path:

streams/src/test/java/org/apache/kafka/streams/StreamsBuilderTest.java

##

@@ -998,6 +999,23 @@ public void

shouldUseSpecifiedNameForAggregateOperationGivenTable() {

STREAM_OPERATION_NAME);

}

+@Test

+public void shouldUseSpecifiedNameForGlobalStoreProcessor() {

Review comment:

I didn't see there is tests for `GlobalStoreProcessor` using default

name, right? That is, a test to create global store by `addGlobalStore` with

`Consumed` instance without specifying a custom name, and verify the names.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Comment Edited] (KAFKA-10503) MockProducer doesn't throw ClassCastException when no partition for topic

[

https://issues.apache.org/jira/browse/KAFKA-10503?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17454673#comment-17454673

]

Dennis Hunziker edited comment on KAFKA-10503 at 12/8/21, 2:58 AM:

---

[~gmunozfe] This change did break our tests. When using the no-arg constructor

the serializers are null but now that they're referenced in any case we'll just

get an NPE. Was that on purpose? Shouldn't the change made here only be applied

if the serializers aren't null?

Happy to create a separate ticket and fix if you agree.

was (Author: JIRAUSER281343):

This change did break our tests. When using the no-arg constructor the

serializers are null but now that they're referenced in any case we'll just get

an NPE. Was that on purpose? Shouldn't the change made here only be applied if

the serializers aren't null?

Happy to create a separate ticket and fix if you agree.

> MockProducer doesn't throw ClassCastException when no partition for topic

> -

>

> Key: KAFKA-10503

> URL: https://issues.apache.org/jira/browse/KAFKA-10503

> Project: Kafka

> Issue Type: Improvement

> Components: clients, producer

>Affects Versions: 2.6.0

>Reporter: Gonzalo Muñoz Fernández

>Assignee: Gonzalo Muñoz Fernández

>Priority: Minor

> Labels: mock, producer

> Fix For: 2.7.0

>

> Original Estimate: 1h

> Remaining Estimate: 1h

>

> Though {{MockProducer}} admits serializers in its constructors, it doesn't

> check during {{send}} method that those serializers are the proper ones to

> serialize key/value included into the {{ProducerRecord}}.

> [This

> check|https://github.com/apache/kafka/blob/trunk/clients/src/main/java/org/apache/kafka/clients/producer/MockProducer.java#L499-L500]

> is only done if there is a partition assigned for that topic.

> It would be an enhancement if these serialize methods were also invoked in

> simple scenarios, where no partition is assigned to a topic.

> eg:

> {code:java}

> @Test

> public void shouldThrowClassCastException() {

> MockProducer producer = new MockProducer<>(true, new

> IntegerSerializer(), new StringSerializer());

> ProducerRecord record = new ProducerRecord(TOPIC, "key1", "value1");

> try {

> producer.send(record);

> fail("Should have thrown ClassCastException because record cannot

> be casted with serializers");

> } catch (ClassCastException e) {}

> }

> {code}

> Currently, for obtaining the ClassCastException is needed to define the topic

> into a partition:

> {code:java}

> PartitionInfo partitionInfo = new PartitionInfo(TOPIC, 0, null, null, null);

> Cluster cluster = new Cluster(null, emptyList(), asList(partitionInfo),

> emptySet(), emptySet());

> producer = new MockProducer(cluster,

> true,

> new DefaultPartitioner(),

> new IntegerSerializer(),

> new StringSerializer());

> {code}

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Assigned] (KAFKA-13514) Flakey test StickyAssignorTest

[

https://issues.apache.org/jira/browse/KAFKA-13514?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Luke Chen reassigned KAFKA-13514:

-

Assignee: Luke Chen

> Flakey test StickyAssignorTest

> --

>

> Key: KAFKA-13514

> URL: https://issues.apache.org/jira/browse/KAFKA-13514

> Project: Kafka

> Issue Type: Test

> Components: clients, unit tests

>Reporter: Matthias J. Sax

>Assignee: Luke Chen

>Priority: Critical

> Labels: flaky-test

>

> org.apache.kafka.clients.consumer.StickyAssignorTest.testLargeAssignmentAndGroupWithNonEqualSubscription()

> No real stack trace, but only:

> {quote}java.util.concurrent.TimeoutException:

> testLargeAssignmentAndGroupWithNonEqualSubscription() timed out after 60

> seconds{quote}

> STDOUT

> {quote}[2021-12-07 01:32:23,920] ERROR Found multiple consumers consumer1 and

> consumer2 claiming the same TopicPartition topic-0 in the same generation -1,

> this will be invalidated and removed from their previous assignment.

> (org.apache.kafka.clients.consumer.internals.AbstractStickyAssignor:150)

> [2021-12-07 01:32:58,964] ERROR Found multiple consumers consumer1 and

> consumer2 claiming the same TopicPartition topic-0 in the same generation -1,

> this will be invalidated and removed from their previous assignment.

> (org.apache.kafka.clients.consumer.internals.AbstractStickyAssignor:150)

> [2021-12-07 01:32:58,976] ERROR Found multiple consumers consumer1 and

> consumer2 claiming the same TopicPartition topic-0 in the same generation -1,

> this will be invalidated and removed from their previous assignment.

> (org.apache.kafka.clients.consumer.internals.AbstractStickyAssignor:150){quote}

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [kafka] Loyilee closed pull request #10781: MINOR: Reduce duplicate authentication check

Loyilee closed pull request #10781: URL: https://github.com/apache/kafka/pull/10781 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] Loyilee closed pull request #10601: KAFKA-12723: Fix potential NPE in HashTier

Loyilee closed pull request #10601: URL: https://github.com/apache/kafka/pull/10601 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on pull request #11562: KAFKA-12648: extend IQ APIs to work with named topologies

ableegoldman commented on pull request #11562: URL: https://github.com/apache/kafka/pull/11562#issuecomment-988400580 Hey @tolgadur can we also add one more thing to this -- see https://github.com/tolgadur/kafka/pull/1 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] florin-akermann commented on pull request #11456: KAFKA-13351: Add possibility to write kafka headers in Kafka Console Producer

florin-akermann commented on pull request #11456: URL: https://github.com/apache/kafka/pull/11456#issuecomment-988371209 Hi again, @dajac @mimaison I made the adjustments based on your inputs. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

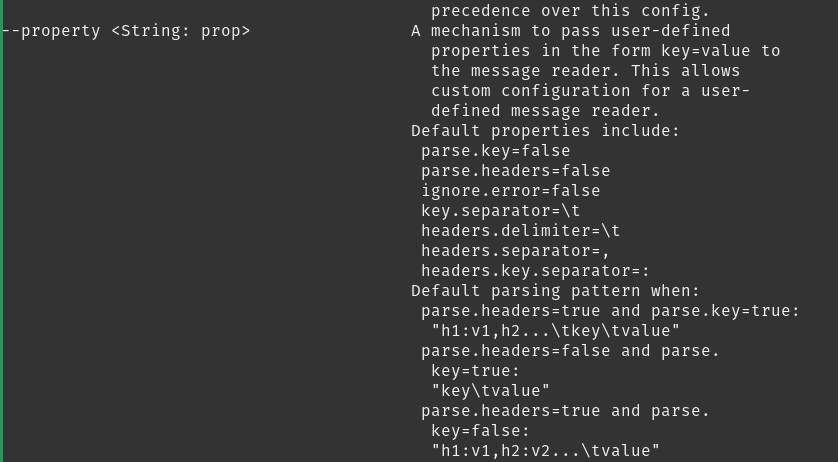

[GitHub] [kafka] florin-akermann commented on a change in pull request #11456: KAFKA-13351: Add possibility to write kafka headers in Kafka Console Producer

florin-akermann commented on a change in pull request #11456:

URL: https://github.com/apache/kafka/pull/11456#discussion_r764457132

##

File path: core/src/main/scala/kafka/tools/ConsoleProducer.scala

##

@@ -206,11 +210,25 @@ object ConsoleProducer {

.describedAs("size")

.ofType(classOf[java.lang.Integer])

.defaultsTo(1024*100)

-val propertyOpt = parser.accepts("property", "A mechanism to pass

user-defined properties in the form key=value to the message reader. " +

- "This allows custom configuration for a user-defined message reader.

Default properties include:\n" +

- "\tparse.key=true|false\n" +

- "\tkey.separator=\n" +

- "\tignore.error=true|false")

+val propertyOpt = parser.accepts("property",

+ """A mechanism to pass user-defined properties in the form key=value to

the message reader. This allows custom configuration for a user-defined message

reader.

+|Default properties include:

+| parse.key=false

+| parse.headers=false

+| ignore.error=false

+| key.separator=\t

+| headers.delimiter=\t

+| headers.separator=,

+| headers.key.separator=:

+|Default parsing pattern when:

+| parse.headers=true & parse.key=true:

Review comment:

Now i remember the reason why I chose &

The lines break in an unfortunate way if and is used. What do you think?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Created] (KAFKA-13516) Connection level metrics are not closed

Aman Agarwal created KAFKA-13516: Summary: Connection level metrics are not closed Key: KAFKA-13516 URL: https://issues.apache.org/jira/browse/KAFKA-13516 Project: Kafka Issue Type: Bug Components: clients Affects Versions: 3.0.0 Reporter: Aman Agarwal Connection level metrics are not closed by the Selector on connection close, hence leaking the sensors. -- This message was sent by Atlassian Jira (v8.20.1#820001)

[GitHub] [kafka] cmccabe opened a new pull request #11577: KAFKA-13515: Fix KRaft config validation issues

cmccabe opened a new pull request #11577: URL: https://github.com/apache/kafka/pull/11577 Require that topics exist before topic configurations can be created for them. Merge the code from ConfigurationControlManager#checkConfigResource into ControllerConfigurationValidator to avoid duplication. Add KRaft support to DynamicConfigChangeTest. Split out tests in DynamicConfigChangeTest that don't require a cluster into DynamicConfigChangeUnitTest to save test time. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (KAFKA-13515) Fix KRaft config validation issues

Colin McCabe created KAFKA-13515: Summary: Fix KRaft config validation issues Key: KAFKA-13515 URL: https://issues.apache.org/jira/browse/KAFKA-13515 Project: Kafka Issue Type: Bug Reporter: Colin McCabe -- This message was sent by Atlassian Jira (v8.20.1#820001)

[GitHub] [kafka] rondagostino commented on a change in pull request #11503: KAFKA-13456: Tighten KRaft config checks/constraints

rondagostino commented on a change in pull request #11503:

URL: https://github.com/apache/kafka/pull/11503#discussion_r764387735

##

File path: core/src/main/scala/kafka/server/KafkaConfig.scala

##

@@ -2018,12 +2025,103 @@ class KafkaConfig private(doLog: Boolean, val props:

java.util.Map[_, _], dynami

"offsets.commit.required.acks must be greater or equal -1 and less or

equal to offsets.topic.replication.factor")

require(BrokerCompressionCodec.isValid(compressionType), "compression.type

: " + compressionType + " is not valid." +

" Valid options are " +

BrokerCompressionCodec.brokerCompressionOptions.mkString(","))

-require(!processRoles.contains(ControllerRole) ||

controllerListeners.nonEmpty,

- s"${KafkaConfig.ControllerListenerNamesProp} cannot be empty if the

server has the controller role")

+val advertisedListenerNames =

effectiveAdvertisedListeners.map(_.listenerName).toSet

+

+// validate KRaft-related configs

+val voterAddressSpecsByNodeId =

RaftConfig.parseVoterConnections(quorumVoters)

+def validateNonEmptyQuorumVotersForKRaft(): Unit = {

+ if (voterAddressSpecsByNodeId.isEmpty) {

+throw new ConfigException(s"If using ${KafkaConfig.ProcessRolesProp},

${KafkaConfig.QuorumVotersProp} must contain a parseable set of voters.")

+ }

+}

+def validateControlPlaneListenerEmptyForKRaft(): Unit = {

+ require(controlPlaneListenerName.isEmpty,

+s"${KafkaConfig.ControlPlaneListenerNameProp} is not supported in

KRaft mode. KRaft uses ${KafkaConfig.ControllerListenerNamesProp} instead.")

+}

+val sourceOfAdvertisedListeners: String =

+ if (getString(KafkaConfig.AdvertisedListenersProp) != null)

+s"${KafkaConfig.AdvertisedListenersProp}"

+ else

+s"${KafkaConfig.ListenersProp}"

+def

validateAdvertisedListenersDoesNotContainControllerListenersForKRaftBroker():

Unit = {

+ require(!advertisedListenerNames.exists(aln =>

controllerListenerNames.contains(aln.value())),

+s"$sourceOfAdvertisedListeners must not contain KRaft controller

listeners from ${KafkaConfig.ControllerListenerNamesProp} when

${KafkaConfig.ProcessRolesProp} contains the broker role because Kafka clients

that send requests via advertised listeners do not send requests to KRaft

controllers -- they only send requests to KRaft brokers.")

+}

+def validateControllerQuorumVotersMustContainNodeIDForKRaftController():

Unit = {

+ require(voterAddressSpecsByNodeId.containsKey(nodeId),

+s"If ${KafkaConfig.ProcessRolesProp} contains the 'controller' role,

the node id $nodeId must be included in the set of voters

${KafkaConfig.QuorumVotersProp}=${voterAddressSpecsByNodeId.asScala.keySet.toSet}")

+}

+def validateControllerListenerExistsForKRaftController(): Unit = {

+ require(controllerListeners.nonEmpty,

+s"${KafkaConfig.ControllerListenerNamesProp} must contain at least one

value appearing in the '${KafkaConfig.ListenersProp}' configuration when

running the KRaft controller role")

+}

+def

validateControllerListenerNamesMustAppearInListenersForKRaftController(): Unit

= {

+ val listenerNameValues = listeners.map(_.listenerName.value).toSet

+ require(controllerListenerNames.forall(cln =>

listenerNameValues.contains(cln)),

+s"${KafkaConfig.ControllerListenerNamesProp} must only contain values

appearing in the '${KafkaConfig.ListenersProp}' configuration when running the

KRaft controller role")

+}

+def validateAdvertisedListenersNonEmptyForBroker(): Unit = {

+ require(advertisedListenerNames.nonEmpty,

+"There must be at least one advertised listener." + (

+ if (processRoles.contains(BrokerRole)) s" Perhaps all listeners

appear in ${ControllerListenerNamesProp}?" else ""))

+}

+if (processRoles == Set(BrokerRole)) {

+ // KRaft broker-only

+ validateNonEmptyQuorumVotersForKRaft()

+ validateControlPlaneListenerEmptyForKRaft()

+

validateAdvertisedListenersDoesNotContainControllerListenersForKRaftBroker()

+ // nodeId must not appear in controller.quorum.voters

+ require(!voterAddressSpecsByNodeId.containsKey(nodeId),

+s"If ${KafkaConfig.ProcessRolesProp} contains just the 'broker' role,

the node id $nodeId must not be included in the set of voters

${KafkaConfig.QuorumVotersProp}=${voterAddressSpecsByNodeId.asScala.keySet.toSet}")

+ // controller.listener.names must be non-empty...

+ require(controllerListenerNames.exists(_.nonEmpty),

+s"${KafkaConfig.ControllerListenerNamesProp} must contain at least one

value when running KRaft with just the broker role")

+ // controller.listener.names are forbidden in listeners...

+ require(controllerListeners.isEmpty,

+s"${KafkaConfig.ControllerListenerNamesProp} must not contain a value

appearing in the '${KafkaConfig.ListenersProp}' configuration when running

KRaft with just

[GitHub] [kafka] dajac commented on pull request #11576: KAFKA-13512: topicIdsToNames and topicNamesToIds allocate unnecessary maps

dajac commented on pull request #11576: URL: https://github.com/apache/kafka/pull/11576#issuecomment-988280848 The MetadataSnapshot is immutable so this seems fine to me as well. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] C0urante commented on a change in pull request #11046: KAFKA-12980: Return empty record batch from Consumer::poll when position advances due to aborted transactions

C0urante commented on a change in pull request #11046:

URL: https://github.com/apache/kafka/pull/11046#discussion_r764381476

##

File path:

clients/src/main/java/org/apache/kafka/clients/consumer/internals/Fetcher.java

##

@@ -725,17 +725,13 @@ public void onFailure(RuntimeException e) {

completedFetch.nextFetchOffset,

completedFetch.lastEpoch,

position.currentLeader);

-log.trace("Update fetching position to {} for partition

{}", nextPosition, completedFetch.partition);

+log.trace("Updating fetch position from {} to {} for

partition {} and returning {} records from `poll()`",

+position, nextPosition, completedFetch.partition,

partRecords.size());

subscriptions.position(completedFetch.partition,

nextPosition);

positionAdvanced = true;

if (partRecords.isEmpty()) {

-log.debug(

-"Advanced position for partition {} without

receiving any user-visible records. "

-+ "This is likely due to skipping over

control records in the current fetch, "

-+ "and may result in the consumer

returning an empty record batch when "

-+ "polled before its poll timeout has

elapsed.",

-completedFetch.partition

-);

+log.trace("Returning empty records from `poll()` "

++ "since the consumer's position has advanced

for at least one topic partition");

Review comment:

Moved it to `KafkaConsumer`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] jolshan opened a new pull request #11576: KAFKA-13512: topicIdsToNames and topicNamesToIds allocate unnecessary maps

jolshan opened a new pull request #11576: URL: https://github.com/apache/kafka/pull/11576 We are creating a new map unnecessarily for these methods. Remove the extra map creation and simply wrap in unmodifiable map. I've also added a benchmark for the map method. Here are some results when I limited partitions to 20 only. Before change: ``` Benchmark (partitionCount) (topicCount) Mode Cnt Score Error Units MetadataRequestBenchmark.testTopicIdInfo20 500 avgt 15 16.942 ± 0.306 ns/op MetadataRequestBenchmark.testTopicIdInfo20 1000 avgt 15 19.476 ± 0.339 ns/op MetadataRequestBenchmark.testTopicIdInfo20 5000 avgt 15 18.989 ± 0.482 ns/op ``` After change: ``` Benchmark (partitionCount) (topicCount) Mode Cnt Score Error Units MetadataRequestBenchmark.testTopicIdInfo20 500 avgt 15 11.120 ± 0.336 ns/op MetadataRequestBenchmark.testTopicIdInfo20 1000 avgt 15 11.173 ± 0.489 ns/op MetadataRequestBenchmark.testTopicIdInfo20 5000 avgt 15 11.003 ± 0.042 ns/op ``` ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] rondagostino commented on a change in pull request #11503: KAFKA-13456: Tighten KRaft config checks/constraints

rondagostino commented on a change in pull request #11503:

URL: https://github.com/apache/kafka/pull/11503#discussion_r764369935

##

File path: core/src/main/scala/kafka/server/KafkaConfig.scala

##

@@ -2018,12 +2025,103 @@ class KafkaConfig private(doLog: Boolean, val props:

java.util.Map[_, _], dynami

"offsets.commit.required.acks must be greater or equal -1 and less or

equal to offsets.topic.replication.factor")

require(BrokerCompressionCodec.isValid(compressionType), "compression.type

: " + compressionType + " is not valid." +

" Valid options are " +

BrokerCompressionCodec.brokerCompressionOptions.mkString(","))

-require(!processRoles.contains(ControllerRole) ||

controllerListeners.nonEmpty,

- s"${KafkaConfig.ControllerListenerNamesProp} cannot be empty if the

server has the controller role")

+val advertisedListenerNames =

effectiveAdvertisedListeners.map(_.listenerName).toSet

+

+// validate KRaft-related configs

+val voterAddressSpecsByNodeId =

RaftConfig.parseVoterConnections(quorumVoters)

+def validateNonEmptyQuorumVotersForKRaft(): Unit = {

+ if (voterAddressSpecsByNodeId.isEmpty) {

+throw new ConfigException(s"If using ${KafkaConfig.ProcessRolesProp},

${KafkaConfig.QuorumVotersProp} must contain a parseable set of voters.")

+ }

+}

+def validateControlPlaneListenerEmptyForKRaft(): Unit = {

+ require(controlPlaneListenerName.isEmpty,

+s"${KafkaConfig.ControlPlaneListenerNameProp} is not supported in

KRaft mode. KRaft uses ${KafkaConfig.ControllerListenerNamesProp} instead.")

+}

+val sourceOfAdvertisedListeners: String =

+ if (getString(KafkaConfig.AdvertisedListenersProp) != null)

+s"${KafkaConfig.AdvertisedListenersProp}"

+ else

+s"${KafkaConfig.ListenersProp}"

+def

validateAdvertisedListenersDoesNotContainControllerListenersForKRaftBroker():

Unit = {

+ require(!advertisedListenerNames.exists(aln =>

controllerListenerNames.contains(aln.value())),

+s"$sourceOfAdvertisedListeners must not contain KRaft controller

listeners from ${KafkaConfig.ControllerListenerNamesProp} when

${KafkaConfig.ProcessRolesProp} contains the broker role because Kafka clients

that send requests via advertised listeners do not send requests to KRaft

controllers -- they only send requests to KRaft brokers.")

+}

+def validateControllerQuorumVotersMustContainNodeIDForKRaftController():

Unit = {

+ require(voterAddressSpecsByNodeId.containsKey(nodeId),

+s"If ${KafkaConfig.ProcessRolesProp} contains the 'controller' role,

the node id $nodeId must be included in the set of voters

${KafkaConfig.QuorumVotersProp}=${voterAddressSpecsByNodeId.asScala.keySet.toSet}")

+}

+def validateControllerListenerExistsForKRaftController(): Unit = {

+ require(controllerListeners.nonEmpty,

+s"${KafkaConfig.ControllerListenerNamesProp} must contain at least one

value appearing in the '${KafkaConfig.ListenersProp}' configuration when

running the KRaft controller role")

+}

+def

validateControllerListenerNamesMustAppearInListenersForKRaftController(): Unit

= {

+ val listenerNameValues = listeners.map(_.listenerName.value).toSet

+ require(controllerListenerNames.forall(cln =>

listenerNameValues.contains(cln)),

+s"${KafkaConfig.ControllerListenerNamesProp} must only contain values

appearing in the '${KafkaConfig.ListenersProp}' configuration when running the

KRaft controller role")

+}

+def validateAdvertisedListenersNonEmptyForBroker(): Unit = {

+ require(advertisedListenerNames.nonEmpty,

+"There must be at least one advertised listener." + (

+ if (processRoles.contains(BrokerRole)) s" Perhaps all listeners

appear in ${ControllerListenerNamesProp}?" else ""))

+}

+if (processRoles == Set(BrokerRole)) {

+ // KRaft broker-only

+ validateNonEmptyQuorumVotersForKRaft()

+ validateControlPlaneListenerEmptyForKRaft()

+

validateAdvertisedListenersDoesNotContainControllerListenersForKRaftBroker()

+ // nodeId must not appear in controller.quorum.voters

+ require(!voterAddressSpecsByNodeId.containsKey(nodeId),

+s"If ${KafkaConfig.ProcessRolesProp} contains just the 'broker' role,

the node id $nodeId must not be included in the set of voters

${KafkaConfig.QuorumVotersProp}=${voterAddressSpecsByNodeId.asScala.keySet.toSet}")

+ // controller.listener.names must be non-empty...

+ require(controllerListenerNames.exists(_.nonEmpty),

+s"${KafkaConfig.ControllerListenerNamesProp} must contain at least one

value when running KRaft with just the broker role")

+ // controller.listener.names are forbidden in listeners...

+ require(controllerListeners.isEmpty,

+s"${KafkaConfig.ControllerListenerNamesProp} must not contain a value

appearing in the '${KafkaConfig.ListenersProp}' configuration when running

KRaft with just

[GitHub] [kafka] rondagostino commented on a change in pull request #11503: KAFKA-13456: Tighten KRaft config checks/constraints

rondagostino commented on a change in pull request #11503:

URL: https://github.com/apache/kafka/pull/11503#discussion_r764367910

##

File path: core/src/main/scala/kafka/server/KafkaConfig.scala

##

@@ -2018,12 +2025,103 @@ class KafkaConfig private(doLog: Boolean, val props:

java.util.Map[_, _], dynami

"offsets.commit.required.acks must be greater or equal -1 and less or

equal to offsets.topic.replication.factor")

require(BrokerCompressionCodec.isValid(compressionType), "compression.type

: " + compressionType + " is not valid." +

" Valid options are " +

BrokerCompressionCodec.brokerCompressionOptions.mkString(","))

-require(!processRoles.contains(ControllerRole) ||

controllerListeners.nonEmpty,

- s"${KafkaConfig.ControllerListenerNamesProp} cannot be empty if the

server has the controller role")

+val advertisedListenerNames =

effectiveAdvertisedListeners.map(_.listenerName).toSet

+

+// validate KRaft-related configs

+val voterAddressSpecsByNodeId =

RaftConfig.parseVoterConnections(quorumVoters)

+def validateNonEmptyQuorumVotersForKRaft(): Unit = {

+ if (voterAddressSpecsByNodeId.isEmpty) {

+throw new ConfigException(s"If using ${KafkaConfig.ProcessRolesProp},

${KafkaConfig.QuorumVotersProp} must contain a parseable set of voters.")

+ }

+}

+def validateControlPlaneListenerEmptyForKRaft(): Unit = {

+ require(controlPlaneListenerName.isEmpty,

+s"${KafkaConfig.ControlPlaneListenerNameProp} is not supported in

KRaft mode. KRaft uses ${KafkaConfig.ControllerListenerNamesProp} instead.")

+}

+val sourceOfAdvertisedListeners: String =

+ if (getString(KafkaConfig.AdvertisedListenersProp) != null)

+s"${KafkaConfig.AdvertisedListenersProp}"

+ else

+s"${KafkaConfig.ListenersProp}"

+def

validateAdvertisedListenersDoesNotContainControllerListenersForKRaftBroker():

Unit = {

+ require(!advertisedListenerNames.exists(aln =>

controllerListenerNames.contains(aln.value())),

+s"$sourceOfAdvertisedListeners must not contain KRaft controller

listeners from ${KafkaConfig.ControllerListenerNamesProp} when

${KafkaConfig.ProcessRolesProp} contains the broker role because Kafka clients

that send requests via advertised listeners do not send requests to KRaft

controllers -- they only send requests to KRaft brokers.")

+}

+def validateControllerQuorumVotersMustContainNodeIDForKRaftController():

Unit = {

+ require(voterAddressSpecsByNodeId.containsKey(nodeId),

+s"If ${KafkaConfig.ProcessRolesProp} contains the 'controller' role,

the node id $nodeId must be included in the set of voters

${KafkaConfig.QuorumVotersProp}=${voterAddressSpecsByNodeId.asScala.keySet.toSet}")

+}

+def validateControllerListenerExistsForKRaftController(): Unit = {

+ require(controllerListeners.nonEmpty,

+s"${KafkaConfig.ControllerListenerNamesProp} must contain at least one

value appearing in the '${KafkaConfig.ListenersProp}' configuration when

running the KRaft controller role")

+}

+def

validateControllerListenerNamesMustAppearInListenersForKRaftController(): Unit

= {

+ val listenerNameValues = listeners.map(_.listenerName.value).toSet

+ require(controllerListenerNames.forall(cln =>

listenerNameValues.contains(cln)),

+s"${KafkaConfig.ControllerListenerNamesProp} must only contain values

appearing in the '${KafkaConfig.ListenersProp}' configuration when running the

KRaft controller role")

+}

+def validateAdvertisedListenersNonEmptyForBroker(): Unit = {

+ require(advertisedListenerNames.nonEmpty,

+"There must be at least one advertised listener." + (

+ if (processRoles.contains(BrokerRole)) s" Perhaps all listeners

appear in ${ControllerListenerNamesProp}?" else ""))

+}

+if (processRoles == Set(BrokerRole)) {

+ // KRaft broker-only

+ validateNonEmptyQuorumVotersForKRaft()

+ validateControlPlaneListenerEmptyForKRaft()

+

validateAdvertisedListenersDoesNotContainControllerListenersForKRaftBroker()

+ // nodeId must not appear in controller.quorum.voters

+ require(!voterAddressSpecsByNodeId.containsKey(nodeId),

+s"If ${KafkaConfig.ProcessRolesProp} contains just the 'broker' role,

the node id $nodeId must not be included in the set of voters

${KafkaConfig.QuorumVotersProp}=${voterAddressSpecsByNodeId.asScala.keySet.toSet}")

+ // controller.listener.names must be non-empty...

+ require(controllerListenerNames.exists(_.nonEmpty),

+s"${KafkaConfig.ControllerListenerNamesProp} must contain at least one

value when running KRaft with just the broker role")

+ // controller.listener.names are forbidden in listeners...

+ require(controllerListeners.isEmpty,

+s"${KafkaConfig.ControllerListenerNamesProp} must not contain a value

appearing in the '${KafkaConfig.ListenersProp}' configuration when running

KRaft with just

[GitHub] [kafka] rondagostino commented on a change in pull request #11503: KAFKA-13456: Tighten KRaft config checks/constraints

rondagostino commented on a change in pull request #11503:

URL: https://github.com/apache/kafka/pull/11503#discussion_r764367513

##

File path: core/src/test/scala/unit/kafka/server/KafkaConfigTest.scala

##

@@ -1000,46 +1139,78 @@ class KafkaConfigTest {

}

}

- def assertDistinctControllerAndAdvertisedListeners(): Unit = {

-val props = TestUtils.createBrokerConfig(0, TestUtils.MockZkConnect, port

= TestUtils.MockZkPort)

-val listeners = "PLAINTEXT://A:9092,SSL://B:9093,SASL_SSL://C:9094"

-props.put(KafkaConfig.ListenersProp, listeners)

-props.put(KafkaConfig.AdvertisedListenersProp,

"PLAINTEXT://A:9092,SSL://B:9093")

+ @Test

+ def assertDistinctControllerAndAdvertisedListenersAllowedForKRaftBroker():

Unit = {

+val props = new Properties()

+props.put(KafkaConfig.ProcessRolesProp, "broker")

+props.put(KafkaConfig.ListenersProp,

"PLAINTEXT://A:9092,SSL://B:9093,SASL_SSL://C:9094")

+props.put(KafkaConfig.AdvertisedListenersProp,

"PLAINTEXT://A:9092,SSL://B:9093") // explicitly setting it in KRaft

+props.put(KafkaConfig.ControllerListenerNamesProp, "SASL_SSL")

+props.put(KafkaConfig.NodeIdProp, "2")

+props.put(KafkaConfig.QuorumVotersProp, "3@localhost:9094")

+

+// invalid due to extra listener also appearing in controller listeners

+assertBadConfigContainingMessage(props,

+ "controller.listener.names must not contain a value appearing in the

'listeners' configuration when running KRaft with just the broker role")

+

// Valid now

-assertTrue(isValidKafkaConfig(props))

+props.put(KafkaConfig.ListenersProp, "PLAINTEXT://A:9092,SSL://B:9093")

+KafkaConfig.fromProps(props)

-// Still valid

-val controllerListeners = "SASL_SSL"

-props.put(KafkaConfig.ControllerListenerNamesProp, controllerListeners)

-assertTrue(isValidKafkaConfig(props))

+// Also valid if we let advertised listeners be derived from

listeners/controller.listener.names

+// since listeners and advertised.listeners are explicitly identical at

this point

+props.remove(KafkaConfig.AdvertisedListenersProp)

+KafkaConfig.fromProps(props)

}

@Test

- def assertAllControllerListenerCannotBeAdvertised(): Unit = {

-val props = TestUtils.createBrokerConfig(0, TestUtils.MockZkConnect, port

= TestUtils.MockZkPort)

+ def assertControllerListenersCannotBeAdvertisedForKRaftBroker(): Unit = {

+val props = new Properties()

+props.put(KafkaConfig.ProcessRolesProp, "broker,controller")

val listeners = "PLAINTEXT://A:9092,SSL://B:9093,SASL_SSL://C:9094"

props.put(KafkaConfig.ListenersProp, listeners)

-props.put(KafkaConfig.AdvertisedListenersProp, listeners)

+props.put(KafkaConfig.AdvertisedListenersProp, listeners) // explicitly

setting it in KRaft

+props.put(KafkaConfig.InterBrokerListenerNameProp, "SASL_SSL")

+props.put(KafkaConfig.ControllerListenerNamesProp, "PLAINTEXT,SSL")

+props.put(KafkaConfig.NodeIdProp, "2")

+props.put(KafkaConfig.QuorumVotersProp, "2@localhost:9092")

+assertBadConfigContainingMessage(props,

+ "advertised.listeners must not contain KRaft controller listeners from

controller.listener.names when process.roles contains the broker role")

+

// Valid now

-assertTrue(isValidKafkaConfig(props))

+props.put(KafkaConfig.AdvertisedListenersProp, "SASL_SSL://C:9094")

+KafkaConfig.fromProps(props)

-// Invalid now

-props.put(KafkaConfig.ControllerListenerNamesProp,

"PLAINTEXT,SSL,SASL_SSL")

-assertFalse(isValidKafkaConfig(props))

+// Also valid if we allow advertised listeners to derive from

listeners/controller.listener.names

+props.remove(KafkaConfig.AdvertisedListenersProp)

+KafkaConfig.fromProps(props)

}

@Test

- def assertEvenOneControllerListenerCannotBeAdvertised(): Unit = {

-val props = TestUtils.createBrokerConfig(0, TestUtils.MockZkConnect, port

= TestUtils.MockZkPort)

+ def assertAdvertisedListenersDisallowedForKRaftControllerOnlyRole(): Unit = {

Review comment:

> do we have a test for the scenario where SSL/SASL are in use and no

controller listener security mapping is defined

Yes, testControllerListenerNameMapsToPlaintextByDefaultForKRaft() has this

case.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] bbejeck commented on pull request #11573: KAFKA-13507: GlobalProcessor ignores user specified names

bbejeck commented on pull request #11573: URL: https://github.com/apache/kafka/pull/11573#issuecomment-988225508 Failures unrelated to this PR - kicked off another build - JDK 8 and Scala 2.12 / kafka.admin.LeaderElectionCommandTest.[1] Type=Raft, Name=testTopicPartition, Security=PLAINTEXT - JDK 11 and Scala 2.13 / org.apache.kafka.streams.integration.EosV2UpgradeIntegrationTest.shouldUpgradeFromEosAlphaToEosV2[true] - JDK 17 and Scala 2.13 / kafka.server.ReplicaManagerTest.[1] usesTopicIds=true -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] vvcephei commented on a change in pull request #11513: feat: Write and restore position to/from changelog

vvcephei commented on a change in pull request #11513:

URL: https://github.com/apache/kafka/pull/11513#discussion_r764279791

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/ProcessorContextImpl.java

##

@@ -110,17 +121,27 @@ public RecordCollector recordCollector() {

public void logChange(final String storeName,

final Bytes key,

final byte[] value,

- final long timestamp) {

+ final long timestamp,

+ final Position position) {

throwUnsupportedOperationExceptionIfStandby("logChange");

final TopicPartition changelogPartition =

stateManager().registeredChangelogPartitionFor(storeName);

-// Sending null headers to changelog topics (KIP-244)

+Headers headers = new RecordHeaders();

Review comment:

nit: I'd make this final with no assignment, then assign it in both

branches below.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] vvcephei commented on a change in pull request #11513: feat: Write and restore position to/from changelog

vvcephei commented on a change in pull request #11513:

URL: https://github.com/apache/kafka/pull/11513#discussion_r764278336

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/ChangelogRecordDeserializationHelper.java

##

@@ -0,0 +1,75 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.streams.processor.internals;

+

+import org.apache.kafka.clients.consumer.ConsumerRecord;

+import org.apache.kafka.common.header.Header;

+import org.apache.kafka.common.header.internals.RecordHeader;

+import org.apache.kafka.streams.errors.StreamsException;

+import org.apache.kafka.streams.query.Position;

+import org.apache.kafka.streams.state.internals.PositionSerde;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.nio.ByteBuffer;

+

+/**

+ * Changelog records without any headers are considered old format.

+ * New format changelog records will have a version in their headers.

+ * Version 0: This indicates that the changelog records have consistency

information.

+ */

+public class ChangelogRecordDeserializationHelper {

+public static final Logger log =

LoggerFactory.getLogger(ChangelogRecordDeserializationHelper.class);

+private static final byte[] V_0_CHANGELOG_VERSION_HEADER_VALUE = {(byte)

0};

+public static final String CHANGELOG_VERSION_HEADER_KEY = "v";

+public static final String CHANGELOG_POSITION_HEADER_KEY = "c";

+public static final RecordHeader

CHANGELOG_VERSION_HEADER_RECORD_CONSISTENCY = new RecordHeader(

+CHANGELOG_VERSION_HEADER_KEY, V_0_CHANGELOG_VERSION_HEADER_VALUE);

+

+public static Position applyChecksAndUpdatePosition(

+final ConsumerRecord record,

+final boolean consistencyEnabled,

+final Position position

+) {

+Position restoredPosition = Position.emptyPosition();

+if (!consistencyEnabled) {

+return Position.emptyPosition();

Review comment:

Even though I think this is a bit off, I'm going to go ahead and merge

it, so we can fix forward. The overall feature isn't fully implemented yet

anyway, so this will have no negative effects if we release the branch right

now.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] vvcephei commented on a change in pull request #11513: feat: Write and restore position to/from changelog

vvcephei commented on a change in pull request #11513:

URL: https://github.com/apache/kafka/pull/11513#discussion_r764277280

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/ChangelogRecordDeserializationHelper.java

##

@@ -0,0 +1,75 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.streams.processor.internals;

+

+import org.apache.kafka.clients.consumer.ConsumerRecord;

+import org.apache.kafka.common.header.Header;

+import org.apache.kafka.common.header.internals.RecordHeader;

+import org.apache.kafka.streams.errors.StreamsException;

+import org.apache.kafka.streams.query.Position;

+import org.apache.kafka.streams.state.internals.PositionSerde;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.nio.ByteBuffer;

+

+/**

+ * Changelog records without any headers are considered old format.

+ * New format changelog records will have a version in their headers.

+ * Version 0: This indicates that the changelog records have consistency

information.

+ */

+public class ChangelogRecordDeserializationHelper {

+public static final Logger log =

LoggerFactory.getLogger(ChangelogRecordDeserializationHelper.class);

+private static final byte[] V_0_CHANGELOG_VERSION_HEADER_VALUE = {(byte)

0};

+public static final String CHANGELOG_VERSION_HEADER_KEY = "v";

+public static final String CHANGELOG_POSITION_HEADER_KEY = "c";

+public static final RecordHeader

CHANGELOG_VERSION_HEADER_RECORD_CONSISTENCY = new RecordHeader(

+CHANGELOG_VERSION_HEADER_KEY, V_0_CHANGELOG_VERSION_HEADER_VALUE);

+

+public static Position applyChecksAndUpdatePosition(

+final ConsumerRecord record,

+final boolean consistencyEnabled,

+final Position position

+) {

+Position restoredPosition = Position.emptyPosition();

+if (!consistencyEnabled) {

+return Position.emptyPosition();

Review comment:

Shouldn't these be returning `position`? This method's contract is to

"update" the position, not "replace" it, right?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] vvcephei commented on a change in pull request #11513: feat: Write and restore position to/from changelog

vvcephei commented on a change in pull request #11513:

URL: https://github.com/apache/kafka/pull/11513#discussion_r764271813

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/ChangelogRecordDeserializationHelper.java

##

@@ -0,0 +1,75 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.streams.processor.internals;

+

+import org.apache.kafka.clients.consumer.ConsumerRecord;

+import org.apache.kafka.common.header.Header;

+import org.apache.kafka.common.header.internals.RecordHeader;

+import org.apache.kafka.streams.errors.StreamsException;

+import org.apache.kafka.streams.query.Position;

+import org.apache.kafka.streams.state.internals.PositionSerde;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.nio.ByteBuffer;

+

+/**

+ * Changelog records without any headers are considered old format.

+ * New format changelog records will have a version in their headers.

+ * Version 0: This indicates that the changelog records have consistency

information.

+ */

+public class ChangelogRecordDeserializationHelper {

+public static final Logger log =

LoggerFactory.getLogger(ChangelogRecordDeserializationHelper.class);

+private static final byte[] V_0_CHANGELOG_VERSION_HEADER_VALUE = {(byte)

0};

+public static final String CHANGELOG_VERSION_HEADER_KEY = "v";

+public static final String CHANGELOG_POSITION_HEADER_KEY = "c";

+public static final RecordHeader

CHANGELOG_VERSION_HEADER_RECORD_CONSISTENCY = new RecordHeader(

+CHANGELOG_VERSION_HEADER_KEY, V_0_CHANGELOG_VERSION_HEADER_VALUE);

+

+public static Position applyChecksAndUpdatePosition(

+final ConsumerRecord record,

+final boolean consistencyEnabled,

+final Position position

+) {

+Position restoredPosition = Position.emptyPosition();

+if (!consistencyEnabled) {

Review comment:

Good point! @vpapavas , can you handle stuff like this in a follow-on

PR? I'm doing a final pass to try and get this one merged.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on a change in pull request #11046: KAFKA-12980: Return empty record batch from Consumer::poll when position advances due to aborted transactions

hachikuji commented on a change in pull request #11046:

URL: https://github.com/apache/kafka/pull/11046#discussion_r764255533

##

File path:

clients/src/main/java/org/apache/kafka/clients/consumer/internals/Fetcher.java

##

@@ -725,17 +725,13 @@ public void onFailure(RuntimeException e) {

completedFetch.nextFetchOffset,

completedFetch.lastEpoch,

position.currentLeader);

-log.trace("Update fetching position to {} for partition

{}", nextPosition, completedFetch.partition);

+log.trace("Updating fetch position from {} to {} for

partition {} and returning {} records from `poll()`",

+position, nextPosition, completedFetch.partition,

partRecords.size());

subscriptions.position(completedFetch.partition,

nextPosition);

positionAdvanced = true;

if (partRecords.isEmpty()) {

-log.debug(

-"Advanced position for partition {} without

receiving any user-visible records. "

-+ "This is likely due to skipping over

control records in the current fetch, "

-+ "and may result in the consumer

returning an empty record batch when "

-+ "polled before its poll timeout has

elapsed.",

-completedFetch.partition

-);

+log.trace("Returning empty records from `poll()` "

++ "since the consumer's position has advanced

for at least one topic partition");

Review comment:

I'm inclined to either remove the log line entirely or move it back to

its former location in `KafkaConsumer`. Will leave it up to you.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on a change in pull request #11503: KAFKA-13456: Tighten KRaft config checks/constraints

hachikuji commented on a change in pull request #11503:

URL: https://github.com/apache/kafka/pull/11503#discussion_r764232658

##

File path: core/src/main/scala/kafka/server/KafkaConfig.scala

##

@@ -1959,10 +1962,26 @@ class KafkaConfig private(doLog: Boolean, val props:

java.util.Map[_, _], dynami

}

}

- def listenerSecurityProtocolMap: Map[ListenerName, SecurityProtocol] = {

-getMap(KafkaConfig.ListenerSecurityProtocolMapProp,

getString(KafkaConfig.ListenerSecurityProtocolMapProp))

+ def effectiveListenerSecurityProtocolMap: Map[ListenerName,

SecurityProtocol] = {

+val mapValue = getMap(KafkaConfig.ListenerSecurityProtocolMapProp,

getString(KafkaConfig.ListenerSecurityProtocolMapProp))

.map { case (listenerName, protocolName) =>

- ListenerName.normalised(listenerName) ->

getSecurityProtocol(protocolName, KafkaConfig.ListenerSecurityProtocolMapProp)

+ListenerName.normalised(listenerName) ->

getSecurityProtocol(protocolName, KafkaConfig.ListenerSecurityProtocolMapProp)

+ }

+if (usesSelfManagedQuorum &&

!originals.containsKey(ListenerSecurityProtocolMapProp)) {

+ // Nothing was specified explicitly for listener.security.protocol.map,

so we are using the default value,

+ // and we are using KRaft.

+ // Add PLAINTEXT mappings for controller listeners as long as there is

no SSL or SASL_{PLAINTEXT,SSL} in use

+ def isSslOrSasl(name: String) : Boolean =

name.equals(SecurityProtocol.SSL.name) ||

name.equals(SecurityProtocol.SASL_SSL.name) ||

name.equals(SecurityProtocol.SASL_PLAINTEXT.name)

+ if (controllerListenerNames.exists(isSslOrSasl) ||

Review comment:

Checking my understanding. Is the first clause here necessary for the

broker-only case in which the controller listener names are not included in

`listeners`? A comment to that effect might be useful.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on a change in pull request #11503: KAFKA-13456: Tighten KRaft config checks/constraints

hachikuji commented on a change in pull request #11503:

URL: https://github.com/apache/kafka/pull/11503#discussion_r764221288

##

File path: core/src/main/scala/kafka/server/KafkaConfig.scala

##

@@ -748,7 +749,8 @@ object KafkaConfig {

"Different security (SSL and SASL) settings can be configured for each

listener by adding a normalised " +

"prefix (the listener name is lowercased) to the config name. For example,

to set a different keystore for the " +

"INTERNAL listener, a config with name

listener.name.internal.ssl.keystore.location would be set. " +

-"If the config for the listener name is not set, the config will fallback

to the generic config (i.e. ssl.keystore.location). "

+"If the config for the listener name is not set, the config will fallback

to the generic config (i.e. ssl.keystore.location). " +

+"Note that in KRaft an additional default mapping CONTROLLER to PLAINTEXT

is added."

Review comment:

How about this?

> Note that in KRaft, a default mapping from the listener names defined by

`controller.listener.names` to PLAINTEXT is assumed if no explicit mapping is

provided and no other security protocol is in use.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on a change in pull request #11503: KAFKA-13456: Tighten KRaft config checks/constraints

hachikuji commented on a change in pull request #11503:

URL: https://github.com/apache/kafka/pull/11503#discussion_r764232658

##

File path: core/src/main/scala/kafka/server/KafkaConfig.scala

##

@@ -1959,10 +1962,26 @@ class KafkaConfig private(doLog: Boolean, val props:

java.util.Map[_, _], dynami

}

}

- def listenerSecurityProtocolMap: Map[ListenerName, SecurityProtocol] = {

-getMap(KafkaConfig.ListenerSecurityProtocolMapProp,

getString(KafkaConfig.ListenerSecurityProtocolMapProp))

+ def effectiveListenerSecurityProtocolMap: Map[ListenerName,

SecurityProtocol] = {

+val mapValue = getMap(KafkaConfig.ListenerSecurityProtocolMapProp,

getString(KafkaConfig.ListenerSecurityProtocolMapProp))

.map { case (listenerName, protocolName) =>

- ListenerName.normalised(listenerName) ->

getSecurityProtocol(protocolName, KafkaConfig.ListenerSecurityProtocolMapProp)

+ListenerName.normalised(listenerName) ->

getSecurityProtocol(protocolName, KafkaConfig.ListenerSecurityProtocolMapProp)

+ }

+if (usesSelfManagedQuorum &&

!originals.containsKey(ListenerSecurityProtocolMapProp)) {

+ // Nothing was specified explicitly for listener.security.protocol.map,

so we are using the default value,

+ // and we are using KRaft.

+ // Add PLAINTEXT mappings for controller listeners as long as there is

no SSL or SASL_{PLAINTEXT,SSL} in use

+ def isSslOrSasl(name: String) : Boolean =

name.equals(SecurityProtocol.SSL.name) ||

name.equals(SecurityProtocol.SASL_SSL.name) ||

name.equals(SecurityProtocol.SASL_PLAINTEXT.name)

+ if (controllerListenerNames.exists(isSslOrSasl) ||

Review comment:

Checking my understanding. Is the first clause here necessary for the

broker-only case in which the controller listener names are not included in

`listeners`? A comment to that effect that might be useful.

##

File path: core/src/main/scala/kafka/server/KafkaConfig.scala

##

@@ -2018,12 +2025,103 @@ class KafkaConfig private(doLog: Boolean, val props:

java.util.Map[_, _], dynami

"offsets.commit.required.acks must be greater or equal -1 and less or

equal to offsets.topic.replication.factor")

require(BrokerCompressionCodec.isValid(compressionType), "compression.type

: " + compressionType + " is not valid." +

" Valid options are " +

BrokerCompressionCodec.brokerCompressionOptions.mkString(","))

-require(!processRoles.contains(ControllerRole) ||

controllerListeners.nonEmpty,

- s"${KafkaConfig.ControllerListenerNamesProp} cannot be empty if the

server has the controller role")

+val advertisedListenerNames =

effectiveAdvertisedListeners.map(_.listenerName).toSet

+

+// validate KRaft-related configs

+val voterAddressSpecsByNodeId =

RaftConfig.parseVoterConnections(quorumVoters)

+def validateNonEmptyQuorumVotersForKRaft(): Unit = {

+ if (voterAddressSpecsByNodeId.isEmpty) {

+throw new ConfigException(s"If using ${KafkaConfig.ProcessRolesProp},

${KafkaConfig.QuorumVotersProp} must contain a parseable set of voters.")

+ }

+}

+def validateControlPlaneListenerEmptyForKRaft(): Unit = {

+ require(controlPlaneListenerName.isEmpty,

+s"${KafkaConfig.ControlPlaneListenerNameProp} is not supported in

KRaft mode. KRaft uses ${KafkaConfig.ControllerListenerNamesProp} instead.")

+}

+val sourceOfAdvertisedListeners: String =

+ if (getString(KafkaConfig.AdvertisedListenersProp) != null)

+s"${KafkaConfig.AdvertisedListenersProp}"

+ else

+s"${KafkaConfig.ListenersProp}"

+def

validateAdvertisedListenersDoesNotContainControllerListenersForKRaftBroker():

Unit = {

+ require(!advertisedListenerNames.exists(aln =>

controllerListenerNames.contains(aln.value())),

+s"$sourceOfAdvertisedListeners must not contain KRaft controller

listeners from ${KafkaConfig.ControllerListenerNamesProp} when

${KafkaConfig.ProcessRolesProp} contains the broker role because Kafka clients

that send requests via advertised listeners do not send requests to KRaft

controllers -- they only send requests to KRaft brokers.")

Review comment:

nit: The generality here seems misleading. We explicitly allow the

controller listeners to be included among `listeners` even when

`advertised.listeners` is empty. Hence I don't think it would ever be possible

for `sourceOfAdvertisedListeners` to refer to `listeners` here.

##

File path: core/src/test/scala/unit/kafka/server/KafkaConfigTest.scala

##

@@ -1000,46 +1139,78 @@ class KafkaConfigTest {

}

}

- def assertDistinctControllerAndAdvertisedListeners(): Unit = {

-val props = TestUtils.createBrokerConfig(0, TestUtils.MockZkConnect, port

= TestUtils.MockZkPort)

-val listeners = "PLAINTEXT://A:9092,SSL://B:9093,SASL_SSL://C:9094"

-props.put(KafkaConfig.ListenersProp, listeners)

-props.put(KafkaConfig.AdvertisedListenersProp,

[jira] [Created] (KAFKA-13514) Flakey test StickyAssignorTest

Matthias J. Sax created KAFKA-13514:

---

Summary: Flakey test StickyAssignorTest

Key: KAFKA-13514

URL: https://issues.apache.org/jira/browse/KAFKA-13514

Project: Kafka

Issue Type: Test

Components: clients, unit tests

Reporter: Matthias J. Sax

org.apache.kafka.clients.consumer.StickyAssignorTest.testLargeAssignmentAndGroupWithNonEqualSubscription()

No real stack trace, but only:

{quote}java.util.concurrent.TimeoutException:

testLargeAssignmentAndGroupWithNonEqualSubscription() timed out after 60

seconds{quote}

STDOUT

{quote}[2021-12-07 01:32:23,920] ERROR Found multiple consumers consumer1 and

consumer2 claiming the same TopicPartition topic-0 in the same generation -1,

this will be invalidated and removed from their previous assignment.

(org.apache.kafka.clients.consumer.internals.AbstractStickyAssignor:150)

[2021-12-07 01:32:58,964] ERROR Found multiple consumers consumer1 and

consumer2 claiming the same TopicPartition topic-0 in the same generation -1,

this will be invalidated and removed from their previous assignment.

(org.apache.kafka.clients.consumer.internals.AbstractStickyAssignor:150)

[2021-12-07 01:32:58,976] ERROR Found multiple consumers consumer1 and

consumer2 claiming the same TopicPartition topic-0 in the same generation -1,

this will be invalidated and removed from their previous assignment.

(org.apache.kafka.clients.consumer.internals.AbstractStickyAssignor:150){quote}

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Created] (KAFKA-13513) Flaky test AdjustStreamThreadCountTest

Matthias J. Sax created KAFKA-13513:

---

Summary: Flaky test AdjustStreamThreadCountTest

Key: KAFKA-13513

URL: https://issues.apache.org/jira/browse/KAFKA-13513

Project: Kafka

Issue Type: Test

Components: streams, unit tests

Reporter: Matthias J. Sax

org.apache.kafka.streams.integration.AdjustStreamThreadCountTest.testConcurrentlyAccessThreads

{quote}java.lang.AssertionError: expected null, but

was: at

org.junit.Assert.fail(Assert.java:89) at

org.junit.Assert.failNotNull(Assert.java:756) at

org.junit.Assert.assertNull(Assert.java:738) at

org.junit.Assert.assertNull(Assert.java:748) at

org.apache.kafka.streams.integration.AdjustStreamThreadCountTest.testConcurrentlyAccessThreads(AdjustStreamThreadCountTest.java:367)

{quote}

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[jira] [Created] (KAFKA-13512) topicIdsToNames and topicNamesToIds allocate unnecessary maps

Justine Olshan created KAFKA-13512:

--

Summary: topicIdsToNames and topicNamesToIds allocate unnecessary

maps

Key: KAFKA-13512

URL: https://issues.apache.org/jira/browse/KAFKA-13512

Project: Kafka

Issue Type: Bug

Affects Versions: 3.1.0

Reporter: Justine Olshan

Assignee: Justine Olshan

Currently we write the methods as follows:

{{def topicNamesToIds(): util.Map[String, Uuid] = {}}

{{ new util.HashMap(metadataSnapshot.topicIds.asJava)}}

{{}}}

We do not need to allocate a new map however, we can simply use

{{Collections.unmodifiableMap(metadataSnapshot.topicIds.asJava)}}

We can do something similar for the topicIdsToNames implementation.

--

This message was sent by Atlassian Jira

(v8.20.1#820001)

[GitHub] [kafka] cadonna merged pull request #11561: MINOR: Bump version of grgit to 4.1.1

cadonna merged pull request #11561: URL: https://github.com/apache/kafka/pull/11561 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] cadonna merged pull request #11574: MINOR: Fix internal topic manager tests

cadonna merged pull request #11574: URL: https://github.com/apache/kafka/pull/11574 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] cadonna commented on pull request #11574: MINOR: Fix internal topic manager tests

cadonna commented on pull request #11574: URL: https://github.com/apache/kafka/pull/11574#issuecomment-988124933 Test failures are not related. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org