[jira] [Comment Edited] (KAFKA-14014) Flaky test NamedTopologyIntegrationTest.shouldAllowRemovingAndAddingNamedTopologyToRunningApplicationWithMultipleNodesAndResetsOffsets()

[

https://issues.apache.org/jira/browse/KAFKA-14014?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573211#comment-17573211

]

Matthew de Detrich edited comment on KAFKA-14014 at 7/30/22 5:57 AM:

-

In case people want to reproduce the flakiness, assuming you have a working

docker installation you can do the following

{code:java}

docker run -it --cpus=2 --rm -u gradle -v "$PWD":/home/gradle/project -w

/home/gradle/project gradle sh{code}

where cpus=2 is how you can toggle how many cpus you want (there is a tradeoff

between higher occurrence to encounter the flakiness vs how fast the test

runs). I wouldn't recommend doing lower than cpus=2 otherwise even building the

kafak project in gradle can take ages.

The above command will put you into a shell at which point you can do

{code:java}

while [ $? -eq 0 ]; do ./gradlew :streams:test --tests

org.apache.kafka.streams.integration.NamedTopologyIntegrationTest.shouldAllowRemovingAndAddingNamedTopologyToRunningApplicationWithMultipleNodesAndResetsOffsets;

done{code}

Which will re-run the tests until there is a failure.

Note that due to how Gradle cache's test runs, you need to do something like

[https://github.com/gradle/gradle/issues/9151#issue-434212465] in order to

force gradle to re-run the test every time.

was (Author: mdedetrich-aiven):

In case people want to reproduce the flakiness, assuming you have a working

docker installation you can do the following

{code:java}

docker run -it --cpus=2 --rm -u gradle -v "$PWD":/home/gradle/project -w

/home/gradle/project gradle sh{code}

where cpus=2 is how you can toggle how many cpus you want (there is a tradeoff

between higher occurrence to encounter the flakiness vs how fast the test

runs). I wouldn't recommend doing lower than cpus=2 otherwise even building the

kafak project in gradle can take ages.

The above command will put you into a shell at which point you can do

{code:java}

while [ $? -eq 0 ]; do ./gradlew :streams:test --tests

org.apache.kafka.streams.integration.NamedTopologyIntegrationTest.shouldAllowRemovingAndAddingNamedTopologyToRunningApplicationWithMultipleNodesAndResetsOffsets;

done{code}

Which will re-run the tests until there is a failure.

> Flaky test

> NamedTopologyIntegrationTest.shouldAllowRemovingAndAddingNamedTopologyToRunningApplicationWithMultipleNodesAndResetsOffsets()

>

>

> Key: KAFKA-14014

> URL: https://issues.apache.org/jira/browse/KAFKA-14014

> Project: Kafka

> Issue Type: Test

> Components: streams

>Reporter: Bruno Cadonna

>Priority: Critical

> Labels: flaky-test

>

> {code:java}

> java.lang.AssertionError:

> Expected: <[KeyValue(B, 1), KeyValue(A, 2), KeyValue(C, 2)]>

> but: was <[KeyValue(B, 1), KeyValue(A, 2), KeyValue(C, 1)]>

> at org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:20)

> at org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:6)

> at

> org.apache.kafka.streams.integration.NamedTopologyIntegrationTest.shouldAllowRemovingAndAddingNamedTopologyToRunningApplicationWithMultipleNodesAndResetsOffsets(NamedTopologyIntegrationTest.java:540)

> at

> java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

> at

> java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.base/java.lang.reflect.Method.invoke(Method.java:568)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> at

> org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:299)

> at

> org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:293)

> at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

> at java.base/java.lang.Thread.run(Thread.java:833)

> {code}

>

[jira] [Commented] (KAFKA-14014) Flaky test NamedTopologyIntegrationTest.shouldAllowRemovingAndAddingNamedTopologyToRunningApplicationWithMultipleNodesAndResetsOffsets()

[

https://issues.apache.org/jira/browse/KAFKA-14014?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573211#comment-17573211

]

Matthew de Detrich commented on KAFKA-14014:

In case people want to reproduce the flakiness, assuming you have a working

docker installation you can do the following

{code:java}

docker run -it --cpus=2 --rm -u gradle -v "$PWD":/home/gradle/project -w

/home/gradle/project gradle sh{code}

where cpus=2 is how you can toggle how many cpus you want (there is a tradeoff

between higher occurrence to encounter the flakiness vs how fast the test

runs). I wouldn't recommend doing lower than cpus=2 otherwise even building the

kafak project in gradle can take ages.

The above command will put you into a shell at which point you can do

{code:java}

while [ $? -eq 0 ]; do ./gradlew :streams:test --tests

org.apache.kafka.streams.integration.NamedTopologyIntegrationTest.shouldAllowRemovingAndAddingNamedTopologyToRunningApplicationWithMultipleNodesAndResetsOffsets;

done{code}

Which will re-run the tests until there is a failure.

> Flaky test

> NamedTopologyIntegrationTest.shouldAllowRemovingAndAddingNamedTopologyToRunningApplicationWithMultipleNodesAndResetsOffsets()

>

>

> Key: KAFKA-14014

> URL: https://issues.apache.org/jira/browse/KAFKA-14014

> Project: Kafka

> Issue Type: Test

> Components: streams

>Reporter: Bruno Cadonna

>Priority: Critical

> Labels: flaky-test

>

> {code:java}

> java.lang.AssertionError:

> Expected: <[KeyValue(B, 1), KeyValue(A, 2), KeyValue(C, 2)]>

> but: was <[KeyValue(B, 1), KeyValue(A, 2), KeyValue(C, 1)]>

> at org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:20)

> at org.hamcrest.MatcherAssert.assertThat(MatcherAssert.java:6)

> at

> org.apache.kafka.streams.integration.NamedTopologyIntegrationTest.shouldAllowRemovingAndAddingNamedTopologyToRunningApplicationWithMultipleNodesAndResetsOffsets(NamedTopologyIntegrationTest.java:540)

> at

> java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

> at

> java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.base/java.lang.reflect.Method.invoke(Method.java:568)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> at

> org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:299)

> at

> org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:293)

> at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

> at java.base/java.lang.Thread.run(Thread.java:833)

> {code}

> https://ci-builds.apache.org/job/Kafka/job/kafka-pr/job/PR-12310/2/testReport/junit/org.apache.kafka.streams.integration/NamedTopologyIntegrationTest/Build___JDK_11_and_Scala_2_13___shouldAllowRemovingAndAddingNamedTopologyToRunningApplicationWithMultipleNodesAndResetsOffsets/

> https://ci-builds.apache.org/job/Kafka/job/kafka-pr/job/PR-12310/2/testReport/junit/org.apache.kafka.streams.integration/NamedTopologyIntegrationTest/Build___JDK_17_and_Scala_2_13___shouldAllowRemovingAndAddingNamedTopologyToRunningApplicationWithMultipleNodesAndResetsOffsets/

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[GitHub] [kafka] vvcephei commented on a diff in pull request #12458: MINOR: Adds KRaft versions of most streams system tests

vvcephei commented on code in PR #12458: URL: https://github.com/apache/kafka/pull/12458#discussion_r933727376 ## tests/kafkatest/tests/streams/streams_broker_bounce_test.py: ## @@ -205,11 +211,17 @@ def collect_results(self, sleep_time_secs): return data @cluster(num_nodes=7) +@matrix(failure_mode=["clean_shutdown", "hard_shutdown", "clean_bounce", "hard_bounce"], +broker_type=["leader"], +num_threads=[1, 3], +sleep_time_secs=[120], +metadata_quorum=[quorum.remote_kraft]) @matrix(failure_mode=["clean_shutdown", "hard_shutdown", "clean_bounce", "hard_bounce"], broker_type=["leader", "controller"], num_threads=[1, 3], sleep_time_secs=[120]) Review Comment: Thanks, @AlanConfluent . I was actually making a slightly different suggestion, namely to explicitly include `metadata_quorum=[quorum.zk]` in this second matrix config, and to remove the default parameter value `metadata_quorum=quorum.zk` from the method signature. Github charmingly won't let me do a suggestion to make this clearer because the diff includes a deleted line. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] divijvaidya commented on pull request #12459: KAFKA-13036: Replace EasyMock and PowerMock with Mockito for RocksDBMetricsRecorderTest

divijvaidya commented on PR #12459: URL: https://github.com/apache/kafka/pull/12459#issuecomment-1200048488 @ableegoldman @cadonna please help review this test in the `streams` domain. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] mumrah commented on a diff in pull request #12447: KAFKA-14124: improve quorum controller fault handling

mumrah commented on code in PR #12447:

URL: https://github.com/apache/kafka/pull/12447#discussion_r933689628

##

metadata/src/main/java/org/apache/kafka/controller/QuorumController.java:

##

@@ -728,6 +746,23 @@ public void run() throws Exception {

"reaches offset {}", this, resultAndOffset.offset());

}

} else {

+// Start by trying to apply the record to our in-memory state.

This should always

+// succeed; if it does not, that's a fatal error. It is

important to do this before

+// scheduling the record for Raft replication.

+int i = 1;

+for (ApiMessageAndVersion message : result.records()) {

+try {

+replay(message.message(), Optional.empty());

+} catch (Throwable e) {

+String failureMessage = String.format("Unable to apply

%s record, which was " +

+"%d of %d record(s) in the batch following last

writeOffset %d.",

+message.message().getClass().getSimpleName(), i,

result.records().size(),

+writeOffset);

+fatalFaultHandler.handleFault(failureMessage, e);

+}

+i++;

+}

+

// If the operation returned a batch of records, those records

need to be

Review Comment:

We should update this comment to something like "if the records could be

applied ... "

##

metadata/src/main/java/org/apache/kafka/controller/QuorumController.java:

##

@@ -862,13 +903,9 @@ public void

handleSnapshot(SnapshotReader reader) {

appendRaftEvent(String.format("handleSnapshot[snapshotId=%s]",

reader.snapshotId()), () -> {

try {

if (isActiveController()) {

-throw new IllegalStateException(

-String.format(

-"Asked to load snapshot (%s) when it is the

active controller (%d)",

-reader.snapshotId(),

-curClaimEpoch

-)

-);

+fatalFaultHandler.handleFault(String.format("Asked to

load snapshot " +

+"(%s) when it is the active controller (%d)",

reader.snapshotId(),

+curClaimEpoch), null);

Review Comment:

Can call the default method on the fault handler here instead of passing null

##

metadata/src/main/java/org/apache/kafka/controller/QuorumController.java:

##

@@ -1246,70 +1293,60 @@ private void handleFeatureControlChange() {

}

@SuppressWarnings("unchecked")

-private void replay(ApiMessage message, Optional

snapshotId, long offset) {

-try {

-MetadataRecordType type =

MetadataRecordType.fromId(message.apiKey());

-switch (type) {

-case REGISTER_BROKER_RECORD:

-clusterControl.replay((RegisterBrokerRecord) message);

-break;

-case UNREGISTER_BROKER_RECORD:

-clusterControl.replay((UnregisterBrokerRecord) message);

-break;

-case TOPIC_RECORD:

-replicationControl.replay((TopicRecord) message);

-break;

-case PARTITION_RECORD:

-replicationControl.replay((PartitionRecord) message);

-break;

-case CONFIG_RECORD:

-configurationControl.replay((ConfigRecord) message);

-break;

-case PARTITION_CHANGE_RECORD:

-replicationControl.replay((PartitionChangeRecord) message);

-break;

-case FENCE_BROKER_RECORD:

-clusterControl.replay((FenceBrokerRecord) message);

-break;

-case UNFENCE_BROKER_RECORD:

-clusterControl.replay((UnfenceBrokerRecord) message);

-break;

-case REMOVE_TOPIC_RECORD:

-replicationControl.replay((RemoveTopicRecord) message);

-break;

-case FEATURE_LEVEL_RECORD:

-featureControl.replay((FeatureLevelRecord) message);

-handleFeatureControlChange();

-break;

-case CLIENT_QUOTA_RECORD:

-clientQuotaControlManager.replay((ClientQuotaRecord)

message);

-break;

-case PRODUCER_IDS_RECORD:

-producerIdControlManager.replay((ProducerIdsRecord)

message);

-break;

-case BROKER_REGISTRATION_CHANGE_RECORD:

-

[GitHub] [kafka] andymg3 commented on a diff in pull request #12305: MINOR: Add __cluster_metadata topic to list of internal topics

andymg3 commented on code in PR #12305:

URL: https://github.com/apache/kafka/pull/12305#discussion_r933670311

##

clients/src/main/java/org/apache/kafka/common/internals/Topic.java:

##

@@ -33,7 +33,7 @@ public class Topic {

public static final String LEGAL_CHARS = "[a-zA-Z0-9._-]";

private static final Set INTERNAL_TOPICS =

Collections.unmodifiableSet(

-Utils.mkSet(GROUP_METADATA_TOPIC_NAME,

TRANSACTION_STATE_TOPIC_NAME));

+Utils.mkSet(GROUP_METADATA_TOPIC_NAME,

TRANSACTION_STATE_TOPIC_NAME, METADATA_TOPIC_NAME));

Review Comment:

@showuon @divijvaidya @hachikuji I took a little into adding a conditional

check. One thing that is tricky is the `isInternal` method

(https://github.com/apache/kafka/blob/trunk/clients/src/main/java/org/apache/kafka/common/internals/Topic.java#L59)

is used client side. For example, it’s used in TopicCommand.scala

(https://github.com/apache/kafka/blob/trunk/core/src/main/scala/kafka/admin/TopicCommand.scala#L427).

I don’t think it makes sense for the client to be in KRaft or ZK mode. During

an upgrade its possible there’s a mix or KRaft and ZK brokers, for example.

Furthermore, I can’t image we’d expect the client to provide metadata for

whether we’re in KRaft or ZK mode so I don’t know how we could know what mode

we’re even in.

Thus, I’m not sure how we can correctly handle sometimes including the the

__cluster_metadata topic and sometimes not. As I mentioned above, it seems

possible we will have this issue again in the future. Doesn’t seem unlikely new

internal topics will be created.

My feeling is we should include __cluster_metadata as an internal topic and

document it as a breaking change as such. Other solutions that start involving

the client feel overly complex. Open to thoughts and/or any other better ideas

folks might have though.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (KAFKA-13877) Flaky RackAwarenessIntegrationTest.shouldDistributeStandbyReplicasOverMultipleClientTags

[

https://issues.apache.org/jira/browse/KAFKA-13877?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573180#comment-17573180

]

Guozhang Wang commented on KAFKA-13877:

---

[~lkokhreidze] ping again, please let me know if you are still working on it.

> Flaky

> RackAwarenessIntegrationTest.shouldDistributeStandbyReplicasOverMultipleClientTags

>

>

> Key: KAFKA-13877

> URL: https://issues.apache.org/jira/browse/KAFKA-13877

> Project: Kafka

> Issue Type: Bug

> Components: streams, unit tests

>Reporter: Guozhang Wang

>Assignee: Levani Kokhreidze

>Priority: Major

> Labels: newbie

>

> The following test fails on local testbeds about once per 10-15 runs:

> {code}

> java.lang.AssertionError

> at org.junit.Assert.fail(Assert.java:87)

> at org.junit.Assert.assertTrue(Assert.java:42)

> at org.junit.Assert.assertTrue(Assert.java:53)

> at

> org.apache.kafka.streams.integration.RackAwarenessIntegrationTest.shouldDistributeStandbyReplicasOverMultipleClientTags(RackAwarenessIntegrationTest.java:192)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at

> sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at

> sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at

> org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)

> at

> org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

> at

> org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)

> at

> org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at

> org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

> at org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:61)

> at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)

> at

> org.junit.runners.BlockJUnit4ClassRunner$1.evaluate(BlockJUnit4ClassRunner.java:100)

> at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:366)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:103)

> at

> org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:63)

> at org.junit.runners.ParentRunner$4.run(ParentRunner.java:331)

> at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:79)

> at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:329)

> at org.junit.runners.ParentRunner.access$100(ParentRunner.java:66)

> at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:293)

> at

> org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

> at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)

> at org.junit.runners.ParentRunner.run(ParentRunner.java:413)

> at org.junit.runner.JUnitCore.run(JUnitCore.java:137)

> at

> com.intellij.junit4.JUnit4IdeaTestRunner.startRunnerWithArgs(JUnit4IdeaTestRunner.java:68)

> at

> com.intellij.rt.junit.IdeaTestRunner$Repeater.startRunnerWithArgs(IdeaTestRunner.java:53)

> at

> com.intellij.rt.junit.JUnitStarter.prepareStreamsAndStart(JUnitStarter.java:230)

> at com.intellij.rt.junit.JUnitStarter.main(JUnitStarter.java:58)

> {code}

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[GitHub] [kafka] guozhangwang commented on pull request #12461: Minor: enable index for emit final sliding window

guozhangwang commented on PR #12461: URL: https://github.com/apache/kafka/pull/12461#issuecomment-1199966654 LGTM! Merged to trunk -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] guozhangwang merged pull request #12461: Minor: enable index for emit final sliding window

guozhangwang merged PR #12461: URL: https://github.com/apache/kafka/pull/12461 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] AlanConfluent commented on a diff in pull request #12458: MINOR: Adds KRaft versions of most streams system tests

AlanConfluent commented on code in PR #12458: URL: https://github.com/apache/kafka/pull/12458#discussion_r933614497 ## tests/kafkatest/tests/streams/streams_standby_replica_test.py: ## @@ -73,9 +77,9 @@ def test_standby_tasks_rebalance(self): processor_3.start() -self.wait_for_verification(processor_1, "ACTIVE_TASKS:2 STANDBY_TASKS:[1-3]", processor_1.STDOUT_FILE) -self.wait_for_verification(processor_2, "ACTIVE_TASKS:2 STANDBY_TASKS:[1-3]", processor_2.STDOUT_FILE) -self.wait_for_verification(processor_3, "ACTIVE_TASKS:2 STANDBY_TASKS:[1-3]", processor_3.STDOUT_FILE) +self.wait_for_verification(processor_1, "ACTIVE_TASKS:2 STANDBY_TASKS:2", processor_1.STDOUT_FILE) +self.wait_for_verification(processor_2, "ACTIVE_TASKS:2 STANDBY_TASKS:2", processor_2.STDOUT_FILE) +self.wait_for_verification(processor_3, "ACTIVE_TASKS:2 STANDBY_TASKS:2", processor_3.STDOUT_FILE) Review Comment: Ahh, I know how this change got made. I had made my changes in another repo locally and copied the file over to this one... Must have been made by another commit in the original repo. Will revert this and make sure there are no similar changes elsewhere. ## tests/kafkatest/tests/streams/streams_broker_bounce_test.py: ## @@ -205,11 +211,17 @@ def collect_results(self, sleep_time_secs): return data @cluster(num_nodes=7) +@matrix(failure_mode=["clean_shutdown", "hard_shutdown", "clean_bounce", "hard_bounce"], +broker_type=["leader"], +num_threads=[1, 3], +sleep_time_secs=[120], +metadata_quorum=[quorum.remote_kraft]) @matrix(failure_mode=["clean_shutdown", "hard_shutdown", "clean_bounce", "hard_bounce"], broker_type=["leader", "controller"], num_threads=[1, 3], sleep_time_secs=[120]) Review Comment: I added this extra matrix configuration to avoid `broker_type=="controller"` for `metadata_quorum=="quorum.remote_kraft"`-- not sure if you noticed that, otherwise I would have just added `metadata_quorum=quorum.all_non_upgrade` to the existing matrix to cover both modes as you said. This is because killing the controller when it's separate from the broker is maybe not what the test is attempting to test and also requires other changes to accommodate killing off the separate KRaft controller node, but that seems a bit overkill for this purpose. Is that what -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] cmccabe merged pull request #12456: MINOR: convert some more junit tests to support KRaft

cmccabe merged PR #12456: URL: https://github.com/apache/kafka/pull/12456 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (KAFKA-13467) Clients never refresh cached bootstrap IPs

[ https://issues.apache.org/jira/browse/KAFKA-13467?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573162#comment-17573162 ] Ismael Juma commented on KAFKA-13467: - No, there's a Jira that's a lot older. I will try to find it later if no-one else beats us to it. > Clients never refresh cached bootstrap IPs > -- > > Key: KAFKA-13467 > URL: https://issues.apache.org/jira/browse/KAFKA-13467 > Project: Kafka > Issue Type: Improvement > Components: clients, network >Reporter: Matthias J. Sax >Priority: Minor > > Follow up ticket to https://issues.apache.org/jira/browse/KAFKA-13405. > For certain broker rolling upgrade scenarios, it would be beneficial to > expired cached bootstrap server IP addresses and re-resolve those IPs to > allow clients to re-connect to the cluster without the need to restart the > client. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [kafka] lihaosky opened a new pull request, #12461: Minor: enable index for emit final sliding window

lihaosky opened a new pull request, #12461: URL: https://github.com/apache/kafka/pull/12461 ### Summary Enable index for sliding window emit final case as it's faster to fetch windows for particular key ### Testing Existing test cases ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (KAFKA-13467) Clients never refresh cached bootstrap IPs

[ https://issues.apache.org/jira/browse/KAFKA-13467?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573144#comment-17573144 ] Matthew de Detrich commented on KAFKA-13467: [~ijuma] are you talking about https://issues.apache.org/jira/browse/KAFKA-13405, if so its already closed? > Clients never refresh cached bootstrap IPs > -- > > Key: KAFKA-13467 > URL: https://issues.apache.org/jira/browse/KAFKA-13467 > Project: Kafka > Issue Type: Improvement > Components: clients, network >Reporter: Matthias J. Sax >Priority: Minor > > Follow up ticket to https://issues.apache.org/jira/browse/KAFKA-13405. > For certain broker rolling upgrade scenarios, it would be beneficial to > expired cached bootstrap server IP addresses and re-resolve those IPs to > allow clients to re-connect to the cluster without the need to restart the > client. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [kafka] C0urante commented on pull request #10528: KAFKA-12497: Skip periodic offset commits for failed source tasks

C0urante commented on PR #10528: URL: https://github.com/apache/kafka/pull/10528#issuecomment-1199873204 @mimaison @vvcephei @tombentley I'd like to merge this in order to unblock https://github.com/apache/kafka/pull/12434, which adds a second use case for the `LogCaptureAppender` in the Connect unit tests. Would it be possible to give this another pass sometime next week? Thanks! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] cmccabe commented on a diff in pull request #12456: MINOR: convert some more junit tests to support KRaft

cmccabe commented on code in PR #12456:

URL: https://github.com/apache/kafka/pull/12456#discussion_r933515355

##

core/src/test/scala/integration/kafka/api/BaseProducerSendTest.scala:

##

@@ -34,19 +35,21 @@ import org.apache.kafka.common.record.TimestampType

import org.apache.kafka.common.security.auth.SecurityProtocol

import org.apache.kafka.common.{KafkaException, TopicPartition}

import org.junit.jupiter.api.Assertions._

-import org.junit.jupiter.api.{AfterEach, BeforeEach, Test, TestInfo}

+import org.junit.jupiter.api.{AfterEach, BeforeEach, TestInfo}

+import org.junit.jupiter.params.ParameterizedTest

+import org.junit.jupiter.params.provider.ValueSource

import scala.jdk.CollectionConverters._

import scala.collection.mutable.Buffer

import scala.concurrent.ExecutionException

abstract class BaseProducerSendTest extends KafkaServerTestHarness {

Review Comment:

Thanks for pointing this out. Looks like it was an issue with using the

wrong endpoint in one of the topic creates. Fixed.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] cmccabe commented on a diff in pull request #12456: MINOR: convert some more junit tests to support KRaft

cmccabe commented on code in PR #12456:

URL: https://github.com/apache/kafka/pull/12456#discussion_r933527317

##

core/src/test/scala/unit/kafka/utils/TestUtils.scala:

##

@@ -918,6 +918,76 @@ object TestUtils extends Logging {

}

}

+ /**

+ * If neither oldLeaderOpt nor newLeaderOpt is defined, wait until the

leader of a partition is elected.

+ * If oldLeaderOpt is defined, it waits until the new leader is different

from the old leader.

+ * If newLeaderOpt is defined, it waits until the new leader becomes the

expected new leader.

+ *

+ * @return The new leader (note that negative values are used to indicate

conditions like NoLeader and

+ * LeaderDuringDelete).

+ * @throws AssertionError if the expected condition is not true within the

timeout.

+ */

+ def waitUntilLeaderIsElectedOrChangedWithAdmin(

+admin: Admin,

+topic: String,

+partition: Int,

+timeoutMs: Long = 3L,

+oldLeaderOpt: Option[Int] = None,

+newLeaderOpt: Option[Int] = None

+ ): Int = {

+require(!(oldLeaderOpt.isDefined && newLeaderOpt.isDefined), "Can't define

both the old and the new leader")

+val startTime = System.currentTimeMillis()

+val topicPartition = new TopicPartition(topic, partition)

+

+trace(s"Waiting for leader to be elected or changed for partition

$topicPartition, old leader is $oldLeaderOpt, " +

+ s"new leader is $newLeaderOpt")

+

+var leader: Option[Int] = None

+var electedOrChangedLeader: Option[Int] = None

+while (electedOrChangedLeader.isEmpty && System.currentTimeMillis() <

startTime + timeoutMs) {

Review Comment:

That is a good idea for a refactor, but this was just copied from the ZK

version. I didn't want to rewrite it too much since it's kind of complex.

I looked at this again and felt a bit bad about duplicating so much code

between the ZK and KRaft versions. So I revised it so that they both call into

the same function, avoiding the duplication.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] cmccabe commented on a diff in pull request #12456: MINOR: convert some more junit tests to support KRaft

cmccabe commented on code in PR #12456:

URL: https://github.com/apache/kafka/pull/12456#discussion_r933515355

##

core/src/test/scala/integration/kafka/api/BaseProducerSendTest.scala:

##

@@ -34,19 +35,21 @@ import org.apache.kafka.common.record.TimestampType

import org.apache.kafka.common.security.auth.SecurityProtocol

import org.apache.kafka.common.{KafkaException, TopicPartition}

import org.junit.jupiter.api.Assertions._

-import org.junit.jupiter.api.{AfterEach, BeforeEach, Test, TestInfo}

+import org.junit.jupiter.api.{AfterEach, BeforeEach, TestInfo}

+import org.junit.jupiter.params.ParameterizedTest

+import org.junit.jupiter.params.provider.ValueSource

import scala.jdk.CollectionConverters._

import scala.collection.mutable.Buffer

import scala.concurrent.ExecutionException

abstract class BaseProducerSendTest extends KafkaServerTestHarness {

Review Comment:

looks like it was an issue with using the wrong endpoint in one of the topic

creates. fixed.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] dplavcic opened a new pull request, #12460: MINOR: Upgrade mockito test dependencies

dplavcic opened a new pull request, #12460: URL: https://github.com/apache/kafka/pull/12460 ## Changes - **mockito: 4.4.0 -> 4.6.1** (https://github.com/mockito/mockito/releases) - Regression? Strictness set in @MockitoSettings ignored after upgrade from 4.5.1 to 4.6.0 https://github.com/mockito/mockito/issues/2656 - Fixes https://github.com/mockito/mockito/issues/2648 : Add support for customising strictness via @mock annotation and MockSettings https://github.com/mockito/mockito/pull/2650 ## Why is this change needed? According to the [Mockito documentation](https://javadoc.io/doc/org.mockito/mockito-core/latest/org/mockito/Mockito.html#when(T)) : > Although it is possible to verify a stubbed invocation, usually it's just redundant. Let's say you've stubbed foo.bar(). If your code cares what foo.bar() returns then something else breaks(often before even verify() gets executed). If your code doesn't care what get(0) returns then it should not be stubbed. While working on the [Replace EasyMock and PowerMock with Mockito for StreamsMetricsImplTest ](https://issues.apache.org/jira/browse/KAFKA-12947) I noticed that described behavior wasn't applied when you create a new `mock` like this. ```java final Metrics metrics = mock(Metrics.class); when(metrics.metric(metricName)).thenReturn(null); ... invoke SUT verify(metrics).metric(metricName); // this should be redundant (according to docs) ``` After further investigation I figured out that described behaviour wasn't implemented until`v4.6.1`. With this change we are now able to mock objects like this: ```java Foo explicitStrictMock = mock(Foo.class, withSettings().strictness(Strictness.STRICT_STUBS)); ``` - link to docs: [MockSettings.html#strictness](https://javadoc.io/static/org.mockito/mockito-core/4.6.1/org/mockito/MockSettings.html#strictness(org.mockito.quality.Strictness)) ### Committer Checklist (excluded from commit message) - [ ] Verify design and implementation - [ ] Verify test coverage and CI build status - [ ] Verify documentation (including upgrade notes) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (KAFKA-13467) Clients never refresh cached bootstrap IPs

[ https://issues.apache.org/jira/browse/KAFKA-13467?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573089#comment-17573089 ] Ismael Juma commented on KAFKA-13467: - There is an ancient Jira where this was proposed and there were some concerns (at the time). We should continue the discussion there instead of creating a new issue. Now, to find that issue. :) > Clients never refresh cached bootstrap IPs > -- > > Key: KAFKA-13467 > URL: https://issues.apache.org/jira/browse/KAFKA-13467 > Project: Kafka > Issue Type: Improvement > Components: clients, network >Reporter: Matthias J. Sax >Priority: Minor > > Follow up ticket to https://issues.apache.org/jira/browse/KAFKA-13405. > For certain broker rolling upgrade scenarios, it would be beneficial to > expired cached bootstrap server IP addresses and re-resolve those IPs to > allow clients to re-connect to the cluster without the need to restart the > client. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [kafka] zigarn commented on a diff in pull request #12434: KAFKA-14099 - Fix request logs in connect

zigarn commented on code in PR #12434:

URL: https://github.com/apache/kafka/pull/12434#discussion_r933495048

##

connect/runtime/src/test/java/org/apache/kafka/connect/runtime/rest/RestServerTest.java:

##

@@ -64,17 +70,34 @@ public class RestServerTest {

private Herder herder;

private Plugins plugins;

private RestServer server;

+private CloseableHttpClient httpClient;

+private Collection responses = new ArrayList<>();

protected static final String KAFKA_CLUSTER_ID = "Xbafgnagvar";

@Before

public void setUp() {

herder = mock(Herder.class);

plugins = mock(Plugins.class);

+httpClient = HttpClients.createMinimal();

}

@After

public void tearDown() {

+responses.stream().forEach(response -> {

+try {

+response.close();

+} catch (IOException e) {

+e.printStackTrace();

+}

Review Comment:

Done!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] niket-goel commented on a diff in pull request #12457: WIP -- added some CRC checking

niket-goel commented on code in PR #12457:

URL: https://github.com/apache/kafka/pull/12457#discussion_r933489518

##

raft/src/main/java/org/apache/kafka/snapshot/RecordsSnapshotReader.java:

##

@@ -106,6 +106,7 @@ public static RecordsSnapshotReader of(

BufferSupplier bufferSupplier,

int maxBatchSize

) {

+// TODO: Is this a good place to perform delimeter check (i.e.

existence of header and footer?)

Review Comment:

That is the downside, yes. We could also seek to the end of the snapshot

file and just read the first and last batch. More ideal w.r.t performance would

be to just check for the existence of the footer as you are iterating through

the snapshot. You would still realize that you have an incomplete snapshot, but

you would have applied a part of that incomplete snapshot to your memory (not

sure if that poses a correctness risk).

##

core/src/main/scala/kafka/log/UnifiedLog.scala:

##

@@ -1216,7 +1216,16 @@ class UnifiedLog(@volatile var logStartOffset: Long,

case FetchHighWatermark => fetchHighWatermarkMetadata

case FetchTxnCommitted => fetchLastStableOffsetMetadata

}

-localLog.read(startOffset, maxLength, minOneMessage, maxOffsetMetadata,

isolation == FetchTxnCommitted)

+val fetchDataInfo = localLog.read(startOffset, maxLength, minOneMessage,

maxOffsetMetadata, isolation == FetchTxnCommitted)

Review Comment:

That is a fair question. I was contemplating that too. The alternative of

having a reader verify the bytes places less stress on the log layer in Kafka,

but is not really fool proof.

e.g. in that scheme the listeners that are reading the batches (both for

controller and broker) would do the CRC check (assuming the batch header

reaches that layer).

Actually now that I think about it, does having the check in the

`RecordsIterator` protect all readers?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (KAFKA-7438) Replace EasyMock and PowerMock with Mockito

[ https://issues.apache.org/jira/browse/KAFKA-7438?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573084#comment-17573084 ] Ismael Juma commented on KAFKA-7438: [~divijvaidya] if the original author is unresponsive, it's ok to pick it up. If there was already a PR, then we can use the git co-author feature for proper attribution. > Replace EasyMock and PowerMock with Mockito > --- > > Key: KAFKA-7438 > URL: https://issues.apache.org/jira/browse/KAFKA-7438 > Project: Kafka > Issue Type: Improvement >Reporter: Ismael Juma >Assignee: Dalibor Plavcic >Priority: Major > > Development of EasyMock and PowerMock has stagnated while Mockito continues > to be actively developed. With the new Java cadence, it's a problem to depend > on libraries that do bytecode generation and are not actively maintained. In > addition, Mockito is also easier to use. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (KAFKA-13065) Replace EasyMock with Mockito for BasicAuthSecurityRestExtensionTest

[ https://issues.apache.org/jira/browse/KAFKA-13065?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573079#comment-17573079 ] Divij Vaidya commented on KAFKA-13065: -- Hey [~chunhao] Are you planning to work on this one? > Replace EasyMock with Mockito for BasicAuthSecurityRestExtensionTest > > > Key: KAFKA-13065 > URL: https://issues.apache.org/jira/browse/KAFKA-13065 > Project: Kafka > Issue Type: Sub-task >Reporter: Chun-Hao Tang >Assignee: Chun-Hao Tang >Priority: Major > -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (KAFKA-13060) Replace EasyMock and PowerMock with Mockito in WorkerGroupMemberTest.java

[ https://issues.apache.org/jira/browse/KAFKA-13060?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573077#comment-17573077 ] Divij Vaidya commented on KAFKA-13060: -- [~chunhao] are you planning to work on this? If not, I would like to take it over. > Replace EasyMock and PowerMock with Mockito in WorkerGroupMemberTest.java > - > > Key: KAFKA-13060 > URL: https://issues.apache.org/jira/browse/KAFKA-13060 > Project: Kafka > Issue Type: Sub-task >Reporter: Chun-Hao Tang >Assignee: Chun-Hao Tang >Priority: Major > -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [kafka] divijvaidya commented on pull request #10904: KAFKA-13060: Replace EasyMock and PowerMock with Mockito in WorkerGroupMemberTest

divijvaidya commented on PR #10904: URL: https://github.com/apache/kafka/pull/10904#issuecomment-1199777106 @tang7526 are you planning to work on this? If not, I would like to take it over. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] divijvaidya commented on a diff in pull request #12449: KAFKA-12947 [WIP]: Replace EasyMock and PowerMock with Mockito for StreamsMetricsImplTest

divijvaidya commented on code in PR #12449:

URL: https://github.com/apache/kafka/pull/12449#discussion_r933473975

##

streams/src/test/java/org/apache/kafka/streams/kstream/internals/graph/TableSourceNodeTest.java:

##

@@ -23,41 +23,39 @@

import org.apache.kafka.streams.kstream.internals.MaterializedInternal;

import

org.apache.kafka.streams.kstream.internals.graph.TableSourceNode.TableSourceNodeBuilder;

import org.apache.kafka.streams.processor.internals.InternalTopologyBuilder;

-import org.easymock.EasyMock;

import org.junit.Test;

import org.junit.runner.RunWith;

-import org.powermock.api.easymock.PowerMock;

-import org.powermock.core.classloader.annotations.PrepareForTest;

-import org.powermock.modules.junit4.PowerMockRunner;

+import org.mockito.Mock;

+import org.mockito.junit.MockitoJUnitRunner;

-@RunWith(PowerMockRunner.class)

-@PrepareForTest({InternalTopologyBuilder.class})

+import static org.mockito.ArgumentMatchers.any;

+import static org.mockito.Mockito.never;

+import static org.mockito.Mockito.verify;

+

+@RunWith(MockitoJUnitRunner.class)

public class TableSourceNodeTest {

private static final String STORE_NAME = "store-name";

private static final String TOPIC = "input-topic";

-private final InternalTopologyBuilder topologyBuilder =

PowerMock.createNiceMock(InternalTopologyBuilder.class);

+@Mock private InternalTopologyBuilder topologyBuilder;

@Test

public void

shouldConnectStateStoreToInputTopicIfInputTopicIsUsedAsChangelog() {

final boolean shouldReuseSourceTopicForChangelog = true;

-topologyBuilder.connectSourceStoreAndTopic(STORE_NAME, TOPIC);

Review Comment:

You can add

`doNothing().when(topologyBuilder).connectSourceStoreAndTopic(STORE_NAME,

TOPIC);` here.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Assigned] (KAFKA-13036) Replace EasyMock and PowerMock with Mockito for RocksDBMetricsRecorderTest

[ https://issues.apache.org/jira/browse/KAFKA-13036?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Divij Vaidya reassigned KAFKA-13036: Assignee: Divij Vaidya (was: YI-CHEN WANG) > Replace EasyMock and PowerMock with Mockito for RocksDBMetricsRecorderTest > -- > > Key: KAFKA-13036 > URL: https://issues.apache.org/jira/browse/KAFKA-13036 > Project: Kafka > Issue Type: Sub-task > Components: streams, unit tests >Reporter: YI-CHEN WANG >Assignee: Divij Vaidya >Priority: Major > -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (KAFKA-7438) Replace EasyMock and PowerMock with Mockito

[ https://issues.apache.org/jira/browse/KAFKA-7438?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573067#comment-17573067 ] Divij Vaidya commented on KAFKA-7438: - [~ijuma] There are sub-tasks on this one which are pending activity from the author for quite some time. I and [~christo_lolov] would like to pick up this overall task and get it resolved in the weeks ahead. Would that be ok? > Replace EasyMock and PowerMock with Mockito > --- > > Key: KAFKA-7438 > URL: https://issues.apache.org/jira/browse/KAFKA-7438 > Project: Kafka > Issue Type: Improvement >Reporter: Ismael Juma >Assignee: Dalibor Plavcic >Priority: Major > > Development of EasyMock and PowerMock has stagnated while Mockito continues > to be actively developed. With the new Java cadence, it's a problem to depend > on libraries that do bytecode generation and are not actively maintained. In > addition, Mockito is also easier to use. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [kafka] C0urante commented on a diff in pull request #12432: KAFKA-14095: Improve handling of sync offset failures in MirrorMaker

C0urante commented on code in PR #12432:

URL: https://github.com/apache/kafka/pull/12432#discussion_r933464520

##

connect/mirror/src/main/java/org/apache/kafka/connect/mirror/MirrorCheckpointTask.java:

##

@@ -306,9 +308,18 @@ Map>

syncGroupOffset() {

void syncGroupOffset(String consumerGroupId, Map offsetToSync) {

if (targetAdminClient != null) {

-targetAdminClient.alterConsumerGroupOffsets(consumerGroupId,

offsetToSync);

-log.trace("sync-ed the offset for consumer group: {} with {}

number of offset entries",

- consumerGroupId, offsetToSync.size());

+AlterConsumerGroupOffsetsResult result =

targetAdminClient.alterConsumerGroupOffsets(consumerGroupId, offsetToSync);

Review Comment:

Nit: would it also be useful to have a log line here indicating that we're

attempting to sync offsets?

##

connect/mirror/src/main/java/org/apache/kafka/connect/mirror/MirrorCheckpointTask.java:

##

@@ -306,7 +308,16 @@ Map>

syncGroupOffset() {

void syncGroupOffset(String consumerGroupId, Map offsetToSync) {

if (targetAdminClient != null) {

-targetAdminClient.alterConsumerGroupOffsets(consumerGroupId,

offsetToSync);

+AlterConsumerGroupOffsetsResult result =

targetAdminClient.alterConsumerGroupOffsets(consumerGroupId, offsetToSync);

+result.all().whenComplete((v, throwable) -> {

+if (throwable != null) {

+if (throwable.getCause() instanceof

UnknownMemberIdException) {

Review Comment:

I took a closer look at the [`KafkaFuture`

Javadocs](https://kafka.apache.org/32/javadoc/org/apache/kafka/common/KafkaFuture.html).

The docs for `KafkaFuture::whenComplete` state (emphasis mine):

> Returns a new KafkaFuture **with the same result or exception as this

future**, that executes the given action when this future completes. When this

future is done, **the given action is invoked with the result (or null if none)

and the exception (or null if none) of this future as arguments**."

Given this, we know that `whenComplete` receives the same exception that

would be thrown by, e.g., `KafkaFuture::get`, if the action failed.

The method signatures for `KafkaFuture` declare that they throw

`ExecutionException` if the action fails, which always wraps the cause of

failure.

So, I'm convinced that we can expect this wrapping

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (KAFKA-12950) Replace EasyMock and PowerMock with Mockito for KafkaStreamsTest

[ https://issues.apache.org/jira/browse/KAFKA-12950?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573066#comment-17573066 ] Divij Vaidya commented on KAFKA-12950: -- [~a493172422] Are you planning to work on this JIRA? If not, I would like to pick this one up. > Replace EasyMock and PowerMock with Mockito for KafkaStreamsTest > > > Key: KAFKA-12950 > URL: https://issues.apache.org/jira/browse/KAFKA-12950 > Project: Kafka > Issue Type: Sub-task > Components: streams, unit tests >Reporter: Josep Prat >Assignee: YI-CHEN WANG >Priority: Major > -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [kafka] divijvaidya commented on pull request #11017: KAFKA-12950 Replace EasyMock and PowerMock with Mockito for KafkaStream

divijvaidya commented on PR #11017: URL: https://github.com/apache/kafka/pull/11017#issuecomment-1199766419 @wycc are you planning to work further on this PR? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (KAFKA-13036) Replace EasyMock and PowerMock with Mockito for RocksDBMetricsRecorderTest

[ https://issues.apache.org/jira/browse/KAFKA-13036?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573062#comment-17573062 ] Divij Vaidya commented on KAFKA-13036: -- I started a new pull request since the older one if pending response from the author since Sept 2021. > Replace EasyMock and PowerMock with Mockito for RocksDBMetricsRecorderTest > -- > > Key: KAFKA-13036 > URL: https://issues.apache.org/jira/browse/KAFKA-13036 > Project: Kafka > Issue Type: Sub-task > Components: streams, unit tests >Reporter: YI-CHEN WANG >Assignee: YI-CHEN WANG >Priority: Major > -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [kafka] divijvaidya opened a new pull request, #12459: KAFKA-13036: Replace EasyMock and PowerMock with Mockito for RocksDBMetricsRecorderTest

divijvaidya opened a new pull request, #12459: URL: https://github.com/apache/kafka/pull/12459 ## Changes 1. Migrate to Mockito 2. Add more assertive checks using `verify` ## Testing `./gradlew streams:unitTest --tests RocksDBMetricsRecorderTest` is successful -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] mimaison commented on pull request #11565: KAFKA-13504: Retry connect internal topics' creation in case of InvalidReplicationFactorException

mimaison commented on PR #11565: URL: https://github.com/apache/kafka/pull/11565#issuecomment-1199642899 @akatona84 Thanks for taking another look and simplifying the configuration, I think it looks much better. This will still need a KIP. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] mimaison commented on a diff in pull request #12432: KAFKA-14095: Improve handling of sync offset failures in MirrorMaker

mimaison commented on code in PR #12432:

URL: https://github.com/apache/kafka/pull/12432#discussion_r933420173

##

connect/mirror/src/main/java/org/apache/kafka/connect/mirror/MirrorCheckpointTask.java:

##

@@ -306,7 +308,16 @@ Map>

syncGroupOffset() {

void syncGroupOffset(String consumerGroupId, Map offsetToSync) {

if (targetAdminClient != null) {

-targetAdminClient.alterConsumerGroupOffsets(consumerGroupId,

offsetToSync);

+AlterConsumerGroupOffsetsResult result =

targetAdminClient.alterConsumerGroupOffsets(consumerGroupId, offsetToSync);

+result.all().whenComplete((v, throwable) -> {

+if (throwable != null) {

+if (throwable.getCause() instanceof

UnknownMemberIdException) {

Review Comment:

The outer exception depends how the future is completed but I _think_ the

actual error should always be wrapped.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] mimaison commented on a diff in pull request #12432: KAFKA-14095: Improve handling of sync offset failures in MirrorMaker

mimaison commented on code in PR #12432:

URL: https://github.com/apache/kafka/pull/12432#discussion_r933414015

##

connect/mirror/src/main/java/org/apache/kafka/connect/mirror/MirrorCheckpointTask.java:

##

@@ -306,7 +308,16 @@ Map>

syncGroupOffset() {

void syncGroupOffset(String consumerGroupId, Map offsetToSync) {

if (targetAdminClient != null) {

-targetAdminClient.alterConsumerGroupOffsets(consumerGroupId,

offsetToSync);

+AlterConsumerGroupOffsetsResult result =

targetAdminClient.alterConsumerGroupOffsets(consumerGroupId, offsetToSync);

+result.all().whenComplete((v, throwable) -> {

+if (throwable != null) {

+if (throwable.getCause() instanceof

UnknownMemberIdException) {

+log.warn("Unable to sync offsets for consumer group

{}. This is likely caused by consumers currently using this group in the target

cluster.", consumerGroupId);

+} else {

+log.error("Unable to sync offsets for consumer group

{}.", consumerGroupId, throwable);

+}

+}

+});

log.trace("sync-ed the offset for consumer group: {} with {}

number of offset entries",

Review Comment:

I've moved it in an `else` block above and tweaked the message slightly.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] mimaison commented on a diff in pull request #12432: KAFKA-14095: Improve handling of sync offset failures in MirrorMaker

mimaison commented on code in PR #12432: URL: https://github.com/apache/kafka/pull/12432#discussion_r933413745 ## clients/src/main/java/org/apache/kafka/clients/admin/internals/AlterConsumerGroupOffsetsHandler.java: ## @@ -179,6 +179,9 @@ private void handleError( case INVALID_GROUP_ID: case INVALID_COMMIT_OFFSET_SIZE: case GROUP_AUTHORIZATION_FAILED: +// Member level errors. +case UNKNOWN_MEMBER_ID: +case FENCED_INSTANCE_ID: Review Comment: You're right, currently this error code is not expected as the admin client does not set the group instance ID. I've removed it. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] vvcephei commented on a diff in pull request #12458: MINOR: Adds KRaft versions of most streams system tests

vvcephei commented on code in PR #12458: URL: https://github.com/apache/kafka/pull/12458#discussion_r933394405 ## tests/kafkatest/tests/streams/streams_standby_replica_test.py: ## @@ -73,9 +77,9 @@ def test_standby_tasks_rebalance(self): processor_3.start() -self.wait_for_verification(processor_1, "ACTIVE_TASKS:2 STANDBY_TASKS:[1-3]", processor_1.STDOUT_FILE) -self.wait_for_verification(processor_2, "ACTIVE_TASKS:2 STANDBY_TASKS:[1-3]", processor_2.STDOUT_FILE) -self.wait_for_verification(processor_3, "ACTIVE_TASKS:2 STANDBY_TASKS:[1-3]", processor_3.STDOUT_FILE) +self.wait_for_verification(processor_1, "ACTIVE_TASKS:2 STANDBY_TASKS:2", processor_1.STDOUT_FILE) +self.wait_for_verification(processor_2, "ACTIVE_TASKS:2 STANDBY_TASKS:2", processor_2.STDOUT_FILE) +self.wait_for_verification(processor_3, "ACTIVE_TASKS:2 STANDBY_TASKS:2", processor_3.STDOUT_FILE) Review Comment: What's up with this verification change? It looks like you're increasing the specificity of the verification here, which is good. I'm wondering if it has some relationship to the quorum change, of if it's just on the side. ## tests/kafkatest/tests/streams/streams_broker_bounce_test.py: ## @@ -205,11 +211,17 @@ def collect_results(self, sleep_time_secs): return data @cluster(num_nodes=7) +@matrix(failure_mode=["clean_shutdown", "hard_shutdown", "clean_bounce", "hard_bounce"], +broker_type=["leader"], +num_threads=[1, 3], +sleep_time_secs=[120], +metadata_quorum=[quorum.remote_kraft]) @matrix(failure_mode=["clean_shutdown", "hard_shutdown", "clean_bounce", "hard_bounce"], broker_type=["leader", "controller"], num_threads=[1, 3], sleep_time_secs=[120]) Review Comment: What do you think about explicitly specifying `quorum.zk` instead of relying on the default? It's maybe mildly more verbose, but it avoids having to think through overrides when you're debugging a test failure out of the blue. If you're in favor, I'd suggest just removing all the default parameter values that got added here to be sure everything is explicitly configured. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] C0urante commented on a diff in pull request #12434: KAFKA-14099 - Fix request logs in connect

C0urante commented on code in PR #12434:

URL: https://github.com/apache/kafka/pull/12434#discussion_r933387182

##

connect/runtime/src/test/java/org/apache/kafka/connect/runtime/rest/RestServerTest.java:

##

@@ -64,17 +70,34 @@ public class RestServerTest {

private Herder herder;

private Plugins plugins;

private RestServer server;

+private CloseableHttpClient httpClient;

+private Collection responses = new ArrayList<>();

protected static final String KAFKA_CLUSTER_ID = "Xbafgnagvar";

@Before

public void setUp() {

herder = mock(Herder.class);

plugins = mock(Plugins.class);

+httpClient = HttpClients.createMinimal();

}

@After

public void tearDown() {

+responses.stream().forEach(response -> {

+try {

+response.close();

+} catch (IOException e) {

+e.printStackTrace();

+}

Review Comment:

I think fail-fast is fine. It may cause resource leaks during test runs, but

if we do our due diligence and make sure tests pass before merging changes,

this should never happen on trunk or backport branches, and should only affect

local runs while iterating on changes, in which case it's easier to do more

targeted testing by isolating to a single Gradle module, test class, or even

test case, and leaked resources should matter less.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] C0urante commented on a diff in pull request #12434: KAFKA-14099 - Fix request logs in connect

C0urante commented on code in PR #12434:

URL: https://github.com/apache/kafka/pull/12434#discussion_r933387182

##

connect/runtime/src/test/java/org/apache/kafka/connect/runtime/rest/RestServerTest.java:

##

@@ -64,17 +70,34 @@ public class RestServerTest {

private Herder herder;

private Plugins plugins;

private RestServer server;

+private CloseableHttpClient httpClient;

+private Collection responses = new ArrayList<>();

protected static final String KAFKA_CLUSTER_ID = "Xbafgnagvar";

@Before

public void setUp() {

herder = mock(Herder.class);

plugins = mock(Plugins.class);

+httpClient = HttpClients.createMinimal();

}

@After

public void tearDown() {

+responses.stream().forEach(response -> {

+try {

+response.close();

+} catch (IOException e) {

+e.printStackTrace();

+}

Review Comment:

I think fail-fast is fine. It may cause resource leaks during test runs, but

if we do our due diligence and make sure tests pass before merging changes,

this should never happen on trunk or backport branches, and should only affect

local runs while iterating on changes.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] cadonna merged pull request #12441: KAFKA-14108: Ensure both JUnit 4 and JUnit 5 tests run

cadonna merged PR #12441: URL: https://github.com/apache/kafka/pull/12441 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] zigarn commented on a diff in pull request #12434: KAFKA-14099 - Fix request logs in connect

zigarn commented on code in PR #12434:

URL: https://github.com/apache/kafka/pull/12434#discussion_r933377429

##

connect/runtime/src/test/java/org/apache/kafka/connect/runtime/rest/RestServerTest.java:

##

@@ -64,17 +70,34 @@ public class RestServerTest {

private Herder herder;

private Plugins plugins;

private RestServer server;

+private CloseableHttpClient httpClient;

+private Collection responses = new ArrayList<>();

protected static final String KAFKA_CLUSTER_ID = "Xbafgnagvar";

@Before

public void setUp() {

herder = mock(Herder.class);

plugins = mock(Plugins.class);

+httpClient = HttpClients.createMinimal();

}

@After

public void tearDown() {

+responses.stream().forEach(response -> {

+try {

+response.close();

+} catch (IOException e) {

+e.printStackTrace();

+}

Review Comment:

A bit more complicated to correctly close everything but still raising

errors.

Or we could just fail everything on first closing issue?

Added commit for 1st option. Can go for a fail-fast option if you prefer.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] cadonna commented on pull request #12441: KAFKA-14108: Ensure both JUnit 4 and JUnit 5 tests run

cadonna commented on PR #12441: URL: https://github.com/apache/kafka/pull/12441#issuecomment-1199503553 Build failures are unrelated. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

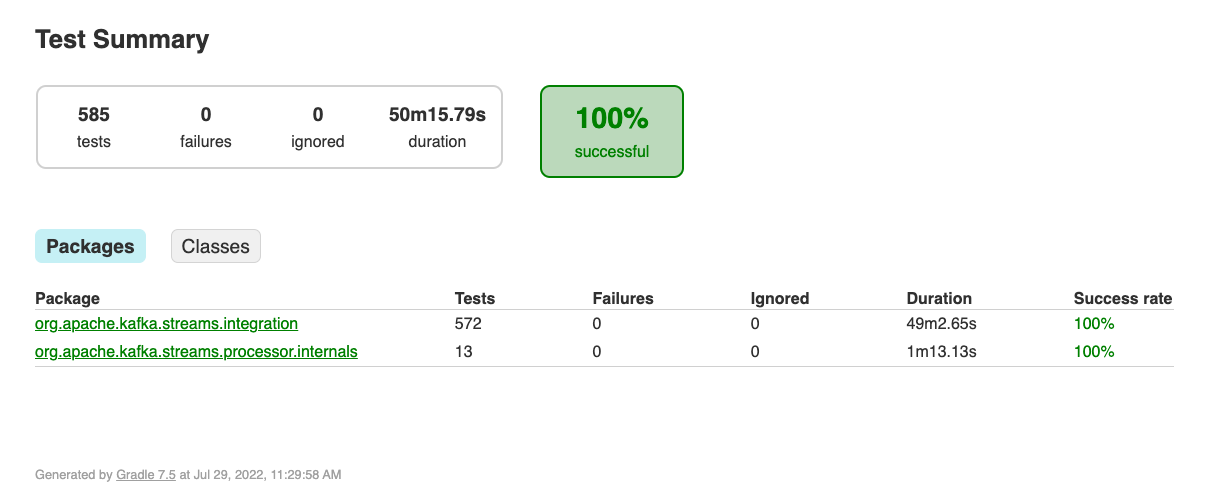

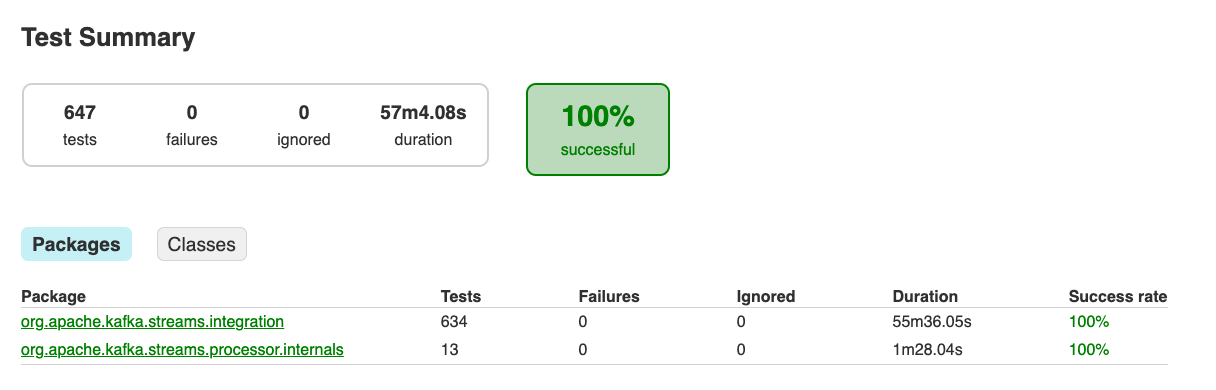

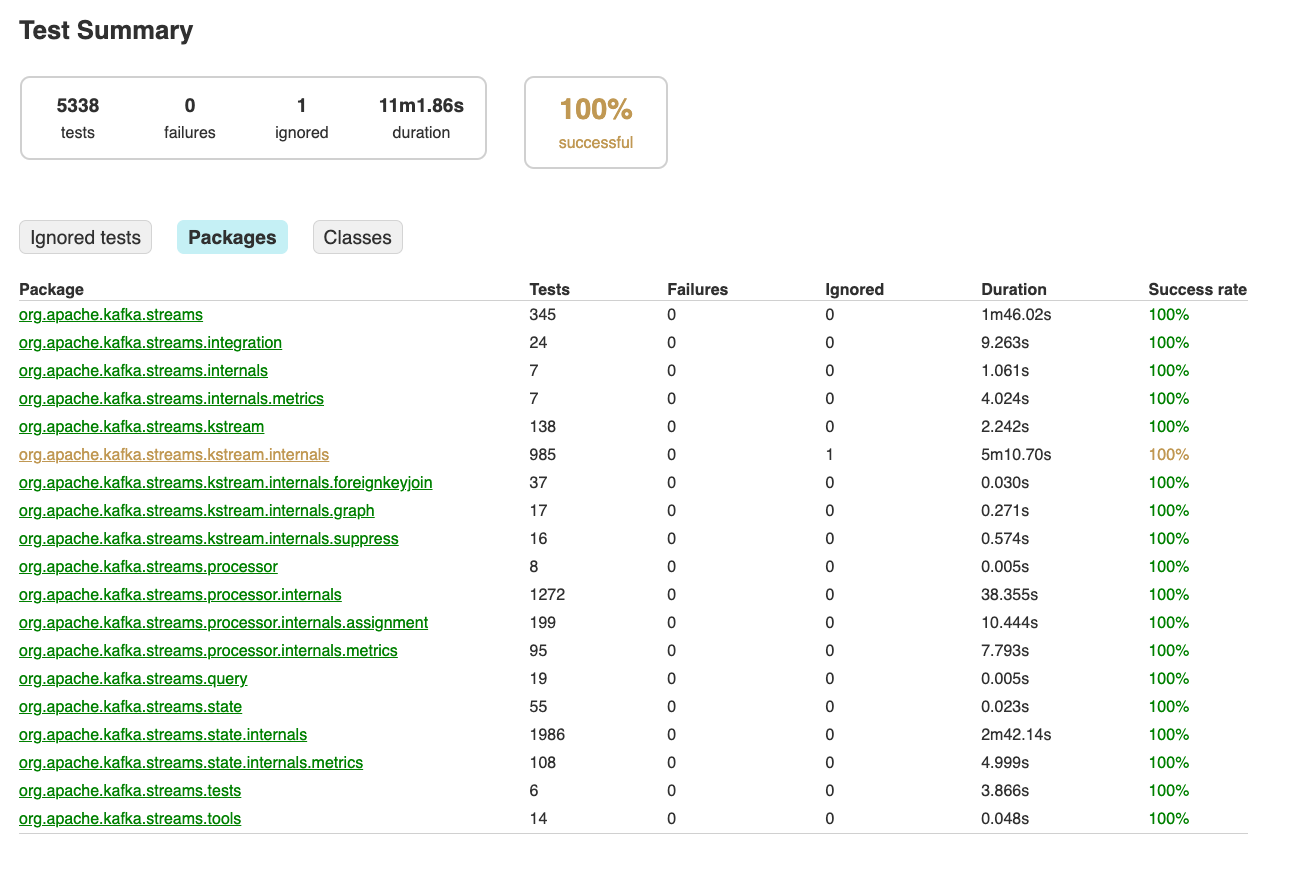

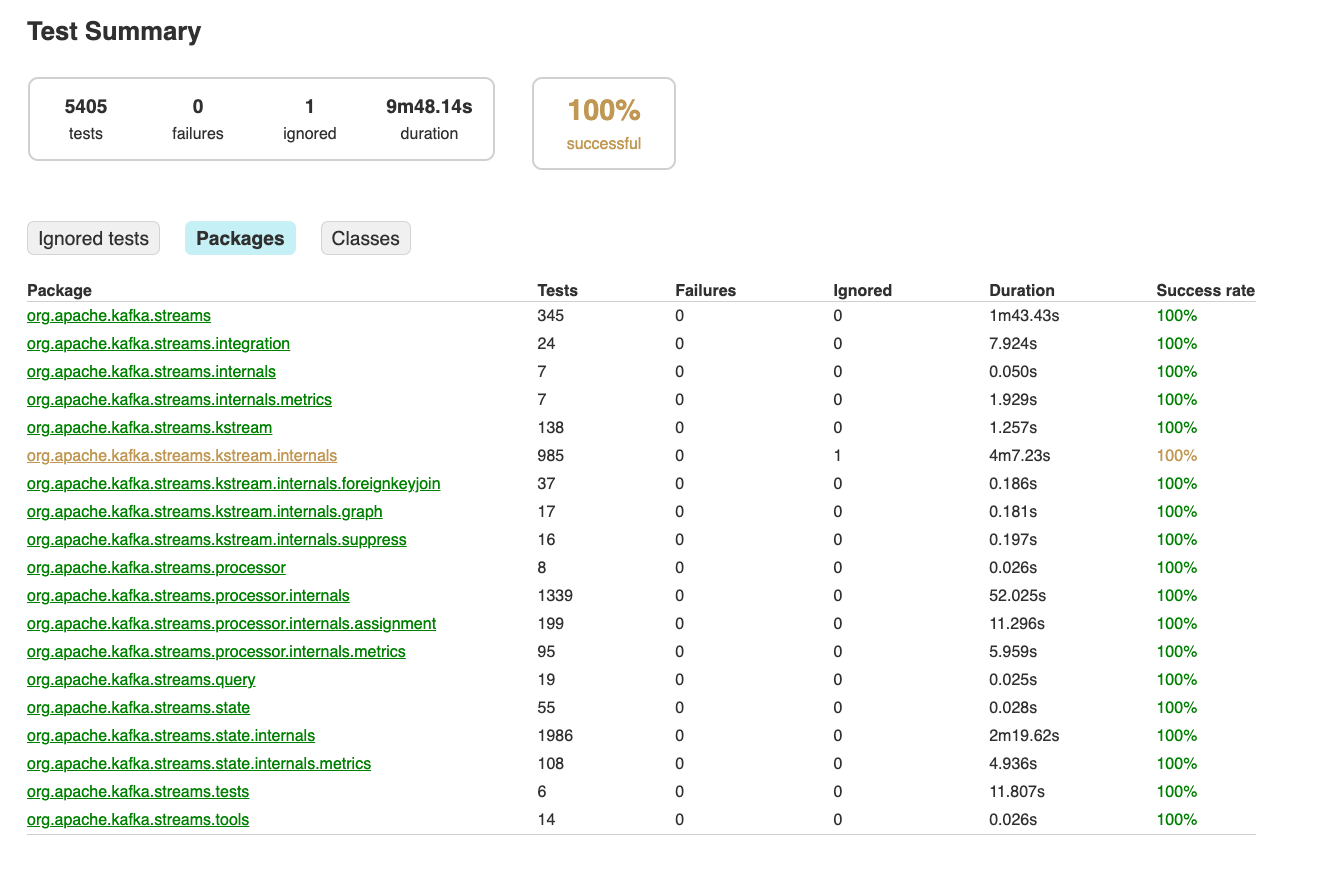

[GitHub] [kafka] cadonna commented on pull request #12441: KAFKA-14108: Ensure both JUnit 4 and JUnit 5 tests run

cadonna commented on PR #12441: URL: https://github.com/apache/kafka/pull/12441#issuecomment-1199502782 I verified that all Streams' tests are run in the builds and I also verified that the tests of other modules are run in the builds. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] C0urante commented on a diff in pull request #12434: KAFKA-14099 - Fix request logs in connect

C0urante commented on code in PR #12434:

URL: https://github.com/apache/kafka/pull/12434#discussion_r933363858

##

connect/runtime/src/test/java/org/apache/kafka/connect/runtime/rest/RestServerTest.java:

##

@@ -64,17 +70,34 @@ public class RestServerTest {

private Herder herder;

private Plugins plugins;

private RestServer server;

+private CloseableHttpClient httpClient;

+private Collection responses = new ArrayList<>();

protected static final String KAFKA_CLUSTER_ID = "Xbafgnagvar";

@Before

public void setUp() {

herder = mock(Herder.class);

plugins = mock(Plugins.class);

+httpClient = HttpClients.createMinimal();

}

@After

public void tearDown() {

+responses.stream().forEach(response -> {

+try {

+response.close();

+} catch (IOException e) {

+e.printStackTrace();

+}

Review Comment:

Any reason we shouldn't fail the test if this fails? It may indicate an

issue with the server if we can't close a response or a client.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] cadonna merged pull request #12453: MINOR: Remove code of removed metric

cadonna merged PR #12453: URL: https://github.com/apache/kafka/pull/12453 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (KAFKA-14122) Flaky test DynamicBrokerReconfigurationTest.testKeyStoreAlter

[

https://issues.apache.org/jira/browse/KAFKA-14122?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573018#comment-17573018

]

Bruno Cadonna edited comment on KAFKA-14122 at 7/29/22 2:50 PM:

Encountered again:

{code:java}

org.opentest4j.AssertionFailedError: Duplicates not expected ==> expected:

but was:

at

app//org.junit.jupiter.api.AssertionUtils.fail(AssertionUtils.java:55)

at

app//org.junit.jupiter.api.AssertFalse.assertFalse(AssertFalse.java:40)

at

app//org.junit.jupiter.api.Assertions.assertFalse(Assertions.java:235)

at

app//kafka.server.DynamicBrokerReconfigurationTest.stopAndVerifyProduceConsume(DynamicBrokerReconfigurationTest.scala:1579)

at

app//kafka.server.DynamicBrokerReconfigurationTest.testKeyStoreAlter(DynamicBrokerReconfigurationTest.scala:399)

at

java.base@17.0.1/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native

Method)

at

java.base@17.0.1/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at

java.base@17.0.1/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base@17.0.1/java.lang.reflect.Method.invoke(Method.java:568)

at

app//org.junit.platform.commons.util.ReflectionUtils.invokeMethod(ReflectionUtils.java:725)

{code}

https://ci-builds.apache.org/job/Kafka/job/kafka-pr/job/PR-12453/3/testReport/kafka.server/DynamicBrokerReconfigurationTest/Build___JDK_17_and_Scala_2_13___testKeyStoreAlter__/

was (Author: cadonna):

Encountered again:

{code:java}

org.opentest4j.AssertionFailedError: Duplicates not expected ==> expected:

but was:

at

app//org.junit.jupiter.api.AssertionUtils.fail(AssertionUtils.java:55)

at

app//org.junit.jupiter.api.AssertFalse.assertFalse(AssertFalse.java:40)

at

app//org.junit.jupiter.api.Assertions.assertFalse(Assertions.java:235)

at

app//kafka.server.DynamicBrokerReconfigurationTest.stopAndVerifyProduceConsume(DynamicBrokerReconfigurationTest.scala:1579)

at

app//kafka.server.DynamicBrokerReconfigurationTest.testKeyStoreAlter(DynamicBrokerReconfigurationTest.scala:399)

at

java.base@17.0.1/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native

Method)

at

java.base@17.0.1/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at

java.base@17.0.1/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base@17.0.1/java.lang.reflect.Method.invoke(Method.java:568)

at

app//org.junit.platform.commons.util.ReflectionUtils.invokeMethod(ReflectionUtils.java:725)

{code}

> Flaky test DynamicBrokerReconfigurationTest.testKeyStoreAlter

> -

>

> Key: KAFKA-14122

> URL: https://issues.apache.org/jira/browse/KAFKA-14122

> Project: Kafka

> Issue Type: Bug

> Components: consumer, core, system tests

>Reporter: Divij Vaidya

>Assignee: Divij Vaidya

>Priority: Major

> Labels: flaky, flaky-test

> Fix For: 3.4.0

>

>

> CI Build:

> [https://ci-builds.apache.org/job/Kafka/job/kafka-pr/job/PR-12439/2/testReport/?cloudbees-analytics-link=scm-reporting%2Ftests%2Ffailed]

>

> Failure log:

> {code:java}

> org.opentest4j.AssertionFailedError: Duplicates not expected ==> expected:

> but was:

> at

> app//org.junit.jupiter.api.AssertionUtils.fail(AssertionUtils.java:55)

> at

> app//org.junit.jupiter.api.AssertFalse.assertFalse(AssertFalse.java:40)

> at

> app//org.junit.jupiter.api.Assertions.assertFalse(Assertions.java:235)

> at

> app//kafka.server.DynamicBrokerReconfigurationTest.stopAndVerifyProduceConsume(DynamicBrokerReconfigurationTest.scala:1579)

> at

> app//kafka.server.DynamicBrokerReconfigurationTest.testKeyStoreAlter(DynamicBrokerReconfigurationTest.scala:399)

> at

> java.base@17.0.1/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native

> Method)

> at

> java.base@17.0.1/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

> at

> java.base@17.0.1/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.base@17.0.1/java.lang.reflect.Method.invoke(Method.java:568)

> at

> app//org.junit.platform.commons.util.ReflectionUtils.invokeMethod(ReflectionUtils.java:725)

> at

> app//org.junit.jupiter.engine.execution.MethodInvocation.proceed(MethodInvocation.java:60)

> at

> app//org.junit.jupiter.engine.execution.InvocationInterceptorChain$ValidatingInvocation.proceed(InvocationInterceptorChain.java:131)

> at

>

[jira] [Commented] (KAFKA-14122) Flaky test DynamicBrokerReconfigurationTest.testKeyStoreAlter

[

https://issues.apache.org/jira/browse/KAFKA-14122?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17573018#comment-17573018

]

Bruno Cadonna commented on KAFKA-14122:

---

Encountered again:

{code:java}

org.opentest4j.AssertionFailedError: Duplicates not expected ==> expected:

but was:

at

app//org.junit.jupiter.api.AssertionUtils.fail(AssertionUtils.java:55)

at

app//org.junit.jupiter.api.AssertFalse.assertFalse(AssertFalse.java:40)

at

app//org.junit.jupiter.api.Assertions.assertFalse(Assertions.java:235)

at

app//kafka.server.DynamicBrokerReconfigurationTest.stopAndVerifyProduceConsume(DynamicBrokerReconfigurationTest.scala:1579)

at

app//kafka.server.DynamicBrokerReconfigurationTest.testKeyStoreAlter(DynamicBrokerReconfigurationTest.scala:399)

at

java.base@17.0.1/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native

Method)

at

java.base@17.0.1/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at

java.base@17.0.1/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base@17.0.1/java.lang.reflect.Method.invoke(Method.java:568)

at

app//org.junit.platform.commons.util.ReflectionUtils.invokeMethod(ReflectionUtils.java:725)

{code}

> Flaky test DynamicBrokerReconfigurationTest.testKeyStoreAlter

> -

>

> Key: KAFKA-14122