[GitHub] spark issue #18310: [SPARK-21103][SQL] QueryPlanConstraints should be part o...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18310 **[Test build #78087 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78087/testReport)** for PR 18310 at commit [`7040877`](https://github.com/apache/spark/commit/704087798dd4e451fb3bb3caab0cdadd72ae19e5). --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user viirya commented on the issue: https://github.com/apache/spark/pull/18301 Because `SQLMetric` only stores a long value. I was using a trick: multiply the avg probe by 1000 to get a long, and when preparing the values for the UI, divide the long by 1000 to get a float back. So it's a workaround for the long-based `SQLMetric`. But in the end I didn't use it. Doesn't it sound too hacky to you?
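A minimal sketch of the fixed-point trick described above, assuming three decimal digits (a scale of 1000) and rounding to the nearest unit. `FixedPointMetric`, `encode` and `decode` are hypothetical names for illustration, not part of `SQLMetric`:

```scala
// Hypothetical helper illustrating the "multiply by 1000" workaround for a
// metric that can only hold Long values; not part of Spark's SQLMetric API.
object FixedPointMetric {
  // Scale factor: 1000 keeps three decimal digits.
  val Scale: Long = 1000L

  // Encode a Double average as a Long, rounding to the nearest unit so that
  // floating-point representation error in the product is not truncated away.
  def encode(avg: Double): Long = math.round(avg * Scale)

  // Decode the stored Long back to a Double for display in a UI.
  def decode(stored: Long): Double = stored.toDouble / Scale
}
```

With this scheme an average of 1.9 probes per lookup is stored as 1900 and shown again as 1.9; anything beyond the third decimal digit is lost.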

[GitHub] spark pull request #18308: [SPARK-21099][Spark Core] INFO Log Message Using ...

Github user jerryshao commented on a diff in the pull request:

https://github.com/apache/spark/pull/18308#discussion_r122129884

--- Diff: core/src/main/scala/org/apache/spark/ExecutorAllocationManager.scala ---

@@ -432,8 +432,10 @@ private[spark] class ExecutorAllocationManager(

if (testing || executorsRemoved.nonEmpty) {

executorsRemoved.foreach { removedExecutorId =>

newExecutorTotal -= 1

+val hasCachedBlocks = SparkEnv.get.blockManager.master.hasCachedBlocks(executorId);

--- End diff --

Btw, there is a chance that when we query the executor from the BlockManager, the executor/block manager has already been removed, so we may get `false`.


[GitHub] spark pull request #18310: [SPARK-21103][SQL] QueryPlanConstraints should be...

GitHub user rxin reopened a pull request: https://github.com/apache/spark/pull/18310 [SPARK-21103][SQL] QueryPlanConstraints should be part of LogicalPlan ## What changes were proposed in this pull request? QueryPlanConstraints should be part of LogicalPlan, rather than QueryPlan, since the constraint framework is only used for query plan rewriting and not for physical planning. ## How was this patch tested? Should be covered by existing tests, since it is a simple refactoring. You can merge this pull request into a Git repository by running: $ git pull https://github.com/rxin/spark SPARK-21103 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18310.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18310 commit 90cff62eaffbbc7859c4c05bd53e58149bff499a Author: Reynold Xin Date: 2017-06-14T07:19:13Z [SPARK-21092][SQL] Wire SQLConf in logical plan and expressions commit b03af7ffe0650811aeaa376b639ac16a17e8c4a8 Author: Reynold Xin Date: 2017-06-14T07:22:56Z More docs commit ea091643ae48183b2a9294a6bc37ee3b991c9226 Author: Reynold Xin Date: 2017-06-14T21:35:25Z Merge with master commit 14f2b41642cb9adfc04a5aa95a0d0fc231205598 Author: Reynold Xin Date: 2017-06-14T23:25:19Z Update PruneFilter rule. commit a032106b8c98bd8a0e823d6576114dbdcb6da032 Author: Reynold Xin Date: 2017-06-14T23:28:30Z Properly unset configs. commit cec78b5cf1ced8322c8cd8e599a3197c50ed49c0 Author: Reynold Xin Date: 2017-06-15T01:50:07Z Update OuterJoinEliminationSuite commit 40de35c3ac6bdbf2bd2c43b9deca04a5cbdbc4ef Author: Reynold Xin Date: 2017-06-15T02:08:56Z [SPARK-21103][SQL] QueryPlanConstraints should be part of LogicalPlan
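To illustrate the refactoring in this PR description, here is a toy Scala model under the assumption of a heavily simplified plan hierarchy; every class below is hypothetical and far smaller than Catalyst's. The self-type `self: LogicalPlan =>` restricts the constraint trait to logical plans, so the toy physical `SparkPlan` never carries constraints:

```scala
// Toy model of "constraints belong to LogicalPlan, not QueryPlan".
// All classes are hypothetical simplifications, not Catalyst's real ones.
object PlanHierarchySketch {
  // Shared base for logical and physical plans.
  abstract class QueryPlan {
    def children: Seq[QueryPlan]
  }

  // Constraints modeled as a set of predicate strings. The self-type means
  // only logical plans can mix this trait in.
  trait QueryPlanConstraints { self: LogicalPlan =>
    def validConstraints: Set[String] = Set.empty
    lazy val constraints: Set[String] = validConstraints
  }

  // Logical plans get constraints; physical plans do not.
  abstract class LogicalPlan extends QueryPlan with QueryPlanConstraints
  abstract class SparkPlan extends QueryPlan

  // A filter contributes its own condition on top of its child's constraints.
  case class Filter(condition: String, child: LogicalPlan) extends LogicalPlan {
    def children: Seq[QueryPlan] = Seq(child)
    override def validConstraints: Set[String] = child.constraints + condition
  }

  case class Relation(name: String) extends LogicalPlan {
    def children: Seq[QueryPlan] = Nil
  }
}
```

Keeping the trait on the logical side means physical plan classes do not inherit members that only the rewriting rules use.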

[GitHub] spark issue #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user rxin commented on the issue: https://github.com/apache/spark/pull/18301 Also, the avg probe probably shouldn't be an integer. At least we should show something like 1.9?

[GitHub] spark issue #12147: [SPARK-14361][SQL]Window function exclude clause

Github user xwu0226 commented on the issue: https://github.com/apache/spark/pull/12147 Since other PRs have made a lot of changes over the last year, many changes in this PR may no longer apply, so I am rebasing and reworking this PR as needed. Will update soon.

[GitHub] spark issue #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user rxin commented on the issue: https://github.com/apache/spark/pull/18301 Yes, but I just feel it is getting very long and verbose...

[GitHub] spark pull request #18308: [SPARK-21099][Spark Core] INFO Log Message Using ...

Github user jerryshao commented on a diff in the pull request:

https://github.com/apache/spark/pull/18308#discussion_r122129357

--- Diff: core/src/main/scala/org/apache/spark/ExecutorAllocationManager.scala ---

@@ -432,8 +432,10 @@ private[spark] class ExecutorAllocationManager(

if (testing || executorsRemoved.nonEmpty) {

executorsRemoved.foreach { removedExecutorId =>

newExecutorTotal -= 1

+val hasCachedBlocks = SparkEnv.get.blockManager.master.hasCachedBlocks(executorId);

--- End diff --

And the final semicolon `;` is not necessary.


[GitHub] spark pull request #18308: [SPARK-21099][Spark Core] INFO Log Message Using ...

Github user jerryshao commented on a diff in the pull request:

https://github.com/apache/spark/pull/18308#discussion_r122129269

--- Diff: core/src/main/scala/org/apache/spark/ExecutorAllocationManager.scala ---

@@ -432,8 +432,10 @@ private[spark] class ExecutorAllocationManager(

if (testing || executorsRemoved.nonEmpty) {

executorsRemoved.foreach { removedExecutorId =>

newExecutorTotal -= 1

+val hasCachedBlocks = SparkEnv.get.blockManager.master.hasCachedBlocks(executorId);

--- End diff --

This variable `executorId` is not defined; it should be changed to `removedExecutorId`.
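Putting jerryshao's diff comments together, the added lines would presumably use the loop variable and drop the trailing semicolon. The sketch below is self-contained, so `CachedBlockLookup` is a hypothetical stand-in for the block manager master that the diff reaches through `SparkEnv.get.blockManager.master`, and the log text is invented for illustration:

```scala
object RemovalLogging {
  // Hypothetical stand-in for the block manager master used in the diff.
  trait CachedBlockLookup {
    def hasCachedBlocks(executorId: String): Boolean
  }

  // Builds one (hypothetical) log line per removed executor.
  def removalMessages(
      executorsRemoved: Seq[String],
      master: CachedBlockLookup): Seq[String] =
    executorsRemoved.map { removedExecutorId =>
      // Use the loop variable, not the undefined `executorId`; no trailing `;`.
      // As noted in review, this can be false if the executor's block manager
      // was already removed by the time it is queried.
      val hasCachedBlocks = master.hasCachedBlocks(removedExecutorId)
      s"Removing executor $removedExecutorId (has cached blocks: $hasCachedBlocks)"
    }
}
```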


[GitHub] spark issue #18308: [SPARK-21099][Spark Core] INFO Log Message Using Incorre...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18308 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78086/ Test FAILed.

[GitHub] spark issue #18308: [SPARK-21099][Spark Core] INFO Log Message Using Incorre...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18308 Merged build finished. Test FAILed.

[GitHub] spark issue #18308: [SPARK-21099][Spark Core] INFO Log Message Using Incorre...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18308 **[Test build #78086 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78086/testReport)** for PR 18308 at commit [`00a42e7`](https://github.com/apache/spark/commit/00a42e7c3d08ba01265db8c7329f0ba2148ce41a). * This patch **fails to build**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user viirya commented on the issue: https://github.com/apache/spark/pull/18301 So we'd just take the global average of the avg hash probe metrics across all tasks? If there's skew, wouldn't we want to see min, med, max?
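To make the trade-off in this exchange concrete, here is a hedged sketch that computes both a per-task min/median/max summary and a single global average. The names are hypothetical, and the median picks the upper middle element for even-sized inputs, which is a simplification:

```scala
object ProbeStats {
  // Per-task summary, similar in spirit to a "min, med, max" metric display.
  final case class Summary(min: Double, med: Double, max: Double)

  def summarize(perTaskAvg: Seq[Double]): Summary = {
    require(perTaskAvg.nonEmpty, "need at least one task")
    val sorted = perTaskAvg.sortWith(_ < _)
    // Upper median for even-sized inputs, to keep the sketch short.
    Summary(sorted.head, sorted(sorted.length / 2), sorted.last)
  }

  // Single global average, weighted by each task's number of key lookups.
  def globalAvg(probesPerTask: Seq[Long], lookupsPerTask: Seq[Long]): Double = {
    require(probesPerTask.length == lookupsPerTask.length, "one entry per task")
    val totalLookups = lookupsPerTask.sum
    if (totalLookups == 0L) 0.0 else probesPerTask.sum.toDouble / totalLookups
  }
}
```

With per-task averages of 5.0, 1.0 and 1.2, the summary exposes the 5.0 outlier that a single global number would blend in. Weighting the global average by lookup counts, rather than averaging the per-task averages, keeps tasks with very few lookups from distorting it.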

[GitHub] spark issue #18308: [SPARK-21099][Spark Core] INFO Log Message Using Incorre...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18308 **[Test build #78086 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78086/testReport)** for PR 18308 at commit [`00a42e7`](https://github.com/apache/spark/commit/00a42e7c3d08ba01265db8c7329f0ba2148ce41a).

[GitHub] spark issue #18313: [SPARK-21087] [ML] CrossValidator, TrainValidationSplit ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18313 **[Test build #78085 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78085/testReport)** for PR 18313 at commit [`0fc43e1`](https://github.com/apache/spark/commit/0fc43e19c29f67c847749fb7ea0cf21ac47eb69f).

[GitHub] spark issue #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user rxin commented on the issue: https://github.com/apache/spark/pull/18301 I'd shorten it to "avg hash probe". Also, do we really need min, med, max? Maybe just a single global avg?

[GitHub] spark pull request #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user rxin commented on a diff in the pull request:

https://github.com/apache/spark/pull/18301#discussion_r122128307

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/joins/HashedRelation.scala ---

@@ -573,8 +586,11 @@ private[execution] final class LongToUnsafeRowMap(val mm: TaskMemoryManager, cap

private def updateIndex(key: Long, address: Long): Unit = {

var pos = firstSlot(key)

assert(numKeys < array.length / 2)

+numKeyLookups += 1

--- End diff --

Ain't you on a beach somewhere?!


[GitHub] spark pull request #17758: [SPARK-20460][SQL] Make it more consistent to han...

Github user maropu commented on a diff in the pull request: https://github.com/apache/spark/pull/17758#discussion_r122128317 --- Diff: sql/core/src/test/resources/sql-tests/inputs/create.sql --- @@ -0,0 +1,9 @@ +-- Check name duplication in a regular case +CREATE TABLE t (c STRING, c INT) USING parquet; + +-- Check multiple name duplication +CREATE TABLE t (c0 STRING, c1 INT, c1 DOUBLE, c0 INT) USING parquet; + +-- Catch case-insensitive name duplication +SET spark.sql.caseSensitive=false; +CREATE TABLE t (ab STRING, cd INT, ef DOUBLE, Ab INT) USING parquet; --- End diff -- ok

[GitHub] spark pull request #17758: [SPARK-20460][SQL] Make it more consistent to han...

Github user maropu commented on a diff in the pull request: https://github.com/apache/spark/pull/17758#discussion_r122128225 --- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/command/ddl.scala --- @@ -466,13 +467,15 @@ case class AlterTableRenamePartitionCommand( oldPartition, table.partitionColumnNames, table.identifier.quotedString, - sparkSession.sessionState.conf.resolver) + sparkSession.sessionState.conf.resolver, + sparkSession.sessionState.conf.caseSensitiveAnalysis) val normalizedNewPartition = PartitioningUtils.normalizePartitionSpec( newPartition, table.partitionColumnNames, table.identifier.quotedString, - sparkSession.sessionState.conf.resolver) + sparkSession.sessionState.conf.resolver, + sparkSession.sessionState.conf.caseSensitiveAnalysis) --- End diff -- ok, I'll fix it to do so.

[GitHub] spark pull request #17758: [SPARK-20460][SQL] Make it more consistent to han...

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/17758#discussion_r122128029

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/jdbc/JdbcUtils.scala ---

@@ -741,14 +742,7 @@ object JdbcUtils extends Logging {

val nameEquality = df.sparkSession.sessionState.conf.resolver

// checks duplicate columns in the user specified column types.

-userSchema.fieldNames.foreach { col =>

- val duplicatesCols = userSchema.fieldNames.filter(nameEquality(_, col))

- if (duplicatesCols.size >= 2) {

-throw new AnalysisException(

- "Found duplicate column(s) in createTableColumnTypes option value: " +

-duplicatesCols.mkString(", "))

- }

-}

+SchemaUtils.checkSchemaColumnNameDuplication(userSchema, "createTableColumnTypes option value")

--- End diff --

oh, sorry, my bad. I'll fix this.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

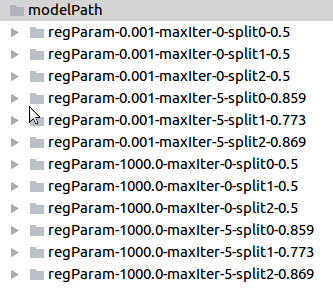

[GitHub] spark pull request #18313: [SPARK-21087] [ML] CrossValidator, TrainValidatio...

GitHub user hhbyyh opened a pull request: https://github.com/apache/spark/pull/18313 [SPARK-21087] [ML] CrossValidator, TrainValidationSplit should preserve all models after fitting: Scala ## What changes were proposed in this pull request? Allow `CrossValidatorModel` and `TrainValidationSplitModel` to preserve the full list of fitted models. Add a new string param `modelPath`; if it is set, all the models fitted during training will be preserved under the specified directory path. By default the models will not be saved. The models are saved during training to avoid the expensive memory consumption of caching them. Sample for cross validation models: file name pattern: `paramMap-split#-metric`. Sample for train validation split: file name pattern: `paramMap-metric`. ## How was this patch tested? New unit tests and a local test. You can merge this pull request into a Git repository by running: $ git pull https://github.com/hhbyyh/spark saveModels Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18313.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18313 commit f6dfc6624bee4dd3ab4048510b30517047fb979a Author: Yuhao Yang Date: 2017-06-15T05:53:13Z save all models commit 4c51912e0166a7b105533687b04a991e0e35257f Author: Yuhao Yang Date: 2017-06-15T06:06:34Z precision rounding commit 0fc43e19c29f67c847749fb7ea0cf21ac47eb69f Author: Yuhao Yang Date: 2017-06-15T06:12:35Z comment update
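A hypothetical helper showing how the two file-name patterns from this PR description could be produced. The PR text does not say how a parameter map is rendered, so the `name_value` encoding, sorted by parameter name, is an assumption made for this sketch:

```scala
// Hypothetical file-name builder for saved models; not the PR's actual code.
object ModelPathNames {
  // Render parameters as "name_value" pairs joined by "_", sorted by name so
  // the same parameter map always yields the same file name.
  private def renderParams(params: Map[String, Any]): String =
    params.toSeq.sortBy(_._1).map { case (name, value) => s"${name}_$value" }.mkString("_")

  // Cross-validation pattern from the PR: paramMap-split#-metric
  def crossValidationName(params: Map[String, Any], split: Int, metric: Double): String =
    s"${renderParams(params)}-$split-$metric"

  // Train-validation-split pattern from the PR: paramMap-metric
  def trainValidationName(params: Map[String, Any], metric: Double): String =
    s"${renderParams(params)}-$metric"
}
```

Sorting by name is a design choice so that equal parameter maps map to equal paths regardless of iteration order; a real implementation would also need to make values safe for the target file system.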

[GitHub] spark issue #18308: [SPARK-21099][Spark Core] INFO Log Message Using Incorre...

Github user srowen commented on the issue: https://github.com/apache/spark/pull/18308 Jenkins test this please

[GitHub] spark pull request #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/18301#discussion_r122127953

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/joins/HashedRelation.scala ---

@@ -573,8 +586,11 @@ private[execution] final class LongToUnsafeRowMap(val mm: TaskMemoryManager, cap

private def updateIndex(key: Long, address: Long): Unit = {

var pos = firstSlot(key)

assert(numKeys < array.length / 2)

+numKeyLookups += 1

--- End diff --

Yeah. OK. I think you're right. We should also account for collisions when searching keys in the join operator. I'll update this in the next commit.
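To show what counting lookups and collisions means, here is a toy linear-probing map from `Long` keys to `Long` values. It is not `LongToUnsafeRowMap`; only the `numKeyLookups` counter name comes from the diff, and `numProbes` is a hypothetical companion counter. Every slot inspected counts as one probe, on both inserts and searches, so collisions during key searches are reflected in the average:

```scala
// Toy linear-probing hash map (Long -> Long); not Spark's LongToUnsafeRowMap.
final class ProbeCountingMap(capacity: Int) {
  require(capacity > 0 && (capacity & (capacity - 1)) == 0, "capacity must be a power of two")

  private val keys = new Array[Long](capacity)
  private val values = new Array[Long](capacity)
  private val used = new Array[Boolean](capacity)
  private var size = 0

  // numKeyLookups mirrors the counter in the diff; numProbes is hypothetical.
  private var lookups = 0L
  private var probes = 0L
  def numKeyLookups: Long = lookups
  def numProbes: Long = probes

  private def firstSlot(key: Long): Int = java.lang.Long.hashCode(key) & (capacity - 1)

  // Find the slot holding `key`, or the empty slot where it would go.
  // Each inspected slot counts as one probe, so collisions raise the count.
  private def findSlot(key: Long): Int = {
    lookups += 1
    var pos = firstSlot(key)
    probes += 1
    while (used(pos) && keys(pos) != key) {
      pos = (pos + 1) & (capacity - 1)
      probes += 1
    }
    pos
  }

  def put(key: Long, value: Long): Unit = {
    val pos = findSlot(key)
    if (!used(pos)) {
      // Keep the load factor at most 1/2 (like the diff's
      // `numKeys < array.length / 2` assertion) so an empty slot always exists.
      require(size < capacity / 2, "toy map does not grow")
      used(pos) = true
      keys(pos) = key
      size += 1
    }
    values(pos) = value
  }

  def get(key: Long): Option[Long] = {
    val pos = findSlot(key)
    if (used(pos)) Some(values(pos)) else None
  }

  // Average probes per lookup as a Double, so values like 1.9 are not truncated.
  def avgProbe: Double = if (lookups == 0L) 0.0 else probes.toDouble / lookups
}
```

With capacity 8, keys 1 and 9 both hash to slot 1, so inserting 9 and then looking it up each cost two probes instead of one.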


[GitHub] spark pull request #17758: [SPARK-20460][SQL] Make it more consistent to han...

Github user wzhfy commented on a diff in the pull request: https://github.com/apache/spark/pull/17758#discussion_r122127202 --- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/command/ddl.scala --- @@ -466,13 +467,15 @@ case class AlterTableRenamePartitionCommand( oldPartition, table.partitionColumnNames, table.identifier.quotedString, - sparkSession.sessionState.conf.resolver) + sparkSession.sessionState.conf.resolver, + sparkSession.sessionState.conf.caseSensitiveAnalysis) val normalizedNewPartition = PartitioningUtils.normalizePartitionSpec( newPartition, table.partitionColumnNames, table.identifier.quotedString, - sparkSession.sessionState.conf.resolver) + sparkSession.sessionState.conf.resolver, + sparkSession.sessionState.conf.caseSensitiveAnalysis) --- End diff -- Seems to me `sparkSession.sessionState.conf.caseSensitiveAnalysis` and `sparkSession.sessionState.conf.resolver` are kind of redundant; can we just use `resolver` to detect duplication?
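A sketch of wzhfy's suggestion, assuming a resolver of type `(String, String) => Boolean`, which matches the shape of Catalyst's `Resolver` alias; both resolvers are defined locally here rather than imported. Because the resolver already encodes case sensitivity, a separate `caseSensitiveAnalysis` flag adds no information for duplicate detection:

```scala
object DuplicateColumns {
  // Same shape as Catalyst's Resolver alias, defined locally for the sketch.
  type Resolver = (String, String) => Boolean

  val caseSensitiveResolution: Resolver = (a, b) => a == b
  val caseInsensitiveResolution: Resolver = (a, b) => a.equalsIgnoreCase(b)

  // Names that the resolver matches against some other column, in input order.
  // Quadratic in the number of columns, which is acceptable for schemas.
  def duplicates(names: Seq[String], resolver: Resolver): Seq[String] = {
    val indexed = names.zipWithIndex
    indexed.collect {
      case (name, i) if indexed.exists { case (other, j) => i != j && resolver(name, other) } => name
    }
  }
}
```

Using the column names from the `create.sql` test, `ab` and `Ab` are reported as duplicates only under the case-insensitive resolver.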

[GitHub] spark pull request #17758: [SPARK-20460][SQL] Make it more consistent to han...

Github user wzhfy commented on a diff in the pull request: https://github.com/apache/spark/pull/17758#discussion_r122126852 --- Diff: sql/core/src/test/resources/sql-tests/inputs/create.sql --- @@ -0,0 +1,9 @@ +-- Check name duplication in a regular case +CREATE TABLE t (c STRING, c INT) USING parquet; + +-- Check multiple name duplication +CREATE TABLE t (c0 STRING, c1 INT, c1 DOUBLE, c0 INT) USING parquet; + +-- Catch case-insensitive name duplication +SET spark.sql.caseSensitive=false; +CREATE TABLE t (ab STRING, cd INT, ef DOUBLE, Ab INT) USING parquet; --- End diff -- We only need two tests here: one with case sensitivity explicitly set to true and one with it set to false.

[GitHub] spark pull request #17758: [SPARK-20460][SQL] Make it more consistent to han...

Github user wzhfy commented on a diff in the pull request:

https://github.com/apache/spark/pull/17758#discussion_r122126261

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/jdbc/JdbcUtils.scala ---

@@ -741,14 +742,7 @@ object JdbcUtils extends Logging {

val nameEquality = df.sparkSession.sessionState.conf.resolver

// checks duplicate columns in the user specified column types.

-userSchema.fieldNames.foreach { col =>

- val duplicatesCols = userSchema.fieldNames.filter(nameEquality(_, col))

- if (duplicatesCols.size >= 2) {

-throw new AnalysisException(

- "Found duplicate column(s) in createTableColumnTypes option value: " +

-duplicatesCols.mkString(", "))

- }

-}

+SchemaUtils.checkSchemaColumnNameDuplication(userSchema, "createTableColumnTypes option value")

--- End diff --

Why? In `parseUserSpecifiedCreateTableColumnTypes`, there is apparently case-sensitive checking.


[GitHub] spark issue #18311: Branch 2.0

Github user srowen commented on the issue: https://github.com/apache/spark/pull/18311 @yhqairqq close this

[GitHub] spark pull request #18312: [SPARK-20980][DOCS] update doc to reflect multiLi...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/18312

[GitHub] spark issue #18312: [SPARK-20980][DOCS] update doc to reflect multiLine chan...

Github user felixcheung commented on the issue: https://github.com/apache/spark/pull/18312 thx, merged to master/2.2

[GitHub] spark issue #18312: [SPARK-20980][DOCS] update doc to reflect multiLine chan...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18312 **[Test build #78084 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78084/testReport)** for PR 18312 at commit [`a9aeb68`](https://github.com/apache/spark/commit/a9aeb686faf9652c28d3aca775f5964e282c7663). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #18312: [SPARK-20980][DOCS] update doc to reflect multiLine chan...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18312 Merged build finished. Test PASSed.

[GitHub] spark issue #18312: [SPARK-20980][DOCS] update doc to reflect multiLine chan...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18312 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78084/ Test PASSed.

[GitHub] spark issue #18312: [SPARK-20980][DOCS] update doc to reflect multiLine chan...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/18312 LGTM

[GitHub] spark pull request #18075: [SPARK-18016][SQL][CATALYST] Code Generation: Con...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/18075

[GitHub] spark issue #18312: [SPARK-20980][DOCS] update doc to reflect multiLine chan...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18312 **[Test build #78084 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78084/testReport)** for PR 18312 at commit [`a9aeb68`](https://github.com/apache/spark/commit/a9aeb686faf9652c28d3aca775f5964e282c7663).

[GitHub] spark issue #18202: [SPARK-20980] [SQL] Rename `wholeFile` to `multiLine` fo...

Github user felixcheung commented on the issue: https://github.com/apache/spark/pull/18202 opened #18312

[GitHub] spark issue #18075: [SPARK-18016][SQL][CATALYST] Code Generation: Constant P...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/18075 thanks, merging to master! you can address the remaining comments in your other PRs

[GitHub] spark pull request #18312: [SPARK-20980][DOCS] update doc to reflect multiLi...

GitHub user felixcheung opened a pull request:

https://github.com/apache/spark/pull/18312

[SPARK-20980][DOCS] update doc to reflect multiLine change

## What changes were proposed in this pull request?

doc only change

## How was this patch tested?

manually

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/felixcheung/spark sqljsonwholefiledoc

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/18312.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #18312

commit a9aeb686faf9652c28d3aca775f5964e282c7663

Author: Felix Cheung

Date: 2017-06-15T05:44:23Z

rename

[GitHub] spark pull request #18075: [SPARK-18016][SQL][CATALYST] Code Generation: Con...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/18075#discussion_r122122867

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/ColumnarBatchScan.scala

---

@@ -93,7 +93,7 @@ private[sql] trait ColumnarBatchScan extends CodegenSupport {

}

val nextBatch = ctx.freshName("nextBatch")

--- End diff --

let's keep it as it was

[GitHub] spark pull request #18075: [SPARK-18016][SQL][CATALYST] Code Generation: Con...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/18075#discussion_r122122817

--- Diff:

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/codegen/GeneratedProjectionSuite.scala

---

@@ -62,13 +62,63 @@ class GeneratedProjectionSuite extends SparkFunSuite {

val result = safeProj(unsafe)

// Can't compare GenericInternalRow with JoinedRow directly

(0 until N).foreach { i =>

- val r = i + 1

- val s = UTF8String.fromString((i + 1).toString)

- assert(r === result.getInt(i + 2))

+ val s = UTF8String.fromString(i.toString)

+ assert(i === result.getInt(i + 2))

assert(s === result.getUTF8String(i + 2 + N))

- assert(r === result.getStruct(0, N * 2).getInt(i))

+ assert(i === result.getStruct(0, N * 2).getInt(i))

assert(s === result.getStruct(0, N * 2).getUTF8String(i + N))

- assert(r === result.getStruct(1, N * 2).getInt(i))

+ assert(i === result.getStruct(1, N * 2).getInt(i))

+ assert(s === result.getStruct(1, N * 2).getUTF8String(i + N))

+}

+

+// test generated MutableProjection

+val exprs = nestedSchema.fields.zipWithIndex.map { case (f, i) =>

+ BoundReference(i, f.dataType, true)

+}

+val mutableProj = GenerateMutableProjection.generate(exprs)

+val row1 = mutableProj(result)

+assert(result === row1)

+val row2 = mutableProj(result)

+assert(result === row2)

+ }

+

+ test("SPARK-18016: generated projections on wider table requiring class-splitting") {

+val N = 4000

+val wideRow1 = new GenericInternalRow((0 until N).toArray[Any])

--- End diff --

ditto

[GitHub] spark pull request #18075: [SPARK-18016][SQL][CATALYST] Code Generation: Con...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/18075#discussion_r122122765

--- Diff:

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/codegen/GeneratedProjectionSuite.scala

---

@@ -33,10 +33,10 @@ class GeneratedProjectionSuite extends SparkFunSuite {

test("generated projections on wider table") {

val N = 1000

-val wideRow1 = new GenericInternalRow((1 to N).toArray[Any])

+val wideRow1 = new GenericInternalRow((0 until N).toArray[Any])

--- End diff --

nit: can be `new GenericInternalRow(N)`

[GitHub] spark pull request #18306: [SPARK-21029][SS] All StreamingQuery should be st...

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18306#discussion_r122122675

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/streaming/StreamingQueryManagerSuite.scala

---

@@ -239,6 +237,40 @@ class StreamingQueryManagerSuite extends StreamTest with BeforeAndAfter {

}

}

+ test("stopAllQueries") {

+val datasets = Seq.fill(5)(makeDataset._2)

+withQueriesOn(datasets: _*) { queries =>

+ assert(queries.forall(_.isActive))

+ spark.streams.stopAllQueries()

+ assert(queries.forall(_.isActive == false), "Queries are still running")

+}

+ }

+

+ test("stop session stops all queries") {

+val inputData = MemoryStream[Int]

+val mapped = inputData.toDS.map(6 / _)

+var query: StreamingQuery = null

+try {

+ query = mapped.toDF.writeStream

+.format("memory")

+.queryName(s"queryInNewSession")

+.outputMode("append")

+.start()

+ assert(query.isActive)

+ spark.stop()

+ assert(spark.sparkContext.isStopped)

+ assert(query.isActive == false, "Query is still running")

+} catch {

+ case NonFatal(e) =>

+if (query != null) query.stop()

+throw e

--- End diff --

Why still use try/catch to stop the query? Since this is a test of this specific behavior, if the query isn't stopped, or throws, isn't the test already failing?

More importantly, why ignore the NonFatal exception that is thrown?
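
For reference, a minimal plain-Scala sketch of the two cleanup styles under discussion. `FakeQuery` is a hypothetical stand-in for a running query, not Spark's `StreamingQuery`. The `catch { case NonFatal(e) => ...; throw e }` shape in the diff stops the query and then rethrows, so the failure still reaches the caller; a `finally` block is the usual alternative when cleanup should run unconditionally.

```scala
import scala.util.control.NonFatal

// Hypothetical stand-in for a running query: it only records whether stop() was called.
final class FakeQuery {
  var stopped: Boolean = false
  def stop(): Unit = { stopped = true }
}

// Shape used in the diff: clean up on a non-fatal failure, then rethrow.
def stopOnFailure(q: FakeQuery)(body: => Unit): Unit =
  try body catch {
    case NonFatal(e) =>
      q.stop()
      throw e // the failure still propagates to the caller
  }

// Alternative: clean up whether the body succeeds or fails.
def stopAlways(q: FakeQuery)(body: => Unit): Unit =
  try body finally q.stop()
```

With either style a throwing body still fails the test; the difference is only whether cleanup also runs when the body succeeds.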

[GitHub] spark issue #18046: [SPARK-20749][SQL] Built-in SQL Function Support - all v...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18046 **[Test build #78083 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78083/testReport)** for PR 18046 at commit [`1e8cbcd`](https://github.com/apache/spark/commit/1e8cbcd26fa8905d1db3e41d8695a34b706d5dca).

[GitHub] spark pull request #18075: [SPARK-18016][SQL][CATALYST] Code Generation: Con...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/18075#discussion_r122122445

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/codegen/CodeGenerator.scala

---

@@ -233,10 +222,118 @@ class CodegenContext {

// The collection of sub-expression result resetting methods that need to be called on each row.

val subexprFunctions = mutable.ArrayBuffer.empty[String]

- def declareAddedFunctions(): String = {

-addedFunctions.map { case (funcName, funcCode) => funcCode }.mkString("\n")

+ val outerClassName = "OuterClass"

--- End diff --

nit: `private val`

[GitHub] spark issue #18284: [SPARK-21072][SQL] `TreeNode.mapChildren` should only ap...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18284 **[Test build #78082 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78082/testReport)** for PR 18284 at commit [`9a9d8af`](https://github.com/apache/spark/commit/9a9d8afee0e4ef59bfd4faca66e106851d51822e).

[GitHub] spark issue #18202: [SPARK-20980] [SQL] Rename `wholeFile` to `multiLine` fo...

Github user felixcheung commented on the issue: https://github.com/apache/spark/pull/18202 oops - found this https://github.com/apache/spark/blame/ae33abf71b353c638487948b775e966c7127cd46/docs/sql-programming-guide.md#L1001

[GitHub] spark issue #18284: [SPARK-21072][SQL] `TreeNode.mapChildren` should only ap...

Github user ConeyLiu commented on the issue: https://github.com/apache/spark/pull/18284 @cloud-fan thanks for reviewing, the code has been updated.

[GitHub] spark issue #18284: [SPARK-21072][SQL] `TreeNode.mapChildren` should only ap...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18284 **[Test build #78081 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78081/testReport)** for PR 18284 at commit [`92d4a80`](https://github.com/apache/spark/commit/92d4a80929783cca9284b9b74c37fbfc8717dc96).

[GitHub] spark issue #18202: [SPARK-20980] [SQL] Rename `wholeFile` to `multiLine` fo...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/18202 ah lucky :) merging to master/2.2!

[GitHub] spark pull request #18202: [SPARK-20980] [SQL] Rename `wholeFile` to `multiL...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/18202

[GitHub] spark pull request #18300: [SPARK-21043][SQL] Add unionByName in Dataset

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/18300#discussion_r122121031

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -1764,6 +1765,58 @@ class Dataset[T] private[sql](

}

/**

+ * Returns a new Dataset containing union of rows in this Dataset and another Dataset.

+ *

+ * This is different from both `UNION ALL` and `UNION DISTINCT` in SQL. To do a SQL-style set

+ * union (that does deduplication of elements), use this function followed by a [[distinct]].

+ *

+ * The difference between this function and [[union]] is that this function

+ * resolves columns by name (not by position):

+ *

+ * {{{

+ * val df1 = Seq((1, 2, 3)).toDF("col0", "col1", "col2")

+ * val df2 = Seq((4, 5, 6)).toDF("col1", "col2", "col0")

+ * df1.unionByName(df2).show

+ *

+ * // output:

+ * // +----+----+----+

+ * // |col0|col1|col2|

+ * // +----+----+----+

+ * // |   1|   2|   3|

+ * // |   6|   4|   5|

+ * // +----+----+----+

+ * }}}

+ *

+ * @group typedrel

+ * @since 2.3.0

+ */

+ def unionByName(other: Dataset[T]): Dataset[T] = withSetOperator {

+// Creates a `Union` node and resolves it first to reorder output attributes in `other` by name

+val unionPlan = sparkSession.sessionState.executePlan(Union(logicalPlan, other.logicalPlan))

+unionPlan.assertAnalyzed()

+val Seq(left, right) = unionPlan.analyzed.children

+

+// Builds a project list for `other` based on `logicalPlan` output names

+val resolver = sparkSession.sessionState.analyzer.resolver

+val rightProjectList = mutable.ArrayBuffer.empty[Attribute]

+val rightOutputAttrs = right.output

+for (lattr <- left.output) {

+ // To handle duplicate names, we first compute diff between `rightOutputAttrs` and

+ // already-found attrs in `rightProjectList`.

+ rightOutputAttrs.diff(rightProjectList).find { rattr => resolver(lattr.name, rattr.name)}

--- End diff --

With this logic, it seems we cannot catch duplicate column names on the left side.

How about checking for column name duplication first, and then building the right project list?

```
// Check column name duplication in both sides first
val leftOutputAttrs = left.output
val rightOutputAttrs = right.output
val caseSensitiveAnalysis = sparkSession.sessionState.conf.caseSensitiveAnalysis
SchemaUtils.checkColumnNameDuplication(
  leftOutputAttrs.map(_.name), "left column names", caseSensitiveAnalysis)
SchemaUtils.checkColumnNameDuplication(
  rightOutputAttrs.map(_.name), "right column names", caseSensitiveAnalysis)

// Then, builds a project list for `other` based on `logicalPlan` output names
val resolver = sparkSession.sessionState.analyzer.resolver
val rightProjectList = leftOutputAttrs.map { lattr =>
  val foundAttrs = rightOutputAttrs.filter { rattr => resolver(lattr.name, rattr.name) }
  // After the duplication check above, at most one right column can match
  assert(foundAttrs.size <= 1)
  if (foundAttrs.isEmpty) {
    throw new AnalysisException(s"""Cannot resolve column name "${lattr.name}" among """ +
      s"""(${rightOutputAttrs.map(_.name).mkString(", ")})""")
  }
  foundAttrs.head
}
```

(I used `SchemaUtils` here, which is implemented in #17758: https://github.com/apache/spark/pull/17758/files#diff-dc9b15e4af298799d788b59d2baf96a9R29)
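
The name-based resolution discussed here can be sketched in plain Scala over column-name strings instead of Catalyst `Attribute`s. `resolveByName` is a hypothetical helper: it approximates the analyzer's resolver with a lower-casing comparison when `caseSensitive` is false, and it uses `require` (which throws `IllegalArgumentException`) where Spark code would throw `AnalysisException`. It follows the order suggested above: reject duplicate names on either side first, after which each left column matches at most one right column.

```scala
import java.util.Locale

// Hypothetical helper: for each left column name, returns the index of the
// right column with the same name. Fails on duplicate or unresolvable names.
def resolveByName(left: Seq[String], right: Seq[String], caseSensitive: Boolean): Seq[Int] = {
  // Approximates the analyzer's resolver with a simple normalization.
  def norm(name: String): String =
    if (caseSensitive) name else name.toLowerCase(Locale.ROOT)

  // Step 1: reject duplicate column names on either side.
  for ((side, names) <- Seq("left" -> left, "right" -> right)) {
    val dups = names.groupBy(norm).collect { case (n, group) if group.size > 1 => n }
    require(dups.isEmpty, s"Found duplicate column(s) in $side column names: ${dups.mkString(", ")}")
  }

  // Step 2: with duplicates ruled out, each left name matches at most one right column.
  left.map { l =>
    val idx = right.indexWhere(r => norm(r) == norm(l))
    require(idx >= 0, s"""Cannot resolve column name "$l" among (${right.mkString(", ")})""")
    idx
  }
}
```

Applied to the Scaladoc example in the diff (`df1` columns `col0, col1, col2`; `df2` columns `col1, col2, col0`), it yields the indices `2, 0, 1`, so `df2`'s row `(4, 5, 6)` is read as `(6, 4, 5)`, matching the documented output.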

[GitHub] spark pull request #18299: [SPARK-21092][SQL] Wire SQLConf in logical plan a...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/18299

[GitHub] spark pull request #18284: [SPARK-21072][SQL] `TreeNode.mapChildren` should ...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/18284#discussion_r122120954

--- Diff:

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/trees/TreeNodeSuite.scala

---

@@ -146,6 +154,23 @@ class TreeNodeSuite extends SparkFunSuite {

assert(actual === Dummy(None))

}

+ test("mapChildren should only works on children") {

+val children = Seq((Literal(1), Literal(2)))

+val notChildren = Seq((Literal(3), Literal(4)))

+val before = SeqTupleExpression(children, notChildren)

+val toZero: PartialFunction[Expression, Expression] = { case Literal(_, _) => Literal(0) }

+val expect = SeqTupleExpression(Seq((Literal(0), Literal(0))), notChildren)

+

+var actual = before transformDown toZero

+assert(actual === expect)

+

+actual = before transformUp toZero

+assert(actual === expect)

+

+actual = before transform toZero

--- End diff --

Or we can test `mapChildren` directly.

[GitHub] spark pull request #18284: [SPARK-21072][SQL] `TreeNode.mapChildren` should ...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/18284#discussion_r122120916

--- Diff:

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/trees/TreeNodeSuite.scala

---

@@ -146,6 +154,23 @@ class TreeNodeSuite extends SparkFunSuite {

assert(actual === Dummy(None))

}

+ test("mapChildren should only works on children") {

+val children = Seq((Literal(1), Literal(2)))

+val notChildren = Seq((Literal(3), Literal(4)))

+val before = SeqTupleExpression(children, notChildren)

+val toZero: PartialFunction[Expression, Expression] = { case Literal(_, _) => Literal(0) }

+val expect = SeqTupleExpression(Seq((Literal(0), Literal(0))), notChildren)

+

+var actual = before transformDown toZero

+assert(actual === expect)

+

+actual = before transformUp toZero

+assert(actual === expect)

+

+actual = before transform toZero

--- End diff --

I think testing `transform` is good enough

[GitHub] spark pull request #18284: [SPARK-21072][SQL] `TreeNode.mapChildren` should ...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/18284#discussion_r122120851

--- Diff:

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/trees/TreeNodeSuite.scala

---

@@ -61,6 +61,14 @@ case class ExpressionInMap(map: Map[String, Expression]) extends Expression with

override lazy val resolved = true

}

+case class SeqTupleExpression(sons: Seq[(Expression, Expression)],

+notsons: Seq[(Expression, Expression)]) extends Expression with Unevaluable {

--- End diff --

nit: `nonSons`

[GitHub] spark issue #18231: [SPARK-20994] Remove redundant characters in OpenBlocks ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18231 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78079/ Test PASSed.

[GitHub] spark pull request #18284: [SPARK-21072][SQL] `TreeNode.mapChildren` should ...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/18284#discussion_r122120866

--- Diff:

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/trees/TreeNodeSuite.scala

---

@@ -146,6 +154,23 @@ class TreeNodeSuite extends SparkFunSuite {

assert(actual === Dummy(None))

}

+ test("mapChildren should only works on children") {

+val children = Seq((Literal(1), Literal(2)))

+val notChildren = Seq((Literal(3), Literal(4)))

--- End diff --

nit: `nonChildren`

[GitHub] spark issue #18231: [SPARK-20994] Remove redundant characters in OpenBlocks ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18231 Merged build finished. Test PASSed.

[GitHub] spark issue #18299: [SPARK-21092][SQL] Wire SQLConf in logical plan and expr...

Github user rxin commented on the issue: https://github.com/apache/spark/pull/18299 Merging in master.

[GitHub] spark pull request #18284: [SPARK-21072][SQL] `TreeNode.mapChildren` should ...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/18284#discussion_r122120741

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/trees/TreeNode.scala

---

@@ -340,11 +340,21 @@ abstract class TreeNode[BaseType <: TreeNode[BaseType]] extends Product {

arg

}

case tuple@(arg1: TreeNode[_], arg2: TreeNode[_]) =>

-val newChild1 = f(arg1.asInstanceOf[BaseType])

-val newChild2 = f(arg2.asInstanceOf[BaseType])

+val newChild1 = if (containsChild(arg1)) {

+ f(arg1.asInstanceOf[BaseType])

+} else {

+ arg1

--- End diff --

We can call `arg1.asInstanceOf[BaseType]` here to avoid [this change](https://github.com/apache/spark/pull/18284/files#diff-eac5b02bb450a235fef5e902a2671254R357).
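
A self-contained sketch of the behavior this PR is after, modelled loosely on the tuple branch in the diff above. `Lit` and `SeqTuple` are hypothetical toy types, not Catalyst classes; `SeqTuple` mirrors the test's `SeqTupleExpression`, where only the first sequence holds children. The function is applied to a tuple element only when `containsChild` says it is a child, and every other element is returned unchanged.

```scala
// Hypothetical toy node standing in for Literal.
final case class Lit(value: Int)

// Hypothetical stand-in for the test's SeqTupleExpression:
// only the pairs in `kids` are children; `nonKids` must be left alone.
final case class SeqTuple(kids: Seq[(Lit, Lit)], nonKids: Seq[(Lit, Lit)]) {
  // Set of children; membership is by value equality in this toy.
  lazy val containsChild: Set[Lit] = kids.flatMap { case (a, b) => Seq(a, b) }.toSet

  // Rewrites a tuple element only if it is a child.
  def mapChildren(f: Lit => Lit): SeqTuple = {
    def mapPair(pair: (Lit, Lit)): (Lit, Lit) = {
      val (a, b) = pair
      (if (containsChild(a)) f(a) else a, if (containsChild(b)) f(b) else b)
    }
    SeqTuple(kids.map(mapPair), nonKids.map(mapPair))
  }
}
```

Even though `mapPair` visits both sequences, the `containsChild` guard is what keeps `nonKids` intact, which is exactly what the `TreeNodeSuite` test asserts.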

[GitHub] spark pull request #18310: [SPARK-21103][SQL] QueryPlanConstraints should be...

Github user rxin closed the pull request at: https://github.com/apache/spark/pull/18310

[GitHub] spark pull request #18284: [SPARK-21072][SQL] `TreeNode.mapChildren` should ...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/18284#discussion_r122120790

--- Diff:

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/trees/TreeNodeSuite.scala

---

@@ -61,6 +61,14 @@ case class ExpressionInMap(map: Map[String, Expression]) extends Expression with

override lazy val resolved = true

}

+case class SeqTupleExpression(sons: Seq[(Expression, Expression)],

--- End diff --

`children` and `nonChildren` may be better names

[GitHub] spark issue #18231: [SPARK-20994] Remove redundant characters in OpenBlocks ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18231 **[Test build #78079 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78079/testReport)** for PR 18231 at commit [`6677bc9`](https://github.com/apache/spark/commit/6677bc9164ca3c04988fab943e0ce0f0bbed5b10).

* This patch passes all tests.
* This patch merges cleanly.
* This patch adds no public classes.

[GitHub] spark issue #18310: [SPARK-21103][SQL] QueryPlanConstraints should be part o...

Github user rxin commented on the issue: https://github.com/apache/spark/pull/18310 Closing for now, since @sameeragarwal said it might be useful in physical planning in the future.

[GitHub] spark issue #18106: [SPARK-20754][SQL] Support TRUNC (number)

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18106 **[Test build #78080 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78080/testReport)** for PR 18106 at commit [`b391b6a`](https://github.com/apache/spark/commit/b391b6a3e51229e501982fe184685d7c2e185172).

[GitHub] spark issue #18309: [SPARK-21079] [SQL] Calculate total size of a partition ...

Github user wzhfy commented on the issue: https://github.com/apache/spark/pull/18309 Can you add a test case? In the test, we can add partitions with different paths using the `ALTER TABLE ... SET LOCATION` command. I think that would reproduce your scenario, right?

[GitHub] spark issue #18310: [SPARK-21103][SQL] QueryPlanConstraints should be part o...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18310 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78078/ Test PASSed.

[GitHub] spark issue #18310: [SPARK-21103][SQL] QueryPlanConstraints should be part o...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18310 Merged build finished. Test PASSed.

[GitHub] spark issue #18310: [SPARK-21103][SQL] QueryPlanConstraints should be part o...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18310 **[Test build #78078 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78078/testReport)** for PR 18310 at commit [`40de35c`](https://github.com/apache/spark/commit/40de35c3ac6bdbf2bd2c43b9deca04a5cbdbc4ef).

* This patch passes all tests.
* This patch merges cleanly.
* This patch adds no public classes.

[GitHub] spark issue #18309: [SPARK-21079] [SQL] Calculate total size of a partition ...

Github user wzhfy commented on the issue: https://github.com/apache/spark/pull/18309 Please explain the failing scenario in the PR description: our test cases cannot catch it, so we need to test it manually.

[GitHub] spark pull request #18309: [SPARK-21079] [SQL] Calculate total size of a par...

Github user wzhfy commented on a diff in the pull request:

https://github.com/apache/spark/pull/18309#discussion_r122116932

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/command/AnalyzeTableCommand.scala ---

@@ -81,6 +83,19 @@ case class AnalyzeTableCommand(
object AnalyzeTableCommand extends Logging {
def calculateTotalSize(sessionState: SessionState, catalogTable: CatalogTable): Long = {
+if (catalogTable.partitionColumnNames.isEmpty) {
+ calculateTotalSize(sessionState, catalogTable.identifier, catalogTable.storage.locationUri)

--- End diff --

Rename the three-argument overload called here to `calculateLocationSize`?
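A minimal sketch of the dispatch this diff appears to introduce, written in plain Scala so it runs without Spark. Everything in it is hypothetical: `FileSystem` is just a map from file path to size in bytes, and `Table`/`Partition` stand in for `CatalogTable` and its partitions. It assumes, as the PR title suggests, that a partitioned table's size is the sum of its partitions' location sizes, and it uses the `calculateLocationSize` name proposed above for the per-location helper.

```scala
// Sketch only: hypothetical stand-ins for Spark's SessionState / CatalogTable.
object TableSizeSketch {
  // Hypothetical in-memory file system: file path -> size in bytes.
  type FileSystem = Map[String, Long]

  final case class Partition(location: String)
  final case class Table(
      location: String,
      partitionColumnNames: Seq[String],
      partitions: Seq[Partition])

  // Total size of all files stored under `location` (the name suggested in the review).
  def calculateLocationSize(fs: FileSystem, location: String): Long = {
    val prefix = if (location.endsWith("/")) location else location + "/"
    fs.iterator.collect { case (path, size) if path.startsWith(prefix) => size }.sum
  }

  // Non-partitioned table: size of the table location.
  // Partitioned table: sum over each partition's own location, so partitions
  // that live outside the table directory are still counted.
  def calculateTotalSize(fs: FileSystem, table: Table): Long =
    if (table.partitionColumnNames.isEmpty) {
      calculateLocationSize(fs, table.location)
    } else {
      table.partitions.map(p => calculateLocationSize(fs, p.location)).sum
    }
}
```

This also models the test idea from the thread: if files of 100 and 200 bytes sit under `/warehouse/t/p=1` and `/warehouse/t/p=2`, and a third partition has been moved with `ALTER TABLE ... SET LOCATION` to `/elsewhere/p=3` (300 bytes), scanning only the table root reports 300 bytes while summing per-partition locations reports 600.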


[GitHub] spark pull request #18300: [SPARK-21043][SQL] Add unionByName in Dataset

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/18300#discussion_r122116812

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -1764,6 +1765,58 @@ class Dataset[T] private[sql](
}
/**
+ * Returns a new Dataset containing union of rows in this Dataset and another Dataset.
+ *
+ * This is different from both `UNION ALL` and `UNION DISTINCT` in SQL. To do a SQL-style set
+ * union (that does deduplication of elements), use this function followed by a [[distinct]].
+ *
+ * The difference between this function and [[union]] is that this function
+ * resolves columns by name (not by position):
+ *
+ * {{{
+ * val df1 = Seq((1, 2, 3)).toDF("col0", "col1", "col2")
+ * val df2 = Seq((4, 5, 6)).toDF("col1", "col2", "col0")
+ * df1.unionByName(df2).show
+ *
+ * // output:
+ * // +----+----+----+
+ * // |col0|col1|col2|
+ * // +----+----+----+
+ * // |   1|   2|   3|
+ * // |   6|   4|   5|
+ * // +----+----+----+
+ * }}}
+ *
+ * @group typedrel
+ * @since 2.3.0
+ */
+ def unionByName(other: Dataset[T]): Dataset[T] = withSetOperator {
+// Creates a `Union` node and resolves it first to reorder output attributes in `other` by name
+val unionPlan = sparkSession.sessionState.executePlan(Union(logicalPlan, other.logicalPlan))
+unionPlan.assertAnalyzed()
+val Seq(left, right) = unionPlan.analyzed.children
+
+// Builds a project list for `other` based on `logicalPlan` output names
+val resolver = sparkSession.sessionState.analyzer.resolver
+val rightProjectList = mutable.ArrayBuffer.empty[Attribute]
+val rightOutputAttrs = right.output
+for (lattr <- left.output) {
+ // To handle duplicate names, we first compute diff between `rightOutputAttrs` and
+ // already-found attrs in `rightProjectList`.
+ rightOutputAttrs.diff(rightProjectList).find { rattr => resolver(lattr.name, rattr.name)}

--- End diff --

Inside the map, we can find the matching column names by using `filter` + `resolver`:

- If more than one column is found, throw an error for duplicate names.

- If no column is found, throw an error.

- If exactly one column is found, return the right-side attribute.
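The approach suggested above can be sketched in plain Scala, outside Spark's analyzer. This is illustrative only: columns are bare names rather than `Attribute`s, rows are `Seq[Any]`, and `caseInsensitive` is a hypothetical stand-in for `sessionState.analyzer.resolver` under a case-insensitive setting. For each left-hand column it filters the right-hand columns with the resolver and then branches on how many matched.

```scala
// Sketch of name-based column matching for a union; not Spark's implementation.
object UnionByNameSketch {
  // Hypothetical stand-in for the analyzer's resolver.
  type Resolver = (String, String) => Boolean
  val caseInsensitive: Resolver = (a, b) => a.equalsIgnoreCase(b)

  // For each left column, the index of the single right column with a matching name.
  def rightProjection(left: Seq[String], right: Seq[String], resolver: Resolver): Seq[Int] =
    left.map { lname =>
      val found = right.indices.filter(i => resolver(lname, right(i)))
      found.length match {
        case 1 => found.head
        case 0 =>
          throw new IllegalArgumentException(
            s"Cannot resolve column $lname among ${right.mkString(", ")}")
        case _ =>
          throw new IllegalArgumentException(
            s"Duplicate columns for $lname: ${found.map(i => right(i)).mkString(", ")}")
      }
    }

  // Appends `rightRows` to `leftRows`, reordering each right row into the left column order.
  def unionByName(
      left: Seq[String], leftRows: Seq[Seq[Any]],
      right: Seq[String], rightRows: Seq[Seq[Any]],
      resolver: Resolver = caseInsensitive): Seq[Seq[Any]] = {
    val projection = rightProjection(left, right, resolver)
    leftRows ++ rightRows.map(row => projection.map(i => row(i)))
  }
}
```

With the columns from the doc comment in the diff (`col0, col1, col2` on the left, `col1, col2, col0` on the right), the projection is `(2, 0, 1)`, so the right row `(4, 5, 6)` comes out as `(6, 4, 5)`, matching the expected `show` output.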


[GitHub] spark pull request #18301: [SPARK-21052][SQL] Add hash map metrics to join

Github user hvanhovell commented on a diff in the pull request:

https://github.com/apache/spark/pull/18301#discussion_r122116294

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/joins/HashedRelation.scala ---

@@ -573,8 +586,11 @@ private[execution] final class LongToUnsafeRowMap(val mm: TaskMemoryManager, cap
private def updateIndex(key: Long, address: Long): Unit = {
var pos = firstSlot(key)
assert(numKeys < array.length / 2)
+numKeyLookups += 1

--- End diff --

IMO we should. The number of required probes differs per key, and it also depends on the order in which the map was constructed. If you combine this with some skew and missing keys, the number of probes can be much higher than expected.
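To illustrate the point about per-key probe counts, here is a toy open-addressing map with linear probing that counts lookups and probes. It is not Spark's `LongToUnsafeRowMap`: the class `ProbingLongMap`, its deliberately trivial hash (`key & mask`), the step of one slot per probe and the counting rule are all assumptions chosen so that collisions are easy to construct. `numKeyLookups` borrows the counter name from the diff, but here it is incremented in `get` rather than in `updateIndex`. In an 8-slot table, keys 1, 9 and 17 all start at slot 1, so the probes needed to find a key depend on insertion order, and a colliding missing key walks the whole cluster.

```scala
// Toy linear-probing map (not Spark's LongToUnsafeRowMap) that counts probes.
final class ProbingLongMap(capacity: Int) {
  require(capacity > 0 && (capacity & (capacity - 1)) == 0, "capacity must be a power of two")

  private val mask = capacity - 1
  private val keys = new Array[Long](capacity)
  private val values = new Array[Long](capacity)
  private val used = new Array[Boolean](capacity)
  private var numKeys = 0

  private var lookups = 0L
  private var probes = 0L
  def numKeyLookups: Long = lookups
  def numProbes: Long = probes
  def avgProbesPerLookup: Double = if (lookups == 0) 0.0 else probes.toDouble / lookups

  // Deliberately trivial hash so that colliding keys are easy to pick.
  private def firstSlot(key: Long): Int = (key & mask).toInt
  private def nextSlot(pos: Int): Int = (pos + 1) & mask

  def put(key: Long, value: Long): Unit = {
    var pos = firstSlot(key)
    while (used(pos) && keys(pos) != key) pos = nextSlot(pos)
    if (!used(pos)) {
      // Keep the load factor at most 0.5 so there is always an empty slot.
      require(numKeys < capacity / 2, "map is full")
      used(pos) = true
      keys(pos) = key
      numKeys += 1
    }
    values(pos) = value
  }

  // Every slot inspected counts as one probe, including the final empty slot for a miss.
  def get(key: Long): Option[Long] = {
    lookups += 1
    var pos = firstSlot(key)
    probes += 1
    while (used(pos) && keys(pos) != key) {
      pos = nextSlot(pos)
      probes += 1
    }
    if (used(pos)) Some(values(pos)) else None
  }
}

object ProbeDemo {
  // Inserts `insertOrder` into a fresh 8-slot map, then performs `lookups`.
  def run(insertOrder: Seq[Long], lookups: Seq[Long]): ProbingLongMap = {
    val map = new ProbingLongMap(8)
    insertOrder.foreach(k => map.put(k, k * 10))
    lookups.foreach(k => map.get(k))
    map
  }
}
```

For example, `ProbeDemo.run(Seq(1L, 9L, 17L), Seq(1L))` needs one probe while `ProbeDemo.run(Seq(17L, 9L, 1L), Seq(1L))` needs three, and looking up the absent key 25 after inserting 1, 9 and 17 takes four probes before reaching an empty slot.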