[GitHub] [spark] cloud-fan commented on a diff in pull request #36966: [SPARK-37753] [FOLLOWUP] [SQL] Fix unit tests sometimes failing

cloud-fan commented on code in PR #36966:

URL: https://github.com/apache/spark/pull/36966#discussion_r907040117

##

sql/core/src/test/scala/org/apache/spark/sql/execution/adaptive/AdaptiveQueryExecSuite.scala:

##

@@ -710,18 +711,20 @@ class AdaptiveQueryExecSuite

test("SPARK-37753: Inhibit broadcast in left outer join when there are many

empty" +

" partitions on outer/left side") {

-withSQLConf(

- SQLConf.ADAPTIVE_EXECUTION_ENABLED.key -> "true",

- SQLConf.NON_EMPTY_PARTITION_RATIO_FOR_BROADCAST_JOIN.key -> "0.5") {

- // `testData` is small enough to be broadcast but has empty partition

ratio over the config.

- withSQLConf(SQLConf.AUTO_BROADCASTJOIN_THRESHOLD.key -> "200") {

-val (plan, adaptivePlan) = runAdaptiveAndVerifyResult(

- "SELECT * FROM (select * from testData where value = '1') td" +

-" left outer join testData2 ON key = a")

-val smj = findTopLevelSortMergeJoin(plan)

-assert(smj.size == 1)

-val bhj = findTopLevelBroadcastHashJoin(adaptivePlan)

-assert(bhj.isEmpty)

+eventually(timeout(40.seconds), interval(500.milliseconds)) {

Review Comment:

the interval is 500 ms, do you know how long this test takes?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng commented on a diff in pull request #36985: [SPARK-39597][PYTHON] Make GetTable, TableExists and DatabaseExists in the python side support 3-layer-namespace

zhengruifeng commented on code in PR #36985: URL: https://github.com/apache/spark/pull/36985#discussion_r907041945 ## python/pyspark/sql/catalog.py: ## @@ -164,6 +169,65 @@ def listTables(self, dbName: Optional[str] = None) -> List[Table]: ) return tables +def getTable(self, tableName: str, dbName: Optional[str] = None) -> Table: Review Comment: I think it's a good idea. functions with `dbName` and `tableName` are now somewhat confusing, when `tableName` start to support 3L namespace. Let me update this PR. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #36963: [SPARK-39564][SS] Expose the information of catalog table to the logical plan in streaming query

cloud-fan commented on code in PR #36963:

URL: https://github.com/apache/spark/pull/36963#discussion_r907049142

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/trees/TreeNode.scala:

##

@@ -877,13 +879,18 @@ abstract class TreeNode[BaseType <: TreeNode[BaseType]]

extends Product with Tre

t.copy(properties = Utils.redact(t.properties).toMap,

options = Utils.redact(t.options).toMap) :: Nil

case table: CatalogTable =>

- table.storage.serde match {

-case Some(serde) => table.identifier :: serde :: Nil

-case _ => table.identifier :: Nil

- }

+ stringArgsForCatalogTable(table)

+

case other => other :: Nil

}.mkString(", ")

+ private def stringArgsForCatalogTable(table: CatalogTable): Seq[Any] = {

+table.storage.serde match {

+ case Some(serde) => table.identifier :: serde :: Nil

Review Comment:

I think quoted string is better, let's keep it as it was first.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #36963: [SPARK-39564][SS] Expose the information of catalog table to the logical plan in streaming query

cloud-fan commented on code in PR #36963:

URL: https://github.com/apache/spark/pull/36963#discussion_r907049815

##

sql/catalyst/src/main/scala/org/apache/spark/sql/execution/datasources/v2/DataSourceV2Relation.scala:

##

@@ -68,7 +68,11 @@ case class DataSourceV2Relation(

override def skipSchemaResolution: Boolean =

table.supports(TableCapability.ACCEPT_ANY_SCHEMA)

override def simpleString(maxFields: Int): String = {

-s"RelationV2${truncatedString(output, "[", ", ", "]", maxFields)} $name"

+val tableQualifier = (catalog, identifier) match {

Review Comment:

nit: qualifiedTableName

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] mcdull-zhang commented on a diff in pull request #36966: [SPARK-37753] [FOLLOWUP] [SQL] Fix unit tests sometimes failing

mcdull-zhang commented on code in PR #36966:

URL: https://github.com/apache/spark/pull/36966#discussion_r907052628

##

sql/core/src/test/scala/org/apache/spark/sql/execution/adaptive/AdaptiveQueryExecSuite.scala:

##

@@ -710,18 +711,20 @@ class AdaptiveQueryExecSuite

test("SPARK-37753: Inhibit broadcast in left outer join when there are many

empty" +

" partitions on outer/left side") {

-withSQLConf(

- SQLConf.ADAPTIVE_EXECUTION_ENABLED.key -> "true",

- SQLConf.NON_EMPTY_PARTITION_RATIO_FOR_BROADCAST_JOIN.key -> "0.5") {

- // `testData` is small enough to be broadcast but has empty partition

ratio over the config.

- withSQLConf(SQLConf.AUTO_BROADCASTJOIN_THRESHOLD.key -> "200") {

-val (plan, adaptivePlan) = runAdaptiveAndVerifyResult(

- "SELECT * FROM (select * from testData where value = '1') td" +

-" left outer join testData2 ON key = a")

-val smj = findTopLevelSortMergeJoin(plan)

-assert(smj.size == 1)

-val bhj = findTopLevelBroadcastHashJoin(adaptivePlan)

-assert(bhj.isEmpty)

+eventually(timeout(40.seconds), interval(500.milliseconds)) {

Review Comment:

After communication, the unit test takes about 3s, the timeout is set to

15s, and it can be retried up to 5 times.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #36963: [SPARK-39564][SS] Expose the information of catalog table to the logical plan in streaming query

cloud-fan commented on code in PR #36963:

URL: https://github.com/apache/spark/pull/36963#discussion_r907053973

##

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/sources/WriteToMicroBatchDataSourceV1.scala:

##

@@ -0,0 +1,55 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.streaming.sources

+

+import org.apache.spark.sql.catalyst.catalog.CatalogTable

+import org.apache.spark.sql.catalyst.expressions.Attribute

+import org.apache.spark.sql.catalyst.plans.logical.{LogicalPlan, UnaryNode}

+import org.apache.spark.sql.execution.streaming.Sink

+import org.apache.spark.sql.streaming.OutputMode

+

+/**

+ * Marker node to represent a DSv1 sink on streaming query.

+ *

+ * Despite this is expected to be the top node, this node should behave like

"pass-through"

+ * since the DSv1 codepath on microbatch execution handles sink operation

separately.

+ *

+ * This node is eliminated in streaming specific optimization phase, which

means there is no

+ * matching physical node.

+ */

+case class WriteToMicroBatchDataSourceV1(

+catalogTable: Option[CatalogTable],

+sink: Sink,

+query: LogicalPlan,

+queryId: String,

+writeOptions: Map[String, String],

+outputMode: OutputMode,

+batchId: Option[Long] = None)

Review Comment:

where do we use this `batchId`?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] panbingkun opened a new pull request, #36998: [SPARK-39613][BUILD] Upgrade shapeless to 2.3.9

panbingkun opened a new pull request, #36998: URL: https://github.com/apache/spark/pull/36998 ### What changes were proposed in this pull request? This PR aims to upgrade shapeless from 2.3.7 to 2.3.9. ### Why are the changes needed? This will bring some bug fix of shapeless ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? Pass GA -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a diff in pull request #36963: [SPARK-39564][SS] Expose the information of catalog table to the logical plan in streaming query

HeartSaVioR commented on code in PR #36963:

URL: https://github.com/apache/spark/pull/36963#discussion_r907061291

##

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/sources/WriteToMicroBatchDataSourceV1.scala:

##

@@ -0,0 +1,55 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.streaming.sources

+

+import org.apache.spark.sql.catalyst.catalog.CatalogTable

+import org.apache.spark.sql.catalyst.expressions.Attribute

+import org.apache.spark.sql.catalyst.plans.logical.{LogicalPlan, UnaryNode}

+import org.apache.spark.sql.execution.streaming.Sink

+import org.apache.spark.sql.streaming.OutputMode

+

+/**

+ * Marker node to represent a DSv1 sink on streaming query.

+ *

+ * Despite this is expected to be the top node, this node should behave like

"pass-through"

+ * since the DSv1 codepath on microbatch execution handles sink operation

separately.

+ *

+ * This node is eliminated in streaming specific optimization phase, which

means there is no

+ * matching physical node.

+ */

+case class WriteToMicroBatchDataSourceV1(

+catalogTable: Option[CatalogTable],

+sink: Sink,

+query: LogicalPlan,

+queryId: String,

+writeOptions: Map[String, String],

+outputMode: OutputMode,

+batchId: Option[Long] = None)

Review Comment:

This is to make this class be symmetric with WriteToMicroBatchDataSource.

Many of parameters are not actually used.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun opened a new pull request, #36999: [SPARK-39614][K8S] K8s pod name follows `DNS Subdomain Names` rule

dongjoon-hyun opened a new pull request, #36999: URL: https://github.com/apache/spark/pull/36999 ### What changes were proposed in this pull request? ### Why are the changes needed? ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a diff in pull request #36963: [SPARK-39564][SS] Expose the information of catalog table to the logical plan in streaming query

HeartSaVioR commented on code in PR #36963:

URL: https://github.com/apache/spark/pull/36963#discussion_r907063933

##

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/sources/WriteToMicroBatchDataSourceV1.scala:

##

@@ -0,0 +1,55 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.streaming.sources

+

+import org.apache.spark.sql.catalyst.catalog.CatalogTable

+import org.apache.spark.sql.catalyst.expressions.Attribute

+import org.apache.spark.sql.catalyst.plans.logical.{LogicalPlan, UnaryNode}

+import org.apache.spark.sql.execution.streaming.Sink

+import org.apache.spark.sql.streaming.OutputMode

+

+/**

+ * Marker node to represent a DSv1 sink on streaming query.

+ *

+ * Despite this is expected to be the top node, this node should behave like

"pass-through"

+ * since the DSv1 codepath on microbatch execution handles sink operation

separately.

+ *

+ * This node is eliminated in streaming specific optimization phase, which

means there is no

+ * matching physical node.

+ */

+case class WriteToMicroBatchDataSourceV1(

+catalogTable: Option[CatalogTable],

+sink: Sink,

+query: LogicalPlan,

+queryId: String,

+writeOptions: Map[String, String],

+outputMode: OutputMode,

+batchId: Option[Long] = None)

Review Comment:

Previous self-comment:

> It'd be nice if we can deal with

[SPARK-27484](https://issues.apache.org/jira/browse/SPARK-27484), but let's

defer it as of now as it may bring additional works/concerns.

These parameters could probably help us to go with SPARK-27484.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on pull request #36999: [SPARK-39614][K8S] K8s pod name follows `DNS Subdomain Names` rule

dongjoon-hyun commented on PR #36999: URL: https://github.com/apache/spark/pull/36999#issuecomment-1166991352 cc @yaooqinn -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a diff in pull request #36963: [SPARK-39564][SS] Expose the information of catalog table to the logical plan in streaming query

HeartSaVioR commented on code in PR #36963:

URL: https://github.com/apache/spark/pull/36963#discussion_r907059119

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/trees/TreeNode.scala:

##

@@ -877,13 +879,18 @@ abstract class TreeNode[BaseType <: TreeNode[BaseType]]

extends Product with Tre

t.copy(properties = Utils.redact(t.properties).toMap,

options = Utils.redact(t.options).toMap) :: Nil

case table: CatalogTable =>

- table.storage.serde match {

-case Some(serde) => table.identifier :: serde :: Nil

-case _ => table.identifier :: Nil

- }

+ stringArgsForCatalogTable(table)

+

case other => other :: Nil

}.mkString(", ")

+ private def stringArgsForCatalogTable(table: CatalogTable): Seq[Any] = {

+table.storage.serde match {

+ case Some(serde) => table.identifier :: serde :: Nil

Review Comment:

OK. Let me leave this as it is, and see whether I can make it consistent for

other places in the following work (out of this PR).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #36999: [SPARK-39614][K8S] K8s pod name follows `DNS Subdomain Names` rule

dongjoon-hyun commented on code in PR #36999:

URL: https://github.com/apache/spark/pull/36999#discussion_r907065994

##

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala:

##

@@ -729,5 +729,6 @@ private[spark] object Config extends Logging {

val KUBERNETES_DRIVER_ENV_PREFIX = "spark.kubernetes.driverEnv."

- val KUBERNETES_DNSNAME_MAX_LENGTH = 63

+ val KUBERNETES_DNS_SUBDOMAIN_NAME_MAX_LENGTH = 253

+ val KUBERNETES_DNS_LABEL_NAME_MAX_LENGTH = 63

Review Comment:

K8s have two DNS name rules: DNS Subdomain and DNS Label.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a diff in pull request #36963: [SPARK-39564][SS] Expose the information of catalog table to the logical plan in streaming query

HeartSaVioR commented on code in PR #36963:

URL: https://github.com/apache/spark/pull/36963#discussion_r907066953

##

sql/catalyst/src/main/scala/org/apache/spark/sql/execution/datasources/v2/DataSourceV2Relation.scala:

##

@@ -68,7 +68,11 @@ case class DataSourceV2Relation(

override def skipSchemaResolution: Boolean =

table.supports(TableCapability.ACCEPT_ANY_SCHEMA)

override def simpleString(maxFields: Int): String = {

-s"RelationV2${truncatedString(output, "[", ", ", "]", maxFields)} $name"

+val tableQualifier = (catalog, identifier) match {

Review Comment:

[4ae4b81](https://github.com/apache/spark/pull/36963/commits/4ae4b813e295fda8f6c0dab4ffee43fef0761496)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on pull request #36774: [SPARK-39388][SQL] Reuse `orcSchema` when push down Orc predicates

cloud-fan commented on PR #36774: URL: https://github.com/apache/spark/pull/36774#issuecomment-1167008944 is `OrcUtils.readCatalystSchema` still needed anywhere? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] yaooqinn commented on a diff in pull request #36999: [SPARK-39614][K8S] K8s pod name follows `DNS Subdomain Names` rule

yaooqinn commented on code in PR #36999:

URL: https://github.com/apache/spark/pull/36999#discussion_r907082125

##

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/submit/KubernetesClientUtils.scala:

##

@@ -38,7 +38,7 @@ private[spark] object KubernetesClientUtils extends Logging {

// Config map name can be 63 chars at max.

def configMapName(prefix: String): String = {

val suffix = "-conf-map"

-s"${prefix.take(KUBERNETES_DNSNAME_MAX_LENGTH - suffix.length)}$suffix"

+s"${prefix.take(KUBERNETES_DNS_SUBDOMAIN_NAME_MAX_LENGTH -

suffix.length)}$suffix"

Review Comment:

nit: update L38

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #36774: [SPARK-39388][SQL] Reuse `orcSchema` when push down Orc predicates

LuciferYang commented on PR #36774: URL: https://github.com/apache/spark/pull/36774#issuecomment-1167015535 > readCatalystSchema Yes, it's useless. [05707f2](https://github.com/apache/spark/pull/36774/commits/05707f2654e20d191c7e8ba8b5640eedc685bf17) deleted it -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng opened a new pull request, #37000: [SPARK-39615][SQL][WIP] Make listColumns be compatible with 3 layer namespace

zhengruifeng opened a new pull request, #37000: URL: https://github.com/apache/spark/pull/37000 ### What changes were proposed in this pull request? Make listColumns be compatible with 3 layer namespace ### Why are the changes needed? for 3 layer namespace compatiblity ### Does this PR introduce _any_ user-facing change? Yes ### How was this patch tested? added UT -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #36774: [SPARK-39388][SQL] Reuse `orcSchema` when push down Orc predicates

LuciferYang commented on PR #36774: URL: https://github.com/apache/spark/pull/36774#issuecomment-1167018893 > > readCatalystSchema > > Yes, it's useless. [05707f2](https://github.com/apache/spark/pull/36774/commits/05707f2654e20d191c7e8ba8b5640eedc685bf17) deleted it Let's check this with GitHub Actions -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #36999: [SPARK-39614][K8S] K8s pod name follows `DNS Subdomain Names` rule

dongjoon-hyun commented on code in PR #36999:

URL: https://github.com/apache/spark/pull/36999#discussion_r907113328

##

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/submit/KubernetesClientUtils.scala:

##

@@ -38,7 +38,7 @@ private[spark] object KubernetesClientUtils extends Logging {

// Config map name can be 63 chars at max.

def configMapName(prefix: String): String = {

val suffix = "-conf-map"

-s"${prefix.take(KUBERNETES_DNSNAME_MAX_LENGTH - suffix.length)}$suffix"

+s"${prefix.take(KUBERNETES_DNS_SUBDOMAIN_NAME_MAX_LENGTH -

suffix.length)}$suffix"

Review Comment:

Oh, right. Thanks!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] MaxGekk closed pull request #36965: [SPARK-39567][SQL] Support ANSI intervals in the percentile functions

MaxGekk closed pull request #36965: [SPARK-39567][SQL] Support ANSI intervals in the percentile functions URL: https://github.com/apache/spark/pull/36965 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on pull request #36999: [SPARK-39614][K8S] K8s pod name follows `DNS Subdomain Names` rule

dongjoon-hyun commented on PR #36999: URL: https://github.com/apache/spark/pull/36999#issuecomment-1167045418 Thank you, @yaooqinn . K8s UT passed at the first comment and the second commit is only changing comments. Merged to master/3.3. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun closed pull request #36999: [SPARK-39614][K8S] K8s pod name follows `DNS Subdomain Names` rule

dongjoon-hyun closed pull request #36999: [SPARK-39614][K8S] K8s pod name follows `DNS Subdomain Names` rule URL: https://github.com/apache/spark/pull/36999 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] chenzhx commented on a diff in pull request #36663: [SPARK-38899][SQL]DS V2 supports push down datetime functions

chenzhx commented on code in PR #36663:

URL: https://github.com/apache/spark/pull/36663#discussion_r907122926

##

sql/core/src/main/scala/org/apache/spark/sql/catalyst/util/V2ExpressionBuilder.scala:

##

@@ -254,6 +254,55 @@ class V2ExpressionBuilder(e: Expression, isPredicate:

Boolean = false) {

} else {

None

}

+case date: DateAdd =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("DATE_ADD",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case date: DateDiff =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("DATE_DIFF",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case date: TruncDate =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("TRUNC",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case Second(child, _) =>

+ generateExpression(child).map(v => new V2Extract("SECOND", v))

+case Minute(child, _) =>

+ generateExpression(child).map(v => new V2Extract("MINUTE", v))

+case Hour(child, _) =>

+ generateExpression(child).map(v => new V2Extract("HOUR", v))

+case Month(child) =>

+ generateExpression(child).map(v => new V2Extract("MONTH", v))

+case Quarter(child) =>

+ generateExpression(child).map(v => new V2Extract("QUARTER", v))

+case Year(child) =>

+ generateExpression(child).map(v => new V2Extract("YEAR", v))

+// The DAY_OF_WEEK function in Spark returns the day of the week for

date/timestamp.

+// Database dialects should avoid to follow ISO semantics when handling

DAY_OF_WEEK.

+case DayOfWeek(child) =>

+ generateExpression(child).map(v => new V2Extract("DAY_OF_WEEK", v))

+case DayOfMonth(child) =>

+ generateExpression(child).map(v => new V2Extract("DAY_OF_MONTH", v))

+case DayOfYear(child) =>

+ generateExpression(child).map(v => new V2Extract("DAY_OF_YEAR", v))

+// The WEEK_OF_YEAR function in Spark returns the ISO week from a

date/timestamp.

+// Database dialects need to follow ISO semantics when handling

WEEK_OF_YEAR.

+case WeekOfYear(child) =>

+ generateExpression(child).map(v => new V2Extract("WEEK_OF_YEAR", v))

+// The YEAR_OF_WEEK function in Spark returns the ISO week year from a

date/timestamp.

+// Database dialects need to follow ISO semantics when handling

YEAR_OF_WEEK.

+case YearOfWeek(child) =>

+ generateExpression(child).map(v => new V2Extract("YEAR_OF_WEEK", v))

Review Comment:

Yes. We can replace "Week_Of_Year" with "WEEK", "Day_Of_Year" with "DOY",

and "Day_Of_Month" with "DAY"

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer opened a new pull request, #37001: [SPARK-39148][SQL] DS V2 aggregate push down can work with OFFSET or LIMIT

beliefer opened a new pull request, #37001: URL: https://github.com/apache/spark/pull/37001 ### What changes were proposed in this pull request? Currently, DS V2 aggregate push-down cannot work with OFFSET and LIMIT. If it can work with OFFSET or LIMIT, it will be better performance. ### Why are the changes needed? Let DS V2 aggregate push down can work with OFFSET push down or LIMIT push down. ### Does this PR introduce _any_ user-facing change? 'No'. New feature. ### How was this patch tested? Update tests cases. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun closed pull request #36997: [SPARK-39394][SPARK-39253][DOCS][FOLLOW-UP] Fix the PySpark API reference links in the documentation

dongjoon-hyun closed pull request #36997: [SPARK-39394][SPARK-39253][DOCS][FOLLOW-UP] Fix the PySpark API reference links in the documentation URL: https://github.com/apache/spark/pull/36997 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on pull request #36997: [SPARK-39394][SPARK-39253][DOCS][FOLLOW-UP] Fix the PySpark API reference links in the documentation

dongjoon-hyun commented on PR #36997: URL: https://github.com/apache/spark/pull/36997#issuecomment-1167067833 Could you make a backport to branch-3.3? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #36936: [SPARK-39503][SQL] Add session catalog name for v1 database table and function

cloud-fan commented on code in PR #36936:

URL: https://github.com/apache/spark/pull/36936#discussion_r907136211

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/identifiers.scala:

##

@@ -34,13 +38,35 @@ sealed trait IdentifierWithDatabase {

private def quoteIdentifier(name: String): String = name.replace("`", "``")

def quotedString: String = {

+if (SQLConf.get.getConf(LEGACY_IDENTIFIER_OUTPUT_CATALOG_NAME) &&

database.isDefined) {

+ val replacedId = quoteIdentifier(identifier)

+ val replacedDb = database.map(quoteIdentifier(_))

Review Comment:

```suggestion

val replacedDb = quoteIdentifier(database.get)

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #36936: [SPARK-39503][SQL] Add session catalog name for v1 database table and function

cloud-fan commented on code in PR #36936:

URL: https://github.com/apache/spark/pull/36936#discussion_r907137742

##

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala:

##

@@ -3848,6 +3848,15 @@ object SQLConf {

.booleanConf

.createWithDefault(false)

+ val LEGACY_IDENTIFIER_OUTPUT_CATALOG_NAME =

+buildConf("spark.sql.legacy.identifierOutputCatalogName")

+ .internal()

+ .doc("When set to true, the identifier will output catalog name if

database is defined. " +

+"When set to false, it restores the legacy behavior that does not

output catalog name.")

Review Comment:

nit: usually true means legacy behavior, we probably need to rename the

config a little bit.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on pull request #36984: [SPARK-39594][CORE] Improve logs to show addresses in addition to ports

dongjoon-hyun commented on PR #36984: URL: https://github.com/apache/spark/pull/36984#issuecomment-1167069520 Rebased to the master. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] MaxGekk commented on pull request #36965: [SPARK-39567][SQL] Support ANSI intervals in the percentile functions

MaxGekk commented on PR #36965: URL: https://github.com/apache/spark/pull/36965#issuecomment-1167040364 Merging to master. Thank you, @cloud-fan for review. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on pull request #36936: [SPARK-39503][SQL] Add session catalog name for v1 database table and function

cloud-fan commented on PR #36936: URL: https://github.com/apache/spark/pull/36936#issuecomment-1167076595 looks good in general. I'm taking a closer look at where we use `unquotedStringWithoutCatalog` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] martin-g commented on a diff in pull request #36980: [POC][SPARK-39522][INFRA] Uses Docker image cache over a custom image

martin-g commented on code in PR #36980:

URL: https://github.com/apache/spark/pull/36980#discussion_r907146312

##

.github/workflows/build_and_test.yml:

##

@@ -251,13 +254,59 @@ jobs:

name: unit-tests-log-${{ matrix.modules }}-${{ matrix.comment }}-${{

matrix.java }}-${{ matrix.hadoop }}-${{ matrix.hive }}

path: "**/target/unit-tests.log"

- pyspark:

+ infra-image:

needs: precondition

+if: >-

+ fromJson(needs.precondition.outputs.required).pyspark == 'true'

+ || fromJson(needs.precondition.outputs.required).sparkr == 'true'

+ || fromJson(needs.precondition.outputs.required).lint == 'true'

+runs-on: ubuntu-latest

+steps:

+ - name: Login to GitHub Container Registry

+uses: docker/login-action@v2

+with:

+ registry: ghcr.io

+ username: ${{ github.actor }}

+ password: ${{ secrets.GITHUB_TOKEN }}

+ - name: Checkout Spark repository

+uses: actions/checkout@v2

+# In order to fetch changed files

+with:

+ fetch-depth: 0

+ repository: apache/spark

+ ref: ${{ inputs.branch }}

+ - name: Sync the current branch with the latest in Apache Spark

+if: github.repository != 'apache/spark'

+run: |

+ echo "APACHE_SPARK_REF=$(git rev-parse HEAD)" >> $GITHUB_ENV

+ git fetch https://github.com/$GITHUB_REPOSITORY.git

${GITHUB_REF#refs/heads/}

+ git -c user.name='Apache Spark Test Account' -c

user.email='sparktest...@gmail.com' merge --no-commit --progress --squash

FETCH_HEAD

+ git -c user.name='Apache Spark Test Account' -c

user.email='sparktest...@gmail.com' commit -m "Merged commit" --allow-empty

+ -

+name: Set up QEMU

+uses: docker/setup-qemu-action@v1

+ -

+name: Set up Docker Buildx

+uses: docker/setup-buildx-action@v1

+ -

+name: Build and push

+id: docker_build

+uses: docker/build-push-action@v2

+with:

+ context: ./dev/infra/

+ push: true

+ tags: ghcr.io/${{ needs.precondition.outputs.user

}}/apache-spark-github-action-image:latest

+ # TODO: Change yikun to apache

+ # Use the infra image cache of build_infra_images_cache.yml

+ cache-from:

type=registry,ref=ghcr.io/yikun/apache-spark-github-action-image-cache:${{

inputs.branch }}

+

+ pyspark:

+needs: [precondition, infra-image]

if: fromJson(needs.precondition.outputs.required).pyspark == 'true'

name: "Build modules: ${{ matrix.modules }}"

runs-on: ubuntu-20.04

container:

- image: dongjoon/apache-spark-github-action-image:20220207

+ image: ghcr.io/${{ needs.precondition.outputs.user

}}/apache-spark-github-action-image:latest

Review Comment:

I think you could use `options: --user ${{

needs.preconditions.outputs.os_user }}` to avoid the steps for ` Github Actions

permissions workaround` later.

where `os_user` is defined earlier as:

`echo ::set-output name=os_user::$(id -u)`

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #36998: [SPARK-39613][BUILD] Upgrade shapeless to 2.3.9

AmplabJenkins commented on PR #36998: URL: https://github.com/apache/spark/pull/36998#issuecomment-1167095745 Can one of the admins verify this patch? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] chenzhx commented on a diff in pull request #36663: [SPARK-38899][SQL]DS V2 supports push down datetime functions

chenzhx commented on code in PR #36663: URL: https://github.com/apache/spark/pull/36663#discussion_r907162042 ## sql/catalyst/src/main/java/org/apache/spark/sql/connector/expressions/Extract.java: ## @@ -0,0 +1,47 @@ +/* + * Licensed to the Apache Software Foundation (ASF) under one or more + * contributor license agreements. See the NOTICE file distributed with + * this work for additional information regarding copyright ownership. + * The ASF licenses this file to You under the Apache License, Version 2.0 + * (the "License"); you may not use this file except in compliance with + * the License. You may obtain a copy of the License at + * + *http://www.apache.org/licenses/LICENSE-2.0 + * + * Unless required by applicable law or agreed to in writing, software + * distributed under the License is distributed on an "AS IS" BASIS, + * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. + * See the License for the specific language governing permissions and + * limitations under the License. + */ + +package org.apache.spark.sql.connector.expressions; + +import org.apache.spark.annotation.Evolving; + +import java.io.Serializable; + +/** + * Represent an extract expression, which contains a field to be extracted + * and a source expression where the field should be extracted. Review Comment: OK -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #36663: [SPARK-38899][SQL]DS V2 supports push down datetime functions

cloud-fan commented on code in PR #36663:

URL: https://github.com/apache/spark/pull/36663#discussion_r907164502

##

sql/core/src/main/scala/org/apache/spark/sql/catalyst/util/V2ExpressionBuilder.scala:

##

@@ -254,6 +254,55 @@ class V2ExpressionBuilder(e: Expression, isPredicate:

Boolean = false) {

} else {

None

}

+case date: DateAdd =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("DATE_ADD",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case date: DateDiff =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("DATE_DIFF",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case date: TruncDate =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("TRUNC",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case Second(child, _) =>

+ generateExpression(child).map(v => new V2Extract("SECOND", v))

+case Minute(child, _) =>

+ generateExpression(child).map(v => new V2Extract("MINUTE", v))

+case Hour(child, _) =>

+ generateExpression(child).map(v => new V2Extract("HOUR", v))

+case Month(child) =>

+ generateExpression(child).map(v => new V2Extract("MONTH", v))

+case Quarter(child) =>

+ generateExpression(child).map(v => new V2Extract("QUARTER", v))

+case Year(child) =>

+ generateExpression(child).map(v => new V2Extract("YEAR", v))

+// The DAY_OF_WEEK function in Spark returns the day of the week for

date/timestamp.

+// Database dialects should avoid to follow ISO semantics when handling

DAY_OF_WEEK.

+case DayOfWeek(child) =>

+ generateExpression(child).map(v => new V2Extract("DAY_OF_WEEK", v))

+case DayOfMonth(child) =>

+ generateExpression(child).map(v => new V2Extract("DAY_OF_MONTH", v))

+case DayOfYear(child) =>

+ generateExpression(child).map(v => new V2Extract("DAY_OF_YEAR", v))

+// The WEEK_OF_YEAR function in Spark returns the ISO week from a

date/timestamp.

+// Database dialects need to follow ISO semantics when handling

WEEK_OF_YEAR.

+case WeekOfYear(child) =>

+ generateExpression(child).map(v => new V2Extract("WEEK_OF_YEAR", v))

+// The YEAR_OF_WEEK function in Spark returns the ISO week year from a

date/timestamp.

+// Database dialects need to follow ISO semantics when handling

YEAR_OF_WEEK.

+case YearOfWeek(child) =>

+ generateExpression(child).map(v => new V2Extract("YEAR_OF_WEEK", v))

Review Comment:

SGMT. My only concern is the non-standard ones: `DAY_OF_WEEK`. One idea is

to translate the spark expression to standard functions. ISO DOW is 1 (Monday)

to 7 (Sunday)

Spark `DayOfWeek` -> `(EXTACT(DOW FROM ...) + 5) % 7 + 1`

Spark `WeekDay` -> `EXTACT(DOW FROM ...) + 1`

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on pull request #36963: [SPARK-39564][SS] Expose the information of catalog table to the logical plan in streaming query

HeartSaVioR commented on PR #36963: URL: https://github.com/apache/spark/pull/36963#issuecomment-1167101071 Just rebased to pick up the fixes on GA. No changes during rebase. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng opened a new pull request, #37002: [SPARK-39616][BUILD][ML] Upgrade Breeze to 2.0

zhengruifeng opened a new pull request, #37002: URL: https://github.com/apache/spark/pull/37002 ### What changes were proposed in this pull request? Upgrade Breeze to 2.0 ### Why are the changes needed? since 1.3, breeze has replaced `com.github.fommil.netlib` with `dev.ludovic.netlib` after upgrade to 2.0: 1, breeze should be faster because of this replacement; 2, avoid the licensing issue related to `com.github.fommil.netlib:all` ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? existing UT -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #36976: [SPARK-39577][SQL][DOCS] Add SQL reference for built-in functions

cloud-fan commented on code in PR #36976:

URL: https://github.com/apache/spark/pull/36976#discussion_r907179775

##

docs/sql-ref-functions-builtin.md:

##

@@ -77,3 +77,93 @@ license: |

{% endif %}

{% endfor %}

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-math-funcs-table.html' %}

+### Mathematical Functions

+{% include_relative generated-math-funcs-table.html %}

+ Examples

+{% include_relative generated-math-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-string-funcs-table.html' %}

+### String Functions

+{% include_relative generated-string-funcs-table.html %}

+ Examples

+{% include_relative generated-string-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-conditional-funcs-table.html' %}

+### Conditional Functions

+{% include_relative generated-conditional-funcs-table.html %}

+ Examples

+{% include_relative generated-conditional-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-bitwise-funcs-table.html' %}

+### Bitwise Functions

+{% include_relative generated-bitwise-funcs-table.html %}

+ Examples

+{% include_relative generated-bitwise-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-conversion-funcs-table.html' %}

+### Conversion Functions

+{% include_relative generated-conversion-funcs-table.html %}

+ Examples

+{% include_relative generated-conversion-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-predicate-funcs-table.html' %}

+### Predicate Functions

+{% include_relative generated-predicate-funcs-table.html %}

+ Examples

+{% include_relative generated-predicate-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-generator-funcs-table.html' %}

+### Generator Functions

Review Comment:

This is not scalar function and should be put in a new section. Shall we do

it in a new PR?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] chenzhx commented on a diff in pull request #36663: [SPARK-38899][SQL]DS V2 supports push down datetime functions

chenzhx commented on code in PR #36663:

URL: https://github.com/apache/spark/pull/36663#discussion_r907173999

##

sql/core/src/main/scala/org/apache/spark/sql/catalyst/util/V2ExpressionBuilder.scala:

##

@@ -254,6 +254,55 @@ class V2ExpressionBuilder(e: Expression, isPredicate:

Boolean = false) {

} else {

None

}

+case date: DateAdd =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("DATE_ADD",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case date: DateDiff =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("DATE_DIFF",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case date: TruncDate =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("TRUNC",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case Second(child, _) =>

+ generateExpression(child).map(v => new V2Extract("SECOND", v))

+case Minute(child, _) =>

+ generateExpression(child).map(v => new V2Extract("MINUTE", v))

+case Hour(child, _) =>

+ generateExpression(child).map(v => new V2Extract("HOUR", v))

+case Month(child) =>

+ generateExpression(child).map(v => new V2Extract("MONTH", v))

+case Quarter(child) =>

+ generateExpression(child).map(v => new V2Extract("QUARTER", v))

+case Year(child) =>

+ generateExpression(child).map(v => new V2Extract("YEAR", v))

+// The DAY_OF_WEEK function in Spark returns the day of the week for

date/timestamp.

+// Database dialects should avoid to follow ISO semantics when handling

DAY_OF_WEEK.

Review Comment:

OK

##

sql/core/src/main/scala/org/apache/spark/sql/catalyst/util/V2ExpressionBuilder.scala:

##

@@ -254,6 +254,55 @@ class V2ExpressionBuilder(e: Expression, isPredicate:

Boolean = false) {

} else {

None

}

+case date: DateAdd =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("DATE_ADD",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case date: DateDiff =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("DATE_DIFF",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case date: TruncDate =>

+ val childrenExpressions = date.children.flatMap(generateExpression(_))

+ if (childrenExpressions.length == date.children.length) {

+Some(new GeneralScalarExpression("TRUNC",

childrenExpressions.toArray[V2Expression]))

+ } else {

+None

+ }

+case Second(child, _) =>

+ generateExpression(child).map(v => new V2Extract("SECOND", v))

+case Minute(child, _) =>

+ generateExpression(child).map(v => new V2Extract("MINUTE", v))

+case Hour(child, _) =>

+ generateExpression(child).map(v => new V2Extract("HOUR", v))

+case Month(child) =>

+ generateExpression(child).map(v => new V2Extract("MONTH", v))

+case Quarter(child) =>

+ generateExpression(child).map(v => new V2Extract("QUARTER", v))

+case Year(child) =>

+ generateExpression(child).map(v => new V2Extract("YEAR", v))

+// The DAY_OF_WEEK function in Spark returns the day of the week for

date/timestamp.

+// Database dialects should avoid to follow ISO semantics when handling

DAY_OF_WEEK.

Review Comment:

OK

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #36976: [SPARK-39577][SQL][DOCS] Add SQL reference for built-in functions

beliefer commented on code in PR #36976:

URL: https://github.com/apache/spark/pull/36976#discussion_r907186103

##

docs/sql-ref-functions-builtin.md:

##

@@ -77,3 +77,93 @@ license: |

{% endif %}

{% endfor %}

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-math-funcs-table.html' %}

+### Mathematical Functions

+{% include_relative generated-math-funcs-table.html %}

+ Examples

+{% include_relative generated-math-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-string-funcs-table.html' %}

+### String Functions

+{% include_relative generated-string-funcs-table.html %}

+ Examples

+{% include_relative generated-string-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-conditional-funcs-table.html' %}

+### Conditional Functions

+{% include_relative generated-conditional-funcs-table.html %}

+ Examples

+{% include_relative generated-conditional-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-bitwise-funcs-table.html' %}

+### Bitwise Functions

+{% include_relative generated-bitwise-funcs-table.html %}

+ Examples

+{% include_relative generated-bitwise-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-conversion-funcs-table.html' %}

+### Conversion Functions

+{% include_relative generated-conversion-funcs-table.html %}

+ Examples

+{% include_relative generated-conversion-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-predicate-funcs-table.html' %}

+### Predicate Functions

+{% include_relative generated-predicate-funcs-table.html %}

+ Examples

+{% include_relative generated-predicate-funcs-examples.html %}

+{% break %}

+{% endif %}

+{% endfor %}

+

+{% for static_file in site.static_files %}

+{% if static_file.name == 'generated-generator-funcs-table.html' %}

+### Generator Functions

Review Comment:

OK

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on pull request #36877: [SPARK-39479][SQL] DS V2 supports push down math functions(non ANSI)

beliefer commented on PR #36877: URL: https://github.com/apache/spark/pull/36877#issuecomment-1167157368 ping @cloud-fan -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #36996: [SPARK-34305][SQL] Unify v1 and v2 ALTER TABLE .. SET SERDE tests

AmplabJenkins commented on PR #36996: URL: https://github.com/apache/spark/pull/36996#issuecomment-1167200348 Can one of the admins verify this patch? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #36995: [SPARK-39607][SQL][DSV2] Distribution and ordering support V2 function in writing

AmplabJenkins commented on PR #36995: URL: https://github.com/apache/spark/pull/36995#issuecomment-1167200402 Can one of the admins verify this patch? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #36993: [SPARK-39604][SQL][TESTS] Add UT for DerbyDialect getCatalystType method

AmplabJenkins commented on PR #36993: URL: https://github.com/apache/spark/pull/36993#issuecomment-1167292134 Can one of the admins verify this patch? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] EnricoMi closed pull request #36888: [CI] Check if tests are to be run for build and pyspark matrix jobs

EnricoMi closed pull request #36888: [CI] Check if tests are to be run for build and pyspark matrix jobs URL: https://github.com/apache/spark/pull/36888 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

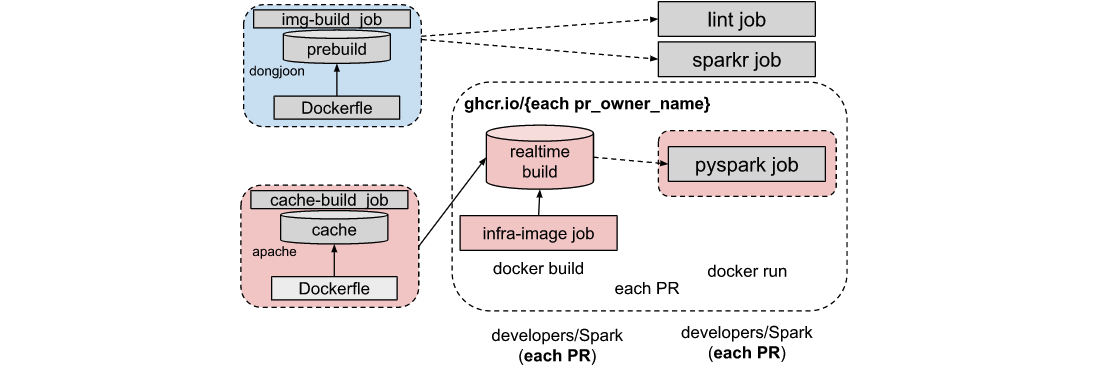

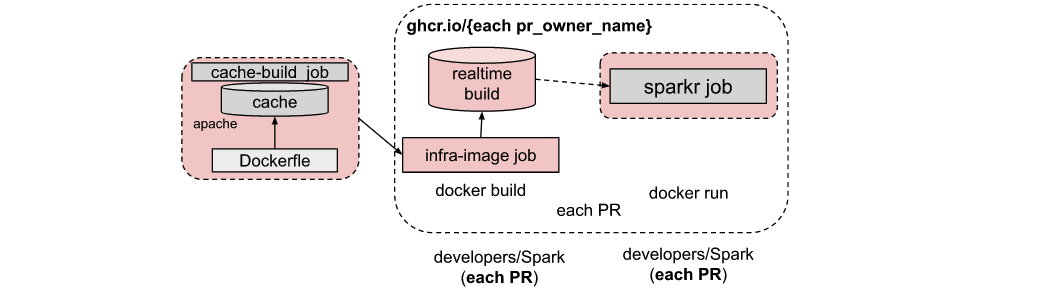

[GitHub] [spark] Yikun opened a new pull request, #37003: [WIP][SPARK-39522][INFRA] Add Apache Spark infra GA image cache

Yikun opened a new pull request, #37003: URL: https://github.com/apache/spark/pull/37003 ### What changes were proposed in this pull request?  This patch added github action yaml to build infra image cache, this image cache would be used by pyspark/sparkr/lint job later. See more in: https://docs.google.com/document/d/1_uiId-U1DODYyYZejAZeyz2OAjxcnA-xfwjynDF6vd0 ### Why are the changes needed? Help to speed up docker infra image build in each PR. ### Does this PR introduce _any_ user-facing change? No, dev only ### How was this patch tested? local test in my repo -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] MaxGekk opened a new pull request, #37004: [WIP][SQL] Add the `REGEXP_COUNT` function

MaxGekk opened a new pull request, #37004: URL: https://github.com/apache/spark/pull/37004 ### What changes were proposed in this pull request? In the PR, I propose to add new expression `RegExpCount` as a runtime replaceable expression. ### Why are the changes needed? To make the migration process from other systems to Spark SQL easier, and achieve feature parity to such systems. For example, ... supports the `REGEXP_COUNT` function, see ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? By running new tests: ``` $ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z regexp-functions.sql" $ build/sbt "sql/testOnly *ExpressionsSchemaSuite" $ build/sbt "sql/test:testOnly org.apache.spark.sql.expressions.ExpressionInfoSuite" ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Yikun opened a new pull request, #37005: [SPARK-39522][INFRA]Uses Docker image cache over a custom image in pyspark job

Yikun opened a new pull request, #37005: URL: https://github.com/apache/spark/pull/37005 ### What changes were proposed in this pull request?  Change pyspark container from original static image to just-in-time build image from cache. ### Why are the changes needed? Help to speed up docker infra image build in each PR. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? CI passed -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Yikun commented on a diff in pull request #37005: [SPARK-39522][INFRA]Uses Docker image cache over a custom image in pyspark job

Yikun commented on code in PR #37005:

URL: https://github.com/apache/spark/pull/37005#discussion_r907371578

##

.github/workflows/build_and_test.yml:

##

@@ -251,13 +256,57 @@ jobs:

name: unit-tests-log-${{ matrix.modules }}-${{ matrix.comment }}-${{

matrix.java }}-${{ matrix.hadoop }}-${{ matrix.hive }}

path: "**/target/unit-tests.log"

- pyspark:

+ infra-image:

needs: precondition

if: fromJson(needs.precondition.outputs.required).pyspark == 'true'

+runs-on: ubuntu-latest

+steps:

+ - name: Login to GitHub Container Registry

+uses: docker/login-action@v2

+with:

+ registry: ghcr.io

+ username: ${{ github.actor }}

+ password: ${{ secrets.GITHUB_TOKEN }}

+ - name: Checkout Spark repository

+uses: actions/checkout@v2

+# In order to fetch changed files

+with:

+ fetch-depth: 0

+ repository: apache/spark

+ ref: ${{ inputs.branch }}

+ - name: Sync the current branch with the latest in Apache Spark

+if: github.repository != 'apache/spark'

+run: |

+ echo "APACHE_SPARK_REF=$(git rev-parse HEAD)" >> $GITHUB_ENV

+ git fetch https://github.com/$GITHUB_REPOSITORY.git

${GITHUB_REF#refs/heads/}

+ git -c user.name='Apache Spark Test Account' -c

user.email='sparktest...@gmail.com' merge --no-commit --progress --squash

FETCH_HEAD

+ git -c user.name='Apache Spark Test Account' -c

user.email='sparktest...@gmail.com' commit -m "Merged commit" --allow-empty

+ -

+name: Set up QEMU

+uses: docker/setup-qemu-action@v1

+ -

+name: Set up Docker Buildx

+uses: docker/setup-buildx-action@v1

+ -

+name: Build and push

+id: docker_build

+uses: docker/build-push-action@v2

+with:

+ context: ./dev/infra/

+ push: true

+ # TODO: Cleanup the latest image

+ tags: ghcr.io/${{ needs.precondition.outputs.user

}}/apache-spark-github-action-image:${{ needs.precondition.outputs.img_tag }}

+ # TODO: Change yikun cache to apache cache

Review Comment:

Change this when https://github.com/apache/spark/pull/37003 ready

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Yikun commented on a diff in pull request #37005: [SPARK-39522][INFRA]Uses Docker image cache over a custom image in pyspark job

Yikun commented on code in PR #37005: URL: https://github.com/apache/spark/pull/37005#discussion_r907374687 ## dev/infra/Dockerfile: ## @@ -0,0 +1,55 @@ +# Review Comment: cleanup(rebase) this when #37003 ready -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan closed pull request #36966: [SPARK-37753] [FOLLOWUP] [SQL] Fix unit tests sometimes failing