[GitHub] spark issue #18904: [SPARK-21624]optimzie RF communicaiton cost

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18904 Thanks @MLnick, I will be glad if you can continue it. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18624: [SPARK-21389][ML][MLLIB] Optimize ALS recommendFo...

Github user mpjlu closed the pull request at: https://github.com/apache/spark/pull/18624 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19516: [SPARK-22277][ML]fix the bug of ChiSqSelector on ...

Github user mpjlu closed the pull request at: https://github.com/apache/spark/pull/19516 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu closed the pull request at: https://github.com/apache/spark/pull/19337 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19536: [SPARK-6685][ML]Use DSYRK to compute AtA in ALS

Github user mpjlu closed the pull request at: https://github.com/apache/spark/pull/19536 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #17739: [SPARK-20443][MLLIB][ML] set ALS blockify size

Github user mpjlu closed the pull request at: https://github.com/apache/spark/pull/17739 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #17739: [SPARK-20443][MLLIB][ML] set ALS blockify size

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/17739 Because I don't have the environment to continue this work, I will close it. Thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18624: [SPARK-21389][ML][MLLIB] Optimize ALS recommendForAll by...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18624 Because I don't have the environment to continue this work, I will close it. Thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19337 Because I don't have the environment to continue this work, I will close it. Thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19536: [SPARK-6685][ML]Use DSYRK to compute AtA in ALS

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19536 Because I don't have the environment to continue this work, I will close it. Thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19516: [SPARK-22277][ML]fix the bug of ChiSqSelector on prepari...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19516 Because I don't have the environment to continue this work, I will close it. Thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18904: [SPARK-21624]optimzie RF communicaiton cost

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18904 Because I don't have the environment to continue this work, I will close it. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18904: [SPARK-21624]optimzie RF communicaiton cost

Github user mpjlu closed the pull request at: https://github.com/apache/spark/pull/18904 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18904: [SPARK-21624]optimzie RF communicaiton cost

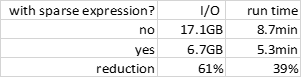

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18904 This is another case. Table 1 shows the improvement of random tree algorithm with sparse expression. We can see that when we use sparse expression, I/O can be reduced by 61% and total run time can be reduced by 39%. The dataset has 100k samples and 10k features in Gaussian distribution and its number of partitions is 300. The max depth of RF is 17 and number of bins is 40.  Only when the network is a bottleneck, this optimization will work better. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18904: [SPARK-21624]optimzie RF communicaiton cost

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18904  This is one of my test results. Now, I am not working on Spark MLLIB, and don't have hardware to do more test. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18624: [SPARK-21389][ML][MLLIB] Optimize ALS recommendForAll by...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18624 retest this please --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18624: [SPARK-21389][ML][MLLIB] Optimize ALS recommendForAll by...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18624 Hi @holdenk, this is the PR we have discussed in Strata conference.  I have thought about the code again, for the performance, we can continue to optimize the code. Because we can merge the block matrices before shuffle. For only code simplification, I didn't get a good method. Any change needs many tests about the performance, and now I am in a DL team, and I will not have the test environment soon. I will be glad if anyone wants to continue this work. Thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18868: [SPARK-21638][ML]Fix RF/GBT Warning message error

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18868 For your case, this doesn't need to fix, this warning is right. Your data size is too large --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18624: [SPARK-21389][ML][MLLIB] Optimize ALS recommendForAll by...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18624 Thanks @WeichenXu123 , I will think about the method to simplify the code. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18624: [SPARK-21389][ML][MLLIB] Optimize ALS recommendFo...

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/18624#discussion_r150269984

--- Diff:

mllib/src/main/scala/org/apache/spark/mllib/recommendation/MatrixFactorizationModel.scala

---

@@ -286,40 +288,119 @@ object MatrixFactorizationModel extends

Loader[MatrixFactorizationModel] {

srcFeatures: RDD[(Int, Array[Double])],

dstFeatures: RDD[(Int, Array[Double])],

num: Int): RDD[(Int, Array[(Int, Double)])] = {

-val srcBlocks = blockify(srcFeatures)

-val dstBlocks = blockify(dstFeatures)

-val ratings = srcBlocks.cartesian(dstBlocks).flatMap { case (srcIter,

dstIter) =>

- val m = srcIter.size

- val n = math.min(dstIter.size, num)

- val output = new Array[(Int, (Int, Double))](m * n)

+val srcBlocks = blockify(rank, srcFeatures).zipWithIndex()

+val dstBlocks = blockify(rank, dstFeatures)

+val ratings = srcBlocks.cartesian(dstBlocks).map {

+ case (((srcIds, srcFactors), index), (dstIds, dstFactors)) =>

+val m = srcIds.length

+val n = dstIds.length

+val dstIdMatrix = new Array[Int](m * num)

+val scoreMatrix = Array.fill[Double](m *

num)(Double.NegativeInfinity)

+val pq = new BoundedPriorityQueue[(Int,

Double)](num)(Ordering.by(_._2))

+

+val ratings = srcFactors.transpose.multiply(dstFactors)

+var i = 0

+var j = 0

+while (i < m) {

+ var k = 0

+ while (k < n) {

+pq += dstIds(k) -> ratings(i, k)

+k += 1

+ }

+ k = 0

+ pq.toArray.sortBy(-_._2).foreach { case (id, score) =>

+dstIdMatrix(j + k) = id

+scoreMatrix(j + k) = score

+k += 1

+ }

+ // pq.size maybe less than num, corner case

+ j += num

+ i += 1

+ pq.clear()

+}

+(index, (srcIds, dstIdMatrix, new DenseMatrix(m, num, scoreMatrix,

true)))

+}

+ratings.aggregateByKey(null: Array[Int], null: Array[Int], null:

DenseMatrix)(

+ (rateSum, rate) => mergeFunc(rateSum, rate, num),

+ (rateSum1, rateSum2) => mergeFunc(rateSum1, rateSum2, num)

+).flatMap { case (index, (srcIds, dstIdMatrix, scoreMatrix)) =>

+ // to avoid corner case that the number of items is less than

recommendation num

+ var col: Int = 0

+ while (col < num && scoreMatrix(0, col) > Double.NegativeInfinity) {

+col += 1

+ }

+ val row = scoreMatrix.numRows

+ val output = new Array[(Int, Array[(Int, Double)])](row)

var i = 0

- val pq = new BoundedPriorityQueue[(Int,

Double)](n)(Ordering.by(_._2))

- srcIter.foreach { case (srcId, srcFactor) =>

-dstIter.foreach { case (dstId, dstFactor) =>

- // We use F2jBLAS which is faster than a call to native BLAS for

vector dot product

- val score = BLAS.f2jBLAS.ddot(rank, srcFactor, 1, dstFactor, 1)

- pq += dstId -> score

+ while (i < row) {

+val factors = new Array[(Int, Double)](col)

+var j = 0

+while (j < col) {

+ factors(j) = (dstIdMatrix(i * num + j), scoreMatrix(i, j))

+ j += 1

}

-pq.foreach { case (dstId, score) =>

- output(i) = (srcId, (dstId, score))

- i += 1

+output(i) = (srcIds(i), factors)

+i += 1

+ }

+ output.toSeq}

+ }

+

+ private def mergeFunc(rateSum: (Array[Int], Array[Int], DenseMatrix),

+rate: (Array[Int], Array[Int], DenseMatrix),

+num: Int): (Array[Int], Array[Int], DenseMatrix) =

{

+if (rateSum._1 == null) {

+ rate

+} else {

+ val row = rateSum._3.numRows

+ var i = 0

+ val tempIdMatrix = new Array[Int](row * num)

+ val tempScoreMatrix = Array.fill[Double](row *

num)(Double.NegativeInfinity)

+ while (i < row) {

+var j = 0

+var sum_index = 0

+var rate_index = 0

+val matrixIndex = i * num

+while (j < num) {

+ if (rate._3(i, rate_index) > rateSum._3(i, sum_index)) {

+tempIdMatrix(matrixIndex + j) = rate._2(matrixIndex +

rate_index)

+tempScoreMatrix(matrixIndex + j) = rate._3(i, rate_index)

+rate_index += 1

+ } else {

+tempIdMatrix(matrixIndex + j) = rateSum._2(matrixIndex +

sum_index)

+tempScoreMatrix(matrixIndex + j) = rateSum._3(i, sum_index)

+sum_index += 1

+ }

+

[GitHub] spark pull request #19516: [SPARK-22277][ML]fix the bug of ChiSqSelector on ...

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/19516#discussion_r146741139

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/feature/ChiSqSelector.scala ---

@@ -291,9 +291,13 @@ final class ChiSqSelectorModel private[ml] (

val featureAttributes: Array[Attribute] = if

(origAttrGroup.attributes.nonEmpty) {

origAttrGroup.attributes.get.zipWithIndex.filter(x =>

selector.contains(x._2)).map(_._1)

} else {

- Array.fill[Attribute](selector.size)(NominalAttribute.defaultAttr)

+ null

--- End diff --

Change the error description is ok, but ChiSqSelector maybe not the only

case causes this error. StringIndexer, QuantileDiscretizer may also causes this

error.

Maybe we need a solution for this problem?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19516: [SPARK-22277][ML]fix the bug of ChiSqSelector on ...

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/19516#discussion_r146537275

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/feature/ChiSqSelector.scala ---

@@ -291,9 +291,13 @@ final class ChiSqSelectorModel private[ml] (

val featureAttributes: Array[Attribute] = if

(origAttrGroup.attributes.nonEmpty) {

origAttrGroup.attributes.get.zipWithIndex.filter(x =>

selector.contains(x._2)).map(_._1)

} else {

- Array.fill[Attribute](selector.size)(NominalAttribute.defaultAttr)

+ null

--- End diff --

The problem is should we allow add Nominal and not set value or numvalues.

If we allow this, should we change the code of getCategoricalFeatures?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19516: [SPARK-22277][ML]fix the bug of ChiSqSelector on ...

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/19516#discussion_r146528222

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/feature/ChiSqSelector.scala ---

@@ -291,9 +291,13 @@ final class ChiSqSelectorModel private[ml] (

val featureAttributes: Array[Attribute] = if

(origAttrGroup.attributes.nonEmpty) {

origAttrGroup.attributes.get.zipWithIndex.filter(x =>

selector.contains(x._2)).map(_._1)

} else {

- Array.fill[Attribute](selector.size)(NominalAttribute.defaultAttr)

+ null

--- End diff --

For this problem, either here or the following code should be changed,

right?

" case nomAttr: NominalAttribute =>

nomAttr.getNumValues match {

case Some(numValues: Int) => Iterator(idx -> numValues)

case None => throw new IllegalArgumentException(s"Feature

$idx is marked as" +

" Nominal (categorical), but it does not have the number

of values specified.")

}"

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19516: [SPARK-22277][ML]fix the bug of ChiSqSelector on ...

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/19516#discussion_r146527616

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/feature/ChiSqSelector.scala ---

@@ -291,9 +291,13 @@ final class ChiSqSelectorModel private[ml] (

val featureAttributes: Array[Attribute] = if

(origAttrGroup.attributes.nonEmpty) {

origAttrGroup.attributes.get.zipWithIndex.filter(x =>

selector.contains(x._2)).map(_._1)

} else {

- Array.fill[Attribute](selector.size)(NominalAttribute.defaultAttr)

+ null

--- End diff --

This function is in ChiSqSelectorModel, in the model you only have

"selectedFeatures: Array[Int]", seems you cannot fill values and or numValues

only with the model information.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19337 Any comments for this? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19536: [SPARK-6685][ML]Use DSYRK to compute AtA in ALS

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/19536#discussion_r146511769

--- Diff: project/MimaExcludes.scala ---

@@ -1043,6 +1043,9 @@ object MimaExcludes {

// [SPARK-21680][ML][MLLIB]optimzie Vector coompress

ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.mllib.linalg.Vector.toSparseWithSize"),

ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.ml.linalg.Vector.toSparseWithSize")

+) ++ Seq(

+ // [SPARK-6685][ML]Use DSYRK to compute AtA in ALS

+

ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.ml.recommendation.ALS.train")

--- End diff --

I am ok to remove this, and use a loose threshold (e.g. 100), which is

helpful for most cases. How about it?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19536: [SPARK-6685][ML]Use DSYRK to compute AtA in ALS

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19536 cc @srowen @mengxr @yanboliang The performance data is updated, thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19536: [SPARK-6685][ML]Use DSYRK to compute AtA in ALS

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19536  100k*100k, sparsity 0.05 | old | old | New -- | -- | -- | -- rank | f2j | mkl | mkl 20 | 4 | 9 | 4 50 | 13 | 12 | 13 100 | 40 | 60 | 30 200 | 156 | 90 | 66 500 | 900 | 720 | 270 This is the performance data of this PR. The date size is 100,000*100,000, the data sparsity is 0.05. The test environment is: 3 workers: 35 cores/worker, 210G memory/worker, 1 executor/worker. The native BLAS is Intel MKL --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19536: [SPARK-6685][ML]Use DSYRK to compute AtA in ALS

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/19536#discussion_r145735791

--- Diff: mllib/src/main/scala/org/apache/spark/ml/recommendation/ALS.scala

---

@@ -589,6 +602,9 @@ class ALS(@Since("1.4.0") override val uid: String)

extends Estimator[ALSModel]

@Since("2.2.0")

def setColdStartStrategy(value: String): this.type =

set(coldStartStrategy, value)

+ @Since("2.3.0")

+ def setThreshold(value: Int): this.type = set(threshold, value)

--- End diff --

Yes, my test results is better than the previous results, especially for

native BLAS. I will update my test results here soon, and I will change this

set.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19536: [SPARK-6685][ML]Use DSYRK to compute AtA in ALS

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19536 Hi @yanboliang , I reopen this PR per your suggestion, thanks. I have tested the code, the performance result is matched with @hqzizania 's results. Thanks @hqzizania . --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19536: [SPARK-6685][ML]Use DSYRK to compute AtA in ALS

GitHub user mpjlu opened a pull request: https://github.com/apache/spark/pull/19536 [SPARK-6685][ML]Use DSYRK to compute AtA in ALS ## What changes were proposed in this pull request? This is a reopen of PR: https://github.com/apache/spark/pull/13891 with mima fix. Please reference PR13891 for the performance result and discussion. ## How was this patch tested? (Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests) (If this patch involves UI changes, please attach a screenshot; otherwise, remove this) Please review http://spark.apache.org/contributing.html before opening a pull request. You can merge this pull request into a Git repository by running: $ git pull https://github.com/mpjlu/spark 6658 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/19536.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #19536 commit 8fb4a82be98588795454127f4281363d105146f0 Author: hqzizania <qian.hu...@intel.com> Date: 2016-06-24T06:26:31Z add dsyrk to ALS commit 7e3d238c62269f923832e7cba237f750082450a9 Author: hqzizania <qian.hu...@intel.com> Date: 2016-06-24T16:28:57Z implicitprefs ut fails fix commit 3607bdcd22cf03c068e777a1927ee3d5da49045a Author: hqzizania <qian.hu...@intel.com> Date: 2016-06-28T13:51:55Z use "while" loop instead of "for" set stack size > 128 and comments added commit 56194eb8dc3ea8c233dbb362eeb83af5091abcba Author: hqzizania <qian.hu...@intel.com> Date: 2016-06-29T10:57:57Z add unit test for dostack ALS commit dc4f4badba26635aa95c6ade5a589d4bd50ae886 Author: hqzizania <qian.hu...@intel.com> Date: 2016-10-21T05:34:51Z reset threshold values for doStack and remove UT commit d29fd67a2a24675b7be2f7f51ba170fda11a85d7 Author: hqzizania <hqziza...@gmail.com> Date: 2016-10-24T01:58:18Z add threshold param to ALS commit 294164d839b0ce191fee341b0eb82b81d506d8c8 Author: hqzizania <hqziza...@gmail.com> Date: 2016-10-24T02:47:50Z nit fix commit 513e7915ecb807bc04ed8a17fdaa121e9ac578b5 Author: hqzizania <hqziza...@gmail.com> Date: 2016-10-24T02:56:39Z nit commit f56b586092a24c1010326ed7f94b6b1eb427d378 Author: hqzizania <hqziza...@gmail.com> Date: 2016-10-24T04:41:14Z Merge remote-tracking branch 'origin/master' into ALSdsyrk commit 1081e64c3fbd31c3d35b987b3200eae8c8c688e2 Author: hqzizania <hqziza...@gmail.com> Date: 2016-10-24T04:44:52Z mima fix commit a6b5a16cd78e4efe99fda40f92592c9712b04146 Author: hqzizania <hqziza...@gmail.com> Date: 2016-10-24T05:04:39Z oops commit 20774575ad51d2a10e47c8f8ee7581a1519ace6b Author: hqzizania <hqziza...@gmail.com> Date: 2016-10-25T17:28:45Z solve mima failure commit 061df755c80bbb050dcc85605f382b11e974f872 Author: Peng Meng <peng.m...@intel.com> Date: 2017-10-19T11:22:21Z reopen SPARK-6685 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19516: [SPARK-22277][ML]fix the bug of ChiSqSelector on ...

GitHub user mpjlu opened a pull request:

https://github.com/apache/spark/pull/19516

[SPARK-22277][ML]fix the bug of ChiSqSelector on preparing the output column

## What changes were proposed in this pull request?

To prepare the output columns when use ChiSqSelector, the master method

adds some additional feature attribute, this is not necessary, and sometimes

cause error.

`val featureAttributes: Array[Attribute] = if

(origAttrGroup.attributes.nonEmpty) {

origAttrGroup.attributes.get.zipWithIndex.filter(x =>

selector.contains(x._2)).map(_._1)

} else {

Array.fill[Attribute](selector.size)(NominalAttribute.defaultAttr)

}

val newAttributeGroup = new AttributeGroup($(outputCol),

featureAttributes)`

## How was this patch tested?

The existing UT.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/mpjlu/spark testDFdirect

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/19516.patch

To close this pull request, make a commit to your master/trunk branch

with (at least) the following in the commit message:

This closes #19516

commit 3128133d76348666df82bf43aa42cd9ebae70faf

Author: Peng Meng <peng.m...@intel.com>

Date: 2017-10-17T13:04:08Z

fix the bug of ChiSqSelector on preparing the output column

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19337 For the comments about change the name of epsilon and add setter in localLADModel, we have agreed not to change it now after some offline discussion. Because epsilon doesn't control model convergence directly, and some other LDA implementations like Vowpal Vabbit also uses this name. Because there are many parameters in LDA, epsilon is just one of them, now there is no setter for any of them. If we need to add setter of them, we maybe add them together in another PR. Thanks. @hhbyyh @jkbradley --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on a diff in the pull request: https://github.com/apache/spark/pull/19337#discussion_r144060858 --- Diff: mllib/src/main/scala/org/apache/spark/ml/clustering/LDA.scala --- @@ -224,6 +224,24 @@ private[clustering] trait LDAParams extends Params with HasFeaturesCol with HasM /** * For Online optimizer only: [[optimizer]] = "online". * + * A (positive) learning parameter that controls the convergence of variational inference. + * Smaller value will lead to more accuracy model and longer training time. --- End diff -- Thanks, I will update the doc. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/19337#discussion_r143723408

--- Diff:

mllib/src/main/scala/org/apache/spark/mllib/clustering/LDAOptimizer.scala ---

@@ -573,7 +584,8 @@ private[clustering] object OnlineLDAOptimizer {

expElogbeta: BDM[Double],

alpha: breeze.linalg.Vector[Double],

gammaShape: Double,

- k: Int): (BDV[Double], BDM[Double], List[Int]) = {

+ k: Int,

+ epsilon: Double = 1e-3): (BDV[Double], BDM[Double], List[Int]) = {

--- End diff --

Maybe not need to set, we can add epsilon as a parameter in the model to

save it. Because most of training parameters are not in the model, so we don't

add epsilon to the model here. Thanks.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on a diff in the pull request: https://github.com/apache/spark/pull/19337#discussion_r143705102 --- Diff: mllib/src/test/scala/org/apache/spark/ml/clustering/LDASuite.scala --- @@ -119,6 +121,8 @@ class LDASuite extends SparkFunSuite with MLlibTestSparkContext with DefaultRead assert(lda.getLearningDecay === 0.53) lda.setLearningOffset(1027) assert(lda.getLearningOffset === 1027) +lda.setEpsilon(1e-3) --- End diff -- Thanks @mgaido91 , I will update the code. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19337 Thanks, @hhbyyh. I will create a JIRA for python API --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/19337#discussion_r141272887

--- Diff: mllib/src/main/scala/org/apache/spark/ml/clustering/LDA.scala ---

@@ -224,6 +224,20 @@ private[clustering] trait LDAParams extends Params

with HasFeaturesCol with HasM

/**

* For Online optimizer only: [[optimizer]] = "online".

*

+ * @group expertParam

+ */

+ @Since("2.3.0")

+ final val epsilon = new DoubleParam(this, "epsilon", "(For online

optimizer)" +

+" A (positive) learning parameter that controls the convergence of

variational inference.",

+ParamValidators.gt(0))

--- End diff --

Yes, use 0.0 is good. Because other Double in this class is using 0, to

keep the same code style, I also use 0 here.

It is ok to change all 0 to 0.0.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19337 Thanks @mgaido91 , I will update the ML api, maybe also python and java API. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19337 For LDA, the implementation is in mllib, ml calls mllib. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19337 Ok, thanks. we don't need to change the code here. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19337 Sorry, I got wrong. So you think assert is better here? now we use require. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19337 Because epsilon is Double, negative value should not cause the code run into dead loop. All other setting in LDA using require for check or no check. Should we use assert only for this change? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19337 OK, I will change it to assert. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/19337 Not check is also ok, user should know epsilon > 0 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/19337#discussion_r140727204

--- Diff:

mllib/src/main/scala/org/apache/spark/mllib/clustering/LDAOptimizer.scala ---

@@ -573,7 +584,8 @@ private[clustering] object OnlineLDAOptimizer {

expElogbeta: BDM[Double],

alpha: breeze.linalg.Vector[Double],

gammaShape: Double,

- k: Int): (BDV[Double], BDM[Double], List[Int]) = {

+ k: Int,

+ epsilon: Double = 1e-3): (BDV[Double], BDM[Double], List[Int]) = {

--- End diff --

This is for some other code, like in LDAModel, which calls this function

and does not need to set epsilon.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #19337: [SPARK-22114][ML][MLLIB]add epsilon for LDA

GitHub user mpjlu opened a pull request: https://github.com/apache/spark/pull/19337 [SPARK-22114][ML][MLLIB]add epsilon for LDA ## What changes were proposed in this pull request? The current convergence condition of OnlineLDAOptimizer is: while(meanGammaChange > 1e-3) The condition is critical for the performance and accuracy of LDA. We should keep this configurable, like it is in Vowpal Vabbit: https://github.com/JohnLangford/vowpal_wabbit/blob/430f69453bc4876a39351fba1f18771bdbdb7122/vowpalwabbit/lda_core.cc :638 ## How was this patch tested? The existing UT You can merge this pull request into a Git repository by running: $ git pull https://github.com/mpjlu/spark setLDA Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/19337.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #19337 commit a6c3c79efe4d77ef4beba3f431fd9c2527735875 Author: Peng Meng <peng.m...@intel.com> Date: 2017-09-25T09:12:47Z add epsilon for LDA --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18748: [SPARK-20679][ML] Support recommending for a subset of u...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18748 LGTM --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18624: [SPARK-21389][ML][MLLIB] Optimize ALS recommendForAll by...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18624 Thanks @MLnick , I think the ML ALS suite is ok, just MLLIB ALS suite is too simple. One possible enhancement is to add the same test cases as ML ALS suite. How do you think about it? --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18748: [SPARK-20679][ML] Support recommending for a subset of u...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18748 Thanks @MLnick . I have double checked my test. Since there is no recommendForUserSubset , my previous test is MLLIB MatrixFactorizationModel::predict(RDD(Int, Int)), which predicts the rating of many users for many products. The performance of this function is low comparing with recommendForAll. This PR calls recommendForAll with a subset of the users, I agree with your test results. Thanks. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18899: [SPARK-21680][ML][MLLIB]optimize Vector compress

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18899 I have tested the performance of toSparse and toSparseWithSize separately. There is about 35% performance improvement for this change. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18904: [SPARK-21624]optimzie RF communicaiton cost

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18904 retest this please --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18899 Thanks @sethah @srowen . The comment is added. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18899 I did not only test this PR. Only work for PR 18904 and find this performance difference. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18899 Hi @srowen; how about using our first version? though duplicate some code, but change is small. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18899 For PR-18904, before this change, one iteration is about 58s, after this change, one iteration is about:40s --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18904: [SPARK-21624]optimzie RF communicaiton cost

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18904 A gentle ping: @sethah @jkbradley --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18899 retest this please --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18899 retest this please --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18899 Hi @sethah , the unit test is added. Thanks --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/18899#discussion_r132610165

--- Diff: project/MimaExcludes.scala ---

@@ -1012,6 +1012,10 @@ object MimaExcludes {

ProblemFilters.exclude[IncompatibleResultTypeProblem]("org.apache.spark.ml.classification.RandomForestClassificationModel.setFeatureSubsetStrategy"),

ProblemFilters.exclude[IncompatibleResultTypeProblem]("org.apache.spark.ml.regression.RandomForestRegressionModel.numTrees"),

ProblemFilters.exclude[IncompatibleResultTypeProblem]("org.apache.spark.ml.regression.RandomForestRegressionModel.setFeatureSubsetStrategy")

+) ++ Seq(

+ // [SPARK-21680][ML][MLLIB]optimzie Vector coompress

--- End diff --

The error message is"method toSparse(nnz: Int) in trait is present only in

current version"

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/18899#discussion_r132610049

--- Diff:

mllib-local/src/main/scala/org/apache/spark/ml/linalg/Vectors.scala ---

@@ -635,8 +642,9 @@ class SparseVector @Since("2.0.0") (

nnz

}

- override def toSparse: SparseVector = {

-val nnz = numNonzeros

+ override def toSparse: SparseVector = toSparse(numNonzeros)

--- End diff --

If define

`def toSparse: SparseVector = toSparse(numNonzeros)`

in the superclass, when call dv.toSparse (there are this kinds of call in

the code), there will be error message:

Both toSparse in the DenseVector of type (nnz:Int)

org.apache.spark.ml.linalg.SparseVector and toSparse in trait Vector of type

=>org.apache.spark.ml.linalg.SparseVector match .

So we should change the name of toSparse(nnz: Int), maybe

toSparseWithSize(nnz: Int).

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18868: [SPARK-21638][ML]Fix RF/GBT Warning message error

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18868 Yes, that is right. Thanks. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18899 Thanks @srowen. I will revise the code per your suggestion. when I wrote the code, I just concerned user call toSparse(size) and give a very small size. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

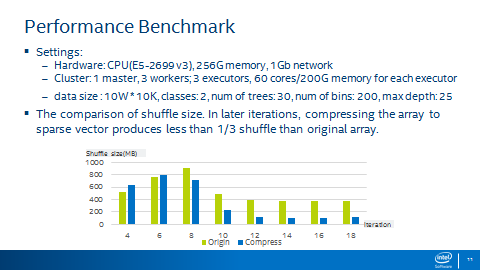

[GitHub] spark pull request #18904: [SPARK-21624]optimzie RF communicaiton cost

GitHub user mpjlu opened a pull request: https://github.com/apache/spark/pull/18904 [SPARK-21624]optimzie RF communicaiton cost ## What changes were proposed in this pull request? The implementation of RF is bound by either the cost of statistics computation on workers or by communicating the sufficient statistics. This PR will focus on optimizing communication cost. The statistics are stored in allStats: ` private var allStats: Array[Double] = new Array[Double](allStatsSize)` In real use case, the size of allStats is very large, and it can be very sparse, especially on the nodes that near the leave of the tree. It is better to change allStats from Array to SparseVector before shufffle. My tests show the communication is down by about **50% to 90%** with this PR. Test cases: 1000 features * 200Bins* 2 label, 1000 features * 50Bins * 2label 1 features * 200Bins * 2 label featureSubsetStrategy: auto, 0.2, 0.1 All test cases with the config: spark.shuffle.compress=true. ## How was this patch tested? The exist UT You can merge this pull request into a Git repository by running: $ git pull https://github.com/mpjlu/spark optRFcomm Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18904.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18904 commit 35d1f244f918bd8ea7fe7fdf10796a64e7a62fc9 Author: Peng Meng <peng.m...@intel.com> Date: 2017-08-10T07:19:59Z optimzie RF communicaiton cost --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18899 Yes, I just concern if add toSparse(size) we should check the size in the code, there will be no performance gain. If we don't need to check the "size" (comparing size with numNonZero) in the code, add toSparse(size) is ok. Thanks. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18899: [SPARK-21680][ML][MLLIB]optimzie Vector coompress

GitHub user mpjlu opened a pull request: https://github.com/apache/spark/pull/18899 [SPARK-21680][ML][MLLIB]optimzie Vector coompress ## What changes were proposed in this pull request? When use Vector.compressed to change a Vector to SparseVector, the performance is very low comparing with Vector.toSparse. This is because you have to scan the value three times using Vector.compressed, but you just need two times when use Vector.toSparse. When the length of the vector is large, there is significant performance difference between this two method. ## How was this patch tested? The existing UT You can merge this pull request into a Git repository by running: $ git pull https://github.com/mpjlu/spark optVectorCompress Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18899.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18899 commit 5dc5c89242a0c2a5ac6a693c3703eef8ee160616 Author: Peng Meng <peng.m...@intel.com> Date: 2017-08-10T01:59:17Z optimzie Vector coompress --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18868: [SPARK-21638][ML]Fix RF/GBT Warning message error

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18868 retest this please --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18868: [SPARK-21638][ML]Fix RF/GBT Warning message error

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/18868#discussion_r131605981

--- Diff:

mllib/src/main/scala/org/apache/spark/ml/tree/impl/RandomForest.scala ---

@@ -1107,9 +1108,11 @@ private[spark] object RandomForest extends Logging {

mutableTreeToNodeToIndexInfo

.getOrElseUpdate(treeIndex, new mutable.HashMap[Int,

NodeIndexInfo]())(node.id)

= new NodeIndexInfo(numNodesInGroup, featureSubset)

+numNodesInGroup += 1

+memUsage += nodeMemUsage

+ } else {

+flag = false

--- End diff --

We cannot clear stack here, because the nodes in the stack are used for all

iteration.

Native scala doesn't support break, we have to import other package to use

break, seems not necessary.

We cannot use nodeStack.pop at the top, because we don't know whether it

can be grouped here.

How about change "flag" to "groupDone" means Group is done, don't need to

loop again.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18832: [SPARK-21623][ML]fix RF doc

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18832 Thanks @srowen , I revised the comments per Seth's suggestion: "Parent stats need to be explicitly tracked in the DTStatsAggregator because the parent [[Node]] object does not have ImpurityStats on the first iteration." --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18868: [SPARK-21638][ML]Fix RF/GBT Warning message error

GitHub user mpjlu opened a pull request: https://github.com/apache/spark/pull/18868 [SPARK-21638][ML]Fix RF/GBT Warning message error ## What changes were proposed in this pull request? When train RF model, there are many warning messages like this: > WARN RandomForest: Tree learning is using approximately 268492800 bytes per iteration, which exceeds requested limit maxMemoryUsage=268435456. This allows splitting 2622 nodes in this iteration. This warning message is unnecessary and the data is not accurate. Actually, if all the nodes cannot split in one iteration, it will show this warning. For most of the case, all the nodes cannot split just in one iteration, so for most of the case, it will show this warning for each iteration. ## How was this patch tested? The existing UT You can merge this pull request into a Git repository by running: $ git pull https://github.com/mpjlu/spark fixRFwarning Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18868.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18868 commit f15f35e53115babd48357ec256f81e92e3480a6e Author: Peng Meng <peng.m...@intel.com> Date: 2017-08-07T08:05:24Z fix RF warning --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18832: [SPARK-21623][ML]fix RF doc

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18832 Thanks @sethah . I strongly think we should update the commend or just delete the comment as the current PR. Another reason is: there are three kinds of feature: categorical, ordered categorical, and continuous Only the first iteration of categorical feature need parentStats, the other two don't need. The comment seems all first iteration need parentStats. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18832: [SPARK-21623][ML]fix RF doc

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18832 I agree with you. Do you think we should update the comment to help others understand the code. Since parantStats is updated and used in each iteration. Thanks. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18832: [SPARK-21623][ML]fix RF doc

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18832 I know your point. I am confusing the code doesn't work that way. The code update parentStats for each iteration. Actually, we only need to update parentStats for the first Iteration. So we should update the code? Thanks. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18832: [SPARK-21623][ML]fix RF doc

Github user mpjlu commented on the issue:

https://github.com/apache/spark/pull/18832

parentStats is used in this

code:ãbinAggregates.getParentImpurityCalculator(), this is used in all

iteration.

So that comment seems very misleading.

`} else if (binAggregates.metadata.isUnordered(featureIndex)) {

// Unordered categorical feature

val leftChildOffset =

binAggregates.getFeatureOffset(featureIndexIdx)

val (bestFeatureSplitIndex, bestFeatureGainStats) =

Range(0, numSplits).map { splitIndex =>

val leftChildStats =

binAggregates.getImpurityCalculator(leftChildOffset, splitIndex)

val rightChildStats =

binAggregates.getParentImpurityCalculator()

.subtract(leftChildStats)

gainAndImpurityStats =

calculateImpurityStats(gainAndImpurityStats,

leftChildStats, rightChildStats, binAggregates.metadata)

(splitIndex, gainAndImpurityStats)

}.maxBy(_._2.gain)

(splits(featureIndex)(bestFeatureSplitIndex),

bestFeatureGainStats)

}`

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18832: [SPARK-21623][ML]fix RF doc

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18832 node.stats is ImpurityStats, and parentStats is Array[Double], there are different. Maybe this comment should be used on node.stats, but not on parentStats. Is my understanding wrong? --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18832: [SPARK-21623][ML]fix RF doc

GitHub user mpjlu opened a pull request: https://github.com/apache/spark/pull/18832 [SPARK-21623][ML]fix RF doc ## What changes were proposed in this pull request? comments of parentStats in RF are wrong. parentStats is not only used for the first iteration, it is used with all the iteration for unordered features. ## How was this patch tested? You can merge this pull request into a Git repository by running: $ git pull https://github.com/mpjlu/spark fixRFDoc Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/18832.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #18832 commit 83c75043fee2a20f1eb6298bd2dab1259409c3ef Author: Peng Meng <peng.m...@intel.com> Date: 2017-08-03T08:26:51Z fix RF doc --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18748: [SPARK-20679][ML] Support recommending for a subset of u...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18748 Thanks. This is my test setting: 3 workersï¼ each: 40 cores, 196G memory, 1 executor. Data Size: user 480,000, item 17,000 --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18748: [SPARK-20679][ML] Support recommending for a subset of u...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18748 Did you test the performance of this, I tested the performance of MLLIB recommendForUserSubset some days ago, the performance is not good. Suppose the time of recommendForAll is 35s, recommend for 1/3 Users use this may need 90s. Maybe it is faster to use recommendForAll then select 1/3 users. But if recommend tens or hundreds of users, this is faster than recommendForAll. So should we add come commends in the code about when it is better to use recommendForUserSubset. Thanks. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18624: [SPARK-21389][ML][MLLIB] Optimize ALS recommendForAll by...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18624 Hi @srowen @MLnick @jkbradley @mengxr @yanboliang Is this change acceptable? if it is acceptable, I will update ALS ML code following this method. Also update Test Suite, which are too simple, can not detect ALS errors. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18620: [SPARK-21401][ML][MLLIB] add poll function for BoundedPr...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18620 Thanks @srowen , my test also said pq.poll is a little faster on some cases. One possible benefit here is if we provide pq.poll, user's first choice may use pq.poll, not pq.toArray.sorted, which may causes performance reduction. As I have encounter for https://github.com/apache/spark/pull/18624 --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18620: [SPARK-21401][ML][MLLIB] add poll function for BoundedPr...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18620 I am ok to close this. Thanks @MLnick --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18620: [SPARK-21401][ML][MLLIB] add poll function for BoundedPr...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18620 My micro benchmark (write a program only test pq.toArray.sorted and pq.Array.sortBy and pq.poll), not find significant performance difference. Only in the Spark job, there is big difference. Confused. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18620: [SPARK-21401][ML][MLLIB] add poll function for BoundedPr...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18620 I also very confused about this. You can change https://github.com/apache/spark/pull/18624 to sorted and test. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18620: [SPARK-21401][ML][MLLIB] add poll function for BoundedPr...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18620 Hi @MLnick , @srowen . My test showing: pq.poll is not significantly faster than pq.toArray.sortBy, but significantly faster than pq.toArray.sorted. Seems not each pq.toArray.sorted (such as used in topByKey) can be replaced by pq.toArray.sortBy, so use pq.poll to replace pq.toArray.sorted will benefit. You can compare the performance of pq.sorted, pq.sortBy, and pq.poll using: https://github.com/apache/spark/pull/18624 The performance of pq.toArray.sortBy is about the same as pq.poll, and about 20% improvement comparing pq.toArray.sorted. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18620: [SPARK-21401][ML][MLLIB] add poll function for BoundedPr...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18620 Hi @MLnick , pq.toArray.sorted also used in other places, like word2vector and LDA, how about waiting for my other benchmark results. Then decide to close it or not. Thanks. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18620: [SPARK-21401][ML][MLLIB] add poll function for BoundedPr...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18620 I have tested much about poll and toArray.sorted. If the queue is much ordered (suppose offer 2000 times for queue size 20). Use pq.toArray.sorted is faster. If the queue is much disordered (suppose offer 100 times for queue size 20), Use pq.poll is much faster. --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18620: [SPARK-21401][ML][MLLIB] add poll function for BoundedPr...

Github user mpjlu commented on the issue: https://github.com/apache/spark/pull/18620 Keep it or close it, both is ok for me. We have much discussion on: https://issues.apache.org/jira/browse/SPARK-21401 --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18624: [SPARK-21389][ML][MLLIB] Optimize ALS recommendFo...

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/18624#discussion_r127669102

--- Diff:

mllib/src/main/scala/org/apache/spark/mllib/recommendation/MatrixFactorizationModel.scala

---

@@ -286,40 +288,120 @@ object MatrixFactorizationModel extends

Loader[MatrixFactorizationModel] {

srcFeatures: RDD[(Int, Array[Double])],

dstFeatures: RDD[(Int, Array[Double])],

num: Int): RDD[(Int, Array[(Int, Double)])] = {

-val srcBlocks = blockify(srcFeatures)

-val dstBlocks = blockify(dstFeatures)

-val ratings = srcBlocks.cartesian(dstBlocks).flatMap { case (srcIter,

dstIter) =>

- val m = srcIter.size

- val n = math.min(dstIter.size, num)

- val output = new Array[(Int, (Int, Double))](m * n)

+val srcBlocks = blockify(rank, srcFeatures).zipWithIndex()

+val dstBlocks = blockify(rank, dstFeatures)

+val ratings = srcBlocks.cartesian(dstBlocks).map {

+ case (((srcIds, srcFactors), index), (dstIds, dstFactors)) =>

+val m = srcIds.length

+val n = dstIds.length

+val dstIdMatrix = new Array[Int](m * num)

+val scoreMatrix = Array.fill[Double](m *

num)(Double.NegativeInfinity)

+val pq = new BoundedPriorityQueue[(Int,

Double)](num)(Ordering.by(_._2))

+

+val ratings = srcFactors.transpose.multiply(dstFactors)

+var i = 0

+var j = 0

+while (i < m) {

+ var k = 0

+ while (k < n) {

+pq += dstIds(k) -> ratings(i, k)

+k += 1

+ }

+ var size = pq.size

+ while (size > 0) {

+size -= 1

+val factor = pq.poll()

--- End diff --

Hi @MLnick , thanks for your review.

My original test for sorted is using: pq.toArray.sorted(Ordering.By[(Int,

Double), Double](-_._2)),

because pq.toArray.sorted(-_._2) build error. Maybe there is

boxing/unboxing, the performance is very bad.

Now, I use pq.toArray.sortBy(-_._2), the performance is good than poll.

this 25s vs poll 26s.

Thanks.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #18624: [SPARK-21389][ML][MLLIB] Optimize ALS recommendFo...

Github user mpjlu commented on a diff in the pull request:

https://github.com/apache/spark/pull/18624#discussion_r127641933

--- Diff:

mllib/src/main/scala/org/apache/spark/mllib/recommendation/MatrixFactorizationModel.scala

---

@@ -286,40 +288,120 @@ object MatrixFactorizationModel extends

Loader[MatrixFactorizationModel] {

srcFeatures: RDD[(Int, Array[Double])],

dstFeatures: RDD[(Int, Array[Double])],

num: Int): RDD[(Int, Array[(Int, Double)])] = {

-val srcBlocks = blockify(srcFeatures)

-val dstBlocks = blockify(dstFeatures)

-val ratings = srcBlocks.cartesian(dstBlocks).flatMap { case (srcIter,

dstIter) =>

- val m = srcIter.size

- val n = math.min(dstIter.size, num)

- val output = new Array[(Int, (Int, Double))](m * n)

+val srcBlocks = blockify(rank, srcFeatures).zipWithIndex()

+val dstBlocks = blockify(rank, dstFeatures)

+val ratings = srcBlocks.cartesian(dstBlocks).map {

+ case (((srcIds, srcFactors), index), (dstIds, dstFactors)) =>

+val m = srcIds.length

+val n = dstIds.length

+val dstIdMatrix = new Array[Int](m * num)

+val scoreMatrix = Array.fill[Double](m *

num)(Double.NegativeInfinity)

+val pq = new BoundedPriorityQueue[(Int,

Double)](num)(Ordering.by(_._2))

+

+val ratings = srcFactors.transpose.multiply(dstFactors)

+var i = 0

+var j = 0

+while (i < m) {

+ var k = 0

+ while (k < n) {

+pq += dstIds(k) -> ratings(i, k)

+k += 1

+ }

+ var size = pq.size

+ while (size > 0) {

+size -= 1

+val factor = pq.poll()

--- End diff --

When num = 20, if use sorted here, the prediction time is about 31s, if

use poll, the prediction time is about 26s. I think this difference is large. I

have tested many times. The result is about the same.

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastruct...@apache.org or file a JIRA ticket

with INFRA.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #18624: [SPARK-21389][ML][MLLIB] Optimize ALS recommendForAll by...