[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r429196227

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +202,22 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

Review comment:

@Ngone51 I have refactored the code as suggested. Kindly review it

again. Thanks.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r428727134

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +202,22 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

Review comment:

It's in the case "launch" as of now. I will move it to a global place

and refactor the code. Thanks for your suggestions.


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r428699825

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +202,22 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

Review comment:

I agree that we can change the delay to 5 seconds to keep it consistent

with the current logic. My question is: should we also add the following block

in `case "kill" =>`?

`forwardMessageThread.scheduleAtFixedRate(() =>

Utils.tryLogNonFatalError {

MonitorDriverStatus()

}, 0, REPORT_DRIVER_STATUS_INTERVAL, TimeUnit.MILLISECONDS)

`


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r428699825

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +202,22 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

Review comment:

I agree that we can change the delay to 5 seconds to keep it consistent

with the current logic. My question is: should we also add the following block

in `case "kill" =>`, or should we monitor with a single message instead of

scheduled messages?

`forwardMessageThread.scheduleAtFixedRate(() =>

Utils.tryLogNonFatalError {

MonitorDriverStatus()

}, 0, REPORT_DRIVER_STATUS_INTERVAL, TimeUnit.MILLISECONDS)

`
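
For illustration only (not code from this PR): a minimal, self-contained sketch of the fixed-rate scheduling pattern that the block above relies on, using the JDK's `ScheduledExecutorService`. The object name `ScheduledPollSketch`, the `PollIntervalMs` value, and the counter standing in for a status request are all assumptions made for this example; the PR itself uses `forwardMessageThread` and `REPORT_DRIVER_STATUS_INTERVAL`.

```scala
import java.util.concurrent.{CountDownLatch, Executors, TimeUnit}
import java.util.concurrent.atomic.AtomicInteger

object ScheduledPollSketch {
  // Hypothetical interval; stands in for REPORT_DRIVER_STATUS_INTERVAL.
  val PollIntervalMs = 50L

  /** Fires a stand-in "poll" at a fixed rate until it has run `times` times. */
  def runPolls(times: Int): Int = {
    val scheduler = Executors.newSingleThreadScheduledExecutor()
    val fired = new AtomicInteger(0)
    val done = new CountDownLatch(times)
    val task = new Runnable {
      override def run(): Unit = {
        // Stand-in for sending a driver status request to the master.
        if (fired.get() < times) {
          fired.incrementAndGet()
          done.countDown()
        }
      }
    }
    // Initial delay 0, then one run every PollIntervalMs milliseconds.
    scheduler.scheduleAtFixedRate(task, 0L, PollIntervalMs, TimeUnit.MILLISECONDS)
    try {
      done.await(5, TimeUnit.SECONDS)
      fired.get()
    } finally scheduler.shutdownNow()
  }
}
```

Because the task runs on a separate scheduler thread, the caller's thread is not blocked between polls, which is the property under discussion here.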


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r428691523

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +202,22 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

Review comment:

As of now, driver status monitoring is scheduled only for submit, not for

kill. So for kill we may need to explicitly send a message to monitor the

driver status after a 5-second delay.
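
A rough sketch of what a one-shot delayed check could look like, assuming a plain JDK scheduler rather than the endpoint's own thread. The names `DelayedPollSketch`, `pollOnceAfter`, and the `check` callback are hypothetical and exist only for this example.

```scala
import java.util.concurrent.{Callable, Executors, TimeUnit}

object DelayedPollSketch {
  /** Schedules a single status check after `delayMs` and returns its result. */
  def pollOnceAfter(delayMs: Long)(check: () => String): String = {
    val scheduler = Executors.newSingleThreadScheduledExecutor()
    try {
      val callable = new Callable[String] {
        // Stand-in for asking the master for the driver's state.
        override def call(): String = check()
      }
      val future = scheduler.schedule(callable, delayMs, TimeUnit.MILLISECONDS)
      // Wait for the delayed check, with a generous upper bound.
      future.get(delayMs + 5000L, TimeUnit.MILLISECONDS)
    } finally scheduler.shutdownNow()
  }
}
```

For the kill case this would be called once, for example `DelayedPollSketch.pollOnceAfter(5000)(() => "KILLED")`, instead of registering a repeating task.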


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r428657865

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +202,22 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

Review comment:

@Ngone51 Thanks for your feedback. `pollAndReportStatus` is only

used the first time after submitting or killing drivers. I am not sure which

duplicate logic you are referring to. Also, `pollAndReportStatus` only

polls the driver status and handles the response. If we remove polling

from it, what logic should be handled there?


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r426150245

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +190,25 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

+ if (found) {

+state.get match {

+ case DriverState.FINISHED | DriverState.FAILED |

+ DriverState.ERROR | DriverState.KILLED =>

+logInfo(s"State of $submittedDriverID is ${state.get}, " +

+ s"exiting spark-submit JVM.")

+System.exit(0)

+ case _ =>

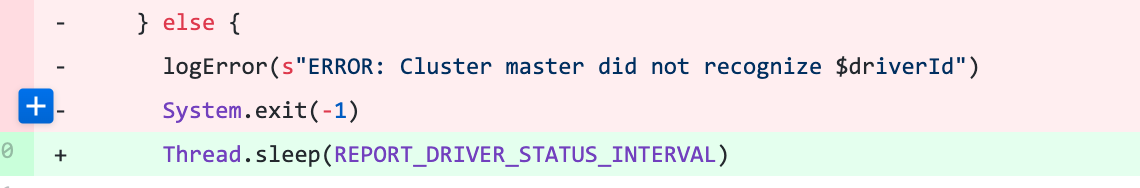

+Thread.sleep(REPORT_DRIVER_STATUS_INTERVAL)

Review comment:

@Ngone51 Thanks for reviewing. I have updated to use the task scheduler

to do the same. Could you kindly review it again and please let me know your

comments?


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r426150245

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +190,25 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

+ if (found) {

+state.get match {

+ case DriverState.FINISHED | DriverState.FAILED |

+ DriverState.ERROR | DriverState.KILLED =>

+logInfo(s"State of $submittedDriverID is ${state.get}, " +

+ s"exiting spark-submit JVM.")

+System.exit(0)

+ case _ =>

+Thread.sleep(REPORT_DRIVER_STATUS_INTERVAL)

Review comment:

@Ngone51 Thanks for reviewing. I have updated to use the task scheduler

to do the same. Kindly review it again and let me know your comments.


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r426150622

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,44 +129,53 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

- activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort, statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+ val statusResponse =

Review comment:

Thanks, updated the indentation in the latest commit.


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r426150555

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +190,25 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

+ if (found) {

+state.get match {

+ case DriverState.FINISHED | DriverState.FAILED |

+ DriverState.ERROR | DriverState.KILLED =>

+logInfo(s"State of $submittedDriverID is ${state.get}, " +

+ s"exiting spark-submit JVM.")

+System.exit(0)

+ case _ =>

+Thread.sleep(REPORT_DRIVER_STATUS_INTERVAL)

+logInfo(s"State of $submittedDriverID is ${state.get}, " +

+ s"continue monitoring driver status.")

+asyncSendToMasterAndForwardReply[DriverStatusResponse](

Review comment:

@jiangxb1987 Thanks for reviewing. I have changed it to 10 seconds and

took care of your other comments. Kindly review the PR again.


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r426150313

##

File path: docs/spark-standalone.md

##

@@ -374,6 +374,25 @@ To run an interactive Spark shell against the cluster, run the following command

You can also pass an option `--total-executor-cores ` to control the number of cores that spark-shell uses on the cluster.

+# Client Properties

+

+Spark applications supports the following configuration properties specific to standalone mode:

+

+

+ Property Name | Default Value | Meaning | Since Version

+

+ spark.standalone.submit.waitAppCompletion

+ false

+

+ In standalone cluster mode, controls whether the client waits to exit until the application completes.

+ If set to true, the client process will stay alive polling the application's status.

Review comment:

Thanks, updated to `driver's`.

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r426150245

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +190,25 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

+ if (found) {

+state.get match {

+ case DriverState.FINISHED | DriverState.FAILED |

+ DriverState.ERROR | DriverState.KILLED =>

+logInfo(s"State of $submittedDriverID is ${state.get}, " +

+ s"exiting spark-submit JVM.")

+System.exit(0)

+ case _ =>

+Thread.sleep(REPORT_DRIVER_STATUS_INTERVAL)

Review comment:

@Ngone51 Thanks for reviewing. I have updated to use the task scheduler

to do the same. Kindly review it again.


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r426150154

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +190,25 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

+ if (found) {

+state.get match {

+ case DriverState.FINISHED | DriverState.FAILED |

+ DriverState.ERROR | DriverState.KILLED =>

+logInfo(s"State of $submittedDriverID is ${state.get}, " +

+ s"exiting spark-submit JVM.")

+System.exit(0)

+ case _ =>

+Thread.sleep(REPORT_DRIVER_STATUS_INTERVAL)

+logInfo(s"State of $submittedDriverID is ${state.get}, " +

Review comment:

Thanks, I have changed it to use `logDebug`.


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r426150126

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +190,25 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

+ if (found) {

+state.get match {

+ case DriverState.FINISHED | DriverState.FAILED |

+ DriverState.ERROR | DriverState.KILLED =>

+logInfo(s"State of $submittedDriverID is ${state.get}, " +

Review comment:

Thanks, updated in the latest commit.


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r426142394

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -61,6 +61,10 @@ private class ClientEndpoint(

private val lostMasters = new HashSet[RpcAddress]

private var activeMasterEndpoint: RpcEndpointRef = null

+ private val waitAppCompletion =

conf.getBoolean("spark.standalone.submit.waitAppCompletion",

Review comment:

@Ngone51 There doesn't seem to be any config class specific to

standalone deploy related code. Could you kindly suggest where I should be

adding this config?


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r426130288

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -176,6 +190,25 @@ private class ClientEndpoint(

} else if (!Utils.responseFromBackup(message)) {

System.exit(-1)

}

+

+case DriverStatusResponse(found, state, _, _, _) =>

+ if (found) {

+state.get match {

+ case DriverState.FINISHED | DriverState.FAILED |

+ DriverState.ERROR | DriverState.KILLED =>

+logInfo(s"State of $submittedDriverID is ${state.get}, " +

+ s"exiting spark-submit JVM.")

+System.exit(0)

+ case _ =>

+Thread.sleep(REPORT_DRIVER_STATUS_INTERVAL)

Review comment:

@Ngone51 Thanks for reviewing. I tried simulating that case by

increasing the `Thread.sleep` time, and I see that `onDisconnected` is called

when the master is stopped during the sleep, and the following logs are generated:

```

20/05/16 13:24:44 ERROR ClientEndpoint: Error connecting to master

127.0.0.1:7077.

20/05/16 13:24:44 ERROR ClientEndpoint: No master is available, exiting.

```


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r422640716

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

- activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort, statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

Review comment:

Hi @Ngone51, I tried sending periodic messages in a loop in

`pollAndReportStatus`, but the endpoint doesn't seem to receive any message

until the sending loop has completed (checked with a `for` loop; with the

current `while(true)` loop it would be stuck in an infinite loop). Hence, I

have implemented it by sending an async message from the `pollAndReportStatus`

method and, while handling the response, either sending the message again if

need be or exiting. Please let me know what you think of this approach. I have

tested the common scenarios, and I could see the `onNetworkError` method

getting called on shutting down the Spark master while an application is running.
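
To make the approach concrete, here is a simplified sketch of the "decide on each response, never block" idea, under several assumptions: `State`, `StatusResponse`, `Action`, `onStatus`, and `simulate` are hypothetical stand-ins written for this example, not Spark's `DriverState` or `DriverStatusResponse`, and treating a not-found driver as a reason to exit is also an assumption of the sketch.

```scala
object DriverMonitorSketch {
  // Simplified stand-ins for Spark's driver states (assumption).
  sealed trait State
  case object Running extends State
  case object Finished extends State
  case object Failed extends State
  case object Killed extends State
  case object Error extends State

  // Simplified stand-in for the status reply from the master (assumption).
  final case class StatusResponse(found: Boolean, state: Option[State])

  sealed trait Action
  case object Exit extends Action
  case object PollAgain extends Action

  /** Decides what to do when a status response arrives; it never sleeps or loops. */
  def onStatus(resp: StatusResponse): Action = resp match {
    case StatusResponse(true, Some(Finished | Failed | Killed | Error)) => Exit
    case StatusResponse(true, _) => PollAgain
    case StatusResponse(false, _) => Exit // assumed: unknown driver ends monitoring
  }

  /** Feeds scripted responses to the handler and counts how many polls were sent. */
  def simulate(responses: List[StatusResponse]): Int = {
    @annotation.tailrec
    def loop(rest: List[StatusResponse], polls: Int): Int = rest match {
      case Nil => polls
      case r :: tail =>
        onStatus(r) match {
          case Exit => polls
          case PollAgain => loop(tail, polls + 1)
        }
    }
    loop(responses, 1) // the first poll is sent right after submission
  }
}
```

Each response triggers at most one follow-up request, so the thread that delivers messages is free to handle disconnect or network-error callbacks between polls.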


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r422640716

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

- activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort, statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

Review comment:

Hi @Ngone51, I tried sending periodic messages in a loop in

`pollAndReportStatus`, but the endpoint doesn't seem to receive any message

until the sending loop has completed (checked with a `for` loop; with the

current `while(true)` loop it would be stuck in an infinite loop). Hence, I

have implemented it by sending an async message from the `pollAndReportStatus`

method and, if need be, sending the message again while handling the response.

Please let me know what you think of this approach. I have tested the common

scenarios, and I could see the `onNetworkError` method getting called on

shutting down the Spark master while an application is running.


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r422511601

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

- activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort, statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

Review comment:

@Ngone51 Yes, I am not sure about the logs from

`StandaloneAppClient$ClientEndpoint`. I will check again. This is the command I

am using to submit jobs: `./bin/spark-submit --master spark://127.0.0.1:7077

--conf spark.standalone.submit.waitAppCompletion=true --deploy-mode cluster

--class org.apache.spark.examples.SparkPi

examples/target/original-spark-examples_2.12-3.1.0-SNAPSHOT.jar`


[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r422511601

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set

to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking

the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

-

activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort,

statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

Review comment:

@Ngone51 Yes, I am not sure about the logs. I will check again. This is the command I am using to submit jobs:

`./bin/spark-submit --master spark://127.0.0.1:7077 --conf spark.standalone.submit.waitAppCompletion=true --deploy-mode cluster --class org.apache.spark.examples.SparkPi examples/target/original-spark-examples_2.12-3.1.0-SNAPSHOT.jar`

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r422502868

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set

to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking

the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

-

activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort,

statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

Review comment:

> We can periodically send a message (e.g. we can send it after `Thread.sleep(REPORT_DRIVER_STATUS_INTERVAL)`) to `ClientEndpoint` itself to check driver's status.

@Ngone51 Thanks for this suggestion. Just to confirm, are you suggesting doing this at line # 180 in the `pollAndReportStatus` method? Or should we handle this outside?
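
For illustration, here is a minimal sketch of the self-messaging idea quoted above, written in plain Scala without Spark's RPC classes. Everything in it is a hypothetical stand-in: `PollingEndpoint`, `CheckDriverStatus` and `fetchState` are not Spark names, and a scheduled executor plays the role of delivering a message to the endpoint at a fixed interval. It only shows the shape of the approach, not how `ClientEndpoint` should actually be changed.

```scala
import java.util.concurrent.{CountDownLatch, Executors, TimeUnit}

object SelfPollingSketch {
  sealed trait State
  case object Running extends State
  case object Finished extends State

  // Hypothetical message the endpoint sends to itself on every tick.
  case object CheckDriverStatus

  // fetchState stands in for asking the master for the driver state.
  class PollingEndpoint(fetchState: () => State, intervalMs: Long) {
    private val scheduler = Executors.newSingleThreadScheduledExecutor()
    private val done = new CountDownLatch(1)
    @volatile private var pollCount = 0

    def polls: Int = pollCount

    // Instead of looping with Thread.sleep inside a handler,
    // schedule a periodic self-message and return immediately.
    def start(): Unit = {
      val tick = new Runnable {
        override def run(): Unit = receive(CheckDriverStatus)
      }
      scheduler.scheduleAtFixedRate(tick, 0L, intervalMs, TimeUnit.MILLISECONDS)
    }

    // Each status check is handled like any other message.
    private def receive(msg: Any): Unit = msg match {
      case CheckDriverStatus =>
        pollCount += 1
        if (fetchState() == Finished) {
          scheduler.shutdown() // stops further ticks
          done.countDown()
        }
      case _ => // ignore anything else
    }

    def awaitFinished(timeoutMs: Long): Boolean =
      done.await(timeoutMs, TimeUnit.MILLISECONDS)
  }
}
```

The point of the design is that no handler blocks in a `while (true)` loop: each check is a short message, so other messages (such as a master disconnect) can still be processed between polls.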

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r422502868

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set

to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking

the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

-

activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort,

statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

Review comment:

> We can periodically send a message (e.g. we can send it after `Thread.sleep(REPORT_DRIVER_STATUS_INTERVAL)`) to `ClientEndpoint` itself to check driver's status.

@Ngone51 Thanks for this suggestion. Just to confirm, are you suggesting doing this at line # 180 in the `pollAndReportStatus` method? Or should we handle this outside?

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r422469087

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set

to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking

the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

-

activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort,

statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

Review comment:

@Ngone51 I launched a long-running application with the flag enabled and with it disabled, and stopped the Spark Master in the middle of each run. In both cases I see the following in the driver logs; I couldn't find any difference between the two.

```
20/05/09 13:42:59 WARN StandaloneAppClient$ClientEndpoint: Connection to Akshats-MacBook-Pro.local:7077 failed; waiting for master to reconnect...
20/05/09 13:42:59 WARN StandaloneSchedulerBackend: Disconnected from Spark cluster! Waiting for reconnection...
```

The `onDisconnected` method from `StandaloneAppClient.scala` is getting called:

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r422469087

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set

to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking

the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

-

activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort,

statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

Review comment:

@Ngone51 I launched a long-running application with the flag enabled and with it disabled. In both cases, I see the following in the driver logs:

20/05/09 13:42:59 WARN StandaloneAppClient$ClientEndpoint: Connection to Akshats-MacBook-Pro.local:7077 failed; waiting for master to reconnect...

20/05/09 13:42:59 WARN StandaloneSchedulerBackend: Disconnected from Spark cluster! Waiting for reconnection...

The `onDisconnected` method from `StandaloneAppClient.scala` is getting called:

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r422469087

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set

to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking

the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

-

activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort,

statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

Review comment:

@Ngone51 I launched a long-running application with the flag enabled and with it disabled. In both cases, I see the following in the driver logs:

```
20/05/09 13:42:59 WARN StandaloneAppClient$ClientEndpoint: Connection to Akshats-MacBook-Pro.local:7077 failed; waiting for master to reconnect...
20/05/09 13:42:59 WARN StandaloneSchedulerBackend: Disconnected from Spark cluster! Waiting for reconnection...
```

The `onDisconnected` method from `StandaloneAppClient.scala` is getting called:

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r419481609

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set

to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking

the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

-

activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort,

statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

Review comment:

@Ngone51 Apologies, I somehow missed this comment. How can I quickly verify this? I am looking into it. Could you share any pointers on how this can be fixed?

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r419481609

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set

to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking

the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

-

activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort,

statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

Review comment:

@Ngone51 Apologies, I somehow missed this comment. How can I quickly verify this? Could you share any pointers on how this can be fixed?

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r418993885

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +128,58 @@ private class ClientEndpoint(
     }
   }
 
-  /* Find out driver status then exit the JVM */
+  /**
+   * Find out driver status then exit the JVM. If the waitAppCompletion is set to true, monitors
+   * the application until it finishes, fails or is killed.
+   */
   def pollAndReportStatus(driverId: String): Unit = {
     // Since ClientEndpoint is the only RpcEndpoint in the process, blocking the event loop thread
     // is fine.
     logInfo("... waiting before polling master for driver state")
     Thread.sleep(5000)
     logInfo("... polling master for driver state")
-    val statusResponse =
-      activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))
-    if (statusResponse.found) {
-      logInfo(s"State of $driverId is ${statusResponse.state.get}")
-      // Worker node, if present
-      (statusResponse.workerId, statusResponse.workerHostPort, statusResponse.state) match {
-        case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>
-          logInfo(s"Driver running on $hostPort ($id)")
-        case _ =>
-      }
-      // Exception, if present
-      statusResponse.exception match {
-        case Some(e) =>
-          logError(s"Exception from cluster was: $e")
-          e.printStackTrace()
-          System.exit(-1)
-        case _ =>
-          System.exit(0)
+    while (true) {
+      val statusResponse =
+        activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))
+      if (statusResponse.found) {
+        logInfo(s"State of $driverId is ${statusResponse.state.get}")
+        // Worker node, if present
+        (statusResponse.workerId, statusResponse.workerHostPort, statusResponse.state) match {
+          case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>
+            logInfo(s"Driver running on $hostPort ($id)")
+          case _ =>
+        }
+        // Exception, if present
+        statusResponse.exception match {
+          case Some(e) =>
+            logError(s"Exception from cluster was: $e")
+            e.printStackTrace()
+            System.exit(-1)
+          case _ =>
+            if (!waitAppCompletion) {
+              logInfo(s"spark-submit not configured to wait for completion, " +
+                s"exiting spark-submit JVM.")
+              System.exit(0)
+            } else {
+              statusResponse.state.get match {
+                case DriverState.FINISHED | DriverState.FAILED |
+                     DriverState.ERROR | DriverState.KILLED =>
+                  logInfo(s"State of $driverId is ${statusResponse.state.get}, " +
+                    s"exiting spark-submit JVM.")

Review comment:

@srowen I am presuming this was to fix indentation in line # 168. If so,

I have updated it in the latest commit. If not, kindly let me know which line #

you were referring to. Thanks.

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r418986974

##

File path: docs/spark-standalone.md

##

@@ -374,6 +374,24 @@ To run an interactive Spark shell against the cluster, run the following command
 You can also pass an option `--total-executor-cores ` to control the number of cores that spark-shell uses on the cluster.
+#Spark Properties
+
+Spark applications supports the following configuration properties specific to standalone Mode:
+
+ Property Name  Default Value  Meaning  Since Version
+
+ spark.standalone.submit.waitAppCompletion
+ false
+
+ In Standalone cluster mode, controls whether the client waits to exit until the application completes.

Review comment:

Updated in the latest commit.

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r418986898

##

File path: docs/spark-standalone.md

##

@@ -374,6 +374,24 @@ To run an interactive Spark shell against the cluster, run the following command
 You can also pass an option `--total-executor-cores ` to control the number of cores that spark-shell uses on the cluster.
+#Spark Properties

Review comment:

Added space and updated to call it "Client Properties".

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r418986928

##

File path: docs/spark-standalone.md

##

@@ -374,6 +374,24 @@ To run an interactive Spark shell against the cluster, run the following command
 You can also pass an option `--total-executor-cores ` to control the number of cores that spark-shell uses on the cluster.
+#Spark Properties
+
+Spark applications supports the following configuration properties specific to standalone Mode:
+

Review comment:

Updated in the latest commit.

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r418977842

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -61,6 +61,10 @@ private class ClientEndpoint(

private val lostMasters = new HashSet[RpcAddress]

private var activeMasterEndpoint: RpcEndpointRef = null

+ private val waitAppCompletion =

conf.getBoolean("spark.standalone.submit.waitAppCompletion",

Review comment:

There doesn't seem to be any config class specific to the standalone deploy code.
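
For context, the lookup in the hunk above reads a boolean property by name with a fallback default. Below is a minimal stand-alone sketch of that pattern over a plain `Map`. The helper `getBoolean` here is a hypothetical stand-in and not `SparkConf.getBoolean`, and the default of `false` is an assumption taken from the documentation hunk elsewhere in this thread, since the default argument is cut off in the quoted line.

```scala
object WaitFlagSketch {
  // Property name as it appears in the diff above.
  val WaitAppCompletionKey = "spark.standalone.submit.waitAppCompletion"

  // Hypothetical helper over a plain Map; SparkConf itself is not used here.
  // Throws IllegalArgumentException if the value is not "true" or "false".
  def getBoolean(conf: Map[String, String], key: String, default: Boolean): Boolean =
    conf.get(key).map(_.trim.toBoolean).getOrElse(default)

  // Assumed default: false, as listed in the docs change in this thread.
  def waitAppCompletion(conf: Map[String, String]): Boolean =
    getBoolean(conf, WaitAppCompletionKey, default = false)
}
```

A dedicated config class would mainly move the key and its default into one typed definition instead of a string literal at the call site.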

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r418488863

##

File path: docs/spark-standalone.md

##

@@ -240,6 +240,16 @@ SPARK_MASTER_OPTS supports the following system properties:
 1.6.3
+
+ spark.submit.waitAppCompletion

Review comment:

Updated the documentation file, please review.

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r418488645

##

File path: docs/spark-standalone.md

##

@@ -240,6 +240,16 @@ SPARK_MASTER_OPTS supports the following system properties:
 1.6.3
+
+ spark.submit.waitAppCompletion
+ true

Review comment:

Yes, corrected it in the latest commit. Thanks for catching it.

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r418488518

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(
     }
   }
 
-  /* Find out driver status then exit the JVM */
+  /**
+   * Find out driver status then exit the JVM. If the waitAppCompletion is set to true, monitors
+   * the application until it finishes, fails or is killed.
+   */
   def pollAndReportStatus(driverId: String): Unit = {
     // Since ClientEndpoint is the only RpcEndpoint in the process, blocking the event loop thread
     // is fine.
     logInfo("... waiting before polling master for driver state")
     Thread.sleep(5000)
     logInfo("... polling master for driver state")
-    val statusResponse =
-      activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))
-    if (statusResponse.found) {
-      logInfo(s"State of $driverId is ${statusResponse.state.get}")
-      // Worker node, if present
-      (statusResponse.workerId, statusResponse.workerHostPort, statusResponse.state) match {
-        case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>
-          logInfo(s"Driver running on $hostPort ($id)")
-        case _ =>
-      }
-      // Exception, if present
-      statusResponse.exception match {
-        case Some(e) =>
-          logError(s"Exception from cluster was: $e")
-          e.printStackTrace()
-          System.exit(-1)
-        case _ =>
-          System.exit(0)
+    while (true) {
+      val statusResponse =
+        activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))
+      if (statusResponse.found) {
+        logInfo(s"State of $driverId is ${statusResponse.state.get}")
+        // Worker node, if present
+        (statusResponse.workerId, statusResponse.workerHostPort, statusResponse.state) match {
+          case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>
+            logInfo(s"Driver running on $hostPort ($id)")
+          case _ =>
+        }
+        // Exception, if present
+        statusResponse.exception match {
+          case Some(e) =>
+            logError(s"Exception from cluster was: $e")
+            e.printStackTrace()
+            System.exit(-1)
+          case _ =>
+            if (!waitAppCompletion) {
+              logInfo(s"No exception found and waitAppCompletion is false, " +
+                s"exiting spark-submit JVM.")
+              System.exit(0)
+            } else if (statusResponse.state.get == DriverState.FINISHED ||
+              statusResponse.state.get == DriverState.FAILED ||
+              statusResponse.state.get == DriverState.ERROR ||
+              statusResponse.state.get == DriverState.KILLED) {
+              logInfo(s"waitAppCompletion is true, state is ${statusResponse.state.get}, " +
+                s"exiting spark-submit JVM.")
+              System.exit(0)
+            } else {
+              logTrace(s"waitAppCompletion is true, state is ${statusResponse.state.get}," +

Review comment:

Updated the log messages.
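
For reference, the exit decision in the hunk above (ignoring the exception branch, which exits with -1) can be summarized as a small pure function. This is only a sketch and not the code in the PR: `DriverState` below is a local enumeration containing just the state names that appear in the diff, and `shouldExit` is a hypothetical helper.

```scala
object ExitDecisionSketch {
  // Local stand-in listing only the states named in the diff.
  object DriverState extends Enumeration {
    val RUNNING, FINISHED, FAILED, ERROR, KILLED = Value
  }
  import DriverState._

  // States after which there is nothing left to wait for.
  private val terminalStates = Set(FINISHED, FAILED, ERROR, KILLED)

  // Hypothetical helper: true when the spark-submit JVM should stop polling and exit.
  // With the flag off the client exits after the first status report;
  // with the flag on it keeps polling until a terminal state is seen.
  def shouldExit(waitAppCompletion: Boolean, state: DriverState.Value): Boolean =
    !waitAppCompletion || terminalStates.contains(state)
}
```

Keeping the decision in one place like this would also let each branch log a single message derived from the flag and the state.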

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r418488364

##

File path: core/src/main/scala/org/apache/spark/deploy/Client.scala

##

@@ -124,38 +127,57 @@ private class ClientEndpoint(

}

}

- /* Find out driver status then exit the JVM */

+ /**

+ * Find out driver status then exit the JVM. If the waitAppCompletion is set to true, monitors

+ * the application until it finishes, fails or is killed.

+ */

def pollAndReportStatus(driverId: String): Unit = {

// Since ClientEndpoint is the only RpcEndpoint in the process, blocking the event loop thread

// is fine.

logInfo("... waiting before polling master for driver state")

Thread.sleep(5000)

logInfo("... polling master for driver state")

-val statusResponse =

- activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

-if (statusResponse.found) {

- logInfo(s"State of $driverId is ${statusResponse.state.get}")

- // Worker node, if present

- (statusResponse.workerId, statusResponse.workerHostPort, statusResponse.state) match {

-case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

- logInfo(s"Driver running on $hostPort ($id)")

-case _ =>

- }

- // Exception, if present

- statusResponse.exception match {

-case Some(e) =>

- logError(s"Exception from cluster was: $e")

- e.printStackTrace()

- System.exit(-1)

-case _ =>

- System.exit(0)

+while (true) {

+ val statusResponse =

+ activeMasterEndpoint.askSync[DriverStatusResponse](RequestDriverStatus(driverId))

+ if (statusResponse.found) {

+logInfo(s"State of $driverId is ${statusResponse.state.get}")

+// Worker node, if present

+(statusResponse.workerId, statusResponse.workerHostPort, statusResponse.state) match {

+ case (Some(id), Some(hostPort), Some(DriverState.RUNNING)) =>

+logInfo(s"Driver running on $hostPort ($id)")

+ case _ =>

+}

+// Exception, if present

+statusResponse.exception match {

+ case Some(e) =>

+logError(s"Exception from cluster was: $e")

+e.printStackTrace()

+System.exit(-1)

+ case _ =>

+if (!waitAppCompletion) {

+ logInfo(s"No exception found and waitAppCompletion is false, " +

+s"exiting spark-submit JVM.")

+ System.exit(0)

+} else if (statusResponse.state.get == DriverState.FINISHED ||

Review comment:

Thanks, updated in the latest commit.

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r417437606

##

File path: docs/spark-standalone.md

##

@@ -240,6 +240,16 @@ SPARK_MASTER_OPTS supports the following system properties:

1.6.3

+

+ spark.submit.waitAppCompletion

Review comment:

Sounds good, will do that.

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode

akshatb1 commented on a change in pull request #28258:

URL: https://github.com/apache/spark/pull/28258#discussion_r417364551

##

File path: docs/spark-standalone.md

##

@@ -240,6 +240,16 @@ SPARK_MASTER_OPTS supports the following system properties:

1.6.3

+

+ spark.submit.waitAppCompletion

Review comment:

How about documenting it in the Application Properties section and naming the config spark.submit.standalone.waitAppCompletion, as suggested by @HeartSaVioR? I ask because changing the existing configs to cluster configs may cause confusion with the existing documentation, and the general configuration already has mode-specific properties as well. Please let me know what you think. Thanks.

[GitHub] [spark] akshatb1 commented on a change in pull request #28258: [SPARK-31486] [CORE] spark.submit.waitAppCompletion flag to control spark-submit exit in Standalone Cluster Mode