[GitHub] spark issue #21933: [SPARK-24917][CORE] make chunk size configurable

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21933 **[Test build #94346 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94346/testReport)** for PR 21933 at commit [`e2961eb`](https://github.com/apache/spark/commit/e2961eb86f689de83770de5c3a73838512a62001). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21980: [SPARK-25010][SQL] Rand/Randn should produce different v...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21980 Merged build finished. Test PASSed.

[GitHub] spark issue #21980: [SPARK-25010][SQL] Rand/Randn should produce different v...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21980 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/94332/ Test PASSed.

[GitHub] spark issue #21980: [SPARK-25010][SQL] Rand/Randn should produce different v...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21980 **[Test build #94332 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94332/testReport)** for PR 21980 at commit [`d4d8d0f`](https://github.com/apache/spark/commit/d4d8d0fd2597d52dd2da5b36da6f05a60d89d25e). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark pull request #21721: [SPARK-24748][SS] Support for reporting custom me...

Github user arunmahadevan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21721#discussion_r208106031

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/ProgressReporter.scala ---
@@ -196,6 +237,18 @@ trait ProgressReporter extends Logging {
     currentStatus = currentStatus.copy(isTriggerActive = false)
   }

+  /** Extract writer from the executed query plan. */
+  private def dataSourceWriter: Option[DataSourceWriter] = {
+    if (lastExecution == null) return None
+    lastExecution.executedPlan.collect {
+      case p if p.isInstanceOf[WriteToDataSourceV2Exec] =>
--- End diff --

Yes, currently progress is reported only for micro-batch mode. This should be supported for continuous mode as well when we start reporting progress, but it needs some more work: https://issues.apache.org/jira/browse/SPARK-23887
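For readers unfamiliar with the pattern in the quoted diff, here is a minimal, self-contained sketch of extracting a writer from a plan tree with a `collect`-style traversal. The `PlanNode`, `ScanNode`, `WriteNode` and `PlanSearch` names are hypothetical stand-ins, not Spark classes, and an `Option` replaces the `null` check on `lastExecution`.

```scala
// Hypothetical stand-ins for physical plan nodes; these are not Spark's classes.
sealed trait PlanNode { def children: Seq[PlanNode] }

final case class ScanNode(table: String) extends PlanNode {
  val children: Seq[PlanNode] = Nil
}

final case class WriteNode(writerName: String, child: PlanNode) extends PlanNode {
  val children: Seq[PlanNode] = Seq(child)
}

object PlanSearch {
  // Pre-order traversal that applies a partial function to every node it matches.
  def collect[T](root: PlanNode)(pf: PartialFunction[PlanNode, T]): Seq[T] =
    pf.lift(root).toList ++ root.children.flatMap(c => collect(c)(pf))

  // Returns the first writer found, or None when no plan has been executed yet.
  def findWriter(lastPlan: Option[PlanNode]): Option[String] =
    lastPlan.flatMap { plan =>
      collect(plan) { case w: WriteNode => w.writerName }.headOption
    }
}
```

Matching on the node type directly (`case w: WriteNode`) avoids the `isInstanceOf` guard followed by a cast.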


[GitHub] spark issue #21933: [SPARK-24917][CORE] make chunk size configurable

Github user kiszk commented on the issue: https://github.com/apache/spark/pull/21933 retest this please

[GitHub] spark issue #21845: [SPARK-24886][INFRA] Fix the testing script to increase ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21845 **[Test build #94345 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94345/testReport)** for PR 21845 at commit [`7afc5c5`](https://github.com/apache/spark/commit/7afc5c52fa31595b1eb458100d37fe92f62e31aa).

[GitHub] spark issue #21845: [SPARK-24886][INFRA] Fix the testing script to increase ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21845 Merged build finished. Test PASSed.

[GitHub] spark issue #21845: [SPARK-24886][INFRA] Fix the testing script to increase ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21845 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1892/ Test PASSed.

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/21898 @rxin, it seems we are indeed starting to hit the time limit here now.

[GitHub] spark issue #21980: [SPARK-25010][SQL] Rand/Randn should produce different v...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21980 Merged build finished. Test PASSed.

[GitHub] spark issue #21980: [SPARK-25010][SQL] Rand/Randn should produce different v...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21980 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/94331/ Test PASSed.

[GitHub] spark pull request #21087: [SPARK-23997][SQL] Configurable maximum number of...

Github user kiszk commented on a diff in the pull request:

https://github.com/apache/spark/pull/21087#discussion_r208105302

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala ---
@@ -1490,6 +1495,8 @@ class SQLConf extends Serializable with Logging {
   def bucketingEnabled: Boolean = getConf(SQLConf.BUCKETING_ENABLED)

+  def bucketingMaxBuckets: Long = getConf(SQLConf.BUCKETING_MAX_BUCKETS)
--- End diff --

Do we still need `Long` instead of `Int`?


[GitHub] spark issue #21845: [SPARK-24886][INFRA] Fix the testing script to increase ...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/21845 I am reopening this per https://github.com/apache/spark/pull/21898#issuecomment-410909703 cc @cloud-fan, @rxin and @shaneknapp

[GitHub] spark issue #21980: [SPARK-25010][SQL] Rand/Randn should produce different v...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21980 **[Test build #94331 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94331/testReport)** for PR 21980 at commit [`f60a238`](https://github.com/apache/spark/commit/f60a2384f335b1c95e81a0c232299af9bb426654). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark pull request #21845: [SPARK-24886][INFRA] Fix the testing script to in...

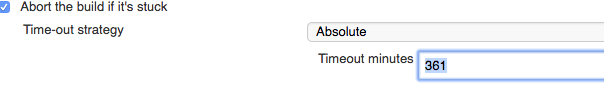

GitHub user HyukjinKwon reopened a pull request: https://github.com/apache/spark/pull/21845 [SPARK-24886][INFRA] Fix the testing script to increase timeout for Jenkins build (from 300m to 330m) ## What changes were proposed in this pull request? Currently, looks we hit the time limit time to time. Looks better increasing the time a bit. For instance, please see https://github.com/apache/spark/pull/21822 For clarification, current Jenkins timeout is already 361m:  This PR just proposes to fix the test script to increase it correspondingly. *This PR does not target to change the build configuration* ## How was this patch tested? Jenkins tests. You can merge this pull request into a Git repository by running: $ git pull https://github.com/HyukjinKwon/spark SPARK-24886 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/21845.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #21845 commit c57b74546b457d44e0c9d28d92a672fe5324d3ff Author: hyukjinkwon Date: 2018-07-23T05:30:16Z Fix the testing script to increase timeout for Jenkins build (from 300m to 350m) commit 7afc5c52fa31595b1eb458100d37fe92f62e31aa Author: hyukjinkwon Date: 2018-07-23T05:38:09Z Looks 330m good enough actually for now --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21898 **[Test build #94344 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94344/testReport)** for PR 21898 at commit [`1f71e65`](https://github.com/apache/spark/commit/1f71e6583f9f9f270d07323f15c731717e13518d).

[GitHub] spark issue #22019: [WIP][SPARK-25040][SQL] Empty string for double and floa...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/22019 Hm.. wait let me take a closer look.

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21898 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1891/ Test PASSed.

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21898 Merged build finished. Test PASSed.

[GitHub] spark issue #21860: [SPARK-24901][SQL]Merge the codegen of RegularHashMap an...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21860 **[Test build #94343 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94343/testReport)** for PR 21860 at commit [`9fab662`](https://github.com/apache/spark/commit/9fab662a6f99ee06be96d0c996ec1209b25a3f84).

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user mengxr commented on the issue: https://github.com/apache/spark/pull/21898 test this please

[GitHub] spark pull request #21087: [SPARK-23997][SQL] Configurable maximum number of...

Github user kiszk commented on a diff in the pull request:

https://github.com/apache/spark/pull/21087#discussion_r208103944

--- Diff: sql/core/src/test/scala/org/apache/spark/sql/sources/BucketedWriteSuite.scala ---
@@ -48,16 +49,40 @@ abstract class BucketedWriteSuite extends QueryTest with SQLTestUtils {
     intercept[AnalysisException](df.write.bucketBy(2, "k").saveAsTable("tt"))
   }

-  test("numBuckets be greater than 0 but less than 10") {
+  test("numBuckets be greater than 0 but less than default bucketing.maxBuckets (10)") {
     val df = Seq(1 -> "a", 2 -> "b").toDF("i", "j")

-    Seq(-1, 0, 10).foreach(numBuckets => {
-      val e = intercept[AnalysisException](df.write.bucketBy(numBuckets, "i").saveAsTable("tt"))
-      assert(
-        e.getMessage.contains("Number of buckets should be greater than 0 but less than 10"))
+    Seq(-1, 0, 11).foreach(numBuckets => {
--- End diff --

nit: For ease of tracking updates, only two parts need to be changed. The other changes look unnecessary.

`10` -> `11`

`less than 10` -> `less than`
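As a rough illustration of the check this test exercises, the sketch below validates a bucket count against a configurable maximum. `BucketCheck.validate`, the inclusive upper bound and the message wording are assumptions made for this example, not the code in the pull request; the value 10 is taken from the test title quoted above.

```scala
object BucketCheck {
  // Hypothetical helper: accepts numBuckets in the range 1..maxBuckets (inclusive),
  // otherwise returns an error message. Int is used here for simplicity.
  def validate(numBuckets: Int, maxBuckets: Int): Either[String, Int] =
    if (numBuckets <= 0 || numBuckets > maxBuckets)
      Left(s"Number of buckets should be greater than 0 but less than or equal to " +
        s"$maxBuckets. Got `$numBuckets`")
    else
      Right(numBuckets)
}
```

With a maximum of 10, the three values used in the updated test (`-1`, `0`, `11`) are all rejected, while `10` itself is accepted.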


[GitHub] spark issue #21991: [SPARK-25018] [Infra] Use `Co-authored-by` and `Signed-o...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21991 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/94329/ Test FAILed.

[GitHub] spark issue #21991: [SPARK-25018] [Infra] Use `Co-authored-by` and `Signed-o...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21991 Merged build finished. Test FAILed.

[GitHub] spark issue #21991: [SPARK-25018] [Infra] Use `Co-authored-by` and `Signed-o...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21991 **[Test build #94329 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94329/testReport)** for PR 21991 at commit [`272d8fd`](https://github.com/apache/spark/commit/272d8fd4c6a46164069e2e3a892f016e9664cf5f). * This patch **fails Spark unit tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #22019: [SPARK-25040][SQL] Empty string for double and float typ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22019 **[Test build #94342 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94342/testReport)** for PR 22019 at commit [`ef57fdd`](https://github.com/apache/spark/commit/ef57fdd5b0a6f7f0b6343c91c6983d20bc67fb5b).

[GitHub] spark issue #22019: [SPARK-25040][SQL] Empty string for double and float typ...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/22019 It looks like a few other types could potentially have this issue too. Let me fix them all while I am here.

[GitHub] spark issue #22019: [SPARK-25040][SQL] Empty string for double and float typ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22019 Merged build finished. Test PASSed.

[GitHub] spark issue #22019: [SPARK-25040][SQL] Empty string for double and float typ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22019 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1890/ Test PASSed.

[GitHub] spark pull request #22019: [SPARK-25040][SQL] Empty string for double and fl...

GitHub user HyukjinKwon opened a pull request:

https://github.com/apache/spark/pull/22019

[SPARK-25040][SQL] Empty string for double and float types should be nulls in JSON

## What changes were proposed in this pull request?

This PR proposes to treat empty strings for double and float types as `null` consistently. It looks like we mistakenly missed this corner case, which I guess is not that serious, since the behaviour changed between 1.x and 2.x and it is a rather rare corner case.

For an easy reproducer, in case of double, the code below raises an error:

```scala
spark.read.option("mode", "FAILFAST").json(Seq("""{"a":"", "b": ""}""",
  """{"a": 1.1, "b": 1.1}""").toDS).show()
```

```
Caused by: java.lang.RuntimeException: Cannot parse as double.
  at org.apache.spark.sql.catalyst.json.JacksonParser$$anonfun$makeConverter$7$$anonfun$apply$10.applyOrElse(JacksonParser.scala:163)
  at org.apache.spark.sql.catalyst.json.JacksonParser$$anonfun$makeConverter$7$$anonfun$apply$10.applyOrElse(JacksonParser.scala:152)
  at org.apache.spark.sql.catalyst.json.JacksonParser.org$apache$spark$sql$catalyst$json$JacksonParser$$parseJsonToken(JacksonParser.scala:277)
  at org.apache.spark.sql.catalyst.json.JacksonParser$$anonfun$makeConverter$7.apply(JacksonParser.scala:152)
  at org.apache.spark.sql.catalyst.json.JacksonParser$$anonfun$makeConverter$7.apply(JacksonParser.scala:152)
  at org.apache.spark.sql.catalyst.json.JacksonParser.org$apache$spark$sql$catalyst$json$JacksonParser$$convertObject(JacksonParser.scala:312)
  at org.apache.spark.sql.catalyst.json.JacksonParser$$anonfun$makeStructRootConverter$1$$anonfun$apply$2.applyOrElse(JacksonParser.scala:71)
  at org.apache.spark.sql.catalyst.json.JacksonParser$$anonfun$makeStructRootConverter$1$$anonfun$apply$2.applyOrElse(JacksonParser.scala:70)
  at org.apache.spark.sql.catalyst.json.JacksonParser.org$apache$spark$sql$catalyst$json$JacksonParser$$parseJsonToken(JacksonParser.scala:277)
  at org.apache.spark.sql.catalyst.json.JacksonParser$$anonfun$makeStructRootConverter$1.apply(JacksonParser.scala:70)
  at org.apache.spark.sql.catalyst.json.JacksonParser$$anonfun$makeStructRootConverter$1.apply(JacksonParser.scala:70)
  at org.apache.spark.sql.catalyst.json.JacksonParser$$anonfun$parse$2.apply(JacksonParser.scala:368)
  at org.apache.spark.sql.catalyst.json.JacksonParser$$anonfun$parse$2.apply(JacksonParser.scala:363)
  at org.apache.spark.util.Utils$.tryWithResource(Utils.scala:2491)
  at org.apache.spark.sql.catalyst.json.JacksonParser.parse(JacksonParser.scala:363)
  at org.apache.spark.sql.DataFrameReader$$anonfun$5$$anonfun$6.apply(DataFrameReader.scala:450)
  at org.apache.spark.sql.DataFrameReader$$anonfun$5$$anonfun$6.apply(DataFrameReader.scala:450)
  at org.apache.spark.sql.execution.datasources.FailureSafeParser.parse(FailureSafeParser.scala:61)
  ... 24 more
```

Unlike other types:

```scala
spark.read.option("mode", "FAILFAST").json(Seq("""{"a":"", "b": ""}""",
  """{"a": 1, "b": 1}""").toDS).show()
```

```
+----+----+
|   a|   b|
+----+----+
|null|null|
|   1|   1|
+----+----+
```
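The intended behaviour can be sketched in plain Scala, independent of Spark. `EmptyStringAsNull.parseDouble` is a hypothetical helper written for this illustration, not the actual `JacksonParser` change; `None` stands in for SQL `null`.

```scala
object EmptyStringAsNull {
  // Hypothetical converter: an empty string token becomes None (the analogue of null),
  // any other token is parsed as a double.
  def parseDouble(token: String): Option[Double] =
    if (token.isEmpty) None
    else Some(token.toDouble) // malformed input throws NumberFormatException
}
```

Letting malformed input throw is only loosely analogous to `FAILFAST` mode; an empty string is the single case mapped to a missing value.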

## How was this patch tested?

Unit tests were added and manually tested.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/HyukjinKwon/spark double-float-empty

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/22019.patch

To close this pull request, make a commit to your master/trunk branch

with (at least) the following in the commit message:

This closes #22019

commit ef57fdd5b0a6f7f0b6343c91c6983d20bc67fb5b

Author: hyukjinkwon

Date: 2018-08-07T05:23:43Z

Empty string for double and float types should be nulls in JSON


[GitHub] spark issue #22019: [SPARK-25040][SQL] Empty string for double and float typ...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/22019 cc @cloud-fan, @viirya and @fuqiliang

[GitHub] spark issue #20666: [SPARK-23448][SQL] Clarify JSON and CSV parser behavior ...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/20666 That's not related to this change. The issue itself seems to be a behaviour change between 1.6 and 2.x in whether an empty string is treated as null for double and float, which is rather a corner case but does, yes, look like an issue. Let me try to fix it while I'm here.

[GitHub] spark pull request #21919: [SPARK-24933][SS] Report numOutputRows in SinkPro...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21919#discussion_r208100226

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/v2/WriteToDataSourceV2.scala ---
@@ -46,6 +46,9 @@ case class WriteToDataSourceV2(writer: DataSourceWriter, query: LogicalPlan) ext
  * The physical plan for writing data into data source v2.
  */
 case class WriteToDataSourceV2Exec(writer: DataSourceWriter, query: SparkPlan) extends SparkPlan {
--- End diff --

Shall we create a new plan for writing to a micro-batch sink? If we are going to add more streaming-related functionality to this plan, we'd better separate the batch and streaming writing plans.


[GitHub] spark issue #21305: [SPARK-24251][SQL] Add AppendData logical plan.

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/21305 LGTM, pending jenkins

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21898 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1889/ Test PASSed.

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21898 Merged build finished. Test PASSed.

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21898 **[Test build #94341 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94341/testReport)** for PR 21898 at commit [`1f71e65`](https://github.com/apache/spark/commit/1f71e6583f9f9f270d07323f15c731717e13518d).

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/21898 retest this please

[GitHub] spark pull request #16898: [SPARK-19563][SQL] avoid unnecessary sort in File...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/16898#discussion_r208098556

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala ---
@@ -119,23 +130,45 @@ object FileFormatWriter extends Logging {
       uuid = UUID.randomUUID().toString,
       serializableHadoopConf = new SerializableConfiguration(job.getConfiguration),
       outputWriterFactory = outputWriterFactory,
-      allColumns = queryExecution.logical.output,
-      partitionColumns = partitionColumns,
+      allColumns = allColumns,
       dataColumns = dataColumns,
-      bucketSpec = bucketSpec,
+      partitionColumns = partitionColumns,
+      bucketIdExpression = bucketIdExpression,
       path = outputSpec.outputPath,
       customPartitionLocations = outputSpec.customPartitionLocations,
       maxRecordsPerFile = options.get("maxRecordsPerFile").map(_.toLong)
         .getOrElse(sparkSession.sessionState.conf.maxRecordsPerFile)
     )

+    // We should first sort by partition columns, then bucket id, and finally sorting columns.
+    val requiredOrdering = partitionColumns ++ bucketIdExpression ++ sortColumns
+    // the sort order doesn't matter
+    val actualOrdering = queryExecution.executedPlan.outputOrdering.map(_.child)
--- End diff --

That would be great, but may need some refactoring.
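To make the idea in the quoted diff concrete, here is a minimal sketch of deciding whether an existing output ordering already satisfies a required ordering, so that an extra sort could be skipped. It assumes columns are compared by plain name; `OrderingCheck.orderingMatched` is a hypothetical helper for illustration, whereas the real code works on Catalyst expressions.

```scala
object OrderingCheck {
  // True when `required` is a prefix of `actual`, meaning the data is already
  // sorted by every required column in the required sequence.
  def orderingMatched(required: Seq[String], actual: Seq[String]): Boolean =
    required.length <= actual.length &&
      required.zip(actual).forall { case (r, a) => r == a }
}
```

For example, a required ordering of partition column, bucket id, then sort column is satisfied by an actual ordering that starts with those three columns, but not by one that lists them in a different sequence.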


[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Merged build finished. Test PASSed.

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1888/ Test PASSed.

[GitHub] spark pull request #22009: [SPARK-24882][SQL] improve data source v2 API

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22009#discussion_r208098178

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/sources/memoryV2.scala ---
@@ -132,35 +134,15 @@ class MemoryV2CustomMetrics(sink: MemorySinkV2) extends CustomMetrics {
   override def json(): String = Serialization.write(Map("numRows" -> sink.numRows))
 }

-class MemoryWriter(sink: MemorySinkV2, batchId: Long, outputMode: OutputMode, schema: StructType)
--- End diff --

This is actually a batch writer, not a micro-batch one, and it is only used in the test. For the writer API, micro-batch and continuous share the same interface, so we only need one streaming write implementation.


[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22009 **[Test build #94340 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94340/testReport)** for PR 22009 at commit [`c224999`](https://github.com/apache/spark/commit/c22499964ac759670c3629c690f77018bc79a7c1).

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22009 **[Test build #94338 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94338/testReport)** for PR 22009 at commit [`29b4f33`](https://github.com/apache/spark/commit/29b4f33567ccf6335dfd7dbfee620008c7d50b81). * This patch **fails to build**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/94338/ Test FAILed.

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Merged build finished. Test FAILed.

[GitHub] spark issue #21305: [SPARK-24251][SQL] Add AppendData logical plan.

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21305 **[Test build #94339 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94339/testReport)** for PR 21305 at commit [`e81790d`](https://github.com/apache/spark/commit/e81790d072ed66f1126d5918bd1a39222a9f5cfa).

[GitHub] spark issue #21305: [SPARK-24251][SQL] Add AppendData logical plan.

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21305 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1887/ Test PASSed.

[GitHub] spark issue #21305: [SPARK-24251][SQL] Add AppendData logical plan.

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21305 Merged build finished. Test PASSed.

[GitHub] spark issue #21305: [SPARK-24251][SQL] Add AppendData logical plan.

Github user rdblue commented on the issue: https://github.com/apache/spark/pull/21305 @cloud-fan, I've rebased and updated with the requested change to disallow missing columns, even if they're optional. Thanks for reviewing!

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22009 **[Test build #94338 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94338/testReport)** for PR 22009 at commit [`29b4f33`](https://github.com/apache/spark/commit/29b4f33567ccf6335dfd7dbfee620008c7d50b81).

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1886/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22009: [SPARK-24882][SQL] improve data source v2 API

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22009#discussion_r208095752

--- Diff:

sql/core/src/test/java/test/org/apache/spark/sql/sources/v2/JavaBatchDataSourceV2.java

---

@@ -1,114 +0,0 @@

-/*

- * Licensed to the Apache Software Foundation (ASF) under one or more

- * contributor license agreements. See the NOTICE file distributed with

- * this work for additional information regarding copyright ownership.

- * The ASF licenses this file to You under the Apache License, Version 2.0

- * (the "License"); you may not use this file except in compliance with

- * the License. You may obtain a copy of the License at

- *

- *http://www.apache.org/licenses/LICENSE-2.0

- *

- * Unless required by applicable law or agreed to in writing, software

- * distributed under the License is distributed on an "AS IS" BASIS,

- * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

- * See the License for the specific language governing permissions and

- * limitations under the License.

- */

-

-package test.org.apache.spark.sql.sources.v2;

-

-import java.io.IOException;

-import java.util.List;

-

-import org.apache.spark.sql.execution.vectorized.OnHeapColumnVector;

-import org.apache.spark.sql.sources.v2.DataSourceOptions;

-import org.apache.spark.sql.sources.v2.DataSourceV2;

-import org.apache.spark.sql.sources.v2.ReadSupport;

-import org.apache.spark.sql.sources.v2.reader.*;

-import org.apache.spark.sql.types.DataTypes;

-import org.apache.spark.sql.types.StructType;

-import org.apache.spark.sql.vectorized.ColumnVector;

-import org.apache.spark.sql.vectorized.ColumnarBatch;

-

-

-public class JavaBatchDataSourceV2 implements DataSourceV2, ReadSupport {

--- End diff --

This is renamed to `JavaColumnarDataSourceV2`, to avoid confusion about

batch vs streaming.

[GitHub] spark pull request #16898: [SPARK-19563][SQL] avoid unnecessary sort in File...

Github user leachbj commented on a diff in the pull request:

https://github.com/apache/spark/pull/16898#discussion_r208094538

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala

---

@@ -119,23 +130,45 @@ object FileFormatWriter extends Logging {

uuid = UUID.randomUUID().toString,

serializableHadoopConf = new

SerializableConfiguration(job.getConfiguration),

outputWriterFactory = outputWriterFactory,

- allColumns = queryExecution.logical.output,

- partitionColumns = partitionColumns,

+ allColumns = allColumns,

dataColumns = dataColumns,

- bucketSpec = bucketSpec,

+ partitionColumns = partitionColumns,

+ bucketIdExpression = bucketIdExpression,

path = outputSpec.outputPath,

customPartitionLocations = outputSpec.customPartitionLocations,

maxRecordsPerFile = options.get("maxRecordsPerFile").map(_.toLong)

.getOrElse(sparkSession.sessionState.conf.maxRecordsPerFile)

)

+// We should first sort by partition columns, then bucket id, and

finally sorting columns.

+val requiredOrdering = partitionColumns ++ bucketIdExpression ++

sortColumns

+// the sort order doesn't matter

+val actualOrdering =

queryExecution.executedPlan.outputOrdering.map(_.child)

--- End diff --

@cloud-fan would it be possible to use the logical plan rather than the

executedPlan? If the optimizer decides the data is already sorted according

to the logical plan, the executedPlan won't include the fields.
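
The comparison this hunk sets up can be pictured without Spark. The following is a minimal Python sketch, assuming the intent is to skip the extra sort only when the required ordering is a prefix of the ordering the plan already reports. The name `ordering_satisfied` and the plain-string column names are hypothetical stand-ins for Catalyst expressions, not part of the PR.

```python
from typing import Sequence


def ordering_satisfied(required: Sequence[str], actual: Sequence[str]) -> bool:
    """Return True when `required` is a prefix of `actual`."""
    if len(required) > len(actual):
        return False
    # Compare position by position; any mismatch means a sort is still needed.
    return all(r == a for r, a in zip(required, actual))


# Hypothetical column names: partition column, bucket id, then sort column.
required = ["part_col", "bucket_id", "sort_col"]

print(ordering_satisfied(required, ["part_col", "bucket_id", "sort_col", "extra"]))
print(ordering_satisfied(required, ["part_col"]))
# A plan that reports no ordering at all never satisfies a non-empty requirement.
print(ordering_satisfied(required, []))
```

Under this model, a plan that does not report the ordering makes the check fail, so a sort would be added even if the data happens to be sorted already, which appears to be the concern raised in the comment.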

[GitHub] spark pull request #22011: [WIP][SPARK-24822][PySpark] Python support for ba...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22011#discussion_r208093660

--- Diff: python/pyspark/rdd.py ---

@@ -2429,6 +2441,29 @@ def _wrap_function(sc, func, deserializer, serializer, profiler=None):

sc.pythonVer, broadcast_vars, sc._javaAccumulator)

+class RDDBarrier(object):

+

+"""

+.. note:: Experimental

+

+An RDDBarrier turns an RDD into a barrier RDD, which forces Spark to launch tasks of the stage

+contains this RDD together.

+"""

+

+def __init__(self, rdd):

+self.rdd = rdd

+self._jrdd = rdd._jrdd

+

+def mapPartitions(self, f, preservesPartitioning=False):

+"""

+Return a new RDD by applying a function to each partition of this RDD.

+"""

--- End diff --

shall we match the documentation, or why is it different? FWIW, for coding block, just `` `blabla` `` should be good enough. Nicer if linked properly by like `` :class:`ClassName` ``.

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22009 **[Test build #94337 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94337/testReport)** for PR 22009 at commit [`2fc3b05`](https://github.com/apache/spark/commit/2fc3b05dde2c405e2af72b815700780f114767c1). * This patch **fails to build**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/94337/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22017: [SPARK-23938][SQL] Add map_zip_with function

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22017 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22017: [SPARK-23938][SQL] Add map_zip_with function

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22017 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/94325/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22011: [WIP][SPARK-24822][PySpark] Python support for ba...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22011#discussion_r208093240

--- Diff: python/pyspark/rdd.py ---

@@ -2429,6 +2441,29 @@ def _wrap_function(sc, func, deserializer, serializer, profiler=None):

sc.pythonVer, broadcast_vars, sc._javaAccumulator)

+class RDDBarrier(object):

+

+"""

+.. note:: Experimental

+

+An RDDBarrier turns an RDD into a barrier RDD, which forces Spark to launch tasks of the stage

--- End diff --

nit: `RDDBarrier` -> `RDD barrier`

[GitHub] spark issue #22017: [SPARK-23938][SQL] Add map_zip_with function

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22017 **[Test build #94325 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94325/testReport)** for PR 22017 at commit [`ef56011`](https://github.com/apache/spark/commit/ef56011f03d8bae4634e5d3108e4d6502482383c). * This patch passes all tests. * This patch merges cleanly. * This patch adds the following public classes _(experimental)_: * `class ArrayDataMerger(elementType: DataType) ` * `case class ArrayUnion(left: Expression, right: Expression) extends ArraySetLike` * `abstract class GetMapValueUtil extends BinaryExpression with ImplicitCastInputTypes ` * `case class MapZipWith(left: Expression, right: Expression, function: Expression)` --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user rdblue commented on the issue: https://github.com/apache/spark/pull/22009 There must not be one. I thought you'd already started a PR, my mistake. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22011: [WIP][SPARK-24822][PySpark] Python support for ba...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22011#discussion_r208092650

--- Diff: python/pyspark/rdd.py ---

@@ -2429,6 +2441,29 @@ def _wrap_function(sc, func, deserializer, serializer, profiler=None):

sc.pythonVer, broadcast_vars, sc._javaAccumulator)

+class RDDBarrier(object):

+

+"""

+.. note:: Experimental

+

+An RDDBarrier turns an RDD into a barrier RDD, which forces Spark to launch tasks of the stage

+contains this RDD together.

--- End diff --

ditto let's add `.. versionadded:: 2.4.0` at the end.

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user cloud-fan commented on the issue: https://github.com/apache/spark/pull/22009 @rdblue can you point me to the other PR? This is the only PR I have sent out for data source v2 API improvement. I'd appreciate your time to review it, thanks!

[GitHub] spark issue #21991: [SPARK-25018] [Infra] Use `Co-authored-by` and `Signed-o...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21991 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/94323/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21991: [SPARK-25018] [Infra] Use `Co-authored-by` and `Signed-o...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21991 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22009 **[Test build #94337 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94337/testReport)** for PR 22009 at commit [`2fc3b05`](https://github.com/apache/spark/commit/2fc3b05dde2c405e2af72b815700780f114767c1). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1885/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/94336/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22009 **[Test build #94336 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94336/testReport)** for PR 22009 at commit [`cab6d28`](https://github.com/apache/spark/commit/cab6d2828dacaca6e62d3409c684d18a1fc861f2). * This patch **fails to build**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21991: [SPARK-25018] [Infra] Use `Co-authored-by` and `Signed-o...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21991 **[Test build #94323 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94323/testReport)** for PR 21991 at commit [`b3347f8`](https://github.com/apache/spark/commit/b3347f855a854740dc2d5082ea42fd8c723c3484). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22011: [WIP][SPARK-24822][PySpark] Python support for ba...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22011#discussion_r208092333

--- Diff: python/pyspark/rdd.py ---

@@ -2406,6 +2406,18 @@ def toLocalIterator(self):

sock_info = self.ctx._jvm.PythonRDD.toLocalIteratorAndServe(self._jrdd.rdd())

return _load_from_socket(sock_info, self._jrdd_deserializer)

+def barrier(self):

--- End diff --

I don't know why we didn't mark the version here so far, but we really should add `.. versionadded:: 2.4.0` here or

```
@since(2.4)
def barrier(self):
    ...
```
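
For readers unfamiliar with the two options in this comment, both end up recording the version in the docstring that Sphinx renders. Below is a minimal sketch of how a decorator of this kind could work. `since_sketch` and `example` are hypothetical names used for illustration only, and this is not PySpark's own `since` implementation.

```python
def since_sketch(version):
    """Hypothetical decorator: append a Sphinx `versionadded` note to a docstring."""
    def decorate(func):
        # Tolerate functions without a docstring.
        doc = (func.__doc__ or "").rstrip()
        func.__doc__ = f"{doc}\n\n.. versionadded:: {version}"
        return func
    return decorate


@since_sketch("2.4.0")
def example():
    """Placeholder docstring."""
    return None


print(example.__doc__)
```

Writing `.. versionadded:: 2.4.0` directly in the docstring gives the same rendered result; the decorator only saves repeating the directive by hand.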

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user rdblue commented on the issue: https://github.com/apache/spark/pull/22009 Does this replace the other PR? I haven't looked at that one yet. If this is ready to review and follows the doc, I can review it. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22014: [SPARK-25036][SQL] avoid match may not be exhaustive in ...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/22014 LGTM except for those minor nits.

[GitHub] spark pull request #22014: [SPARK-25036][SQL] avoid match may not be exhaust...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22014#discussion_r208091824

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/ScalaReflection.scala

---

@@ -709,6 +709,7 @@ object ScalaReflection extends ScalaReflection {

def attributesFor[T: TypeTag]: Seq[Attribute] = schemaFor[T] match {

case Schema(s: StructType, _) =>

s.toAttributes

+case _ => throw new RuntimeException(s"$schemaFor is not supported at

attributesFor()")

--- End diff --

How about this:

```scala

case other =>

throw new UnsupportedOperationException(s"Attributes for type $other

is not supported")

```

[GitHub] spark pull request #22014: [SPARK-25036][SQL] avoid match may not be exhaust...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22014#discussion_r208091445

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/aggregate/ApproxCountDistinctForIntervals.scala

---

@@ -67,6 +67,7 @@ case class ApproxCountDistinctForIntervals(

(endpointsExpression.dataType, endpointsExpression.eval()) match {

case (ArrayType(elementType, _), arrayData: ArrayData) =>

arrayData.toObjectArray(elementType).map(_.toString.toDouble)

+ case _ => throw new RuntimeException("not found at endpoints")

--- End diff --

Can we do this like:

```scala

val endpointsType = endpointsExpression.dataType.asInstanceOf[ArrayType]

val endpoints = endpointsExpression.eval().asInstanceOf[ArrayData]

endpoints.toObjectArray(endpointsType.elementType).map(_.toString.toDouble)

```

[GitHub] spark pull request #22014: [SPARK-25036][SQL] avoid match may not be exhaust...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22014#discussion_r208090085

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/codegen/CodeGenerator.scala

---

@@ -471,6 +471,7 @@ class CodegenContext {

case NewFunctionSpec(functionName, None, None) => functionName

case NewFunctionSpec(functionName, Some(_),

Some(innerClassInstance)) =>

innerClassInstance + "." + functionName

+ case _ => null // nothing to do since addNewFunctionInteral() must

return one of them

--- End diff --

Shall we throw an `IllegalArgumentException`?

[GitHub] spark pull request #22014: [SPARK-25036][SQL] avoid match may not be exhaust...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22014#discussion_r208089613

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/statsEstimation/ValueInterval.scala

---

@@ -86,6 +87,7 @@ object ValueInterval {

val newMax = if (n1.max <= n2.max) n1.max else n2.max

(Some(EstimationUtils.fromDouble(newMin, dt)),

Some(EstimationUtils.fromDouble(newMax, dt)))

+ case _ => throw new RuntimeException(s"Not supported pair: $r1, $r2

at intersect()")

--- End diff --

Shall we do `UnsupportedOperationException`?

[GitHub] spark pull request #21977: SPARK-25004: Add spark.executor.pyspark.memory li...

Github user rdblue commented on a diff in the pull request:

https://github.com/apache/spark/pull/21977#discussion_r208091782

--- Diff:

core/src/main/scala/org/apache/spark/api/python/PythonRunner.scala ---

@@ -60,14 +61,26 @@ private[spark] object PythonEvalType {

*/

private[spark] abstract class BasePythonRunner[IN, OUT](

funcs: Seq[ChainedPythonFunctions],

-bufferSize: Int,

-reuseWorker: Boolean,

evalType: Int,

-argOffsets: Array[Array[Int]])

+argOffsets: Array[Array[Int]],

+conf: SparkConf)

extends Logging {

require(funcs.length == argOffsets.length, "argOffsets should have the

same length as funcs")

+ private val bufferSize = conf.getInt("spark.buffer.size", 65536)

+ private val reuseWorker = conf.getBoolean("spark.python.worker.reuse",

true)

+ private val memoryMb = {

+val allocation = conf.get(PYSPARK_EXECUTOR_MEMORY)

+if (reuseWorker) {

--- End diff --

No, I'm not sure where that is. Is it on the python side? If you can point

me to it, I'll have a closer look.

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22009 **[Test build #94336 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94336/testReport)** for PR 22009 at commit [`cab6d28`](https://github.com/apache/spark/commit/cab6d2828dacaca6e62d3409c684d18a1fc861f2). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/1884/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22009: [SPARK-24882][SQL] improve data source v2 API

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22009 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21898 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21898 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/94326/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21898: [SPARK-24817][Core] Implement BarrierTaskContext.barrier...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21898 **[Test build #94326 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94326/testReport)** for PR 21898 at commit [`1f71e65`](https://github.com/apache/spark/commit/1f71e6583f9f9f270d07323f15c731717e13518d). * This patch **fails Spark unit tests**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21991: [SPARK-25018] [Infra] Use `Co-authored-by` and `Signed-o...

Github user viirya commented on the issue: https://github.com/apache/spark/pull/21991 The failed test is `FlatMapGroupsWithStateSuite.flatMapGroupsWithState`. I have seen it fail occasionally, so I don't think it is related to this change. @HyukjinKwon @dbtsai

[GitHub] spark pull request #21305: [SPARK-24251][SQL] Add AppendData logical plan.

Github user rdblue commented on a diff in the pull request:

https://github.com/apache/spark/pull/21305#discussion_r208090428

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/types/DataType.scala ---

@@ -336,4 +337,124 @@ object DataType {

case (fromDataType, toDataType) => fromDataType == toDataType

}

}

+

+ private val SparkGeneratedName = """col\d+""".r

+ private def isSparkGeneratedName(name: String): Boolean = name match {

+case SparkGeneratedName(_*) => true

+case _ => false

+ }

+

+ /**

+ * Returns true if the write data type can be read using the read data

type.

+ *

+ * The write type is compatible with the read type if:

+ * - Both types are arrays, the array element types are compatible, and

element nullability is

+ * compatible (read allows nulls or write does not contain nulls).

+ * - Both types are maps and the map key and value types are compatible,

and value nullability

+ * is compatible (read allows nulls or write does not contain nulls).

+ * - Both types are structs and each field in the read struct is present

in the write struct and

+ * compatible (including nullability), or is nullable if the write

struct does not contain the

+ * field. Write-side structs are not compatible if they contain fields

that are not present in

+ * the read-side struct.

+ * - Both types are atomic and the write type can be safely cast to the

read type.

+ *

+ * Extra fields in write-side structs are not allowed to avoid

accidentally writing data that

+ * the read schema will not read, and to ensure map key equality is not

changed when data is read.

+ *

+ * @param write a write-side data type to validate against the read type

+ * @param read a read-side data type

+ * @return true if data written with the write type can be read using

the read type

+ */

+ def canWrite(

+ write: DataType,

+ read: DataType,

+ resolver: Resolver,

+ context: String,

+ addError: String => Unit = (_: String) => {}): Boolean = {

+(write, read) match {

+ case (wArr: ArrayType, rArr: ArrayType) =>

+// run compatibility check first to produce all error messages

+val typesCompatible =

+ canWrite(wArr.elementType, rArr.elementType, resolver, context +

".element", addError)

+

+if (wArr.containsNull && !rArr.containsNull) {

+ addError(s"Cannot write nullable elements to array of non-nulls:

'$context'")

+ false

+} else {

+ typesCompatible

+}

+

+ case (wMap: MapType, rMap: MapType) =>

+// map keys cannot include data fields not in the read schema

without changing equality when

+// read. map keys can be missing fields as long as they are

nullable in the read schema.

+

+// run compatibility check first to produce all error messages

+val keyCompatible =

+ canWrite(wMap.keyType, rMap.keyType, resolver, context + ".key",

addError)

+val valueCompatible =

+ canWrite(wMap.valueType, rMap.valueType, resolver, context +

".value", addError)

+val typesCompatible = keyCompatible && valueCompatible

+

+if (wMap.valueContainsNull && !rMap.valueContainsNull) {

+ addError(s"Cannot write nullable values to map of non-nulls:

'$context'")

+ false

+} else {

+ typesCompatible

+}

+

+ case (StructType(writeFields), StructType(readFields)) =>

+var fieldCompatible = true

+readFields.zip(writeFields).foreach {

+ case (rField, wField) =>

+val namesMatch = resolver(wField.name, rField.name) ||

isSparkGeneratedName(wField.name)

+val fieldContext = s"$context.${rField.name}"

+val typesCompatible =

+ canWrite(wField.dataType, rField.dataType, resolver,

fieldContext, addError)

+

+if (!namesMatch) {

+ addError(s"Struct '$context' field name does not match (may

be out of order): " +

+ s"expected '${rField.name}', found '${wField.name}'")

+ fieldCompatible = false

+} else if (!rField.nullable && wField.nullable) {

+ addError(s"Cannot write nullable values to non-null field:

'$fieldContext'")

+ fieldCompatible = false

+} else if (!typesCompatible) {

+ // errors are added in the recursive call to canWrite above

+ fieldCompatible = false

+}

+}

+

+if (readFields.size > writeFields.size) {

+ val missingFieldsStr =
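
The array and map rules described in the Scaladoc of this hunk can be sketched as a small recursive check. This is a simplified Python model, not the PR's code: `ArrayT`, `MapT`, and `can_write` are hypothetical names, atomic types are plain strings, and atomic compatibility is assumed to mean equality (the diff instead allows any safe cast). Struct handling is left out.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class ArrayT:
    element: "DType"
    contains_null: bool


@dataclass(frozen=True)
class MapT:
    key: "DType"
    value: "DType"
    value_contains_null: bool


# A plain string stands in for an atomic type such as "int" or "long".
DType = Union[str, ArrayT, MapT]


def can_write(write, read, context, add_error=lambda msg: None):
    """Return True if data of type `write` may be stored where `read` is expected."""
    if isinstance(write, ArrayT) and isinstance(read, ArrayT):
        # Recurse first so nested errors are reported even if nullability fails.
        types_ok = can_write(write.element, read.element, context + ".element", add_error)
        if write.contains_null and not read.contains_null:
            add_error(f"Cannot write nullable elements to array of non-nulls: '{context}'")
            return False
        return types_ok
    if isinstance(write, MapT) and isinstance(read, MapT):
        key_ok = can_write(write.key, read.key, context + ".key", add_error)
        value_ok = can_write(write.value, read.value, context + ".value", add_error)
        if write.value_contains_null and not read.value_contains_null:
            add_error(f"Cannot write nullable values to map of non-nulls: '{context}'")
            return False
        return key_ok and value_ok
    # Assumption: atomic types must be equal; the diff allows safe casts instead.
    if write != read:
        add_error(f"Cannot write '{context}': {write} is not {read}")
        return False
    return True


errors = []
ok = can_write(ArrayT("int", True), ArrayT("long", False), "col", errors.append)
print(ok)
for message in errors:
    print(message)
```

Running the nested check before the nullability test mirrors the comment in the diff about producing all error messages in one pass, so a caller sees every mismatch rather than only the first.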

[GitHub] spark pull request #21305: [SPARK-24251][SQL] Add AppendData logical plan.

Github user rdblue commented on a diff in the pull request:

https://github.com/apache/spark/pull/21305#discussion_r208090280

--- Diff:

sql/catalyst/src/test/scala/org/apache/spark/sql/types/DataTypeWriteCompatibilitySuite.scala

---

@@ -0,0 +1,395 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.types

+

+import scala.collection.mutable

+

+import org.apache.spark.SparkFunSuite

+import org.apache.spark.sql.catalyst.analysis

+import org.apache.spark.sql.catalyst.expressions.Cast

+

+class DataTypeWriteCompatibilitySuite extends SparkFunSuite {

--- End diff --

I'm planning on adding this, but it would be great to get this in first, and

I'll add the tests next. That way I no longer have to keep rebasing it!

Thanks!

[GitHub] spark issue #20666: [SPARK-23448][SQL] Clarify JSON and CSV parser behavior ...

Github user fuqiliang commented on the issue:

https://github.com/apache/spark/pull/20666

To be specific, the JSON file (Sanity4.json) is

`{"a":"a1","int":1,"other":4.4}

{"a":"a2","int":"","other":""}`

code:

> val config = new SparkConf().setMaster("local[5]").setAppName("test")

> val sc = SparkContext.getOrCreate(config)

> val sql = new SQLContext(sc)

>

> val file_path =

this.getClass.getClassLoader.getResource("Sanity4.json").getFile

> val df = sql.read.schema(null).json(file_path)

> df.show(30)

Then in Spark 1.6, the result is

+---+----+-----+
|  a| int|other|
+---+----+-----+
| a1|   1|  4.4|
| a2|null| null|
+---+----+-----+

root
 |-- a: string (nullable = true)
 |-- int: long (nullable = true)
 |-- other: double (nullable = true)

But in Spark 2.2, the result is

+----+----+-----+
|   a| int|other|
+----+----+-----+
|  a1|   1|  4.4|
|null|null| null|
+----+----+-----+

root
 |-- a: string (nullable = true)
 |-- int: long (nullable = true)
 |-- other: double (nullable = true)
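
The difference between the two outputs above can be modeled without Spark. The sketch below is a toy illustration, not Spark's JSON parser: `MalformedFieldDemo`, `parsePerField` and `parsePerRecord` are hypothetical names, and the records are hand-written maps of raw tokens rather than parsed JSON. It contrasts nulling only the fields that fail conversion (the shape of the 1.6 output) with nulling the whole row when any field fails (the shape of the 2.2 output).

```scala
// Toy model of the two behaviors reported above; this is NOT Spark code.
// All names here (Raw, Row, parsePerField, ...) are hypothetical.
object MalformedFieldDemo {
  type Raw = Map[String, String]       // field name -> raw token text
  type Row = Map[String, Option[Any]]  // None stands for SQL null

  // Converters for the inferred schema: a string, int long, other double.
  val schema: Seq[(String, String => Option[Any])] = Seq(
    ("a", (s: String) => Some(s)),
    ("int", (s: String) => scala.util.Try(s.toLong).toOption),
    ("other", (s: String) => scala.util.Try(s.toDouble).toOption)
  )

  // 1.6-like: a field that fails conversion becomes null, the others survive.
  def parsePerField(raw: Raw): Row =
    schema.map { case (name, conv) => name -> raw.get(name).flatMap(conv) }.toMap

  // 2.2-like: if any present field fails conversion, every column is null.
  def parsePerRecord(raw: Raw): Row = {
    val converted = parsePerField(raw)
    val anyFailure = schema.exists { case (name, _) =>
      raw.contains(name) && converted(name).isEmpty
    }
    if (anyFailure) schema.map { case (name, _) => name -> (None: Option[Any]) }.toMap
    else converted
  }

  def main(args: Array[String]): Unit = {
    val rows = Seq(
      Map("a" -> "a1", "int" -> "1", "other" -> "4.4"),
      Map("a" -> "a2", "int" -> "", "other" -> "")
    )
    rows.foreach(r => println(parsePerField(r)))
    rows.foreach(r => println(parsePerRecord(r)))
  }
}
```

In this model the second record keeps `a = a2` under `parsePerField` but loses it under `parsePerRecord`, which is the discrepancy described in the comment.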


[GitHub] spark pull request #17185: [SPARK-19602][SQL] Support column resolution of f...

Github user skambha commented on a diff in the pull request:

https://github.com/apache/spark/pull/17185#discussion_r208089990

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/package.scala

---

@@ -169,25 +181,50 @@ package object expressions {

       })
     }
 
-      // Find matches for the given name assuming that the 1st part is a qualifier (i.e. table name,
-      // alias, or subquery alias) and the 2nd part is the actual name. This returns a tuple of
+      // Find matches for the given name assuming that the 1st two parts are qualifier
+      // (i.e. database name and table name) and the 3rd part is the actual column name.
+      //
+      // For example, consider an example where "db1" is the database name, "a" is the table name
+      // and "b" is the column name and "c" is the struct field name.
+      // If the name parts is db1.a.b.c, then Attribute will match
+      // Attribute(b, qualifier("db1,"a")) and List("c") will be the second element
+      var matches: (Seq[Attribute], Seq[String]) = nameParts match {
+        case dbPart +: tblPart +: name +: nestedFields =>
+          val key = (dbPart.toLowerCase(Locale.ROOT),
+            tblPart.toLowerCase(Locale.ROOT), name.toLowerCase(Locale.ROOT))
+          val attributes = collectMatches(name, qualified3Part.get(key)).filter {
+            a => (resolver(dbPart, a.qualifier.head) && resolver(tblPart, a.qualifier.last))
+          }
+          (attributes, nestedFields)
+        case all =>
+          (Seq.empty, Seq.empty)
+      }
+
+      // If there are no matches, then find matches for the given name assuming that
+      // the 1st part is a qualifier (i.e. table name, alias, or subquery alias) and the
+      // 2nd part is the actual name. This returns a tuple of
       // matched attributes and a list of parts that are to be resolved.
       //
       // For example, consider an example where "a" is the table name, "b" is the column name,
       // and "c" is the struct field name, i.e. "a.b.c". In this case, Attribute will be "a.b",
       // and the second element will be List("c").
-      val matches = nameParts match {
-        case qualifier +: name +: nestedFields =>
-          val key = (qualifier.toLowerCase(Locale.ROOT), name.toLowerCase(Locale.ROOT))
-          val attributes = collectMatches(name, qualified.get(key)).filter { a =>
-            resolver(qualifier, a.qualifier.get)
+      matches = matches match {

--- End diff --

done.
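
For readers following the diff above, the lookup order it introduces (try database.table.column first, then fall back to qualifier.column) can be sketched in plain Scala. This is a toy model, not the Catalyst implementation: `Attr`, `same` and `resolve` are hypothetical names, matching is a simple case-insensitive comparison instead of the configurable resolver, and the fallback is assumed to compare against the last qualifier part, which the excerpt does not show.

```scala
// Toy model of the lookup order in the diff above; NOT the Catalyst code.
// Attr, same and resolve are hypothetical names used only for illustration.
object QualifiedNameDemo {
  // An attribute with its qualifier parts, e.g. Seq("db1", "a") for db1.a.b.
  final case class Attr(name: String, qualifier: Seq[String])

  private def same(x: String, y: String): Boolean = x.equalsIgnoreCase(y)

  // Returns (matched attributes, remaining nested field names).
  def resolve(nameParts: Seq[String], attrs: Seq[Attr]): (Seq[Attr], Seq[String]) = {
    // 1) Try db.table.column first.
    val threePart: (Seq[Attr], Seq[String]) = nameParts match {
      case db +: tbl +: name +: nested =>
        val hits = attrs.filter { a =>
          a.qualifier.length == 2 && same(a.name, name) &&
            same(a.qualifier.head, db) && same(a.qualifier.last, tbl)
        }
        (hits, nested)
      case _ => (Seq.empty, Seq.empty)
    }
    if (threePart._1.nonEmpty) threePart
    else nameParts match {
      // 2) Fall back to table.column (assumed: compare the last qualifier part).
      case tbl +: name +: nested =>
        val hits = attrs.filter { a =>
          a.qualifier.nonEmpty && same(a.name, name) && same(a.qualifier.last, tbl)
        }
        (hits, nested)
      case _ => (Seq.empty, Seq.empty)
    }
  }

  def main(args: Array[String]): Unit = {
    val attrs = Seq(Attr("b", Seq("db1", "a")))
    println(resolve(Seq("db1", "a", "b", "c"), attrs)) // three-part lookup
    println(resolve(Seq("a", "b", "c"), attrs))        // two-part fallback
  }
}
```

Both calls in `main` resolve to the same attribute with `c` left over as a nested field; only the stage that finds the match differs.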


[GitHub] spark issue #17185: [SPARK-19602][SQL] Support column resolution of fully qu...

Github user skambha commented on the issue: https://github.com/apache/spark/pull/17185 Thanks for the review. I have addressed your comments and pushed the changes. @cloud-fan, Please take a look.

[GitHub] spark issue #17185: [SPARK-19602][SQL] Support column resolution of fully qu...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/17185 **[Test build #94335 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/94335/testReport)** for PR 17185 at commit [`5f7e5d7`](https://github.com/apache/spark/commit/5f7e5d7bddca593d72818b07d71f678bd0a1982d).

[GitHub] spark issue #21991: [SPARK-25018] [Infra] Use `Co-authored-by` and `Signed-o...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21991 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/94324/ Test FAILed.

[GitHub] spark issue #22018: [SPARK-25038][SQL] Accelerate Spark Plan generation when...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22018 Can one of the admins verify this patch?

[GitHub] spark pull request #21721: [SPARK-24748][SS] Support for reporting custom me...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/21721#discussion_r208089043

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/ProgressReporter.scala

---

@@ -196,6 +237,18 @@ trait ProgressReporter extends Logging {

     currentStatus = currentStatus.copy(isTriggerActive = false)
   }
 
+  /** Extract writer from the executed query plan. */
+  private def dataSourceWriter: Option[DataSourceWriter] = {
+    if (lastExecution == null) return None
+    lastExecution.executedPlan.collect {
+      case p if p.isInstanceOf[WriteToDataSourceV2Exec] =>

--- End diff --

This only works for microbatch mode. Do we have a plan to support
continuous mode?
